Abstract

Cross-validation of methods is an essential component of genome-enabled prediction of complex traits. We develop formulae for computing the predictions that would be obtained when one or several cases are removed in the training process, to become members of testing sets, but by running the model using all observations only once. Prediction methods to which the developments apply include least squares, best linear unbiased prediction (BLUP) of markers, or genomic BLUP, reproducing kernels Hilbert spaces regression with single or multiple kernel matrices, and any member of a suite of linear regression methods known as “Bayesian alphabet.” The approach used for Bayesian models is based on importance sampling of posterior draws. Proof of concept is provided by applying the formulae to a wheat data set representing 599 inbred lines genotyped for 1279 markers, and the target trait was grain yield. The data set was used to evaluate predictive mean-squared error, impact of alternative layouts on maximum likelihood estimates of regularization parameters, model complexity, and residual degrees of freedom stemming from various strengths of regularization, as well as two forms of importance sampling. Our results will facilitate carrying out extensive cross-validation without model retraining for most machines employed in genome-assisted prediction of quantitative traits.

Keywords: cross-validation, genomic selection, genomic prediction, genomic BLUP, reproducing kernels, GenPred, Shared Data Resources

Whole-genome-enabled prediction, introduced by Meuwissen et al. (2001), has received much attention in animal and plant breeding (e.g., Van Raden 2008; Crossa et al. 2010; Lehermeier et al. 2013), primarily because it can deliver reasonably accurate predictions of the genetic worth of candidate animals or plants at an earlier time in the context of artificial selection. It has also been suggested for prediction of complex traits in human medicine (e.g., de los Campos et al. 2011; Makowsky et al. 2011; Vázquez et al. 2012; López de Maturana et al. 2014; Spiliopoulou et al. 2015)

An important contribution of Utz et al. (2000) and of Meuwissen et al. (2001) was implanting cross-validation (CV) in plant and animal breeding as a mechanism for comparing prediction models, typically multiple linear regressions on molecular markers. In retrospect, it is perplexing that the progression of genetic prediction models, e.g., from simple “sire” or “family” models in the late 1960s (Henderson et al. 1959) to complex multivariate and longitudinal specifications (Mrode 2014), proceeded without CV, as noted by Gianola and Rosa (2015). An explanation, at least in animal breeding, is the explosion of best linear unbiased prediction, BLUP (Henderson 1973, 1984). The power and flexibility of the linear mixed model led to the (incorrect) belief that a bigger model is necessarily better, simply because of extra explanatory power from an increasing degree of complexity. However, a growing focus on predictive inference and on CV has altered such perception. A simple prediction model may produce more stable, and even better, results than a complex hierarchical model (Takezawa 2006; Wimmer et al. 2013), and the choice can be made via CV. Today, CV is a sine qua non part of studies comparing genome-assisted prediction methods.

Most often, CV consists of dividing a data set with n cases (each including a phenotypic measurement and a vector of genomic covariables) into a number of folds (K) of approximately equal size. Data in K − 1 folds are used for model training, and to effect predictions of phenotypes in the testing fold, given the realized values of the genomic covariables. The prediction exercise is repeated for each fold, and the overall results are combined; this is known as a K-fold CV (Hastie et al. 2009). A loss function, such as mean-squared error (MSE) or predictive correlation is computed to gauge the various predictive machines compared. However, the process must be repeated a number of times, with folds reconstructed at random (whenever possible) to obtain measures of CV uncertainty (e.g., Okut et al. 2011; Tusell et al. 2014). CV is computationally taxing, especially when Bayesian prediction models with a massive number of genomic covariates and implemented via Markov chain Monte Carlo (MCMC) are involved in the comparison.

Stylized formulae (e.g., Daetwyler et al. 2008) suggest that the expected predictive correlation (“accuracy”) in genome-enabled prediction is proportional to training sample size (n). On intuitive grounds, more genetic variability ought to be spanned as a training sample grows, unless additional cases bring redundant information. With larger n, it is more likely that genomic patterns appearing in a testing set are encountered in model training. Although the formulae do not always fit real data well (Chesnais et al. 2016), it has been observed that a larger n tends to be associated with larger predictive correlations (Utz et al. 2000; Erbe et al. 2010).

Arguably, there is no better expectation than what is provided by a CV conducted under environmental circumstances similar to those under which the prediction machine is going to be applied. When n is small, the largest possible training set sample size is attained in a leave-one-out (LOO) CV, e.g., Ober et al. (2015) with about 200 lines of Drosophila melanogaster. In LOO CV, n − 1 cases are used for model training, to then predict the single out-of-sample case. Model training involves n implementations, each consisting of a training sample of size n − 1 and a testing set of size 1.

It is not widely recognized that it is feasible to obtain CV results by running the model only once, which is well known for least-squares regression (e.g., Seber and Lee 2003). Here, we show that this idea extends to other prediction machines, such as ridge regression (Hoerl and Kennard 1970), genome-based best linear unbiased prediction (GBLUP; Van Raden 2008), and reproducing kernel Hilbert spaces regression (RKHS; Gianola et al. 2006; Gianola and van Kaam 2008). It is also shown that the concept can be applied in a MCMC context to any Bayesian hierarchical model, e.g., members of the “Bayesian alphabet” (Meuwissen et al. 2001; Gianola et al. 2009; Gianola 2013). This manuscript reviews available results for least-squares based CV, and shows how CV without actually doing CV can be conducted for ridge regression, BLUP of marker effects, GBLUP, and RKHS for any given kernel matrix. It is described how importance sampling can be used to produce Bayesian CV by running MCMC only once, which has great advantage in view of the intensiveness of MCMC computations. Illustrations are given by using a well characterized data set containing wheat grain yield as phenotype and 1279 binary markers as regressors, and the paper concludes with a Discussion. Most technical results are presented in a series of Appendices, to facilitate reading the main body of the manuscript.

Cross-Validation with Ordinary Least-Squares

Setting

A linear model used for regressing phenotypes on additive codes at biallelic marker loci (−1, 0, 1 for aa, Aa and AA, respectively), such as in a genome-wide association study, is

| (1) |

Here, is the vector of phenotypic measurements, is the marker matrix, and is the number of copies of a reference allele at locus j observed in individual is a vector of fixed regressions on marker codes, known as allelic substitution effects. Phenotypes and markers are often centered; if an intercept is fitted, the model is expanded by adding as an effect common to all phenotypes. The residual vector is assumed to follow the distribution where is an identity matrix, and is a variance parameter common to all residuals.

The basic principles set here carry to the other prediction methods discussed in this paper. In this section, we assume so that ordinary least-squares (OLS) or maximum likelihood under the normality assumption above can be used. The OLS estimator of is , and the fitted residual for datum i is , where is the row of Assuming the model holds, , so the estimator is unbiased. A review of the pertinent principles is given in Appendix A, from which we extract results.

It is shown in Appendix A that the uncertainty of a prediction, as measured by variance, increases with p (model complexity), and decreases with the size of the testing set, . Two crucial matters in genome-enabled prediction must be underlined. First, if the model is unnecessarily complex, prediction accuracy (in the MSE sense) degrades unless the increase in variance is compensated by a reduction in prediction bias. Second, if the training set is made large at the expense of the size of the testing set, prediction mean squared error will be larger than otherwise. The formulae of Daetwyler et al. (2008) suggest that expected prediction accuracy, as measured by predictive correlation (not necessarily a good metric; González-Recio et al. 2014), increases with n. However, the variability of the predictions would increase, as found by Erbe et al. (2010) in an empirical study of Holstein progeny tests with alternative CV layouts. Should one aim at a higher expected predictive correlation or at a more stable set of predictions at the expense of the former? This question does not have a simple answer.

Leave-one-out (LOO) cross-validation

LOO is often used when n is small and there is concern about the limited size of the training folds. Let be with its row removed, so that its order is Since

| (2) |

are matrices. Likewise, if is with its element removed, the OLS right-hand sides in LOO are

| (3) |

Making use of (81) in Appendix B, the least-squares estimator of formed with the observation deleted from the model is expressible as

| (4) |

Employing Appendix C, the estimator can be written in the form

| (5) |

where and is the fitted residual using all n observations in the analysis; the fitted LOO residual is

| (6) |

Hence, the LOO estimator and prediction error can be computed directly from the analysis carried out with the entire data set: no need for n implementations.

Making use of (6), the realized LOO CV mean squared error of prediction is

| (7) |

and the expected mean squared error of prediction is given by

| (8) |

where is an diagonal matrix. As shown in Appendix A the LOO expected PMSE gives an upper bound for the expected squared error in least-squares based CV. The extent of overstatement of the error depends on the marker matrix (via the ) and on the prediction biases Hence, LOO CV represents a conservative approach, with the larger variance of the prediction resulting from the smallest possible testing set size If the prediction is unbiased, the terms vanish, and it is clear that observations with values closer to 1 contribute more to squared prediction error than those with smaller values, as the model is close to overfitting the former type of observations.

Leave-d-out cross-validation

The preceding analysis suggests that reallocation of observations from training into testing sets is expected to reduce PMSE relative to the LOO scheme. Most prediction-oriented analyses use fold CV, where K is chosen arbitrarily (e.g., ) as mentioned earlier; the decision of the number of folds is usually guided by the number of samples available. Here, we address this type of scheme generically by removing d out of the n observations available for training, and declaring the former as members of the testing set. Let be with d of its rows removed, and be the data vector without the d corresponding data points. As shown in Appendix D,

| (9) |

where note that does not always exist but can be replaced by a generalized inverse, and will be invariant to the latter if . Predictions are invariant with respect to the generalized inverse used. The similarity with (5) is clear: appears in lieu of of order contains (in columns) the rows of being removed, and are the residuals corresponding to the left out obtained when fitting the model to the entire data set.

The error of prediction of the d phenotypes entering into the testing set is

| (10) |

The mean-squared error of prediction of the d observations left out (testing set) becomes

| (11) |

The prediction bias obtained by averaging over all possible data sets is

| (12) |

where is a vector of true means of the distribution of observations in the testing sets. After algebra,

| (13) |

and

| (14) |

Observe that the term in brackets is a matrix counterpart of (76) in Appendix A, with playing the role of in the expression. The two terms in the equation above represent the contributions and bias and (co) variance to expected squared prediction error.

The next section illustrates how the preceding logic carries to regression models with shrinkage of estimated allelic substitution effects

Cross-Validation with Shrinkage of Regression Coefficients

BLUP of markers (ridge regression)

Assume again that phenotypes and markers are centered. Marker effects are now treated as random variables and assigned the normal distribution, where is a variance component. The BLUP of (Henderson 1975) is given by

| (15) |

where is a shrinkage factor taken as known. BLUP has the same mathematical form as the ridge regression estimator (Hoerl and Kennard 1970), developed mainly for tempering problems caused by colinearity among columns of in regression models where and with all regression coefficients likelihood-identified. The solution vector can also be assigned a Bayesian interpretation as a posterior expectation in a linear model with Gaussian residuals, and used as prior distribution, with variance components known (Dempfle 1977; Gianola and Fernando 1986). A fourth view of is as a penalized maximum likelihood estimator under an penalty (Hastie et al. 2009). Irrespective of its interpretation, provides a “point statistic” of for the situation. In BLUP, or in Bayesian inference, it is not a requirement that the regression coefficients are likelihood identified. There is one formula with four interpretations (Robinson 1991).

Given a testing set with marker genotype matrix the point prediction of yet to be observed phenotypes is We consider LOO CV because subsequent developments assume that removal of a single case has a mild effect on and . This assumption is reasonable for animal and plant breeding data sets where n is large, so removing a single observation should have a minor impact on, say, maximum likelihood estimates of variance components. If λ is kept constant, it is shown in Appendix E that

| (16) |

where , and is the residual from ridge regression BLUP applied to the entire sample. A similar expression for leave-d-out cross-validation using the same set of variance components is also in Appendix E. If is smaller and n is reasonably large, the error resulting from using variance components estimated from the entire data set should be small.

The error of predicting phenotype i is now and is expressible as

| (17) |

similar to that LOO OLS. Letting be a vector of prediction biases, and the expected prediction MSE is

| (18) |

The first term is the average squared prediction bias, and the second is the prediction error variance. As , (18) tends to the least-squares

Genomic BLUP

Once marker effects are estimated as , a representation of genomic BLUP (GBLUP) for n individuals is the vector with its element being . In GBLUP, “genomic relationship matrices” are taken as proportional to (where often has centered columns) various genomic relationship matrices are in, e.g., Van Raden (2008), Astle and Balding (2009) and Rincent et al. (2014). Using (16) LOO GBLUP (i.e., excluding case i from the training sample) is

| (19) |

The formula above requires finding given the procedure entails solving p equations on p unknowns and finding the inverse of is impossible or extremely taxing when p is large. A simpler alternative based on the well-known equivalence between BLUP of marker effects and of additive genotypic value is used here.

If is a vector of marked additive genetic values and then Many genomic relationship matrices are expressible as for some constant so that and is called “genomic variance” or “marked additive genetic variance” if encodes additive effects; clearly, there is no loss of generality if is used, thus preserving the λ employed for BLUP of marker effects. The model for the “signal” becomes

| (20) |

Letting , then is GBLUP using all data points. Appendix F shows how LOO GBLUP and d-out GBLUP can be calculated indirectly from elements or blocks of and elements of

RKHS regression

In RKHS regression (Gianola et al. 2006; Gianola and van Kaam 2008), input variables, e.g., marker codes, can be transformed nonlinearly, potentially capturing both additive and nonadditive genetic effects (Gianola et al. 2014a, 2014b), as further expounded by Jiang and Reif (2015) and Martini et al. (2016). When a pedigree or a genomic relationship matrix is used as kernel, RKHS yields pedigree-BLUP and GBLUP, respectively, as special cases (Gianola and de los Campos 2008; de los Campos et al. 2009, 2010).

The standard RKHS model is

| (21) |

with (and therefore ); is an positive (semi)definite symmetric matrix so that when the inverse is unique, and can be obtained by solving the system

| (22) |

with solution (since and is invertible)

| (23) |

The BLUP of under a RKHS model is

| (24) |

where K stands for “kernel,” and Putting the RKHS solution has the same form as as given in the preceding section. Using Appendix F, it follows that

| (25) |

| (26) |

and

| (27) |

The previous expressions reduce to the LOO CV situation by setting

Bayesian Cross-Validation

Setting

Many Bayesian linear regression on markers models have been proposed for genome-assisted prediction of quantitative traits (e.g., Meuwissen et al. 2001; Heslot et al. 2012; de los Campos et al. 2013; Gianola 2013). All such models pose the same specification for the Bayesian sampling model (a linear regression), but differ in the prior distribution assigned to allelic substitution effects. Implementation is often via MCMC, where computations are intensive even in the absence of CV; shortcuts and approximations are not without pitfalls. Is it possible to do CV by running an MCMC implementation only once? What follows applies both to LOO and out CV situations as well as to any member of the Bayesian alphabet (Gianola et al. 2009; Gianola 2013)

Suppose some Bayesian model has been run with MCMC, leading to S samples collected from a distribution with posterior density here, are all unknowns to be inferred and H denotes hyper-parameters arrived at in a typically subjective manner, e.g., arbitrary variances in a four-component mixture distribution assigned to substitution effects (MacLeod et al. 2014). In CV, the data set is partitioned into , training and testing sets are chosen according to the problem in question, and Bayesian learning is based on the posterior distribution . Predictions are derived from the predictive distribution of the testing set data

| (28) |

the preceding assumes that is independent of given , a standard assumption in genome-enabled prediction. The point predictor chosen most often is the expected value of the predictive distribution

| (29) |

| (30) |

In the context of sampling, representation (29) implies that one can explore the “augmented” distribution , and estimate by ergodic averaging of samples. Representation (30) uses Rao-Blackwellization: if can be written in closed form, as is the case for regression models , the Monte Carlo variance of an estimate of based on (30) is less than, or equal to, that of an estimate obtained with (29).

We describe Bayesian LOO CV, but extension to a testing set of size d is straightforward. In LOO, the data set is partitioned as where is the predictand and is the vector containing all other phenotypes in the data set. A brute force process involves running the Bayesian model n times, producing the posterior distributions Since LOO CV is computationally formidable in an MCMC context, procedures based on drawing samples from and converting these into realizations from can be useful (e.g., Gelfand et al. 1992; Gelfand 1996; Vehtari and Lampinen 2002). Use of importance sampling, and of sampling importance resampling (SIR), algorithms for this purpose is discussed next. Cantet et al. (1992) and Matos et al. (1993) present early applications of importance sampling to animal breeding.

Importance sampling

We seek to estimate the mean of the predictive distribution of the left-out data point Since is independent of , one has

| (31) |

As shown in Appendix G

| (32) |

Here, is called an “importance sampling” weight (Gelfand et al. 1992; Albert 2009). Expression (32) implies that the posterior mean of in a training sample can be expressed as the ratio of the posterior means of , and of taken under a Bayesian run using the entire data set. It is shown in Appendix F that, given draws from the full-posterior distribution, the posterior expectation can in equation (32) be estimated as

| (33) |

where

| (34) |

By making reference to (31), it turns out that a Monte Carlo estimate of the mean of the predictive distribution of datum i in the Bayesian LOO CV is given by

| (35) |

This type of estimator holds for any Bayesian linear regression model irrespective of the prior adopted, i.e., it is valid for any member of the “Bayesian alphabet” (Gianola et al. 2009; Gianola 2013). In CV, the prediction is

| (36) |

where

| (37) |

where for j being a member of the of observations forming the testing set.

The importance sampling weights are the reciprocal of conditional likelihoods; this specific mathematical representation can produce imprecise estimates of posterior expectations, especially if the posterior distribution with all data has much thinner tails than the posterior based on the training set. Vehtari and Lampinen (2002) calculate the “effective sample size” for a LOO CV as

| (38) |

If all weights are equal over samples, the weight assigned to any draw is and the variance of the weights is yielding on the other hand, can be much smaller than S if the variance among weights is large, e.g., when some weights are much larger than others.

The SIR algorithm described by Rubin (1988), Smith and Gelfand (1992) and Albert (2009) can be used to supplement importance sampling; SIR can be viewed as a weighted bootstrap. Let the sampled values and the (normalized) importance sampling weights be and respectively, for and Then, obtain a resample of size S by sampling with replacement over with unequal probabilities proportional to respectively, obtaining realizations Finally, average realizations for estimating in (31).

The special case of “Bayesian GBLUP”

The term “Bayesian GBLUP” is unfortunate but has become entrenched in animal and plant breeding. It refers to a linear model that exploits genetic or genomic similarity matrix among individuals (as in GBLUP), but where the two variance components are unknown and learned in a Bayesian manner. Prior distributions typically assigned to variances are scale inverted chi-square processes with known scale and degrees of freedom parameters (e.g., Pérez and de los Campos 2014). The model is with ; the hierarchical prior is and , where the hyper-parameters are . A Gibbs sampler iteratively loops over the conditional distributions

| (39) |

Above, denotes the data plus H and all other parameters other than the ones being sampled in a specific conditional posterior distribution; indicates the reciprocal of a draw from a central chi-square distribution on degrees of freedom. The samples of are drawn from a multivariate normal distribution of order Its mean, is GBLUP computed at the current state of the variance ratio, which varies at random from iteration to iteration; the covariance matrix is The current GBLUP is calculated as in this representation, must exist. If it does not, a representation of GBLUP that holds is

| (40) |

where is an matrix of regression coefficients of on . Likewise

| (41) |

Once the Gibbs sampler has been run and burn-in iterations discarded, S samples become available for posterior processing, with sample s consisting of In a leave-d-out CV, the posterior expectation of (the point predictor of the future phenotype of individual j) is estimated as

| (42) |

where

| (43) |

and

| (44) |

with the product of the normal densities taken over members of the testing set. Sampling weights may be very unstable if d is large.

Data availability

The authors state that all data necessary for confirming the conclusions presented in the article are represented fully within the article.

Evaluation of Methodology

The methods described here were evaluated using the wheat data available in package BGLR (Pérez and de los Campos 2014). This data set is well curated and has also been used by, e.g., Crossa et al. (2010), Gianola et al. (2011) and Long et al. (2011). The data originated in trials conducted by the International Maize and Wheat Improvement Center (CIMMYT), Mexico. There are 599 wheat inbred lines, each genotyped with 1279 DArT (Diversity Array Technology) markers and planted in four environments; the target trait was grain yield in environment 1. Sample size was then with being the number of markers. These DArT markers are binary and denote presence or absence of an allele at a marker locus in a given line. There is no information on chromosomal location of markers. The objective of the analysis was to illustrate concepts, as opposed to investigate a specific genetic hypothesis. The data set of moderate size allowed extensive replication and reanalysis under various settings.

LOO vs. leave-d-out CV: ordinary least-squares

The linear model had an intercept and regressions on markers 301 through 500 in the data file; markers were chosen arbitrarily. Here, and ensuring unique OLS estimates of substitution effects, i.e., there was no rank deficiency in .

Seven CV layouts were constructed in which testing sets of sizes 2, 3,…, 7, or 8 lines were randomly drawn (without replacement) from the 599 inbred lines. Training set sizes decreased accordingly, e.g., for training sample size was 599 – 7= 592. Larger sizes of testing sets were not considered because in (9) became singular as d increased beyond that point. The training-testing process was repeated 300 times at random, to obtain an empirical distribution of prediction mean squared errors.

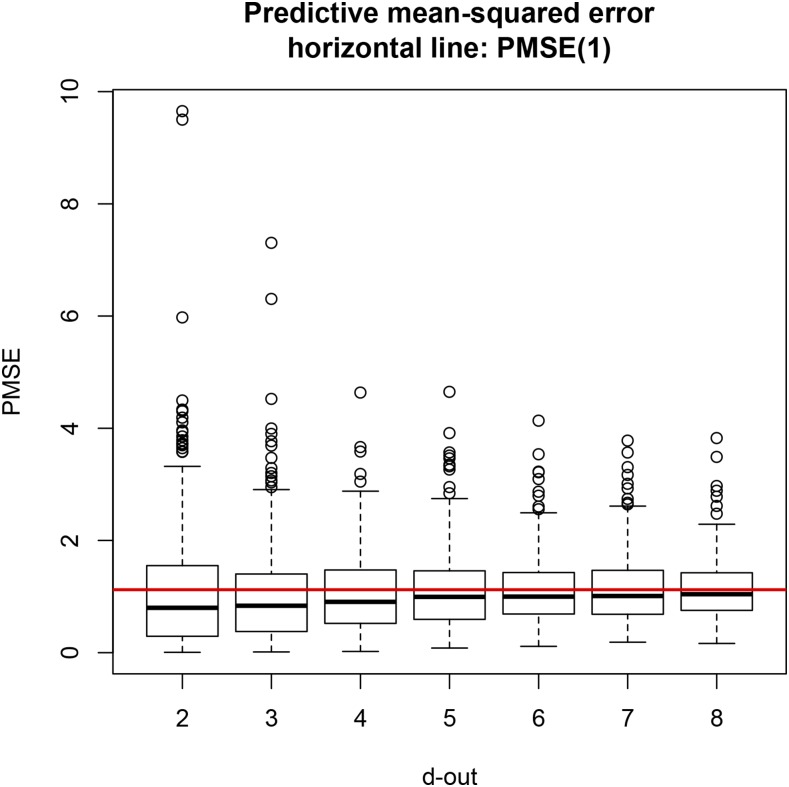

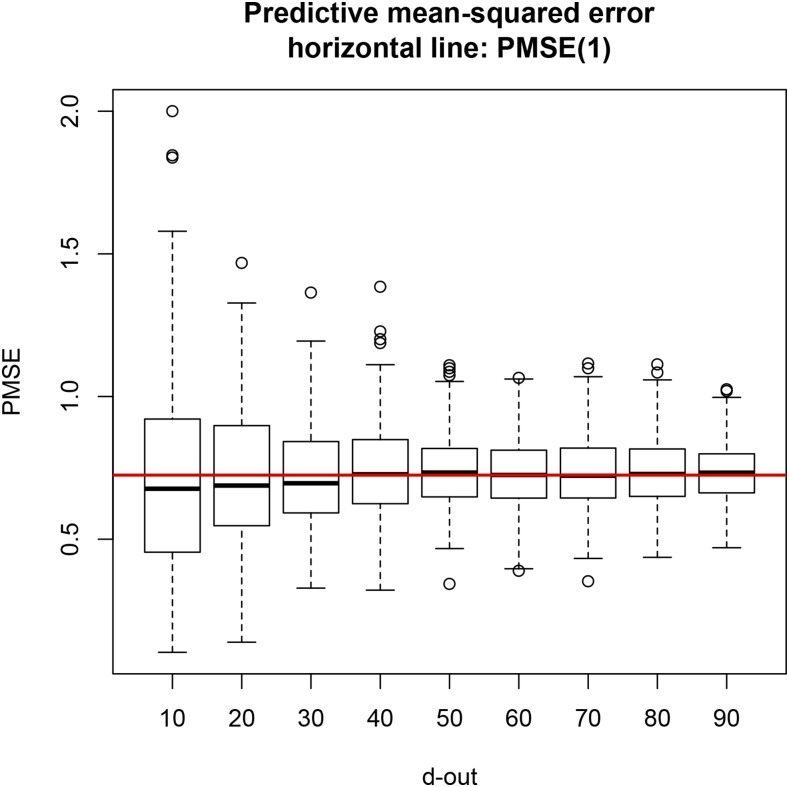

For LOO CV, regression coefficients were calculated using (5), and the predictive mean squared error was computed as in (7). For the leave-d-out CV, regressions and PMSE were computed with (9) and (11), respectively. Figure 1 shows that the median PMSE for leave-d-out CV was always smaller than the LOO PMSE (horizontal line), although it tended toward the latter as d increased, possibly due to the increasingly smaller training sample size. PMSE in LOO was 1.12, while it ranged from 0.80 to 1.04 for testing sets containing two or more lines. An increase in testing set size at the expense of some decrease in training sample size produced slightly more accurate but less variable predictions (less spread in the distribution of PMSE); this trend can be seen in the box plots depicted in Figure 1. Differences were small but LOO was always less accurate.

Figure 1.

Predictive mean squared error (PMSE) of ordinary least-squares for seven cross-validation (CV) layouts, each replicated 300 times at random. Training sets had size 599 – d (d = 2,3,…,7, and 8). Horizontal line is PMSE for leave-one-out CV.

BLUP of marker effects

The developments for ridge regression or BLUP of marker effects depend on assuming that allocation of observations into testing sets, with a concomitant decrease in training set size, does not affect the ratio of variance components appreciably.

First, we examined consequences of removing each of the 599 lines at a time on maximum likelihood estimates (MLE) of marker and residual variances. The model was as in (1), without an intercept (phenotypes were centered), assuming and were independently distributed, and with all 1279 markers used when forming An eigen-decomposition of coupled with the R function (G. de los Campos, personal communication) was used for computing MLE. It was assumed that convergence was always to a global maximum, as it was not practical to monitor the 599 implementations for convergence in each case. MLE of were found by replacing the unknown variances by their corresponding estimates.

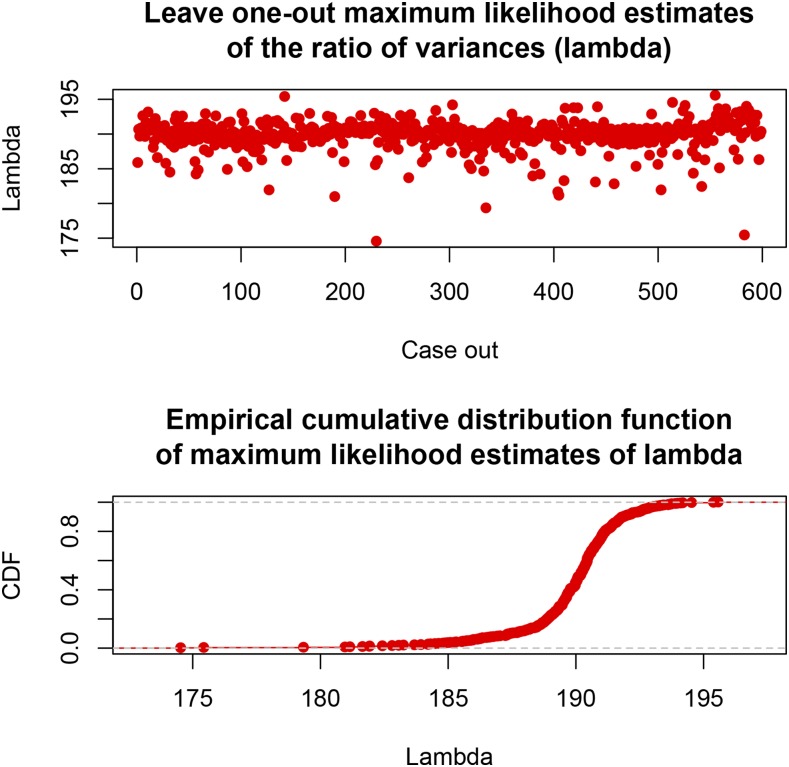

With all lines used in the analysis, Figure 2 displays the 599 estimates of λ, and the resulting empirical cumulative distribution function when LOO was used. Removal of a single line produced MLE of λ ranging from 174.5 to 195.6 (corresponding to estimates of spanning the range ); the 5 and 95 percentiles of the distribution of the LOO estimates of λ were 185.9 and 192.7, respectively. Model complexity (Ruppert et al. 2003; Gianola 2013) was gauged by evaluating the “effective number of parameters” as ; the “effective degrees of freedom” are For the entire data set with and variation of λ from 174.5 to 195.6 was equivalent to reducing from 164.2 to 155.3, with ranging from 435.8 to 443.7. These metrics confirm that the impact of removing a single line from the training process was fairly homogeneous across lines.

Figure 2.

Maximum likelihood estimates of for each of 599 leave-one-out settings; residual variance, variance of marker effects. The bottom panel gives the empirical distribution function of the estimates.

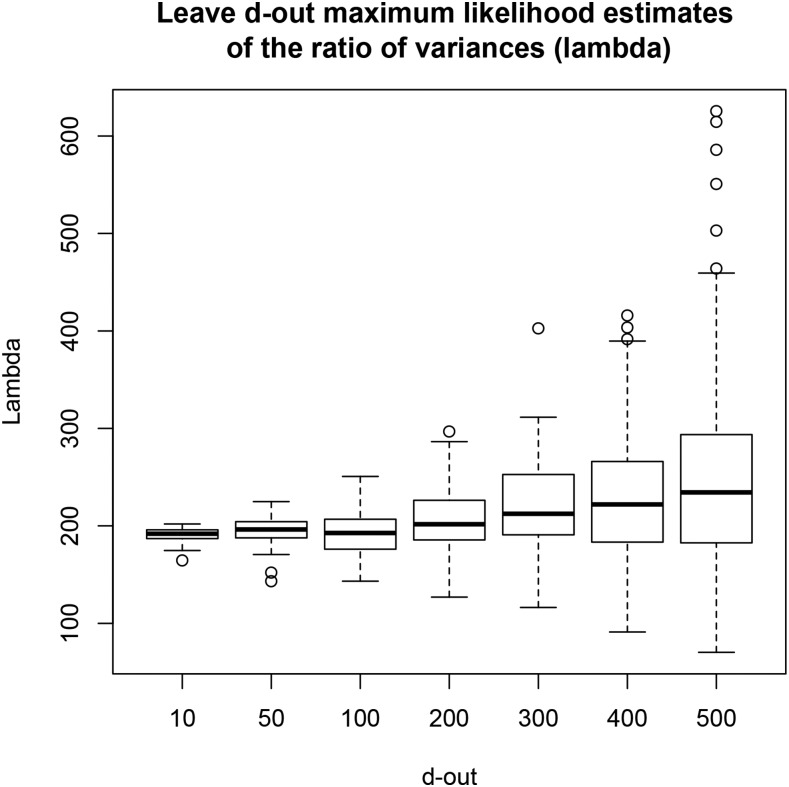

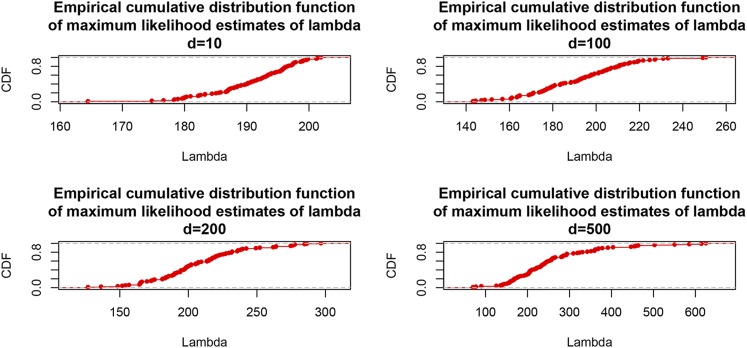

Next, we excluded 10, 50, 100, 200, 300, 400, and 500 lines from the analysis, while keeping constant. The preceding was done by sampling with replacement the appropriate number of rows from the entire data matrix and removing these rows from the analysis; the procedure was repeated 100 times at random for each value of to obtain an empirical distribution of the MLE. Figure 3 and Figure 4 depict the distributions of estimates. As d increased (training sample size decreased) the median of the estimates and their dispersion increased. Medians were 192.0 196.3 192.7 201.7 212.5 , 222.0 , and 234.3 The increase of medians as training sample size decreased can be explained as follows: (a) stronger shrinkage (larger ) must be exerted on marker effects to learn signal from 1279 markers as sample size decreases. (b) MLE of variance components have a finite sample size bias, which might be upwards for λ here; bias cannot be measured, so the preceding is conjectural.

Figure 3.

Maximum likelihood estimates of for each of seven CV settings, each replicated 100 times at random. Training sets had size 599 – d; d = 10, 50, 100, 200, 300, 400, and 500.

Figure 4.

Empirical distribution function of maximum likelihood estimates of for each of four CV settings each replicated 100 times at random residual variance, variance of marker effects. Training set sizes were 599 – d; d = 10, 100, 200, and 500.

In short, it appears that keeping λ constant in a LOO setting is reasonable; however, the estimated variance ratio was sensitive with respect to variation in training set size when 100 or more lines, i.e., 15% or more of the total number of cases, were removed for model training.

To assess the impact on PMSE of number of lines removed from training and allocated to testing, 300 testing sets for each of lines were formed by randomly sampling (with replacement) from the 599 lines. The regression model was trained on the remaining lines using the entire battery of 1279 markers with . Figure 5 shows the distribution of PMSE for each of the layouts. A comparison with Figure 1 shows that the PMSEs for BLUP were smaller than for OLS; this was expected because, even though training set sizes were smaller than those used for OLS, BLUP predictions with are more stable and the model was more complex, since 1279 markers were fitted jointly. As testing set size increased, median PMSE was 0.68 0.70 and 0.72–0.73 for the other testing set sizes. For LOO, PMSE was 0.72. As in the case of OLS, the distribution of PMSE over replicates became narrower as d grew. As anticipated, decreases in training set size produced a mild deterioration in accuracy of prediction (in an MSE sense) but generated a markedly less variable CV distribution. Testing sets of about 10% of all lines produced a distribution of PMSE with a similar spread to what was obtained with larger testing sets without sacrificing much in mean accuracy. We corroborated that attaining the largest possible training sample is not optimal if done at the expense of testing set size, because predictions are more variable.

Figure 5.

PMSE of BLUP of markers (ridge regression) for 300 testing sets in each of nine CV settings of sizes d = 10, 20, 30, 40, 50, 60, 70, 80, and 90; training set size was 599 – d.

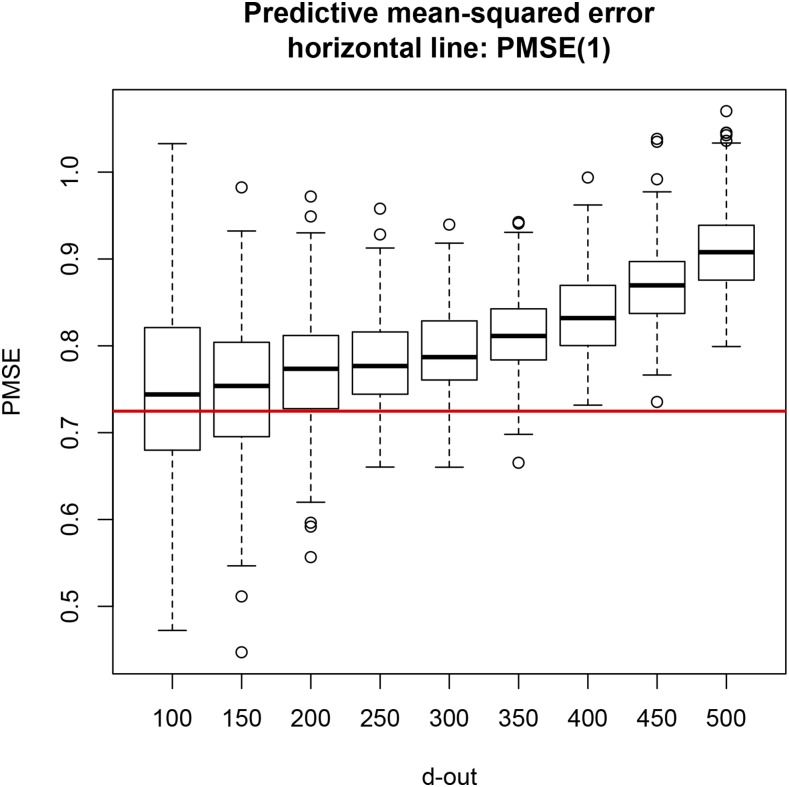

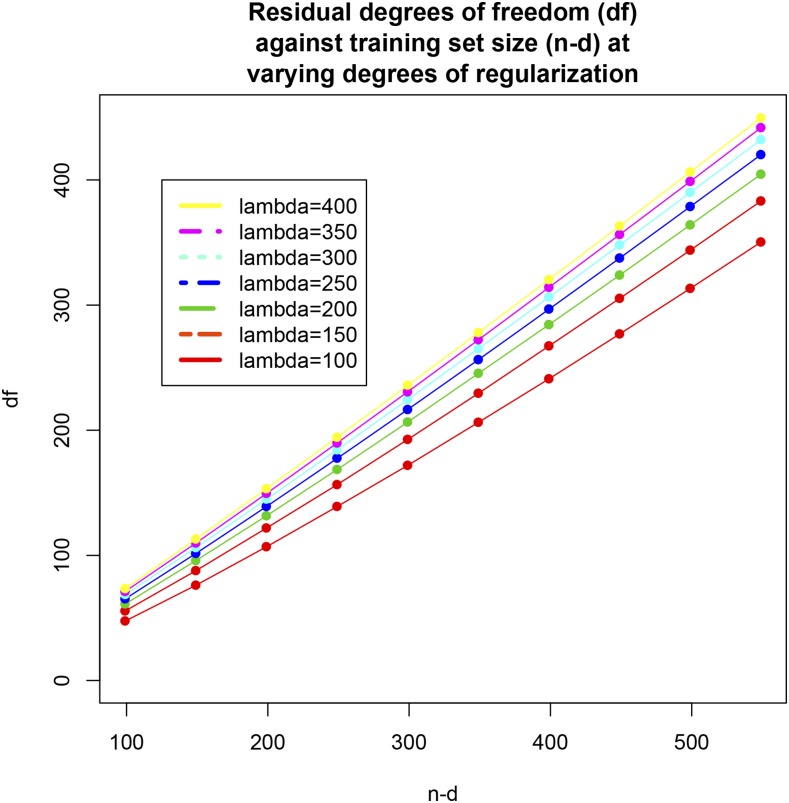

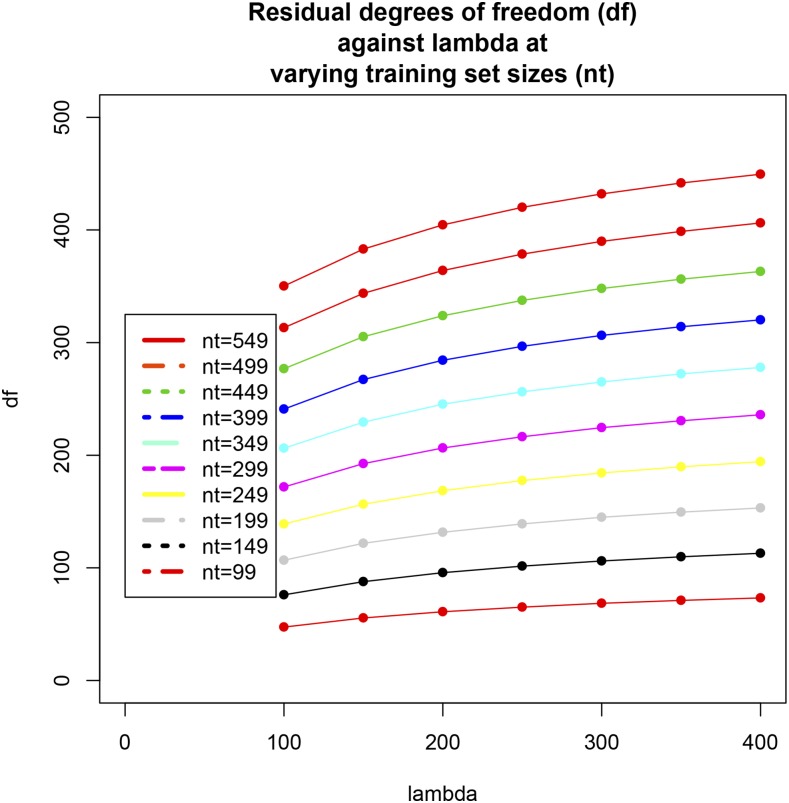

Testing sets of size to (in increments of 50 lines) were evaluated as in the preceding case, again using 300 replicates for each setting and with Comparison of Figure 6 with Figure 5 indicates that a marked deterioration in PMSE ensued, which may be due to insufficient regularization or overfitting from the decrease in training set size. For example, a testing set of size 500 implies that the model with 1279 markers was trained using only 99 inbred lines. In this case, stronger regularization (shrinkage of regression coefficients toward 0) may be needed than what is effected by To examine whether overfitting or insufficient regularization was the source of degradation in PMSE, the effective residual degrees of freedom

| (45) |

were calculated for each of 70 combinations of and each combination was replicated 50 times by sampling with replacement from and extracting the appropriate number of rows The values were averaged over the 50 replications. Figure 7 displays plotted against training set size ( 99, 149,…,499, 549): the impact of variations in λ on was amplified in absolute and relative terms as training set size increased. For instance, for , each observation in the training set contributed 0.48 and 0.74 residual degrees of freedom when λ varied from 100 to 400; when the corresponding contributions were 0.64 and 0.82. Figure 8 shows how varied with λ for each of the training set sizes. Overfitting did not seem to be the cause of degradation in PMSE because the models “preserved” a reasonable number of degrees of freedom in each case considered.

Figure 6.

PMSE of BLUP of markers (ridge regression) for 300 testing sets in each of nine CV settings of sizes d = 100, 150, 200, 250, 300, 350, 400, 450, and 500; training set size was 599 – d.

Figure 7.

Effective residual degrees of freedom against training sets sizes (n – d = 99, 149, 199, 249, 299, 349, 399, 449, 499, and 549) at selected values of the regularization parameter (λ = 100,150, 200, 250, 300, 350, and 400). Values are averages of 50 random replications.

Figure 8.

Effective residual degrees of freedom against the regularization parameter (λ = 100, 150, 200, 250, 300, 350, and 400) at various training sets sizes (n – d = 99, 149, 199, 249, 299, 349, 399, 449, 499, 549). Values are averages of 50 random replications.

These results reinforce the point that, in shrinkage-based methods, such as GBLUP or any member of the “Bayesian alphabet” (Gianola et al. 2009; Gianola 2013), there is an interplay between sample size, model complexity, and strength of regularization. The effective number of parameters in the training process is given by and here it varied from 25.64 to 198.73 Even though 1279 markers were fitted, the model was not able to estimate beyond about 200 linear combinations of marker effects. This illustrates that the “prior” (i.e., the distribution assigned to marker effects) matters greatly when . In other words, there were ∼ 1079 estimates of marker effects that are statistical artifacts from regularization, and which should not be construed as sensible estimates of marker locus effects, as pointed out by Gianola (2013). Bayesian learning would gradually improve over time if n would grow faster than which seems unlikely given a tendency toward overmodeling as sequence and postgenomic data accrue.

Genomic BLUP

Standard GBLUP, of genotypic values of the 599 lines was computed with . Subsequently, was obtained for each of the 599 lines, i.e., the GBLUP of all lines after removing line i in the training process. Euclidean distances between and were calculated as

| (46) |

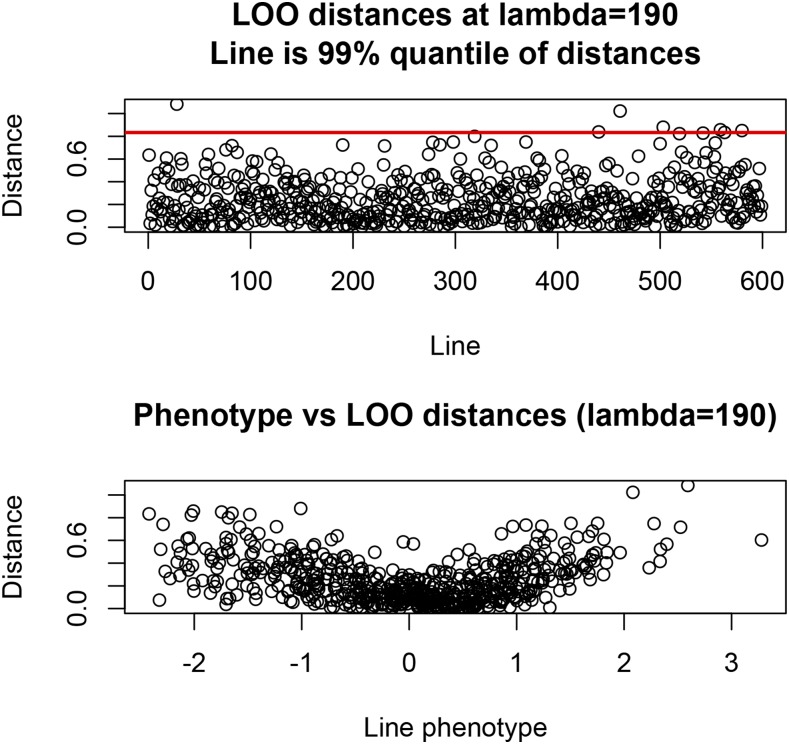

this metric measures the extent to which removal of line i influences model training. The minimum and maximum absolute distances were and 1.082, respectively, and the coefficient of variation of distances was about 80%. An observation was deemed influential when 0.83, the 99-percentile of the empirical distribution. Figure 9 (top panel) shows a scatter plot of the ; influential lines (28, 440, 461, 503, 559, and 580) correspond to points on top of the horizontal line. The relationship between the phenotype of the line excluded in the LOO CV is shown in the bottom panel: larger phenotypes tended to be associated with larger distances.

Figure 9.

Euclidean distances between genomic BLUP (GBLUP) with all observations and leave-one-out (LOO) GBLUP, by line removed from training in the CV. Bottom panel depicts the association between phenotype left out in training and distances. The regularization parameter used was .

Using data from all lines, GBLUP is ; the influence of phenotype of line j on GBLUP of line i is element of the matrix Observe that

| (47) |

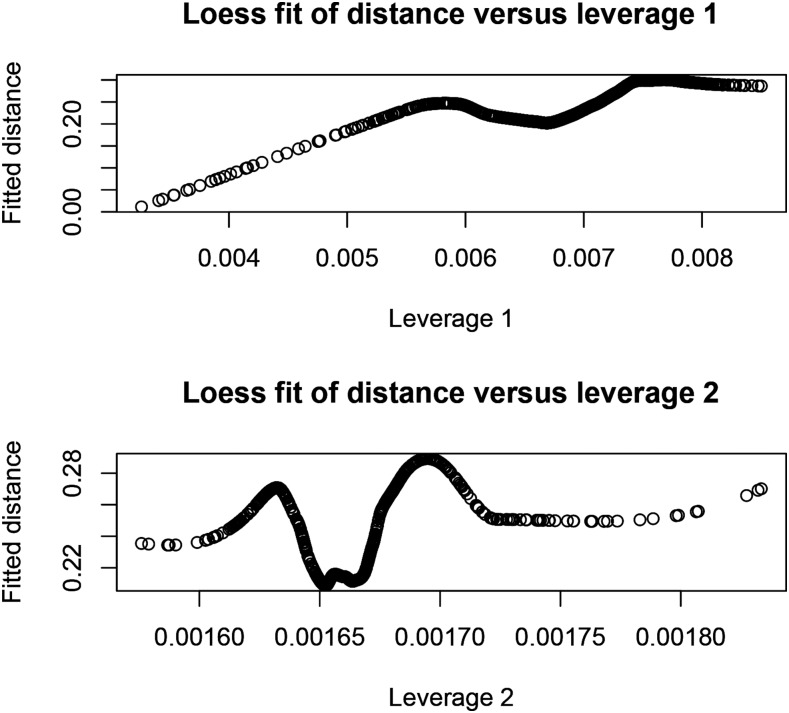

is as a matrix of regression coefficients; its row contains the regression of the genotype of line i on the n phenotypes. A measure of overall influence (leverage) of line j is the average of values (or absolute values) of elements in column j of Clearly, leverages depend on relatedness structure and on λ but not on phenotypes. Figure 10 depicts plots of LOESS regressions (Cleveland 1979) of Euclidean distance between GBLUP calculated with all lines, and LOO GBLUP on two measures of leverage: the average of absolute values of over rows for each line (leverage 1), and the average of elements of over rows, by line (leverage 2). LOESS fits (span parameter equal to 0.50) indicated that leverage 1 informs about the impact of removing a specific line in LOO: the larger the leverage 1 of a line, the larger the effect of its removal from the training process.

Figure 10.

Nonparametric (LOESS) fits of Euclidean distance between GBLUP with all observations and LOO GBLUP on two measures of influence (leverage) a line has on model training. Leverage 1 is the average of absolute values of the regression of all lines on the phenotype of a given line; leverage 2 is the average of such regressions.

RKHS

We built a kernel matrix with typical element (ranging between 0 and 1)

| (48) |

for Here is the vector of marker genotypes in line , and are bandwidth parameters tuned to establish “global,” “regional” and “local” similarities between individuals (as h increases, similarity decreases); , and are weights assigned to the three sources of similarity, such that and We arbitrarily chose , and and , and . From we created three additional kernels by placing for leading to matrices , and Mean off-diagonal elements of the four kernel matrices were 0.73 , 0.29 , 0.09 , and 0.47 these values can be interpreted as correlations between pairs of individuals. Hence, and produced the highest and lowest degrees of correlation, respectively; complexity of the models increases from kernel 1 to kernel 3 because the fit to the data increases with h (de los Campos et al. 2009, 2010).

The four no-intercept RKHS models had the basic form

| (49) |

where was either as in (48) or Variance components were estimated by maximum likelihood, producing as estimates of , , and The effective number of parameters was calculated (e.g., kernel 1) as yielding 224.5, 319.2, and 376.3 for kernel matrices 1, 2, and 3, respectively; for the effective number of parameters fitted was 330.3. As expected, model complexity increased as the model became more “local.”

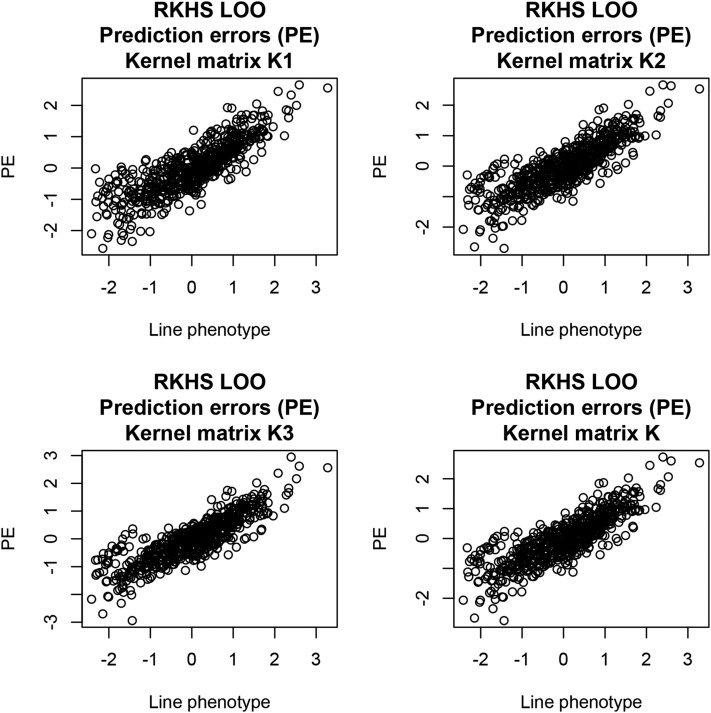

We fitted the four RKHS models to all 599 lines, and conducted a LOO CV for each model. In the fitting process, the corresponding regularization parameter was employed; e.g., for . For each of the models, RKHS predictions of genotypic values were calculated as

| (50) |

The implicit dependence of predictions on bandwidths and weights is indicated in the notation above, but not used hereinafter. In LOO (line j left out in the training process), predictions and predictive mean-squared errors are calculated as for LOO GBLUP, that is,

| (51) |

where is the diagonal element of and

| (52) |

Predictive MSEs were 0.6795 0.6446 0.6555 , and 0.6439 ; predictive correlations were 0.566, 0.597, 0.591, and 0.598. Differences between kernels with respect to the criteria used were nil, but the model combining three kernels conveying differing degrees of locality had a marginally smaller MSE and a slightly larger correlation. LOO prediction errors plotted against line phenotypes are shown in Figure 11 for the four kernels used. Prediction errors were larger in absolute value for lines with lowest and highest grain yields, suggesting that the model may benefit by accounting for possibly heterogeneous residual variances. and captured some substructure in the distribution of fitted residuals. The more global kernel arguably capturing mostly additive effects, did not suggest any substructure, which reemerged when the three kernels were combined into The preceding exercise illustrates that predictive correlations and PMSE do not fully describe the performance of a prediction machine.

Figure 11.

LOO prediction errors (testing set) of four reproducing kernel Hilbert spaces (RKHS) regression models against line phenotypes.

Bayesian GBLUP with known variance components

Bayesian GBLUP with known variances has a closed form solution: using all data, the posterior distribution is where and . Set , and is centered.

This problem was attacked (with Monte Carlo error) by drawing independent samples from the 599-variate normal posterior distribution; no MCMC is needed. Using (34), the importance sampling weights for LOO are

| (53) |

where is sample s for line i; is drawn from . The importance sampling weight becomes

| (54) |

Observe that the likelihood that confers to is inversely proportional to Hence, samples in which the phenotype removed confers little likelihood to the receive more weight.

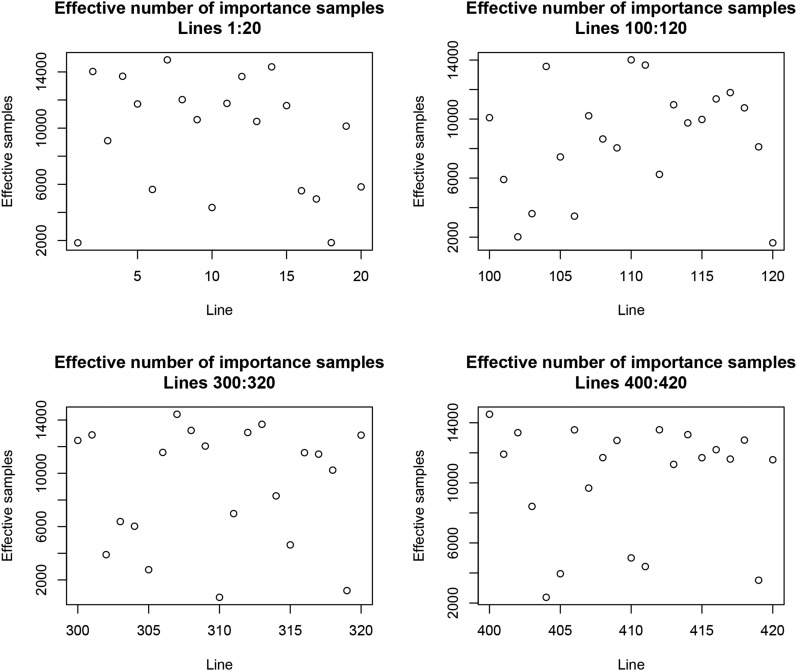

We took 15,000 independent samples from The effective number of weights per line, calculated with (38) ranged from 76.4 to 14,983.5; the median (mean) weight was 10,991.0 (9789.2), and the first and third quartiles of the distribution were 6826.1 and 14,983.5, respectively. On average, 1.54 independent samples were required for drawing an effectively independent LOO posterior sample. Figure 12 illustrates the variability among some arbitrarily selected lines of the mean number of importance weights. A phenotype having a small mean importance weight would be “surprising” with respect to the model. However, there are theoretical and numerical issues with the weights used here, a point retaken in the discussion.

Figure 12.

Effective number of importance samples for LOO Bayesian GBLUP (given the variances) for selected lines; 15,000 independent samples were drawn from the posterior distribution of genotypic values.

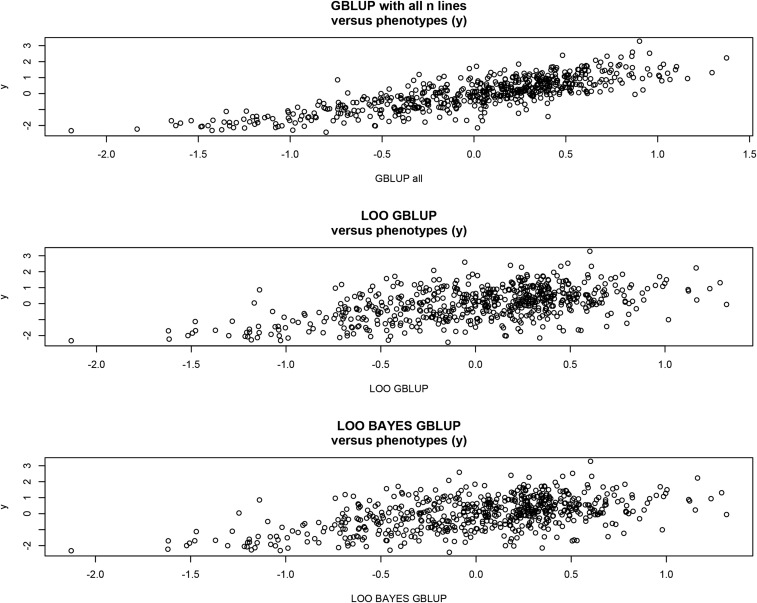

Figure 13 shows that GBLUP using the entire sample of size n fitted closely. Correlations between predictors were 0.91 in all cases. Mean-squared errors were 0.40 (GBLUP, entire sample), 0.73 (Bayes LOO), and 0.73 (LOO GBLUP). The correlation between predictions and phenotypes was 0.81 for GBLUP (entire sample), 0.52 for LOO GBLUP, and 0.51 for Bayes LOO.

Figure 13.

Associations between phenotypes and predictions (GBLUP with all lines used in training; LOO GBLUP, leave-one-out genomic BLUP; LOO BAYES GBLUP, direct sampling from the posterior distribution of genotypic values of followed by importance sampling to obtained the LOO predictions.

The importance sampling scheme worked well. Ionides (2008) suggested a modification of the importance ratio assigned to sample s and data point , as follows

| (55) |

where is the average (over samples) importance sample ratio for line i in the context of the wheat data set. Ionides (2008) argued that truncation of large importance sampling weights (TIS) would be less sensitive with respect to the importance sampling density function used (the posterior distribution using all data points in our case) than IS. We converted the IS weights into normalized TIS weights using rule (55) applied to the normalized IS weights. As mentioned earlier, the effective number of normalized IS weights ranged between 76.4 and 14983.5; for normalized TIS weights, the effective number spanned from 691.9 to 13,970. TIS produced more stable weights than standard IS. However, truncation of weights introduces a bias, which may affect predictive performance adversely (Vehtari et al. 2016). However, it may be that TIS made the weights “too homogeneous,” thus creating a bias toward the posterior distribution obtained with all data points. If all weights were equal to a constant, IS or TIS would retrieve the full posterior distribution.

Discussion

Cross-validation (CV) has become an important tool for calibrating prediction machines in genome-enabled prediction (Meuwissen et al. 2001), and is often preferred over resampling methods such as bootstrapping. It gives a means for comparing and calibrating methods and training sets (e.g., Isidro et al. 2015). Typically, CV requires dividing data into training and testing folds, and the models must be run many times. An extreme form of CV is LOO; here if the sample has size each observation is removed in the training process, and labeled as a testing set of size 1. Hence, n different models must be run to complete a LOO CV.

Our paper presented statistical methodology aimed at enabling extensive CV in genome-enabled prediction using a suite of methods. These were OLS, BLUP on markers, GBLUP, RKHS, and Bayesian procedures. Formulae were derived that enable arriving at the predictions that would be obtained if one or more cases were to be excluded from the training process and declared as members of the testing set. In the cases of OLS, BLUP, GBLUP, and RKHS, and assuming that the ratio of variance components do not change appreciably from those that apply to the entire sample, the formulae are exact.

The deterministic formulae can also be applied in a multiple-kernel or multiple-random factors setting, given the variance components. For example, consider the bikernel RKHS regression (e.g., de los Campos et al. 2010; Tusell et al. 2014)

| (56) |

Here, is genetic signal captured by pedigree, and is genetic signal captured by markers; and are unknown and independently distributed RKHS regression coefficients, and and are positive-definite similarity matrices. Suppose that , i.e., the numerator relationship matrix based on the assumption of additive inheritance, and that is a Gaussian kernel such as those employed earlier in the paper. The standard assumption for the RKHS regression coefficients is

| (57) |

where and are variance components associated with kernels and respectively. Hence, and The BLUP of and can be found by solving the linear system

| (58) |

where and . Hence, the solutions satisfy

| (59) |

| (60) |

Applying the logic leading to (102) for the LOO situation, the preceding equations can be written as

| (61) |

| (62) |

where are the diagonal elements of respectively; and are the corresponding solutions to the system of equations (58). The prediction of the left-out phenotype is

The situation is different for Bayesian models solved by sampling methods such as MCMC. Typically, there is no closed form solution, except in some stylized situations, so sampling must be used. The Bayesian model must be run with the entire data set and posterior samples weighted, e.g., via importance sampling, to convert realizations into draws pertaining to the posterior distribution that would result from using just the CV training set. Unfortunately, importance weights can be extremely variable. In (34), it can be seen that weights are proportional to the reciprocal of likelihoods evaluated at the posterior samples, so if a data point (or a vector of data points) “left out” confers a tiny likelihood to the realized value of the parameter sampled, then the sample is assigned a very large weight; on the other hand, if the likelihood is large, the weight is small. This phenomenon produces a large variance among weights, which we corroborated empirically. Another view at the issue at stake is as follows: the importance weight is hence, if the posterior obtained with all data points has much thinner tails than the posterior density constructed by excluding one or more cases, the weights can “blow up.”

The preceding problem would be exacerbated by including more than one observation in the testing set. Vehtari et al. (2016) examined seven data sets, and used “brute force” LOO MSE as a gold standard to examine the performance of various forms of importance sampling. TIS gave a better performance than standard importance sampling (IS) weights in two out of seven comparisons, with the standard method being better in three of the data sets; there were two ties. Vehtari et al. (2016) suggested another method called Pareto smoothed importance sampling (PSIS) that was better than IS in four of the data sets (two ties), and better than TIS in three of the analyses (two ties). Calculation of PSIS is involved, requiring several steps in our context: (a) compute IS ratios; (b) for each of the 599 lines, fit a generalized Pareto distribution to the 20% largest values found in (a); (c) replace the largest M IS ratios by expected values of the order statistics of the fitted generalized Pareto distribution, where (d) for each line, truncate the new ratios. Clearly, this procedure does not lend itself to large scale genomic data, and the results of Vehtari et al. (2016), obtained with small data sets and simple models, are not conclusive enough. Additional research is needed to examine whether TIS, IS, or PSIS are better for genome-enabled prediction.

It is known (e.g., Henderson 1973, 1984; Searle 1974) that the best predictor, that is, the function of the data with the smallest squared prediction error (MSE) under conceptual repeated sampling (i.e., infinite number of repetitions over the joint distribution of predictands and predictors) is . This property requires knowledge of the form of the joint distribution, and of its parameters. In the setting of the case study, under multivariate normality, and with a zero-mean model and known variance components, GBLUP is the best predictor. However, in CV, the property outlined above does not hold. One reason is that the data set represents a single realization of the conceptual scheme. Another reason is that incidence and similarity matrices change at random in CV, plus parameters are estimated from the data at hand. For example, if datum i is removed from the analysis, the training model genomic relationship matrix becomes whereas, if observation j is removed, the matrix used is Further, the entire data set is used in the CV, so yet-to-be observed data points appear in the training process at some point. The setting of best prediction requires that the structure of the data remains constant over repeated sampling, with the only items changing being the realized values of the data , and of the unobserved genotypic values The CV setting differs from the idealized scheme, and expectations based on theory may not always provide the best effective guidance in predictive inference.

In conclusion, CV appears to be the best gauge for calibrating prediction machines. Results presented here provide the basis for conducting extensive cross-validation from results of a single run with all data. Future research should evaluate importance sampling schemes for more complex Bayesian models, e.g., those using thick-tailed processes or mixtures as prior distributions. An important challenge is to make the procedures developed here computationally cost-effective, so that software for routine use can be developed.

Acknowledgments

The Editor and two anonymous reviewers suggested useful comments concerning the structure of the manuscript. We are grateful to the Institut Pasteur de Montevideo, Uruguay, for providing office space and facilities to D.G. from January–March 2016. Part of this work was done while D.G. was a Hans Fischer Fellow at the Institute of Advanced Study, Technical University of Munich-München, Germany; their support is gratefully acknowledged. Research was partially supported by a United States Department of Agriculture (USDA) Hatch Grant (142-PRJ63CV) to D.G., and by the Wisconsin Agriculture Experiment Station.

Appendix A: Prediction with Least-Squares

Following Seber and Lee (2003), consider out-of-sample prediction, and suppose that the distribution of residuals in left-out data (testing set) of size is as in a training set of size n, with the training phenotypes represented as ; residuals in training and testing sets are assumed to be mutually independent. In genome-enabled prediction, (1) is merely an instrumental model that may bear little resemblance with the state of nature, so we let the true distribution be allowing for the almost certain possibility that , where is the marker matrix in the testing set. In quantitative genetics, is an unknown function of quantitative trait locus (QTL) genotypes and of their effects; the latter may not be additive, and dominance or epistasis may enter into the picture without contributing detectable variance.

Model training yields , and the point predictor of is . The mean squared error of prediction , conditionally on training data and on but averaged with respect of all possible testing sets of size, , is

| (63) |

Define the conditional prediction bias as

| (64) |

Standard results on expectation of quadratic forms applied to (63) produce

| (65) |

Unconditionally, that is, by averaging over all possible sets of training (testing) data of size n with marker configuration

| (66) |

where

| (67) |

Here, the expected prediction bias is

| (68) |

after assuming for convenience of representation that i.e., that testing and training sets have the same size and unknown true mean ; note that is a matrix of “influences” describing how variation in training phenotypes affects predictions. Using (68) in (67), and this in (66), the expected prediction mean-squared error averaged over an infinite number of training and testing sets, but with fixed marker genotype matrices is

| (69) |

If that is, in the special case where the same genotypes appear in the training and testing sets, and so the preceding equation becomes

| (70) |

with

| (71) |

and typical element . Expression (70) shows that the uncertainty of prediction, as measured by variance (second term) increases with p (model complexity) and decreases with (equal to n here). The impact of increasing complexity on bias is difficult to discuss in the absence of knowledge of QTL and their effects. When , the training data set fits better and better and the model increasingly copies both signal and error: predictions become increasingly poorer.

Note that

| (72) |

where . Applying this result in (8)

| (73) |

where is the average squared prediction bias, and is the average prediction error variance. Note that

| (74) |

and that

| (75) |

Employing (74) and (75) in (73),

| (76) |

Examine the difference in expected prediction mean-squared error between the LOO procedure, as given in (76), and that from the “distinct testing set” layout given in (70), and set . One obtains

| (77) |

Since

| (78) |

The are bounded between 0 and 1, so the two terms in (78) are positive.

Appendix B: Matrix Algebra Result

Early derivations of the mixed model equations used for computing best linear unbiased estimation of fixed effects, and BLUP of random effects in mixed linear models used by Henderson’s (e.g., Henderson et al. 1959; Henderson 1975, 1984) made use of the Sherman-Morrison-Woodbury formula (Seber and Lee 2003). Moore-Penrose generalizations are in Deng (2011).

Assuming that the required inverses stated below exist, the following result holds

| (79) |

A special case is when where and denote column and row vectors; here

| (80) |

| (81) |

Appendix C: LOO Ordinary Least-Squares

In (4), we have

| (82) |

Let the regression “hat matrix” be , with diagonal elements Hence, (4) can be written as

| (83) |

Using (83), the error of fitting phenotype is then

| (84) |

Appendix D: Leave-d-Out Ordinary Least-Squares

If d cases are removed from the training data set, In (79), put , and , to obtain the coefficient matrix

| (85) |

for d being one of the possible For example, if , and or 500, then and respectively. Clearly, it is not feasible to consider all possible configurations of training and testing sets. Note that will not exist for all combinations of n and d. For the leave-d-out least-squares estimator given to exist, it is needed that

The right-hand side vector in least-squares takes the form

| (86) |

Let be the part of the hat matrix . The OLS estimator, , can be written as

| (87) |

Further,

| (88) |

Appendix E: LOO and Leave-d-Out BLUP of Markers

As indicated in (15), finding BLUP of markers requires computing the coefficient matrix in the corresponding equations, and the vector of right hand sides. Following the reasoning used for least-squares

| (89) |

If observation is to be predicted in a LOO CV, and , so

where Letting the preceding becomes

| (90) |

where is the residual from the ridge regression-BLUP analysis obtained with the entire sample.

For leave-d-out the algebra is as with least-squares, producing as coefficient matrix

| (91) |

and the right-hand sides are as before Letting , algebra similar to the one producing (88) yields

| (92) |

where is the dimensional residual from ridge regression BLUP applied to the entire sample.

Appendix F: LOO and Leave-d-Out GBLUP

LOO GBLUP

In LOO CV, one seeks to estimate the mean of the predictive distribution of the left-out data point Since and is independent of one has

| (93) |

More generally, we are interested in predicting all n genetic values Since our zero-mean BLUP is interpretable as the mean of the conditional or posterior distribution ( denotes and here), for denoting a density function, it follows that

| (94) |

since due to independence of residuals. The preceding posterior is Gaussian (given the dispersion parameters) because the prior and the sampling model are both Gaussian; hence, the mode and the median of this distributions are identical. Apart from an additive constant

| (95) |

or, explicitly,

| (96) |

where , and is a vector with a 1 in position i and 0’s elsewhere. The gradient of the log-posterior with respect to is

| (97) |

note that . Setting to zero yields the joint mode, i.e., the of all n lines (but using ) as the solution to the linear system

| (98) |

where is the BLUP of calculated with all observations other than is the coefficient matrix used for calculating using all data points; is as defined earlier and is an matrix of except for a 1 in position . Observe that

| (99) |

Further,

| (100) |

where is the column of , and is the element of . Thus

| (101) |

Inspection of the preceding expression reveals that element i of , that is, the genetic value of the observation left out in the LOO CV can be computed as

| (102) |

If desired, the remaining elements of can be calculated recursively as

| (103) |

The observed predictive MSE is given by

| (104) |

Leave-d-Out GBLUP

Consider next a leave-d-out setting. Assume that observations have been reordered such that phenotypes of members of the testing set are placed in the upper d positions in so and Thus

| (105) |

where is a matrix partitioned into an identity submatrix, and with a 0 as each element of the partition. Hence

| (106) |

Setting the vector of differentials to , and rearranging

| (107) |

where is with the d phenotypes in the testing set replaced by . Application of (79) produces

| (108) |

Let now the inverse of the coefficient matrix for computing the BLUP of all individuals, or lines from all data points in , be partitioned as

| (109) |

Then

| (110) |

| (111) |

| (112) |

Inspection of the form of (107) leads as BLUP predictor of phenotypes of individuals in the testing set to

| (113) |

with CV prediction error

and realized predictive mean-squared error

| (114) |

If desired, the BLUP predictors of the remaining genotypes (based on ) can be calculated as

| (115) |

All preceding developments rest on assuming that exclusion of d observations does not modify λ in training sets in an appreciable manner.

Appendix G: Cross-Validation Importance Sampling

The posterior expectation of the vector of marker effects is

| (116) |

| (117) |

Here, is an “importance sampling” weight (Albert, 2009). Bayes theorem yields

| (118) |

and employing this form of in (117) produces

| (119) |

The implication is that, given draws from the full-posterior distribution, the posterior expectation (119) can be estimated as

| (120) |

where

| (121) |

Footnotes

Communicating editor: D. J. de Koning

Literature Cited

- Albert J., 2009. Bayesian Computation with R, Ed. 2 Springer, New York. [Google Scholar]

- Astle W., Balding D., 2009. Population structure and cryptic relatedness in genetic association studies. Stat. Sci. 24: 451–471. [Google Scholar]

- Cantet R. J. C., Fernando R. L., Gianola D., 1992. Bayesian inference about dispersion parameters of univariate mixed models with maternal effects: theoretical considerations. Genet. Sel. Evol. 24: 107–135. [Google Scholar]

- Chesnais J. P., Cooper T. A., Wiggans G. R., Sargolzaei M., Pryce J. E., et al. , 2016. Using genomics to enhance selection of novel traits in North American dairy cattle. J. Dairy Sci. 99: 2413–2427. [DOI] [PubMed] [Google Scholar]

- Cleveland W. S., 1979. Robust locally weighted regression and smoothing scatterplots. J. Am. Stat. Assoc. 74: 829–836. [Google Scholar]

- Crossa J., de los Campos G., Pérez P., Gianola D., Burgueño J., et al. , 2010. Prediction of genetic values of quantitative traits in plant breeding using pedigree and molecular markers. Genetics 186: 713–724. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Daetwyler H. D., Villanueva B., Woolliams J. A., 2008. Accuracy of predicting the genetic risk of disease using a genome-wide approach. PLoS One 3: e3395. [DOI] [PMC free article] [PubMed] [Google Scholar]

- de los Campos G., Gianola D., Rosa G. J. M., 2009. Reproducing kernel Hilbert spaces regression: a general framework for genetic evaluation. J. Anim. Sci. 87: 1883–1887. [DOI] [PubMed] [Google Scholar]

- de los Campos G., Gianola D., Rosa G. J. M., Weigel K. A., Crossa J., 2010. Semi-parametric genomic-enabled prediction of genetic values using reproducing kernel Hilbert spaces methods. Genet. Res. 92: 295–308. [DOI] [PubMed] [Google Scholar]

- de los Campos G., Gianola D., Allison D. A. B., 2011. Predicting genetic predisposition in humans: the promise of whole-genome markers. Nat. Rev. Genet. 11: 880–886. [DOI] [PubMed] [Google Scholar]

- de los Campos G., Hickey J. M., Pong-Wong R., Daetwyler H. D., Calus M. P. L., 2013. Whole-genome regression and prediction methods applied to plant and animal breeding. Genetics 193: 327–345. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dempfle L., 1977. Relation entre BLUP (best linear unbiased prediction) et estimateurs bayésiens. Ann. Genet. Sel. Anim. 9: 27–32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Deng C. Y., 2011. A generalization of the Sherman-Morrison-Woodbury formula. Appl. Math. Lett. 24: 1561–1564. [Google Scholar]

- Erbe, M., E. C. G. Pimentel, A. R. Sharifi, and H. Simianer, 2010 Assessment of cross-validation strategies for genomic prediction in cattle. Book of Abstracts of the 9th World Congress of Genetics Applied to Livestock Production, Leipzig, Germany, p. S. 129. [Google Scholar]

- Gelfand A. E., 1996. Model determination using sampling-based methods, pp. 145–162 in Markov Chain Monte Carlo in Practice, edited by Gilks W. R., Richardson S., Spiegelhalter D. J. Chapman and Hall, London. [Google Scholar]

- Gelfand, A., D. K. Dey, and H. Chang, 1992 Model determination using predictive distributions with implementation via sampling-based methods. Technical Report no. 462. Prepared under contract for the Office of Naval Research. Stanford University, Stanford, CA.

- Gianola D., 2013. Priors in whole genome regression: the Bayesian alphabet returns. Genetics 194: 573–596. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gianola D., de los Campos G., 2008. Inferring genetic values for quantitative traits non-parametrically. Genet. Res. 90: 525–540. [DOI] [PubMed] [Google Scholar]

- Gianola D., Fernando R. L., 1986. Bayesian methods in animal breeding theory. J. Anim. Sci. 63: 217–244. [Google Scholar]

- Gianola D., Rosa G. J. M., 2015. One hundred years of statistical developments in animal breeding. Annu. Rev. Anim. Biosci. 3: 19–56. [DOI] [PubMed] [Google Scholar]

- Gianola D., van Kaam J. B. C. H. M., 2008. Reproducing kernel Hilbert spaces regression methods for genomic assisted prediction of quantitative traits. Genetics 178: 2289–2303. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gianola D., Fernando R. L., Stella A., 2006. Genomic assisted prediction of genetic value with semi-parametric procedures. Genetics 173: 1761–1776. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gianola D., de los Campos G., Hill W. G., Manfredi E., Fernando R. L., 2009. Additive genetic variability and the Bayesian alphabet. Genetics 187: 347–363. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gianola D., Okut H., Weigel K. A., Rosa G. J. M., 2011. Predicting complex quantitative traits with Bayesian neural networks: a case study with Jersey cows and wheat. BMC Genet. 12: 87 DOI: . 10.1186/1471-2156-12-87 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gianola D., Weigel K. A., Krämer N., Stella A., Schön C. C., 2014a Enhancing genome-enabled prediction by bagging genomic BLUP. PLoS One 9(4): e91693. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gianola, D., G. Morota, and J. Crossa, 2014b Genome-enabled prediction of complex traits with kernel methods: what have we learned? Proceedings of the 10th World Congress on Genetics Applied to Livestock Production, Vancouver, British Columbia, Canada. Available at: https://asas.org/wcgalp-proceedings.

- González-Recio O., Rosa G. J. M., Gianola D., 2014. Machine learning methods and predictive ability metrics for genome-wide prediction of complex traits. Livest. Sci. 166: 217–231. [Google Scholar]

- Hastie T., Tibshirani R., Friedman J., 2009. The Elements of Statistical Learning, Ed. 2 Springer, New York. [Google Scholar]

- Henderson, C. R., 1973 Sire evaluation and genetic trends, Proceedings of the Animal Breeding and Genetics Symposium in Honor of Dr. Jay L. Lush. American Dairy Science Association & American Society of Animal Science, Champaign, IL, pp. 10–41. [Google Scholar]

- Henderson C. R., 1975. Best linear unbiased estimation and prediction under a selection model. Biometrics 31: 423–449. [PubMed] [Google Scholar]

- Henderson C. R., 1984. Application of Linear Models in Animal Breeding, University of Guelph, Canada. [Google Scholar]

- Henderson C. R., Kempthorne O., Searle S. R., von Krosigk C. M., 1959. Estimation of environmental and genetic trends from records subject to culling. Biometrics 15: 192–218. [Google Scholar]

- Heslot N., Yang H. P., Sorrells M. E., Jannink J. L., 2012. Genomic selection in plant breeding: a comparison of models. Crop Sci. 52: 146–160. [Google Scholar]

- Hoerl A. E., Kennard R. W., 1970. Ridge regression: applications to non-orthogonal problems. Technometrics 12: 69–82. [Google Scholar]

- Ionides E. L., 2008. Truncated importance sampling. J. Comput. Graph. Stat. 17: 295–311. [Google Scholar]

- Isidro J., Jannink J. L., Akdemir D., Poland J., Heslot N., et al. , 2015. Training set optimization under population structure in genomic selection. Theor. Appl. Genet. 128: 145–158. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jiang Y., Reif J. C., 2015. Modeling epistasis in genomic selection. Genetics 201: 759–768. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lehermeier C., Wimmer V., Albrecht T., Auinger H. J., D. Gianolaet al. , 2013. Sensitivity to prior specification in Bayesian genome-based prediction models. Stat. Appl. Genet. Mol. Biol. 12: 1–17. [DOI] [PubMed] [Google Scholar]

- Long N., Gianola D., Rosa G. J. M., Weigel K. A., 2011. Marker-assisted prediction of non-additive genetic values. Genetica 139: 843–854. [DOI] [PubMed] [Google Scholar]

- López de Maturana E., Chanok S. J., Picornell A. C., Rothman N., Herranz J., et al. , 2014. Whole genome prediction of bladder cancer risk with the Bayesian LASSO. Genet. Epidemiol. 38: 467–476. [DOI] [PubMed] [Google Scholar]

- MacLeod I. M., Hayes B. J., Vander Jagt C. J., Kemper K. E., Haile-Mariam M.et al. , 2014. A Bayesian analysis to exploit imputed sequence variants for QTL discovery. Proceedings of 10th World Congress of Genetics Applied to Livestock Production. Vancouver, British Columbia, Canada. [Google Scholar]

- Makowsky R., Pajewski N. M., Klimentidis Y. C., Vázquez A. I., Duarte C. W., et al. , 2011. Beyond missing heritability: prediction of complex traits. PLoS Genet. 7: e1002051. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Martini J. W. R., Wimmer V., Erbe M., Simianer H., 2016. Epistasis and covariance: how gene interaction translates into genomic relationship. Theor. Appl. Genet. 10.1007/s00122-016-2675-5. [DOI] [PubMed] [Google Scholar]

- Matos C. A. P., Ritter C., Gianola D., Thomas D. L., 1993. Bayesian analysis of lamb survival using Monte Carlo numerical integration with importance sampling. J. Anim. Sci. 71: 2047–2054. [DOI] [PubMed] [Google Scholar]

- Meuwissen T. H. E., Hayes B. J., Goddard M. E., 2001. Prediction of total genetic value using genome-wide dense marker maps. Genetics 157: 1819–1829. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mrode R., 2014. Linear Models for the Prediction of Animal Breeding Values, Ed. 3 CABI, Wallingford. [Google Scholar]

- Ober U., Huang W., Magwire M., Schlather M., Simianer H., et al. , 2015. Accounting for genetic architecture improves sequence based genomic prediction for a Drosophila fitness trait. PLoS ONE 10: e0126880. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Okut H., Gianola D., Rosa G. J. M., Weigel K. A., 2011. Prediction of body mass index in mice using dense molecular markers and a regularized neural network. Genet. Res. 93: 189–201. [DOI] [PubMed] [Google Scholar]

- Pérez P., de los Campos G., 2014. Genome-wide regression and prediction with the BGLR statistical package. Genetics 198: 483–495. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rincent R., Moreau L., Monod H., Kuhn E., Melchinger A. E., et al. , 2014. Recovering power in association mapping panels with variable levels of linkage disequilibrium. Genetics 197: 375–387. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Robinson G. K., 1991. That BLUP is a good thing: the estimation of random effects. Stat. Sci. 6: 15–32. [Google Scholar]

- Rubin D. B., 1988. Using the SIR algorithm to simulate posterior distributions, pp. 395–402 in Bayesian Statistics 3, edited by Bernardo J. M., DeGroot M. H., Lindley D. V., Smith A. F. M. Oxford University Press, Cambridge, MA. [Google Scholar]

- Ruppert D., Wand M. P., Carroll R. J., 2003. Semiparametric Regression, Cambridge University Press, New York. [Google Scholar]

- Searle S. R., 1974. Prediction, mixed models and variance components, Reliability and Biometry, edited by Proschan F., Serfling R. J. Society for Industrial and Applied Mathematics, Philadelphia. [Google Scholar]

- Seber G. A. F., Lee A. J., 2003. Linear Regression Analysis, Wiley-Blackwell, New York. [Google Scholar]

- Smith A. F. M., Gelfand A. E., 1992. Bayesian statistics without tears: a sampling-resampling perspective. Am. Stat. 46: 84–88. [Google Scholar]

- Spiliopoulou A., Nagy R., Bermingham M. L., Huffman J. E., Hayward C., et al. , 2015. Genomic prediction of complex human traits: relatedness, trait architecture and predictive meta-models. Hum. Mol. Genet. 2015: 1–16. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Takezawa K., 2006. Introduction to Non-Parametric Regression, Wiley-Interscience, Hoboken. [Google Scholar]

- Tusell L., Pérez-Rodríguez P., Forni S., Gianola D., 2014. Model averaging for genome-enabled prediction with reproducing kernel Hilbert spaces: a case study with pig litter size and wheat yield. J. Anim. Breed. Genet. 131: 105–115. [DOI] [PubMed] [Google Scholar]

- Utz H. F., Melchingerm A. E., Schön C. C., 2000. Bias and sampling error of the estimated proportion of genotypic variance explained by quantitative trait loci determined from experimental data in maize using cross validation and validation with independent samples. Genetics 154: 1839–1849. [PMC free article] [PubMed] [Google Scholar]

- Van Raden P. M., 2008. Efficient methods to compute genomic predictions. J. Dairy Sci. 91: 4414–4423. [DOI] [PubMed] [Google Scholar]

- Vázquez A. I., de los Campos G., Klimentidis Y. C., Rosa G. J. M., Gianola D., et al. , 2012. A comprehensive genetic approach for improving prediction of skin cancer risk in humans. Genetics 192: 1493–1502. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Vehtari A., Lampinen J., 2002. Bayesian model assessment and comparison using cross-validation predictive densities. Neural Comput. 14: 2439–2468. [DOI] [PubMed] [Google Scholar]

- Vehtari, A., A. Gelman, and J. Gabry, 2016 Practical Bayesian model evaluation using leave-one-out cross-validation and WAIC. arXiv: 1507.04544.

- Wimmer V., Lehermeier C., Albrecht T., Auinger H. J., Wang Y., et al. , 2013. Genome-wide prediction of traits with different genetic architecture through efficient variable selection. Genetics 195: 573–587. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The authors state that all data necessary for confirming the conclusions presented in the article are represented fully within the article.