Abstract

Objective

Little attention has been paid to the nuanced and complex decisions made in the clinical session context and how these decisions influence therapy effectiveness. Despite decades of research on the dual-processing systems, it remains unclear when and how intuitive and analytical reasoning influence the direction of the clinical session.

Method

This paper puts forth a testable conceptual model, guided by an interdisciplinary integration of the literature, that posits that the clinical session context moderates the use of intuitive versus analytical reasoning.

Results

A synthesis of studies examining professional best practices in clinical decision-making, empirical evidence from clinical judgment research, and the application of decision science theories indicates that intuitive and analytical reasoning may have profoundly different impacts on clinical practice and outcomes.

Conclusions

The proposed model is discussed with respect to its implications for clinical practice and future research.

Keywords: clinical judgments, case conceptualization, psychotherapy, cognitive processes, intuitive reasoning, analytical reasoning, hypothesis generation, hypothesis testing

The Unresolved Debate: Intuitive versus Analytical Judgment Methods

The efforts to compare and distinguish between intuitive and analytical judgment methods can be dated back to Meehl’s (1954) first use of the terms clinical and statistical methods, in which Meehl demonstrated that predictions of psychological variables made by mathematical models were superior to predictions made by clinical intuition (see also Dawes, 1979). Decades of decision science research have since discussed the existence of a dual-processing system (e.g., Evans & Stanovich, 2013; Ferreira, Garcia-Marques, Sherman, & Sherman, 2006; James, 1890; Kahneman & Frederick, 2002, 2005; Kruglanski & Gigerenzer, 2011). Specifically, the dual process models of judgment and decision-making distinguish between Type 1 or Intuitive (“clinical” per Meehl) processes, which are rapid, automatic, high capacity, and occur outside of one’s awareness, and Type 2 or Analytical (“statistical” per Meehl) processes, which are slow, deliberate, and occur in the context of active awareness and engagement (Bargh & Chartrand, 1999; Evans, 2008; Haidt, 2001; Kahneman & Frederick, 2002, 2005; Sloman, 1996, 2002; Stanovich, 1999). Although the relation between these two systems is still under discussion (e.g., Evans & Stanovich, 2013; Kruglanski & Gigerenzer, 2011), it is clear that judgments and decisions may require different levels of cognitive complexity. The literature has relied on this distinction to establish that different characteristics, predictors, moderators, mediators, and outcomes of intuitive and analytical processes can be explored. This parsimonious approach to examining judgment methods (analytical and intuitive) may ultimately reveal nuances about which method to use, when, in combination with what, how, and for what purpose. Several studies have compared the accuracy of intuitive and analytical judgment methods. In these studies, accuracy is defined according to the specific task presented to participants, for which the researchers have defined correct and incorrect outcomes.
For instance, in a meta-analysis focusing on the comparison of human intuitive judgments and models of analytical reasoning methods, researchers concluded that despite human capacity for sound judgment and decision-making, mathematical analytical models seem to be more accurate (Karelaia & Hogarth, 2008). Conversely, some decision science studies have emphasized that complex choices actually benefit from intuitive reasoning methods (e.g., Dijksterhuis, Bos, Nordgren, & van Baaren, 2006; Gigerenzer & Gaissmaier, 2011). These contrasting results may suggest that the merits of intuitive and analytical judgment methods depend on the conditions and contexts in which they are used (e.g., Kahneman & Klein, 2009). This means one judgment method is not always better than the other.

Clinical psychological research on the accuracy of intuitive and analytical methods is similarly equivocal. Therapists are charged with making clinical judgments both during and between clinical sessions. Clinical judgments are defined as any judgment and/or decision made by the therapist about the client and/or the case during the therapy process. These judgments may be driven by both intentional and formal reasoning tasks as well as spontaneous decisions. Intentional and formal reasoning is most likely to be employed for diagnosis, case formulation, and treatment planning (among others, see Garb, 2005), as the therapist is likely to be aware that a judgment process is taking place during these tasks (see Bargh & Ferguson, 2000; Wegner & Bargh, 1998). Clinical judgments can also be spontaneous and intuitive decisions, made in circumstances where the therapist is not acutely aware that a specific judgment is being made. For example, the therapist may associate a coping strategy used by the client in a past situation with the client’s description of an episode in a different context. Each type of judgment, more intentional or more spontaneous, is governed by different processes (analytical and intuitive) that capitalize on different information, and may result in more or less optimal outcomes, according to the circumstances in which they occur.

Important insights on the accuracy of intuition have emerged from naturalistic studies of professionals charged with making difficult decisions in complex clinical contexts (Klein, 2008; Lipshitz, Klein, Orasanu, & Salas, 2001). However, the majority of clinical decision-making research has focused on clinical judgments or decisions that are made outside the therapy session, such as making a diagnosis or conceptualizing a case (Garb, 2005). For instance, De Vries and colleagues found that the intuitive processing of case descriptions from the DSM-IV casebook resulted in significantly more correct diagnoses than the analytical processing of the same descriptions (De Vries, Witteman, Holland, & Dijksterhuis, 2010). Conversely, a recent randomized clinical trial indicates that modular approaches to evidence-based practice (EBP) implementation outperform treatment as usual and standardized protocol implementation when guided by clinical decision aids that enable analytical reasoning (Weisz et al., 2012). A meta-analysis of 136 psychotherapy studies revealed that judgments relying on therapist subjectivity were approximately as accurate as analytical predictions (Grove, Zald, Lebow, Snitz, & Nelson, 2000). Perhaps the most appropriate conclusion to draw is that one judgment method may be more appropriate and effective when conducted by particular individuals in certain circumstances. Simultaneously, it is important to acknowledge that, contrary to the fundamental studies in which accuracy is clearly defined, in many cases there is no one correct outcome or decision, particularly in clinical practice. Thus, it may be most useful for clinical judgment research to focus on the processes through which judgments are made, the impact these judgments may have on subsequent therapist behaviors, and ultimately client outcomes.

Understanding the cognitive processes that underlie judgment methods and associated outcomes may be critical to optimizing clinical care and focusing future research. Therefore, the overarching goal of this paper is to put forth an integrated conceptual model of clinical decision-making that interfaces two fields of psychological science (decision and clinical science) to enrich our understanding of the clinical judgment processes and to stimulate future research. Although this model may serve to inform clinical decision-making in a broad sense, the focus on judgments made by therapists within the clinical session is a critical but understudied component of effective therapy delivery. In order to advance the science and practice of psychotherapy, it is necessary to illuminate the nuances of each judgment method by: (a) articulating individual factors that may influence the use and utility of each judgment method; (b) exploring the clinical conditions (context and task characteristics) in which each judgment method may be more or less used; (c) characterizing the main cognitive processes that underlie the use of intuitive versus analytical methods in clinical judgments (hypothesis generation process); and (d) highlighting the impact that intuitive versus analytical methods may have on information seeking strategies and clinical judgment validation.

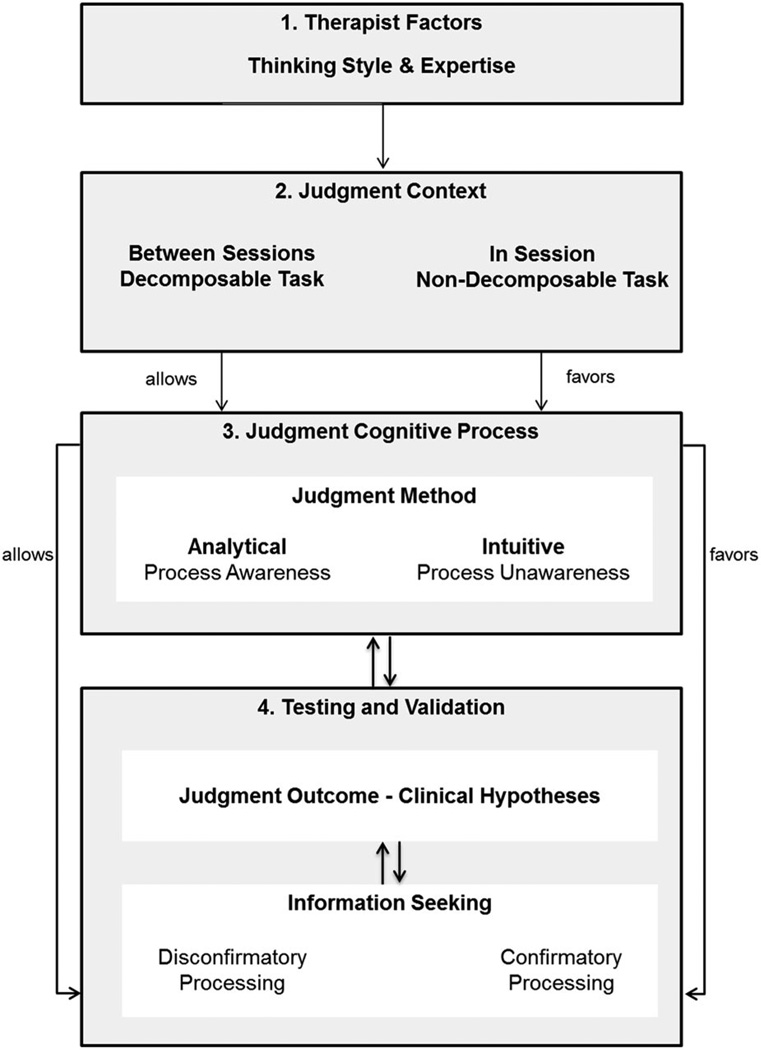

As a brief overview, the model proposes that therapists have a dispositional thinking style and a unique set of experiences (Figure 1 Box 1: Therapist Factors) that may function as predictors and influence their propensity to use either intuitive or analytical judgment methods. Next, the model conceptualizes the judgment context (between session or in session) as a moderator of the judgment method used (Box 2). That is, use of intuitive or analytical judgment methods likely depends on the context and task characteristics. Specifically, the context between sessions encourages the use of an analytical judgment method, whereas the in-session context, due to the session’s characteristics, limits its use, therefore favoring an intuitive judgment method. Subsequently, the model illuminates judgment methods (analytical and intuitive) as cognitive judgment processes underlying the generation of clinical hypotheses that may lead to different outcomes (Box 3). Finally, the judgment method and the associated judgment outcome will mediate the therapist’s information seeking process, which in turn will influence the process of hypothesis testing and ultimately the validation of the clinical judgments made. In this model it can be predicted that the context between sessions, with its propensity for the use of an analytical judgment method, invites the use of a disconfirmatory validation strategy (tendency to search for information that attempts to falsify/refute the hypotheses). Conversely, the in-session context, with its propensity for use of an intuitive judgment method, favors a confirmatory validation strategy (tendency to search for information that attempts to confirm the hypotheses) (Box 4). Ultimately, the validation of clinical judgments (Box 4) may reveal new hypotheses that can be subsequently tested and incorporated, for instance, into the case conceptualization.
Figure 1 depicts the conceptual model. Its components and their relations are presented in sections organized around the four aforementioned foci. The manuscript ends with propositions and associated recommendations for future research and clinical practice.

Figure 1.

A conceptual model for understanding in-session clinical judgments.

Therapist Factors that Influence the Use and Utility of Judgment Methods (Box 1)

General thinking style

Clinical judgment methods tend to be consistent within individuals but different across therapists (Falvey, 2001). For instance, outcomes for case formulation and treatment planning are relatively consistent both within and across cases for individual therapists, but not within or across groups of therapists (Falvey, 2001). This suggests that individual variables, such as a general thinking style, may contribute to the judgment method used by therapists. General thinking style is conceptualized as a dispositional individual variable (personality trait) that manifests in a preference for a particular way of processing information and distinguishes between experiential and rational thinking styles, which are associated with intuitive and analytical reasoning, respectively (Epstein, Pacini, Denes-Raj, & Heier, 1996; Pacini & Epstein, 1999; Stanovich & West, 2000). For instance, accuracy in diagnostic tasks was found to be negatively associated with a rational (analytical) thinking style (Aarts, Witteman, Souren, & Egger, 2012). Thinking style has also been shown to influence the willingness of therapists to use EBPs, as therapists with more rational (analytical) thinking styles were more willing to use EBPs (Gaudiano, Brown, & Miller, 2011). Other dispositional individual variables have also been shown to be important for judgment and decision processes. These include the need for cognition, which is the extent to which one engages in and shows preference for effortful thinking tasks (Cacioppo & Petty, 1982), and the need for closure, which refers to the desire for an end state of a cognitive task, regardless of the cognitive strategy and effort put into that task (Webster & Kruglanski, 1994).
Thus, in addition to other social and interpersonal variables that may influence the judgment method used (e.g., Ackerman & Hilsenroth, 2001, 2003), these individual thinking variables may be critical to understanding clinical decision-making and its effect on therapy outcomes.

Experience and expertise

The extant literature has shown that when individuals have expertise, the use of intuitive decision methods may lead to more accurate results when compared to analytical approaches (e.g., Dane, Rockmann, & Pratt, 2012; Klein, 1999; Klein & Calderwood, 1991). In the proposed model, we define expertise as resulting from the acquisition of tacit knowledge, over time, with experience, upon which we draw in making inferences (Hogarth, 2010). Thus, expertise in a specific task/context is expected to lead to better performance and outcomes when the feedback from the experience provides the knowledge that allows improvement (Hogarth, 2010; Tracey, Wampold, Lichtenberg, & Goodyear, 2014). Gaining experience allows experts to use acquired knowledge automatically when presented with a situation similar to one they have previously experienced (e.g., Hogarth, 2010; Reyna, 2004). However, this process can break down when there is poor feedback from the environment (Hogarth, 2001) or when a novel situation occurs in clinical practice (Reyna, 2004). For instance, experienced therapists often fail to perform better than novices in diagnostic decision-making (Garb, 2005; Strasser & Gruber, 2004; Witteman & Tollenaar, 2012; Witteman & Van den Bercken, 2007) despite their ability to process information from previous diagnostic tasks (Marsh & Ahn, 2012; Witteman & Tollenaar, 2012). A recent critical review reaffirms therapists’ limited ability to learn from the feedback they receive in their past clinical experiences, which makes intuitive processing suboptimal even when therapists are experienced (see Tracey et al., 2014).

Context and Task Characteristics Affect the Judgment Method Used (Box 2)

Clinical judgments demand the confluence of information about a client presented in different formats and from different sources, including theoretical information (e.g., conceptualizing the client’s maladjustment as a function of cognitive or behavioral processes as in Cognitive Behavioral Therapy, or as a function of ego defense mechanisms as in Psychodynamic Therapy), empirical information (e.g., data from self-report assessments), and information from the therapist’s past experiences (e.g., past history of treating anxiety; Eells, 2011). Additionally, the therapist must consider information regarding emotional (e.g., client’s level of distress) and culturally relevant content (e.g., client’s cultural background and values), which further increases the level of information complexity. All of this information is obtained and considered in different judgment contexts both during and between therapy sessions.

The context in which a judgment task occurs is a key factor that has been shown to differentially influence judgment methods (intuitive and/or analytical; Hammond, Hamm, Grassia, & Pearson, 1987). Tasks that occur between sessions can be viewed as fundamentally different from tasks that occur in session because of the context characteristics (e.g., time, cognitive complexity of the task, information available). Research suggests that decomposable tasks, defined as tasks that can be decomposed into smaller parts and approached sequentially in a deliberate process, tend to be conducive to analytical reasoning (see Hammond et al., 1987). As an example, conferring a diagnosis can be viewed as a decomposable task in which the therapist presumably has access to client endorsement of symptoms (often obtained in an interview) that can be sequentially mapped onto the Diagnostic and Statistical Manual of Mental Disorders (5th ed.; DSM–5; American Psychiatric Association, 2013), and is typically concluded in a time/place outside of client contact. Conversely, non-decomposable tasks, which cannot be easily decomposed into smaller parts and do not have an obvious sequence, are more suited to intuitive methods (e.g., Hammond et al., 1987; see Figure 1 Box 2 for a simplified representation of these concepts). To illustrate, a client may present to a session very distraught about the recent death of her daughter; the therapist’s decision to provide emotional support versus conduct a risk assessment cannot be easily decomposed into steps and approached sequentially. Although the decomposition of this decision task is not impossible, interrupting the session to sequentially analyze all the factors and to methodically consider theoretical and empirical information to aid in decision-making requires significant effort and may be overwhelmingly difficult to achieve.

Therefore, our model considers the clinical session as a context in which tasks are not often easily decomposed. That is, given the complexity of the session context, therapists are unlikely to engage in an analytical judgment method that involves (a) decomposing clinical tasks into smaller parts; (b) defining sequential steps for making judgments; (c) considering information available from the client, theory, past experiences, etc.; and, (d) assessing and distinguishing relevant and irrelevant information (Dane et al., 2012; Hogarth, 2010). Although some therapeutic approaches delineate discrete tasks within a clinical session (e.g., Cognitive Behavioral Therapy, Interpersonal Psychotherapy), the judgments made within these tasks cannot easily be decomposed into sequential subparts in the moment. The complexity of the clinical session context may favor the use of intuitive decision methods even though the therapist’s lack of awareness and control that characterizes this type of judgment method may compromise the outcome (see Hogarth, 2010).

Cognitive Processes Underlying Clinical Judgments (Box 3)

However, much of the judgment and decision-making literature in clinical psychological science has focused on intentional and deliberate clinical tasks that typically occur between sessions, and can be considered decomposable tasks (see Garb, 2005; Grove et al., 2000). Garb (2005) identified five types of clinical tasks that tend to favor and may benefit from analytical reasoning: (a) description of personality and psychopathology, (b) diagnosis, (c) case formulation, (d) behavioral predictions, and (e) treatment decisions. For these tasks, the therapist is making intentional judgments about the case in a context outside of direct client contact; therefore, the therapist is likely aware that the diagnosis or case conceptualization is the result of an analytical reasoning method (e.g., Bargh & Ferguson, 2000; Wegner & Bargh, 1998). Some literature has suggested that people can use explicit reasoning rules, be aware of the information used, and weigh different pieces of information in their judgments when cognitive and attentional resources are available (e.g., Rieskamp & Hoffrage, 2008). There is a dearth of research exploring the complexity of the clinical session context and how this context influences the clinical judgment methods used. This is unfortunate because the face-to-face time between the therapist and client in the clinical session presents contextual constraints that do not exist while a therapist is developing a case conceptualization or assigning a diagnosis between sessions. Therapists are required to make within-session clinical judgments and decisions under time constraints, productivity pressure, and while considering emotional and relational factors, all of which add to the complex and demanding nature of clinical interactions and likely promote the use of intuitive reasoning (see Klein, 1993, for other examples of decision-making demands in real-world settings).
In contexts such as the clinical session, even when the therapist is intentionally and actively engaged in using an analytical judgment method, the context complexity favors automatic, fast, and uncontrolled judgments (intuitive) in which the therapist is less aware of the information used to make the judgment. Unfortunately, with mental healthcare more often focused on service quantity as opposed to quality (at least within the United States), this may have important implications for clinical practice outcomes. Thus, it is very important to maximize the fit of therapists’ judgments to clients’ circumstances and needs in order to maximize therapy effectiveness.

As an example, many therapists are required to conduct risk assessments with clients who have expressed suicidal ideation. This task typically occurs within a time-sensitive context with high emotional valence and requires the therapist to consider multiple decisions that could result in aversive safety and legal consequences if not handled correctly. In this context, the therapist makes multiple judgments, including which questions to ask and which to ignore, in order to gain a comprehensive understanding of the client’s experience and capacity to remain safe. In these circumstances, the therapist may be aware that decisions are being made, particularly if she has access to decision aids (e.g., reliable and valid suicidality risk assessments; Columbia-Suicide Severity Rating Scale; Posner et al., 2011). However, the therapist may not be aware of the details inherent to the judgment method employed. For instance, the therapist may struggle to identify which pieces of information were considered and which were ignored, what process guided the categorization of data (important versus non-important) and subsequent inquiry, and what causal explanations for the acuity of the risk were engaged, disregarded or missed altogether. Even with access to specific guidelines for conducting a risk assessment, there are contextual constraints that challenge the therapist’s capacity to use analytical reasoning (see Evans, 2008; Hammond, 1996; Hammond et al., 1987; see Hogarth, 2010 for a review). In the worst-case scenario, lack of control, contextual and task constraints, and cognitive load may negatively impact the quality of judgments made and result in a potentially dangerous outcome for the client (e.g., Garb, 2005).

Although the decision context illustrated above (suicidal risk assessment) is unlikely to occur in each clinical session with every client, tasks in which the therapist is unaware of her judgment processes are indeed present in every clinical session. As another example, consider a therapist who is actively working with a client on developing a collaborative therapeutic relationship. In a clinical session, the therapist may be curious about the client’s perspective on the role of a collaborative alliance in psychotherapy outcome and about the client’s experience in psychotherapy, which leads to intentional information gathering. However, this intentional decision to gather information may be accompanied by specific automatic (intuitive) judgments about what information should be collected (e.g., which client emotions to attend to, which therapist skill to employ to assess the impact of psychotherapy on the client’s feelings) or how to order the questions (e.g., to first address the client’s experience overall and then drill down to specific topics, or vice versa; see Elliott & Wexler, 1994). As such, the process of selecting information relevant to the therapist’s curiosity within the clinical session is likely a judgment made using an automatic or intuitive process that occurs with little therapist awareness (e.g., Bargh & Ferguson, 2000). The therapist may note that the client is presenting contradictory information about her experience of the session and may then make a judgment about how to proceed with little awareness of the process underlying that judgment. Faced with this contradictory information, the therapist can choose to (a) emphasize the information that supports the client’s ideas about the session, (b) emphasize information that contradicts the client’s initial perspective, (c) highlight both aspects of the contradictory information, or (d) disregard the information altogether.
Regardless of the therapist’s decision in this example, a clinical judgment is made that guides the flow of the session, the outcome of which may drive subsequent clinical work. This is a prime example of an intuitive reasoning process that may invoke little awareness by the therapist that a judgment is being made (see Hogarth, 2010). The lack of awareness may prevent the therapist from revising the judgment, as it is difficult to unpack what information was considered and disregarded in order to make the judgment. In other words, not being aware of the judgment method prevents further judgment validation, which may compromise therapy effectiveness (see Garb, 1998; Persons & Bertagnolli, 1999). In Figure 1, in the interaction between Boxes 2 and 3, we present a simplification of the interaction between the contexts where the clinical judgment occurs and the two judgment methods, analytical and intuitive. This judgment process results in a judgment outcome, or in other words, a clinical hypothesis that can then be tested/validated (see Box 4), which will be developed in the following sections.

Clinical judgments as testable hypotheses

Persons, Beckner, and Tompkins (2013) assert that the process of testing clinical judgments is essential for optimizing therapy effectiveness, primarily because it allows therapists to validate case conceptualizations. Following a different approach, Schön (1983) identifies the implicit and tacit knowledge underlying therapists’ judgments that may fall outside of the therapists’ awareness. Accordingly, he proposes a reflective practice in which a deliberate analysis of thoughts, actions, and feelings may inform subsequent clinical work and lead to clinical judgment adaptations. Some literature suggests that it may be helpful to highlight the goal of causal reasoning for therapists engaged in clinical practice, because making the underlying clinical goals (causal explanations or predictions) more salient allows therapists to use controlled and deliberate reasoning in their judgments (Arnoult & Anderson, 1988; Strohmer, Shivy, & Chiodo, 1990).

This hypothesis formulation and testing process is clearly not a new idea. Several researchers have proposed that testing one’s clinical hypotheses is analogous to the scientific method where the goal is to focus on observation, hypothesis generation, and testing (Apel, 2011; Hayes, Barlow, & Nelson-Gray, 1999). To present a definition, hypothesis generation involves using data to formulate a judgment (making an inference) about a theme or idea. The hypothesis generated may subsequently lead to hypothesis testing, in which the veracity of the hypothesis is assessed by gathering evidence that either supports it or does not (e.g., Thomas, Dougherty, Sprenger, & Harbison, 2008). Although no experimental studies, to our knowledge, have directly tested the effect of therapists’ use of the scientific method in practice, support for the effectiveness of this approach can be drawn from recent research. Specifically, mounting research suggests that therapists’ receipt of ongoing feedback regarding client progress enhances outcomes for clients demonstrating a negative response to treatment and increases the number of clients who respond to treatment (Lambert, Harmon, Slade, Whipple, & Hawkins, 2005). Typically, this feedback is provided to therapists in an automated fashion (e.g., using a measurement feedback system; Garland, Bickman, & Chorpita, 2010; Kelley & Bickman, 2009) and contains individual client item responses and summary scores from standardized symptom and functioning measures that inform clinical decision-making (e.g., Duncan et al., 2003; Lambert et al., 2001; Miller, Duncan, Brown, Sparks, & Claud, 2003). Although there is a dearth of literature delineating the mechanisms through which feedback exerts its effect, one possible pathway is that automated feedback brings empirical data into the therapist’s awareness, thus encouraging a direct test of generated hypotheses.
Indeed, in one of the only papers exploring feedback mechanisms of change in the psychotherapy context, Connolly Gibbons et al. (2015) found that therapists randomized to the feedback intervention were significantly more likely to address a wider range of relevant content (e.g., emotional issues, family issues, client hope for future) more quickly in the therapeutic process as compared to therapists in the no-feedback condition. The authors interpret these findings as suggesting that therapists receiving feedback may be more attuned to the issues most important to clients.

There are currently efforts underway to determine how best to integrate feedback to guide psychotherapist practice (e.g., Lambert et al., 2001, 2002; Sundet, 2011; see Sundet, 2012 for a review) and to implement what is often referred to as “measurement-based care” in large community mental health centers (Lewis et al., 2015). For instance, humanistic psychotherapy research has focused on the role of different types of session outcomes, such as a session’s helpfulness, depth, and smoothness, as immediate feedback for therapists. This literature has demonstrated the impact of linking the therapist’s and client’s experience in session to the therapist’s specific interventions in that same session (e.g., Elliott & Wexler, 1994; Hill et al., 1988). As another example, the Case Formulation-Driven Approach put forth by Persons, Bostrom, and Bertagnolli (2013) advocates for developing and testing clinical hypotheses in order to optimize the fit of the case conceptualization, which is meant to guide therapy sessions and treatment planning. Findings from naturalistic studies indicate superior outcomes for this case formulation-based approach (reduction in depressive symptomatology) when compared to treatment that was not guided by a testable formulation (Persons, Bostrom, & Bertagnolli, 1999). In sum, elevating causal reasoning and associated inferences in the therapists’ awareness may serve as a pathway to optimize the work accomplished in the clinical session where intuitive judgment methods are favored.

The Impact of Judgment Methods on Clinical Judgment Validation (Box 4)

Inherent to the process of generating and testing clinical inferences (i.e., hypotheses) is subsequent information seeking, which can be defined as the process of gathering information by asking questions or seeking information in order to acquire more knowledge about a theme or an idea (e.g., Skov & Sherman, 1986). Applying this definition to the clinical session context in the proposed model, information seeking consists of the intentional collection of additional information by, for example, inquiring about or exploring an idea or situation that the client or therapist considers relevant for the therapy session. Unfortunately, little research has directly tested the effect of therapist information seeking behavior on treatment effectiveness, and the research that does exist has traditionally focused on formal information collection (e.g., a structured interview to assign a diagnosis; Garb, 2005). Moreover, the cognitive processes involved in information seeking have not been adequately considered as part of the complex task of information interpretation in previous work (e.g., Dawes, Faust, & Meehl, 1989).

These two processes (information collection and information interpretation) occur through a dynamic interplay (Glöckner & Witteman, 2010) in which generated hypotheses guide information seeking that will lead to new hypotheses and interpretations and ultimately additional information seeking processes (e.g., Elstein & Schwarz, 2002; Radecki & Jaccard, 1995; Thomas et al., 2008; Weber, Böckenholt, Hilton, & Wallace, 1993). For instance, a therapist may generate the hypothesis that avoidant coping strategies are maintaining the client’s social anxiety symptoms. To test this hypothesis, the therapist may seek information about coping strategies and how the client currently employs them. This information seeking process allows the therapist to generate alternative hypotheses about the symptoms in order to promote additional information seeking and hypothesis validation. With a larger number of testable hypotheses available, both case conceptualization accuracy and therapy effectiveness are likely to be increased (Thomas et al., 2008). Thus, it is crucial to understand the mechanisms underlying hypothesis generation and testing processes in order to fully appreciate their effect on subsequent information seeking within the context of in-session clinical judgments. As depicted in Figure 1, the clinical judgment validation process is conceptualized as a constant process of re-validating the generated hypotheses through seeking information that will allow the therapist to adapt to the client’s specific situation.

Biases in hypothesis testing and validation

However, the ease and automaticity with which intuitive judgments are made may elicit a metacognitive reflection of confidence in the initial judgment, described in the literature as a subjective feeling of rightness (FOR; Thompson, Prowse Turner, & Pennycook, 2011). In other words, therapists likely feel confident in their judgments when using intuitive reasoning, and therefore perceive judgment outcomes as valid (Thompson, 2009, 2010). This sense of validity may subsequently result in fewer hypotheses and limited information seeking. This is in part because therapists (and humans in general) have the tendency to look for, favor, or interpret information in support of a generated hypothesis regardless of its accuracy in a process known as “confirmatory bias” (e.g., Nickerson, 1998).

Thomas et al. (2008) recently termed this process of seeking information as the hypothesis-guided search process. According to their model, the generation of a single hypothesis guides the information seeking process to be confirmatory in nature. In this case, a therapist may hypothesize that a client’s depressive symptoms (e.g., lack of energy, anhedonia) are maintaining a client’s social isolation behaviors, and the therapist may confirm this hypothesis by observing that the client does not engage in social events and reports feeling worse as a result. However, the therapist may have failed to consider the alternative hypothesis that the client is actually struggling with social anxiety and his fears of negative evaluation keep him from attending campus social events. Several factors, as briefly described below, may contribute to the tendency to draw biased confirmatory conclusions during the hypothesis testing process (e.g., Klayman & Ha, 1987).

There are at least three primary methods by which individuals attempt to access information necessary to test hypotheses (e.g., Nickerson, 1998). First, individuals may seek evidence by searching their memory for relevant data (e.g., Kunda, 1990), which may include the therapist’s memories of past experiences with other clients. Second, individuals may look for external sources of data through direct observations or by creating situations intended to elicit relevant behavior (e.g., Frey, 1986; Hilton & Darley, 1991; Swann, 1990). In this case, a therapist may administer a standardized assessment to a client in order to elicit specific relevant responses from the client. Third, individuals may use more indirect procedures such as formulating questions (see e.g., Devine, Hirt, & Gehrke, 1990; Hodgins & Zuckerman, 1993; Skov & Sherman, 1986; Snyder & Swann, 1978; Trope & Bassok, 1982, 1983; Trope & Thompson, 1997) that allow a therapist to engage in clinical inquiry with a client. These three methods of seeking information may be employed to inform clinical judgments both in formal clinical tasks (e.g., in making a diagnosis between sessions) and informally (e.g., in the clinical session).

Regardless of the method used for seeking information, it has been repeatedly demonstrated that individuals primarily seek information that confirms their existing hypotheses (Doherty & Mynatt, 1990; Klayman & Ha, 1987; Nickerson, 1998). Many social cognition studies suggest that when testing hypotheses about another person’s personality (e.g., that an individual is introverted), people tend to generate questions that inquire about behaviors consistent with the hypothesized trait (introverted behaviors) rather than with the alternative trait (extroverted behaviors; Devine et al., 1990; Evett, Devine, Hirt, & Price, 1994; Hodgins & Zuckerman, 1993; Skov & Sherman, 1986; Snyder & Swann, 1978). Additionally, retrieval of evidence from memory appears to be similarly biased in favor of confirming hypotheses (see Koehler, 1991). The presence of ambiguous information also frequently results in interpretations that are consistent with a generated hypothesis (e.g., Darley & Gross, 1983). For instance, observers looking for signs of anger to test their hypothesis that an individual is hostile may interpret pranks and practical jokes as displays of anger rather than as humorous acts (Trope, 1986). Moreover, studies of social judgment also provide evidence that people tend to overemphasize positive confirmatory evidence or underemphasize negative disconfirmatory evidence. Specifically, individuals generally require less hypothesis-consistent evidence in order to accept a hypothesis than they require hypothesis-inconsistent information to reject a hypothesis (Pyszczynski & Greenberg, 1987), and data consistent with the hypothesis is weighted more strongly (e.g., as a result of a theory guiding the hypothesis) than data that is not consistent (Zuckerman, Knee, Hodgins, & Miyake, 1995).

The proposed model depicts that a confirmatory strategy of information seeking can be employed using either analytical or intuitive judgment methods. However, just as the in-session clinical context favors an intuitive judgment method, it also favors a confirmatory information seeking strategy. Conversely, the between-session clinical context, which carries with it little (or relatively less) cognitive load, creates greater space for using an analytical judgment method and also presents conditions that allow for the use of a disconfirmatory information seeking strategy, which is more demanding and requires more resources (see Figure 1).

In order to circumvent the natural tendency toward confirmatory bias, alternative hypotheses should be considered. However, exhaustive analysis of the primary hypothesis and its alternatives is likely to be performed only under optimal conditions (i.e., between sessions) when motivation and cognitive resources are readily accessible (Trope & Liberman, 1996). Under suboptimal conditions (i.e., during sessions), hypothesis generation and testing may not involve alternative hypotheses or may involve alternative hypotheses that are complementary to the primary hypothesis, which maintains the likelihood of falling victim to confirmation bias (e.g., Arkes, 1991; Trope & Liberman, 1996). Unfortunately, this process is likely to undermine therapists’ ability to make optimal clinical judgments and could ultimately limit therapy effectiveness.

Perhaps the most critical point is that individuals are unlikely to be aware of confirmation biases (Wilson & Brekke, 1994). Therapists, due to the feelings of rightness often associated with their intuitive judgments, usually fail to realize that they have misinterpreted the data to support or disconfirm their hypotheses when drawing inferences, and will often not notice that their choice of questions may have influenced client responses. Hence, a critical area of future work may involve identifying ways to increase therapists’ awareness of their biases, identifying the (dis)confirmatory strategies used to test hypotheses, and exploring strategies that promote control and monitoring of clinical judgment and information seeking processes (see Thompson et al., 2011).

Summary of the Conceptual Model: Directions for Future Research and Clinical Implications

In summary, the model proposes that the in-session context moderates the use of judgment methods. Since therapy sessions are usually characterized by the presentation of complex information, time constraints, and emotional arousal, the in-session context favors an intuitive method because tasks conducted within sessions are not easily broken into smaller sequential parts for deliberate processing. Intuitive decision methods have the propensity for limited hypothesis generation (typically only one hypothesis) and confirmatory processing given the feeling of rightness that accompanies the intuitive judgment process. This pathway to clinical decision-making is vulnerable to numerous limitations (e.g., confirmatory biases), particularly for the novice therapist who is less likely to be accurate in making judgments due to lack of experience. Conversely, analytical decision methods may lead to numerous hypotheses in the causal reasoning process, which invite the therapist to seek new information to support validation. This pathway to clinical decision-making is likely less prone to bias and may ultimately optimize therapy effectiveness, especially in cases where the initial hypothesis does not accurately reflect the client’s specific problems and needs. However, analytical processes, by nature, may seem slower and more cumbersome, and may not obviously fit with the various theoretical orientations that guide clinical care. In reality, it may be ideal to use these judgment methods in tandem in the therapy session.

For instance, Safran and Muran (e.g., 2000, 2006; Safran, Muran, Samstag, & Winston, 2005) present efforts to integrate intuitive and analytical judgments within the therapy session. In their model of exploring and ameliorating alliance ruptures, they propose that the identification of implicit relational patterns and internal experiences, which are intuitive judgments, will efficiently reveal an alliance rupture. Subsequently, they state the importance of bringing these intuitive judgments to awareness so as to trigger a deliberative (analytical) process that could then be used to repair the alliance (e.g., Safran & Muran, 2000, 2006; Safran et al., 2005). This line of research is one example in which intuition may be used as a cue to promote analytical judgment methods to enhance therapy effectiveness. A corollary of the interaction between intuitive and analytical methods at the client level is the work developed by Beevers and colleagues. In order to better understand the cognitive mechanisms that sustain depression, Beevers (2005) explored the conditions under which cognition is ruled by more automatic/associative (intuitive) and/or deliberative (reflective, analytical) processing, and advocated for the use of analytical reasoning to interrupt clients’ automatic disruptive associations and schemas. However, more research is needed to experimentally explore the cognitive processes underlying this approach and its ultimate impact on client outcomes.

Some preliminary clinical recommendations can emerge from this overview of the processes underlying the clinical judgments made in session. Because the clinical session context may leave therapists vulnerable to a cascade of processes falling outside of their awareness, we encourage therapists to focus on factors underlying the judgment process (e.g., the feelings of rightness associated with the judgment) that allow them to use different strategies that increase their control over the judgment process. We suggest that therapist actions focus on the process of validating their initial hypotheses, which will then influence subsequent hypothesis generation and testing (Box 4). The conceptual model suggests that it may be critical for the therapist to develop at least two sound initial hypotheses and subsequently test the validity of the judgments made (through memory retrieval, formal data collection, or informal inquiry) for optimal clinical practice. Thus, therapists should endeavor to be actively curious and openly search for and integrate new information in an effort to refute or support alternative hypotheses and avoid confirmatory biases. As such, therapist curiosity may be considered a form of competence that influences judgment appropriateness and therapy effectiveness.

In the proposed model, individual variables such as thinking style (Epstein et al., 1996) and need for cognition (Cacioppo & Petty, 1982), together with the context, including task characteristics, influence the therapist’s judgment methods. However, there is a lack of empirical literature regarding how these two factors interact with each other to influence the judgment process (see a discussion in Stanovich, 2012). Some theoretical orientations and therapeutic techniques may favor the use of one judgment method over the other. For example, free association implies the use of intuition, whereas reviewing a client’s episode using a chain analysis requires an analytical method. Knowing one’s proclivity toward a particular thinking style may allow therapists to intentionally select and adapt techniques that fit within their theoretical orientation without relying solely on one judgment method. Moreover, therapists could engage in a judgment process that leads to the identification of what information is being used to make the judgment. This may allow therapists to identify when a judgment is being made without active engagement and outside of their full awareness. Therapists should also endeavor to identify their “feelings of rightness” about the judgments made and ensure that they still engage in an active and disconfirmatory hypothesis testing approach, even when the hypothesis seems correct.

Ultimately, the aim of this conceptual model is to improve our understanding of the impact of judgment methods on overall therapy effectiveness. The preliminary clinical recommendations described above may be relevant across theoretical orientations. Until research suggests otherwise, this conceptual model likely applies to therapeutic approaches regardless of level of structure, degree of directiveness, past or present emphasis, individual or relationship focus, or whether they attend to processing problems or solutions. Simply put, we contend that this approach can be used every time a therapist is aware that he or she is generating a clinical hypothesis or judgment. However, the question remains: How can therapists bring intuitive judgments into awareness and mitigate the strong feeling of rightness that follows in order to engage in disconfirmatory information seeking? There is a clear gap in the empirical literature that must be filled in order to answer this question.

Three testable propositions emerge from this conceptual model. First, intuitive reasoning is likely the primary judgment method used in the clinical session. Second, use of intuitive reasoning increases the therapist’s feelings of rightness and subsequently results in limited information seeking to disconfirm alternative hypotheses. Third, use of analytical reasoning in the clinical session is likely to optimize the case conceptualization and overall therapy effectiveness.

To test these propositions, a series of studies is necessary. For instance, a randomized study is needed to evaluate the unique contributions of clinician thinking styles (Epstein et al., 1996), clinical experience, and analytical and intuitive judgment processes (e.g., Radecki & Jaccard, 1995) to treatment effectiveness and client outcomes.

Future research should also explore therapists’ self-reported metacognitive “feelings of rightness” (Thompson et al., 2011) in a clinical session context and evaluate methods for encouraging therapists to seek additional information and monitor their intentional access of this information. Studies of this nature are necessary to inform clinical training opportunities to maximize therapy effectiveness. In addition, studies focused on disentangling different intuitive processes (e.g., Braga, Ferreira, & Sherman, 2015) should also contribute to broadening our understanding of therapists’ judgments made within the session.

Additionally, it is important to learn the underlying processes and the conditions under which therapist clinical judgments are most influenced by others, considering that therapists often make clinical judgments in collaboration with the client and other clinical team members (e.g., supervisor, prescriber). There is extensive literature demonstrating that collaboration with the client strongly impacts treatment outcome (e.g., Hill, 2005). For example, the collaborative/therapeutic assessment paradigm (e.g., Finn, 2007; Finn, Fischer, & Handler, 2012; Finn & Tonsager, 1997; Fischer, 2000) leverages a collaborative process of questioning and information gathering between the client and therapist to inform clinical decisions. Other examples of research on collaborative judgment and decision-making processes focus on acquisition and transfer of tacit knowledge and the co-construction, within the team, of implicit rules to make decisions and inform practice (Gabbay & le May, 2004, 2011). In both examples, the collaborative process allows therapists to adjust their practice to clients’ specific needs at each moment, which is expected to promote a more effective practice. However, it remains unclear what processes guide the therapist’s judgments within the session. For example, when does a client help the therapist clarify a narrative? What information is being transmitted between therapist and client and how is it interpreted? How do these judgment processes influence hypothesis generation and testing in the session context?

Moreover, in recent years, perhaps to address the limitations of correlational quantitative research, many advances in psychotherapy research have come from qualitative and mixed methods study designs. These methods have allowed researchers to access and understand the complexity inherent to the psychotherapy process in order to capture the narratives underlying the quantitative data, and to explore the relational processes occurring in-session (e.g., Gonçalves et al., 2011; for reviews see Angus, Watson, Elliott, Schneider, & Timulak, 2015; Lutz & Hill, 2009). Along with a richer and more complex understanding of psychotherapy came a level of analysis focused on the last steps of the psychotherapy process, the client’s and therapist’s behaviors, as opposed to the cognitive processes that led to the observable behavioral outcomes. This has certainly contributed to a broader array of variables and emphasized the differences among psychotherapies; however, it has often diverted attention from the core and common aspects of change in psychotherapy. For example, these designs have limited the investigation of basic therapist variables as common core factors (see a further discussion on the equivalency between psychotherapies in Stiles, Shapiro, & Elliott, 1986). Thus, the proposed model is designed to promote experimental investigations independent of the psychotherapy theoretical orientation to inform our understanding of the basic cognitive processes that govern clinical decision-making.

Finally, it is important to emphasize the shift in the field of clinical psychological science toward implementation of EBPs into the settings for which they were intended. The role of clinical judgment has largely been overlooked as it relates to the effective implementation of EBPs in applied mental health settings. The challenges associated with bridging the gap between research and practice are complex, and although recent efforts have sought to facilitate and improve the implementation process, EBP implementation efforts have not yet reached their full potential (Aarons, Hurlburt, & Horwitz, 2011). In an effort to focus therapy session decisions, recent literature has demonstrated that using a continuous and standardized assessment (before and during treatment) in youth psychotherapy focuses the treatment session on the problems that clients and therapists consider most important (Weisz et al., 2011). These results support the argument that therapist decision-making, if guided by research and informed with client feedback, can optimize EBP delivery when compared to both a standardized manual (most rigid approach) and usual care (most flexible approach) (Weisz et al., 2012). Indeed, the proposed conceptual model suggests that therapists’ clinical judgments made within each session play a central role in the application and success of EBPs. That is, the proposed model suggests that to optimize the implementation of EBPs in “real-world” settings, the therapist’s process of judgment validation requires careful attention. Moreover, by creating the conditions that allow therapists to have more control and awareness of their judgment processes during a session, they may improve their fidelity to the EBP and optimize its outcomes for a given client. Research focused on this issue could answer the questions of when a therapist drifts from the EBP as it was intended to be delivered and whether an analytical approach would help therapists maintain fidelity. Since individual therapy is likely to remain a predominant mode of care delivery for those suffering from mental illness, implementation scientists will likely need to pay careful attention to the impact of therapist judgment methods and processes within the clinical session context.

Conclusion

This manuscript endeavored to integrate findings from basic and applied sciences in order to promote a better understanding of in-session clinical judgments within a dynamic and multi-level conceptual model. The purpose of this model is to promote further discussion and empirical research related to the judgment methods, influential factors (therapist variables, clinical session context, task characteristics), causal reasoning, and information seeking processes that impact clinical decision-making. To better understand the clinical judgment process and the effects of judgments on therapy effectiveness, it will be necessary to disentangle the influence of both contextual factors and clinical task characteristics (Dane et al., 2012). Careful consideration of circumstances and context, as well as an understanding of the role of therapist awareness and control in the judgment method, may illuminate innovative methods for optimizing therapist judgments (Evans, 2008; Wegner, 2003) and ultimately therapy effectiveness.

Attention should also be paid to the role of backward and forward inferences (Hogarth, 2010), causal reasoning (Lagnado, 2011), and hypothesis generation and testing (Thomas et al., 2008) as cognitive processes guiding the therapist’s information seeking behavior. In sum, analytical and intuitive judgment methods may occur both between and within therapy sessions. Knowing the specific conditions that promote the use of a particular judgment method may be vital to adapting the session in order to meet the client’s needs and enhance therapy outcomes. The proposed model, once rigorously tested, may serve to inform what type of judgment and causal inference (causal explanation or prediction) is most effective under particular conditions, how many clinical hypotheses should be generated, and how these hypotheses should be formulated in order to optimize the quality of mental health care.

Clinical research has yet to focus on the cognitive processes underlying clinical judgments; therefore, experimental clinical studies are needed to address this research-practice gap. Ultimately, practice and training guidelines may emerge with associated techniques through which therapists will be able to exert deliberate and intentional control over the judgment method they wish to use, understanding its potential impact on the course of treatment. This deliberate control may then serve to enhance clinical outcomes across diagnoses and treatment modalities and lead to enhanced EBP fidelity and implementation success.

Acknowledgments

Funding

This work was supported by BIAL Foundation [Grant Number N°. 85/14] and Fundação para a Ciência e a Tecnologia [Grant Number SFRH/BD/101524/2014].

References

- Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38:4–23. doi: 10.1007/s10488-010-0327-7.

- Aarts AA, Witteman CL, Souren PM, Egger JI. Associations between psychologists’ thinking styles and accuracy on a diagnostic classification task. Synthese. 2012;189:119–130.

- Ackerman SJ, Hilsenroth MJ. A review of therapist characteristics and techniques negatively impacting the therapeutic alliance. Psychotherapy: Theory, Research, Practice, Training. 2001;38:171–185.

- Ackerman SJ, Hilsenroth MJ. A review of therapist characteristics and techniques positively impacting the therapeutic alliance. Clinical Psychology Review. 2003;23:1–33. doi: 10.1016/s0272-7358(02)00146-0.

- American Psychiatric Association. Diagnostic and statistical manual of mental disorders: DSM-5. 5th ed. Washington, DC: Author; 2013.

- Angus L, Watson JC, Elliott R, Schneider K, Timulak L. Humanistic psychotherapy research 1990–2015: From methodological innovation to evidence-supported treatment outcomes and beyond. Psychotherapy Research. 2015;25:330–347. doi: 10.1080/10503307.2014.989290.

- Apel K. Science is an attitude: A response to Kamhi. Language, Speech, and Hearing Services in Schools. 2011;42:65–68. doi: 10.1044/0161-1461(2009/09-0036).

- Arkes HR. Costs and benefits of judgment errors: Implications for debiasing. Psychological Bulletin. 1991;110:486–498.

- Arnoult LH, Anderson CA. Identifying and reducing causal reasoning biases in clinical practice. In: Turk D, Salovey P, editors. Reasoning, inference, and judgment in clinical psychology. New York: Free Press; 1988. pp. 209–232.

- Bargh JA, Chartrand TL. The unbearable automaticity of being. American Psychologist. 1999;54:462–479.

- Bargh JA, Ferguson MJ. Beyond behaviorism: On the automaticity of higher mental processes. Psychological Bulletin. 2000;126:925–945. doi: 10.1037/0033-2909.126.6.925.

- Beevers CG. Cognitive vulnerability to depression: A dual process model. Clinical Psychology Review. 2005;25:975–1002. doi: 10.1016/j.cpr.2005.03.003.

- Braga JN, Ferreira MB, Sherman SJ. The effects of construal level on heuristic reasoning: The case of representativeness and availability. Decision. 2015;2:216–227.

- Cacioppo JT, Petty RE. The need for cognition. Journal of Personality and Social Psychology. 1982;42:116–131. doi: 10.1037//0022-3514.43.3.623.

- Connolly Gibbons MB, Kurtz JE, Thompson DL, Mack RA, Lee JK, Rothbard A, Crits-Christoph P. The effectiveness of clinician feedback in the treatment of depression in the community mental health system. Journal of Consulting and Clinical Psychology. 2015;83(4):748–759. doi: 10.1037/a0039302.

- Dane E, Rockmann KW, Pratt MG. When should I trust my gut? Linking domain expertise to intuitive decision-making effectiveness. Organizational Behavior and Human Decision Processes. 2012;119:187–194.

- Darley JM, Gross PH. A hypothesis-confirming bias in labeling effects. Journal of Personality and Social Psychology. 1983;44(1):20–33.

- Dawes RM. The robust beauty of improper linear models in decision making. American Psychologist. 1979;34:571–582.

- Dawes RM, Faust D, Meehl PE. Clinical versus actuarial judgment. Science. 1989;243:1668–1674. doi: 10.1126/science.2648573.

- Devine PG, Hirt ER, Gehrke EM. Diagnostic and confirmation strategies in trait hypothesis testing. Journal of Personality and Social Psychology. 1990;58:952–963.

- De Vries M, Witteman CL, Holland RW, Dijksterhuis A. The unconscious thought effect in clinical decision making: An example in diagnosis. Medical Decision Making. 2010;30:578–581. doi: 10.1177/0272989X09360820.

- Dijksterhuis A, Bos MW, Nordgren LF, van Baaren RB. On making the right choice: The deliberation-without-attention effect. Science. 2006;311:1005–1007. doi: 10.1126/science.1121629.

- Doherty ME, Mynatt CR. Inattention to P (H) and to P (D\~H): A converging operation. Acta Psychologica. 1990;75:1–11. [Google Scholar]

- Duncan BL, Miller SD, Sparks JA, Claud DA, Reynolds LR, Brown J, Johnson LD. The session rating scale: Preliminary psychometric properties of a “working” alliance measure. Journal of Brief Therapy. 2003;3:3–12. [Google Scholar]

- Eells TD, editor. Handbook of psychotherapy case formulation. New York, NY: Guilford Press; 2011. [Google Scholar]

- Elliott R, Wexler MM. Measuring the impact of sessions in process experiential therapy of depression: The session impacts scale. Journal of Counseling Psychology. 1994;41:166–174. [Google Scholar]

- Elstein AS, Schwarz A. Evidence base of clinical diagnosis: Clinical problem solving and diagnostic decision making: Selective review of the cognitive literature. BMJ: British Medical Journal. 2002;324:729–732. doi: 10.1136/bmj.324.7339.729. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Epstein S, Pacini R, Denes-Raj V, Heier H. Individual differences in intuitive-experiential and analytic-rational thinking styles. Journal of Personality and Social Psychology. 1996;71:390–405. doi: 10.1037//0022-3514.71.2.390. [DOI] [PubMed] [Google Scholar]

- Evans JSB. Dual-processing accounts of reasoning, judgment, and social cognition. Annual Review of Psychology. 2008;59:255–278. doi: 10.1146/annurev.psych.59.103006.093629. [DOI] [PubMed] [Google Scholar]

- Evans JSB, Stanovich KE. Dual-process theories of higher cognition: Advancing the debate. Perspectives on Psychological Science. 2013;8:223–241. 263–271. doi: 10.1177/1745691612460685. [DOI] [PubMed] [Google Scholar]

- Evett SR, Devine PG, Hirt ER, Price J. The role of the hypothesis and the evidence in the trait hypothesis testing process. Journal of Experimental Social Psychology. 1994;30:456–481.

- Falvey JE. Clinical judgment in case conceptualization and treatment planning across mental health disciplines. Journal of Counseling & Development. 2001;79(3):292–303.

- Ferreira MB, Garcia-Marques L, Sherman SJ, Sherman JW. Automatic and controlled components of judgment and decision making. Journal of Personality and Social Psychology. 2006;91:797–813. doi: 10.1037/0022-3514.91.5.797.

- Finn SE. In our clients’ shoes: Theory and techniques of therapeutic assessment. Mahwah, NJ: Lawrence Erlbaum Associates; 2007.

- Finn SE, Fischer CT, Handler L, editors. Collaborative/therapeutic assessment: A casebook and guide. Hoboken, NJ: John Wiley; 2012.

- Finn SE, Tonsager ME. Information-gathering and therapeutic models of assessment: Complementary paradigms. Psychological Assessment. 1997;9:374–385.

- Fischer CT. Collaborative, individualized assessment. Journal of Personality Assessment. 2000;74:2–14. doi: 10.1207/S15327752JPA740102.

- Frey D. Recent research on selective exposure to information. Advances in Experimental Social Psychology. 1986;19:41–80.

- Gabbay J, le May A. Evidence based guidelines or collectively constructed “mindlines?” Ethnographic study of knowledge management in primary care. British Medical Journal. 2004;329:1–5. doi: 10.1136/bmj.329.7473.1013.

- Gabbay J, le May A. Practice-based evidence for health-care: Clinical mindlines. Abingdon: Routledge; 2011.

- Garb HN. Studying the clinician: Judgment research and psychological assessment. Washington, DC: American Psychological Association; 1998.

- Garb HN. Clinical judgment and decision-making. Annual Review of Clinical Psychology. 2005;1:67–89. doi: 10.1146/annurev.clinpsy.1.102803.143810.

- Garland AF, Bickman L, Chorpita BF. Change what? Identifying quality improvement targets by investigating usual mental health care. Administration and Policy in Mental Health and Mental Health Services Research. 2010;37:15–26. doi: 10.1007/s10488-010-0279-y.

- Gaudiano BA, Brown LA, Miller IW. Let your intuition be your guide? Individual differences in the evidence-based practice attitudes of psychotherapists. Journal of Evaluation in Clinical Practice. 2011;17:628–634. doi: 10.1111/j.1365-2753.2010.01508.x.

- Gigerenzer G, Gaissmaier W. Heuristic decision making. Annual Review of Psychology. 2011;62:451–482. doi: 10.1146/annurev-psych-120709-145346.

- Glöckner A, Witteman C. Beyond dual-process models: A categorization of processes underlying intuitive judgment and decision making. Thinking & Reasoning. 2010;16(1):1–25.

- Gonçalves MM, Ribeiro AP, Stiles WB, Conde T, Matos M, Martins C, Santos A. The role of mutual in-feeding in maintaining problematic self-narratives: Exploring one path to therapeutic failure. Psychotherapy Research. 2011;21:27–40. doi: 10.1080/10503307.2010.507789.

- Grove WM, Zald DH, Lebow BS, Snitz BE, Nelson C. Clinical versus mechanical prediction: A meta-analysis. Psychological Assessment. 2000;12(1):19–30.

- Haidt J. The emotional dog and its rational tail: A social intuitionist approach to moral judgment. Psychological Review. 2001;108(4):814–834. doi: 10.1037/0033-295x.108.4.814.

- Hammond KR. Human judgment and social policy: Irreducible uncertainty, inevitable error, unavoidable injustice. New York: Oxford University Press; 1996.

- Hammond KR, Hamm RM, Grassia J, Pearson T. Direct comparison of the efficacy of intuitive and analytical cognition in expert judgment. IEEE Transactions on Systems, Man, and Cybernetics. 1987;SMC-17(5):753–770.

- Hayes SC, Barlow DH, Nelson-Gray RO. The scientist practitioner: Research and accountability in the age of managed care. 2nd ed. Needham Heights, MA: Allyn & Bacon; 1999.

- Hill CE. Therapist techniques, client involvement, and the therapeutic relationship: Inextricably intertwined in the therapy process. Psychotherapy: Theory, Research, Practice, Training. 2005;42:431–442.

- Hill CE, Helms JE, Tichenor V, Spiegel SB, O’Grady KE, Perry ES. Effects of therapist response modes in brief psychotherapy. Journal of Counseling Psychology. 1988;35:222–233.

- Hilton JL, Darley JM. The effects of interaction goals on person perception. In: Zanna MP, editor. Advances in experimental social psychology. Vol. 24. San Diego, CA: Academic Press; 1991. pp. 235–267.

- Hodgins HS, Zuckerman M. Beyond selecting information: Biases in spontaneous questions and resultant conclusions. Journal of Experimental Social Psychology. 1993;29(5):387–407.

- Hogarth RM. Educating intuition. Chicago, IL: University of Chicago Press; 2001.

- Hogarth RM. Intuition: A challenge for psychological research on decision making. Psychological Inquiry. 2010;21:338–353.

- James W. The principles of psychology (Vols. 1 and 2). New York: Dover; 1950. (Original work published 1890)

- Kahneman D, Frederick S. Representativeness revisited: Attribute substitution in intuitive judgment. In: Gilovich T, Griffin D, Kahneman D, editors. Heuristics and biases: The psychology of intuitive judgment. New York, NY: Cambridge University Press; 2002. pp. 49–81.

- Kahneman D, Frederick S. A model of intuitive judgment. In: Holyoak KJ, Morrison RG, editors. The Cambridge handbook of thinking and reasoning. New York: Cambridge University Press; 2005. pp. 267–293.

- Kahneman D, Klein G. Conditions for intuitive expertise: A failure to disagree. American Psychologist. 2009;64(6):515–526. doi: 10.1037/a0016755.

- Karelaia N, Hogarth RM. Determinants of linear judgment: A meta-analysis of lens model studies. Psychological Bulletin. 2008;134:404–426. doi: 10.1037/0033-2909.134.3.404.

- Kelley SD, Bickman L. Beyond outcomes monitoring: Measurement feedback systems (MFS) in child and adolescent clinical practice. Current Opinion in Psychiatry. 2009;22:363–368. doi: 10.1097/YCO.0b013e32832c9162.

- Klayman J, Ha YW. Confirmation, disconfirmation, and information in hypothesis testing. Psychological Review. 1987;94:211–228.

- Klein G. Sources of power. Cambridge, MA: MIT Press; 1999.

- Klein G. Naturalistic decision making. Human Factors: The Journal of the Human Factors and Ergonomics Society. 2008;50:456–460. doi: 10.1518/001872008X288385.

- Klein G, Calderwood R. Decision models: Some lessons from the field. IEEE Transactions on Systems, Man and Cybernetics. 1991;21:1018–1026.

- Klein GA. A recognition-primed decision (RPD) model of rapid decision making. Decision Making in Action: Models and Methods. 1993;5:138–147.

- Koehler DJ. Explanation, imagination, and confidence in judgment. Psychological Bulletin. 1991;110:499–519. doi: 10.1037/0033-2909.110.3.499.

- Kruglanski AW, Gigerenzer G. Intuitive and deliberate judgments are based on common principles. Psychological Review. 2011;118:97–109. doi: 10.1037/a0020762.

- Kunda Z. The case for motivated reasoning. Psychological Bulletin. 1990;108(3):480–498. doi: 10.1037/0033-2909.108.3.480.

- Lagnado DA. Causal thinking. In: McKay-Illari P, Russo F, Williamson J, editors. Causality in the sciences. Oxford: Oxford University Press; 2011. pp. 129–149.

- Lambert MJ, Harmon C, Slade K, Whipple JL, Hawkins EJ. Providing feedback to psychotherapists on their patients’ progress: Clinical results and practice suggestions. Journal of Clinical Psychology. 2005;61:165–174. doi: 10.1002/jclp.20113.

- Lambert MJ, Whipple JL, Smart DW, Vermeersch DA, Nielsen SL, Hawkins EJ. The effects of providing therapists with feedback on patient progress during psychotherapy: Are outcomes enhanced? Psychotherapy Research. 2001;11:49–68. doi: 10.1080/713663852.

- Lambert MJ, Whipple JL, Vermeersch DA, Smart DW, Hawkins EJ, Nielsen SL, Goates M. Enhancing psychotherapy outcomes via providing feedback on client progress: A replication. Clinical Psychology and Psychotherapy. 2002;9:91–103.

- Lewis CC, Scott K, Marti CN, Marriott BR, Kroenke K, Putz JW, Rutkowski D. Implementing measurement-based care (iMBC) for depression in community mental health: A dynamic cluster randomized trial study protocol. Implementation Science. 2015;10:1–14. doi: 10.1186/s13012-015-0313-2.

- Lipshitz R, Klein G, Orasanu J, Salas E. Focus article: Taking stock of naturalistic decision making. Journal of Behavioral Decision Making. 2001;14(5):331–352.

- Lutz W, Hill CE. Quantitative and qualitative methods for psychotherapy research: Introduction to special section. Psychotherapy Research. 2009;19:369–373. doi: 10.1080/10503300902948053.

- Marsh JK, Ahn W. Memory for patient information as a function of experience in mental health. Applied Cognitive Psychology. 2012;26:462–474. doi: 10.1002/acp.2832.

- Meehl PE. Clinical vs. statistical prediction: A theoretical analysis and a review of the evidence. Minneapolis: University of Minnesota Press; 1954.

- Miller SD, Duncan BL, Brown J, Sparks JA, Claud DA. The outcome rating scale: A preliminary study of the reliability, validity, and feasibility of a brief visual analog measure. Journal of Brief Therapy. 2003;2:91–100.

- Nickerson RS. Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology. 1998;2:175–220.

- Pacini R, Epstein S. The relation of rational and experiential information processing styles to personality, basic beliefs, and the ratio-bias phenomenon. Journal of Personality and Social Psychology. 1999;76(6):972–987. doi: 10.1037//0022-3514.76.6.972.

- Persons JB, Beckner VL, Tompkins MA. Testing case formulation hypotheses in psychotherapy: Two case examples. Cognitive and Behavioral Practice. 2013;20(4):399–409.

- Persons JB, Bertagnolli A. Inter-rater reliability of cognitive-behavioral case formulations of depression: A replication. Cognitive Therapy and Research. 1999;23(3):271–283.

- Persons JB, Bostrom A, Bertagnolli A. Results of randomized controlled trials of cognitive therapy for depression generalize to private practice. Cognitive Therapy and Research. 1999;23(5):535–548.

- Posner K, Brown GK, Stanley B, Brent DA, Yershova KV, Oquendo MA, Mann JJ. The Columbia–Suicide Severity Rating Scale: Initial validity and internal consistency findings from three multisite studies with adolescents and adults. American Journal of Psychiatry. 2011;168(12):1266–1277. doi: 10.1176/appi.ajp.2011.10111704.

- Pyszczynski T, Greenberg J. Self-regulatory perseveration and the depressive self-focusing style: A self-awareness theory of reactive depression. Psychological Bulletin. 1987;102(1):122–138.

- Radecki CM, Jaccard J. Perceptions of knowledge, actual knowledge, and information search behavior. Journal of Experimental Social Psychology. 1995;31(2):107–138.

- Reyna VF. How people make decisions that involve risk: A dual-processes approach. Current Directions in Psychological Science. 2004;13(2):60–66.

- Rieskamp J, Hoffrage U. Inferences under time pressure: How opportunity costs affect strategy selection. Acta Psychologica. 2008;127:258–276. doi: 10.1016/j.actpsy.2007.05.004.

- Safran JD, Muran JC. Resolving therapeutic alliance ruptures: Diversity and integration. Journal of Clinical Psychology. 2000;56:233–243. doi: 10.1002/(sici)1097-4679(200002)56:2<233::aid-jclp9>3.0.co;2-3.

- Safran JD, Muran JC. Has the concept of the alliance outlived its usefulness? Psychotherapy: Theory, Research, Practice, Training. 2006;43:286–291. doi: 10.1037/0033-3204.43.3.286.

- Safran JD, Muran JC, Samstag LW, Winston A. Evaluating alliance-focused intervention for potential treatment failures: A feasibility study and descriptive analysis. Psychotherapy: Theory, Research, Practice, Training. 2005;42:512–531.

- Schön DA. The reflective practitioner: How professionals think in action. New York: Basic Books; 1983.

- Skov RB, Sherman SJ. Information-gathering processes: Diagnosticity, hypothesis-confirmatory strategies, and perceived hypothesis confirmation. Journal of Experimental Social Psychology. 1986;22(2):93–121.

- Sloman SA. The empirical case for two systems of reasoning. Psychological Bulletin. 1996;119:3–22.

- Sloman SA. Two systems of reasoning. In: Gilovich T, Griffin D, Kahneman D, editors. Heuristics and biases: The psychology of intuitive judgment. Cambridge: Cambridge University Press; 2002. pp. 379–398.

- Snyder M, Swann WB. Hypothesis-testing processes in social interaction. Journal of Personality and Social Psychology. 1978;36(11):1202–1212.

- Stanovich KE. Who is rational? Studies of individual differences in reasoning. Mahwah, NJ: Lawrence Erlbaum Associates; 1999.

- Stanovich KE. On the distinction between rationality and intelligence: Implications for understanding individual differences in reasoning. In: Holyoak K, Morrison R, editors. The Oxford handbook of thinking and reasoning. New York: Oxford University Press; 2012. pp. 343–365.

- Stanovich KE, West RF. Individual differences in reasoning: Implications for the rationality debate? Behavioral and Brain Sciences. 2000;23(5):645–665. doi: 10.1017/s0140525x00003435.

- Stiles WB, Shapiro DA, Elliott R. Are all psychotherapies equivalent? American Psychologist. 1986;41:165–180. doi: 10.1037//0003-066x.41.2.165.

- Strasser J, Gruber H. The role of experience in professional training and development of psychological counsellors. In: Boshuizen HPA, Bromme R, Gruber H, editors. Professional learning: Gaps and transitions on the way from novice to expert. Netherlands: Springer; 2004. pp. 11–27.

- Strohmer DC, Shivy VA, Chiodo AL. Information processing strategies in counselor hypothesis testing: The role of selective memory and expectancy. Journal of Counseling Psychology. 1990;37(4):465–472.

- Sundet R. Collaboration: Family and therapists’ perspectives of helpful therapy. Journal of Marital and Family Therapy. 2011;37:236–249. doi: 10.1111/j.1752-0606.2009.00157.x.