Abstract

Missing data occur frequently in a wide range of applications. In this paper, we consider estimation of high-dimensional covariance matrices in the presence of missing observations under a general missing completely at random model in the sense that the missingness is not dependent on the values of the data. Based on incomplete data, estimators for bandable and sparse covariance matrices are proposed and their theoretical and numerical properties are investigated.

Minimax rates of convergence are established under the spectral norm loss and the proposed estimators are shown to be rate-optimal under mild regularity conditions. Simulation studies demonstrate that the estimators perform well numerically. The methods are also illustrated through an application to data from four ovarian cancer studies. The key technical tools developed in this paper are of independent interest and potentially useful for a range of related problems in high-dimensional statistical inference with missing data.

Keywords: Adaptive thresholding, bandable covariance matrix, generalized sample covariance matrix, missing data, optimal rate of convergence, sparse covariance matrix, thresholding

1 Introduction

The problem of missing data arises frequently in a wide range of fields, including biomedical studies, social science, engineering, economics, and computer science. Statistical inference in the presence of missing observations has been well studied in classical statistics. See, e.g., Ibrahim and Molenberghs [18] for a review of missing data methods in longitudinal studies and Schafer [26] for literature on handling multivariate data with missing observations. See Little and Rubin [20] and the references therein for a comprehensive treatment of missing data problems.

Missing data also occurs in contemporary high-dimensional inference problems, whose dimension p can be comparable to or even much larger than the sample size n. For example, in large-scale genome-wide association studies (GWAS), it is common for many subjects to have missing values on some genetic markers due to various reasons, including insufficient resolution, image corruption, and experimental error during the laboratory process. Also, different studies may have different volumes of genomic data available by design. For instance, the four genomic ovarian cancer studies discussed in Section 4 have throughput measurements of mRNA gene expression levels, but only one of these also has microRNA measurements (Cancer Genome Atlas Research Network [11], Bonome et al. [4], Tothill et al. [27] and Dressman et al. [15]). Discarding samples with any missingness is highly inefficient and could induce bias due to non-random missingness. It is of significant interest to integrate multiple high-throughput studies of the same disease, not only to boost statistical power but also to improve the biological interpretability. However, considerable challenges arise when integrating such studies due to missing data.

Although there have been significant recent efforts to develop methodologies and theories for high dimensional data analysis, there is a paucity of methods with theoretical guarantees for statistical inference with missing data in the high-dimensional setting. Under the assumption that the components are missing uniformly and completely at random (MUCR), Loh and Wainwright [21] proposed a non-convex optimization approach to high-dimensional linear regression, Lounici [23] introduced a method for estimating a low-rank covariance matrix and Lounici [22] considered sparse principal component analysis. In these papers, theoretical properties of the procedures were analyzed. These methods and theoretical results critically depend on the MUCR assumption.

Covariance structures play a fundamental role in high-dimensional statistics. They are of direct interest in a wide range of applications including genomic data analysis, particularly for hypothesis generation. Knowledge of the covariance structure is critical to many statistical methods, including discriminant analysis, principal component analysis, clustering analysis, and regression analysis. In the high-dimensional setting with complete data, inference on the covariance structure has been actively studied in recent years. See Cai, Ren and Zhou [7] for a survey of recent results on minimax and adaptive estimation of high-dimensional covariance and precision matrices under various structural assumptions. Estimation of high-dimensional covariance matrices in the presence of missing data also has wide applications in biomedical studies, particularly in integrative genomic analysis, which holds great potential in providing a global view of genome function (see Hawkins et al. [17]).

In this paper, we consider estimation of high-dimensional covariance matrices in the presence of missing observations under a general missing completely at random (MCR) model in the sense that the missingness is not dependent on the values of the data. Let X1, . . . , Xn be n independent copies of a p-dimensional random vector X with mean μ and covariance matrix Σ. Instead of observing the complete sample {X1, . . . , Xn}, one observes the sample with missing values, where the observed coordinates of Xk are indicated by a vector Sk ∈ {0, 1}^p, k = 1, . . ., n. That is,

$$X_{jk} \text{ is observed if } S_{jk} = 1, \qquad X_{jk} \text{ is missing if } S_{jk} = 0. \tag{1}$$

Here Xjk and Sjk are respectively the jth coordinate of the vectors Xk and Sk. We denote the incomplete sample with missing values by X*. The major goal of the present paper is to estimate Σ, the covariance matrix of X, with theoretical guarantees based on the incomplete data X* in the high-dimensional setting where p can be much larger than n.

This paper focuses on estimation of high-dimensional bandable covariance matrices and sparse covariance matrices in the presence of missing data. These two classes of covariance matrices arise frequently in many applications, including genomics, econometrics, signal processing, temporal and spatial data analyses, and chemometrics. Estimation of these high-dimensional structured covariance matrices has been well studied in the setting of complete data in a number of recent papers, e.g., Bickel and Levina [2, 3], El Karoui [16], Rothman et al. [24], Cai and Zhou [10], Cai and Liu [5], Cai et al. [6, 9] and Cai and Yuan [8]. Given an incomplete sample X* with missing values, we introduce a “generalized” sample covariance matrix, which can be viewed as an analog of the usual sample covariance matrix in the case of complete data. For estimation of bandable covariance matrices, where the entries of the matrix decay as they move away from the diagonal, a blockwise tridiagonal estimator is introduced and is shown to be rate-optimal. We then consider estimation of sparse covariance matrices. An adaptive thresholding estimator based on the generalized sample covariance matrix is proposed. The estimator is shown to achieve the optimal rate of convergence over a large class of approximately sparse covariance matrices under mild conditions.

The technical analysis for the case of missing data is much more challenging than that for the complete data, although some of the basic ideas are similar. To facilitate the theoretical analysis of the proposed estimators, we establish two key technical results: first, a large deviation result for a sub-matrix of the generalized sample covariance matrix, and second, a large deviation bound for the self-normalized entries of the generalized sample covariance matrix. These technical tools are not only important for the present paper, but also useful for other related problems in high-dimensional statistical inference with missing data.

A simulation study is carried out to examine the numerical performance of the proposed estimation procedures. The results show that the proposed estimators perform well numerically. Even in the MUCR setting, our proposed procedures for estimating bandable and sparse covariance matrices, which do not rely on the information of the missingness mechanism, outperform the ones specifically designed for MUCR. The advantages are more significant under the setting of missing completely at random but not uniformly. We also illustrate our procedure with an application to data from four ovarian cancer studies that have different volumes of genomic data by design. The proposed estimators enable us to estimate the covariance matrix by integrating the data from all four studies and lead to a more accurate estimator. Such high-dimensional covariance matrix estimation with missing data is also useful for other types of data integration. See further discussions in Section 4.4.

The rest of the paper is organized as follows. Section 2 considers estimation of bandable covariance matrices with incomplete data. The minimax rate of convergence is established for the spectral norm loss under regularity conditions. Section 3 focuses on estimation of high-dimensional sparse covariance matrices and introduces an adaptive thresholding estimator in the presence of missing observations. Asymptotic properties of the estimator under the spectral norm loss are also studied. Numerical performance of the proposed methods is investigated in Section 4 through both simulation studies and an analysis of an ovarian cancer dataset. Section 5 discusses a few related problems. Finally, the proofs of the main results are given in Section 6 and the Supplement.

2 Estimation of Bandable Covariance Matrices

In this section, we consider estimation of bandable covariance matrices with incomplete data. Bandable covariance matrices, whose entries decay as they move away from the diagonal, arise frequently in temporal and spatial data analysis. See, e.g., Bickel and Levina [2] and Cai et al. [7] and the references therein. The procedure relies on a “generalized” sample covariance matrix. We begin with basic notation and definitions that will be used throughout the rest of the paper.

2.1 Notation and Definitions

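Matrices and vectors are denoted by boldface letters. For a vector $v \in \mathbb{R}^p$, we denote the Euclidean q-norm by $\|v\|_q$, i.e., $\|v\|_q = \left(\sum_{i=1}^p |v_i|^q\right)^{1/q}$. Let $A = UDV^\top$ be the singular value decomposition of a matrix $A \in \mathbb{R}^{p_1 \times p_2}$, where D = diag{λ1(A),...} with λ1(A) ≥ · · · ≥ 0 being the singular values. For 1 ≤ q ≤ ∞, the Schatten-q norm ∥A∥q is defined by $\|A\|_q = \left(\sum_i \lambda_i^q(A)\right)^{1/q}$. In particular, $\|A\|_2 = \left(\sum_i \lambda_i^2(A)\right)^{1/2}$ is the Frobenius norm of A and will be denoted as ∥A∥F; ∥A∥∞ = λ1(A) is the spectral norm of A and will be simply denoted as ∥A∥. For 1 ≤ q ≤ ∞ and $A \in \mathbb{R}^{p_1 \times p_2}$, we denote the operator norm of A by $\|A\|_{\ell_q}$, which is defined as $\|A\|_{\ell_q} = \max_{x \neq 0} \|Ax\|_q / \|x\|_q$. The following are well known facts about the various norms of a matrix A = (aij),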

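$$\|A\|_{\ell_1} = \max_{j} \sum_{i} |a_{ij}|, \qquad \|A\|_{\ell_\infty} = \max_{i} \sum_{j} |a_{ij}|, \qquad \|A\| \le \sqrt{\|A\|_{\ell_1} \|A\|_{\ell_\infty}}, \tag{2}$$

and, if A is symmetric, $\|A\|_{\ell_1} = \|A\|_{\ell_\infty} \ge \|A\|$. When R1, R2 are two subsets of {1,..., p1}, {1,..., p2} respectively, we denote by AR1 × R2 = (aij)i∈R1,j∈R2 the sub-matrix of A with indices R1 and R2. In addition, we simply write AR1 × R1 as AR1.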
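The three losses reported later in the numerical section (spectral norm, matrix ℓ1 norm and Frobenius norm) can be computed directly with NumPy. The following is a minimal sketch; the function name `matrix_losses` and the small example matrix are illustrative choices and are not part of the paper.

```python
import numpy as np

def matrix_losses(estimate, truth):
    """Return the spectral, matrix l1 and Frobenius norms of estimate - truth."""
    diff = np.asarray(estimate, dtype=float) - np.asarray(truth, dtype=float)
    return {
        "spectral": np.linalg.norm(diff, 2),       # largest singular value
        "l1": np.linalg.norm(diff, 1),             # maximum absolute column sum
        "frobenius": np.linalg.norm(diff, "fro"),  # root of the sum of squared entries
    }

# Illustrative symmetric matrix with eigenvalues 1 and 3.
A = np.array([[2.0, -1.0], [-1.0, 2.0]])
losses = matrix_losses(A, np.zeros((2, 2)))
```

For a symmetric matrix such as this one, the spectral norm is bounded by the matrix ℓ1 norm, which is why a bound in the ℓ1 norm is often used as a convenient route to a spectral norm bound.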

We denote by X1,..., Xn a complete random sample (without missing observations) from a p-dimensional distribution with mean μ and covariance matrix Σ. The sample mean and sample covariance matrix are defined as

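$$\bar{X} = \frac{1}{n} \sum_{k=1}^{n} X_k, \qquad \hat{\Sigma} = \frac{1}{n} \sum_{k=1}^{n} (X_k - \bar{X})(X_k - \bar{X})^\top. \tag{3}$$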

Now we introduce the notation related to the incomplete data with missing observations. Generally, we use the superscript “*” to denote objects related to missing values. Let S1, . . ., Sn be the indicator vectors for the observed values (see (1)) and let X* be the observed incomplete data where the observed entries are indexed by the vectors S1, . . ., Sn ∈ {0, 1}^p. In addition, we define

$$n_{ij}^* = \sum_{k=1}^{n} S_{ik} S_{jk}, \qquad 1 \le i, j \le p. \tag{4}$$

Here n*ij is the number of vectors in which the ith and jth entries are both observed. For convenience, we also denote

| (5) |

Given a sample with missing values, the sample mean and sample covariance matrix can no longer be calculated in the usual way. Instead, we propose the “generalized sample mean” defined by

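$$\bar{X}^* = (\bar{X}_i^*)_{1 \le i \le p}, \qquad \bar{X}_i^* = \frac{1}{n_{ii}^*} \sum_{k=1}^{n} X_{ik} S_{ik}, \tag{6}$$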

where Xik is the ith entry of Xk, and the “generalized sample covariance matrix” defined by

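$$\hat{\Sigma}^* = (\hat{\sigma}_{ij}^*)_{1 \le i, j \le p}, \qquad \hat{\sigma}_{ij}^* = \frac{1}{n_{ij}^*} \sum_{k=1}^{n} (X_{ik} - \bar{X}_i^*)(X_{jk} - \bar{X}_j^*) S_{ik} S_{jk}. \tag{7}$$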

As will be seen later, the generalized sample mean and the generalized sample covariance matrix play roles similar to those of the conventional sample mean and sample covariance matrix in inference problems, but the technical analysis can be much more involved. Some distinctions between the generalized sample covariance matrix Σ̂* and the usual sample covariance matrix are that Σ̂* is in general not non-negative definite, and that each entry σ̂*ij is the average of a varying number of samples, which creates additional difficulties in the technical analysis.
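To make the construction concrete, the following is a minimal NumPy sketch of a generalized sample mean and covariance computed from masked data. It assumes the natural pairwise-complete form: each coordinate mean averages the observed entries of that coordinate, and each covariance entry averages the centered products over the samples in which both coordinates are observed. The function name, the (n, p) row-per-sample layout and the requirement that every pair of coordinates be jointly observed at least once are assumptions of this sketch.

```python
import numpy as np

def generalized_sample_cov(X, S):
    """X: (n, p) data, entries at missing positions are ignored (may be NaN).
    S: (n, p) 0/1 mask, 1 = observed. Returns (mean, cov, n_ij)."""
    S = np.asarray(S, dtype=float)
    X0 = np.where(S > 0, np.asarray(X, dtype=float), 0.0)  # zero-fill missing entries
    n_i = S.sum(axis=0)          # number of observations of each coordinate
    n_ij = S.T @ S               # number of joint observations of each pair
    mean = X0.sum(axis=0) / n_i  # per-coordinate mean over observed entries
    R = (X0 - mean) * S          # centered values, zeroed where missing
    cov = (R.T @ R) / n_ij       # entrywise average over jointly observed samples
    return mean, cov, n_ij

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
S = (rng.random((200, 4)) < 0.7).astype(int)
X_obs = np.where(S == 1, X, np.nan)  # what one would actually observe
mean, cov, n_ij = generalized_sample_cov(X_obs, S)
```

With a full mask the result reduces to the usual sample covariance with 1/n normalization. With missing entries, different entries of `cov` are averages over different subsets of samples, so the matrix need not be non-negative definite.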

Regarding the mechanism of missingness, the assumption we use for the theoretical analysis is missing completely at random. This is a more general setting than the one considered previously by Loh and Wainwright [21] and Lounici [22].

Assumption 2.1 (Missing Completely at Random (MCR)) S = {S1, . . . , Sn} is not dependent on the values of X. Here S can be either deterministic or random, but independent of X.

We adopt Assumption 1 in Chen et al. [13] and assume that the random vector X is sub-Gaussian satisfying the following assumption.

Assumption 2.2 (Sub-Gaussian Assumption) X = {X1, . . . , Xn}. Here the columns Xk are i.i.d. and can be expressed as

$$X_k = \Gamma Z_k + \mu, \qquad k = 1, \ldots, n, \tag{8}$$

where μ is a fixed p-dimensional mean vector, Γ is a fixed p × m matrix with m ≥ p so that ΓΓ⊤ = Σ, and Zk = (Z1k, . . . , Zmk)⊤ is an m-dimensional random vector whose components have mean 0 and variance 1 and are i.i.d. sub-Gaussian, with the exception of i.i.d. Rademacher. More specifically, each Zik satisfies EZik = 0 and var(Zik) = 1, and there exists τ > 0 such that E exp(tZik) ≤ exp(τt²/2) for all t > 0.

Note that the exclusion of the Rademacher distribution in Assumption 2.2 is only required for estimation of sparse covariance matrices. See Remark 3.3 for further discussions.

2.2 Rate-optimal Blockwise Tridiagonal Estimator

We follow Bickel and Levina [2] and Cai et al. [9] and consider estimating the covariance matrix Σ over the parameter space where

| (9) |

Suppose we have n i.i.d. samples with missing values with covariance matrix Σ. We propose a blockwise tridiagonal estimator to estimate Σ. We begin by dividing the generalized sample covariance matrix Σ̂* given by (7) into blocks of size k × k for some k. More specifically, pick an integer k and let N = ⌈p/k⌉. Set Ij = {(j – 1)k + 1, . . ., jk} for 1 ≤ j ≤ N – 1, and IN = {(N – 1)k + 1, . . ., p}. For 1 ≤ j, j′ ≤ N and A = (ai1,i2)p×p, define

and define the blockwise tridiagonal estimator by

| (10) |

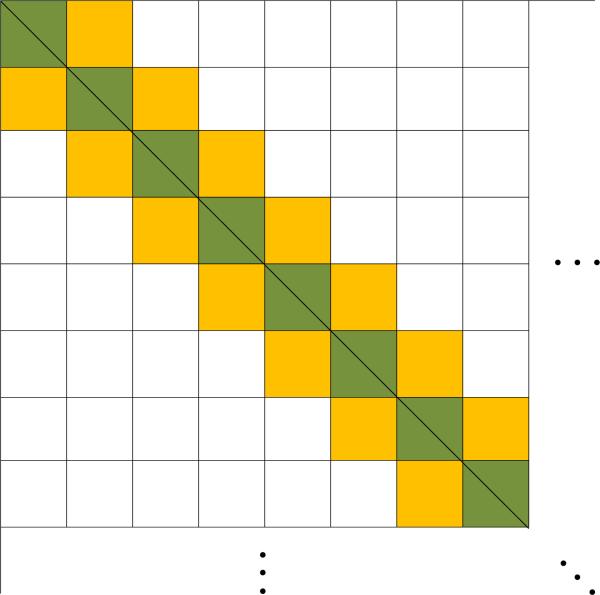

That is, the (j, j′)th block of Σ is estimated by its sample counterpart, the corresponding block of Σ̂*, if and only if j and j′ differ by at most 1; all other blocks are set to zero. The weight matrix of the blockwise tridiagonal estimator is illustrated in Figure 1.

Figure 1. Weight matrix for the blockwise tridiagonal estimator.
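The blockwise tridiagonal construction can be sketched as a 0/1 weight mask applied to any p × p covariance estimate: coordinates are grouped into consecutive blocks of size k (the last block may be shorter), and an entry is kept only when its row block and column block differ by at most one. The function name below is illustrative, and the input is assumed to be a generalized sample covariance matrix computed elsewhere.

```python
import numpy as np

def blockwise_tridiagonal(cov, k):
    """Keep the entries of cov whose row and column blocks differ by at most 1."""
    cov = np.asarray(cov, dtype=float)
    p = cov.shape[0]
    block = np.arange(p) // k  # 0-based block index; last block holds the remainder
    keep = np.abs(block[:, None] - block[None, :]) <= 1
    return np.where(keep, cov, 0.0)

# With p = 5 and k = 2 the blocks are {1, 2}, {3, 4}, {5} in 1-based indexing.
est = blockwise_tridiagonal(np.ones((5, 5)), 2)
```

In this small example only the entries linking the first block to the third block are zeroed, which mirrors the tridiagonal pattern of the weight matrix at the block level.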

Theorem 2.1 Suppose Assumptions 2.1 and 2.2 hold. Then, conditioning on S, the blockwise tridiagonal with satisfies

| (11) |

where C is a constant depending only on M, M0, and τ from Assumption 2.2.

The optimal choice of block size k depends on the unknown “smoothness parameter” α. In practice, k can be chosen by cross-validation. See Section 4.1 for further discussions. Moreover, the convergence rate in (11) is optimal as we also have the following lower bound result.

Proposition 2.1 For any n0 ≥ 1 such that p ≤ exp(γn0) for some constant γ > 0, conditioning on S we have

Remark 2.1 (Tapering and banding estimators) It should be noted that the same rate of convergence can also be attained by tapering and banding estimators with suitable choices of tapering and banding parameters. Specifically, let and be respectively the tapering and banded estimators proposed in Cai et al. [9] and Bickel and Levina [2] with

| (12) |

where and are the weights defined as

| (13) |

Then the estimators and with attains the rate given in (11).

The proof of Theorem 2.1 shares some basic ideas with that for the complete data case (see, e.g., Theorem 2 in Cai et al. [9]). However, it relies on a new key technical tool, which is a large deviation result for a sub-matrix of the generalized sample covariance matrix under the spectral norm. This random matrix result for the case of missing data, stated in the following lemma, can be potentially useful for other related high-dimensional missing data problems. The proof of Lemma 2.1, given in Section 6, is more involved than in the complete data case, because each entry σ̂*ij of the generalized sample covariance matrix is the average of a varying number of samples.

Lemma 2.1 Suppose Assumptions 2.1 and 2.2 hold. Let Σ̂* be the generalized sample covariance matrix defined in (7) and let A and B be two subsets of {1, . . ., p}. Then, conditioning on S, the submatrix Σ̂*A×B satisfies

| (14) |

for all x > 0. Here C > 0 and c > 0 are two absolute constants.

3 Estimation of Sparse Covariance Matrices

In this section, we consider estimation of high-dimensional sparse covariance matrices in the presence of missing data. We introduce an adaptive thresholding estimator based on incomplete data and investigate its asymptotic properties.

3.1 Adaptive Thresholding Procedure

Sparse covariance matrices arise naturally in a range of applications including genomics. Estimation of sparse covariance matrices has been considered in several recent papers in the setting of complete data (see, e.g., Bickel and Levina [3], El Karoui [16], Rothman et al. [24], Cai and Zhou [10] and Cai and Liu [5]). Estimation of a sparse covariance matrix is intrinsically a heteroscedastic problem in the sense that the variances of the entries of the sample covariance matrix can vary over a wide range. To treat the heteroscedasticity of the sample covariances, Cai and Liu [5] introduced an adaptive thresholding procedure which adapts to the variability of the individual entries of the sample covariance matrix and outperforms the universal thresholding method. The estimator is shown to be simultaneously rate optimal over collections of sparse covariance matrices.

In the present setting of missing data, the usual sample covariance matrix is not available. Instead we apply the idea of adaptive thresholding to the generalized sample covariance matrix Σ̂*. The procedure can be described as follows. Since Σ̂* defined in (7) is a nearly unbiased estimate of Σ, we may write it element-wise as

where zi is approximately normal with mean 0 and variance 1, and θij describes the uncertainty of estimator to σij such that

We can estimate θij by

| (15) |

Lemma 3.1 given at the end of this section shows that θ̂ij is a good estimate of θij.

Since the covariance matrix Σ is assumed to be sparse, it is natural to estimate Σ by individually thresholding each entry σ̂*ij according to its own variability as measured by θ̂ij. Define the thresholding level λij by

where δ is a thresholding constant which can be taken as 2.

Let Tλ be a thresholding function satisfying the following conditions,

- (1). |Tλ(z)| ≤ cT|y| for all z, y such that |z – y| ≤ λ;
- (2). Tλ(z) = 0 for |z| ≤ λ;
- (3). |Tλ(z) – z| ≤ λ, for all z ∈ ℝ.

These conditions are met by many widely used thresholding functions, including the soft thresholding rule Tλ(z) = sgn(z)(|z| – λ)+, where sgn(z) is the sign function such that sgn(z) = 1 if z > 0, sgn(z) = 0 if z = 0, and sgn(z) = −1 if z < 0, and the adaptive lasso rule Tλ(z) = z(1 – |λ/z|^η)+ with η ≥ 1 (see Rothman et al. [24]). The hard thresholding function does not satisfy Condition (1), but our analysis also applies to hard thresholding under similar conditions.
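The two thresholding rules named above can be sketched in a few lines of NumPy, together with a numerical check of Conditions (2) and (3) on a grid. The function names and the choice of exponent `eta = 2.0` are illustrative assumptions; the threshold `lam` may be a scalar or an array of entry-specific levels with the same shape as `z`.

```python
import numpy as np

def soft_threshold(z, lam):
    """Soft thresholding: shrink |z| by lam and keep the sign."""
    z = np.asarray(z, dtype=float)
    return np.sign(z) * np.maximum(np.abs(z) - lam, 0.0)

def adaptive_lasso_threshold(z, lam, eta=2.0):
    """Adaptive lasso rule z * (1 - |lam / z|**eta)_+ (eta is an illustrative choice)."""
    z = np.asarray(z, dtype=float)
    lam = np.broadcast_to(np.asarray(lam, dtype=float), z.shape)
    big = np.abs(z) > lam                  # entries that survive thresholding
    safe = np.where(big, np.abs(z), 1.0)   # avoid division by zero where z is killed
    return np.where(big, z * (1.0 - (lam / safe) ** eta), 0.0)

# Check Conditions (2) and (3) on a grid with lam = 1.
grid_z = np.linspace(-3.0, 3.0, 25)
checks = {}
for name, rule in (("soft", soft_threshold), ("alasso", adaptive_lasso_threshold)):
    out = rule(grid_z, 1.0)
    checks[name] = (
        bool(np.all(out[np.abs(grid_z) <= 1.0] == 0.0)),      # Condition (2)
        bool(np.all(np.abs(out - grid_z) <= 1.0 + 1e-12)),    # Condition (3)
    )
```

Both rules shrink surviving entries toward zero, in contrast to hard thresholding, which leaves them unchanged.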

The covariance matrix Σ is estimated by where is the thresholding estimator defined by

| (16) |

Note that here each entry is thresholded according to its own variability.

3.2 Asymptotic Properties

We now investigate the properties of the thresholding estimator over the following parameter space for sparse covariance matrices,

| (17) |

The parameter space contains a large collection of sparse covariance matrices and does not impose any constraint on the variances σii, i = 1, . . ., p. The collection contains some other commonly used classes of sparse covariance matrices in the literature, including an ℓq ball assumption in Bickel and Levina [3], and weak ℓq ball assumption for each integer k in Cai and Zhou [10] where |σj[k]| is the kth largest entry in magnitude of the jth row (σij)1≤i≤p. See Cai et al. [7] for more discussions.

We have the following result on the performance of over the parameter space .

Theorem 3.1 Suppose that δ ≥ 2, ln and Assumptions 2.1 and 2.2 hold. Then, conditioning on S, there exists a constant C not depending on p, or n such that for any ,

| (18) |

Moreover, if we further assume that and δ ≥ 4 + 1/ξ, we in addition have

| (19) |

Moreover, the lower bound result below shows that the rate in (19) is optimal.

Proposition 3.1 For any n0 ≥ 1 and cn,p > 0 such that for some constant M > 0, conditioning on S we have

Remark 3.1 (ℓq norm loss) We focus in this paper on estimation under the spectral norm loss. The results given in Theorem 3.1 can be easily generalized to the general matrix ℓq norm for 1 ≤ q ≤ ∞. The results given in Equations (18) and (19) remain valid when the spectral norm is replaced by the matrix ℓq norm for 1 ≤ q ≤ ∞.

Remark 3.2 (Positive definiteness) Under mild conditions on Σ, the adaptive thresholding estimator is positive definite with high probability. However, it is not guaranteed to be positive definite for a given data set. Whenever it is not positive semi-definite, a simple extra step can make the final estimator positive definite and also rate-optimal.

Write the eigen-decomposition of as , where are the eigenvalues and v̂i are the corresponding eigenvectors. Define the final estimator

where Ip×p is the p × p identity matrix. Then is a positive definite matrix with the same structure as that of . It is easy to show that and attains the same rate of convergence over . See Cai, Ren and Zhou [7] for further discussions.

Remark 3.3 (Exclusion of the Rademacher Distribution) To guarantee that θ̂ij is a good estimate of θij, one important condition needed in the theoretical analysis is that is bounded from below by a positive constant. However, when the components of Zk in (8) are i.i.d. Rademacher, it is possible that . For example, if Z1 and Z2 are i.i.d. Rademacher and Xi = Z1 – Z2, then , and this implies .

A key technical tool in the analysis of the adaptive thresholding estimator is a large deviation result for the self-normalized entries of the generalized sample covariance matrix. The following lemma, proved in Section 6, plays a critical role in the proof of Theorem 3.1 and can be useful for other high-dimensional inference problems with missing data.

Lemma 3.1 Suppose ln and Assumptions 2.1 and 2.2 hold. For any constants δ ≥ 2, ε > 0, M > 0, conditioning on S, we have

| (20) |

| (21) |

In addition to optimal estimation of a sparse covariance matrix Σ under the spectral norm loss, it is also of significant interest to recover the support of Σ, i.e., the locations of the nonzero entries of Σ. The problem has been studied in the case of complete data in, e.g., Cai and Liu [5] and Rothman et al. [24]. With incomplete data, the support can be similarly recovered through adaptive thresholding. Specifically, define the support of Σ = (σij)1≤i,j≤p by supp(Σ) = {(i, j) : σij ≠ 0}. Under the condition that the non-zero entries of Σ are sufficiently bounded away from zero, the adaptive thresholding estimator recovers the support supp(Σ) consistently. It is noteworthy that in the support recovery analysis, the sparsity assumption is not directly needed.

Theorem 3.2 (Support Recovery) Suppose ln and Assumptions 2.1 and 2.2 hold. Let γ be any positive constant. Suppose Σ satisfies

| (22) |

Let be the adaptive thresholding estimator with δ = 2, then, conditioning on S, we have

| (23) |

4 Numerical Results

We investigate in this section the numerical performance of the proposed estimators through simulations. The proposed adaptive thresholding procedure is also illustrated with an estimation of the covariance matrix based on data from four ovarian cancer studies.

The estimators and introduced in the previous sections all require specification of the tuning parameters (k or δ). Cross-validation is a simple and practical data-driven method for the selection of these tuning parameters. Numerical results indicate that the proposed estimators with the tuning parameter selected by cross-validation perform well empirically. We begin by introducing the following K-fold cross-validation method for the empirical selection of the tuning parameters.

4.1 Cross-validation

For a pre-specified positive integer N, we construct a grid T of non-negative numbers. For bandable covariance matrix estimation, we set T = {1, ⌈p^(1/N)⌉, ..., ⌈p^(N/N)⌉}, and for sparse covariance matrix estimation, we let T = {0, 1/N, ..., 4N/N}.

Given n samples with missing values, for a given positive integer K, we randomly divide them into two groups of size n1 ≈ n(K – 1)/K, n2 ≈ n/K for H times. For h = 1, . . . , H, we denote by and the index sets of the two groups for the h-th split. The proposed estimator, for bandable covariance matrices, or for sparse covariance matrices, is then applied to the first group of data with each value of the tuning parameter t ∈ T and denote the result by or respectively. Denote the generalized sample covariance matrix of the second group of data by and set

| (24) |

where is either for bandable covariance matrices, or for sparse covariance matrices.

The final tuning parameter is chosen to be

and the final estimator (or ) is calculated using this choice of the tuning parameter t*. In the following numerical studies, we will use 5-fold cross-validation (i.e., K = 5) to select the tuning parameters.
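The selection procedure above can be sketched as follows for the bandable case. Several details are assumptions of this sketch rather than statements from the paper: the criterion is taken to be the average squared Frobenius distance between the estimator fitted on the first group and the generalized sample covariance matrix of the second group, the helper names are hypothetical, data are stored with one sample per row, and every pair of coordinates is assumed to be jointly observed at least once in each group.

```python
import numpy as np

def gen_cov(X, S):
    """Pairwise-complete covariance from (n, p) data X and 0/1 mask S (assumed form)."""
    S = np.asarray(S, dtype=float)
    X0 = np.where(S > 0, X, 0.0)
    mean = X0.sum(axis=0) / S.sum(axis=0)
    R = (X0 - mean) * S
    return (R.T @ R) / (S.T @ S)

def block_tridiag(cov, k):
    """Zero out entries whose row and column blocks (size k) differ by more than 1."""
    block = np.arange(cov.shape[0]) // int(k)
    return np.where(np.abs(block[:, None] - block[None, :]) <= 1, cov, 0.0)

def cv_select(X, S, estimator, grid, K=5, H=10, seed=0):
    """Pick the tuning parameter in grid minimizing the averaged validation loss."""
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    n2 = n // K                                # size of the validation group
    risk = np.zeros(len(grid))
    for _ in range(H):
        perm = rng.permutation(n)
        valid, train = perm[:n2], perm[n2:]
        target = gen_cov(X[valid], S[valid])   # second-group covariance
        for a, t in enumerate(grid):
            fit = estimator(X[train], S[train], t)
            risk[a] += np.linalg.norm(fit - target, "fro") ** 2 / H
    return grid[int(np.argmin(risk))], risk

# Illustrative run: linearly decaying covariance, entries observed with probability 0.7.
rng = np.random.default_rng(1)
p, n, N = 20, 200, 10
idx = np.arange(p)
Sigma = np.maximum(0.0, 1.0 - np.abs(idx[:, None] - idx[None, :]) / 5.0)
X = rng.multivariate_normal(np.zeros(p), Sigma, size=n)
S = (rng.random((n, p)) < 0.7).astype(int)
grid = sorted({int(np.ceil(p ** (j / N))) for j in range(N + 1)})
k_star, risk = cv_select(X, S, lambda X_, S_, k: block_tridiag(gen_cov(X_, S_), k),
                         grid, K=5, H=5)
```

Passing the estimator as a function of the training data and the tuning parameter keeps the selection loop independent of the estimator, so the same loop could in principle be reused for a thresholding estimator with a grid of δ values.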

Remark 4.1 The Frobenius norm used in (24) can be replaced by other losses such as the spectral norm. Our simulation results indicate that using the Frobenius norm in (24) works well, even when the true loss is the spectral norm loss.

4.2 Simulation Studies

In the simulation studies, we consider the following two settings for the missingness. The first is MUCR where each entry Xik is observed with probability 0 < ρ ≤ 1, and the second is missing not uniformly but completely at random (MCR) where the complete data matrix X is divided into four equal-size blocks,

and each entry of X(11) and X(22) is observed with probability ρ(1) and each entry of X(12) and X(21) is observed with probability ρ(2), for some 0 < ρ(1), ρ(2) ≤ 1.
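The two missingness mechanisms can be simulated with independent Bernoulli masks. The sketch below stores one sample per row, so the data matrix is n × p (the transpose of the column-per-sample layout); since the two diagonal blocks share one probability and the two off-diagonal blocks share the other, the block pattern is unaffected by this choice. The function names are illustrative.

```python
import numpy as np

def mucr_mask(n, p, rho, rng):
    """MUCR: every entry is observed independently with probability rho."""
    return (rng.random((n, p)) < rho).astype(int)

def block_mcr_mask(n, p, rho1, rho2, rng):
    """Block MCR: diagonal blocks observed w.p. rho1, off-diagonal blocks w.p. rho2."""
    prob = np.full((n, p), float(rho2))
    prob[: n // 2, : p // 2] = rho1   # block (11)
    prob[n // 2 :, p // 2 :] = rho1   # block (22)
    return (rng.random((n, p)) < prob).astype(int)

rng = np.random.default_rng(0)
S_mucr = mucr_mask(400, 100, 0.5, rng)
S_mcr = block_mcr_mask(400, 100, 0.8, 0.2, rng)
```

Because the masks are drawn without reference to the data values, both mechanisms satisfy the missing completely at random assumption; only the first has a single observation probability for all entries.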

As mentioned in the introduction, high-dimensional inference for missing data has been studied in the case of MUCR and we would like to compare our estimators with the corresponding estimators based on a different sample covariance matrix designed for the MUCR case. Under the assumption that EX = 0 and each entry of X is observed independently with probability ρ, Loh and Wainwright [21] and Lounici [23] introduced the following substitute of the usual sample covariance matrix

| (25) |

where the missing entries of X* are replaced by 0's. It is easy to show that is a consistent estimator of under MUCR and could be used similarly as the sample covariance matrix in the complete data setting.

For more general settings where EX ≠ 0 and the coordinates X1, X2, . . ., Xp are observed with different probabilities ρ1, . . . , ρp, can be generalized as

| (26) |

where for i = 1, . . . , p and k = 1, . . . , n, and .

Based on , we can analogously define the corresponding blockwise tridiagonal estimator for bandable covariance matrices, and adaptive thresholding estimator for sparse covariance matrices.

We first consider estimation of bandable covariance matrices and compare the proposed blockwise tridiagonal estimator with the corresponding estimator . For both methods, the tuning parameter k is selected by 5-fold cross-validation with N varying from 20 to 50. The following bandable covariance matrices are considered:

(Linear decaying bandable model) Σ = (σij)1≤i,j≤p with σij = max{0, 1 – |i – j|/5}.

(Squared decaying bandable model) Σ = (σij)1≤i,j≤p with σij = (|i – j| + 1)−2.
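Both bandable models are Toeplitz matrices determined by the distance |i – j|, so they can be generated in a few lines. The function names below are illustrative.

```python
import numpy as np

def linear_decay_cov(p):
    """sigma_ij = max{0, 1 - |i - j| / 5}."""
    d = np.abs(np.subtract.outer(np.arange(p), np.arange(p)))
    return np.maximum(0.0, 1.0 - d / 5.0)

def squared_decay_cov(p):
    """sigma_ij = (|i - j| + 1)^(-2)."""
    d = np.abs(np.subtract.outer(np.arange(p), np.arange(p)))
    return (d + 1.0) ** -2

Sigma_lin = linear_decay_cov(50)
Sigma_sq = squared_decay_cov(50)
```

The linear model is exactly banded, with zero entries once |i – j| ≥ 5, while the squared model has entries that decay polynomially but never vanish.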

For missingness, both MUCR and MCR are considered, and (25) and (26) are used, respectively, to calculate the substitute sample covariance matrix. The proposed procedure is compared with the corresponding estimator based on this substitute. The results for the spectral norm, ℓ1 norm and Frobenius norm losses are reported in Table 1. It is easy to see from Table 1 that the proposed estimator generally outperforms its competitor, especially in the fast decaying setting.

Table 1. Comparison between the proposed estimator and the MUCR-based estimator in different settings of bandable covariance matrix estimation.

| (p, n) | Spectral norm (proposed) | Spectral norm (MUCR-based) | ℓ1 norm (proposed) | ℓ1 norm (MUCR-based) | Frobenius norm (proposed) | Frobenius norm (MUCR-based) |
|---|---|---|---|---|---|---|
| Linear Decay Bandable Model, MUCR ρ = .5 | | | | | | |
| (50, 50) | 2.78(0.17) | 2.88(0.18) | 4.37(0.57) | 4.57(0.76) | 7.73(0.85) | 7.85(0.80) |
| (50, 200) | 1.44(0.06) | 1.56(0.07) | 2.52(0.17) | 2.71(0.19) | 3.91(0.18) | 4.16(0.16) |
| (200, 100) | 2.25(0.13) | 2.44(0.16) | 3.83(0.32) | 4.22(0.46) | 10.27(0.29) | 10.89(0.29) |
| (200, 200) | 1.67(0.07) | 1.82(0.08) | 2.81(0.19) | 3.08(0.22) | 7.19(0.19) | 7.68(0.14) |
| (500, 200) | 2.00(0.07) | 2.18(0.10) | 3.45(0.16) | 3.74(0.27) | 12.10(0.36) | 12.87(0.42) |
| Squared Decay Bandable Model, MUCR ρ = .5 | | | | | | |
| (50, 50) | 1.34(0.08) | 1.40(0.11) | 2.28(0.16) | 2.37(0.21) | 3.78(0.19) | 3.91(0.18) |
| (50, 200) | 0.82(0.01) | 0.84(0.01) | 1.47(0.03) | 1.49(0.02) | 2.24(0.02) | 2.30(0.02) |
| (200, 100) | 1.13(0.01) | 1.17(0.02) | 2.12(0.05) | 2.18(0.07) | 5.74(0.04) | 5.91(0.05) |
| (200, 200) | 0.92(0.00) | 0.94(0.00) | 1.66(0.02) | 1.72(0.03) | 4.49(0.02) | 4.61(0.01) |
| (500, 200) | 0.97(0.00) | 0.98(0.00) | 1.80(0.02) | 1.86(0.02) | 7.15(0.01) | 7.35(0.01) |
| Linear Decay Bandable Model, MCR ρ(1) = .8, ρ(2) = .2 | | | | | | |
| (50, 50) | 2.76(0.26) | 3.46(1.43) | 4.24(0.73) | 5.87(2.91) | 7.03(1.25) | 8.47(1.29) |
| (50, 200) | 1.51(0.11) | 2.64(0.40) | 2.52(0.30) | 4.29(0.99) | 3.62(0.30) | 5.77(0.45) |
| (200, 100) | 2.32(0.22) | 3.93(0.67) | 3.73(0.47) | 6.21(1.11) | 9.04(0.48) | 13.47(0.84) |
| (200, 200) | 1.67(0.10) | 3.23(0.27) | 2.71(0.26) | 4.91(0.49) | 6.32(0.11) | 11.32(0.49) |
| (500, 200) | 1.98(0.09) | 3.78(0.20) | 3.19(0.20) | 5.70(0.42) | 10.39(0.12) | 18.48(0.49) |
| Squared Decay Bandable Model, MCR ρ(1) = .8, ρ(2) = .2 | | | | | | |
| (50, 50) | 1.26(0.08) | 1.49(0.13) | 2.21(0.23) | 2.60(0.28) | 3.48(0.14) | 4.18(0.23) |
| (50, 200) | 0.82(0.01) | 0.88(0.04) | 1.47(0.05) | 1.77(0.11) | 2.18(0.04) | 2.68(0.11) |
| (200, 100) | 1.06(0.01) | 1.30(0.04) | 1.96(0.04) | 2.44(0.07) | 5.32(0.02) | 6.51(0.06) |
| (200, 200) | 0.90(0.00) | 0.96(0.03) | 1.60(0.02) | 1.99(0.06) | 4.27(0.02) | 5.26(0.15) |
| (500, 200) | 0.93(0.00) | 1.03(0.01) | 1.69(0.01) | 2.11(0.03) | 6.73(0.01) | 8.25(0.04) |

Now we consider estimation of sparse covariance matrices with missing values under the following two models.

(Permutation Bandable Model) Σ = (σij)1≤i,j≤p, where σij = max(0, 1–0.2·|s(i)–s(j)|) and s(i), i = 1, . . ., p is a random permutation of {1, . . ., p}.

(Randomly Sparse Model) Σ = Ip + (D + D⊤)/(∥D + D⊤∥ + 0.01), where D is randomly generated as

Similar to the bandable covariance matrix estimation, for missingness, we consider both MUCR and MCR. The results for the spectral norm, matrix ℓ1 norm and Frobenius norm losses are summarized in Table 2. It can be seen from Table 2 that, even under the MUCR setting, the proposed estimator based on the generalized sample covariance matrix is uniformly better than the one based on the substitute sample covariance matrix in (25) or (26). In the more general MCR setting, the difference in the performance between the two estimators is even more significant.

Table 2. Comparison between the proposed estimator and the MUCR-based estimator in different settings of sparse covariance matrix estimation.

| (p, n) | Spectral norm (proposed) | Spectral norm (MUCR-based) | ℓ1 norm (proposed) | ℓ1 norm (MUCR-based) | Frobenius norm (proposed) | Frobenius norm (MUCR-based) |
|---|---|---|---|---|---|---|
| Permutation Bandable Model, MUCR ρ = .5 | | | | | | |
| (50, 50) | 4.26(0.24) | 4.45(0.41) | 5.58(0.58) | 6.19(7.54) | 11.34(0.79) | 11.73(1.08) |
| (50, 200) | 1.70(0.05) | 1.74(0.06) | 3.31(0.32) | 3.42(0.38) | 4.93(0.09) | 5.07(0.16) |
| (200, 100) | 3.48(0.07) | 3.66(0.58) | 5.80(0.39) | 6.23(14.89) | 18.34(0.81) | 19.37(5.50) |
| (200, 200) | 2.12(0.04) | 2.20(0.03) | 4.17(0.29) | 4.44(0.32) | 11.46(0.14) | 11.94(0.13) |
| (500, 200) | 2.28(0.03) | 3.51(0.17) | 4.17(0.15) | 6.55(0.72) | 16.85(0.10) | 21.96(0.49) |
| Randomly Sparse Model, MUCR ρ = .5 | | | | | | |
| (50, 50) | 1.76(0.07) | 1.96(0.62) | 3.69(0.24) | 4.20(5.89) | 5.75(0.51) | 6.27(2.95) |
| (50, 200) | 1.05(0.00) | 1.06(0.00) | 2.73(0.04) | 2.74(0.05) | 3.75(0.03) | 3.77(0.04) |
| (200, 100) | 1.40(0.01) | 1.45(0.01) | 4.88(0.08) | 4.94(0.09) | 8.34(0.07) | 8.50(0.07) |
| (200, 200) | 1.07(0.00) | 1.09(0.01) | 4.44(0.03) | 4.46(0.03) | 7.42(0.02) | 7.43(0.02) |
| (500, 200) | 1.14(0.01) | 1.31(0.01) | 6.39(0.04) | 6.65(0.08) | 11.73(0.01) | 12.23(0.05) |
| Permutation Bandable Model, MCR ρ(1) = .8, ρ(2) = .2 | | | | | | |
| (50, 50) | 4.23(0.38) | 4.71(1.17) | 6.67(2.30) | 7.46(8.92) | 11.22(1.34) | 11.71(2.01) |
| (50, 200) | 1.64(0.05) | 2.79(0.39) | 2.94(0.21) | 4.52(0.95) | 4.41(0.13) | 6.29(0.46) |
| (200, 100) | 3.17(0.06) | 4.16(0.57) | 5.73(0.66) | 8.11(1.87) | 15.93(0.53) | 18.03(0.77) |
| (200, 200) | 2.00(0.03) | 3.22(0.18) | 3.65(0.16) | 5.70(0.60) | 9.83(0.11) | 13.29(0.55) |
| (500, 200) | 2.22(0.03) | 3.45(0.17) | 4.09(0.17) | 6.44(0.96) | 16.80(0.14) | 21.93(0.45) |
| Randomly Sparse Model, MCR ρ(1) = .8, ρ(2) = .2 | | | | | | |
| (50, 50) | 2.15(0.46) | 2.19(0.49) | 4.21(0.94) | 4.47(4.65) | 6.36(0.96) | 7.25(1.57) |
| (50, 200) | 1.09(0.02) | 1.16(0.04) | 2.82(0.19) | 2.99(0.32) | 3.83(0.10) | 4.00(0.20) |
| (200, 100) | 1.46(0.02) | 1.82(0.03) | 4.96(0.12) | 5.61(0.21) | 8.45(0.07) | 10.10(0.14) |
| (200, 200) | 1.08(0.00) | 1.20(0.01) | 4.46(0.04) | 4.57(0.05) | 7.43(0.02) | 7.66(0.04) |
| (500, 200) | 1.12(0.01) | 1.33(0.01) | 6.35(0.04) | 6.60(0.07) | 11.71(0.02) | 12.20(0.06) |

4.3 Comparison with Complete Samples

For covariance matrix estimation with missing data, an interesting question is: what is the “effective sample size”? That is, for samples with missing values, we would like to know the equivalent size of complete samples such that the accuracy for covariance matrix estimation is approximately the same. We now compare the performance of the proposed estimator based on the incomplete data with the corresponding estimator based on the complete data for various sample sizes. We fix the dimension p = 100. For the incomplete data, we consider n = 1000 and MUCR with ρ = .5. The covariance matrix Σ is chosen as

Linear Decaying Bandable Model (in Bandable Covariance Matrix Estimation);

Permutation Bandable Model (in Sparse Covariance Matrix Estimation);

Correspondingly, we consider the similar settings for the complete data with the same Σ and p, but different sample size nc, where nc can be one of the following three values,

: the average number of pairs of (xi, xj)'s that can be observed within the same sample;

: the average number of single xi's that can be observed;

n: the same number of samples with the missing values.

The results for all the settings are summarized in Table 3. It can be seen that the equivalent sample size depends on the loss function and in general it is between and . Overall, the average risk under the missing data setting is most comparable to that under the complete data setting for the sample size of , the average number of observed pairs.
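The two candidate effective sample sizes can be computed directly from the missingness indicators. The sketch below assumes that, under MUCR, each entry is observed independently with probability ρ (our reading of the setting); the function name `average_observed_counts` and the boolean `mask` convention are hypothetical and introduced only for this example.

```python
import numpy as np

def average_observed_counts(mask):
    """mask: (n, p) boolean array, True where an entry is observed.
    Returns (average number of samples in which a pair i != j is jointly
    observed, average number of samples in which a single coordinate is observed)."""
    m = mask.astype(float)
    pair_counts = m.T @ m                       # (i, j): samples with both i and j observed
    p = mask.shape[1]
    off_diag = ~np.eye(p, dtype=bool)
    avg_pairs = pair_counts[off_diag].mean()    # average over pairs i != j
    avg_singles = np.diag(pair_counts).mean()   # average over single coordinates
    return avg_pairs, avg_singles

rng = np.random.default_rng(0)
n, p, rho = 1000, 100, 0.5
mask = rng.random((n, p)) < rho                 # assumed MUCR mechanism
avg_pairs, avg_singles = average_observed_counts(mask)
```

Under this assumption the two averages concentrate around nρ² = 250 and nρ = 500 for n = 1000 and ρ = .5, which gives a sense of the complete-data sample sizes being compared in Table 3.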

Table 3.

Comparison between incomplete samples and complete samples.

| Setting | sample size | Spectral norm | ℓ1 norm | Frobenius norm |

|---|---|---|---|---|

| Bandable Covariance Matrix Estimation | ||||

| Missing Data | n = 1000 | 0.72(0.01) | 1.25(0.03) | 2.40(0.01) |

| Complete Data | | 0.97(0.03) | 1.49(0.05) | 2.48(0.04) |

| Complete Data | | 0.65(0.01) | 1.01(0.03) | 1.69(0.03) |

| Complete Data | nc = n | 0.48(0.01) | 0.73(0.01) | 1.22(0.01) |

| Sparse Covariance Matrix Estimation | ||||

| Missing Data | n = 1000 | 0.75(0.01) | 1.37(0.04) | 2.90(0.02) |

| Complete Data | | 0.83(0.02) | 1.31(0.05) | 2.94(0.04) |

| Complete Data | | 0.65(0.01) | 1.01(0.03) | 1.86(0.04) |

| Complete Data | nc = n | 0.45(0.01) | 0.64(0.01) | 1.12(0.01) |

4.4 Analysis of Ovarian Cancer Data

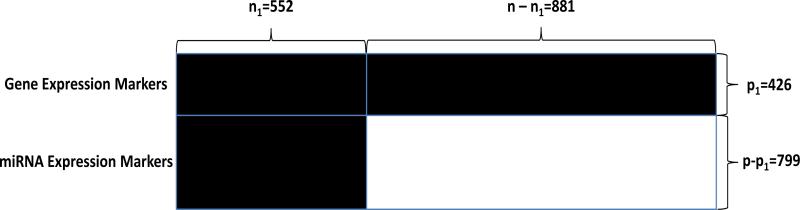

In this section, we illustrate the proposed adaptive thresholding procedure with an application to data from four ovarian cancer genomic studies, Cancer Genome Atlas Research Network [11] (TCGA), Bonome et al. [4] (BONO), Dressman et al. [15] (DRES) and Tothill et al. [27] (TOTH). The method introduced in Section 3 enables us to estimate the covariance matrix by integrating data from all four studies and thus yields a more accurate estimator. The data structure is illustrated in Figure 2. The gene expression markers (the first 426 rows) are observed in all four studies without any missingness (the top black block in Figure 2). The miRNA expression markers are observed in 552 samples from the TCGA study (the bottom left block in Figure 2) and completely missing in the 881 samples from the TOTH, DRES, BONO and part of TCGA studies (the white block in Figure 2).

Figure 2.

Illustration of the ovarian cancer dataset. Black block = completely observed; White block = completely missing.

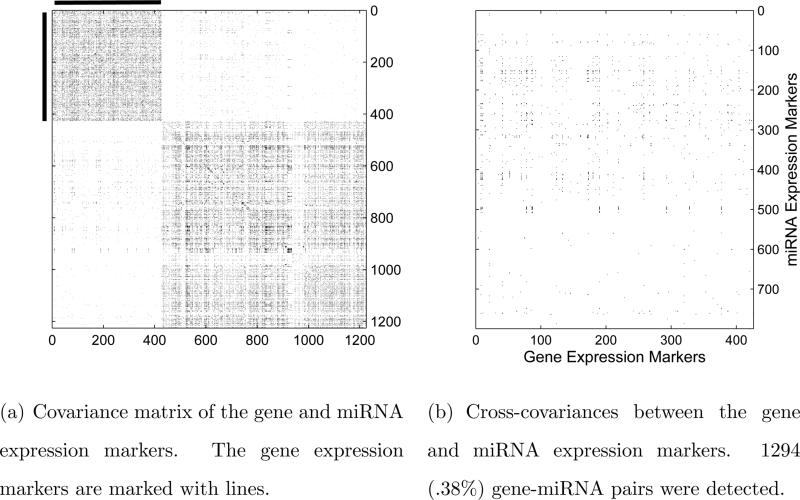

Our goal is to estimate the covariance matrix Σ of the 1225 variables, with particular interest in the cross-covariances between the gene and miRNA expression markers. It is clear that the missingness here is not uniformly at random. On the other hand, it is reasonable to assume that the missingness does not depend on the values of the data, and thus missing completely at random (Assumption 2.1) can be assumed. We apply the adaptive thresholding procedure with δ = 2 to estimate the covariance matrix and recover its support based on all the observations. The support of the estimate is shown in a heatmap in Figure 3. The left panel is for the whole covariance matrix and the right panel zooms into the cross-covariances between the gene and miRNA expression markers.

Figure 3.

Heatmaps of the covariance matrix estimate with all the observed data.

It can be seen from Figure 3 that the two diagonal blocks, with 12.24% and 8.39% nonzero off-diagonal entries respectively, are relatively dense, indicating that the relationships among the gene expression markers and those among the miRNA expression markers, as measured by their covariances, are relatively close. In contrast, the cross-covariances between gene and miRNA expression markers are very sparse, with only 0.38% of gene-miRNA pairs being significant. Because the gene and miRNA expression markers affect each other through different mechanisms, the cross-covariances between the gene and miRNA markers are of significant interest (see Ko et al. [19]). It is worthwhile to take a closer look at the cross-covariance matrix displayed on the right panel in Figure 3. For each given gene, we count the number of miRNAs whose covariances with this gene are significant, and then rank all the genes by the counts. Similarly, we rank all the miRNAs. The top 5 genes and the top 5 miRNA expression markers are shown in Table 4.
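The counting and ranking step described above amounts to taking row and column sums of the support of the estimated cross-covariance block. A minimal sketch follows, assuming the support is available as a 0/1 array with genes in rows and miRNAs in columns; the function name `rank_by_significant_pairs` and the toy labels are hypothetical.

```python
import numpy as np

def rank_by_significant_pairs(cross_support, gene_names, mirna_names, top=5):
    """cross_support: (genes x miRNAs) array, nonzero where the estimated
    cross-covariance is nonzero after thresholding."""
    support = np.asarray(cross_support, dtype=bool)
    gene_counts = support.sum(axis=1)    # selected miRNAs per gene
    mirna_counts = support.sum(axis=0)   # selected genes per miRNA

    def top_k(names, counts):
        # stable sort keeps the original order among ties
        order = np.argsort(-counts, kind="stable")[:top]
        return [(names[i], int(counts[i])) for i in order]

    return top_k(gene_names, gene_counts), top_k(mirna_names, mirna_counts)

# toy 3 x 3 support pattern, purely for illustration
toy_support = np.array([[1, 1, 0],
                        [0, 1, 0],
                        [0, 0, 0]])
genes, mirnas = rank_by_significant_pairs(
    toy_support, ["g1", "g2", "g3"], ["m1", "m2", "m3"], top=2)
```

In the toy example, g1 is paired with two miRNAs and m2 with two genes, so they head the respective rankings.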

Table 4.

Genes and miRNAs with the most selected pairs

| Gene Expression Marker | Counts | miRNA Expression Marker | Counts |

|---|---|---|---|

| ACTA2 | 61 | hsa-miR-142-5p | 31 |

| INHBA | 57 | hsa-miR-142-3p | 29 |

| COL10A1 | 53 | hsa-miR-22 | 26 |

| BGN | 46 | hsa-miR-21* | 24 |

| NID1 | 41 | hsa-miR-146a | 21 |

Many of these gene and miRNA expression markers have been studied before in the literature. For example, the miRNA expression markers hsa-miR-142-5p and hsa-miR-142-3p were shown by Andreopoulos and Anastassiou [1] to stand out among the miRNA markers, having higher correlations with more genes as well as with methylation sites. Carraro et al. [12] find that inhibition of miR-142-3p leads to ectopic expression of the gene marker ACTA2. This indicates a strong interaction between miR-142-3p and ACTA2.

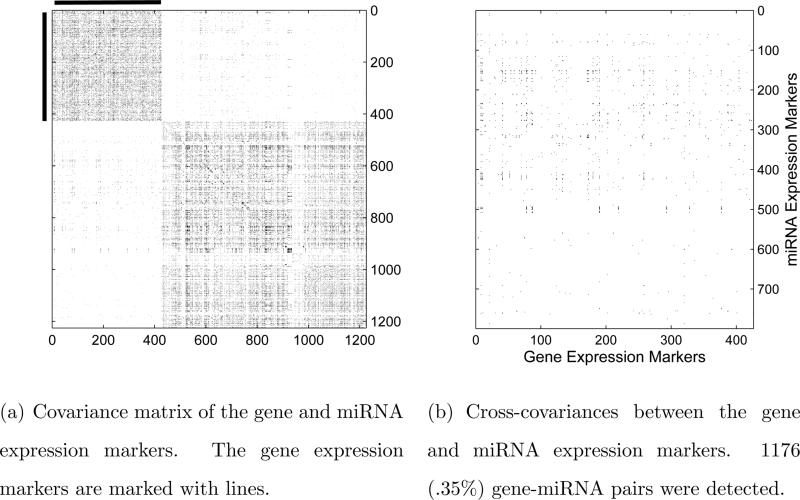

To further demonstrate the robustness of our proposed procedure against missingness, we consider a setting with additional missing observations. We first randomly select half of the 552 complete samples (where both gene and miRNA expression markers are observed) and half of the 881 incomplete samples (where only gene expression markers are observed), and then independently mask each entry of the selected samples with probability 0.05. The proposed adaptive thresholding procedure is then applied to the data with these additional missing values. The estimated covariance matrix is shown in heatmaps in Figure 4. These additional missing observations do not significantly affect the estimation accuracy: Figure 4 is visually very similar to Figure 3. To quantify the similarity between the two estimates, we calculate the Matthews correlation coefficient (MCC) between them. The value of MCC is equal to 0.9441, which indicates that the estimate based on the data with the additional missingness is very close to the estimate based on the original samples. We also pay close attention to the cross-covariance matrix displayed on the right panel in Figure 4 and rank the gene and miRNA expression markers in the same way as before. The top 5 genes and the top 5 miRNA expression markers, listed in Table 5, are nearly identical to those given in Table 4, which are based on the original samples. These results indicate that the proposed method is robust against additional missingness.
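For concreteness, the MCC between two estimated supports can be computed as follows. This is a sketch under our own assumptions: the comparison is restricted to the off-diagonal upper triangle (the text does not specify which entries enter the calculation), and the function name `support_mcc` is hypothetical.

```python
import numpy as np

def support_mcc(est_a, est_b):
    """Matthews correlation coefficient between the supports of two
    symmetric matrix estimates, treating est_a as the reference.
    Assumption: only off-diagonal entries (upper triangle) are compared."""
    a = np.asarray(est_a) != 0
    b = np.asarray(est_b) != 0
    iu = np.triu_indices(a.shape[0], k=1)
    a, b = a[iu], b[iu]
    tp = float(np.sum(a & b))      # nonzero in both
    tn = float(np.sum(~a & ~b))    # zero in both
    fp = float(np.sum(~a & b))     # nonzero only in est_b
    fn = float(np.sum(a & ~b))     # nonzero only in est_a
    denom = np.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom > 0 else 0.0

# toy 4 x 4 example, purely for illustration
a = np.eye(4); a[0, 1] = a[1, 0] = 0.3; a[2, 3] = a[3, 2] = 0.2
b = np.eye(4); b[0, 1] = b[1, 0] = 0.4
mcc_same = support_mcc(a, a)
mcc_diff = support_mcc(a, b)
```

An MCC of 1 corresponds to identical supports, and values near 0 to supports that agree no better than chance, so a value such as 0.9441 indicates close agreement.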

Figure 4.

Heatmaps of the covariance matrix estimate with additional missing values.

Table 5.

Genes and miRNAs with the most selected pairs after masking

| Gene Expression Marker | Counts | miRNA Expression Marker | Counts |

|---|---|---|---|

| ACTA2 | 60 | hsa-miR-142-3p | 31 |

| INHBA | 56 | hsa-miR-142-5p | 30 |

| COL10A1 | 50 | hsa-miR-146a | 21 |

| BGN | 43 | hsa-miR-150 | 21 |

| NID1 | 40 | hsa-miR-21* | 21 |

5 Discussions

We considered in the present paper estimation of bandable and sparse covariance matrices in the presence of missing observations. The pivotal quantity is the generalized sample covariance matrix defined in (7). The technical analysis is more challenging due to the missing data. We have mainly focused on the spectral norm loss in the theoretical analysis. Performance under other losses such as the Frobenius norm can also be analyzed.
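To make the role of the generalized sample covariance matrix concrete, the sketch below computes a pairwise-available covariance estimate from incomplete data. It assumes the estimator centers each coordinate at the mean of its observed entries and divides each cross-product sum by the number of samples in which both coordinates are observed; this is our reading of definition (7), not a verbatim transcription, and the function name and `mask` convention are hypothetical.

```python
import numpy as np

def generalized_sample_covariance(x, mask):
    """x: (n, p) data array (values at unobserved positions are ignored);
    mask: (n, p) boolean array, True where an entry is observed.
    Assumes every pair of coordinates is jointly observed at least once."""
    m = mask.astype(float)
    x0 = np.where(mask, x, 0.0)
    n_single = m.sum(axis=0)                    # observations per coordinate
    means = x0.sum(axis=0) / n_single           # mean over observed entries only
    centered = np.where(mask, x - means, 0.0)   # unobserved entries contribute zero
    n_pair = m.T @ m                            # joint observations per pair
    return (centered.T @ centered) / n_pair

rng = np.random.default_rng(0)
x = rng.standard_normal((200, 5))
full = np.ones_like(x, dtype=bool)
partial = rng.random(x.shape) < 0.7
sigma_full = generalized_sample_covariance(x, full)
sigma_partial = generalized_sample_covariance(x, partial)
```

With no missing entries the estimate reduces to the usual sample covariance matrix with divisor n, which is a convenient sanity check.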

To illustrate the proposed methods, we integrated four ovarian cancer studies. These methods for high-dimensional covariance matrix estimation with missing data are also useful for other types of data integration. For example, linking multiple data sources such as electronic data records, Medicare data, registry data and patient-reported outcomes could greatly increase the power of exploratory studies such as phenome-wide association studies (Denny et al. [14]). However, missing data inevitably arise and may hinder the potential of integrative analysis. In addition to random missingness due to unavailable information on a small fraction of patients, many variables such as the genetic measurements may only exist in one or two data sources and are hence structurally missing for other data sources. Our proposed methods could potentially provide accurate recovery of the covariance matrix in the presence of missingness.

In this paper, we allowed the proportion of missing values to be non-negligible as long as the minimum number of occurrences of any pair of variables is of order n. An interesting question is what happens when the number of observed values is large but is small (or even zero). We believe that the covariance matrix Σ can still be well estimated under certain global structural assumptions. This is out of the scope of the present paper and is an interesting problem for future research.

The key ideas and techniques developed in this paper can be used for a range of other related problems in high-dimensional statistical inference with missing data. For example, the same techniques can also be applied to estimation of other structured covariance matrices such as Toeplitz matrices, which have been studied in the literature in the case of complete data. When there are missing data, we can construct similar estimators using the generalized sample covariance matrix. The large deviation bounds for a sub-matrix and self-normalized entries of the generalized sample covariance matrix developed in Lemmas 3.1 and 2.1 would be helpful for analyzing the properties of the estimators.

The techniques can also be used on two-sample problems such as estimation of differential correlation matrices and hypothesis testing on the covariance structures. The generalized sample covariance matrix can be standardized to form the generalized sample correlation matrix which can then be used to estimate the differential correlation matrix in the two-sample case. It is also of significant interest in some applications to test the covariance structures in both one- and two-sample settings based on incomplete data. In the one-sample case, it is of interest to test the hypothesis {H0 : Σ = I} or {H0 : R = I}, where R is the correlation matrix. In the two-sample case, one wishes to test the equality of two covariance matrices {H0 : Σ1 = Σ2}. These are interesting problems for further exploration in the future.

6 Proofs

We prove Theorem 2.1 and the key technical result Lemma 6.1 for the bandable covariance matrix estimation in this section.

6.1 Proof of Lemma 2.1

To prove this lemma, we first introduce the following technical tool for the spectral norm of the sub-matrices.

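Lemma 6.1 Suppose Σ is any p × p positive semi-definite matrix and A, B ⊆ {1, . . ., p}. Then

| $\lVert \Sigma_{A\times B}\rVert \le \left(\lVert \Sigma_{A\times A}\rVert\,\lVert \Sigma_{B\times B}\rVert\right)^{1/2}$ | (27) |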

The proof of Lemma 6.1 is provided later and now we move back to the proof of Lemma 2.1. Without loss of generality, we assume that μ = EX = 0. We further define

| (28) |

Also, for convenience of presentation, we use C, C1, c, . . . to denote uniform constants, whose exact values may vary in different scenarios. The lemma is now proved in the following steps:

- We first consider, for fixed unit vectors a, with , , the tail bound of . We would like to show that there exist uniform constants C1, c > 0 such that for all x > 0,

Specifically, we will bound and separately in the next two steps. (29)

- We consider first. Since

can be written as

We can calculate from (30) that(30)

For the last term in (31), we have the following bound,(31)

Thus, by (31) and the inequality above, we have

The last term of (30) can be treated as a quadratic form of the vectorization of . We note the last term as vec(X)⊤Qvec(X), where and(32)

Q has the following properties,(33)

For , since its segments {Xk, k = 1, . . ., n} are independent and Xk = ΓZk we can further write vec(X) = DΓvec(Z), where is with n diagonal blocks of Γ, vec(Z) is a (qn)-dimensional i.i.d. sub-Gaussian random vector. Based on Hanson-Wright's inequality (Theorem 1.1 in Rudelson and Vershynin [25]),(34)

Here c > 0 is a uniform constant. Since Q is supported on {(i, k), (j, l) : i ∈ A, j ∈ B}, we have . Here , are with n diagonal blocks ΓA×[q] and ΓB×[q], respectively, where [q] = {1, . . ., q}. Since , , we know(35)

Then we further have

We define , combining the inequality above and (32), we have(36)

In the last inequality above, we used a fact that τ is lower bounded by a uniform constant. This is due to Assumption 2.2 that E(Z) = 0, var(Z) = 1, Eexp(tZ) ≤ exp(t2τ2/2). Then,(37)

which implies τ2 ≤ ½ ln(2). (38)

- It is easy to see that , so . Then Here is a matrix such that . Note that Ck is supported on A × B, we can prove the following properties of Ck.

(39) (40)

Now, note that the last line of (38) can be also equivalently written as(41)

where vec(Z) is the vectorization of Z, which is a qn-dimensional i.i.d. sub-Gaussian vector. Based on the properties of Ck above, we have

Now applying Hanson-Wright's inequality (Theorem 1.1 in Rudelson and Vershynin [25]), we have

Thus,(42)

Here c is a uniform constant. Combining (43) and (37), we have (29). (43)

- Next, we use the ε-net technique to give the bound on . Denote . Suppose is the (1/3)-net for all unit vectors in ; similarly is the (1/3)-net for all unit vectors in . Based on the proof of Lemma 3 in Cai et al. [9], we can let , . Since all a, , b, ,

we have for all , , , we can find , , such that ∥a0 – a∥2 ≤ 1/3, ∥b0 – b∥2 ≤ 1/3, then(44)

which yields

Finally, by combining (29) and the inequality above, we know there exist uniform constants C1, c > 0 such that for all t > 0,(45)

Since , we have finished the proof of Lemma 2.1.(46)

Proof of Lemma 6.1. Since Σ is positive semi-definite, we can find the Cholesky decomposition such that Σ = VV⊤. Then and

Here we have used the Cauchy-Schwarz inequality.
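The argument can be checked numerically. The sketch below assumes that the bound in Lemma 6.1 takes the form ∥ΣA×B∥ ≤ (∥ΣA×A∥ ∥ΣB×B∥)1/2 in spectral norm, which is what the factorization Σ = VV⊤ together with the Cauchy-Schwarz inequality yields; the dimension and the index sets are arbitrary choices made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
p = 30
v = rng.standard_normal((p, p))
sigma = v @ v.T                     # positive semi-definite by construction

a_idx = np.arange(0, 10)            # index set A (arbitrary)
b_idx = np.arange(12, 25)           # index set B (arbitrary)

def spec(m):
    """Spectral norm, i.e. the largest singular value."""
    return np.linalg.norm(m, 2)

lhs = spec(sigma[np.ix_(a_idx, b_idx)])
rhs = np.sqrt(spec(sigma[np.ix_(a_idx, a_idx)]) * spec(sigma[np.ix_(b_idx, b_idx)]))
```

Here `lhs` does not exceed `rhs`, as the factorization ΣA×B = VA VB⊤ suggests, where VA and VB denote the rows of V indexed by A and B.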

6.2 Proof of Theorem 2.1

Define B = (bij)1≤i,j≤p such that bij = σij if i ∈ Is, j ∈ Is′ and |s – s′| ≤ 1, and 0 otherwise. Let Δ = Σ – B. Then

It is easy to see that

To bound , note that

For any , we have

The Cauchy-Schwarz inequality yields

| (47) |

Therefore,

which yields

According to Lemma 2.1, there exist constants C, c > 0, which depend only on τ, such that for all x > 0,

| (48) |

Now we set for C′ large enough. The spectral norm risk satisfies

| (49) |

then (49) yields

| (50) |

where C only depends on τ, M, M0. We can finally finish the proof of Theorem 2.1 by taking .

Acknowledgments

We thank Tianxi Cai for the ovarian cancer data set and for helpful discussions. We also thank the Editor, the Associate editor, one referee and Zoe Russek for useful comments which have helped to improve the presentation of the paper.

Footnotes


The research of Tony Cai and Anru Zhang was supported in part by NSF Grant DMS-1208982 and DMS-1403708, and NIH Grant R01 CA127334.

Contributor Information

T. Tony Cai, Department of Statistics, The Wharton School, University of Pennsylvania, Philadelphia, PA (tcai@wharton.upenn.edu).

Anru Zhang, University of Wisconsin-Madison, Madison, WI (anruzhang@stat.wisc.edu).

References

- 1. Andreopoulos B, Anastassiou D. Integrated analysis reveals hsa-mir-142 as a representative of a lymphocyte-specific gene expression and methylation signature. Cancer Informatics. 2012;11:61–75. doi: 10.4137/CIN.S9037.

- 2. Bickel PJ, Levina E. Regularized estimation of large covariance matrices. Ann. Statist. 2008;36:199–227.

- 3. Bickel PJ, Levina E. Covariance regularization by thresholding. Ann. Statist. 2008;36:2577–2604.

- 4. Bonome T, Lee J-Y, Park D-C, Radonovich M, Pise-Masison C, Brady J, Gardner GJ, Hao K, Wong WH, Barrett JC, et al. Expression profiling of serous low malignant potential, low-grade, and high-grade tumors of the ovary. Cancer Research. 2005;65:10602–10612. doi: 10.1158/0008-5472.CAN-05-2240.

- 5. Cai TT, Liu W. Adaptive thresholding for sparse covariance matrix estimation. J. Amer. Statist. Assoc. 2011;106:672–684.

- 6. Cai TT, Ma Z, Wu Y. Optimal estimation and rank detection for sparse spiked covariance matrices. Probab. Theory Rel. 2015;161:781–815. doi: 10.1007/s00440-014-0562-z.

- 7. Cai TT, Ren Z, Zhou HH. Estimating structured high-dimensional covariance and precision matrices: Optimal rates and adaptive estimation. Electron. J. Stat. 2016;10:1–59.

- 8. Cai TT, Yuan M. Adaptive covariance matrix estimation through block thresholding. Ann. Statist. 2012;40:2014–2042.

- 9. Cai TT, Zhang C-H, Zhou H. Optimal rates of convergence for covariance matrix estimation. Ann. Statist. 2010;38:2118–2144.

- 10. Cai TT, Zhou H. Optimal rates of convergence for sparse covariance matrix estimation. Ann. Statist. 2012;40:2389–2420.

- 11. Cancer Genome Atlas Research Network. Integrated genomic analyses of ovarian carcinoma. Nature. 2011;474:609–615. doi: 10.1038/nature10166.

- 12. Carraro G, Shrestha A, Rostkovius J, Contreras A, Chao C-M, El Agha E, MacKenzie B, Dilai S, Guidolin D, Taketo MM, et al. mir-142-3p balances proliferation and differentiation of mesenchymal cells during lung development. Development. 2014;141(6):1272–1281. doi: 10.1242/dev.105908.

- 13. Chen SX, Zhang LX, Zhong PS. Tests for high-dimensional covariance matrices. J. Amer. Statist. Assoc. 2010;105:810–819.

- 14. Denny JC, Ritchie MD, Basford MA, Pulley JM, Bastarache L, Brown-Gentry K, Wang D, Masys DR, Roden DM, Crawford DC. PheWAS: demonstrating the feasibility of a phenome-wide scan to discover gene-disease associations. Bioinformatics. 2010;26:1205–1210. doi: 10.1093/bioinformatics/btq126.

- 15. Dressman HK, Berchuck A, Chan G, Zhai J, Bild A, Sayer R, Cragun J, Clarke J, Whitaker RS, Li L, et al. An integrated genomic-based approach to individualized treatment of patients with advanced-stage ovarian cancer. J. Clin. Oncol. 2007;25:517–525. doi: 10.1200/JCO.2006.06.3743.

- 16. El Karoui N. Operator norm consistent estimation of large-dimensional sparse covariance matrices. Ann. Statist. 2008;36:2717–2756.

- 17. Hawkins RD, Hon GC, Ren B. Next-generation genomics: an integrative approach. Nat. Rev. Genet. 2010;11:476–486. doi: 10.1038/nrg2795.

- 18. Ibrahim JG, Molenberghs G. Missing data methods in longitudinal studies: a review. Test. 2009;18:1–43. doi: 10.1007/s11749-009-0138-x.

- 19. Ko SY, Barengo N, Ladanyi A, Lee JS, Marini F, Lengyel E, Naora H. Hoxa9 promotes ovarian cancer growth by stimulating cancer-associated fibroblasts. J. Clin. Invest. 2012;122:3603–3617. doi: 10.1172/JCI62229.

- 20. Little RJA, Rubin DB. Statistical Analysis with Missing Data. 2nd Edition. John Wiley & Sons; New York: 2002.

- 21. Loh P-L, Wainwright M. High-dimensional regression with noisy and missing data: Provable guarantees with non-convexity. Ann. Statist. 2012;40:1637–1664.

- 22. Lounici K. Sparse principal component analysis with missing observations. In: High dimensional probability VI, 66 of Prog. Proba. Institute of Mathematical Statistics (IMS) Collections; 2013. pp. 327–356.

- 23. Lounici K. High-dimensional covariance matrix estimation with missing observations. Bernoulli, to appear. 2014.

- 24. Rothman AJ, Levina E, Zhu J. Generalized thresholding of large covariance matrices. J. Amer. Statist. Assoc. 2009;104:177–186.

- 25. Rudelson M, Vershynin R. Hanson-Wright inequality and sub-gaussian concentration. Electron. Commun. Probab. 2013;18:1–9.

- 26. Schafer JL. Analysis of Incomplete Multivariate Data. CRC Press; 2010.

- 27. Tothill RW, Tinker AV, George J, Brown R, Fox SB, Lade S, Johnson DS, Trivett MK, Etemadmoghadam D, Locandro B, et al. Novel molecular subtypes of serous and endometrioid ovarian cancer linked to clinical outcome. Clin. Cancer Res. 2008;14:5198–5208. doi: 10.1158/1078-0432.CCR-08-0196.