Abstract

Emotion recognition based on facial expression with weighted features is a challenging research topic that has attracted great attention in the past few years. This paper presents a novel method that uses subregion recognition rates to weight the kernel function. First, we divide the facial expression image into uniform subregions and calculate the recognition rate and weight of each subregion. Then, we derive a weighted feature Gaussian kernel function and construct a classifier based on the Support Vector Machine (SVM). Finally, the experimental results suggest that the approach based on the weighted feature Gaussian kernel function achieves a good recognition rate. Experiments on the extended Cohn-Kanade (CK+) dataset show that our method achieves encouraging recognition results compared to state-of-the-art methods.

1. Introduction

Emotion recognition has important applications in the real world, including but not limited to artificial intelligence and human-computer interaction, and it remains a challenging and attractive topic. Many methods have been proposed for emotion recognition, drawing on speech [1, 2], physiological [3–5], and visual signals. Speech signals are discontinuous, since they can be captured only while people are talking, and acquiring physiological signals requires special sensors. For these reasons, visual signals are the preferred choice for emotion recognition. Although the visual information is useful, using it reliably and robustly remains a challenge. According to Albert Mehrabian's 7%–38%–55% rule, facial expression is an important means of detecting emotions [6].

Over the last decade, many studies have addressed emotion recognition from facial expression images [7, 8]. The task is to estimate the correct emotional state, such as anger, happiness, sadness, or surprise, from a given facial expression image. The general process has two steps: feature extraction and classification. For feature extraction, geometric, texture, motion, and statistical features are in common use. For classification, methods based on machine learning algorithms are frequently used. Because features differ in how informative they are, applying weighted features to machine learning algorithms has become an active research topic.

In recent years, emotion recognition with weighted features based on facial expression has received increasing attention [9, 10]. The aim is to estimate the emotion type from a facial expression image captured while the subject produces the expression. However, the emotion features captured from the image are strongly linked not to the whole face but to specific regions of it. For instance, features of the eyebrow, eye, nose, and mouth areas are closely related to facial expression [11]. Moreover, each feature affects the recognition result differently. Feature weighting can therefore make better use of the features and further enhance recognition performance. Although several approaches for determining weights exist, how to select features and calculate the corresponding weights effectively remains an open issue.

In this paper, a new emotion recognition method based on weighted features of facial expression is presented. It is motivated by the fact that emotion can be described by facial expression and that each facial expression feature has a different impact on the recognition result. Unlike previous works, which calculate the weight of each feature directly, this method measures the impact of features through the recognition rate of each subregion (the subrecognition rate). Our method consists of two stages: a weight calculation stage and a recognition stage. In the weight calculation stage, we first divide the face into 4 areas according to the degree of facial behavior change. Then, we use each area's features alone to obtain the corresponding recognition rate. Finally, we calculate the weight of each area's features according to the magnitude of its recognition rate. In the recognition stage, we first use these weights to construct a weighted kernel function. Then, we obtain a new recognition model based on SVM with the weighted kernel function.

Compared with the preliminary work, the proposed method makes three main contributions. (1) A more advanced feature weighting method is used. In the previous method, the weight of each feature was calculated individually without practical verification. To overcome this shortcoming, we group the features and calculate the subrecognition rate of each group, and then calculate the weight of each feature group from its subrecognition rate. (2) In the recognition stage, the previous method used the feature weights directly. In this paper, we use the weights of the feature groups to weight the kernel function and then use the new weighted kernel function in the machine learning model. (3) The proposed method has been evaluated on a database that contains 7 kinds of emotions, and the results with and without the weighted kernel function have been carefully compared and analyzed.

The rest of the paper is organized as follows: Section 2 gives an overview of related work on feature extraction from facial expressions, calculation of feature weights, and classification of emotion. Section 3 describes the proposed method together with its theorems and proofs. Section 4 verifies the proposed method by experiment and analyzes the experimental results. Section 5 concludes the paper.

2. Related Work

The recognition performance of emotion recognition methods is highly dependent on the feature extraction method. Many approaches have been proposed for feature extraction from facial expressions. They can be broadly classified into two categories: appearance-based methods and geometric-based methods. Appearance-based methods extract intensity or other texture features from facial expression images. Common feature extraction methods include Local Binary Patterns (LBP) [12, 13], Histogram of Oriented Gradients (HOG) [14, 15], Gabor wavelets [16, 17], and the Scale-Invariant Feature Transform (SIFT) [18, 19]. These features can be used to extract Action Unit (AU) features and recognize facial expressions. Geometric-based methods describe the shapes of facial components based on facial key points detected in the image, such as those on the eyebrows, eyes, nose, mouth, and face contour. The movement of these key points can guide the facial expression recognition process. For instance, the Active Appearance Model (AAM) [20], the Active Shape Model (ASM) [21, 22], and the Constrained Local Model (CLM) [23] are widely used to detect and track facial key points and record their displacement. However, the localization accuracy of both ASM and AAM relies on their geometric face models, and the model training phase sometimes needs manual work and is usually time-consuming.

The recognition results obtained by a classification algorithm are affected by all features, so introducing weights can distinguish the contributions of different features and improve classification performance. A variety of methods have been proposed to calculate the weight of each feature. Reference [24] extended the Euclidean metric in the clustering criterion to the Minkowski metric in order to calculate the weight of each feature directly. Some methods divide the facial image into uniform subregions and calculate the weight of each subregion. Reference [25] introduced information entropy to distinguish the contributions of different partitions of the face. Reference [26] estimated the weight of each subregion by employing the local variance. Across these different weighting schemes, feature selection and weight calculation remain an underlying problem. One effective way to address it is to weight features based on feedback from the recognition results. Some methods [27, 28] divide the facial image into uniform subregions and use the recognition result of each subregion for feature weighting. This places no restriction on the individual features, which leaves freedom in how the feature representations are structured.

Many machine learning methods have been proposed to classify facial expressions, such as SVM [29], Random Forest (RF) [30], Neural Network (NN) [31], and K nearest neighbor (KNN) [32]. Reference [33] compared the performance of RF and SVM in face recognition. Reference [34] used a boosting technique to construct neural network ensembles (NNEs), with the final prediction made by a Naive Bayes (NB) classifier. Reference [35] divided the region into different types and combined the Fuzzy Support Vector Machine (FSVM) with KNN, switching the classification method according to the region type. These studies show that such methods are well suited to facial expression classification.

3. Support Vector Machine

3.1. Linear Support Vector Machines

SVM is a supervised learning model with an associated learning algorithm for classification, whose ultimate aim is to find the optimal separating hyperplane. The mathematical model of SVM is given below.

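Given a training set $\{(x_i, y_i)\}_{i=1}^{l}$, where $x_i \in R^n$ is the input and $y_i \in \{-1, +1\}$ is the corresponding output, if there is a hyperplane that divides all the points into two groups correctly, we aim to find the “maximum-margin hyperplane”, for which the distance between the hyperplane and the nearest point from either group is maximized. By introducing the penalty parameter $c > 0$ and the slack variables $\xi_i$, the optimal hyperplane $w \cdot x + b = 0$ can be obtained by solving the following constrained optimization problem:

$$\min_{w, b, \xi}\ \frac{1}{2}\lVert w \rVert^{2} + c \sum_{i=1}^{l} \xi_i \quad \text{s.t.}\quad y_i (w \cdot x_i + b) \ge 1 - \xi_i,\quad \xi_i \ge 0,\quad i = 1, \ldots, l. \tag{1}$$

Based on the Lagrangian multiplier method, the problem is converted into the following dual problem:

$$\max_{a}\ \sum_{i=1}^{l} a_i - \frac{1}{2} \sum_{i=1}^{l} \sum_{j=1}^{l} a_i a_j y_i y_j (x_i \cdot x_j) \quad \text{s.t.}\quad \sum_{i=1}^{l} a_i y_i = 0,\quad 0 \le a_i \le c,\quad i = 1, \ldots, l, \tag{2}$$

where $a_i \ge 0$ are the Lagrange multipliers of the samples $x_i$. Only a few multipliers satisfy $a_i > 0$ in the solution; dropping the terms with $a_i = 0$ gives the classification decision function

$$f(x) = \operatorname{sgn}\left( \sum_{i=1}^{l} a_i y_i (x_i \cdot x) + b \right). \tag{3}$$

3.2. Nonlinear Support Vector Machines

For the linearly nonseparable problem, we first map the data to a high-dimensional space H using a nonlinear mapping Φ. Then we use a linear model to achieve classification in the new space H. With the “kernel function” $k(x_i, x_j) = \Phi(x_i) \cdot \Phi(x_j)$, (2) becomes

$$\max_{a}\ \sum_{i=1}^{l} a_i - \frac{1}{2} \sum_{i=1}^{l} \sum_{j=1}^{l} a_i a_j y_i y_j\, k(x_i, x_j) \quad \text{s.t.}\quad \sum_{i=1}^{l} a_i y_i = 0,\quad 0 \le a_i \le c,\quad i = 1, \ldots, l, \tag{4}$$

and the corresponding classification decision function becomes

$$f(x) = \operatorname{sgn}\left( \sum_{i=1}^{l} a_i y_i\, k(x_i, x) + b \right). \tag{5}$$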

The kernel function takes the place of the inner product of the mapped inputs in the feature space. The kernel functions commonly used for linearly nonseparable problems are as follows:

(1) nth-degree polynomial kernel function

| (6) |

(2) (Gaussian) radial basis kernel function

| (7) |

(3) Sigmoid kernel function

| (8) |
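For reference, one widely used parameterization of these three kernels is the one implemented in LIBSVM, the package used in Section 4. The parameter names $\gamma$, $r$, and $d$ below follow that convention and are assumptions made here for illustration; they are not necessarily the symbols used in (6)–(8).

```latex
\begin{align*}
k_{\mathrm{poly}}(x_i, x_j) &= (\gamma\, x_i \cdot x_j + r)^{d}, \\
k_{\mathrm{rbf}}(x_i, x_j)  &= \exp\left(-\gamma \lVert x_i - x_j \rVert^{2}\right), \quad \gamma > 0, \\
k_{\mathrm{sig}}(x_i, x_j)  &= \tanh(\gamma\, x_i \cdot x_j + r).
\end{align*}
```

The polynomial and sigmoid kernels depend on the inputs only through the inner product $x_i \cdot x_j$, while the Gaussian kernel depends on them only through the distance $\lVert x_i - x_j \rVert$.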

3.3. Weighted Feature SVM

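Weighted feature SVM is based on a weighted kernel function of SVM, which is defined in Definition 1.

Definition 1. Let k be a kernel function defined on X × X, $X \subseteq R^n$, and let P be a square matrix of order n that acts as a linear transformation on the input space, where n is the dimensionality of the input space. The weighted feature kernel function $k_P$ is defined as

$$k_P(x_i, x_j) = k(x_i^{T} P,\ x_j^{T} P), \tag{9}$$

where P is referred to as the weighted feature matrix. Different choices of P lead to different weighting schemes:

(1) P is the identity matrix of order n, which is the unweighted case.

(2) P is a diagonal matrix of order n, where $(P)_{ii} = \omega_i$ ($1 \le i \le n$) is the weight of the ith feature and the $\omega_i$ are not all equal:

$$P = \operatorname{diag}(\omega_1, \omega_2, \ldots, \omega_n). \tag{10}$$

(3) P is an arbitrary matrix of order n, which is the fully weighted case:

$$P = \begin{pmatrix} \omega_{11} & \omega_{12} & \cdots & \omega_{1n} \\ \omega_{21} & \omega_{22} & \cdots & \omega_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ \omega_{n1} & \omega_{n2} & \cdots & \omega_{nn} \end{pmatrix}. \tag{11}$$

In this paper, we consider only the case in which P is a diagonal matrix of order n.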

Definition 2. The commonly used weighted feature kernel functions are obtained from (9) as follows:

(1) Weighted feature polynomial kernel function

| (12) |

(2) Weighted feature (Gaussian) radial basis kernel function

| (13) |

(3) Weighted feature sigmoid kernel function

| (14) |
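The weighted kernels of Definition 2 can be sketched in a few lines for the diagonal case considered in this paper. The sketch below is illustrative only: the helper names `apply_weights` and `weighted` are hypothetical, and the kernel parameters `gamma`, `coef0`, and `degree` follow the LIBSVM naming convention, which is an assumption rather than the notation of (12)–(14).

```python
import math


def apply_weights(x, weights):
    """Apply a diagonal weighted feature matrix P = diag(weights) to x."""
    return [w * v for w, v in zip(weights, x)]


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


# Standard kernels (ASSUMPTION: LIBSVM-style parameterization).
def polynomial_kernel(u, v, gamma=1.0, coef0=1.0, degree=3):
    return (gamma * dot(u, v) + coef0) ** degree


def gaussian_kernel(u, v, gamma=1.0):
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))


def sigmoid_kernel(u, v, gamma=1.0, coef0=0.0):
    return math.tanh(gamma * dot(u, v) + coef0)


def weighted(kernel, weights):
    """Build the weighted kernel: the given kernel applied to weighted inputs."""
    def k_p(x_i, x_j, **params):
        return kernel(apply_weights(x_i, weights),
                      apply_weights(x_j, weights), **params)
    return k_p


# Usage: a zero weight removes the second feature from the kernel value.
k_p = weighted(gaussian_kernel, [1.0, 0.0])
value = k_p([1.0, 5.0], [1.0, -3.0])  # the two inputs differ only in feature 2
```

Wrapping an existing kernel, instead of rewriting each formula, keeps the weighted and standard versions consistent by construction.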

The motivation for introducing a kernel function is to search for a nonlinear model in the new feature space obtained through a nonlinear mapping. Matrix P may seem unrelated to this motivation, since it acts as a linear mapping. However, it is useful in practice, because it changes the geometry of the input space and of the feature space, thereby changing the weight of different functions in the feature space. The weighted feature Gaussian kernel function also remains a nonlinear model after the linear transformation. This conclusion is established by Theorem 3.

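Theorem 3. Let k be a kernel function defined on X × X, $X \subseteq R^n$, and let ϕ : X → F be the mapping from the input space to the feature space, so that $k(x_i, x_j) = \phi(x_i) \cdot \phi(x_j)$. Let P be a linear transformation square matrix. Then $k_P(x_i, x_j) = \phi(x_i^{T} P) \cdot \phi(x_j^{T} P)$.

Proof. For all $x_i, x_j \in X$, let k be any of the three ordinary kernel functions (6)–(8); then, by (9),

$$k_P(x_i, x_j) = k(x_i^{T} P,\ x_j^{T} P) = \phi(x_i^{T} P) \cdot \phi(x_j^{T} P). \tag{15}$$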

Theorem 4. If $\omega_k = 0$ for some k ($1 \le k \le n$), the kth feature of the sample data has no effect on the value of the kernel function or on the output of the classifier. Furthermore, the smaller the value of $\omega_k$ ($1 \le k \le n$), the smaller the effect of the kth feature on the kernel function and on the output of the classifier.

Proof. The conclusion follows directly from the definition of the weighted feature kernel function and the classification decision function (5).
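The step left implicit in this proof can be written out for the diagonal case. The sketch below assumes $P = \operatorname{diag}(\omega_1, \ldots, \omega_n)$ and a Gaussian kernel of the form $\exp(-\gamma \lVert \cdot \rVert^{2})$; the symbol $\gamma$ and the component notation $x_{ik}$ (the kth component of $x_i$) are introduced here for illustration.

```latex
\begin{align*}
x_i^{T} P \cdot x_j^{T} P
  &= \sum_{k=1}^{n} \omega_k^{2}\, x_{ik}\, x_{jk}, \\
\lVert x_i^{T} P - x_j^{T} P \rVert^{2}
  &= \sum_{k=1}^{n} \omega_k^{2}\, (x_{ik} - x_{jk})^{2}, \\
k_P(x_i, x_j)
  &= \exp\left(-\gamma \sum_{k=1}^{n} \omega_k^{2}\, (x_{ik} - x_{jk})^{2}\right).
\end{align*}
```

Each feature enters the inner product and the distance only through a term scaled by $\omega_k^{2}$. Setting $\omega_k = 0$ removes the kth term entirely, and a small $\omega_k$ shrinks it quadratically. Because the decision function (5) depends on the inputs only through kernel values, the same holds for the classifier output.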

Theorem 3 indicates that the linear transformation changes the relative positions of the sample points and hence the geometry of the feature space. The new feature space may admit a better linear separating hyperplane, which improves the classification performance of SVM. Theorem 4 indicates that the weighted kernel function reduces the effect of weakly correlated and uncorrelated features, so better classification results can be expected. The experimental results in the following section support this conclusion.

3.4. Weight Estimation of Features

Feature weighting assigns a weight to each data feature according to some principle, and the calculation of the weights is the key element. A change in facial expression produces slightly different momentary changes in the individual facial muscles. According to the range of motion of the facial muscles, the whole face can be divided into three kinds of regions: rigid region (nose), semirigid region (eyes, forehead, and cheek), and nonrigid region (mouth). Accordingly, we divide the face into several areas and determine the recognition rate $p_i$ ($1 \le i \le n$) of each area: the higher the recognition rate, the greater the influence of the area, and the lower the recognition rate, the smaller the influence. The recognition rates serve as the basis for calculating the weights, and the calculation formula is as follows:

| (16) |

This approach makes $\omega_1 + \omega_2 + \omega_3 + \omega_4 = 1$.
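One normalization that satisfies this constraint and grows with the recognition rate is to divide each rate by the sum of all rates. The form below is an assumption used for illustration and is not necessarily the exact expression of (16).

```latex
\begin{equation*}
\omega_i = \frac{p_i}{\sum_{j=1}^{4} p_j}, \qquad i = 1, \ldots, 4,
\qquad\text{so that}\qquad
\sum_{i=1}^{4} \omega_i = \frac{\sum_{i=1}^{4} p_i}{\sum_{j=1}^{4} p_j} = 1 .
\end{equation*}
```

Under this choice, an area whose recognition rate is twice that of another receives twice the weight.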

The area with the highest weight is the most discriminative area of the face and is also the largest contributor to the classification result. Therefore, with the weight used as a correlation measure, the higher the weight, the stronger the correlation. The four steps for constructing the weighted feature SVM are as follows:

(1) Collect the original facial expression image dataset O and extract the feature dataset S, in which each sample consists of the feature vector of the facial expression, composed of the feature vectors of the individual face regions, and the corresponding class label d of the facial expression.

(2) Calculate the recognition rate $p_i$ ($1 \le i \le n$) and the corresponding weight $\omega_i$ of each area. Construct the feature weight vector and the linear transformation square matrix P from these weights.

(3) Replace the standard kernel function formulas with the weighted feature kernel function formulas (12)–(14), and construct a classifier based on sample dataset S.

(4) Evaluate the performance of the obtained classifier.
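Steps (2) and (3) can be sketched as follows for the Gaussian kernel. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the Gaussian kernel is taken in the form exp(-gamma * distance squared), and P is diagonal with one weight per area repeated over that area's coordinates. The resulting Gram matrix could be passed to an SVM implementation that accepts precomputed kernels.

```python
import math


def expand_weights(area_weights, area_dims):
    """Diagonal of P: repeat each area weight once per feature of that area."""
    diag = []
    for weight, dim in zip(area_weights, area_dims):
        diag.extend([weight] * dim)
    return diag


def scale(x, diag):
    """Apply the diagonal matrix P to one feature vector."""
    return [w * v for w, v in zip(diag, x)]


def gaussian_gram(samples, gamma):
    """Symmetric Gram matrix of the Gaussian kernel over all sample pairs."""
    n = len(samples)
    gram = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            dist2 = sum((a - b) ** 2 for a, b in zip(samples[i], samples[j]))
            gram[i][j] = gram[j][i] = math.exp(-gamma * dist2)
    return gram


def weighted_gaussian_gram(samples, area_weights, area_dims, gamma=1.0):
    """Gram matrix of the weighted Gaussian kernel for diagonal P."""
    diag = expand_weights(area_weights, area_dims)
    if any(len(x) != len(diag) for x in samples):
        raise ValueError("sample length must equal the sum of area_dims")
    return gaussian_gram([scale(x, diag) for x in samples], gamma)
```

For diagonal P, weighting the kernel is equivalent to rescaling the features before applying the standard kernel, which is why the sketch scales the samples first.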

4. Experiment

Experiments on the extended Cohn-Kanade (CK+) dataset show the effectiveness of the proposed method. In our experiments, we use Python programs based on the LIBSVM software package, and the data processing platform is a computer with Windows 7, an Intel® Core™ i3-2120 CPU (3.30 GHz), and 4.00 GB of RAM.

4.1. Extended Cohn-Kanade Dataset

Lucey et al. [36] presented the CK+ dataset, which contains 593 sequences from 123 subjects. Each sequence runs from onset (neutral frame) to peak expression (last frame). However, only 327 of the 593 sequences were found to meet the criteria for one of seven discrete emotions. The 327 peak frames of these sequences were selected and labeled, and together they compose the original facial expression image dataset O. The number of images of each discrete emotion is shown in Table 1.

Table 1. The detailed number of images of each discrete emotion in dataset O.

| Emotion | Sample set | Training set | Test set |
|---|---|---|---|
| Anger | 45 | 13 | 32 |
| Contempt | 18 | 13 | 5 |
| Disgust | 59 | 13 | 46 |
| Fear | 25 | 13 | 12 |
| Happiness | 69 | 13 | 56 |
| Sadness | 28 | 13 | 15 |
| Surprise | 83 | 13 | 70 |

4.2. Facial Feature Extraction

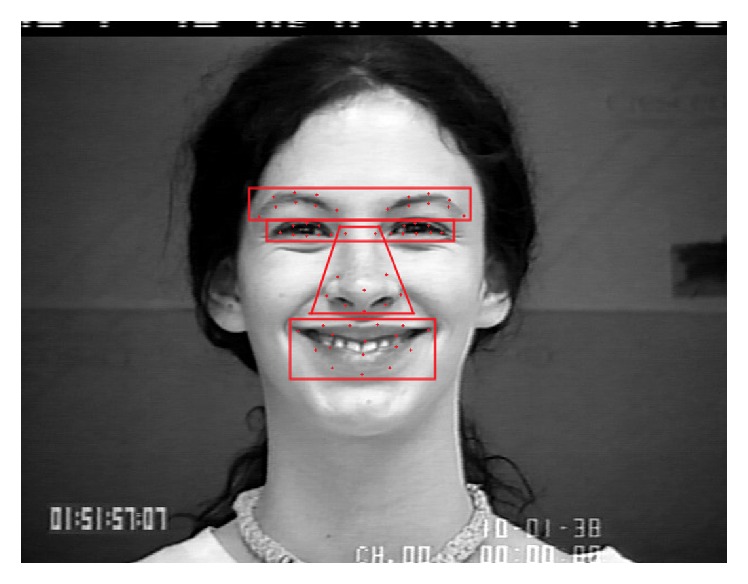

In this paper, we use the facial key points of each image as feature points for emotion recognition based on facial expression. Each feature point is expressed as a 2-dimensional coordinate (x, y). The resolution of the images in dataset O is 640 × 490, 640 × 480, or 720 × 480. To unify the coordinate system, image preprocessing converts every image to a resolution of 640 × 480. As noted in [11], the production of emotion brings about facial behavior changes that are strongly linked not to the whole face but to specific areas, such as the eyebrows, eyes, mouth, nose, and tissue textures. In addition, different areas of the face have different rigidity. Accordingly, this paper divides the face into 4 areas, which are shown in Figure 1; the corresponding feature vectors are listed as follows.

Figure 1. Partition and key points of human face.

(1) Eyebrows. Select 8 key points from each eyebrow; their 2-dimensional coordinates $(x_{1,k}, y_{1,k})$, $k = 1, \ldots, 16$, together form a 32-dimensional feature vector.

(2) Eyes. Select 8 key points from each eye; their 2-dimensional coordinates $(x_{2,k}, y_{2,k})$, $k = 1, \ldots, 16$, together form a 32-dimensional feature vector.

(3) Nose. Select 10 key points from the nose; their 2-dimensional coordinates $(x_{3,k}, y_{3,k})$, $k = 1, \ldots, 10$, together form a 20-dimensional feature vector.

(4) Mouth. Select 17 key points from the mouth; their 2-dimensional coordinates $(x_{4,k}, y_{4,k})$, $k = 1, \ldots, 17$, together form a 34-dimensional feature vector.

In total, we select 59 key points from the eyebrows, eyes, nose, and mouth. Therefore, a 118-dimensional facial feature vector is obtained from each frame by combining the four area feature vectors.
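The assembly of the 118-dimensional vector can be sketched as below. The key-point counts come from the list above; the names `REGION_POINTS`, `build_feature_vector`, and `region_slices`, the area order (eyebrows, eyes, nose, mouth), and the interleaved (x, y) layout are assumptions made for illustration.

```python
# Number of key points per area, as listed in Section 4.2.
REGION_POINTS = {"eyebrows": 16, "eyes": 16, "nose": 10, "mouth": 17}


def build_feature_vector(keypoints_by_region):
    """Concatenate the (x, y) key points of all areas into one flat vector.

    ASSUMPTION: areas are concatenated in the order of REGION_POINTS and
    coordinates are stored as x1, y1, x2, y2, ...
    """
    vector = []
    for region, count in REGION_POINTS.items():
        points = keypoints_by_region[region]
        if len(points) != count:
            raise ValueError(f"{region}: expected {count} key points")
        for x, y in points:
            vector.extend([x, y])
    return vector


def region_slices():
    """Index range of each area inside the concatenated feature vector."""
    slices, start = {}, 0
    for region, count in REGION_POINTS.items():
        stop = start + 2 * count  # two coordinates per key point
        slices[region] = slice(start, stop)
        start = stop
    return slices
```

The slices give direct access to the per-area sub-vectors, for example `vector[region_slices()["mouth"]]` for the 34 mouth coordinates.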

4.3. Experiment Contrast with Different Feature

Sample set S contains 327 feature vectors of facial images of seven discrete emotions. We use stratified sampling to obtain the training set and the test set. First, we partition the sample set S into 7 disjoint layers, one per emotion. Then, we select the same fixed number of feature vectors from each layer independently and at random. The number is determined by the smallest of the 7 facial expression sample sets: it is 70% of the size of the contempt sample set (18 samples), that is, 13 feature vectors per emotion. Finally, the selected feature vectors compose training set T, while the remaining feature vectors compose test set V. The number of feature vectors of each emotion is shown in Table 1.
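The sampling procedure can be sketched as follows. The function name `stratified_split`, the `seed` parameter, and the use of rounding to turn 70% of 18 into 13 are assumptions for illustration; the text fixes only the per-emotion count shown in Table 1.

```python
import random
from collections import defaultdict


def stratified_split(labels, fraction_of_smallest=0.7, seed=0):
    """Return (train_indices, test_indices) with equal training counts per class.

    The per-class training count is fraction_of_smallest times the size of
    the smallest class, rounded to the nearest integer (ASSUMPTION).
    """
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    smallest = min(len(indices) for indices in by_class.values())
    per_class = round(fraction_of_smallest * smallest)

    rng = random.Random(seed)
    train, test = [], []
    for label in sorted(by_class):
        indices = list(by_class[label])
        rng.shuffle(indices)  # independent random draw within each layer
        train.extend(indices[:per_class])
        test.extend(indices[per_class:])
    return train, test
```

With the class sizes of Table 1, this yields 13 training samples per emotion (91 in total) and leaves the remaining 236 samples for testing.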

Selecting the components of each feature vector that belong to area i gives training set $T_i$ and test set $V_i$ ($1 \le i \le 4$). We thus run four experiments, one for each facial area. The recognition accuracy obtained with each facial area's features is shown in Table 2. The results show that the three types of region differ in influence: the nonrigid region (mouth) has the biggest impact, the rigid region (nose) has the least, and the semirigid region has a moderate impact.

Table 2. The recognition accuracy of each facial area feature.

| Subregion | Eyebrows | Eyes | Nose | Mouth |
|---|---|---|---|---|
| Recognition rate | 40.55% | 41.94% | 25.45% | 60.37% |

4.4. Experiment Contrast with Different Kernel Function

We use the previous experimental results together with (10) and (16) to obtain the weight $\omega_i$ of each area and the corresponding linear transformation square matrix P as follows:

| (17) |

The standard Gaussian kernel function and the weighted feature Gaussian kernel function for the 118-dimensional facial feature vector are obtained from (7) and (13):

| (18) |
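The construction of P and of the two kernels can be sketched numerically from Table 2. The sketch rests on three assumptions that are not confirmed by the text: the weights are the recognition rates normalized to sum to 1, the feature vector concatenates the areas in the order eyebrows, eyes, nose, mouth, and the Gaussian kernel has the form exp(-gamma * distance squared). The values it produces are therefore illustrative and are not a reproduction of (17).

```python
import math

# Recognition rates from Table 2 and area dimensions from Section 4.2.
RATES = {"eyebrows": 0.4055, "eyes": 0.4194, "nose": 0.2545, "mouth": 0.6037}
DIMS = {"eyebrows": 32, "eyes": 32, "nose": 20, "mouth": 34}

# ASSUMPTION: each weight is the area's rate divided by the sum of all rates.
TOTAL = sum(RATES.values())
AREA_WEIGHTS = {area: rate / TOTAL for area, rate in RATES.items()}

# Diagonal of P: each area weight repeated once per coordinate of that area.
P_DIAG = [AREA_WEIGHTS[area] for area, dim in DIMS.items() for _ in range(dim)]


def gaussian_kernel(u, v, gamma):
    """Standard Gaussian kernel on raw feature vectors."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))


def weighted_gaussian_kernel(u, v, gamma, diag=P_DIAG):
    """Gaussian kernel applied after scaling each feature by its weight."""
    scaled_u = [w * a for w, a in zip(diag, u)]
    scaled_v = [w * b for w, b in zip(diag, v)]
    return gaussian_kernel(scaled_u, scaled_v, gamma)
```

Under these assumptions the area weights are roughly 0.24 (eyebrows), 0.25 (eyes), 0.15 (nose), and 0.36 (mouth). Because all weights are below 1, weighted distances are smaller than raw distances, so the kernel width parameter would likely need to be tuned separately for the two kernels.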

We then run two experiments on training set T and test set V, one with each kernel function. The number of correctly recognized facial expressions under the two kernel functions is shown in Table 3.

Table 3. The number and average precision of correctly recognized facial expressions under two kernel functions.

| Emotion | Test set | SVM | WF-SVM |
|---|---|---|---|
| Anger | 32 | 24 | 28 |
| Contempt | 5 | 2 | 4 |
| Disgust | 46 | 40 | 42 |
| Fear | 12 | 9 | 11 |
| Happiness | 56 | 50 | 54 |
| Sadness | 15 | 10 | 12 |
| Surprise | 70 | 62 | 69 |
| Average precision | - | 83% | 93% |
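The last row of Table 3 can be checked from the per-emotion counts. The short script below, whose variable and function names are illustrative, computes the fraction of correctly recognized test images for both classifiers. The reported figures match this overall fraction (197 of 236 and 220 of 236), not the unweighted mean of the per-emotion rates.

```python
# Counts copied from Table 3.
TEST = {"Anger": 32, "Contempt": 5, "Disgust": 46, "Fear": 12,
        "Happiness": 56, "Sadness": 15, "Surprise": 70}
SVM = {"Anger": 24, "Contempt": 2, "Disgust": 40, "Fear": 9,
       "Happiness": 50, "Sadness": 10, "Surprise": 62}
WF_SVM = {"Anger": 28, "Contempt": 4, "Disgust": 42, "Fear": 11,
          "Happiness": 54, "Sadness": 12, "Surprise": 69}


def overall_rate(correct, totals):
    """Fraction of all test images that were recognized correctly."""
    return sum(correct.values()) / sum(totals.values())


def per_emotion_rates(correct, totals):
    """Recognition rate of each emotion taken separately."""
    return {emotion: correct[emotion] / totals[emotion] for emotion in totals}


svm_rate = overall_rate(SVM, TEST)        # 197 / 236
wf_svm_rate = overall_rate(WF_SVM, TEST)  # 220 / 236
```

The per-emotion rates also show that WF-SVM recognizes at least as many images as SVM for every emotion, with the largest relative gain on contempt, the smallest class.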

Finally, we compare the results obtained with the two kernel functions, both within an image-based framework and tested on the CK+ dataset. As shown in Table 3, the average precision of WF-SVM, which uses the weighted feature Gaussian kernel function, is 93% (220 of 236 test images), higher than the 83% (197 of 236) of SVM with the standard Gaussian kernel function. The recognition rate is also higher for each of the seven emotions. These results confirm the effectiveness of our method. The improvement can be explained in terms of the robustness of the machine learning algorithm: feature weighting reduces the influence of weakly correlated features and thus improves the robustness of the algorithm.

5. Conclusion and Future Work

In this paper, we propose an approach to emotion recognition based on facial expression. Because each feature affects the recognition result differently, the approach uses a feature weighting technique. Unlike previous works, which calculate the weight of each feature directly, we divide the facial expression images into uniform subregions and calculate the weight of each subregion's features from its subrecognition rate. The experimental results suggest that the approach based on the weighted feature Gaussian kernel function achieves a good recognition rate. However, this performance was obtained on a dataset with limited head motion, and emotion recognition based on facial expression remains full of challenges.

Acknowledgments

This work was supported by the National Natural Science Foundation of China (no. 61573066).

Competing Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

References

1. Alonso J. B., Cabrera J., Travieso C. M., López-de-Ipiña K. New approach in quantification of emotional intensity from the speech signal: emotional temperature. Expert Systems with Applications. 2015;42(4):9554–9564. doi: 10.1016/j.eswa.2015.07.062.
2. Dai W. H., Han D. M., Dai Y. H., Xu D. R. Emotion recognition and affective computing on vocal social media. Information and Management. 2015;52(7):777–788. doi: 10.1016/j.im.2015.02.003.
3. Zheng W.-L., Lu B.-L. Investigating critical frequency bands and channels for eeg-based emotion recognition with deep neural networks. IEEE Transactions on Autonomous Mental Development. 2015;7(3):162–175. doi: 10.1109/tamd.2015.2431497.
4. Chanel G., Mühl C. Connecting brains and bodies: applying physiological computing to support social interaction. Interacting with Computers. 2015;27(5):534–550. doi: 10.1093/iwc/iwv013.
5. Jatupaiboon N., Pan-Ngum S., Israsena P. Subject-dependent and subject-independent emotion classification using unimodal and multimodal physiological signals. Journal of Medical Imaging and Health Informatics. 2015;5(5):1020–1027. doi: 10.1166/jmihi.2015.1490.
6. Mehrabian A. Silent Messages. Belmont, Calif, USA: Wadsworth Publishing Company; 1971.
7. Guo Y., Zhao G., Pietikäinen M. Dynamic facial expression recognition with atlas construction and sparse representation. IEEE Transactions on Image Processing. 2016;25(5):1977–1992. doi: 10.1109/tip.2016.2537215.
8. Barroso E., Santos G., Cardoso L., Padole C., Proenca H. Periocular recognition: how much facial expressions affect performance? Pattern Analysis and Applications. 2016;19(2):517–530. doi: 10.1007/s10044-015-0493-z.
9. Lei Y. J., Guo Y. L., Hayat M., Bennamoun M., Zhou X. Z. A Two-Phase Weighted Collaborative Representation for 3D partial face recognition with single sample. Pattern Recognition. 2016;52:218–237. doi: 10.1016/j.patcog.2015.09.035.
10. Zhang X., Mahoor M. H. Task-dependent multi-task multiple kernel learning for facial action unit detection. Pattern Recognition. 2016;51:187–196. doi: 10.1016/j.patcog.2015.08.026.
11. Fasel B., Luettin J. Automatic facial expression analysis: a survey. Pattern Recognition. 2003;36(1):259–275. doi: 10.1016/s0031-3203(02)00052-3.
12. Zhao G., Pietikäinen M. Dynamic texture recognition using local binary patterns with an application to facial expressions. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2007;29(6):915–928. doi: 10.1109/TPAMI.2007.1110.
13. Shan C. F., Gong S. G., McOwan P. W. Facial expression recognition based on Local Binary Patterns: a comprehensive study. Image and Vision Computing. 2009;27(6):803–816. doi: 10.1016/j.imavis.2008.08.005.
14. Dhall A., Asthana A., Goecke R., Gedeon T. Emotion recognition using PHOG and LPQ features. Proceedings of the IEEE International Conference on Automatic Face and Gesture Recognition and Workshops (FG '11); March 2011; Santa Barbara, Calif, USA. IEEE; pp. 878–883.
15. Dalal N., Triggs B. Histograms of oriented gradients for human detection. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR '05); June 2005; San Diego, Calif, USA. pp. 886–893.
16. Jones J. P., Palmer L. A. An evaluation of the two-dimensional Gabor filter model of simple receptive fields in cat striate cortex. Journal of Neurophysiology. 1987;58(6):1233–1258. doi: 10.1152/jn.1987.58.6.1233.
17. Zhan Y.-Z., Ye J.-F., Niu D.-J., Cao P. Facial expression recognition based on gabor wavelet transformation and elastic templates matching. Proceedings of the 3rd IEEE International Conference on Image and Graphics (ICIG '04); December 2004; Hong Kong. pp. 254–257.
18. Ren F., Huang Z. Facial expression recognition based on AAM-SIFT and adaptive regional weighting. IEEJ Transactions on Electrical and Electronic Engineering. 2015;10(6):713–722. doi: 10.1002/tee.22151.
19. Soyel H., Demirel H. Localized discriminative scale invariant feature transform based facial expression recognition. Computers & Electrical Engineering. 2012;38(5):1299–1309. doi: 10.1016/j.compeleceng.2011.10.016.
20. Gao X. B., Su Y., Li X., Tao D. A review of active appearance models. IEEE Transactions on Systems, Man and Cybernetics, Part C: Applications and Reviews. 2010;40(2):145–158. doi: 10.1109/tsmcc.2009.2035631.
21. Sung J. W., Kanada T., Kim D. J. A unified gradient-based approach for combining ASM into AAM. International Journal of Computer Vision. 2007;75(2):297–310. doi: 10.1007/s11263-006-0034-8.
22. Wan K.-W., Lam K.-M., Ng K.-C. An accurate active shape model for facial feature extraction. Pattern Recognition Letters. 2005;26(15):2409–2423. doi: 10.1016/j.patrec.2005.04.015.
23. Lucey S., Wang Y., Saragih J., Cohn J. F. Non-rigid face tracking with enforced convexity and local appearance consistency constraint. Image and Vision Computing. 2010;28(5):781–789. doi: 10.1016/j.imavis.2009.09.009.
24. Cordeiro de Amorim R., Mirkin B. Minkowski metric, feature weighting and anomalous cluster initializing in K-Means clustering. Pattern Recognition. 2012;45(3):1061–1075. doi: 10.1016/j.patcog.2011.08.012.
25. Hu M., Li K., Wang X. H., Ren F. J. Facial expression recognition based on histogram weighted HCBP. Journal of Electronic Measurement and Instrument. 2015;29(7):953–960.
26. Cui C., Asari V. K. Adaptive weighted local textural features for illumination, expression, and occlusion invariant face recognition. Imaging and Multimedia Analytics in a Web and Mobile World; February 2014; San Francisco, Calif, USA.
27. Lee R. S., Chung C.-W., Lee S.-L., Kim S.-H. Confidence interval approach to feature re-weighting. Multimedia Tools and Applications. 2008;40(3):385–407. doi: 10.1007/s11042-008-0212-5.
28. Jang U.-D. Facial expression recognition by using efficient regional feature. Journal of Korean Institute of Information Technology. 2013;11(1):217–222.
29. Burges C. J. C. A tutorial on support vector machines for pattern recognition. Data Mining and Knowledge Discovery. 1998;2(2):121–167. doi: 10.1023/a:1009715923555.
30. Breiman L. Random forests. Machine Learning. 2001;45(1):5–32. doi: 10.1023/A:1010933404324.
31. Itti L., Koch C., Niebur E. A model of saliency-based visual attention for rapid scene analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1998;20(11):1254–1259. doi: 10.1109/34.730558.
32. Caruana R. Multitask learning. Machine Learning. 1997;28(1):41–75. doi: 10.1023/a:1007379606734.
33. Kremic E., Subasi A. Performance of random forest and SVM in face recognition. International Arab Journal of Information Technology. 2016;13(2):287–293.
34. Ali G., Iqbal M. A., Choi T.-S. Boosted NNE collections for multicultural facial expression recognition. Pattern Recognition. 2016;55:14–27. doi: 10.1016/j.patcog.2016.01.032.
35. Wang X.-H., Liu A., Zhang S.-Q. New facial expression recognition based on FSVM and KNN. Optik. 2015;126(21):3132–3134. doi: 10.1016/j.ijleo.2015.07.073.
36. Lucey P., Cohn J. F., Kanade T., Saragih J., Ambadar Z., Matthews I. The extended Cohn-Kanade dataset (CK+): a complete dataset for action unit and emotion-specified expression. Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW '10); June 2010; San Francisco, Calif, USA. IEEE; pp. 94–101.