Abstract

Objective

The research describes an extensible method of evaluating and cancelling electronic journals during a budget shortfall and evaluates implications for interlibrary loan (ILL) and user satisfaction.

Methods

We calculated cost per use for cancellable electronic journal subscriptions (n=533) from the 2013 calendar year and the first half of 2014, cancelling titles with cost per use greater than $20 or with fewer than 100 uses. For the remaining titles under review, we distributed an online survey asking respondents to rate the importance of journals to their work. Finally, we gathered ILL requests and COUNTER JR2 turnaway reports for calendar year 2015.

Results

Three hundred fifty-four respondents completed the survey. Because of the heterogeneity of both the surveyed titles and the respondents' backgrounds, most titles were reported as never used. We developed criteria based on the average response across journals to determine which titles to cancel. Based on this methodology, we cancelled eight journals. Examination of ILL data revealed that none of the cancelled titles was requested frequently. Free-text responses indicated, however, that many respondents value free ILL as a suitable substitute for immediate full-text access to biomedical journal literature.

Conclusions

Soliciting user feedback through an electronic survey can help collections librarians make electronic journal cancellation decisions during lean budget years. This methodology can be adapted and improved upon at other health sciences libraries.

Keywords: Costs and Cost Analysis, Interlibrary Loans, Surveys and Questionnaires, Periodicals as Topic

The University of New Mexico (UNM) Health Sciences Center consists of the faculty, staff, and students of the School of Medicine and its health professions programs; the College of Nursing; the College of Pharmacy; and the UNM Hospital system. In 2014, the Health Sciences Library and Informatics Center (HSLIC) at UNM experienced a collection budget shortfall of approximately $250,000, driven mainly by price inflation for e-journals and other e-resource subscriptions. Resource management librarians undertook a review of all collection resources to determine what could be cancelled in order to stay within budget.

Research has shown that the most important factors in serials cancellation decisions are faculty consultations and data such as vendor usage statistics and cost data [1]. A standard method for gathering faculty input is to compile lists of titles being considered for cancellation in each subject area and send them to faculty in the relevant departments for comment [2–7]. Other libraries have used surveys to solicit input from faculty and other stakeholders in a more quantitative manner [8–11]. Increases in interlibrary loan (ILL) requests have been used in the past to gauge the effect of journal cancellations [12–14], but few such studies have been published since the 1990s [15, 16].

This paper describes the approach that HSLIC took to make journal cancellation decisions and to assess the impact cancellations had on ILL.

METHODS

Journals categorization

To begin the evaluation process, the authors gathered cost and usage data for all of HSLIC's individually subscribed e-journal titles (n=117) and for titles in packages that allowed individual title cancellations (n=416) for calendar year 2013, as well as usage data from January 2014 through mid-August 2014. We calculated cost per use for all journals and identified those exceeding $10 cost per use. From this list, we identified two subsets, journals exceeding $20 cost per use (n=5) and journals with fewer than 100 total uses (n=5), and cancelled these titles. For the remaining 36 journals, with cost per use between $10 and $20, we solicited user feedback by distributing an online survey to the broader Health Sciences Center population.
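The triage step above can be sketched in Python. This is a minimal illustration, not the authors' tooling: the `Journal` record, the `triage` helper, and the sample titles and figures are hypothetical. Two details the text leaves open are handled by assumption: a title that both exceeds $20 cost per use and has fewer than 100 uses is placed in the over-$20 group, and both cancellation subsets are drawn only from titles above the $10 review floor.

```python
from dataclasses import dataclass


@dataclass
class Journal:
    title: str
    annual_cost: float  # subscription cost in US dollars
    uses: int  # full-text uses counted over the review period


def cost_per_use(journal: Journal) -> float:
    # Titles with zero recorded uses get an infinite cost per use.
    if journal.uses == 0:
        return float("inf")
    return journal.annual_cost / journal.uses


def triage(journals, review_floor=10.0, cancel_ceiling=20.0, min_uses=100):
    """Sort titles into hypothetical groups mirroring the review process."""
    groups = {"keep": [], "cancel_over_20": [], "cancel_low_use": [], "survey": []}
    for j in journals:
        cpu = cost_per_use(j)
        if cpu <= review_floor:
            groups["keep"].append(j.title)  # not under review
        elif cpu > cancel_ceiling:
            groups["cancel_over_20"].append(j.title)  # assumed to take precedence
        elif j.uses < min_uses:
            groups["cancel_low_use"].append(j.title)
        else:
            groups["survey"].append(j.title)  # $10 < CPU <= $20: ask users
    return groups


# Hypothetical titles and figures, for illustration only.
journals = [
    Journal("Journal A", 5000, 200),  # $25.00 per use
    Journal("Journal B", 3000, 250),  # $12.00 per use
    Journal("Journal C", 1500, 90),   # about $16.67 per use, under 100 uses
    Journal("Journal D", 900, 300),   # $3.00 per use
]
print(triage(journals))
```

Applying the thresholds in a fixed order keeps every title in exactly one group, which makes the counts for each group add up to the number of titles reviewed.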

Survey

The anonymous survey asked participants to rate the usefulness of each of the thirty-six journals being considered for cancellation (Appendix, online only). To get broad feedback, we distributed the survey to all students, faculty, staff, and house staff at the UNM Health Sciences Center during September 2014 through faculty and resident email lists, campus announcements, the library newsletter, and the library website. Respondents had to log in with their university IDs and passwords to access the survey, and repeat responses were restricted by Internet protocol (IP) address; otherwise, the survey was open to anyone at the university with its uniform resource locator (URL).

Respondents were asked to list their primary academic or clinical affiliation as well as their status at the university. We sorted responses by status into 3 response groups: faculty, residents, and staff and students. Respondents were given a table listing each journal's title, cost, number of uses in 2013, and major subject areas to facilitate browsing. For each title, respondents chose one of five options: “Never Use,” “Rarely Use,” “Useful,” “Important,” or “Essential.” The default option was “Never Use,” so respondents did not have to enter a response for every title. Finally, respondents could enter free-text comments of up to 250 characters before submitting the survey. This study received exempt status from our institutional review board prior to distribution of the survey.

At the end of calendar year 2015, we collected ILL requests and direct individual requests for purchase of the cancelled journal titles made during that year. We also gathered turnaway reports (COUNTER JR2), which record failed attempts to access full-text journal content not licensed by the institution [17].

RESULTS

Survey and analysis

The survey received 354 complete responses: 246 from faculty (70%), 58 from residents (16%), and 49 from staff and students (14%), whom we grouped together because each group alone returned relatively few responses. Every college and academic department was represented by at least 1 faculty respondent, except for 1 department that had no relevant journals in the survey.

We converted the textual responses to numerical data for ease of analysis (e.g., “Essential”=5, “Never Use”=1) and cleaned affiliation values because some had been entered incorrectly or in the wrong field. We tallied responses by response group and averaged them for each journal title. We then counted the total number of “Essential,” “Important,” and “Useful” responses for each title and averaged these counts across titles to determine the minimum number of responses in each category that a journal had to receive to earn a “Keep” score. The minimum criteria differed for each response group.
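A minimal Python sketch of the numeric coding and per-group averaging described above. Only the endpoints (“Essential”=5, “Never Use”=1) come from the text; the intermediate values 2 to 4 are an assumed evenly spaced coding, and the input format of (response group, journal title, option) tuples and the `average_scores` helper are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Assumed linear coding; only the endpoints are stated in the text.
SCALE = {"Never Use": 1, "Rarely Use": 2, "Useful": 3, "Important": 4, "Essential": 5}


def average_scores(responses):
    """responses: iterable of (response_group, journal_title, option_label).

    Because "Never Use" was the survey default, an untouched item arrives
    here as "Never Use" and is averaged like any other answer.
    """
    scores = defaultdict(list)
    for group, title, label in responses:
        scores[(group, title)].append(SCALE[label])
    # Mean numeric score per (response group, journal) pair.
    return {key: mean(values) for key, values in scores.items()}


# Hypothetical responses, for illustration only.
responses = [
    ("faculty", "Journal A", "Essential"),
    ("faculty", "Journal A", "Never Use"),
    ("faculty", "Journal B", "Useful"),
    ("residents", "Journal A", "Important"),
]
print(average_scores(responses))
```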

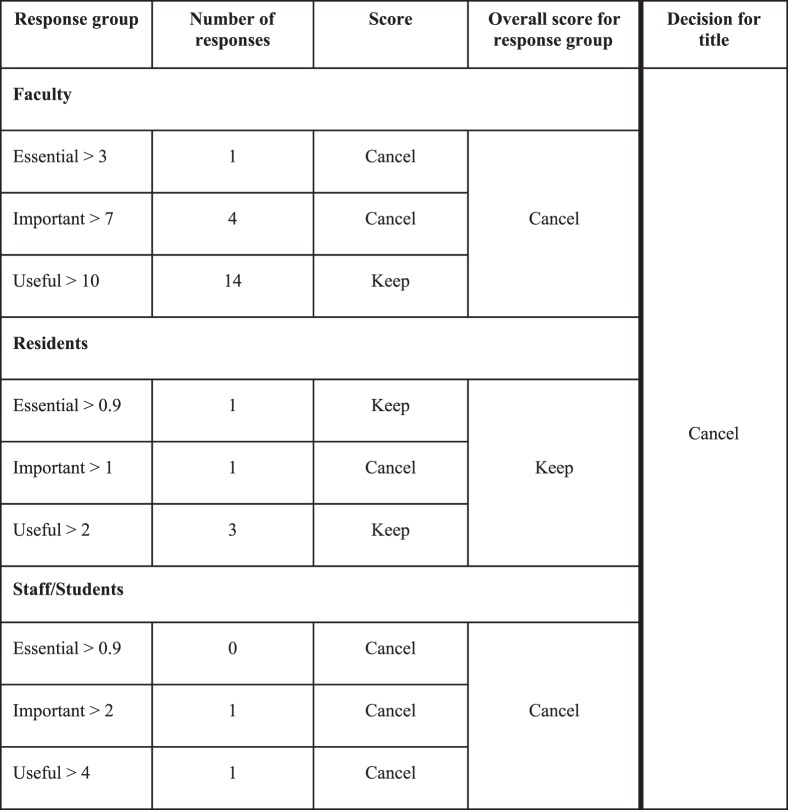

For example, faculty gave each journal an average of 2.56 “Essential” responses, so we set the minimum “Essential” criterion for this response group at 3. We followed the same process for “Important” and “Useful.” Journals that met the minimum criterion in fewer than 2 of these 3 categories were assigned a “Cancel” score for that response group. We repeated this process for each response group and then compared the results across groups.
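The per-group scoring rule can be sketched as follows. The text does not say how a fractional average becomes a whole-number criterion; this sketch assumes rounding up (`math.ceil`), which matches the 2.56 to 3 example but would differ from ordinary rounding for an average such as 2.3. The input, a mapping from title to a `Counter` of option labels for one response group, and the helper names are hypothetical.

```python
import math
from collections import Counter

CATEGORIES = ("Essential", "Important", "Useful")


def minimum_criteria(counts_by_title):
    """Average count per category across all titles, rounded up (assumed)."""
    n = len(counts_by_title)
    return {
        cat: math.ceil(sum(c.get(cat, 0) for c in counts_by_title.values()) / n)
        for cat in CATEGORIES
    }


def group_scores(counts_by_title):
    """Assign "Keep" or "Cancel" to each title for ONE response group."""
    minima = minimum_criteria(counts_by_title)
    scores = {}
    for title, counts in counts_by_title.items():
        met = sum(counts.get(cat, 0) >= minima[cat] for cat in CATEGORIES)
        # Meeting the minimum in fewer than 2 of the 3 categories means "Cancel".
        scores[title] = "Keep" if met >= 2 else "Cancel"
    return scores


# Hypothetical faculty counts, for illustration only.
faculty = {
    "Journal A": Counter({"Essential": 5, "Important": 4, "Useful": 3}),
    "Journal B": Counter({"Essential": 1, "Important": 1, "Useful": 6}),
    "Journal C": Counter({"Useful": 1}),
}
print(minimum_criteria(faculty))
print(group_scores(faculty))
```

Here Journal A meets the “Essential” and “Important” minima but not “Useful,” which is still 2 of 3 and therefore enough to keep it for this group.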

Journals with a “Cancel” score from 2 or more response groups were marked for cancellation. Figure 1 provides an example of this process. Based on the survey feedback, we cancelled 8 journals for a total savings of approximately $27,000.
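Combining the per-group scores amounts to a majority vote across the three response groups. In this hypothetical sketch, `scores_by_group` maps each group name to its title-to-score mapping; treating a title that is absent from a group's mapping as not receiving a “Cancel” score from that group is an assumption.

```python
def final_decisions(scores_by_group, min_cancel_votes=2):
    """scores_by_group: {group_name: {title: "Keep" or "Cancel"}}."""
    titles = set().union(*(scores.keys() for scores in scores_by_group.values()))
    decisions = {}
    for title in sorted(titles):
        # Count how many response groups gave this title a "Cancel" score.
        votes = sum(scores.get(title) == "Cancel" for scores in scores_by_group.values())
        decisions[title] = "Cancel" if votes >= min_cancel_votes else "Keep"
    return decisions


# Hypothetical per-group scores, for illustration only.
scores = {
    "faculty": {"Journal A": "Keep", "Journal B": "Cancel"},
    "residents": {"Journal A": "Cancel", "Journal B": "Cancel"},
    "staff_students": {"Journal A": "Keep", "Journal B": "Keep"},
}
print(final_decisions(scores))
```

The authors treated the survey as one input rather than an absolute rule and decided three journals contrary to user feedback, so output like this is best read as a starting point for librarian judgment.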

Figure 1. Journal scoring workflow example

Requests for cancelled material

During 2015, we received a total of 43 ILL requests for articles from the 18 titles that we cancelled. Only 15 of these requests were for articles published during 2015. Only 2 titles were requested more than 5 times, and only 1 title exceeded the CONTU Rule of 5 [18], which limits a borrowing library to five copies per year of articles from a given periodical published within the five years preceding the request. By cancellation group, titles cancelled for exceeding $20 cost per use received 12 requests, titles cancelled for having fewer than 100 uses received 14, and titles cancelled on the basis of the survey received 17.
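The gap between “requested more than 5 times” and “exceeded the CONTU Rule of 5” comes from the publication-date window: only requests for articles published in the five years before the request count toward the CONTU limit. The Python sketch below illustrates this with hypothetical request records (title, publication year, request date); it approximates the five-year window at the level of calendar years, which is a simplification of the guideline's wording.

```python
from collections import Counter
from datetime import date


def requests_per_title(requests, year):
    """All requests made in `year`, per title, regardless of publication date."""
    return Counter(title for title, _pub_year, req_date in requests if req_date.year == year)


def contu_counts(requests, year, window_years=5):
    """Requests made in `year` for articles published within `window_years`
    of the request, approximated by calendar year."""
    return Counter(
        title
        for title, pub_year, req_date in requests
        if req_date.year == year and 0 <= req_date.year - pub_year <= window_years
    )


def over_limit(counts, limit=5):
    """Titles whose count exceeds `limit`, in alphabetical order."""
    return sorted(title for title, n in counts.items() if n > limit)


# Hypothetical request log, for illustration only.
log = (
    [("Journal X", 2013, date(2015, 3, 2))] * 6
    + [("Journal Y", 1998, date(2015, 4, 10))] * 6
    + [("Journal Z", 2015, date(2015, 9, 1))] * 2
)
print(over_limit(requests_per_title(log, 2015)))  # ['Journal X', 'Journal Y']
print(over_limit(contu_counts(log, 2015)))        # ['Journal X']
```

Journal Y is requested six times, but every request is for a 1998 article, so none of them counts toward the CONTU limit.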

Turnaway reports were available for all but one of the cancelled titles. The number of turnaways per cancelled title ranged from zero to seventy-six. Because turnaway reports do not indicate the publication year of the content that the user tried to access, we could not be certain that these turnaways resulted from attempts to access content published in 2015; some might have come from attempts to access articles in a journal's backfile. Comparing the number of turnaways with the number of ILL requests for each title revealed no correlation. Overall, the turnaway reports provided little insight into the success or failure of our cancellation decisions.
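The text does not say which correlation measure was used. One reasonable choice for small, skewed counts such as turnaways is Spearman's rank correlation; the sketch below is an assumed, dependency-free implementation that assigns average ranks to ties and is applied to hypothetical per-title pairs of turnaway and ILL counts.

```python
from math import sqrt


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of 1-based positions i..j
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson(ranks(x), ranks(y))


# Hypothetical per-title counts, for illustration only.
turnaways = [0, 12, 76, 3, 3]
ill_requests = [2, 0, 1, 5, 2]
print(spearman(turnaways, ill_requests))
```

With only seventeen titles, even a moderate coefficient would be hard to distinguish from chance, which is consistent with reading the turnaway data cautiously.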

To date, librarians have not received any direct requests for subscriptions to the cancelled titles, either through liaison librarians or through the library's Request a Purchase web form.

DISCUSSION

The ILL request rate for cancelled titles indicates that our methodology was likely successful in making cancellation decisions, as none of the titles received a significant number of ILL requests. The cost of filling these requests, including copyright fees, was financially negligible.

Like past studies, we relied on ILL statistics to gauge the effectiveness of journal cancellations, but these statistics might not accurately capture the need for the cancelled titles. Recent research indicates that some researchers prefer crowdsourcing scholarly material from online sources and social media over ILL because of its quick turnaround [19]. This might explain why the entire group of cancelled titles received only fifteen requests for material published during 2015, a figure much lower than the reported usage for the prior year. While there is no way to accurately measure or predict the need for cancelled journals, ILL data can approximate it and inform librarian selectors about whether it is more cost effective to subscribe to a journal or to borrow its articles.
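The subscribe-or-borrow comparison reduces to a break-even calculation. The sketch below is illustrative only: `cost_per_request` stands for an assumed all-in cost of filling one ILL request (for example, copyright fee plus processing), a figure the article does not report, and the helper names and example numbers are hypothetical.

```python
def ill_cost(expected_requests, cost_per_request):
    """Projected annual cost of borrowing instead of subscribing."""
    return expected_requests * cost_per_request


def cheaper_to_borrow(subscription_cost, expected_requests, cost_per_request):
    """True if projected ILL spending is below the subscription price."""
    return ill_cost(expected_requests, cost_per_request) < subscription_cost


def break_even_requests(subscription_cost, cost_per_request):
    """Requests per year at which borrowing costs as much as subscribing."""
    return subscription_cost / cost_per_request


# Hypothetical title: $3,000 subscription, assumed $40 all-in cost per request.
print(break_even_requests(3000, 40))    # 75.0
print(cheaper_to_borrow(3000, 12, 40))  # True
```

In this made-up case the title would need about 75 requests a year before borrowing cost as much as subscribing; the per-request figure is the main uncertainty, so it is worth rerunning the comparison with a local estimate.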

In January 2015, our library migrated to a new integrated library system and discovery layer. One feature of the new system was an ILL request button in catalog records that automatically populates the ILL request form. Previously, users had to navigate to our ILL system and manually type or copy and paste article information into the ILL form. As a result, our ILL request volume rose 137% over the previous calendar year. This overall increase in ILL demand might account for some of the requests for cancelled titles and suggests that our users had become more aware of our ILL service. However, the 43 ILL requests for articles from the cancelled journals constituted only 1.4% of the total ILL requests for the year, so the cancellations did not place a burden on our ILL service.

Use of survey responses in decision making

After reviewing the survey responses, we decided that setting strict, quantitative criteria for retention or cancellation would not have led to the best results, for several reasons, including unbalanced response rates across colleges and departments and respondents' tendency to use primarily journals in their own areas of study. In three cases, we decided to keep or cancel a journal contrary to user feedback. One of these was the Journal of Interprofessional Care, a title directly related to the nascent interprofessional education program at UNM. Rather than treating the survey as an absolute basis for cancellation, we used it to inform our decisions to retain or cancel specific titles while seeking to balance the many wants and needs of all the areas that we serve.

Free-text responses

The free-text response portion of the survey provided some insight into why individual respondents found certain journals essential to their work. In other cases, the responses revealed misconceptions that many in our community hold about the library and the need to cancel journal titles, as well as about the journal publishing marketplace and how much academic journals can cost.

Several respondents commented that they were comfortable with the library cancelling titles as long as they could obtain needed articles through ILL. Respondents also stressed the importance of ILL remaining a free service.

Other comments suggested that some respondents did not fully understand the purpose of the journal survey. One respondent was concerned that journals essential to the respondent's work were not represented in the survey, not realizing that their absence meant they were not being considered for cancellation. Another noted that it was fine to cancel the print version of a title as long as we maintained electronic access, even though our library had cancelled the vast majority of its print subscriptions more than ten years earlier.

There were also comments suggesting a need for training on how to access library materials. One respondent said the library did not have any of the journals the respondent needed from a particular publisher, when in fact the library had access to almost all of that publisher's journals through a “Big Deal” bundle. Another respondent requested two journal subscriptions, both of which the library already held.

Limitations

We were unable to calculate a true response rate because we could not accurately determine the number of potential respondents. Because recruitment was conducted primarily through email lists and completion of the survey was voluntary, the results might be biased toward individuals with an interest in the library's journal subscriptions. Some library liaisons promoted the survey in their departments more actively than others, and some department chairs encouraged all their faculty members to take the survey; therefore, some departments might have been better represented than others. We attempted to minimize the effect of this imbalance on the analysis by comparing respondents' departmental affiliations with the subject areas of the journals. However, departmental affiliation was an imperfect proxy given the interdisciplinary nature of the health sciences and the crossover between departments. For example, a pediatric endocrinologist in the Pediatrics Department would be interested in journals in both pediatrics and endocrinology. Our survey demographics were not this granular, and we did not have this type of information about respondents unless they chose to enter it as a free-text response.

Biography

Jacob L. Nash, MSLIS, AHIP, jlnash@salud.unm.edu, Resource Management Librarian; Karen R. McElfresh, MSLS, AHIP, kmcelfresh@salud.unm.edu, Resource Management Librarian; Health Sciences Library & Informatics Center, 1 University of New Mexico, MSC09 5100, Albuquerque, NM 87131

Footnotes

A supplemental appendix is available with the online version of this article.

REFERENCES

- 1. Williamson J, Fernandez P, Dixon L. Factors in science journal cancellation projects: the roles of faculty consultations and data. Issues Sci Technol Librariansh [Internet]. 2014;54(3–4):78 [cited 24 Nov 2015]. <http://www.istl.org/14-fall/refereed4.html>.

- 2. Clement S, Gillespie G, Tusa S, Blake J. Collaboration and organization for successful serials cancellation. Ser Libr [Internet]. 2008;54(3–4):229–34. <http://www.tandfonline.com/doi/abs/10.1080/03615260801974172>.

- 3. Calvert K, Fleming R. Giving them what they want: providing information for a serials review project. Proceedings of the Charleston Library Conference. 2011. p. 393–6.

- 4. Sinha R, Tucker C, Scherlen A. Finding the delicate balance: serials assessment at the University of Nevada, Las Vegas. Ser Rev. 2005;31(2):120–4.

- 5. Carey R, Elfstrand S, Hijleh R. An evidence-based approach for gaining faculty acceptance in a serials cancellation project. Collect Build. 2006;30(2):59–72.

- 6. Enoch T, Harker KR. Planning for the budget-ocalypse: the evolution of a serials/ER cancellation methodology. Ser Libr. 2015;68(1–4):282–9.

- 7. Nixon JM. A reprise, or round three: using a database management program as a decision-support system for the cancellation of serials. Ser Libr. 2010;59(3–4):302–12.

- 8. Bucknell T, Stanley T. Design and implementation of a periodicals voting exercise at Leeds University Library. Serials. 2002;15(2):153–9.

- 9. Bloom M, Marks L. Growing opportunities in the hospital library: measuring the collection needs of hospital clinicians. J Hosp Librariansh [Internet]. 2013 Apr;13(2):113–9 [cited 25 Nov 2015]. <http://www.tandfonline.com/doi/abs/10.1080/15323269.2013.770658>.

- 10. Murphy A. An evidence-based approach to engaging healthcare users in a journal review project. Insights UKSG J. 2012;25(1):44–50.

- 11. Day A, Davis H. Collection intelligence: using data driven decision making in collection management. Proceedings of the Charleston Library Conference. 2010. p. 81–97.

- 12. Crump MJ, Freund L. Serials cancellations and interlibrary loan: the link and what it reveals. Ser Rev. 1995;21(2):29.

- 13. Wilson MD, Alexander W. Automated interlibrary loan/document delivery data applications for serials collection development. Ser Rev. 1999;25(4):11–9. DOI: http://dx.doi.org/10.1016/S0098-7913(99)00043-X.

- 14. Kilpatrick TL, Preece BG. Serial cuts and interlibrary loan: filling the gaps. Interlend Doc Supply [Internet]. 1996;24(1):12–20. <http://www.emeraldinsight.com/journals.htm?articleid=860468&show=abstract&>.

- 15. Calvert K, Fleming R, Hill K. Impact of journal cancellations on interlibrary loan demand. Ser Rev [Internet]. 2013 Sep;39(3):184–7 [cited 21 Jun 2016]. <http://www.sciencedirect.com/science/article/pii/S0098791313000956>.

- 16. Calvert K, Gee W, Malliet J, Fleming R. Is ILL enough? Examining ILL demand after journal cancellations at three North Carolina universities. Proceedings of the Charleston Library Conference [Internet]. 2013 [cited 21 Jun 2016]. p. 416–9. <http://docs.lib.purdue.edu/charleston/2013/Acquisitions/3>.

- 17. COUNTER Online Metrics. The COUNTER code of practice for e-resources: release 4 [Internet]. 2012 [cited 23 Feb 2013]. <http://projectcounter.org/r4/COPR4.pdf>.

- 18. Copyright Clearance Center. The campus guide to copyright compliance [Internet]. The Center; 2005 [cited 23 Mar 2016]. <https://www.copyright.com/Services/copyrightoncampus/content/ill_contu.html>.

- 19. Gardner CC, Gardner GJ. Fast and furious (at publishers): the motivations behind crowdsourced research sharing. Coll Res Libr [Internet]. Forthcoming 2017 [cited 21 Jun 2016]. <http://crl.acrl.org/content/early/2016/02/25/crl16-840.full.pdf+html>.
