Abstract

Enforcement of social norms by impartial bystanders in the human species reveals a possibly unique capacity to sense and to enforce norms from a third party perspective. Such behavior, however, cannot be accounted for by current computational models based on an egocentric notion of norms. Here, using a combination of model-based fMRI and third party punishment games, we show that brain regions previously implicated in egocentric norm enforcement critically extend to the important case of norm enforcement by unaffected third parties. Specifically, we found that responses in the ACC and insula cortex were positively associated with detection of distributional inequity, while those in the anterior DLPFC were associated with assessment of the intentionality of the violator. Moreover, during sanction decisions, the subjective value of sanctions modulated activity in both vmPFC and rTPJ. These results shed light on the neurocomputational underpinnings of third party punishment and the evolutionary origin of human norm enforcement.

Keywords: social norms, altruistic punishment, fMRI, ventromedial prefrontal cortex

1. Introduction

Social norms, the shared understandings of actions that are obligatory, permitted, or forbidden, play a central role in human societies in regulating social behavior, maintaining social coherence, and promoting cooperation (Bendor and Swistak, 2001; Camerer, 2003; Elster, 1989; Fehr and Fischbacher, 2004; Ostrom, 2000). In particular, the ability to develop norms and enforce them through the use of sanctions is thought by many to be one of the distinguishing characteristics of the human species (Boyd, 1988; Fehr and Fischbacher, 2003). The sanction may be either through reciprocal means taken by individuals whose economic payoff is directly harmed by the norm violation, or through impartial bystanders, so called “third parties”, who are unaffected by the deviation but in a position to punish the violator (Bendor and Swistak, 2001; Fehr and Fischbacher, 2004; Ostrom, 2000).

In the case of reciprocal punishment, notable progress has been made in our understanding of its neural substrates through the application of functional neuroimaging techniques to experimental games that capture core cognitive processes underlying norm-guided behavior (De Quervain et al., 2004; Knoch et al., 2006; Li et al., 2009). Using economic game paradigms such as the ultimatum game, these studies have identified critical roles for the insula cortex and anterior cingulate cortex (ACC), which were previously known to encode the emotion of disgust and conflict resolution respectively, in responding to norm violation in various settings (Sanfey et al., 2003; Xiang et al., 2013).

In addition, these studies have suggested that regions in the frontoparietal circuits are important for the assessment of intentionality and responsibility. Dorsolateral prefrontal cortex (DLPFC), for example, has been shown to be important in assessing the intentionality of norm violations (Buckholtz et al., 2008; Haushofer and Fehr, 2008), and its disruption via rTMS causally affects norm-related decisions (Buckholtz et al., 2015; Knoch et al., 2006). Studies of social behavior also implicate the right temporoparietal junction (rTPJ) in mentalizing and theory of mind, the ability to take the perspective of others (Frith and Frith, 2006). Finally, reward-related regions including the striatum and ventromedial prefrontal cortex (vmPFC) have also been implicated in social reward processing and sanctioning behavior (De Quervain et al., 2004; Knoch et al., 2006; Li et al., 2009).

In contrast, despite its ubiquity and importance to norm enforcement in human societies, we know much less about enforcement by impartial bystanders (Bendor and Swistak, 2001; Fehr and Fischbacher, 2004; Ostrom, 2000). This gap has important implications for our understanding of the computational underpinnings of norm-guided behavior and their evolutionary origins (Fehr and Fischbacher, 2004; Riedl et al., 2012). Evolutionarily, humans constitute the only species known to have individuals regularly sanction norm violations even when they themselves are not affected, whereas reciprocal punishment is observed in multiple social species (Fehr and Fischbacher, 2004; Riedl et al., 2012). It has been suggested in the literature that both reciprocal punishment and third party punishment are crucial to the establishment and maintenance of social norms (DeScioli and Kurzban, 2009, 2013). In addition, both types of punishment similarly depend on the extent of the violation imposed on the offended as well as the intentionality of the violation on the part of the offender (Blount, 1995; Falk et al., 2003). That is, humans are capable of norm enforcement based on impartial community-based notions that are sensitive to the perspectives of the offender as well as the offended, which could be critical to both third party punishment and reciprocal punishment.

This is opposed to an alternative view that reciprocal punishment could be instead driven by non-norm-based concerns, such as retaliatory motives in response to status challenges, or simply “lashing out” (Fehr and Fischbacher, 2004; Riedl et al., 2012; Yamagishi et al., 2012). For example, under the “wounded pride hypothesis”, reciprocal punishment such as rejection of unfair behavior in the ultimatum game results from a psychological response to a challenge to the integrity or inferior status of the responder (Yamagishi et al., 2012). By and large, current studies of reciprocal punishment are unable to differentiate between these explanations and have great difficulty accounting for sanctions by impartial bystanders (De Quervain et al., 2004; Sanfey et al., 2003; Xiang et al., 2013).

This, however, poses a challenge for current models of norm-guided behavior widely used in studies of reciprocal punishment (Sanfey et al., 2003; De Quervain et al., 2004; Xiang et al., 2013). Specifically, norm violations in these models are measured by so-called “egocentric inequity”, defined as the absolute payoff difference between the decision-maker and other parties. That is, people are assumed to care about norm violation only to the extent that their own relative position is affected. Note that the term “egocentric” refers only to the use of one’s self as the frame of reference, as opposed to the colloquial meaning of selfishness. Thus, an important question for current neuroscientific accounts of social norms and norm-guided behavior is the extent to which computational components implicated in reciprocal punishment reflect the sophisticated capacities for norm enforcement by unaffected third parties (Montague and Lohrenz, 2007; Spitzer et al., 2007; Buckholtz et al., 2008). In addition, to what extent do computational demands involved in assessing norm violation from the perspective of others rely upon and recruit additional neural systems? And finally, how are norm-related computations from the perspectives of both offended and offending parties integrated to drive sanction behavior in unaffected third parties?

Here we adopt a set of third party punishment (TPP) games to probe the computational substrates of norm enforcement from the perspective of an impartial bystander. Specifically, we introduced a third party into the widely used dictator game (DG) and scanned participants in the role of the third party to investigate the neural responses to three key components of third party punishment: (1) how a third party responds to inequity between the dictator and the recipient, (2) how a third party responds to inequity when given the option to punish the dictator, and (3) how a third party responds differently when the intentionality of the dictator differs. In this game, the dictator (P1) is given an endowment of 100 monetary units (MU), and can distribute any proportion of this endowment between herself and a recipient (P2). The dictator’s decision is then revealed to the third party (P3). The third party, who is endowed with 160 MUs, must decide whether to sanction the behavior of the dictator at a ratio of 1:5. That is, for every MU spent by the third party, the dictator’s earning is reduced by five MUs (Fig. 1A). Critically, to manipulate the perspective of the norm violator, we included, in addition to the standard TPP, a “No-Intention” condition where the distribution between the dictator and the recipient was decided by a randomization device rather than the dictator. That is, whereas in the standard “Intention” condition any unfair distribution is the result of the dictator’s choice, in the No-Intention condition unfair distributions are the result of a random computer assignment. All other aspects of the game are identical between the conditions (Fig. 1A).
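The payoff rules described above can be illustrated with a short sketch. The function name `tpp_payoffs` and its argument names are hypothetical; only the 100 MU endowment split between P1 and P2, the 160 MU third-party endowment, and the 1:5 sanction ratio are taken from the text.

```python
def tpp_payoffs(x1, x2, p, x3=160, ratio=5):
    """Return post-sanction payoffs (P1, P2, P3) in monetary units (MU).

    x1, x2 : allocation to the dictator and the recipient (must sum to 100)
    p      : MUs the third party spends on sanctioning
    x3     : third-party endowment (160 MU in the text)
    ratio  : MUs removed from P1 per MU spent by P3 (5 in the text)
    """
    if x1 + x2 != 100:
        raise ValueError("P1 and P2 must split the 100 MU endowment")
    if not 0 <= p <= x3:
        raise ValueError("sanction spending must lie between 0 and P3's endowment")
    # Assumption: no floor is applied to P1's earnings; the text does not say
    # whether sanctions that exceed P1's allocation are permitted.
    return (x1 - ratio * p, x2, x3 - p)


# Example: a 80:20 split sanctioned with 4 MU costs P1 20 MU and P3 4 MU.
print(tpp_payoffs(80, 20, 4))
```

The recipient's payoff is unchanged by the sanction, which is why the third party can reduce the P1/P2 gap only at a cost to herself.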

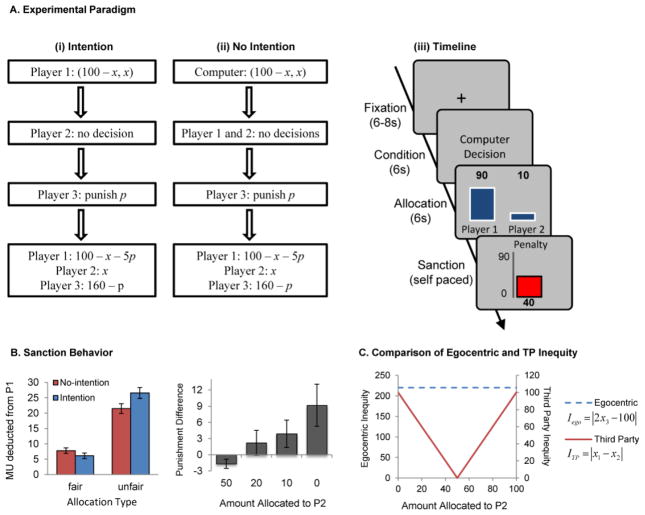

Fig. 1.

(A) In the Intention condition, a dictator (P1) is given an endowment of 100 monetary units (MU), and can distribute any proportion of this endowment between P1 and a recipient (P2). The dictator’s decision is then revealed to the third party (P3), who decides whether to sanction the behavior of the dictator at a ratio of 1:5. That is, for every MU spent by the third party, the dictator’s earning is reduced by five MUs. The No-Intention condition is identical except that the distribution between the dictator and the recipient is decided by a randomization device. The distributions of P1 and P2 are matched between the two conditions. (B) Third-party sanction decisions are modulated by both distributional inequity and intentionality. Left panel shows sanction as a function of distributional inequity and intentionality. Fair trials are classified as 50:50 allocations, and unfair trials as trials where P1 receives 80 MUs or greater. Right panel shows paired differences between sanction in the Intention and No-Intention conditions under different levels of distributional inequity. (C) Under egocentric models of inequity, the third party experiences the same level of inequity (blue dashed line) in the current game regardless of the amount that was allocated to P2 (x-axis). In contrast, under impartial, third party models of inequity, the third party experiences high levels of inequity (solid red line) when P2’s allocation significantly departs from a 50–50 split of the initial endowment.

This paradigm has three important advantages as a cognitive probe of norm-guided behavior. First, unlike in the ultimatum game and the trust game, the third party in this game does not stand to gain or lose materially from the actions of the dictator. As a result, it is difficult for status- or reciprocity-motivated responses to account for observed sanctions. Second, and most importantly, the parameters were chosen so that the third party is endowed with more tokens than P1; as a result, standard egocentric models of norm enforcement would predict no punishment in all possible situations, including those that result in substantial inequity between the dictator and the recipient, thereby allowing us to separate egocentric and impartial motivations in observed sanction behavior. In addition, with a ratio of 1:3, it was observed that 40% of subjects chose no punishment for inequitable distributions (Fehr and Fischbacher, 2004). As such, we use a higher ratio of 1:5 to better reveal heterogeneous preferences for punishment. Third, the temporal structure of the game enabled us to characterize separately the regions involved in processing the key variables underlying behavior. More specifically, we are able to separately examine evaluation of the severity of the norm violation when P1’s choice is first revealed to the third party in the Allocation event, and computation of the subjective value of sanctioning said violation when the third party decides the level of punishment in the Sanction event.

2. Materials and Methods

2.1. Subjects

Twenty-two right-handed student subjects (12 females, mean age 22.9 ± 3.2 years) were recruited through internet advertisements at Beijing Normal University. Of these subjects, one had excessive motion, and three did not punish on any trial. These four subjects were excluded from both behavioral and neuroimaging analyses.

2.2. Procedure

Subjects undergoing neuroimaging completed 24 rounds in one scanning session lasting 15–20 min. Each subject’s informed consent was obtained via a consent form approved by the Internal Review Board at the Hong Kong University of Science and Technology and Beijing Normal University. Subjects in the scanner played the role of the third party, and were matched with 24 pairs of P1 and P2 who were selected from pretest experiments. Half of the trials were under the Intention condition and the other half under the No-Intention condition. The order of appearance of the two kinds of trials was randomized. The distributions of 100 MUs between P1 and P2 included 50:50, 80:20, 90:10 and 100:0 for both conditions. In particular, subjects were told that they were playing with real people in each round and that they would be randomly matched with one pair of P1 and P2 only. Both P1 and P2 were paid after the fMRI experiment. The third party was informed that they would be paid based on one randomly chosen round from the 24 rounds plus a RMB160 participation fee. This method, widely used in fMRI experiments involving social interaction, adheres to the no-deception principle in experimental economics (De Quervain et al., 2004; Spitzer et al., 2007). The one-shot nature of the game ensures that there is no reputation effect, and that it is incentive compatible for subjects to reveal their preferences.

2.3. FMRI scanning parameters

The experiment was conducted on a Siemens MAGNETOM Trio Tim 3T MRI scanner. The echo spacing was 0.46 ms, the EPI factor was 64, the RF pulse type was normal, and the gradient mode was fast. Subjects lay supine with their heads in the scanner bore and observed the rear-projected computer screen via a 45° mirror mounted above their faces on the head coil. Subjects’ choices were registered using two MRI-compatible button boxes. High-resolution T1-weighted scans (1.3 × 1.0 × 1.3 mm) were acquired on Siemens 3T scanners. Functional image details: echo-planar imaging; repetition time (TR) = 2000 ms; echo time (TE) = 30 ms; flip angle = 90°; voxel size = 3.4 × 3.4 × 4 mm³.

2.4. FMRI data preprocessing

All imaging data were processed and analyzed using SPM8 (Wellcome Trust Centre for Neuroimaging, Institute of Neurology, UCL, London http://www.fil.ion.ucl.ac.uk/spm) and visualized in xjView (http://www.alivelearn.net/xjview8/). Functional images were realigned using a six-parameter rigid-body transformation. Each individual’s structural T1 image was co-registered to the average of the motion-corrected images using a 12-parameter affine transformation. Individual T1 structural images were segmented into grey matter, white matter, and cerebrospinal fluid before the individual grey matter was nonlinearly warped to the MNI grey matter template. Functional images were, in order, slice-timing corrected, motion corrected, normalized into MNI space, and smoothed with an 8 mm isotropic Gaussian kernel.

2.5. FMRI data analysis

Random-effects analyses were carried out in SPM8 by specifying a separate general linear model for each subject and pooling the results at the second level. All images were high-pass filtered in the temporal domain (filter width 128 s). Autocorrelation of the hemodynamic responses was modeled as an AR(1) process. Analyses of fMRI time series were done by generating distributional inequity, intentionality and decision utility from the computational model calibrated on the choices of subjects at the individual level. An event-related design was used where regressors were included for the Allocation and Decision events of the trials (Fig. 1A). That is, for each subject, we constructed a (first level) general linear model (GLM) consisting of two events: an event at the time of Allocation with a duration of 2 seconds, and one at the time of Decision with a duration of 2 seconds. Regressors were convolved with the canonical hemodynamic response function and entered into a regression analysis against each subject’s BOLD response data. The regression fits of each signal from each individual subject were then summed across their roles and then taken into random-effects group analysis.
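As an illustration of how a model-derived quantity such as distributional inequity can enter the first-level GLM, the following is a minimal sketch of a parametric regressor built from 2-second events and convolved with a double-gamma response function. It is not the SPM8 implementation: the function names, the onset times, the 0.1 s sampling grid and the mean-centring of the modulator are assumptions made for illustration, and the double-gamma shape (peak near 5 s, undershoot near 15 s, ratio 1/6) is a common approximation of the canonical response.

```python
import math

TR = 2.0  # repetition time in seconds, as reported in the scanning parameters


def canonical_hrf(t):
    """Double-gamma response at time t (seconds); an approximation, not SPM's code."""
    if t <= 0:
        return 0.0
    peak = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0


def build_regressor(onsets, modulators, n_scans, duration=2.0, dt=0.1):
    """Boxcars scaled by mean-centred modulators, convolved with the HRF,
    then sampled once per TR."""
    mean_mod = sum(modulators) / len(modulators)
    centred = [m - mean_mod for m in modulators]

    # Build the modulated boxcar time course on a fine time grid.
    n_fine = int(round(n_scans * TR / dt))
    neural = [0.0] * n_fine
    for onset, weight in zip(onsets, centred):
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + duration) / dt)))
        for i in range(start, stop):
            neural[i] += weight

    # Discrete convolution, evaluated only at scan acquisition times.
    hrf = [canonical_hrf(k * dt) for k in range(int(round(32.0 / dt)))]
    regressor = []
    for scan in range(n_scans):
        i = int(round(scan * TR / dt))
        acc = 0.0
        for k, h in enumerate(hrf):
            if i - k < 0:
                break
            acc += neural[i - k] * h
        regressor.append(acc * dt)
    return regressor


# Hypothetical example: two Allocation events with inequity 0 and 60 MU.
reg = build_regressor(onsets=[10.0, 40.0], modulators=[0.0, 60.0], n_scans=40)
```

Mean-centring makes the regressor capture trial-to-trial variation in inequity rather than the average response to the event itself.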

For the small-volume correction (SVC) analysis, we used 10-mm spheres centered on coordinates from previous studies (De Quervain et al., 2004; Greene et al., 2004; Mitchell, 2008; Sanfey et al., 2003). More specifically, we used coordinates of right insula (MNI coordinate, x = 35, y = 15, z = 3), left insula (MNI coordinate, x = −33, y = 14, z = −1) and ACC (MNI coordinate, x = 4, y = 20, z = 36) from Sanfey et al. (2003), where activity in these regions was positively correlated with inequity between the proposer and the recipient in the ultimatum game. We adopted coordinates of left rDLPFC (MNI coordinate, x = −22, y = 48, z = 8) and right rDLPFC (MNI coordinate, x = 28, y = 49, z = 6) from an earlier study of moral judgment (Greene et al., 2004), where rDLPFC was more activated for utilitarian than for non-utilitarian moral judgments. We adopted the vmPFC coordinates for our SVC analysis from De Quervain et al. (2004)’s study of reciprocal altruistic punishment (MNI coordinate, x = 2, y = 54, z = −4). Finally, the rTPJ coordinate is based on a review of theory of mind by Mitchell (2008) (MNI coordinate, x = 54, y = −51, z = 27). All these SVC results passed a corrected significance threshold of p < 0.05.

2.6. Behavioral data analysis

Linear regression is used to test the effect of inequity on the level of punishment, and standard errors are adjusted for clusters at the individual level. Structural estimation with a logit specification is used to estimate the parameters of the model of third party punishment explained in Section 3.3. For trial t of subject i, for punishment level p_it given the set of feasible punishment levels ϑ, we specify the logit choice probability

The log-likelihood is specified as follows,

We use maximum likelihood to estimate γ̄ ∈ {γI, γNI}, and test whether the two parameters are significantly greater than zero and whether they are significantly different for the two conditions.
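The estimation described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' estimation code: the utility is taken to be (x3 − p) − γ·|x1 − x2 − 5p|, following the verbal description of the model's terms; the feasible set of punishment levels (integers 0 to 20), the logit precision fixed at 1, the grid search, and all function names are hypothetical.

```python
import math


def utility(x1, x2, x3, p, gamma):
    """Assumed third-party utility: own payoff minus weighted P1/P2 inequity."""
    return (x3 - p) - gamma * abs(x1 - x2 - 5 * p)


def choice_probabilities(x1, x2, x3, gamma, levels, precision=1.0):
    """Logit (softmax) probabilities over the feasible punishment levels."""
    values = [precision * utility(x1, x2, x3, p, gamma) for p in levels]
    vmax = max(values)  # subtract the maximum for numerical stability
    weights = [math.exp(v - vmax) for v in values]
    total = sum(weights)
    return [w / total for w in weights]


def log_likelihood(trials, gamma, levels, precision=1.0):
    """Sum of log choice probabilities; each trial is (x1, x2, x3, chosen_p)."""
    ll = 0.0
    for x1, x2, x3, chosen in trials:
        probs = choice_probabilities(x1, x2, x3, gamma, levels, precision)
        ll += math.log(probs[levels.index(chosen)])
    return ll


def fit_gamma(trials, levels, grid=None):
    """Maximum-likelihood estimate of gamma by grid search (assumed range 0 to 1)."""
    if grid is None:
        grid = [g / 100 for g in range(0, 101)]
    return max(grid, key=lambda g: log_likelihood(trials, g, levels))


# Hypothetical feasible set and data for one condition.
levels = list(range(21))
never_punish = [(90, 10, 160, 0), (80, 20, 160, 0)]
always_equalize = [(90, 10, 160, 16), (80, 20, 160, 12)]
gamma_low = fit_gamma(never_punish, levels)
gamma_high = fit_gamma(always_equalize, levels)
```

Fitting the Intention and No-Intention trials separately would yield the two parameters γI and γNI; a gradient-based optimizer could replace the grid search without changing the likelihood.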

3. Results

3.1. Third-party sanction behavior

First, we investigated how third-party sanction behavior varied as a function of both the distributional norm violation imposed upon the recipient, captured by inequity between the dictator and the recipient, and intentionality, captured by the intentionality of dictator’s action. Multiple regression analysis showed that both factors had highly significant effects on sanctioning decisions (Fig. 1B). Specifically, the amount allocated by the dictator was significantly correlated with the level of sanction in both the No-Intention and the Intention conditions (βI = 0.52, p < 0.001; βNI = 0.34, p < 0.001, Fig. 1B). Importantly, sanctioning behavior was sensitive to the intentionality of the dictator, with significantly higher sanctions observed in the Intention condition than the No-Intention condition (paired t-test, p < 0.001).

3.2. Egocentric models of inequity aversion cannot explain third-party punishment behavior

In the standard egocentric models of inequity-averse behavior, decision-makers are assumed to be averse only to payoff inequity between self and others. In its linear version (Fehr and Schmidt, 1999), consider three players indexed by i ∈ {1, 2, 3} for P1 the dictator, P2 the recipient and P3 the third party, and let x = {x1, x2, x3} denote the vector of monetary payoffs. The utility of the third party under egocentric inequity takes the form:

$$u_3(x) = x_3 - \alpha \sum_{j=1,2} \max\{x_j - x_3, 0\} - \beta \sum_{j=1,2} \max\{x_3 - x_j, 0\},$$

where x3 captures the third party’s material payoff, α · Σj = 1,2 max {xj − x3, 0} captures aversion to inequity where others have higher monetary payoffs than self, and β · Σj = 1,2 max {x3 − xj, 0} captures aversion to inequity where others have lower payoffs than self. The decision-maker is averse to both types of inequity when both α and β are positive.

In our game, the third party always has a higher monetary payoff than P1 and P2, so the egocentric model predicts no punishment. Specifically, punishment increases the advantageous inequity between the third party and P1, (x3 − p) − (x1 − 5p), and decreases the advantageous inequity between the third party and P2, (x3 − p) − x2. The aggregate advantageous inequity is therefore 2x3 − (x1 + x2) + 3p, which is smallest when p = 0. In addition, punishment reduces her own monetary payoff, (x3 − p). Putting these together, punishment increases the overall egocentric inequity and decreases monetary payoffs, and thus the third party would choose the minimal level of sanction, 0. Therefore, the egocentric inequity aversion utility cannot account for third party punishment under fairly standard assumptions of inequity aversion (Fig. 1C).
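The argument above can be checked numerically with a short sketch. The values of α and β, the example allocation, and the range of sanction levels are hypothetical; the utility follows the linear egocentric form described in the text, applied to post-sanction payoffs.

```python
def egocentric_utility(x1, x2, x3, p, alpha, beta, ratio=5):
    """Linear egocentric inequity-aversion utility of the third party,
    evaluated on post-sanction payoffs."""
    own = x3 - p
    others = [x1 - ratio * p, x2]
    disadvantageous = sum(max(xj - own, 0) for xj in others)
    advantageous = sum(max(own - xj, 0) for xj in others)
    return own - alpha * disadvantageous - beta * advantageous


# Hypothetical parameters and allocation (80:20 split, 160 MU endowment).
x1, x2, x3 = 80, 20, 160
alpha, beta = 0.5, 0.25
levels = range(0, x1 // 5 + 1)  # assumed: sanctions cannot push P1 below zero

utilities = [egocentric_utility(x1, x2, x3, p, alpha, beta) for p in levels]
best_p = max(levels, key=lambda p: egocentric_utility(x1, x2, x3, p, alpha, beta))
print(best_p)
```

Because the third party is ahead of both players at every sanction level, each MU spent lowers utility by 1 + 3β, so the utility sequence is strictly decreasing in p for any non-negative β.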

3.3. Computational modeling of third party punishment behavior

Going beyond an egocentric perspective, we extend the inequity utility model (Fehr and Schmidt, 1999) to incorporate the perspective of a third party. Specifically, we assume the third party dislikes the distributional inequity between P1 and P2. As punishment is costly, she needs to trade off her own payoff against the level of distributional inequity between P1 and P2 without being directly involved in the inequity comparison. This can be contrasted with the aforementioned model of reciprocal punishment where the enforcer is at the center of the inequity calculations. We specify the choice model as follows:

$$u(x_1, x_2, x_3, p) = (x_3 - p) - \bar{\gamma}\,|x_1 - x_2 - 5p|,$$

where (x3 − p) represents the post-sanction earnings of the third party, and |x1 − x2 − 5 · p| the post-sanction distributional inequity between dictator and recipient. The aversion to inequity is captured by the parameter γ̄ = γk, with k = I corresponding to the Intention condition and k = NI to the No-Intention condition. The model captures three essential computations of third party punishment. First, the third party computes the inequity between P1 and P2 as |(x1 − 5p) − x2|. Second, the third party assigns different weights to inequity based on intentionality, which is captured by the parameter γ̄. Third, the third party computes the overall utility of punishment, and chooses a level of punishment p to maximize utility u(x1, x2, x3, p).
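To make the trade-off between own payoff and distributional inequity concrete, the following worked derivation assumes the utility takes the linear form implied by the term definitions above, u = (x3 − p) − γ|x1 − x2 − 5p|, with d = x1 − x2 ≥ 0 introduced here as shorthand. It is a sketch of the deterministic benchmark only, before any choice noise is added.

```latex
\begin{aligned}
u(p) &= (x_3 - p) - \gamma\,\lvert d - 5p \rvert, \qquad d = x_1 - x_2 \ge 0,\\[4pt]
\frac{\partial u}{\partial p} &= 5\gamma - 1 \quad \text{for } 0 \le p < d/5,\\[4pt]
\frac{\partial u}{\partial p} &= -(1 + 5\gamma) < 0 \quad \text{for } p > d/5,\\[4pt]
p^{*} &=
\begin{cases}
d/5, & \gamma > 1/5,\\
0,   & \gamma < 1/5.
\end{cases}
\end{aligned}
```

Under this form, each MU spent removes 5 MUs of inequity at a cost of 1 MU, so the deterministic optimum never exceeds the equalizing sanction d/5. Under a logit choice rule, sanction levels other than p* still receive positive probability, so observed sanctions need not coincide with this benchmark.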

This model extends previous inequity aversion models to the setting of third party punishment. Firstly, the model would predict a higher level of punishment if there is more inequity between P1 and P2. Secondly, we allow for the possibility that the degree of inequity aversion can depend on the nature of intentionality for the inequity between dictator and recipient. Intuitively, the third party dislikes inequity more under Intention condition than No-Intention condition, which we test in the subsequent estimation. Using this model, we were able to capture sensitivity to both inequity and intentionality as found in the regression analysis above. Specifically, we found that the third-party was significantly inequity averse in both the Intention (γI = 0.18, p < 0.001) and the No-Intention conditions (γNI = 0.13, p < 0.001). More importantly, and consistent with the regression results above, we found that the third-party was significantly more inequity averse in the Intention condition than the No-Intention condition (paired t-test, p < 0.005, two-tailed). That is, P3 exerted greater sanctions on P1 when the distribution was more inequitable and when the offers were made by intentional acts of P1 (Fig. 1C).

In addition to the above “scaling” model, we considered an additional model where intentionality exerts additional weight in an additive manner, such that,

We next used a bootstrap procedure to compare these two models by comparing their AIC values. Our prediction is that the scaling model would outperform the additive model. In particular, the right panel of Fig. 1B shows that the difference in punishment between the Intention and No-Intention conditions is modulated by the size of the inequity. This is inconsistent with a shift in the constant but consistent with a difference in scaling. Indeed, we found that the scaling model performed significantly better than the additive model (p < 0.001). Specifically, the AIC of the scaling model exceeded that of the additive model (indicating a worse fit) only once over 10,000 iterations.
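A bootstrap comparison of AIC values can be sketched as below. This is a simplified illustration rather than the procedure used in the study: it resamples per-observation log-likelihoods from two already-fitted models instead of refitting on each resample, and the resampling unit, the function names and the example numbers are all assumptions. Lower AIC indicates the preferred model.

```python
import random


def aic(log_lik, n_params):
    """Akaike information criterion: 2k - 2 ln L (lower is better)."""
    return 2 * n_params - 2 * log_lik


def count_worse(ll_a, ll_b, k_a, k_b, n_iter=10000, seed=0):
    """Number of bootstrap resamples in which model A has a higher (worse)
    AIC than model B. ll_a and ll_b are per-observation log-likelihoods."""
    rng = random.Random(seed)
    n = len(ll_a)
    worse = 0
    for _ in range(n_iter):
        idx = [rng.randrange(n) for _ in range(n)]  # resample with replacement
        aic_a = aic(sum(ll_a[i] for i in idx), k_a)
        aic_b = aic(sum(ll_b[i] for i in idx), k_b)
        if aic_a > aic_b:
            worse += 1
    return worse


# Hypothetical log-likelihoods for 20 observations under two models.
ll_model_a = [-1.0] * 20
ll_model_b = [-2.0] * 20
n_worse = count_worse(ll_model_a, ll_model_b, k_a=2, k_b=2, n_iter=200)
```

The proportion `n_worse / n_iter` serves as a bootstrap p-value for the claim that model A fits better than model B.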

3.4. Brain regions modulated by inequity from third-party perspective

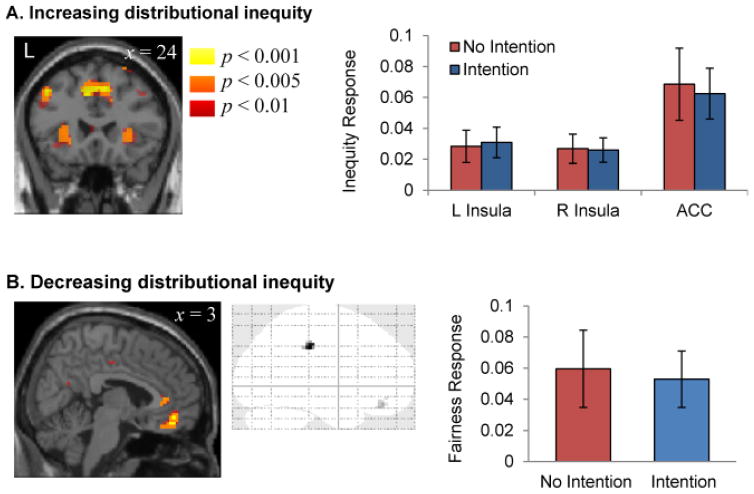

Taken together, the model-based characterization of behavior provides a means to capture neural signatures of (i) inequity from a third party perspective, measured by |(x1 − 5p) − x2|, (ii) the extent to which inequity was incurred intentionally, captured by the parameter γ̄, and (iii) the subjective value of sanctions motivated by inequity and intentionality, captured by u(x1, x2, x3, p). In our neuroimaging data analysis, we separately examine neural correlates of these three aspects of computation. First, we focused on the distributional inequity revealed at the Allocation event, measured by the difference in payoffs between the dictator and the recipient. If brain regions implicated in inequity processing during reciprocal punishment, in particular the insula cortex and the ACC (Sanfey et al., 2003; Xiang et al., 2013), are sensitive to norms that apply to the community at large, we should observe a significant correlation between activity in these regions and inequity between the dictator and the recipient. Alternatively, such responses should be absent if the computational role of these regions is restricted to egocentric notions of inequity, as egocentric inequity is constant in our setup. Consistent with a general norm-violation detection hypothesis, we found that activity in the ACC and bilateral insula cortex was significantly positively correlated with distributional inequity between the dictator and the recipient (Fig. 2A; Table 1). In addition to the ACC and insula, we find that activation in the precuneus is significantly positively correlated with distributional inequity. This is consistent with the role of the precuneus in first-person perspective taking (Cavanna and Trimble, 2006).

Fig. 2.

(A) Bilateral insula and ACC where activities were significantly positively correlated with level of inequity pooling intention and no-intention condition (Left insula, MNI coordinate, x = −30, y = 21, z = 6; Right insula, MNI coordinate, x = 27, y = 18, z = −3; and ACC MNI coordinate, x = −9, y = 21, z = 42: p < 0.05, small-volume-corrected, cluster size k ≥ 10). Activities in these regions were not significantly modulated by intentionality (p > 0.5). (B) Glass brain and sagittal section of vmPFC where activity is significantly negatively correlated with level of inequity pooling intention and no-intention condition (vmPFC, MNI coordinate, x = 3, y = 42, z = −18: p < 0.05, small-volume-corrected, cluster size k ≥ 10). Activity in this region was not significantly modulated by intentionality (p > 0.5).

Table 1.

Neural Response for Increasing Distributional Inequity during Allocation Event.

| Regions | Cluster Size (k) | T-val (voxel level) | p unc. (voxel level) | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|---|
| R Insula | 18 | 4.5 | < 0.001 | 27 | 18 | −3 |
| L Insula | 5 | 4.39 | < 0.001 | −30 | 21 | 6 |
| Anterior Cingulate Cortex | 253 | 5.04 | < 0.001 | −9 | 21 | 42 |
| | | 5.04 | < 0.001 | 33 | 0 | 45 |
| | | 5.01 | < 0.001 | 12 | 15 | 51 |
| L Superior Frontal Gyrus | 12 | 3.88 | 0.001 | −21 | 54 | 0 |
| R Inferior Frontal Gyrus | 78 | 3.83 | 0.001 | −27 | 51 | −6 |
| | | 4.84 | < 0.001 | 45 | 9 | 36 |
| L Superior Frontal Gyrus | 21 | 5.01 | < 0.001 | −21 | 15 | 69 |
| L Precuneus | 1523 | 7.16 | < 0.001 | −21 | −66 | 39 |
| | | 6.89 | < 0.001 | −33 | −54 | 48 |
| | | 6.83 | < 0.001 | −6 | −84 | 9 |
| L Middle Frontal Gyrus | 263 | 6.23 | < 0.001 | −42 | 9 | 54 |
| | | 5.61 | < 0.001 | −48 | 12 | 33 |
| | | 4.69 | < 0.001 | −33 | 6 | 48 |
| R Inferior Parietal Lobule | 647 | 6.21 | < 0.001 | 30 | −57 | 48 |
| | | 5.41 | < 0.001 | 30 | −69 | 45 |
| | | 5.41 | < 0.001 | 21 | −72 | 57 |
| R Thalamus | 64 | 5.16 | < 0.001 | 24 | −18 | 3 |
| | | 4.95 | < 0.001 | 15 | −21 | 12 |
| | | 4.22 | < 0.001 | 21 | −9 | 9 |
| R Declive | 10 | 4.34 | < 0.001 | 39 | −72 | −27 |

In addition, we investigated neural responses to the inverse of inequity in our game, fairness, during the Allocation event. We found that activity in the ventromedial prefrontal cortex (vmPFC) was associated with distributional equity (Fig. 2B; Table 2). This is in line with a number of studies demonstrating a role for vmPFC in reward computations, including social ones (Hare et al., 2008; Li et al., 2009; O’Doherty et al., 2004; Padoa-Schioppa, 2007). Importantly, unlike in reciprocal punishment, the third party stands neither to gain monetarily from equitable allocations nor to lose from inequitable ones. Thus neural responses to equity (inequity) are more clearly reflective of impartial equity concerns per se, as opposed to either material gain (loss) or egocentric equity (inequity).

Table 2.

Neural Response for Decreasing Distributional Inequity during Allocation Event.

| Regions | Cluster Size (k) | T-val (voxel level) | p unc. (voxel level) | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|---|
| Ventromedial PFC | 18 | 3.96 | 0.001 | 3 | 42 | −18 |
| Cingulate Gyrus | 18 | 4.86 | < 0.001 | −9 | −27 | 39 |

3.5. Brain regions selectively modulated by intentionality of norm violation

Next, we separated responses in the above regions of ACC and insula according to the Intention and No-Intention conditions, in order to investigate whether these neural inequity signals were also modulated by the intentionality of the norm violation during the Allocation event, the other key consideration underlying sanctioning decisions. If so, it would suggest that norm-related computations in these regions are also sensitive to the intentions of the norm violator. However, we found that inequity responses in both the ACC and insula were not significantly modulated by intentionality (p > 0.5 for each).

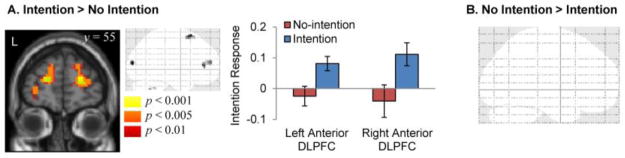

In contrast, we found that bilateral anterior dorsolateral prefrontal cortex (DLPFC) responded to the intentionality of the norm violation. Specifically, we contrasted neural responses between the Intention and No-Intention conditions during the Allocation event to localize regions with greater response in the Intention condition as compared to the No-Intention condition (Fig. 3A; Table 3). Moreover, this region appeared to be selective for intentionality. That is, using a whole-brain search, we found that activity in DLPFC did not respond to distributional inequity (p > 0.5). Computationally, this may reflect neural processing related to assessment of the intentionality of the norm violator, and is consistent with previous neuroimaging results implicating this region in forming and updating beliefs about higher-order state associations (Burke et al., 2010; Gläscher et al., 2010). In addition, we did not observe any significant activations in the reverse No-Intention > Intention contrast, even at a liberal threshold of p < 0.01, uncorrected (Fig. 3B).

Fig. 3.

(A) Glass brain and bilateral anterior DLPFC where activity is significantly greater in the Intention than No-Intention condition (Left DLPFC, MNI coordinate, x = −27, y = 41, z = 10; Right DLPFC, MNI coordinate, x = 21, y = 56, z = 13: p < 0.05, small-volume-corrected, k ≥ 10 voxels). (B) No brain region exhibited greater activation under No-Intention condition versus Intention condition (p > 0.01, uncorrected).

Table 3.

Neural Response for Intention > No-Intention during Allocation Event.

| Regions | Cluster Size (k) | T-val (voxel level) | p unc. (voxel level) | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|---|
| L Anterior DLPFC | 39 | 4.71 | < 0.001 | −27 | 41 | 10 |
| | | 4.31 | < 0.001 | −21 | 53 | 13 |
| R Anterior DLPFC | 21 | 4.31 | < 0.001 | 21 | 56 | 13 |
| R Superior Frontal Gyrus | 51 | 4.97 | < 0.001 | 9 | 20 | 67 |
| L Occipital Lobe | 14 | 4.97 | < 0.001 | −9 | −88 | 13 |
| L Cerebellum | 12 | 4.13 | < 0.001 | −27 | −55 | −38 |

3.6. Brain regions modulated by subjective value of sanctions

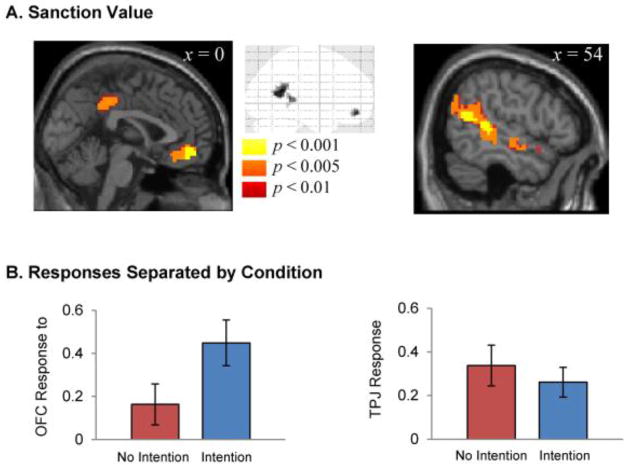

Finally, we investigated how the brain integrates inequity and intentionality signals in arriving at sanction decisions. The third party chooses the level of punishment p* that maximizes the utility u(x1, x2, x3, p). For the chosen p*, we can compute the subjective value of punishment, measured by the utility u(x1, x2, x3, p*), for each trial, and examine the brain regions whose activity correlates with this subjective value. During the Sanction event, none of the regions that responded to inequity, in particular ACC and insula cortex, responded to the subjective value of sanctioning decisions. Likewise, we did not observe a significant correlation with DLPFC activity. Instead, we found that responses in vmPFC and the right temporoparietal junction (rTPJ) were significantly correlated with the subjective value of sanctioning decisions (p < 0.05, small-volume-corrected, k ≥ 10, Fig. 4A; Table 4). Importantly, in a follow-up region-of-interest analysis, we found that the vmPFC response was significantly greater in the Intention condition than in the No-Intention condition (p < 0.025, Fig. 4B). In contrast, the rTPJ response did not differ significantly between the two conditions (p > 0.5) (Fig. 4B).
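The idea of a utility-maximizing sanction and its subjective value can be illustrated with a minimal sketch. The utility function below is a hypothetical stand-in, not the model fitted in this study: it assumes that each punishment point costs the third party one unit, removes `impact` units from the violator's payoff, and that the third party dislikes the remaining payoff gap between P1 and P2 with weight `gamma`. All function names and parameter values are illustrative assumptions.

```python
def utility(x1, x2, x3, p, gamma=0.5, impact=3):
    """Hypothetical third-party utility (an illustrative assumption).

    x1, x2, x3: payoffs of the violator (P1), the recipient (P2),
                and the third party (P3).
    p:          punishment points spent by P3; each point removes
                `impact` units from P1's payoff.
    gamma:      weight on the remaining payoff gap between P1 and P2.
    """
    own_payoff = x3 - p
    remaining_gap = abs((x1 - impact * p) - x2)
    return own_payoff - gamma * remaining_gap


def best_sanction(x1, x2, x3, max_p, **params):
    """Grid-search integer punishment levels 0..max_p.

    Returns (p_star, subjective_value), where subjective_value is the
    utility evaluated at the chosen punishment level p_star.
    """
    p_star = max(range(max_p + 1),
                 key=lambda p: utility(x1, x2, x3, p, **params))
    return p_star, utility(x1, x2, x3, p_star, **params)


if __name__ == "__main__":
    # Strong versus weak inequity weight for the same allocation.
    print(best_sanction(80, 20, 50, max_p=30, gamma=0.5))
    print(best_sanction(80, 20, 50, max_p=30, gamma=0.2))
```

In a model-based analysis of this kind, the second element of the returned pair would play the role of the trial-wise parametric regressor. Under these assumed parameters, a weak inequity weight makes any punishment cost more than it is worth, so the sketch selects no punishment.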

Fig. 4.

(A) Glass brain and sagittal section of vmPFC and rTPJ, where activity is significantly positively correlated with sanction value, pooling across the Intention and No-Intention conditions (vmPFC, MNI coordinate, x = 0, y = 47, z = −14; rTPJ, MNI coordinate, x = 54, y = −40, z = 7: p < 0.05, small-volume-corrected, k ≥ 10). (B) The vmPFC response to sanction value is significantly greater in the Intention condition than in the No-Intention condition (paired t-test, p < 0.025, two-tailed). The rTPJ response, however, does not differ significantly between the Intention and No-Intention conditions (paired t-test, p > 0.5, two-tailed).

Table 4.

Neural Response for decision utility during the Sanction event.

T-val and p_unc are voxel-level statistics; x, y, and z are MNI coordinates. Rows without a region name are additional peaks within the cluster listed above them.

| Regions | Cluster Size (k) | T-val | p_unc | x | y | z |
|---|---|---|---|---|---|---|
| Ventromedial PFC | 42 | 5.06 | 0 | 0 | 47 | −14 |
| R Temporoparietal Junction | 41 | 4.88 | 0 | 54 | −40 | 7 |
| R Superior Temporal Gyrus | 142 | 5.33 | 0 | 60 | −58 | 13 |
| | | 5.48 | 0 | 63 | −49 | 19 |

Furthermore, we found that responses to sanction value in vmPFC and rTPJ appeared to be specific to the Sanction event, as we did not observe significant vmPFC or rTPJ activity for sanction value during the Allocation event (p > 0.1, uncorrected). Moreover, we did not find any brain region that responded to sanction value during the Allocation event, even at a liberal threshold (p < 0.01, uncorrected). Together, these results suggest that computations relating to distributional inequity and intentionality are integrated only at the time of the sanction decision.
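The follow-up region-of-interest comparison between the Intention and No-Intention conditions is a paired t-test on per-subject responses. A minimal sketch of that statistic follows, using only the Python standard library. The function name `paired_t` and the example values are assumptions made for illustration; they are not the study's data or analysis code.

```python
import math
import statistics


def paired_t(cond_a, cond_b):
    """Return (t statistic, degrees of freedom) for a paired t-test.

    cond_a, cond_b: per-subject values in two conditions, in the same
    subject order (e.g. hypothetical ROI estimates per condition).
    """
    if len(cond_a) != len(cond_b):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    t_stat = mean_diff / (sd_diff / math.sqrt(n))
    return t_stat, n - 1


if __name__ == "__main__":
    # Made-up per-subject values, for illustration only.
    intention = [2.0, 4.0, 6.0]
    no_intention = [1.0, 2.0, 3.0]
    print(paired_t(intention, no_intention))
```

The two-tailed p-value would then be read from a t distribution with the returned degrees of freedom, which requires a statistics package beyond the standard library.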

4. Discussion

Norm enforcement by impartial third parties is thought to be crucial to the development of large-scale human societies, as norm compliance relying solely on a two-party retaliatory system typically cannot be sustained beyond small-scale groups (Fehr and Fischbacher, 2004; Marlowe et al., 2008; Ostrom, 2000). In particular, enforcement through reciprocal punishment alone is known to fail when the cost of sanctioning borne by the victim is sufficiently high, or when the norm violation produces diffuse costs among many individuals. For example, in the case of cooperation norms, a shirking individual may impose little direct cost on any particular member, yet cause collectively substantial damage. In such cases, norm enforcement by third parties can be crucial, because it spreads the cost of punishment beyond those directly affected by the norm violation (Bendor and Swistak, 2001; Fehr and Fischbacher, 2004). Consistent with this idea, behavioral experiments across small-scale societies have found the level of punishment by third parties to be highly correlated with cooperation rates across societies (Henrich et al., 2006).

At the neural level, recent applications of functional neuroimaging methods, combined with computational models of inequity aversion, have transformed our understanding of the neural substrates of norm-guided behavior. Despite this progress, however, important questions remain about whether and to what extent these neural mechanisms reflect a general notion of norms that includes more general community concerns, or a narrower notion based on purely egocentric concerns. Here we address this question by characterizing the computational mechanisms underlying norm-guided behavior in third parties (Behrens et al., 2009; Hsu et al., 2008; Zhu et al., 2012). Specifically, using a set of third-party punishment games, we studied two factors driving third-party norm enforcement: (1) assessment of intentionality for and severity of norm violation, and (2) determination of appropriate level of sanction (Buckholtz et al., 2008; Fehr and Fischbacher, 2004; Spitzer et al., 2007).

First, despite clear psychological differences between egocentric and impartial notions of inequity, our results revealed a striking overlap between computational components involved in second and third party sanctions, and suggested that these regions exhibit the sophisticated capacities necessary for sensing norm violation in general. Specifically, we found opposing responses to inequity in ACC and insula on the one hand, and vmPFC on the other (Fig. 2). This is consistent with hypothesized functions of the former in processing aversive stimuli, including empathic pain and inequity (Chang and Sanfey, 2013; Civai et al., 2012; Corradi-Dell’Acqua et al., 2013; King-Casas et al., 2008; Sanfey et al., 2003; Singer et al., 2004), and the latter in reward processing (Hare et al., 2008; Li et al., 2009; O’Doherty et al., 2004; Padoa-Schioppa, 2007; Tabibnia et al., 2008; Tricomi et al., 2010; Zaki et al., 2013; Zaki and Mitchell, 2011). Critically, neural responses in these regions did not reflect egocentric notions of inequity or reward, but rather general notions of violation of distributional norm that are computed from the perspective of others. An important open question is the extent to which the current computational account could be generalized to cases where multiple norms coexist, such as when egocentric and impartial notions of inequity conflict. Indeed, in the present study, egocentric inequity was experimentally controlled to minimize conflicts between egocentric and impartial concerns. Future experiments can address this question by systematically manipulating relative payoff position and payoff distance among the players in a third party setting.

In contrast, we found that DLPFC, but not ACC/insula, differentiated intentionality in the Allocation event. This functional separation is consistent with previous evidence showing that assignment of responsibility is cognitively distinct from assessment of distributional norm violation (Buckholtz et al., 2008). One possible computational role for the DLPFC in such decisions is that it is involved in overriding automatic impulses to punish in the Intention condition (Haushofer and Fehr, 2008; Knoch et al., 2006). That is, because demands of impartiality may require overriding retributive motives in order to arrive at a reasonable judgment, the anterior DLPFC in our task may be involved in down-regulating psychological reward derived from punishment in the Intention condition. An alternative account, in contrast, posits that the DLPFC is involved in a constructive process that generates assessment of intentionality, for example, through abstract reasoning or belief maintenance (Fehr and Schmidt, 1999; Greene et al., 2004; MacDonald et al., 2000). This is consistent with findings in studies on social learning and model-based reinforcement learning, where DLPFC is implicated in computations related to forming and updating beliefs about higher-order state associations (Burke et al., 2010; Gläscher et al., 2010). Interestingly, both accounts are consistent with the finding that repetitive transcranial magnetic stimulation (rTMS) over the DLPFC reduces punishment of intentional norm violations. However, because only the latter posits a computational role for DLPFC in processing intent per se, it is possible to test these two accounts in future experiments where the intentionality of the dictator must be inferred or learned, rather than explicitly given as in the current case (Behrens et al., 2008; Hampton et al., 2008).

Notably, none of these regions, including the ACC/insula and DLPFC identified in the Allocation event, appeared to respond to both distributional inequity and intentionality during the Sanction event. Instead, we found evidence of an integration and selection role for the vmPFC. During the Sanction event, the sanction value signal, which integrated distributional inequity, intentionality, and the selected level of punishment, was correlated with activity in the vmPFC. This region overlapped with the vmPFC activation identified in the Allocation event, but this activation cannot be accounted for by hemodynamic lag, as vmPFC activity during the Allocation event neither correlated significantly with sanction value nor responded to intentionality.

These results therefore support the view of norm enforcement as part of a hierarchical process whereby computations involving distributional inequity and intentionality are integrated in the vmPFC to arrive at sanctioning decisions, and accord well with the hypothesized role of the vmPFC in neural representation, integration, and interaction of both monetary and social rewards (Behrens et al., 2009). The vmPFC is anatomically and functionally well suited to play this role, as it projects to several brain areas that are heavily involved in reward valuation, preference generation, and decision-making (Behrens et al., 2009; Hare et al., 2010; Hare et al., 2009; Hare et al., 2008; Rangel et al., 2008). Our findings regarding the vmPFC also echo those of previous studies in which investigators, using different paradigms, reported data suggesting that activations in a neural network including the vmPFC positively reinforce social rewards (Hare et al., 2008; Li et al., 2009; O’Doherty et al., 2004; Padoa-Schioppa, 2007; Tabibnia et al., 2008; Tricomi et al., 2010; Zaki et al., 2013; Zaki and Mitchell, 2011), and more generally social cognition (Gusnard et al., 2001; Miller and Cohen, 2001; Saxe, 2006).

Interestingly, we found a similar sanction value response in the rTPJ, although, unlike in the vmPFC, this response did not differ significantly according to intentionality. Although widely implicated in studies involving mentalizing and perception of agency (Frith and Frith, 2006), rTPJ activation is not typically observed in studies involving social preferences. In studies of reward-guided behavior, activation of rTPJ is normally associated with computations of learning signals related to belief updating and higher-order state associations (Behrens et al., 2008; Hampton et al., 2008), and is thought to reflect two separate operations: interacting with an opponent whose internal states can be modelled (i.e., another human) and whose behavior is also relevant for guiding one’s future actions (Gläscher et al., 2010). Therefore, one possible interpretation is that rTPJ is an additional computational system involved in third party punishment, as computing value from a third-party perspective requires shifting attention away from the self to focus on the needs of others (Frith and Frith, 2006; Lamm et al., 2007; Mitchell, 2008). That is, decisions to punish require the third party to focus on the desires and well-being of others rather than on his or her own economic payoffs (Haushofer and Fehr, 2008).

Notably, we found that activity in the precuneus was positively correlated with distributional inequity in the Allocation event, but not in the Sanction event. The precuneus is widely implicated in studies involving visuo-spatial imagery, episodic memory retrieval, and self-processing operations (Cavanna and Trimble, 2006). In particular, in studies of self-processing tasks, the precuneus is more activated when subjects read self-descriptive traits than when they read non-self-descriptive traits (Kircher et al., 2000), as well as when subjects read stories written from a first-person rather than a third-person perspective (Vogeley et al., 2001). Therefore, one possible interpretation of our observation is that the precuneus is involved in the perception of inequity at the Allocation event from the perspective of one’s self, while rTPJ is engaged in order to take the perspective of others to reach a punishment decision at the Sanction event. In a meta-analysis of decision making in the ultimatum game (Feng et al., 2015), the precuneus was more activated for unfair offers than for fair offers. This suggests that the precuneus is a shared neural mechanism for self-other referencing in both reciprocal and third party punishment.

Less clear, however, is the specific nature of the mechanisms by which information regarding intentionality, norm violation, and sanctioning decisions is integrated. The fact that our scaling model outperformed the additive model suggests that DLPFC modulates, or “gates”, an inequity aversion signal used to arrive at a punishment decision, rather than exerting a direct additive weight on punishment. However, we did not find strong evidence at the neural level that speaks to the nature of DLPFC’s involvement in punishment. Specifically, we tested the extent to which the individual intention parameter, γI − γNI, was correlated with differential neural activity between the Intention and No-Intention conditions in vmPFC, insula, DLPFC, and rTPJ. None of these tests was significant, even at liberal thresholds (p > 0.1 for all tests).
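The distinction between the two behavioral accounts can be made concrete with a small sketch. It assumes, for illustration only, that the drive to punish is either an inequity term multiplied by a condition-specific weight (scaling, with weights γI and γNI) or an inequity term plus a fixed bonus for intentional violations (additive). The functional forms, function names, and parameter values are hypothetical and are not the fitted models from this study.

```python
def scaling_drive(inequity, intentional, gamma_i=0.6, gamma_ni=0.3):
    """Scaling ("gating") form: intention changes the weight on inequity."""
    gamma = gamma_i if intentional else gamma_ni
    return gamma * inequity


def additive_drive(inequity, intentional, gamma=0.3, delta=10.0):
    """Additive form: intention adds a fixed amount, independent of inequity."""
    return gamma * inequity + (delta if intentional else 0.0)


def intention_effect(model, inequity):
    """Difference in punishment drive between Intention and No-Intention."""
    return model(inequity, True) - model(inequity, False)


if __name__ == "__main__":
    for inequity in (10, 60):
        print(inequity,
              intention_effect(scaling_drive, inequity),
              intention_effect(additive_drive, inequity))
```

Under the scaling form the effect of intention grows with the size of the inequity, whereas under the additive form it stays constant. This is the kind of behavioral signature that allows the two forms to be compared against choice data.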

In light of these null findings, therefore, it may be desirable in future studies to causally manipulate DLPFC functioning instead of relying on the inherently correlational nature of fMRI measures. Indeed, there is some recent evidence from rTMS that is consistent with the gating hypothesis. In particular, application of rTMS to the DLPFC during legal judgment was found to reduce punishment by simultaneously diminishing the influence of information about culpability and enhancing the influence of information about harm severity (Buckholtz et al., 2015). Although seemingly paradoxical, this is consistent with our behavioral model, as well as with the hypothesis that DLPFC encodes information about culpability and gates harm severity, such that disrupting these responses would lead to reduced punishment.

More generally, together with previous studies of reciprocal punishment and moral judgment, these results raise the intriguing possibility that involvement of theory of mind processes, subserved by frontoparietal circuits, may be a critical component accounting for the uniqueness of the human species in third party norm enforcement. That is, unlike reciprocal punishment, third-party norm enforcement requires individuals to represent norms from the perspective of others as opposed to one’s self. Although necessarily speculative, this is consistent with recent nonhuman primate evidence, which found that chimpanzees, the closest living phylogenetic relative to humans, do not punish those who steal from third parties, even as they readily punish those who steal from them directly (Riedl et al., 2012). This is particularly relevant given causal evidence suggesting a necessary role of rTPJ in supporting a uniquely human cognitive capacity to represent and reason about mental states of others (Carter et al., 2012; Saxe, 2006; Young et al., 2010), which in turn may help to explain the unique nature of human engagement in third-party norm enforcement.

4.1. Limitations and conclusions

Finally, there are two important open questions concerning the external validity of our results specifically, and our conceptualization of norm violation more generally. The first concerns the widespread forms of sanctions involving non-pecuniary means. For example, social exclusion, such as ostracism, and corporal punishment are some of the most common forms of social sanctions in response to social norm violations (Guala, 2012). It would be of interest to examine whether and how individual differences in the third party punishment game predict actual behavior in non-pecuniary forms of punishment. Future studies combining altruistic punishment with manipulations of social exclusion (Eisenberger et al., 2003) and physical punishment (McDermott et al., 2009) would be necessary.

The second concerns the psychological mechanism by which inequity aversion influences behavior. Whereas models of inequity aversion assume that decision-makers receive direct disutility from inequity, an alternative, non-mutually exclusive, approach is to allow players to have preferences regarding the beliefs of others, such that inequity affects behavior by shaping decision-makers’ expectations (Battigalli and Dufwenberg, 2007; Dufwenberg and Kirchsteiger, 2004; Geanakoplos et al., 1989; Rabin, 1993). For example, Battigalli and Dufwenberg (2007) proposed a model of “guilt aversion” in which a player’s social preferences depend on her beliefs about another player. Specifically, she feels “guilty” when she takes an action that deviates from others’ expectations of her action, akin to “letting the other player down”. In the third party punishment setting, it is possible that P3 punishes because he/she believes that is what P2 expects. Intuitively, this corresponds to a case where not punishing leads P3 to feel guilty about letting P2 down. Differentiating between this and our account, however, requires additional measures of beliefs, either using direct elicitation or manipulation of player beliefs. Future studies, such as those combining our third-party punishment game with belief elicitation mechanisms used in guilt-aversion studies (Chang and Sanfey, 2013; Chang et al., 2011), would be necessary to address this important question.

Highlights.

We combined model-based fMRI and third party punishment games.

Brain regions implicated in reciprocal punishment extend to third party punishment.

ACC and insula cortex were positively associated with distributional inequity.

Anterior DLPFC was associated with assessment of intentionality to the violator.

The subjective value of sanctions modulated activity in both vmPFC and rTPJ.

Acknowledgments

We thank X.T. Zhu for assistance in data collection and the State Key Laboratory of Cognitive Neuroscience and Learning at Beijing Normal University for facilitating the fMRI experiment. This research was supported by the Hong Kong University of Science and Technology (S.Z. and S.H.C.), the Ministry of Education, Singapore (S.Z. and S.H.C.), the AXA Research Fund (S.H.C.), the National Institute of Mental Health (R01 MH098023, to M.H.), and the Hellman Family Faculty Fund (M.H.).


References

- Behrens TE, Hunt LT, Rushworth MF. The computation of social behavior. Science. 2009;324:1160–1164. doi: 10.1126/science.1169694.

- Behrens TE, Hunt LT, Woolrich MW, Rushworth MF. Associative learning of social value. Nature. 2008;456:245–249. doi: 10.1038/nature07538.

- Bendor J, Swistak P. The Evolution of Norms. American Journal of Sociology. 2001;106:1493–1545.

- Blount S. When Social Outcomes Aren’t Fair: The Effect of Causal Attributions on Preferences. Organizational Behavior and Human Decision Processes. 1995;63:131–144.

- Boyd R. Culture and the evolutionary process. University of Chicago Press; 1988.

- Buckholtz J, Asplund C, Dux P, Zald D, Gore JC, Jones O, Marois R. The Neural Correlates of Third-Party Punishment. Neuron. 2008;60:930–940. doi: 10.1016/j.neuron.2008.10.016.

- Burke CJ, Tobler PN, Baddeley M, Schultz W. Neural mechanisms of observational learning. Proceedings of the National Academy of Sciences. 2010;107:14431–14436. doi: 10.1073/pnas.1003111107.

- Camerer C. Behavioral game theory: experiments in strategic interaction. Princeton University Press; 2003.

- Carter RM, Bowling DL, Reeck C, Huettel SA. A distinct role of the temporal-parietal junction in predicting socially guided decisions. Science. 2012;337:109–111. doi: 10.1126/science.1219681.

- Chang LJ, Sanfey AG. Great expectations: neural computations underlying the use of social norms in decision-making. Social Cognitive and Affective Neuroscience. 2013;8:277–284. doi: 10.1093/scan/nsr094.

- Civai C, Crescentini C, Rustichini A, Rumiati RI. Equality versus self-interest in the brain: differential roles of anterior insula and medial prefrontal cortex. Neuroimage. 2012;62:102–112. doi: 10.1016/j.neuroimage.2012.04.037.

- Corradi-Dell’Acqua C, Civai C, Rumiati RI, Fink GR. Disentangling self-and fairness-related neural mechanisms involved in the ultimatum game: an fMRI study. Social Cognitive and Affective Neuroscience. 2013;8:424–431. doi: 10.1093/scan/nss014.

- De Quervain DJ-F, Fischbacher U, Treyer V, Schellhammer M, Schnyder U, Buck A, Fehr E. The neural basis of altruistic punishment. Science. 2004 doi: 10.1126/science.1100735.

- DeScioli P, Kurzban R. Mysteries of morality. Cognition. 2009;112:281–299. doi: 10.1016/j.cognition.2009.05.008.

- DeScioli P, Kurzban R. A solution to the mysteries of morality. Psychological Bulletin. 2013;139:477. doi: 10.1037/a0029065.

- Elster J. Social norms and economic theory. The Journal of Economic Perspectives. 1989;3:99–117.

- Falk A, Fehr E, Fischbacher U. On the nature of fair behavior. Economic Inquiry. 2003;41:20–26.

- Fehr E, Fischbacher U. The nature of human altruism. Nature. 2003;425:785–791. doi: 10.1038/nature02043.

- Fehr E, Fischbacher U. Third-party punishment and social norms. Evolution and Human Behavior. 2004;25:63–87.

- Fehr E, Schmidt KM. A theory of fairness, competition, and cooperation. The Quarterly Journal of Economics. 1999;114:817–868.

- Frith CD, Frith U. The neural basis of mentalizing. Neuron. 2006;50:531–534. doi: 10.1016/j.neuron.2006.05.001.

- Gläscher J, Daw N, Dayan P, O’Doherty JP. States versus Rewards: Dissociable Neural Prediction Error Signals Underlying Model-Based and Model-Free Reinforcement Learning. Neuron. 2010;66:585–595. doi: 10.1016/j.neuron.2010.04.016.

- Greene JD, Nystrom LE, Engell AD, Darley JM, Cohen JD. The neural bases of cognitive conflict and control in moral judgment. Neuron. 2004;44:389–400. doi: 10.1016/j.neuron.2004.09.027.

- Guala F. Reciprocity: Weak or strong? What punishment experiments do (and do not) demonstrate. Behavioral and Brain Sciences. 2012;35:1–15. doi: 10.1017/S0140525X11000069.

- Gusnard DA, Akbudak E, Shulman GL, Raichle ME. Medial prefrontal cortex and self-referential mental activity: relation to a default mode of brain function. Proceedings of the National Academy of Sciences. 2001;98:4259–4264. doi: 10.1073/pnas.071043098.

- Hampton AN, Bossaerts P, O’Doherty JP. Neural correlates of mentalizing-related computations during strategic interactions in humans. Proceedings of the National Academy of Sciences. 2008;105:6741–6746. doi: 10.1073/pnas.0711099105.

- Hare TA, Camerer CF, Knoepfle DT, Rangel A. Value computations in ventral medial prefrontal cortex during charitable decision making incorporate input from regions involved in social cognition. J Neurosci. 2010;30:583–590. doi: 10.1523/JNEUROSCI.4089-09.2010.

- Hare TA, Camerer CF, Rangel A. Self-control in decision-making involves modulation of the vmPFC valuation system. Science. 2009;324:646–648. doi: 10.1126/science.1168450.

- Hare TA, O’Doherty JP, Camerer CF, Schultz W, Rangel A. Dissociating the role of the orbitofrontal cortex and the striatum in the computation of goal values and prediction errors. J Neurosci. 2008;28:5623–5630. doi: 10.1523/JNEUROSCI.1309-08.2008.

- Haushofer J, Fehr E. You Shouldn’t Have: Your Brain on Others’ Crimes. Neuron. 2008;60:738–740. doi: 10.1016/j.neuron.2008.11.019.

- Henrich J, McElreath R, Barr A, Ensminger J, Barrett C, Bolyanatz A, Cardenas J, Gurven M, Gwako E, Henrich N, Lesorogol C, Marlowe F, Tracer D, Ziker J. Costly Punishment Across Human Societies. Science. 2006;312:1767–1770. doi: 10.1126/science.1127333.

- Hsu M, Anen C, Quartz SR. The right and the good: distributive justice and neural encoding of equity and efficiency. Science. 2008;320:1092–1095. doi: 10.1126/science.1153651.

- King-Casas B, Sharp C, Lomax-Bream L, Lohrenz T, Fonagy P, Montague PR. The rupture and repair of cooperation in borderline personality disorder. Science. 2008;321:806–810. doi: 10.1126/science.1156902.

- Knoch D, Pascual-Leone A, Meyer K, Treyer V, Fehr E. Diminishing reciprocal fairness by disrupting the right prefrontal cortex. Science. 2006;314:829–832. doi: 10.1126/science.1129156.

- Lamm C, Batson CD, Decety J. The neural substrate of human empathy: effects of perspective-taking and cognitive appraisal. Journal of Cognitive Neuroscience. 2007;19:42–58. doi: 10.1162/jocn.2007.19.1.42.

- Li J, Xiao E, Houser D, Montague PR. Neural responses to sanction threats in two-party economic exchange. Proceedings of the National Academy of Sciences of the United States of America. 2009;106:16835–16840. doi: 10.1073/pnas.0908855106.

- MacDonald AW, Cohen JD, Stenger VA, Carter CS. Dissociating the role of the dorsolateral prefrontal and anterior cingulate cortex in cognitive control. Science. 2000;288:1835–1838. doi: 10.1126/science.288.5472.1835.

- Marlowe FW, Berbesque JC, Barr A, Barrett C, Bolyanatz A, Cardenas JC, Ensminger J, Gurven M, Gwako E, Henrich J. More ‘altruistic’ punishment in larger societies. Proceedings of the Royal Society B: Biological Sciences. 2008;275:587–592. doi: 10.1098/rspb.2007.1517.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annual Review of Neuroscience. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Mitchell JP. Activity in right temporo-parietal junction is not selective for theory-of-mind. Cerebral Cortex. 2008;18:262–271. doi: 10.1093/cercor/bhm051.

- Montague PR, Lohrenz T. To detect and correct: norm violations and their enforcement. Neuron. 2007;56:14–18. doi: 10.1016/j.neuron.2007.09.020.

- O’Doherty JP, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

- Ostrom E. Collective Action and the Evolution of Social Norms. The Journal of Economic Perspectives. 2000;14:137–158.

- Padoa-Schioppa C. Orbitofrontal cortex and the computation of economic value. Ann NY Acad Sci. 2007;1121:232–253. doi: 10.1196/annals.1401.011.

- Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nature Reviews Neuroscience. 2008;9:545–556. doi: 10.1038/nrn2357.

- Riedl K, Jensen K, Call J, Tomasello M. No third-party punishment in chimpanzees. Proceedings of the National Academy of Sciences. 2012;109:14824–14829. doi: 10.1073/pnas.1203179109.

- Sanfey AG, Rilling JK, Aronson J, Nystrom LE, Cohen J. The neural basis of economic decision-making in the Ultimatum Game. Science. 2003;300:1755–1758. doi: 10.1126/science.1082976.

- Saxe R. Uniquely human social cognition. Current Opinion in Neurobiology. 2006;16:235–239. doi: 10.1016/j.conb.2006.03.001.

- Singer T, Seymour B, O’Doherty JP, Kaube H, Dolan RJ, Frith CD. Empathy for Pain Involves the Affective but not Sensory Components of Pain. Science. 2004;303:1157–1162. doi: 10.1126/science.1093535.

- Spitzer M, Fischbacher U, Herrnberger B, Gron G, Fehr E. The Neural Signature of Social Norm Compliance. Neuron. 2007;56:185–196. doi: 10.1016/j.neuron.2007.09.011.

- Tabibnia G, Satpute AB, Lieberman MD. The sunny side of fairness: preference for fairness activates reward circuitry (and disregarding unfairness activates self-control circuitry). Psychological Science. 2008;19:339–347. doi: 10.1111/j.1467-9280.2008.02091.x.

- Tricomi E, Rangel A, Camerer CF, O’Doherty JP. Neural evidence for inequality-averse social preferences. Nature. 2010;463:1089–1091. doi: 10.1038/nature08785.

- Xiang T, Lohrenz T, Montague PR. Computational substrates of norms and their violations during social exchange. The Journal of Neuroscience. 2013;33:1099–1108. doi: 10.1523/JNEUROSCI.1642-12.2013.

- Yamagishi T, Horita Y, Mifune N, Hashimoto H, Li Y, Shinada M, Miura A, Inukai K, Takagishi H, Simunovic D. Rejection of unfair offers in the ultimatum game is no evidence of strong reciprocity. Proceedings of the National Academy of Sciences of the United States of America. 2012;109:20364–20368. doi: 10.1073/pnas.1212126109.

- Young L, Camprodon JA, Hauser M, Pascual-Leone A, Saxe R. Disruption of the right temporoparietal junction with transcranial magnetic stimulation reduces the role of beliefs in moral judgments. Proceedings of the National Academy of Sciences of the United States of America. 2010;107:6753–6758. doi: 10.1073/pnas.0914826107.

- Zaki J, López G, Mitchell JP. Activity in ventromedial prefrontal cortex covaries with revealed social preferences: Evidence for person-invariant value. Social Cognitive and Affective Neuroscience. 2013:nst005. doi: 10.1093/scan/nst005.

- Zaki J, Mitchell JP. Equitable decision making is associated with neural markers of intrinsic value. Proceedings of the National Academy of Sciences. 2011;108:19761–19766. doi: 10.1073/pnas.1112324108.

- Zhu L, Mathewson KE, Hsu M. Dissociable Neural Representations of Reinforcement and Belief Prediction Errors underlying Strategic Learning. Proceedings of the National Academy of Sciences of the United States of America. 2012;109:1419–1424. doi: 10.1073/pnas.1116783109.