Abstract

Ultrasonic vocalisation is a widely used proxy to evaluate social communication in mouse models of neuropsychiatric disorders. The efficacy and robustness of testing these models suffer from limited knowledge of the structure and functions of these vocalisations, as well as of how best to analyse the data. We created mouseTube, an open database with a web interface, to facilitate the sharing and comparison of ultrasonic vocalisation data and of the metadata attached to each recording file. Metadata describe 1) the acquisition procedure, e.g., hardware, software, sampling frequency, bit depth; 2) the biological protocol used to elicit ultrasonic vocalisations; 3) the characteristics of the individual emitting ultrasonic vocalisations (e.g., strain, sex, age). To promote open science and enable reproducibility, data are made freely available. The website provides search functions to facilitate the retrieval of recording files of interest. It is designed to enable comparisons of ultrasonic vocalisation emission between strains, protocols or laboratories, to test different analysis algorithms, and to search for established protocols to elicit mouse ultrasonic vocalisations. In the long term, users will be able to download and compare different analysis results for each data file. Such an application will boost knowledge of mouse ultrasonic communication and stimulate the sharing and comparison of automatic analysis methods to refine phenotyping techniques in mouse models of neuropsychiatric disorders.

Keywords: Mouse, ultrasonic vocalisations, mouse communication, database, mouseTube, mouse behaviour, open data, open analysis

Introduction

Mice are the most widely studied animal models in scientific research. They are used to understand the causes and mechanisms of human diseases, as well as to develop new therapeutic strategies. More and more scientists are interested in their social behaviour, and aim to improve housing conditions, to better understand how pharmacological substances or genetic mutations affect social life, or simply to know their models better in order to develop the most appropriate tests.

Mice are social animals and use olfactory, tactile and visual, but also auditory, signals to regulate their relationships. Indeed, mice emit audible and ultrasonic vocalisations to communicate with their conspecifics. These vocalisations might represent an “easy-to-record” proxy for sociality. Audible signals (20 Hz – 20 kHz) are much less frequent than ultrasonic vocalisations; we therefore focus on ultrasonic vocalisations, which range from 20 kHz to more than 120 kHz. Mouse ultrasonic vocalisations are short pure tones emitted in rapid succession, with high frequency modulation and with or without frequency jumps (reviewed in Portfors, 2007). Pups utter isolation calls in their first 2 weeks of life (Zippelius & Schleidt, 1956). These vocalisations reliably trigger maternal retrieval (Sewell, 1970; Zippelius & Schleidt, 1956). Juvenile and adult mice utter ultrasonic vocalisations when encountering an unknown conspecific of the same sex (Chabout et al., 2012; Hammerschmidt et al., 2012; Maggio & Whitney, 1985; Panksepp et al., 2007). Both sexes utter these calls, but in many cases male vocal behaviour is maximised by prior social isolation (Chabout et al., 2012; Scattoni et al., 2010). These vocalisations may play a role in social recognition and hierarchy establishment, at least in females (D’Amato & Moles, 2001; Moles et al., 2007). Finally, sexually mature males vocalise when encountering an oestrus female or her urinary cues (Holy & Guo, 2005; Whitney et al., 1973). These calls increase the probability that the female stays close to the male emitter (Hammerschmidt et al., 2009).

Despite these identified contexts of emission, knowledge about the actual significance and structure of mouse ultrasonic vocalisations still has several weaknesses. First, the functions of these vocalisations, specifically those emitted by juvenile and adult mice, are still unclear. For instance, male calling in the presence of an oestrus female might represent courtship (Holy & Guo, 2005). These calls are nevertheless not structurally different from those of adult females, suggesting at least one other function, such as maintaining proximity (Hammerschmidt et al., 2012; Seagraves et al., 2016). It is also still unclear to what extent the temporal organisation and the fine acoustic structure of the calls are meaningful to the receiving mice. Mice are physiologically able to perceive subtle acoustic variations (Portfors et al., 2009), but behavioural evidence for the meaning of these variations remains scarce (Hammerschmidt et al., 2009; Wöhr et al., 2011a). Second, the emission of ultrasonic vocalisations depends strongly on the emitter’s identity (Holy & Guo, 2005), the receiver’s identity (Seagraves et al., 2016), and the context (e.g., Yang et al., 2013). These sources of variability remain under-explored and could explain the lack of reproducibility of several assays. Finally, the analysis of these signals is poorly automated. Some laboratories have developed their own detection and/or analysis methods (e.g., Chabout et al., 2015; Hammerschmidt et al., 2012; Holy & Guo, 2005; Neunuebel et al., 2015; Seagraves et al., 2016; von Merten et al., 2014), while others use commercial and/or manual solutions for detection and/or analysis (Chabout et al., 2012; Ey et al., 2013; Wöhr et al., 2011b; Yang et al., 2013). Nevertheless, little is known about the advantages and disadvantages of each of these methods, and comparing them on the same files would be highly valuable for the field.

To address these weaknesses, we developed mouseTube. This database is designed to share and exchange recording files of mouse ultrasonic vocalisations, along with all the corresponding metadata. It aims to increase knowledge of mouse vocal communication, to improve the reproducibility of experiments and to stimulate the development of robust analysis tools. The web interface is available at http://mousetube.pasteur.fr.

Data uploaded on mouseTube are shared with all members of the community connected to mouseTube. Members uploading data on mouseTube are fully responsible for the content of the uploaded files and the accuracy of the metadata provided. Uploaded data still belong to the laboratory that recorded them, but the owner grants the members of mouseTube the right to use them for analyses and publications. mouseTube data are freely available to any member of the community upon online registration and can be used in subsequent publications. Any publication derived from the data should credit the contributing users, name mousetube.pasteur.fr as the data source and, whenever possible, cite the original paper(s) in which the data were first described. mouseTube administrators accept no responsibility for the content of data and metadata.

Data and metadata

Database design

The mouseTube database stores links to each audio recording file together with all the corresponding metadata. The audio recording files themselves are stored on external servers owned by each laboratory; these servers must be configured with a shared login and password, which users receive when their registration is confirmed. This lets owners keep control of their own data. For a video tutorial on how to upload data to mouseTube, see Ferhat et al. (2016).

mouseTube consists of a web interface written in PHP and a MySQL relational database, served by an Apache server. The web interface allows users (i.e., data contributors and data downloaders) to manage the data in the database. In a relational database, each table has a unique key through which other tables can refer to it (keys can combine several columns, but mouseTube uses a single-column unique key for each table). The data are thus well organised, and all the attributes of an element can be retrieved quickly by following these links.

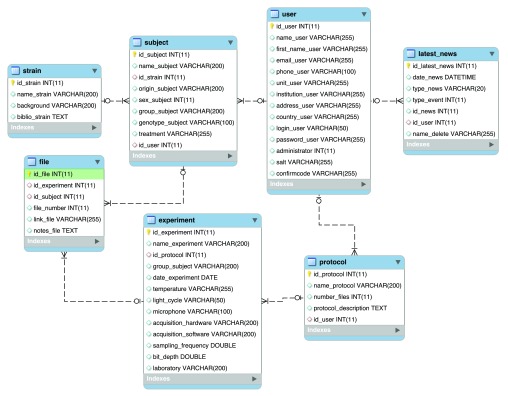

mouseTube is organised as seven tables connected by unique keys within the database (Figure 1):

Figure 1. Overall organisation of the mouseTube database.

mouseTube is organised in 7 tables connected by unique keys.

- “strain”: gathers all mouse strains already entered in the database. This table contains the name of each strain, the genetic background on which it was generated, and the bibliographic reference in which it was first described. If a new strain is needed, any user can email all the requested information to the administrators, who will add it. This centralised procedure is intended to avoid duplicate strain entries.

- “subject”: lists all the individual mice entered by contributing users, each of whom creates his or her own subjects. The table “subject” is connected to the table “user” (unique key “id_user”), meaning that each individual belongs to only one contributing user. It is also connected to the table “strain” by the unique key “id_strain”, meaning that each subject belongs to exactly one strain. This table stores all information relative to each subject, such as its origin, name, sex, genotype, treatment, and subgroup.

- “user”: stores information about all contributing and non-contributing users with access to the mouseTube database. This table is connected to the tables “subject” and “protocol” through the unique key “id_user”, which identifies the owner of a subject and the protocols owned by this person (a subject or a protocol belongs to only one user). The table “user” stores contact information as well as the encrypted login and password. Users can update their own information at any time.

- “protocol”: lists all the protocols entered by users. Each user writes his or her protocols as free text, which should give enough detail for the protocol to be replicated. This table is connected to the table “user” through the unique key “id_user”, designating its creator. The table “protocol” contains the name of the protocol, its description, the user who created it, and the number of recording files generated for each mouse with this protocol.

- “experiment”: lists all the experiments (i.e., sets of audio files recorded for a group of individuals with one protocol) entered by users. Each user can create new experiments. This table is connected to the table “protocol” through the unique key “id_protocol”, since each experiment involves a single protocol.

- “file”: lists all the vocalisation files entered by users, for each individual mouse (unique key “id_subject”) within each experiment (unique key “id_experiment”). Each file therefore relates to a single subject and a single experiment. The preferred format is uncompressed “.wav”.

- “latest news”: records all new actions performed on mouseTube, allowing researchers to follow the updates made by each user (unique key “id_user”).
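As a concrete illustration of how these keys tie the tables together, the sketch below expresses the seven tables as SQL DDL run through Python's built-in sqlite3 module. It is an approximation under stated assumptions, not the actual mouseTube schema: SQLite stands in for MySQL, the “latest news” table is renamed latest_news, the experiment metadata columns are abbreviated, and every column other than the id_* keys named above is hypothetical.

```python
import sqlite3

# Hypothetical approximation of the seven mouseTube tables. Only the id_* key
# names come from the article; every other column is illustrative, and SQLite
# stands in for the MySQL database that mouseTube actually uses.
SCHEMA = """
CREATE TABLE strain (
    id_strain  INTEGER PRIMARY KEY,
    name       TEXT NOT NULL,
    background TEXT,   -- genetic background of the strain
    reference  TEXT    -- paper in which the strain was first described
);
CREATE TABLE "user" (  -- quoted because USER is reserved in some SQL dialects
    id_user       INTEGER PRIMARY KEY,
    login         TEXT NOT NULL UNIQUE,
    email         TEXT,
    password_hash TEXT NOT NULL
);
CREATE TABLE subject (
    id_subject INTEGER PRIMARY KEY,
    id_user    INTEGER NOT NULL REFERENCES "user"(id_user),   -- one owner
    id_strain  INTEGER NOT NULL REFERENCES strain(id_strain), -- one strain
    name TEXT, origin TEXT, sex TEXT, genotype TEXT, treatment TEXT, subgroup TEXT
);
CREATE TABLE protocol (
    id_protocol       INTEGER PRIMARY KEY,
    id_user           INTEGER NOT NULL REFERENCES "user"(id_user), -- creator
    name              TEXT NOT NULL,
    description       TEXT,
    files_per_subject INTEGER  -- recording files generated for each mouse
);
CREATE TABLE experiment (
    id_experiment      INTEGER PRIMARY KEY,
    id_protocol        INTEGER NOT NULL REFERENCES protocol(id_protocol),
    name               TEXT NOT NULL,
    start_date         TEXT,
    light_phase        TEXT,
    hardware           TEXT,
    sampling_frequency INTEGER,  -- Hz
    bit_depth          INTEGER
);
CREATE TABLE file (
    id_file       INTEGER PRIMARY KEY,
    id_subject    INTEGER NOT NULL REFERENCES subject(id_subject),
    id_experiment INTEGER NOT NULL REFERENCES experiment(id_experiment),
    url           TEXT NOT NULL  -- link to the laboratory's own server
);
CREATE TABLE latest_news (
    id_news INTEGER PRIMARY KEY,
    id_user INTEGER NOT NULL REFERENCES "user"(id_user),
    action  TEXT NOT NULL,
    created TEXT DEFAULT CURRENT_TIMESTAMP
);
"""


def create_db() -> sqlite3.Connection:
    """Create the schema in memory with foreign-key enforcement switched on."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK checks off by default
    conn.executescript(SCHEMA)
    return conn
```

Because each row of file carries both id_subject and id_experiment, any file can be traced to its owner, strain and protocol by following keys, and with foreign-key enforcement on, a subject cannot be inserted for a user or strain that does not exist.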

Data organisation

A help section is available on the home page of each user’s session. It describes in detail the information stored in each section of mouseTube, and can also be accessed at any time through the “Help” tab available on each page.

For each protocol, a name is required. Users should also specify how many recording files are generated for each mouse in this protocol, and then provide a precise description of the exact protocol. Even if another user has already created the same protocol, the owner of the vocalisation files needs to create it again, with a similar name and the same information, before he or she can select it when creating an experiment. The database is built in this way to track changes in each protocol.
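The protocol rules above can be expressed as two small checks. This is a hypothetical Python sketch: the class and function names are ours, and the text does not state that mouseTube enforces the file-count check. It assumes that a user may only attach his or her own protocols to an experiment, and that each subject in an experiment should have exactly as many files as the protocol declares per mouse.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Protocol:
    id_protocol: int
    id_user: int            # creator and owner of this copy of the protocol
    name: str
    files_per_subject: int  # recording files generated for each mouse


@dataclass(frozen=True)
class RecordingFile:
    id_subject: int
    id_experiment: int
    url: str


def selectable_protocols(protocols: list[Protocol], id_user: int) -> list[Protocol]:
    """Protocols a user can attach to a new experiment: only his or her own copies."""
    return [p for p in protocols if p.id_user == id_user]


def subjects_with_wrong_file_count(
    protocol: Protocol, files: list[RecordingFile], id_experiment: int
) -> dict[int, int]:
    """Map each subject of the experiment whose file count differs from the
    protocol's files_per_subject to its actual count. Subjects with no file at
    all never appear in `files`, so they are not reported here."""
    counts = Counter(f.id_subject for f in files if f.id_experiment == id_experiment)
    return {s: n for s, n in counts.items() if n != protocol.files_per_subject}
```

Keeping one copy of a protocol per owner means that editing it never silently changes the description attached to another laboratory's experiments.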

For each experiment embedding several audio recording files, the following metadata are stored:

- name of the experiment
- protocol
- mouse group
- date of the beginning of the experiment
- temperature
- light phase for testing (light or dark period)
- hardware used (microphone and sound card; this field is auto-completed to ease identification of the equipment)
- acquisition software
- sampling frequency
- bit depth
- laboratory

These metadata allow useful comparisons of the software, hardware and environmental conditions of each audio recording. This information is compulsory, as it is needed to evaluate the constraints on each recording.
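Sampling frequency and bit depth are examples of constraints these metadata make explicit. By the Nyquist-Shannon sampling theorem, a recording sampled at a frequency f_s can only represent frequencies below f_s/2, so capturing calls up to 120 kHz requires f_s above 240 kHz; and an ideal N-bit quantiser has a signal-to-noise ratio of about 6.02·N + 1.76 dB for a full-scale sine wave. The Python sketch below applies both rules to one experiment's acquisition metadata; the class, its field names and the 120 kHz default are illustrative assumptions, not part of mouseTube.

```python
from dataclasses import dataclass

# Upper edge of the USV band as quoted in the text ("more than 120 kHz");
# some calls exceed it, so raise this if they matter for your analysis.
USV_MAX_HZ = 120_000


@dataclass(frozen=True)
class AcquisitionMetadata:
    hardware: str              # microphone and sound card
    software: str
    sampling_frequency_hz: int
    bit_depth: int


def nyquist_hz(meta: AcquisitionMetadata) -> float:
    """Highest frequency the recording can represent without aliasing."""
    return meta.sampling_frequency_hz / 2


def covers_band(meta: AcquisitionMetadata, f_max_hz: float = USV_MAX_HZ) -> bool:
    """True if frequencies up to f_max_hz lie strictly below the Nyquist frequency."""
    return nyquist_hz(meta) > f_max_hz


def ideal_quantisation_snr_db(meta: AcquisitionMetadata) -> float:
    """Theoretical SNR of an ideal N-bit quantiser for a full-scale sine wave."""
    return 6.02 * meta.bit_depth + 1.76
```

For instance, a 250 kHz recording passes the 120 kHz check, whereas a 192 kHz recording (Nyquist frequency 96 kHz) does not, regardless of its bit depth.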

Data retrieval

The mouseTube web interface allows users to search the database for different types of data without writing a MySQL query. Data can be searched according to several criteria, such as protocol, owner of the files, mouse strain, and experiment. For example, users can select all recording files from a specific mouse strain or user/laboratory. mouseTube also aims to provide users with different protocols to record mouse ultrasonic vocalisations. It is even possible to search for individual mice; the more information is entered about a subject, the easier it is to find. To download any vocalisation file, users need the login and password provided upon confirmation of their registration, which are common to all servers hosting vocalisation files linked in mouseTube. These are different from their personal login and password.
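Behind the web form, a search such as “all recording files from a given strain, optionally restricted to one protocol” amounts to a join along the unique keys described under Database design. The sketch below runs such a query on a minimal in-memory SQLite stand-in; the table layout is a simplified assumption and the function names are hypothetical, since the queries mouseTube actually issues are not documented here. Parameter placeholders (?) are used rather than string formatting so that user input cannot alter the query.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Minimal stand-in for the mouseTube tables involved in a strain search
    (simplified, hypothetical columns)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE strain     (id_strain INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE subject    (id_subject INTEGER PRIMARY KEY, id_strain INTEGER, name TEXT);
        CREATE TABLE protocol   (id_protocol INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE experiment (id_experiment INTEGER PRIMARY KEY, id_protocol INTEGER);
        CREATE TABLE file       (id_file INTEGER PRIMARY KEY, id_subject INTEGER,
                                 id_experiment INTEGER, url TEXT);
    """)
    return conn


def files_for_strain(conn, strain_name, protocol_name=None):
    """Return (url, subject name, protocol name) for every file of a strain,
    optionally restricted to one protocol, by following the id_* keys."""
    sql = """
        SELECT file.url, subject.name, protocol.name
        FROM file
        JOIN subject    ON subject.id_subject       = file.id_subject
        JOIN strain     ON strain.id_strain         = subject.id_strain
        JOIN experiment ON experiment.id_experiment = file.id_experiment
        JOIN protocol   ON protocol.id_protocol     = experiment.id_protocol
        WHERE strain.name = ?
    """
    params = [strain_name]
    if protocol_name is not None:
        sql += " AND protocol.name = ?"
        params.append(protocol_name)
    sql += " ORDER BY file.id_file"
    return conn.execute(sql, params).fetchall()
```

Each additional search criterion (owner, experiment, individual mouse) would simply add another condition on a table already reachable through these joins.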

Use cases

Example 1: searching for protocols and control strains

In this example, we use the search function of the protocol section of mouseTube to gather information on potential protocols for recording ultrasonic vocalisations in adult male mice, and to collect data on control animals recorded with these protocols.

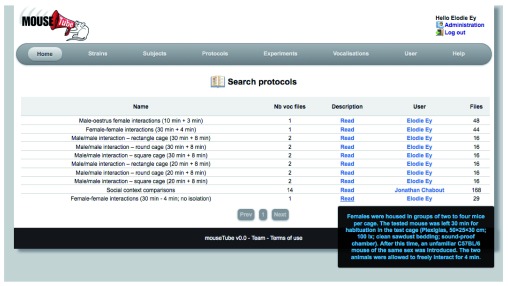

By going through all protocols (Figure 2), we found several protocols used to record male ultrasonic vocalisations. Elodie Ey provided protocols to record male-male interactions in different cages after 3 weeks of isolation, as well as male-female interactions. Jonathan Chabout provided a protocol to record male vocalisations in response to urine (male or female), an anaesthetised mouse (male or female), or an active female. With these selected protocols, at the time of writing, we found 16 individuals from the C57BL/6J strain recorded in the male-male interaction protocol from E. Ey, 48 individuals from the ProSAP1/Shank2 strain recorded in the male-oestrus female protocol from E. Ey, and 12 individuals from the B6D2F1/J strain recorded with the protocol of J. Chabout. Altogether, we now have a substantial set of reference files for each protocol against which to compare our data, and we plan to try the different protocols in our laboratory soon. The output file (Supplementary file 1) provides a sample of the metadata and links to the files recorded in the male-oestrus female interactions by E. Ey. This file is automatically generated for each vocalisation search request and can be downloaded to save the metadata related to each file.

Figure 2. Screenshot of the mouseTube web application on the search page for protocols.

Supplementary file 1. A sample of the metadata and links to the files recorded in the male-oestrus female interactions by E. Ey. This file is automatically generated for each vocalisation search request and can be downloaded to save the metadata related to each file.

Copyright: © 2016 Torquet N et al.

Data associated with the article are available under the terms of the Creative Commons Zero "No rights reserved" data waiver (CC0 1.0 Public domain dedication).

Example 2: testing a detection algorithm

In this example, we plan to test a new detection algorithm under various recording conditions. To do so, we need files recorded with different equipment and with various levels of background noise. We use the search function of the vocalisation section of mouseTube to select three vocalisation files from each of the protocols available in mouseTube. The diversity of recording conditions allows us to probe the limits of our algorithm's ability to extract ultrasonic vocalisations from background noise. This yields a varied set of vocalisation files for our test set. For each vocalisation search, a table summarises the metadata of each vocalisation file, specifying the protocol and the hardware and software used. We can therefore test our detection algorithm on files for which we know the number of individuals present during the recording session, and hence the background noise they generate, as well as the quality of the recording equipment (e.g., the microphone frequency response).
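Selecting a fixed number of files per protocol, as in this example, can be scripted once the search results are available as records. In this hypothetical Python sketch each record is a dict with at least a “protocol” key (an assumed layout, not the actual format of the mouseTube results table), and files are drawn at random with a fixed seed so that the same test set can be rebuilt later; the text does not say how the three files were chosen.

```python
import random
from collections import defaultdict


def sample_per_protocol(records, k=3, seed=0):
    """Return {protocol: [up to k records]} drawn at random without replacement.

    `records` is an iterable of dicts with at least a "protocol" key (assumed
    layout). Protocols with fewer than k files contribute all of their files.
    """
    by_protocol = defaultdict(list)
    for rec in records:
        by_protocol[rec["protocol"]].append(rec)
    rng = random.Random(seed)  # fixed seed, so the test set is reproducible
    return {
        protocol: rng.sample(by_protocol[protocol], min(k, len(by_protocol[protocol])))
        for protocol in sorted(by_protocol)
    }
```

If the results table also carries hardware or software fields, grouping on a (protocol, hardware) pair instead of the protocol alone would spread the test set across recording equipment as well.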

Conclusion

We present mouseTube, a database with a web application to boost knowledge on mouse ultrasonic communication. This database stores recording files of mouse ultrasonic vocalisations as well as the corresponding metadata. It provides a source of information on the protocols to record mouse ultrasonic vocalisations and on the availability of recording files for different mouse strains.

At the time of writing, mouseTube provides a platform to upload and download mouse recording files and the corresponding metadata. We aim to develop mouseTube continuously, and we are currently exploring ways of enabling users to analyse their data online, with the owner of the data being notified of each analysis performed on them. The database will also offer researchers the option of keeping a portion of their data in a private part of mouseTube until they have been analysed and published, after which the data will be made publicly available. We will develop this analysis system shortly. We also aim to open mouseTube to plug-ins from other analysis systems, so that users will be able to choose which software to use to analyse their data.

Data and software availability

Audio recording files are available for all mouseTube users via the web application http://mousetube.pasteur.fr. To download the vocalisation files from the different storage servers, users need to enter the login and password common to all servers hosting mouseTube data files. These are provided upon registration online.

F1000Research: Dataset 1. Output file automatically generated for each request of vocalisation file, 10.5256/f1000research.9439.d135667 (Torquet et al., 2016).

Acknowledgements

We thank Eliza Jones for lending her voice for the video presenting mouseTube.

Funding Statement

This work was supported by the Fondation de France; by the ANR FLEXNEURIM [ANR09BLAN034003]; by the ANR [ANR-08-MNPS-037-01-SynGen]; by Neuron-ERANET (EUHF-AUTISM); by the Fondation Orange; by the Fondation FondaMentale; by the Fondation de France; by the Fondation Bettencourt-Schueller. The research leading to this article has also received support from the Innovative Medicine Initiative Joint Undertaking under grant agreement no. 115300, resources of which are composed of financial contribution from the European Union's Seventh Framework Program (FP7/2007-2013) and EFPIA companies' in kind contribution.

The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

[version 1; referees: 2 approved]

References

- Chabout J, Sarkar A, Dunson DB, et al.: Male mice song syntax depends on social contexts and influences female preferences. Front Behav Neurosci. 2015;9:76. 10.3389/fnbeh.2015.00076

- Chabout J, Serreau P, Ey E, et al.: Adult male mice emit context-specific ultrasonic vocalizations that are modulated by prior isolation or group rearing environment. PLoS One. 2012;7(1):e29401. 10.1371/journal.pone.0029401

- D’Amato FR, Moles A: Ultrasonic vocalizations as an index of social memory in female mice. Behav Neurosci. 2001;115(4):834–840. 10.1037//0735-7044.115.4.834

- Ey E, Torquet N, Le Sourd AM, et al.: The Autism ProSAP1/Shank2 mouse model displays quantitative and structural abnormalities in ultrasonic vocalisations. Behav Brain Res. 2013;256:677–689. 10.1016/j.bbr.2013.08.031

- Ferhat AT, Torquet N, Le Sourd AM, et al.: Recording Mouse Ultrasonic Vocalizations to Evaluate Social Communication. J Vis Exp. 2016;(112). 10.3791/53871

- Hammerschmidt K, Radyushkin K, Ehrenreich H, et al.: Female mice respond to male ultrasonic 'songs' with approach behaviour. Biol Lett. 2009;5(5):589–592. 10.1098/rsbl.2009.0317

- Hammerschmidt K, Radyushkin K, Ehrenreich H, et al.: The structure and usage of female and male mouse ultrasonic vocalizations reveal only minor differences. PLoS One. 2012;7(7):e41133. 10.1371/journal.pone.0041133

- Holy TE, Guo Z: Ultrasonic songs of male mice. PLoS Biol. 2005;3(12):e386. 10.1371/journal.pbio.0030386

- Maggio JC, Whitney G: Ultrasonic vocalizing by adult female mice (Mus musculus). J Comp Psychol. 1985;99(4):420–436. 10.1037//0735-7036.99.4.420

- Moles A, Costantini F, Garbugino L, et al.: Ultrasonic vocalizations emitted during dyadic interactions in female mice: A possible index of sociability? Behav Brain Res. 2007;182(2):223–230. 10.1016/j.bbr.2007.01.020

- Neunuebel JP, Taylor AL, Arthur BJ, et al.: Female mice ultrasonically interact with males during courtship displays. eLife. 2015;4:e06203. 10.7554/eLife.06203

- Panksepp JB, Jochman KA, Kim JU, et al.: Affiliative behavior, ultrasonic communication and social reward are influenced by genetic variation in adolescent mice. PLoS One. 2007;2(4):e351. 10.1371/journal.pone.0000351

- Portfors CV: Types and functions of ultrasonic vocalizations in laboratory rats and mice. J Am Assoc Lab Anim Sci. 2007;46(1):28–34.

- Portfors CV, Roberts PD, Jonson K: Over-representation of species-specific vocalizations in the awake mouse inferior colliculus. Neuroscience. 2009;162(2):486–500. 10.1016/j.neuroscience.2009.04.056

- Scattoni ML, Ricceri L, Crawley JN: Unusual repertoire of vocalizations in adult BTBR T+tf/J mice during three types of social encounters. Genes Brain Behav. 2010;10(1):44–56. 10.1111/j.1601-183X.2010.00623.x

- Seagraves KM, Arthur BJ, Egnor SE: Evidence for an audience effect in mice: male social partners alter the male vocal response to female cues. J Exp Biol. 2016;219(Pt 10):1437–1448. 10.1242/jeb.129361

- Sewell GD: Ultrasonic communication in rodents. Nature. 1970;227(5256):410. 10.1038/227410a0

- Torquet N, de Chaumont F, Faure P, et al.: Dataset 1 in: mouseTube – a database to collaboratively unravel mouse ultrasonic communication. F1000Research. 2016. 10.5256/f1000research.9439.d135667

- von Merten S, Hoier S, Pfeifle C, et al.: A role for ultrasonic vocalisation in social communication and divergence of natural populations of the house mouse (Mus musculus domesticus). PLoS One. 2014;9(5):e97244. 10.1371/journal.pone.0097244

- Whitney G, Coble JR, Stockton MD, et al.: Ultrasonic emissions: do they facilitate courtship of mice. J Comp Physiol Psychol. 1973;84(3):445–452. 10.1037/h0034899

- Wöhr M, Moles A, Schwarting RK, et al.: Lack of social exploratory activation in male μ-opioid receptor KO mice in response to playback of female ultrasonic vocalizations. Soc Neurosci. 2011a;6(1):76–87. 10.1080/17470911003765560

- Wöhr M, Roullet FI, Hung AY, et al.: Communication impairments in mice lacking Shank1: reduced levels of ultrasonic vocalizations and scent marking behavior. PLoS One. 2011b;6(6):e20631. 10.1371/journal.pone.0020631

- Yang M, Loureiro D, Kalikhman D, et al.: Male mice emit distinct ultrasonic vocalizations when the female leaves the social interaction arena. Front Behav Neurosci. 2013;7:159. 10.3389/fnbeh.2013.00159

- Zippelius HM, Schleidt WM: Ultraschall-Laute bei jungen Mäusen [Ultrasonic sounds in young mice]. Naturwissenschaften. 1956;43(21):502. 10.1007/BF00632534