Abstract

The integration of information has been considered a hallmark of human consciousness, as it requires information to be globally available via widespread neural interactions. Yet the complex interdependencies between multisensory integration and perceptual awareness, or consciousness, remain to be defined. While perceptual awareness has traditionally been studied in a single sense, in recent years we have witnessed a surge of interest in the role of multisensory integration in perceptual awareness. Based on a recent IMRF symposium on multisensory awareness, this review discusses three key questions from conceptual, methodological and experimental perspectives: (1) What do we study when we study multisensory awareness? (2) What is the relationship between multisensory integration and perceptual awareness? (3) Which experimental approaches are most promising to characterize multisensory awareness? We hope that this review paper will provoke lively discussions, novel experiments, and conceptual considerations to advance our understanding of the multifaceted interplay between multisensory integration and consciousness.

Keywords: Multisensory integration, perceptual awareness, consciousness, perception, crossmodal integration, metacognition

1. Introduction

In our everyday lives, our brain has to deal with a constant influx of sensory signals. A fundamental aspect of our conscious experience, however, is that these signals are integrated nearly effortlessly into a seamless multisensory percept of our environment. Yet even though multisensory experience is pervasive in everyday life, the relationship between multisensory integration and perceptual awareness remains unclear.

This lack of clarity is all the more surprising given that several leading theories see a strong link between information integration and perceptual awareness. For instance, according to the global workspace model, consciousness emerges when information is made globally available via long range connectivity such as the frontoparietal system (Dehaene, 2001). Other theories suggest that consciousness emerges via recurrent interactions that enable information exchange across multiple levels of the cortical hierarchy (Lamme, 2006; Lamme and Roelfsema, 2000). Finally, the integrated information theory of consciousness associates consciousness with ‘integrated information’ and aims to determine the structural and functional properties that enable neural systems to form complex integrated information as a prerequisite of consciousness (Balduzzi and Tononi, 2008).

Yet despite the proposed link between information integration and consciousness, perceptual awareness has traditionally been studied in terms of single sense experiences (see De Graaf et al., 2012; Dehaene and Changeux, 2011 for reviews), such as vision, audition (Allen et al., 2000; Bekinschtein et al., 2009; Giani et al., 2015; Gutschalk et al., 2008; Haynes et al., 2005; Ro et al., 2003), or, on occasion, touch (Gallace and Spence, 2008, 2014) or olfaction (Stevenson and Attuquayefio, 2013). Only in recent years have we witnessed a surge of interest in studying perceptual awareness in multisensory terms. Based on a recent IMRF symposium on the topic, this paper aims to review the key conceptual, methodological and empirical findings that have advanced the field in recent years, and to provide better tools to confront the challenges raised by the multifaceted interplay between multisensory integration and perceptual awareness.

Section 2 provides a conceptual map of the kind of phenomena which fall under the general label of ‘multisensory awareness’, and highlights some of the main challenges for the field. We discuss the commonalities and differences of perceptual awareness that may occur in unisensory and multisensory contexts. For instance, in vision, information needs to be integrated across time and space into a coherent percept of our dynamic environment. Vision also faces the challenge of binding features such as colour and form, which are represented predominantly in different brain areas, into a unified object percept (Ghose and Maunsell, 1999; Roskies, 1999; Wolfe and Cave, 1999). Along similar lines, multisensory perception relies on binding complementary pieces of information (e.g., an object’s shape from the front side via vision and from the rear via touch) that are provided by different sensory modalities. Moreover, different senses can provide redundant information about specific properties such as the spatial location or timing of an event.

Section 3 explores the relationship between multisensory integration and multisensory awareness. More specifically, it reviews the behavioural and neural research investigating the extent to which multisensory signals can be integrated in the absence of awareness. Numerous studies have demonstrated that signals that we are aware of in one sensory modality can boost signals from another sensory modality that we are not aware of into perceptual awareness, depending on temporal coincidence, spatial correspondence, or higher order correspondences such as semantic or phonological congruency (Adam and Noppeney, 2014; Aller et al., 2015; Alsius and Munhall, 2013; Chen and Spence, 2011a, b; Hsiao et al., 2012; Olivers and Van der Burg, 2008; Palmer and Ramsey, 2012). Less is known about whether signals that we are unaware of can also influence where and how we perceive those signals that we are aware of. Moreover, despite the vast neurophysiological evidence showing multisensory interactions in anaesthetized animals (Stein and Meredith, 1993), little behavioural evidence has been accumulated indicating that two signals from different sensory modalities can interact in the absence of awareness, such as during sleep (Arzi et al., 2012) or when signals are masked and thus precluded from awareness in both sensory modalities (Faivre et al., 2014).

Finally, Section 4 discusses various experimental approaches that can be pursued to tap into multisensory awareness. Unisensory research has developed a large repertoire of experimental manipulations and paradigms to contrast sensory processing in the presence and absence of awareness including multistable perception (e.g., ambiguous figures, multistable motion quartets, binocular rivalry, and continuous flash suppression), attentional blink, masking, or sleep. Which of those experimental approaches might be most promising when it comes to multisensory awareness?

2. What Do We Study When We Study Multisensory Awareness?

Most of our conscious experiences occur in a multisensory setting when several sensory modalities are likely being stimulated simultaneously. Some senses, like the vestibular system, proprioception, or touch, indeed almost never ‘switch off’ in natural circumstances. Meanwhile, audition and vision often function together starting with saccadic coordination (Heffner and Heffner, 1992a, b; Kruger et al., 2014) and leading to many well-known audiovisual illusions, such as the spatial ventriloquist effect (Alais and Burr, 2004; Bertelson and Aschersleben, 1998; Vroomen and De Gelder, 2004), the McGurk effect (McGurk and MacDonald, 1976), the double flash fission or fusion illusion (Andersen et al., 2004; Shams et al., 2000), and pitch-induced illusory motion (Maeda et al., 2004).

Most phenomenological reports also tell us that conscious experiences are multisensory: We perceive talking faces, we go through scented and colourful gardens, filled with birdsong, we sense the noise and feel of the computer keys pressed under our fingertips. The evidence, then, converges in making consciousness a matter of multisensory combination. This raises an important question: How should we map the concept of multisensory integration onto the first person evidence of unified perceptual awareness? It is important to note that integration is studied as a process, or rather a set of processes, while consciousness is often analysed as a state presenting us with objects, events, and their relations. With consciousness being one of the most discussed and controversial notions in the philosophical and scientific literature, we only attempt here to provide a useful taxonomy that distinguishes between different cases of multisensory awareness for the field to study. We then look at what the study of awareness really involves by drawing on the conceptual distinction between access and content.

2.1. Three Kinds of Multisensory Contents

While the field is most concerned with cases where a single property is perceived through two or more sensory modalities, there is more to multisensory awareness than these. The most studied cases concern those situations where different senses provide redundant information about specific properties such as the spatial location or timing of an event. Imagine, for instance, running through the forest and spotting a robin sitting on the branch and singing (Rohe and Noppeney, 2015, 2016). By integrating redundant spatial information from vision and audition, the brain can form more reliable estimates of the location of the singing bird. Redundant information can even be provided about higher order aspects such as a phoneme, as in speech perception. In fact, perceptual illusions such as spatial ventriloquism (Alais and Burr, 2004; Bertelson and Aschersleben, 1998; Vroomen and De Gelder, 2004) or the McGurk illusion (Gau and Noppeney, 2016; McGurk and MacDonald, 1976; Munhall et al., 1996) emerge because different sensory modalities provide redundant, yet slightly conflicting information about spatial location or about a particular phoneme (e.g., [ba] vs. [ga]). Another good illustration can be when touch and vision contribute to the perception of shape (Ernst and Banks, 2002). This said, the integration of redundant information is not necessarily tied to multisensory awareness, and could lead to episodes of unisensory consciousness being biased by the information provided by another modality, be it consciously perceived or not. In other words, many of these cases could be cases of crossmodal bias of unisensory awareness, as much as genuine cases of unified multisensory awareness. A possible way to exclude the first possibility is to show that the integration of two sensory inputs leads to the conscious experience of a new property or aspect that could not be experienced by a conjunction of unisensory episodes. 
This could consist in being able to experience the simultaneity between two unisensory events, or in the emergence of a new quality, such as flavour, which is commonly taken to involve a fusion of taste, smell (retronasal olfactory), and trigeminal inputs (Spence et al., 2015). In other words, we should not be too fast in thinking that all cases of integration need to get manifested in episodes of multisensory awareness and should look for evidence of specific or emerging multisensory properties (see Partan and Marler, 1999).
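
The gain in reliability from integrating redundant cues, as in the example of locating the singing robin, is often formalised as a reliability-weighted average of the unisensory estimates. The following is a minimal sketch under the common assumption of independent Gaussian noise in each modality, in the spirit of the maximum-likelihood account tested by Ernst and Banks (2002). The function name and the numerical values are hypothetical and serve only as illustration.

```python
import math


def fuse_estimates(mu_v, sigma_v, mu_a, sigma_a):
    """Reliability-weighted fusion of a visual and an auditory location estimate.

    Assumes independent Gaussian noise in each modality (an assumption of
    this sketch). Reliability is defined as inverse variance.
    """
    r_v = 1.0 / sigma_v ** 2          # visual reliability
    r_a = 1.0 / sigma_a ** 2          # auditory reliability
    w_v = r_v / (r_v + r_a)           # weight given to vision
    mu = w_v * mu_v + (1.0 - w_v) * mu_a
    sigma = math.sqrt(1.0 / (r_v + r_a))  # fused noise is below either cue's noise
    return mu, sigma


# Hypothetical values: vision is precise (sd = 1 deg), audition is noisy (sd = 2 deg),
# and the two cues are in slight spatial conflict (0 deg vs. 4 deg).
fused_mu, fused_sigma = fuse_estimates(mu_v=0.0, sigma_v=1.0, mu_a=4.0, sigma_a=2.0)
print(f"fused location: {fused_mu:.2f} deg, fused sd: {fused_sigma:.3f} deg")
```

With these values the fused estimate lies closer to the more reliable visual cue, which is the pattern seen in spatial ventriloquism, and its standard deviation is smaller than that of either cue alone. The sketch describes the integration process only; it says nothing about whether the fused estimate is experienced as a multisensory content.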

At least two other kinds of cases also need to be considered when studying multisensory awareness, besides cases resting on the integration of redundant information.

On the one hand, two modalities can contribute to the perception of the same object, but of different or complementary properties of that object. These are the classical cases of multisensory binding: cases where one is conscious of the visual shape of the dog and the sound of its bark (Chen and Spence, 2010), or of the shape of the kettle and its whistling sound (Jackson, 1953). The two unisensory components need to be referred to the same object, or at least, in the case of an event, to the same moment and perceived location. These cases form a distinct category of conscious perception of multisensory objects and events, and raise different challenges from those where the contents experienced by two modalities are the same. Here the two contents can remain unisensory, but multisensory awareness seems to be of their co-attribution to the same object (or space/time).

On the other hand, cases where different senses contribute to the perception of one and the same property, or object, should not make us forget about a third, and no less important, category where two objects in different modalities, or even two multisensory objects, are experienced as part of the same multisensory scene. For instance, you may be aware of the cup in front of you, while also being conscious of the shape and temperature of the spoon in your hand, and the sounds of the barista talking behind you. All these various unisensory and multisensory objects or events are different and yet they are all experienced as part of the same setting or scene — presenting us with a third kind of multisensory awareness, i.e., multisensory scene perception (see Note 1).

2.2. Multisensory Contents vs. Multisensory Access

With these distinctions in hand, we can now turn to another important conceptual difference between what people report and what they are phenomenally aware of, or what they attend to. While there is no doubt that people will report experiencing multisensory objects, for instance, or scenes, the question that cognitive neuroscientists need to ask is whether these correspond to what is present in consciousness at any given moment in time, or what is reconstructed through other processes aggregating information experienced at different times. If this crucially marks the difference between genuine multisensory awareness and other processes where conscious information can be coalesced, the difference is certainly easier to draw conceptually than experimentally. Spence and Bayne (2015), for instance, question whether reports of multisensory events or objects should be taken at face value for being about a unified conscious episode, and whether they do not perhaps hide a rapid switch of attention between unisensory conscious episodes. The co-attribution to a single object would then not depend on the awareness of a multisensory object but on something like an ‘attentional glue’. In the absence of a good model for how attention could perform this role, it might be sufficient to note that the co-attribution to a single object might be a matter of nonconscious representation, which keeps track of, and predicts a relation of cooccurrence and colocalisation between two properties (Deroy, 2014). In other words, researchers interested in the link between multisensory integration and awareness should not take for granted that the kind of contents described above and reported by participants require multisensory access (see Table 1). Room should be left to explore how contents and access could come apart. While there is good evidence that we keep track of multisensory contents, a key question is to know whether those get manifested in consciousness or sit outside awareness. 
A second key question is whether the same process or analysis applies to all these cases. Integrating redundant information across the senses on the assumption that it concerns a single property, determining whether two kinds of information should be referred to the same object, and working out how objects relate to one another in a scene are different processes. Each will likely need to be investigated separately when it comes to its dependence on, and manifestation in, consciousness.

Table 1.

Overview of the three kinds of cases falling under the heading of multisensory awareness. Evidence of multisensory access is different from evidence that our brains and minds are integrating information about properties, objects and scenes, as these contents could be the result of unconscious processes, and not experienced at once

| | Integration of information regarding a single property | Attribution of different properties referred to the same object | Copresence of multiple objects in the same scene |
|---|---|---|---|
| Content | Multisensory property | Multisensory object | Multisensory scene |
| Access | Being aware of a single property across different senses at the same time | Being aware that two unisensory properties belong to the same object or are part of a single event | Being aware that two unisensory or multisensory objects are present at the same time in the environment |

3. What Is the Relationship Between Multisensory Integration and Awareness?

One of the key functions of the human brain is to monitor bodily states (interoception) and environmental states (exteroception) (Blanke, 2012; Critchley and Harrison, 2013; Faivre et al., 2015). Despite the tremendous amount and variability of exteroceptive and interoceptive signals the brain has to process, such monitoring seems to be performed flawlessly, and one experiences being an integrated bodily self, evolving in a unified, multisensory world (i.e., phenomenal unity, Chalmers and Bayne, 2003). Intuitively, perceptual consciousness (i.e., the subjective experiences caused by a subset of perceptual processes) may be better characterized as multisensory in essence, reflecting multisensory wholes rather than sums of unisensory features. In this respect, it is important to distinguish situations in which percepts from different modalities merely coexist (e.g., reading while scratching my hand) from situations in which they merge into a single unitary experience (e.g., looking at my hand being scratched; Deroy et al., 2014). Many theories of perceptual consciousness postulate strong interdependencies between consciousness and the capacity to integrate information across the senses, but also across spatial, temporal, and semantic dimensions (Mudrik et al., 2014). Accordingly, when consciously processing signals of multiple sensory origins, one may have privileged access to the integrated product while losing access to its component parts, and therefore experience phenomenal unity. Exploring the properties of phenomenal unity empirically is challenging, considering the nonspecific nature of subjective report (“Did you experience a multisensory object or two unisensory features?”), but also the discrepancy between phenomenal experience and multisensory integration as measured at the neural level (Deroy et al., 2014). 
Initial evidence has shown that participants do not fuse signals from vision and haptics into perceptual metamers: they remain able to distinguish between perceptual estimates based on congruent and incongruent signals (Hillis et al., 2002). These results suggest that participants retained, at least to some extent, access to the sensory component signals rather than only to one unified multisensory estimate.

At the behavioural level, it has been repeatedly shown that the processing of an invisible stimulus is affected by the processing of supraliminal stimuli in the auditory, tactile, proprioceptive, vestibular, or olfactory modalities (see below for details). Yet because in these studies participants were always conscious of the nonvisual stimulus, these results could well reflect the interplay between unconscious vision and conscious processes in another modality, rather than an integrative process between two unconscious representations. Information about the supraliminal stimulus is possibly broadcast throughout the brain, and modulates visual neurons activated by the invisible stimulus.

Thus, these results are compatible with the view that multisensory integration requires consciousness, but we will now see that other studies in which no stimulus is consciously perceived are more decisive. In one of them (Arzi et al., 2012), it was shown that associations between tones and odours occurred during NREM sleep, arguably in the complete absence of awareness. The authors relied on partial reinforcement trace conditioning, and measured sniff responses to tones previously paired with pleasant and unpleasant odours while participants were sleeping. Even though subjects were in the NREM sleep stage, and arguably unconscious, they sniffed in response to tones alone, suggesting that they learned novel multisensory associations unconsciously. However, controlling stimulus awareness during sleep is difficult, and the possibility remains that the stimuli were consciously accessed when presented, but forgotten by the time of awaking. In another study trying to account for this potential limitation, awake participants were shown to compare the numerical information conveyed by an invisible image and an inaudible sound (Faivre et al., 2014). Interestingly, such unconscious audiovisual comparisons only occurred in those cases where the participants had previously been trained with consciously perceived stimuli, thus suggesting that conscious but not unconscious training enabled subsequent unconscious processes. The level at which the comparison of written and spoken digits operates is still an open question. While it could involve multisensory analyses of low level visual and acoustic features, a possibility remains that the comparison is made independently of perceptual features, once the visual and auditory stimuli have separately reached an amodal, semantic representation. Moreover, multisensory comparisons (e.g., congruency judgments) do not necessarily imply multisensory integration. 
Future studies may help to disentangle these various mechanisms (Noel et al., 2015). First, disrupting the spatiotemporal structure of the audiovisual stream should have a larger impact on the comparative process if it operates at a perceptual level rather than at a semantic, non-perceptual level. Second, if the results rely on multisensory interactions in the absence of awareness, weakening the visual and auditory signals may increase the relative strength of their integrated product, by virtue of audiovisual inverse effectiveness (Stanford, 2005; Stein et al., 2009; von Saldern and Noppeney, 2013). Third, if two subliminal signals are indeed integrated into a unified percept rather than only compared, the integrated percept should be able to prime subsequent perceptual processing.
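
The second prediction can be made concrete with the enhancement index commonly used in the multisensory literature, which expresses the multisensory response as a percentage gain over the best unisensory response. The sketch below is illustrative only: the function name and all response values are hypothetical and are not taken from the studies cited here.

```python
def multisensory_enhancement(resp_a, resp_v, resp_av):
    """Percentage gain of the audiovisual response over the best unisensory response."""
    best_unisensory = max(resp_a, resp_v)
    return 100.0 * (resp_av - best_unisensory) / best_unisensory


# Hypothetical responses (arbitrary units) to strong and to weak stimuli.
strong_gain = multisensory_enhancement(resp_a=20.0, resp_v=25.0, resp_av=30.0)
weak_gain = multisensory_enhancement(resp_a=2.0, resp_v=3.0, resp_av=6.0)

print(f"strong stimuli: {strong_gain:.0f}% enhancement")
print(f"weak stimuli:   {weak_gain:.0f}% enhancement")
```

Under inverse effectiveness, the proportional gain is larger for the weak stimuli even though their absolute responses are smaller. Observing such a pattern for subliminal audiovisual signals would favour a genuinely integrative account over a purely comparative one.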

At the neural level, several mechanisms support the possibility of unconscious multisensory integration. First, unconscious multisensory integration may be enabled by multisensory neurons that do not take part in large scale interactions. While such neurons have been described at relatively low levels in the brain, including primary sensory (Ghazanfar and Schroeder, 2006; Kayser et al., 2010; Lee and Noppeney, 2011, 2014; Liang et al., 2013; Meyer et al., 2010; Rohe and Noppeney, 2016; Vetter et al., 2014; Werner and Noppeney, 2010a) and subcortical structures such as the superior colliculus (see Meredith and Stein, 1986; Stein and Stanford, 2008, for a review), their relevance for elaborate cognitive functions remains to be assessed. Second, and higher in the neural hierarchy, another possibility is that unconscious multisensory integration operates through feedforward connections (and most likely outside of awareness, see Lamme and Roelfsema, 2000) between sensory cortical areas and multisensory convergence zones such as the superior temporal sulcus or the posterior parietal cortex (Schroeder and Foxe, 2005; Werner and Noppeney, 2010b). Interestingly, such feedforward processes within low level cortices and at early post-stimulus latencies have been shown to affect multisensory information processing and behaviour despite stimulus unawareness (e.g., phosphene perception enhancement by unconscious looming sounds, Romei et al., 2009). In contrast with these mechanisms, multisensory integration is sometimes held to require long range feedback connections between sensory cortices and frontoparietal networks, a mechanism that typically coincides with conscious access (Dehaene and Changeux, 2011). Hence, disentangling bottom-up and top-down multisensory processes is likely to be an important step towards understanding the intricate links between multisensory integration and consciousness (De Meo et al., 2015).

4. Which Experimental Approaches Are Most Promising to Characterize Multisensory Awareness?

Over the past decade, a growing number of studies have focused on the emergence of perceptual awareness in multisensory contexts. The majority of those studies have investigated how a signal arising from another sensory modality can modulate access to visual awareness, using paradigms in which visual stimuli, although present on the retina, are suppressed from visual awareness, such as the attentional blink, masking, and multistable perception. Here we focus on experimental approaches using bistable visual stimuli (Blake and Logothetis, 2002) to investigate multisensory interactions during different states of visual awareness.

4.1. Bistable Perception of Ambiguous Figures

Our visual system is often faced with perceptual ambiguity, and perceptual decisions need to be made to interact efficiently with the external world. According to the Bayesian theory of perception (for a review, see Knill and Pouget, 2004), the brain deals with perceptual uncertainty and ambiguity by representing sensory information in the form of probability distributions. If different perceptual interpretations have the same likelihood and are mutually exclusive, the visual system cannot ‘decide’ in favour of one or the other and visual perception periodically oscillates between the two alternatives, a phenomenon called bistable perception (Dayan, 1998). Bistable perception is thought to be generated by the competition between neural populations representing different interpretations of a visual stimulus (Blake and Logothetis, 2002). Perceptual bistability can arise from different forms of ambiguity: ambiguity in depth (e.g., the Necker cube, Necker, 1832), ambiguity in figure–ground segregation (e.g., Rubin’s face–vase illusion, Rubin, 1915), ambiguity between high level interpretations of images (e.g., Boring’s young girl/old woman figure, Boring, 1930), and ambiguity in the direction of motion (e.g., the kinetic depth effect, Doner et al., 1984). An interesting approach to the study of multisensory awareness is to investigate whether a signal arising from another sensory modality can disambiguate bistable perception, favouring access to awareness of the interpretation of the visual stimulus that is congruent with the crossmodal stimulus. However, as pointed out by Deroy et al. (2014), since the bistable perception of ambiguous figures is to some extent under attentional control (Gómez et al., 1995; Horlitz and O’Leary, 1993; Liebert and Burk, 1985), it is difficult to disentangle the contribution of attention in mediating the effect of crossmodal stimulation on ambiguous figure perception. 
In fact, the interaction between bistable perception and tactile (visuotactile kinetic depth effect, Blake et al., 2004, and visuotactile Necker cube, Bruno et al., 2007), auditory (Rubin’s face/vase illusion with faces and a voice uttering a syllable, Munhall et al., 2009) and olfactory (ambiguous motion direction associated with a particular smell, Kuang and Zhang, 2014) stimuli depends on awareness of the congruent interpretation of the visual stimulus. That is, crossmodal stimulation only interacts with the representation of the stimulus dominating the observer’s perception, prolonging its duration.

4.2. Binocular Rivalry

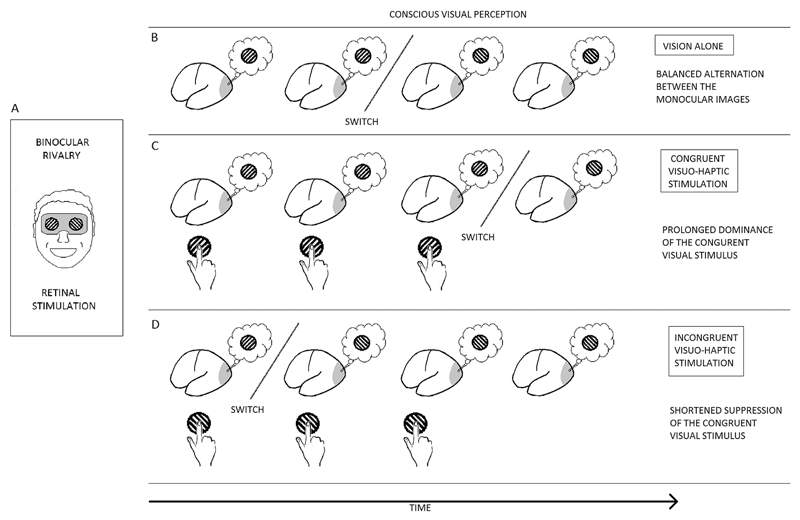

A special case of perceptual bistability is binocular rivalry (Levelt, 1965), which is caused by a conflict between monocular images rather than between different interpretations of the same monocular image. In a binocular rivalry display, incompatible images are simultaneously presented to each eye (Fig. 1A). In this condition, the two monocular images do not fuse into a coherent percept, but engage in a strong competition for visual awareness that, in a ‘winner takes all’ dynamic, leads to perceptual oscillations between the two images: the observer’s perception is dominated by the stimulus presented to one eye for a few seconds until a perceptual switch occurs in favour of the previously suppressed image (Fig. 1B). Importantly, during binocular rivalry, the suppressed visual stimulus is rendered invisible by the dominant one despite its presence on the retina. Compared to other forms of bistable perception, binocular rivalry is thought to be more automatic and stimulus-driven, ambiguous figures being more likely to be controlled by cognitive factors such as voluntary control or attention (Meng and Tong, 2004). Importantly, voluntary attentional control over binocular rivalry is limited to dominance of the attended visual stimulus, as the observer cannot voluntarily provoke a switch but can only hold the dominant stimulus for a longer time (for a review on attention and binocular rivalry see Paffen and Alais, 2011). Even though experimental evidence shows that visual stimuli rendered invisible can exogenously capture attention and thus provide a cue for different kinds of visual tasks (Astle et al., 2010; Hsieh and Colas, 2012; Hsieh et al., 2011; Lamy et al., 2015; Zhang and Fang, 2012), it is more difficult to voluntarily select the suppressed visual stimulus during binocular rivalry. In this vein, if crossmodal stimulation influences the dynamics of binocular rivalry only by prolonging dominance durations of the congruent visual stimulus (Fig. 1C reports an example of visuohaptic interactions during binocular rivalry), the effect could, in principle, be mediated by a crossmodal shift of attention or a higher level cognitive decision. If, on the other hand, crossmodal stimulation shortens the suppression of the congruent visual stimulus (provoking a switch when the dominant visual stimulus and the crossmodal stimulus are incongruent, Fig. 1D), thereby promoting access to awareness of the suppressed visual stimulus, the effect is likely to reflect a genuine case of multisensory awareness. It has been argued (Deroy et al., 2014) that the crossmodal modulation of visual awareness does not represent multisensory awareness, but only a case of multisensory interaction, on the basis that this experimental approach studies awareness in a unisensory framework (for example, access to visual awareness) and not the establishment of multisensory awareness from information in different sensory modalities. However, in this case, the observer is not aware of a visual stimulus on its own, and awareness is built by integrating signals from different modalities; we may therefore be able to consider it a case of multisensory awareness.

Figure 1.

Diagram of a binocular rivalry display and possible effects of crossmodal stimulation on rivalrous visual perception. (A) An example of dichoptic stimulation in which orthogonal gratings are separately presented to the two eyes. (B) The resulting conscious perception is dominated by one of the two monocular images until a perceptual switch occurs in favour of the other visual stimulus. Normally, the dominance durations of the two rivalrous stimuli are balanced. (C) Example of crossmodal stimulation prolonging dominance of the congruent visual stimulus during binocular rivalry: if the observer touches a haptic grating parallel to the visual grating dominating rivalrous perception, the switch towards the orthogonal (incongruent) visual grating is delayed as compared to visual only stimulation. (D) Example of crossmodal stimulation shortening the suppression of the congruent visual stimulus during binocular rivalry: if the observer touches a haptic grating orthogonal to the visual grating dominating rivalrous perception, the switch towards the parallel (congruent) visual grating occurs earlier compared to visual only stimulation.

Several studies have reported multisensory effects on binocular rivalry that depend on awareness and are therefore possibly mediated by attention: dominance durations of the congruent visual stimulus are prolonged by auditory stimulation (Conrad et al., 2010; Guzman-Martinez et al., 2012; Kang and Blake, 2005; Lee et al., 2015), by nostril-specific olfactory stimulation (Zhou et al., 2010), and by imitation of a grasping movement rivalling against a checkerboard (Di Pace and Saracini, 2014). A close link between crossmodal attention and binocular rivalry has been demonstrated by a study showing that crossmodal stimulation enhances people’s attentional control over binocular rivalry (Van Ee et al., 2009). In this study, observers were asked to attend selectively to one of the rivalrous visual stimuli, which prolonged dominance durations of the attended stimulus compared to passive viewing. If either a sound or a vibration congruent with the attended visual stimulus was delivered simultaneously, dominance durations of the attended visual stimulus increased further compared to the visual only condition. This result has recently been replicated using auditory and visual speech stimuli (Vidal and Barrès, 2014): auditory syllables increased voluntary control over the rivalrous image of lips uttering the congruent syllable.
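
The dominance durations referred to throughout this section are typically derived from the observer's continuous report of which image is currently seen. As a minimal sketch of that computation, assuming a hypothetical data format in which each reported percept is stored with its onset time, mean dominance durations per percept could be obtained as follows. The function name, labels, and timings are invented for illustration.

```python
from collections import defaultdict


def mean_dominance_durations(onsets, percepts, end_time):
    """Mean duration (in seconds) of each percept's dominance phases.

    onsets[i] is the time at which percepts[i] became dominant; the final
    phase is taken to end at end_time. In practice the final, truncated
    phase is often discarded; it is kept here for simplicity.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    boundaries = list(onsets) + [end_time]
    for i, percept in enumerate(percepts):
        totals[percept] += boundaries[i + 1] - boundaries[i]
        counts[percept] += 1
    return {p: totals[p] / counts[p] for p in totals}


# Hypothetical trial: the grating congruent with the crossmodal stimulus
# alternates with the incongruent grating over a 10 s trial.
onsets = [0.0, 3.0, 5.0, 9.0]
percepts = ["congruent", "incongruent", "congruent", "incongruent"]
durations = mean_dominance_durations(onsets, percepts, end_time=10.0)
print(durations)
```

In such data, a longer mean duration for the congruent percept relative to a visual only baseline corresponds to prolonged dominance, whereas a shorter mean duration for the incongruent percept corresponds to shortened suppression of the congruent stimulus.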

What about crossmodal stimuli interacting with the suppression of the congruent visual stimulus? Several experiments have demonstrated that haptic and auditory stimulation interact with binocular rivalry by rescuing the congruent visual stimulus from binocular suppression (Conrad et al., 2010; Lunghi et al., 2010). In a first study, Lunghi et al. (2010) demonstrated that, during binocular rivalry between orthogonally oriented visual gratings, active exploration of a haptic grating promoted dominance of the rivalrous visual grating congruent in orientation, both by prolonging its dominance durations (delaying the time of a perceptual switch during congruent visuohaptic stimulation) and by shortening its suppression (hastening the time of a perceptual switch during incongruent visuohaptic stimulation) as compared to visual only stimulation. The effect of haptic stimulation on the suppressed visual stimulus has been shown to depend critically on the match between visuohaptic spatial frequencies (Lunghi et al., 2010) and orientations (Lunghi and Alais, 2013) and on the co-location of the haptic and visual stimuli (Lunghi and Morrone, 2013), indicating that the visual and haptic stimuli have to be perceived as being part of the same object and not simply cognitively associated. Moreover, a binocular rivalry experiment investigating suppression depth (the difference between contrast detection thresholds measured during dominance and suppression phases of binocular rivalry) during haptic stimulation has shown that haptic stimulation influences the dynamics of binocular rivalry mainly by preventing the congruent visual stimulus from becoming deeply suppressed (Lunghi and Alais, 2015). This study addresses a possible confound: during binocular rivalry, the monocular signals mutually inhibit each other. In principle, therefore, touch could shorten suppression of the congruent visual stimulus either by interacting with it directly or by interfering with the incongruent dominant stimulus and reducing its strength. By demonstrating that congruent touch improves contrast detection thresholds during suppression, whereas incongruent touch does not have a masking effect on contrast detection thresholds during dominance (i.e., thresholds are no higher during incongruent touch), Lunghi and Alais (2015) have shown that crossmodal stimulation during binocular rivalry actually boosts the suppressed visual signal.
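The suppression-depth logic can be made concrete with a minimal sketch. The thresholds below are invented numbers chosen only to illustrate the pattern described above, not values from Lunghi and Alais (2015). The function name `suppression_depth` is hypothetical, and expressing depth as a log10 threshold ratio is an assumption of this sketch (one common convention for a difference between thresholds on a logarithmic contrast axis).

```python
import math

def suppression_depth(threshold_dominance, threshold_suppression):
    """Suppression depth in log10 units (assumed convention):
    how much higher the contrast detection threshold is during
    suppression than during dominance of the same stimulus."""
    return math.log10(threshold_suppression / threshold_dominance)

# Hypothetical contrast detection thresholds, for illustration only.
thresholds = {
    "visual_only":     {"dominance": 0.02, "suppression": 0.06},
    "congruent_touch": {"dominance": 0.02, "suppression": 0.04},
}

depths = {
    condition: suppression_depth(t["dominance"], t["suppression"])
    for condition, t in thresholds.items()
}

for condition, depth in depths.items():
    print(f"{condition}: {depth:.2f} log units")
```

In this toy case, congruent touch lowers the threshold during suppression while leaving the dominance threshold unchanged, so suppression depth shrinks; this is the signature of a boost to the suppressed signal rather than a weakening of the dominant one.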

Similar effects on the suppressed visual stimulus have been reported for voluntary action (voluntarily controlling the motion direction of one of the rivalrous stimuli by an active movement of the arm shortens its suppression; Maruya et al., 2007), simple and naturalistic motion sounds (Blake et al., 2004; Conrad et al., 2010, 2013), olfaction (the suppression duration of the picture of either a marker or a rose is reduced when a congruent odorant is smelled; Zhou et al., 2010), ecologically relevant sounds (hearing a bird singing reduces suppression of the picture of a bird; Chen et al., 2011) and temporal events (auditory and tactile temporal events combine to synchronize binocular rivalry between visual stimuli differing in temporal frequency; Lunghi et al., 2014).

4.3. Continuous Flash Suppression

In order to selectively study the effect of crossmodal stimulation on visual stimuli undergoing interocular suppression, the method of continuous flash suppression (CFS) can be used, for it allows deep and constant suppression of a salient visual stimulus over extended periods of time (Tsuchiya and Koch, 2005). When one eye is continuously flashed with different, contour-rich, high contrast random patterns (e.g., white noise, Mondrian patterns, scrambled images) at about 10 Hz, information presented to the other eye is perceptually suppressed for extended periods of time (up to 3 min or more). Suppression provoked by continuous flashes has been shown to summate, resulting not only in longer suppression periods, but also in deeper suppression of the other eye: detection thresholds of probes presented to the suppressed eye during CFS are elevated by a factor of 20 relative to monocular viewing, compared with a 3-fold elevation observed during binocular rivalry (Tsuchiya et al., 2006). Importantly, in binocular rivalry, perception continuously alternates between the monocular images, leading to some cognitive awareness of the suppressed stimulus, for it was the dominant one before the perceptual switch. By contrast, during CFS the coherent stimulus is deeply suppressed by the flashing masks, so the observer is totally unaware of the suppressed visual stimulus, not only at the perceptual level (the stimulus is invisible), but also at the cognitive level (no information about the suppressed visual stimulus is available to the observer either from memory or predictions). If a visual stimulus were released from CFS by a congruent crossmodal stimulus, thereby gaining access to visual awareness, it would provide a case of multisensory awareness, or at least of awareness that has been induced multisensorially.
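To illustrate what a flash rate of about 10 Hz implies in practice, the following minimal sketch computes how many display frames each mask occupies and when each new mask is drawn. The 60 Hz refresh rate, the 3 s trial duration and the function names are assumptions made for illustration; they are not taken from the studies cited above.

```python
def frames_per_mask(refresh_hz, mask_hz):
    """Number of display frames each mask stays on screen.

    Raises ValueError if the mask rate does not divide the refresh rate
    evenly, because the schedule could then not be frame-locked.
    """
    frames = refresh_hz / mask_hz
    if abs(frames - round(frames)) > 1e-9:
        raise ValueError("mask rate must divide the refresh rate evenly")
    return int(round(frames))

def mask_onsets(duration_s, mask_hz):
    """Onset times (in s) of successive masks within one trial."""
    n_masks = int(round(duration_s * mask_hz))
    return [i / mask_hz for i in range(n_masks)]

REFRESH_HZ = 60.0  # assumed monitor refresh rate
MASK_HZ = 10.0     # flash rate mentioned in the text
TRIAL_S = 3.0      # assumed trial duration

n_frames = frames_per_mask(REFRESH_HZ, MASK_HZ)
onsets = mask_onsets(TRIAL_S, MASK_HZ)
print(f"{n_frames} frames per mask, {len(onsets)} masks per trial")
```

Under these assumptions a new mask is drawn every 100 ms, so a trial of a few seconds already contains tens of distinct masks presented to the masked eye.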

Recent studies have described crossmodal influences on CFS. Alsius and Munhall (2013) have shown that a movie of lips uttering a sentence, made invisible by CFS, is released from suppression earlier if observers listen to a voice speaking the same sentence than if they hear an incongruent sentence. Salomon et al. (2013) have reported a similar result for proprioceptive signals, demonstrating that the image of a hand (perceptually projected on the observer’s real hand) is suppressed for a shorter time during CFS if it matches the position of the observer’s own hand as compared to an incongruent position. A recent study from the same group (Salomon et al., 2015) has also shown a facilitation for congruent versus incongruent visuo-vestibular stimulation during CFS. Finally, auditory facilitation of suppressed visual stimuli has been shown to depend on spatial collocation of the crossmodal stimuli both in azimuth (Aller et al., 2015) and in depth (Yang and Yeh, 2014).

Taken together, the results reviewed here suggest that binocular rivalry and CFS are two promising techniques for characterizing multisensory awareness. First, suppressed visual stimuli are boosted into visual awareness via very specific mechanisms that rely on classical multisensory congruency cues indicating whether sensory signals are caused by a common event. Second, binocular rivalry suppression and CFS are impenetrable to voluntary attention, so a genuine multisensory effect is unlikely to be mediated by crossmodally driven attentional shifts. Third, a variety of sensory signals contribute to the multisensory enhancement of awareness (audition, touch, proprioception, voluntary action, olfaction, and the vestibular system), pointing to a genuinely supramodal mechanism mediating and consolidating awareness. Interestingly, one study has shown that observers can learn to use invisible information (for example, a vertical disparity gradient masked by other visual stimuli) to disambiguate visual perception in a bistable display (Di Luca et al., 2010). This suggests that similar learning paradigms using subliminal crossmodal stimuli in combination with either binocular rivalry or CFS could be used in the future to study crossmodal awareness.

5. Concluding Remarks

Our discussion has highlighted substantial advances in our understanding of multisensory awareness over the past decade. Nevertheless, research into the relationship between multisensory integration and perceptual awareness faces several unresolved challenges:

First, it remains a matter of debate which perceptual experiences are necessarily associated with multisensory awareness. In the face of uncertainty concerning the underlying causal structure of the world, the brain often does not integrate sensory signals into one unified multisensory percept. For instance, in the spatial ventriloquist illusion, participants tend to report different locations for the visual and the auditory signal sources, with the perceived sound location being shifted towards the visual signal and the perceived visual location towards the auditory signal, depending on the relative reliabilities of the two signals (Körding et al., 2007; Rohe and Noppeney, 2015, 2016).
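The pattern of partial, reliability-dependent shifts can be reproduced with a short numerical sketch in the spirit of the Bayesian causal inference account of Körding et al. (2007). It assumes Gaussian sensory noise, a Gaussian spatial prior and a model-averaging decision rule; all parameter values and the function name `causal_inference_estimates` are illustrative assumptions and are not fitted to any data set.

```python
import math

def causal_inference_estimates(x_v, x_a, sigma_v, sigma_a, sigma_p,
                               mu_p=0.0, p_common=0.5):
    """Return (visual estimate, auditory estimate, posterior P(common cause)).

    x_v, x_a: noisy visual and auditory location measurements (deg).
    sigma_v, sigma_a: sensory noise SDs; sigma_p, mu_p: spatial prior.
    """
    var_v, var_a, var_p = sigma_v ** 2, sigma_a ** 2, sigma_p ** 2

    # Likelihood of both measurements under one common source (C = 1).
    denom_c1 = var_v * var_a + var_v * var_p + var_a * var_p
    quad_c1 = ((x_v - x_a) ** 2 * var_p + (x_v - mu_p) ** 2 * var_a
               + (x_a - mu_p) ** 2 * var_v) / denom_c1
    like_c1 = math.exp(-0.5 * quad_c1) / (2 * math.pi * math.sqrt(denom_c1))

    # Likelihood under two independent sources (C = 2).
    quad_c2 = ((x_v - mu_p) ** 2 / (var_v + var_p)
               + (x_a - mu_p) ** 2 / (var_a + var_p))
    like_c2 = math.exp(-0.5 * quad_c2) / (
        2 * math.pi * math.sqrt((var_v + var_p) * (var_a + var_p)))

    post_c1 = like_c1 * p_common / (like_c1 * p_common + like_c2 * (1 - p_common))

    # Reliability-weighted estimates: fused (C = 1) and segregated (C = 2).
    fused = (x_v / var_v + x_a / var_a + mu_p / var_p) / (
        1 / var_v + 1 / var_a + 1 / var_p)
    seg_v = (x_v / var_v + mu_p / var_p) / (1 / var_v + 1 / var_p)
    seg_a = (x_a / var_a + mu_p / var_p) / (1 / var_a + 1 / var_p)

    # Model averaging: weight each estimate by the posterior over causes.
    s_v = post_c1 * fused + (1 - post_c1) * seg_v
    s_a = post_c1 * fused + (1 - post_c1) * seg_a
    return s_v, s_a, post_c1

# Illustrative case: a reliable flash at -5 deg and an unreliable sound at +5 deg.
x_v, x_a = -5.0, 5.0
s_v, s_a, post_c1 = causal_inference_estimates(x_v, x_a, sigma_v=2.0,
                                               sigma_a=8.0, sigma_p=20.0)
print(f"visual estimate {s_v:.2f}, auditory estimate {s_a:.2f}, "
      f"P(common cause) {post_c1:.2f}")
```

With these assumed values the two reported locations differ, the unreliable sound is pulled strongly towards the flash, and the reliable flash is pulled only slightly towards the sound.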

Can a sound percept that is influenced by a visual signal be considered an example of multisensory awareness? Further, when participants report both the perceived sound location and the perceived visual location, are they concurrently aware of both signals, or do they rapidly switch their attention and awareness between perceptual and memory representations from different sensory modalities? Finally, in those cases where participants are thought to integrate sensory signals into one unified percept and report identical locations for both sensory signals, does this guarantee the emergence of integrated multisensory awareness, or are participants simply unable to dissociate between the two sorts of unisensory awareness in their report? In the light of these puzzling questions, it is interesting to note that participants were not able to perceive and report the motion direction in both vision and touch when presented concurrently with a bistable motion quartet in the visual and tactile modalities (Conrad et al., 2012). Thus, at least in those situations where perception in the individual sensory modalities requires sustained temporal perceptual binding (such as in the case of apparent motion), multisensory awareness may not necessarily emerge. Instead, in these instances, awareness switches between sensory modalities such as vision and touch.

Second, numerous studies in anaesthetized animals have demonstrated that multisensory interactions can emerge in the absence of awareness (Stein and Meredith, 1993). Yet, their relevance for conscious perception remains to be determined. While accumulating evidence suggests that aware signals can boost unaware signals into awareness, little is known about whether the reverse is also true. Can unaware signals in one sensory modality influence perception in another sensory modality? Experiments focusing on the latter question are more informative, because the former effect could be explained simply by nonspecific top-down effects. Moreover, experiments could subliminally present signals in two sensory modalities that can be integrated into a unified percept, in order to test whether the subliminally integrated estimate influences subsequent conscious perception.

Third, research into perceptual awareness in unisensory contexts has recently refocused on classical metacognitive questions and asked to what extent participants can recognize their own perceptual performance and abilities. Extending these questions to multisensory contexts is an exciting and as yet little explored avenue that would provide further insights into the emergence of multisensory integration, perception and awareness.

Acknowledgements

This work was supported by EU FP7 Framework Programme FPT/2007-2013, ECSPLAIN, n.338866 (CL), European Research Council ERC-STG multsens (UN), the AHRC Rethinking the Senses grant AH/L007053/1 (OD and CS) and a Marie Skłodowska-Curie Fellowship (NF).

Notes

1. This aspect is seldom approached in the experimental literature, and more often discussed in the philosophical literature as a form of ‘phenomenal unity’. See Deroy (2014) for review and discussion.

References

- Adam R, Noppeney U. A phonologically congruent sound boosts a visual target into perceptual awareness. Front Integr Neurosci. 2014;8:70. doi: 10.3389/fnint.2014.00070.

- Alais D, Burr D. Ventriloquist effect results from near-optimal bimodal integration. Curr Biol. 2004;14:257–262. doi: 10.1016/j.cub.2004.01.029.

- Allen J, Kraus N, Bradlow A. Neural representation of consciously imperceptible speech sound differences. Percept Psychophys. 2000;62:1383–1393. doi: 10.3758/bf03212140.

- Aller M, Giani A, Conrad V, Watanabe M, Noppeney U. A spatially collocated sound thrusts a flash into awareness. Front Integr Neurosci. 2015;9:16. doi: 10.3389/fnint.2015.00016.

- Alsius A, Munhall KG. Detection of audiovisual speech correspondences without visual awareness. Psychol Sci. 2013;24:423–431. doi: 10.1177/0956797612457378.

- Andersen TS, Tiippana K, Sams M. Factors influencing audiovisual fission and fusion illusions. Cogn Brain Res. 2004;21:301–308. doi: 10.1016/j.cogbrainres.2004.06.004.

- Arzi A, Shedlesky L, Ben-Shaul M, Nasser K, Oksenberg A, Hairston IS, Sobel N. Humans can learn new information during sleep. Nat Neurosci. 2012;15:1460–1465. doi: 10.1038/nn.3193.

- Astle DE, Nobre AC, Scerif G. Subliminally presented and stored objects capture spatial attention. J Neurosci. 2010;30:3567–3571. doi: 10.1523/JNEUROSCI.5701-09.2010.

- Balduzzi D, Tononi G. Integrated information in discrete dynamical systems: motivation and theoretical framework. PLoS Comput Biol. 2008;4:e1000091. doi: 10.1371/journal.pcbi.1000091.

- Bekinschtein TA, Dehaene S, Rohaut B, Tadel F, Cohen L, Naccache L. Neural signature of the conscious processing of auditory regularities. Proc Natl Acad Sci USA. 2009;106:1672–1677. doi: 10.1073/pnas.0809667106.

- Bertelson P, Aschersleben G. Automatic visual bias of perceived auditory location. Psychonom Bull Rev. 1998;5:482–489.

- Blake R, Logothetis NK. Visual competition. Nat Rev Neurosci. 2002;3:13–21. doi: 10.1038/nrn701.

- Blake R, Sobel KV, James TW. Neural synergy between kinetic vision and touch. Psychol Sci. 2004;15:397–402. doi: 10.1111/j.0956-7976.2004.00691.x.

- Blanke O. Multisensory brain mechanisms of bodily self-consciousness. Nat Rev Neurosci. 2012;13:556–571. doi: 10.1038/nrn3292.

- Boring EG. A new ambiguous figure. Am J Psychol. 1930;42:444–445.

- Bruno N, Jacomuzzi A, Bertamini M, Meyer G. A visual-haptic Necker cube reveals temporal constraints on intersensory merging during perceptual exploration. Neuropsychologia. 2007;45:469–475. doi: 10.1016/j.neuropsychologia.2006.01.032.

- Chalmers D, Bayne T. What is the unity of consciousness? In: Cleeremans A, Frith C, editors. The Unity of Consciousness: Binding, Integration, and Dissociation. Oxford University Press; Oxford, UK: 2003. pp. 23–58.

- Chen Y-C, Spence C. When hearing the bark helps to identify the dog: semantically-congruent sounds modulate the identification of masked pictures. Cognition. 2010;114:389–404. doi: 10.1016/j.cognition.2009.10.012.

- Chen Y-C, Spence C. Crossmodal semantic priming by naturalistic sounds and spoken words enhances visual sensitivity. J Exp Psychol Hum Percept Perform. 2011a;37:1554–1568. doi: 10.1037/a0024329.

- Chen Y-C, Spence C. The crossmodal facilitation of visual object representations by sound: evidence from the backward masking paradigm. J Exp Psychol Hum Percept Perform. 2011b;37:1784–1802. doi: 10.1037/a0025638.

- Chen Y-C, Yeh S-L, Spence C. Crossmodal constraints on human perceptual awareness: auditory semantic modulation of binocular rivalry. Front Psychol. 2011;2:212. doi: 10.3389/fpsyg.2011.00212.

- Conrad V, Bartels A, Kleiner M, Noppeney U. Audiovisual interactions in binocular rivalry. J Vis. 2010;10:27. doi: 10.1167/10.10.27.

- Conrad V, Vitello MP, Noppeney U. Interactions between apparent motion rivalry in vision and touch. Psychol Sci. 2012;23:940–948. doi: 10.1177/0956797612438735.

- Conrad V, Kleiner M, Bartels A, Hartcher O’Brien J, Bülthoff HH, Noppeney U. Naturalistic stimulus structure determines the integration of audiovisual looming signals in binocular rivalry. PLoS ONE. 2013;8:e70710. doi: 10.1371/journal.pone.0070710.

- Critchley HD, Harrison NA. Visceral influences on brain and behavior. Neuron. 2013;77:624–638. doi: 10.1016/j.neuron.2013.02.008.

- Dayan P. A hierarchical model of binocular rivalry. Neural Comput. 1998;10:1119–1135. doi: 10.1162/089976698300017377.

- De Graaf TA, Hsieh PJ, Sack AT. The “correlates” in neural correlates of consciousness. Neurosci Biobehav Rev. 2012;36:191–197. doi: 10.1016/j.neubiorev.2011.05.012.

- De Meo R, Murray MM, Clarke S, Matusz PJ. Top-down control and early multisensory processes: chicken vs egg. Front Integr Neurosci. 2015;9:17. doi: 10.3389/fnint.2015.00017.

- Dehaene S. Towards a cognitive neuroscience of consciousness: basic evidence and a workspace framework. Cognition. 2001;79:1–37. doi: 10.1016/s0010-0277(00)00123-2.

- Dehaene S, Changeux J-P. Experimental and theoretical approaches to conscious processing. Neuron. 2011;70:200–227. doi: 10.1016/j.neuron.2011.03.018.

- Deroy O. The unity assumption and the many unities of consciousness. In: Bennett D, Hill C, editors. Sensory Integration and the Unity of Consciousness. MIT Press; Cambridge, MA, USA: 2014. pp. 105–124.

- Deroy O, Chen Y-C, Spence C. Multisensory constraints on awareness. Phil Trans R Soc Lond B Biol Sci. 2014;369(1641):20130207. doi: 10.1098/rstb.2013.0207.

- Di Luca M, Ernst MO, Backus BT. Learning to use an invisible visual signal for perception. Curr Biol. 2010;20:1860–1863. doi: 10.1016/j.cub.2010.09.047.

- Di Pace E, Saracini C. Action imitation changes perceptual alternations in binocular rivalry. PLoS ONE. 2014;9:e98305. doi: 10.1371/journal.pone.0098305.

- Doner J, Lappin JS, Perfetto G. Detection of three-dimensional structure in moving optical patterns. J Exp Psychol Hum Percept Perform. 1984;10:1–11. doi: 10.1037//0096-1523.10.1.1.

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415(6870):429–433. doi: 10.1038/415429a.

- Faivre N, Mudrik L, Schwartz N, Koch C. Multisensory integration in complete unawareness: evidence from audiovisual congruency priming. Psychol Sci. 2014;25:2006–2016. doi: 10.1177/0956797614547916.

- Faivre N, Salomon R, Blanke O. Visual consciousness and bodily self-consciousness. Curr Opin Neurol. 2015;28:23–28. doi: 10.1097/WCO.0000000000000160.

- Gallace A, Spence C. The cognitive and neural correlates of “tactile consciousness”: a multisensory perspective. Conscious Cogn. 2008;17:370–407. doi: 10.1016/j.concog.2007.01.005.

- Gallace A, Spence C. In Touch With the Future. Oxford University Press; Oxford, UK: 2014.

- Gau R, Noppeney U. How prior expectations shape multisensory perception. NeuroImage. 2016;124:876–886. doi: 10.1016/j.neuroimage.2015.09.045.

- Ghazanfar AA, Schroeder CE. Is neocortex essentially multisensory? Trends Cogn Sci. 2006;10:278–285. doi: 10.1016/j.tics.2006.04.008.

- Ghose GM, Maunsell J. Specialized representations in visual cortex: a role for binding? Neuron. 1999;24:79–85. doi: 10.1016/s0896-6273(00)80823-5.

- Giani AS, Belardinelli P, Ortiz E, Kleiner M, Noppeney U. Detecting tones in complex auditory scenes. NeuroImage. 2015;122:203–213. doi: 10.1016/j.neuroimage.2015.07.001.

- Gómez C, Argandoña ED, Solier RG, Angulo JC, Vázquez M. Timing and competition in networks representing ambiguous figures. Brain Cogn. 1995;29:103–114. doi: 10.1006/brcg.1995.1270.

- Gutschalk A, Micheyl C, Oxenham AJ. Neural correlates of auditory perceptual awareness under informational masking. PLoS Biol. 2008;6:1156–1165. doi: 10.1371/journal.pbio.0060138.

- Guzman-Martinez E, Ortega L, Grabowecky M, Mossbridge J, Suzuki S. Interactive coding of visual spatial frequency and auditory amplitude-modulation rate. Curr Biol. 2012;22:383–388. doi: 10.1016/j.cub.2012.01.004.

- Haynes JD, Driver J, Rees G. Visibility reflects dynamic changes of effective connectivity between V1 and fusiform cortex. Neuron. 2005;46:811–821. doi: 10.1016/j.neuron.2005.05.012.

- Heffner RS, Heffner HE. Evolution of sound localization in mammals. In: Webster DB, Popper AN, Fay RR, editors. The Evolutionary Biology of Hearing. Springer Verlag; New York, NY, USA: 1992a. pp. 691–715.

- Heffner RS, Heffner HE. Visual factors in sound localization in mammals. J Comp Neurol. 1992b;317:219–232. doi: 10.1002/cne.903170302.

- Hillis JM, Ernst MO, Banks MS, Landy MS. Combining sensory information: mandatory fusion within, but not between, senses. Science. 2002;298(5598):1627–1630. doi: 10.1126/science.1075396.

- Horlitz KL, O’Leary A. Satiation or availability? Effects of attention, memory, and imagery on the perception of ambiguous figures. Percept Psychophys. 1993;53:668–681. doi: 10.3758/bf03211743.

- Hsiao JY, Chen Y-C, Spence C, Yeh SL. Assessing the effects of audiovisual semantic congruency on the perception of a bistable figure. Conscious Cogn. 2012;21:775–787. doi: 10.1016/j.concog.2012.02.001.

- Hsieh P-J, Colas JT. Awareness is necessary for extracting patterns in working memory but not for directing spatial attention. J Exp Psychol Hum Percept Perform. 2012;38:1085–1090. doi: 10.1037/a0028345.

- Hsieh P-J, Colas JT, Kanwisher N. Pop-out without awareness: unseen feature singletons capture attention only when top-down attention is available. Psychol Sci. 2011;22:1220–1226. doi: 10.1177/0956797611419302.

- Jackson CV. Visual factors in auditory localization. Q J Exp Psychol. 1953;5:52–65.

- Kang M-S, Blake R. Perceptual synergy between seeing and hearing revealed during binocular rivalry. Psychologija. 2005;32:7–15.

- Kayser C, Logothetis NK, Panzeri S. Visual enhancement of the information representation in auditory cortex. Curr Biol. 2010;20:19–24. doi: 10.1016/j.cub.2009.10.068.

- Knill DC, Pouget A. The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci. 2004;27:712–719. doi: 10.1016/j.tins.2004.10.007.

- Körding KP, Beierholm U, Ma WJ, Quartz S, Tenenbaum JB, Shams L. Causal inference in multisensory perception. PLoS ONE. 2007;2:e943. doi: 10.1371/journal.pone.0000943.

- Kruger H, Collins T, Cavanagh P. Similar effects of saccades on auditory and visual localization suggest common spatial map. J Vis. 2014;14:1232.

- Kuang S, Zhang T. Smelling directions: olfaction modulates ambiguous visual motion perception. Sci Rep. 2014;4:5796. doi: 10.1038/srep05796.

- Lamme VAF. Towards a true neural stance on consciousness. Trends Cogn Sci. 2006;10:494–501. doi: 10.1016/j.tics.2006.09.001.

- Lamme VAF, Roelfsema PR. The distinct modes of vision offered by feedforward and recurrent processing. Trends Neurosci. 2000;23:571–579. doi: 10.1016/s0166-2236(00)01657-x.

- Lamy D, Alon L, Carmel T, Shalev N. The role of conscious perception in attentional capture and object-file updating. Psychol Sci. 2015;26:48–57. doi: 10.1177/0956797614556777.

- Lee H, Noppeney U. Long-term music training tunes how the brain temporally binds signals from multiple senses. Proc Natl Acad Sci USA. 2011;108:E1441–E1450. doi: 10.1073/pnas.1115267108.

- Lee H, Noppeney U. Temporal prediction errors in visual and auditory cortices. Curr Biol. 2014;24:R309–R310. doi: 10.1016/j.cub.2014.02.007.

- Lee M, Blake R, Kim S, Kim C-Y. Melodic sound enhances visual awareness of congruent musical notes, but only if you can read music. Proc Natl Acad Sci USA. 2015;112:8493–8498. doi: 10.1073/pnas.1509529112.

- Levelt WJ. On Binocular Rivalry. Institute for Perception; Soesterberg, Netherlands: 1965. [Google Scholar]

- Liang M, Mouraux A, Hu L, Iannetti GD. Primary sensory cortices contain distinguishable spatial patterns of activity for each sense. Nat Commun. 2013;4:1979. doi: 10.1038/ncomms2979. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liebert RM, Burk B. Voluntary control of reversible figures. Percept Mot Skills. 1985;61:1307–1310. doi: 10.2466/pms.1985.61.3f.1307. [DOI] [PubMed] [Google Scholar]

- Lunghi C, Alais D. Touch interacts with vision during binocular rivalry with a tight orientation tuning. PLoS ONE. 2013;8:e58754. doi: 10.1371/journal.pone.0058754. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lunghi C, Alais D. Congruent tactile stimulation reduces the strength of visual suppression during binocular rivalry. Sci Rep. 2015;5:9413. doi: 10.1038/srep09413. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lunghi C, Morrone MC. Early interaction between vision and touch during binocular rivalry. Multisens Res. 2013;26:291–306. doi: 10.1163/22134808-00002411. [DOI] [PubMed] [Google Scholar]

- Lunghi C, Binda P, Morrone MC. Touch disambiguates rivalrous perception at early stages of visual analysis. Curr Biol. 2010;20:R143–R144. doi: 10.1016/j.cub.2009.12.015. [DOI] [PubMed] [Google Scholar]

- Lunghi C, Morrone MC, Alais D. Auditory and tactile signals combine to influence vision during binocular rivalry. J Neurosci. 2014;34:784–792. doi: 10.1523/JNEUROSCI.2732-13.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Maeda F, Kanai R, Shimojo S. Changing pitch induced visual motion illusion. Curr Biol. 2004;14:R990–R991. doi: 10.1016/j.cub.2004.11.018. [DOI] [PubMed] [Google Scholar]

- Maruya K, Yang E, Blake R. Voluntary action influences visual competition. Psychol Sci. 2007;18:1090–1098. doi: 10.1111/j.1467-9280.2007.02030.x. [DOI] [PubMed] [Google Scholar]

- McGurk H, MacDonald J. Hearing lips and seeing voices. Nature. 1976;264(5588):746–748. doi: 10.1038/264746a0. [DOI] [PubMed] [Google Scholar]

- Meng M, Tong F. Can attention selectively bias bistable perception? Differences between binocular rivalry and ambiguous figures. J Vis. 2004;4:539–551. doi: 10.1167/4.7.2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Meredith MA, Stein BE. Visual, auditory, and somatosensory convergence on cells in superior colliculus results in multisensory integration. J Neurophysiol. 1986;56:640–662. doi: 10.1152/jn.1986.56.3.640. [DOI] [PubMed] [Google Scholar]

- Meyer K, Kaplan JT, Essex R, Webber C, Damasio H, Damasio A. Predicting visual stimuli on the basis of activity in auditory cortices. Nat Neurosci. 2010;13:667–668. doi: 10.1038/nn.2533. [DOI] [PubMed] [Google Scholar]

- Mudrik L, Faivre N, Koch C. Information integration without awareness. Trends Cogn Sci. 2014;18:488–496. doi: 10.1016/j.tics.2014.04.009. [DOI] [PubMed] [Google Scholar]

- Munhall KG, Gribble P, Sacco L, Ward M. Temporal constraints on the McGurk effect. Percept Psychophys. 1996;58:351–362. doi: 10.3758/bf03206811. [DOI] [PubMed] [Google Scholar]

- Munhall KG, ten Hove MW, Brammer M, Paré M. Audiovisual integration of speech in a bistable illusion. Curr Biol. 2009;19:735–739. doi: 10.1016/j.cub.2009.03.019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Necker LA. Observations on some remarkable optical phænomena seen in Switzerland; and on an optical phænomenon which occurs on viewing a figure of a crystal or geometrical solid. Philos Mag Ser 3. 1832;1:329–337. [Google Scholar]

- Noel J-P, Wallace M, Blake R. Cognitive neuroscience: integration of sight and sound outside of awareness? Curr Biol. 2015;25:R157–R159. doi: 10.1016/j.cub.2015.01.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Olivers CNL, Van der Burg E. Bleeping you out of the blink: sound saves vision from oblivion. Brain Res. 2008;1242:191–199. doi: 10.1016/j.brainres.2008.01.070. [DOI] [PubMed] [Google Scholar]

- Paffen CLE, Alais D. Attentional modulation of binocular rivalry. Front Hum Neurosci. 2011;5:105. doi: 10.3389/fnhum.2011.00105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Palmer TD, Ramsey AK. The function of consciousness in multisensory integration. Cognition. 2012;125:353–364. doi: 10.1016/j.cognition.2012.08.003. [DOI] [PubMed] [Google Scholar]

- Partan S, Marler P. Communication goes multimodal. Science. 1999;283(5406):1272–1273. doi: 10.1126/science.283.5406.1272. [DOI] [PubMed] [Google Scholar]

- Ro T, Breitmeyer B, Burton P, Singhal NS, Lane D. Feedback contributions to visual awareness in human occipital cortex. Curr Biol. 2003;13:1038–1041. doi: 10.1016/s0960-9822(03)00337-3. [DOI] [PubMed] [Google Scholar]

- Rohe T, Noppeney U. Cortical hierarchies perform Bayesian causal inference in multisensory perception. PLoS Biol. 2015;13:e1002073. doi: 10.1371/journal.pbio.1002073. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rohe T, Noppeney U. Distinct computational principles govern multisensory integration in primary sensory and association cortices. Curr Biol. 2016;1:1–6. doi: 10.1016/j.cub.2015.12.056. [DOI] [PubMed] [Google Scholar]

- Romei V, Murray MM, Cappe C, Thut G. Preperceptual and stimulus-selective enhancement of low-level human visual cortex excitability by sounds. Curr Biol. 2009;19:1799–1805. doi: 10.1016/j.cub.2009.09.027. [DOI] [PubMed] [Google Scholar]

- Roskies AL. The binding problem. Neuron. 1999;24:7–9. doi: 10.1016/s0896-6273(00)80817-x. [DOI] [PubMed] [Google Scholar]

- Rubin E. Synsoplevede Figurer [Visually experienced Figures]. Studier i Psykologisk Analyse. Gyldendal; Copenhagen, Denmark: 1915. [Google Scholar]

- Salomon R, Lim M, Herbelin B, Hesselmann G, Blanke O. Posing for awareness: proprioception modulates access to visual consciousness in a continuous flash suppression task. J Vis. 2013;13:2. doi: 10.1167/13.7.2. [DOI] [PubMed] [Google Scholar]

- Salomon R, Kaliuzhna M, Herbelin B, Blanke O. Balancing awareness: vestibular signals modulate visual consciousness in the absence of awareness. Conscious Cogn. 2015;36:289–297. doi: 10.1016/j.concog.2015.07.009. [DOI] [PubMed] [Google Scholar]

- Schroeder CE, Foxe J. Multisensory contributions to low-level, “unisensory” processing. Curr Opin Neurobiol. 2005;15:454–458. doi: 10.1016/j.conb.2005.06.008. [DOI] [PubMed] [Google Scholar]

- Shams L, Kamitani Y, Shimojo S. Illusions. What you see is what you hear. Nature. 2000;408(6814):788. doi: 10.1038/35048669. [DOI] [PubMed] [Google Scholar]

- Spence C, Bayne T. Is consciousness multisensory? In: Stokes D, Matthen M, Biggs S, editors. Perception and Its Modalities. Oxford University Press; Oxford, UK: 2015. pp. 95–132. [Google Scholar]

- Spence C, Smith B, Auvray M. Confusing tastes and flavours. In: Stokes D, Matthen M, Biggs S, editors. Perception and Its Modalities. Oxford University Press; Oxford, UK: 2015. pp. 247–274.

- Stanford TR. Evaluating the operations underlying multisensory integration in the cat superior colliculus. J Neurosci. 2005;25:6499–6508. doi: 10.1523/JNEUROSCI.5095-04.2005.

- Stein BE, Meredith MA. The Merging of the Senses. MIT Press; Cambridge, MA, USA: 1993.

- Stein BE, Stanford TR. Multisensory integration: current issues from the perspective of the single neuron. Nat Rev Neurosci. 2008;9:255–266. doi: 10.1038/nrn2331.

- Stein BE, Stanford TR, Ramachandran R, Perrault TJ, Rowland BA. Challenges in quantifying multisensory integration: alternative criteria, models, and inverse effectiveness. Exp Brain Res. 2009;198:113–126. doi: 10.1007/s00221-009-1880-8.

- Stevenson RJ, Attuquayefio T. Human olfactory consciousness and cognition: its unusual features may not result from unusual functions but from limited neocortical processing resources. Front Psychol. 2013;4:819. doi: 10.3389/fpsyg.2013.00819.

- Tsuchiya N, Koch C. Continuous flash suppression reduces negative afterimages. Nat Neurosci. 2005;8:1096–1101. doi: 10.1038/nn1500.

- Tsuchiya N, Koch C, Gilroy LA, Blake R. Depth of interocular suppression associated with continuous flash suppression, flash suppression, and binocular rivalry. J Vis. 2006;6:1068–1078. doi: 10.1167/6.10.6.

- Van Ee R, Van Boxtel JJ, Parker AL, Alais D. Multisensory congruency as a mechanism for attentional control over perceptual selection. J Neurosci. 2009;29:11641–11649. doi: 10.1523/JNEUROSCI.0873-09.2009.

- Vetter P, Smith FW, Muckli L. Decoding sound and imagery content in early visual cortex. Curr Biol. 2014;24:1256–1262. doi: 10.1016/j.cub.2014.04.020.

- Vidal M, Barrès V. Hearing (rivaling) lips and seeing voices: how audiovisual interactions modulate perceptual stabilization in binocular rivalry. Front Hum Neurosci. 2014;8:677. doi: 10.3389/fnhum.2014.00677.

- von Saldern S, Noppeney U. Sensory and striatal areas integrate auditory and visual signals into behavioral benefits during motion discrimination. J Neurosci. 2013;33:8841–8849. doi: 10.1523/JNEUROSCI.3020-12.2013.

- Vroomen J, De Gelder B. Perceptual effects of cross-modal stimulation: ventriloquism and the freezing phenomenon. In: Calvert GA, Spence C, Stein BE, editors. The Handbook of Multisensory Processes. MIT Press; Cambridge, MA, USA: 2004. pp. 141–146.

- Werner S, Noppeney U. Distinct functional contributions of primary sensory and association areas to audiovisual integration in object categorization. J Neurosci. 2010a;30:2662–2675. doi: 10.1523/JNEUROSCI.5091-09.2010.

- Werner S, Noppeney U. Superadditive responses in superior temporal sulcus predict audiovisual benefits in object categorization. Cereb Cortex. 2010b;20:1829–1842. doi: 10.1093/cercor/bhp248.

- Wolfe JM, Cave KR. The psychophysical evidence for a binding problem in human vision. Neuron. 1999;24:11–17. doi: 10.1016/s0896-6273(00)80818-1.

- Yang YH, Yeh SL. Unmasking the dichoptic mask by sound: spatial congruency matters. Exp Brain Res. 2014;232:1109–1116. doi: 10.1007/s00221-014-3820-5.

- Zhang X, Fang F. Object-based attention guided by an invisible object. Exp Brain Res. 2012;223:397–404. doi: 10.1007/s00221-012-3268-4.

- Zhou W, Jiang Y, He S, Chen D. Olfaction modulates visual perception in binocular rivalry. Curr Biol. 2010;20:1356–1358. doi: 10.1016/j.cub.2010.05.059.