Abstract

Although the visual system uses both velocity- and disparity-based binocular information for computing 3D motion, it is unknown whether (and how) these two signals interact. We found that these two binocular signals are processed distinctly at the levels of both cortical activity in human MT and perception. In human MT, adaptation to both velocity-based and disparity-based 3D motions demonstrated direction-selective neuroimaging responses. However, when adaptation to one cue was probed using the other cue, there was no evidence of interaction between them (i.e., there was no “cross-cue” adaptation). Analogous psychophysical measurements yielded correspondingly weak cross-cue motion aftereffects (MAEs) in the face of very strong within-cue adaptation. In a direct test of perceptual independence, adapting to opposite 3D directions generated by different binocular cues resulted in simultaneous, superimposed, opposite-direction MAEs. These findings suggest that velocity- and disparity-based 3D motion signals may both flow through area MT but constitute distinct signals and pathways.

SIGNIFICANCE STATEMENT Recent human neuroimaging and monkey electrophysiology have revealed 3D motion selectivity in area MT, which is driven by both velocity-based and disparity-based 3D motion signals. However, to elucidate the neural mechanisms by which the brain extracts 3D motion given these binocular signals, it is essential to understand how—or indeed if—these two binocular cues interact. We show that velocity-based and disparity-based signals are mostly separate at the levels of both fMRI responses in area MT and perception. Our findings suggest that the two binocular cues for 3D motion might be processed by separate specialized mechanisms.

Keywords: 3D motion, binocular vision, changing disparity, fMRI, interocular velocity difference, motion aftereffect

Introduction

The visual system combines dynamic 2D retinal signals from each eye to reconstruct 3D motion. When an object moves through depth, there are two main binocular signals that the brain might use to compute 3D motion. One is based on differences in the monocular velocities seen by each eye (“interocular velocity difference” or IOVD) and the other is based on changing retinal disparities over time (“changing disparity” or CD). Both IOVD and CD signals contribute to 3D motion perception and recent studies have identified 3D motion processing in MT driven by both IOVD-biased and CD-isolating stimuli (Rokers et al., 2009; Sanada and DeAngelis, 2014). However, it is not known whether and/or how these two sources of binocular 3D motion information interact.
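The relationship between the two cues can be written compactly. In the sketch below, x_L(t) and x_R(t) are assumed symbols (not used elsewhere in this paper) for the horizontal retinal position of a feature in the left and right eye, with rightward positive. The sign convention, in which positive values correspond to motion toward the observer, is likewise an assumption chosen to agree with the example given in Materials and Methods (leftward motion in the left eye and rightward motion in the right eye yields away motion).

```latex
\begin{align}
\delta(t) &= x_L(t) - x_R(t) && \text{(binocular disparity)} \\
\mathrm{CD} &= \frac{d\delta}{dt} = \frac{d}{dt}\bigl[x_L(t) - x_R(t)\bigr] \\
\mathrm{IOVD} &= v_L - v_R = \frac{dx_L}{dt} - \frac{dx_R}{dt}
\end{align}
```

For a single feature that is visible and matched in both eyes, the two quantities are mathematically identical. They differ in the order of operations (binocular differencing followed by temporal differentiation for CD, the reverse for IOVD), which is why cue-isolating stimuli are needed to separate them experimentally.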

One possibility is that both IOVDs and CDs are fused into a single “3D motion” visual signal. Velocity and disparity signals are integrated in MT (Movshon and Newsome, 1996; Ponce et al., 2008, 2011; Smolyanskaya et al., 2015), IOVD and CD cues both drive human MT (Rokers et al., 2009), and a recent single-neuron study found a fraction of neurons in MT with direction-selective responses from both IOVDs and CDs (Sanada and DeAngelis, 2014). In addition to the convergence of disparity and velocity information that occurs in MT, the fusion of 3D motion information would be consistent with the perceptual cue combination observed in many domains, including 3D space perception (Liu et al., 2004; Welchman et al., 2005; Ban et al., 2012).

An intriguing alternate possibility is that IOVDs and CDs might be separate mechanisms used to encode 3D motion in different visual and behavioral contexts (Shioiri et al., 2000; Brooks, 2002; Czuba et al., 2010). In prior psychophysical work, we found that perceptual sensitivity to IOVDs and CDs exhibited distinct (but complementary) patterns across visual field eccentricity and speed (Czuba et al., 2010). IOVDs sometimes provide a nearly complete account of combined-cue 3D motion perception (i.e., in contexts containing both IOVDs and CDs), implying that one cue might be treated separately (or at least preferentially) in certain contexts (Brooks, 2002; Watanabe et al., 2008; Czuba et al., 2011). However, the question of whether IOVDs and CDs are separate, specialized mechanisms has not been tested directly.

To test these two hypotheses, we used direction-selective adaptation paradigms in a series of dovetailed fMRI and psychophysical experiments. In an initial fMRI experiment, we assessed the degree to which the IOVD and CD cues individually contribute to combined-cue processing. Next, we tested directly for adaptation transfer between cues (e.g., adapting to IOVD and testing with CD) in both psychophysical and fMRI experiments. Last, we conducted a novel psychophysical adaptation test of separate IOVD and CD processing, in which subjects were adapted to alternating opposite 3D directions that were paired with one cue or the other. The results from these experiments consistently revealed processing that is mostly independent. This constitutes the first direct evidence for segregated velocity-based and disparity-based 3D motion subcircuits running through MT. Further, and perhaps most surprisingly, these signals can be shown to be available separately at the level of perception when pitted against one another.

Materials and Methods

Subjects.

A total of five subjects (four male and one female, age 28–51 years) with normal or corrected-to-normal vision participated in our experiments. Four subjects (the authors) were experienced psychophysical observers and fMRI participants. T.B.C., L.K.C., and A.C.H. participated in Experiment 1. S.J.J., L.K.C., and A.C.H. participated in Experiment 3. S.J.J., A.C.H., and one naive subject participated in the psychophysical experiments (Experiments 2 and 4). All gave informed written consent in accordance with the Institutional Review Board at The University of Texas at Austin.

Stimuli and apparatus.

In Experiment 1, we used an MR-safe mirror stereoscope to present dichoptic stimuli, a procedure described in detail previously (Rokers et al., 2009). We used CD-isolating, IOVD-biased, and combined (containing both IOVD- and CD-cue) stimuli with the same geometry as in a previous psychophysical study (Czuba et al., 2011). Briefly, a total of 100 dots (size = 0.15°, 50 black and 50 white) on a midgray background (109.4 cd/m²) were distributed uniformly in an annulus around fixation (2.5° to 4.75° eccentricity). The annulus was divided into four quadrants. Adjacent quadrants were offset in equal and opposite directions in depth such that 50% of dots were always nearer than fixation and 50% of dots were always farther than fixation, creating an alternating pinwheel of disparity planes. On each trial, the starting disparity phase was uniformly distributed within the depth volume (±36 arc minutes from the fixation plane). The monocular velocity of dots was either 0.6 or 1.5°/s.

In Experiment 3, we used a “PROPixx” DLP LED projector (VPixx Technologies) with a refresh rate of 240 Hz at full HD resolution (1920 × 1080), operating in grayscale mode (mean luminance = 59.75 cd/m²). Each pixel subtended 0.0311°. The left and right images were separated by a fast-switching circular polarization modulator in front of the projector lens (DepthQ; Lightspeed Design). The onset of each orthogonal polarization was synchronized with the video refresh, enabling interleaved refresh rates of 120 Hz for each eye's image. MR-safe circular polarization filters were attached to the head coil to dissociate the subject's left and right eye views of the stimuli, which were rear projected onto a polarization-preserving screen (Da-Lite 3D virtual black rear screen fabric, model 35929).

A total of 42 moving dots (size = 0.3°, 21 black and 21 white) on a midgray background were displayed in an annulus (2–7° eccentricity), with the constraint that the minimum distance between all possible dot pairs was 0.7°. Dots moved through depth within a depth volume (±21 arc minutes from the fixation depth plane). The monocular velocity of dots was 0.7°/s; we used this speed because, based on prior work (Czuba et al., 2010), stimuli at this speed should drive the putative CD and IOVD mechanisms equally well in this eccentricity range. To aid binocular fusion, a surrounding annulus (8–9° eccentricity) of 90 static dots (45 black and 45 white) was presented in the plane of fixation. The fixation mark subtended 1°.

For combined-cue stimuli, dot positions in depth were chosen from a uniform distribution that spanned the depth volume. Dots moved through depth and wrapped at the near and far edge of this volume. Dot lifetime was 250 ms and all dots had random initial lifetimes ranging between 0 and 250 ms. Dots that either wrapped or reached the end of their lifetime were randomly repositioned within the annulus. In the imaging studies, we used 100% coherence dots. Dots moved in the same direction within each monocular image, but in opposite directions between the eyes for 3D motion. For example, leftward motion in the left eye and rightward motion in the right eye resulted in away motion in 3D space. The IOVD-cue stimuli were the same as those in the combined-cue stimuli except that the dots had the opposite contrast polarity in each eye to degrade depth information (Rokers et al., 2008). For CD-cue stimuli, we used conventional temporally uncorrelated dots that were randomly relocated in a frontoparallel plane on every video frame while changing disparity in the corresponding 3D direction (Julesz, 1971). In Experiment 3, in which frame rates of 120 Hz/eye were used, we presented the left and right image pairs for two frames to better equate the effective contrast of the CD dots relative to the other cue stimuli.

In the psychophysical experiments, we used stimuli based on those from Experiment 3, with modifications to allow for measurements of perceptual motion aftereffects (MAEs). Specifically, we manipulated the motion coherence of the test stimuli to measure psychometric functions in these experiments. Coherence was defined as the percentage of dots moving in the signal 3D direction (signal dots) among the total number of dots (signal + noise dots). Noise dots were animated as described in detail previously (Czuba et al., 2010). Briefly, noise dots moved in a random walk through the 3D space. The lifetimes of the noise dots were drawn from an exponential distribution, biasing them toward short lifetimes. Stimuli were presented on a linearized 42-inch LCD monitor (60 Hz, 1920 × 1080 resolution; LC-42D64U, Sharp; mean luminance = 56.5 cd/m²) viewed through a 73 cm optical path of a mirror stereoscope. Each pixel subtended 0.017°.

fMRI.

fMRI was performed at The University of Texas at Austin Imaging Research Center on a GE Signa HD 3 T scanner using a GE 8-channel phased array head coil (Experiment 1) and a Siemens Skyra 3 T scanner using a 32-channel head coil (Experiment 3). A whole-brain anatomical volume at 1 × 1 × 1 mm resolution was acquired for each subject. Brain tissue was segmented into gray matter, white matter, and CSF by an automated algorithm followed by manual refinement. A T1-weighted inplane anatomical volume (Experiment 1) or a T1-weighted structural volume at 1 × 1 × 1 mm resolution (Experiment 3) was acquired at the beginning of each fMRI session and coregistered with this whole-brain anatomical volume (Nestares and Heeger, 2000).

For Experiment 1, we used a 2-shot spiral sequence (3.2 × 3.2 × 3.2 mm voxels, 1.5 s volume acquisition duration, repetition time = 750 ms, echo time = 30 ms, flip angle = 56°), with 14 quasi-axial slices covering the posterior visual cortices, oriented approximately parallel with the calcarine sulcus. For Experiment 3, we used an echoplanar imaging sequence (2 × 2 × 2 mm voxels, repetition time = 750 ms, echo time = 30 ms, flip angle = 55°) with 40 oblique slices, acquired with multiband acceleration (MB = 4) to achieve better temporal and spatial resolution. To ensure that magnetic and hemodynamic steady-state had been reached, and that BOLD responses for each trial were similarly convolved with the responses from preceding and following trials, data from the first and last two trials (Experiment 1) and data from the first and last trials (Experiment 3) of each fMRI scan were discarded.

Defining ROI.

fMRI responses were analyzed in each of the visual cortical areas separately for each subject. Mapping of visual areas V1, V2, V3, and V3A was performed in separate experimental sessions for each subject using standard techniques (Engel et al., 1994; Sereno et al., 1995). Area MT was defined as a region of gray matter that responded with a systematic phase progression to retinotopic stimulation within the larger MT+ brain region that responded strongly to moving dots compared with stationary dots (Huk et al., 2002). We ran two reference scans (240 s) in each scan session in which moving dots (12 s) and stationary dots (12 s) alternated in the same annulus in which 3D motion dots were displayed. We fitted a sinusoid to the time series of the average of these reference scans. We further restricted the ROIs to the reference scan activations using conventional thresholds on coherence (correlation between the time series and the best-fitting sinusoid at the fundamental of the stimulus frequency) of 0.3 and a phase range of [0, π]. Applying coherence threshold values between 0.2 and 0.4 yielded similar results.
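The coherence and phase criteria can be illustrated with a minimal sketch. It is not the analysis code used in the study: the function name `sinusoid_coherence`, the phase convention, and the idealized boxcar time series (with no hemodynamic delay) are all assumptions made for illustration.

```python
import math

def sinusoid_coherence(ts, n_cycles):
    """Return (coherence, phase) of a time series at the stimulus fundamental.

    coherence: correlation between the mean-removed series and its
    least-squares sinusoid with n_cycles full cycles per scan.
    phase: delay phi (radians) in the fit A*cos(theta - phi); this phase
    convention is an assumption of the sketch.
    """
    n = len(ts)
    mean = sum(ts) / n
    x = [v - mean for v in ts]
    theta = [2.0 * math.pi * n_cycles * t / n for t in range(n)]
    # Projections onto cosine and sine at the fundamental frequency.
    c = sum(v * math.cos(th) for v, th in zip(x, theta))
    s = sum(v * math.sin(th) for v, th in zip(x, theta))
    # Least-squares sinusoid (valid for an integer number of cycles per scan).
    fit = [(2.0 / n) * (c * math.cos(th) + s * math.sin(th)) for th in theta]
    num = sum(a * b for a, b in zip(x, fit))
    den = math.sqrt(sum(a * a for a in x) * sum(b * b for b in fit))
    coherence = num / den if den > 0 else 0.0
    phase = math.atan2(s, c) % (2.0 * math.pi)
    return coherence, phase

# Hypothetical voxel: 240 s scan at 1.5 s per volume (160 volumes),
# 12 s moving / 12 s stationary, i.e. 10 cycles of 16 volumes each.
n_vols, vols_per_block = 160, 8
boxcar = [1.0 if (t // vols_per_block) % 2 == 0 else 0.0 for t in range(n_vols)]
coh, phase = sinusoid_coherence(boxcar, n_cycles=10)

# ROI inclusion rule from the text: coherence of at least 0.3, phase in [0, pi].
in_roi = coh >= 0.3 and 0.0 <= phase <= math.pi
```

A real voxel time series would be noisier and delayed by the hemodynamic response, which shifts the phase later within the accepted window.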

fMRI adaptation procedures.

We used a well-established adaptation paradigm to assess 3D direction-selective adaptation at the level of fMRI activity and perception (Anstis et al., 1998; Mather et al., 1998; Huk et al., 2001; Larsson et al., 2006; Rokers et al., 2009; Czuba et al., 2011). In each session, subjects viewed adapting stimuli for a prolonged duration (60 s in Experiment 1; 40 s in Experiment 3) before the trials started. Each trial began with top-up adaptation (4 s), followed by a 1.25 s blank interstimulus interval. Test stimuli were displayed for 1 s, followed by a 1.25 s blank interval. In the fMRI experiments, there was an attention-demanding task in the far periphery to ensure that subjects maintained an equivalent attentional state throughout the scan run.
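As a quick arithmetic check on the trial structure, the sketch below sums the epoch durations and expresses one trial in imaging volumes. The epoch and volume durations come from the text (the volume duration for Experiment 3 is taken to be its 750 ms repetition time); the variable names are illustrative only.

```python
# Epoch durations (s) taken from the trial description above.
EPOCHS_S = {"top_up": 4.0, "isi": 1.25, "test": 1.0, "blank": 1.25}
trial_s = sum(EPOCHS_S.values())

# Volume durations (s): 1.5 for Experiment 1, 0.75 for Experiment 3.
VOLUME_S = {"Experiment 1": 1.5, "Experiment 3": 0.75}
volumes_per_trial = {exp: trial_s / dur for exp, dur in VOLUME_S.items()}
```

Under these values each trial lasts 7.5 s and spans a whole number of volumes in both experiments.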

In Experiment 1, there were three adapting conditions (combined-cue, IOVD-cue, and CD-cue) and the test stimuli were always combined-cue. In each scan session, subjects were adapted to a single cue-direction pair (e.g., combined-cue, toward direction) and were then tested with a combined-cue stimulus moving in either the same or opposite direction as adaptation. Each subject participated in three to four scan sessions for each adapting cue condition. Each session consisted of eight scans. Because each adaptation scan was presented in rapid succession (∼4 s between scans), the prolonged initial adaptation stimulus was only presented before the first scan in a session. We confirmed that steady-state adaptation had been reached by comparing direction selectivity indices based on the first four and last four scans of each session. There were a total of 36 trials in each scan. In 14 of the 36 trials, the test direction was the same as the adapting direction; in another 14, the test direction was opposite to the adapting direction. In the remaining eight trials, the test stimulus was omitted and only the fixation and surrounding stimulus were presented. These blank trials were used to estimate the baseline response. The first two and last two trials in each scan were discarded to allow for saturation of the BOLD response and to provide an equal number of imaging time points in each trial analysis window. Trial types were pseudorandomly interleaved throughout each scan. In this trial structure, each subject finished 2592–3456 trials in total, of which 336–448 were same-direction test trials (and equally many were opposite-direction test trials) for each adapting cue condition.
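The trial totals for Experiment 1 follow from the design by multiplication. The sketch below reproduces them; the function and constant names are hypothetical, and the numbers are those given in the text.

```python
TRIALS_PER_SCAN = {"same": 14, "opposite": 14, "blank": 8}
SCANS_PER_SESSION = 8
N_ADAPTING_CUES = 3  # combined-cue, IOVD-cue, CD-cue

def experiment1_counts(sessions_per_cue):
    """Return (total trials, trials per test direction per adapting cue)."""
    per_scan = sum(TRIALS_PER_SCAN.values())  # 36 trials per scan
    total = N_ADAPTING_CUES * sessions_per_cue * SCANS_PER_SESSION * per_scan
    per_direction = (
        sessions_per_cue * SCANS_PER_SESSION * TRIALS_PER_SCAN["same"]
    )
    return total, per_direction

# Three or four sessions per adapting cue condition.
counts = {n: experiment1_counts(n) for n in (3, 4)}
```

With three sessions per cue this gives 2592 trials in total and 336 per test direction for each adapting cue; with four sessions it gives 3456 and 448. The 336–448 range therefore corresponds to one test direction (same or opposite) within each adapting cue condition.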

To control attention in Experiment 1, subjects performed a two-interval forced choice discrimination task presented in a region eccentric to the main experimental stimulus. In this task, the subjects were shown bands of red and green dots surrounding the stimulus and had to pick the interval containing an unequal ratio of red to green dots. Each stimulus interval had 30 nonoverlapping red and green dots (0.2° diameter) presented in a 0.5° annulus eccentric to the experimental stimulus. Task difficulty was adjusted by changing the ratio of dot colors in the target interval using an adaptive QUEST staircase procedure (Watson and Pelli, 1983). The duration of each presentation interval was selected randomly from a truncated exponential distribution (raised-cosine dot-wise onset/offset; t(μ) = 2.0 s, 0.5 ≤ t ≤ 4.0 s). A yellow dot presented at fixation cued the subject to report which interval contained the unequal ratio of dot colors using a two-button response box with a 2 s intertrial interval. After each response, feedback was provided by displaying a green (correct) or red (incorrect) dot at fixation. The motivation for variable, gradual onset times was threefold: (1) to minimize abrupt onset transients, (2) to maintain subjects' attention within and between presentation intervals, and (3) to maintain temporal independence between the attentional control task and the experimental stimulus presentation. The average performance was not statistically different between conditions [combined-to-combined: 65%, 95% confidence interval (95% CI) = 58–70; IOVD-to-combined: 66%, 95% CI = 60–71; CD-to-combined: 68%, 95% CI = 62–73], as confirmed by pairwise Wilcoxon rank-sum tests between conditions (all p-values >0.25).

In Experiment 3, we used a combinatorial design to measure interactions between IOVDs and CDs. There were two adapting cue conditions (IOVD-cue and CD-cue) and three test conditions (combined-cue, IOVD-cue, and CD-cue). In each scan run, subjects were adapted to an adapting condition (e.g., CD-cue, toward direction) and the test stimuli could be one of the six possible combinations of cue (combined, IOVD, or CD) and direction (toward or away). There were five repetitions of these test conditions in the scan run and five blank trials. Because the first and last trials were dummy trials, there were a total of 37 trials in each scan run. The adapting condition was randomized across all scan sessions, so each adapting condition was repeated 12 times. Each subject finished a total of 720 trials, in which there were 120 trials for each adapting and test cue combination (12 adapting-cue repetitions × 2 directions × 5 repetitions within a scan run). The combined-cue test conditions were used to replicate the result of Experiment 1 and confirmed 3D direction-selective adaptation in MT for the IOVD-to-combined condition [adaptation index (AI) = 0.06, p = 0.03], whereas there was no indication of 3D direction-selective adaptation in MT for the CD-to-combined condition (AI = −0.02, p = 0.78). There was no measurable 3D direction-selective adaptation in other early visual areas.
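The Experiment 3 trial counts can be checked the same way. The sketch below uses only numbers stated in the text; the constant names are illustrative, and the reading that the 12 repetitions apply to each adapting cue follows the parenthetical breakdown above.

```python
N_TEST_CUES, N_DIRECTIONS, REPS_PER_RUN = 3, 2, 5
N_BLANK, N_DUMMY = 5, 2  # blank trials, plus first and last dummy trials
N_ADAPTING_CUES, RUNS_PER_ADAPTING_CUE = 2, 12

# Trials in one scan run, including blank and dummy trials.
trials_per_run = N_TEST_CUES * N_DIRECTIONS * REPS_PER_RUN + N_BLANK + N_DUMMY

# Test trials for one adapting-cue and test-cue combination.
trials_per_combination = RUNS_PER_ADAPTING_CUE * N_DIRECTIONS * REPS_PER_RUN

# Test trials summed over all adapting-cue and test-cue combinations.
total_test_trials = trials_per_combination * N_ADAPTING_CUES * N_TEST_CUES
```

These evaluate to 37 trials per run, 120 trials per combination, and 720 test trials in total, matching the figures in the text.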

To control attention in Experiment 3, subjects performed a luminance change detection task on light or dark squares placed at the four corners of the display (14° from fixation). Subjects reported the luminance increase/decrease (20% contrast change) by pressing a designated button to indicate in which square the luminance change took place. The target square and the direction of luminance change were randomized for each event. Luminance change events lasted 100 ms and took place every 2 s with temporal jitter drawn from a uniform distribution of [−250, 250] ms. Average performance was not statistically different between adapting to IOVD and CD conditions (IOVD: 85%, 95% CI = 82–88; CD: 87%, 95% CI = 83–90), as confirmed by Wilcoxon rank-sum test (p = 0.29).

Psychophysical adaptation procedures.

In the psychophysical experiments, we measured the psychometric function for 3D motion direction discrimination as a function of motion coherence along the toward versus away direction axis, as described previously (Czuba et al., 2010, 2011). The coherence value of the test stimuli was chosen according to a QUEST procedure (Watson and Pelli, 1983). We used two independent QUEST staircases (randomized in trial order) to discourage subjects from guessing the next coherence value. Each staircase consisted of 25 trials. The initial starting points of each staircase were 50% coherence toward and 50% away, respectively. The QUEST output at each trial was later used to characterize the psychometric function. To ensure better estimation of the psychometric function, we added extreme coherence values that were 20% higher than the maximum coherence value in the staircases (five trials) and 30% lower than the minimum coherence value in the staircases (five trials).

In Experiment 2, each subject (n = 3) participated in 8 sessions: 2 sessions for each of 4 adapting conditions [2 adapting cues (IOVD and CD) × 2 adapting directions]. In each session, subjects were adapted to a particular cue condition (e.g., CD-cue toward) and tested with a particular condition (e.g., IOVD-cue).

In Experiment 4, the adapting stimuli consisted of opposite 3D directions alternating every 1 s. Each 3D direction was paired with one of the two 3D motion cues within a session. Subjects (n = 3) participated in 4 sessions: 2 sessions for each of 2 adapting conditions (IOVD toward + CD away and IOVD away + CD toward). Independent staircases were used to measure the psychometric functions for IOVD and CD. In the control experiment, subjects (n = 2; one author and one laboratory member who was naive to the purpose of the experiment) participated in 8 sessions: 2 sessions for each of 4 adapting conditions [long-lifetime (200 ms) IOVD toward + short-lifetime (66.7 ms) IOVD away, long-lifetime (200 ms) IOVD away + short-lifetime (66.7 ms) IOVD toward, long-lifetime (200 ms) IOVD toward + short-lifetime (33.3 ms) IOVD away, and long-lifetime (200 ms) IOVD away + short-lifetime (33.3 ms) IOVD toward]. The test stimuli were long-lifetime IOVD stimuli.

fMRI data analysis.

The fMRI time series of each voxel was high-pass filtered (0.015 Hz cutoff frequency) to compensate for the slow signal drift typical in fMRI signals. Each voxel's time series was also divided by its mean intensity to convert the data from arbitrary image intensity units to percentage signal modulation (% BOLD signal change) and to compensate for variations in mean image intensity across space. Some scan sessions in Experiment 1 had noisy spikes in the raw fMRI data. To remove these artifacts, we defined an ROI outside of the brain and calculated the mean and the SD of the time series in this ROI. We rejected trials (15 in total) if any data point in the trial responses within this ROI exceeded 9 SDs from the mean.
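Two of the preprocessing steps above can be sketched in a few lines. This is an illustration rather than the pipeline used in the study: the function names are hypothetical, subtracting 1 and scaling by 100 after dividing by the mean is an assumed way of expressing percentage modulation, and the high-pass filter is omitted because its implementation is not specified.

```python
import statistics

def to_percent_signal(ts):
    """Divide by the mean and express as percentage modulation around it."""
    mean = statistics.fmean(ts)
    return [100.0 * (v / mean - 1.0) for v in ts]

def reject_spiky_trials(noise_ts, trial_windows, n_sd=9.0):
    """Return indices of trials to keep.

    noise_ts: time series from an ROI outside the brain.
    trial_windows: (start, stop) sample indices for each trial.
    A trial is rejected if any sample in its window deviates from the
    noise-ROI mean by more than n_sd standard deviations.
    """
    mu = statistics.fmean(noise_ts)
    sd = statistics.pstdev(noise_ts)
    kept = []
    for i, (start, stop) in enumerate(trial_windows):
        if all(abs(noise_ts[t] - mu) <= n_sd * sd for t in range(start, stop)):
            kept.append(i)
    return kept

# Synthetic noise-ROI series with one large spike in trial 20.
noise = [(-1.0) ** t for t in range(400)]
noise[205] = 1000.0
windows = [(i * 10, (i + 1) * 10) for i in range(40)]
kept_trials = reject_spiky_trials(noise, windows)
```

Because the mean and SD here include the spike itself, a threshold as high as 9 SDs can only be exceeded in a reasonably long time series, as in this 400-sample example.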

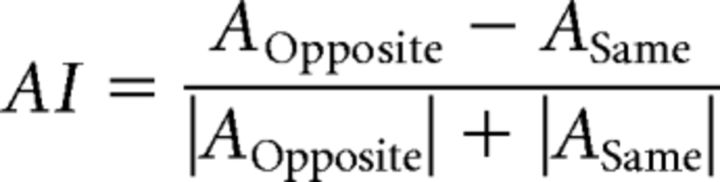

We used methods described previously to measure the fMRI time course on each trial (Larsson et al., 2006; Rokers et al., 2009). Briefly, we first measured the averaged fMRI time series across voxels in each ROI. From this averaged fMRI time series, we extracted fMRI responses for each condition (same, opposite, and blank). We averaged fMRI responses for blank trials and the fMRI response for each trial was measured by subtracting this baseline from each trial's fMRI response. We calculated a response amplitude (A) by projecting each trial's response vector (data points between 6 and 15 s) onto the mean vector of all the trials (regardless of condition), similar to a procedure described previously (Huk et al., 2001; Larsson et al., 2006; Rokers et al., 2009). Using a wider range of data points did not change the overall pattern of amplitude across conditions. To quantify the strength of adaptation, we defined an adaptation index (AI) as follows:

|

where AOpposite is the mean amplitude of responses to the opposite condition and ASame is the mean amplitude of responses to the same condition. Confidence intervals for AIs were estimated using bootstrap resampling. We first drew 10,000 random samples with replacement from the response amplitudes for each subject and calculated an AI from each sample. The lower and upper bounds of the confidence intervals were estimated as the 16th and 84th percentiles of this distribution, respectively. Whether the AI differed significantly from zero in each visual area was assessed from the bootstrapped distribution as the ratio of the number of samples smaller than zero to the total number of samples (p-value).
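The amplitude projection and the bootstrap procedure can be sketched as follows. This is a hedged illustration, not the study's code. The function names are hypothetical, normalizing the mean vector to unit length is an assumption, and `contrast_index` is only an assumed, commonly used normalized-difference form supplied so that the example runs; it should not be read as the AI equation of this paper. The sketch also resamples trials from a single pooled set rather than per subject.

```python
import math
import random
import statistics

def response_amplitudes(trials):
    """Project each trial's response vector onto the unit mean vector."""
    n_trials, n_points = len(trials), len(trials[0])
    mean_vec = [sum(tr[i] for tr in trials) / n_trials for i in range(n_points)]
    norm = math.sqrt(sum(m * m for m in mean_vec))
    unit = [m / norm for m in mean_vec]  # unit length is an assumption
    return [sum(a * b for a, b in zip(tr, unit)) for tr in trials]

def contrast_index(a_opposite, a_same):
    # ASSUMPTION: illustrative normalized difference, not the paper's equation.
    return (a_opposite - a_same) / (a_opposite + a_same)

def bootstrap_ai(amp_opposite, amp_same, index_fn=contrast_index,
                 n_boot=10_000, seed=0):
    """Return (lower, upper, p) from a bootstrap over trial amplitudes.

    lower/upper: 16th and 84th percentiles (a 68% interval).
    p: fraction of bootstrap samples with an index below zero.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(n_boot):
        opp = statistics.fmean(rng.choices(amp_opposite, k=len(amp_opposite)))
        same = statistics.fmean(rng.choices(amp_same, k=len(amp_same)))
        samples.append(index_fn(opp, same))
    samples.sort()
    lower = samples[int(0.16 * (n_boot - 1))]
    upper = samples[int(0.84 * (n_boot - 1))]
    p_value = sum(s < 0 for s in samples) / n_boot
    return lower, upper, p_value
```

Passing the index as a function keeps the resampling logic independent of the exact form of the AI, so a different definition can be substituted without changing the bootstrap.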

Psychophysical data analysis.

To determine perceptual MAEs, we measured the psychometric function for 3D motion-direction discrimination. We binned coherence values that were used during staircase procedures into nine bins between −100% (away) and 100% (toward). We then calculated the proportion of toward responses in each bin. We used maximum likelihood estimation to fit a Gaussian cumulative distribution function to the data. We quantified 3D MAE magnitude as the difference in the midpoints of the psychometric functions (in units of motion coherence) between the adapt-toward and adapt-away conditions for each 3D motion cue. The 68% confidence interval of the midpoint of the psychometric function was estimated using a parametric bootstrap method (Wichmann and Hill, 2001). Bootstrapped replicates were generated by drawing binomially distributed random numbers with the probability parameter (“p” or “bias”) determined by the value of the best-fit psychometric function to the original data at each position along the x-axis. These MAE magnitude values thus compare two opposite adaptation conditions and are therefore twice the magnitude of the MAEs that we have presented previously, in which we compared a single adaptation condition with an unadapted reference (Czuba et al., 2011).
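The fitting step and the MAE measure can be illustrated with a minimal sketch. The function names are hypothetical, and the coarse grid search over the midpoint and slope parameters is a stand-in for whatever optimizer was actually used; only the cumulative Gaussian form, the binomial likelihood, and the "adapt toward minus adapt away" midpoint difference come from the text.

```python
import math

def cum_gauss(x, mu, sigma):
    """Cumulative Gaussian: probability of a 'toward' response at coherence x."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def fit_cum_gauss(coh, n_toward, n_trials, mus, sigmas):
    """Maximum likelihood fit by grid search; returns (midpoint, sigma).

    coh: binned coherence values (negative = away, positive = toward).
    n_toward, n_trials: 'toward' responses and trial counts per bin.
    mus, sigmas: candidate parameter values (the grid is an assumption).
    """
    best = None
    for mu in mus:
        for sigma in sigmas:
            log_lik = 0.0
            for x, k, n in zip(coh, n_toward, n_trials):
                # Clamp to avoid log(0) at extreme coherences.
                p = min(max(cum_gauss(x, mu, sigma), 1e-9), 1.0 - 1e-9)
                log_lik += k * math.log(p) + (n - k) * math.log(1.0 - p)
            if best is None or log_lik > best[0]:
                best = (log_lik, mu, sigma)
    return best[1], best[2]

def mae_magnitude(fit_adapt_toward, fit_adapt_away):
    """Midpoint difference (adapt toward minus adapt away), in coherence units."""
    return fit_adapt_toward[0] - fit_adapt_away[0]
```

After adapting to toward motion, more toward coherence is needed to reach 50% "toward" responses, so the midpoint shifts to positive coherence; the opposite holds after adapting to away motion, and the difference between the two midpoints is the MAE magnitude.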

To further quantify how strong our adaptation effects were (or how sensitive our measurements were) given our sample size, we conducted a post hoc power analysis using the data in the IOVD-to-IOVD condition in Experiment 3. Using a type I error of 0.05 and the observed effect size (d = 3.5), the calculated power was 0.93. This suggests that, had there been a substantial adaptation effect in the cross-cue conditions, we likely would have observed it in our experiment.

Results

We conducted fMRI and psychophysical adaptation experiments using similar visual adaptation procedures (Fig. 1). We measured 3D direction-selective fMRI adaptation and perceptual MAEs as we manipulated the match or mismatch between the adapting cue and the test cue. Observers were adapted to one direction of motion (either toward or away through depth) using stimuli containing either IOVD-biased or CD-based information. We then probed their direction-selective adaptation with test stimuli moving in the same or opposite direction and containing either the same cue or a different cue as that used for adaptation (or both in the “combined-cue” baseline measurements).

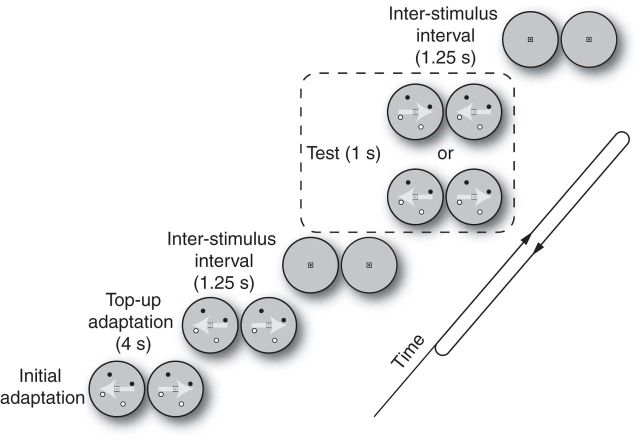

Figure 1.

Adaptation protocol. Subjects initially adapted to 3D motion for a prolonged duration (bottom left; 60 s for Experiment 1, 40 s for Experiments 2–4). Each trial began with top-up adaptation (4 s), followed by a brief test stimulus (1 s). During the interstimulus interval (ISI), a midgray blank screen was displayed for 1.25 s.

Experiment 1: cue-specific 3D direction selectivity in MT

Previous psychophysical experiments have shown that both IOVDs and CDs contribute to 3D motion perception and that both are likely to represent direction-selective processing (Brooks, 2002; Czuba et al., 2010, 2011, 2012; Sakano et al., 2012; but also see Allen et al., 2015). In particular, when measured using a combined-cue test stimulus, direction-selective adaptation to an IOVD-biased stimulus produced 3D MAEs that were comparable to those produced by combined-cue adaptation (Czuba et al., 2011). Corresponding CD-cue MAEs are comparatively small, with some debate as to whether they exist at all (Czuba et al., 2011, 2012; Sakano et al., 2012). To establish whether such psychophysical adaptation corresponds to direction-selective signals in human MT (Rokers et al., 2009), we tested for 3D direction-selective fMRI adaptation as a function of different adapting cue conditions (combined-cue, IOVD, and CD). Using the same combined-cue test stimulus across all measurements, there were three adapter cue and test cue pairs: combined-to-combined, IOVD-to-combined, and CD-to-combined.

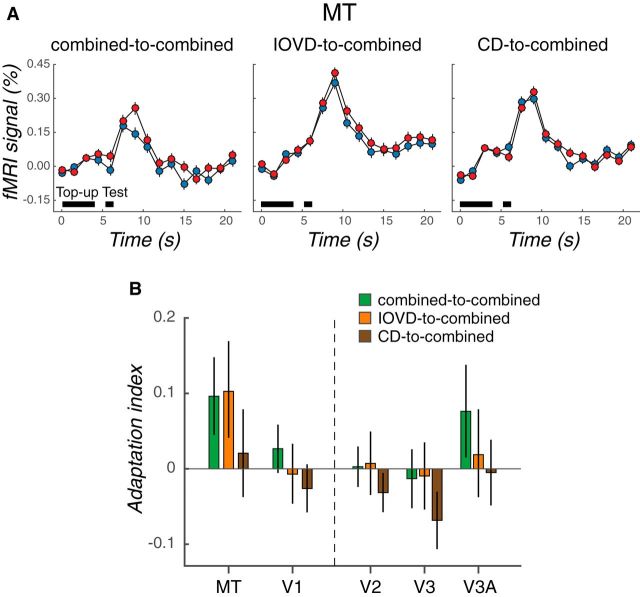

Figure 2A shows the averaged time course of fMRI responses in MT in each condition. Red and blue data points represent the fMRI responses to test stimuli moving in the direction opposite or the same as that of the adapter, respectively. A higher fMRI response to test stimuli moving in the opposite direction would confirm the presence of 3D direction-selective adaptation. The magnitude of 3D direction-selective effects was summarized using an AI (see Materials and Methods).

Figure 2.

3D direction adaptation in MT is cue specific. A, Time courses of averaged fMRI responses in MT. Left, middle, and right, Averaged fMRI responses in the combined-to-combined, IOVD-to-combined, and CD-to-combined conditions, respectively. Blue data points represent fMRI responses to the same-direction tests (i.e., test motion in the same direction as adaptation); red data points represent responses to the opposite-direction tests. Black horizontal bars indicate the top-up adaptation (4 s) and test stimulus (1 s) epochs. The error bars represent ±1 SEM across subjects. B, Adaptation indices in MT and early visual areas (V1–V3A) calculated from the data summarized in A. Green, orange, and brown bars represent adaptation indices for the combined-to-combined, IOVD-to-combined, and CD-to-combined conditions, respectively. Error bars indicate 68% CIs.

Direction-selective adaptation in MT was elicited by both combined and cue-isolating 3D motion conditions. There was strong 3D direction-selective adaptation in the combined-to-combined condition in area MT (Fig. 2B, green bar; AI = 0.10; p = 0.029, bootstrapping test). Furthermore, MT exhibited comparably strong 3D direction-selective adaptation in the IOVD-to-combined condition (Fig. 2B, orange bar; AI = 0.10, p = 0.047). In contrast, we did not detect reliable 3D direction-selective adaptation for the CD adaptation condition (CD-to-combined; Fig. 2B, brown bar; AI = 0.02, p = 0.40). The difference between the combined and IOVD adaptation conditions was very small (difference in AIs = 0.003, 68% CI = −0.08 to 0.08, p = 0.47). Although the IOVD AI was significantly different from zero and the CD AI was not, the difference between the two conditions was not statistically significant (difference in AIs = 0.082, 68% CI = −0.001 to 0.17, p = 0.16). We also observed marginal 3D direction-selective adaptation in V3A (combined-to-combined; AI = 0.07; p = 0.10). Other early visual areas did not show 3D direction selectivity (V2: AI = 0.003, p = 0.46; V3: AI = −0.01, p = 0.64) and V1 did not show clear evidence for 3D direction selectivity (AI = 0.03; p = 0.196).

This initial fMRI experiment demonstrates 3D direction selectivity in MT based on cue-isolating stimuli. These results provide an important link between MT activity and cue-specific 3D MAEs and set the stage for subsequent testing of whether IOVD and CD signals are fused into a single 3D motion signal in MT. To assess such possible interactions between the IOVD and CD mechanisms, we tested for adaptation transfer between cues in the following psychophysical and fMRI experiments. The presence (or absence) of adaptation transfer between cues would suggest fusion (or independence) between IOVD and CD mechanisms.

Experiment 2: cue-independent perceptual 3D MAEs

In this experiment, we sought to test directly whether IOVDs and CDs are functionally fused within a common 3D motion mechanism or if they are distinct enough to be dissociated using perceptual aftereffects. To do this, we manipulated the relationship between the adapting cue and the test cue: the adapting and the test cue were either the same (“within-cue” conditions: IOVD-to-IOVD and CD-to-CD) or different (“cross-cue” conditions: CD-to-IOVD and IOVD-to-CD). The within-cue conditions established the presence of an MAE within each 3D motion mechanism (i.e., using a test stimulus that matched the adapter as opposed to the common combined-cue test used in Experiment 1), whereas the cross-cue conditions tested for possible dissociation of the cues (i.e., using test stimuli that differed from the adapter).

We used a paradigm similar to the preceding fMRI adaptation experiment except that the 3D motion strength (coherence) of the test stimulus was varied from trial to trial so that psychometric functions for 3D motion direction discrimination could be estimated. The fully crossed design comprised two adapting conditions (IOVD and CD), two adapting directions (toward and away), and two test conditions (IOVD and CD). In a given session, subjects were adapted to one of four adapting conditions (e.g., CD toward) and tested with one of two test conditions (e.g., IOVD) in both directions (toward and away).

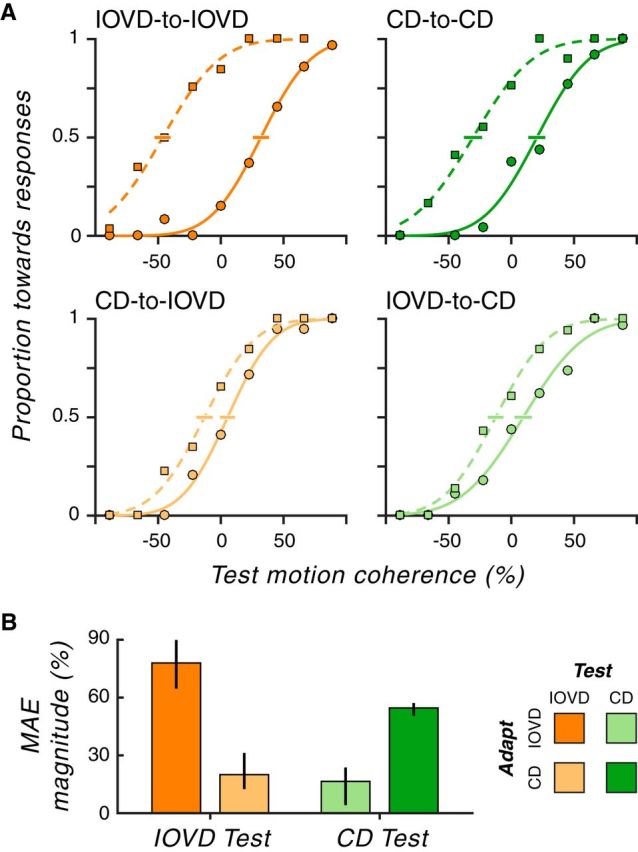

Figure 3A shows the average psychometric functions for adapting to toward motion (circles and solid lines) and adapting to away motion (squares and dashed lines) in each condition. The data points are the proportion of “toward” responses at each coherence and the lines are cumulative normal fits to these data. Lateral shifts in the psychometric function (i.e., along the x-axis) after adaptation are indicative of MAEs. We thus estimated the overall magnitude of the 3D MAEs for each condition as the relative horizontal offset between the psychometric functions for toward versus away motion adaptation.
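The offset computation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the fitting procedure used in the study: the function names (`fit_cumulative_normal`, `mae_magnitude`), the least-squares grid search, the coherence levels, and the response proportions are all hypothetical, and the data are synthetic curves generated from known midpoints.

```python
from statistics import NormalDist


def fit_cumulative_normal(coherences, p_toward):
    """Grid-search least-squares fit of a cumulative normal.

    Returns (midpoint, spread) in % coherence. A simple stand-in for
    whatever fitting routine is actually used (assumption).
    """
    best_err, best_params = float("inf"), None
    for mu in range(-100, 101):        # candidate midpoints
        for sigma in range(5, 101):    # candidate spreads
            model = NormalDist(mu, sigma)
            err = sum((model.cdf(c) - p) ** 2
                      for c, p in zip(coherences, p_toward))
            if err < best_err:
                best_err, best_params = err, (mu, sigma)
    return best_params


def mae_magnitude(coherences, p_after_toward, p_after_away):
    """MAE magnitude: 'adapt toward' midpoint minus 'adapt away' midpoint."""
    mid_toward, _ = fit_cumulative_normal(coherences, p_after_toward)
    mid_away, _ = fit_cumulative_normal(coherences, p_after_away)
    return mid_toward - mid_away


# Synthetic data only: negative coherence = away, positive = toward.
coherences = [-100, -50, -25, 0, 25, 50, 100]
p_after_toward = [NormalDist(20, 40).cdf(c) for c in coherences]   # shifted right
p_after_away = [NormalDist(-10, 40).cdf(c) for c in coherences]    # shifted left

print(mae_magnitude(coherences, p_after_toward, p_after_away))
```

Because the synthetic curves were generated with midpoints of 20 and −10 (values that lie on the search grid), the recovered offset is 30% coherence. With real response proportions, a maximum-likelihood fit would be a more conventional choice than least squares.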

Figure 3.

3D MAEs are cue specific. A, Psychometric functions for each adapting-cue-to-test-cue condition (averaged over subjects). In each panel, the solid and dashed lines depict the best fitting cumulative Gaussian functions to the data points for adapting to toward (circle) and away (square) motion, respectively. The y-axis shows the proportion of “toward” responses and the x-axis represents 3D motion strength in units of 3D motion coherence. The negative and positive coherence values are mapped to away and toward 3D motion, respectively, with higher values corresponding to stronger 3D motion strength. Error bars around the midpoint of the psychometric function show 68% CIs. B, For each condition, MAE magnitudes were computed from the difference in motion coherence at the midpoint of the “adapt toward” minus the “adapt away” psychometric functions. The x-axis represents test cue conditions (orange: IOVD and green: CD). For each test cue condition, the more saturated color represents the within-cue condition (e.g., IOVD-to-IOVD) and the less saturated color represents the cross-cue condition (e.g., CD-to-IOVD). Error bars indicate 95% CIs. Clearly, within-cue adaptation is much stronger than cross-cue adaptation.

We found strong MAEs in within-cue conditions (Fig. 3B; IOVD-to-IOVD: MAE magnitude = 78% coherence, 95% CI = 65.3–89.2; CD-to-CD: 55% coherence, 95% CI = 51.2–56.4). These within-cue results confirm perceptual 3D direction selectivity for both IOVDs and CDs (Czuba et al., 2011). In contrast, cross-cue conditions yielded much smaller aftereffects (CD-to-IOVD: 20% coherence, 95% CI = 13.2–30.6; IOVD-to-CD: 16% coherence, 95% CI = 5.9–23.1), markedly weaker compared with within-cue conditions (Wilcoxon rank-sum tests: IOVD-to-IOVD vs CD-to-IOVD, p ∼ 0; CD-to-CD vs IOVD-to-CD, p ∼ 0). These very small cross-cue MAEs suggest that IOVD and CD signals are mostly, if not entirely, segregated in perceptual 3D motion computations.
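As a rough illustration of scale only (simple arithmetic on the point estimates reported above, not an additional analysis from the study), the cross-cue MAE can be expressed as a fraction of the within-cue MAE measured with the same test cue:

$$
\frac{\text{CD-to-IOVD}}{\text{IOVD-to-IOVD}} = \frac{20}{78} \approx 0.26,
\qquad
\frac{\text{IOVD-to-CD}}{\text{CD-to-CD}} = \frac{16}{55} \approx 0.29
$$

These ratios ignore the uncertainty in each estimate, so they should be read only as an approximate indication that cross-cue aftereffects were roughly a quarter to a third the size of within-cue aftereffects.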

It is conceivable that some of this cue-specific dissociation is because the IOVD stimuli could produce monocular MAEs (whereas the CD stimuli could not) and this monocular adaptation (or lack thereof) could produce an interaction such as that we observed. However, we have specifically ruled this out in our prior work (Czuba et al., 2011; see especially Fig. 14 in that study), in which we showed quantitatively that 3D MAEs could not be explained by monocular direction-selective adaptation. We thus conclude that the weak transfer between conditions that we see here reflects a distinct set of 3D motion signals as opposed to a distinction between stimuli that contain monocular motions (IOVD) and those that do not (CD). In light of this finding of distinct IOVD and CD processing at the level of perception, we then sought to test whether 3D direction-selective processing in MT was similarly cue specific.

Experiment 3: cue-independent 3D direction-selective adaptation in MT

Based on our psychophysical finding of strong within-cue MAEs and weak cross-cue MAEs (Experiment 2), we tested whether there would be a similar pattern of cue-specific adaptation in human MT. On a given scan run, subjects were adapted to a particular cue and direction combination (e.g., IOVD cue, toward direction). To test for interactions between IOVDs and CDs, we used a fully crossed design of each cue and direction. The test stimuli in each trial were chosen randomly from the set of all combinations (IOVD-toward, IOVD-away, CD-toward, and CD-away). Therefore, there were within-cue conditions (when the adapting cue and test cue were the same) and cross-cue conditions (when the adapting cue and test cue were different), all present in a given scan run.

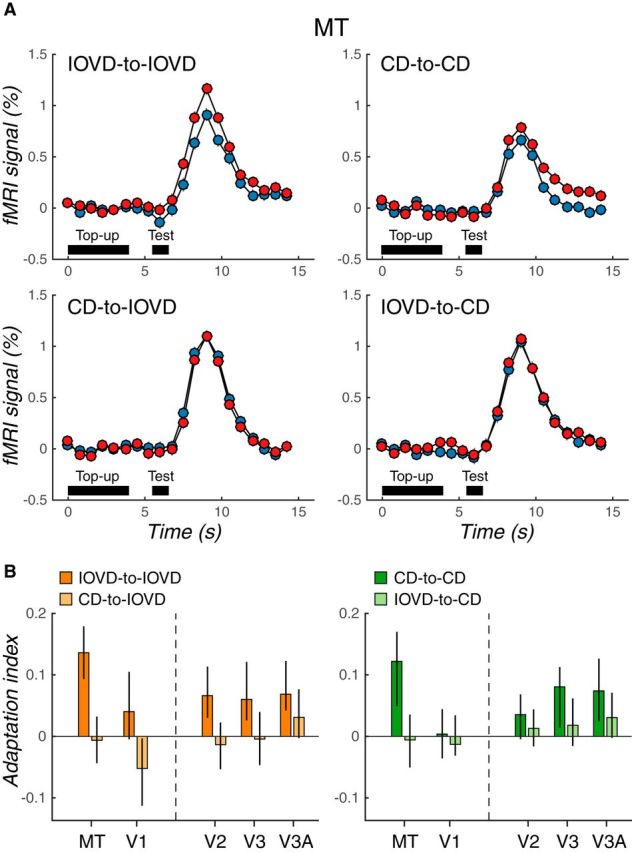

Direction-selective adaptation in MT was strikingly cue specific. Figure 4A shows the average fMRI time course in MT for each condition. For within-cue conditions, the fMRI response to the opposite-direction test stimulus was strong compared with that for the same-direction test, demonstrating 3D direction-selective adaptation. However, for cross-cue conditions, the fMRI response to the two test directions was nearly identical, indicating little, if any, evidence for directional interactions between IOVD and CD cues.

Figure 4.

IOVD and CD adaptation do not interact in MT. A, Time course of mean fMRI responses in MT for each adapting cue-to-test cue pairing (same conventions as Fig. 2). B, Adaptation indices in MT and early visual areas (V1–V3A) computed from the data summarized in A. The left and right panels show adaptation indices for IOVD (orange) and CD (green) test conditions, respectively. For each test cue condition, the saturated color represents the within-cue condition (i.e., IOVD-to-IOVD) and the desaturated color represents the cross-cue condition (i.e., CD-to-IOVD). Error bars indicate 68% CIs. The adaptation in MT is clearly cue specific.

We quantified these results by computing adaptation indices just as in Experiment 1 and these are shown in Figure 4B. Both within-cue conditions resulted in strong 3D direction-selective adaptation in MT (Fig. 4B; AI = 0.14, p = 0.0006 for IOVD-to-IOVD; AI = 0.12, p = 0.03 for CD-to-CD). In clear contrast, there was no compelling indication of 3D direction-selective adaptation in MT in cross-cue conditions (CD-to-IOVD: AI = −0.006, p = 0.56; IOVD-to-CD: AI = −0.006, p = 0.57). Comparison of within-cue and cross-cue conditions confirmed stronger adaptation effects in within-cue conditions compared with cross-cue conditions (IOVD-to-IOVD vs CD-to-IOVD: difference in AI = 0.14, p = 0.01; CD-to-CD vs IOVD-to-CD: difference in AI = 0.11, p = 0.04). Similar to the dissociation of 3D MAEs from monocular adaptation discussed in the preceding psychophysics, it is unlikely that the observed IOVD-to-IOVD aftereffects in this experiment simply reflect the collective adaptation of monocular motion pathways. Rokers et al. (2009) found that monocular adaptation could not account for the majority of the directionally selective 3D motion adaptation in MT.

We did not find any clear 3D motion direction-selective adaptation in V1 (AIs ∼ 0 for both IOVD-to-IOVD and CD-to-CD), consistent with our previous findings that suggest a primarily extrastriate locus for 3D direction selectivity (Experiment 1; also see Rokers et al., 2009). Other visual areas showed a general increase in 3D direction selectivity up the visual hierarchy (IOVD-to-IOVD: AI = 0.07, p = 0.03 for V2, AI = 0.06, p = 0.06 for V3, AI = 0.07, p = 0.02 for V3A; CD-to-CD: AI = 0.04, p = 0.17 for V2, AI = 0.08, p = 0.10 for V3, AI = 0.07, p = 0.07 for V3A), but there was no sign of an IOVD–CD interaction across any of the visual areas that we assessed.

Summing up the psychophysical and fMRI adaptation experiments so far, we found the same general pattern of results: cross-cue conditions resulted in far weaker 3D direction-selective adaptation compared with within-cue conditions. The small difference in cross-cue adaptation transfer between psychophysical and fMRI measurements (i.e., small transfer in perceptual MAEs compared with no measurable transfer in MT responses) might be due to different sensitivity between the techniques, but is also consistent with the two binocular cues interacting downstream of MT. These results lend critical support to the picture that has emerged over this series of experiments: even though they are both binocular sources of information about 3D direction, IOVDs and CDs are processed by relatively separate visual mechanisms.

Experiment 4: cue-specific, opposite-direction MAEs

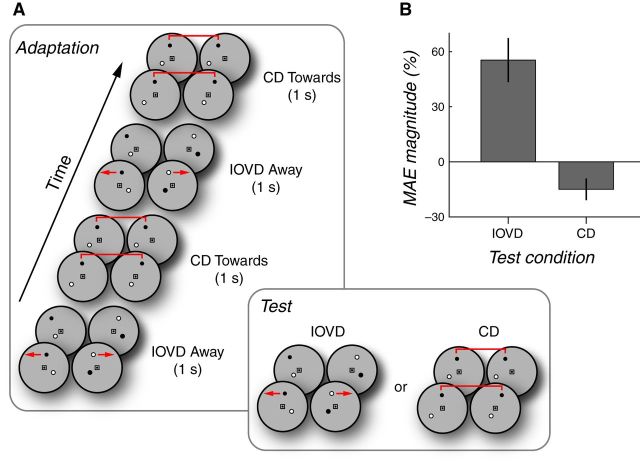

Finally, we reasoned that, if IOVDs and CDs are primarily distinct visual signals, then the visual system should be able to adapt independently to IOVDs and CDs at the same time. We hypothesized that there would be cue-specific 3D direction-selective adaptation when the adapting stimuli consisted of spatially superimposed (but temporally alternating) opposite directions of 3D motion so long as each direction was paired with a unique cue (e.g., IOVD-toward and CD-away). Figure 5A schematizes the procedure. In a given session, subjects were adapted to one set of cue-direction pairs alternating at 0.5 Hz (e.g., IOVD-toward and CD-away). The test stimuli were either IOVD or CD chosen randomly on each trial to discourage subjects from anticipating the test cue (and/or attending to only one cue over the other during adapting periods). In subsequent sessions, subjects adapted to the complementary set of cue-direction pairs (e.g., IOVD-away and CD-toward).

Figure 5.

Simultaneous opposite-direction cue-specific MAEs. A, Adaptation sequence used in Experiment 4 consisted of alternating 3D motion directions (toward or away, 0.5 Hz) presented in synchronously alternating IOVD or CD stimuli. Cue-direction pairings were fixed for each session; each subject completed a balanced set of sessions. On any given trial within a session, the test cue was either IOVD or CD. B, MAE magnitudes for the IOVD and CD test cues. By convention, the CD MAE is shown with negative values to emphasize the opposing direction of simultaneously elicited motion aftereffects. Error bars indicate 95% CIs. Simultaneous opposite adaptation was obtained and appears to be stronger for the IOVD stimulus.

Consistent with our prediction, but surprisingly nonetheless, we did in fact observe cue-specific, spatially superimposed, opposite-direction MAEs for IOVD and CD (Fig. 5B). The magnitudes of the interleaved opposite direction IOVD and CD MAEs were 55% coherence (95% CI = 29.1–86.0) and 15% coherence (95% CI = 1.15–26.0), respectively. Therefore, although adapting stimuli consisted of opposite directions of 3D motion, there were measurable MAEs in opposite directions when each direction of 3D motion was carried by a distinct 3D motion cue.

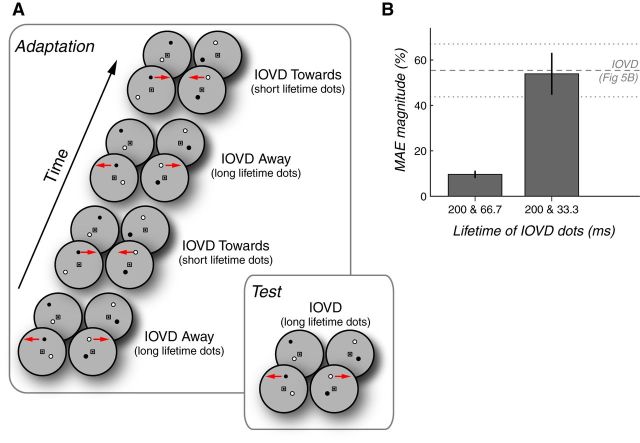

There is a possibility that these experiments demonstrated, not the existence of separate processing mechanisms for IOVD and CD, but rather a contingent MAE (Favreau et al., 1972; Walker, 1972; Favreau, 1976). Because the IOVD-biased and CD-isolating stimuli have inherently distinct monocular temporal properties, it is possible that apparent cue-specific MAEs might actually reflect directionally selective MAEs that are contingent on the temporal properties of the stimuli. To investigate this possibility directly, we attempted to induce such a contingent aftereffect using two IOVD stimuli that had noticeably different monocular temporal properties. Specifically, we presented alternating opposite 3D adaptation directions (0.5 Hz) paired with IOVD stimuli containing synchronously alternating long-lifetime (200 ms) or short-lifetime (66.7 ms) dots (Fig. 6A). Test stimuli contained only IOVD stimuli with long-lifetime (200 ms) dots.

Figure 6.

Control experiments support the idea that cue-specific MAEs are not merely contingent on temporal monocular characteristics. A, Variant of the adaptation procedure used in Experiment 4 consisted of alternating 3D motion directions (toward or away, 0.5 Hz) presented using IOVD stimuli with alternating long-lifetime (200 ms) or short-lifetime dots. Short dot lifetimes were set to either 66.7 or 33.3 ms in separate experiments. Test stimuli always contained long-lifetime IOVD dots. B, MAE magnitudes for the long-lifetime IOVD test stimuli in each control experiment. MAEs in the direction opposite of the long-lifetime adaptor were greatly reduced when alternated with short-lifetime adaptation in the opposite direction (which, despite the shorter lifetime, still clearly conveyed 3D direction; left bar). However, when alternated with ultra-short-lifetime dots (i.e., lifetimes so short that they yielded a weak or absent impression of 3D motion), the MAE magnitude was equal to that seen when paired with a CD stimulus in the previous experiment (dashed lines). Error bars indicate 95% CIs.

There are two different predictions for this control experiment. The first prediction would hold in the “contingent aftereffect” case. Here, the simultaneous opposite-direction MAE would be principally dependent on the pairing of temporal characteristics (long or short dot lifetimes) with a unique direction of adaptation. This would hold regardless of whether opposite-direction adaptation contained distinct motion cues (as in the main experiment) or contained the same motion cue (as in this control experiment). In this case, one would expect to see a robust 3D MAE for the long-lifetime IOVD test stimulus in the direction opposite to that of the long-lifetime adaptation.

Conversely, if simultaneous opposite-direction 3D MAEs were not merely contingent on stimulus dot lifetimes, then adaptation to opposite-direction long- versus short-lifetime stimuli that contained the same 3D motion cue (in this case IOVD) would be expected to produce a weak 3D MAE because opposite adaptation directions driving a common neural substrate would effectively cancel out adaptation effects. In this case, one would expect to see a diminished 3D MAE from long-lifetime IOVD test stimuli in the control experiment.
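The logic of these two predictions can be made concrete with a deliberately simplified toy model. Everything in this sketch is an illustrative assumption rather than a model from the study: adaptation is treated as a signed sum within a "channel", the aftereffect is taken to be the negative of that sum, and the names (`Stimulus`, `predicted_mae`, the `"iovd-like"` and `"cd-like"` labels) are hypothetical. The only thing that differs between the two accounts is which stimulus property defines a channel.

```python
from typing import NamedTuple

TOWARD, AWAY = +1, -1  # sign convention for 3D direction (assumption)


class Stimulus(NamedTuple):
    cue: str            # "IOVD" or "CD"
    lifetime: str       # label standing in for monocular temporal properties
    direction: int = 0  # TOWARD, AWAY, or 0 for a directionally neutral test


def predicted_mae(adapters, test, channel_key):
    """Signed aftereffect predicted for `test`.

    Only adapters that share the test's channel (as defined by
    `channel_key`, either "cue" or "lifetime") contribute, and the
    aftereffect points opposite to their net adapted direction.
    """
    net = sum(a.direction for a in adapters
              if getattr(a, channel_key) == getattr(test, channel_key))
    return -net


# Control experiment: same cue, different dot lifetimes, opposite directions.
control_adapters = [Stimulus("IOVD", "long", TOWARD),
                    Stimulus("IOVD", "short", AWAY)]
control_test = Stimulus("IOVD", "long")

# Contingent account (channels defined by temporal properties): robust MAE.
print(predicted_mae(control_adapters, control_test, "lifetime"))
# Cue-specific account (channels defined by cue): adaptation cancels.
print(predicted_mae(control_adapters, control_test, "cue"))

# Main experiment: cue and temporal properties covary, so both accounts
# predict the same opposite-direction MAEs and cannot be told apart.
main_adapters = [Stimulus("IOVD", "iovd-like", TOWARD),
                 Stimulus("CD", "cd-like", AWAY)]
for key in ("lifetime", "cue"):
    print(key,
          predicted_mae(main_adapters, Stimulus("IOVD", "iovd-like"), key),
          predicted_mae(main_adapters, Stimulus("CD", "cd-like"), key))
```

In this sketch the two accounts diverge only in the control experiment, which is what makes it diagnostic: the contingent account predicts an aftereffect opposite to the long-lifetime adapter, whereas the cue-specific account predicts cancellation.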

Consistent with true cue-specific adaptation (and arguing against the contingent aftereffect explanation), we observed a 3D MAE with long-lifetime IOVD dots that was substantially weaker after adaptation to alternating 3D directions paired with synchronously alternating long- or short-lifetime IOVD dots (Fig. 6B, left bar; mean = 9.6%, 95% CI = 8.0–11.3). This suggests that cue-specific opposite-direction MAEs for IOVD and CD were not simply attributable to the distinct temporal characteristics between IOVD and CD stimuli, but instead truly reflected independent processing of IOVD and CD cues.

To further investigate whether the reduced MAE magnitude in the control experiment was due to an interaction between the alternating opposite-direction IOVD adapters, and not simply to the insertion of short-lifetime dots, we conducted a follow-up experiment in which we used an even shorter “short-lifetime” IOVD dot duration of just 33.3 ms (i.e., a single two-frame motion step per lifetime). Perceptually, this shorter lifetime yielded little to no detectable 3D motion while maintaining a 50% duty cycle of long-lifetime IOVD dots during adaptation. The resulting 3D MAE magnitude when tested with long-lifetime dots was now stronger (Fig. 6B; 200 and 33.3 ms, mean = 53.9%, 95% CI = 44.7–63.31) and in fact was indistinguishable from the IOVD MAE magnitude produced from opposite-direction IOVD-CD adaptation (dashed gray lines, replotted from Fig. 5B).

Together, these results suggest that, not only are cue-specific opposite-direction 3D MAEs not explained by mere stimulus contingent aftereffects, but also that there is little to no interaction between opposite-direction adaptation when opposing adaptation directions are presented in different 3D motion cues. Therefore, cue-specific MAEs point to distinct 3D motion pathways, each driven by a distinct source of dynamic binocular information.

Discussion

We performed direct tests of cross-cue interactions between IOVD and CD cues in both neuroimaging and psychophysical experiments and found that these two binocular sources of 3D motion information were processed largely independently in human MT and at the level of perception. These results indicate that at least some of the velocity-based and disparity-based signals that arrive in MT via segregated pathways (Movshon and Newsome, 1996; Ponce et al., 2008, 2011; Smolyanskaya et al., 2015) remain functionally distinct in MT during the computation of 3D direction. These results also tighten the connections between IOVD- and CD-based processing seen at the single-unit level in monkeys (Czuba et al., 2014; Sanada and DeAngelis, 2014) and the inferences drawn from perceptual and neuroimaging experiments in humans (Rokers et al., 2009; Czuba et al., 2010, 2011).

It remains logically possible that our observations of weak (or even absent) cross-cue adaptation might be related to the sensitivity of our measurements. However, our fMRI measurements did reveal that area MT can be adapted to each 3D motion cue (Fig. 4B; IOVD-to-IOVD and CD-to-CD conditions), demonstrating our ability to resolve within-cue effects. Likewise, our psychophysical experiments did reveal small amounts of cross-cue transfer. The key point here is that our results support the idea of largely separable processing of CD and IOVD cues in both a key stage of motion processing (area MT) and in psychophysical assays of direction-selective processing. Some amount of transfer occurs at the level of perception and future work will be required to distinguish whether this relatively small amount of fusion of IOVD and CD is supported by processing within or outside of area MT.

In many contexts in which multiple sources of correlated information are present, the visual system often employs some form of cue combination (Meredith and Stein, 1983; Landy et al., 1995; Tsutsui et al., 2002; Liu et al., 2004; Gu et al., 2008; Morgan et al., 2008; Fetsch et al., 2013). In domains related to this work, human neuroimaging studies have shown that motion and disparity cues to depth are combined in dorsal visual cortex (Ban et al., 2012) and that disparity and pictorial cues to depth are integrated in ventral visual cortex (Welchman et al., 2005). Furthermore, monkey single-unit recording studies have revealed responses in area MT consistent with the combination of visual motion parallax with other nonretinal cues (Nadler et al., 2008, 2009).

Given the empirical and theoretical support for cue combination, it might seem surprising that IOVD and CD signals did not interact strongly in our experiments because they can both be used to compute 3D direction, they both drive MT responses (Rokers et al., 2009; Czuba et al., 2014; Sanada and DeAngelis, 2014), and, in fact, they co-occur in almost all naturally occurring 3D motion. However, it is worth noting that, although single-unit 3D direction selectivities for IOVD and CD cues have been shown to be significantly correlated, only a small fraction of neurons (6.5%, four neurons of 62) showed significant 3D direction selectivity for both IOVD and CD (Sanada and DeAngelis, 2014). The single-unit data thus do not clearly predict either fusion or separation of the two cues and are potentially compatible with our human evidence suggesting distinct IOVD and CD pathways.

Conversely, the notion of a single fused “3D motion” system is difficult to reconcile with a constellation of known phenomena. CD processing is known to be tuned for slow and parafoveal 3D motions, whereas IOVD sensitivity spans a wider range of 3D motions across both speed and eccentricity (Czuba et al., 2010). Although one recent study estimated that IOVD and CD cues can be combined in a Bayesian fashion, the possibility of statistically combining these distinct signals at the level of decisions, at least under some viewing conditions, is not inconsistent with the notion of two separable visual processing streams (Allen et al., 2015). In other words, the IOVD and CD signals may remain separate sensory representations, but decision processes may combine them to form a single decision variable. Such combination of IOVD and CD “evidence” is not at odds with our finding that IOVD adaptation does not affect CD processing and vice versa. As to the question of “but why have these different sensory representations when virtually all real stimuli contain both cues?,” we posit that these representations inherently have different spatial and temporal sensitivities (Czuba et al., 2010) and thus having both allows the system to encode a broader range of real-world stimuli, analogous to the way in which a system that has both rod and cone photoreceptors can operate across a broader range of light levels.

We therefore hypothesize the existence of at least two distinct 3D motion subcircuits preserved in early and middle stages of visual processing, subcircuits that are amenable to combination at the level of perceptual decisions, but are not mandatorily combined in a single visual representation. However, our focus on early visual cortical areas in our imaging studies leaves open the possibility that some amount of IOVD and CD mixing occurs in later visual areas (e.g., ventral intraparietal area, fundus of superior temporal area, etc.) or that areas typically implicated in perceptual decision making can combine the mostly separate cue-specific information in a manner better thought of as “read out” after sensory processing. It is now of particular interest to study whether the brain regions implicated in frontoparallel motion decisions are responsible for reading out 3D motion signals (e.g., lateral intraparietal area, frontal eye field, superior colliculus, etc.) and how separate IOVD and CD signals in area MT might be combined in those later brain areas to encode a decision variable.

Consistent with the notion of 3D motion cue separation, we were able to elicit cue-specific MAEs in opposite 3D directions generated using IOVD and CD stimuli during interleaved presentations at the same spatial locations. It is unlikely that these separable IOVD and CD MAEs are simply instances of contingent MAEs (Favreau et al., 1972; Walker, 1972; Favreau, 1976) because control experiments demonstrated that these cue-specific 3D MAEs were not strongly dependent on monocular temporal characteristics of stimuli. Moreover, the perceptual dissociation of IOVDs and CDs in this experiment further validates the use of anticorrelated dots to isolate the IOVD cue (Rokers et al., 2008, 2009; Czuba et al., 2010, 2011); although disparity information is degraded in these stimuli (Rokers et al., 2008), a significant confounding contribution of residual disparity signals in these stimuli would have supported stronger cross-cue adaptation effects.

Our findings of mostly separate processing of CDs and IOVDs may also shed light upon the individual differences that have been reported in 3D motion perception (Nefs et al., 2010). In the current study, as well as in most of our prior work, we have relied on a large number of measurements in a small number of subjects. Although this allows us to ensure good fixation and vergence posture in expert subjects, it of course limits our ability to speak to variability across subjects and to naive observers. In our fMRI experiments, we did not note systematic anecdotal differences in the pattern of results related to the individual subject's experience with cue-isolating stimuli and, in our psychophysical experiments, the naive observer produced a canonical pattern of results. We therefore believe that our particular protocols are tapping fairly general sensory processing mechanisms and hypothesize that larger ranges of individual variability may be dependent on the particular stimulus parameters and tasks used. Regardless, our finding of mostly separate IOVD and CD signals may shed some light upon individual differences. Given that IOVD and CD cues are typically correlated in real-world vision, it is possible that some individuals may have learned to rely preferentially on one cue over the other. Whether this correlates with a change in sensory quality per se or with efficiency in reading it out remains unknown. However, the separation of the two cues in terms of underlying neural substrates in fact provides the only realistic means by which idiosyncratic differences between the cues could occur across individuals; if there was only a single underlying mechanism, then observers could only differ in overall sensitivity.

Together, our results reveal the existence of separate, dissociable velocity-based and disparity-based systems for 3D motion processing. Future research will be needed to investigate how monocular cues to 3D motion (e.g., size change/looming) are integrated with these pathways, as well as the conditions under which these cues might be combined in perceptual decisions and visually guided behaviors. However, it is rather striking that two binocular sources of information that necessarily arise from a common viewing geometry would be extracted by rather distinct mechanisms and kept mostly separate. This raises the intriguing conjecture that the changing disparity pathway may have evolved for the perception of slowly manipulating objects in central vision and that the interocular velocity pathway may be a robust mechanism for a broader range of interactions with movements in the 3D environment.

Footnotes

This research was supported by the National Eye Institute–National Institutes of Health (Grant R01-EY020592 to A.C.H., L.K.C., and Adam Kohn at the Albert Einstein College of Medicine).

The authors declare no competing financial interests.

References

- Allen B, Haun AM, Hanley T, Green CS, Rokers B. Optimal combination of the binocular cues to 3D motion. Invest Ophthalmol Vis Sci. 2015;56:7589–7596. doi: 10.1167/iovs.15-17696. [DOI] [PubMed] [Google Scholar]

- Anstis S, Verstraten FA, Mather G. The motion aftereffect. Trends Cogn Sci. 1998;2:111–117. doi: 10.1016/S1364-6613(98)01142-5. [DOI] [PubMed] [Google Scholar]

- Ban H, Preston TJ, Meeson A, Welchman AE. The integration of motion and disparity cues to depth in dorsal visual cortex. Nat Neurosci. 2012;15:636–643. doi: 10.1038/nn.3046. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brooks KR. Interocular velocity difference contributes to stereomotion speed perception. J Vis. 2002;2:218–231. doi: 10.1167/2.3.2. [DOI] [PubMed] [Google Scholar]

- Czuba TB, Rokers B, Huk AC, Cormack LK. Speed and eccentricity tuning reveal a central role for the velocity-based cue to 3D visual motion. J Neurophysiol. 2010;104:2886–2899. doi: 10.1152/jn.00585.2009. [DOI] [PubMed] [Google Scholar]

- Czuba TB, Rokers B, Guillet K, Huk AC, Cormack LK. Three-dimensional motion aftereffects reveal distinct direction-selective mechanisms for binocular processing of motion through depth. J Vis. 2011;11:18. doi: 10.1167/11.10.18. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Czuba TB, Rokers B, Huk AC, Cormack LK. To CD or not to CD: Is there a 3D motion aftereffect based on changing disparities? J Vis. 2012;12:7. doi: 10.1167/12.4.7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Czuba TB, Huk AC, Cormack LK, Kohn A. Area MT encodes three-dimensional motion. J Neurosci. 2014;34:15522–15533. doi: 10.1523/JNEUROSCI.1081-14.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Engel SA, Rumelhart DE, Wandell BA, Lee AT, Glover GH, Chichilnisky EJ, Shadlen MN. fMRI of human visual cortex. Nature. 1994;369:525. doi: 10.1038/369525a0. [DOI] [PubMed] [Google Scholar]

- Favreau OE. Motion aftereffects: evidence for parallel processing in motion perception. Vision Res. 1976;16:181–186. doi: 10.1016/0042-6989(76)90096-1. [DOI] [PubMed] [Google Scholar]

- Favreau OE, Emerson VF, Corballis MC. Motion perception: a color-contingent aftereffect. Science. 1972;176:78–79. doi: 10.1126/science.176.4030.78. [DOI] [PubMed] [Google Scholar]

- Fetsch CR, DeAngelis GC, Angelaki DE. Bridging the gap between theories of sensory cue integration and the physiology of multisensory neurons. Nat Rev Neurosci. 2013;14:429–442. doi: 10.1038/nrn3503. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gu Y, Angelaki DE, Deangelis GC. Neural correlates of multisensory cue integration in macaque MSTd. Nat Neurosci. 2008;11:1201–1210. doi: 10.1038/nn.2191. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Huk AC, Ress D, Heeger DJ. Neuronal basis of the motion aftereffect reconsidered. Neuron. 2001;32:161–172. doi: 10.1016/S0896-6273(01)00452-4. [DOI] [PubMed] [Google Scholar]

- Huk AC, Dougherty RF, Heeger DJ. Retinotopy and functional subdivision of human areas MT and MST. J Neurosci. 2002;22:7195–7205. doi: 10.1523/JNEUROSCI.22-16-07195.2002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Julesz B. Foundations of cyclopean perception. Chicago: University of Chicago Press; 1971. [Google Scholar]

- Landy MS, Maloney LT, Johnston EB, Young M. Measurement and modeling of depth cue combination: in defense of weak fusion. Vision Res. 1995;35:389–412. doi: 10.1016/0042-6989(94)00176-M. [DOI] [PubMed] [Google Scholar]

- Larsson J, Landy MS, Heeger DJ. Orientation-selective adaptation to first- and second-order patterns in human visual cortex. J Neurophysiol. 2006;95:862–881. doi: 10.1152/jn.00668.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu Y, Vogels R, Orban GA. Convergence of depth from texture and depth from disparity in macaque inferior temporal cortex. J Neurosci. 2004;24:3795–3800. doi: 10.1523/JNEUROSCI.0150-04.2004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mather G, Verstraten F, Anstis S. The motion aftereffect: A modern perspective. Cambridge, MA: MIT Press; 1998. [DOI] [PubMed] [Google Scholar]

- Meredith MA, Stein BE. Interactions among converging sensory inputs in the superior colliculus. Science. 1983;221:389–391. doi: 10.1126/science.6867718. [DOI] [PubMed] [Google Scholar]

- Morgan ML, Deangelis GC, Angelaki DE. Multisensory integration in macaque visual cortex depends on cue reliability. Neuron. 2008;59:662–673. doi: 10.1016/j.neuron.2008.06.024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Movshon JA, Newsome WT. Visual response properties of striate cortical neurons projecting to area MT in macaque monkeys. J Neurosci. 1996;16:7733–7741. doi: 10.1523/JNEUROSCI.16-23-07733.1996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nadler JW, Angelaki DE, DeAngelis GC. A neural representation of depth from motion parallax in macaque visual cortex. Nature. 2008;452:642–645. doi: 10.1038/nature06814. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nadler JW, Nawrot M, Angelaki DE, DeAngelis GC. MT neurons combine visual motion with a smooth eye movement signal to code depth-sign from motion parallax. Neuron. 2009;63:523–532. doi: 10.1016/j.neuron.2009.07.029. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nefs HT, O'Hare L, Harris J. Two independent mechanisms for motion-in-depth perception: evidence from individual differences. Front Psychol. 2010;1:155. doi: 10.3389/fpsyg.2010.00155. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nestares O, Heeger DJ. Robust multiresolution alignment of MRI brain volumes. Magn Reson Med. 2000;43:705–715. doi: 10.1002/(SICI)1522-2594(200005)43:5%3C705::AID-MRM13%3E3.0.CO;2-R. [DOI] [PubMed] [Google Scholar]

- Ponce CR, Lomber SG, Born RT. Integrating motion and depth via parallel pathways. Nat Neurosci. 2008;11:216–223. doi: 10.1038/nn2039. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ponce CR, Hunter JN, Pack CC, Lomber SG, Born RT. Contributions of indirect pathways to visual response properties in macaque middle temporal area MT. J Neurosci. 2011;31:3894–3903. doi: 10.1523/JNEUROSCI.5362-10.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rokers B, Cormack LK, Huk AC. Strong percepts of motion through depth without strong percepts of position in depth. J Vis. 2008;8:6.1–10. doi: 10.1167/8.4.6. [DOI] [PubMed] [Google Scholar]

- Rokers B, Cormack LK, Huk AC. Disparity- and velocity-based signals for three-dimensional motion perception in human MT+. Nat Neurosci. 2009;12:1050–1055. doi: 10.1038/nn.2343. [DOI] [PubMed] [Google Scholar]

- Sakano Y, Allison RS, Howard IP. Motion aftereffect in depth based on binocular information. J Vis. 2012;12:11. doi: 10.1167/12.1.11. [DOI] [PubMed] [Google Scholar]

- Sanada TM, DeAngelis GC. Neural representation of motion-in-depth in area MT. J Neurosci. 2014;34:15508–15521. doi: 10.1523/JNEUROSCI.1072-14.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sereno MI, Dale AM, Reppas JB, Kwong KK, Belliveau JW, Brady TJ, Rosen BR, Tootell RB. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science. 1995;268:889–893. doi: 10.1126/science.7754376. [DOI] [PubMed] [Google Scholar]

- Shioiri S, Saisho H, Yaguchi H. Motion in depth based on inter-ocular velocity differences. Vision Res. 2000;40:2565–2572. doi: 10.1016/S0042-6989(00)00130-9. [DOI] [PubMed] [Google Scholar]

- Smolyanskaya A, Haefner RM, Lomber SG, Born RT. A modality-specific feedforward component of choice-related activity in MT. Neuron. 2015;87:208–219. doi: 10.1016/j.neuron.2015.06.018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tsutsui K, Sakata H, Naganuma T, Taira M. Neural correlates for perception of 3D surface orientation from texture gradient. Science. 2002;298:409–412. doi: 10.1126/science.1074128. [DOI] [PubMed] [Google Scholar]

- Walker JT. A texture-contingent visual motion aftereffect. Psychon Sci. 1972;28:333–335. doi: 10.3758/BF03328755. [DOI] [Google Scholar]

- Watanabe Y, Kezuka T, Harasawa K, Usui M, Yaguchi H, Shioiri S. A new method for assessing motion-in-depth perception in strabismic patients. Br J Ophthalmol. 2008;92:47–50. doi: 10.1136/bjo.2007.117507. [DOI] [PubMed] [Google Scholar]

- Watson AB, Pelli DG. QUEST: a Bayesian adaptive psychometric method. Percept Psychophys. 1983;33:113–120. doi: 10.3758/BF03202828. [DOI] [PubMed] [Google Scholar]

- Welchman AE, Deubelius A, Conrad V, Bülthoff HH, Kourtzi Z. 3D shape perception from combined depth cues in human visual cortex. Nat Neurosci. 2005;8:820–827. doi: 10.1038/nn1461. [DOI] [PubMed] [Google Scholar]

- Wichmann FA, Hill NJ. The psychometric function: II. Bootstrap-based confidence intervals and sampling. Percept Psychophys. 2001;63:1314–1329. doi: 10.3758/BF03194545. [DOI] [PubMed] [Google Scholar]