Abstract

There is a great deal of interest in using large scale brain imaging studies to understand how brain connectivity evolves over time for an individual and how it varies over different levels/quantiles of cognitive function. To do so, one typically performs so-called tractography procedures on diffusion MR brain images and derives measures of brain connectivity expressed as graphs. The nodes correspond to distinct brain regions and the edges encode the strength of the connection. The scientific interest is in characterizing the evolution of these graphs over time or from healthy individuals to diseased. We pose this important question in terms of the Laplacian of the connectivity graphs derived from various longitudinal or disease time points; quantifying its progression is then expressed in terms of coupling the harmonic bases of a full set of Laplacians. We derive a coupled system of generalized eigenvalue problems (and corresponding numerical optimization schemes) whose solution helps characterize the full life cycle of brain connectivity evolution in a given dataset. Finally, we show a set of results on a diffusion MR imaging dataset of middle aged people at risk for Alzheimer’s disease (AD), who are cognitively healthy. In such asymptomatic adults, we find that a framework for characterizing brain connectivity evolution provides the ability to predict cognitive scores for individual subjects and to estimate the progression of a participant’s brain connectivity into the future.

1. Introduction

Large scale scientific initiatives such as the Human Connectome Project (HCP) are beginning to provide exquisite imaging data that may eventually enable a full structural connectivity mapping of the human brain [28]. For instance, diffusion magnetic resonance imaging (dMRI), an imaging modality central to the aforementioned studies, captures, in a spatially localized (voxel-wise) manner, water diffusion properties that can be used to infer the arrangement of network pathways in the brain [23, 27]. After suitable preprocessing, e.g., via so-called tractography procedures, we obtain a unique view of the fiber bundle layout that connects distinct brain regions [10] (see Fig. 1). From an analysis perspective, once the spatial organization of these fiber bundles is expressed as a graph whose nodes represent separate brain regions and whose edges denote the “strength” of connection (e.g., the number of connecting inter-region fibers), a variety of analyses can be conducted [12, 13, 24, 34]. For example, we may ask whether a specific edge of the graph exhibits statistically different connectivity measurements across clinically disparate groups: diseased and healthy. If the results of this hypothesis test are statistically significant, we can conclude that the corresponding fiber bundle (pertaining to the edge) is possibly affected by the disease.

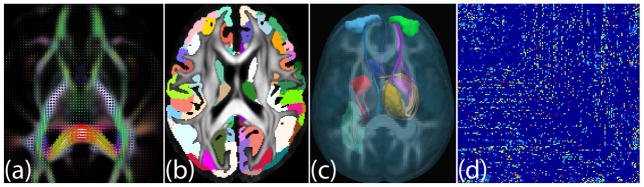

Figure 1.

(a) Diffusion tensor ellipsoids obtained from the dMRI data using non-linear estimation. (b) Anatomical regions in cortical and sub-cortical gray matter are used to define the nodes in the brain network. (c) Fiber tracts (axonal pathways between brain regions) estimated via tractography are used to define connectivity strength between various gray matter nodes in the brain. (d) The brain networks can be represented as symmetric adjacency matrices.

More recently, there is increasing interest not just in identifying imaging based or structural connectivity based biomarkers, but rather in quantitatively characterizing disease progression [3, 18, 31]. For example, studies may recruit subjects for multiple visits over a period of time (which varies from months to years) and acquire diffusion imaging data at several time points. In such longitudinal datasets, each subject (or sample) corresponds to multiple images (or the corresponding brain connectivities) at different time points. The scientific goal then is to identify the entire life cycle of brain connectivity evolution, from when a middle aged participant was healthy to a stage where the individual’s cognitive function has become much worse.

The standard approach to answering the question above is to characterize change in brain connectivity at the level of individual edges in the graph. There are two problems with this proposal. First, treating individual edges as primitives neglects the local context in which the edge exists in the actual object of interest, i.e., the entire connectivity graph of the individual. But from a technical perspective, a second issue is perhaps more important. For n regions, we obtain 𝒪(n²) edges. After learning a model (e.g., association of the connection weights with age) for each edge and estimating the significance of the fit (e.g., p-values), we cannot simply report the edges with small p-values as relevant. Since the analysis is being conducted for a large number of edges, the likelihood of false positives is high, so a severe multiple comparisons correction needs to be adopted. This correction may often be too conservative, and we may end up discarding connections that are, in fact, scientifically meaningful; this is an undesirable consequence of treating the edges individually. To avoid this problem, practitioners often rely on summary measures of the connectivity graph instead, such as clustering coefficients, small-worldness, modularity and so on [2, 20]. This works well but is limited in that we cannot uncover spatially localized effects of disease (or other covariates) on connectivity.

A more attractive solution is to think of each graph as a single object and utilize a suitable parameterization of the graph. One possibility is to use the Laplacians [19, 25], either at the level of individual subjects or in terms of distinct partitions of the full cohort progressively going from healthy to diseased, i.e., the first partition is comprised of completely healthy individuals whereas the k-th partition includes diseased subjects. Then, if we look at the full set of bases of the partition-specific Laplacian, we can come up with ways to characterize change in these bases as we move from the healthy to the diseased partition. Of course, such a parameterization also enables longitudinal analysis. For example, if we have data for multiple time points for each partition, we can track how the Laplacian bases evolve over time. To achieve these goals, i.e., for analyzing changes across partitions of disease severity or partition-specific longitudinal analysis, one requirement is the ability to derive a coupled set of harmonic bases for a set of ordered Laplacians (longitudinally and cross-sectionally). This allows treating the full data holistically while preserving the ordinal nature of time and disease-induced cognitive decline. While a mature body of literature in numerical analysis provides sophisticated ways of deriving an orthonormal set of bases for any self-adjoint operator, it provides little guidance on how to impose the coupling requirement, which is essential in this application. For example, we find that for most of the widely used eigenvalue decomposition methods [21, 32], it is non-trivial to modify the numerical scheme to satisfy the consistency requirement between consecutive sets of eigen-bases. Addressing this limitation is a goal of this paper.

Key contributions

With the foregoing motivation, the core of this work deals with deriving efficient numerical optimization schemes to solve an ordinally and longitudinally coupled set of generalized eigenvalue problems. To our knowledge, few publicly available alternatives currently exist [14, 15]. We provide (1) a novel formulation for estimating harmonic bases of brain connectivity networks that are smoothly varying in terms of both longitudinal and cross-sectional ordering (induced by a separate covariate such as cognitive performance). We provide an iterative numerical scheme for solving the problem using stochastic block coordinate descent based manifold optimization techniques. (2) We show that such a framework provides an exciting scientific tool in the following sense. Once the model has been estimated, we can vary a single parameter and “see” how the structural brain connectivity of an individual evolves over time or as a function of disease (see Fig. 2 for a qualitative demonstration). This yields a valuable mechanism for performing individual-level prediction. We show how our algorithm is able to provide connectivity prediction in a population of healthy controls who have some known risk factors of AD. Even though these individuals are asymptomatic, our approach is able to obtain a nominal degree of accuracy in assigning the subject to distinct cognitive quantiles. Demonstrating that we can, in fact, obtain a better than chance accuracy where the disease signal is so weak is the main contribution of this work.

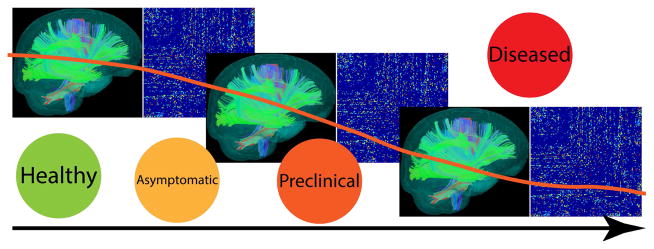

Figure 2.

Using coupled harmonic representations derived from brain connectivity networks, we can turn a “knob” and see how a network evolves as the cognitive stage changes from healthy to diseased.

2. Coupled harmonic bases for brain networks

Parameterization

Let us first describe a simple procedure for parameterizing the brain connectivity network of an individual subject in terms of its bases. Let A be an n × n weighted adjacency matrix, as in Fig. 1(d), representing the brain connectivity between n regions of the brain for a subject. We construct the Laplacian L, a commonly used tool/parameterization for representing graphs, defined as

$$L = D - A,$$

where D is the degree matrix, i.e., the diagonal matrix with $D_{ii} = \sum_{j} A_{ij}$. Based on spectral graph theory, the eigenvectors corresponding to the smallest eigenvalues contain the ‘low frequency’ information which reflects the latent structure of the Laplacian. The bases are estimated by minimizing the following objective function

$$\min_{V \in \mathbb{R}^{n \times p}} \ \operatorname{tr}\left(V^{T} L V\right) \quad \text{s.t.} \quad V^{T} V = I, \tag{1}$$

where tr(·) is the trace functional. The solution V to the above optimization problem consists of the eigenvectors associated with the p smallest eigenvalues of L, so that a given brain connectivity network can be expressed via its Laplacian and/or its p eigenvectors/eigenvalues.
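As a rough illustration of this parameterization, the sketch below builds a Laplacian for a toy network and extracts the p eigenvectors with the smallest eigenvalues. It assumes the unnormalized form L = D − A; the random adjacency matrix, the sizes `n` and `p`, and the helper name `laplacian_bases` are hypothetical and not taken from the paper.

```python
import numpy as np

def laplacian_bases(A, p):
    """Return L = D - A and its p eigenpairs with the smallest eigenvalues."""
    D = np.diag(A.sum(axis=1))        # degree matrix (row sums of A)
    L = D - A                         # unnormalized graph Laplacian (assumed form)
    evals, evecs = np.linalg.eigh(L)  # eigh returns eigenvalues in ascending order
    return L, evals[:p], evecs[:, :p]

# Hypothetical toy network: symmetric non-negative weights, zero diagonal.
rng = np.random.default_rng(0)
n, p = 8, 3
W = rng.random((n, n))
A = np.triu(W, 1) + np.triu(W, 1).T

L, evals, V = laplacian_bases(A, p)
objective = np.trace(V.T @ L @ V)     # tr(V^T L V) evaluated at the minimizer
```

At the minimizer, the trace objective equals the sum of the p smallest eigenvalues, which gives a quick sanity check on any solver used in place of `numpy.linalg.eigh`.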

Now suppose we are given a longitudinal dMRI dataset for N subjects with T time points: this provides NT brain networks. We can parameterize all the networks simultaneously by minimizing the following objective function

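$$\min_{\{V[i,j]\}} \ \sum_{i=1}^{N} \sum_{j=1}^{T} \operatorname{tr}\left(V[i,j]^{T} L[i,j]\, V[i,j]\right) \quad \text{s.t.} \quad V[i,j]^{T} V[i,j] = I \quad \forall\, i, j, \tag{2}$$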

where L[i,j] denotes the Laplacian matrix of brain network for subject i and time point j and V[i,j] denotes the set of p eigenvectors for L[i,j].

However, this formulation ignores a couple of key properties of our analysis goal (conceptually shown in Fig. 3). (1) Each subject has multiple time points which means that not all networks in the population are ‘independent’. There are strong dependencies among the networks derived from a single person observed over time. (2) The subjects can, if desired, be partitioned into distinct groups if a covariate of interest for the subjects is close enough (i.e., similar cognitive scores or a measure of pathology such as amyloid protein load may have roughly similar connectivity strength [6]). This suggests that the bases that we find must also be related, or coupled, while still respecting, to the extent possible, their original Laplacians. If we consider the population of networks as a system, the recovery of the full set of bases must ensure a notion of consistency among {V[i,j]}, governed by either the cognitive score grouping or longitudinal ordering described above.

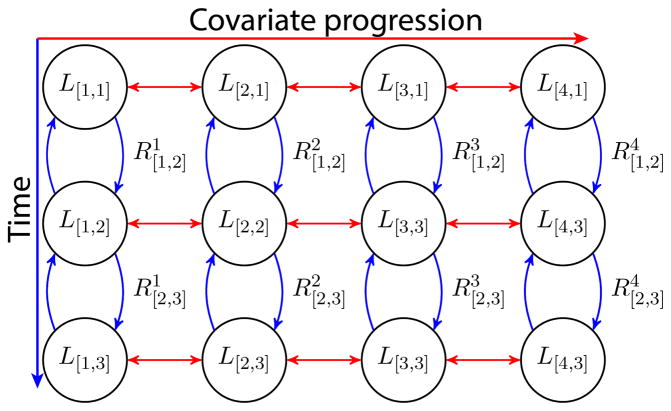

Figure 3.

The graph representation of the coupled data matrices. The nodes in each row (cross-sectional) are coupled horizontally in red while the nodes in each column (longitudinal) are coupled vertically in blue.

We now present our proposed framework for adding constraints in (2) that will ensure that the full set of Laplacians are treated jointly. Without loss of generality, we can work with the example dataset scenario presented in Fig. 3.

2.1. Longitudinal coupling

In this section, we introduce basis coupling constraints that model the relationships (blue arrows in Fig. 3) between temporally consecutive bases. Suppose we consider the bases V[•,j] and V[•,j+1] for two consecutive time points j and j + 1 for a specific subject. Since these are derived from the Laplacians of the same subject, we impose a homology constraint between the latent structures. In other words, we expect that V[•,j] and V[•,j+1] differ only by a small degree of rotation. Specifically, we impose

| (3) |

where R[•,j] ∈ SO(n), the group of n × n orthogonal matrices with determinant +1. This is, in fact, a Procrustes problem [30] of aligning the bases, which expresses the longitudinal evolution of the set of bases as a sequence of rotation matrices. Note that the rotation matrix which aligns V[•,j+1] to V[•,j] is simply the transpose of R[•,j].
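A minimal sketch of such a rotation-constrained Procrustes alignment is given below. It uses the standard SVD-based (Kabsch-style) solution with a determinant correction; whether this matches the exact solver used for (3) is an assumption, and the function name and the synthetic bases are hypothetical.

```python
import numpy as np

def procrustes_rotation(V_a, V_b):
    """Rotation R in SO(n) minimizing ||R @ V_a - V_b||_F (SVD-based solution)."""
    U, _, Wt = np.linalg.svd(V_b @ V_a.T)
    D = np.eye(U.shape[0])
    D[-1, -1] = np.sign(np.linalg.det(U @ Wt))  # flip last axis to force det(R) = +1
    return U @ D @ Wt

# Synthetic example: V_next is an exactly rotated copy of V_j.
rng = np.random.default_rng(1)
n, p = 6, 3
V_j, _ = np.linalg.qr(rng.standard_normal((n, p)))
R_true, _ = np.linalg.qr(rng.standard_normal((n, n)))
if np.linalg.det(R_true) < 0:
    R_true[:, 0] *= -1                          # make it a proper rotation
V_next = R_true @ V_j

R = procrustes_rotation(V_j, V_next)            # aligns V_j to V_next
V_back = R.T @ V_next                           # the transpose aligns V_next back to V_j
```

Because p < n here, the recovered `R` need not equal `R_true`; it only has to map `V_j` onto `V_next`, which is all the alignment requires.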

Now, for eigenvectors V[•,j] and V[•,j+1], we see that

| (4) |

| (5) |

Multiplying the above two equations we have

Thus, we have now added a constraint on V[•,j] that addresses the coupling between j and j + 1 as

| (6) |

Note that for j ∉ {1, T}, each V[•,j] is tied to V[•,j−1] and V[•,j+1]. Thus, the coupling matrices for those two relationships are and respectively. To account for both relations, we take the average coupling matrix as [7]:

| (7) |

For the boundary values of j = 1 and j = T, and respectively. Thus, for a subject i, we derive the following optimization model for discovering longitudinally coupled bases for the brain connectivity Laplacians,

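$$\min_{\{V[i,j]\}_{j=1}^{T}} \ \sum_{j=1}^{T} \operatorname{tr}\left(V[i,j]^{T} L[i,j]\, V[i,j]\right) \quad \text{s.t.} \quad V[i,j]^{T} M[i,j]\, V[i,j] = I, \quad j = 1, \dots, T. \tag{8}$$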

Recall that the above constraint is the so-called generalized Stiefel constraint [1] with the mass matrix M[i,j]. This is also known as the generalized eigenvalue problem, which finds the p smallest eigenvalues and the respective eigenvectors of L[i,j] with respect to the mass matrix M[i,j]. We can finally extend this formulation to all subjects as follows,

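$$\min_{\{V[i,j]\}} \ \sum_{i=1}^{N} \sum_{j=1}^{T} \operatorname{tr}\left(V[i,j]^{T} L[i,j]\, V[i,j]\right) \quad \text{s.t.} \quad V[i,j]^{T} M[i,j]\, V[i,j] = I \quad \forall\, i, j. \tag{9}$$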

We point out that for each i and j, the above model is equivalent to (8).
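For a single fixed mass matrix, the generalized eigenvalue problem with the constraint VᵀMV = I can be sketched as follows. The mass matrix below is an arbitrary symmetric positive definite stand-in, not the rotation-based matrix of (6) and (7), and the reduction through a Cholesky factor is one standard approach among several; the function name is hypothetical.

```python
import numpy as np

def generalized_smallest_eig(L, M, p):
    """p smallest generalized eigenpairs of L v = lambda M v, with V^T M V = I."""
    C = np.linalg.cholesky(M)         # M = C C^T with C lower triangular
    C_inv = np.linalg.inv(C)
    S = C_inv @ L @ C_inv.T           # equivalent standard symmetric problem
    S = (S + S.T) / 2                 # remove round-off asymmetry
    evals, U = np.linalg.eigh(S)      # ascending eigenvalues
    V = C_inv.T @ U[:, :p]            # map back: V = C^{-T} U
    return evals[:p], V

rng = np.random.default_rng(2)
n, p = 8, 3
W = rng.random((n, n))
A = np.triu(W, 1) + np.triu(W, 1).T
L = np.diag(A.sum(axis=1)) - A        # toy Laplacian
B = rng.standard_normal((n, n))
M = B @ B.T + n * np.eye(n)           # hypothetical SPD mass matrix

evals, V = generalized_smallest_eig(L, M, p)
```

The returned `V` lies on the generalized Stiefel manifold defined by `M`, which is the feasibility condition the coupled formulation relies on.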

2.2. Cross-sectional coupling

In this section, we present the appropriate constraints that will encode cross-sectional dependencies among the eigenvectors {V[i,j]}. Let us say the population can be partitioned into K distinct groups (columns in Fig. 3) where each group/column is cognitively equivalent based on some battery of tests. Such partitions may also be derived by certain measures of pathology. For each group, we first construct an average Laplacian X[i,•] at a fixed time point. This average Laplacian serves as a representative of that specific group. In principle, we can work with individual level Laplacians L; however, since the goal is to formulate the coupling of bases with respect to the covariates, the averaging helps us reduce the individual level variability and provides a more succinct picture of the network evolution along the trajectory of that covariate (e.g., cognitive scores).

Let us consider three such average Laplacians X[i−1,•], X[i,•] and X[i+1,•] from three consecutive/ordered partitions. The corresponding eigenvectors will be V[i−1,•], V[i,•] and V[i+1,•]. Since these bases are derived from partitions with disjoint/distinct groups of subjects, we cannot assume a homological relationship between them. Nonetheless, since the coupling constraints are added only between adjacent partitions, it is reasonable to assume that the bases will not change so drastically as to affect the full connectivity network. We encode this requirement as a sparsity constraint on the difference of the bases, e.g., via the ℓ0 norm. We use the relaxed ℓ1 alternative,

| (10) |

where λ > 0 is the regularization parameter which controls only the magnitude of the cross-sectional coupling.

Intuitively, it enforces similarities in certain dimensions of the cross-sectional bases while allowing the others to vary freely. In other words, we preserve some structural consistencies across the groups while still allowing the group-wise bases to be different. Note that for i = 1 and i = K, the regularization terms will only contain the first term and the second term of (10) respectively. The cross-sectionally coupled bases (V[i,•]) can then be estimated by minimizing the following,

| (11) |
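The cross-sectional regularizer and one of its subgradients can be sketched as below. Since the exact expression is not reproduced here, the sketch assumes the penalty is λ times the sum of elementwise ℓ1 norms of the differences between a basis and its two neighbouring partitions, with a missing neighbour simply dropped at the boundaries; the function name and toy matrices are hypothetical.

```python
import numpy as np

def l1_coupling(V_prev, V_cur, V_next, lam):
    """Assumed penalty lam * (||V_cur - V_next||_1 + ||V_cur - V_prev||_1)
    and one subgradient w.r.t. V_cur. Pass None for a missing neighbour."""
    value = 0.0
    subgrad = np.zeros_like(V_cur)
    for V_nb in (V_next, V_prev):
        if V_nb is None:              # boundary partition: only one term remains
            continue
        diff = V_cur - V_nb
        value += lam * np.abs(diff).sum()
        subgrad += lam * np.sign(diff)  # sign(0) = 0 is a valid subgradient choice
    return value, subgrad

V_cur = np.array([[1.0, 0.0], [0.0, 1.0]])
V_prev = np.zeros((2, 2))
V_next = np.array([[1.0, 0.0], [0.0, 0.5]])

value, subgrad = l1_coupling(V_prev, V_cur, V_next, lam=2.0)
boundary_value, _ = l1_coupling(None, V_cur, V_next, lam=2.0)
```

Entries where neighbouring bases already agree contribute nothing to the penalty or the subgradient, which is the sense in which the ℓ1 term enforces similarity in some dimensions while leaving others free.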

Putting it all together, we have the following coupled generalized eigenvalue formulation,

| (12) |

We have now imposed both the longitudinal and cross-sectional basis coupling: for all partitions and time points.

3. Optimization scheme for coupled bases

In this section, we present an efficient numerical procedure for solving (12). Recall that the constraints involving the mass matrix form the generalized Stiefel manifold. We first present a few relevant technical details of the Stiefel manifold for completeness.

The set GFn,p = {u ∈ ℒ(ℝn, ℝp) : rank(u) = n} of n-frames in ℝp is called the Stiefel manifold. When p = n, GFn,n := GFn is the general linear group, i.e., the set of n × n matrices with nonzero determinant. In the setting used here, a Stiefel manifold is the set of n × p matrices with orthonormal columns (with a Riemannian structure). ℒ(ℝn, ℝp) is the vector space of linear maps from ℝn to ℝp, identified with the space ℝn×p of n × p matrices. Additional details on these topics, such as the tangent space, exponential map and retraction, are available in [1].

Algorithm 1.

Stochastic block coordinate descent in GFn,p

| 1: | Given: f : GFn,p → ℝ, V ∈ GFn,p(M), M ∈ ℝn×n |

| 2: | while Convergence criteria not met do |

| 3: | 𝒮 := Subproblem row indices |

| 4: | P0 := Initial feasible submatrix (19) |

| 5: | G := Subdifferential of f w.r.t. P0 (20) |

| 6: | W := Descent curve in the direction of −G on GFs,p(M𝒮𝒮) at P0 (22) |

| 7: | τ:= Step size under strong Wolfe conditions [17] |

| 8: | P := Feasible point W(τ) of subproblem with sufficient decrease in f |

| 9: | V′ (P) := Update new feasible point (23) |

| 10: | end while |

Our main strategy is to perform block coordinate descent over GFn,p to solve for {V[i,j]} for each matrix X[i,j] given in (12). Specifically, our algorithm iteratively decreases the objective value of the problem by finding the next feasible point in a curve which lies in the generalized Stiefel manifold GFn,p described in the constraints of (8), by adapting the scheme in [4, 9, 33]. This process is invoked as a module within our full pipeline.

Next, we show the entire framework of our algorithm, which solves for all V[i,j] of the model (12) by iteratively solving for each V[i,j] while fixing the other decision variables V[i′,j′], ∀(i′, j′) ≠ (i, j). In each iteration, we also update the mass matrix M[i,j] using the most recent bases.

Stochastic block coordinate descent in GFn,p

For simplicity, let us focus on a single arbitrary partition with its Laplacian X, mass matrix M and eigenvectors V, and set up the following coupled model as in (11):

$$\min_{V \in \mathbb{R}^{n \times p}} \ \operatorname{tr}\left(V^{T} X V\right) + \lambda\, g(V) \quad \text{s.t.} \quad V^{T} M V = I, \tag{13}$$

where g(V) is the regularization term from (10). First, we show how to solve (13) on a subset of the dimensions. This is a very common procedure in a coordinate descent method where the dimensions can be computed nearly independently to allow parallelization and make large-scale implementation possible. Specifically, we construct a subproblem for each submatrix V𝒮· ∈ ℝs×p where 𝒮 is a subset of s row indices of V. We choose this submatrix as the free variable, which we ultimately solve for, while fixing the complementary submatrix V𝒮̄· for the rows 𝒮̄, called the fixed variable, which we essentially treat as constant. Assuming w.l.o.g. that V𝒮· contains the first s rows of V and its complement V𝒮̄· contains the remaining rows, the constraint VTMV = I in (13) can be reorganized as

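$$V_{\mathcal{S}\cdot}^{T} M_{\mathcal{S}\mathcal{S}} V_{\mathcal{S}\cdot} + V_{\mathcal{S}\cdot}^{T} M_{\mathcal{S}\bar{\mathcal{S}}} V_{\bar{\mathcal{S}}\cdot} + V_{\bar{\mathcal{S}}\cdot}^{T} M_{\bar{\mathcal{S}}\mathcal{S}} V_{\mathcal{S}\cdot} + V_{\bar{\mathcal{S}}\cdot}^{T} M_{\bar{\mathcal{S}}\bar{\mathcal{S}}} V_{\bar{\mathcal{S}}\cdot} = I. \tag{14}$$

Rearranging it to move all the fixed variables to one side results in

$$V_{\mathcal{S}\cdot}^{T} M_{\mathcal{S}\mathcal{S}} V_{\mathcal{S}\cdot} + V_{\mathcal{S}\cdot}^{T} M_{\mathcal{S}\bar{\mathcal{S}}} V_{\bar{\mathcal{S}}\cdot} + V_{\bar{\mathcal{S}}\cdot}^{T} M_{\bar{\mathcal{S}}\mathcal{S}} V_{\mathcal{S}\cdot} = I - V_{\bar{\mathcal{S}}\cdot}^{T} M_{\bar{\mathcal{S}}\bar{\mathcal{S}}} V_{\bar{\mathcal{S}}\cdot} =: \hat{H} \tag{15}$$

for a constant matrix Ĥ. With the full-rank assumption on M𝒮𝒮, completing the square results in the following:

$$\left(V_{\mathcal{S}\cdot} + M_{\mathcal{S}\mathcal{S}}^{-1} M_{\mathcal{S}\bar{\mathcal{S}}} V_{\bar{\mathcal{S}}\cdot}\right)^{T} M_{\mathcal{S}\mathcal{S}} \left(V_{\mathcal{S}\cdot} + M_{\mathcal{S}\mathcal{S}}^{-1} M_{\mathcal{S}\bar{\mathcal{S}}} V_{\bar{\mathcal{S}}\cdot}\right) = \hat{H} + V_{\bar{\mathcal{S}}\cdot}^{T} M_{\bar{\mathcal{S}}\mathcal{S}} M_{\mathcal{S}\mathcal{S}}^{-1} M_{\mathcal{S}\bar{\mathcal{S}}} V_{\bar{\mathcal{S}}\cdot} =: H \tag{16}$$

for a new constant matrix H. Since we assume that M is positive definite, M𝒮𝒮 is also positive definite and invertible.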

Now, given an orthogonal subproblem decision matrix P, the next feasible iterate can be provided as

| (17) |

which satisfies the constraints in (14). Note that by using a retraction, we can smoothly map the tangent vectors to the manifold and preserve the key properties of the exponential map necessary to perform feasible descent on the manifold. For the Stiefel manifold, a computationally efficient retraction comes from the Cayley transform, which can be extended to the generalized Stiefel manifold as shown by Equation (1.2) and Lemma 4.1 of [33]. Consequently, we can eliminate the extra computation of the matrix square roots and simplify (17) to the following:

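$$V_{\mathcal{S}\cdot}(P) = P - M_{\mathcal{S}\mathcal{S}}^{-1} M_{\mathcal{S}\bar{\mathcal{S}}} V_{\bar{\mathcal{S}}\cdot}. \tag{18}$$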

If PTM𝒮𝒮P = H for M𝒮𝒮 ≻ 0 and non-singular H, the above equation satisfies the subproblem constraint. Thus, given the previous V, the descent curve starts at the point:

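$$P_{0} = V_{\mathcal{S}\cdot} + M_{\mathcal{S}\mathcal{S}}^{-1} M_{\mathcal{S}\bar{\mathcal{S}}} V_{\bar{\mathcal{S}}\cdot}. \tag{19}$$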
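The block reorganization of the constraint can be checked numerically. The sketch below assumes a symmetric positive definite M and takes the free block to be the first s rows; it forms the shifted variable P0 = V𝒮· + M𝒮𝒮⁻¹M𝒮𝒮̄V𝒮̄· and the constant matrix obtained by completing the square, then confirms that the subproblem constraint P0ᵀM𝒮𝒮P0 = H holds. The mass matrix, sizes and variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p, s = 10, 3, 4

# Hypothetical SPD mass matrix and a feasible V with V^T M V = I.
B = rng.standard_normal((n, n))
M = B @ B.T + n * np.eye(n)
C = np.linalg.cholesky(M)                        # M = C C^T
Q, _ = np.linalg.qr(rng.standard_normal((n, p)))
V = np.linalg.solve(C.T, Q)                      # V = C^{-T} Q, so V^T M V = Q^T Q = I

S, Sbar = np.arange(s), np.arange(s, n)          # free rows and fixed rows
M_SS = M[np.ix_(S, S)]
M_SSb = M[np.ix_(S, Sbar)]
M_SbSb = M[np.ix_(Sbar, Sbar)]
V_S, V_Sb = V[S], V[Sbar]

shift = np.linalg.solve(M_SS, M_SSb @ V_Sb)      # M_SS^{-1} M_{S,Sbar} V_Sbar
H_hat = np.eye(p) - V_Sb.T @ M_SbSb @ V_Sb       # fixed terms moved to one side
H = H_hat + (M_SSb @ V_Sb).T @ shift             # constant after completing the square
P0 = V_S + shift                                 # starting point of the subproblem

V_S_recovered = P0 - shift                       # mapping back from P to the free block
```

Any other `P` with `P.T @ M_SS @ P` equal to `H` maps back to a feasible `V` in the same way, which is why the line search can be carried out entirely on the smaller s × p block.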

So far, we have shown how to set up the initial point for the line search step. Next, we describe how to compute the descent curve of the subproblem on the generalized Stiefel manifold for the line search on the manifold. The first step is to find the gradient of the objective function of (13), which is f(V) = tr(VTXV) + λ g(V). Thus, writing the next feasible point V as a function of P as in (18), the gradient of f ○ V(P) w.r.t. P is

| (20) |

where g′(V(P)) is the subgradient of the regularization term (10). Next, we project the descending subgradient −G at P0 onto the tangent space of the manifold GFs,p(M𝒮𝒮) by constructing a skew-symmetric matrix:

| (21) |

which conveniently allows the Cayley transform as in [33] to smoothly map from the tangent space to the generalized Stiefel manifold to create the descent curve W as a function of τ:

| (22) |

We can perform a line search over the descent curve to find the new point P = W(τ) for some τ which results in a sufficient decrease in f. Thus, the next feasible decision variable V′ ∈ ℝn×p as a function of P is

| (23) |

so we can finally assign the current V with V′. By the construction of the coupled bases model (13), V′ is updated to minimize the objective function while it remains coupled with the other longitudinal and cross-sectional bases connected to V. The pseudo-code of the algorithm is in Alg. 1.
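The feasibility-preserving property of a Cayley-type curve can be illustrated with the sketch below. It assumes the common construction with the skew-symmetric matrix A = GPᵀ − PGᵀ and the curve W(τ) = (I + (τ/2)AM)⁻¹(I − (τ/2)AM)P, which may differ in detail from (21) and (22); the gradient `G` is a random stand-in, and the example checks only that the constraint WᵀMW = PᵀMP is preserved, not that the objective decreases.

```python
import numpy as np

def cayley_curve(P, G, M, tau):
    """Point W(tau) on a Cayley-type curve through P that keeps W^T M W = P^T M P."""
    A = G @ P.T - P @ G.T                 # skew-symmetric by construction
    AM = A @ M
    I = np.eye(P.shape[0])
    # Solve (I + tau/2 * A M) W = (I - tau/2 * A M) P instead of forming an inverse.
    return np.linalg.solve(I + 0.5 * tau * AM, (I - 0.5 * tau * AM) @ P)

rng = np.random.default_rng(5)
s, p = 6, 3
B = rng.standard_normal((s, s))
M_SS = B @ B.T + s * np.eye(s)            # hypothetical SPD sub-block of the mass matrix
P0 = rng.standard_normal((s, p))          # current subproblem point
G = rng.standard_normal((s, p))           # stand-in for the subgradient at P0
H = P0.T @ M_SS @ P0                      # constraint value to be preserved

W = cayley_curve(P0, G, M_SS, tau=0.3)
```

In a full solver, `tau` would be chosen by a line search along this curve, as in line 7 of Alg. 1, rather than fixed in advance.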

Algorithm 2.

Coupled bases framework using SBCD

| 1: | Given: f : GFn,p → ℝ, V[:,:] ∈ GFn,p(M[:,:]), M[:,:] ∈ ℝn×n |

| 2: | while Convergence criteria not met do |

| 3: | for i = 1,…, K do |

| 4: | for j = 1,…, T do |

| 5: | V[i,j] := Free variable |

| 6: | V[i,j] := SBCD(V[i,j]) (Alg. 1) |

| 7: | end for |

| 8: | for j = 1,…, T do |

| 9: | R[i,j] := Rotation matrix (3) |

| 10: | end for |

| 11: | for j = 1,…, T do |

| 12: | M[i,j] := Mass matrix (6), (7) |

| 13: | end for |

| 14: | end for |

| 15: | end while |

Iterative SBCD in GFn,p for bases coupling

With the stochastic block coordinate descent (SBCD) method, roughly similar to [16], as a solver for a single coupled basis, we now set up the framework to solve for all coupled bases. Specifically, given multiple matrices X[i,j] for i = 1,…, K and j = 1,…, T, we set up grid-like iterations as shown in Alg. 2 where we iteratively pass through all possible pairs of i and j. In each iteration, we set V[i,j] for the current pair of i and j to be the free variable and set V[i′,j′] for all remaining pairs (i′, j′) ≠ (i, j) to be the fixed variables. We solve for only the free variable V[i,j] using SBCD, iterating over all i and j.

Since (13) imposes the longitudinal coupling through mass matrices that are precomputed from the bases available at that iteration, these matrices, which involve the rotation matrices of the bases, might not reflect the most accurate longitudinal relations. Therefore, we must update the mass matrices so that they reflect the most recent bases (encoding the naturally derived longitudinal trajectory) by recomputing the rotation matrices of the newly updated bases. Thus, the mass matrix computation step immediately follows the bases update step. We repeat these steps iteratively until the convergence criteria are met.

4. Experiments

Data and scientific goal

Our dataset focuses on a cohort of middle aged individuals who are at risk for Alzheimer’s disease (AD) due to a positive family history (at least one parent with confirmed diagnosis of AD). Our data corresponds to at least three longitudinal scans of these subjects. The participants are cognitively healthy but some AD related brain changes, while subtle, have already begun. Note that in the analysis of anatomical changes in standard magnetic resonance images (not brain connectivity as we do here), numerous findings suggest that accurate quantification of brain “changes”, e.g., via tensor based morphometry, is often more sensitive than the analysis of individual images independently [8]. But in the context of AD (and for other brain disorders) most, if not all, such studies focus on data that cover the full spectrum of the disease (healthy to AD) – the disease effects of those data are much stronger and arguably easier to detect than those in the setting we consider here. We expect that estimating the longitudinal change process in connectivity accurately via the coupled harmonic bases model will enable identifying a disease signal even in the pool of healthy (but at-risk) individuals.

Workflow for deriving brain connectivity networks

There are three key steps in deriving brain connectivity networks for a given population study. (a) Coordinate system. For population level analysis of brain images, one typically needs to register all the images onto a standard coordinate system in a way that avoids any unwanted biases. We follow recommended procedures for deriving an unbiased coordinate system for the 3D+time regime as follows [11]. We first estimate a subject-specific average that is temporally unbiased. The subject-specific averages are then used to generate an unbiased population level average template space; the process is summarized in Fig. 4. (b) Edges. We use tractography for deriving edges of the in vivo brain (structural) networks. The key ingredient needed for these algorithms is the orientational information of white matter fibers passing through a voxel, inferred by fitting a tissue model to the acquired MR signal from diffusion weighted imaging. Our data was acquired at a single shell diffusion weighting of b = 1000 s/mm². Although limited in its ability to resolve crossing fiber tissue, for this data the most reliable model that can be fit is the so-called diffusion tensor. The principal eigenvector of the diffusion tensor in a voxel provides a proxy to the predominant orientation of the white matter fibers in that voxel. Using this information, we repeated probabilistic tractography twenty times [5]. The normalized standard deviation of the eigenvalues of the tensor (also known as fractional anisotropy) in the tracts passing between two regions serves as the edge strength between nodes in our experiments. (c) Nodes. The third key component is the node definition. For defining nodes, we relied on expert neuroanatomy groups who have carefully delineated the boundaries of regions in the brain based on their knowledge (including histological studies). We used the gray matter atlas defined on a DTI template [29] which provides 160 distinct regions in the brain.

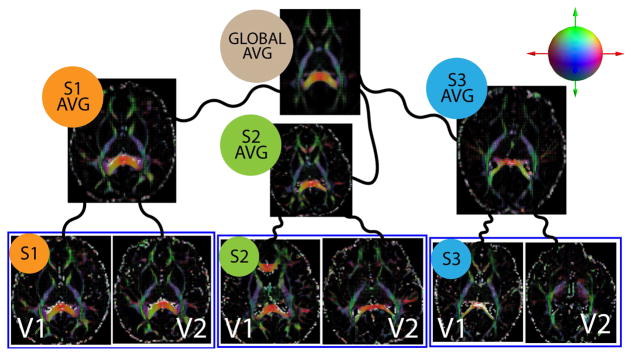

Figure 4.

Unbiased estimation of the global coordinate system for the longitudinally acquired imaging data. Visits are averaged first, and the resulting averages are then used to estimate the global average. Each of the curved black lines represents a combination of rigid, affine and non-linear diffeomorphic transformations. These transformations and the spatial averages are estimated iteratively. Diffusion tensors are directly registered using the log-Euclidean framework [35].

Covariates for creating sub-cohorts/partitions

We partition the full cohort using two key ordinal covariates: Rey Auditory Verbal Learning Test (RAVLT) [22] and Mini Mental State Exam (MMSE) [26]. These are cognitive performance scores based on tests that assess the cognitive functioning of the subjects and are common in preclinical assessments of AD. Note that there is a systematic effect of age and sex on these scores. To control for these nuisance variables, we perform regression against these variables and derive z-scores for both RAVLT and MMSE. This imposes an implicit ordering of the subjects for the two measures, after the effect of age and sex has been accounted for. We derive K partitions of the z-scores to “stage” the cognitive status (and the subjects) into distinct cognitive quantiles. This implies that even within the full set of “healthy” individuals, subjects that fall within the same quantile are similar. If this staging is finer, we have fewer samples in each partition. We used K ∈ {2, 3, 4} partitions to keep the individual-level variance manageable while still allowing us to identify the general connectivity evolution patterns. Although we assume that all participants are recruited into the study concurrently (which may not be true), since they are assigned to distinct cognitive quantiles, estimating their longitudinal trajectories is reasonable (also, since the entire cohort is middle aged). In case of uneven distribution along the time axis, standard imputation strategies may be needed. Here, we only utilized data where all three time points were available to keep the presentation simple and avoid concerns related to the potential effect/bias of the specific imputation methods. Once the subjects are assigned to the appropriate partitions based on their z-scores (columns in Fig. 3), we can derive the average Laplacians, formulate the system as (12) and solve for the coupled bases.
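The staging step can be sketched as follows: regress a cognitive score on age and sex, standardize the residuals, and cut them into K quantile groups. The linear model, the 0/1 coding of sex, the equal-frequency quantile cut and all data below are assumptions made for illustration; the scores are synthetic, not study data.

```python
import numpy as np

def adjusted_z_scores(score, age, sex):
    """Residuals of score after linear regression on age and sex, standardized."""
    X = np.column_stack([np.ones_like(age, dtype=float), age, sex])
    beta, *_ = np.linalg.lstsq(X, score, rcond=None)
    resid = score - X @ beta
    return (resid - resid.mean()) / resid.std(ddof=1)

def quantile_partitions(z, K):
    """Assign each subject to one of K equal-frequency quantile groups (0..K-1)."""
    edges = np.quantile(z, np.linspace(0, 1, K + 1)[1:-1])  # K-1 interior cut points
    return np.digitize(z, edges)

# Synthetic cohort (hypothetical values only).
rng = np.random.default_rng(3)
N, K = 68, 4
age = rng.uniform(45, 65, N)
sex = rng.integers(0, 2, N).astype(float)
score = 50 - 0.3 * age + 2.0 * sex + rng.normal(0, 5, N)

z = adjusted_z_scores(score, age, sex)
labels = quantile_partitions(z, K)
counts = np.bincount(labels, minlength=K)
```

With a finer staging (larger K) each group in `counts` shrinks, which is the trade-off between resolution and per-partition sample size noted above.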

Design

We use 68 subjects with three longitudinal time points and partition them for different K based on RAVLT and MMSE z-scores. Then, for each setting, we compute four sets of bases: (a) non-coupled (2), (b) longitudinally coupled (9), (c) cross-sectionally coupled ((12) with matrix M = I) and (d) longitudinally+cross-sectionally coupled (12). Thus, each partition now has a set of longitudinal bases (vertical direction in Fig. 3). For a novel test subject, we can calculate the corresponding connectivity graph and compare it to each of the K partitions. The quantile of the closest partition is the label of this new subject. First, to measure the overall accuracy of this procedure, we use 21 ‘held out’ test subjects, each with three longitudinal scans available; to avoid overfitting, these subjects were not used to compute the four sets of bases. So, we have a total of 21 × 3 = 63 distinct Laplacians. We perform two classification tasks. First, we only predict the quantiles of the Laplacians at the first (or baseline) time point. Next, we predict all 63 Laplacians, which is expected to be much harder since the quantiles of the subsequent time points are not used in deriving the partitions. Nonetheless, we expect that if our coupled bases are accurate, the information from the first time point should, in principle, affect subsequent time points in a way that allows our model to still predict the label correctly. We evaluated p ∈ {n/4, n/2, 3n/4} and chose p = n/4 since the residual is extremely small beyond n/2. We used |𝒮| = 40, but for larger datasets, it can be set to be larger if computationally feasible while also considering the approximation/speed trade-off. Lastly, we used λ ∈ {1, 20, 50}.
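The nearest-partition labeling rule can be sketched as below. The sketch simplifies to one basis per partition and measures closeness with the Frobenius (ℓ2) norm of the difference; the per-column sign alignment is an added assumption to handle the sign ambiguity of eigenvectors, and the function names and random bases are hypothetical.

```python
import numpy as np

def align_signs(V, V_ref):
    """Flip column signs of V so each column correlates non-negatively with V_ref."""
    signs = np.sign(np.sum(V * V_ref, axis=0))
    signs[signs == 0] = 1.0
    return V * signs

def assign_quantile(V_test, partition_bases):
    """Index of the partition whose basis is closest to V_test in Frobenius norm."""
    dists = np.array([
        np.linalg.norm(align_signs(V_test, V_k) - V_k) for V_k in partition_bases
    ])
    return int(np.argmin(dists)), dists

# Hypothetical partition bases and a noisy, sign-flipped test basis from partition 2.
rng = np.random.default_rng(4)
n, p, K = 10, 3, 3
partition_bases = [np.linalg.qr(rng.standard_normal((n, p)))[0] for _ in range(K)]
V_test = partition_bases[2] * np.array([1.0, -1.0, 1.0]) + 0.01 * rng.standard_normal((n, p))

label, dists = assign_quantile(V_test, partition_bases)
```

For the longitudinal task, the same rule could be applied per time point against the basis of the matching time point in each partition.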

Results

We show the prediction accuracies of our algorithm in Table 1. We run the classification tasks for K ∈ {2, 3, 4}. We compare the bases of each test subject (using the ℓ2 norm) to the set of bases in each partition to locate the closest one, whose quantile is the assigned label of the test subject. In Table 1, we show the accuracy results for RAVLT and MMSE using four setups: (a) non-coupled, (b) longitudinally coupled, (c) cross-sectionally coupled and (d) both longitudinally and cross-sectionally coupled. For RAVLT, we first discuss the simplest setting of K = 2 (R:2 in Table 1). Here, the performance estimates suggest that the first three setups are unable to reliably identify the signal whereas our proposed coupled setup offers accuracy estimates of about 71%. This trend of the coupled setup matching or improving on the accuracy of the non-coupled and partially coupled setups continues for K = 3 (R:3) and K = 4 (R:4). We observe a very similar trend for the MMSE quantile prediction task, suggesting that capturing the full set of longitudinal data in terms of its coupled bases offers significant advantages for predicting subject-level cognitive status.

Table 1.

Prediction accuracy (%) for RAVLT (R:K, K ∈ {2, 3, 4} quantiles) and MMSE (M:K, K ∈ {2, 3, 4} quantiles) at the baseline time point (j = 1) and over all time points (j ∈ {1, 2, 3}). Best results for each row and time-point setting are in bold (ties included).

| K | Non-coupled, j = 1 | Non-coupled, j ∈ {1, 2, 3} | Longitudinal, j = 1 | Longitudinal, j ∈ {1, 2, 3} | Cross-section, j = 1 | Cross-section, j ∈ {1, 2, 3} | Coupled, j = 1 | Coupled, j ∈ {1, 2, 3} |
|---|---|---|---|---|---|---|---|---|
| R:2 | 33.33 | 34.92 | 42.86 | 42.86 | 66.67 | 60.32 | **71.43** | **71.43** |
| R:3 | 38.10 | 33.33 | 52.38 | 36.51 | **57.14** | 44.44 | **57.14** | **55.56** |
| R:4 | 28.57 | 28.57 | 23.81 | 30.16 | 30.16 | 23.81 | **47.62** | **34.92** |
| M:2 | 42.86 | 41.27 | 28.57 | 30.16 | 57.14 | 39.68 | **76.19** | **71.43** |
| M:3 | 42.86 | 38.10 | **47.62** | 49.21 | **47.62** | 46.03 | **47.62** | **50.79** |
| M:4 | 34.92 | **28.57** | 23.81 | 14.29 | 19.05 | 12.70 | **47.62** | **28.57** |

Discussion

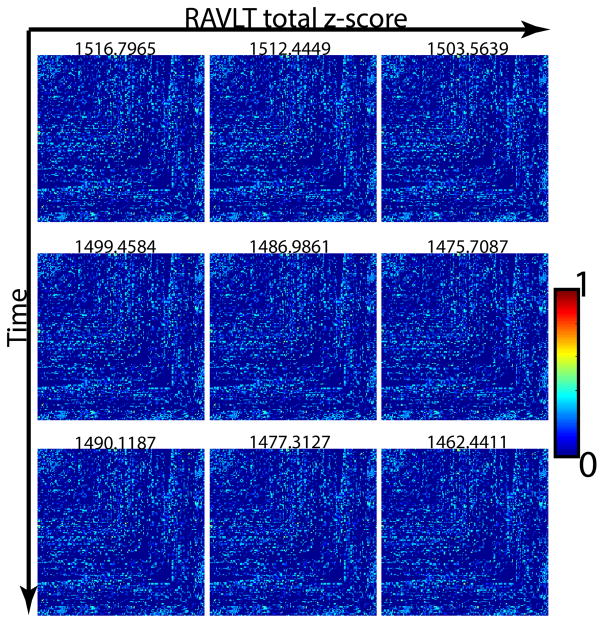

We briefly elaborate on the relevance of these findings. Recall that our dataset is preclinical, i.e., all subjects are healthy. This means that the brain connectivity changes that we seek to capture using our proposed formulation are extremely subtle. To appreciate the small effect sizes in this dataset, we show in Fig. 5 the actual brain connectivity adjacency matrices for K = 3 from the RAVLT based staging. The number at the top of each matrix image is the total sum of edge weights in the graph. As expected, when we move from left to right (and top to bottom), the overall connectivity progressively becomes weaker, but the changes are extremely small and nearly impossible to pick up in a statistically significant way if this analysis were conducted in an edge-by-edge manner. Despite the fact that the overall changes are in the 0.5–1.0% range over the entire set of 12720 edges, our coupled harmonic bases setup is still able to offer better than chance prediction accuracy. Achieving this capability in a preclinical population is the main scientific result of our experimental evaluations.

Figure 5.

Average adjacency matrices for each of the three stages (columns) based on RAVLT total z-scores and each of the three time points (rows). The total magnitude of connectivity strength (the sum of all edge weights) is shown in the title of each matrix. Even though the overall connectivity strength tends to decrease along the cognitive staging, the effect sizes are extremely small (0.5–1.0%). For individual edges, the effects are even smaller.
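The summary statistic reported in the figure titles, and the relative change between two stages, can be computed as in the sketch below. It rests on assumptions: the adjacency matrices are symmetric with each undirected edge counted once, the data are synthetic, and the helper names are hypothetical. Note that 12720 = 160 · 159 / 2, which is consistent with an undirected graph on 160 nodes; the node count used here is inferred from that identity.

```python
import numpy as np

def total_edge_weight(A):
    """Sum of edge weights, counting each undirected edge once."""
    A = np.asarray(A, dtype=float)
    return float(np.triu(A, k=1).sum())

def percent_change(A_from, A_to):
    """Relative change (%) in total connectivity strength between two graphs."""
    s_from = total_edge_weight(A_from)
    return 100.0 * (total_edge_weight(A_to) - s_from) / s_from

# Number of possible undirected edges for n = 160 nodes (n is inferred).
n = 160
num_edges = n * (n - 1) // 2

# Synthetic symmetric adjacency matrix standing in for one stage.
rng = np.random.default_rng(0)
upper = np.triu(rng.random((n, n)), k=1)
A_early = upper + upper.T

# Purely illustrative: a uniform 0.7% weakening of every edge.
A_late = 0.993 * A_early
change = percent_change(A_early, A_late)
```

For symmetric matrices the percentage is the same whether each edge is counted once or twice, so the choice of convention affects only the absolute totals.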

Finally, we note that our model also yields results at the level of individual tracts: it identified the top 50 fiber tracts with the largest changes in connectivity strength across RAVLT progression (illustrative results are described in detail in the supplement).

5. Conclusion

The goal of this paper is to characterize the evolutionary patterns of brain connectivity networks derived from a longitudinal set of middle-aged healthy individuals who are at risk for Alzheimer’s disease. The changes in brain connectivity are extremely small during the preclinical stages, and existing approaches do not seem to be sensitive enough to detect them. We presented a framework which treats the entire set of graph Laplacians of the brain connectivity networks as a system by explicitly considering the coupling between different cognitive stages as well as between longitudinal (temporal) stages. Our experimental results provide evidence that such a coupled bases approach can indeed provide better insights into the brain network changes across these stages. While the technical development of our framework was motivated by the neuroimaging application, the resulting numerical optimization schemes may be widely applicable for incorporating relevant couplings into generalized eigenvalue problems, which are pervasive in many other areas of computer vision and machine learning. The extended version of this paper, the supplement and the code are available at http://pages.cs.wisc.edu/~sjh/.

Acknowledgments

SJH was supported by a University of Wisconsin CIBM fellowship (5T15LM007359-14). We acknowledge support from NIH R01 AG040396 (VS), NIH R01 AG027161 (SCJ), NIH R01 AG37639 (BBB), NSF CCF 1320755 (VS), NSF CAREER award 1252725 (VS), UW ADRC AG033514, UW ICTR 1UL1RR025011, Waisman Core grant P30 HD003352-45 and UW CPCP AI117924.

References

- 1. Absil P-A, Mahony R, Sepulchre R. Optimization algorithms on matrix manifolds. Princeton University Press; 2009.

- 2. Achard S, Salvador R, Whitcher B, et al. A resilient, low-frequency, small-world human brain functional network with highly connected association cortical hubs. The Journal of neuroscience. 2006;26(1):63–72. doi: 10.1523/JNEUROSCI.3874-05.2006.

- 3. Cairns NJ, Perrin RJ, Franklin EE, et al. Neuropathologic assessment of participants in two multi-center longitudinal observational studies: The Alzheimer Disease Neuroimaging Initiative (ADNI) and the Dominantly Inherited Alzheimer Network (DIAN). Neuropathology. 2015. doi: 10.1111/neup.12205.

- 4. Collins MD, Liu J, Xu J, et al. Spectral clustering with a convex regularizer on millions of images. ECCV. 2014.

- 5. Cook P, Bai Y, Nedjati-Gilani S, et al. Camino: open-source diffusion-MRI reconstruction and processing. ISMRM. 2006;2759.

- 6. Drzezga A, Becker JA, Van Dijk KR, et al. Neuronal dysfunction and disconnection of cortical hubs in non-demented subjects with elevated amyloid burden. Brain. 2011;134(6):1635–1646. doi: 10.1093/brain/awr066.

- 7. Ham J, Lee D, Saul L. Semisupervised alignment of manifolds. UAI. 2005;10:120–127.

- 8. Hua X, Leow AD, Parikshak N, et al. Tensor-based morphometry as a neuroimaging biomarker for Alzheimer’s disease: an MRI study of 676 AD, MCI, and normal subjects. NeuroImage. 2008;43(3):458–469. doi: 10.1016/j.neuroimage.2008.07.013.

- 9. Hwang SJ, Collins MD, Ravi SN, et al. A Projection Free Method for Generalized Eigenvalue Problem With a Nonsmooth Regularizer. ICCV. 2015. doi: 10.1109/ICCV.2015.214.

- 10. Jbabdi S, Sotiropoulos SN, Haber SN, et al. Measuring macroscopic brain connections in vivo. Nature neuroscience. 2015;18(11):1546–1555. doi: 10.1038/nn.4134.

- 11. Keihaninejad S, Zhang H, Ryan NS, et al. An unbiased longitudinal analysis framework for tracking white matter changes using diffusion tensor imaging with application to Alzheimer’s disease. NeuroImage. 2013;72:153–163. doi: 10.1016/j.neuroimage.2013.01.044.

- 12. Kim WH, Adluru N, Chung MK, et al. Multi-resolutional brain network filtering and analysis via wavelets on non-Euclidean space. MICCAI. Springer; 2013.

- 13. Kim WH, Adluru N, Chung MK, et al. Multi-resolution statistical analysis of brain connectivity graphs in preclinical Alzheimer’s disease. NeuroImage. 2015;118:103–117. doi: 10.1016/j.neuroimage.2015.05.050.

- 14. Kovnatsky A, Bronstein MM, Bronstein AM, et al. Coupled quasi-harmonic bases. Computer Graphics Forum. Vol. 32. Wiley Online Library; 2013. pp. 439–448.

- 15. Lei Z, Li SZ. Coupled spectral regression for matching heterogeneous faces. CVPR. 2009.

- 16. Liu J, Wright SJ, Ré C, et al. An asynchronous parallel stochastic coordinate descent algorithm. JMLR. 2015;16(1):285–322.

- 17. Nocedal J, Wright SJ. Numerical Optimization. 2. Springer; New York: 2006.

- 18. Raj A, Kuceyeski A, Weiner M. A network diffusion model of disease progression in dementia. Neuron. 2012;73(6):1204–1215. doi: 10.1016/j.neuron.2011.12.040.

- 19. Reijneveld JC, Ponten SC, Berendse HW, et al. The application of graph theoretical analysis to complex networks in the brain. Clinical Neurophysiology. 2007;118(11):2317–2331. doi: 10.1016/j.clinph.2007.08.010.

- 20. Rubinov M, Sporns O. Complex network measures of brain connectivity: uses and interpretations. NeuroImage. 2010;52(3):1059–1069. doi: 10.1016/j.neuroimage.2009.10.003.

- 21. Saad Y. Numerical methods for large eigenvalue problems. SIAM. 1992;158.

- 22. Schmidt M, et al. Rey auditory verbal learning test: a handbook. Western Psychological Services; Los Angeles: 1996.

- 23. Sotiropoulos SN, Jbabdi S, Xu J, et al. Advances in diffusion MRI acquisition and processing in the Human Connectome Project. NeuroImage. 2013;80:125–143. doi: 10.1016/j.neuroimage.2013.05.057.

- 24. Sporns O. The human connectome: a complex network. Annals of the New York Academy of Sciences. 2011;1224(1):109–125. doi: 10.1111/j.1749-6632.2010.05888.x.

- 25. Stam CJ, Reijneveld JC. Graph theoretical analysis of complex networks in the brain. Nonlinear biomedical physics. 2007;1(1):3. doi: 10.1186/1753-4631-1-3.

- 26. Tombaugh TN, McIntyre NJ. The mini-mental state examination: a comprehensive review. Journal of the American Geriatrics Society. 1992;40(9):922–935. doi: 10.1111/j.1532-5415.1992.tb01992.x.

- 27. Uğurbil K, Xu J, Auerbach EJ, et al. Pushing spatial and temporal resolution for functional and diffusion MRI in the Human Connectome Project. NeuroImage. 2013;80:80–104. doi: 10.1016/j.neuroimage.2013.05.012.

- 28. Van Essen DC, Ugurbil K, Auerbach E, et al. The Human Connectome Project: a data acquisition perspective. NeuroImage. 2012;62(4):2222–2231. doi: 10.1016/j.neuroimage.2012.02.018.

- 29. Varentsova A, Zhang S, Arfanakis K. Development of a high angular resolution diffusion imaging human brain template. NeuroImage. 2014;91:177–186. doi: 10.1016/j.neuroimage.2014.01.009.

- 30. Wang C, Mahadevan S. Manifold alignment using Procrustes analysis. ICML. 2008.

- 31. Wang P, Zhou B, Yao H, et al. Aberrant intra-and internetwork connectivity architectures in Alzheimer’s disease and mild cognitive impairment. Scientific reports. 2015;5. doi: 10.1038/srep14824.

- 32. Warsa JS, Wareing TA, Morel JE, et al. Krylov subspace iterations for deterministic k-eigenvalue calculations. Nuclear Science and Engineering. 2004;147(1):26–42.

- 33. Wen Z, Yin W. A feasible method for optimization with orthogonality constraints. Mathematical Programming. 2013;142(1–2):397–434.

- 34. Wig GS, Schlaggar BL, Petersen SE. Concepts and principles in the analysis of brain networks. Annals of the New York Academy of Sciences. 2011;1224(1):126–146. doi: 10.1111/j.1749-6632.2010.05947.x.

- 35. Zhang H, Avants BB, Yushkevich P, et al. High-dimensional spatial normalization of diffusion tensor images improves the detection of white matter differences: an example study using amyotrophic lateral sclerosis. TMI. 2007;26(11):1585–1597. doi: 10.1109/TMI.2007.906784.
