Abstract

How do infants plan and guide locomotion under challenging conditions? This experiment investigated the real-time process of visual and haptic exploration in 14-month-old infants as they decided whether and how to walk over challenging terrain—a series of bridges varying in width. Infants' direction of gaze was recorded with a head-mounted eye tracker and their haptic exploration and locomotor actions were captured on video. Infants' exploration was an organized, efficient sequence of visual, haptic, and locomotor behaviors. They used visual exploration from a distance as an initial assessment on nearly every bridge. Visual information subsequently prompted gait modifications while approaching narrow bridges and haptic exploration at the edge of the bridge. Results confirm predictions about the sequential, ramping-up process of exploration and the distinct roles of vision and touch. Exploration, however, was not a guarantee of adaptive decisions. With walking experience, exploratory behaviors became increasingly efficient and infants were better able to interpret the resulting perceptual information in terms of whether it was safe to walk.

Across the animal kingdom, locomotion comes in a variety of forms—flying, swimming, slithering, brachiating, hopping, and walking. Shared across the diversity of forms is the problem of safely transporting the body through a variable environment. Constraints on locomotion are always changing. For terrestrial animals, the path may be sloping, slippery, or squishy; it may terminate at a drop-off or be blocked by obstacles. When approaching a change in terrain, animals must decide whether to continue their current form of locomotion, modify it, or stop moving. Errors can be costly. At best, the animal fails to reach its goal; at worst, it falls or crashes into an obstacle. The problem emerges in the first days of mobility. As soon as infants can locomote, they must safely navigate a variable environment.

With locomotor experience, human infants become remarkably adept at navigating variable terrain. They crawl or walk over bridges that are sufficiently wide, drop-offs that are sufficiently small, and slopes that are sufficiently shallow (Adolph, 1995, 1997; Kretch & Adolph, 2013a, 2013b). On challenging surfaces, they modify their gait (Gill, Adolph, & Vereijken, 2009; Kretch & Adolph, 2013b). In situations beyond their abilities, they refuse to go or use alternative strategies. How do infants, or any other animal, match locomotor actions to environmental constraints?

Exploration links perception and action

Many years ago, James Gibson (1966, 1979) proposed that animals use perceptual information from exploratory activity to guide locomotion adaptively. Gibson stressed the reciprocal nature of perception and action: Perceptual information guides action, and actions produce perceptual information. Perception and action are linked in an ongoing, real-time cycle (Adolph, Eppler, Marin, Weise, & Clearfield, 2000; Bertenthal & Clifton, 1998; Thelen, 1990; Turvey, Carello, & Kim, 1990). An important implication of Gibson's theory is that, like locomotion and other instrumental actions, exploratory actions must be planned and controlled. Thus, exploration requires perceptual information, obtained by prior exploration.

In some species, the perception-action cycle has been worked out in detail. The echolocating bat is an apt example. Echolocation involves active generation and retrieval of perceptual information: Flying bats emit a call and retrieve the echo from surrounding surfaces and objects. In response to this feedback, bats adjust their flight behavior to aim toward prey and avoid obstacles; they also adjust their calling and listening behavior to produce maximally informative auditory information. The result is fine localization accuracy and successful hunting and obstacle navigation (Moss, Chiu, & Surlykke, 2011; Moss & Surlykke, 2010).

Visual and haptic exploration

Humans primarily use visual and haptic information to guide locomotion. Vision provides high-resolution information from directing gaze—looking—toward a particular location and low-resolution information from the periphery of the visual field (Patla, 1997). Haptic information is a result of direct contact with an object or surface—touching. Touching provides tactile information that reveals surface properties (e.g., rigidity and friction), and proprioceptive information that specifies the positions of and forces on particular body parts (Lederman & Klatzky, 2009).

Vision and touch have distinct roles (Adolph et al., 2000; Adolph & Joh, 2009; E. J. Gibson & Schmuckler, 1989). Vision is the primary distance sense in humans. Peripheral vision provides optic flow information for controlling balance (J. J. Gibson, 1958) and can alert walkers to unforeseen obstacles, but the quality of peripheral information is limited for precise spatial localization (Sivak & MacKenzie, 1990). Foveal vision from the high-resolution center of the retina produces the highest quality information from a distance (Patla, 1997). But not all information can be obtained from vision. For example, emergent properties like friction and rigidity can be perceived only through direct contact (Adolph, Joh, & Eppler, 2010; Joh & Adolph, 2006; Joh, Adolph, Narayanan, & Dietz, 2007). Moreover, haptic exploration can provide information that most closely simulates the potential action, allowing the perceiver to test what the action “feels like” before committing (Adolph, 1997; Adolph et al., 2000). Furthermore, in cases where perceptual information is uncertain, combining information from multiple modalities, such as vision and touch, can produce more accurate perception than either modality alone (Ernst & Banks, 2002).

Within the context of locomotion, the different modalities incur different costs. First, vision and touch involve different amounts of effort. Peripheral vision is essentially free, because the eyes are “parked in the front of the moving observer,” picking up visual information without additional effort above that already recruited for locomotion (Patla, 2004, p. 389). However, directing gaze to take advantage of foveal vision takes effort to move the head and eyes in a particular direction and to focus at a particular distance (J. J. Gibson, 1966). Controlling limb movements for haptic exploration requires more effort. Exploration with hands and feet develops long before infants can locomote (Galloway & Thelen, 2004; Rochat, 1989). However, in a locomotor context, infants must perform haptic exploration while maintaining balance and coordinating gait modifications. A second type of cost is the time devoted to exploration. Haptic exploration, unlike visual exploration, requires an interruption in ongoing locomotion and a delay in reaching the goal. A third type of cost is the risk of injury. Whereas visual exploration can be accomplished from a distance, haptic exploration requires proximity to the potentially hazardous surface. The more closely the exploratory action approximates the locomotor action, the more useful the information but the greater the risk (Adolph, 1997). In sum, haptic exploration is more costly than visual exploration in terms of effort, time, and risk of injury.

Real-time process of exploration

The bat highlights an important characteristic of efficient exploration: Higher cost exploratory behaviors are deployed only when necessary. Bats at rest or idly flying through the dark emit long, infrequent calls within a narrow pitch range (Galambos & Griffin, 1942; Griffin, Webster, & Michael, 1960). But if a returning echo indicates an obstacle or prey, bats ramp up their calling and listening behaviors. Calls become shorter and more frequent and span a wider pitch range (Griffin et al., 1960; Jen & Kamada, 1982; Simmons, Fenton, & O'Farrell, 1979).

How do infants deploy the different exploratory behaviors at their disposal? Adolph and colleagues (Adolph & Eppler, 1998; Adolph et al., 2000) proposed that exploration in the service of locomotion must play out in a temporal and spatial sequence as infants approach a change in the ground surface. They hypothesized that, like bats, whose exploratory behaviors increase with decreasing time and distance from the target, infants should ramp up their exploratory actions to include more costly forms of information gathering only if prompted by information obtained from less costly forms of exploration performed moments earlier. According to this ramping-up hypothesis, vision is typically the “first line of defense,” occurring early and from a distance. If a quick glance at the ground ahead reveals a potentially risky surface, infants may be prompted to engage in more extended visual exploration. Looking should be followed by gait modifications and touching only if visual information indicates potential risk. Because haptic information comes at a higher cost, touching should be reserved for cases prompted by prior visual information (analogous to the bat) rather than used to guide every step (like a blind person with a cane). However, this sequential ramping-up hypothesis with efficient use of modalities has not been tested because the sequential and spatial properties of exploration have not been characterized.

Moreover, infants likely require locomotor experience to achieve an efficient, ordered sequence of exploratory behaviors. Novice crawlers and walkers plunge over the edge of impossibly large drop-offs and steep slopes (Adolph, 1997, 2000; Kretch & Adolph, 2013a), suggesting that they fail to gather and/or interpret the appropriate information. If exploration does indeed involve a contingent cycle, then misreading early feedback could disrupt subsequent exploration. Infants who misinterpret initial visual information might fail to touch when needed, or conversely waste effort and time and incur risk of injury with unnecessary haptic exploration. With experience, infants' motor decisions become increasingly accurate (Adolph, 1997; Kretch & Adolph, 2013a) and their real-time exploration may also increase in specificity and efficiency.

Exploration in the service of locomotion

In experimental studies of infant navigation, infants are placed on a starting platform that leads to a discrete change in the ground surface (a drop-off, squishy waterbed, slope, bridge, etc.) and encouraged to cross (see Adolph & Robinson, 2013; 2015 for reviews). The most commonly reported measure of exploration is the total amount of time spent on the starting platform, termed “latency” to cross. Generally, latency is longer on risky surfaces than safe ones (Adolph & Robinson, 2015). Adolph and Eppler (1998) suggested that the latency period reflects prolonged visual exploration of uncertain surfaces. However, latency merely represents the upper limit of exploration time. Without direct measurement of visual and haptic exploration, we do not know whether infants fill the latency period with active exploration or with unrelated displacement behaviors (E. J. Gibson et al., 1987). Albeit popular, this global measure reveals little about the actual process of exploration.

Many studies also report infants' haptic exploration of surfaces. Consistent with Adolph and colleagues' (Adolph & Eppler, 1998; Adolph et al., 2000) ramping-up hypothesis, infants do not touch every surface they encounter. Touching is more frequent and prolonged on risky surfaces than on safe ones (see Adolph & Robinson, 2013; 2015 for reviews). Presumably, visual information informs infants whether touching is necessary. However, because previous work with infants relied solely on video recordings from a third-person perspective, researchers were unable to determine when, from where, and for how long infants directed their gaze toward the relevant parts of the surface, or the temporal-spatial relations between visual and haptic exploration.

Only one study used head-mounted eye tracking to investigate visual guidance of obstacle navigation in infants (Franchak, Kretch, Soska, & Adolph, 2011). During free play in a room with varied elevations, infants' fixations of upcoming obstacles were frequent, but not obligatory: They navigated 28% of obstacles without prior fixations. Unlike older children and adults, infants sometimes used online guidance, fixating the obstacle while stepping onto or over it. Adults sometimes direct their gaze to ground obstacles, but they frequently navigate without looking, relying solely on peripheral vision (Franchak & Adolph, 2010; Marigold, Weerdesteyn, Patla, & Duysens, 2007). When adults do look, they do so prospectively, about 2-3 steps before the obstacle (Franchak & Adolph, 2010; Patla & Vickers, 1997, 2003). Looking scales to risk: Adults direct more looks toward variable terrain than uniform terrain (Marigold & Patla, 2007), and they look more at obstacles that require large gait modifications than at obstacles that require small modifications (Patla & Vickers, 1997).

Experimental data confirm Gibson's hypothesis that exploratory activity is essential to guide actions adaptively. When adults' exploratory swaying and stepping movements are restricted, they err in judging whether surfaces are sufficiently high for sitting (Mark, Baillet, Craver, Douglas, & Fox, 1990). When echolocation calls are prevented, bats miss their targets and fly into walls (Griffin & Galambos, 1941). Blocking vision of the ground causes cats and human adults to misstep and collide with obstacles (Matthis & Fajen, 2014; Rietdyk & Drifmeyer, 2009; Wilkinson & Sherk, 2005).

The amount of exploration required for adaptive motor decisions, however, is still an open question. One possibility is that more exploration is better, such that concerted looking and touching lead to more accurate judgments than does peripheral vision alone. Indeed, when deciding whether to walk down slippery slopes, exploratory touching was associated with more accurate decisions in infants and adults (Adolph et al., 2010; Joh et al., 2007). However, developmental data suggest the contrary: Experienced infants make adaptive decisions following very little exploration; conversely, novices engage in extended examinations of impossible surfaces but plunge ahead nonetheless (Adolph, 1997). Thus, quantity of exploration may not relate to motor decisions. A crucial factor may be the ability to correctly interpret the perceptual information generated by exploration and to recognize when additional information is needed; this ability may develop with locomotor experience. Moreover, mature integration of visual and haptic information is not evident before 8 years of age (Gori, Del Viva, Sandini, & Burr, 2008; Nardini, Begus, & Mareschal, 2013), so haptic information may not help infants to make more accurate decisions than vision alone.

Current study

The current experiment aimed to characterize the perception-action cycles that lead to accurate locomotor decisions in infants. To do so, we needed the appropriate recording technology and test paradigm. We used head-mounted eye tracking to record infants' visual exploration, synced with video recordings of their haptic exploration and locomotion. We used bridge crossing as our test paradigm because the apparatus presents a uniform, extended path leading to a discrete locomotor challenge whose properties could be parametrically varied (Kretch & Adolph, 2013b). Moreover, bridges are ideal for investigating visual exploration because the target is relatively small and localized, and fixation requires intentionally directing gaze to the specific location of the bridge. Finally, in contrast to obstacles that can be navigated with one walking step, successful bridge crossing requires accurate foot placement over a series of steps, thus allowing investigation of ongoing visual guidance of locomotion.

Our overarching question was whether infant exploration in the service of locomotion is organized, efficient, and sensitive to the unique functions of different modalities. First, we quantified the amount of visual and haptic exploration and tested whether exploratory behaviors varied with bridge width. If infants' information gathering is efficient and sensitive to the properties of different modalities, we would expect low-cost exploratory looking to be frequent and high-cost exploratory touching to be infrequent and deployed only when visual information indicates risk.

Second, we investigated the spatiotemporal sequence of infants' exploratory behaviors. If haptic exploration is prompted by visual information gleaned from a distance as hypothesized by Adolph and colleagues, then looking should occur earlier in time and farther away from the bridge relative to touching. We also predicted that visual feedback would cause infants to subsequently modify ongoing locomotion in accordance with risk level.

Third, we asked whether exploration predicts motor decisions. When infants err by falling on medium-sized bridges or attempting to cross impossibly narrow bridges, which part of the perception-action cycle breaks down: Do infants fail to generate the relevant information or do they misinterpret the information gathered? The first possibility would predict lower rates of exploratory behaviors on trials where infants err; the second would predict no difference in exploratory behaviors.

Fourth, we examined the ongoing perception-action cycle during navigation of difficult terrain: how infants use visual information for ongoing guidance while crossing the bridge. While crossing, each step must be planned to avoid falling into the precipice. Do infants fixate the bridge while crossing as they sometimes do for one-step obstacles, or do they look straight ahead and rely on peripheral visual information and haptic feedback?

Finally, we asked how locomotor experience relates to exploration. We predicted that infants with more walking experience would show more evidence of ramping-up, using visual information to inform when touching was necessary, and would be better able to interpret visual and haptic feedback to distinguish safe from risky bridges.

Method

Participants

Fifteen infants (nine boys) between 12.98 and 14.10 months of age (M = 13.68 months) were recruited from maternity wards of local hospitals. Most were from middle-class families; 8 were white and 7 were multiracial. Walking experience (dated from parental reports of the first day infants walked 10 feet continuously) ranged from 0.43 to 4.70 months (M = 2.23 months); one parent could not report reliably. Ten additional infants were excluded: 3 refused to walk on the platform, 5 refused to wear the eye tracker, and 2 became too fussy to complete a block of trials. Three additional infants completed the study but their data were excluded because of poor eye-tracking calibrations.

Head-mounted eye tracker

We recorded infants' gaze direction with a Positive Science (positivescience.com) head-mounted eye tracker. Two small cameras (one that records the visual scene and one that records the infant's right eye) are mounted on a flexible headband attached to a fitted cap (Figure 1a). Video feeds from the eye tracker were routed through a long cable to a computer that recorded the videos. Infants wore a lightweight vest to secure the proximal end of the cable close to their body, and an assistant walked behind the infant during the study to hold the cable out of the way.

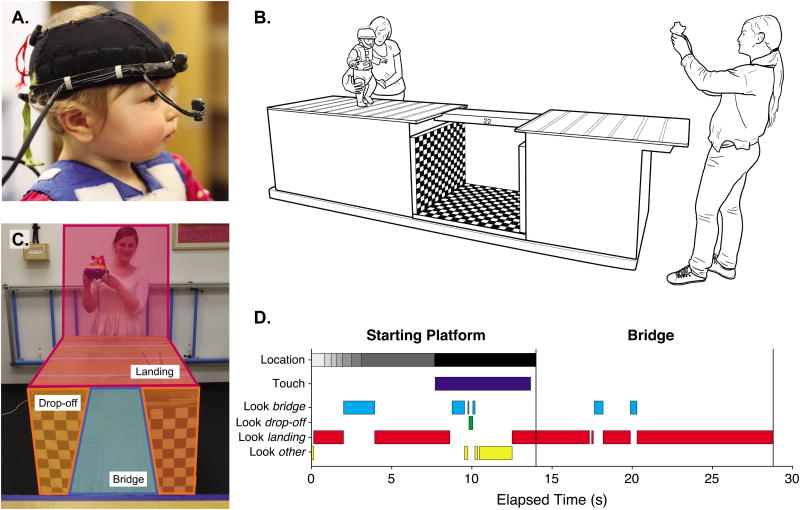

Figure 1.

(A) Infant wearing head-mounted eye tracker. (B) Bridge apparatus. Parents stood at the far end and encouraged infants to cross, and an experimenter followed beside infants to ensure their safety. (C) Areas of interest (AOIs) for frame-by-frame coding of gaze data. (D) Exemplar data from a trial on a 30-cm bridge. Each shade of gray in the top bar represents a different 15-cm section of the starting platform, with the black bar indicating time with the infant's feet past the platform edge.

After the session, we imported the two videos into Positive Science Yarbus software, which calculated the location of the point of gaze for each video frame using estimates of the center of the pupil and corneal reflection from the eye video. The software exported a new video with the point of gaze indicated on each frame of the scene video (spatial accuracy was previously measured in infants to be ∼2°; Franchak et al., 2011). We used a circle of 4° radius as the point of gaze indicator to allow for spatial error.

Bridge apparatus

The apparatus consisted of a 157-cm-long starting platform and 106-cm-long landing platform (both 86 cm high × 76 cm wide) flanking a 76-cm-long gap (Figure 1b). The starting platform was covered with colored calibration lines spaced 15 cm apart, to facilitate coding of infants' location. The gap was covered in black-and-white checkerboard to provide visual texture (E. J. Gibson & Walk, 1960; Kretch & Adolph, 2013b). Bridges of 6 widths (60, 38, 30, 22, 14, 6 cm) spanned the gap. The widest 60-cm bridge was easy; it covered most of the platform and in previous work, infants ran straight across (Kretch & Adolph, 2013b). The 38-, 30-, and 22-cm bridges were challenging; in previous work, 22 cm was, on average, the narrowest bridge 14-month-olds crossed successfully and 30- and 38-cm bridges required gait modifications. The 6- and 14-cm bridges were impossible; in previous work, attempts resulted in falling.

Procedure

Infants were barefooted and wore diapers and undershirts. First, they performed several warm-up trials on the 60-cm bridge without the eye tracker to become familiar with the apparatus. The experimenter placed infants on the far end of the starting platform and spotted them to ensure their safety. Parents, at the far end of the landing platform, used toys and snacks to encourage infants to cross (Figure 1b).

When infants seemed comfortable with walking over the bridge to their parents, we dressed them in the vest, hat, and eye tracker. We collected calibration data by calling infants' attention to noise-making targets displayed in windows cut out of a large board (Kretch, Franchak, & Adolph, 2014). Then, we performed a few more warm-up trials to accustom infants to walking while wearing the equipment. Calibration was performed after the session in Yarbus software by indicating the target locations in the video frames.

Infants received 1 to 3 blocks of test trials, with the six bridge widths randomized within each block. The total number of trials ranged from 6 to 19 (M = 15.47); each infant received at least one trial at each bridge width (6 infants completed fewer than three blocks due to fussiness, and 2 received one extra trial accidentally). Before each trial, an assistant held up a curtain to prevent infants from seeing the bridge. To start the trial, the assistant revealed the bridge. As in previous work (Kretch & Adolph, 2013b), parents were instructed to encourage infants to cross every bridge without specifying how or warning infants to be careful. If infants did not attempt to cross within 30 s, the experimenter ended the trial.
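The within-block randomization of bridge widths could be generated along the following lines. This is a minimal sketch rather than the procedure actually used in the study: the function name `make_trial_order`, the optional seed, and the use of Python's `random` module are assumptions introduced for illustration.

```python
import random

# The six bridge widths (cm) listed in the apparatus description.
BRIDGE_WIDTHS_CM = [60, 38, 30, 22, 14, 6]


def make_trial_order(n_blocks, seed=None):
    """Return a list of blocks; each block is a random ordering of the six widths.

    Hypothetical helper: the seed is only there to make the sketch reproducible.
    """
    rng = random.Random(seed)
    blocks = []
    for _ in range(n_blocks):
        block = BRIDGE_WIDTHS_CM[:]  # copy so the constant is not shuffled in place
        rng.shuffle(block)
        blocks.append(block)
    return blocks
```

Each block contains every width exactly once, so an infant who completes even a single block receives one trial at each bridge width.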

Data coding

Trials were videotaped from forward, overhead, and side views, which were mixed online into a single video frame. This combined video was synced with the eye-tracking video offline using Final Cut Pro to produce a composite video for coding. All study variables were scored frame-by-frame using Datavyu (datavyu.org) and videos are shared in the Databrary library (databrary.org). A primary coder scored 100% of trials and a second coder scored one third of each infant's trials to assess inter-rater reliability. Figure 1d illustrates data from all temporal variables coded over video frames of an exemplar trial.

Platform duration

The coders scored the duration of time that infants spent on the starting platform from the first video frame when the curtain was lifted to reveal the bridge until the moment when infants began to cross (first frame with both feet completely on bridge; first half of timeline in Figure 1d). If infants never tried to cross, platform duration defaulted to 30 s. Platform durations scored by the two coders were perfectly correlated, r = 1.00. (Note that platform duration is equivalent to “latency” in previous work.)
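The platform-duration rule can be written as a short function. This is a sketch under stated assumptions: the frame rate is not reported in the text, so the `fps=30.0` default is a placeholder, and the function and parameter names are hypothetical.

```python
def platform_duration_s(reveal_frame, cross_frame, fps=30.0, default_s=30.0):
    """Seconds from curtain reveal to crossing onset.

    reveal_frame: first frame in which the bridge is revealed.
    cross_frame:  first frame with both feet completely on the bridge,
                  or None if the infant never tried to cross.
    fps:          ASSUMED video frame rate (not stated in the source).
    default_s:    value assigned when the infant never tried to cross (30 s).
    """
    if cross_frame is None:
        return default_s
    return (cross_frame - reveal_frame) / fps
```

For example, with the assumed 30 frames per second, a trial revealed at frame 0 with crossing onset at frame 300 yields a platform duration of 10 s, and a trial with no crossing attempt yields the 30 s default.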

Location

Coders scored the time and location of each step on the starting platform using the colored calibration lines (Figure 1b). Frames between steps were assigned to the previous step location, so that each frame of the platform duration had a corresponding location (grayscale bar in Figure 1d). Coders agreed on infants' exact location on 93.8% of frames, and agreed within one 15-cm increment on 99.6%.
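The two steps described above, carrying each step location forward to the frames that follow it and then comparing coders frame by frame, can be sketched as follows. The data layout (a list of `(frame, location_cm)` pairs with the starting position entered at frame 0) and the function names are assumptions for illustration, not the Datavyu export format.

```python
def fill_locations(steps, n_frames):
    """Assign every frame the location of the most recent step.

    steps: list of (frame_index, location_cm) pairs; ASSUMES the starting
           position is entered as a step at frame 0.
    """
    by_frame = dict(steps)
    locations = []
    current = None
    for frame in range(n_frames):
        if frame in by_frame:
            current = by_frame[frame]  # a new step updates the location
        locations.append(current)      # frames between steps keep the previous one
    return locations


def location_agreement(coder_a, coder_b, increment_cm=15):
    """Proportion of frames with exact agreement and agreement within one increment."""
    pairs = list(zip(coder_a, coder_b))
    exact = sum(a == b for a, b in pairs) / len(pairs)
    within_one = sum(abs(a - b) <= increment_cm for a, b in pairs) / len(pairs)
    return exact, within_one
```

The second value returned by `location_agreement` is always at least as large as the first, which matches the pattern of the two agreement percentages reported above.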

Exploratory looking

For each frame of the platform duration, coders scored which of three areas of interest (AOIs) was within the circular gaze cursor: the bridge, the drop-off (sides or bottom), or the landing platform (including the platform and the people and objects at the far end); see Figure 1c and the bottom four bars in Figure 1d. Looking anywhere else (across the room, at the experimenter, down at the starting platform, etc.) was coded as “other.” If multiple AOIs were contained within the gaze circle, they were prioritized in the order bridge, drop-off, landing platform, other. Thus, any fixation within 4 degrees of the bridge was coded as a look to the bridge; any fixation within 4 degrees of the drop-off and at least 4 degrees away from the bridge was coded as a look to the drop-off. Coders also noted frames to be excluded due to blinking or track loss. Coders agreed on gaze AOI on 98.3% of frames.
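The priority rule for frames in which the gaze circle overlaps more than one AOI amounts to a first-match lookup. The sketch below assumes a hypothetical representation in which each frame is described by the set of AOI labels that fall inside the gaze circle; the label strings and function name are illustrative only.

```python
# Priority order stated in the coding scheme: bridge, drop-off, landing platform.
AOI_PRIORITY = ["bridge", "drop-off", "landing platform"]


def code_gaze_frame(aois_in_cursor):
    """Return the single AOI code for one frame.

    aois_in_cursor: set of AOI labels overlapping the gaze circle
                    (hypothetical input format).
    """
    for aoi in AOI_PRIORITY:
        if aoi in aois_in_cursor:
            return aoi  # highest-priority AOI wins
    return "other"      # nothing of interest inside the gaze circle
```

Under this rule a frame whose gaze circle covers both the bridge and the drop-off is coded as a look to the bridge, so estimates of looking at the bridge are, if anything, generous.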

Exploratory touching

The coders identified every time infants touched the bridge with feet or hands for at least 0.5 s before attempting to cross (dark blue bar in Figure 1d). Touch duration was calculated from the first to last frame of the touch. If infants touched the bridge and then attempted to cross, touch offset was the first frame when they began moving onto the bridge. The two coders agreed on 99.7% of frames and their total touch durations were correlated, r = .99.
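The touch criteria can be expressed as a filter over candidate touch bouts. This is a sketch under assumptions: bouts are given as `(onset_s, offset_s)` pairs in seconds, touches are truncated at crossing onset before the 0.5 s criterion is applied (the text does not say which comes first), and all names are hypothetical.

```python
MIN_TOUCH_S = 0.5  # minimum duration for a contact to count as an exploratory touch


def exploratory_touches(bouts, cross_onset_s=None):
    """Keep bouts that last at least MIN_TOUCH_S before any crossing attempt.

    bouts:         list of (onset_s, offset_s) pairs (hypothetical format).
    cross_onset_s: time the infant began moving onto the bridge, or None.
    """
    kept = []
    for onset, offset in bouts:
        if cross_onset_s is not None:
            if onset >= cross_onset_s:
                continue                          # contact during crossing, not exploration
            offset = min(offset, cross_onset_s)   # touch ends when crossing begins
        if offset - onset >= MIN_TOUCH_S:
            kept.append((onset, offset))
    return kept


def total_touch_duration_s(bouts, cross_onset_s=None):
    """Accumulated duration of qualifying touches on one trial."""
    return sum(off - on for on, off in exploratory_touches(bouts, cross_onset_s))
```

Applying the duration criterion after truncation is a design choice made for this sketch; applying it before truncation would retain some very short touches that immediately precede a crossing attempt.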

Motor decisions

The outcome of each trial was scored as a success (arrived safely on the landing platform), failure (attempted to walk but fell), or refusal (did not attempt to walk) as in Kretch & Adolph (2013b). On 3 trials, infants tried to crawl across the bridge; these trials were scored as refusals to walk. For the remaining 70 refusal trials, infants simply remained on the starting platform. Coders agreed on trial outcome in 98.7% of trials.

Visual guidance

For successful trials, the coders scored gaze locations while infants walked over the bridge (Figure 1d), from the first step on the bridge to the first step off the bridge using the same AOIs described above for exploratory looking.

Results

Effects of risk on exploratory behaviors

The first set of analyses examined whether the overall amount of visual and haptic exploration varied with risk level (bridge width). We found that infants showed different patterns of exploration for looking and touching. Looking at the bridge was frequent, brief, and unaffected by risk level. Touching was infrequent, prolonged, and reserved for narrow bridges.

Opportunity for exploration

Infants spent more time on the starting platform on trials with riskier bridge widths, in part because they were more likely to avoid crossing: Platform duration was M = 25.67 s on the 6-cm bridge but only M = 6.73 s on the 60-cm bridge, F(5, 65) = 20.59, p < .01, linear trend: F(1, 13) = 43.34, p < .01. Thus, more time was available for potential visual and haptic exploration on narrow bridges.

Looking at the bridge

Despite additional platform time on trials with narrow bridges, infants did not fill that time by looking at the bridge or drop-off. Instead, most of infants' looking time was directed at the goal—their parents and objects on the landing platform (red bars in Figure 2a). Infants spent little time, typically less than 2 s per trial, looking at the bridge (light blue bars in Figure 2a). A 6 (bridge width) × 4 (AOI) repeated-measures ANOVA revealed a main effect of AOI, F(3, 33) = 43.98, p < .01, and an interaction, F(15, 165) = 4.37, p < .01. Pairwise comparisons revealed that infants spent more time looking at the landing platform than any other AOI, ps < .01. Linear trend analyses on accumulated look duration for each AOI separately confirmed linear effects for fixations of the drop-off, F(1, 11) = 6.12, p = .03 (possibly because more of the drop-off was visible when the bridge was smaller), the landing platform, F(1, 11) = 16.48, p < .01, and the “other” category, F(1, 11) = 12.93, p < .01, but not for fixations of the bridge, F(1, 11) = 0.12, p = .74.

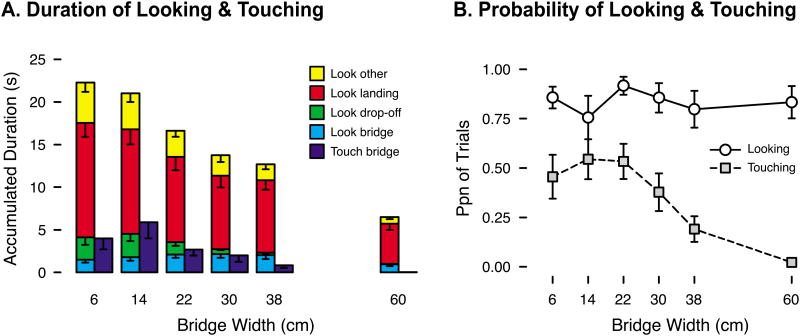

Figure 2.

Measures of looking and touching at each bridge width. (A) Average accumulated duration of looking at each AOI and touching the bridge. Overall height of the stacked bars represents the total time with valid gaze data. (B) Average proportion of trials in which infants looked at the bridge (white circles) and touched the bridge (gray squares). Error bars denote standard errors.

Albeit brief, visual exploration of the bridge was consistent. Experimenters and caregivers did not call infants' attention to the bridge, so there was no obligation to look at it. Nonetheless, infants spontaneously directed their gaze to the bridge on 84.0% of trials (pooled over all trials in the dataset), and the likelihood of fixating the bridge did not vary with bridge width, F(5, 55) = 0.55, p = .74 (white circles in Figure 2b). Note that the bridge was likely in infants' field of view even in the 16.0% of trials in which they did not direct their gaze toward the bridge.

Touching the bridge

Touching the bridge was less frequent than looking at it (gray squares in Figure 2b), occurring on only 35.8% of trials (pooled). But touches were relatively long: M touch duration = 7.69 s (pooled over trials with touches). Infants touched mostly with their feet—89.2% of touch trials—as if testing what it would feel like to walk across. On 6 trials (7.2% of touch trials), infants touched with hands, and on 3 trials (3.6%), they touched with both feet and hands. Ten infants touched exclusively with feet, 3 used both feet and hands, 1 touched only with hands, and 1 never touched the bridge.

Touching was reserved primarily for risky and challenging bridges: Infants touched on about 50% of trials on the 6-, 14-, and 22-cm bridges, and the likelihood of touching decreased on the wider bridges, F(5, 65) = 6.56, p < .01, linear trend: F(1, 13) = 11.78, p < .01, quadratic trend: F(1, 13) = 6.60, p = .02. The accumulated duration of touching showed a similar pattern: Infants spent more time touching narrower bridges, F(5, 65) = 4.03, p < .01, linear trend: F(1, 13) = 7.69, p = .02 (dark blue bars in Figure 2a).

Spatiotemporal sequence of exploration

The second set of analyses examined the spatial and temporal sequence of exploratory behaviors and relations among them. We found that infants spent most of their platform time in close proximity to the precipice. Generally, approach toward the bridge was the first step in the sequence, followed by looking at the bridge from a distance, modifications in gait, stopping at the edge to touch the bridge, and then looking again from up close.

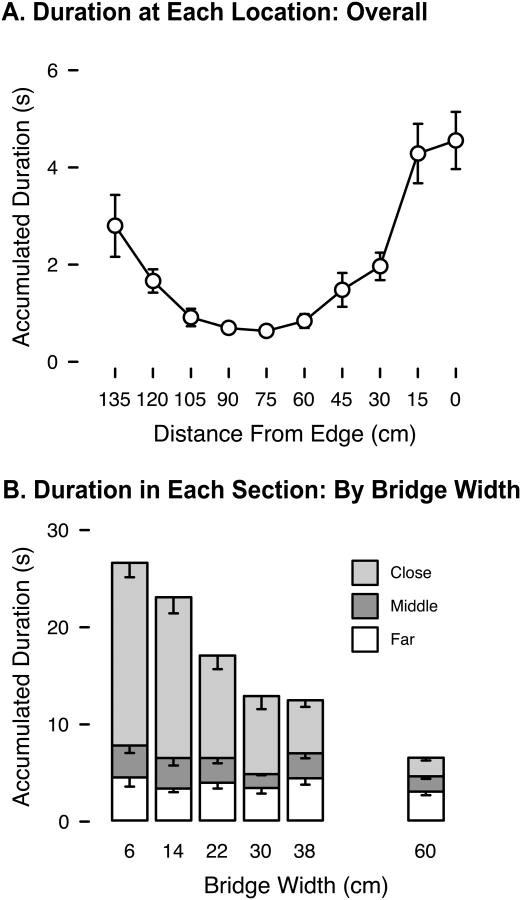

Exploration in proximity to bridge

Trials began with infants more than a meter away from the bridge, but they spent most of their platform time near the bridge (Figure 3a). Infants spent more time on the segment of the starting platform closest to the precipice on narrow bridges (light gray bars in Figure 3b), but time on the middle and farthest segments did not vary with bridge width (dark gray and white bars in Figure 3b), F(5, 60) = 20.13, p < .01, linear trend: F(1, 12) = 48.26, p < .01. In other words, the increase in platform duration for narrow bridges reported above consisted entirely of time in close proximity to the precipice. On 87.4% of trials, infants got so close that their feet were either on the bridge or hanging over the edge of the precipice. Of course, infants always reached the edge on trials when they attempted to cross. But they also stood at the edge in 62.0% of refusal trials in which they did not try to cross and could have kept their distance. On trials where infants went to the edge, they took longer to arrive when the bridge was narrower, F(5, 50) = 3.66, p < .01, linear trend: F(1, 10) = 4.90, p = .05.

Figure 3.

Infants' location on the starting platform. (A) Average accumulated time spent in each 15-cm section of the platform, pooled over all trials. (B) Average accumulated time spent in each third of the platform, for trials at each bridge width. The close section contains locations less than 45 cm from the edge; the middle section contains locations 45-90 cm from the edge, and the far section contains locations more than 90 cm from the edge. Overall height of stacked bars represents the total platform duration. Error bars denote standard errors.
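As a rough illustration of how the location measure in Figure 3 could be tallied, the sketch below bins position samples into the close, middle, and far thirds named in the caption. The sample format (distance from the edge in cm paired with a dwell time in s), the function names, and the treatment of the exact 45-cm and 90-cm boundaries are assumptions made for illustration; they are not the coding scheme used in the study.

```python
from collections import defaultdict

def platform_section(distance_cm):
    """Map distance from the platform edge to the thirds in the Figure 3 caption.

    Boundary handling (45 and 90 cm counted as "middle") is an assumption.
    """
    if distance_cm < 45:
        return "close"
    if distance_cm <= 90:
        return "middle"
    return "far"

def accumulate_time(samples):
    """Sum dwell time (s) per section; samples are (distance_cm, duration_s) pairs."""
    totals = defaultdict(float)
    for distance_cm, duration_s in samples:
        totals[platform_section(distance_cm)] += duration_s
    return dict(totals)

# Hypothetical trial (invented values, not study data): the infant starts far
# from the bridge and spends most of the trial near the edge.
trial = [(130, 1.0), (100, 0.5), (70, 1.5), (30, 4.0), (5, 6.0)]
totals = accumulate_time(trial)
print(totals)
```

Summing the three sections gives the total platform duration, which corresponds to the overall height of the stacked bars in panel B.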

Temporal sequence of exploration

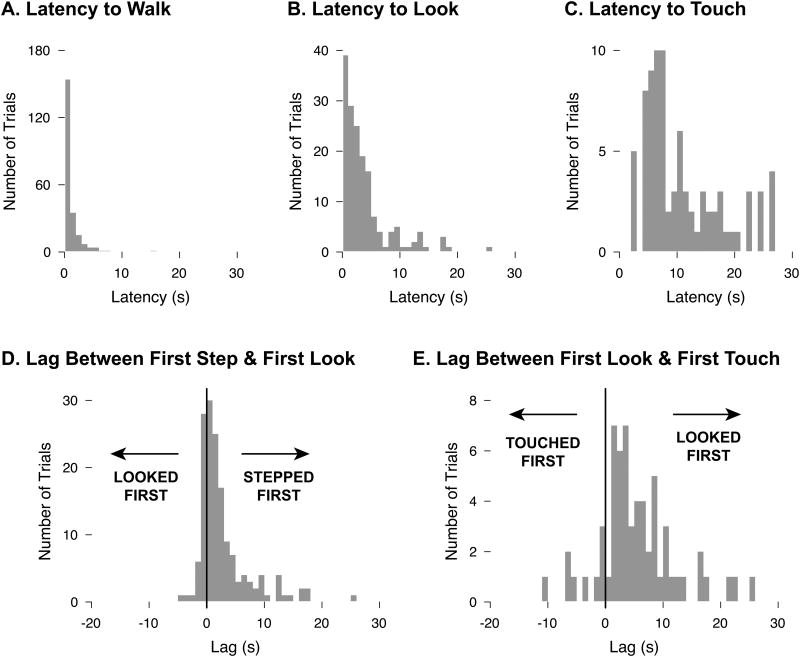

Exploration included several components (approaching, looking at, and touching the bridge) in temporal sequence. Typically, infants initiated locomotion toward the bridge almost immediately, taking their first step at M = 1.16 s after the start of the trial; in 85.1% of trials infants started walking within the first 2 s (Figure 4a). Looking at the bridge also occurred quickly: Infants first fixated the bridge at M = 3.84 s, and they looked within the first 5 s on 78.5% of trials (Figure 4b). First looks most frequently occurred far from the bridge, with the modal distance (26.9% of trials) at 120 cm away from the edge and the average distance at 82.66 cm from the edge. Touching, which by definition had to occur at the edge, came later in the trial, at M = 11.08 s (Figure 4c). Infants tended to initiate locomotion toward the goal before looking at the bridge (75.8% of trials), with an average lag between first step and first look of 2.80 s (Figure 4d); the proportion of trials in which the first step preceded the first look was significantly greater than 50%, one-sample t(13) = 5.29, p < .01. Infants usually touched after looking (85.5% of trials with both looks and touches), with an average lag from first look to first touch of 5.88 s (Figure 4e); the proportion of trials in which the first look preceded the first touch was significantly greater than 50%, t(12) = 8.05, p < .01. After initiating a touch, infants typically looked at the bridge again (79.0% of trials with touches). The final look occurred at M = 8.35 s for attempt trials (M = 2.76 s before starting to cross) and M = 18.96 s for refusal trials (M = 10.83 s before the end of the trial).

Figure 4.

Timing of exploration sequence components. (A) Latency from trial onset to first step. (B) Latency from trial onset to first look at the bridge. (C) Latency from trial onset to first touch. (D) Lag between first step and first look. Positive values indicate that the infant stepped before looking; negative values indicate that the infant looked before stepping. (E) Lag between first look and first touch. Positive values indicate that the infant looked before touching; negative values indicate that the infant touched before looking.
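The comparisons against 50% reported above can be sketched as a one-sample t test on per-infant proportions. The proportions below are invented for illustration and are not the study's data, and `one_sample_t` is a hypothetical helper written with the standard library; the software the authors used is not stated in this section.

```python
import math
import statistics

def one_sample_t(values, mu0):
    """Return (t, df) for a one-sample t test of mean(values) against mu0."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD, n - 1 in the denominator
    t = (mean - mu0) / (sd / math.sqrt(n))
    return t, n - 1

# Hypothetical per-infant proportions of trials in which the first step
# preceded the first look (14 invented values, one per infant).
step_first = [0.9, 0.8, 0.6, 0.7, 1.0, 0.5, 0.8,
              0.9, 0.7, 0.6, 0.8, 0.9, 0.4, 1.0]
t, df = one_sample_t(step_first, 0.5)
print(f"t({df}) = {t:.2f}")
```

With 14 infants the test has 13 degrees of freedom, matching the t(13) reported for step-before-look; the t(12) reported for look-before-touch is consistent with one infant contributing no trials with touches.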

Looking and approach to bridge

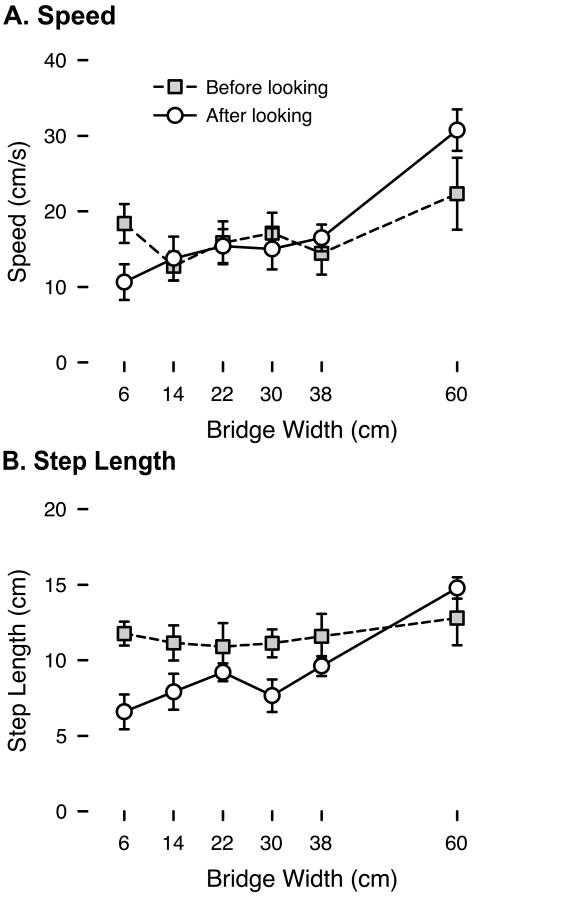

Given that infants gathered visual information in the course of walking toward the bridge, we asked whether they modified their walking in response to visual feedback about bridge width. As shown in Figure 5, infants modified their average speed (distance covered/time) and step length (distance covered/number of steps) after looking at the bridge, but not before obtaining visual information about bridge width. Because we were unable to measure distance if infants did not cross a calibration line or took only one step in the available time, these measures were missing from many trials, precluding us from calculating repeated-measures ANOVAs. Thus, we analyzed differences in speed and step length using Generalized Estimating Equations (GEEs) on the 142 available trials. The analyses revealed significant effects of bridge width, Wald χ2 > 12, ps < .01, and time period (before/after first look), Wald χ2 > 4, ps < .03, and significant bridge width × time period interactions, Wald χ2 > 8, p < .01. Parameter estimates from the models indicated that before looking, speed and step length did not differ by bridge width (ps > .3), but after looking, speed and step length were scaled to bridge width, with slower approach and shorter steps on narrower bridges (ps < .01).
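The two gait measures defined above reduce to simple ratios, and the missing-data rule can be made explicit. The following is a minimal sketch under stated assumptions: the function name, the use of `None` for an unmeasurable distance, and the example values are all hypothetical and do not come from the study's data or analysis code.

```python
def gait_measures(distance_cm, duration_s, n_steps):
    """Return (speed in cm/s, step length in cm), or None if not measurable.

    Mirrors the exclusions described in the text: distance is unknown when no
    calibration line was crossed (passed here as None), and a single step in
    the available time does not yield a usable measure.
    """
    if distance_cm is None or n_steps < 2 or duration_s <= 0:
        return None
    speed = distance_cm / duration_s       # distance covered / time
    step_length = distance_cm / n_steps    # distance covered / number of steps
    return speed, step_length

# Hypothetical values for one trial on a narrow bridge (invented for illustration).
before_look = gait_measures(60, 1.5, 3)   # period before the first look
after_look = gait_measures(45, 2.5, 5)    # period after the first look
print(before_look, after_look)
```

A trial contributes to the analysis only when the function returns a value, which is why many trials dropped out and a method tolerant of unbalanced data was needed.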

Figure 5.

Properties of infants' gait before the first look (gray squares) and after the first look (white circles) at each bridge width. (A) Average walking speed (distance covered / time). (B) Average step length (distance covered / number of steps). Error bars denote standard errors.

Crossing behaviors

In the final set of analyses, we analyzed infants' behaviors after exploring the bridge. Infants' motor decisions were accurate, but unrelated to exploratory looking and touching. While crossing challenging bridges, infants looked ahead at the goal, not down at the bridge.

Motor decisions

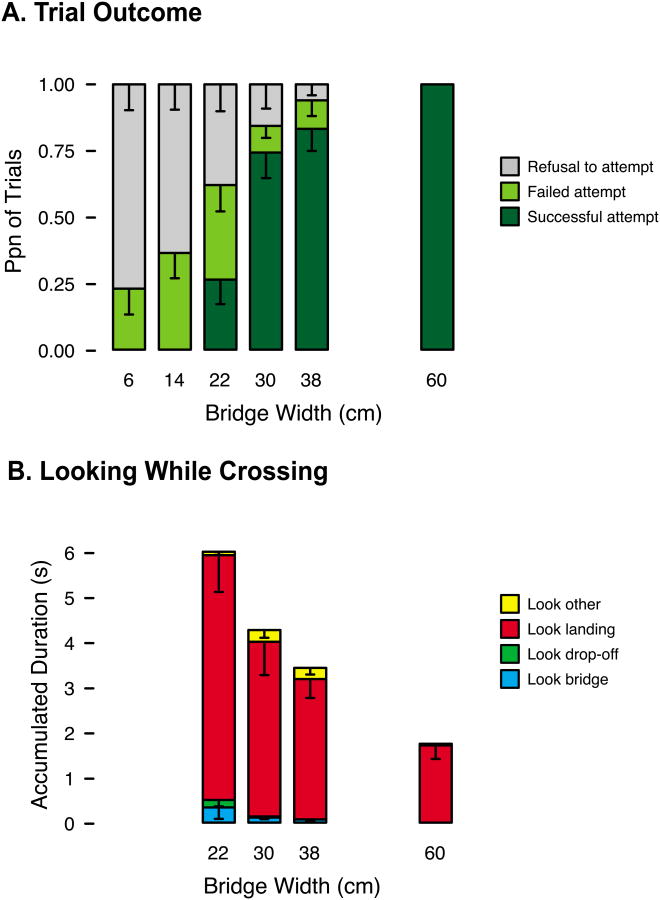

Infants' decisions about crossing were largely accurate and scaled to risk level. Attempts to walk (stacked green bars in Figure 6a) decreased on narrower bridges, F(5, 65) = 23.49, p < .01, linear trend: F(1, 13) = 72.05, p < .01. Reciprocally, refusals to walk increased on narrower bridges (gray bars in Figure 6a). However, infants did err occasionally (light green bars in Figure 6a).

Figure 6.

(A) Average proportion of trials at each bridge width in which infants succeeded (dark green bars), failed (light green bars), or refused (gray bars). (B) Average accumulated duration of looking at each AOI while crossing the bridge (successful trials only). Error bars denote standard errors.

We asked whether exploration was related to two types of errors: falling on challenging (22-, 30-, and 38-cm) bridges and falling on impossibly narrow (6-, 14-cm) bridges. Table 1 shows that for challenging bridges, looking (whether infants looked, how long they looked, and when they looked) and whether infants touched did not differ for successful and failed attempts; logistic GEEs confirmed that these variables did not significantly predict trial outcomes. Touch duration, however, did predict outcomes: Surprisingly, infants spent more time touching on failed than successful attempts. For impossible bridges, looking and touching did not significantly predict whether infants attempted or refused. Note there were only 13 impossible trials without looks. However, infants attempted 53.8% of these trials, compared to 27.5% of impossible trials in which they did look.

Table 1. Exploratory behaviors by trial outcome.

| | Challenging bridges (22, 30, & 38 cm) | | | Impossible bridges (6 & 14 cm) | | |
|---|---|---|---|---|---|---|
| Measure | Successful attempts | Failed attempts | Wald χ2 p-value | Attempts | Refusals | Wald χ2 p-value |
| Ppn of trials with look | .862 (65) | .813 (16) | .94 | .667 (21) | .861 (43) | .37 |
| M look duration (s) | 2.06 (65) | 2.02 (16) | .80 | 0.91 (21) | 2.16 (43) | .99a |
| M latency to look (s) | 3.31 (56) | 3.80 (13) | .55 | 4.36 (14) | 4.93 (37) | .85 |
| M lag between last look and cross (s) | 2.80 (56) | 3.32 (13) | .93 | | | |
| Ppn of trials with touch | .270 (74) | .608 (23) | .12b | .417 (24) | .547 (53) | .56 |
| M touch duration (s) | 1.30 (74) | 3.87 (23) | .01b | 3.13 (24) | 6.36 (53) | .34a |

Note. Number of trials in parentheses.

a. Because infants had more time available to look and touch on refusal trials, platform duration was included as a covariate.

b. Because touching varied with bridge width on these increments, bridge width was included as a covariate.
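The attempt rates quoted for impossible bridges (53.8% without a look, 27.5% with a look) can be recovered from the trial counts and look proportions in Table 1. The sketch below is only an arithmetic check: it assumes that rounding the product of trials and proportion gives the number of trials with a look, which agrees with the counts of 14 and 37 shown in the latency row. The variable and function names are illustrative.

```python
# Impossible-bridge entries from Table 1: (number of trials, proportion with a look).
attempts = (21, 0.667)
refusals = (43, 0.861)

def split_by_look(n_trials, ppn_look):
    """Return (trials with a look, trials without a look), rounding to whole trials."""
    looked = round(n_trials * ppn_look)
    return looked, n_trials - looked

att_look, att_nolook = split_by_look(*attempts)
ref_look, ref_nolook = split_by_look(*refusals)

# Attempt rate among trials without a look, and among trials with a look.
rate_nolook = att_nolook / (att_nolook + ref_nolook)
rate_look = att_look / (att_look + ref_look)

print(att_nolook + ref_nolook)                        # trials without looks
print(f"{rate_nolook:.1%} vs {rate_look:.1%}")
```

The check also shows why power is limited: only 13 of the 64 impossible-bridge trials lacked a look.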

Ongoing guidance of bridge crossing

Infants gathered very little visual information on-line to guide their steps (Figure 6b): They spent only M = 0.12 s (M = 2.7% of total crossing time) fixating the bridge while crossing. Instead, they spent M = 94.0% of crossing time looking ahead at the goal. Infants looked down slightly more often on narrower bridges, Wald χ2 = 4.99, p = .03, B = -.007.

Effects of walking experience

Our final set of analyses investigated whether walking experience affected infants' exploratory actions and crossing behaviors. Walking experience was uncorrelated with age, r(12) = .05, p = .88, and age was uncorrelated with all measures of exploration and motor decisions (ps > .36), indicating that experience—not age—was responsible for significant effects.

Motor decisions

As in previous work, walking experience was correlated with errors: Less experienced infants fell more frequently, r(12) = -.56, p = .04 (Figure 7a).

Figure 7.

Relations with walking experience. (A) Relation between walking experience and the proportion of trials in which infants looked at the bridge. (B) Relation between walking experience and the proportion of trials on narrow (6-, 14-, and 22-cm) bridges in which infants touched the bridge. (C) Relation between walking experience and the proportion of trials on wide (30-, 38-, and 60-cm) bridges in which infants touched the bridge. (D) Relation between walking experience and the proportion of trials in which infants fell. (E) Distribution of walking experience and two experience groups. (F) Average proportion of trials at each bridge width in which inexperienced (white circles) and experienced (gray squares) infants touched the bridge. Error bars denote standard errors.

Looking

Walking experience was not significantly correlated with the proportion of trials in which infants looked at the bridge, r(12) = .33, p = .25 (Figure 7b). However, the two infants with less than one month of experience had the lowest rates of looking: 40% and 53% of trials.

Touching

Pooled over bridge widths, walking experience was not correlated with the proportion of trials in which infants touched the bridge, r(12) = -.16, p = .58. However, because touching varied by bridge width, we conducted separate correlations for the 6-, 14-, and 22-cm bridges and the 30-, 38-, and 60-cm bridges. On the narrower bridges, we initially found no relation between experience and touching, r(12) = .12, p = .68, but after removing a large bivariate outlier (the experienced infant who never touched), we found a marginal positive correlation, r(11) = .52, p = .07 (Figure 7c). On the wider bridges, we found a negative correlation between experience and touching, r(12) = -.57, p = .04: Infants with more walking experience were less likely to touch wider bridges (Figure 7d). To further examine these relations, we used the median of 1.97 months of walking experience to split infants into an “inexperienced” group (0.43-1.97 months of walking experience) and an “experienced” group (2.86-4.70 months of walking experience; see Figure 7e). A 2 (experience group) × 6 (bridge width) repeated measures ANOVA on the likelihood of touching revealed a significant interaction, F(5, 55) = 2.63, p = .03: Experienced infants showed larger modulation of touching based on bridge width (Figure 7f).
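The median split described above can be sketched in a few lines. Only the median (1.97 months) and the group ranges (0.43-1.97 and 2.86-4.70 months) come from the text; the remaining experience values, the sample of 13 infants, the rule that an infant at the median falls in the inexperienced group, and the function name are assumptions made so the example runs.

```python
import statistics

def median_split(experience_months):
    """Split infants at the median; values at or below the median are 'inexperienced'."""
    cutoff = statistics.median(experience_months)
    groups = {"inexperienced": [], "experienced": []}
    for months in experience_months:
        key = "inexperienced" if months <= cutoff else "experienced"
        groups[key].append(months)
    return cutoff, groups

# Hypothetical walking experience in months. The endpoints and the median match
# the ranges reported in the text; every other value is invented.
experience = [0.43, 0.90, 1.20, 1.50, 1.70, 1.90, 1.97,
              2.86, 3.10, 3.50, 3.90, 4.30, 4.70]
cutoff, groups = median_split(experience)
print(cutoff, len(groups["inexperienced"]), len(groups["experienced"]))
```

A split like this trades statistical power for a simple between-group comparison, which is why it is reported alongside, rather than instead of, the correlations.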

Discussion

Animals do something with their perceptual systems to acquire information about the environment. Bats send out echolocation calls, dogs sniff the air, fish emit electric fields, and humans scan the scene and make direct contact with objects and surfaces. Exploratory movements allow animals to acquire information for guiding locomotion through a variable world. The current study sought to characterize the process of active information gathering in infants navigating challenging terrain.

Our findings replicate prior work showing that on narrower bridges, infants spend more time on the starting platform, are more likely to touch the bridge, and are less likely to attempt to cross (Kretch & Adolph, 2013b). The addition of head-mounted eye tracking allowed us to obtain novel spatial and temporal data on infants' gaze, describe the real-time cycle of perception and action, and directly test the ramping-up hypothesis.

Infants' use of visual and haptic exploration

Infants obtained three types of perceptual information about upcoming ground. They spent most of each trial facing forward; thus, the bridge was ostensibly in their peripheral visual field. Infants also gathered high-resolution visual information by actively directing gaze toward the bridge. And infants generated haptic information by making direct contact with the bridge, stepping partway on to test whether they could keep balance on the narrow surface.

Infants were sensitive to the costs and benefits of visual and haptic exploration and deployed the two modalities differently. Looking is relatively easy and is the only source of high-resolution information from a distance. Thus, consistent with the ramping-up hypothesis, infants looked at the bridge from afar at the start of most trials and the likelihood of looking did not vary with bridge width. In other words, the exploratory sequence began with looking and the first look was not guided by prior information about bridge width. However, the duration of looking did not vary with bridge width, refuting Adolph and Eppler's (1998) prediction that initial quick glances would lead to long looks on risky surfaces.

We were most surprised at how little accumulated time infants spent fixating the bridge from the starting platform. In previous work, latency to cross served as a proxy for visual exploration and long latencies were interpreted as long looks (Adolph, 1997; Adolph & Eppler, 1998; Adolph et al., 2000). However, by measuring gaze directly, we found that visual exploration of the bridge comprised brief glances, not prolonged examinations, and infants spent the majority of each trial looking ahead at the goal. These brief glances provided sufficient visual information: Decisions were generally accurate, and longer looking did not ensure fewer errors.

Looking at the ground may be more costly than originally suspected. Visual attention resources are limited. Focusing on an object requires diverting gaze from another part of the environment—in this case, infants' caregivers, who called to them and held attractive objects. Similarly, in the real world, locomotion is seldom an animal's only task. We walk while carrying on a conversation, admiring the view, or reading a map. Locomotion typically has an end goal that may be the main focus of attention. For example, when navigating obstacles during a scavenger hunt, children and adults spent more time fixating search targets than obstacles (Franchak & Adolph, 2010). In the current study, infants kept their eyes on the goal and engaged in visual exploration of the bridge sparingly and efficiently.

Haptic exploration is more costly than looking, requiring more effort and time and carrying more risk. Accordingly, infants reserved touching primarily for narrow bridges. However, even on narrow bridges, touching (which required infants to modify their gait to stop at the edge) did not exceed 50% of trials.

Sequential process of exploration

Exploration played out in an organized spatial and temporal sequence, a real-time perception-action cycle. Infants looked from a distance, then approached the bridge and touched; they rarely touched before looking. This sequence supports the ramping-up hypothesis that infants use looking as an initial assessment, and that the decision to touch is informed by information gleaned from looking (Adolph & Eppler, 1998; Adolph et al., 2000). If looking from a distance indicated safe terrain, touching was unnecessary. But if visual information did not assure safety, touching was sometimes employed to provide additional information.

Of course, because infants began the trials at a distance from the bridge, the opportunity to look preceded the opportunity to touch. To test whether looking would be prioritized in a situation where both were available at the same moment, infants could be placed at the edge of the bridge at the start of the trial. However, this would be a highly unrealistic situation. Obstacles do not appear suddenly at one's feet; rather, observers see them coming ahead of time if they look. Looking at upcoming ground is strategic, but not mandatory (Franchak & Adolph, 2010; Franchak et al., 2011), and looking and touching in sequence is also not mandatory. The prediction of the ramping-up hypothesis that looking should precede touching, while logical, was unconfirmed prior to the current study.

Although the first look at the bridge typically preceded touching, infants usually looked again during the touch. Several studies have shown that infants pay more attention to perceptual properties that are redundant with those from other modalities experienced concurrently (Bahrick & Lickliter, 2012, 2014). Simultaneous looking and touching may highlight properties like bridge width that can be both seen and felt.

Information from looking also affected infants' walking gait. Before looking, infants' speed and step length were at baseline level, unaffected by bridge width. After looking at narrower bridges, infants slowed down and shortened their steps. After looking at the widest 60-cm bridge, infants sped up and lengthened their steps. Thus, infants used the information gained from looking—they modified their locomotion in response to visual feedback.

A novel outcome measure in the current study is where infants spent their time while exploring. Infants lived on the edge: They prioritized acquiring improved visual and haptic information over keeping a safe distance from the risky drop-off. In previous work, refusal to cross is referred to as “avoidance” (see Adolph, Kretch, & LoBue, 2014 for review), but our data indicate that this term is a misnomer. The fact that infants seek proximity to the edge but do not plunge over the brink indicates that infants perceive affordances of drop-offs but are not afraid of heights (Adolph et al., 2014; Kretch & Adolph, 2013a, 2013b).

Relations between exploration, experience, and decisions

On some trials, infants erred: They attempted to walk over impossibly narrow bridges or failed to adequately modify their walking gait while crossing. Clearly, some part of the perception-action cycle broke down: Either infants did not perform the necessary exploratory actions, or they misinterpreted the resulting information. Our data support the latter. We found no relations between success and exploratory behaviors. Looking and touching were not sufficient to prevent mistakes, and more looking and touching did not produce better outcomes. Similarly, previous work showed that novice crawlers attempt to crawl down impossibly steep slopes despite extended periods of haptic exploration at the brink (Adolph, 1997). Developmentally, the ability to plan and execute exploratory movements may precede the ability to understand the perceptual information generated by those movements (Adolph, 1997; Eppler, Adolph, & Weiner, 1996; E. J. Gibson, 1988). Additionally, the ability to recognize when to recruit an additional modality (touching) may develop earlier than the ability to integrate multiple modalities (Gori et al., 2008; Nardini et al., 2013).

Our analyses revealed effects of walking experience on the perception-action cycle. Infants' looking behavior was largely unaffected by walking experience. However, experience affected how infants responded to visual information. Initial looks prompted experienced walkers to touch more on narrow bridges and less on wider bridges. Less experienced infants were less discriminating: They touched at relatively high rates on wide bridges that visual inspection should have deemed safe. Less experienced infants also fell more frequently. The current data suggest that walking experience may teach infants to interpret perceptual information gleaned from exploratory behaviors, causing subsequent exploration to be more efficient and decisions to be more accurate.

Vision is widely considered to be the primary perceptual system for guidance of locomotion in humans (J. J. Gibson, 1958, 1979; Lee & Lishman, 1977; Patla, 1997). Thus, it was counterintuitive that we did not find a significant relation between visual exploration of the bridge and errors. However, infants fixated the bridge on most trials. Possibly, infants did not contribute enough trials without looks to provide adequate power to test this relation. Of the handful of impossible bridge trials in which infants did not look at the bridge, they attempted and failed on more than half. Thus, the question remains open of whether directing gaze at an obstacle provides better information than peripheral vision alone.

In the current study, we focused on the timing of looking and touching (when and for how long infants looked at and touched the bridge) rather than the quality of looks or touches. But not all looks and touches are equivalent. Looking can involve varying levels of attention and different scan paths. Touching can involve tapping, rubbing, stepping, or rocking (Adolph, 1997). Studies of infant object exploration show that infants perform distinct patterns of haptic exploration based on object properties (Lockman & McHale, 1989; Palmer, 1989; Ruff, 1984; Ruff, Saltarelli, Capozzoli, & Dubiner, 1992). Different types of hand and finger movements provide different types of information about objects; adults and children adjust their exploratory procedures based on the information they seek (Klatzky, Lederman, & Mankinen, 2005; Lederman & Klatzky, 1987). Similarly, different types of haptic information should be differentially informative about locomotion over a particular surface. The optimal haptic exploration for assessing the walkability of bridges would reveal information about fitting the feet on the bridge and maintaining balance, so active steps or weight shifts may be important. In assessing affordances for locomotion, quality of exploration is likely more relevant than quantity.

Online guidance of locomotion

A consistent finding from the current study was the lack of fixation of the bridge while crossing. Walking over bridges is no easy feat. Infants had to place each step in exactly the right place and maintain balance by keeping their center of gravity above the narrow surface. Unlike previous studies involving obstacles that could be cleared in a single step (Franchak & Adolph, 2010; Franchak et al., 2011; Patla & Vickers, 1997), walking over a bridge requires constant updating of perceptual information in a continuation of the perception-action cycle. Infants succeeded on most trials, but success did not involve online fixation of the bridge.

What information did infants use instead? Previous work suggests that infants largely use peripheral optic flow for maintaining balance (Butterworth & Hicks, 1977; Lee & Aronson, 1974; Stoffregen, Schmuckler, & Gibson, 1987). Information for step placement was available from haptic feedback on the bottoms of infants' feet. If they stepped too far to one side, they felt the lack of support and could correct subsequent steps. Thus, fixating the bridge was unnecessary and may be counterproductive: Looking at one's feet requires leaning the head forward, thereby shifting the center of mass and disrupting balance (Adolph, 1997).

Perhaps the need to continually update perceptual information while crossing contributed to the finding that prospective looking and touching did not guarantee success on challenging bridges. Infants could begin crossing the bridge with perfect technique, but err several steps later and fall partway across.

Conclusions

We provided evidence that infants' exploration for guiding locomotion over bridges is efficient and proceeds in an organized sequence. Our findings are generally consistent with the ramping-up hypothesis (Adolph & Eppler, 1998; Adolph et al., 2000) and with data from other tasks, age groups, and species (Adolph, 1997; Adolph & Robinson, 2015; Moss et al., 2011; Patla & Vickers, 1997, 2003). Thus, we propose that the process of real-time exploration illustrated here is a general one. While engaged in typical locomotion, animals send out occasional, low-cost, distance probes, such as quick glances at the ground or low-frequency echolocation calls. If the distance probe suggests business as usual, animals proceed. But if the distance probe suggests a challenge in the terrain ahead, animals ramp up to more costly forms of perceptual exploration and locomotor actions: They slow down and engage in more concerted exploration, such as haptic exploration or high-intensity, aimed bursts of calls. After a few months of walking experience, human infants follow this general procedure.

Research Highlights.

- When deciding whether to cross bridges varying in width, 13-14-month-old infants displayed an organized, efficient sequence of visual and haptic exploratory behaviors.

- Infants looked at the bridge on most trials, but looks were brief; exploratory touching was reserved for risky bridges, but touches were relatively prolonged.

- Exploration followed a sequential “ramping-up” process: Looking at the bridge occurred early and from a distance, providing an initial assessment that prompted subsequent gait modifications and haptic exploration on risky bridges.

- With walking experience, exploratory behaviors became increasingly efficient and infants were better able to interpret the resulting perceptual information.

Acknowledgments

Author Note: The project was supported by Award # R37HD033486 from the Eunice Kennedy Shriver National Institute of Child Health & Human Development to Karen Adolph and by Graduate Research Fellowship # 0813964 from the National Science Foundation to Kari Kretch. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Eunice Kennedy Shriver National Institute of Child Health & Human Development, the National Institutes of Health, or the National Science Foundation.

We gratefully acknowledge Sora Lee and members of the NYU Infant Action Lab for helping to collect and code data, Gladys Chan for her beautiful line drawing, Alex Tiriac for introducing us to work on bat navigation, and Cynthia Moss for her discussions about babies and bats.

References

- Adolph KE. Psychophysical assessment of toddlers' ability to cope with slopes. Journal of Experimental Psychology: Human Perception and Performance. 1995;21:734–750. doi: 10.1037//0096-1523.21.4.734.

- Adolph KE. Learning in the development of infant locomotion. Monographs of the Society for Research in Child Development. 1997;62(3, Serial No. 251) doi: 10.2307/1166199.

- Adolph KE. Specificity of learning: Why infants fall over a veritable cliff. Psychological Science. 2000;11:290–295. doi: 10.1111/1467-9280.00258.

- Adolph KE, Eppler MA. Development of visually guided locomotion. Ecological Psychology. 1998;10:303–321.

- Adolph KE, Eppler MA, Marin L, Weise IB, Clearfield MW. Exploration in the service of prospective control. Infant Behavior and Development. 2000;23:441–460.

- Adolph KE, Joh AS. Multiple learning mechanisms in the development of action. In: Woodward A, Needham A, editors. Learning and the infant mind. New York: Oxford University Press; 2009. pp. 172–207.

- Adolph KE, Joh AS, Eppler MA. Infants' perception of affordances of slopes under high and low friction conditions. Journal of Experimental Psychology: Human Perception and Performance. 2010;36:797–811. doi: 10.1037/a0017450.

- Adolph KE, Kretch KS, LoBue V. Fear of heights in infants? Current Directions in Psychological Science. 2014 doi: 10.1177/0963721413498895.

- Adolph KE, Robinson SR. The road to walking: What learning to walk tells us about development. In: Zelazo P, editor. Oxford handbook of developmental psychology. New York: Oxford University Press; 2013. pp. 403–443.

- Adolph KE, Robinson SR. Motor development. In: Liben L, Muller U, editors. Handbook of child psychology and developmental science. 7th. Vol. 2. New York: Wiley; 2015. pp. 114–157. Cognitive Processes.

- Bahrick LE, Lickliter R. The role of intersensory redundancy in early perceptual, cognitive, and social development. In: Bremner A, Lewkowicz DJ, Spence C, editors. Multisensory development. Oxford: Oxford University Press; 2012. pp. 183–205.

- Bahrick LE, Lickliter R. Learning to attend selectively: The dual role of intersensory redundancy. Current Directions in Psychological Science. 2014;23:414–420. doi: 10.1177/0963721414549187.

- Bertenthal BI, Clifton RK. Perception and action. In: Kuhn D, Siegler RS, editors. Handbook of Child Psychology, Vol 2: Cognition, Perception, and Language. 5th. New York: John Wiley & Sons; 1998. pp. 51–102.

- Butterworth G, Hicks L. Visual proprioception and postural stability in infancy: A developmental study. Perception. 1977;6:255–262. doi: 10.1068/p060255.

- Eppler MA, Adolph KE, Weiner T. The developmental relationship between infants' exploration and action on slanted surfaces. Infant Behavior and Development. 1996;19:259–264.

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a.

- Franchak JM, Adolph KE. Visually guided navigation: Head-mounted eye-tracking of natural locomotion in children and adults. Vision Research. 2010;50:2766–2774. doi: 10.1016/j.visres.2010.09.024.

- Franchak JM, Kretch KS, Soska KC, Adolph KE. Head-mounted eye tracking: A new method to describe infant looking. Child Development. 2011;82:1738–1750. doi: 10.1111/j.1467-8624.2011.01670.x.

- Galambos R, Griffin DR. Obstacle avoidance by flying bats: The cries of bats. Journal of Experimental Zoology. 1942;89:475–490.

- Galloway JC, Thelen E. Feet first: Object exploration in young infants. Infant Behavior and Development. 2004;27:107–112. doi: 10.1016/j.infbeh.2003.06.001.

- Gibson EJ. Exploratory behavior in the development of perceiving, acting, and the acquiring of knowledge. Annual Review of Psychology. 1988;39:1–41.

- Gibson EJ, Riccio G, Schmuckler MA, Stoffregen TA, Rosenberg D, Taormina J. Detection of the traversability of surfaces by crawling and walking infants. Journal of Experimental Psychology: Human Perception and Performance. 1987;13:533–544. doi: 10.1037//0096-1523.13.4.533.

- Gibson EJ, Schmuckler MA. Going somewhere: An ecological and experimental approach to development of mobility. Ecological Psychology. 1989;1:3–25.

- Gibson EJ, Walk RD. The “visual cliff”. Scientific American. 1960;202:64–71.

- Gibson JJ. Visually controlled locomotion and visual orientation in animals. British Journal of Psychology. 1958;49:182–194. doi: 10.1111/j.2044-8295.1958.tb00656.x.

- Gibson JJ. The senses considered as perceptual systems. Boston: Houghton-Mifflin; 1966.

- Gibson JJ. The ecological approach to visual perception. Boston, MA: Houghton Mifflin Company; 1979.

- Gill SV, Adolph KE, Vereijken B. Change in action: How infants learn to walk down slopes. Developmental Science. 2009;12:888–902. doi: 10.1111/j.1467-7687.2009.00828.x.

- Gori M, Del Viva M, Sandini G, Burr DC. Young children do not integrate visual and haptic form information. Current Biology. 2008;18:694–698. doi: 10.1016/j.cub.2008.04.036.

- Griffin DR, Galambos R. The sensory basis of obstacle avoidance by flying bats. Journal of Experimental Zoology. 1941;86:481–506.

- Griffin DR, Webster FA, Michael CR. The echolocation of flying insects by bats. Animal Behavior. 1960;8:141–154. [Google Scholar]

- Jen PHS, Kamada T. Analysis of orientation signals emitted by the CF-FM bat, Pteronotus p. parnellii and the FM bat, Eptesicus fuscus during avoidance of moving and stationary obstacles. Journal of Comparative Physiology. 1982;148:389–398.

- Joh AS, Adolph KE. Learning from falling. Child Development. 2006;77:89–102. doi: 10.1111/j.1467-8624.2006.00858.x.

- Joh AS, Adolph KE, Narayanan PJ, Dietz VA. Gauging possibilities for action based on friction underfoot. Journal of Experimental Psychology: Human Perception and Performance. 2007;33:1145–1157. doi: 10.1037/0096-1523.33.5.1145.

- Klatzky RL, Lederman SJ, Mankinen JM. Visual and haptic exploratory procedures in children's judgments about tool function. Infant Behavior and Development. 2005;28:240–249.

- Kretch KS, Adolph KE. Cliff or step? Posture-specific learning at the edge of a drop-off. Child Development. 2013a;84:226–240. doi: 10.1111/j.1467-8624.2012.01842.x.

- Kretch KS, Adolph KE. No bridge too high: Infants decide whether to cross based on the probability of falling not the severity of the potential fall. Developmental Science. 2013b;16:336–351. doi: 10.1111/desc.12045.

- Kretch KS, Franchak JM, Adolph KE. Crawling and walking infants see the world differently. Child Development. 2014. doi: 10.1111/cdev.12206.

- Lederman SJ, Klatzky RL. Hand movements: A window into haptic object recognition. Cognitive Psychology. 1987;19:342–368. doi: 10.1016/0010-0285(87)90008-9.

- Lederman SJ, Klatzky RL. Haptic perception: A tutorial. Attention, Perception, and Psychophysics. 2009;71:1439–1459. doi: 10.3758/APP.71.7.1439.

- Lee DN, Aronson E. Visual proprioceptive control of standing in human infants. Perception and Psychophysics. 1974;15:529–532.

- Lee DN, Lishman R. Visual control of locomotion. Scandinavian Journal of Psychology. 1977;18:224–230. doi: 10.1111/j.1467-9450.1977.tb00281.x.

- Lockman JJ, McHale JP. Object manipulation in infancy: Developmental and contextual determinants. In: Lockman JJ, Hazen NL, editors. Action in social context: Perspectives on early development. New York: Plenum; 1989. pp. 129–167.

- Marigold DS, Patla AE. Gaze fixation patterns for negotiating complex ground terrain. Neuroscience. 2007;144(1):302–313. doi: 10.1016/j.neuroscience.2006.09.006.

- Marigold DS, Weerdesteyn V, Patla AE, Duysens J. Keep looking ahead? Re-direction of visual fixation does not always occur during an unpredictable obstacle avoidance task. Experimental Brain Research. 2007;176(1):32–42. doi: 10.1007/s00221-006-0598-0.

- Mark LS, Baillet JA, Craver KD, Douglas SD, Fox T. What an actor must do in order to perceive the affordance for sitting. Ecological Psychology. 1990;2:325–366.

- Matthis JS, Fajen BR. Visual control of foot placement when walking over complex terrain. Journal of Experimental Psychology: Human Perception and Performance. 2014;40:106–115. doi: 10.1037/a0033101.

- Moss CF, Chiu C, Surlykke A. Adaptive vocal behavior drives perception by echolocation in bats. Current Opinion in Neurobiology. 2011;21:645–652. doi: 10.1016/j.conb.2011.05.028.

- Moss CF, Surlykke A. Probing the natural scene by echolocation in bats. Frontiers in Behavioral Neuroscience. 2010;4:1–16. doi: 10.3389/fnbeh.2010.00033.

- Nardini M, Begus K, Mareschal D. Multisensory uncertainty reduction for hand localization in children and adults. Journal of Experimental Psychology: Human Perception and Performance. 2013;39:773–787. doi: 10.1037/a0030719.

- Palmer CF. The discriminating nature of infants' exploratory actions. Developmental Psychology. 1989;25:885–893.

- Patla AE. Understanding the roles of vision in the control of human locomotion. Gait and Posture. 1997;5:54–69.

- Patla AE. Gaze behaviors during adaptive human locomotion: Insights into how vision is used to regulate locomotion. In: Vaina LM, Beardsley SA, Rushton SK, editors. Optic flow and beyond. Dordrecht: Kluwer Academic Publishers; 2004. pp. 383–399.

- Patla AE, Vickers JN. Where and when do we look as we approach and step over an obstacle in the travel path. Neuroreport. 1997;8:3661–3665. doi: 10.1097/00001756-199712010-00002.

- Patla AE, Vickers JN. How far ahead do we look when required to step on specific locations in the travel path during locomotion? Experimental Brain Research. 2003;148:133–138. doi: 10.1007/s00221-002-1246-y.

- Rietdyk S, Drifmeyer JE. The rough-terrain problem: Accurate foot targeting as a function of visual information regarding target location. Journal of Motor Behavior. 2009;42:37–48. doi: 10.1080/00222890903303309.

- Rochat P. Object manipulation and exploration in 2- to 5-month-old infants. Developmental Psychology. 1989;25:871–884.

- Ruff HA. Infants' manipulative exploration of objects: Effects of age and object characteristics. Developmental Psychology. 1984;20:9–20.

- Ruff HA, Saltarelli LM, Capozzoli M, Dubiner K. The differentiation of activity in infants' exploration of objects. Developmental Psychology. 1992;28:851–861.

- Simmons JA, Fenton MB, O'Farrell MJ. Echolocation and pursuit of prey by bats. Science. 1979;203:16–21. doi: 10.1126/science.758674.

- Sivak B, MacKenzie CL. Integration of visual information and motor output in reaching and grasping: The contributions of peripheral and central vision. Neuropsychologia. 1990;28:1095–1116. doi: 10.1016/0028-3932(90)90143-c.

- Stoffregen TA, Schmuckler MA, Gibson EJ. Use of central and peripheral optical flow in stance and locomotion in young walkers. Perception. 1987;16:113–119. doi: 10.1068/p160113.

- Thelen E. Coupling perception and action in the development of skill: A dynamic approach. In: Bloch H, Bertenthal BI, editors. Sensory-motor organizations and development in infancy and early childhood. Dordrecht, the Netherlands: Kluwer Academic Publishers; 1990. pp. 39–56.

- Turvey MT, Carello C, Kim NG. Links between active perception and the control of action. In: Haken H, Stadler M, editors. Synergetics of cognition. Heidelberg: Springer-Verlag Berlin; 1990. pp. 269–295.

- Wilkinson EJ, Sherk HA. The use of visual information for planning accurate steps in a cluttered environment. Behavioural Brain Research. 2005;164:270–274. doi: 10.1016/j.bbr.2005.06.023.