Abstract

Phase transitions and critical behavior are crucial issues in both theoretical and experimental neuroscience. We report analytic and computational results about phase transitions and self-organized criticality (SOC) in networks with general stochastic neurons. The stochastic neuron has a firing probability given by a smooth monotonic function Φ(V) of the membrane potential V, rather than a sharp firing threshold. We find that such networks can operate in several dynamic regimes (phases) depending on the average synaptic weight and the shape of the firing function Φ. In particular, we encounter both continuous and discontinuous phase transitions to absorbing states. At the critical boundary of the continuous transition, neuronal avalanches occur whose distributions of size and duration are given by power laws, as observed in biological neural networks. We also propose and test a new mechanism to produce SOC: the use of dynamic neuronal gains – a form of short-term plasticity probably located at the axon initial segment (AIS) – instead of depressing synapses at the dendrites (as previously studied in the literature). The new self-organization mechanism produces a slightly supercritical state, which we call SOSC, in accordance with some intuitions of Alan Turing.

“Another simile would be an atomic pile of less than critical size: an injected idea is to correspond to a neutron entering the pile from without. Each such neutron will cause a certain disturbance which eventually dies away. If, however, the size of the pile is sufficiently increased, the disturbance caused by such an incoming neutron will very likely go on and on increasing until the whole pile is destroyed. Is there a corresponding phenomenon for minds, and is there one for machines? There does seem to be one for the human mind. The majority of them seems to be subcritical, i.e., to correspond in this analogy to piles of subcritical size. An idea presented to such a mind will on average give rise to less than one idea in reply. A smallish proportion are supercritical. An idea presented to such a mind may give rise to a whole “theory” consisting of secondary, tertiary and more remote ideas. (…) Adhering to this analogy we ask, “Can a machine be made to be supercritical?”” Alan Turing (1950)1.

The Critical Brain Hypothesis2,3 states that (some) biological neuronal networks work near phase transitions because criticality enhances information processing capabilities4,5,6 and health7. The first discussion about criticality in the brain, in the sense that subcritical, critical and slightly supercritical branching processes of thoughts could describe human and animal minds, was made in the beautiful speculative 1950 Imitation Game paper by Turing1. In 1995, Herz & Hopfield8 noticed that self-organized criticality (SOC) models for earthquakes were mathematically equivalent to networks of integrate-and-fire neurons, and speculated that perhaps SOC would occur in the brain. In 2003, in a landmark paper, these theoretical conjectures found experimental support by Beggs & Plenz9 and, by now, more than half a thousand papers can be found about the subject; see some reviews2,3,10. Although not consensual, the Critical Brain Hypothesis can be considered at least a very fertile idea.

An open question about neuronal criticality is which mechanisms are responsible for tuning the network towards the critical state. Up to now, the main mechanism studied is some dynamics in the links which, in the biological context, would occur at the synaptic level11,12,13,14,15,16,17.

Here we propose a whole new mechanism: dynamic neuronal gains, related to the diminution (and recovery) of the firing probability, an intrinsic neuronal property. The neuronal gain is experimentally related to the well known phenomenon of firing rate adaptation18,19,20. This new mechanism is sufficient to drive neuronal networks of stochastic neurons towards a critical boundary found, for the first time, for these models. The neuron model we use was proposed by Galves and Löcherbach21 as a stochastic model of spiking neurons inspired by the traditional integrate-and-fire (IF) model.

Introduced in the early 20th century22, IF elements have been extensively used in simulations of spiking neurons20,23,24,25,26,27,28. Despite their simplicity, IF models have successfully emulated certain phenomena observed in biological neural networks, such as firing avalanches12,13,29 and multiple dynamical regimes30,31. In these models, the membrane potential V(t) integrates synaptic and external currents up to a firing threshold VT32. Then, a spike is generated and V(t) drops to a reset potential VR. The leaky integrate-and-fire (LIF) model extends the IF neuron with a leakage current, which causes the potential V(t) to decay exponentially towards a baseline potential VB in the absence of input signals24,26.

LIF models are deterministic but it has been claimed that stochastic models may be more adequate for simulation purposes33. Some authors proposed to introduce stochasticity by adding noise terms to the potential24,25,30,31,33,34,35,36,37, yielding the leaky stochastic integrate-and-fire (LSIF) models.

Alternatively, the Galves-Löcherbach (GL) model21,38,39,40,41 and also the model used by Larremore et al.42,43 introduce stochasticity in their firing neuron models in a different way. Instead of noise inputs, they assume that the firing of the neuron is a random event, whose probability of occurrence in any time step is a firing function Φ(V) of membrane potential V. By subsuming all sources of randomness into a single function, the Galves-Löcherbach (GL) neuron model simplifies the analysis and simulation of noisy spiking neural networks.

Brain networks are also known to exhibit plasticity: changes in neural parameters over time scales longer than the firing time scale27,44. For example, short-term synaptic plasticity45 has been incorporated in models by assuming that the strength of each synapse is lowered after each firing, and then gradually recovers towards a reference value12,13. This kind of dynamics drives the synaptic weights of the network towards critical values, a SOC state which is believed to optimize the network information processing3,4,7,9,10,46.

In this work, first we study the dynamics of networks of GL neurons by a very simple and transparent mean-field calculation. We find both continuous and discontinuous phase transitions depending on the average synaptic strength and parameters of the firing function Φ(V). To the best of our knowledge, these phase transitions have never been observed in standard integrate-and-fire neurons. We also find that, at the second order phase transition, the stimulated excitation of a single neuron causes avalanches of firing events (neuronal avalanches) that are similar to those observed in biological networks3,9.

Second, we present a new mechanism for SOC based on the dynamics of the neuronal gains (a parameter of the neuron probably related to the axon initial segment – AIS32,47), instead of the depression of coupling strengths (related to neurotransmitter vesicle depletion at synaptic contacts between neurons) proposed in the literature12,13,15,17. This new activity-dependent gain model is sufficient to achieve self-organized criticality, as shown both by simulation evidence and by mean-field calculations. The great advantage of this new SOC mechanism is that it is much more efficient, since we have only one adaptive parameter per neuron, instead of one per synapse.

The Model

We assume a network of N GL neurons that change states in parallel at certain sampling times with a uniform spacing Δ. Thus, the membrane potential of neuron i is modeled by a real variable Vi[t] indexed by discrete time t, an integer that represents the sampling time tΔ.

Each synapse transmits signals from some presynaptic neuron j to some postsynaptic neuron i, and has a synaptic strength wij. If neuron j fires between discrete times t and t + 1, its potential drops to VR. This event increments by wij the potential of every postsynaptic neuron i that does not fire in that interval. The potential of a non-firing neuron may also integrate an external stimulus Ii[t], which can model signals received from sources outside the network. Apart from these increments, the potential of a non-firing neuron decays at each time step towards the baseline voltage VB by a factor μ ∈ [0, 1], which models the effect of a leakage current.

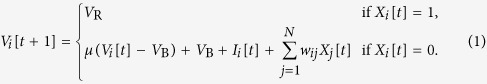

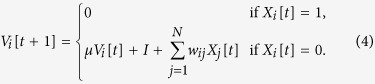

We introduce the Boolean variable Xi[t] ∈ {0, 1} which denotes whether neuron i fired between t and t + 1. The potentials evolve as:

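$$
V_i[t+1] =
\begin{cases}
V_R & \text{if } X_i[t] = 1,\\
\mu\,(V_i[t] - V_B) + V_B + I_i[t] + \sum_{j=1}^{N} w_{ij} X_j[t] & \text{if } X_i[t] = 0.
\end{cases}
\tag{1}
$$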

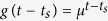

This is a special case of the general GL model21, with the filter function $g(t - t_s) = \mu^{t - t_s}$, where ts is the time of the last firing of neuron i. We have Xi[t + 1] = 1 with probability Φ(Vi[t]), which is called the firing function21,38,39,40,41,42. We also have Xi[t + 1] = 0 if Xi[t] = 1 (refractory period). The function Φ is sigmoidal, that is, monotonically increasing, with limiting values Φ(−∞) = 0 and Φ(+∞) = 1, with only one derivative maximum. We also assume that Φ(V) is zero up to some threshold potential VT (possibly −∞) and is 1 starting at some saturation potential VS (possibly +∞). If Φ is the shifted Heaviside step function Θ, Φ(V) = Θ(V − VT), we have a deterministic discrete-time LIF neuron. Any other choice for Φ(V) gives a stochastic neuron.

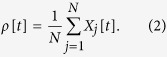

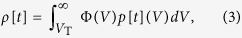

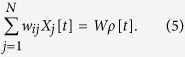

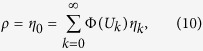

The network’s activity is measured by the fraction (or density) ρ[t] of firing neurons:

$$
\rho[t] = \frac{1}{N} \sum_{j=1}^{N} X_j[t]. \tag{2}
$$

The density ρ[t] can be computed from the probability density p[t](V) of potentials at time t:

$$
\rho[t] = \int_{-\infty}^{\infty} \Phi(V)\, p[t](V)\, dV, \tag{3}
$$

where p[t](V)dV is the fraction of neurons with potential in the range [V, V + dV] at time t.

Neurons that fire between t and t + 1 have their potential reset to VR. They contribute to p[t + 1](V) a Dirac impulse at potential VR, with amplitude (integral) ρ[t] given by equation (3). In subsequent time steps, the potentials of all neurons will evolve according to equation (1). This process modifies p[t](V) also for V ≠ VR.
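The update rule described in this section can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function name `gl_step`, the dense weight matrix `w[i][j]`, and the convention that the firing at step t is drawn from Φ(Vi[t]) before the potentials are updated are assumptions made here.

```python
import random

def gl_step(V, X_prev, w, phi, mu=0.5, I=0.0, V_B=0.0, V_R=0.0, rng=random):
    """Advance the network by one time step; returns (V_next, X)."""
    n = len(V)
    # Draw firings; neurons that fired in the previous step are refractory.
    X = [0 if X_prev[i] else int(rng.random() < phi(V[i])) for i in range(n)]
    V_next = []
    for i in range(n):
        if X[i]:
            V_next.append(V_R)  # reset after a spike
        else:
            # leak towards V_B, plus external input and synaptic increments
            syn = sum(w[i][j] * X[j] for j in range(n))
            V_next.append(mu * (V[i] - V_B) + V_B + I + syn)
    return V_next, X

# Deterministic special case: a step-like firing function (hypothetical numbers).
step = lambda v: 1.0 if v >= 0.5 else 0.0
V_next, X = gl_step([1.0, 0.0], [0, 0], [[0.0, 1.0], [1.0, 0.0]], step)
```

With the step-like Φ the first neuron fires and is reset, while the second neuron receives the increment w[1][0]; any smooth Φ makes the same loop stochastic.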

Results

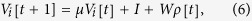

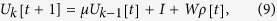

We will study only fully connected networks, where each neuron receives inputs from all the other N − 1 neurons. Since the zero of potential is arbitrary, we assume VB = 0. We also consider only the case with VR = 0, and uniform constant input Ii[t] = I. So, for these networks, equation (1) reads:

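$$
V_i[t+1] =
\begin{cases}
0 & \text{if } X_i[t] = 1,\\
\mu V_i[t] + I + \sum_{j=1}^{N} w_{ij} X_j[t] & \text{if } X_i[t] = 0.
\end{cases}
\tag{4}
$$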

Mean-field calculation

In the mean-field analysis, we assume that the synaptic weights wij follow a distribution with average W/N and finite variance. The mean-field approximation disregards correlations, so the final term of equation (1) becomes:

$$
\sum_{j=1}^{N} w_{ij} X_j[t] = W \rho[t]. \tag{5}
$$

Notice that the variance of the weights wij becomes immaterial when N tends to infinity.

Since the external input I is the same for all neurons and all times, every neuron i that does not fire between t and t + 1 (that is, with Xi[t] = 0) has its potential changed in the same way:

$$
V_i[t+1] = \mu V_i[t] + I + W \rho[t]. \tag{6}
$$

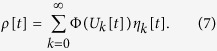

Recall that the probability density p[t](V) has a Dirac impulse at potential U0 = 0, representing all neurons that fired in the previous interval. This Dirac impulse is modified in later steps by equation (6). It follows that, once all neurons have fired at least once, the density p[t](V) will be a combination of discrete impulses with amplitudes η0[t], η1[t], η2[t], …, at potentials U0[t], U1[t], U2[t], …, such that $\sum_{k=0}^{\infty} \eta_k[t] = 1$.

The amplitude ηk[t] is the fraction of neurons with firing age k at discrete time t, that is, neurons that fired between times t − k − 1 and t − k, and did not fire between t − k and t. The common potential of those neurons, at time t, is Uk[t]. In particular, η0[t] is the fraction ρ[t − 1] of neurons that fired in the previous time step. For this type of distribution, the integral of equation (3) becomes a discrete sum:

$$
\rho[t] = \sum_{k=0}^{\infty} \Phi(U_k[t])\, \eta_k[t]. \tag{7}
$$

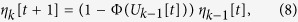

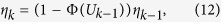

According to equation (6), the values ηk[t] and Uk[t] evolve by the equations

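$$
\eta_k[t+1] = \left(1 - \Phi(U_{k-1}[t])\right) \eta_{k-1}[t], \tag{8}
$$

$$
U_k[t+1] = \mu U_{k-1}[t] + I + W \rho[t], \tag{9}
$$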

for all k ≥ 1, with η0[t + 1] = ρ[t] and U0[t + 1] = 0.

Stationary states for general Φ and μ

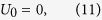

A stationary state is a density p[t](V) = p(V) of membrane potentials that does not change with time. In such a regime, quantities Uk and ηk do not depend anymore on t. Therefore, the equations (8) and (9) become the recurrence equations:

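$$
\rho = \eta_0 = \sum_{k=0}^{\infty} \Phi(U_k)\, \eta_k, \tag{10}
$$

$$
U_0 = 0, \tag{11}
$$

$$
\eta_k = \left(1 - \Phi(U_{k-1})\right) \eta_{k-1}, \tag{12}
$$

$$
U_k = \mu U_{k-1} + I + W \rho, \tag{13}
$$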

for all k ≥ 1.

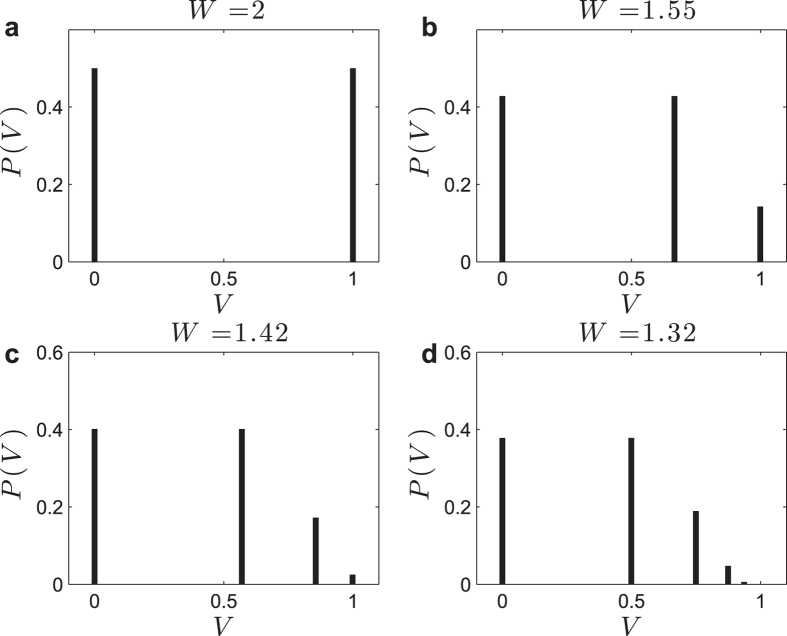

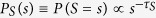

Since equations (12) are homogeneous in the ηk, the normalization condition $\sum_{k=0}^{\infty} \eta_k = 1$ must be included explicitly. So, integrating over the density p(V) leads to a discrete distribution P(V) (see Fig. 1 for a specific Φ).

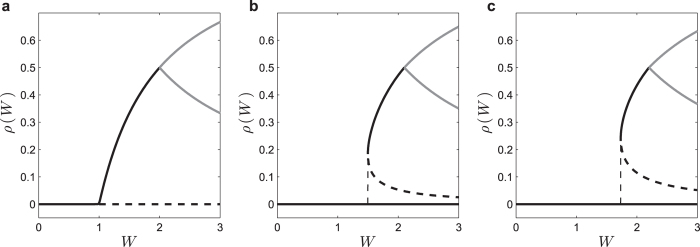

Figure 1. Examples of stationary potential distributions P(V): monomial Φ function with r = 1, Γ = 1, μ = 1/2, I = 0 case with different values of W.

(a) W2 = WB = 2, two peaks; (b) W3 = 14/9, three peaks; (c) W4 = 488/343, four peaks, (d) W∞ ≈ 1.32, infinite number of peaks with U∞ = 1. Notice that for W < W∞ all the peaks in the distribution P(V) lie at potentials Uk < 1. For WB = 2 we have η0 = η1 = 1/2, producing a bifurcation to a 2-cycle. The values of Wm = W2, W3, W4 and W∞ can be obtained analytically by imposing the condition Um−1 = 1 in equations (12–13).

Equations (10, 11, 12, 13) can be solved numerically, e.g. by simulating the evolution of the potential probability density p[t](V) according to equations (8) and (9), starting from an arbitrary initial distribution, until reaching a stable distribution (the probabilities ηk should be renormalized to unit sum after each time step, to compensate for rounding errors). Notice that this can be done for any Φ function, so this numerical solution is very general.
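The iteration just described can be sketched as follows. This is a minimal sketch under stated assumptions: the peak recurrences are taken as ηk[t + 1] = (1 − Φ(Uk−1[t])) ηk−1[t] and Uk[t + 1] = μUk−1[t] + I + Wρ[t], the distribution is truncated to `n_peaks` peaks, and the names `stationary_state` and `phi_linear` as well as the initial condition are hypothetical choices.

```python
def phi_linear(v, gamma=1.0):
    """Linear saturating firing function with threshold 0 (illustrative choice)."""
    return min(1.0, max(0.0, gamma * v))

def stationary_state(phi, W, mu, I=0.0, n_peaks=100, n_steps=2000):
    """Iterate the peak amplitudes eta_k and potentials U_k until they settle."""
    eta = [0.0] * n_peaks
    U = [0.0] * n_peaks
    eta[0], eta[1], U[1] = 0.5, 0.5, 1.0       # arbitrary initial distribution
    rho = 0.0
    for _ in range(n_steps):
        fire = [phi(u) for u in U]
        rho = sum(f * e for f, e in zip(fire, eta))          # activity at this step
        new_eta = [rho] + [(1.0 - fire[k - 1]) * eta[k - 1]
                           for k in range(1, n_peaks)]
        new_U = [0.0] + [mu * U[k - 1] + I + W * rho
                         for k in range(1, n_peaks)]
        total = sum(new_eta)                   # renormalize to unit sum
        eta = [e / total for e in new_eta]
        U = new_U
    return rho, eta, U

rho, eta, U = stationary_state(phi_linear, W=1.5, mu=0.0)
```

For μ = 0, Γ = 1 and W = 1.5 the iteration settles at ρ ≈ 1/3; peaks beyond `n_peaks` are simply dropped, which is harmless only when their total weight is negligible.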

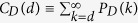

The monomial saturating Φ with μ > 0

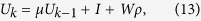

Now we consider a specific class of firing functions, the saturating monomials. This class is parametrized by a positive degree r and a neuronal gain Γ > 0. In all functions of this class, Φ(V) is 0 when V ≤ VT, and 1 when V ≥ VS, where the saturation potential is VS = VT + 1/Γ. In the interval VT < V < VS, we have:

$$
\Phi(V) = \left[\Gamma (V - V_T)\right]^{r}. \tag{14}
$$

Note that these functions can be seen as limiting cases of sigmoidal functions, and that we recover the deterministic LIF model Φ(V) = Θ(V − VT) when Γ → ∞.
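A small Python sketch of this class of firing functions may help fix ideas. It assumes that, between threshold and saturation, the monomial has the form [Γ(V − VT)]^r, which is consistent with VS = VT + 1/Γ; the name `phi_monomial` is hypothetical.

```python
def phi_monomial(v, gamma=1.0, r=1.0, v_t=0.0):
    """Saturating monomial: 0 for v <= v_t, 1 for v >= v_t + 1/gamma."""
    x = gamma * (v - v_t)
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    return x ** r

# A very large gain approaches the deterministic step at v_t.
almost_step = [phi_monomial(v, gamma=1e6) for v in (-0.01, 0.01)]
```

Here `almost_step` evaluates to `[0.0, 1.0]`, illustrating the Γ → ∞ limit mentioned above.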

For any integer p ≥ 2, there are combinations of values of VT, VS, and μ that cause the network to behave deterministically. This happens if the stationary state defined by equations (12) and (13) is such that Up−2 ≤ VT ≤ VS ≤ Up−1—that is, Φ(Uk) is either 0 or 1 for all k, so the GL model becomes equivalent to the deterministic LIF model. In such a stationary state, we have ρ = ηk = 1/p for all k < p; meaning that the neurons are divided into p groups of equal size, and each group fires every p steps, exactly. If the inequalities are strict (Up−2 < VT and VS < Up−1) then there are also many deterministic periodic regimes (p-cycles) where the p groups have slightly more or less than 1/p of all the neurons, but still fire regularly every p steps.

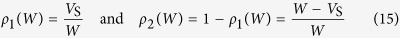

Note that, if VT = 0, such degenerate (deterministic) regimes, stationary or periodic, occur only for p = 2 and W ≥ WB where WB = 2(I + VS). The stationary regime has ρ = η0 = η1 = 1/2 and U1 = I + W/2. In the periodic regimes (2-cycles) the activity ρ[t] alternates between two values ρ′ and ρ′′ = 1 − ρ′, with ρ1(W) < ρ′ < 1/2 < ρ′′ < ρ2(W), where:

|

All these 2-cycles are marginally stable, in the sense that, if a perturbed state ρε = ρ + ε satisfies equation (15), then the new cycle ρε[t + 1] = 1 − ρε[t] is also marginally stable.

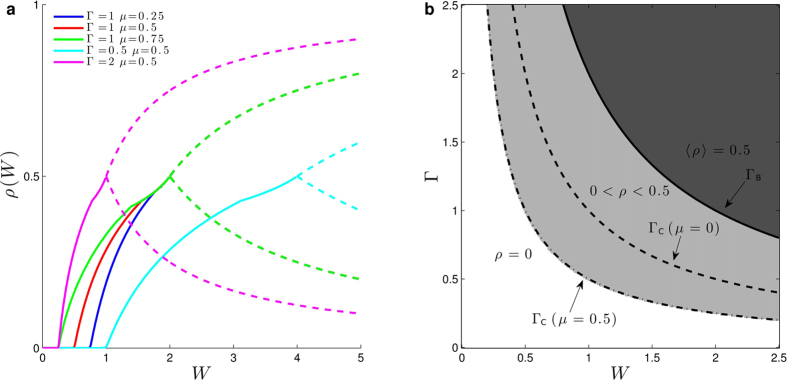

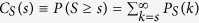

In the analysis that follows, the control parameters are W and Γ, and ρ(W, Γ) is the order parameter. We obtain numerically ρ(W, Γ) and the phase diagram (W, Γ) for several values of μ > 0, for the linear (r = 1) saturating Φ with I = VT = 0 (Fig. 2). Only the first 100 peaks (Uk, ηk) were considered, since, for the given μ and Φ, there was no significant probability density beyond that point. The same numerical method can be used for r ≠ 1, I ≠ 0, VT ≠ 0.

Figure 2. Results for μ > 0.

(a) Numerically computed ρ(W) curves for the monomial Φ with r = 1, I = VR = VT = 0, and (Γ, μ) = (1, 1/4), (1, 1/2), (1, 3/4), (1/2, 1/2), and (2, 1/2). The absorbing state ρ0 = 0 loses stability at WC and the non trivial fixed point ρ > 0 appears. At WB = 2/Γ, we have ρ = 1/2 and from there we have the fixed point ρ[t] = 1/2 and the 2-cycles with ρ[t] between the two bounds of equation (15) (dashed lines). (b) Numerically computed (Γ, W) diagram showing the critical boundaries ΓC(W) = (1 − μ)/W and the bifurcation line ΓB(W) = 2/W to 2-cycles.

Near the critical point, we obtain numerically ρ(W, μ) ≈ C(W − WC)/W, where WC(Γ) = (1 − μ)/Γ and C(μ) is a constant. So, the critical exponent is α = 1, characteristic of the mean-field directed percolation (DP) universality class3,4. The critical boundary in the (W, Γ) plane, numerically obtained, seems to be ΓC(W) = (1 − μ)/W (Fig. 2b).

Analytic results for μ = 0

Below we give results of a simple mean-field analysis in the limits N → ∞ and μ → 0. The latter implies that, at time t + 1, the neuron “forgets” its previous potential Vi[t] and integrates only the inputs I[t] + WijXj[t]. This scenario is interesting because it enables analytic solutions, yet exhibits all kinds of behaviors and phase transitions that occur with μ > 0.

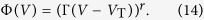

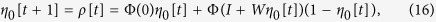

When μ = 0 and Ii[t] = I (uniform constant input), the density p[t](V) consists of only two Dirac peaks at potentials U0[t] = VR = 0 and U1[t] = I + Wρ[t − 1], with fractions η0[t] and η1[t] that evolve as:

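$$
\eta_0[t+1] = \rho[t] = \Phi(0)\, \eta_0[t] + \Phi(I + W\rho[t-1])\, \eta_1[t], \tag{16}
$$

$$
\eta_1[t+1] = 1 - \eta_0[t+1]. \tag{17}
$$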

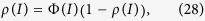

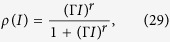

Furthermore, if the neurons cannot fire spontaneously, that is, Φ(0) = 0, then equation (16) reduces to:

$$
\eta_0[t+1] = \rho[t] = \Phi(I + W\rho[t-1])\,\left(1 - \rho[t-1]\right). \tag{18}
$$

In a stationary regime, equation (18) simplifies to:

$$
\rho = (1 - \rho)\, \Phi(I + W\rho), \tag{19}
$$

since η0 = ρ, η1 = 1 − ρ, U0 = 0, and U1 = I + Wρ. Below, all the results refer to the monomial saturating Φs given by equation (14).
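As an illustration of the μ = 0 dynamics, the sketch below iterates the one-dimensional map ρ → (1 − ρ) Φ(I + Wρ), which follows from η0 = ρ, η1 = 1 − ρ and U1 = I + Wρ when Φ(0) = 0. The function name, the initial activity and the linear Φ with Γ = 1 are assumptions chosen for the example.

```python
def iterate_activity(phi, W, I=0.0, rho0=0.1, n_steps=2000):
    """Iterate rho -> (1 - rho) * phi(I + W * rho) and return the final value."""
    rho = rho0
    for _ in range(n_steps):
        rho = (1.0 - rho) * phi(I + W * rho)
    return rho

# Linear saturating firing function with gain 1 and zero threshold.
linear = lambda v: min(1.0, max(0.0, v))

rho_active = iterate_activity(linear, W=1.5)   # above W_C = 1
rho_silent = iterate_activity(linear, W=0.8)   # below W_C = 1
```

With these values the active case settles near (W − WC)/W = 1/3, while the subcritical case decays to the absorbing state ρ = 0.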

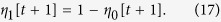

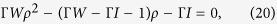

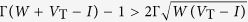

The case with r = 1, VT = 0

When r = 1, we have the linear function Φ(V) = ΓV for 0 < V < VS = 1/Γ, where V = I + Wρ. Equation (19) becomes:

$$
\Gamma W \rho^2 + (1 - \Gamma W + \Gamma I)\, \rho - \Gamma I = 0, \tag{20}
$$

with solution (Fig. 3a):

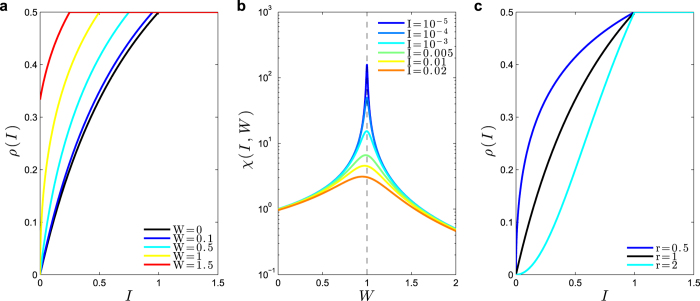

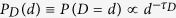

Figure 3. Network and isolated neuron responses to external input I.

(a) Network activity ρ(I, W) as a function of I for several W; (b) Susceptibility χ(I, W) as a function of W for several I. Notice the divergence χC(I) ∝ I−1/2 for small I; (c) Firing rate of an isolated neuron ρ(I, W = 0) for monomial exponents r = 0.5, 1 and 2.

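$$
\rho(\Gamma, W, I) = \frac{\Gamma W - \Gamma I - 1 + \sqrt{(\Gamma W - \Gamma I - 1)^2 + 4\Gamma^2 W I}}{2\Gamma W}. \tag{21}
$$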

For zero input we have:

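$$
\rho(W) = \left(\frac{W - W_C}{W}\right)^{\beta} \quad \text{for } W > W_C, \qquad \rho = 0 \quad \text{for } W \leq W_C, \tag{22}
$$

where WC = 1/Γ and the order parameter critical exponent is β = 1. This corresponds to a standard mean-field continuous (second order) absorbing state phase transition. This transition will be studied in detail two sections below.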

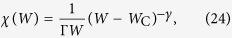

A measure of the network sensitivity to inputs (which play here the role of external fields) is the susceptibility χ = dρ/dI, which is a function of Γ, W and I (Fig. 3b):

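$$
\chi = \frac{d\rho}{dI} = \frac{\Gamma (1 - \rho)}{\sqrt{(\Gamma W - \Gamma I - 1)^2 + 4\Gamma^2 W I}}. \tag{23}
$$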

For zero external inputs, the susceptibility behaves as:

$$
\chi(W) \propto |W - W_C|^{-\gamma}, \tag{24}
$$

where we have the critical exponent γ = 1.

A very interesting result is that, for any I, the susceptibility is maximized at the critical line WC = 1/Γ, with the values:

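$$
\rho_C(I) = \frac{-\Gamma I + \sqrt{\Gamma^2 I^2 + 4\Gamma I}}{2}, \tag{25}
$$

$$
\chi_C(I) = \frac{\Gamma \left(1 - \rho_C(I)\right)}{\sqrt{\Gamma^2 I^2 + 4\Gamma I}}. \tag{26}
$$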

For I → 0 we have $\rho \propto I^{1/2}$ at the critical line. The critical exponent δ is defined by $I \propto \rho^{\delta}$ for small I, so we obtain the mean-field value δ = 2. In analogy with Psychophysics, we may call m = 1/δ = 1/2 the Stevens’s exponent of the network4.

With two critical exponents it is possible to obtain others through scaling relations. For example, notice that β, γ and δ are related by 2β + γ = β(δ + 1).

Notice that, at the critical line, the susceptibility diverges as $\chi_C \propto I^{-1/2}$ as I → 0. We will comment on the importance of the fractional Stevens’s exponent m = 1/2 (Fig. 3a) and the diverging susceptibility (Fig. 3b) for information processing in the Discussion section.
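The exponents δ = 2 and the I^(−1/2) divergence can be checked numerically. The sketch below assumes the linear case r = 1, VT = 0, for which the stationary condition ρ = (1 − ρ) Γ (I + Wρ) is a quadratic in ρ; the susceptibility is estimated by a central finite difference. Function names and step sizes are hypothetical.

```python
import math

def rho_linear(I, W, gamma=1.0):
    """Positive root of gamma*W*rho^2 + (1 - gamma*W + gamma*I)*rho - gamma*I = 0."""
    a = gamma * W
    b = 1.0 - gamma * W + gamma * I
    c = -gamma * I
    return (-b + math.sqrt(b * b - 4.0 * a * c)) / (2.0 * a)

def susceptibility(I, W, gamma=1.0, rel_step=1e-3):
    """Central finite-difference estimate of d(rho)/dI."""
    h = rel_step * I
    return (rho_linear(I + h, W, gamma) - rho_linear(I - h, W, gamma)) / (2.0 * h)

# At the critical line W = 1/gamma, quadrupling a small input should
# double the activity and halve the susceptibility.
rho_ratio = rho_linear(4e-6, 1.0) / rho_linear(1e-6, 1.0)
chi_ratio = susceptibility(1e-6, 1.0) / susceptibility(4e-6, 1.0)
```

Both ratios come out close to 2, as expected for ρ ∝ I^(1/2) and χ ∝ I^(−1/2).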

Isolated neurons

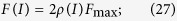

We can also analyze the behavior of the GL neuron model under the standard experiment where an isolated neuron in vitro is artificially injected with a current of constant intensity J. That corresponds to setting the external input signal I[t] of that neuron to a constant value I = JΔ/C where C is the effective capacitance of the neuron.

The firing rate of an isolated neuron can be written as:

|

where Fmax is an empirical maximum firing rate (measured in spikes per second) of a given neuron and ρ is our previous neuron firing probability per time step. With W = 0 and I > 0 in equation (19), we get:

$$
\rho = (1 - \rho)\, \Phi(I). \tag{28}
$$

The solution for the monomial saturating Φ with VT = 0 is (Fig. 3c):

$$
\rho(I) = \frac{(\Gamma I)^{r}}{1 + (\Gamma I)^{r}}, \tag{29}
$$

which is less than ρ = 1/2 only if I < 1/Γ. For any I ≥ 1/Γ the firing rate saturates at ρ = 1/2 (the neuron fires at every other step, alternating between potentials U0 = VR = 0 and U1 = I). So, for I > 0, there is no phase transition. Interestingly, equation (29), known as the generalized Michaelis-Menten function, is frequently used to fit the firing response of biological neurons to DC currents48,49.
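The response of an isolated neuron can be sketched directly from the stationary condition ρ = (1 − ρ) Φ(I), whose solution is ρ = Φ(I)/(1 + Φ(I)). The code assumes the saturating monomial with VT = 0; the name `isolated_rate` is hypothetical.

```python
def isolated_rate(I, gamma=1.0, r=1.0):
    """Firing probability per time step of an isolated neuron (W = 0, V_T = 0)."""
    phi = min(1.0, max(0.0, gamma * I)) ** r   # saturating monomial at V = I
    return phi / (1.0 + phi)

# Response curve for a few inputs, including the saturated regime I >= 1/gamma.
curve = [isolated_rate(I, gamma=1.0, r=2.0) for I in (0.0, 0.5, 1.0, 5.0)]
```

The last two entries of `curve` equal 1/2, reflecting the saturation at one spike every other time step.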

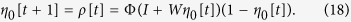

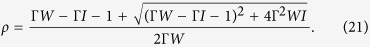

Continuous phase transitions in networks: the case with r = 1

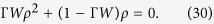

Even with I = 0, spontaneous collective activity is possible if the network suffers a phase transition. With r = 1, the stationary state condition equation (19) is:

$$
\Gamma W \rho^2 + (1 - \Gamma W)\, \rho = 0. \tag{30}
$$

The two solutions are the absorbing state ρ0 = 0 and the non-trivial state:

$$
\rho = \frac{W - W_C}{W}, \tag{31}
$$

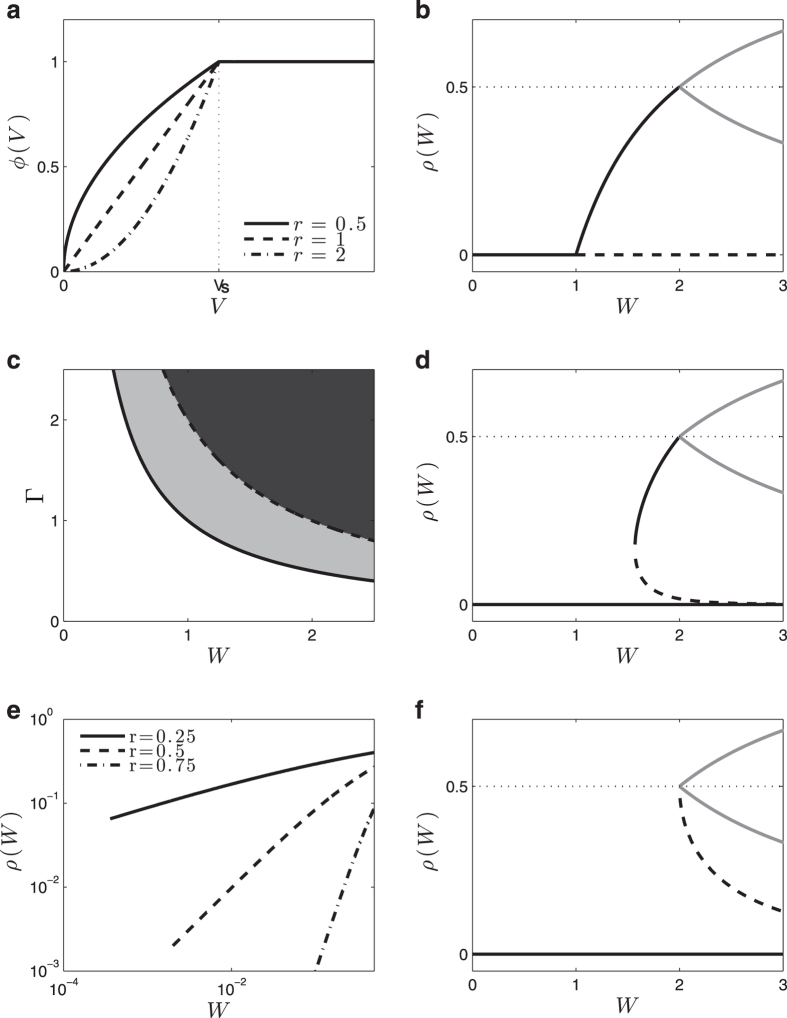

with WC = 1/Γ. Since we must have 0 < ρ ≤ 1/2, this solution is valid only for WC < W ≤ WB = 2/Γ (Fig. 4b).

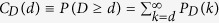

Figure 4. Firing densities (with Γ = 1) and phase diagram with μ = 0 and VT (I) = 0.

(a) Examples of monomial firing functions Φ(V) with Γ = 1 and r = 0.5, 1 and 2. (b) The ρ(W) bifurcation plot for r = 1. The absorbing state ρ0 loses stability for W > WC = 1 (dashed line). The non trivial fixed point ρ bifurcates at WB = 2/Γ = 2 into two branches (gray lines) that bound the marginally stable 2-cycles. (c) The (Γ, W) phase diagram for r = 1. Below the critical boundary Γ = ΓC(W) = 1/W the inactive state ρ0 = 0 is absorbing and stable; above that line it is also absorbing but unstable. Above the line Γ = ΓB(W) = 2/W there are only the marginally stable 2-cycles. For ΓC(W) < Γ ≤ ΓB(W) there is a single stationary regime ρ(W) = (W − WC)/W < 1/2, with WC = 1/Γ. (d) Discontinuous phase transitions for Γ = 1 with exponent r = 1.2. The absorbing state ρ0 now is stable (solid line at zero). The non trivial fixed point ρ+ starts with the value ρC at WC and bifurcates at WB, creating the boundary curves (gray) that delimit possible 2-cycles. The unstable separatrix ρ− (dashed line) also appears at WC. (e) Ceaseless activity (no phase transitions) for r = 0.25, 0.5 and 0.75. The activity approaches zero (as W → 0) as a power law. (f) In the limiting case r = 2 we do not have a ρ > 0 fixed point, but only the stable ρ0 = 0 (black), the 2-cycles region (gray) and the unstable separatrix (traces).

This solution describes a stationary state where a fraction 1 − ρ of the neurons are at potential U1 = W − WC. The neurons that will fire in the next step are a fraction Φ(U1) of those, which are again a fraction ρ of the total. For any W > WC, the state ρ0 = 0 is unstable: any small perturbation of the potentials causes the network to converge to the active stationary state above. For W < WC, the solution ρ0 = 0 is stable and absorbing. In the ρ(W) plot, the locus of stationary regimes defined by equation (31) bifurcates at W = WB into the two bounds of equation (15) that delimit the 2-cycles (Fig. 4b).

So, at the critical boundary W = 1/Γ, we have a standard continuous absorbing state transition ρ(W) ∝ (W − WC)α with a critical exponent α = 1, which also can be written as ρ(Γ) ∝ (Γ − ΓC)α. In the (Γ, W) plane, the phase transition corresponds to a critical boundary ΓC(W) = 1/W, below the 2-cycle phase transition ΓB(W) = 2/W (Fig. 4c).

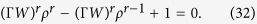

Discontinuous phase transitions in networks: the case with r > 1

When r > 1 and W ≤ WB = 2/Γ, the stationary state condition is:

$$
(\Gamma W)^{r} \rho^{r} - (\Gamma W)^{r} \rho^{r-1} + 1 = 0. \tag{32}
$$

This equation has a non trivial solution ρ+ only when 1 ≤ r ≤ 2 and WC(r) ≤ W ≤ WB, for a certain WC(r) > 1/Γ. In this case, at W = WC(r), there is a discontinuous (first-order) phase transition to a regime with activity ρ = ρC(r) ≤ 1/2 (Fig. 4d). It turns out that ρC(r) → 0 as r → 1, recovering the continuous phase transition in that limit. For r = 2, the solution to equation (32) is a single point ρ(WC) = ρC = 1/2 at WC = 2/Γ = WB (Fig. 4f).

Notice that, in the linear case, the fixed point ρ0 = 0 is unstable for W > 1 (Fig. 4b). This occurs because the separatrix ρ− (trace lines, Fig. 4d), for r → 1, collapses onto the ρ0 point, so that the latter loses its stability.
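The onset of the discontinuous transition can be located with a short calculation, sketched here under stated assumptions (I = 0, VT = 0, and activity low enough that Φ is not saturated). Dividing the stationary condition ρ = (1 − ρ)(ΓWρ)^r by ρ gives (ΓW)^r ρ^(r−1) (1 − ρ) = 1; the left side is largest at ρ = (r − 1)/r, so a nonzero solution first appears when that maximum reaches 1. This derivation and the function names are not taken from the original text.

```python
def critical_point(r, gamma=1.0):
    """Return (W_C, rho_C) at the onset of the active branch, for 1 <= r <= 2."""
    rho_c = (r - 1.0) / r                          # maximizer of rho^(r-1) * (1 - rho)
    g_max = rho_c ** (r - 1.0) * (1.0 - rho_c)
    w_c = (1.0 / g_max) ** (1.0 / r) / gamma
    return w_c, rho_c

def residual(rho, W, r, gamma=1.0):
    """(1 - rho) * (gamma*W*rho)^r - rho; zero at a stationary state."""
    return (1.0 - rho) * (gamma * W * rho) ** r - rho

w_c, rho_c = critical_point(1.2)
```

For r = 2 this gives WC = 2/Γ and ρC = 1/2, and for r → 1 it gives ρC → 0 and WC → 1/Γ, in agreement with the limits quoted above.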

Ceaseless activity: the case with r < 1

When r < 1, there is no absorbing solution ρ0 = 0 to equation (32). In the W → 0 limit we get ρ(W) = (ΓW)r/(1−r). These power laws mean that ρ > 0 for any W > WC(r) = 0 (Fig. 4e). We recover the second order transition WC(r = 1) = 1/Γ when r → 1 in equation (32). Interestingly, this ceaseless activity ρ > 0 for any W > 0 seems to be similar to that found by Larremore et al.42 with a μ = 0 linear saturating model. That ceaseless activity, observed even with r = 1, is perhaps due to the presence of inhibitory neurons in the model of Larremore et al.

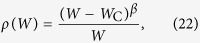

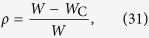

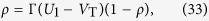

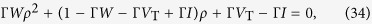

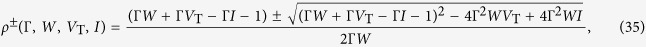

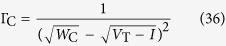

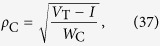

Discontinuous phase transitions in networks: the case with VT > 0 and I > 0

The standard IF model has VT > 0. If we allow this feature in our models we find a new ingredient that produces first order phase transitions. Indeed, in this case, if U1 = Wρ + I < VT then we have a single peak at U0 = 0 with η0 = 1, which means we have a silent state. When U1 = Wρ + I > VT, we have a peak with height η1 = 1 − ρ and ρ = η0 = Φ(U1)η1.

For the linear monomial model this leads to the equations:

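$$
\rho = \Gamma\, (1 - \rho)\, (W\rho + I - V_T), \tag{33}
$$

$$
\Gamma W \rho^2 + \left(1 - \Gamma W - \Gamma (V_T - I)\right) \rho + \Gamma (V_T - I) = 0, \tag{34}
$$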

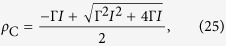

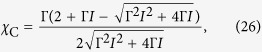

with the solution:

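$$
\rho^{\pm} = \frac{\Gamma W + \Gamma (V_T - I) - 1 \pm \sqrt{\left(\Gamma W + \Gamma (V_T - I) - 1\right)^2 - 4\Gamma^2 W (V_T - I)}}{2\Gamma W}, \tag{35}
$$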

where ρ+ is the non trivial fixed point and ρ− is the unstable fixed point (separatrix). These solutions only exist for ΓW values such that $\left(\Gamma W + \Gamma (V_T - I) - 1\right)^2 \geq 4\Gamma^2 W (V_T - I)$. This produces the condition:

$$
\Gamma W \geq \Gamma_C W_C = \left(1 + \sqrt{\Gamma (V_T - I)}\right)^2, \tag{36}
$$

which defines a first order critical boundary. At the critical boundary the density of firing neurons is:

$$
\rho_C = \frac{\sqrt{\Gamma (V_T - I)}}{1 + \sqrt{\Gamma (V_T - I)}}, \tag{37}
$$

which is nonzero (discontinuous) for any VT > I. These transitions can be seen in Fig. 5. The solutions given by equations (35) and (37) are valid only for ρC < 1/2 (2-cycle bifurcation). This implies the maximal value VT = WC /4 + I.
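The first-order boundary for VT > I can be checked with a short sketch. It assumes the linear model with stationary condition ρ = Γ(1 − ρ)(Wρ + I − VT) and solves the resulting quadratic; the closed forms for the boundary and the jump are derived from the vanishing of the discriminant. Function names are hypothetical.

```python
import math

def fixed_points(W, v_t, I=0.0, gamma=1.0):
    """Return (rho_minus, rho_plus), or None when no active fixed point exists."""
    theta = v_t - I
    a = gamma * W
    b = 1.0 - gamma * W - gamma * theta
    c = gamma * theta
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    return (-b - root) / (2.0 * a), (-b + root) / (2.0 * a)

def critical_boundary(v_t, I=0.0, gamma=1.0):
    """Return (W_C, rho_C) where the discriminant vanishes (requires v_t >= I)."""
    s = math.sqrt(gamma * (v_t - I))
    return (1.0 + s) ** 2 / gamma, s / (1.0 + s)

w_c, rho_c = critical_boundary(0.1)
below = fixed_points(0.99 * w_c, 0.1)   # no active state yet
above = fixed_points(1.01 * w_c, 0.1)   # separatrix and active state
```

Just below WC only the silent state exists; just above it the two roots bracket ρC. For VT − I = 1/Γ the sketch gives ρC = 1/2 and ΓWC = 4, consistent with the maximal value VT = WC/4 + I.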

Figure 5. Phase transitions for VT > 0: monomial model with μ = 0, r = 1, Γ = 1 and thresholds VT = 0, 0.05 and 0.1.

Here the solid black lines represent the stable fixed points, dashed black lines represent unstable fixed points and grey lines correspond to the marginally stable boundaries for 2-cycles regime. The discontinuity ρC goes to zero for VT → 0.

Neuronal avalanches

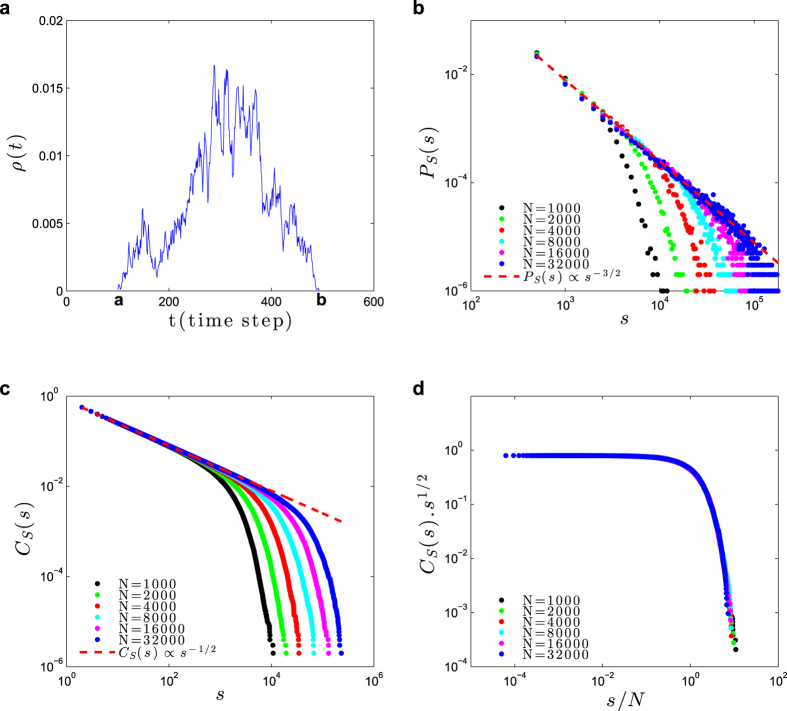

Firing avalanches in neural networks have attracted significant interest because of their possible connection to efficient information processing3,4,5,7,9. Through simulations, we studied the critical point WC = 1, ΓC = 1 (with μ = 0) in search for neuronal avalanches3,9 (Fig. 6).

Figure 6. Avalanche size statistics in the static model.

Simulations at the critical point WC = 1, ΓC = 1 (with μ = 0). (a) Example of avalanche profile ρ[t] at the critical point. (b) Avalanche size distribution PS(s) ≡ P(S = s), for network sizes N = 1000, 2000, 4000, 8000, 16000 and 32000. The dashed reference line is proportional to $s^{-\tau_S}$, with τS = 3/2. (c) Complementary cumulative distribution $C_S(s) = \sum_{k=s}^{\infty} P_S(k)$. Being an integral of PS(s), its power law exponent is −τS + 1 = −1/2 (dashed line). (d) Data collapse (finite-size scaling) for $C_S(s)\, s^{1/2}$ versus $s/N^{c_S}$, with the cutoff exponent cS = 1.

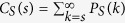

An avalanche that starts at discrete time t = a and ends at t = b has duration d = b − a and size $s = N \sum_{t=a}^{b} \rho[t]$ (Fig. 6a). By using the notation S for a random variable and s for its numerical value, we observe a power law avalanche size distribution $P_S(s) \propto s^{-\tau_S}$, with the mean-field exponent τS = 3/2 (Fig. 6b)3,9,13. Since the distribution PS(s) is noisy for large s, for further analysis we use the complementary cumulative function $C_S(s) = \sum_{k=s}^{\infty} P_S(k)$ (which gives the probability of having an avalanche with size equal to or greater than s) because it is very smooth and monotonic (Fig. 6c). Data collapse gives a finite-size scaling exponent cS = 1 (Fig. 6d)15,17.

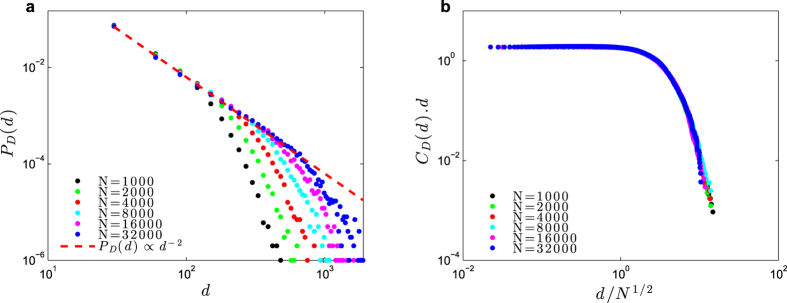

We also observed a power law distribution for avalanche duration, $P_D(d) \propto d^{-\tau_D}$ with τD = 2 (Fig. 7a). The complementary cumulative distribution is $C_D(d) = \sum_{k=d}^{\infty} P_D(k)$. From data collapse, we find a finite-size scaling exponent cD = 1/2 (Fig. 7b), in accord with the literature13.
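A rough way to reproduce such statistics is sketched below for the μ = 0 fully connected network with linear Φ. It is only an illustration under stated assumptions: each avalanche is seeded by a single firing neuron, neurons that fired in the previous step are refractory, the duration is counted as the number of active steps, and the names `avalanche` and `ccdf` are hypothetical. The network is far smaller than those in the figures.

```python
import random

def avalanche(N, W=1.0, gamma=1.0, rng=random):
    """Simulate one avalanche; returns (size, duration)."""
    n_fired, size, duration = 1, 1, 1          # seeded by a single firing neuron
    while n_fired > 0:
        p = min(1.0, gamma * W * n_fired / N)  # linear Phi at potential W * rho
        eligible = N - n_fired                 # neurons that just fired are refractory
        n_fired = sum(1 for _ in range(eligible) if rng.random() < p)
        size += n_fired
        if n_fired:
            duration += 1
    return size, duration

def ccdf(samples, s):
    """Fraction of samples greater than or equal to s."""
    return sum(1 for x in samples if x >= s) / len(samples)

rng = random.Random(1)
sizes = [avalanche(200, rng=rng)[0] for _ in range(200)]
tail = [ccdf(sizes, s) for s in (1, 2, 4, 8, 16)]
```

Plotting `tail` against s on log-log axes for increasing N and many more avalanches is one way to compare with the s^(−1/2) reference line; this small run is too noisy to estimate exponents.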

Figure 7. Avalanche duration statistics in the static model.

Simulations at the critical point WC = 1, ΓC = 1 (μ = 0) for network sizes N = 1000, 2000, 4000, 8000, 16000 and 32000: (a) Probability distribution PD(d) ≡ P(D = d) for avalanche duration d. The dashed reference line is proportional to $d^{-\tau_D}$, with τD = 2. (b) Data collapse $C_D(d)\, d$ versus $d/N^{c_D}$, with the cutoff exponent cD = 1/2. The complementary cumulative function $C_D(d) = \sum_{k=d}^{\infty} P_D(k)$, being an integral of PD(d), has power law exponent −τD + 1 = −1.

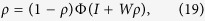

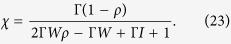

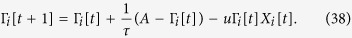

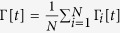

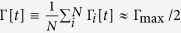

The model with dynamic parameters

The results of the previous section were obtained by fine-tuning the network at the critical point ΓC = WC = 1. Given the conjecture that the critical region presents functional advantages, a biological model should include some homeostatic mechanism capable of tuning the network towards criticality. Without such a mechanism, we cannot truly say that the network self-organizes toward the critical regime.

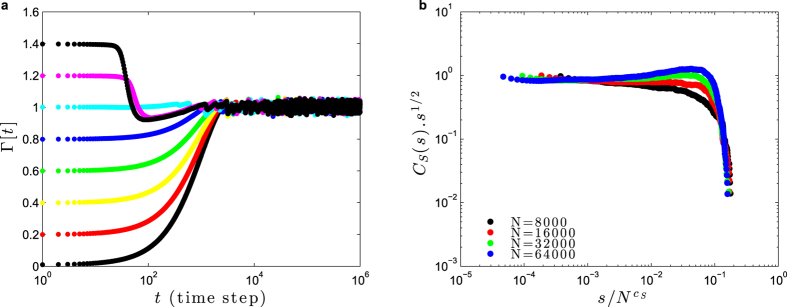

However, observing that the relevant parameter for criticality in our model is the critical boundary ΓCWC = 1, we propose to work with dynamic gains Γi[t] while keeping the synapses Wij fixed. The idea is to reduce the gain Γi[t] when the neuron fires, and let the gain slowly recover towards a higher resting value after that:

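$$\Gamma_i[t+1] = \Gamma_i[t] + \frac{1}{\tau}\left(A - \Gamma_i[t]\right) - u\,\Gamma_i[t]\,X_i[t]. \tag{38}$$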

Now, the factor τ is related to the characteristic recovery time of the gain, A is the asymptotic resting gain, and u ∈ [0, 1] is the fraction of gain lost due to the firing. This model is biologically plausible and can be related to an activity-dependent decrease and recovery of the firing probability at the AIS47. Our dynamic Γi[t] mimics the well-known phenomenon of spike frequency adaptation18,19.
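The gain dynamics can be written as a one-line vectorized update. This is a minimal sketch, assuming the rule Γi[t+1] = Γi[t] + (A − Γi[t])/τ − uΓi[t]Xi[t], which follows the verbal description above (depression by a fraction u on firing, slow recovery toward A). The function name and default values are illustrative, and NumPy is assumed.

```python
import numpy as np

def update_gains(gains, fired, tau=1000.0, a=1.1, u=1.0):
    """Advance the neuronal gains by one time step.

    gains: array with the current gains Gamma_i[t].
    fired: boolean array with the firing indicators X_i[t].
    Every gain relaxes toward `a` at rate 1/tau; a neuron that fired
    additionally loses the fraction `u` of its current gain.
    """
    gains = np.asarray(gains, dtype=float)
    fired = np.asarray(fired, dtype=bool)
    return gains + (a - gains) / tau - u * gains * fired
```

With u = 1 a firing neuron has its gain reset almost to zero, and a silent neuron needs a time of order τ to recover, which is what makes the gain act as a slow negative feedback on activity.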

Figure 8a shows a simulation with all-to-all coupled networks with N neurons and, for simplicity, Wij = W. We observe that the average gain $\Gamma[t] = \frac{1}{N}\sum_{i=1}^{N} \Gamma_i[t]$ seems to converge toward the critical value ΓC(W) = 1/W = 1, starting from different Γ[0] ≠ 1. As the network converges to the critical region, we observe power-law avalanche size distributions with exponent −3/2 leading to a cumulative function $C_S(s) \propto s^{-1/2}$ (Fig. 8b). However, we also observe supercritical bumps for large s and N, meaning that the network is in a slightly supercritical state.

Figure 8. Self-organization with dynamic neuronal gains.

Simulations of a network of GL neurons with fixed Wij = W = 1, u = 1, A = 1.1 and τ = 1000 ms. The dynamic gains Γi[t] start with Γi[0] uniformly distributed in [0, Γmax]. The average initial condition is $\Gamma[0] \equiv \frac{1}{N}\sum_{i=1}^{N} \Gamma_i[0] \approx \Gamma_{\max}/2$, which produces the different initial conditions Γ[0]. (a) Self-organization of the average gain Γ[t] over time. The horizontal dashed line marks the value ΓC = 1. (b) Data collapse for $C_S(s)\,s^{1/2}$ versus $s/N^{c_S}$ for several N, with the cutoff exponent $c_S = 1$.

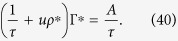

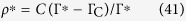

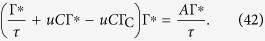

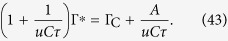

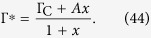

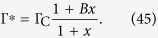

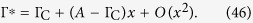

This empirical evidence is supported by a mean-field analysis of equation (38). Averaging over the sites, we have for the average gain:

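$$\Gamma[t+1] = \Gamma[t] + \frac{1}{\tau}\left(A - \Gamma[t]\right) - u\,\rho[t]\,\Gamma[t]. \tag{39}$$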

In the stationary state, we have Γ[t + 1] = Γ[t] = Γ*, so:

$$\frac{1}{\tau}\left(A - \Gamma^*\right) = u\,\rho^*\,\Gamma^*. \tag{40}$$

But we have the relation

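$$\rho^* = C\,\frac{\Gamma^* - \Gamma_C}{\Gamma^*} \tag{41}$$

near the critical region, where C is a constant that depends on Φ(V) and μ; for example, C = 1 for the linear monomial Φ with μ = 0. So:

$$\frac{1}{\tau}\left(A - \Gamma^*\right) = u\,C\,\frac{\Gamma^* - \Gamma_C}{\Gamma^*}\,\Gamma^*. \tag{42}$$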

Eliminating the common factor Γ*, and dividing by uC, we have:

$$\Gamma^* - \Gamma_C = \frac{1}{uC\tau}\left(A - \Gamma^*\right). \tag{43}$$

Now, call x = 1/(uCτ). Then, we have:

$$\Gamma^* = \frac{\Gamma_C + xA}{1 + x}. \tag{44}$$

The fine-tuning solution is to set A = ΓC by hand, which leads to Γ* = ΓC independent of x. This fine-tuning solution should not be allowed in a true SOC scenario. So, suppose that A = BΓC. Then, we have:

$$\Gamma^* = \Gamma_C\,\frac{1 + xB}{1 + x}. \tag{45}$$

Now we see that to have a critical or supercritical state (where equation (41) holds) we must have B > 1, otherwise we fall in the subcritical state Γ* < ΓC where ρ* = 0 and our mean-field calculation is not valid. A first order approximation leads to:

$$\Gamma^* \approx \Gamma_C\left[1 + (B - 1)\,x\right]. \tag{46}$$

This mean-field calculation shows that, if x → 0, we obtain a SOC state Γ* → ΓC. However, the strict case x → 0 would require a scaling $\tau = O(N^a)$ with an exponent a > 0, as done previously for dynamic synapses12,13,15,17.

If we want to avoid the non-biological scaling $\tau(N) = O(N^a)$, we can instead use biologically reasonable parameters like τ ∈ [10, 1000] ms, u ∈ [0.1, 1], C = 1 and A ∈ [1.1, 2]ΓC. In particular, if τ = 1000 ms, u = 1 and A = 1.1, we have x = 0.001 and:

$$\Gamma^* \approx 1.0001\,\Gamma_C. \tag{47}$$

Even a more conservative value τ = 100 ms gives Γ* ≈ 1.001ΓC. Although this is not perfect SOC10, the result is entirely sufficient to explain power law neuronal avalanches. We call this phenomenon self-organized supercriticality (SOSC), where the supercriticality can be very small. We must still determine the volume of parameter space (τ, A, u) where the SOSC phenomenon holds. In the case of dynamic synapses Wij[t], this parametric volume is very large15,17 and we conjecture that the same occurs for the dynamic gains Γi[t]. This shall be studied in detail in another paper.
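The numbers quoted above can be checked with a few lines of code. The sketch below assumes the stationary solution Γ* = (ΓC + xA)/(1 + x) with x = 1/(uCτ), and compares it with a direct iteration of the averaged gain map using ρ = C(Γ − ΓC)/Γ above ΓC and ρ = 0 below it. Using the near-critical relation for ρ at every step is a simplifying assumption of this sketch, and the function names are hypothetical.

```python
def stationary_gain(a, u, c, tau, gamma_c=1.0):
    """Mean-field stationary gain (gamma_c + x * a) / (1 + x), x = 1/(u c tau)."""
    x = 1.0 / (u * c * tau)
    return (gamma_c + x * a) / (1.0 + x)

def iterate_mean_field(gamma0, a, u, c, tau, gamma_c=1.0, steps=10_000):
    """Iterate the averaged gain map starting from gamma0.

    Activity is taken as rho = c * (gamma - gamma_c) / gamma when the gain
    is above gamma_c, and as zero (absorbing state) otherwise.
    """
    gamma = gamma0
    for _ in range(steps):
        rho = c * (gamma - gamma_c) / gamma if gamma > gamma_c else 0.0
        gamma = gamma + (a - gamma) / tau - u * rho * gamma
    return gamma

# Parameters quoted in the text: tau = 1000, u = 1, C = 1, A = 1.1, Gamma_C = 1.
gamma_star = stationary_gain(a=1.1, u=1.0, c=1.0, tau=1000.0)
gamma_iter = iterate_mean_field(0.5, a=1.1, u=1.0, c=1.0, tau=1000.0)
```

Starting below ΓC, the iterated gain first recovers slowly toward A (no activity, so no depression) and then settles just above ΓC once activity sets in, which is the self-organization described in the text.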

Discussion

Stochastic model

The stochastic neuron of Galves and Löcherbach21,41 is an interesting element for studies of networks of spiking neurons because it enables exact analytic results and simple numerical calculations. While the LSIF models of Soula et al.34 and Cessac35,36,37 introduce stochasticity in the neuron’s behavior by adding noise terms to its potential, the GL model is agnostic about the origin of noise and randomness (which can be a good thing when several noise sources are present). All the random behavior is grouped in the single firing function Φ(V).

Phase transitions

Networks of GL neurons display a variety of dynamical states with interesting phase transitions. We looked for stationary regimes in such networks, for some specific firing functions Φ(V) with no spontaneous activity at the baseline potential (that is, with Φ(0) = 0 and I = 0). We studied the changes in those regimes as a function of the mean synaptic weight W and mean neuronal gain Γ. We found basically three kinds of phase transition, depending on the behavior of $\Phi(V) \propto V^r$ for low V:

r < 1: A ceaseless dynamic regime with no phase transitions (WC = 0) similar to that found by Larremore et al.42;

r = 1: A continuous (second order) absorbing state phase transition in the Directed Percolation universality class usual in SOC models2,3,10,15,17;

r > 1: Discontinuous (first order) absorbing state transitions.

We also observed discontinuous phase transitions for any r > 0 when the neurons have a firing threshold VT > 0.

The deterministic LIF neuron models, which do not have noise, do not seem to allow these kinds of transitions27,30,31. The model studied by Larremore et al.42 is equivalent to the GL model with monomial saturating firing function with r = 1, VT = 0, μ = 0 and Γ = 1. They did not report any phase transition (perhaps because of the effect of inhibitory neurons in their network), but found a ceaseless activity very similar to what we observed with r < 1.

Avalanches

In the case of second-order phase transitions (Φ(0) = 0, r = 1, VT = 0), we detected firing avalanches at the critical boundary ΓC = 1/W whose size and duration power law distributions present the standard mean-field exponents τS = 3/2 and τD = 2. We observed very good finite-size scaling and data collapse behavior, with finite-size exponents cS = 1 and cD = 1/2.

Maximal susceptibility and optimal dynamic range at criticality

Maximal susceptibility means maximal sensitivity to inputs, in particular to weak inputs, which seems to be an interesting property in biological terms. So, this is a new example of optimization of information processing at criticality. We also observed, for small I, the behavior $\rho(I) \propto I^m$ with a fractional Stevens’s exponent m = 1/δ = 1/2. Fractional Stevens’s exponents maximize the network dynamic range since, outside criticality, we have only a proportional input-output behavior ρ(I) ∝ I (see ref. 4). As an example, in non-critical systems, an input range of 1–10000 spikes/s, arriving at the neurons due to their extensive dendritic arbors, must be mapped onto an output range of 1–10000 spikes/s in each neuron, which is biologically impossible because neuronal firing rates do not span four orders of magnitude. However, at criticality, since $\rho \propto \sqrt{I}$, a similar input range needs to be mapped only to an output range of 1–100 spikes/s, which is biologically possible. Optimal dynamic range and maximal susceptibility to small inputs constitute prime biological motivations for neuronal networks to self-organize toward criticality.
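The range compression in this example is simple arithmetic and can be made explicit. The sketch below assumes a pure power-law response ρ = I^m with the proportionality constant set to 1, which is an illustrative simplification; the function names are hypothetical.

```python
import math

def output_range(input_min, input_max, m):
    """Map an input interval through rho = I**m (unit prefactor assumed)."""
    return input_min**m, input_max**m

def decades(low, high):
    """Number of orders of magnitude spanned by the interval [low, high]."""
    return math.log10(high / low)

critical = output_range(1.0, 10000.0, 0.5)   # Stevens exponent m = 1/2
linear = output_range(1.0, 10000.0, 1.0)     # proportional response, m = 1
```

With m = 1/2 the four decades of input are compressed into two decades of output, whereas the proportional response with m = 1 leaves all four decades to be covered by the firing rate.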

Self-organized criticality

One way to achieve this goal is to use dynamical synapses Wij[t], in a way that mimics the loss of strength after a synaptic discharge (presumably due to neurotransmitter vesicles depletion), and the subsequent slow recovery12,13,15,17:

$$W_{ij}[t+1] = W_{ij}[t] + \frac{1}{\tau}\left(A - W_{ij}[t]\right) - u\,W_{ij}[t]\,X_j[t].$$

The parameters are the synaptic recovery time τ, the asymptotic value A, and the fraction u of synaptic weight lost after firing. This synaptic dynamics has been examined in refs 12, 13, 15 and 17. For our all-to-all coupled network, we have N(N − 1) dynamic equations for the weights Wij. This is a huge number, for example $O(10^8)$ equations, even for a moderate network of $N = 10^4$ neurons15,17. The possibility of well-behaved SOC in bulk dissipative systems with loading is discussed in refs 10, 13 and 50. Further considerations for systems with conservation on the average at the stationary state, as occurs in our model, are made in refs 15 and 17.

Inspired by the presence of the critical boundary, we proposed a new mechanism for short-scale neural network plasticity, based on dynamic neuron gains Γi[t] instead of the above dynamic synaptic weights. This new mechanism is biologically plausible, probably related to an activity-dependent firing probability at the axon initial segment (AIS)32,47, and was found to be sufficient to self-organize the network near the critical region. We obtained good data collapse and finite-size behavior for the $P_S(s)$ distributions.

The great advantage of this new SOC mechanism is its computational efficiency: when simulating N neurons with K synapses each, there are only N dynamic equations for the gains Γi[t], instead of NK equations for the synaptic weights Wij[t]. Notice that, for the all-to-all coupling network studied here, this means $O(N^2)$ equations for dynamic synapses but only $O(N)$ equations for dynamic gains. This makes a huge difference for the network sizes that can be simulated.
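To make the difference concrete, the count of dynamic variables can be tabulated for a given network size. This is only an illustrative sketch: the function name is hypothetical, and the memory estimate assumes one 8-byte floating-point number per variable.

```python
def dynamic_variable_counts(n, bytes_per_value=8):
    """Count dynamic equations in an all-to-all network of n neurons
    without self-connections, and estimate the memory they occupy."""
    gains = n                 # one gain per neuron
    synapses = n * (n - 1)    # one weight per ordered pair i != j
    return {
        "gains": gains,
        "synapses": synapses,
        "gains_bytes": gains * bytes_per_value,
        "synapses_bytes": synapses * bytes_per_value,
    }

counts = dynamic_variable_counts(10**4)
```

For N = 10^4 this gives 10^4 gain equations against 99,990,000 synaptic equations, that is, roughly 80 kB versus 800 MB of state under the stated assumption.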

We stress that, since we used a finite τ, the criticality is not perfect (Γ*/ΓC ∈ [1.001; 1.01]). So, we called it a self-organized supercriticality (SOSC) phenomenon. Interestingly, SOSC would be a realization of Turing’s intuition that the best brain operating point is slightly supercritical1.

We speculate that this slight supercriticality could explain why humans are so prone to supercritical pathological states like epilepsy3 (prevalence 1.7%) and mania (prevalence 2.6% in the population). Our mechanism suggests that such pathological states arise from small gain depression u or small gain recovery time τ. These parameters are experimentally related to firing rate adaptation and perhaps our proposal could be experimentally studied in normal and pathological tissues.

We also conjecture that this supercriticality in the whole network could explain the Subsampling Paradox in neuronal avalanches: since the initial experimental protocols9,10, critical power laws have been seen when using arrays of Ne = 32–512 electrodes, which is a very small number compared to the full biological network size of $N = O(10^6)$ to $O(10^9)$ neurons. This situation, Ne ≪ N, has been called subsampling51,52,53.

The paradox occurs because models that present good power laws for avalanches measured over the total number of neurons N, under subsampling present only exponential tails or log-normal behaviors53. No model, to the best of our knowledge, has solved this paradox10. Our dynamic gains, which produce supercritical states like Γ* = 1.01ΓC, could be a solution to the paradox if the supercriticality in the whole network, described by a power law with a supercritical bump for large avalanches, turns out to be described by an apparent pure power law under subsampling. This possibility will be fully explored in another paper.

Directions for future research

Future research could investigate other network topologies and firing functions, heterogeneous networks, the effect of inhibitory neurons30,42, and network learning. The study of self-organized supercriticality (and subsampling) with GL neurons and dynamic neuron gains is particularly promising.

Methods

Numerical Calculations

All numerical calculations were done using MATLAB. The simulation codes were written in Fortran90 and C++11. The avalanche statistics were obtained by simulating the evolution of finite networks of N neurons, with uniform synaptic strengths Wij = W (Wii = 0), linear monomial Φ(V) (r = 1) and critical parameter values WC = 1 and ΓC = 1. Each avalanche was started with all neuron potentials Vi[0] = VR = 0 and forcing the firing of a single random neuron i by setting Xi[0] = 1.

In contrast to standard integrate-and-fire12,13 or automata networks4,15,17, stochastic networks can fire even after intervals with no firing (ρ[t] = 0) because membrane voltages V[t] are not necessarily zero and Φ(V) can produce new delayed firings. So, our criterion to define avalanches is slightly different from previous literature: the network was simulated according to equation (1) until all potentials had decayed to values so low that further spontaneous firing would not be expected to occur for thousands of steps, which defines a stop time. Then, the total number of firings S is counted from the first firing up to this stop time.

The correct finite-size scaling for avalanche duration is obtained by defining the duration as D = Dbare + 5 time steps, where Dbare is the measured duration in the simulation. These extra five time steps probably arise from the new definition of avalanche used for these stochastic neurons.
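The procedure can be illustrated with a short simulation of a single avalanche. This is a minimal sketch and not the authors' Fortran90/C++11 code. It assumes μ = 0, I = 0, VR = 0, a saturating linear firing function Φ(V) = min(1, ΓV), and that a neuron that did not fire receives the input (W/N) times the number of neurons that fired in the previous step; this normalization is an assumption of the sketch. With μ = 0 all potentials vanish after one silent step, so the stop criterion reduces to the first step without firings. The returned duration is the bare number of active steps, without the five extra steps mentioned above.

```python
import numpy as np

def simulate_avalanche(n, w=1.0, gamma=1.0, rng=None, max_steps=100_000):
    """Simulate one avalanche and return (size, bare_duration)."""
    rng = np.random.default_rng() if rng is None else rng
    fired = np.zeros(n, dtype=bool)
    fired[rng.integers(n)] = True             # force one random neuron to fire
    size, duration = 1, 1
    for _ in range(max_steps):
        # Potential of the neurons that did not fire: summed synaptic input.
        v = np.full(n, w * fired.sum() / n)
        v[fired] = 0.0                        # neurons that fired reset to V_R = 0
        p_fire = np.clip(gamma * v, 0.0, 1.0)  # firing probability Phi(V)
        fired = rng.random(n) < p_fire
        n_fired = int(fired.sum())
        if n_fired == 0:                      # silent step: all potentials are zero
            break
        size += n_fired
        duration += 1
    return size, duration

rng = np.random.default_rng(0)
avalanches = [simulate_avalanche(1000, rng=rng) for _ in range(200)]
```

Collecting the sizes and durations over many avalanches and several values of `n` gives the samples from which the distributions and the data collapse can be estimated.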

Additional Information

How to cite this article: Brochini, L. et al. Phase transitions and self-organized criticality in networks of stochastic spiking neurons. Sci. Rep. 6, 35831; doi: 10.1038/srep35831 (2016).

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Acknowledgments

This paper results from research activity on the FAPESP Center for Neuromathematics (FAPESP grant 2013/07699-0). OK and AAC also received support from Núcleo de Apoio à Pesquisa CNAIPS-USP and FAPESP (grant 2016/00430-3). L.B., J.S. and A.C.R. also received CNPq support (grants 165828/2015-3, 310706/2015-7 and 306251/2014-0). We thank A. Galves for suggestions and revision of the paper, and M. Copelli and S. Ribeiro for discussions.

Footnotes

Author Contributions L.B. and A.d.A.C. performed the simulations and prepared all the figures. O.K. and J.S. made the analytic calculations. O.K., J.S. and L.B. wrote the paper. M.A. and A.C.R. contributed with ideas, the writing of the paper and citations to the literature. All authors reviewed the manuscript.

References

1. Turing A. M. Computing machinery and intelligence. Mind 59, 433–460 (1950).

2. Chialvo D. R. Emergent complex neural dynamics. Nature physics 6, 744–750 (2010).

3. Hesse J. & Gross T. Self-organized criticality as a fundamental property of neural systems. Criticality as a signature of healthy neural systems: multi-scale experimental and computational studies (2015).

4. Kinouchi O. & Copelli M. Optimal dynamical range of excitable networks at criticality. Nature physics 2, 348–351 (2006).

5. Beggs J. M. The criticality hypothesis: how local cortical networks might optimize information processing. Philosophical Transactions of the Royal Society of London A: Mathematical, Physical and Engineering Sciences 366, 329–343 (2008).

6. Shew W. L., Yang H., Petermann T., Roy R. & Plenz D. Neuronal avalanches imply maximum dynamic range in cortical networks at criticality. The Journal of Neuroscience 29, 15595–15600 (2009).

7. Massobrio P., de Arcangelis L., Pasquale V., Jensen H. J. & Plenz D. Criticality as a signature of healthy neural systems. Frontiers in systems neuroscience 9 (2015).

8. Herz A. V. & Hopfield J. J. Earthquake cycles and neural reverberations: collective oscillations in systems with pulse-coupled threshold elements. Physical review letters 75, 1222 (1995).

9. Beggs J. M. & Plenz D. Neuronal avalanches in neocortical circuits. The Journal of neuroscience 23, 11167–11177 (2003).

10. Marković D. & Gros C. Power laws and self-organized criticality in theory and nature. Physics Reports 536, 41–74 (2014).

11. de Arcangelis L., Perrone-Capano C. & Herrmann H. J. Self-organized criticality model for brain plasticity. Physical review letters 96, 028107 (2006).

12. Levina A., Herrmann J. M. & Geisel T. Dynamical synapses causing self-organized criticality in neural networks. Nature physics 3, 857–860 (2007).

13. Bonachela J. A., De Franciscis S., Torres J. J. & Muñoz M. A. Self-organization without conservation: are neuronal avalanches generically critical? Journal of Statistical Mechanics: Theory and Experiment 2010, P02015 (2010).

14. De Arcangelis L. Are dragon-king neuronal avalanches dungeons for self-organized brain activity? The European Physical Journal Special Topics 205, 243–257 (2012).

15. Costa A., Copelli M. & Kinouchi O. Can dynamical synapses produce true self-organized criticality? Journal of Statistical Mechanics: Theory and Experiment 2015, P06004 (2015).

16. van Kessenich L. M., de Arcangelis L. & Herrmann H. Synaptic plasticity and neuronal refractory time cause scaling behaviour of neuronal avalanches. Scientific Reports 6 (2016).

17. Campos J., Costa A., Copelli M. & Kinouchi O. Differences between quenched and annealed networks with dynamical links. arXiv:1604.05779 To appear in Physical Review E (2016).

18. Ermentrout B., Pascal M. & Gutkin B. The effects of spike frequency adaptation and negative feedback on the synchronization of neural oscillators. Neural Computation 13, 1285–1310 (2001).

19. Benda J. & Herz A. V. A universal model for spike-frequency adaptation. Neural computation 15, 2523–2564 (2003).

20. Buonocore A., Caputo L., Pirozzi E. & Carfora M. F. A leaky integrate-and-fire model with adaptation for the generation of a spike train. Mathematical biosciences and engineering: MBE 13, 483–493 (2016).

21. Galves A. & Löcherbach E. Infinite systems of interacting chains with memory of variable length—stochastic model for biological neural nets. Journal of Statistical Physics 151, 896–921 (2013).

22. Lapicque L. Recherches quantitatives sur l’excitation électrique des nerfs traitée comme une polarisation. J. Physiol. Pathol. Gen. 9, 620–635 (1907). Translation: Brunel, N. & van Rossum, M. C. Quantitative investigations of electrical nerve excitation treated as polarization. Biol. Cybernetics 97, 341–349 (2007).

23. Gerstein G. L. & Mandelbrot B. Random walk models for the spike activity of a single neuron. Biophysical journal 4, 41 (1964).

24. Burkitt A. N. A review of the integrate-and-fire neuron model: I. homogeneous synaptic input. Biological cybernetics 95, 1–19 (2006).

25. Burkitt A. N. A review of the integrate-and-fire neuron model: II. inhomogeneous synaptic input and network properties. Biological cybernetics 95, 97–112 (2006).

26. Naud R. & Gerstner W. The performance (and limits) of simple neuron models: generalizations of the leaky integrate-and-fire model. In Computational Systems Neurobiology 163–192 (Springer, 2012).

27. Brette R. et al. Simulation of networks of spiking neurons: a review of tools and strategies. Journal of computational neuroscience 23, 349–398 (2007).

28. Brette R. What is the most realistic single-compartment model of spike initiation? PLoS Comput Biol 11, e1004114 (2015).

29. Benayoun M., Cowan J. D., van Drongelen W. & Wallace E. Avalanches in a stochastic model of spiking neurons. PLoS Comput Biol 6, e1000846 (2010).

30. Ostojic S. Two types of asynchronous activity in networks of excitatory and inhibitory spiking neurons. Nature neuroscience 17, 594–600 (2014).

31. Torres J. J. & Marro J. Brain performance versus phase transitions. Scientific reports 5 (2015).

32. Platkiewicz J. & Brette R. A threshold equation for action potential initiation. PLoS Comput Biol 6, e1000850 (2010).

33. McDonnell M. D., Goldwyn J. H. & Lindner B. Editorial: Neuronal stochastic variability: Influences on spiking dynamics and network activity. Frontiers in computational neuroscience 10 (2016).

34. Soula H., Beslon G. & Mazet O. Spontaneous dynamics of asymmetric random recurrent spiking neural networks. Neural Computation 18, 60–79 (2006).

35. Cessac B. A discrete time neural network model with spiking neurons. Journal of Mathematical Biology 56, 311–345 (2008).

36. Cessac B. A view of neural networks as dynamical systems. International Journal of Bifurcation and Chaos 20, 1585–1629 (2010).

37. Cessac B. A discrete time neural network model with spiking neurons: II: Dynamics with noise. Journal of mathematical biology 62, 863–900 (2011).

38. De Masi A., Galves A., Löcherbach E. & Presutti E. Hydrodynamic limit for interacting neurons. Journal of Statistical Physics 158, 866–902 (2015).

39. Duarte A. & Ost G. A model for neural activity in the absence of external stimuli. Markov Processes and Related Fields 22, 37–52 (2016).

40. Duarte A., Ost G. & Rodríguez A. A. Hydrodynamic limit for spatially structured interacting neurons. Journal of Statistical Physics 161, 1163–1202 (2015).

41. Galves A. & Löcherbach E. Modeling networks of spiking neurons as interacting processes with memory of variable length. J. Soc. Franc. Stat. 157, 17–32 (2016).

42. Larremore D. B., Shew W. L., Ott E., Sorrentino F. & Restrepo J. G. Inhibition causes ceaseless dynamics in networks of excitable nodes. Physical review letters 112, 138103 (2014).

43. Virkar Y. S., Shew W. L., Restrepo J. G. & Ott E. Metabolite transport through glial networks stabilizes the dynamics of learning. arXiv preprint arXiv:1605.03090 (2016).

44. Cooper S. J. Donald O. Hebb’s synapse and learning rule: a history and commentary. Neuroscience & Biobehavioral Reviews 28, 851–874 (2005).

45. Tsodyks M., Pawelzik K. & Markram H. Neural networks with dynamic synapses. Neural computation 10, 821–835 (1998).

46. Larremore D. B., Shew W. L. & Restrepo J. G. Predicting criticality and dynamic range in complex networks: effects of topology. Physical review letters 106, 058101 (2011).

47. Kole M. H. & Stuart G. J. Signal processing in the axon initial segment. Neuron 73, 235–247 (2012).

48. Lipetz L. E. The relation of physiological and psychological aspects of sensory intensity. In Principles of Receptor Physiology, 191–225 (Springer, 1971).

49. Naka K.-I. & Rushton W. A. S-potentials from luminosity units in the retina of fish (cyprinidae). The Journal of physiology 185, 587 (1966).

50. Bonachela J. A. & Muñoz M. A. Self-organization without conservation: true or just apparent scale-invariance? Journal of Statistical Mechanics: Theory and Experiment 2009, P09009 (2009).

51. Priesemann V., Munk M. H. & Wibral M. Subsampling effects in neuronal avalanche distributions recorded in vivo. BMC neuroscience 10, 40 (2009).

52. Ribeiro T. L. et al. Spike avalanches exhibit universal dynamics across the sleep-wake cycle. PloS one 5, e14129 (2010).

53. Ribeiro T. L., Ribeiro S., Belchior H., Caixeta F. & Copelli M. Undersampled critical branching processes on small-world and random networks fail to reproduce the statistics of spike avalanches. PloS one 9, e94992 (2014).