Abstract

We examined whether striatal dopamine moderates the impact of externalizing proneness (disinhibition) on reward-based decision-making. Participants completed the Disinhibition and Substance Abuse subscales of the Externalizing Spectrum Inventory-Brief Form and then performed a delay discounting task to assess preference for immediate rewards, along with a dynamic decision-making task that assessed long-term reward learning (i.e., inclination to choose larger delayed versus smaller immediate rewards). Striatal tonic dopamine levels were operationalized using spontaneous eyeblink rate. Regression analyses revealed that high disinhibition predicted greater delay discounting only among participants with lower levels of striatal dopamine, whereas substance abuse was associated with poorer long-term learning among individuals with lower levels of striatal dopamine but better long-term learning among those with higher levels of striatal dopamine. These results suggest that disinhibition is more strongly associated with the wanting component of reward-based decision-making, whereas substance abuse behavior is associated more with learning of long-term action-reward contingencies.

Externalizing, or impulse control, problems are pervasive and can have substantial consequences. Research from the National Comorbidity Survey shows the incidence rate of impulse control disorders, including substance abuse conditions, in the United States to be approximately 8% – 9% (Insel & Fenton, 2005; Kessler et al., 1994; Wang et al., 2005). Additionally, many more individuals exhibit sub-clinical manifestations of disinhibition and substance abuse that also have adverse effects. One prominent domain in which externalizing tendencies can engender negative consequences is decision-making. In particular, externalizing behavior has been linked to impairments in reward-based decisions that contrast short-term versus long-term consequences (Bechara & Damasio, 2002).

Substance Abuse, Trait Disinhibition, and Dopaminergic Function

Though both can be characterized as externalizing problems, substance abuse and trait disinhibition represent phenotypically distinct phenomena (e.g., Armstrong & Costello, 2002; Finn et al., 2009; Krueger et al., 2007; Waldman & Slutske, 2000). Substance abuse entails recreational or problematic use of drugs and alcohol, whereas disinhibition reflects broader tendencies toward nonplanfulness, impulsive risk-taking, irresponsibility, and alienation from others (Patrick, Kramer, Krueger, & Markon, 2013). Available evidence, including data from twin studies, points to trait disinhibition as a highly heritable liability toward externalizing problems (Krueger, 1999; Krueger & Markon, 2006; Krueger, McGue, & Iacono, 2001; Krueger et al., 2002), with substance abuse representing one of its distinct behavioral (phenotypic) expressions. Molecular genetic research on problems of these types has suggested that allelic variation in dopaminergic genes, including DRD2, DRD3, and DRD4, is related both to disinhibitory traits and to substance abuse problems (Comings et al., 1994; Derringer et al., 2010; Krebs et al., 1998; Kreek et al., 2005; Lusher, Chandler, & Ball, 2001; Sokoloff et al., 1990). Furthermore, a recent study that examined associations of striatal and prefrontal dopaminergic genes with reward-related ventral striatum reactivity, a predictive feature of impulsive choice and incentive-based decision-making, showed that gene variants that increased striatal dopamine release and availability were associated with increased reactivity of the ventral striatum (Forbes et al., 2009). Taken together, findings from human behavioral and molecular genetic research, along with neuroscientific evidence, indicate a role for genetically based variation in striatal dopaminergic function in general proneness to externalizing problems.
Although research demonstrates that dopaminergic variation is associated with externalizing problems, the exact nature of this relationship for specific subdimensions (facets) of externalizing problems, such as trait disinhibition and substance abuse (Krueger et al., 2007; Patrick et al., 2013), is unclear. One possibility is that the distinction between disinhibition and substance abuse corresponds to differences in striatal dopaminergic function.

Dopamine and Facets of Reward Processing

According to incentive-sensitization theory, associative learning mechanisms determine the dopaminergic sensitization to incentive salience, a process by which stimuli become rewarding and wanted. Furthermore, the neural systems that underlie incentive salience, or reward ‘wanting’, and the pleasurable effects of a rewarding stimulus, or reward ‘liking’, are separate (Robinson & Berridge, 1993). Although the dopaminergic system mediates reward wanting, it is not sufficient to trigger reward liking, which instead relies on opioid and GABA-benzodiazepine neurotransmitter systems (Baskin-Sommers & Foti, 2015; Berridge & Robinson, 1998). Extensive research has demonstrated that dopamine plays a critical role in the neural circuitry underlying reward learning and wanting (e.g., Berridge & Robinson, 1998; Ikemoto, 2007; Pessiglione et al., 2006; Robinson & Berridge, 2000; Wise, 2004). A recent review demonstrated that discrete dopamine-dependent neurobiological processes underlie the wanting and learning aspects of reward responding (Baskin-Sommers & Foti, 2015). The distinction between reward wanting and learning processes is crucial to understanding the role of externalizing behavior in reward-based decision-making. Physiological reward wanting drives approach toward reward and enhances reward motivation; dopamine signals in the ventral striatum connect incentive value to a reward stimulus (Baskin-Sommers & Foti, 2015). Physiological wanting, which can occur in response to either implicit unconditioned cues or learned reward cues such as monetary incentives, can be distinguished from perceived wanting, which entails explicit awareness of the wanting experience. Learning, on the other hand, involves dopamine signaling from the ventral striatum to the prefrontal cortex, which updates goal representations and associations between a stimulus and its outcome (Baskin-Sommers & Foti, 2015; Everitt & Robbins, 2005; Ma et al., 2010; Motzkin et al., 2014). 
Specifically, dopaminergic neurons in the mesolimbic system encode predictions about a reward and update that prediction based on feedback from prediction errors, thus signaling the reward value of stimuli in reinforcement learning contexts (Berridge, Robinson, & Aldridge, 2009; Flagel et al., 2011; Glimcher, 2011; Hollerman & Schultz, 1998). However, it is unclear whether tonic or phasic striatal dopamine is the basis for the effects of wanting and learning processes.

Tonic dopamine refers to the baseline level of extrasynaptic dopamine in the brain, whereas phasic dopamine refers to the spiking activity of dopamine neurons in response to a stimulus, such as a reward signal (Schultz, 1998). Trait impulsivity has been associated with decreased D2/D3 autoreceptor availability and increased amphetamine-induced dopamine release in the ventral striatum (Buckholtz et al., 2010a). Drug or alcohol addiction, in turn, alters the balance between the tonic and phasic dopamine systems: frequent drug use increases tonic dopamine levels, which inhibits phasic dopamine release (Grace, 1995). Thus, in contrast to the elevated phasic dopamine responding associated with impulsivity (Buckholtz et al., 2010a), the dopamine system of substance abusers is altered such that tonic striatal dopamine levels are elevated and the phasic dopamine system becomes desensitized and less reactive (Grace, 1995). As a result, individuals may use substances to restore the tonic-phasic dopamine system to equilibrium (Grace, 1995, 2000). This disequilibrium between tonic and phasic dopamine makes it especially important to examine how tonic dopamine interacts with substance abuse tendencies to affect decision-making behavior. With regard to reward processing, phasic dopamine activity in particular has been shown to encode reward prediction errors in the striatum (Ljungberg, Apicella, & Schultz, 1992; Niv, Daw, Joel, & Dayan, 2007; Schultz, 1998; Waelti et al., 2001), whereas tonic dopamine levels have been proposed to encode the average reward rate (Niv et al., 2007). Given their distinct influences on reward processing, tonic and phasic dopamine may moderate the effects of externalizing tendencies on reward wanting and learning.
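The computational distinction between prediction errors and average reward rate can be made concrete with a toy learner. The sketch below is a simplified delta-rule illustration, not the model of Niv et al. (2007) and not a model fitted in this study: a fast-updating value for each option is driven by a trial-wise prediction error (the signal attributed to phasic dopamine), and a slowly updating running average of all rewards received stands in for the quantity proposed to be tracked by tonic dopamine. The class name and the learning rates `alpha_value` and `alpha_rate` are hypothetical choices made for illustration.

```python
class TonicPhasicLearner:
    """Toy learner keeping prediction errors and average reward rate separate.

    Hypothetical illustration: a simplified delta-rule sketch, not the
    average-reward model of Niv et al. (2007).
    """

    def __init__(self, n_options: int, alpha_value: float = 0.3,
                 alpha_rate: float = 0.05) -> None:
        self.alpha_value = alpha_value  # fast learning rate for option values
        self.alpha_rate = alpha_rate    # slow learning rate for the reward rate
        self.values = [0.0] * n_options
        self.avg_rate = 0.0

    def update(self, option: int, reward: float) -> float:
        """Learn from one outcome and return the prediction error."""
        # Phasic-like signal: how much better or worse than expected.
        delta = reward - self.values[option]
        self.values[option] += self.alpha_value * delta
        # Tonic-like signal: slow running average of all rewards received.
        self.avg_rate += self.alpha_rate * (reward - self.avg_rate)
        return delta


learner = TonicPhasicLearner(n_options=2)
errors = [learner.update(0, 10.0) for _ in range(50)]
# Prediction errors shrink as the reward becomes expected, while the
# average-rate estimate climbs toward 10 more slowly.
print(round(errors[0], 2), round(errors[-1], 4), round(learner.avg_rate, 2))
```

The two learning rates are kept deliberately different so that the option value converges quickly while the average-rate estimate lags, mirroring the fast (phasic) versus slow (tonic) time scales described above.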

Reward-Based Decision Making: Relations with Substance Abuse and Disinhibition

Previous research suggests that individuals with substance use disorders show a failure in associative learning, leading to poorer decision-making on the Iowa Gambling Task (IGT; Bechara, 2003; Bechara & Damasio, 2002). However, other work has found no average difference in decision-making performance between individuals with substance use problems and controls, although drug dependence severity is predictive of associative learning deficits (Bolla et al., 2003; Ernst et al., 2003). Enhanced associative learning for drug stimuli and reward outcomes has been proposed as a mechanism for the transition from recreational drug use to drug addiction (Hogarth, Balleine, Corbit, & Killcross, 2013). Although research on the relationship between substance abuse and associative learning in decision-making is mixed, substance abuse appears to strongly affect reward processing of drug stimuli.

Because disinhibitory traits and substance abuse share heritable origins, disinhibition is rarely studied independently of substance abuse constructs. This poses a clear problem for evaluating the distinct relations of disinhibition and substance abuse with reward-based decision-making. Research on delay discounting, a measure of preference for immediate versus delayed rewards, typically shows only small correlations with impulsivity, and these associations are often restricted to specific impulsivity subscales (de Wit, 2007; Kirby, Petry, & Bickel, 1999; Madden, Petry, Badger, & Bickel, 1997; Mitchell, 1999; Reynolds, Richards, Dassinger, & de Wit, 2004; Vuchinich & Simpson, 1998). However, the majority of delay discounting studies have investigated impulsivity in concert with substance abuse tendencies, and, to our knowledge, only one study has tested for an effect of impulsivity on reward-based decision-making separate from its association with substance abuse. This study, by de Wit et al. (2007), demonstrated that non-planful impulsivity predicted preference for immediate rewards, or enhanced ‘wanting’. This bias toward choosing smaller immediate rewards over larger delayed rewards has been shown to be mediated by increased ventral striatum activity (Dagher & Robbins, 2009; McClure, Laibson, Loewenstein, & Cohen, 2004).

Although preference for immediate rewards is predictive of substance abuse, few studies have tested for individual contributions of disinhibition and substance abuse to reward-based decision-making. The fact that disinhibition and substance abuse are often conflated is a major limitation of work on externalizing behaviors and reward. As previous research has shown associations between substance abuse and associative learning, one possibility is that tonic dopamine may interact with substance abuse to affect reward-based associative learning such that elevated tonic dopamine levels enhance learning of the long-term average rewards associated with each option. Low tonic dopamine levels, by contrast, may lead to larger phasic spikes in response to reward prediction errors, and thus to enhanced associations of the immediate action-reward contingencies (Daw, 2003; Niv et al., 2007). Thus, in substance-abusing individuals in particular, tonic dopamine may operate to enhance updating of reward values and thereby facilitate learning of the long-term average reward rates of differing options.

On the other hand, previous research has demonstrated that impulsivity, separately from substance abuse, is predictive of immediate reward preference (de Wit et al., 2007). One possibility is that high-impulsive individuals with low tonic dopamine levels may experience larger phasic spikes in response to reward stimuli (Buckholtz et al., 2010a, b) and enhanced immediate desire for rewards, or wanting. As elevated tonic dopamine is associated with learning of average reward rates, heightened tonic dopamine levels may not influence reward wanting. Thus, while general proneness to externalizing problems likely has an underlying neural basis in reward dysfunction (e.g., Beauchaine & McNulty, 2013), the manifestations of this broad liability vary, and it is important to evaluate whether effects of trait disinhibition or impulsivity on reward wanting and learning differ from those of substance abuse tendencies.

Current Study

To assess variation in dopamine levels among participants, we used spontaneous eyeblink rate (EBR), which provides an index of striatal tonic dopamine (Karson, 1983). Specifically, previous research demonstrates that faster spontaneous EBR is indicative of elevated dopamine levels in the striatum (Colzato et al., 2009; Karson, 1983; Taylor et al., 1999). Moreover, in an incarcerated sample, prisoners with higher scores on the Barratt Impulsiveness Scale-version 10 (BIS-10) showed faster EBRs than both inmates who reported lower levels of impulsivity and non-incarcerated control participants (Huang, Stanford, & Barratt, 1994). Findings on the relationship between substance abuse and EBR are mixed. For example, recreational cocaine users tend to have lower EBRs than non-users (Colzato et al., 2008), whereas daily administration of d-amphetamine over the course of several days increases EBR (Strakowski & Sax, 1998; Strakowski, Sax, Setters, & Keck, 1996). Based on prior studies of this type that have used EBR to quantify dopaminergic activity, we employed EBR in the current study as an index of tonic dopamine levels in the striatum, with heightened dopamine levels operationalized as faster EBR.

To assess reward-related wanting, we used the Delay Discounting Task (Richards, Zhang, Mitchell, & de Wit, 1999). Within the Research Domain Criteria (RDoC) framework (Kozak & Cuthbert, in press), delay discounting is an experimental paradigm that relates to the approach motivation construct under the Positive Valence Systems domain. Previous research indicates that the RDoC approach motivation construct corresponds to physiological reward wanting (Baskin-Sommers & Foti, 2015). In the delay discounting task, participants indicate whether they would prefer a smaller amount of money immediately or a larger amount of money after a time delay (e.g., “Would you prefer $2 now or $10 after 30 days?”). A preference for immediate reward indicates greater disregard for (discounting of) the delayed reward option and, by inference, a higher degree of ‘wanting’ for immediate reward.

To examine reward learning, we used a complex reinforcement-learning (RL) task, a type of paradigm enumerated under the RDoC reward learning construct. This task, the dynamic decision-making task, involves a choice-history-dependent reward structure and decision-making under uncertainty, and it has been used extensively in previous research to investigate learning of immediate and delayed reward outcomes (Cooper, Gorlick, Worthy, & Maddox, 2013; Worthy et al., 2011; Worthy, Otto, & Maddox, 2012; Worthy, Byrne, & Fields, 2014; Worthy et al., 2014). In the task, participants repeatedly choose between two options to learn which option leads to the best outcome. One option, the Increasing option, offers fewer points on each trial than the second option, but the rewards for both options increase over time the more frequently the Increasing option is selected. The second option, the Decreasing option, offers more points on each trial, but the more often it is chosen, the lower the rewards for both options become. Thus, participants must sample both options to learn that the Increasing option is advantageous because it offers more points in the long run.

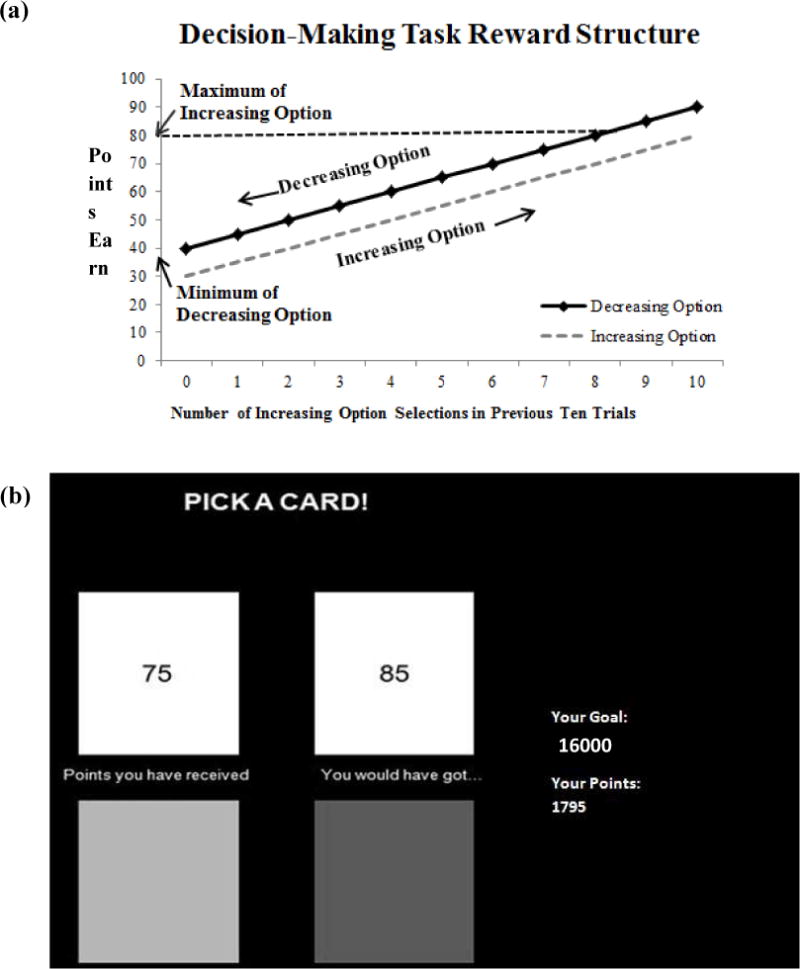

The rewards offered for each option in the dynamic decision-making task depend on the choices made by the participant on earlier trials (Figure 1a), which mimics real-world decision-making situations in which the consequences of future choices depend on those made previously. This type of decision-making is particularly relevant to externalizing problems, as previous reward-based decisions, such as using drugs or engaging in other illegal or irresponsible activities, may influence individuals’ future choices and options. Additionally, we altered the salience of the task’s reward structure by presenting participants with feedback regarding the number of points they would have received if they had selected the alternative option. Previous research (Byrne, Silasi-Mansat, & Worthy, 2014; Byrne & Worthy, 2013, 2015; Otto & Love, 2010) has shown that displaying foregone rewards biases participants toward the sub-optimal option because it highlights the short-term benefit of the Decreasing option (i.e., receipt of more points on the current trial). This makes the immediately rewarding Decreasing option more salient than the overall reward structure of the task, so that performing well requires flexible responding and overriding the biasing foregone-reward information in order to learn about each option. A clear distinction between the two tasks is that participants make selections based on described information in the delay discounting procedure, whereas in the dynamic decision-making task they must learn the rewards and consequences offered by each option through experience. Thus, these two tasks are well suited for separately examining the wanting and learning components of reward-based decision-making.

Figure 1.

(a) Decision-making task reward structure. Rewards were a function of the number of times participants had selected the Increasing option over the previous ten trials. If participants had selected the Increasing option on all ten of the previous ten trials, they would be at the right-most point on the x-axis; if they had selected the Decreasing option on all ten of the previous ten trials, they would be at the left-most point on the x-axis. (b) Screenshot of the dynamic decision-making task. Participants were shown the number of points they would have received had they selected the alternative option.

The current investigation sought to evaluate the influences of general externalizing proneness and its specific manifestation in the form of substance abuse on reward learning and behavioral choices, and the role of variations in striatal dopamine levels (as indexed by spontaneous EBR) in moderating this relationship. Three major hypotheses were advanced:

First, based on previous research, we expected that individuals with higher disinhibition/impulsivity would show slower EBR (i.e., reflecting lower striatal tonic dopamine levels). Although findings pertaining to EBR in individuals with substance use problems are mixed, based on work showing that frequent substance use heightens tonic dopamine, we predicted that EBR would be elevated in individuals reporting high levels of substance use. For the task performance variables, we predicted that preference for the immediate reward option on the delay discounting task would be associated with slower EBR (lower tonic dopamine levels), whereas enhanced learning of the long-term advantageous option on the dynamic decision-making task would be associated with faster EBR (higher tonic dopamine levels).

Second, we predicted that a dissociation would be evident in the effects of general disinhibition and substance abuse tendencies on behavior in the two reward tasks (delay discounting and dynamic decision-making). Specifically, because persistent use of substances entails learning of stimulus-reward contingencies (Hogarth et al., 2013), substance abuse should influence performance on the dynamic decision-making task, which assesses the learning component of decision-making. On the other hand, general disinhibition is associated with enhanced wanting of immediate over delayed rewards (de Wit et al., 2007); consequently, disinhibition should influence delay discounting preferences, as this task assesses the wanting component of reward processing.

Third, we predicted that variations in tonic striatal dopamine would moderate the effects of disinhibition on wanting and of substance abuse on learning. Given that elevated tonic dopamine is associated with learning long-term average reward rates, we expected that substance users with heightened tonic dopamine levels would learn the reward contingencies of the decision options more effectively than substance users with low tonic dopamine levels and, consequently, would perform better on the dynamic decision-making task. Additionally, if low tonic dopamine levels lead to larger phasic spikes in response to reward stimuli, then more impulsive individuals with low tonic dopamine levels may show greater discounting on the delay discounting task (indicative of more ‘wanting’) than impulsive individuals with higher tonic dopamine levels.

Method

Participants

Ninety-three undergraduate students (48 females; age range 18 – 22) from a large southwestern university completed the delay discounting task for partial fulfillment of a course requirement. Of these, 67 (36 females) also performed the dynamic decision-making task.

Materials and Procedure

Externalizing Spectrum Inventory: Brief Form

To assess disinhibitory/externalizing tendencies, we administered the Disinhibition and Substance Abuse subscales from the Externalizing Spectrum Inventory-Brief Form (ESI-BF; Patrick, Kramer, Krueger, & Markon, 2013). The Disinhibition subscale consists of 20 items that assess general externalizing proneness (i.e., proclivities toward reckless-impulsive behavior, and affiliated traits; Krueger et al., 2007), and includes questions about problematic impulsivity, irresponsibility, theft, impatient urgency, fraud, alienation, planful control, and boredom proneness. The Substance Abuse subscale contains 18 items pertaining to use of and problems with alcohol and other drugs. For each scale, item responses were made using a 4-point Likert scale (true, somewhat true, somewhat false, or false). Both the Disinhibition and Substance Abuse subscales show strong validity in relation to relevant criterion measures (Patrick & Drislane, in press; Venables & Patrick, 2012), and both exhibited very high internal consistency within the current sample (αs = .94 and .95). Importantly, the ESI-BF Disinhibition scale is a measure of an individual’s general proclivity for externalizing problems, whereas the ESI-BF Substance Abuse scale indexes a distinct manifestation of this broad disinhibitory liability—namely, problematic use of alcohol/drugs.
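The internal-consistency values reported above (αs) are presumably Cronbach's alpha, the conventional coefficient for such scales. As a hedged illustration of how that coefficient is computed, the sketch below applies the standard formula to a participants-by-items matrix of item scores. It assumes items are already scored numerically (e.g., 0 – 3), that any reverse-keyed items have been recoded, and that there are no missing responses; the example scores are invented.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a participants-by-items score matrix.

    Assumes every participant answered every item and that any
    reverse-keyed items have already been recoded.
    """
    if len(responses) < 2:
        raise ValueError("need at least two participants")
    k = len(responses[0])  # number of items
    if k < 2:
        raise ValueError("need at least two items")
    items = list(zip(*responses))  # one tuple of scores per item
    sum_item_var = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum_item_var / total_var)


# Invented item scores for three participants on two items.
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))  # → 0.667
```

Sample variances are used for both the items and the total score; because alpha depends only on their ratio, the choice of denominator does not change the result as long as it is applied consistently.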

Barratt Impulsiveness Scale

The Barratt Impulsiveness Scale (11th version; BIS-11) is a 30-item questionnaire that assesses impulsivity factors, including motor impulsiveness, nonplanning impulsiveness, and attentional impulsiveness (Patton, Stanford, & Barratt, 1995). Participants reported how frequently they engaged in each behavior or thought listed in the questionnaire using a 0 (rarely/never) – 3 (almost always/always) scale, with higher scores indicating more impulsive behaviors or thoughts. This scale has been shown to have a high degree of internal consistency among college students (α = .82). Because disinhibition is characterized by impulse control problems, this measure was included to corroborate relations of self-reported disinhibition and impulsivity with decision-making.

Sleep Screening Question

Based on previous research showing that sleep deprivation affects eyeblink rate (Barbato et al., 1995), participants were queried regarding the number of hours they slept the previous night, and this was taken into account in statistical analyses.

Spontaneous Eyeblink Rate (Tonic Dopamine Index)

Following previously published research (e.g., Chermahini & Hommel, 2010; Colzato, Slagter et al., 2009; De Jong & Merckelbach, 1990; Ladas, Frantzidis, Bamidis, & Vivas, 2013), we used electrooculogram (EOG) recording to assess spontaneous eyeblink rate (EBR) as an indirect index of tonic dopamine levels in the striatum. To record EBR, we followed the procedure described by Fairclough and Venables (2006), in which vertical eyeblink activity was recorded from Ag/AgCl electrodes positioned above and below the left eye, with a ground electrode placed on the center of the forehead. All EOG signals were filtered (0.01–10 Hz) and amplified using a Biopac EOG100C differential corneal–retinal potential amplifier. Eyeblinks were defined as phasic increases in EOG activity greater than 100 μV occurring within intervals of 400 ms or less. To ensure valid results, eyeblink frequency was quantified in two ways: by manual count and by an automated scoring routine in Biopac AcqKnowledge software. The manual and automated EBR values were strongly positively correlated, r = .97, p < .001. Manual EBR was used for all statistical analyses reported below.

All recordings were collected between 11 a.m. and 4 p.m. because previous work has shown that spontaneous EBR can fluctuate diurnally in the evening hours (Barbato et al., 2000). A black fixation cross (“X”) was displayed on a wall at eye level 1 m from where the participant was seated. Participants were instructed to look in the direction of the fixation cross for the duration of the recording and to avoid moving or turning their head. Eyeblinks were recorded for 6 min under this resting condition. Each participant’s EBR was computed as the average number of blinks per minute across the 6-min recording interval.
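The scoring rule described above (excursions greater than 100 μV lasting no more than 400 ms, averaged per minute) can be sketched as a simple run-length detector. This is a hypothetical illustration, not the AcqKnowledge routine or the manual procedure used for the analyses. It assumes a baseline-corrected vertical EOG trace in microvolts at a known sampling rate, and it interprets the 400-ms criterion as the maximum duration of a suprathreshold excursion; longer excursions are treated as movement artifacts and ignored. The function names are invented.

```python
def count_blinks(eog_uv: list[float], fs_hz: float,
                 threshold_uv: float = 100.0,
                 max_duration_s: float = 0.4) -> int:
    """Count blink-like excursions in a baseline-corrected vertical EOG trace.

    A blink is assumed to be a contiguous run of samples above threshold_uv
    lasting no longer than max_duration_s; longer runs are ignored.
    """
    max_samples = int(round(max_duration_s * fs_hz))
    blinks, run = 0, 0
    for sample in eog_uv:
        if sample > threshold_uv:
            run += 1
            continue
        if 0 < run <= max_samples:  # a run just ended and was short enough
            blinks += 1
        run = 0
    if 0 < run <= max_samples:  # run still open at end of recording
        blinks += 1
    return blinks


def blinks_per_minute(eog_uv: list[float], fs_hz: float) -> float:
    """Blink count divided by recording length in minutes."""
    minutes = len(eog_uv) / fs_hz / 60.0
    return count_blinks(eog_uv, fs_hz) / minutes


fs = 100.0
trace = [0.0] * int(60 * fs)  # 1 min of flat, invented signal
for i in range(12):  # twelve 200-ms blinks
    start = 100 + 400 * i
    trace[start:start + 20] = [150.0] * 20
trace[5000:5100] = [150.0] * 100  # 1-s excursion: too long to be a blink
print(blinks_per_minute(trace, fs))  # → 12.0
```

For a 6-min recording like the one described above, the same function divides the total count by six, yielding the per-minute EBR.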

Delay Discounting Task

Participants were instructed that they would be asked repeatedly to choose whether they would prefer a smaller amount of money now (Option A) or a larger sum of money (Option B) at one of five specified delay intervals (1 day, 2 days, 1 month, 6 months, or 1 year; Richards et al., 1999). For each delay period, participants initially chose between $2 offered immediately and $10 offered after the delay. The immediate reward increased in 50-cent increments on each subsequent trial until the immediate and delayed rewards were equal (both $10). Using this procedure, we derived an indifference point for each of the five delay periods, reflecting the smallest amount of money an individual chose to receive immediately in place of the $10 after the delay. Lower indifference points indicated greater discounting of delayed rewards. To quantify the degree to which participants preferred delayed versus immediate rewards, we used an area-under-the-curve (AUC) procedure (Myerson, Green, & Warusawitharana, 2001). Smaller AUC values represented greater discounting, and thus a stronger preference for immediate rewards. Larger AUC values, on the other hand, indicated less discounting; that is, a stronger preference to forgo smaller immediate rewards in favor of larger delayed rewards.
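The titration and AUC steps above can be sketched as follows. The sketch follows the general AUC approach of Myerson et al. (2001): delays are normalized by the longest delay, indifference points by the delayed amount, and the area is summed with the trapezoid rule. Two details are assumptions rather than statements from the text: a (0, 1) anchor point for an undelayed reward is added because the listed delays begin at 1 day, and a participant who never accepts an immediate offer is assigned an indifference point of $10. The function and constant names are hypothetical.

```python
DELAYS_DAYS = (1, 2, 30, 180, 365)  # 1 day, 2 days, 1 month, 6 months, 1 year
DELAYED_AMOUNT = 10.0
# Immediate offers rise from $2.00 to $10.00 in $0.50 steps (17 offers).
IMMEDIATE_OFFERS = [2.0 + 0.5 * i for i in range(17)]


def indifference_point(chose_immediate: list[bool]) -> float:
    """Smallest immediate offer accepted in place of the delayed $10.

    chose_immediate[i] is True if the participant took IMMEDIATE_OFFERS[i].
    Hypothetical convention: if no offer was accepted, return $10.
    """
    for offer, took_now in zip(IMMEDIATE_OFFERS, chose_immediate):
        if took_now:
            return offer
    return DELAYED_AMOUNT


def discounting_auc(indiff_points: list[float],
                    delays: tuple[float, ...] = DELAYS_DAYS,
                    amount: float = DELAYED_AMOUNT) -> float:
    """Area under the normalized discounting curve (trapezoid rule).

    Delays are scaled by the longest delay and indifference points by the
    delayed amount; a (0, 1) anchor for an undelayed reward is assumed.
    """
    max_delay = max(delays)
    xs = [0.0] + [d / max_delay for d in delays]
    ys = [1.0] + [v / amount for v in indiff_points]
    return sum((x2 - x1) * (y1 + y2) / 2
               for x1, x2, y1, y2 in zip(xs, xs[1:], ys, ys[1:]))


points = [9.5, 9.0, 7.0, 5.0, 3.5]  # invented indifference points ($)
print(round(discounting_auc(points), 3))  # → 0.529
```

An AUC of 1.0 corresponds to no discounting at any delay, and values closer to 0 correspond to steeper discounting, matching the interpretation given in the text.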

Dynamic Decision-Making Task

Participants completed a choice-history-dependent dynamic decision-making task that has been used in previous research to examine decision-making strategies in choosing immediate versus long-term reward options (Byrne et al., 2014; Byrne & Worthy, 2013, 2015; Otto & Love, 2010). One of the options, the Increasing option, offered smaller immediate rewards on each trial than the Decreasing option, but the rewards for both options increased as the Increasing option was selected more frequently. The Increasing option had a possible range of 30 – 80 points, while the points for the Decreasing option ranged from 40 – 90 points. Figure 1a shows the rewards offered for each option based on the number of times participants had selected the Increasing option over the past 10 trials. Participants began with 55 points for the Increasing option and 65 points for the Decreasing option. If the Increasing option was selected repeatedly, individuals would earn 80 points on each trial after the first ten trials. In contrast, if the Decreasing option was selected repeatedly, individuals would earn 40 points on each trial after the initial ten trials. Thus, repeatedly selecting the Increasing option led to a 40-point advantage per trial over repeatedly selecting the Decreasing option. Switching between options followed the same pattern.

The optimal strategy to earn the maximum number of points in the task, therefore, was to repeatedly choose the Increasing option. Although the Increasing option yielded 10 points less than the Decreasing option on any given trial, selecting it increased the rewards for both options over time, making it the optimal choice. Performance on the dynamic decision-making task was therefore computed as the proportion of trials on which participants chose the Increasing option. Higher values indicated more selections of the optimal Increasing option, and thus better learning of the long-term advantageous option, whereas lower values reflected a preference for the Decreasing option and, consequently, poorer learning of the long-term advantageous option.

Additionally, participants were shown the number of points they would have received if they had selected the alternative option (Figure 1b). The presence of this foregone reward information was designed to bias participants toward the sub-optimal Decreasing option by highlighting on each trial that the Decreasing option (although less lucrative in the long term) led to a larger immediate payoff.
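The reward structure, foregone-reward feedback, and performance measure described above can be simulated in a few lines. This is a sketch under stated assumptions, not the task code used in the study. It assumes rewards change linearly by 5 points per Increasing choice in the 10-trial window (consistent with the stated 30 – 80 and 40 – 90 ranges and with the starting values of 55 and 65 at five Increasing choices), and it assumes a hypothetical initial history of five Increasing and five Decreasing choices, since the text states only the starting point values. The function names are invented.

```python
from collections import deque


def option_rewards(n_increasing: int) -> tuple[int, int]:
    """(Increasing, Decreasing) points given Increasing picks in the last 10 trials.

    Assumes linear 5-point steps within the stated ranges (Increasing 30-80,
    Decreasing 40-90); the Decreasing option always pays 10 points more on
    the current trial.
    """
    return 30 + 5 * n_increasing, 40 + 5 * n_increasing


def run_task(choices: str, initial_history=(True, False) * 5):
    """Simulate the choice-history-dependent task for a string of 'I'/'D' picks.

    Returns obtained points, foregone points (the feedback shown for the
    unchosen option), and the proportion of Increasing choices.
    """
    if not choices:
        raise ValueError("no choices supplied")
    history = deque(initial_history, maxlen=10)  # True = Increasing pick
    obtained, foregone = [], []
    for choice in choices:
        inc_pts, dec_pts = option_rewards(sum(history))
        if choice == "I":
            obtained.append(inc_pts)
            foregone.append(dec_pts)
        elif choice == "D":
            obtained.append(dec_pts)
            foregone.append(inc_pts)
        else:
            raise ValueError(f"unknown choice {choice!r}")
        history.append(choice == "I")  # oldest choice drops out of the window
    return obtained, foregone, choices.count("I") / len(choices)


got, missed, prop = run_task("I" * 100)
print(got[0], got[10], missed[10], prop)  # → 55 80 90 1.0
got, missed, prop = run_task("D" * 100)
print(got[0], got[10], missed[10], prop)  # → 65 40 30 0.0
```

The foregone value returned for an Increasing choice is always 10 points higher than the obtained value, which is the trial-by-trial signal that the feedback manipulation uses to bias participants toward the Decreasing option.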

Procedure

Participants completed the questionnaires and the decision-making tasks on PCs using Psychtoolbox for MATLAB (version 2.5). Participants first completed the screening question and the ESI-BF Disinhibition and Substance Abuse subscales, and then completed 100 trials of the dynamic decision-making task. They were given a goal of earning at least 7,200 points on the task, which required them to select the optimal Increasing option on more than 60% of the trials. They were not informed about the rewards provided for each response option, the number of trials, or the choice-history-dependent nature of the task's reward structure. After the dynamic decision-making task, participants completed the delay discounting task. Participants were informed that the questions in this task were hypothetical, but that they should try to respond as if they were actually receiving the money. The session ended with the 6-min assessment of eyeblink rate.

Data Analysis

To evaluate our first hypothesis regarding the association between the EBR index of striatal dopamine and the individual-difference and performance measures, we computed bivariate correlations. We anticipated negative correlations between delay discounting reward preference and the EBR index, as well as between ESI-BF Disinhibition/BIS-11 Impulsivity and the EBR index, and a positive correlation between dynamic decision-making performance and EBR.

To test our other two hypotheses, which concerned the interaction between the EBR index of striatal dopamine and externalizing tendencies, we conducted separate hierarchical regression analyses for the delay discounting and dynamic decision-making tasks. These analyses allowed evaluation of the separate and interactive effects of continuous variations in externalizing tendencies and dopamine levels on decision-making. Gender, age, and hours slept were included as covariates in both regression analyses to control for possible effects of these variables, so the predictors in the delay discounting and dynamic decision-making regressions were identical. Results from the delay discounting and dynamic decision-making regressions were used to assess effects of externalizing proneness, and of its interaction with striatal dopamine, on reward wanting and learning, respectively.
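A moderated hierarchical regression of the kind described above can be sketched without external libraries. The text does not report the order of entry or whether predictors were centered, so this sketch makes two labeled assumptions: step 1 enters the covariates plus mean-centered main effects of the externalizing score and EBR, and step 2 adds their product term, with the R² change at step 2 indexing moderation. The solver uses the normal equations with Gauss-Jordan elimination, and all function names and example data are hypothetical.

```python
def center(values):
    """Subtract the sample mean (centering before forming the product term)."""
    m = sum(values) / len(values)
    return [v - m for v in values]


def ols(X, y):
    """Least-squares coefficients via the normal equations and Gauss-Jordan."""
    p = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(row[i] * row[j] for row in X) for j in range(p)]
         + [sum(row[i] * yi for row, yi in zip(X, y))] for i in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        if abs(A[col][col]) < 1e-12:
            raise ValueError("design matrix is singular")
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][p] / A[i][i] for i in range(p)]


def r_squared(X, y, beta):
    fitted = [sum(b * x for b, x in zip(beta, row)) for row in X]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def moderated_regression(y, trait, ebr, covariates):
    """Step 1: covariates + centered main effects; step 2: add trait x EBR.

    covariates is a list of columns (e.g., gender, age, hours slept).
    Returns step-2 coefficients, step-1 R^2, and the R^2 change at step 2.
    """
    t, e = center(trait), center(ebr)
    step1 = [[1.0] + [col[i] for col in covariates] + [t[i], e[i]]
             for i in range(len(y))]
    step2 = [row + [t[i] * e[i]] for i, row in enumerate(step1)]
    b1, b2 = ols(step1, y), ols(step2, y)
    r2_step1 = r_squared(step1, y, b1)
    return b2, r2_step1, r_squared(step2, y, b2) - r2_step1


# Invented, noise-free data generated from known coefficients.
age = [18, 19, 20, 21, 22, 18, 19, 20, 21, 22]
trait = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ebr = [5, 3, 8, 1, 9, 2, 7, 4, 10, 6]
tc, ec = center(trait), center(ebr)
y = [2 + 0.5 * a + 1.5 * t - 0.7 * e + 0.3 * t * e
     for a, t, e in zip(age, tc, ec)]
coefs, r2_1, delta_r2 = moderated_regression(y, trait, ebr, [age])
print([round(c, 3) for c in coefs], round(delta_r2, 3))
```

Because the example data are generated without noise, the step-2 coefficients recover the generating values (intercept, age, trait, EBR, trait × EBR); with real data, the product-term coefficient and the R² change would be tested for significance.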

Results

Behavioral Analyses

Descriptive Statistics

Examination of the spontaneous eyeblink rate data led to the exclusion of one participant, whose EBR was more than three standard deviations above the mean and thus represented an outlier. After this exclusion, individual EBRs ranged from 4.33 to 38.83 blinks/min (M = 17.31, SD = 8.81). Scores on the ESI-BF Disinhibition subscale ranged from 0 to 51 (M = 15.39, SD = 13.60), and scores on the ESI-BF Substance Abuse subscale ranged from 0 to 34 (M = 13.36, SD = 7.46). No outliers were observed in responses to the ESI-BF subscales. Similarly, scores on the BIS-11 ranged from 50 to 90 (M = 65.90, SD = 8.44), with no outliers detected.
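The exclusion rule can be expressed as a one-pass filter. This is a minimal sketch. It assumes the mean and sample standard deviation are computed once on the full sample, since the section does not say whether screening was repeated after removal. The name `exclude_high_outliers` is hypothetical.

```python
from statistics import mean, stdev


def exclude_high_outliers(values, n_sd=3.0):
    """Split values into (kept, dropped), dropping those more than n_sd SDs above the mean.

    Assumption: mean and sample SD come from the full sample, computed once
    (no iterative re-screening after an outlier is removed).
    """
    cutoff = mean(values) + n_sd * stdev(values)
    kept = [v for v in values if v <= cutoff]
    dropped = [v for v in values if v > cutoff]
    return kept, dropped
```

The descriptive statistics reported after exclusion would then be `mean(kept)` and `stdev(kept)`.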

Correlational Analyses

Bivariate correlations (rs) were computed among the measures collected (i.e., EBR index of striatal dopamine, Substance Abuse and Disinhibition scales of the ESI-BF, BIS-11 Impulsivity) and performance on the delay discounting and dynamic decision-making tasks (Table 1). As expected, ESI-BF Disinhibition and Substance Abuse scores were positively correlated with one another (cf. Patrick et al., 2013), r = .46, p<.01, and with BIS-11 Impulsivity scores, rs = .58 and .39, respectively, ps<.01. Disinhibition and Substance Abuse scores each correlated in the expected directions with performance on the two decision tasks (i.e., negatively with delay discounting scores, for which lower values indicate more discounting, and positively with dynamic decision-making scores), but these rs were modest and nonsignificant. Substance Abuse scores, and to a lesser extent Impulsivity and Disinhibition scores, were negatively associated with the EBR index of tonic dopamine level, although these correlations were also nonsignificant. The EBR index showed negligible zero-order correlations with performance on both decision-making tasks.

Table 1.

Correlations between the ESI-BF Externalizing Factors, BIS Impulsivity, EBR, delay discounting, and selection of the Increasing optimal option on the dynamic decision-making task

| | Substance Abuse | Disinhibition | Impulsivity | EBR | Delay Discounting |
|---|---|---|---|---|---|
| Substance Abuse | | | | | |
| Disinhibition | 0.46** | | | | |
| Impulsivity | 0.39** | 0.58** | | | |
| EBR | −0.16 | −0.05 | −0.13 | | |
| Delay Discounting | −0.10 | −0.11 | −0.08 | 0.05 | |
| Dynamic Decision-Making | 0.11 | 0.18 | 0.10 | −0.02 | −0.18 |

Note. Impulsivity refers to scores on the BIS-11 Impulsivity Scale. Lower delay discounting scores indicate more discounting. ** p < .01.

Delay Discounting Task

A three-step hierarchical multiple regression analysis was conducted to examine the effects of Disinhibition, Substance Abuse, and striatal dopamine (as indexed by eyeblink rate) on delay discounting preferences. Table 2 shows the regression coefficients for every variable at each step of the model. In the first step, gender, age, and hours slept were entered as covariates. Omnibus prediction at this step was marginally significant, F(3, 88) = 2.42, p=.07. Gender was not a significant predictor at this step (p=.52), but hours slept was (β = .23, p=.03), indicating that more sleep was associated with less discounting of delayed rewards; age showed a marginally significant association, β = .17, p=.10. In the second step, Disinhibition score, Substance Abuse score, and striatal dopamine (as indexed by EBR) were entered to evaluate their independent predictive associations with delay discounting. The model as a whole was not significant at this step (p=.56), and none of these predictors showed an independent association with delay discounting preferences, ps>.30. In the third step, interaction terms for Striatal Dopamine X Disinhibition and Striatal Dopamine X Substance Abuse were entered. The addition of these terms accounted for a significant proportion of the variance in delay discounting, ΔR² = .06, F(8, 83) = 3.19, p<.05. At this step, the Striatal Dopamine X Disinhibition interaction (β = .29, p=.01) contributed significantly to prediction of delay discounting choices, whereas striatal dopamine (p=.91), Disinhibition (p=.18), Substance Abuse (p=.84), and the Striatal Dopamine X Substance Abuse interaction (p=.69) did not predict delay discounting preferences.¹
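The three predictor blocks can be assembled as follows. This sketch rests on several assumptions:

- the three continuous predictors are mean-centered before their products are formed, as stated for the simple-slopes analysis;
- gender is already coded numerically;
- the coding scheme and the function names are hypothetical.

Table 2 reports standardized coefficients, which would additionally require standardizing the variables before fitting.

```python
def center(xs):
    """Subtract the sample mean so that 0 corresponds to the average score."""
    m = sum(xs) / len(xs)
    return [x - m for x in xs]


def build_blocks(gender, age, sleep, ebr, disinhibition, substance_abuse):
    """Return the three predictor blocks in entry order (each block is a list of columns)."""
    ebr_c = center(ebr)
    dis_c = center(disinhibition)
    sa_c = center(substance_abuse)
    step1 = [gender, age, sleep]          # covariates
    step2 = [ebr_c, dis_c, sa_c]          # centered main effects
    step3 = [
        [d * x for d, x in zip(ebr_c, dis_c)],  # Dopamine X Disinhibition
        [d * s for d, s in zip(ebr_c, sa_c)],   # Dopamine X Substance Abuse
    ]
    return [step1, step2, step3]
```

Centering before multiplying means each main-effect coefficient at step 3 describes the slope at the average level of the other variable, rather than at a raw score of zero.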

Table 2.

Hierarchical Regression Coefficients for the Delay Discounting and Dynamic Decision-Making Tasks

| Predictor variable | Step 1 | Step 2 | Step 3 |
|---|---|---|---|
| Delay Discounting Task | | | |
| Gender | 0.07 | 0.12 | 0.19 |
| Age | 0.17† | 0.16 | 0.16 |
| Sleep Hours | 0.23* | 0.22* | 0.20† |
| Striatal Dopamine (EBR Index) | | 0.02 | 0.01 |
| Disinhibition | | −0.11 | −0.17 |
| Substance Abuse | | −0.07 | 0.03 |
| Dopamine X Disinhibition | | | 0.29* |
| Dopamine X Substance Abuse | | | −0.05 |
| Adjusted R² | 0.05 | 0.04 | 0.08 |
| Dynamic Decision-Making Task | | | |
| Gender | 0.46** | 0.46** | 0.51** |
| Age | 0.06 | 0.06 | 0.10 |
| Sleep Hours | 0.12 | 0.12 | 0.13 |
| Striatal Dopamine (EBR Index) | | 0.07 | 0.23† |
| Disinhibition | | 0.04 | 0.17 |
| Substance Abuse | | −0.03 | 0.07 |
| Dopamine X Disinhibition | | | 0.06 |
| Dopamine X Substance Abuse | | | 0.41** |
| Adjusted R² | 0.18 | 0.15 | 0.27 |

Note. Values are standardized regression coefficients (β). ** p < .01; * p < .05; † p < .10.

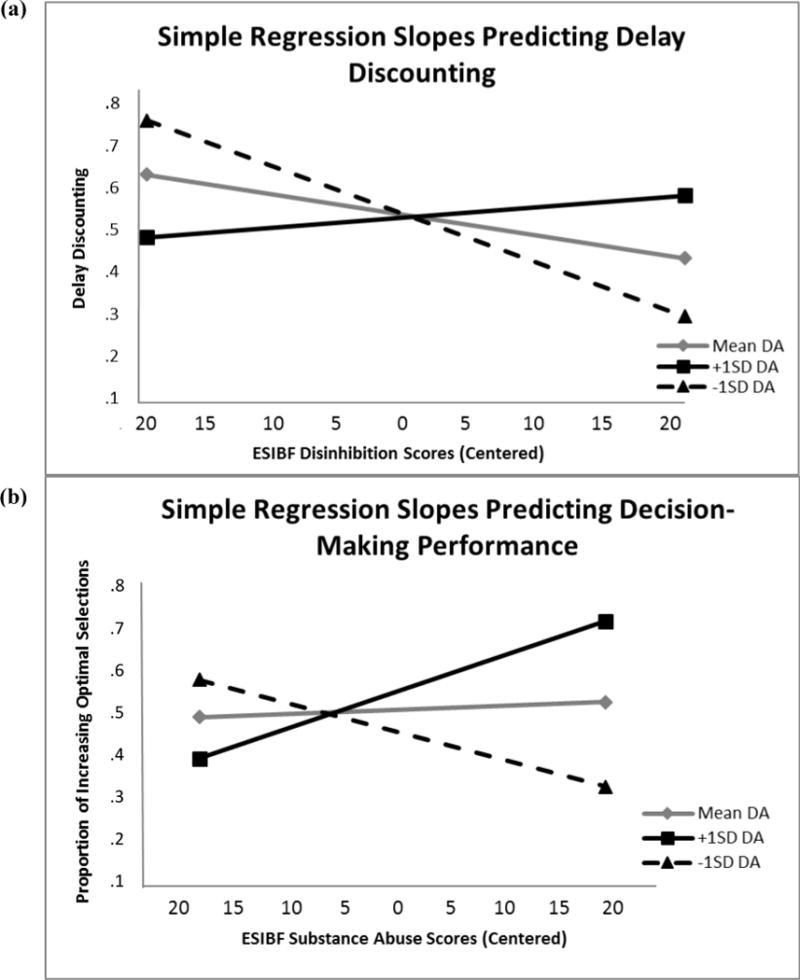

Figure 2a shows simple regression lines for the effect of Disinhibition score on delay discounting at the mean of striatal dopamine, one standard deviation above the mean, and one standard deviation below the mean. Striatal dopamine, Disinhibition, and Substance Abuse were mean-centered before the interaction terms were created. The simple slopes at the mean of striatal dopamine (β = −.17, p=.18) and at one standard deviation above the mean (β = .09, p=.54) were not significant, but the simple slope at one standard deviation below the mean significantly predicted delay discounting, β = −.43, p=.02: at low levels of striatal dopamine, individuals higher in disinhibitory tendencies discounted future rewards at a greater rate. This result suggests that the association between disinhibition and delay discounting varied as a function of tonic dopamine level as indexed by EBR, such that highly disinhibited individuals with low tonic dopamine showed the most aberrant delay discounting performance, and thus the strongest reward wanting preferences.
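With centered predictors, the simple slope of the outcome on X at moderator value Z is b_X + b_XZ·Z. Its standard error follows from the coefficient variances and their covariance. The sketch below, with hypothetical function names, evaluates the slope at −1 SD, the mean, and +1 SD of the moderator. The coefficient (co)variances are assumed to come from the fitted step-3 model.

```python
import math


def simple_slopes(b_x, b_xz, sd_z):
    """Slope of Y on X at the centered moderator's -1 SD, mean (0), and +1 SD."""
    return {
        "-1 SD": b_x - b_xz * sd_z,
        "mean": b_x,
        "+1 SD": b_x + b_xz * sd_z,
    }


def simple_slope_se(var_b_x, var_b_xz, cov_b_x_b_xz, z):
    """Standard error of the linear combination b_x + b_xz * z."""
    return math.sqrt(var_b_x + z * z * var_b_xz + 2.0 * z * cov_b_x_b_xz)
```

Because the moderator is centered, the slope at its mean equals the step-3 main-effect coefficient. This is why the simple slope at mean dopamine (β = −.17) matches the Step 3 Disinhibition coefficient in Table 2.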

Figure 2.

(a) Simple regression slopes for the effect of ESI-BF Disinhibition scores (centered at the mean) on delay discounting, evaluated at the mean of dopamine levels, one standard deviation above the mean, and one standard deviation below the mean. Delay discounting scores are reversed such that lower scores indicate more discounting of delayed rewards. (b) Simple regression slopes for the effect of ESI-BF Substance Abuse scores (centered at the mean) on dynamic decision-making performance, evaluated at the mean of dopamine levels, one standard deviation above the mean, and one standard deviation below the mean.

Dynamic Decision-Making Task

The same predictors used in the analysis of delay discounting were entered across three steps of a counterpart regression model for dynamic decision-making performance, operationalized as the average proportion of selections of the optimal Increasing option. Omnibus prediction at step 1, at which gender, age, and hours slept were entered, was significant, F(3,64) = 6.05, p<.01, with gender (β = .46, p<.01) but not age (p=.63) or hours slept (p=.30) emerging as a distinct predictor of dynamic decision-making performance. Consistent with previous research (Byrne & Worthy, 2015), males selected the optimal option more frequently than females. The increase in prediction at step 2, in which Disinhibition, Substance Abuse, and striatal dopamine were entered, was not significant (ΔR² = .01, F(6, 61) = 0.16, p=.92), and none of these variables accounted for unique variance in decision-making performance, all ps>.50. In the final step, interaction terms for Striatal Dopamine X Disinhibition and Striatal Dopamine X Substance Abuse were entered. Overall prediction increased significantly at this step (ΔR² = .13, F(8, 59) = 5.76, p<.01), with the Striatal Dopamine X Substance Abuse interaction (β = .41, p<.01) showing a unique predictive association. The effect of striatal dopamine on decision-making performance was marginally significant at this step (β = .23, p=.07), whereas Substance Abuse (p=.63), Disinhibition (p=.23), and the Striatal Dopamine X Disinhibition interaction (p=.59) contributed negligibly.² The regression coefficients for the variables at each step of the model are shown in Table 2.
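The outcome measure can be computed from a trial-by-trial choice log. This minimal sketch assumes choices are stored as option labels. The label for the non-optimal option ("Decreasing") and the function name are hypothetical placeholders, since this section names only the Increasing option.

```python
def proportion_optimal(choices, optimal="Increasing"):
    """Share of trials on which the optimal option was chosen."""
    if not choices:
        raise ValueError("no trials recorded")
    return sum(1 for c in choices if c == optimal) / len(choices)
```

With 100 trials, the performance goal given to participants corresponds to a proportion above 0.60.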

The significant Striatal Dopamine X Substance Abuse interaction suggests that striatal dopamine moderates the association between substance abuse and decision-making, with heightened striatal dopamine linked to enhanced performance among individuals reporting more substance abuse. Figure 2b depicts the simple regression lines for the association of Substance Abuse with decision-making performance at the mean of striatal dopamine, one standard deviation above the mean, and one standard deviation below the mean. As in the delay discounting analysis, predictor variables were centered before the interaction terms were created. The simple slope at the mean was not significant (β = .07, p=.63), but the simple slopes at one standard deviation above (β = .54) and below the mean (β = −.41) significantly predicted dynamic decision-making performance (ps = .02 and .04, respectively).
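The crossover pattern follows from the simple-slope expression. This short derivation was not reported in the original analysis. Write X for centered Substance Abuse and Z for the centered EBR index, and collect the remaining step-3 terms (covariates and the Disinhibition terms, none of which involve X) into the ellipsis:

$$
\hat{Y} = b_0 + b_X X + b_Z Z + b_{XZ}\,XZ + \dots
\qquad
\frac{\partial \hat{Y}}{\partial X} = b_X + b_{XZ}\,Z
\qquad
Z^{*} = -\frac{b_X}{b_{XZ}}
$$

The slope of decision-making performance on Substance Abuse changes sign at Z*. The slopes at −1 SD and +1 SD of Z, namely b_X ∓ b_XZ·SD_Z, therefore take opposite signs exactly when |b_X| < |b_XZ|·SD_Z.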

Discussion

We examined whether disinhibitory traits and substance use problems have differential effects on reward wanting and learning as a function of variation in striatal tonic dopamine levels. Our results provide evidence that baseline tonic dopamine levels moderate the effects of disinhibition and substance abuse on reward processing. We observed a crossover interaction between tonic dopamine and substance abuse. At higher tonic dopamine levels, substance abuse was associated with enhanced reward learning, resulting in better performance on the dynamic decision-making task. At lower tonic dopamine levels, an inverse relationship between substance use and reward learning was evident, reflecting comparatively poorer performance among individuals reporting higher levels of substance use. These results suggest that learning of long-term action-reward contingencies depends on tonic dopamine levels in substance abusers. One implication is that higher levels of tonic dopamine might facilitate reward learning in individuals with high levels of substance use. Alternatively, alcohol or drug users with high tonic dopamine levels may be strategically reward-oriented rather than impulsively driven by immediate desires. Notably, we observed no effect of disinhibition (i.e., general externalizing proneness) on this form of reward learning.

In the delay discounting task, disinhibitory tendencies were associated with stronger preferences for immediate reward only among individuals with lower tonic dopamine levels. At moderate and high levels of tonic dopamine, we observed no relationship between disinhibition and preferences for immediate versus delayed reward. We also observed no effect of substance abuse in this task. These findings indicate that the effect of general disinhibitory tendencies on reward processing is not uniform; rather, it differs depending on the phenotypic expression of the behavior and on baseline dopamine levels in the striatum. Elevated tonic dopamine appears to enhance learning of the long-term reward value of different options in individuals with more substance abuse problems. By contrast, low tonic dopamine, which may permit relatively stronger phasic dopamine signaling, appears to increase the immediate desire for rewards, or wanting, in individuals with higher disinhibitory traits. A potential implication of this result is that highly disinhibited individuals with low striatal tonic dopamine may comprise a maximum-liability group.

Although previous research has shown that a common heritable vulnerability, including variation in striatal dopaminergic genes, contributes to externalizing behaviors, results from the current study demonstrate that the specific manifestation of the behavior can differentially impact reward wanting and learning. As such, our findings support previous work showing that substance abuse is associated with enhanced associative learning of rewards, whereas disinhibition is associated with increased preference for immediate rewards (de Wit et al., 2007; Hogarth et al., 2013). However, we did not observe significant associations for either substance abuse or disinhibition with reward-based decision-making when tonic dopamine levels were not taken into account. Rather, our results uniquely demonstrate that substance abuse and disinhibition not only affect distinct decision-making processes, but also depend on variation in tonic dopamine levels.

Consistent with previous research showing that striatal dopamine supports the updating of associations between reward stimuli and their outcomes (Hazy, Frank, & O’Reilly, 2006; Maia & Frank, 2011), elevated tonic dopamine in the striatum was associated with better learning of each option’s long-term reward in individuals with more substance use problems. Although these high-tonic-dopamine substance users could represent “functional addicts,” it is also important to consider the downstream processes that follow reward learning in these individuals, such as disengagement from learned rewards. Better long-term reward learning led to enhanced decision-making performance in our task, but better associative learning of rewards is clearly not always advantageous. In particular, enhanced associative learning of the rewarding properties of drugs and other substances of abuse can lead recreational substance users down the path to addiction (Hogarth et al., 2013). Therefore, although elevated tonic dopamine was associated with enhanced reward learning on the current task for individuals reporting high levels of substance use, this proclivity is clearly harmful when the increased reward learning ends in addiction.

The finding that high disinhibition was associated with preference for immediate reward options is consistent with previous research (Dagher & Robbins, 2009; de Wit et al., 2007; McClure, Laibson, Loewenstein, & Cohen, 2004). However, the observation that this association was found only in individuals with low striatal tonic dopamine levels offers novel insight into the relationship between externalizing problems and tonic dopamine. The effects of disinhibition on reward wanting may be particularly strong in individuals with diminished striatal dopamine, whereas elevated tonic dopamine may attenuate reward-seeking tendencies in individuals with higher disinhibitory tendencies. Thus, the current results indicate that the effect of disinhibition on reward wanting depends on tonic dopamine levels in the striatum.

Implications and Future Directions

The results of this investigation have important implications for models of addiction and impulsivity. Disinhibition, or trait impulsivity, and substance abuse are often considered to have the same effect on reward processing, enhancing incentive salience and thus reward wanting. Our results, however, show clear support for dissociable effects of externalizing proneness on reward wanting and learning. Future research investigating the relationship between externalizing tendencies and reward dysfunction should consider the distinct effects that such tendencies can have on wanting and learning.

Although the current results demonstrate that dopamine moderates the effect of substance abuse on reward learning, our data did not support the hypothesis that substance abuse would be positively related to dopamine levels (as indexed by EBR). Indeed, contrary to prediction, a weak negative association between substance abuse and EBR was observed. Evidence that substance abuse is associated with increased tonic dopamine levels comes from research examining effects of frequent administration of amphetamine in both mice and humans (Grace, 1995; Strakowski & Sax, 1998; Strakowski et al., 1996). By contrast, other work showing that substance abuse is associated with diminished blink rates, and thus diminished tonic dopamine levels, employed a sample of recreational cocaine users who had used cocaine monthly for at least two years (Colzato et al., 2008).

Thus, one possible explanation for the discrepancy between our hypothesis and results concerns substance use frequency and duration. The research that found enhanced tonic dopamine levels involved high-frequency, short-duration drug administration, whereas the participants in Colzato et al.’s study were low-frequency, long-term drug users. Because our study did not assess substance use frequency or how long participants had been using drugs or alcohol, we cannot directly examine whether the frequency and duration of substance abuse alter the relationship between substance abuse and tonic dopamine levels. Alternatively, the specific type of drug used in excess could affect striatal tonic dopamine levels. We did not assess which drugs participants in our sample used, and non-stimulants, such as alcohol or cannabis, might exert different effects on tonic dopamine levels than stimulants. Future research should specifically test for moderating effects of substance type, frequency, and duration on tonic dopamine levels.

Because disinhibition and substance abuse frequently lead to impaired decision-making in the real world, it is important to examine how these findings apply to specific impairments that result from externalizing proclivities. Accelerated reinforcement learning of reward options may be beneficial in some situations, such as pursuing academic and career goals. When the reward is harmful, such as a drug, however, increased tonic dopamine may still promote learning of action-reward contingencies and lead to difficulty in reward disengagement (Dagher & Robbins, 2009). Because we did not examine the long-term consequences of reward learning or differences in task disengagement, more research is needed to examine the effects of these variables in substance abusers.

Limitations

While the tasks used in the present study effectively index reward wanting and learning behavior, one limitation is that they were designed to assess learning from rewards only. Elevated tonic dopamine levels have been shown to support reward learning, whereas diminished tonic dopamine levels favor avoidance, or punishment, learning (Frank, Seeberger, & O’Reilly, 2004). The distinction between reward and punishment learning is important for understanding the mechanisms through which tonic dopamine relates to disinhibition and substance abuse. However, the question of how disinhibition and substance abuse relate to punishment learning, such as learning from monetary losses, lies outside the scope of this investigation. Further work is needed to determine whether the effect of tonic dopamine on reward wanting and learning extends to decision-making tasks involving both gains and losses.

In considering the generalizability of the current results, it should be noted that the goal of this study was primarily to examine individual differences in externalizing tendencies in the general population, not to characterize individuals with severe clinical-level impulse control or substance use disorders. It is certainly conceivable that severe problems of these types are associated with different reward processing patterns than those observed in our college student sample. Furthermore, spontaneous eyeblink rate is an indirect marker of striatal tonic dopamine levels, and inferences should therefore be made with caution. Additional techniques, such as PET imaging, are needed to directly establish relationships between externalizing problems and altered striatal dopamine activity in reward processing contexts. Finally, while the current results provide evidence for associations between externalizing problems and aberrant reward processing, we do not claim that striatal tonic dopamine levels causally affect reward wanting or learning.

Conclusions

This study is the first to demonstrate that disinhibition and substance abuse exert different effects on reward processing, depending on variations in striatal tonic dopamine levels. Specifically, our results provide support for the hypothesis that these distinct components of externalizing behavior are differentially related to reward wanting and learning. We conclude that externalizing problems may reflect either an enhanced desire for rewards or augmented associative linking of reward stimuli to their outcomes. Although associative learning regarding reward values and reward predictors may initially be beneficial, it can lead to negative consequences, such as addiction, in predisposed individuals over time.

Acknowledgments

All authors developed the study concept and contributed to the study design. K.A.B. and D.A.W. programmed the experiments. K.A.B. oversaw data collection and data analysis. D.A.W. and C.J.P. provided suggestions on the data analysis. K.A.B. wrote the manuscript. D.A.W. and C.J.P. edited and provided feedback on revisions of the manuscript. All authors approved the final version. This manuscript was supported by NIA grant AG043425 to D.A.W.

Footnotes

¹ Disinhibition and Impulsivity scores were strongly associated. When BIS-11 Impulsivity scores were included in the model in place of ESI-BF Disinhibition scores, both BIS and the BIS X EBR interaction emerged as significant predictors of delay discounting (βs = .79 and 2.59, respectively, ps<.01). Thus, disinhibition and impulsivity can be viewed as related constructs (Beauchaine & McNulty, 2013; Yancey et al., 2013) that have very similar effects on delay discounting.

² When BIS-11 Impulsivity scores were entered into the model in place of ESI-BF Disinhibition scores, the results were similar; neither BIS (β = .38, p=.22) nor the BIS X Striatal Dopamine interaction (β = −.66, p=.51) was a significant predictor of performance on the dynamic decision-making task.

References

- Armstrong TD, Costello EJ. Community studies on adolescent substance use, abuse, or dependence and psychiatric comorbidity. Journal of Consulting and Clinical Psychology. 2002;70:1224–1239. doi: 10.1037//0022-006x.70.6.1224. [DOI] [PubMed] [Google Scholar]

- Barbato G, Ficca G, Beatrice M, Casiello M, Muscettola G, Rinaldi F. Effects of sleep deprivation on spontaneous eye blink rate and Alpha EEG power. Biological Psychiatry. 1995;38:340–341. doi: 10.1016/0006-3223(95)00098-2. [DOI] [PubMed] [Google Scholar]

- Barbato G, Ficca G, Muscettola G, Fichele M, Beatrice M, Rinaldi F. Diurnal variation in spontaneous eye-blink rate. Psychiatry Research. 2000;93:145–151. doi: 10.1016/s0165-1781(00)00108-6. [DOI] [PubMed] [Google Scholar]

- Baskin-Sommers AR, Foti D. Abnormal reward functioning across substance use disorders and major depressive disorder: Considering reward as a transdiagnostic mechanism. International Journal of Psychophysiology. 2015 doi: 10.1016/j.ijpsycho.2015.01.011. [DOI] [PubMed] [Google Scholar]

- Beauchaine TP, McNulty T. Comorbidities and continuities as ontogenic processes: toward a developmental spectrum model of externalizing psychopathology. Development and Psychopathology. 2013;25:1505–1528. doi: 10.1017/S0954579413000746. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bechara A. Risky business: emotion, decision-making, and addiction. Journal of Gambling Studies. 2003;19:23–51. doi: 10.1023/a:1021223113233. [DOI] [PubMed] [Google Scholar]

- Bechara A, Damasio H. Decision-making and addiction (part I): impaired activation of somatic states in substance dependent individuals when pondering decisions with negative future consequences. Neuropsychologia. 2002;40:1675–1689. doi: 10.1016/s0028-3932(02)00015-5. [DOI] [PubMed] [Google Scholar]

- Berridge KC, Robinson TE. What is the role of dopamine in reward: hedonic impact, reward learning, or incentive salience? Brain Research Reviews. 1998;28:309–369. doi: 10.1016/s0165-0173(98)00019-8. [DOI] [PubMed] [Google Scholar]

- Berridge KC, Robinson TE, Aldridge JW. Dissecting components of reward:‘liking’,‘wanting’, and learning. Current Opinion in Pharmacology. 2009;9:65–73. doi: 10.1016/j.coph.2008.12.014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bolla KI, Eldreth DA, London ED, Kiehl KA, Mouratidis M, Contoreggi C, Ernst M. Orbitofrontal cortex dysfunction in abstinent cocaine abusers performing a decision-making task. Neuroimage. 2003;19:1085–1094. doi: 10.1016/s1053-8119(03)00113-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buckholtz JW, Treadway MT, Cowan RL, Woodward ND, Benning SD, Li R, Zald DH. Mesolimbic dopamine reward system hypersensitivity in individuals with psychopathic traits. Nature Neuroscience. 2010;13:419–421. doi: 10.1038/nn.2510. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buckholtz JW, Treadway MT, Cowan RL, Woodward ND, Li R, Ansari MS, Zald DH. Dopaminergic network differences in human impulsivity. Science. 2010;329:532–532. doi: 10.1126/science.1185778. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Byrne KA, Silasi-Mansat CD, Worthy DA. Who chokes under pressure? The Big Five personality traits and decision-making under pressure. Personality and Individual Differences. 2015;74:22–28. doi: 10.1016/j.paid.2014.10.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Byrne KA, Worthy DA. Do narcissists make better decisions? An investigation of narcissism and dynamic decision-making performance. Personality and Individual Differences. 2013;55:112–117. [Google Scholar]

- Byrne KA, Worthy DA. Gender differences in reward sensitivity and information processing during decision-making. Journal of Risk and Uncertainty. 2015;50:55–71. [Google Scholar]

- Chermahini SA, Hommel B. The (b) link between creativity and dopamine: spontaneous eye blink rates predict and dissociate divergent and convergent thinking. Cognition. 2010;115:458–465. doi: 10.1016/j.cognition.2010.03.007. [DOI] [PubMed] [Google Scholar]

- Colzato LS, Slagter HA, van den Wildenberg WP, Hommel B. Closing one’s eyes to reality: Evidence for a dopaminergic basis of psychoticism from spontaneous eye blink rates. Personality and Individual Differences. 2009;46:377–380. [Google Scholar]

- Colzato LS, van den Wildenberg WP, Hommel B. Reduced spontaneous eye blink rates in recreational cocaine users: evidence for dopaminergic hypoactivity. PLoS One. 2008;3:e3461. doi: 10.1371/journal.pone.0003461. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Comings DE, Muhleman D, Ahn C, Gysin R, Flanagan SD. The dopamine D2 receptor gene: a genetic risk factor in substance abuse. Drug and Alcohol Dependence. 1994;34:175–180. doi: 10.1016/0376-8716(94)90154-6. [DOI] [PubMed] [Google Scholar]

- Cooper JA, Gorlick MA, Denny T, Worthy DA, Beevers CG, Maddox WT. Training attention improves decision making in individuals with elevated self-reported depressive symptoms. Cognitive, Affective, & Behavioral Neuroscience. 2014;14:729–741. doi: 10.3758/s13415-013-0220-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dagher A, Robbins TW. Personality, addiction, dopamine: insights from Parkinson’s disease. Neuron. 2009;61:502–510. doi: 10.1016/j.neuron.2009.01.031. [DOI] [PubMed] [Google Scholar]

- Daw ND. Doctoral dissertation. University College London; 2003. Reinforcement learning models of the dopamine system and their behavioral implications. [Google Scholar]

- De Jong PJ, Merckelbach H. Eyeblink frequency, rehearsal activity, and sympathetic arousal. International Journal of Neuroscience. 1990;51:89–94. doi: 10.3109/00207459009000513. [DOI] [PubMed] [Google Scholar]

- Derringer J, Krueger RF, Dick DM, Saccone S, Grucza RA, Agrawal A, Bierut LJ. Predicting sensation seeking from dopamine genes a candidate-system approach. Psychological Science. 2010;21:1282–1290. doi: 10.1177/0956797610380699. [DOI] [PMC free article] [PubMed] [Google Scholar]

- de Wit H, Flory JD, Acheson A, McCloskey M, Manuck SB. IQ and nonplanning impulsivity are independently associated with delay discounting in middle-aged adults. Personality and Individual Differences. 2007;42:111–121. [Google Scholar]

- Ernst M, Grant SJ, London ED, Contoreggi CS, Kimes AS, Spurgeon L. Decision making in adolescents with behavior disorders and adults with substance abuse. American Journal of Psychiatry. 2003;160:33–40. doi: 10.1176/appi.ajp.160.1.33. [DOI] [PubMed] [Google Scholar]

- Everitt BJ, Robbins TW. Neural systems of reinforcement for drug addiction: from actions to habits to compulsion. Nature Neuroscience. 2005;8:1481–1489. doi: 10.1038/nn1579. [DOI] [PubMed] [Google Scholar]

- Fairclough SH, Venables L. Prediction of subjective states from psychophysiology: A multivariate approach. Biological Psychology. 2006;71:100–110. doi: 10.1016/j.biopsycho.2005.03.007. [DOI] [PubMed] [Google Scholar]

- Finn PR, Rickert ME, Miller MA, Lucas J, Bogg T, Bobova L, Cantrell H. Reduced cognitive ability in alcohol dependence: examining the role of covarying externalizing psychopathology. Journal of Abnormal Psychology. 2009;118:100–116. doi: 10.1037/a0014656.

- Flagel SB, Clark JJ, Robinson TE, Mayo L, Czuj A, Willuhn I, Akil H. A selective role for dopamine in stimulus-reward learning. Nature. 2011;469:53–57. doi: 10.1038/nature09588.

- Forbes EE, Brown SM, Kimak M, Ferrell RE, Manuck SB, Hariri AR. Genetic variation in components of dopamine neurotransmission impacts ventral striatal reactivity associated with impulsivity. Molecular Psychiatry. 2009;14:60–70. doi: 10.1038/sj.mp.4002086.

- Frank MJ, Seeberger LC, O’Reilly RC. By carrot or by stick: cognitive reinforcement learning in parkinsonism. Science. 2004;306:1940–1943. doi: 10.1126/science.1102941.

- Glimcher PW. Understanding dopamine and reinforcement learning: the dopamine reward prediction error hypothesis. Proceedings of the National Academy of Sciences. 2011;108:15647–15654. doi: 10.1073/pnas.1014269108.

- Grace AA. The tonic/phasic model of dopamine system regulation: its relevance for understanding how stimulant abuse can alter basal ganglia function. Drug and Alcohol Dependence. 1995;37:111–129. doi: 10.1016/0376-8716(94)01066-t.

- Grace AA. The tonic/phasic model of dopamine system regulation and its implications for understanding alcohol and psychostimulant craving. Addiction. 2000;95:119–128. doi: 10.1080/09652140050111690.

- Hazy TE, Frank MJ, O’Reilly RC. Banishing the homunculus: making working memory work. Neuroscience. 2006;139:105–118. doi: 10.1016/j.neuroscience.2005.04.067.

- Hogarth L, Balleine BW, Corbit LH, Killcross S. Associative learning mechanisms underpinning the transition from recreational drug use to addiction. Annals of the New York Academy of Sciences. 2013;1282:12–24. doi: 10.1111/j.1749-6632.2012.06768.x.

- Hollerman JR, Schultz W. Dopamine neurons report an error in the temporal prediction of reward during learning. Nature Neuroscience. 1998;1:304–309. doi: 10.1038/1124.

- Huang Z, Stanford MS, Barratt ES. Blink rate related to impulsiveness and task demands during performance of event-related potential tasks. Personality and Individual Differences. 1994;16:645–648.

- Ikemoto S. Dopamine reward circuitry: two projection systems from the ventral midbrain to the nucleus accumbens–olfactory tubercle complex. Brain Research Reviews. 2007;56:27–78. doi: 10.1016/j.brainresrev.2007.05.004.

- Insel TR, Fenton WS. Psychiatric epidemiology: it’s not just about counting anymore. Archives of General Psychiatry. 2005;62:590–592. doi: 10.1001/archpsyc.62.6.590.

- Karson CN. Spontaneous eye-blink rates and dopaminergic systems. Brain. 1983;106:643–653. doi: 10.1093/brain/106.3.643.

- Kessler RC, McGonagle KA, Zhao S, Nelson CB, Hughes M, Eshleman S, Kendler KS. Lifetime and 12-month prevalence of DSM-III-R psychiatric disorders in the United States: results from the National Comorbidity Survey. Archives of General Psychiatry. 1994;51:8–19. doi: 10.1001/archpsyc.1994.03950010008002.

- Kirby KN, Petry NM, Bickel WK. Heroin addicts have higher discount rates for delayed rewards than non-drug-using controls. Journal of Experimental Psychology: General. 1999;128:78–87. doi: 10.1037//0096-3445.128.1.78.

- Krebs MO, Sautel F, Bourdel MC, Sokoloff P, Schwartz JC, Olie JP, Poirier MF. Dopamine D3 receptor gene variants and substance abuse in schizophrenia. Molecular Psychiatry. 1998;3:337–341. doi: 10.1038/sj.mp.4000411.

- Kreek MJ, Nielsen DA, Butelman ER, LaForge KS. Genetic influences on impulsivity, risk taking, stress responsivity and vulnerability to drug abuse and addiction. Nature Neuroscience. 2005;8:1450–1457. doi: 10.1038/nn1583.

- Krueger RF. The structure of common mental disorders. Archives of General Psychiatry. 1999;56:921–926. doi: 10.1001/archpsyc.56.10.921.

- Krueger RF, Hicks BM, Patrick CJ, Carlson SR, Iacono WG, McGue M. Etiologic connections among substance dependence, antisocial behavior and personality: Modeling the externalizing spectrum. Journal of Abnormal Psychology. 2002;111:411–424.

- Krueger RF, Markon KE. Reinterpreting comorbidity: A model-based approach to understanding and classifying psychopathology. Annual Review of Clinical Psychology. 2006;2:111–133. doi: 10.1146/annurev.clinpsy.2.022305.095213.

- Krueger RF, Markon KE, Patrick CJ, Benning SD, Kramer MD. Linking antisocial behavior, substance use, and personality: An integrative quantitative model of the adult externalizing spectrum. Journal of Abnormal Psychology. 2007;116:645–666. doi: 10.1037/0021-843X.116.4.645.

- Krueger RF, McGue M, Iacono WG. The higher-order structure of common DSM mental disorders: Internalization, externalization, and their connections to personality. Personality and Individual Differences. 2001;30:1245–1259.

- Ladas A, Frantzidis C, Bamidis P, Vivas AB. Eye blink rate as a biological marker of mild cognitive impairment. International Journal of Psychophysiology. 2013;93:12–16. doi: 10.1016/j.ijpsycho.2013.07.010.

- Ljungberg T, Apicella P, Schultz W. Responses of monkey dopaminergic neurons during learning of behavioral reactions. Journal of Neurophysiology. 1992;67:145–163. doi: 10.1152/jn.1992.67.1.145.

- Lusher JM, Chandler C, Ball D. Dopamine D4 receptor gene (DRD4) is associated with Novelty Seeking (NS) and substance abuse: the saga continues. Molecular Psychiatry. 2001;6:497–499. doi: 10.1038/sj.mp.4000918.

- Ma N, Liu Y, Li N, Wang CX, Zhang H, Zhang DR. Addiction related alteration in resting-state brain connectivity. NeuroImage. 2010;49:738–744. doi: 10.1016/j.neuroimage.2009.08.037.

- Madden GJ, Petry NM, Badger GJ, Bickel WK. Impulsive and self-control choices in opioid-dependent patients and non-drug-using control patients: Drug and monetary rewards. Experimental and Clinical Psychopharmacology. 1997;5:256–262. doi: 10.1037//1064-1297.5.3.256.

- Maia TV, Frank MJ. From reinforcement learning models to psychiatric and neurological disorders. Nature Neuroscience. 2011;14:154–162. doi: 10.1038/nn.2723.

- McClure SM, Laibson DI, Loewenstein G, Cohen JD. Separate neural systems value immediate and delayed monetary rewards. Science. 2004;306:503–507. doi: 10.1126/science.1100907.

- Mitchell SH. Measures of impulsivity in cigarette smokers and non-smokers. Psychopharmacology. 1999;146:455–464. doi: 10.1007/pl00005491.

- Motzkin JC, Baskin-Sommers A, Newman JP, Kiehl KA, Koenigs M. Neural correlates of substance abuse: Reduced functional connectivity between areas underlying reward and cognitive control. Human Brain Mapping. 2014;35:4282–4292. doi: 10.1002/hbm.22474.

- Myerson J, Green L, Warusawitharana M. Area under the curve as a measure of discounting. Journal of the Experimental Analysis of Behavior. 2001;76:235–243. doi: 10.1901/jeab.2001.76-235.

- Niv Y, Daw ND, Joel D, Dayan P. Tonic dopamine: opportunity costs and the control of response vigor. Psychopharmacology. 2007;191:507–520. doi: 10.1007/s00213-006-0502-4.

- Otto AR, Love BC. You don’t want to know what you’re missing: When information about forgone rewards impedes dynamic decision-making. Judgment and Decision Making. 2010;5:1–10.

- Patrick CJ, Drislane LE. Triarchic model of psychopathy: Origins, operationalizations, and observed linkages with personality and general psychopathology. Journal of Personality. In press. doi: 10.1111/jopy.12119.

- Patrick CJ, Kramer MD, Krueger RF, Markon KE. Optimizing efficiency of psychopathology assessment through quantitative modeling: development of a brief form of the Externalizing Spectrum Inventory. Psychological Assessment. 2013;25:1332–1348. doi: 10.1037/a0034864.

- Patton JH, Stanford MS, Barratt ES. Factor structure of the Barratt impulsiveness scale. Journal of Clinical Psychology. 1995;51:768–774. doi: 10.1002/1097-4679(199511)51:6<768::aid-jclp2270510607>3.0.co;2-1.

- Pessiglione M, Seymour B, Flandin G, Dolan RJ, Frith CD. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442:1042–1045. doi: 10.1038/nature05051.

- Reynolds B, Richards JB, Dassinger M, de Wit H. Therapeutic doses of diazepam do not alter impulsive behavior in humans. Pharmacology Biochemistry and Behavior. 2004;79:17–24. doi: 10.1016/j.pbb.2004.06.011.

- Richards JB, Zhang L, Mitchell SH, de Wit H. Delay or probability discounting in a model of impulsive behavior: effect of alcohol. Journal of the Experimental Analysis of Behavior. 1999;71:121–143. doi: 10.1901/jeab.1999.71-121.

- Robbins TW, Roberts AC. Differential regulation of fronto-executive function by the monoamines and acetylcholine. Cerebral Cortex. 2007;17:i151–i160. doi: 10.1093/cercor/bhm066.

- Robinson TE, Berridge KC. The neural basis of drug craving: an incentive-sensitization theory of addiction. Brain Research Reviews. 1993;18:247–291. doi: 10.1016/0165-0173(93)90013-p.

- Robinson TE, Berridge KC. The psychology and neurobiology of addiction: an incentive–sensitization view. Addiction. 2000;95:91–117. doi: 10.1080/09652140050111681.

- Schultz W. Predictive reward signal of dopamine neurons. Journal of Neurophysiology. 1998;80:1–27. doi: 10.1152/jn.1998.80.1.1.

- Sokoloff P, Giros B, Martres MP, Bouthenet ML, Schwartz JC. Molecular cloning and characterization of a novel dopamine receptor (D3) as a target for neuroleptics. Nature. 1990;347:146–151. doi: 10.1038/347146a0.

- Strakowski SM, Sax KW. Progressive behavioral response to repeated d-amphetamine challenge: further evidence for sensitization in humans. Biological Psychiatry. 1998;44:1171–1177. doi: 10.1016/s0006-3223(97)00454-x.

- Strakowski SM, Sax KW, Setters MJ, Keck PE. Enhanced response to repeated d-amphetamine challenge: evidence for behavioral sensitization in humans. Biological Psychiatry. 1996;40:872–880. doi: 10.1016/0006-3223(95)00497-1.

- Taylor JR, Elsworth JD, Lawrence MS, Sladek JR Jr, Roth RH, Redmond DE Jr. Spontaneous blink rates correlate with dopamine levels in the caudate nucleus of MPTP-treated monkeys. Experimental Neurology. 1999;158:214–220. doi: 10.1006/exnr.1999.7093.

- Venables NC, Patrick CJ. Validity of the Externalizing Spectrum Inventory in a criminal offender sample: Relations with disinhibitory psychopathology, personality, and psychopathic features. Psychological Assessment. 2012;24:88. doi: 10.1037/a0024703.

- Vuchinich RE, Simpson CA. Hyperbolic temporal discounting in social drinkers and problem drinkers. Experimental and Clinical Psychopharmacology. 1998;6:292–305. doi: 10.1037//1064-1297.6.3.292.

- Waelti P, Dickinson A, Schultz W. Dopamine responses comply with basic assumptions of formal learning theory. Nature. 2001;412:43–48. doi: 10.1038/35083500.

- Waldman ID, Slutske WS. Antisocial behavior and alcoholism: A behavioral genetic perspective on comorbidity. Clinical Psychology Review. 2000;20:255–287. doi: 10.1016/s0272-7358(99)00029-x.

- Wang PS, Lane M, Olfson M, Pincus HA, Wells KB, Kessler RC. Twelve-month use of mental health services in the United States: results from the National Comorbidity Survey Replication. Archives of General Psychiatry. 2005;62:629–640. doi: 10.1001/archpsyc.62.6.629.

- Wise RA. Dopamine, learning and motivation. Nature Reviews Neuroscience. 2004;5:483–494. doi: 10.1038/nrn1406.

- Worthy DA, Byrne KA, Fields S. Effects of emotion on prospection during decision-making. Frontiers in Psychology. 2014;5:591. doi: 10.3389/fpsyg.2014.00591.

- Worthy DA, Cooper JA, Byrne KA, Gorlick MA, Maddox WT. State-based versus reward-based motivation in younger and older adults. Cognitive, Affective, & Behavioral Neuroscience. 2014;14:1208–1220. doi: 10.3758/s13415-014-0293-8.

- Worthy DA, Gorlick MA, Pacheco JL, Schnyer DM, Maddox WT. With age comes wisdom: Decision-making in younger and older adults. Psychological Science. 2011;22:1375–1380. doi: 10.1177/0956797611420301.

- Worthy DA, Otto AR, Maddox WT. Working-memory load and temporal myopia in dynamic decision making. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2012;38:1640–1658. doi: 10.1037/a0028146.