Abstract

We derive an algorithm that directly solves logistic regression under a group cardinality (group sparsity) constraint and use it to distinguish intra-subject MRI sequences (e.g., cine MRIs) of healthy subjects from those of diseased subjects. Group cardinality constraint models are often applied to medical images in order to avoid overfitting of the classifier to the training data. Solutions within these models are generally determined by relaxing the cardinality constraint to a weighted feature selection scheme. However, these solutions relate to the original sparse problem only under specific assumptions, which generally do not hold for medical image applications. In addition, inferring clinical meaning from features weighted by a classifier is an ongoing topic of discussion. To avoid weighing features, we propose to directly solve the group cardinality constraint logistic regression problem by generalizing the Penalty Decomposition method. To do so, we assume that an intra-subject series of images represents repeated samples of the same disease patterns. We model this assumption by combining the series of measurements created by a feature across time into a single group. Our algorithm then derives a solution within that model by decoupling the minimization of the logistic regression function from enforcing the group sparsity constraint. The minimum of the smooth and convex logistic regression problem is determined via gradient descent, while we derive a closed form solution for finding a sparse approximation of that minimum. We apply our method to cine MRI of 38 healthy controls and 44 adult patients who received reconstructive surgery of Tetralogy of Fallot (TOF) during infancy. Our method correctly identifies regions impacted by TOF and generally obtains statistically significantly higher classification accuracy than alternative solutions to this model, i.e., ones relaxing group cardinality constraints.

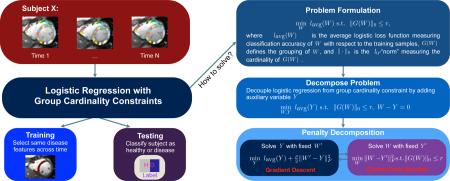

Graphical abstract

1. Introduction

An important topic in medical image analysis is to identify image phenotypes by automatically classifying time series of 3D Magnetic Resonance Images (MRIs). For example, intra-subject MRI sequences are used to analyze cardiac motion (Osman et al., 1999; Sermesant et al., 2003; Chandrashekara et al., 2004; Huang et al., 2005; Besbes et al., 2007; Sundar et al., 2009; Zhang et al., 2010a; Margeta et al., 2012; Wang et al., 2012; Yu et al., 2014), and brain development (Chetelat et al., 2005; Zhang et al., 2010b; Aljabar et al., 2011; Serag et al., 2012; Toews et al., 2012; Bernal-Rusiel et al., 2013; Schellen et al., 2015). However, the automatic classification of medical images is generally challenging. First, the number of features extracted from medical images is usually much larger than the number of samples. This generally results in overfitting of the method to the data, i.e., much higher classification accuracy during training than on test data (Ryali et al., 2010; Marques et al., 2012; Deshpande et al., 2014). In addition, the image phenotypes identified by automatic classifiers are often difficult to relate to the medical literature (Qu et al., 2003). In this article, we propose an algorithm that addresses both issues by directly solving the so called logistic regression problem with group sparsity constraints.

Classifiers based on sparse models reduce the dense image data to a small number of features by counting the number of selected features via the l0-“norm” and are configured so that the count is below a predefined threshold (Yamashita et al., 2008; Carroll et al., 2009; Rao et al., 2011; Liu et al., 2012; Lv et al., 2015). A generalization of that concept are group sparsity models, which first group image features based on predefined rules and then count the number of non-zero groupings (Ng et al., 2010; Wu et al., 2010; Ryali et al., 2010). To solve the underlying minimization problem, however, these methods relax the feature selection process from (group) cardinality constraints to weighting feature by, for example, replacing the l0-“norm” with the l2-norm (Meier et al., 2008; Friedman et al., 2010; Ryali et al., 2010; Li et al., 2012). The solution of those methods relates to the original sparse problem only under specific assumptions, e.g., the data entry matrix needs to satisfy the restricted isometry property in compressed sensing problem (Candes and Tao, 2005; Candès et al., 2006). However, matrices generally do not satisfy this property, such as those of the appendix of (Lu and Zhang, 2013), and most data matrices of medical image applications, e.g., matrices defined by the regional volume scores of subjects. Thus, with the exception of sparse models applied to compressed sensing, the solution obtained with respect to the relaxed norm generally does not recover the one of the original sparse model defined by the l0-“norm”. In addition, the number of measures selected by the classifier depends now on the training data due to the soft selection scheme. One can select a predefined number by choosing measures whose weight is above a certain threshold. 
However, in the case of sparse logistic regression the corresponding classifier depends on the measures below the threshold, and the relevance of those weights with respect to the disease under study is an ongoing topic of discussion (Haufe et al., 2014; Sabuncu, 2014). Alternatively, the upper bound associated with the sparse constraint is set so that the classifier returns the wanted number of measures for a given training data set (Vounou et al., 2012; Zhang and Shen, 2012; Ma and Huang, 2008; Zhang et al., 2012). The tuning is now data dependent, i.e., each training set is generally associated with a different upper bound so that the selected number of scores is constant across training sets. Even comparing the patterns of different subsets of the same data set, i.e., folds, is non-trivial as each pattern is the solution to a minimization problem whose sparsity constraint is unique to a fold. Avoiding soft feature selection and thus these issues, our algorithm solves the original group sparsity constrained logistic classification problem defined by the l0-“norm” by extending the Penalty Decomposition (PD) method (Lu and Zhang, 2013). By doing so, our method uses a single model to not only classify samples but also directly select patterns (without thresholding or changing upper bounds) that potentially are image phenotypes meaningful to the medical community.

To further investigate its potential, we now generalize PD from solving sparse logistic regression problems with group size one to more than one. Specifically, we assume that an intra-subject series of images represents repeated samples of the same disease patterns. In other words, selecting an image feature for disease identification needs to account for the entire series of measurements created by that feature across time. We model this assumption by combining each “feature series” into a single group. The proposed PD algorithm then derives a solution within that model by decoupling the minimization of the logistic regression function from enforcing the group sparsity constraint. Applying Block Coordinate Descent (BCD), the minimum to the smooth and convex logistic regression problem is determined via gradient descent while we derive a closed form solution for finding a sparse approximation of that minimum.

We apply our method to cine MRI of 38 healthy adults and 44 adult patients, that received reconstructive surgery of Tetralogy of Fallot (TOF) during infancy. The data sets fulfill the assumption of the group sparsity model as the residual effects of TOF mostly impact the shape of the right ventricle (Atrey et al., 2010; Bailliard and Anderson, 2009) so that the regions impacted by TOF should not change across the time series captured by a cine MRI. During training, we automatically set all important parameters of our approach by first training a separate regressor for each setting of the parameter space. We then reduce the risk of over-fitting by combining those classifiers into a single ensemble of classifiers (Rokach, 2010). This ensemble of classifiers correctly favors subregions of the ventricles most likely impacted by TOF. For most experiments, it also produces statistically significant higher accuracy scores than ensemble of classifiers that relax the group cardinality constraint.

We first proposed to generalize PD to group sparsity constraints at MICCAI 2015 (Zhang and Pohl, 2015). This article provides a more in-depth view of this idea. Specifically, we expand PD to guarantee convergence of the sparse approximation to a local minimum of the group-sparsity confined, logistic regression problem, which is the primary contribution of this manuscript. We also modify the experiments by replacing the morphometric encodings of heart regions based on the average of the Jacobian determinants with simple volumetric scores. This simplifies preprocessing as alignment of each cine MRI to a template is unnecessary. It also reduces the size of the parameter search space, which now omits the smoothing parameters associated with the alignment process. Moreover, we not only record a single accuracy score for each implementation but instead generate distributions of scores by modifying the number of training samples. For each training size, we apply the method to 10 different training and testing sets. Finally, we distinguish the ventricular septum from the left ventricle to refine our findings from the previous publication (Zhang and Pohl, 2015) and support those findings with new plots that visualize the selection of regions across the entire heart.

Beyond our MICCAI publication, a possible alternative regression approach for simultaneous classification and pattern extraction is the random forest method (Lempitsky et al., 2009). However, it is unclear how to expand this technology to group-wise selection schemes that enforce temporal consistency in selecting regions, i.e., the same regions are picked across all time points. Due to these difficulties, most machine learning approaches applied to cine MRI focus only on disease classification, such as (McLeod et al., 2013; Afshin et al., 2014; Bai et al., 2015). They often improve results by manually selecting regions thought to be impacted by the disease before performing classification (Wald et al., 2009). Exceptions are (Qian et al., 2011; Ye et al., 2014; Bhatia et al., 2014), which separately perform disease classification and weigh individual regions possibly impacted by disease. The disconnect between the two steps and the weighing of individual regions make clinical interpretation of the findings more difficult as, in addition to the earlier mentioned issues associated with the interpretation of weights, they increase the risk of false positive findings compared to directly identifying patterns of regions. Our experimental results echo these issues, as logistic regression with relaxed sparsity constraints was generally significantly less accurate than our proposed solution to the original sparsity constraint. We conclude that our proposed approach is the first to solve a single optimization problem for simultaneous disease classification and group-based pattern identification based on segmentation of cine MRIs.

The rest of this paper is organized as follows. Section 2 provides an in-depth description of the PD algorithm and its convergence properties. Section 3 summarizes the experiments on the TOF dataset and Section 4 concludes the paper with final remarks.

2. Solving Sparse Group Logistic Regression

We first describe the logistic regression model with group cardinality constraint, which accurately assigns subjects to cohorts based on features extracted from intra-subject image sequences. We then generalize the PD approach to find a solution within that model. We end the section deriving convergence properties of the resulting algorithm.

2.1. The Model

The input to our model are N subjects, their diagnosis {b1,...,bN} and features {Z1,..., ZN} extracted from 3D+t medical images with T time points. The diagnosis bs ∈ {−1, +1} is +1 if subject ‘s’ is healthy and −1 otherwise. The feature matrix $Z_s := [z_s^1 \; z_s^2 \; \cdots \; z_s^T] \in \mathbb{R}^{M \times T}$ of subject s is composed of vectors $z_s^t \in \mathbb{R}^M$ encoding the tth time point through M features. Our goal is now to accurately model the relationship between the labels {b1,...,bN} and the features {Z1,...,ZN}.

Given the large number of features and the relatively small number of samples, we make the model tractable by assuming that the disease is best characterized by the same ‘r’ features (subject to (s.t.) r ≤ M) at each time point, which means the same ‘r’ rows of each feature matrix Zs. One way of modeling this relationship is via group-sparsity constraint solutions to a logistic regression problem. Weighing features according to their importance in separating the healthy from the disease group, the problem of logistic regression is to find the configuration that most accurately infers the diagnosis of each sample. Sparsity constraints simply confine the search space to those configurations that only select a subset of features, i.e, the weight of non-selected features is zero. Our model aims to identify ‘r’ rows of a feature matrix, which group-sparsity constraint does by first defining the features of a row as a group before enforcing the sparsity constraint on those groupings. In other words, if the model chooses a feature in one time point, the corresponding features in other time points should also be chosen since the importance of a feature should be similar across time.

To formally define this model, we now introduce

the diagnosis-weighted feature matrix As := bs · Zs for s = 1,...,N,

the weight matrix $W \in \mathbb{R}^{M \times T}$ defining the importance of each feature in correctly classifying subjects,

$W^i \in \mathbb{R}^T$ being the ith row of matrix W,

the trace of a matrix Tr(·),

the logistic function θ(y) := log(1 + exp(−y)), and

the average logistic loss function with respect to the label weight $v \in \mathbb{R}$

| $l_{avg}(v, W) := \frac{1}{N} \sum_{s=1}^{N} \theta\left(\mathrm{Tr}(W^\top A_s) + v \cdot b_s\right)$ | (1) |
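As an illustration of the loss above, the following minimal Python sketch evaluates the average logistic loss for matrices stored as nested lists. It assumes that Eq. (1) has the form $l_{avg}(v, W) = \frac{1}{N}\sum_s \theta(\mathrm{Tr}(W^\top A_s) + v \cdot b_s)$; the function names `theta` and `avg_logistic_loss` are hypothetical and not taken from the published implementation.

```python
import math

def theta(y):
    """Logistic function theta(y) = log(1 + exp(-y)), evaluated without overflow."""
    if y >= 0:
        return math.log1p(math.exp(-y))
    return -y + math.log1p(math.exp(y))

def avg_logistic_loss(v, W, Z, b):
    """Average logistic loss over N subjects (assumed form of Eq. (1)).

    W: M x T weight matrix (list of rows)
    Z: list of N feature matrices, each M x T
    b: list of N labels in {-1, +1}
    """
    total = 0.0
    for Z_s, b_s in zip(Z, b):
        # Tr(W^T A_s) with A_s = b_s * Z_s equals the sum of element-wise products.
        trace = sum(w * b_s * z
                    for W_row, Z_row in zip(W, Z_s)
                    for w, z in zip(W_row, Z_row))
        total += theta(trace + v * b_s)
    return total / len(Z)
```

With all weights set to zero, every subject contributes θ(0) = log 2, which is a convenient sanity check for an implementation.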

The logistic regression problem with group sparsity constraint is then defined as

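| $(v^*, W^*) := \arg\min_{v \in \mathbb{R},\, W \in \mathbb{R}^{M \times T}} l_{avg}(v, W) \quad \text{s.t.} \quad \lVert \vec{W} \rVert_0 \le r$ | (2) |

where $\vec{W} := (\lVert W^1 \rVert_2, \ldots, \lVert W^M \rVert_2)^\top$ groups the weight vectors over time by computing the l2-norm of the rows. Thus, $\lVert \vec{W} \rVert_0$ equals the number of nonzero components of $\vec{W}$, i.e., the non-zero rows of W. The sparsity constraint search space is then formally defined as

$\chi := \{ W \in \mathbb{R}^{M \times T} : \lVert \vec{W} \rVert_0 \le r \}$

so that Eq. (2) shortens to

| $(v^*, W^*) := \arg\min_{v \in \mathbb{R},\, W \in \chi} l_{avg}(v, W)$ | (3) |

Note that in the case of T = 1, or when replacing $\lVert \vec{W} \rVert_0$ with $\lVert W \rVert_0$, Eq. (3) changes to the more common sparse logistic regression problem, which, in contrast, chooses individual features of W ignoring any temporal dependency. While the accuracy of this model might be similar to the proposed group-sparsity model, the selected features have limited meaning for diseases such as the residual effects of TOF. TOF impacts the morphometry and thus leads to changes in local shape patterns that are consistent across the cardiac cycle.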
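The difference between counting non-zero rows and counting non-zero entries can be made concrete with a small Python sketch. It assumes the group cardinality is the number of rows of W with non-zero l2-norm, as described above; the helper names `row_norms`, `group_cardinality`, and `in_chi` are hypothetical.

```python
import math

def row_norms(W):
    """l2-norm of every row of W, i.e., one value per feature across all time points."""
    return [math.sqrt(sum(w * w for w in row)) for row in W]

def group_cardinality(W):
    """Number of rows of W with non-zero l2-norm (group-wise l0 'norm')."""
    return sum(1 for n in row_norms(W) if n > 0.0)

def in_chi(W, r):
    """True if W satisfies the group sparsity constraint with at most r non-zero rows."""
    return group_cardinality(W) <= r

# Four features (rows) observed at three time points (columns).
W = [[0.0, 0.0, 0.0],
     [3.0, 4.0, 0.0],
     [0.0, 0.0, 0.0],
     [0.0, 0.0, 0.001]]
```

In this example, W has three non-zero entries but only two non-zero rows, so it lies in the search space for r = 2 even though an entry-wise count would report three selected values.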

2.2. Approximating the Solution to the Group Sparsity Constraint, Minimization Problem

PD of (Lu and Zhang, 2013) estimates the sparse solution (T = 1) to logistic regression problems by decoupling the minimization of the logistic regression lavg(·,·) from finding a solution within the sparsity constraint space χ. It does so by defining a penalty function consisting of two components: (1) the logistic regression lavg(·,·) dependent on v and an auxiliary variable Y, and (2) a similarity measure S(Y,W) between the non-sparse solution Y and the approximated sparse solution W. In other words, the penalty function is defined as

$q_\varrho(v, Y, W) := l_{avg}(v, Y) + \frac{\varrho}{2} \cdot S(Y, W)$

where the penalty parameter ϱ > 0 weighs the importance of S(·,·). At each iteration, PD increases ϱ and then determines the triple $(v_\varrho, Y_\varrho, W_\varrho)$ minimizing qϱ(·,·,·). Thus, as ϱ increases, the difference reduces between $Y_\varrho$, the solution of the regularized logistic problem, and its sparse approximation $W_\varrho$. Once the algorithm converges, $W_\varrho$ is the approximated solution of the original sparse problem. In the remainder of this subsection, we generalize PD to approximate the group sparsity constraint solution (T ≥ 1) of the logistic regression problem defined by Eq. (3).

To adapt PD to our model, we first assume that our algorithm is initialized with ϱ0 > 0 and (vi, Wi), where Wi ∈ χ. Each iteration ‘p’ of our algorithm is then composed of three steps: define the penalty function, minimize the penalty function, and update the results and check for convergence.

Step 1 - Define penalty function

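Finding (v*, W*) of Eq. (3) is equivalent to solving

| $\min_{v \in \mathbb{R},\, Y \in \mathbb{R}^{M \times T},\, W \in \chi} l_{avg}(v, Y) \quad \text{s.t.} \quad W - Y = 0$ | (4) |

Introducing the matrix Frobenius norm ∥ · ∥F, the above equation is equivalent to

| $\min_{v \in \mathbb{R},\, Y \in \mathbb{R}^{M \times T},\, W \in \chi} l_{avg}(v, Y) \quad \text{s.t.} \quad \lVert W - Y \rVert_F^2 = 0$ | (5) |

so that $\lVert W - Y \rVert_F^2$ is a natural metric to measure the similarity between W and Y for our PD algorithm. For the current penalty parameter ϱp, we define the PD characteristic penalty function as

| $q_{\varrho_p}(v, Y, W) := l_{avg}(v, Y) + \frac{\varrho_p}{2} \lVert W - Y \rVert_F^2$ | (6) |

Thus, the solution to the following non-convex and non-continuous minimization problem approximates the original sparse solution of Eq. (3):

| $(v_{\varrho_p}, Y_{\varrho_p}, W_{\varrho_p}) := \arg\min_{v \in \mathbb{R},\, Y \in \mathbb{R}^{M \times T},\, W \in \chi} q_{\varrho_p}(v, Y, W)$ | (7) |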

Step 2 - Determine local minimum point of Eq. (7) via BCD

To find a local minimum point of Eq. (7), BCD alternates between minimizing the penalty function (Eq. (6)) with respect to the non-sparse terms (v, Y) and updating the sparse term W. Specifically, let (vb−1, Yb−1, Wb−1) be the current estimate of BCD. The bth iteration of BCD then determines (vb, Yb) by solving the smooth and convex problem

| $(v_b, Y_b) := \arg\min_{v \in \mathbb{R},\, Y \in \mathbb{R}^{M \times T}} q_{\varrho_p}(v, Y, W_{b-1})$ | (8) |

which can be done via gradient descent. To update Wb, minimizing the penalty function with respect to W, i.e.,

| $W_b := \arg\min_{W \in \chi} q_{\varrho_p}(v_b, Y_b, W) = \arg\min_{W \in \chi} \lVert W - Y_b \rVert_F^2$ | (9) |

can now be solved in closed form. We derive the closed form solution by assuming (without loss of generality) that the rows of Yb are nonzero and listed in descending order according to their l2-norm, i.e., let $(Y_b)^j$ be the jth row of Yb for j = 1,..., M then $\lVert (Y_b)^1 \rVert_2 \ge \lVert (Y_b)^2 \rVert_2 \ge \cdots \ge \lVert (Y_b)^M \rVert_2$. Lemma A.1 (see Appendix for this and any subsequent lemmas and theorems) then shows that the closed form solution Wb is defined by the first ‘r’ rows of Yb,

| $(W_b)^j := \begin{cases} (Y_b)^j & \text{if } j \le r, \\ 0 & \text{otherwise.} \end{cases}$ | (10) |

In theory, multiple global solutions Wb exist in case $\lVert (Y_b)^r \rVert_2 = \lVert (Y_b)^{r+1} \rVert_2$. In practice, we have not come across that scenario.
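The closed-form update described above amounts to keeping the ‘r’ rows with the largest l2-norm and setting all other rows to zero. A minimal Python sketch follows; the function name `project_onto_chi` is hypothetical, and ties between rows of equal norm are broken by row index, which is an arbitrary choice made only for this illustration.

```python
import math

def project_onto_chi(Y, r):
    """Return the matrix with at most r non-zero rows that is closest to Y
    in the Frobenius norm: keep the r rows of largest l2-norm, zero the rest."""
    norms = [math.sqrt(sum(y * y for y in row)) for row in Y]
    # sorted() is stable, so rows with equal norm keep their original order.
    order = sorted(range(len(Y)), key=lambda j: -norms[j])
    keep = set(order[:r])
    return [list(row) if j in keep else [0.0] * len(row)
            for j, row in enumerate(Y)]

Y = [[1.0, 1.0],
     [3.0, 4.0],
     [0.0, 2.0],
     [0.5, 0.0]]
W = project_onto_chi(Y, 2)
```

Unlike the derivation, the sketch does not require the rows to be pre-sorted; it sorts the row indices internally and leaves the row order of the matrix unchanged.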

Denoting with ∥·∥max the max norm of a matrix, i.e., $\lVert X \rVert_{max} := \max_{i,j} \lvert X_{ij} \rvert$, BCD stops updating the results when the relative change of each variable is smaller than a benchmark value ϵBCD, i.e.,

| (11) |

We choose this criterion over the absolute change of the sequence (vb, Yb, Wb), i.e.,

as it is more robust when variables have large values, i.e.,

is large.
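To illustrate why a relative criterion is more robust for large values, the sketch below compares absolute and relative change under the max norm. The normalization by max(∥·∥max, 1) is an assumption borrowed from common practice and is not confirmed to be the exact form of Eq. (11); the names `max_norm` and `relative_change` are hypothetical.

```python
def max_norm(X):
    """Largest absolute entry of a matrix stored as a list of rows."""
    return max(abs(x) for row in X for x in row)

def relative_change(new, old):
    """Assumed relative change: ||new - old||_max / max(||new||_max, 1)."""
    diff = [[a - b for a, b in zip(row_new, row_old)]
            for row_new, row_old in zip(new, old)]
    return max_norm(diff) / max(max_norm(new), 1.0)

# A variable with large entries: the absolute change exceeds 1e-4,
# while the relative change is already below it.
large_new, large_old = [[1000.05]], [[1000.0]]
absolute = max_norm([[large_new[0][0] - large_old[0][0]]])
relative = relative_change(large_new, large_old)
```

With a threshold of 10−4, the absolute criterion would keep iterating on this variable, whereas the relative criterion would accept it as converged.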

Step 3 - Update results, penalty parameter, and check the stopping criterion

Let BCD stop at the b′th iteration; $Y_{\varrho_p}$ is then set to Yb′ and $v_{\varrho_p}$ to vb′. To update $W_{\varrho_p}$, we first define an upper bound Γ = lavg(vi, Wi) with respect to the initialization, and then check whether

| $\min_{v, Y} q_{\varrho_{p+1}}(v, Y, W_{b'}) \le \Gamma$ | (12) |

In case the condition Eq. (12) holds, $W_{\varrho_p}$ is set to Wb′ and otherwise to Wi. According to Lemma A.5, this check guarantees that in case PD converges $W_{\varrho_p}$ also converges to $Y_{\varrho_p}$, i.e., $\lVert W_{\varrho_p} - Y_{\varrho_p} \rVert_F \to 0$. Finally, PD stops updating the results when $Y_{\varrho_p}$ and $W_{\varrho_p}$ are similar enough according to the similarity parameter ϵPD, i.e.,

| (13) |

Note that Algorithm 1 is the pseudocode of our PD approach (Steps 1-3). The corresponding software implementation used for this publication can be downloaded via https://dx.doi.org/10.6084/m9.figshare.3398332 and the current version via https://github.com/sibis-platform/PDLG.

Algorithm 1.

Penalty Decomposition (PD) Applied to Eq. (3)

| 1: | Initialization: Choose a sparsity parameter $r \le M$, scalar weight $v_i \in \mathbb{R}$ and feasible sparsity constrained weights $W_i \in \mathbb{R}^{M \times T}$, where $\lVert \vec{W}_i \rVert_0 \le r$. |

| Furthermore, set the following parameters | |

| • W0 ← Wi (initialize weight) | |

| • ϱ0 > 0 (initial penalty) | |

| • σ > 1 (penalty updating factor) | |

| • ϵBCD > 0 (upper bound for convergence of BCD) | |

| • ϵPD > 0 (upper bound for convergence of PD) | |

| • p ← 0 (PD index) | |

| • Γ = lavg(vi, Wi) (upper bound for qϱp(v, Y, W)) | |

| 2: | repeat (PD Loop) |

| 3: | % Step 1: Define the penalty function |

| 4: | |

| 5: | |

| 6: | % Step 2: Determine the local minimum point of qϱp via BCD |

| 7: | b ← 0 (BCD index) |

| 8: | repeat (BCD Loop) |

| 9: | b ← b + 1 |

| 10: | % Solve the following via Gradient Descent |

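| 11: | $(v_b, Y_b) \leftarrow \arg\min_{v, Y} q_{\varrho_p}(v, Y, W_{b-1})$ (see Eq. (8)) |

| 12: | $W_b \leftarrow \arg\min_{W \in \chi} q_{\varrho_p}(v_b, Y_b, W)$ (closed form, see Eq. (10)). |

| 13: | until the BCD stopping criterion of Eq. (11) holds |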

| 14: | |

| 15: | % Step 3: Update results, penalty parameter, and check the stopping criterion |

| 16: | ϱp+1 ← σ · ϱp (increase penalty parameter) |

| 17: | if minv,Y qϱp+1(v, Y, Wb) ≤ Γ then |

| 18: | W0 ← Wb |

| 19: | else |

| 20: | W0 ← Wi |

| 21: | p ← p + 1, , and |

| 22: | until the PD stopping criterion of Eq. (13) holds |
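The overall structure of Algorithm 1 can be sketched in a few dozen lines of plain Python. This is a simplified illustration and not the published implementation: it assumes the penalty function $l_{avg}(v, Y) + \frac{\varrho}{2}\lVert W - Y \rVert_F^2$, replaces the stopping criteria of Eq. (11) and Eq. (13) with simple absolute max-norm checks, omits the safeguard based on the upper bound Γ, and uses a fixed number of gradient steps with a step size derived from a curvature bound. All function names are hypothetical.

```python
import math

def dtheta(y):
    """Derivative of theta(y) = log(1 + exp(-y)), i.e., -1 / (1 + exp(y))."""
    if y >= 0:
        e = math.exp(-y)
        return -e / (1.0 + e)
    return -1.0 / (1.0 + math.exp(y))

def margins(v, Y, A, b):
    """u_s = Tr(Y^T A_s) + v * b_s for every subject s."""
    return [sum(y * a for Yr, Ar in zip(Y, A_s) for y, a in zip(Yr, Ar)) + v * b_s
            for A_s, b_s in zip(A, b)]

def project(Y, r):
    """Closed-form W update: keep the r rows of Y with the largest l2-norm."""
    sq = [sum(y * y for y in row) for row in Y]
    keep = set(sorted(range(len(Y)), key=lambda j: -sq[j])[:r])
    return [list(row) if j in keep else [0.0] * len(row) for j, row in enumerate(Y)]

def minimize_vY(v, Y, W, A, b, rho, lipschitz, n_steps):
    """Gradient descent on q(v, Y, W) over (v, Y) while W stays fixed."""
    step = 1.0 / (lipschitz + rho)
    for _ in range(n_steps):
        g = [dtheta(u) / len(A) for u in margins(v, Y, A, b)]
        grad_v = sum(g_s * b_s for g_s, b_s in zip(g, b))
        Y = [[y - step * (sum(g_s * A_s[i][t] for g_s, A_s in zip(g, A))
                          + rho * (y - W[i][t]))
              for t, y in enumerate(row)] for i, row in enumerate(Y)]
        v -= step * grad_v
    return v, Y

def max_diff(X, X_old):
    return max(abs(x - o) for r1, r2 in zip(X, X_old) for x, o in zip(r1, r2))

def penalty_decomposition(Z, b, r, rho=0.1, sigma=math.sqrt(10.0),
                          eps_bcd=1e-4, eps_pd=1e-3, max_pd=20, max_bcd=100):
    A = [[[b_s * z for z in row] for row in Z_s] for Z_s, b_s in zip(Z, b)]
    # Upper bound on the curvature of l_avg, used to pick a safe step size.
    lipschitz = 0.25 * sum(1.0 + sum(a * a for row in A_s for a in row)
                           for A_s in A) / len(A)
    v = 1.0                                       # v_i = 1
    W = [[0.0] * len(Z[0][0]) for _ in Z[0]]      # W_i = 0
    Y = [row[:] for row in W]
    for _ in range(max_pd):                       # PD loop
        for _ in range(max_bcd):                  # BCD loop
            W_old = W
            v, Y = minimize_vY(v, Y, W, A, b, rho, lipschitz, n_steps=10)
            W = project(Y, r)
            if max_diff(W, W_old) <= eps_bcd:
                break
        if max_diff(W, Y) <= eps_pd:
            break
        rho *= sigma                              # increase the penalty parameter
    return v, W
```

A toy call with six subjects, three features, and two time points, in which only the first feature carries the label, could look as follows. The returned weight matrix then contains a single non-zero row, namely the first one.

```python
b = [1, 1, 1, -1, -1, -1]
Z = [[[b_s, b_s], [0.1, -0.1], [0.05, 0.05]] for b_s in b]
v, W = penalty_decomposition(Z, b, r=1)
```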

2.3. Convergence Properties of Penalty Decomposition

For the interested reader, we now briefly derive the properties guaranteeing that the converged solution of our PD approach is a local minimum point of Eq. (3). Focusing on just one iteration of PD, we first show that if the BCD approach of Step 2 converges then the corresponding accumulation point is also a local minimum point of Eq. (7). Across iterations of PD, these local minima define another sequence, which is defined by Step 3. We then show that if this sequence converges to an accumulation point with exactly r nonzero rows then this point is a local minimum point of the original sparse problem defined by Eq. (3). Note that deriving these properties of our algorithm is non-trivial as the PD penalty function Eq. (6) is non-convex and the sparse space is non-continuous. The Appendix contains the complete proofs of the properties of our method described below.

Convergence property of Step 2

In the pth iteration of PD, let be an accumulation point of the converged sequence (v1, Y1, W1), (v2, Y2, W2),... produced by BCD. To show that is a local minimum point of Eq. (7), the triple needs to be the minimum point of (·,·,·) with respect to a neighborhood of this triple. We confine the neighborhood to those triples (v, Y, W), where the sign of non-zero components of equals those of W. We formally express this constraint by introducing the set of indices corresponding to non-zero components of

so that the neighborhood is defined as

| (14) |

An interesting characteristic of that neighborhood is that for any triple the following relation holds among H, and (see also Lemma A.3)

| (15) |

where 0 is a matrix whose entries are all zero. We make use of this property in Theorem A.4, where we derive the following lower bound for (·,·,·) with respect to

so that applying Eq. (15) results in

In other words, is a local minimum point of Eq. (7).

Convergence property of Step 3

Let (v*, Y*, W*) be the accumulation point of the converging sequence $(v_{\varrho_0}, Y_{\varrho_0}, W_{\varrho_0})$, $(v_{\varrho_1}, Y_{\varrho_1}, W_{\varrho_1})$,... produced by Step 3. Furthermore, assume that W* has exactly ‘r’ nonzero rows, i.e., $\lVert \vec{W}^* \rVert_0 = r$, which has always been the case in our experiments. Lemma A.7 then states that (v*, W*) is a local minimum point of Eq. (3) if there exists a matrix $Z^* \in \mathbb{R}^{M \times T}$ so that the following holds:

| (16) |

where is a set of ‘r’ indices for which (W*)i ≠ 0 and f(x) |x=x′ is the value of f(·) at x′. To determine, , we note that Y* = W* (see also Lemma A.5). Theorem A.8 then states that the sequence

| (17) |

is bounded and converges to Z*. The theorem furthermore notes that (v*, W*) fulfills the condition of Eq. (16) when . In summary, if PD converges then it converges to a local minimum point of the original sparse problem defined by Eq. (3).

3. Testing Algorithms on Correctly Classifying TOF

To better understand the strengths and weaknesses of our proposed Algorithm 1, we compare the accuracy of our approach to alternative solvers of sparsity constraint logistic regression problems on a data set consisting of regional volume scores extracted from cine MRIs of 44 TOF cases and 38 healthy controls. The dataset provides an ideal test bed for such a comparison as it contains ground-truth diagnosis, i.e., each subject either received reconstructive surgery for TOF during infancy or did not. Furthermore, refining the quantitative analysis of these scans could lead to improved monitoring of TOF patients, e.g., the timing of follow-up surgeries. Finally, the residual effects of TOF reconstructive surgery mostly impact the morphometry, i.e., the shape of the right ventricle (Bailliard and Anderson, 2009; Atrey et al., 2010), so that the regional volume scores extracted from each time point of the image series are sample descriptions of the same phenomena, a core assumption of the group sparsity model. We show that our PD approach (Algorithm 1) not only reflects this manifestation of the disease by mostly basing its decision on regions within the right ventricle but also achieves significantly higher accuracy than alternative solutions to this model, such as solving logistic regression with relaxed sparsity constraints (Ryali et al., 2010).

3.1. Experimental Setup

Extracting Regional Volume Scores

Each sample of the data set consists of a segmentation of each time point of a motion-corrected cine MRI, i.e., we corrected for slice misalignment due to breathing motion by detecting the center of the left ventricle via Hough transform (Duda and Hart, 1972) and then stacking the slices so that the center of the left ventricle aligns across the slices. Covering the basal, mid-cavity, and apical part with 8 slices, the segmentation outlines the right ventricular blood pool, the wall of the Left Ventricle (LV), and the Ventricular Septum (VS). The segmentation was done at end-diastole according to the semi-automatic procedure described in (Ye et al., 2014) and then propagated from end-diastole to the other time points via non-rigid registration (Avants et al., 2008). For the right ventricular blood pool, we reduce the maps to a 7mm band along its boundary, which is similar to the width of the wall of the other two structures, and name it RV (see Fig. (1)). For each time point and image slice, we then parcellate the three structures into smaller sections based on predefined subtended angles from the center of mass (of the RV or LV&VS). More specifically, RV and LV are divided into the same number of sections, while the VS is divided into one third of that number, reflecting its relative size to RV and LV. For example in Fig. (2), the VS is divided into six sections with respect to each slice and time point of the scan while the RV and LV are divided into 18 sections each. Finally, the input to our proposed solver is the volume of each section.

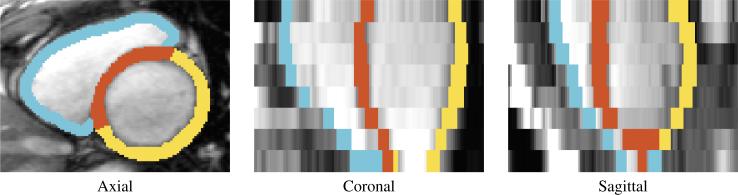

Figure 1.

Example segmentation of the RV (blue), VS (red), and LV (yellow)

Figure 2.

An example slice of the partitioning of the RV and LV into 18 sections and the VS into six sections at time point 1, 6, 13, 19, and 25.

Measure Classification Accuracy

We measure the accuracy of our approach with respect to correctly classifying samples based on the sectional volume scores of the RV alone, the LV alone, and the VS alone as well as on the scores of the whole heart (RV, LV&VS). We train our algorithm with different numbers of training samples, which are defined by the percentage {5%, 10%, . . . , 75%} of cases captured by the entire data set, i.e., 82 cases. For each training sample size, we run ten experiments to estimate a distribution of accuracy scores.

For each experiment, we randomly select the training samples from both groups and label the remaining cases as test subjects. For a fair comparison with other sparse logistic regression solvers, we initialize our algorithm according to (Lu and Zhang, 2013), i.e., ϱ = 0.1, σ = √10, ϵBCD = 10−4, ϵPD = 10−3, Wi = 0 and vi = 1. For each training set, we then determine the optimal setting of our algorithm with respect to the broad parameter space of the remaining two parameters:

the number of sections s ∈ {2, 3, . . . , 9} that each slice of the VS is divided into (so that each subject is represented by 3360 to 15120 volume scores)

and the maximum percentage of regional values chosen by our approach ω ∈ {5%, 10%, . . . , 50%}. In other words, the sparsity constraint is defined as r := ceil(M · s · ω), where M · s is the total number of sections that the heart cycle is divided into.
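The size of this parameter space can be checked with a short calculation. The sketch below assumes that the RV and LV each have three times as many sections as the VS (as in the example of six versus 18 sections), that all 8 slices are parcellated, and that each scan has 30 time points. The value 30 is not stated in the text; it is inferred here because it reproduces the reported range of 3360 to 15120 volume scores. The sketch also assumes that r bounds the number of selected sections per time point.

```python
SLICES = 8
TIME_POINTS = 30  # assumption: inferred from the reported 3360 to 15120 scores

def sections_per_time_point(s):
    """Sections across all slices: s for the VS plus 3*s each for the RV and LV."""
    return SLICES * (s + 3 * s + 3 * s)

def scores_per_subject(s):
    """Number of volume scores, i.e., one per section and time point."""
    return sections_per_time_point(s) * TIME_POINTS

def sparsity_bound(s, omega_percent):
    """r = ceil(sections * omega), using integer arithmetic to avoid rounding errors."""
    return -(-sections_per_time_point(s) * omega_percent // 100)

# All combinations of s in {2, ..., 9} and omega in {5%, 10%, ..., 50%}.
grid = [(s, omega) for s in range(2, 10) for omega in range(5, 55, 5)]
```

Under these assumptions, s = 2 yields 112 sections and 3360 scores per subject, s = 9 yields 504 sections and 15120 scores, and the grid contains 8 × 10 = 80 parameter settings.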

We determine the optimal setting by first experimenting with parameter exploration. Specifically, for each of the 80 unique parameter settings we define a regressor by computing the optimal weights W* of Algorithm 1 with respect to the training data. In all experiments, PD converged within 5 iterations and BCD converged within 500 iterations for each penalty parameter ϱp. After convergence, we compute the accuracy of the regressor with respect to the training set via the normalized accuracy (nAcc), i.e., we separately compute the accuracy for each cohort and then average their values to account for the imbalance in cohort size. The entire process of training and measuring the accuracy of the 80 regressors took less than 10 seconds on a single PC (Intel(R) Xeon(R) CPU E5-2603 v2 @1.80GHz and 32G memory). It also resulted in multiple settings, i.e., regressors, with 100% classification accuracy, so that parameter exploration did not single out one optimal setting. In such cases, a common solution (and the one we chose) is to train an ensemble of classifiers (Rokach, 2010). The final label of the ensemble is then defined by the weighted average across the set of regressors, where the weight of a regressor in the decision of the ensemble is simply its training accuracy. Once trained, we measure the accuracy of the resulting ensemble on correctly assigning test samples to the patient groups using the nAcc score. In the remainder of this section, we refer to this ensemble of classifiers as L0-Grp.
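The normalized accuracy and the accuracy-weighted ensemble decision can be sketched as follows. The sketch assumes that each regressor contributes its predicted label in {−1, +1} (rather than a continuous score) and that ties are resolved in favor of +1; both choices are assumptions made for illustration, as are the function names.

```python
def normalized_accuracy(labels, predictions):
    """Average of the per-cohort accuracies for labels in {-1, +1}.
    Assumes that both cohorts are present in `labels`."""
    per_cohort = []
    for cohort in (-1, +1):
        idx = [i for i, b in enumerate(labels) if b == cohort]
        correct = sum(1 for i in idx if predictions[i] == cohort)
        per_cohort.append(correct / len(idx))
    return sum(per_cohort) / len(per_cohort)

def ensemble_predict(member_predictions, training_accuracies):
    """Weighted vote: each regressor's label is weighted by its training nAcc."""
    n = len(member_predictions[0])
    votes = [sum(w * p[i] for p, w in zip(member_predictions, training_accuracies))
             for i in range(n)]
    return [1 if vote >= 0 else -1 for vote in votes]

labels      = [1, 1, 1, 1, -1, -1]
predictions = [1, 1, 1, 1, -1,  1]
nacc = normalized_accuracy(labels, predictions)
```

In this example, five of six cases are labeled correctly (plain accuracy of about 83%), but the smaller cohort is only 50% correct, so nAcc is 0.75. This is the effect of accounting for the imbalance in cohort size.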

Alternative Models

We compare our solver to alternative algorithms using the same mechanism as above, i.e., we create ensembles of classifiers with respect to the same parameter space and training data sets, and measure their accuracy on the same test data sets. Specifically, we use the algorithm by (Liu et al., 2009) to solve the logistic regression with the sparsity constraints relaxed via the l2-norm, i.e.,

| $\min_{v \in \mathbb{R},\, W \in \mathbb{R}^{M \times T}} l_{avg}(v, W) + \lambda \sum_{i=1}^{M} \lVert W^i \rVert_2$ | (18) |

with λ being the sparse regularizing parameter. We refer to the corresponding ensemble as Rlx-Grp. Note that relaxing the sparsity constraint via the l1-norm results in an optimization problem ignoring temporal consistency, which violates our initial assumption of the model.

In addition, we investigate the accuracy of PD and (Liu et al., 2009) when only applied to a single time point (T = 1). When T = 1, the weight matrix reduces to a vector $w \in \mathbb{R}^M$ and Eq. (3) simplifies to

| $\min_{v \in \mathbb{R},\, w \in \mathbb{R}^{M}} l_{avg}(v, w) \quad \text{s.t.} \quad \lVert w \rVert_0 \le r$ | (19) |

i.e., the sparsity constrained problem solved by PD in (Lu and Zhang, 2013). We refer to the corresponding ensemble as L0-nGrp. Furthermore, for T = 1, Eq. (18) is equivalent to

| $\min_{v \in \mathbb{R},\, w \in \mathbb{R}^{M}} l_{avg}(v, w) + \lambda \lVert w \rVert_1$ | (20) |

whose corresponding ensemble of classifiers we refer to as Rlx-nGrp. Finally, we note that the sparsity parameter λ of Rlx-Grp and Rlx-nGrp is not directly related to the number of selected sections. For a fair comparison to the sparsity constrained methods, we therefore automatically tune the sparsity parameter λ of Eq. (18) and Eq. (20) so that the number of chosen sections r̄ is similar to the one defined in Eq. (3), i.e., |r – r̄| ≤ 1.
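The text does not specify how λ is tuned. One plausible approach is a bisection search, sketched below under the assumption that the number of selected sections does not increase when λ grows. The callable `count_selected` is a hypothetical stand-in for running the relaxed solver and counting its non-zero groups; here it is mocked by thresholding a list of made-up group norms.

```python
def tune_lambda(count_selected, r, lam_lo=0.0, lam_hi=1.0, max_iter=60):
    """Bisection on lambda until |count_selected(lambda) - r| <= 1.

    Assumes count_selected is non-increasing in lambda and that
    count_selected(lam_lo) >= r >= count_selected(lam_hi).
    Returns (lambda, r_bar), or None if no suitable lambda was found.
    """
    for _ in range(max_iter):
        lam = 0.5 * (lam_lo + lam_hi)
        r_bar = count_selected(lam)
        if abs(r_bar - r) <= 1:
            return lam, r_bar
        if r_bar > r:
            lam_lo = lam   # too many sections selected: penalize more
        else:
            lam_hi = lam   # too few sections selected: penalize less
    return None

# Mock solver (illustration only): a group survives if its norm exceeds lambda.
group_norms = [0.9, 0.7, 0.5, 0.3, 0.1, 0.05]
mock_count = lambda lam: sum(1 for n in group_norms if n > lam)
result = tune_lambda(mock_count, r=5)
```

Because the relaxed solver has to be rerun for every candidate λ, the tuning cost of the relaxed models grows with the number of bisection steps, whereas the cardinality constrained models use r directly.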

3.2. Experimental results

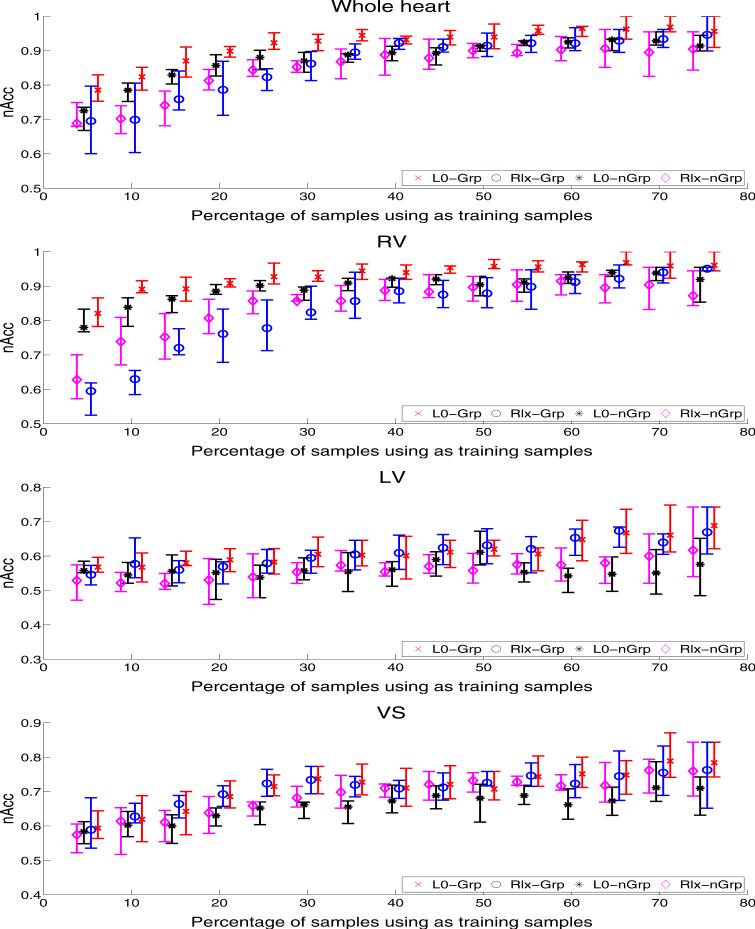

The box plots of Fig. (3) summarize the distribution of the accuracy scores associated with each implementation and structure by the average, the first quintile, and the fourth quintile of the nAcc scores across the 10 test data sets run for each training sample size. For all four implementations, the average nAcc scores generally increase with the number of training samples. For the RV and the whole heart (RV,LV&VS), the proposed L0-Grp implementation (red boxplots) generally achieves higher average, first quintile, and fourth quintile scores than the other three approaches. The difference is especially large for smaller numbers of training samples, i.e., 5% to 35%. For large training samples, i.e., 70% and 75%, L0-Grp is the only method with a fourth quintile score of 100%. To follow up on these observations, we computed the p-value of the paired-sample t-test (McDonald, 2009) between L0-Grp and the other three implementations. Table 1 summarizes those computations. For the whole heart, L0-Grp is significantly better (p ≤ 0.05) than Rlx-nGrp and L0-nGrp with respect to 13 out of the 15 training sample sizes, and with respect to 10 out of the 15 training sample sizes for Rlx-Grp. For the RV, the counts of statistically significant differences increase with respect to the implementations with relaxed constraints. However, this is not true for the L0-nGrp implementation, in which case L0-Grp is significantly better in 8 experiments. In this experiment, we conclude that the impact of reducing the number of time points on the accuracy scores is smaller than that of relaxing the sparsity constraint. An explanation for this observation might lie in the fact that the solution generated by solvers relaxing the sparsity constraint, i.e., Rlx-nGrp and Rlx-Grp, is only accurate with respect to the original problem Eq. (3) under certain conditions (Candès et al., 2006), which are not satisfied here and in medical image analysis in general.

Figure 3.

The box plots of the average, first quintile, and fourth quintile nAcc scores for all four ensembles with respect to the percentage of the whole data set used for training and the encoding of the LV, RV, VS and the whole heart (RV,LV&VS). For the RV and whole heart, the proposed L0-Grp implementation (red box plots) generally achieves higher average, first quintile, and fourth quintile scores than the other three approaches. With respect to the LV and VS, all four methods perform similarly with the average nAcc scores starting at around 55% and generally increasing with the number of training samples.

Table 1.

Frequency of the p-value categories of the paired-sample t-test (McDonald, 2009) between L0-Grp and the other three methods across the 15 training sample sizes (Significant: p ≤ 0.05; Trend: 0.05 < p ≤ 0.1; Indifferent: p > 0.1). In most tests, the results obtained by L0-Grp are significantly more accurate than those of the other three methods.

| Method | RV: Significant | RV: Trend | RV: Indifferent | RV, LV & VS: Significant | RV, LV & VS: Trend | RV, LV & VS: Indifferent |
|---|---|---|---|---|---|---|
| Rlx-Grp | 13 | 0 | 2 | 10 | 2 | 3 |
| L0-nGrp | 8 | 4 | 3 | 13 | 2 | 0 |
| Rlx-nGrp | 15 | 0 | 0 | 13 | 2 | 0 |

With respect to the LV and VS, Fig. (3) reports insignificant differences between the accuracy of all four methods. The average nAcc scores start at around 55% and generally increase with the number of training samples. While the average scores of Rlx-Grp almost match those of L0-Grp, L0-Grp achieves the highest average score with 69% for the LV and 74% for the VS, indicating that the VS is slightly more impacted by TOF than the LV. This observation is also in alignment with the literature (Bailliard and Anderson, 2009; Atrey et al., 2010) reporting the residual effects of TOF impacting the RV, which the VS is attached to. Increasing the number of training samples also impacts the spread between the first and fourth quintile. This issue is partly due to the fact that the larger the training data set, the smaller the test set. From a statistical perspective, e.g., recording the outcome of flipping a biased coin several times, one expects this outcome, as the first and fourth quintile scores deviate further from the average score than in experiments with larger test sets. Interestingly enough, only the average scores of L0-nGrp do not improve with large training sets: they peak at a training size of 50% for the LV and become unstable starting at 50% for the VS. In other words, adding more samples to the training is not more informative than adding more information of each individual sample by, for example, increasing the number of time points or including measurements from the RV, the structure most impacted by TOF.
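The biased-coin argument can be made concrete with a short sketch. It treats each test subject as an independent Bernoulli trial with an assumed success probability of 0.7 and derives the test-set size from the 82 subjects by simple rounding; both choices are illustrative assumptions, and the sketch models plain accuracy rather than the nAcc score.

```python
import math


def accuracy_std(p: float, n_test: int) -> float:
    """Standard deviation of the accuracy over n_test independent Bernoulli(p) trials."""
    return math.sqrt(p * (1.0 - p) / n_test)


n_subjects = 82                      # 38 controls + 44 patients
p = 0.7                              # assumed true accuracy, for illustration only
for train_fraction in (0.05, 0.50, 0.75):
    n_test = n_subjects - round(train_fraction * n_subjects)
    print(f"train {train_fraction:.0%}: n_test={n_test}, std={accuracy_std(p, n_test):.3f}")
```

The standard deviation scales with one over the square root of the test-set size, so quartering the test set roughly doubles the expected spread of the scores.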

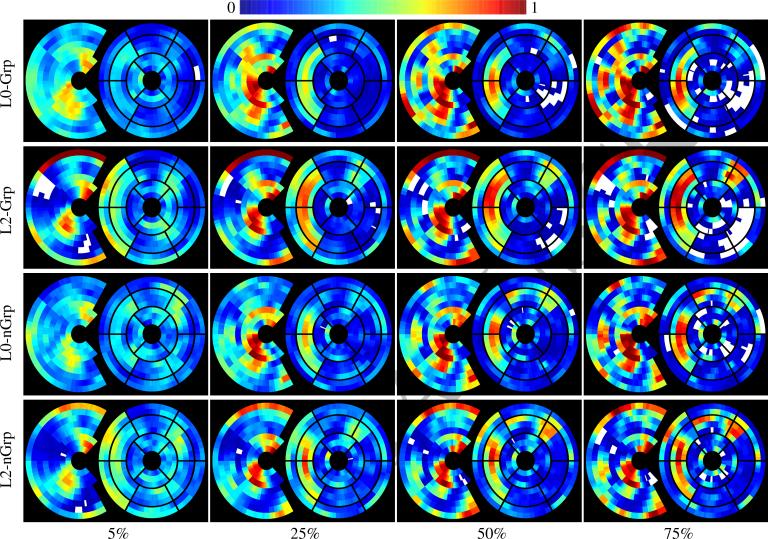

To gain a deeper understanding of the experimental results, Fig. (4) plots the importance of heart sections in distinguishing TOF from healthy controls based on the regional volume scores and with respect to the type of solver and percentage associated with the training sample size. The incomplete circle on the left represents the importance of sections of the RV and the one on the right the importance of sections of the LV and VS. Each ring of those (incomplete) circles represents a slice of the cine MRI with the outer ring representing the base of the heart. As is common in the cardiac literature, we overlay the bullseye plot over the LV & VS maps. For each type and percentage, we infer the importance of a section from its average importance across the corresponding ensembles of classifiers, i.e., the number of times a section was selected by a sparse solver multiplied by the solvers’ training accuracy. Sections in white were never selected, those in blue had very low and those in red very high impact on the final classification. The ensembles were indifferent to sections labeled turquoise, green, yellow, and orange. We first note that, across solvers, the number of indifferent sections decreases and the number of ignored sections increases with larger numbers of training samples. This indicates a higher confidence of the ensembles in the importance of sections. Furthermore, the number of selected RV sections (left) increases, which is in line with the increase in testing accuracy. Of all methods, L0-Grp relies least on LV sections (right). This could explain its significantly higher accuracy scores compared to the other methods in the majority of whole heart experiments. As noted in Section 1, one has to be careful when relating the weights of classifiers to biomedical landmarks. We are careful in this experiment, as the importance maps of Fig. (4) are based on the number of times regions were selected by solvers (not their soft weights) and those of L0-Grp are in accordance with the medical literature stating that residual effects of TOF mostly impact the RV.
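One possible reading of this importance score is sketched below: every classifier of an ensemble contributes its training accuracy to each section it selected, and the sum is averaged over the ensemble. The exact normalization behind Fig. (4) is not spelled out in the text, so the averaging, the function name, and the toy ensemble are assumptions made for illustration.

```python
from typing import List, Sequence, Set


def section_importance(selections: Sequence[Set[int]],
                       train_accuracy: Sequence[float],
                       n_sections: int) -> List[float]:
    """Average, over the ensemble, of each classifier's training accuracy
    counted only for the sections that classifier selected."""
    if len(selections) != len(train_accuracy):
        raise ValueError("one accuracy value per classifier is required")
    importance = [0.0] * n_sections
    for selected, acc in zip(selections, train_accuracy):
        for s in selected:
            importance[s] += acc
    return [value / len(selections) for value in importance]


# Made-up ensemble of three classifiers over four sections (illustration only).
selections = [{0, 1}, {0}, {0, 3}]
train_accuracy = [1.0, 0.5, 0.75]
importance = section_importance(selections, train_accuracy, n_sections=4)
```

Under this reading, a section that no classifier selects has importance exactly 0 (white in the figure), while a section selected by every perfectly trained classifier has importance 1.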

Figure 4.

Importance of heart sections in distinguishing TOF from healthy controls with respect to the type of solver and percentage associated with the training sample size. The incomplete circle on the left represents the importance of sections of the RV and the one on the right the importance of sections of the LV and VS. Each ring of those (incomplete) circles represents a slice of the cine MRI. As is common in the cardiac literature, we overlay the bullseye plot over the LV & VS maps. For each type and percentage, the importance of a section is inferred from its average importance across the corresponding ensembles of classifiers, which is based on the number of times a section was selected by a sparse solver. The number of white regions (never selected), blue (weight close to 0) and red (weight close to 1) generally increases with the number of training samples, indicating that the confidence of the ensemble in the selection of the sections increases. Furthermore, all methods correctly emphasize more sections of the RV than of the LV&VS. Of all solvers, L0-Grp ignores the LV sections the most. This could explain its significantly higher accuracy in most experiments of the whole heart compared to the other three methods.

4. Conclusion

We generalized the PD approach to directly solve group cardinality constraint logistic regression, i.e., simultaneously performing disease classification and temporal-consistent pattern identification. To do so, we assumed that an intra-subject series of images represents repeated samples of the same disease patterns. We modeled this assumption by combining series of measurements created by a feature across time into a single group. Unlike existing approaches, our algorithm then derived a solution within that model by decoupling the minimization of the logistic regression function from enforcing the group sparsity constraint. The minimum of the smooth and convex logistic regression problem was determined via gradient descent while we derived a closed form solution for finding a sparse approximation of that minimum. We applied our method to cine MRI of 38 healthy controls and 44 adult patients that received reconstructive surgery of Tetralogy of Fallot (TOF) during infancy. Our method correctly identified the RV to be most impacted by TOF and generally obtained statistically significantly higher classification accuracy than alternative solutions to this model, i.e., ones relaxing group cardinality constraints or ones only applied to a single time point.

While the experiments were confined to regional volume scores extracted from cine MRIs, the method could be applied to any features computed from intra-subject sequences of images. One only has to ensure that the assumption holds that a series of images represents repeated samples of the same disease patterns. Furthermore, one has to be careful when relating the selected features to disease patterns. We did so in our experiments, as the selected features agreed with the medical literature.

Highlights.

Model concurrent disease classification and temporal-consistent pattern selection

Minimize model by directly solving logistic regression confined by group cardinality

Correctly identify ROIs differentiating the cine MRs of 44 TOF from 38 controls

Generally significantly more accurate than approaches relaxing group sparsity

Acknowledgment

We would like to thank Drs. Benoit Desjardins and DongHye Ye for their help on generating the cardiac dataset. This research was supported by NIH grants (R01 HL127661, K05 AA017168) and the Creative and Novel Ideas in HIV Research (CNIHR) Program through a supplement to the University of Alabama at Birmingham (UAB) Center For AIDS Research funding (P30 AI027767). This funding was made possible by collaborative efforts of the Office of AIDS Research, the National Institute of Allergy and Infectious Diseases, and the International AIDS Society.

Appendix A

Lemma A.1

Given a matrix , let Aj be the jth row of A for j = 1,..., M. Without loss of generality, we assume that

Then the solution for the minimization problem

| (A.1) |

within are the first r rows of A, i.e.,

| (A.2) |

Proof

Suppose there is a solution to Eq. (A.1) which is different from W* defined in Eq. (A.2). We now show that the .

We know that for all , the following is true as otherwise cannot be an optimal solution for Eq. (A.1). To see that, simply replace with Aj for any j such that , which returns a smaller value for . Since is different from W′, there must exist i1 > r such that . Given that , then there must exist a row such that for j2 ≤ r. By using the definition of W*, we also have and (W*)j1 = 0. In addition, according to the assumption that . Then we have

which implies that if we define

then . Continuing this procedure of replacing values results at some point in W′ = W* and thus . Thus W* is an optimal solution of problem Eq. (A.1).
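The closed-form solution of Lemma A.1 amounts to a simple selection rule, sketched below. The sketch assumes that Eq. (A.1) minimizes the squared Frobenius distance between W and A subject to at most r non-zero rows, and that "the first r rows" refers to rows ordered by decreasing Euclidean norm; both are inferred from the proof rather than stated explicitly in this appendix, and the function name is hypothetical.

```python
import math
from typing import List


def keep_top_r_rows(A: List[List[float]], r: int) -> List[List[float]]:
    """Keep the r rows of A with the largest Euclidean norm and zero the rest."""
    norms = [math.sqrt(sum(x * x for x in row)) for row in A]
    # Indices of the r largest row norms (ties broken by row order).
    keep = set(sorted(range(len(A)), key=lambda j: -norms[j])[:r])
    return [list(row) if j in keep else [0.0] * len(row) for j, row in enumerate(A)]


A = [[0.1, -0.2], [3.0, 4.0], [1.0, 0.0], [-2.0, 2.0]]
W = keep_top_r_rows(A, r=2)
```

Each zeroed row adds its squared norm to the distance between W and A, so dropping the rows with the smallest norms yields the smallest possible distance.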

Lemma A.2

Suppose that is an accumulation point of the sequence {(vb, Yb, Wb)} generated by BCD described in Section 2. Then it is also the block coordinate minimum point of Eq. (7), i.e.,

| (A.3) |

Proof

First, note that

| (A.4) |

It then follows that

| (A.5) |

Hence, the sequence {qϱp(vb, Yb, Wb)} is non-increasing. Since is an accumulation point of {(vb, Yb, Wb)}, there exists a subsequence L such that

We then observe that {qϱp(vb, Yb, Wb)}b∈L is bounded, which together with the monotonicity of {qϱp(vb, Yb, Wb)} implies that {qϱp(vb, Yb, Wb)} is bounded below and hence limb→∞ qϱp(vb, Yb, Wb) exists. This observation, Eq. (A.5), and the continuity of qϱp(·,·,·) yield

Given that qϱp(·,·,·) is continuous, then taking limits on both sides of Eq. (A.4) with respect to b ∈ L → ∞ results in

| (A.6) |

Note that is the accumulation point of {(vb, Yb, Wb)}b∈L→∞. Then according to the definition of , we have which immediately implies . Thus, is a block coordinate minimum point of Eq. (7).
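The monotonicity used in this proof can be checked numerically on toy data. The sketch below assumes the standard penalty decomposition form qϱ(v, Y, W) = lavg(v, Y) + (ϱ/2)∥W − Y∥F² for Eq. (7), replaces the full gradient descent of the (v, Y)-step by a single backtracked gradient step, and uses randomly generated data; all function names are hypothetical and the code is not the implementation used in the experiments.

```python
import math
import random


def q(v, Y, W, X, labels, rho):
    """Assumed penalty function: average logistic loss + rho/2 * ||W - Y||_F^2."""
    total = 0.0
    for x, y in zip(X, labels):
        s = v + sum(Y[j][t] * x[j][t] for j in range(len(Y)) for t in range(len(Y[j])))
        total += math.log1p(math.exp(-y * s))
    penalty = sum((W[j][t] - Y[j][t]) ** 2 for j in range(len(Y)) for t in range(len(Y[j])))
    return total / len(X) + 0.5 * rho * penalty


def gradient(v, Y, W, X, labels, rho):
    """Gradient of q with respect to the block (v, Y)."""
    gv = 0.0
    gY = [[rho * (Y[j][t] - W[j][t]) for t in range(len(Y[j]))] for j in range(len(Y))]
    for x, y in zip(X, labels):
        s = v + sum(Y[j][t] * x[j][t] for j in range(len(Y)) for t in range(len(Y[j])))
        c = -y / (1.0 + math.exp(y * s)) / len(X)
        gv += c
        for j in range(len(Y)):
            for t in range(len(Y[j])):
                gY[j][t] += c * x[j][t]
    return gv, gY


def top_r_rows(Y, r):
    """W-step: keep the r rows of Y with the largest norm, zero the others."""
    order = sorted(range(len(Y)), key=lambda j: -sum(a * a for a in Y[j]))
    keep = set(order[:r])
    return [list(row) if j in keep else [0.0] * len(row) for j, row in enumerate(Y)]


def bcd(X, labels, r, rho, n_iter=30):
    M, T = len(X[0]), len(X[0][0])
    v, Y = 0.0, [[0.0] * T for _ in range(M)]
    W = top_r_rows(Y, r)
    history = [q(v, Y, W, X, labels, rho)]
    for _ in range(n_iter):
        # (v, Y)-step: one gradient step, halving the step size until q does not increase.
        gv, gY = gradient(v, Y, W, X, labels, rho)
        step, current = 1.0, q(v, Y, W, X, labels, rho)
        for _ in range(40):
            v_new = v - step * gv
            Y_new = [[Y[j][t] - step * gY[j][t] for t in range(T)] for j in range(M)]
            if q(v_new, Y_new, W, X, labels, rho) <= current:
                v, Y = v_new, Y_new
                break
            step *= 0.5
        # W-step: closed-form minimizer of the penalty term under the row constraint.
        W = top_r_rows(Y, r)
        history.append(q(v, Y, W, X, labels, rho))
    return v, Y, W, history


random.seed(0)
M, T, N = 4, 3, 20
X = [[[random.gauss(0, 1) for _ in range(T)] for _ in range(M)] for _ in range(N)]
labels = [1 if sum(x[0]) > 0 else -1 for x in X]   # only group 0 is informative
v, Y, W, history = bcd(X, labels, r=2, rho=1.0)
```

Neither block update can increase q, so `history` is non-increasing up to rounding, which mirrors the chain of inequalities behind Eq. (A.5).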

Lemma A.3

Let be a block coordinate minimum of Eq. (7) and define a small feasible step of , i.e., . Then

| (A.7) |

Proof

We note that if where , then according to Eq. (A.2), . Thus, Eq. (A.7) is true. For , we observe from Eq. (A.2) that for all where . Thus for . On the other hand, for all , so that for all . From , it follows that (H)i = 0 for all and hence for .

Theorem A.4

Suppose that is an accumulation point of the sequence {(vb, Yb, Wb)} generated by BCD described in Section 2. Then, is a local minimum point of Eq. (7).

Proof

According to Lemma A.2, we have is a block coordinate minimum point of Eq. (7). Next we show that is also a local minimum point of Eq. (7).

Since lavg(·,·) is a convex function, we know that qϱp(·,·,·) is also convex. It then follows from the first relation of Eq. (A.3), the partial derivative of qϱp(·,·,·) (Proposition 1.1.1 in Bertsekas (1999)), that

| (A.8) |

Let H be a “small” feasible step of and . Then using Lemma A.3, Eq. (A.8) and the convexity of qϱp, we have

which together with the above choice of h, G and H implies that is a local minimum point of Eq. (7).

Lemma A.5

Let be the sequence generated by PD, and . Suppose (v*, Y*, W*) is an accumulation point of . Then

Proof

Since (vi, Wi) defined in Algorithm 1 is a feasible point of Eq. (3), we have

By the specification of W0 according to lines 17-20 of Algorithm 1, we have

From Eq. (A.4), we know that the sequence of qϱp(vb, Yb, Wb) is non-increasing. Thus in view of Eq. (7) and the choice of that is specified in line 21 of Algorithm 1, we observe that

By the definition of , we have . Then we obtain that

| (A.9) |

Since (v*, Y*, W*) is an accumulation point of , there exists a subsequence . Then taking limits on both sides of Eq. (A.9) as , and using ϱp → ∞ as p → ∞, we see that . Thus, the conclusion holds immediately.

Lemma A.6

Let be the sequence generated by PD, be a set of r distinct indices in such that for any . Suppose (v*, Y*, W*) is an accumulation point of , then when k ∈ S is sufficiently large,

for some index set .

Proof

Since (v*, Y*, W*) is an accumulation point of , there exists a subsequence . Since is an index set, is bounded for all k. Thus, there exists a subsequence such that for some r distinct indices . Since are r distinct integers, one can easily conclude that for sufficiently large p ∈ S. Let . It then follows that when k ∈ S is sufficiently large, and moreover, .

Lemma A.7

Let (v*, W*) ∈ and the family of subsets of with size ‘r’ and the sets’ complement only consisting of the zero rows of W* be defined as

where . (v*, W*) is then a local minimum point of Eq. (3) if for each there exists a matrix so that the following holds:

| (A.10) |

with being the value of f(·) at x′.

Proof

The proof first determines the necessary condition for minima of Eq. (3) and then shows that any (v*, W*) fulfilling the necessary condition also fulfils the sufficient condition. To derive the necessary condition, let us assume that (v*, W*) is a local minimum point of Eq. (3). Now, we know that may contain more than one component due to the inequality in the cardinality constraint of Eq. (3), i.e., . Then for any , we observe that (v*, W*) also minimizes the following problem

| (A.11) |

Then according to Proposition 3.1.1 of (Bertsekas, 1999), for any (v*, W*) being the solution of Eq. (A.11), it is necessary that there exists a matrix so that the following holds:

| (A.12) |

Now any (v*, W*) that is a local minimum point of Eq. (3) also has to be a local minimum point of Eq. (A.11) for all . Thus it has to fulfill Eq. (A.12) for all so that Eq. (A.12) is a first-order necessary condition of Eq. (3).

From now on, we call any (v*, W*) fulfilling Eq. (A.12) for all a stationary point. We now show that a first-order sufficient condition of Eq. (3) is the existence of a stationary point, i.e., any point fulfilling the necessary condition is also a local minimum point of Eq. (3). From Proposition 3.4.1 of (Bertsekas, 1999), we know that (v*, W*) is the global minimum point of Eq. (A.11) for all

Hence, there exists ϵ > 0 and neighborhoods of (v*, W*) for different , i.e.,

with ϵ > 0, such that for all members of the union of neighborhoods, i.e.,

the following is true

Now we define the neighborhood of (v*, W*) with respect to the sparse space as

Since , then for any there exists so that according to Eq. (A.11) . Hence

Thus, any stationary point (v*, W*) is a local minimum point of Eq. (3).

Theorem A.8

Let (v*, Y*, W*) be an accumulation point of the sequence generated by PD. Assume that the solution obtained by BCD satisfies

| (A.13) |

for ϵp → 0. If , then (v*, W*) is a local minimum point of problem Eq. (3).

Proof

We prove the statement by first showing that is bounded, then that converges to for some (the matrix defined in Eq. (A.12)), and finally that (v*, W*) has to be a minimum point of Eq. (3) when . Now let us assume that Zp is not bounded. To derive a contradiction, let

It then follows from Eq. (A.13) that for all p. Thus for follows . Applying Eq. (17) and the definition of qϱp in Eq. (7), we have

| (A.14) |

Now . Let . Obviously, the sequence is bounded. Then by using the Bolzano-Weierstrass Theorem, there must exist an accumulation point such that . Clearly . Dividing both sides of Eq. (A.14) by ∥Zp∥F, taking the limits with respect to p ∈ K → ∞, and using the relation , we obtain that

| (A.15) |

which contradicts . Therefore, the subsequence {Zp}p∈S is bounded. By applying the Bolzano-Weierstrass Theorem (Bartle and Sherbert, 1982) and the boundedness of {Zp}p∈S, there must exist an accumulation point Z* such that . Taking limits on both sides of Eq. (A.14) as , and using the relations , we see that the first two relations of Eq. (A.12) hold with Z* = Λ*. From Eq. (10) and the definitions of , we have for and hence . In addition, we know from Lemma A.6 that when k ∈ S is sufficiently large. Hence . This together with the definitions of and implies that Z* satisfies

Now so that

has only one unique component. Hence, Z* together with (v*, W*) satisfies Eq. (A.12) so that according to Lemma A.7 (v*, W*) is a local minimum point of Eq. (3).

Footnotes

We use the SLEP package to solve the relaxed sparsity constraint model, see (Liu et al., 2009) for details.

By convexity, see Proposition B.3 of Bertsekas (1999).

References

- Afshin M, Ben Ayed I, Punithakumar K, Law M, Islam A, Goela A, Peters TM, Li S. Regional assessment of cardiac left ventricular myocardial function via MRI statistical features. IEEE Transactions on Medical Imaging. 2014;33:481–494. doi: 10.1109/TMI.2013.2287793.

- Aljabar P, Wolz R, Srinivasan L, Counsell SJ, Rutherford MA, Edwards AD, Hajnal JV, Rueckert D. A combined manifold learning analysis of shape and appearance to characterize neonatal brain development. IEEE Transactions on Medical Imaging. 2011;30:2072–2086. doi: 10.1109/TMI.2011.2162529.

- Atrey PK, Hossain MA, El Saddik A, Kankanhalli MS. Multimodal fusion for multimedia analysis: a survey. Multimedia Systems. 2010;16:345–379.

- Avants BB, Epstein CL, Grossman M, Gee JC. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Medical Image Analysis. 2008;12:26–41. doi: 10.1016/j.media.2007.06.004.

- Bai W, Peressutti D, Oktay O, Shi W, O'Regan DP, King AP, Rueckert D. Functional Imaging and Modeling of the Heart. Springer; 2015. Learning a global descriptor of cardiac motion from a large cohort of 1000+ normal subjects; pp. 3–11.

- Bailliard F, Anderson RH. Tetralogy of Fallot. Orphanet Journal of Rare Diseases. 2009;4:1–10. doi: 10.1186/1750-1172-4-2.

- Bartle R, Sherbert D. Matemáticas (Limusa) Wiley; 1982. Introduction to real analysis.

- Bernal-Rusiel JL, Reuter M, Greve DN, Fischl B, Sabuncu MR. Spatiotemporal linear mixed effects modeling for the mass-univariate analysis of longitudinal neuroimage data. NeuroImage. 2013;81:358–370. doi: 10.1016/j.neuroimage.2013.05.049.

- Bertsekas D. Athena Scientific optimization and computation series. Athena Scientific; 1999. Nonlinear programming.

- Besbes A, Komodakis N, Glocker B, Tziritas G, Paragios N. Advances in Visual Computing. Springer; 2007. 4D ventricular segmentation and wall motion estimation using efficient discrete optimization; pp. 189–198.

- Bhatia KK, Rao A, Price AN, Wolz R, Hajnal JV, Rueckert D. Hierarchical manifold learning for regional image analysis. IEEE Transactions on Medical Imaging. 2014;33:444–461. doi: 10.1109/TMI.2013.2287121.

- Candès EJ, Romberg J, Tao T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Transactions on Information Theory. 2006;52:489–509.

- Candès EJ, Tao T. Decoding by linear programming. IEEE Transactions on Information Theory. 2005;51:4203–4215.

- Carroll MK, Cecchi GA, Rish I, Garg R, Rao AR. Prediction and interpretation of distributed neural activity with sparse models. NeuroImage. 2009;44:112–122. doi: 10.1016/j.neuroimage.2008.08.020.

- Chandrashekara R, Mohiaddin RH, Rueckert D. Analysis of 3-D myocardial motion in tagged MR images using nonrigid image registration. IEEE Transactions on Medical Imaging. 2004;23:1245–1250. doi: 10.1109/TMI.2004.834607.

- Chetelat G, Landeau B, Eustache F, Mezenge F, Viader F, de La Sayette V, Desgranges B, Baron JC. Using voxel-based morphometry to map the structural changes associated with rapid conversion in MCI: a longitudinal MRI study. NeuroImage. 2005;27:934–946. doi: 10.1016/j.neuroimage.2005.05.015.

- Deshpande H, Maurel P, Barillot C. 2nd International Workshop on Sparsity Techniques in Medical Imaging (STMI) MICCAI 2014; 2014. Detection of multiple sclerosis lesions using sparse representations and dictionary learning.

- Duda RO, Hart PE. Use of the Hough transformation to detect lines and curves in pictures. Communications of the ACM. 1972;15:11–15.

- Friedman J, Hastie T, Tibshirani R. A note on the group Lasso and a sparse group Lasso. arXiv. 2010:1001.0736.

- Haufe S, Meinecke F, Görgen K, Dähne S, Haynes JD, Blankertz B, Bießmann F. On the interpretation of weight vectors of linear models in multivariate neuroimaging. NeuroImage. 2014;87:96–110. doi: 10.1016/j.neuroimage.2013.10.067.

- Huang H, Shen L, Zhang R, Makedon F, Hettleman B, Pearlman J. A prediction framework for cardiac resynchronization therapy via 4D cardiac motion analysis. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2005. volume 3749 of Lecture Notes in Computer Science. 2005:704–711. doi: 10.1007/11566465_87.

- Lempitsky V, Verhoek M, Noble JA, Blake A. Functional Imaging and Modeling of the Heart. Springer; 2009. Random forest classification for automatic delineation of myocardium in real-time 3D echocardiography; pp. 447–456.

- Li S, Yin H, Fang L. Group-sparse representation with dictionary learning for medical image denoising and fusion. IEEE Transactions on Biomedical Engineering. 2012;59:3450–3459. doi: 10.1109/TBME.2012.2217493.

- Liu J, Ji S, Ye J. SLEP: Sparse Learning with Efficient Projections. Arizona State University; 2009. URL: http://www.public.asu.edu/~jye02/Software/SLEP.

- Liu M, Zhang D, Shen D. Ensemble sparse classification of Alzheimer's disease. NeuroImage. 2012;60:1106–1116. doi: 10.1016/j.neuroimage.2012.01.055.

- Lu Z, Zhang Y. Sparse approximation via penalty decomposition methods. SIAM Journal on Optimization. 2013;23:2448–2478.

- Lv J, Jiang X, Li X, Zhu D, Chen H, Zhang T, Zhang S, Hu X, Han J, Huang H, et al. Sparse representation of whole-brain fMRI signals for identification of functional networks. Medical Image Analysis. 2015;20:112–134. doi: 10.1016/j.media.2014.10.011.

- Ma S, Huang J. Penalized feature selection and classification in bioinformatics. Briefings in Bioinformatics. 2008;9:392–403. doi: 10.1093/bib/bbn027.

- Margeta J, Geremia E, Criminisi A, Ayache N. Layered spatio-temporal forests for left ventricle segmentation from 4D cardiac MRI data. Statistical Atlases and Computational Models of the Heart. Imaging and Modelling Challenges. volume 7085 of Lecture Notes in Computer Science. 2012:109–119.

- Marques J, Clemmensen LKH, Dam E. 1st International Workshop on Sparsity Techniques in Medical Imaging (STMI) MICCAI 2012; 2012. Diagnosis and prognosis of ostheoarthritis by texture analysis using sparse linear models.

- McDonald JH. Handbook of biological statistics. volume 2. Sparky House Publishing Baltimore. 2009.

- McLeod K, Mansi T, Sermesant M, Pongiglione G, Pennec X. Modeling in Computational Biology and Biomedicine. Springer; 2013. Statistical shape analysis of surfaces in medical images applied to the tetralogy of Fallot heart; pp. 165–191.

- Meier L, Van De Geer S, Bühlmann P. The group Lasso for logistic regression. Journal of the Royal Statistical Society. Series B. 2008;70:53–71.

- Ng B, Vahdat A, Hamarneh G, Abugharbieh R. Generalized sparse classifiers for decoding cognitive states in fMRI. Machine Learning in Medical Imaging. volume 6357 of Lecture Notes in Computer Science. 2010:108–115.

- Osman NF, Kerwin WS, McVeigh ER, Prince JL. Cardiac motion tracking using CINE harmonic phase (HARP) magnetic resonance imaging. Magnetic Resonance in Medicine. 1999;42:1048–1060. doi: 10.1002/(sici)1522-2594(199912)42:6<1048::aid-mrm9>3.0.co;2-m.

- Qian Z, Liu Q, Metaxas DN, Axel L. Identifying regional cardiac abnormalities from myocardial strains using nontracking-based strain estimation and spatio-temporal tensor analysis. IEEE Transactions on Medical Imaging. 2011;30:2017–2029. doi: 10.1109/TMI.2011.2156805.

- Qu Y, Adam BL, Thornquist M, Potter JD, Thompson ML, Yasui Y, Davis J, Schellhammer PF, Cazares L, Clements M, Wright GL, Feng Z. Data reduction using a discrete wavelet transform in discriminant analysis of very high dimensionality data. Biometrics. 2003;59:143–151. doi: 10.1111/1541-0420.00017.

- Rao A, Lee Y, Gass A, Monsch A. Classification of Alzheimer's disease from structural MRI using sparse logistic regression with optional spatial regularization. In: Engineering in Medicine and Biology Society, EMBC, 2011 Annual International Conference of the IEEE. 2011:4499–4502. doi: 10.1109/IEMBS.2011.6091115.

- Rokach L. Ensemble-based classifiers. Artificial Intelligence Review. 2010;33:1–39.

- Ryali S, Supekar K, Abrams DA, Menon V. Sparse logistic regression for whole-brain classification of fMRI data. NeuroImage. 2010;51:752–764. doi: 10.1016/j.neuroimage.2010.02.040.

- Sabuncu M. A universal and efficient method to compute maps from image-based prediction models. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2014. volume 8675 of Lecture Notes in Computer Science. 2014:353–360. doi: 10.1007/978-3-319-10443-0_45.

- Schellen C, Ernst S, Gruber GM, Mlczoch E, Weber M, Brugger PC, Ulm B, Langs G, Salzer-Muhar U, Prayer D, Kasprian G. Fetal MRI detects early alterations of brain development in Tetralogy of Fallot. American Journal of Obstetrics and Gynecology. 2015;213:392.e1–392.e7. doi: 10.1016/j.ajog.2015.05.046.

- Serag A, Gousias I, Makropoulos A, Aljabar P, Hajnal J, Boardman J, Counsell S, Rueckert D. Unsupervised learning of shape complexity: Application to brain development. Spatio-temporal Image Analysis for Longitudinal and Time-Series Image Data. volume 7570 of Lecture Notes in Computer Science. 2012:88–99.

- Sermesant M, Forest C, Pennec X, Delingette H, Ayache N. Deformable biomechanical models: Application to 4D cardiac image analysis. Medical Image Analysis. 2003;7:475–488. doi: 10.1016/s1361-8415(03)00068-9.

- Sundar H, Litt H, Shen D. Estimating myocardial motion by 4D image warping. Pattern Recognition. 2009;42:2514–2526. doi: 10.1016/j.patcog.2009.04.022.

- Toews M, Wells WM III, Zöllei L. A feature-based developmental model of the infant brain in structural MRI. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2012. volume 7511 of Lecture Notes in Computer Science. 2012:204–211. doi: 10.1007/978-3-642-33418-4_26.

- Vounou M, Janousova E, Wolz R, Stein JL, Thompson PM, Rueckert D, Montana G. Sparse reduced-rank regression detects genetic associations with voxel-wise longitudinal phenotypes in Alzheimer's disease. NeuroImage. 2012;60:700–716. doi: 10.1016/j.neuroimage.2011.12.029.

- Wald RM, Haber I, Wald R, Valente AM, Powell AJ, Geva T. Effects of regional dysfunction and late gadolinium enhancement on global right ventricular function and exercise capacity in patients with repaired tetralogy of Fallot. Circulation. 2009;119:1370–1377. doi: 10.1161/CIRCULATIONAHA.108.816546.

- Wang H, Amini A, et al. Cardiac motion and deformation recovery from MRI: a review. IEEE Transactions on Medical Imaging. 2012;31:487–503. doi: 10.1109/TMI.2011.2171706.

- Wu F, Yuan Y, Zhuang Y. Proceedings of the International Conference on Multimedia. ACM; New York, NY, USA: 2010. Heterogeneous feature selection by group Lasso with logistic regression; pp. 983–986.

- Yamashita O, Sato M, Yoshioka T, Tong F, Kamitani Y. Sparse estimation automatically selects voxels relevant for the decoding of fMRI activity patterns. NeuroImage. 2008;42:1414–1429. doi: 10.1016/j.neuroimage.2008.05.050.

- Ye DH, Desjardins B, Hamm J, Litt H, Pohl KM. Regional manifold learning for disease classification. IEEE Transactions on Medical Imaging. 2014;33:1236–1247. doi: 10.1109/TMI.2014.2305751.

- Yu Y, Zhang S, Li K, Metaxas D, Axel L. Deformable models with sparsity constraints for cardiac motion analysis. Medical Image Analysis. 2014;18:927–937. doi: 10.1016/j.media.2014.03.002.

- Zhang D, Shen D. Multi-modal multi-task learning for joint prediction of multiple regression and classification variables in Alzheimer's disease. NeuroImage. 2012;59:895–907. doi: 10.1016/j.neuroimage.2011.09.069.

- Zhang H, Wahle A, Johnson RK, Scholz TD, Sonka M. 4-D cardiac MR image analysis: left and right ventricular morphology and function. IEEE Transactions on Medical Imaging. 2010a;29:350–364. doi: 10.1109/TMI.2009.2030799.

- Zhang J, Peng Q, Li Q, Jahanshad N, Hou Z, Jiang M, Masuda N, Langbehn DR, Miller MI, Mori S, et al. Longitudinal characterization of brain atrophy of a Huntington's disease mouse model by automated morphological analyses of magnetic resonance images. NeuroImage. 2010b;49:2340–2351. doi: 10.1016/j.neuroimage.2009.10.027.

- Zhang S, Zhan Y, Metaxas DN. Deformable segmentation via sparse representation and dictionary learning. Medical Image Analysis. 2012;16:1385–1396. doi: 10.1016/j.media.2012.07.007.

- Zhang Y, Pohl KM. Solving logistic regression with group cardinality constraints for time series analysis. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. volume 9351 of Lecture Notes in Computer Science. 2015:459–466. doi: 10.1007/978-3-319-24574-4_55.