Abstract

Subthalamic nucleus (STN) deep brain stimulation in Parkinson’s disease induces modifications in the recognition of emotion from voices (or emotional prosody). Nevertheless, the underlying mechanisms are still only poorly understood, and the role of acoustic features in these deficits has yet to be elucidated. Our aim was to identify the influence of acoustic features on changes in emotional prosody recognition following STN stimulation in Parkinson’s disease. To this end, we analysed the performances of patients on vocal emotion recognition in pre- versus post-operative groups, as well as of matched controls, entering the acoustic features of the stimuli into our statistical models. Analyses revealed that the post-operative biased ratings on the Fear scale when patients listened to happy stimuli were correlated with loudness, while the biased ratings on the Sadness scale when they listened to happiness were correlated with fundamental frequency (F0). Furthermore, disturbed ratings on the Happiness scale when the post-operative patients listened to sadness were found to be correlated with F0. These results suggest that inadequate use of acoustic features following subthalamic stimulation has a significant impact on emotional prosody recognition in patients with Parkinson’s disease, affecting the extraction and integration of acoustic cues during emotion perception.

Keywords: Basal ganglia, Deep brain stimulation, Parkinson’s disease, Emotional prosody, Subthalamic nucleus

1. Introduction

By demonstrating that subthalamic nucleus (STN) deep brain stimulation (DBS) in Parkinson’s disease induces modifications in emotion processing, previous research has made it possible to infer the functional involvement of the STN in this domain (see, Péron, Frühholz, Vérin, & Grandjean, 2013 for a review). STN DBS in Parkinson’s disease has been reported to induce modifications in all the emotional components studied so far (subjective feeling, motor expression of emotion, arousal, action tendencies, cognitive processes, and emotion recognition), irrespective of stimulus valence (positive or negative) and sensory-input modality. In emotion recognition, for instance, these patients exhibit deficits or impairments both for facial emotion (Biseul et al., 2005; Drapier et al., 2008; Dujardin et al., 2004; Le Jeune et al., 2008; Péron, Biseul, et al., 2010; Schroeder et al., 2004) and for vocal emotion: so-called emotional prosody (Bruck, Wildgruber, Kreifelts, Kruger, & Wachter, 2011; Péron, Grandjean, et al., 2010).

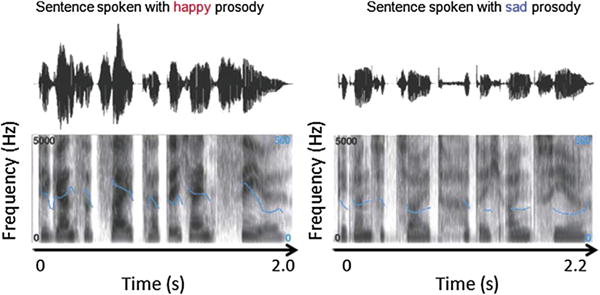

Emotional prosody refers to the suprasegmental and segmental changes that take place in the course of a spoken utterance, affecting physical properties such as amplitude, timing, and fundamental frequency (F0), the last of these being perceived as pitch (Grandjean, Banziger, & Scherer, 2006). An additional cue to emotion is voice quality, the percept derived from the energy distribution of a speaker’s frequency spectrum, which can be described using adjectives such as shrill or soft, and can have an impact at both the segmental and the suprasegmental levels (Schirmer & Kotz, 2006). Emotional prosody recognition has been shown to correlate with perceived modulations of these different acoustic features during an emotional episode experienced by the speaker. In the prototypical example illustrated in Fig. 1, taken from Schirmer and Kotz (2006), happiness is characterized by a rapid speech rate, by high intensity, and by high mean F0 and F0 variability, making vocalizations sound both melodic and energetic. By contrast, sad vocalizations are characterized by a slow speech rate, by low intensity, and by low mean F0 and F0 variability, but have high spectral noise, resulting in the impression of a broken voice (Banse & Scherer, 1996). Thus, understanding a vocal emotional message requires the analysis and integration of a variety of acoustic cues.

Fig. 1.

Oscillograms (top panels) and spectrograms (bottom panels) of the German sentence “Die ganze Zeit hatte ich ein Ziel” (“During all this time I had one goal”). The sentence is shorter when produced with a happy prosody (2 sec) than with a sad one (2.2 sec). The speech is also louder, as can be seen by comparing the sound envelopes illustrated in the oscillograms. This envelope is larger (i.e., it deviates more from baseline) for happy than for sad prosody. Spectral differences between happy and sad prosody are illustrated in the spectrograms. The dark shading indicates the energy of frequencies up to 5000 Hz. The superimposed blue lines represent the fundamental frequency (F0) contour, which is perceived as speech melody. This contour shows greater variability and a higher mean for happy than for sad prosody. Reproduced with permission (N°3277470398909) from Schirmer and Kotz (2006).

The perception and decoding of emotional prosody has been studied in functional magnetic resonance imaging (fMRI) and patient studies, allowing researchers to delineate a distributed neural network involved in the identification and recognition of emotional prosody (Ethofer, Anders, Erb, Droll, et al., 2006; Ethofer, Anders, Erb, Herbert, et al., 2006; Ethofer et al., 2012; Frühholz, Ceravolo, & Grandjean, 2012; Grandjean, Sander, Lucas, Scherer, & Vuilleumier, 2008; Grandjean et al., 2005; Sander et al., 2005; Schirmer & Kotz, 2006; Wildgruber, Ethofer, Grandjean, & Kreifelts, 2009). Accordingly, models of emotional prosody processing have long postulated that information is processed in multiple successive stages related to different levels of representations (see Witteman, Van Heuven, & Schiller, 2012 for a review). Following the processing of auditory information in the primary and secondary auditory cortices (Bruck, Kreifelts, & Wildgruber, 2011; Wildgruber et al., 2009), with the activation of predominantly right-hemispheric regions (Banse & Scherer, 1996; Grandjean et al., 2006) (Stage 1), two successive stages of prosody decoding have been identified. The second stage, related to the representation of meaningful suprasegmental acoustic sequences, is thought to involve projections from the superior temporal gyrus (STG) to the anterior superior temporal sulcus (STS). These cortical structures have been identified as forming the so-called temporal voice-sensitive area (Belin & Zatorre, 2000; Grandjean et al., 2005) made up of voice-sensitive neuronal populations. In the third stage, emotional information is made available by the STS for higher order cognitive processes mediated by the right inferior frontal gyrus (IFG) (Frühholz & Grandjean, 2013b) and orbitofrontal cortex (OFC) (Ethofer, Anders, Erb, Herbert, et al., 2006; Grandjean et al., 2008; Sander et al., 2005; Wildgruber et al., 2004). 
This stage appears to be related to the explicit evaluation of vocally expressed emotions.

In addition to this frontotemporal network, increased activity has also been observed within the amygdaloid nuclei in response to emotional prosody (Frühholz et al., 2012; Frühholz & Grandjean, 2013a; Grandjean et al., 2005; Sander et al., 2005). Although it was not their focus, these studies have also reported the involvement of subcortical regions (other than the amygdaloid nuclei) in the processing of emotional prosody, such as the thalamus (Wildgruber et al., 2004) and the basal ganglia (BG). The involvement of the caudate and putamen has repeatedly been observed in fMRI, patient, and electroencephalography studies (Bach et al., 2008; Frühholz et al., 2012; Grandjean et al., 2005; Kotz et al., 2003; Morris, Scott, & Dolan, 1999; Paulmann, Pell, & Kotz, 2008; Paulmann, Pell, & Kotz, 2009; Sidtis & Van Lancker Sidtis, 2003). More recently, the studies exploring the emotional effects of STN DBS in Parkinson’s disease have highlighted the potential involvement of the STN in the brain network subtending emotional prosody processing (Bruck, Wildgruber, et al., 2011; see also, Péron et al., 2013 for a review; Péron, Grandjean, et al., 2010). In the study by Péron, Grandjean, et al. (2010), an original emotional prosody paradigm was administered to post-operative Parkinson’s patients, preoperative Parkinson’s patients, and matched controls. Results showed that, compared with the other two groups, the post-operative group exhibited a systematic emotional bias, with emotions being perceived more strongly. More specifically, contrasts notably revealed that, compared with preoperative patients and healthy matched controls, the post-operative group rated “happiness” more intensely when they listened to fearful stimuli, and they rated “surprise” significantly more intensely when they listened to angry or fearful utterances. 
Interestingly, a recent high-resolution fMRI study in healthy participants reinforced the hypothesis that the STN plays a functional role in emotional prosody processing, reporting left STN activity during a gender task that compared angry voices with neutral stimuli (Frühholz et al., 2012; Péron et al., 2013). It is worth noting that, while these results seem to confirm the involvement of the BG, with further supporting evidence coming from numerous sources (for a review, see Gray & Tickle-Degnen, 2010; see also Péron, Dondaine, Le Jeune, Grandjean, & Verin, 2012), most models of emotional prosody processing fail to specify the functional role of either the BG in general or the STN in particular, although some authors have attempted to do so.

Paulmann et al. (2009), for instance, suggested that the BG are involved in integrating emotional information from different sources. Among other things, they are thought to play a functional role in matching acoustic speech characteristics such as perceived pitch, duration, and loudness (i.e., prosodic information) with semantic emotional information. Kotz and Schwartze (2010) elaborated on this suggestion by underlining the functional role of the BG in decoding emotional prosody. They postulated that these deep structures are involved in the rhythmic aspects of speech decoding. The BG therefore seem to be involved in the early stage, and above all, the second stage of emotional prosody processing (see earlier for a description of the multistage models of emotional prosody processing).

From the emotional effects of STN DBS reported in the Parkinson’s disease literature, Péron et al. (2013) have posited that the BG and, more specifically, the STN, coordinate neural patterns, either synchronizing or desynchronizing the activity of the different neuronal populations involved in specific emotion components. They claim that the STN plays “the role of neural rhythm organizer at the cortical and subcortical levels in emotional processing, thus explaining why the BG are sensitive to both the temporal and the structural organization of events” (Péron et al., 2013). Their model incorporates the proposal put forward by Paulmann et al. (2009) and elaborated on by Kotz and Schwartze (2010), but goes one step further by suggesting that the BG and, more specifically, the STN, are sensitive to rhythm because of their intrinsic, functional role as rhythm organizer or coordinator of neural patterns.

In this context, the exact contribution of the STN and, more generally, the BG, to emotional prosody decoding remains to be clarified. More specifically, the questions of the interaction between the effects of STN DBS per se and the nature of the auditory emotional material (e.g., its acoustic features), as well as of the impact that DBS might have on the construction of the acoustic object/auditory percept, have yet to be resolved. The influence of acoustic features on emotional prosody recognition in patients with Parkinson’s disease undergoing STN DBS has not been adequately accounted for to date, even though this question is of crucial interest since, as explained earlier, evidence gathered from fMRI and lesion models has led to the hypothesis that the BG play a critical and potentially direct role in the integration of the acoustic features of speech, especially in rhythm perception (Kotz & Schwartze, 2010; Pell & Leonard, 2003).

From the results of an 18F-fludeoxyglucose positron emission tomography (18FDG-PET) study comparing resting-state glucose metabolism before and after STN DBS in Parkinson’s disease (Le Jeune et al., 2010), we postulated that acoustic features have an impact on the emotional prosody disturbances observed following STN DBS. This study indeed showed that STN DBS modifies metabolic activity across a large and distributed network encompassing areas known to be involved in the different stages of emotional prosody decoding (notably the second and third stages in Schirmer and Kotz’s (2006) model, with clusters found in the STG and STS regions) (Le Jeune et al., 2010).

In this context, the aim of the present study was to pinpoint the influence of acoustic features on changes in emotional prosody recognition following STN DBS in Parkinson’s disease. To this end, we analysed the vocal emotion recognition performances of 21 Parkinson’s patients in a preoperative condition, 21 Parkinson’s patients in a post-operative condition, and 21 matched healthy controls (HC), derived from the data published in a previous study (Péron, Grandjean, et al., 2010), by entering the acoustic features of the stimuli into our statistical models as covariates of interest. This validated emotional prosody recognition task (Péron et al., 2011; Péron, Grandjean, Drapier, & Vérin, 2014; Péron, Grandjean, et al., 2010) has proven to be relevant for studying the affective effects of STN DBS in PD patients, notably because of its sensitivity (Péron, 2014). The use of visual (continuous) analogue scales is indeed far more sensitive to emotional effects than are categorization and forced-choice tasks (naming of emotional faces and emotional prosody), chiefly because visual analogue scales do not induce categorization biases (K.R. Scherer & Ekman, 2008).

2. Materials and methods

2.1. Participants and methods

The performance data from two groups of patients with Parkinson’s disease (preoperative and post-operative groups) and an HC group, as described in a previous study (Péron, Grandjean, et al., 2010), were included in the current study (N = 21 in each group). The two patient groups were comparable for disease duration and cognitive functions, as well as for dopamine replacement therapy, calculated on the basis of correspondences adapted from Lozano et al. (1995). All three groups were matched for sex, age, and education level. After receiving a full description of the study, all the participants provided their written informed consent. The study was conducted in accordance with the Declaration of Helsinki. The characteristics of the two patient groups and the HC group are presented in Table 1.

Table 1.

Sociodemographic and motor data for the two groups of PD patients and the HC group.

| | Pre-op (n = 21) | Post-op (n = 21) | HC (n = 21) | Stat. val. (F) | df | p value |
|---|---|---|---|---|---|---|
| | Mean ± SD | Mean ± SD | Mean ± SD | | | |
| Age | 59.5 ± 7.9 | 58.8 ± 7.4 | 58.2 ± 8.0 | <1 | 1, 40 | .88 |
| Disease duration | 11.0 ± 3.6 | 11.3 ± 4.1 | – | <1 | 1, 40 | .81 |
| DRT (mg) | 973.6 ± 532.3 | 828.3 ± 523.8 | – | <1 | 1, 40 | .38 |
| UPDRS III On-dopa-on-stim | – | 6.8 ± 4.3 | – | – | – | |
| UPDRS III On-dopa-off-stim | 9.5 ± 6.9 | 13.7 ± 8.8 | – | 2.43 | 1, 40 | .13 |
| UPDRS III Off-dopa-on-stim | – | 14.3 ± 6.9† | – | – | – | |
| UPDRS III Off-dopa-off-stim | 27.6 ± 13.5 | 34.3 ± 8.0 | – | 2.78 | 1, 40 | .11 |
| H&Y On | 1.3 ± 0.6 | 1.3 ± 1.0 | – | <1 | 1, 40 | .86 |
| H&Y Off | 2.1 ± 0.7 | 2.3 ± 1.2 | – | <1 | 1, 40 | .67 |
| S&E On | 92.8 ± 7.8 | 84.7 ± 13.2 | – | 5.79 | 1, 40 | .02* |
| S&E Off | 74.7 ± 13.6 | 66.1 ± 21.0 | – | 2.45 | 1, 40 | .13 |

Statistical values (stat. val.), degrees of freedom (df), and p-values between preoperative (pre-op), post-operative (post-op), and HC (healthy control) groups are reported (single-factor analysis of variance).

PD = Parkinson’s disease; DRT = dopamine replacement therapy; UPDRS = Unified Parkinson’s Disease Rating Scale; H&Y = Hoehn and Yahr scale; S&E = Schwab and England scale.

† p < .0001 when compared with the UPDRS III off-dopa-off-stim score (pairwise t-tests for two independent groups).

\* Significant if p-value < .05.

All the Parkinson’s patients (preoperative and postoperative) underwent motor (Core Assessment Program for Intracerebral Transplantation; Langston et al., 1992), neuropsychological (Mattis Dementia Rating Scale, a series of tests assessing frontal executive functions and a scale assessing depression; Mattis, 1988), and emotional prosody assessments (see below). All the patients were on their normal dopamine replacement therapy (i.e., they were “on-dopa”) when they performed the neuropsychological and emotional assessments. In the post-operative condition, the patients were on-dopa and on-stimulation. The overall neurosurgical methodology for the post-operative group was similar to that previously described by Benabid et al. (2000) and is extensively described in the study by Péron, Biseul, et al. (2010).

The motor, neuropsychological, and psychiatric results are set out in full in Péron, Biseul, et al. (2010) and are also shown in Tables 1 and 2. These results globally showed motor improvement induced by the surgery (UPDRS III off-dopa-off-stim versus off-dopa-on-stim scores in the post-operative Parkinson’s patient group; t(20) = 8.86, p < .0001), as well as a higher score on the depression scale for both patient groups compared with HC. There was no significant difference between the three groups for any of the neuropsychological variables.

Table 2.

Neuropsychological background data for the two groups of PD patients and the HC group.

| | | Pre-op (n = 21) | Post-op (n = 21) | HC (n = 21) | Stat. val. (F) | df | p-value |
|---|---|---|---|---|---|---|---|
| | | Mean ± SD | Mean ± SD | Mean ± SD | | | |
| MMSE | | – | – | 29.0 ± 0.8 | – | – | |
| Mattis (of 144) | | 141.1 ± 2.3 | 139.9 ± 2.8 | 140.9 ± 2.0 | 1.43 | 2, 60 | .25 |
| Stroop | Interference | 3.8 ± 10.2 | 2.1 ± 8.6 | 6.8 ± 9.2 | 1.34 | 2, 60 | .27 |
| TMT | A (seconds) | 42.5 ± 13.9 | 50.0 ± 20.6 | 42.7 ± 15.1 | 1.35 | 2, 60 | .27 |
| | B (seconds) | 95.5 ± 44.7 | 109.7 ± 49.3 | 91.5 ± 42.7 | <1 | 2, 60 | .40 |
| | B-A (seconds) | 52.9 ± 37.0 | 59.7 ± 39.9 | 48.8 ± 34.9 | <1 | 2, 60 | .64 |
| Verbal fluency | Categorical | 34.8 ± 9.4 | 29.0 ± 12.3 | 32.0 ± 9.0 | 1.61 | 2, 60 | .21 |
| | Phonemic | 24.7 ± 7.2 | 21.0 ± 7.4 | 20.8 ± 6.1 | 2.12 | 2, 60 | .13 |
| | Action verbs | 16.7 ± 5.8 | 14.9 ± 5.3 | 17.8 ± 6.5 | 1.31 | 2, 60 | .28 |
| MCST | Categories | 5.5 ± 0.8 | 5.6 ± 0.7 | 5.9 ± 0.2 | 1.90 | 2, 60 | .16 |
| | Errors | 4.4 ± 4.5 | 2.9 ± 3.9 | 2.3 ± 1.9 | 1.80 | 2, 60 | .17 |
| | Perseverations | 1.0 ± 1.6 | 1.6 ± 3.0 | 0.4 ± 0.6 | 1.70 | 2, 60 | .19 |
| MADRS | | 5.7 ± 8.1 | 5.6 ± 4.9 | 1.6 ± 2.06 | 3.48 | 2, 60 | .03* |

Statistical values (stat. val.), degrees of freedom (df), and p-values between preoperative (pre-op), post-operative (post-op), and HC (healthy control) groups are reported (single-factor analysis of variance).

PD = Parkinson’s disease; MMSE = Mini Mental State Examination; TMT = Trail Making Test; MCST = modified version of the Wisconsin Card Sorting Test; MADRS = Montgomery-Asberg Depression Rating Scale.

\* Significant if p-value < .05.

2.2. Extraction of acoustic features from the original stimuli

We extracted several relevant acoustic features from the original stimuli in order to enter them as covariates in the statistical models.

2.2.1. Original vocal stimuli

The original vocal stimuli, consisting of meaningless speech (short pseudosentences), were selected from the database developed by Banse and Scherer (1996) and validated in their study. These pseudosentences were obtained by concatenating pseudowords (composed of syllables found in Indo-European languages so that they would be perceived as natural utterances) featuring emotional intonation (across different cultures) but no semantic content. Five different categories of prosody, four emotional (anger, fear, happiness, and sadness) and one neutral, were used in the study (60 stimuli, 12 in each condition). The mean duration of the stimuli was 2044 msec (range: 1205–5236 msec). An analysis of variance failed to reveal any significant difference in duration between the different prosodic categories (neutral, angry, fearful, happy, and sad), F(4, 156) = 1.43, p > .10, and there was no significant difference in the mean acoustic energy expended, F(4, 156) = 1.86, p > .10 (none of the systematic pairwise comparisons between the neutral condition and the emotional prosodies were significant, F < 1 for all comparisons). Likewise, there was no significant difference between the categories in the standard deviation of the mean energy of the sounds, F(4, 156) = 1.9, p > .10.

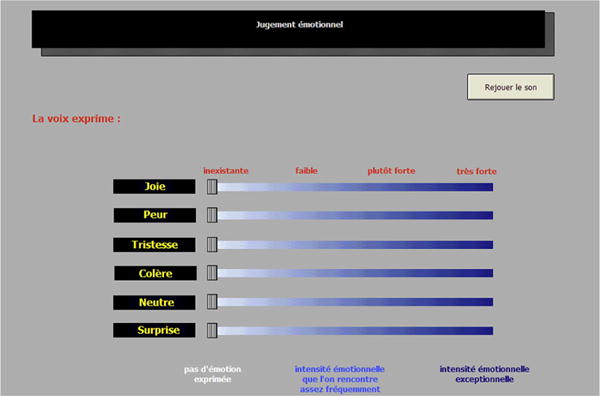

2.2.2. Original vocal emotion recognition procedure

All the stimuli were presented bilaterally through stereo headphones by using an Authorware programme developed specially for this study. Participants were told they would hear meaningless speech uttered by male and female actors, and that these actors would express emotions through their utterances. Participants were required to judge the extent to which the different emotions were expressed on a series of visual analogue scales ranging from not at all to very much. Participants rated six scales: one scale for each emotion presented (anger, fear, happiness, and sadness), one for the neutral utterance, and one for surprise. The latter was included in order to see whether the expression of fear by the human voice would be confused with surprise, as is the case with facial expressions (Ekman, 2003; K. R. Scherer & Ellgring, 2007). An example of the computer interface used for the recognition of emotional prosody task is provided in Appendix 1. To ensure that participants had normal hearing, they were assessed by means of a standard audiometric screening procedure (AT-II-B audiometric test) to measure tonal and vocal sensitivity.

2.2.3. Extraction of selected acoustic features

The set of acoustic features consisted of metrics that are commonly used to describe human vocalizations, in particular speech and emotional prosody (Sauter, Eisner, Calder, & Scott, 2010). These features were extracted from the original stimuli by using Praat software (Boersma & Weenink, 2013). For amplitude, we extracted the utterance duration, as well as the mean, minimum, maximum, and standard deviation values of intensity and loudness. For pitch, we extracted the mean, minimum, maximum, and standard deviation values, as well as the range.
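As a rough illustration of the descriptive statistics listed above, the following Python sketch summarizes a frame-wise F0 contour by its mean, minimum, maximum, standard deviation, and range. It assumes that the contour has already been exported from Praat as a list of values in Hz, with unvoiced frames stored as `None`. The function name `summarize_contour` and the sample values are hypothetical, and the use of the sample (rather than population) standard deviation is an assumption.

```python
import statistics


def summarize_contour(values):
    """Return descriptive statistics for a frame-wise contour (e.g., F0 in Hz).

    Frames stored as None (assumed here to mark unvoiced frames) are ignored.
    """
    voiced = [v for v in values if v is not None]
    if len(voiced) < 2:
        raise ValueError("need at least two voiced frames")
    lowest, highest = min(voiced), max(voiced)
    return {
        "mean": statistics.mean(voiced),
        "min": lowest,
        "max": highest,
        "sd": statistics.stdev(voiced),  # sample SD (an assumption)
        "range": highest - lowest,
    }


# Hypothetical contour, not taken from the study's stimuli.
f0_contour = [None, 200.0, 220.0, 240.0, None, 260.0, 280.0]
features = summarize_contour(f0_contour)
print(features)
```

The same function could in principle be applied to an intensity or loudness contour, since it only relies on a list of numeric frame values.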

2.3. Statistical analysis

2.3.1. Specificity of the data and zero-inflated methods

When we looked at the distribution of the emotional judgement data, we found that it presented a pattern that is frequently encountered in emotion research: The zero value was extensively represented compared with the other values on the response scales. We therefore decided to model the data by using a zero-inflated distribution, such as the zero-inflated Poisson or zero-inflated negative binomial distribution (Hall, 2000). This method has been previously validated in the emotional domain (McKeown & Sneddon, 2014; Milesi et al., 2014).

These zero-inflated models are estimated in two parts, theoretically corresponding to two data-generating processes: (i) the first part consists in fitting the excess zero values by using a generalized linear model (GLM) with a binary response; and (ii) the second part, which is the one that interested us here, consists in fitting the rest of the data, as well as the remaining zero values, by using a GLM with the response distributed as a Poisson or a negative binomial variable. In our case, we specifically used a zero-inflated negative binomial mixed model, as this allowed us to estimate a model with random and fixed effects that took the pattern of excess zeros (zero-inflated data) into account. It should be noted that the binary response model contained only an intercept, as its purpose was to control for the excess zeros and not to explicitly estimate the impact of acoustic features on the excess zeros versus the rest of the data. Statistical analyses of interest were performed by using the glmmADMB package in R 2.15.2.
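The two-part structure described above can be illustrated with a minimal Python sketch of the zero-inflated negative binomial probability mass function. This is not the glmmADMB implementation and it omits the random effects. It assumes that ratings are treated as non-negative integer counts, and it parameterizes the negative binomial by its mean `mu` and a dispersion parameter `theta` (variance = mu + mu²/theta). The function names and parameter values are hypothetical.

```python
import math


def nb_log_pmf(k, mu, theta):
    """Log-probability of count k under a negative binomial with mean mu
    and dispersion parameter theta (variance = mu + mu**2 / theta)."""
    return (
        math.lgamma(k + theta) - math.lgamma(theta) - math.lgamma(k + 1)
        + theta * math.log(theta / (theta + mu))
        + k * math.log(mu / (theta + mu))
    )


def zinb_pmf(k, mu, theta, pi_zero):
    """Probability of count k under a zero-inflated negative binomial.

    pi_zero is the probability of an excess (structural) zero; with
    probability 1 - pi_zero the count comes from the negative binomial part,
    which can itself produce zeros.
    """
    nb = math.exp(nb_log_pmf(k, mu, theta))
    if k == 0:
        return pi_zero + (1.0 - pi_zero) * nb
    return (1.0 - pi_zero) * nb


# Hypothetical parameter values, chosen only for illustration.
mu, theta, pi_zero = 10.0, 1.5, 0.4
p_zero = zinb_pmf(0, mu, theta, pi_zero)
total = sum(zinb_pmf(k, mu, theta, pi_zero) for k in range(2000))
print(round(p_zero, 3), round(total, 6))
```

In this sketch, the probability of a zero rating exceeds `pi_zero` because zeros arise from both parts of the mixture, which is why the count part is said to fit "the remaining zero values" as well as the non-zero data.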

2.3.2. Levels of analysis

The aim of the present study was to pinpoint the influence of acoustic features on changes in emotional prosody recognition following STN DBS in Parkinson’s disease. In this context, we performed three levels of analyses.

The first level of analysis served to assess the effect of group on each response scale for each prosodic category. To this end, we tested the main effect of group for each scale and each emotion, controlling for all the main effects on the remaining scales and emotions.

Second, in order to assess the differential impact of acoustic features on emotional judgements between groups (preoperative, post-operative, HC), we examined the statistical significance of the interaction effects between group and each acoustic feature of interest. We chose to focus on this second level of analysis for the experimental conditions in which the post-operative group was found to have performed significantly differently from both the preoperative and HC groups in the first level of analysis in order to reduce the number of comparisons. For this second level of analysis, the acoustic features were split into two different sets. The first set contained duration, and the acoustic features related to intensity (minimum, maximum, mean, SD) and loudness (minimum, maximum, mean, SD), and the second set included the acoustic features related to pitch (minimum, maximum, mean, SD). We tested each interaction effect between group and acoustic feature separately, controlling for the interaction effects between groups and the remaining acoustic features. The results therefore had to be interpreted in this classification context. For example, if the interaction effect between group and minimum intensity was significant, it would mean that at least two groups responded differently from each other as a function of the minimum intensity value, given that all the main effects of group and of the acoustic features belonging to the first set of parameters, as well as all the interaction effects between these same factors (except for the interaction of interest), were taken into account.
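To make the meaning of a Group × Acoustic feature interaction concrete, the sketch below computes the expected rating under a log-link model in which each group has its own intercept offset and its own slope offset for one acoustic feature. It is a simplified illustration under stated assumptions: HC is taken as the reference level, only one feature is shown, random effects and the zero-inflation part are left out, and all coefficient values are hypothetical rather than estimates from the study.

```python
import math

GROUPS = ("HC", "pre-op", "post-op")  # HC as reference level (an assumption)


def expected_rating(group, feature_value, coef):
    """Expected rating under a log-link model with a Group x Feature interaction.

    coef holds hypothetical coefficients: an intercept, a feature slope for the
    reference group, and per-group offsets for the intercept ("group") and for
    the slope ("interaction").
    """
    if group not in GROUPS:
        raise ValueError(f"unknown group: {group}")
    log_mu = (
        coef["intercept"]
        + coef["group"].get(group, 0.0)
        + (coef["slope"] + coef["interaction"].get(group, 0.0)) * feature_value
    )
    return math.exp(log_mu)


# Hypothetical coefficients, not estimates from the study.
coef = {
    "intercept": 1.0,
    "slope": 0.10,
    "group": {"pre-op": 0.05, "post-op": 0.30},
    "interaction": {"pre-op": 0.0, "post-op": 0.25},
}

for g in GROUPS:
    low, high = expected_rating(g, 0.0, coef), expected_rating(g, 1.0, coef)
    print(g, round(math.log(high / low), 2))  # slope on the log scale
```

With these illustrative values, the slope on the log scale for the post-operative group differs from that of the other two groups by exactly the interaction coefficient, which is the quantity a significant interaction effect would point to.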

Third and last, in order to assess whether the main effect of group on responses persisted after controlling for all the potential effects of acoustic features and the Group × Acoustic feature interactions, we tested whether the main effect of group remained significant when all the main effects of acoustic features and the Group × Acoustic feature interactions were taken into account. This analysis was also performed separately for the two sets of parameters, as described earlier.

The level of statistical significance was set at p = .05 for the first level of analysis; the p-value for the second and third levels of analysis was adjusted for multiple comparisons.
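The text does not state which correction for multiple comparisons was applied. Purely as an illustration of what such an adjustment involves, the sketch below applies a Bonferroni correction, which is one common option and not necessarily the one used here. The function name and the p-values are hypothetical.

```python
def bonferroni_adjust(p_values):
    """Multiply each p-value by the number of tests, capping the result at 1.

    Bonferroni is used here only as an example of a multiple-comparison
    adjustment; the source does not specify the method actually applied.
    """
    n_tests = len(p_values)
    return [min(1.0, p * n_tests) for p in p_values]


# Hypothetical p-values, not taken from the study's results.
raw_p = [0.004, 0.02, 0.5]
adjusted_p = bonferroni_adjust(raw_p)
significant = [p < 0.05 for p in adjusted_p]
print(adjusted_p, significant)
```

In this example, only the first test stays below the .05 threshold after adjustment, although two of the three raw p-values were below it.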

3. Results

3.1. First level of analysis: group effects (Table 3)

Table 3.

Means (and standard deviations (SDs)) of continuous judgement in the emotional prosody task for the two groups of PD patients (preoperative and post-operative) and the HC group.

| | Happiness scale | Fear scale | Sadness scale | Anger scale | Neutral scale | Surprise scale |
|---|---|---|---|---|---|---|
| | Mean ± SD | Mean ± SD | Mean ± SD | Mean ± SD | Mean ± SD | Mean ± SD |
| Preoperative (n = 21) | | | | | | |
| Anger | .90 ± 5.11 | 4.00 ± 12.14 | 1.72 ± 6.64 | 42.50 ± 33.29 | 5.73 ± 16.00 | 8.19 ± 18.14 |
| Fear | 1.84 ± 8.72 | 30.76 ± 31.72 | 9.42 ± 17.71 | 5.70 ± 16.45 | 6.17 ± 16.74 | 9.93 ± 18.15 |
| Happiness | 26.88 ± 30.02 | 6.93 ± 17.23 | 6.23 ± 17.10 | 5.03a ± 14.67 | 4.00 ± 12.42 | 13.49 ± 22.08 |
| Neutral | 4.80 ± 12.15 | .92 ± 4.06 | 1.46 ± 6.00 | .86 ± 6.13 | 32.28 ± 28.55 | 9.64 ± 17.44 |
| Sadness | .53 ± 2.41 | 9.52 ± 21.31 | 26.16 ± 30.50 | .98 ± 6.65 | 21.77 ± 27.06 | 2.30 ± 8.32 |
| Post-operative (n = 21) | | | | | | |
| Anger | 1.08 ± 5.36 | 10.19 ± 21.59 | 3.38 ± 11.64 | 53.00 ± 35.33 | 7.63 ± 19.15 | 12.56 ± 23.44 |
| Fear | 3.96 ± 13.75 | 42.85b ± 33.40 | 12.20a,b ± 21.41 | 10.29 ± 23.70 | 6.33 ± 16.75 | 19.74b ± 27.61 |
| Happiness | 33.35 ± 35.29 | 11.83a,b ± 23.45 | 10.66a,b ± 23.18 | 8.33b ± 20.54 | 6.75 ± 16.77 | 21.51 ± 29.17 |
| Neutral | 7.39 ± 16.31 | 3.54 ± 11.61 | 4.53 ± 13.47 | 1.64 ± 7.02 | 41.52 ± 34.59 | 17.19 ± 26.02 |
| Sadness | 2.37a,b ± 11.03 | 12.16 ± 22.88 | 39.51a,b ± 34.26 | 3.64 ± 12.44 | 23.47 ± 32.13 | 3.81 ± 11.18 |
| Healthy controls (n = 21) | | | | | | |
| Anger | .59 ± 3.99 | 3.89 ± 12.44 | 1.51 ± 6.51 | 50.70 ± 32.47 | 4.88 ± 15.09 | 6.99 ± 17.34 |
| Fear | 1.09 ± 6.69 | 39.38 ± 28.62 | 12.79 ± 21.19 | 8.83 ± 18.34 | 2.27 ± 10.42 | 11.10 ± 19.63 |
| Happiness | 28.67 ± 30.70 | 6.30 ± 15.81 | 8.97 ± 20.46 | 8.16 ± 19.06 | 1.49 ± 7.94 | 16.83 ± 25.59 |
| Neutral | 4.74 ± 12.56 | .84 ± 4.09 | 1.93 ± 7.39 | .36 ± 2.44 | 34.21 ± 29.77 | 14.82 ± 21.66 |
| Sadness | .72 ± 6.60 | 7.14 ± 18.38 | 33.19 ± 31.20 | 1.74 ± 9.21 | 21.36 ± 29.09 | 2.38 ± 9.02 |

PD = Parkinson’s disease.

a: Significant in comparison to healthy controls (HC).

b: Significant in comparison to the preoperative group.

Overall, analysis revealed a main effect of Emotion, F(4,240) = 38.93, p < .00001, a main effect of Group, F(2,60) = 7.25, p = .001, and, more interestingly, a Group × Emotion × Scale interaction, F(4,1200) = 1.71, p = .003, showing that the preoperative, post-operative, and HC groups displayed different patterns of responses across the different scales and emotions.

The experimental conditions in which the post-operative group performed significantly differently from both the preoperative and the HC groups were as follows:

- “Sadness” stimuli on the Happiness scale: When the stimulus was “sadness” and the scale Happiness, contrasts revealed a difference between the post-operative and the HC groups, z = 2.52, p = .01, and between the post-operative and the preoperative groups, z = 2.00, p = .05, but not between the preoperative and the HC groups, p = 1.0.

- “Sadness” stimuli on the Sadness scale: When the stimulus was “sadness” and the scale Sadness, contrasts showed a difference between the post-operative and the HC groups, z = 4.02, p < .001, and between the post-operative and the preoperative groups, z = 3.92, p < .001, but not between the preoperative and the HC groups, p .9.

- “Happiness” stimuli on the Fear scale: When the stimulus was “happiness” and the scale Fear, contrasts showed a difference between the post-operative and the HC groups, z = 2.78, p < .001, and between the post-operative and the preoperative groups, z = 2.79, p < .001, but not between the preoperative and the HC groups, p .8.

- “Happiness” stimuli on the Sadness scale: When the stimulus was “happiness” and the scale Sadness, contrasts showed a difference between the post-operative and the HC groups, z = 3.57, p < .001, and between the post-operative and the preoperative groups, z = 2.70, p < .001, but not between the preoperative and the HC groups, p .2.

- “Fear” stimuli on the Sadness scale: When the stimulus was “fear” and the scale Sadness, contrasts showed a difference between the post-operative and the HC groups, z = 3.93, p < .001, and between the post-operative and the preoperative groups, z = 2.47, p = .01, but not between the preoperative and the HC groups, p = .06.

We also observed the following results:

-

-

“Fear” stimuli on the Fear scale: When the stimulus was “ fear” and the scale Fear, contrasts failed to reveal a significant difference between the post-operative and the HC groups, z = 1.66, p = .1, or between the preoperative and the HC groups, p = .3, but there was a significant difference between the post-operative and the preoperative groups, z = 2.31, p = .02.

“Fear” stimuli on the Surprise scale: When the stimulus was “fear” and the scale Surprise, contrasts showed no significant difference between the post-operative and the HC groups, z = 1.75, p = .08, or between the preoperative and the HC groups, p = .2, but there was a significant difference between the post-operative and the preoperative groups, z = 2.38, p = .02.

“Happiness” stimuli on the Anger scale: When the stimulus was “happiness” and the scale Anger, contrasts did not reveal any significant difference between the post-operative and the HC groups, z = .85, p = .4, but there was a difference between the post-operative and the preoperative groups, z = 2.54, p = .01, and between the preoperative and the HC groups, z = 2.10, p = .04.
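As a reading aid, the relation between a z statistic and its p-value can be sketched as follows. This is a minimal illustration that assumes the contrasts are standard normal (Wald-type) tests with two-sided, unadjusted p-values; that assumption, the function name `two_sided_p`, and the labels are ours and are not stated in this section.

```python
import math


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a standard normal test statistic z.

    Uses the identity p = 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    """
    return math.erfc(abs(z) / math.sqrt(2.0))


# z values copied from three of the contrasts reported above
# (labels are shorthand introduced for this illustration).
examples = [
    ("sadness on Happiness scale, post-op vs HC", 2.52),
    ("fear on Fear scale, post-op vs pre-op", 2.31),
    ("happiness on Anger scale, post-op vs HC", 0.85),
]

for label, z in examples:
    print(f"{label}: z = {z:.2f}, two-sided p = {two_sided_p(z):.3f}")
```

Under these assumptions, the three examples round to the p-values reported in the text (.01, .02, and .4, respectively).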

3.2. Second level of analysis: differential impact of acoustic features on vocal emotion recognition between preoperative, post-operative, and HC groups (Table 4 and Fig. 2)

Table 4.

Differential impact of acoustic features on vocal emotion recognition between the preoperative, post-operative, and HC groups, after controlling for participant effect, excess zero pattern, and main effects of group and acoustic feature, as well as the effects of the remaining Group × Acoustic feature interactions.

| | Post-op vs HC | | Post-op vs Pre-op | | Pre-op vs HC | |
|---|---|---|---|---|---|---|
| | Stat. value | p-value | Stat. value | p-value | Stat. value | p-value |
| Happiness on Fear scale – duration | −2.41 | .02* | −2.23 | .02* | .74 | .4 |
| Happiness on Fear scale – max. loudness | 2.16 | .03 | 3.57 | <.01* | .79 | .4 |
| Happiness on Sadness scale – max. F0 | −2.58 | <.01* | −4.09 | <.001* | −.96 | .3 |
| Sadness on Happiness scale – mean F0 | −2.64 | <.01* | −2.78 | <.01* | .48 | .6 |
| Sadness on Happiness scale – min. F0 | 2.00 | .04 | 2.69 | <.01* | .19 | .8 |

F0 = fundamental frequency; HC = healthy controls; max. = maximum; min. = minimum; Post-op = post-operative group; Pre-op = preoperative group; Stat. value = statistical value.

\* Significant (corrected for multiple comparisons).

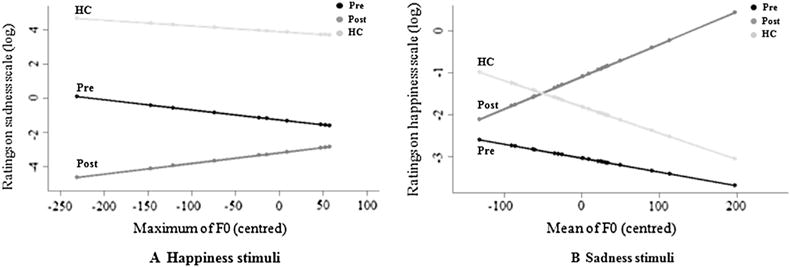

Fig. 2.

Differential impact of acoustic features on vocal emotion recognition between the preoperative, post-operative, and HC groups, after controlling for participant effect, excess zero pattern, and main effects of group and acoustic feature, as well as the effects of the remaining Group × Acoustic feature interactions. (A) Differential impact of maximum fundamental frequency (F0), perceived as pitch, on the Sadness scale when the stimulus was “happiness” between the preoperative (black), post-operative (dark grey), and HC (light grey) groups. (B) Differential impact of mean F0 on the Happiness scale when the stimulus was “sadness” between the preoperative (black), post-operative (dark grey), and HC (light grey) groups.

The results of the additional analyses, after controlling for the participant effect as a random effect, the excess zero pattern (see Statistical Analysis section), and the main effects of group and acoustic feature, as well as the effects of the remaining Group × Acoustic feature interactions, are set out in Table 4. Selected results of interest are provided in Fig. 2 (the significant effects are displayed in Table 4; the other effects were not significant, p > .1).
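For readers less familiar with excess-zero models, the generic form of a zero-inflated mixed model of the kind described by Hall (2000) can be sketched as below. This is an illustration only: the symbols ($\pi_{ij}$, $\mu_{ij}$, $a_j$, $b_i$, and the $\beta$ coefficients) are notation introduced here, and the distribution $f$ and link function $g$ actually used are those given in the Statistical Analysis section, which may differ from this generic form.

```latex
\begin{align}
P(Y_{ij} = 0) &= \pi_{ij} + (1 - \pi_{ij})\, f(0 \mid \mu_{ij}), \\
P(Y_{ij} = y) &= (1 - \pi_{ij})\, f(y \mid \mu_{ij}), \qquad y > 0, \\
g(\mu_{ij}) &= \beta_0 + \beta_{G}[\mathrm{group}_i] + \beta_{A}\, a_j
              + \beta_{G \times A}[\mathrm{group}_i]\, a_j + b_i,
              \qquad b_i \sim \mathcal{N}(0, \sigma_b^2).
\end{align}
```

Here $Y_{ij}$ stands for the rating given by participant $i$ to stimulus $j$, $\pi_{ij}$ for the probability of an excess zero, $a_j$ for the value of one acoustic feature of stimulus $j$, and $b_i$ for the participant random effect. In this notation, the contrasts in Table 4 compare the group-specific slopes $\beta_{G \times A}$ between pairs of groups.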

3.3. Third level of analysis: main effect of group on vocal emotion recognition, after controlling for all the main effects of acoustic features and the effects of the group × acoustic feature interactions

We failed to find any significant effects for the following statistical models: “happiness” stimuli on the Fear scale, pitch domain (z = 1.90, p = .4); “happiness” stimuli on the Sadness scale, pitch domain (z = 15.32, p = .1); and “sadness” stimuli on the Happiness scale, pitch domain (z = 4.43, p = .1). However, for “happiness” stimuli on the Fear scale, intensity domain (intensity–loudness–duration), we did find a significant main effect (z = 55.26, p < .0001). More specifically, we observed a significant difference between the post-operative and the HC groups (z = 5.07, p < .0001), as well as between the postoperative and the preoperative groups (z = 4.90, p < .0001), though not between the preoperative and the HC groups (z = .23, p = .8).

4. Discussion

The aim of the present study was to pinpoint the influence of acoustic features on changes in emotional prosody recognition following STN DBS in Parkinson’s disease. To this end, we analysed the vocal emotion recognition performances of 21 Parkinson’s patients in a preoperative condition, 21 Parkinson’s patients in a post-operative condition, and 21 HC, as published in a previous study (Péron, Grandjean, et al., 2010), by entering the acoustic features in our statistical models as covariates. We focused these additional analyses on results that differed significantly between the post-operative and preoperative groups, and between the post-operative and HC groups, but not between the preoperative and HC groups. Postulating that these results would reflect an emotional prosody deficit or bias specific to the post-operative condition, we performed three levels of analysis.

For the first level of analysis (group effects), we found that, compared with the preoperative and HC groups, the postoperative group rated the Sadness and Fear scales significantly more intensely when they listened to happy stimuli. Similarly, this same group gave higher ratings on the Sadness scale when they listened to fearful stimuli. Furthermore, contrasts revealed that, compared with the other two groups, the post-operative patients were biased in their ratings on the Happiness scale, providing significantly higher ratings on this scale when they listened to sad stimuli. This level of analysis enables us to replicate previous results, exploring emotional processing following STN DBS in PD (for a review, see Péron et al., 2013). These studies have yielded the observation that STN DBS in PD induces modifications in all components of emotion, irrespective of stimulus valence. More specifically, what DBS studies seem to show is that the STN decreases misattributions (or misclassifications) during emotional judgements. For example, when sensitive methodologies are used (e.g., a judgement task using visual analogue scales instead of a categorization task (Péron, Grandjean, et al., 2010; Vicente et al., 2009)), results show an increase in misattributions following STN DBS, rather than wholesale emotional dysfunction. It looks as if STN DBS either introduces “noise” into the system, or else prevents it from correctly inhibiting the non-relevant information and/or correctly activating the relevant information, causing emotional judgements to be disturbed.

In the present study, these specific emotionally biased ratings were investigated, in a second level of analysis, by entering acoustic features as covariates in a statistical model that took into account the specific distribution of the data, characterized by excess zero values. By taking this pattern into account, we were able to interpret the estimated effects for what they were, and not as artefacts arising from a misspecification of the actual structure of the data or a violation of the assumptions of the Gaussian distribution. The set of acoustic features we extracted consisted of metrics that are commonly used to describe human vocalizations, in particular speech and emotional prosody (Sauter et al., 2010). In the amplitude domain, we included duration, and the mean, minimum, maximum, and standard deviation values of intensity and loudness as covariates. We also investigated the influence of the mean, minimum, maximum, and standard deviation values of F0, perceived as pitch. These contrasts revealed that the post-operative biased ratings on the Fear scale when the patients listened to happy stimuli were correlated with duration. The post-operative biased ratings on the Sadness scale when the patients listened to the happy stimuli were correlated with maximum F0 (Fig. 2A). The disturbed ratings on the Happiness scale when the post-operative patients listened to sad stimuli were found to correlate with mean F0 (Fig. 2B). Analyses of the slopes of the effects revealed that the higher the F0 and the longer the duration, the more biased the post-operative group was in its emotional judgements. For all these contrasts, the post-operative group was significantly different from the two other groups, whereas no significant difference was observed between the preoperative and the HC groups.
That being said, we also observed effects in which the post-operative group was significantly different from the preoperative group, whereas no significant difference was observed between the preoperative and the HC groups, or between the postoperative and the HC groups. These effects are more “marginal,” but also add elements regarding the sensory contribution to a vocal emotion deficit following STN DBS. With this pattern of results, we observed that the post-operative biased ratings on the Fear scale when the patients listened to happy stimuli were correlated with maximum loudness, and the disturbed ratings on the Happiness scale when the postoperative patients listened to sad stimuli were correlated with minimum F0. Analyses of the slopes of these effects revealed that the greater the loudness, the fewer misattributions the post-operative participants made. No significant effects were found between the other acoustic parameters and these emotionally biased ratings, nor were the other emotional judgements found to be specifically impaired in the postoperative group (e.g., “fear” ratings on the Sadness scale).

The present results appear to support our initial hypothesis that there is a significant influence of acoustic feature processing on changes in emotional prosody recognition following STN DBS in Parkinson’s disease. According to the models of emotional prosody processing, the present results appear to show that STN DBS has an impact on the representation of meaningful suprasegmental acoustic sequences, which corresponds to the second stage of emotional prosody processing. At the behavioural level, these results seem to indicate that STN DBS disturbs the extraction of acoustic features and the related percepts that are needed to correctly discriminate prosodic cues. Interestingly, in the present study, F0 (perceived as pitch) was found to be correlated with biased judgements, as the post-operative group gave higher ratings on the Sadness scale when they listened to happy stimuli and, conversely, higher ratings on the Happiness scale when they listened to sad stimuli. As has previously been shown (see Fig. 1), this acoustic feature is especially important for differentiating between sadness and happiness in the human voice (Sauter et al., 2010). However, analyses of the slopes suggested that the post-operative group overused this acoustic feature to judge vocal emotions, leading to emotional biases, whereas the other two groups used F0 more moderately. Amplitude has also been reported to be crucial for correctly judging vocal emotions, and more especially for recognizing sadness, disgust, and happiness (Sauter et al., 2010). Accordingly, we observed that loudness was not sufficiently used by the post-operative group (in comparison to the preoperative group), leading these participants to provide significantly higher fear ratings than the other group when they listened to happy stimuli. This over- or underuse of acoustic features, leading to emotional misattributions, seems to plead in favour of a previous hypothesis formulated by Péron et al. 
(2013), whereby STN DBS either introduces noise into the system, or else prevents it from correctly inhibiting the non-relevant information and/or correctly activating the relevant information, causing emotional judgements to be disturbed. Another hypothesis, not mutually exclusive with Péron and colleagues’ model, can be put forward in the context of embodiment theory. This theory postulates that perceiving and thinking about emotion involves the perceptual, somatovisceral, and motoric re-experiencing (collectively referred to as embodiment) of the relevant emotion (Niedenthal, 2007). As a consequence, a motor disturbance, such as the speech and laryngeal control disturbances that have been reported following high-frequency STN DBS (see for example, Hammer, Barlow, Lyons, & Pahwa, 2010; see also, Hammer, Barlow, Lyons, & Pahwa, 2011), could contribute to a deficit in emotional prosody production and, in turn, to disturbed emotional prosody recognition. This hypothesis has already been proposed in the context of the recognition of facial expression following STN DBS in Parkinson’s disease patients (Mondillon et al., 2012).

Finally, we performed a third level of analysis in order to assess whether the main effect of group on responses persisted after controlling for all the potential main effects of the acoustic features, as well as the effects of the Group × Acoustic feature interactions. We found that the influence of the acoustic parameters (intensity domain) on biased ratings on the Fear scale when the post-operative group listened to happy stimuli was not sufficient to explain the differences in variance observed across the groups. These results would thus mean that, even if there is a significant influence of acoustic feature processing on changes in emotional prosody recognition following STN DBS in Parkinson’s disease, as explained earlier, this influence is not sufficient to explain all the emotionally biased results. Two hypotheses can be put forward to explain these results. First, the part of variance not explained by the acoustic features we studied could be explained by other acoustic parameters. Second, these results suggest that the hypothesized misuse of acoustic parameters is not sufficient to explain the emotional biases observed at the group level in post-operative patients and that the latter effects should be explained by other variables. We propose that STN DBS also influences other (presumably higher) levels of emotional prosody processing and that this surgery has an impact not only on the extraction and integration of the acoustic features of prosodic cues, but also on the third stage of emotional prosody processing, which consists of the assessment and cognitive elaboration of vocally expressed emotions. Even if the present study did not address this question directly, we would be inclined to favour the second hypothesis on the basis of the 18FDG-PET study comparing resting-state glucose metabolism before and after STN DBS in Parkinson’s disease, on which the present study’s operational hypotheses were based (Le Jeune et al., 2010). 
This study showed that STN DBS modifies metabolic activity in a large and distributed network known for its involvement in the associative and limbic circuits. More specifically, clusters were found in the STG and STS regions, which are known to be involved in the second stage of emotional prosody processing, as well as in the IFG and OFC regions, known to be involved in higher level emotional prosody processing (Ethofer, Anders, Erb, Herbert, et al., 2006; Frühholz et al., 2012; Grandjean et al., 2008; Sander et al., 2005; Wildgruber et al., 2004; see Witteman et al., 2012 for a review). As such, the STN has been hypothesized to belong to a distributed neural network that subtends human affective processes at a different level from these specific emotional processes. Rather than playing a specific function in a given emotional process, the STN and other BG would act as coordinators of neural patterns, either synchronizing or desynchronizing the activity of the different neuronal populations responsible for specific emotion components. By so doing, they initiate a temporally structured, over-learned pattern of neural co-activation and inhibit competing patterns, thus allowing the central nervous system to implement a momentarily stable pattern (Péron et al., 2013). In this context, and based on the present results, we can postulate that the STN is involved in both the (de)-synchronization needed for the extraction of the acoustic features of prosodic cues and (future research will have to directly test this second part of the assumption) in the (de)-synchronization processes needed for higher level evaluative emotional judgements supposedly mediated by the IFG (Frühholz & Grandjean, 2013b). STN DBS is thought to desynchronize the coordinated activity of these neuronal populations (i.e., first and/or subsequent stages of emotional prosody processing).

In summary, deficits in the recognition of emotions (in this case expressed in vocalizations) are well documented in Parkinson’s disease following STN DBS, although the underlying mechanisms are still poorly understood. The results of the present study show that several acoustic features (notably F0, duration, and loudness) have a significant influence on disturbed emotional prosody recognition in Parkinson’s patients following STN DBS. Nevertheless, this influence does not appear to be sufficient to explain these disturbances. Our results suggest that at least the second stage of emotional prosody processing (extraction of acoustic features and construction of acoustic objects based on prosodic cues) is affected by STN DBS. These results appear to be in line with the hypothesis that the STN acts as a marker for transiently connected neural networks subserving specific functions.

Future research should investigate the brain modifications correlated with emotional prosody impairment following STN DBS, as well as the extent of the involvement of the different emotional prosody processing stages in these metabolic modifications. At a more clinical level, deficits in the extraction of acoustic features constitute appropriate targets for both behavioural and pharmaceutical follow-up after STN DBS.

Acknowledgments

We would like to thank the patients and healthy controls for giving up their time to take part in this study, as well as Elizabeth Wiles-Portier and Barbara Every for preparing the manuscript, and the Ear, Nose and Throat Department of Rennes University Hospital for conducting the hearing tests.

Abbreviations

- 18FDG-PET: 18Fludeoxyglucose-positron emission tomography

- BG: basal ganglia

- DBS: deep brain stimulation

- F0: fundamental frequency

- FFA: fusiform face area

- fMRI: functional magnetic resonance imaging

- HC: healthy controls

- IFG: inferior frontal gyrus

- MADRS: Montgomery-Asberg Depression Rating Scale

- OCD: obsessive-compulsive disorder

- OFC: orbitofrontal cortex

- STG: superior temporal gyrus

- STN: subthalamic nucleus

- STS: superior temporal sulcus

- UPDRS: Unified Parkinson’s Disease Rating Scale

Appendix. Computer interface for the original paradigm of emotional prosody recognition

Footnotes

Disclosure

The authors report no conflicts of interest. The data acquisition was carried out at the Neurology Unit of Pontchaillou Hospital (Rennes University Hospital, France; Prof. Marc Vérin). The first author (Dr Julie Péron) was funded by the Swiss National Foundation (grant no. 105314_140622; Prof. Didier Grandjean and Dr Julie Péron), and by the NCCR Affective Sciences funded by the Swiss National Foundation (project no. 202 – UN7126; Prof. Didier Grandjean). The funders had no role in data collection, discussion of content, preparation of the manuscript, or decision to publish.

References

- Bach DR, Grandjean D, Sander D, Herdener M, Strik WK, Seifritz E. The effect of appraisal level on processing of emotional prosody in meaningless speech. NeuroImage. 2008;42(2):919–927. doi: 10.1016/j.neuroimage.2008.05.034.

- Banse R, Scherer KR. Acoustic profiles in vocal emotion expression. Journal of Personality and Social Psychology. 1996;70(3):614–636. doi: 10.1037//0022-3514.70.3.614.

- Belin P, Zatorre RJ. ‘What’, ‘where’ and ‘how’ in auditory cortex. Nature Neuroscience. 2000;3(10):965–966. doi: 10.1038/79890.

- Benabid AL, Koudsie A, Benazzouz A, Fraix V, Ashraf A, Le Bas JF, et al. Subthalamic stimulation for Parkinson’s disease. Archives of Medical Research. 2000;31(3):282–289. doi: 10.1016/s0188-4409(00)00077-1.

- Biseul I, Sauleau P, Haegelen C, Trebon P, Drapier D, Raoul S, et al. Fear recognition is impaired by subthalamic nucleus stimulation in Parkinson’s disease. Neuropsychologia. 2005;43(7):1054–1059. doi: 10.1016/j.neuropsychologia.2004.10.006.

- Boersma P, Weenink D. Praat: Doing phonetics by computer (version 5.3.51). 2013. Retrieved 2 June 2013 from http://www.praat.org/

- Bruck C, Kreifelts B, Wildgruber D. Emotional voices in context: a neurobiological model of multimodal affective information processing. Physics of Life Reviews. 2011;8(4):383–403. doi: 10.1016/j.plrev.2011.10.002.

- Bruck C, Wildgruber D, Kreifelts B, Kruger R, Wachter T. Effects of subthalamic nucleus stimulation on emotional prosody comprehension in Parkinson’s disease. PLoS One. 2011;6(4):e19140. doi: 10.1371/journal.pone.0019140.

- Drapier D, Péron J, Leray E, Sauleau P, Biseul I, Drapier S, et al. Emotion recognition impairment and apathy after subthalamic nucleus stimulation in Parkinson’s disease have separate neural substrates. Neuropsychologia. 2008;46(11):2796–2801. doi: 10.1016/j.neuropsychologia.2008.05.006.

- Dujardin K, Blairy S, Defebvre L, Krystkowiak P, Hess U, Blond S, et al. Subthalamic nucleus stimulation induces deficits in decoding emotional facial expressions in Parkinson’s disease. Journal of Neurology, Neurosurgery, and Psychiatry. 2004;75(2):202–208.

- Ekman P. Emotions revealed. New York: Times Books; 2003.

- Ethofer T, Anders S, Erb M, Droll C, Royen L, Saur R, et al. Impact of voice on emotional judgment of faces: an event-related fMRI study. Human Brain Mapping. 2006;27(9):707–714. doi: 10.1002/hbm.20212.

- Ethofer T, Anders S, Erb M, Herbert C, Wiethoff S, Kissler J, et al. Cerebral pathways in processing of affective prosody: a dynamic causal modeling study. NeuroImage. 2006;30(2):580–587. doi: 10.1016/j.neuroimage.2005.09.059.

- Ethofer T, Bretscher J, Gschwind M, Kreifelts B, Wildgruber D, Vuilleumier P. Emotional voice areas: anatomic location, functional properties, and structural connections revealed by combined fMRI/DTI. Cerebral Cortex. 2012;22(1):191–200. doi: 10.1093/cercor/bhr113.

- Frühholz S, Ceravolo L, Grandjean D. Specific brain networks during explicit and implicit decoding of emotional prosody. Cerebral Cortex. 2012;22(5):1107–1117. doi: 10.1093/cercor/bhr184.

- Frühholz S, Grandjean D. Amygdala subregions differentially respond and rapidly adapt to threatening voices. Cortex. 2013a;49(5):1394–1403. doi: 10.1016/j.cortex.2012.08.003.

- Frühholz S, Grandjean D. Processing of emotional vocalizations in bilateral inferior frontal cortex. Neuroscience & Biobehavioral Reviews. 2013b;37(10 Pt 2):2847–2855. doi: 10.1016/j.neubiorev.2013.10.007.

- Grandjean D, Banziger T, Scherer KR. Intonation as an interface between language and affect. Progress in Brain Research. 2006;156:235–247. doi: 10.1016/S0079-6123(06)56012-1.

- Grandjean D, Sander D, Lucas N, Scherer KR, Vuilleumier P. Effects of emotional prosody on auditory extinction for voices in patients with spatial neglect. Neuropsychologia. 2008;46(2):487–496. doi: 10.1016/j.neuropsychologia.2007.08.025.

- Grandjean D, Sander D, Pourtois G, Schwartz S, Seghier ML, Scherer KR, et al. The voices of wrath: brain responses to angry prosody in meaningless speech. Nature Neuroscience. 2005;8(2):145–146. doi: 10.1038/nn1392.

- Gray HM, Tickle-Degnen L. A meta-analysis of performance on emotion recognition tasks in Parkinson’s disease. Neuropsychology. 2010;24(2):176–191. doi: 10.1037/a0018104.

- Hall DB. Zero-inflated Poisson and binomial regression with random effects: a case study. Biometrics. 2000;56(4):1030–1039. doi: 10.1111/j.0006-341x.2000.01030.x.

- Hammer MJ, Barlow SM, Lyons KE, Pahwa R. Subthalamic nucleus deep brain stimulation changes speech respiratory and laryngeal control in Parkinson’s disease. Journal of Neurology. 2010;257(10):1692–1702. doi: 10.1007/s00415-010-5605-5.

- Hammer MJ, Barlow SM, Lyons KE, Pahwa R. Subthalamic nucleus deep brain stimulation changes velopharyngeal control in Parkinson’s disease. Journal of Communication Disorders. 2011;44(1):37–48. doi: 10.1016/j.jcomdis.2010.07.001.

- Kotz SA, Meyer M, Alter K, Besson M, von Cramon DY, Friederici AD. On the lateralization of emotional prosody: an event-related functional MR investigation. Brain and Language. 2003;86(3):366–376. doi: 10.1016/s0093-934x(02)00532-1.

- Kotz SA, Schwartze M. Cortical speech processing unplugged: a timely subcortico-cortical framework. Trends in Cognitive Sciences. 2010;14(9):392–399. doi: 10.1016/j.tics.2010.06.005.

- Langston JW, Widner H, Goetz CG, Brooks D, Fahn S, Freeman T, et al. Core assessment program for intracerebral transplantations (CAPIT). Movement Disorders. 1992;7(1):2–13. doi: 10.1002/mds.870070103.

- Le Jeune F, Péron J, Biseul I, Fournier S, Sauleau P, Drapier S, et al. Subthalamic nucleus stimulation affects orbitofrontal cortex in facial emotion recognition: a PET study. Brain. 2008;131(Pt 6):1599–1608. doi: 10.1093/brain/awn084.

- Le Jeune F, Péron J, Grandjean D, Drapier S, Haegelen C, Garin E, et al. Subthalamic nucleus stimulation affects limbic and associative circuits: a PET study. European Journal of Nuclear Medicine and Molecular Imaging. 2010;37(8):1512–1520. doi: 10.1007/s00259-010-1436-y.

- Lozano AM, Lang AE, Galvez-Jimenez N, Miyasaki J, Duff J, Hutchinson WD, et al. Effect of GPi pallidotomy on motor function in Parkinson’s disease. Lancet. 1995;346(8987):1383–1387. doi: 10.1016/s0140-6736(95)92404-3.

- Mattis S. Dementia rating scale. Odessa, FL: Psychological Assessment Resources Inc.; 1988.

- McKeown GJ, Sneddon I. Modeling continuous self-report measures of perceived emotion using generalized additive mixed models. Psychological Methods. 2014;19(1):155–174. doi: 10.1037/a0034282.

- Milesi V, Cekic S, Péron J, Frühholz S, Cristinzio C, Seeck M, et al. Multimodal emotion perception after anterior temporal lobectomy (ATL). Frontiers in Human Neuroscience. 2014;8:275. doi: 10.3389/fnhum.2014.00275.

- Mondillon L, Mermillod M, Musca SC, Rieu I, Vidal T, Chambres P, et al. The combined effect of subthalamic nuclei deep brain stimulation and L-dopa increases emotion recognition in Parkinson’s disease. Neuropsychologia. 2012;50(12):2869–2879. doi: 10.1016/j.neuropsychologia.2012.08.016.

- Morris JS, Scott SK, Dolan RJ. Saying it with feeling: neural responses to emotional vocalizations. Neuropsychologia. 1999;37(10):1155–1163. doi: 10.1016/s0028-3932(99)00015-9.

- Niedenthal PM. Embodying emotion. Science. 2007;316(5827):1002–1005. doi: 10.1126/science.1136930.

- Paulmann S, Pell MD, Kotz SA. Functional contributions of the basal ganglia to emotional prosody: evidence from ERPs. Brain Research. 2008;1217:171–178. doi: 10.1016/j.brainres.2008.04.032.

- Paulmann S, Pell MD, Kotz SA. Comparative processing of emotional prosody and semantics following basal ganglia infarcts: ERP evidence of selective impairments for disgust and fear. Brain Research. 2009;1295:159–169. doi: 10.1016/j.brainres.2009.07.102.

- Pell MD, Leonard CL. Processing emotional tone from speech in Parkinson’s disease: a role for the basal ganglia. Cognitive, Affective, & Behavioral Neuroscience. 2003;3(4):275–288. doi: 10.3758/cabn.3.4.275.

- Péron J. Does STN-DBS really not change emotion recognition in Parkinson’s disease? Parkinsonism & Related Disorders. 2014;20(5):562–563. doi: 10.1016/j.parkreldis.2014.01.018.

- Péron J, Biseul I, Leray E, Vicente S, Le Jeune F, Drapier S, et al. Subthalamic nucleus stimulation affects fear and sadness recognition in Parkinson’s disease. Neuropsychology. 2010a;24(1):1–8. doi: 10.1037/a0017433.

- Péron J, Dondaine T, Le Jeune F, Grandjean D, Verin M. Emotional processing in Parkinson’s disease: a systematic review. Movement Disorders. 2012;27(2):186–199. doi: 10.1002/mds.24025.

- Péron J, El Tamer S, Grandjean D, Leray E, Travers D, Drapier D, et al. Major depressive disorder skews the recognition of emotional prosody. Progress in Neuro-Psychopharmacology & Biological Psychiatry. 2011;35:987–996. doi: 10.1016/j.pnpbp.2011.01.019.

- Péron J, Frühholz S, Vérin M, Grandjean D. Subthalamic nucleus: a key structure for emotional component synchronization in humans. Neuroscience & Biobehavioral Reviews. 2013;37(3):358–373. doi: 10.1016/j.neubiorev.2013.01.001.

- Péron J, Grandjean D, Drapier S, Vérin M. Effect of dopamine therapy on nonverbal affect burst recognition in Parkinson’s disease. PLoS One. 2014;9(3):e90092. doi: 10.1371/journal.pone.0090092.

- Péron J, Grandjean D, Le Jeune F, Sauleau P, Haegelen C, Drapier D, et al. Recognition of emotional prosody is altered after subthalamic nucleus deep brain stimulation in Parkinson’s disease. Neuropsychologia. 2010b;48(4):1053–1062. doi: 10.1016/j.neuropsychologia.2009.12.003.

- Sander D, Grandjean D, Pourtois G, Schwartz S, Seghier ML, Scherer KR, et al. Emotion and attention interactions in social cognition: brain regions involved in processing anger prosody. NeuroImage. 2005;28(4):848–858. doi: 10.1016/j.neuroimage.2005.06.023.

- Sauter DA, Eisner F, Calder AJ, Scott SK. Perceptual cues in nonverbal vocal expressions of emotion. Quarterly Journal of Experimental Psychology. 2010;63(11):2251–2272. doi: 10.1080/17470211003721642.

- Scherer KR, Ekman P. Methodological issues in studying nonverbal behavior. In: Harrigan J, Rosenthal R, Scherer K, editors. The New Handbook of Methods in Nonverbal Behavior Research. Oxford: Oxford University Press; 2008. pp. 471–512.

- Scherer KR, Ellgring H. Multimodal expression of emotion: affect programs or componential appraisal patterns? Emotion. 2007;7(1):158–171. doi: 10.1037/1528-3542.7.1.158.

- Schirmer A, Kotz SA. Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends in Cognitive Sciences. 2006;10(1):24–30. doi: 10.1016/j.tics.2005.11.009.

- Schroeder U, Kuehler A, Hennenlotter A, Haslinger B, Tronnier VM, Krause M, et al. Facial expression recognition and subthalamic nucleus stimulation. Journal of Neurology, Neurosurgery, and Psychiatry. 2004;75(4):648–650. doi: 10.1136/jnnp.2003.019794.

- Sidtis JJ, Van Lancker Sidtis D. A neurobehavioral approach to dysprosody. Seminars in Speech and Language. 2003;24(2):93–105. doi: 10.1055/s-2003-38901.

- Vicente S, Biseul I, Péron J, Philippot P, Drapier S, Drapier D, et al. Subthalamic nucleus stimulation affects subjective emotional experience in Parkinson’s disease patients. Neuropsychologia. 2009;47(8–9):1928–1937. doi: 10.1016/j.neuropsychologia.2009.03.003.

- Wildgruber D, Ethofer T, Grandjean D, Kreifelts B. A cerebral network model of speech prosody comprehension. International Journal of Speech-Language Pathology. 2009;11(4):277–281.

- Wildgruber D, Hertrich I, Riecker A, Erb M, Anders S, Grodd W, et al. Distinct frontal regions subserve evaluation of linguistic and emotional aspects of speech intonation. Cerebral Cortex. 2004;14(12):1384–1389. doi: 10.1093/cercor/bhh099.

- Witteman J, Van Heuven VJ, Schiller NO. Hearing feelings: a quantitative meta-analysis on the neuroimaging literature of emotional prosody perception. Neuropsychologia. 2012;50(12):2752–2763. doi: 10.1016/j.neuropsychologia.2012.07.026.