Abstract

The human tendency to use positive words (“adorable”) more often than negative words (“dreadful”) is called the linguistic positivity bias. We find evidence for this bias in two studies of word use, one based on written corpora and another based on naturalistic speech samples. In addition, we demonstrate that the positivity bias applies to nouns and verbs as well as adjectives. We also show that it is found to the same degree in written and spoken English. Moreover, personality traits and gender moderate the effect, such that women and persons high in extraversion and agreeableness display a larger positivity bias in naturalistic speech. Results are discussed in terms of how the linguistic positivity bias may serve as a mechanism for social facilitation. People in general, and some people more than others, tend to talk about the brighter side of life.

Keywords: word frequency, word valence, linguistic positivity bias, big five, gender, Electronically Activated Recorder

A Positivity Bias in Written and Spoken English and Its Moderation by Personality and Gender

The tendency to use positive words (“pretty”) more often than equally familiar negative words (“ugly”) was originally called “the Pollyanna hypothesis” by Boucher and Osgood (1969). Contemporary researchers refer to this curious finding as a positivity bias in language use (e.g., Rozin, Berman, & Royzman, 2010). Some researchers (e.g., Rozin et al., 2010) think this linguistic positivity bias (LPB) reflects the fact that life provides most people with more positive than negative events to talk about (Gable, Reis, & Elliot, 2000). Boucher and Osgood (1969) speculated that positive valence leads to increased word use, whereas Zajonc (1968) speculated that increased word use leads to positive valence. Whatever the cause, demonstrations of the LPB have occasionally appeared in the literature.

Rozin et al. (2010) examined frequency data for seven positive adjectives and their opposites and reported that the positive word was always used more frequently than its opposite. Although Rozin et al. demonstrate a number of other interesting LPBs and show that such biases are consistent across 20 languages, they acknowledge that examining seven highly positive adjectives and their opposites is not definitive evidence for an LPB. In the current research, we focus on the LPB in terms of how frequently words with different valence ratings are used, both in written (Study 1) and spontaneous spoken English (Study 2). We also examine (Study 1) whether an LPB is found in the use of nouns and verbs, in addition to adjectives. Finally, we examine (Study 2) personality and gender differences in the magnitude of the LPB.

Positivity Bias in Frequency of Word Use

A handful of older studies have directly demonstrated an LPB in adjective use. For example, Gough (1956) had judges rate personality adjectives for likability and found that frequency norms differed based on likability. Similarly, Zajonc (1968) used frequency information from Thorndike and Lorge (1944) to demonstrate a correlation between word frequency and word desirability. Others have used subsamples of words from the Thorndike and Lorge (1944) list and obtained significant correlations between word frequency and “good–bad” ratings of the words (e.g., Johnson, Thomson, & Frincke, 1960). Zajonc (1968) also used Anderson’s (1964) 555 adjectives that had been normed for likability and found a strong correlation with Thorndike-Lorge frequency of use. Given that the Thorndike-Lorge word list was initially compiled in 1921 and the frequency data were gathered prior to that time, the frequency information in these studies is nearly a century old at this point. Do modern written word samples also show a general LPB? Moreover, many of these older studies focused only on adjectives used to describe persons. Does the LPB extend to other parts of speech? Finally, all studies on the LPB have been limited to written language, which is more controlled or effortful than spoken language (Aaron & Joshi, 2006). An important extension would be to test for an LPB in spontaneous speech.

Recent studies provide incidental evidence for an LPB. In a study of noun processing speed, Unkelbach et al. (2010) report a significant correlation between word frequency of use (in written English) and word positivity. In a study of Italian adjectives, Suitner and Maass (2008) report a correlation between frequency of use (in written Italian) and positivity ratings of those adjectives. These authors conclude that there exists “a general positivity bias when describing human beings” (Suitner & Maass, 2008, p. 1078). Almost half a century ago, Boucher and Osgood (1969) arrived at very similar conclusions based on their analyses of frequency and valence norms for 100 words in 13 languages: “people tend to look on (and talk about) the bright side of life” (p. 1).

In Study 1, we examine frequency information in a very large list of diverse words, including adjectives, nouns, and verbs. We used a word list that is well characterized on pleasantness and arousal. Frequency information was extracted from norms based on written corpora. There is some variability across the different indicators of word frequency (Burgess & Livesay, 1998). One of the most commonly used measures of word frequency is the set of norms published by Kucera and Francis (KF; 1967). However, these norms are over four decades old, and they are based on written works of professional authors.

Lund and Burgess (1996) have provided a more recent set of frequency norms, based on amateur writers, called the Hyperspace Analogue to Language (HAL). These norms are based on approximately 131 million words gathered across 3,000 Usenet newsgroups in February 1995. The HAL norms are stronger predictors of word recognition than the KF norms (Balota et al., 2004), and so in this study we employ the HAL norms as an index of word frequency. Because word frequency norms are not normally distributed, we will also use the log transform of both the KF and HAL frequency indexes (as recommended by Balota et al., 2007).
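The rationale for the log transformation can be illustrated with a short sketch. It assumes a natural logarithm with a +1 offset so that zero counts remain defined; the text does not state the base or offset behind the published log-KF and log-HAL values, and the counts below are invented for illustration.

```python
import math


def skewness(values):
    """Population (moment-based) skewness: third central moment / SD**3."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5


def log_frequency(counts):
    """Log-transform raw frequency counts.

    Assumption: natural log of (count + 1); the published norms may use a
    different base or offset.
    """
    return [math.log(c + 1) for c in counts]


# Invented, right-skewed counts (not actual KF or HAL values).
raw_counts = [1, 2, 3, 5, 8, 20, 50, 400, 12000]
log_counts = log_frequency(raw_counts)
print(f"skew raw = {skewness(raw_counts):.2f}, skew log = {skewness(log_counts):.2f}")
```

A single very frequent word dominates the raw distribution; after the transform its leverage on any correlation is much smaller, which is why log frequencies are generally preferred for correlational analyses of this kind.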

Regardless of whether the linguistic positivity bias is due to valence (as proposed by Boucher & Osgood, 1969) or mere exposure (as proposed by Zajonc, 1968), we predict that we will find such a bias in Study 1. Specifically, we predict a positive and significant correlation between word pleasantness and frequency of use. We have no reason to expect these correlations to differ in subsamples of adjectives, nouns, or verbs, though this will be the first study to examine the LPB in different parts of speech. Finally, we will test whether the arousal value of words is related to frequency of use.

Study 1

Method

Word selection

Words were drawn from the Affective Norms for English Words list (ANEW; Bradley & Lang, 1999). The 1,034 ANEW words have been normed by a large group of college students on pleasantness and arousal (see Bradley & Lang, 1999). The pleasantness dimension is a bipolar scale that runs from 1 to 9, with a rating of 1 indicating extremely unpleasant, 5 indicating neutral, and 9 indicating extremely pleasant. The arousal dimension is a unipolar scale that runs from 1 to 9, with a rating of 1 indicating low arousal and 9 indicating high arousal. Information for obtaining the ANEW words is available from the Center for the Study of Emotion and Attention at http://www.phhp.ufl.edu/csea/index.html.

Frequency information on the ANEW words

The ANEW data set contains the Kucera and Francis (1967) frequency norms on each word. We also obtained the more modern HAL frequency information on each word from the English Lexicon Project (ELP). The ELP is a searchable Web-based database containing lexical characteristics and behavioral data on over 40,000 words and is available online at http://elexicon.wustl.edu/default.asp. We also used the log transform of both the KF and HAL frequency indexes, since frequency information is usually not normally distributed. The ELP database also contains part of speech codes on each word, coding whether it is a noun, a verb, or an adjective (some words can be used both as nouns and verbs [shriek], verbs and adjectives [awed], or adjectives and nouns [adult], and hence can have multiple codes).

We submitted the 1,034 ANEW words to the ELP search engine, which found exact matches for 1,021 words. The valence and arousal ratings from the ANEW database were then merged with the HAL frequency data and part-of-speech codes from the ELP database for each of these words. This list of 1,021 words forms the final data set used in our analyses. Thus, our valence and arousal ratings were taken from the ANEW database (Bradley & Lang, 1999), and our frequency index was taken from the HAL corpus (Lund & Burgess, 1996) via the ELP (Balota et al., 2007). All analyses were conducted across words.
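The matching step amounts to an inner join on the word string. The sketch below is hypothetical: the dictionaries stand in for the ANEW and ELP files, their field layouts are assumptions, and the ratings are placeholders rather than published norms.

```python
def merge_exact_matches(anew, elp):
    """Inner-join two word-keyed tables, keeping only exact string matches.

    anew: word -> (valence, arousal)          (hypothetical layout)
    elp:  word -> (hal_frequency, pos_codes)  (hypothetical layout)
    Words missing from either table are dropped, as in an inner join.
    """
    merged = {}
    for word, (valence, arousal) in anew.items():
        if word not in elp:
            continue  # no exact match in the lexicon data: exclude the word
        hal_frequency, pos_codes = elp[word]
        merged[word] = {
            "valence": valence,
            "arousal": arousal,
            "hal_frequency": hal_frequency,
            "pos": set(pos_codes),  # a word may carry several part-of-speech codes
        }
    return merged


# Placeholder values for illustration only.
anew = {"adult": (6.0, 4.0), "shriek": (3.0, 6.0), "xyzzy": (5.0, 5.0)}
elp = {"adult": (1000, ["noun", "adjective"]), "shriek": (50, ["noun", "verb"])}
words = merge_exact_matches(anew, elp)
print(sorted(words))  # ['adult', 'shriek']
```

Keeping part-of-speech codes as a set reflects the point made above that a word such as shriek can be coded as both a noun and a verb.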

Results and Discussion

Descriptive information on the words is presented in Table 1. Regarding pleasantness, the words averaged very close to the neutral point of 5 on the 9-point rating scale, though pleasantness varied considerably across words. The average arousal rating fell in the moderately arousing range, again with variability across words. ANOVAs were conducted to test for differences among adjectives, nouns, and verbs in the frequency indexes, pleasantness, and arousal. No significant differences emerged, though there was a very slight tendency for adjectives to be used less often than nouns and verbs.

Table 1.

Descriptive Statistics on Words Broken Down by Parts of Speech.

| | Freq-KF | Freq-HAL | log-KF | log-HAL | Valence | Arousal |
|---|---|---|---|---|---|---|
| Total Word Sample (N = 1021) | | | | | | |
| Mean | 52.30 | 21138.02 | 2.83 | 8.57 | 5.14 | 1.65 |
| SD | 109.63 | 52970.38 | 1.53 | 1.77 | 1.99 | .38 |
| Adjectives (N = 261) | | | | | | |
| Mean | 41.67 | 18905.34 | 2.72 | 8.40 | 4.98 | 1.67 |
| SD | 79.37 | 48978.4 | 1.44 | 1.82 | 2.07 | .36 |
| Nouns (N = 805) | | | | | | |
| Mean | 60.62 | 24608.55 | 2.99 | 8.82 | 5.26 | 1.65 |
| SD | 120.81 | 58275.79 | 1.56 | 1.72 | 1.94 | .39 |
| Verbs (N = 360) | | | | | | |
| Mean | 66.58 | 29559.81 | 3.14 | 8.94 | 5.09 | 1.64 |
| SD | 140.48 | 71035.31 | 1.50 | 1.80 | 2.00 | .38 |

Note: Some words fall into more than one part-of-speech category; e.g., fun is both a noun and an adjective, charm is both a noun and a verb, and loved is both a verb and an adjective. Each word was counted in every part-of-speech category in which it can be used. Consequently, the sum of the adjectives, nouns, and verbs exceeds the total word count.

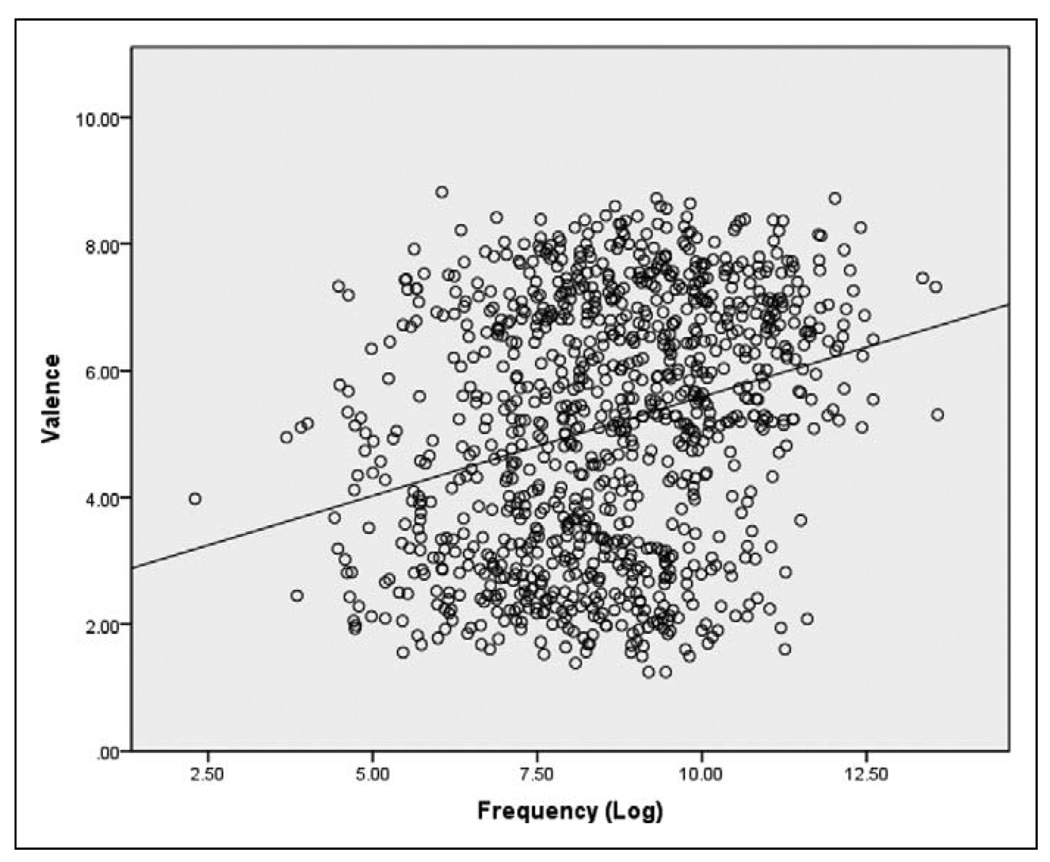

Pearson correlations between all variables are presented in Table 2. To summarize, frequency of use is significantly related to the pleasantness value of words for both frequency norms. The correlations are stronger for the log-transformed frequency norms, which correct for skewness in the frequency data. In addition, this effect appeared to be linear and not driven by, for example, a total disuse of negative words (see Figure 1). The arousal value of words showed no relationship to the frequency norms. We used z tests to compare the respective correlations across the adjective, noun, and verb categories; none of the differences was significant, implying that frequency of use correlates with word positivity regardless of whether those words are adjectives, nouns, or verbs (see Table 2).
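For readers who want to reproduce this kind of comparison, the sketch below computes a Pearson correlation and compares two correlations with Fisher's r-to-z test for independent samples. The text does not say which z test was used, so treat this choice as an assumption; because some words belong to more than one part of speech, the category samples are also not fully independent. The usage example plugs in two log-HAL pleasantness correlations from Table 2.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def compare_independent_rs(r1, n1, r2, n2):
    """Fisher r-to-z test of H0: rho1 == rho2 for two independent samples.

    Returns (z, two-tailed p).
    """
    z1, z2 = math.atanh(r1), math.atanh(r2)
    se = math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    z = (z1 - z2) / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


# Log-HAL x pleasantness: adjectives (r = .22, N = 261) vs verbs (r = .31, N = 360).
z, p = compare_independent_rs(0.22, 261, 0.31, 360)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these inputs z is about −1.2 and p is well above .05, in line with the nonsignificant category differences reported above.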

Table 2.

Pearson Correlations Between Word Frequency Indexes and Ratings of Word Pleasantness and Arousal

| | Freq-KF | Freq-HAL | log-KF | log-HAL |
|---|---|---|---|---|
| Total Word Sample (N = 1021) | | | | |
| Pleasantness | .18** | .18** | .28** | .28** |
| Arousal | −.02 | .00 | .00 | .05 |
| Adjectives (N = 261) | | | | |
| Pleasantness | .21** | .20** | .25** | .22** |
| Arousal | −.01 | −.01 | .03 | .04 |
| Nouns (N = 805) | | | | |
| Pleasantness | .19** | .19** | .29** | .29** |
| Arousal | −.02 | .01 | −.02 | .05 |
| Verbs (N = 360) | | | | |
| Pleasantness | .16** | .19** | .29** | .31** |
| Arousal | −.04 | −.02 | −.08 | −.06 |

**p < .01.

Figure 1.

Study 1: Scatterplot Representation of the Relationship Between Valence and Frequency of Use (Log Transformation of the HAL Index)

The frequency data used in this study were generated from written samples, and thus, the LPB we observed may not be present in naturalistic spoken language use. A second purpose of the current research is to determine if an LPB is present in everyday spoken language. Consequently, in Study 2 we will examine samples of spoken English. In addition, we will examine whether, as is the case with past research on language use (e.g., Mehl, Gosling, & Pennebaker, 2006; Yarkoni, 2010), theoretically relevant individual difference variables moderate the relationship between word positivity and frequency of use.

Individual differences in the LPB

Personality is widely predictive of the ways in which people use language in a number of contexts, such as everyday spoken language (Mehl et al., 2006), self-narratives (Hirsh & Peterson, 2009; Küfner, Back, Nestler, & Egloff, in press), interviews (Fast & Funder, 2008, 2010), and electronic media (Nowson, 2006; Nowson, Oberlander, & Gill, 2005; Oberlander & Gill, 2006; Yarkoni, 2010). While personality has been related to a number of linguistic behaviors, it has yet to be examined in relation to the LPB. Thus, a further specific aim of this research is to examine whether the LPB is moderated by personality factors.

The degree to which a personality trait predicts the magnitude of the LPB should depend on the social and affective nature of the trait and the ways that trait predicts other types of language use. In particular, we predict that extraversion and agreeableness should moderate the LPB such that those high in these traits show stronger relationships between valence and frequency of use. Extraversion and agreeableness are affective personality traits comprising a number of features relevant to social facilitation (Ozer & Benet-Martínez, 2006). Those higher (vs. lower) in extraversion talk more and spend more time with other people. To enable more positive interactions, the highly extraverted individual may use more positive words. In a similar vein, those higher (vs. lower) in agreeableness are more polite and cooperative and spend more time with others. To enable affirmative and upbeat dialogue, the highly agreeable individual may use more positive words. Said differently, consistently using positive words in everyday speech would likely act to facilitate the more constructive and enjoyable social interactions created and experienced by those higher in extraversion and agreeableness.

Past research on the associations between personality and word use also suggests that extraversion and agreeableness should moderate the LPB. Those higher in extraversion and agreeableness use more words that refer to other people, use more feeling words, and refer to social processes more (Gill, Nowson, & Oberlander, 2009; Gill & Oberlander, 2002; Hirsh & Peterson, 2009; Mehl et al., 2006; Yarkoni, 2010); all of these word categories are likely to be positively valenced. In terms of the use of individual words, those higher in extraversion are most likely to use words relevant to socializing, such as “bar,” “drinks,” and “dancing” (Yarkoni, 2010). In contrast, the traits of neuroticism, conscientiousness, and openness are less likely to predict the magnitude of the LPB. These traits are less relevant to socializing and do not predict word categories that would be relevant for social facilitation. While those higher in neuroticism are more likely, and those higher in conscientiousness are less likely, to refer to negative emotions, these traits do not consistently predict other categories of nonemotional words (Yarkoni, 2010). Finally, while openness shows broad associations with the use of a number of word categories (i.e., first person singular, articles, prepositions, etc.), these categories contain words that are not typically valenced.

Prior research also reveals consistent evidence of gender differences in the degree to which women and men use emotion words. For example, Mehl and Pennebaker (2003) found that women use more emotion words in everyday language. In addition, Newman, Groom, Handelman, and Pennebaker (2008) found that women use more feeling words (happy, joy, anxiety, and sadness) across a variety of text genres. Women also use more overtly positive words that refer to other people (Newman et al., 2008). Given these findings, we expect gender to also moderate the LPB.

In Study 2, we use three different samples of spoken language sampled in a naturalistic context (using the Electronically Activated Recorder [EAR]; Mehl, Pennebaker, Crow, Dabbs, & Price, 2001) to examine whether an LPB is present in everyday spoken language and if any theoretically relevant individual difference variables predict the magnitude of this bias. Given prior research, we predict that gender, extraversion, and agreeableness will moderate this bias, such that women and those higher in extraversion and agreeableness will show a larger relationship between frequency of use and valence.

Study 2

Method

Participants

Data from a total of 228 participants (age: M = 18.79, SD = 1.21; 50.9% female) were used for the examination of natural language usage. The data from these participants were gathered as part of three different studies (Sample 1, n = 52; Mehl & Pennebaker, 2003; Sample 2, n = 96; Mehl et al., 2006; see also Mehl, 2006; Sample 3, n = 80; Vazire & Mehl, 2008; see also Holtzman, Vazire, & Mehl, 2010; Mehl, Vazire, Holleran, & Clark, 2010).

Materials

Personality measures

The Big Five personality traits were assessed using the NEO Five Factor Inventory (NEO-FFI; Costa & McCrae, 1992; Sample 1 α = .65 to .86) and the Big Five Inventory (BFI-44; John & Srivastava, 1999; Sample 2 α = .77 to .90; Sample 3 α = .76 to .89). The personality data were centered prior to combining data from the three samples.
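Centering puts scores from different inventories on a common zero point before pooling. A minimal sketch, assuming simple mean-centering within each sample (the text says only that the data were centered before the samples were combined); the records are invented.

```python
from collections import defaultdict


def center_within_sample(records):
    """Mean-center scores within each sample.

    records: list of (sample_id, score) pairs.
    Returns the centered scores in the original order.
    """
    totals, counts = defaultdict(float), defaultdict(int)
    for sample_id, score in records:
        totals[sample_id] += score
        counts[sample_id] += 1
    means = {s: totals[s] / counts[s] for s in totals}
    return [score - means[sample_id] for sample_id, score in records]


# Invented extraversion scores from two samples measured on different scales.
records = [(1, 30.0), (1, 34.0), (1, 38.0), (2, 3.0), (2, 3.5), (2, 4.0)]
print(center_within_sample(records))  # [-4.0, 0.0, 4.0, -0.5, 0.0, 0.5]
```

Centering removes mean differences between samples but not differences in spread; standardizing within sample would be the stricter alternative if the inventories' scales differ.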

EAR monitoring

A representative sample of participants’ daily spoken word use was recorded using the EAR (Mehl et al., 2001). The EAR is a naturalistic observation sampling tool that uses a digital recorder, a microphone clipped to participants’ collars, and a controller microchip. It operates by periodically sampling brief snippets of ambient sounds from participants’ momentary social environments. The sampling pattern used in the three studies was a 30-second on, 12.5-minute off cycle, which produced approximately five recording intervals each hour. Participants were unable to determine when recordings were taking place. The EAR was carried in a small case attached either to the belt or shoulder and was switched off overnight (see Mehl et al., 2001, for further detail).
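The sampling rate follows from the duty cycle: each cycle lasts 0.5 + 12.5 = 13 minutes, so an hour contains 60 / 13 ≈ 4.6 cycles, which the text rounds to about five. The small helper below (a hypothetical illustration, not part of the EAR software) makes the arithmetic explicit and also gives the amount of audio captured per hour.

```python
def ear_sampling_rate(on_seconds=30.0, off_minutes=12.5):
    """Return (recordings per hour, minutes of audio per hour) for an on/off cycle."""
    cycle_minutes = on_seconds / 60 + off_minutes
    recordings_per_hour = 60 / cycle_minutes
    audio_minutes_per_hour = recordings_per_hour * on_seconds / 60
    return recordings_per_hour, audio_minutes_per_hour


per_hour, audio = ear_sampling_rate()
print(f"{per_hour:.2f} recordings/hour, {audio:.2f} min of audio/hour")
```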

Lexical characteristics

The words used in this analysis, as well as their valence and arousal ratings, were the 1,021 ANEW words (Bradley & Lang, 1999) retained in Study 1. For our index of usage frequency, we used the HAL norms (Lund & Burgess, 1996) because this index was the more robust predictor of word pleasantness in Study 1.

Procedure

For all three samples, participants were brought into the lab in small groups to complete the personality measures and were then given detailed instructions and debriefings regarding the use of the EAR. For Sample 1, participants wore the EAR for two 48-hour periods (either Monday morning through Wednesday morning or Wednesday afternoon through Friday afternoon) separated by 4 weeks (see Mehl & Pennebaker, 2003). For Sample 2, participants wore the EAR for 2 consecutive weekdays (using the same start and end times as those in Sample 1; see Mehl et al., 2006). For Sample 3, participants wore the EAR for 4 consecutive days (beginning on a Friday; see Vazire & Mehl, 2008). Upon returning to the lab, participants were given the opportunity to delete any recordings they desired (deletion rate was very low, i.e., .01% of recorded files).

Transcription and linguistic analysis

Research assistants transcribed all of the participants’ utterances and received special training in handling ambiguities such as repetitions, filler words, nonfluencies, and slang. The frequency with which participants uttered each word in the ANEW database was then calculated with the program Linguistic Inquiry and Word Count (LIWC; Pennebaker, Francis, & Booth, 2001), using a user-defined dictionary.
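LIWC's internal matching rules are not reproduced here; the sketch below only illustrates the general idea of counting dictionary words in a transcript and expressing each count as a proportion of all words spoken. Tokenization by a simple regular expression and exact (non-wildcard) matching are assumptions, and the transcript is invented.

```python
import re
from collections import Counter


def dictionary_proportions(transcript, dictionary_words):
    """Proportion of all tokens in `transcript` accounted for by each dictionary word."""
    tokens = re.findall(r"[a-z']+", transcript.lower())
    total = len(tokens)
    counts = Counter(t for t in tokens if t in dictionary_words)
    return {w: (counts[w] / total if total else 0.0) for w in dictionary_words}


dictionary = {"love", "hate", "sunny", "rain", "murder"}
utterance = "I love the sunny beach, but I hate the rain."
props = dictionary_proportions(utterance, dictionary)
print(props["love"], props["murder"])  # 0.1 0.0
```

Most dictionary words receive a proportion of zero in any short transcript, which is the zero-level data problem discussed in the Results.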

Results and Discussion

Pearson correlations between the frequency of use in the combined EAR samples and the parameters reported in the ANEW (valence and arousal) and HAL (frequency of use) databases were calculated. Frequency of use was highly correlated between the HAL and EAR word counts across the 1,021 words (r = .88, p < .05), suggesting that the EAR frequency counts closely match the HAL norms.

Relationships between frequency of use and both valence and arousal in the EAR samples (raw proportional frequency: valence r = .16, p < .05; arousal r = .01, ns; log-frequency: valence r = .18, p < .05; arousal r = −.01, ns) were also consistent with the findings reported in Study 1. Although these effects may appear small, they are consistent with the effect sizes observed in Study 1 and with the size of effects typically observed in studies of natural language use (e.g., Yarkoni, 2010). Thus, the frequency with which individuals use words in both daily life (EAR-sampled language) and written samples (HAL) is associated with the valence of those words (based on the ANEW database), such that positive words are used more frequently.

To examine the extent to which gender or personality moderates the LPB, we had to aggregate the word frequency data, because there was extensive zero-level data (i.e., unspoken words) for any given word at the person level. In other words, one would not expect an individual to use every word in the ANEW data set over the course of a month, let alone within a sample of 30-second periods occurring across several days (see Yarkoni, 2010). Therefore, we aggregated words into groups of similar valence. We chose the group size so that each group contained enough words to limit the amount of zero-level data while still yielding enough groups for within-person relationships to be adequately estimated.

To form the groupings, we rank-ordered the words by valence rating. We then created groups of 15 consecutive words based on this ranking (i.e., the 15 most negative words form Group 1, the next 15 most negative words form Group 2, etc.), resulting in 68 groups of words. The mean frequency of use from both the HAL and EAR data sets, as well as the mean valence and arousal ratings from the ANEW database, were then calculated for each of the 68 word groups. After aggregation, the frequency measures from the HAL and EAR (mean proportion of use) data sets were still highly related (r = .80, p < .05), and the relationships between frequency and valence (ANEW r = .58, p < .05; EAR r = .52, p < .05) and arousal (ANEW r = −.15, ns; EAR r = .07, ns) showed the same pattern as before, though the positivity correlations are higher, likely because of the aggregation achieved by grouping words. Thus, this aggregation method produced a data set that both maintains the preexisting relationships among study variables and allows for the calculation of within-person effects.
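The grouping step can be sketched as follows. Note that 68 groups of 15 account for 1,020 of the 1,021 words; the text does not say how the remaining word was handled, so folding a short final chunk into the preceding group is an assumption of this sketch, as are the field names. The synthetic word list only demonstrates the mechanics.

```python
def group_by_valence(words, size=15):
    """Rank words by valence and split them into consecutive groups of `size`.

    words: list of dicts with at least a 'valence' key.
    A short final chunk is merged into the previous group (an assumption).
    """
    ranked = sorted(words, key=lambda w: w["valence"])
    groups = [ranked[i:i + size] for i in range(0, len(ranked), size)]
    if len(groups) > 1 and len(groups[-1]) < size:
        leftover = groups.pop()
        groups[-1].extend(leftover)
    return groups


def group_means(groups, field):
    """Mean of `field` within each group, in group order (most negative first)."""
    return [sum(w[field] for w in g) / len(g) for g in groups]


# Synthetic stand-in for the 1,021-word list (values are not real norms).
words = [{"valence": 1 + (i * 37 % 1021) / 1021 * 8, "freq": i % 50} for i in range(1021)]
groups = group_by_valence(words)
mean_valence = group_means(groups, "valence")
print(len(groups), [len(g) for g in groups][-2:])  # 68 [15, 16]
```

The group means of frequency and valence produced this way are the 68 data points on which the aggregated correlations are computed.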

To determine the potential moderating role of personality and gender, we first calculated, for each participant, within-person correlations across the 68 word groups between EAR-based frequency and HAL frequency, pleasantness, and arousal ratings; we then correlated these within-person coefficients with personality scores and gender.1,2 As seen in Table 3, only the two socially facilitative personality variables of extraversion and agreeableness moderated the within-person relationship between actual spoken frequency of use and word pleasantness. As predicted, higher levels of extraversion and agreeableness were related to a larger LPB, whereas none of the remaining Big Five dimensions predicted the magnitude of this bias. These results are consistent with our hypotheses and prior research regarding personality and language use.
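The two-step logic can be sketched as follows: compute one within-person correlation per participant across the word groups, then correlate those coefficients with a trait across participants. This is a simplified illustration of the approach described in the text, not the authors' code; the data are synthetic, built so that higher trait scores go with a stronger frequency–valence link.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def within_person_lpb(freq_by_person, group_valence):
    """Step 1: each person's correlation between group frequency and group valence."""
    return [pearson_r(freqs, group_valence) for freqs in freq_by_person]


def moderation(trait_scores, person_rs):
    """Step 2: correlate the trait with the within-person coefficients."""
    return pearson_r(trait_scores, person_rs)


# Synthetic example: 10 word groups, 5 people whose trait scores scale the valence effect.
group_valence = [float(v) for v in range(1, 11)]
noise = [3, -2, 4, -1, 0, 2, -3, 1, -4, 0]  # same idiosyncratic pattern for everyone
traits = [0, 1, 2, 3, 4]
freq_by_person = [[t * v + e for v, e in zip(group_valence, noise)] for t in traits]

person_rs = within_person_lpb(freq_by_person, group_valence)
print([round(r, 2) for r in person_rs], round(moderation(traits, person_rs), 2))
```

Fisher-transforming the coefficients before step 2 is a common refinement; the text does not say whether it was applied.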

Table 3.

Personality Moderation of the Linguistic Positivity Bias

| | E | A | C | O | N | Gender |
|---|---|---|---|---|---|---|
| Frequency–Frequency | .11 | .10 | .04 | .02 | −.12 | .11 |
| Frequency–Valence | .15* | .15* | .08 | .06 | −.12 | .19* |
| Frequency–Arousal | .08 | −.06 | −.02 | .02 | .10 | .15* |

Note: Word group N = 68, participant N = 228. E = extraversion; A = agreeableness; C = conscientiousness; O = openness to experience; N = neuroticism. Data represent correlation coefficients. Gender: positive effects indicate that women had higher values than men.

*p < .05.

Results also indicate that gender moderates the within-person relationship between frequency and valence. Consistent with our hypotheses and prior research, women displayed a stronger relationship between valence and frequency (Female M = .35, SD = .13; Male M = .30, SD = .12; t(224) = −2.85, p < .05). In addition, women displayed a stronger relationship between arousal and frequency (Female M = .06, SD = .10; Male M = .03, SD = .11; t(224) = −2.29, p < .05). Just as women use positive emotion words and other positive, nonemotion words (e.g., words referring to other people) more frequently (Mehl & Pennebaker, 2003; Newman et al., 2008), they also display a larger LPB in these naturalistic samples of spoken language.
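The gender comparison is an independent-samples t test on the within-person coefficients. A minimal pooled-variance (Student's) version is sketched below with invented values; the text does not state whether a pooled or Welch test was used. The sign of t depends only on which group is entered first, and the negative values reported above are consistent with the male coefficients being entered first.

```python
import math
from statistics import mean, variance


def student_t(group_a, group_b):
    """Independent-samples t test with pooled variance. Returns (t, df)."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    pooled = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    se = math.sqrt(pooled * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / se, df


# Invented within-person frequency-valence coefficients.
men = [0.25, 0.30, 0.28, 0.35, 0.27]
women = [0.33, 0.38, 0.31, 0.40, 0.36]
t, df = student_t(men, women)
print(f"t({df}) = {t:.2f}")
```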

General Discussion

The results of these studies have several implications for existing and future research. First, these studies examined individual differences in a relatively broad pattern of language use. The majority of studies to date have focused on main effects (i.e., word categories from the standard LIWC dictionary; Pennebaker et al., 2001) or specific patterns of word usage (Gill & Oberlander, 2002; Oberlander & Gill, 2006; Yarkoni, 2010). The present research concerns personality predictors of naturalistic linguistic behavior across a wide number of word categories. Future research should attempt to determine the degree to which personality and other individual difference variables predict broad speech patterns.

Second, while there is a growing body of research examining individual differences in the number (Rozin et al., 2010) and use (Hirsh & Peterson, 2009; Mehl et al., 2006) of emotion words, the present studies examined the use of emotionally relevant (i.e., valenced) words more broadly. Not all emotionally relevant words are emotion descriptors. For example, while cloudy is not a negative emotion word and sunny is not a positive emotion word, both words have valenced connotations. By examining only emotion words, one misses potential differences in words that carry emotional connotations without describing emotions. Our findings also show a dissociation between attention to and use of these emotionally relevant words. While individuals may show rapid and sustained attention to highly negative words (Larsen, Augustine, & Prizmic, 2010; Nasrallah, Carmel, & Lavie, 2009), they do not use these words more often. Thus, despite the greater cognitive weight of words like murder and vomit, words like delight and lucky are used more frequently.

Finally, our findings suggest a possible role for social facilitation in the use of more positive words (Fiedler, 2008). Using more positive words may lead to more positive social interactions, and those who experience more positive social interactions should use positive words to a greater degree. Indeed, those individuals who spend more time with others and who refer to other people more frequently (i.e., women and people higher in extraversion and agreeableness) did show a larger LPB. However, it is also possible that our findings reflect the influence of emotion on language. People generally experience mild positive emotions, and this typical experience may influence the language people use in an emotion-congruent manner, creating a tendency to use more positive words. This would be consistent with our moderation findings; those higher (vs. lower) in extraversion and agreeableness experience relatively more positive emotions (for a review, see Larsen & Augustine, 2008) and also display a relatively larger LPB. Similarly, those higher (vs. lower) in neuroticism experience relatively more negative emotion (for a review, see Larsen & Augustine, 2008); although the effect was not significant, our data reveal a marginal trend such that those higher in neuroticism display a smaller LPB. Thus, our data suggest a possible role for both social facilitation and emotion-congruent language use in the LPB. Future research should examine other ways in which our social lives, emotions, and other categories of behavior percolate into and influence our use of language.

In sum, individuals display a generic LPB, such that they use positively valenced words to a greater degree than they do negatively valenced words. This applies to nouns and verbs as well as adjectives, and it applies to written and spoken words as well. Moreover, personality and gender predict the degree to which individuals exhibit this linguistic bias. People in general, and some people more than others, tend to talk more about the brighter side than the darker side of life.

Acknowledgments

Financial Disclosure/Funding

The authors disclosed receipt of the following financial support for the research and/or authorship of this article: Portions of this research were supported by Grant R01 AG028419 to Randy J. Larsen. During the preparation of this report Adam A. Augustine was supported by a National Research Service Award T32 GM081739 to Randy J. Larsen.

Biographies

Adam A. Augustine is a graduate student in the Department of Psychology at Washington University in St. Louis.

Matthias R. Mehl is an assistant professor in the Department of Psychology at the University of Arizona.

Randy J. Larsen is the William R. Stuckenberg Professor of Human Values and Moral Development and the Chair of the Department of Psychology at Washington University in St. Louis.

Footnotes

Declaration of Conflicting Interests

The authors declared that they had no conflicts of interests with respect to their authorship or the publication of this article.

1. Although multilevel modeling is the default method of choice for examining the moderation of within-person effects, we are not examining the moderation of within-person effects but rather the moderation of the match between a within-person variable (frequency of word usage) and a fixed comparison/criterion variable (valence ratings from the ANEW database). Thus, the only true within-person variable is frequency, and we analyze these data using the process approach (Larsen, Augustine, & Prizmic, 2009) rather than multilevel modeling.

2. To ensure that effects were not due to data collection differences between the three samples used in this study, we examined whether the frequency of use in each word grouping differed by sample. A MANOVA (all 68 frequency groupings were entered as the dependent variables and sample was entered as the independent variable) revealed no sample-based differences in frequency, F(136, 318) = 1.187, ns.

References

- Aaron PG, Joshi RM. Written language is as natural as spoken language: A biolinguistic perspective. Reading Psychology. 2006;27:263–311. [Google Scholar]

- Anderson NH. Likeableness ratings of 555 personality–trait adjectives. University of California at Los Angles; 1964. Unpublished manuscript. [Google Scholar]

- Balota DA, Cortese MJ, Sergent-Marshall SD, Spieler DH, Yap MJ. Visual word recognition of single-syllable words. Journal of Experimental Psychology: General. 2004;133:283–316. doi: 10.1037/0096-3445.133.2.283.

- Balota DA, Yap MJ, Cortese MJ, Hutchison KA, Kessler B, Loftis B, Treiman R. The English Lexicon Project. Behavior Research Methods. 2007;39(3):445–459. doi: 10.3758/bf03193014.

- Boucher J, Osgood CE. The Pollyanna Hypothesis. Journal of Verbal Learning and Verbal Behavior. 1969;8:1–8.

- Bradley MM, Lang PJ. Affective norms for English words (ANEW): Stimuli, instruction manual and affective ratings (Technical Report C-1). Gainesville, FL: The Center for Research in Psychophysiology, University of Florida; 1999.

- Burgess C, Livesay K. The effect of corpus size in predicting RT in a basic word recognition task: Moving on from Kucera and Francis. Behavior Research Methods, Instruments, & Computers. 1998;30:272–277.

- Costa PT, McCrae RR. Revised NEO Personality Inventory (NEO-PI-R) and NEO Five-Factor Inventory (NEO-FFI) professional manual. Odessa, FL: Psychological Assessment Resources; 1992.

- Fast LA, Funder DC. Personality as manifest in word use: Correlations with self-report, acquaintance report, and behavior. Journal of Personality and Social Psychology. 2008;94:334–346. doi: 10.1037/0022-3514.94.2.334.

- Fast LA, Funder DC. Gender differences in the correlates of self-referent word use: Authority, entitlement and depressive symptoms. Journal of Personality. 2010;78:313–338. doi: 10.1111/j.1467-6494.2009.00617.x.

- Fiedler K. Language: A toolbox for sharing and influencing social reality. Perspectives on Psychological Science. 2008;3:38–47. doi: 10.1111/j.1745-6916.2008.00060.x.

- Gable SL, Reis HT, Elliot AJ. Behavioral activation and inhibition in everyday life. Journal of Personality and Social Psychology. 2000;78:1135–1149. doi: 10.1037//0022-3514.78.6.1135.

- Gill AJ, Nowson S, Oberlander J. What are they blogging about? Personality, topic and motivation in blogs. Unpublished proceedings of the 3rd International AAAI Conference on Weblogs and Social Media (ICWSM09); AAAI, Menlo Park, CA. 2009.

- Gill AJ, Oberlander J. Taking care of the linguistic features of Extraversion. In: Proceedings of the 24th Annual Conference of the Cognitive Science Society. Austin, TX: Cognitive Science Society; 2002. pp. 363–368.

- Gough HG. California Psychological Inventory. Palo Alto, CA: Consulting Psychologists Press; 1956.

- Hirsh JB, Peterson JB. Personality and language use in self-narratives. Journal of Research in Personality. 2009;43:524–527.

- Holtzman NS, Vazire S, Mehl MR. Sounds like a narcissist: Behavioral manifestations of narcissism in everyday life. Journal of Research in Personality. 2010;44:478–484. doi: 10.1016/j.jrp.2010.06.001.

- John OP, Srivastava S. The Big Five trait taxonomy: History, measurement, and theoretical perspectives. In: Pervin LA, John OP, editors. Handbook of personality theory and research. New York: Guilford Press; 1999. pp. 102–138.

- Johnson RC, Thomson CW, Frincke G. Word values, word frequency, and visual duration thresholds. Psychological Review. 1960;67:332–342. doi: 10.1037/h0038869.

- Kucera H, Francis W. Computational analysis of present-day American English. Providence, RI: Brown University Press; 1967.

- Küfner ACP, Back MD, Nestler S, Egloff B. Tell me a story and I will tell you who you are! Lens model analyses of personality and creative writing. Journal of Research in Personality. (in press)

- Larsen RJ, Augustine AA. Basic personality dispositions: Extraversion/neuroticism, BAS/BIS, positive/negative affectivity, and approach/avoidance. In: Elliot AJ, editor. Handbook of approach and avoidance motivation. Hillsdale, NJ: Lawrence Erlbaum Associates; 2008. pp. 151–164.

- Larsen RJ, Augustine AA, Prizmic Z. A process approach to emotion and personality: Using time as a facet of data. Cognition & Emotion. 2009;23:1407–1426.

- Larsen RJ, Augustine AA, Prizmic Z. The power of negative information: Preferential attention to negative vs. positive stimuli. Manuscript in preparation. 2010.

- Lund K, Burgess C. Producing high-dimensional semantic spaces from lexical co-occurrence. Behavior Research Methods, Instruments, & Computers. 1996;28:203–208.

- Mehl MR. The lay assessment of subclinical depression in daily life. Psychological Assessment. 2006;18:340–345. doi: 10.1037/1040-3590.18.3.340.

- Mehl MR, Gosling SD, Pennebaker JW. Personality in its natural habitat: Manifestations and implicit folk theories of personality in daily life. Journal of Personality and Social Psychology. 2006;90:862–877. doi: 10.1037/0022-3514.90.5.862.

- Mehl MR, Pennebaker JW. The sounds of social life: A psychometric analysis of students’ daily social environments and natural conversations. Journal of Personality and Social Psychology. 2003;84:857–870. doi: 10.1037/0022-3514.84.4.857.

- Mehl MR, Pennebaker JW, Crow M, Dabbs J, Price J. The Electronically Activated Recorder (EAR): A device for sampling naturalistic daily activities and conversations. Behavior Research Methods, Instruments, & Computers. 2001;33:517–523. doi: 10.3758/bf03195410.

- Mehl MR, Vazire S, Holleran SE, Clark CS. Eavesdropping on happiness: Well-being is related to having less small talk and more substantive conversations. Psychological Science. 2010;21:539–541. doi: 10.1177/0956797610362675.

- Nasrallah M, Carmel D, Lavie N. Murder, she wrote: Enhanced sensitivity to negative word valence. Emotion. 2009;9:609–618. doi: 10.1037/a0016305.

- Newman ML, Groom CJ, Handelman LD, Pennebaker JW. Gender differences in language use: An analysis of 14,000 text samples. Discourse Processes. 2008;45:211–236.

- Nowson S. The language of weblogs: A study of genre and individual differences. Unpublished doctoral thesis, University of Edinburgh; 2006.

- Nowson S, Oberlander J, Gill AJ. Weblogs, genres and individual differences. In: Proceedings of the 27th Annual Conference of the Cognitive Science Society. Austin, TX: Cognitive Science Society; 2005. pp. 1666–1671.

- Oberlander J, Gill AJ. Language with character: A corpus-based study of individual differences in e-mail communication. Discourse Processes. 2006;42:239–270.

- Ozer D, Benet-Martínez V. Personality and the prediction of consequential outcomes. Annual Review of Psychology. 2006;57:401–421. doi: 10.1146/annurev.psych.57.102904.190127.

- Pennebaker JW, Francis ME, Booth RJ. Linguistic inquiry and word count: LIWC 2001. Mahwah, NJ: Lawrence Erlbaum Associates; 2001.

- Rozin P, Berman L, Royzman E. Biases in use of positive and negative words across twenty languages. Cognition & Emotion. 2010;24:536–548.

- Suitner C, Maass A. The role of valence in the perception of agency and communion. European Journal of Social Psychology. 2008;38:1073–1082.

- Thorndike EL, Lorge I. The teacher’s word book of 30,000 words. New York: Bureau of Publications, Teachers College, Columbia University; 1944.

- Unkelbach C, von Hippel W, Forgas JP, Robinson MD, Shakarchi RJ, Hawkins C. Good things come easy: Subjective exposure frequency and the faster processing of positive information. Social Cognition. 2010;28(4):538–555.

- Vazire S, Mehl MR. Knowing me, knowing you: The relative accuracy and unique predictive validity of self-ratings and other-ratings of daily behavior. Journal of Personality and Social Psychology. 2008;95:1202–1216. doi: 10.1037/a0013314.

- Yarkoni T. Personality in 100,000 words: A large-scale analysis of personality and word use among bloggers. Journal of Research in Personality. 2010;44:363–373. doi: 10.1016/j.jrp.2010.04.001.

- Zajonc RB. Attitudinal effects of mere exposure. Journal of Personality and Social Psychology Monograph Supplement. 1968;9(2):1–27.