Abstract

Scientific models are abstractions that aim to explain natural phenomena. A successful model shows how a complex phenomenon arises from relatively simple principles while preserving major physical or biological rules and predicting novel experiments. A model should not be a facsimile of reality; it is an aid for understanding it. Contrary to this basic premise, with the 21st century has come a surge in computational efforts to model biological processes in great detail. Here we discuss the oxymoronic, realistic modeling of single neurons. This rapidly advancing field is driven by the discovery that some neurons don't merely sum their inputs and fire if the sum exceeds some threshold. Thus researchers have asked what the computational abilities of single neurons are and have attempted to give answers using realistic models. We briefly review the state of the art of compartmental modeling, highlighting recent progress and intrinsic flaws. We then attempt to address two fundamental questions. Practically, can we realistically model single neurons? Philosophically, should we realistically model single neurons? We use layer 5 neocortical pyramidal neurons as a test case to examine these issues. We subject three publicly available models of layer 5 pyramidal neurons to three simple computational challenges. Based on their performance and a partial survey of published models, we conclude that current compartmental models are ad hoc, unrealistic models functioning poorly once they are stretched beyond the specific problems for which they were designed. We then attempt to plot possible paths for generating realistic single neuron models.

Keywords: dendrites, compartmental model, cable theory, ion channel, channel kinetics

“I come to bury Caesar, not to praise him.” [Mark Antony, in Shakespeare's Julius Caesar (1599)]

The brain computes, ergo, solving the puzzle of the brain requires a computer. This is one credo of modern computational neuroscience. A computer, however, is a golem that requires a human-written list of instructions, i.e., a program, to compute. Thus, day in and day out, computational neuroscientists write computer code. We vainly call this inglorious activity modeling. More formally perhaps, the ultimate goal of computational neuroscience, as expressed by Sejnowski et al. (1988), is “…to explain how electrical and chemical signals are used in the brain to represent and process information.” Modeling can be abstract, describing a neuron as a simple summation device (Abeles 1991; Gerstein and Mandelbrot 1964; Izhikevich 2007; Knight 1972). The allure of this methodology is the ability to use simple tractable rules to study neuronal computation. By contrast, other models, named, or perhaps misnamed, “realistic,” generate complex outputs, rich with biological detail. The basic assumption behind these realistic models is that expressing the biological details of the single neuron in silico creates a virtual double of the neuron from which its computational properties will emerge like Athena jumping out of Zeus's head. Recently, these biologically detailed models have been applied to simulate the activity of large neuronal populations (Markram 2006, 2012; Markram et al. 2015).

Between integrate and fire models and biologically detailed ones, there is a spectrum of neuronal modeling techniques (Herz et al. 2006). Here we remain agnostic regarding most modeling dogmas and take a critical look at the current state of biologically detailed or “realistic” neuronal modeling. We ask: have computers and biology ripened to the extent that we can build full, biologically detailed neural models or not? This question has been gathering importance since studies of voltage-gated channels, dendritic synaptic integration, axonal properties, and synaptic plasticity have shown that single neurons perform computations more complex than integrate-and-fire. This observation leads to a simple yet unanswered question: if single neurons do not just integrate and fire, what computation do they do (Koch 1997; Koch and Segev 2000; Silver 2010)?

We note that at the heart of realistic neuronal modeling dwells the assumption that computations performed by a real neuron are more complex than those described by a point neuron. It is also assumed that this complex computation affects the computation of the network. The former assumption is supported by many studies demonstrating the complex nature of postsynaptic integration (Koch 1999; London and Hausser 2005; Major et al. 2013; Migliore and Shepherd 2002; Poirazi et al. 2003b; Polsky et al. 2004); the latter is less well supported, although some network simulations using complex neurons predict complex network activity (Kording and Konig 2000, 2001a, 2001b; Markram et al. 2015). Nevertheless, accepting these assumptions forces us to acknowledge that single neuron computation is complex.

One established strategy for approaching this question is to use abstract or partial models of an individual cellular function. This approach attempts to distill, one by one, a set of cellular computational properties. This approach has advanced rapidly in great part due to exposure to larger and larger data sets and more powerful computing resources. Indeed, progress is amazing. In less than two decades, we have gone from endless hours running crude simulations on 486 processors to automatically optimizing models using supercomputers, from modeling networks of just a few hundred virtual neurons to networks of billions of units, and from modeling single biochemical reactions to simulating entire cytoplasmic networks. Researchers now have the luxury of performing very large and complex simulations (Ananthanarayanan and Modha 2007; Eliasmith et al. 2012; Eliasmith and Trujillo 2014; Izhikevich and Edelman 2008; Markram 2006, 2012; Markram et al. 2015). Likewise, the availability of computing power and wealth of biological data themselves suggest biologically detailed modeling. And mightn't we hope that by better describing the underlying biology, we will also better approximate the functionality of these systems, in particular, their computations? We must take heed and remember that, as the adage runs, with great power comes great responsibility. Accordingly, some scientists have asked, given such mastodonic resources, how may they best be used for understanding brain function (Eliasmith and Trujillo 2014). Here we ask a more basic question, not how the gargantuan computer power and data sets delivered into our hands can best be deployed, but rather can we use these resources to understand the brain, or are such resources by themselves insufficient and we are unprepared to wield this power? 
While it is impossible to cover the entire field of computational neuroscience in one review, we can gain insight into this general question by focusing on recent attempts at “realistic” compartmental models for dendritic and axonal integration in individual, neocortical, pyramidal neurons in layer 5.

Complex Single-Neuron Computation

Complex single-neuron computation is best exhibited in two areas, and it is in them that we will focus our review of computational studies: dendritic synaptic integration (Branco et al. 2010; Branco and Hausser 2010; Cash and Yuste 1998, 1999; Gasparini et al. 2004; Gulledge et al. 2005; Hausser et al. 2000; Larkum et al. 2009; Losonczy and Magee 2006; Makara and Magee 2013; Mel 1993; Nettleton and Spain 2000; Oviedo and Reyes 2012; Polsky et al. 2004; Remy et al. 2009; Stuart et al. 1999; Yuste 2011) and axonal excitation (Bender and Trussell 2012; Clark et al. 2005; Foust et al. 2010; Hu et al. 2009; Khaliq and Raman 2006; Martina et al. 2000; Meeks and Mennerick 2007; Palmer et al. 2010; Palmer and Stuart 2006; Popovic et al. 2011; Stuart et al. 1997a; Stuart and Palmer 2006).

The properties and functions of dendrites have been extensively studied over the past two decades (for reviews, see Johnston 1999; Johnston et al. 2003; Kastellakis et al. 2015; London and Hausser 2005; Magee and Johnston 2005; Major et al. 2013; Migliore and Shepherd 2002; Ramaswamy and Markram 2015; Stuart et al. 1999), mainly due to the success of patch-clamp recording from visually identifiable dendrites in brain slices (Stuart et al. 1993) and of new imaging techniques (Antic 2003; Antic et al. 1999; Denk et al. 1994; Lasser-Ross et al. 1991; Tsien 1989). Conflicting with the early assumption that dendrites were passive, it has been shown that action potentials initiated at the axon either actively back-propagate into the dendritic tree (Bischofberger and Jonas 1997; Chen et al. 1997, 2002; Hausser et al. 1995; Spruston et al. 1995; Stuart et al. 1997a; Stuart and Sakmann 1994), or else fail to propagate effectively into the dendritic tree, for example, in cerebellar Purkinje cells, where the amplitude of the action potential decreased to just a few millivolts at the proximal dendrite (Llinas and Sugimori 1980; Stuart and Hausser 1994; Vetter et al. 2001). Furthermore, dendrites generate complex regenerative calcium and sodium spikes (Amitai et al. 1993; Antic 2003; Ariav et al. 2003; Bischofberger and Jonas 1997; Chen et al. 1997; Golding and Spruston 1998; Johnston et al. 1996, 2003; Kamondi et al. 1998; Korogod et al. 1996; Llinas and Sugimori 1980; Magee et al. 1998; Martina et al. 2000; Migliore and Shepherd 2002; Pouille et al. 2000; Schiller et al. 1997; Schwindt and Crill 1998; Stuart et al. 1997b; Zhu 2000), modulate synaptic potentials (Magee 1999; Magee and Johnston 1995), contain electrically- and chemically-defined compartments (Bekkers 2000a; Gasparini and Magee 2006; Hoffman et al. 1997; Korngreen and Sakmann 2000; Larkum et al. 1999b, 2001; Losonczy and Magee 2006; Magee 1999; Schiller et al. 
1997, 2000), and influence the induction and expression of synaptic plasticity (Golding et al. 2002; Kim et al. 2012; Lavzin et al. 2012; Losonczy and Magee 2006; Losonczy et al. 2008; Magee and Johnston 1997; Makara and Magee 2013; Markram et al. 1997; Nevian et al. 2007; Polsky et al. 2004; Smith et al. 2013). Moreover, recent in vivo studies demonstrated complex dendritic computations (Grienberger et al. 2014, 2015; Palmer et al. 2014; Smith et al. 2013). These results revitalized the discussion of the computational properties of the single neuron (Hausser and Mel 2003; Koch 1999; London and Hausser 2005; Major et al. 2013; Migliore and Shepherd 2002; Oviedo and Reyes 2012; Poirazi et al. 2003b; Polsky et al. 2004; Silver 2010).

The neuron's computational capabilities depend on various parameters, such as its morphology (dendritic/axonal arborization, length, diameter, membrane area) and the distribution of ion channels throughout its membrane (Hausser et al. 2000; Kim and Connors 1993; Mainen and Sejnowski 1996; Roberts et al. 2009; Schaefer et al. 2003b; Serodio and Rudy 1998; Talley et al. 1999; van Elburg and van Ooyen 2010; Vetter et al. 2001; Weaver and Wearne 2008). The neuron's ability to couple inputs originating in different compartments also depends on the specific features of each compartment, and its threshold for attenuating postsynaptic potentials as defined by its cable properties, together with the different active properties of the dendrites (London and Hausser 2005; Rall et al. 1995). Pyramidal neurons in layer 5 couple and decouple proximal and distal areas through morphology of proximal oblique dendrites (Schaefer et al. 2003b). Coupling lowers the threshold for initiating dendritic calcium spikes and leads to axonal burst firing (Helmchen et al. 1999; Larkum et al. 1999a, 1999b; Williams and Stuart 1999).

Computational properties, however, are only rarely defined in a simple manner by physical properties. The efficacy of back-propagating action potentials, for example, is lower when dendrites have complex structure, but this can be compensated with an increase in the density of voltage-gated channels (Vetter et al. 2001). The relation between action potential propagation and dendritic complexity has the implication that distal synapses and axonal output are less coupled in neurons with highly branching geometries. Yet, once again, this can be compensated (Baer and Rinzel 1991; Jaslove 1992) by changes in spine density during development (Gould et al. 1990; Harris et al. 1992) and by synaptic plasticity (Engert and Bonhoeffer 1999; Maletic-Savatic et al. 1999). The computational significance of dendritic spines is underscored by the fact that dopaminergic neurons and various interneurons, which have minimal dendritic branching, tend to be aspiny, whereas Purkinje neurons have both highly branching dendrites and high spine density (Vetter et al. 2001).

The biophysics of dendrites, too, can on the one hand nonlinearly modulate the integration of synaptic potentials (Cash and Yuste 1998, 1999; Yuste 2011) and on the other support local electrogenesis of dendritic spikes, leading to a nonlinear integration of synaptic potentials (Gasparini et al. 2004; Gulledge et al. 2005; Hausser et al. 2000; Losonczy and Magee 2006; Makara and Magee 2013; Mel 1993; Nettleton and Spain 2000; Polsky et al. 2004) and allowing the neuron to respond over a longer timescale. The nonlinear integration of synaptic inputs occurs in response to either functional or anatomical synapse clusters (Druckmann et al. 2014; Losonczy and Magee 2006; Makino and Malinow 2011; McBride et al. 2008; Poirazi et al. 2003b; Polsky et al. 2004; Yadav et al. 2012). In the case of functional clustering, synchronous stimulation of nearby synapses within the same branch results in supralinear summation, while inputs from the same number of synapses distributed across different branches sum linearly (Branco et al. 2010; Branco and Hausser 2010; Cash and Yuste 1998; Hausser and Mel 2003; Larkum et al. 2009; Losonczy and Magee 2006; Mel 1993; Oviedo and Reyes 2012; Poirazi et al. 2003a; Polsky et al. 2004), suggesting that a single dendritic branch acts as a computational compartment of the neuron.

Furthermore, supralinear synaptic integration at distal dendritic branches can lower the driving force for activating voltage-gated ion channels (Hausser and Mel 2003; Magee 2000; Mel 1993; Spruston 2008; Williams and Stuart 2003). Since the driving force allows the dendrites to regenerate their spikes and propagate them forward to the axonal action potential initiation zone, its modulation serves as an additional mechanism for nonlinear dendritic computation. In layer 5 pyramidal neurons, synaptic conductance is highly compartmentalized, and the dendritic tree performs axo-somatic and dendritic integration in high-conductance conditions (Williams 2004, 2005). It has been suggested that the axon initial segment plays a significant role in neuronal processing through a mechanism of activity-dependent plasticity, in which the neuron modulates its excitation by changing the location of subcellular sections along the axon initial segment. This mechanism shifts the input-output function of the neuron according to the level of excitation (Burrone and Murthy 2003; Grubb and Burrone; Marder and Prinz 2002; Turrigiano and Nelson 2000). Combining axonal plasticity with dendritic synaptic plasticity can maximize the neuron's computational capabilities and stabilize adaptation. A recent study has shown that not only does the axon initial segment influence layer 5 pyramidal neuron excitability, but the distal axon also plays a role in facilitating high-frequency burst firing (Kole 2011).

New morphological data, too, suggest that the traditional model of neuronal computation is oversimplified. Axons can emerge from dendrites in neuroendocrine cells (Herde et al. 2013), dopaminergic neurons (Hausser et al. 1995), interneurons (Martina et al. 2000), and hippocampal and cortical pyramidal neurons (Lorincz and Nusser 2010; Peters et al. 1968; Sloper and Powell 1979; Thome et al. 2014). Thome et al. (2014) showed that this anatomical difference in CA1 pyramidal neurons causes different functional responses in neurons with axon-carrying dendrites. Dendritic spikes are more common in axon-carrying dendrites than in non-axon-carrying dendrites. This enhances supralinear integration of synaptic inputs and tightly couples synchronous timing of synaptic inputs to action potential output (Ariav et al. 2003; Losonczy and Magee 2006; Losonczy et al. 2008; Thome et al. 2014). So neuronal morphology affects neuronal computation and broadens our view of how the outputs of single neurons are related to their inputs.

In the Beginning There Was the Hodgkin and Huxley Model

Neuronal modeling, as well as many other subdisciplines within computational neuroscience, grew directly out of Hodgkin and Huxley's seminal investigation of the action potential in the squid giant axon (Hodgkin and Huxley 1952d). One could even make a case that this study culminated with the first compartmental model. Using the newly invented voltage-clamp technique, Hodgkin and Huxley recorded ionic currents from the squid giant axon and, using clever ionic substitution and novel voltage-clamp experiments, isolated two voltage-gated conductances (Hodgkin and Huxley 1952a, 1952b, 1952c; Hodgkin et al. 1952). They then performed two stages of data reduction. First, they used standard chemical kinetics to analyze the voltage-dependence of the sodium and potassium conductances, thereby obtaining the rate constants for opening, closing, and inactivation of the conductances. Second, they reduced these rate constants to a small set of algebraic and differential equations describing the time and voltage dependence of the channels. This model explained and predicted the basis of the action potential in the squid giant axon (Hodgkin and Huxley 1952d). The entire model was derived from experimental results and contains almost no parameters of unknown value (the values for the maximal conductances and number of gates being manually adjusted by Hodgkin and Huxley). Any aspiring neuroscientist in general, and computational neuroscientists in particular, should find the time to read Hodgkin and Huxley's 1952 papers in the Journal of Physiology.

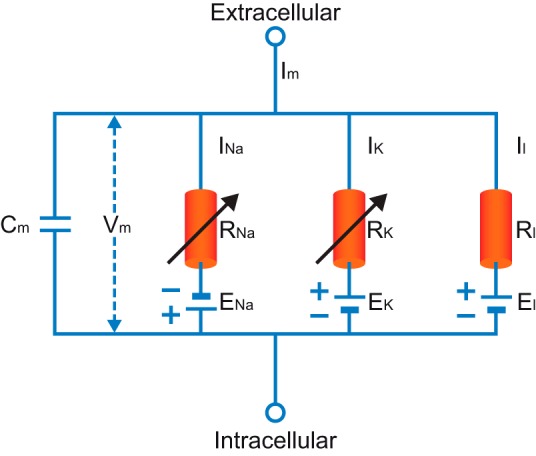

As a first step in our discussion, we reproduce Hodgkin and Huxley's model. We do so, however odd, to highlight several aspects of the model that are relevant to our discussion. Figure 1 presents the parallel conductance model of neural excitation. This graphical representation can be formally expressed using the following equations (we deliberately present the equations in the form given by Hodgkin and Huxley to calculate the propagating action potential, since they are the most relevant to the modern form of compartmental modeling):

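$$\frac{a}{2R_i}\frac{\partial^2 V}{\partial x^2} = C_m\frac{\partial V}{\partial t} + \bar{g}_K n^4 (V - E_K) + \bar{g}_{Na} m^3 h (V - E_{Na}) + \bar{g}_l (V - E_l) \tag{1}$$

$$\frac{dn}{dt} = \alpha_n (1 - n) - \beta_n n \tag{2}$$

$$\frac{dm}{dt} = \alpha_m (1 - m) - \beta_m m \tag{3}$$

$$\frac{dh}{dt} = \alpha_h (1 - h) - \beta_h h \tag{4}$$

$$\alpha_n = \frac{0.01\,(V + 10)}{\exp\left(\frac{V + 10}{10}\right) - 1}, \qquad \beta_n = 0.125 \exp\left(\frac{V}{80}\right) \tag{5}$$

$$\alpha_m = \frac{0.1\,(V + 25)}{\exp\left(\frac{V + 25}{10}\right) - 1}, \qquad \beta_m = 4 \exp\left(\frac{V}{18}\right) \tag{6}$$

$$\alpha_h = 0.07 \exp\left(\frac{V}{20}\right), \qquad \beta_h = \frac{1}{\exp\left(\frac{V + 30}{10}\right) + 1} \tag{7}$$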

Fig. 1.

The parallel conductance circuit model of the squid giant axon. Im, membrane current; Vm, membrane potential; INa, sodium current; RNa, membrane resistance to sodium; IK, potassium current; RK, membrane resistance to potassium; Il, leak current; Rl, nonspecific membrane resistance.

where a is the radius of the axon; Ri is the cytoplasmic resistance; Cm is the membrane capacitance; ḡK is the maximal potassium conductance density; n is the potassium activation gate; V is the membrane potential; EK is the potassium Nernst potential; ḡNa is the maximal sodium conductance density; ENa is the sodium Nernst potential; m is the sodium activation gate; h is the sodium inactivation gate; ḡl is the maximal leak conductance density; El is the leak reversal potential; αn is the forward rate constant for potassium activation; βn is the backward rate constant for potassium activation; αm is the forward rate constant for sodium activation; βm is the backward rate constant for sodium activation; αh is the forward rate constant for sodium inactivation; and βh is the backward rate constant for sodium inactivation.
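As an illustration of how compact the model is, the following minimal sketch integrates a single isopotential (space-clamped) patch of membrane with the forward Euler method, so the axial cable term is omitted. It is not the authors' code. It assumes the modern voltage convention (resting potential near -65 mV, depolarization positive) and the textbook squid-axon constants, none of which are given in the text; the names `simulate_hh`, `rates`, and `_ratio` are hypothetical.

```python
import math

# Assumed textbook constants in the modern convention (not taken from the text):
# mV, ms, mS/cm^2, uF/cm^2, uA/cm^2.
C_M = 1.0
G_NA, G_K, G_L = 120.0, 36.0, 0.3
E_NA, E_K, E_L = 50.0, -77.0, -54.387
V_REST = -65.0


def _ratio(x, y):
    """Return x / (1 - exp(-x / y)), using its limiting form near x = 0."""
    if abs(x / y) < 1e-6:
        return y * (1.0 + x / (2.0 * y))
    return x / (1.0 - math.exp(-x / y))


def rates(v):
    """Forward and backward rate constants (1/ms) for the n, m, and h gates."""
    a_n = 0.01 * _ratio(v + 55.0, 10.0)
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    a_m = 0.1 * _ratio(v + 40.0, 10.0)
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    return (a_n, b_n), (a_m, b_m), (a_h, b_h)


def simulate_hh(i_stim=10.0, t_stop=50.0, dt=0.01):
    """Forward-Euler integration of one compartment; returns the voltage trace (mV)."""
    v = V_REST
    # Start each gate at its steady state, alpha / (alpha + beta).
    n, m, h = (a / (a + b) for a, b in rates(v))
    trace = [v]
    for _ in range(int(round(t_stop / dt))):
        (a_n, b_n), (a_m, b_m), (a_h, b_h) = rates(v)
        # Parallel conductance model: potassium, sodium, and leak currents.
        i_ion = (G_K * n ** 4 * (v - E_K)
                 + G_NA * m ** 3 * h * (v - E_NA)
                 + G_L * (v - E_L))
        v_next = v + dt * (i_stim - i_ion) / C_M
        n += dt * (a_n * (1.0 - n) - b_n * n)
        m += dt * (a_m * (1.0 - m) - b_m * m)
        h += dt * (a_h * (1.0 - h) - b_h * h)
        v = v_next
        trace.append(v)
    return trace


if __name__ == "__main__":
    trace = simulate_hh()
    print(f"peak membrane potential: {max(trace):.1f} mV")
```

Forward Euler is chosen here only for brevity; dedicated simulators use more robust implicit integration schemes.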

Two aspects of the model must be highlighted. First, it is remarkable that these 7 equations contain 26 parameters. Three are passive parameters (Ri, Cm, and gl; today we use the membrane resistance Rm, which is the reciprocal of gl), one is a structural parameter (a), and three are reversal potentials. The other 19 parameters define the kinetic properties of the voltage-gated sodium and potassium conductances. All of the parameters were derived from experiments on the squid giant axon. We call attention to the surprisingly high number of parameters at this early stage of the discussion to give us a point of reference for comparison below with today's compartmental models. Second, we must note that the Hodgkin and Huxley model is a purely electrical one. It makes no reference to biochemistry, changes to cytoplasmic ionic concentrations, or other intracellular processes. Even today, compartmental models remain electro-centric, a legacy partly responsible for the lamentable lack of common language between computational neuroscience and chemo-centric systems biology (De Schutter 2008).

Then Came Compartmental Modeling

The Hodgkin and Huxley model describes the physiology of one cylindrical structure. Wilfred Rall blazed a new path in modeling by quantifying the laws of electrical current flow in neurons with arbitrary structure (Rall et al. 1995). In a series of classic papers during the 1950s, he provided deep mechanistic insight into the cable properties of neurons with complex dendritic trees (Rall 1957, 1959, 1960). Then in 1964 he described a numerical approach for solving cable equations and demonstrated that juxtaposed synapses summate sublinearly (Rall 1964). This made him the first to use a computer for simulating neuronal physiology and among the first to apply computers to problems in biology. The numerical technique developed by Rall in his 1964 paper is the foundation of modern compartmental modeling. Of the multitude of contributions made by Rall, this work, in our opinion, truly distinguishes him as a founding father of computational neuroscience.

The numerical method presented by Rall takes its kernel from the analysis of compartmentalized chemical reactions. Reactants enter a first compartment (or subvolume of space), their products are funneled to the next compartment, and so forth. The reaction in each compartment is described with a discrete set of differential equations, while the flow of material from compartment to compartment, and reaction to reaction, can be expressed as mass flux. Within each compartment it is assumed that the activity is homogeneous. This makes it possible to formalize it as a first-order ordinary differential equation. Spatial changes are expressed only as differences between compartments.

Rall adopted this formalism to describe neuronal electrical activity in a distributed neuronal morphology (Rall 1964). The neuronal tree is segmented into cylindrical compartments. The length of each cylindrical segment is set so that the axial changes in potential are small enough to comply, within acceptable error, with the assumption of compartment homogeneity. Segmenting in this way often results in a series of hundreds, and even thousands, of compartments describing a single neuronal morphology. Since each compartment is defined by its own set of differential equations, the number of equations scales linearly with the number of compartments. The dynamics of membrane currents in each segment are described with ordinary differential equations, assuming the parallel conductance model. Segments are connected by resistors through which axial current flows. We call this numerical modeling scheme compartmental modeling, commemorating its chemical origins; it is one of the most widely applied techniques in computational neuroscience today.
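The scheme described above can be sketched for the passive case in a few lines. This is an illustrative sketch rather than Rall's original formulation: each compartment is an isopotential node with a capacitance and a leak conductance, neighboring nodes are joined by axial conductances, and the resulting ordinary differential equations are stepped with forward Euler. The function name, the four-compartment tree, and all numerical values (in arbitrary but mutually consistent units) are hypothetical choices made for illustration.

```python
def simulate_passive_tree(neighbors, i_inject, c=1.0, g_leak=0.1, g_axial=1.0,
                          e_leak=-70.0, t_stop=200.0, dt=0.01):
    """Forward-Euler integration of a passive compartmental model.

    neighbors[i] lists the compartments joined to compartment i by an axial
    resistor; i_inject[i] is the constant current injected into compartment i.
    Returns the membrane potential of every compartment at time t_stop.
    """
    v = [e_leak] * len(neighbors)
    for _ in range(int(round(t_stop / dt))):
        dv = []
        for i, nbrs in enumerate(neighbors):
            # Leak current through the membrane of compartment i.
            i_leak = g_leak * (v[i] - e_leak)
            # Axial current arriving from each connected compartment.
            i_axial = sum(g_axial * (v[j] - v[i]) for j in nbrs)
            dv.append(dt * (i_inject[i] - i_leak + i_axial) / c)
        # Update all compartments together so each step uses the old voltages.
        v = [vi + dvi for vi, dvi in zip(v, dv)]
    return v


# Hypothetical tree: soma (0) - trunk (1) - two terminal branches (2, 3).
TREE = [[1], [0, 2, 3], [1], [1]]

if __name__ == "__main__":
    steady = simulate_passive_tree(TREE, i_inject=[1.0, 0.0, 0.0, 0.0])
    for name, value in zip(["soma", "trunk", "branch a", "branch b"], steady):
        print(f"{name:9s} {value:8.3f}")
```

With current injected at the soma, the potential falls off with distance from the injection site, which is the spatial information the scheme carries as differences between compartments.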

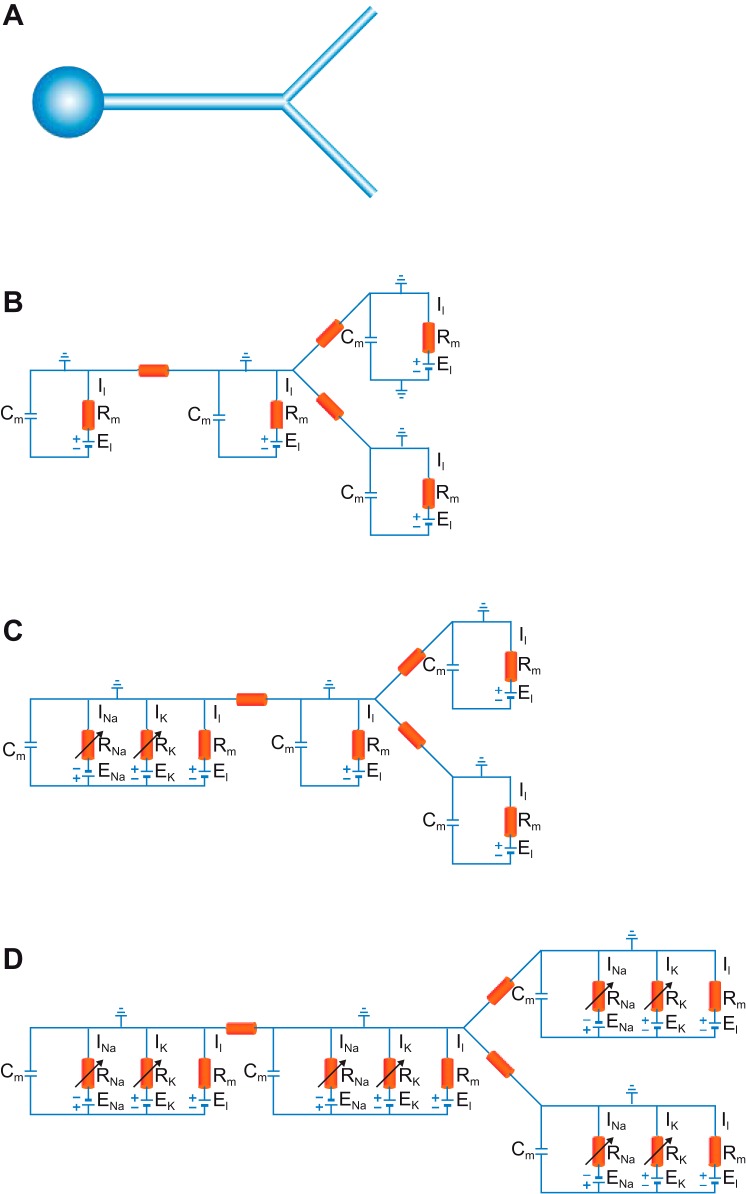

We noted above that the Hodgkin-Huxley model, which is by definition a single-compartment model, has 26 parameters. We now estimate the number of parameters in a simple compartmental model of a small neuron with one somatically connected dendrite bifurcating into two branches (Fig. 2A). Let the structure be represented by four compartments. The first functional model we impose on the structure is the passive one (Fig. 2B). Each compartment is represented as an equivalent membrane circuit of a capacitor connected in parallel to a resistor and the passive driving force of the membrane, and so it is endowed with three parameters. The entire neuron, then, is represented with four ordinary differential equations. With 4 compartments and 3 connections among them, the total number of parameters is 15. It is standard to normalize the passive parameters to the diameter of the compartment (Koch 1999) and to assume that these normalized parameters have the same values throughout the dendritic tree. This reduces the number of parameters which require tuning to just four (Cm, Rm, Ri, El). The actual number of parameters, however, scales with the number of compartments.

Fig. 2.

A simple compartmental model. A: a schematic drawing of a neuron with a soma and one bifurcating dendrite. B: an equivalent circuit of the same neuron assuming only passive membrane parameters. C: an equivalent circuit of the same neuron assuming passive dendrites and an active soma. D: an equivalent circuit of the same neuron assuming that both soma and dendrites are excitable. Rm, membrane resistance.

Consider now a second model (Fig. 2C) for this structure. It has the same morphological parameters but an excitable soma represented with the Hodgkin-Huxley parallel conductance model. Since the Hodgkin-Huxley model has 26 parameters, this small compartmental neuronal model with the Hodgkin-Huxley model embedded inside it has 38 parameters. As in the above example, we can make several assumptions to reduce the set of parameters. We assume that the passive properties are homogenous throughout the somato-dendritic tree. We also assume that the kinetics of the Hodgkin-Huxley model do not require any tuning, leaving us only with the maximal conductances of the sodium and potassium channels to tune. These assumptions allow us to define a set of just six parameters (GNa,soma, GK,soma, Cm, Rm, Ri, El) to make this model of a neuron with active soma and passive dendrites function reasonably well. Now, to model the dendrites as active, we embed the Hodgkin-Huxley model into each compartment of the structure (Fig. 2D). The total number of parameters thus rises to 107! With similar assumptions to those used above, we can reduce the number of those parameters requiring human tuning to ∼11. Compared with the Hodgkin-Huxley model (26 parameters), it may appear that we have achieved parameter reduction. This is not so. Similar reduction of the Hodgkin-Huxley model leaves us with just two parameters (GNa,soma, GK,soma). Thus we experience parameter inflation in both the full and reduced model.
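The parameter totals quoted for the three versions of the four-compartment neuron can be reproduced with simple bookkeeping. The sketch below assumes one reading of the counting that matches the totals in the text: 3 parameters per passive compartment, 26 per Hodgkin-Huxley compartment, and 1 per connection between compartments. The function and constant names are hypothetical.

```python
PASSIVE_PARAMS = 3   # capacitor, resistor, and passive driving force
HH_PARAMS = 26       # one full Hodgkin-Huxley compartment, as counted in the text


def count_parameters(n_passive, n_active, n_connections):
    """Total parameters for a model with the given numbers of compartments and connections."""
    return n_passive * PASSIVE_PARAMS + n_active * HH_PARAMS + n_connections


if __name__ == "__main__":
    models = [
        ("passive soma and dendrites", 4, 0),
        ("active soma, passive dendrites", 1 + 2, 1),
        ("active soma and dendrites", 0, 4),
    ]
    for label, n_passive, n_active in models:
        total = count_parameters(n_passive, n_active, n_connections=3)
        print(f"{label:32s} {total:4d}")
```

The totals grow linearly with the number of compartments, and far faster once each compartment carries its own set of channel kinetics.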

Parameter inflation, we contend, is the Achilles' heel of compartmental modeling. Even in the simple structure we considered, and even with the simplifying assumptions we made, the number of parameters rapidly inflated. Today, most compartmental models are constructed for complex cellular morphologies. They contain large numbers of voltage-gated channels, and their ion channels are not distributed along the neuronal membrane homogeneously. As a result, they contain many parameters of unknown value. And due to the limitations of modern recording techniques, we do not have experimental access to thin dendritic and axonal branches, so we are ignorant of the properties and distributions of many ion channels in the systems we are modeling. Therefore, much of the time spent making a compartmental model is dedicated to guessing parameter values, testing the model, guessing again, testing again, a cycle of hand-tuning that can loop for a depressingly long time. It should be emphasized that most of the reductions and assumptions commonly made in constructing a compartmental model are due to a lack of available experimental measurements of specific parameters, rather than ignorance of such data on the modelers' part. Anyone who has hand-tuned a compartmental model knows that after many tuning cycles one loses the mental capacity to improve the model: every change made, it comes to seem, is bad. This outcome, almost inevitable, is a direct consequence of the rapid parameter inflation. Malaise accompanies many scientific and engineering problems whose character is defined by their large numbers of parameters. It is a malaise which emerges from an evil curse, the curse of dimensionality.

Multiparameter Models and the Curse of Dimensionality

“What casts the pall over our victory celebration? It is the curse of dimensionality, a malediction that has plagued the scientist from earliest days.” (Bellman 1961)

Richard Bellman coined the phrase “curse of dimensionality” to describe how adding dimensions to a mathematical space, in effect building a multidimensional hypercube, exponentially increases the volume of that space (Bellman 1957). In our discussion, the curse is that, as the number of model parameters increases, the time required for efficiently searching parameter space increases exponentially. Furthermore, when dimensionality increases, the volume of space containing a given number of data points increases so rapidly that the samples become sparse; as a result, the number of samples needed to reach statistical significance also grows exponentially. Moreover, while it is often important to find correlated parameters while analyzing parameter space, in a high-dimensional space all parameter pairings appear sparse, and this drastically hampers standard parameter organization schemes (Bellman 1957).

To put this in perspective, consider a simple model with 10 independent parameters of unknown value, each with just 10 possible values. The number of parameter combinations to sift through is $10^{10}$. If each combination can be tested in just 1 s, a single-core processor still requires over 300 years to test each point in the space. And unfortunately, due to sparseness, local gradient descent methods are not efficient. Note that in nature parameters are rarely completely independent; dependencies among them reduce the solution space considerably. Yet many compartmental models have parameter spaces vastly larger, with close to 20 parameters of unknown value, each changing over a wide physiological range. Even conservative estimates of the possible permutations reach astronomical numbers. Moreover, it is highly likely that reducing computing time by randomly sampling such a vast space will lead to under-sampling and ill-representation of the space.
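The arithmetic behind this estimate is easy to check. The short sketch below assumes an exhaustive grid search in which every combination is tested once, serially, at a fixed cost per test; the function name and the 365.25-day year are illustrative choices.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def exhaustive_search_years(n_params, values_per_param, seconds_per_test=1.0):
    """Years needed to test every point of a full parameter grid, one test at a time."""
    n_combinations = values_per_param ** n_params
    return n_combinations * seconds_per_test / SECONDS_PER_YEAR


if __name__ == "__main__":
    for n_params in (5, 10, 20):
        years = exhaustive_search_years(n_params, values_per_param=10)
        print(f"{n_params:2d} parameters: {years:.3g} years")
```

Each additional parameter multiplies the search time by the number of values it can take, so doubling the number of parameters from 10 to 20 multiplies the time by a factor of $10^{10}$.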

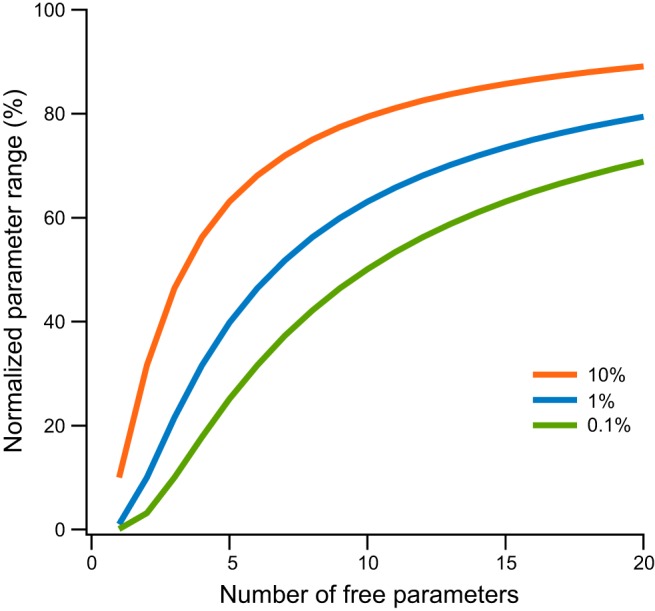

To demonstrate this problem, let us consider how much of the parameter space needs to be sampled to study the neighborhood around one specific parameter vector x̄. This may tell us in which direction we may find more optimal parameters. We assume that points in the space are uniformly distributed in an n-dimensional cube of unit length; that is, all parameters range from 0 to 1 or were normalized to this range. Now we sample some portion of the total volume of the parameter space in a neighborhood around x̄. This neighborhood is a hypercube around x̄. It has volume $V = l^n$, and thus side length $l = V^{1/n}$. Now, given a proportion of the parameter space which we are willing to sample, we may calculate the proportion of each parameter's range which must be sampled around x̄. Let us sample just 1% of the parameter space in a model with only five parameters of unknown value. Since the volume of the parameter space is 1, the volume of the neighborhood around x̄ which we must sample is V = 0.01, and the side of the hypercube is $l = 0.01^{1/5} \approx 0.4$. In other words, almost 40% of the possible values for each parameter must be sampled to understand the neighborhood around x̄. To sample just 0.1% of space in a neighborhood around x̄, we still need to sample 25% of that range for the same model. The proportion of each parameter's range that must be sampled rises rapidly toward the full range as the number of parameters of unknown value increases (Fig. 3). This is a direct consequence of the curse of dimensionality. As the number of parameters increases, we are driven to sample practically the entire parameter space. Thus, when we construct a compartmental model with several unknown parameters and start to fiddle with them, we are fighting unwittingly a mighty curse, a curse which Hodgkin and Huxley elegantly averted by avoiding parameters of unknown value through carefully reducing their data, and a curse which casts its pall over all current attempts to realistically model neuronal physiology.
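The side length of the sampled neighborhood follows directly from the relation between the volume of a hypercube and its side. The sketch below simply evaluates that relation for a unit hypercube; the function name is hypothetical.

```python
def side_length(volume_fraction, n_params):
    """Side of a hypercube occupying volume_fraction of a unit n-dimensional cube."""
    return volume_fraction ** (1.0 / n_params)


if __name__ == "__main__":
    # The two cases worked through in the text (five parameters).
    print(round(side_length(0.01, 5), 3))    # approx 0.398
    print(round(side_length(0.001, 5), 3))   # approx 0.251
    # How the required range grows with the number of unknown parameters.
    for n_params in (1, 2, 5, 10, 20):
        row = [side_length(f, n_params) for f in (0.1, 0.01, 0.001)]
        print(n_params, [round(x, 3) for x in row])
```

For any fixed fraction of the volume, the required side length approaches 1 as the number of parameters grows, which is why sampling a small neighborhood ends up covering nearly the whole range of every parameter.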

Fig. 3.

Sampling parameter neighborhood in a parameter hypercube. We assume that parameter values in the model are uniformly distributed in an n-dimensional cube of unit length. We sample some portion of the total volume of the parameter space in a neighborhood around x̄. This neighborhood is a hypercube around x̄. It has volume $V = l^{n}$, and thus side length $l = V^{1/n}$. The range of variation for each parameter in the model is plotted as a function of the number of model parameters. The lines represent the range of parameter variation one needs to sample to properly sample the volume around a specific solution x̄. The lines were calculated for sampling of 10%, 1%, and 0.1% of the total volume around x̄.
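The hypercube argument can be checked numerically. The following is a minimal sketch, assuming only the relation between side length and volume stated in the text; the function name `side_fraction` and the chosen values of n are illustrative and not part of the original analysis.

```python
def side_fraction(volume_fraction: float, n_params: int) -> float:
    """Side length l = V**(1/n) of a hypercube of volume V in n dimensions.

    With every parameter normalized to [0, 1], l is also the fraction of each
    parameter's range that has to be covered around the point of interest.
    """
    if not 0.0 < volume_fraction <= 1.0:
        raise ValueError("volume_fraction must lie in (0, 1]")
    if n_params < 1:
        raise ValueError("n_params must be at least 1")
    return volume_fraction ** (1.0 / n_params)


if __name__ == "__main__":
    # Same volume fractions as in Fig. 3: 10%, 1%, and 0.1% of the space.
    for v in (0.1, 0.01, 0.001):
        row = ", ".join(
            f"n={n}: {side_fraction(v, n):.2f}" for n in (1, 5, 10, 20, 50)
        )
        print(f"V={v}: {row}")
```

For five parameters this reproduces the roughly 40% and 25% quoted in the text, and for several dozen parameters the required range per parameter exceeds 90% even when only 1% of the volume is sampled.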

Methods in Compartmental Modeling

To demonstrate the complexity and the challenges which lie before the modeling community, we consider as a test case the quest to model the neocortical pyramidal neuron. First, though, we will review the current state of compartmental modeling software and techniques. In the Software, Constructing a compartmental model, and Tuning the model sections below, we give a fairly technical introduction to the techniques currently used for compartmental modeling. We then resume discussion of whether compartmental models can realistically model single neurons when we introduce the layer 5 pyramidal neuron in The Layer 5 Pyramidal Neuron as a Model System section below.

Software.

During the 1970s and early 1980s, researchers creating compartmental models wrote their own code using generic programming languages. By the end of the 1980s, several software packages dedicated for compartmental modeling had emerged. A brief literature search summons the names SPICE (Bunow et al. 1985; Segev et al. 1985), NEURON (Hines 1989), GENESIS (Bower and Beeman 1995), SABER (Carnevale et al. 1990), AXONTREE (Manor et al. 1991), and NODUS (De Schutter 1989).

Two packages among this early batch are actively maintained today: NEURON (Carnevale and Hines 2006; Hines and Carnevale 1997, 2000, 2001) and GENESIS (Bower and Beeman 1995). Both are environments for modeling individual neurons as well as networks of neurons. They contain tools for building and using models that are numerically sound. In our laboratory we have used NEURON for over a decade (Almog and Korngreen 2014; Brody and Korngreen 2013; Gurkiewicz and Korngreen 2007; Gurkiewicz et al. 2011; Keren et al. 2005, 2009; Lavian and Korngreen 2016; Pashut et al. 2011, 2014; Schaefer et al. 2003a, 2007). While our comments are based on our intimate acquaintance with NEURON, we have heard other computational neuroscientists make uncannily similar comments about GENESIS.

NEURON, probably for historical reasons, is constructed from two programming languages. Ion channel mechanisms (Hodgkin-Huxley-like kinetics, Markov chain kinetics, calcium-dependent kinetics, synaptic properties, and others) are programmed using templates in MOD (Carnevale and Hines 2006; Hines and Carnevale 2000). These templates are then parsed and translated into functions in C, compiled, and bound into a dynamic library. All other operations in NEURON are performed using scripts written in HOC and executed in an interpreter (Carnevale and Hines 2006). Since NEURON's advent several decades ago, computer programming has greatly advanced such that today some parts of its architecture lack standard features. The most prominent drawback in our opinion is the lack of a formal debugger and profiler. Other problems are the tendency to rely on global variables, an outdated graphics library, and the HOC language itself, which was not developed for this purpose and is simply too primitive [in an attempt to solve some of NEURON's intrinsic problems, the command line interpreter, once in HOC, is being switched to Python (Hines et al. 2009)]. Nevertheless, while many of NEURON's intrinsic problems require attention, it is among the best tools for compartmental modeling. Most importantly, it has a large and vibrant user community, mostly readable documentation (Carnevale and Hines 2006), and an extensive online database, ModelDB (Hines et al. 2004; McDougal et al. 2015; Migliore et al. 2003; Morse 2008). In recent years many investigators have voluntarily contributed models to ModelDB. It is now possible to find code relevant to almost any project in ModelDB, thus cutting time for model development.

While some compartmental modeling software packages atrophied, others have sprung up in their place. In recent years, simulation packages have increased in number and variety. We will describe only a few. More elaborate lists can be found online.1 PSICS simulates neuronal activity, taking into account stochastic properties of ion channels (Cannon et al. 2010). This is an important aspect of neuronal physiology that is difficult to implement in other software packages. The successful use of PSICS to model the physiology of a CA1 neuron suggested that stochastic gating of dendritic ion channels may account for a major fraction of the probabilistic somatic and dendritic spikes (Cannon et al. 2010). CONICAL, a completely different package, is a C++ class library focusing on compartmental modeling. In contrast to the interpreter and GUI style of other packages, it allows direct programming in C++ and thus fast execution of the compiled program. The Geppetto simulator was developed as the core modular simulation engine of the OpenWorm project (Szigeti et al. 2014), an international collaboration aiming to understand the behavior of the worm C. elegans using fundamental physiological processes. Another ambitious simulation environment is MOOSE (http://moose.ncbs.res.in), whose ancestry can be traced to GENESIS (Ray and Bhalla 2008). MOOSE spans different scales, enabling simulations of chemical signaling models, reaction-diffusion models, single-neuron models, network models, and multiscale models (Ray and Bhalla 2008). It is compatible with GENESIS, Python, and NeuroML. There are also more generic tools that have extensions allowing compartmental modeling. One of them is PyDSTool (Clewley 2012), a differential equation solver containing various toolboxes, including one allowing compartmental modeling.

Many of the recent software packages use Python as their main scripting language. Moreover, many of these simulation environments are accessible using NeuroML, an XML-based description language that provides a common data format for defining and exchanging descriptions of neuronal cell and network models and is developed as part of the Open Source Brain international collaboration (Cannon et al. 2014; Crook et al. 2007; Gleeson et al. 2007, 2010; Vella et al. 2014). NeuroML, acting as an über-language, allows the scientist to define a model using several levels of description starting at the channel level (ChannelML), through the cellular morphology (MorphML) and all the way to the network (NetworkML). The resulting XML templates, which can be converted to a variety of simulator-specific scripts, are then executed using the appropriate tool (NEURON, GENESIS, MOOSE, PSICS, etc.). In contrast, the ongoing Global Neuroinformatic Project aims at providing a framework for simulator-independent code generation in the hope of standardizing the diverse field of neuronal modeling. This heralds the transition from venerable, yet now somewhat outdated software, to more modern programming standards. In particular, it facilitates debugging, which is sorely missed in older simulation packages. Modern debugging is of the utmost importance since it will allow assessing not only the logical correctness of the code, but also model comparisons to experiments, final model validation, parameter sensitivity analysis, and many other currently unavailable tests. In conclusion, there are many simulation environments available for compartmental modeling. A user who is flexible enough to know or learn several platforms can pick the one that is best for his or her project (Gewaltig and Cannon 2014).

Constructing a compartmental model.

Constructing a compartmental model consists of three basic stages: defining the morphology and passive membrane parameters, building a kinetic model for each compartment, and inserting these kinetic models into the morphology. In the early days of compartmental modeling, all of these stages involved hand-tuning. Today hand-tuning has been reduced with the availability of online resources and raw computing power.

In the first stage, we decide on a cellular morphology, then adjust it to the requirements of the model and the limits of computing power. In one of his famous papers, Rall painstakingly reduced the morphology of an α-motoneuron to an equivalent cylinder (Rall 1959). This allowed him to analytically solve the model's equations and demonstrate the dendritic load on somatic synaptic integration. More recently, reducing the number of compartments in the model has been useful for other reasons. For a time, reduction was necessary to deal with the computing power limits of desktop computers. As the power of desktop computers has grown, this need has diminished such that today even models with several hundred compartments can be executed rapidly. Reduction is also a sensible strategy in light of the aspiration to use efficient models of single neurons as building blocks for models of neural networks. Reducing the number of compartments can greatly increase the speed of network computation (Bahl et al. 2012; Bush and Sejnowski 1993; Destexhe 2001; Jackson and Cauller 1997). In the past, due to a lack of experimentally reconstructed morphologies, abstract morphologies were used. Today this difficulty has been obviated to a great extent thanks to online databases such as NeuroMorpho (Ascoli et al. 2007; Halavi et al. 2008), which freely provide simulator-ready neuronal morphologies. That said, morphological reduction is still investigated as a tool for enhancing computational efficacy (Destexhe 2001; Wybo et al. 2015). It is important to note that morphology is not constant but rather highly variable even between neurons of the same type (Cuntz et al. 2007, 2010, 2011; Hay et al. 2011; Torben-Nielsen and De Schutter 2014).

So, while reduction remains an important strategy, it is no longer forced upon our models of single neurons by the limits of our computers. The extent to which we may reduce neuronal morphology depends upon our research questions and the level of abstraction that is reasonable and in conformance with our assumptions. Since it is realistic neuronal modeling discussed here, we henceforth discuss models which do not employ reduction.

In the second stage of model construction, we assign passive parameters to the structure. This may be done by assigning average values given in physiology textbooks, by searching the literature for experimental measures of the parameters in the salient kind of neuron, or by estimating passive parameters experimentally. Using average values for the passive parameters can be beneficial when attempting to explore or demonstrate a basic subthreshold mechanism. In this way, we rapidly generate models and quickly gain a sense of their qualitative characteristics, which can be very helpful in explaining experimental results or suggesting working hypotheses. Searching the literature, either for specific values for the neuron simulated or for population values (Tripathy et al. 2015) can provide a good estimate of passive parameters and, of no less importance, their range of change. This physiological range of the parameters can be used to set the search space for the optimization algorithm discussed later in the text.

Simple experimental protocols (e.g., whole-cell patch-clamp recording combined with intracellular dye-staining and computer-aided morphological reconstruction) can also be used to estimate the values of the passive parameters (Clements and Redman 1989; Major et al. 1994; Roth and Häusser 2001; Stuart and Spruston 1998). Typically, these measurements are made from the soma. We then posit that the passive parameters are similar throughout the dendritic tree (save for changes due to spine density). Note, though, that even this straightforward assumption should be verified for each investigated system. In layer 5 pyramidal neurons it has been shown that passive parameters change along the apical dendrite (Stuart and Spruston 1998). Once we have set the passive parameters, we can test the model's response to subthreshold stimulation. This can reveal problems in the code and verify that, at least for the subthreshold zone, the model behaves similarly to the neuron modeled.

In the third stage, we write and debug the voltage- and ligand-gated ion channels that will be inserted into the model. Most compartmental models use the Hodgkin-Huxley formalism to describe ion channel kinetics (Hodgkin and Huxley 1952d). It must be remembered, though, that this kinetic scheme is phenomenological and assumes that each activation and inactivation gate acts independently of other gates. The mechanisms underlying membrane excitation have revealed themselves over the years in much greater detail than Hodgkin and Huxley could see, and several differences from their model must be emphasized. Whereas the Hodgkin-Huxley model has no connectivity between the activating and inactivating gates of the voltage-dependent sodium channel, more recent studies have shown that the voltage-dependence of the inactivation gate is due to its coupling to the activation process, i.e., it can close only after the activation gate opens (Armstrong and Bezanilla 1977; Bezanilla and Armstrong 1977). Moreover, additional recording and analysis of single channels have shown that Markov chain models better describe ion channel kinetics (Horn and Lange 1983; Lampert and Korngreen 2014; Magleby 1992; McManus and Magleby 1989; McManus et al. 1989; Qin et al. 2000a, 2000b; Sakmann and Neher 1995; Venkataramanan and Sigworth 2002). Several studies have confronted cases where the Hodgkin-Huxley formalism proved insufficient requiring the use of Markov chain kinetics (Khaliq et al. 2006; Schmidt-Hieber and Bischofberger 2010). Functional Markov models can be extracted by curve fitting whole traces (Gurkiewicz and Korngreen 2006, 2007; Gurkiewicz et al. 2011; Lampert and Korngreen 2014; Menon et al. 2009; Milescu et al. 2005) not only from single channel records. Furthermore, an algorithm for automatically optimizing the Markov model by addition and subtraction of states has been proposed (Menon et al. 2009). 
It is, however, important to point out that Markov models are not a magic bullet. Switching the description of ion channel kinetics from Hodgkin-Huxley type models to Markov models does not solve any of the problems we discuss in this review. Moreover, even though Markov models are superior, physiological modeling of neuronal activity mostly uses the simpler Hodgkin-Huxley formalism. This is probably due to the lack of good tutorials for creating Markov models and their significantly longer execution time. In our hands Markov models executed up to four times slower than the Hodgkin-Huxley models for the same channel (unpublished observations). Interestingly, Hodgkin himself noted that the phenomenological gating scheme was adopted because he and Huxley could not provide a solution stemming from basic principles (Hodgkin 1976). Indeed, no ab initio solution is possible even today because of the complexity of the ion channel structure-function problem.
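To make the independent-gate assumption of the Hodgkin-Huxley formalism concrete, here is a minimal sketch of a single activation gate under an idealized voltage-clamp step. It assumes the classic squid-axon rate functions for the potassium n gate in the original 1952 convention (v is depolarization from rest in mV, rates in 1/ms); it is not one of the channel models discussed in this review, and the function names are illustrative.

```python
import math

G_K_MAX = 36.0  # mS/cm^2, maximal potassium conductance in the 1952 squid axon model


def alpha_n(v: float) -> float:
    """Opening rate (1/ms) of the n gate; v is depolarization from rest (mV)."""
    x = 10.0 - v
    if abs(x) < 1e-7:  # removable singularity at v = 10 mV
        return 0.1
    return 0.01 * x / (math.exp(x / 10.0) - 1.0)


def beta_n(v: float) -> float:
    """Closing rate (1/ms) of the n gate."""
    return 0.125 * math.exp(-v / 80.0)


def n_inf(v: float) -> float:
    """Steady-state open probability of one gate at voltage v."""
    a, b = alpha_n(v), beta_n(v)
    return a / (a + b)


def tau_n(v: float) -> float:
    """Relaxation time constant (ms) of one gate at voltage v."""
    return 1.0 / (alpha_n(v) + beta_n(v))


def simulate_n(v_hold: float, v_step: float, t_end_ms: float, dt_ms: float = 0.01) -> float:
    """Forward-Euler integration of dn/dt = alpha*(1 - n) - beta*n after a voltage step."""
    n = n_inf(v_hold)
    a, b = alpha_n(v_step), beta_n(v_step)
    for _ in range(int(round(t_end_ms / dt_ms))):
        n += dt_ms * (a * (1.0 - n) - b * n)
    return n


def analytic_n(v_hold: float, v_step: float, t_ms: float) -> float:
    """Closed-form exponential relaxation of the same gate, for comparison."""
    n0, n_end = n_inf(v_hold), n_inf(v_step)
    return n_end - (n_end - n0) * math.exp(-t_ms / tau_n(v_step))


if __name__ == "__main__":
    n = simulate_n(0.0, 50.0, 10.0)
    print(f"Euler n = {n:.4f}, analytic n = {analytic_n(0.0, 50.0, 10.0):.4f}")
    # Four identical, independent gates give the conductance g_K = g_max * n**4.
    print(f"g_K = {G_K_MAX * n ** 4:.2f} mS/cm^2")
```

Because each gate relaxes independently with a single voltage-dependent time constant, the whole channel is described by one first-order equation per gate type. A Markov scheme with coupled states cannot in general be collapsed this way, which is consistent with the longer execution times noted above.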

Unfortunately, the vast genetic information available to us about the sequences of most voltage-gated channel subunits provides only partial kinetic information for compartmental modeling. Expressing these subunits in various expression systems enables generating functional channels from which gating kinetics can be extracted using the voltage-clamp technique. However, using these kinetic models for modeling neuronal physiology is problematic. For example, in our favorite model system, the apical dendrite of layer 5 pyramidal neurons, studies reveal the expression of the voltage-gated potassium channel subunits Kvα1.2, Kvα1.4, Kvα2.1, Kvβ1.1, Kvβ2.1, Kvα4.2 and Kvα4.3 (Rhodes et al. 1995, 1996; Serodio and Rudy 1998; Sheng et al. 1994; Trimmer and Rhodes 2004; Tsaur et al. 1997). However, voltage-clamp experiments have detected only three voltage-gated channels in these neurons (Bekkers 2000a, 2000b; Bekkers and Delaney 2001; Korngreen and Sakmann 2000; Schaefer et al. 2007). This variety of channel subunits leads to the conclusion that native potassium channels are heteromers, containing probably both α- and β-subunits. It has been shown that subunit composition greatly affects the properties of potassium channels (Rettig et al. 1994; Ruppersberg et al. 1990; Stuhmer et al. 1989). Similar disparity between the number of expressed subunits and the final number of channels has been observed in other neuronal types and may be the rule rather than the exception. And this is just the tip of the iceberg. We note in passing the modulation of channels by signaling pathways and auxiliary subunits. Thus the molecular identity of the channel cannot be used to predict its kinetic properties. Therefore, kinetics of voltage-gated channels extracted from artificial expression systems should be used with utmost caution in compartmental models.

The most reasonable alternative to using kinetic models extracted from expression systems is to use kinetic analysis of voltage-clamp experiments performed directly on the cell under investigation. It is important to caution that in vitro brain slice preparations have their own problems, as they are often devoid of neuromodulators with known and unknown effects on channel kinetics. In many cases, code for the appropriate channel based on such experiments is available on ModelDB. Nevertheless, such code must be checked against the original study on which it was based. Not all models we downloaded from the web were faithful descriptions of the experimental data. Another alternative is to generate a kinetic model by extracting the kinetic scheme from published papers. Still another alternative, one which can only be commended, is to perform the experiments yourself, or at least have a trusted and proximate collaborator do the experiments and hand you the data. Simply extract membrane patches, employ the voltage-clamp protocols, and use the Hodgkin-Huxley formalism or Markov chain modeling to generate a kinetic model. Nothing replaces working with the experimental data. Once we have the kinetic models, we need only to insert them into the passive model according to published conductance gradients.

Tuning the model.

At this stage of model construction we have made assumptions about the morphology, electrotonic structure, channel kinetics, and channel distribution. We assume that these have been conscious assumptions based on prior experience in modeling. It is vital to dredge these assumptions into consciousness as they determine the strength of the conclusions we can draw from the model. These assumptions are expressed in many parameters of unknown values. Unfortunately, we cannot yet fill in these values experimentally: our current technology cannot fully access fine dendritic and axonal processes. To overcome this knowledge gap, there are several strategies. Different strategies are appropriate for different kinds of research questions.

One straightforward strategy is general simplification. For example, we may assume that the dendrites are passive and the soma is excitable (like in the example presented in Fig. 2C). When investigating subthreshold activity, this is a fair and powerful assumption [although in some cases the involvement of voltage-gated ion channels in subthreshold activity cannot be neglected (Devor and Yarom 2002; Jacobson et al. 2005)]. We recently applied a similar assumption with success to qualitatively investigate dendritic inhibitory synaptic transmission (Lavian and Korngreen 2016). Such simplifying assumptions can reduce the compartmental model to a form that can help accept or reject a working hypothesis for a given physiological question.

Another strategy has to be adopted when, even after making such additional assumptions, there remain important parameters of unknown values. No values having been determined experimentally, one may try to hand-tune the model until it appears to function properly. This trial-and-error process requires that we keep in mind an image of the desired behavior or functionality. Simply modify the parameters, execute the model, check the results against the ideal, and repeat until the model fits the image. Since this is done by mentally scoring the performance of the model, the process is no better than your meat-brain and its estimates, i.e., probably highly inaccurate, nearly impossible to reproduce, heuristic, almost arbitrary, erratic, and subject to sleep disturbances. Furthermore, due to the curse of dimensionality, parameter space cannot be searched exhaustively, and probably not even very well. The process is solipsistic: it's not at all clear that one can bootstrap beyond what is in essence assumed at the beginning. That said, when the model aims to address a conceptual problem or when it is very simple, hand-tuning may suffice. Otherwise, when the model is complex, or aims at realistic behavior, the curse of dimensionality damns hand-tuning to Sisyphean looping and failure.

Computer-tuning the model.

This third strategy, or set of strategies, for tuning the model merits special attention. We are living in the information age of the 21st century. Perhaps there is salvation in silicon; perhaps computer algorithms can successfully search the parameter space and find the best models. The computer must perform the same heuristic process we would perform when hand-tuning the model. The hope is that the computer does it more rapidly, more thoroughly, and more objectively. The strategy for automatic model optimization is based on multiple quantitative comparisons of possible models to experimental, or surrogate, data. This approach has three basic components: the optimization algorithm, the comparison function (a.k.a., cost or score function), and the data set to which the model is compared. Each component affects the efficiency of the search through parameter space (Bahl et al. 2012; Druckmann et al. 2008, 2011; Keren et al. 2005; LeMasson and Maex 2001). Let us consider each in turn, surveying currently available techniques.

The optimization algorithm.

The simplest automatic approach to model optimization is brute force: the computer checks all possible parameter combinations. This is extremely cumbersome, even with large supercomputers. Thus this method can only be used sparingly and with models having small numbers of parameters where rastering through the whole parameter space is possible. This brute-force tuning algorithm has been used to great effect in studying models of the stomatogastric ganglion, demonstrating that identical network or neuron activities can be obtained from disparate modeling parameters (Goldman et al. 2001; Golowasch et al. 2002; Prinz et al. 2004). It has been suggested to use grid sampling of parameter space to tune compartmental models (Prinz et al. 2003). Brute-force sampling, however, is probably not applicable to more complex models due to the almost infinite number of calculations needed to fully sample parameter space. This probably applies also to a variant of the brute-force approach in which a subset of the possible vectors is randomly selected (Marder and Taylor 2011; Taylor et al. 2006, 2009).
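The cost of brute-force sampling is easy to quantify. The sketch below is a toy illustration, not the procedure used in the cited studies: `toy_cost` is a hypothetical stand-in for running a compartmental simulation and scoring it, and the grid resolution is an arbitrary choice.

```python
import itertools
from typing import Callable, Sequence, Tuple


def grid_points(n_params: int, n_levels: int) -> int:
    """Number of simulations needed to raster a full grid: n_levels ** n_params."""
    return n_levels ** n_params


def grid_search(
    cost: Callable[[Sequence[float]], float],
    bounds: Sequence[Tuple[float, float]],
    n_levels: int,
) -> Tuple[Tuple[float, ...], float]:
    """Evaluate the cost at every grid node and return the best node and its cost."""
    if n_levels < 2:
        raise ValueError("n_levels must be at least 2")
    axes = [
        [lo + (hi - lo) * k / (n_levels - 1) for k in range(n_levels)]
        for lo, hi in bounds
    ]
    best = min(itertools.product(*axes), key=cost)
    return best, cost(best)


def toy_cost(params: Sequence[float]) -> float:
    """Hypothetical cost: squared distance from an arbitrary target vector."""
    target = (0.3, 0.7)
    return sum((p - t) ** 2 for p, t in zip(params, target))


if __name__ == "__main__":
    best, best_cost = grid_search(toy_cost, [(0.0, 1.0), (0.0, 1.0)], n_levels=11)
    print("best grid node:", best, "cost:", best_cost)
    # The grid size grows as a power of the number of parameters.
    for n in (2, 5, 10, 20):
        print(f"{n} parameters at 10 levels each: {grid_points(n, 10):.1e} simulations")
```

With two parameters the grid holds 121 nodes; with twenty parameters at only ten levels each it holds 10^20, which is why full rastering is limited to small models.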

The alternative to grid sampling or random sampling is to use an algorithm to sample the parameter space in a smarter manner. The general idea behind such an algorithm is to use a set of heuristic rules and an iterative process to search within parameter space in the vicinity of a given solution to find a better solution. There are many such search algorithms. All of them draw their core from natural phenomena that search vast parameter spaces.

Simulated annealing takes its core from crystal formation and has proven highly effective for solving discrete problems, such as the traveling salesman problem (Kirkpatrick et al. 1983). A simple simulated annealing algorithm starts with a random parameter vector representing the system in the hot liquid state. A “family” of successor solutions is generated by randomly perturbing one or several parameters in the original parameter vector. Each new family member is tested and compared using a score function (see below). Family members that scored the best generate the next families of solutions. As this iterative process continues, the algorithm adjusts a “temperature” parameter. This reduces the size of parameter perturbations according to a predetermined annealing schedule. As the iterations continue, random perturbations slowly decrease, converging on a selected solution. Simulated annealing performs well for compartmental models with a small number of parameters, but less effectively for models with many compartments (Vanier and Bower 1999). A combination of simulated annealing with the deterministic simplex optimization algorithm (Cardoso et al. 1996; Press 1992) was effective in determining the relative contribution of morphology and active conductances in models of neurons of the goldfish hindbrain (Weaver and Wearne 2008). It is worth noting that both random sampling and simulated annealing are forms of Gibbs sampling, which theoretically is guaranteed to recover the true distribution, given sufficient sampling (Casella and George 1992).
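A minimal sketch of a simulated annealing loop follows. It assumes the common single-candidate Metropolis form, in which a worse candidate is accepted with probability exp(-Δ/T), rather than the family-based variant described above. The temperature also scales the perturbation size, as in the text. The cost function, cooling rate, and starting temperature are hypothetical choices for illustration.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def toy_cost(params: Sequence[float]) -> float:
    """Hypothetical cost: squared distance from the center of the unit cube."""
    return sum((p - 0.5) ** 2 for p in params)


def anneal(
    cost: Callable[[Sequence[float]], float],
    n_params: int,
    n_iter: int = 2000,
    start_temp: float = 0.5,
    cooling: float = 0.995,
    seed: int = 0,
) -> Tuple[List[float], float, List[float]]:
    """Return the best vector found, its cost, and the best-so-far cost history."""
    rng = random.Random(seed)
    current = [rng.random() for _ in range(n_params)]
    current_cost = cost(current)
    best, best_cost = current[:], current_cost
    history = [best_cost]
    temp = start_temp
    for _ in range(n_iter):
        # Perturb every parameter; the step size shrinks with the temperature.
        candidate = [min(1.0, max(0.0, p + rng.gauss(0.0, temp))) for p in current]
        candidate_cost = cost(candidate)
        delta = candidate_cost - current_cost
        # Metropolis rule: always accept improvements, sometimes accept worse moves.
        if delta <= 0.0 or rng.random() < math.exp(-delta / temp):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = current[:], current_cost
        temp *= cooling  # geometric annealing schedule
        history.append(best_cost)
    return best, best_cost, history


if __name__ == "__main__":
    best, best_cost, history = anneal(toy_cost, n_params=5)
    print(f"initial cost {history[0]:.4f}, best cost {best_cost:.6f}")
```

Accepting occasional uphill moves while the temperature is high is what lets the search leave shallow local minima; as the temperature falls the loop behaves more and more like a local descent.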

Particle swarm optimization draws on the behavior of schools of fish and flocks of birds (Kennedy et al. 2001). The optimization method assumes that each individual in the swarm, i.e., each candidate solution, has two basic behaviors, conforming to the direction of the swarm and moving independently toward a potential food source. The optimization process begins with a swarm of random parameter vectors traveling at random “velocities.” Individuals are scored according to a cost function, and, using a linear combination of the best (lowest) scoring individuals, other individuals adjust their positions (i.e., their parameters). Although particle swarm optimization does not guarantee finding a global minimum, it does find local minima, and sometimes gets stuck in them, neglecting proximate better food sources (i.e., parameter sets) just over hills in the cost function. An important advantage of particle swarm optimization is that its convergence to a manifold of solutions allows for analysis of solution variability.
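The update rule behind this description can be sketched in a few lines. The version below assumes the standard global-best formulation with an inertia term. The coefficients, swarm size, and `toy_cost` function are hypothetical illustration values, not settings taken from the cited work.

```python
import random
from typing import Callable, List, Sequence, Tuple


def toy_cost(params: Sequence[float]) -> float:
    """Hypothetical cost: squared distance from the center of the unit cube."""
    return sum((p - 0.5) ** 2 for p in params)


def particle_swarm(
    cost: Callable[[Sequence[float]], float],
    n_params: int,
    n_particles: int = 30,
    n_iter: int = 100,
    inertia: float = 0.7,
    c_personal: float = 1.5,
    c_social: float = 1.5,
    seed: int = 0,
) -> Tuple[List[float], float, float]:
    """Return the swarm's best vector, its cost, and the best cost at initialization."""
    rng = random.Random(seed)
    pos = [[rng.random() for _ in range(n_params)] for _ in range(n_particles)]
    vel = [[rng.uniform(-0.1, 0.1) for _ in range(n_params)] for _ in range(n_particles)]
    pbest = [p[:] for p in pos]              # best position seen by each particle
    pbest_cost = [cost(p) for p in pos]
    g = min(range(n_particles), key=pbest_cost.__getitem__)
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]  # best position seen by the swarm
    initial_best = gbest_cost
    for _ in range(n_iter):
        for i in range(n_particles):
            for d in range(n_params):
                r1, r2 = rng.random(), rng.random()
                # Velocity mixes momentum, pull to own best, and pull to swarm best.
                vel[i][d] = (
                    inertia * vel[i][d]
                    + c_personal * r1 * (pbest[i][d] - pos[i][d])
                    + c_social * r2 * (gbest[d] - pos[i][d])
                )
                pos[i][d] = min(1.0, max(0.0, pos[i][d] + vel[i][d]))
            c = cost(pos[i])
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], c
                if c < gbest_cost:
                    gbest, gbest_cost = pos[i][:], c
    return gbest, gbest_cost, initial_best


if __name__ == "__main__":
    gbest, gbest_cost, initial_best = particle_swarm(toy_cost, n_params=5)
    print(f"best cost at start {initial_best:.4f}, after search {gbest_cost:.6f}")
```

The social term is also the source of the weakness noted above: once the whole swarm is drawn to one basin, nothing in the update rule pushes particles over a nearby ridge in the cost function.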

Genetic algorithms contain operators from both genetics and evolutionary biology (Mitchell 1996). Like simulated annealing and particle swarm optimization, genetic algorithms are initiated with a large population of random parameter vectors, each of which, in our context, represents the parameters of a compartmental model. Each vector is used to generate a simulation and is scored according to how well that simulation resembles the target data set. The first operator applied to this primordial soup of solutions descends from the concept in evolutionary biology of “survival of the fittest.” Parameter vectors that generate simulations most similar to the target data are considered to have metaphorically good sets of genes and are allowed to transfer their “genetic material” to the next generation, while vectors generating simulations that do not measure up against this survival condition are taken to have bad sets of genes and are not allowed to reproduce. The individuals with good scores are then paired and “mated” to produce a new population. During the mating process, the individuals, i.e., the parameter vectors, interchange parts of their genetic code, i.e., their values for particular parameters, using a crossover operator. This operator takes the form of randomly selecting one point along one parent parameter vector and exchanging the values from that point onwards with those of the other parent parameter vector (two-point crossover operators are also in use). At the same time, another operator is also active, a mutation operator, which, with a given probability, replaces or perturbs the value of one parameter in one individual. This next generation, then, is used to generate simulations, scored according to how well these resemble the target data set, and mated. This process is repeated for many generations. Many variants of the genetic-evolutionary algorithm have been presented in the general optimization literature. 
Of the stochastic search algorithms presented here, the genetic algorithm has emerged as the most popular for optimizing compartmental neuronal models (Almog and Korngreen 2014; Bahl et al. 2012; Druckmann et al. 2007, 2008, 2011; Gerken et al. 2005; Gurkiewicz and Korngreen 2007; Gurkiewicz et al. 2011; Hay et al. 2011; Keren et al. 2005, 2009; Tabak and Moore 1998; Tabak et al. 2000; Vanier and Bower 1999).
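The three operators described above (selection, one-point crossover, and mutation) can be sketched as follows. This is a toy illustration under stated assumptions and not the implementation used in any of the cited studies: `toy_cost` is hypothetical, the population settings are arbitrary, and the single best individual is copied unchanged into each new generation (elitism), which the text does not mention.

```python
import random
from typing import Callable, List, Sequence, Tuple


def toy_cost(params: Sequence[float]) -> float:
    """Hypothetical cost: squared distance from the center of the unit cube."""
    return sum((p - 0.5) ** 2 for p in params)


def genetic_search(
    cost: Callable[[Sequence[float]], float],
    n_params: int,
    pop_size: int = 40,
    n_generations: int = 60,
    survivors: int = 10,
    mutation_rate: float = 0.1,
    mutation_sd: float = 0.05,
    seed: int = 0,
) -> Tuple[List[float], List[float]]:
    """Return the best vector found and the best cost recorded in each generation."""
    if n_params < 2:
        raise ValueError("one-point crossover needs at least two parameters")
    rng = random.Random(seed)
    population = [[rng.random() for _ in range(n_params)] for _ in range(pop_size)]
    best_per_generation = []
    for _ in range(n_generations):
        population.sort(key=cost)
        best_per_generation.append(cost(population[0]))
        parents = population[:survivors]   # selection: only the fittest reproduce
        children = [parents[0][:]]         # elitism (an added assumption)
        while len(children) < pop_size:
            mother, father = rng.sample(parents, 2)
            cut = rng.randrange(1, n_params)      # one-point crossover
            child = mother[:cut] + father[cut:]
            # Mutation: perturb each gene with a small probability.
            child = [
                min(1.0, max(0.0, g + rng.gauss(0.0, mutation_sd)))
                if rng.random() < mutation_rate
                else g
                for g in child
            ]
            children.append(child)
        population = children
    population.sort(key=cost)
    best_per_generation.append(cost(population[0]))
    return population[0], best_per_generation


if __name__ == "__main__":
    best, history = genetic_search(toy_cost, n_params=5)
    print(f"generation 0 best cost {history[0]:.4f}, final best cost {history[-1]:.6f}")
```

In a real optimization each call to the cost function is a full compartmental simulation, so the number of cost evaluations (population size times generations) dominates the run time.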

These three stochastic optimization algorithms, as well as others we have not discussed here, attempt to reduce computational load while still sampling parameter space broadly. In other words, they aim to overcome, at least partially, the curse of dimensionality, by searching parameter space in a systematic yet heuristic manner. Indeed, it has been rigorously proven that, given infinite computing time, all these algorithms locate the best possible solution in parameter space. Unfortunately, since infinite computing time is hard to come by, they practically never reach optimal solutions (Keren et al. 2005, 2009). Furthermore, they are not immune to the curse of dimensionality (Chen et al. 2015) and cannot be considered magic bullets for compartmental model optimization and generation of realistic models. Moreover, they demand so many iterations that they require computing power orders of magnitude beyond that available in a single desktop computer. So, to actually implement these algorithms, parallel computing must be used. Fortunately, all of the above-mentioned search algorithms can be parallelized easily using simple, embarrassingly parallel methods. There are many flavors of parallel computing machines. The most widely distributed are Linux clusters that can be constructed and configured easily, even with old computers (Hoffman and Hargrove 1999). Parallel clusters of computers are now available in almost every university, drastically reducing computing time. Many companies, such as Amazon and Google, provide time on their immense clusters at reasonable rates. Commercially available graphics processing units (GPUs), which contain many parallel mathematical processing cores, can also be used to considerably speed up computation, possibly by several orders of magnitude (John et al. 2009; Liu et al. 2009; Manavski and Valle 2008; Nageswaran et al. 2009; Pinto et al. 2009; Rossant et al.; Stone et al. 2007; Won-Ki et al. 2010). 
We have shown that parallelizing genetic algorithm optimization of ion channel kinetics (Ben-Shalom et al. 2012) or the execution of the compartmental model (Ben-Shalom et al. 2013) on a Fermi graphic computing engine from NVIDIA, using the CUDA extension of the C programming language, increased the algorithm's speed over a hundredfold. However, the use of GPUs is still on a small scale compared with Linux clusters, probably due to the nontrivial programming required to utilize these cards.

The cost function.

As we noted above, hand-tuning may be conceived as an optimization process in which the scientist mentally scores the simulations produced by different parameters, with criteria ranging from explicit to subjective. To translate this scoring process to the computer, a cost function is defined and used to quantitatively compare the output of the simulations with a target data set. As suggested by their name, these functions measure the distance between the simulation and the data and are used to score the aptness of a specific simulation relative to many other simulations. The cost functions used for optimizing compartmental models usually measure this distance using root mean squares:

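$$\text{cost} = \sqrt{\frac{\sum_{i=1}^{N}\left(\text{data}_i - \text{simulation}_i\right)^2}{N}} \qquad (8)$$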

In Eq. 8, N is the total number of points in the target data set, and data and simulation are, respectively, vectors of the experimental or surrogate data and of the simulation output, matched appropriately. We have left Eq. 8 in a general and informal form because target data sets can differ radically between different optimization studies of compartmental models, and the methods by which different cost functions compute the difference between these vectors can also differ in crucial ways.
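As a minimal sketch of the root-mean-square distance described for Eq. 8, assuming the data and the simulation have already been sampled at the same N points, the cost can be computed as follows. The function name `rms_cost` and the toy voltage values are illustrative and not taken from any of the cited studies.

```python
import numpy as np


def rms_cost(data, simulation):
    """Root-mean-square distance between target data and simulation output."""
    data = np.asarray(data, dtype=float)
    simulation = np.asarray(simulation, dtype=float)
    if data.shape != simulation.shape:
        raise ValueError("data and simulation must be sampled at the same points")
    return float(np.sqrt(np.mean((data - simulation) ** 2)))


# Toy membrane potential vectors in mV (made-up values, N = 5).
target = np.array([-70.0, -65.0, -50.0, 20.0, -60.0])
candidate = np.array([-70.0, -64.0, -52.0, 18.0, -61.0])

# Squared differences are 0, 1, 4, 4, 1, so the mean is 2 and the cost is sqrt(2).
score = rms_cost(target, candidate)
```

A lower score means the simulation lies closer to the target; a score of zero is reached only when the two vectors are identical.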

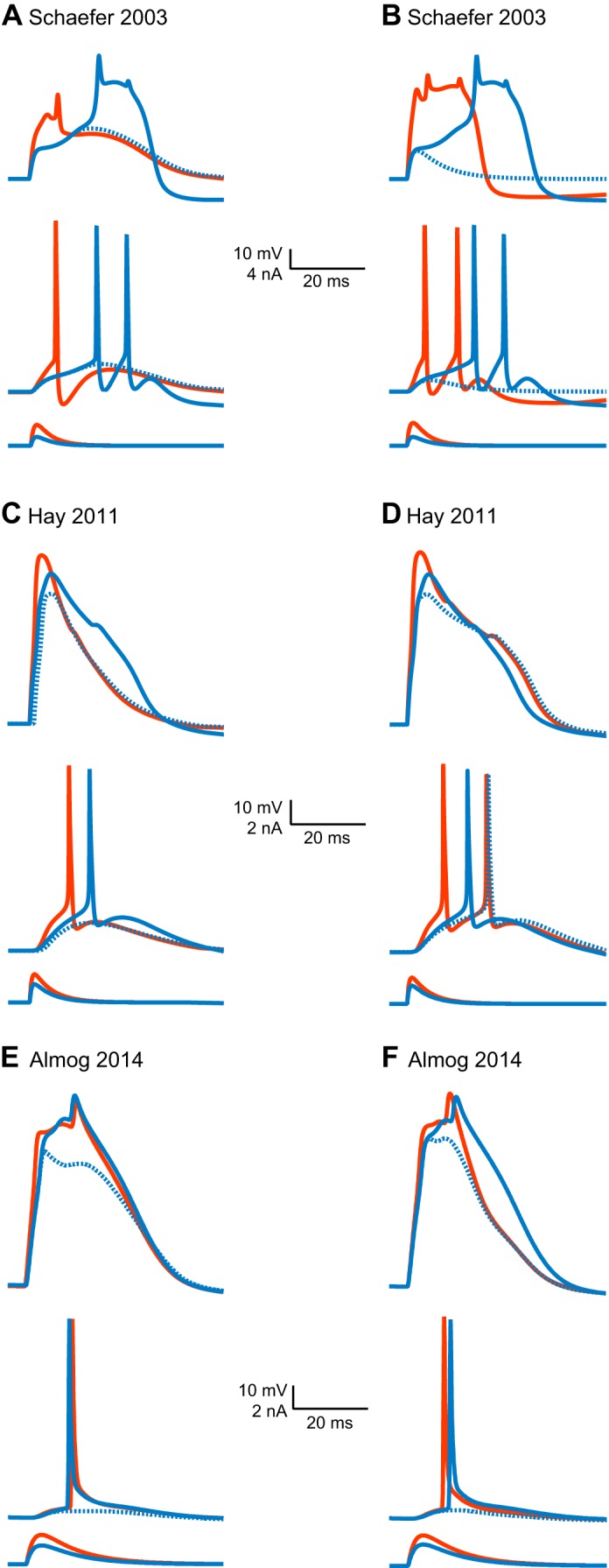

In its simplest variant, the cost function subtracts the experimental traces from the simulated membrane potential traces (Keren et al. 2005, 2009). This point-by-point subtraction is highly sensitive to the precise timing of action potentials (LeMasson and Maex 2001), so that, if the simulated action potential is only slightly too early or too late, its score will be closer to that of a poor simulation than to that of an ideal simulation. This sharp and narrow sensitivity to timing makes this direct geometrical comparison between simulation and data function poorly in genetic algorithm optimization processes (Keren et al. 2005). Several solutions have been suggested. One may represent the membrane potential in the phase plane, which solves the problem of sharpness (LeMasson and Maex 2001). It is also possible to add information about action potential timing and to construct combinations of several cost functions to help a genetic algorithm better search the parameter space (Keren et al. 2005) or to align action potentials before calculating the cost function (Weaver and Wearne 2006). Another approach is to extract average features from a group of experimentally recorded action potentials, such as amplitude, half-width, threshold, and after-hyperpolarization amplitude, and then to use these as the salient points for comparison (Druckmann et al. 2007, 2008; Hay et al. 2011). This has the advantage of using values representing a neuronal population rather than a single neuron. It also takes experimental variance into account a priori (Hay et al. 2011). Another powerful strategy is to combine several cost functions and a multiobjective algorithm (Druckmann et al. 2007, 2008). Extracting features from the target data, however, greatly reduces the information available from the target data. This can result in simulations that have, for example, the desired after-hyperpolarization amplitude, if this is a feature in the cost function, but not the correct shape of the after-hyperpolarization (Druckmann et al. 2007, 2008).
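The timing sensitivity of point-by-point subtraction, and the way a feature-based comparison sidesteps it, can be illustrated with a toy example. Everything here is an assumption made for illustration: the "action potential" is a Gaussian bump rather than a biophysical spike, and `toy_spike` and `peak_features` are hypothetical helpers, not functions from the cited studies.

```python
import numpy as np


def rms_cost(data, simulation):
    """Point-by-point root-mean-square distance between two traces."""
    diff = np.asarray(data, dtype=float) - np.asarray(simulation, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def toy_spike(t, t_peak, rest=-70.0, amplitude=100.0, width=0.3):
    """Gaussian bump standing in for an action potential (mV); not biophysical."""
    return rest + amplitude * np.exp(-0.5 * ((t - t_peak) / width) ** 2)


def peak_features(t, v):
    """Two simple features: peak height above the first sample, and peak time."""
    i = int(np.argmax(v))
    return {"amplitude": float(v[i] - v[0]), "peak_time": float(t[i])}


t = np.arange(0.0, 20.0, 0.01)            # time in ms
target = toy_spike(t, t_peak=10.0)        # "experimental" trace
shifted = toy_spike(t, t_peak=12.0)       # identical spike, 2 ms late
silent = np.full_like(t, -70.0)           # no spike at all

# Point-by-point comparison: the late spike is penalized twice, once for
# the missing spike at 10 ms and once for the extra spike at 12 ms.
cost_shifted = rms_cost(target, shifted)
cost_silent = rms_cost(target, silent)

# Feature-based comparison: the amplitudes agree; only the timing differs.
f_target = peak_features(t, target)
f_shifted = peak_features(t, shifted)
```

In this toy case the correctly shaped but late spike scores worse than a trace with no spike, whereas the extracted amplitudes are essentially identical. The feature comparison, however, says nothing about the shape of the waveform, which is the loss of information noted above.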

The cost function can be made to play another important role in the optimization algorithm, namely, as a measure of its progress (Bahl et al. 2012; Ben-Shalom et al. 2013; Druckmann et al. 2007, 2008; Keren et al. 2005). Tracking the score in this way can reveal convergence that is either too fast or too slow. Extremely fast convergence, with the score rapidly dropping within a few generations and then leveling off (Keren et al. 2005), is less likely the result of an extremely efficient search than it is an indication that the parameter space was inadequately sampled. Conversely, extremely slow convergence can indicate that the algorithm became stuck in a local minimum that is not low enough for the search to terminate. In several studies, the score function displayed an initial moderate decay followed by a slow one (Achard and De Schutter 2006; Gurkiewicz and Korngreen 2007; Keren et al. 2005; Vanier and Bower 1999). As we have shown elsewhere, this problem can be solved by greatly increasing the size of the population in a genetic algorithm (Ben-Shalom et al. 2012).

In any case, the multidimensional surface defined by the cost function can be very complex (Achard and De Schutter 2006; Bhalla and Bower 1993; Goldman et al. 2001; Golowasch et al. 2002; Taylor et al. 2006, 2009), including, for example, distinct regions with similar scores for similarly behaving simulations. Although it is nice in some sense that very different sets of parameters may yield reasonable behavior, such results can mislead us altogether, if we interpret parameter values as biological realities. So we must carefully analyze our cost functions and their sensitivity to perturbation of parameter values (Achard and De Schutter 2006; Bhalla and Bower 1993). In many cases, setting up the cost function properly is far from trivial and requires in-depth understanding of the goal of the optimization.

The target data set.

The third foundation of automatic model optimization is the target data set used by the algorithm. For obvious reasons, the selected target data set profoundly affects the output of the cost function. For all automatic compartmental model optimization to date, the target data sets have comprised membrane potential recordings or features extracted from such recordings (Bahl et al. 2012; Druckmann et al. 2007, 2008, 2011; Gerken et al. 2005; Hay et al. 2011; Keren et al. 2009; Vanier and Bower 1999; Weaver and Wearne 2008). It is worth noting that simulated data sets have occasionally been used as surrogate data to test the performance of optimization algorithms or specific features of the optimization process (Druckmann et al. 2008; Keren et al. 2005, 2009). Moreover, several studies have been dedicated to determining features of the data sets optimal for optimization algorithm searches of parameter space (Druckmann et al. 2011; Gurkiewicz and Korngreen 2007; Huys et al. 2006; Huys and Paninski 2009; Keren et al. 2005). Our laboratory has previously shown that to optimize a compartmental model containing dendritic and axonal conductance gradients a data set including recordings from the axon, soma, and dendrites is required (Keren et al. 2005). These studies suggest a simple rule of thumb: the larger and richer in features the target data set, the better.

Existing methods for fitting a curve over a large domain are highly sensitive to the size and diversity of the data set (Motulsky and Christopoulos 2004). Attempting such global curve fitting requires, in particular, that our data set contain traces sensitive to changes in all the parameters undergoing optimization. Otherwise, no matter how clever the optimization algorithm, a change in a parameter may alter the model's simulations without registering in the comparison with the target data: the simulations' scores do not differ, and the parameter is left free and unconstrained. It falls through a crack in the target data set and escapes optimization. In control theory, when a parameter cannot be estimated using a given data set, it is said to be unobservable. Varying the value of such a parameter over a large interval and demonstrating that model behavior does not change significantly can serve as a test for the parameter's unobservability.
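The unobservability test described above can be sketched with a toy model. The model, its parameter names (`g_leak`, `g_dend`), and all numerical values are hypothetical and chosen only so that one parameter influences the dendritic trace alone; this is a sketch of the scanning procedure, not a compartmental simulation.

```python
import numpy as np


def toy_model(t, g_leak, g_dend, include_dendrite):
    """Hypothetical model: g_leak shapes the somatic trace, g_dend the dendritic one."""
    soma = -70.0 + 10.0 * (1.0 - np.exp(-g_leak * t))
    if not include_dendrite:
        return soma
    dend = -70.0 + 10.0 * (1.0 - np.exp(-g_dend * t))
    return np.concatenate([soma, dend])


def cost_spread(param_name, values, base_params, target, t, include_dendrite):
    """Scan one parameter over `values` with the others fixed.

    Returns max(cost) - min(cost). A spread of zero means the target
    data set cannot constrain the scanned parameter.
    """
    costs = []
    for v in values:
        params = dict(base_params, **{param_name: v})
        sim = toy_model(t, include_dendrite=include_dendrite, **params)
        costs.append(np.sqrt(np.mean((target - sim) ** 2)))
    return float(max(costs) - min(costs))


t = np.linspace(0.0, 50.0, 501)
true_params = {"g_leak": 0.1, "g_dend": 0.3}
scan = np.logspace(-2, 1, 20)  # three orders of magnitude

# Target data set A: somatic recording only.
soma_only = toy_model(t, include_dendrite=False, **true_params)
spread_leak_soma = cost_spread("g_leak", scan, true_params, soma_only, t, False)
spread_dend_soma = cost_spread("g_dend", scan, true_params, soma_only, t, False)

# Target data set B: somatic and dendritic recordings.
full = toy_model(t, include_dendrite=True, **true_params)
spread_dend_full = cost_spread("g_dend", scan, true_params, full, t, True)
```

With the somatic-only data set, scanning `g_dend` leaves the cost unchanged, so the parameter is unobservable; adding the dendritic trace to the target data set makes the same scan change the cost, and the parameter becomes constrained.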

The Layer 5 Pyramidal Neuron as a Model System

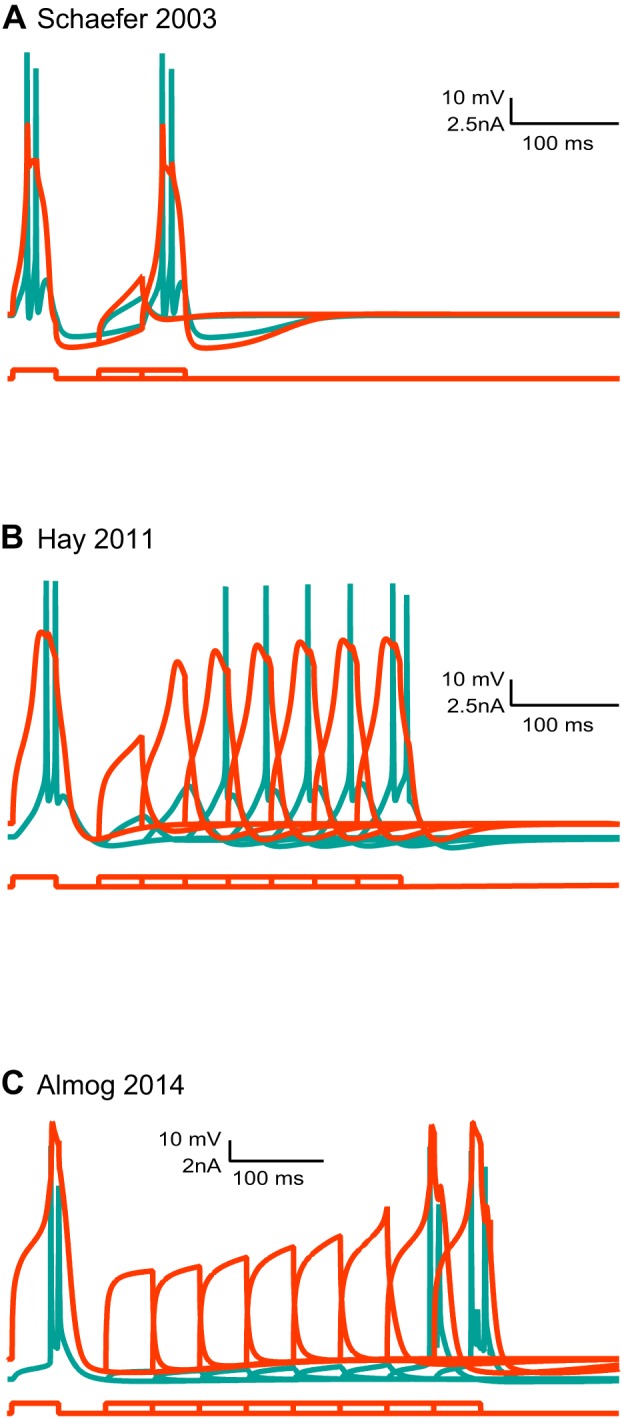

There are many approaches to optimizing compartmental models. This makes it difficult to compare models and to assess their success in modeling neurons realistically. To avoid comparing apples and oranges, we designed several numerical challenges, focused on models of layer 5 neocortical pyramidal neurons, and applied our challenges to publicly available models. The challenges required that the model reproduce an experimentally observed behavior of layer 5 pyramidal neurons. The models were not explicitly tuned to generate these behaviors at their time of creation.

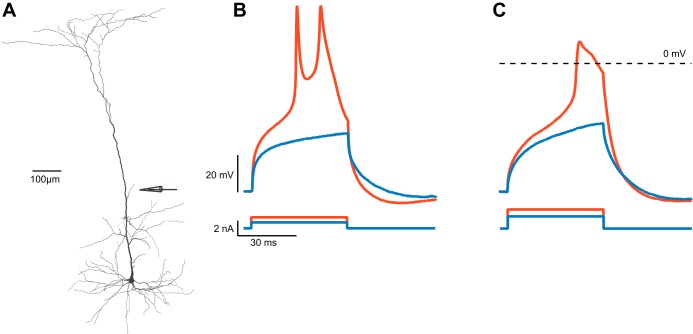

Layer 5 neocortical pyramidal neurons are one of the primary output cells from the cortex; the main axonal stem projects to intrahemispheric, interhemispheric, and subcortical targets, including the spinal cord, pons, medulla, tectum, thalamus and the striatum (Feldman 1984). Efforts to record from these neurons have achieved noteworthy successes. Regenerative calcium spikes have been recorded from the apical dendrite of layer 5 pyramidal neurons (Amitai et al. 1993; Larkum et al. 1999a, 1999b, 2001; Schiller et al. 1997; Zhu 2000). And today a number of important phenomena have been revealed. When back-propagating action potentials coincide with distal synaptic inputs, dendritic calcium spikes are generated, and this in turn generates bursts of action potentials at the soma, a process nicknamed “BAC firing” (Larkum et al. 1999b). Under some conditions, calcium spikes can be isolated in the dendrites, whereas under others, the spikes can spread to the soma (Larkum et al. 1999a; Schiller et al. 1997). Action potential bursts can be triggered by the proximal apical dendrite as well as by the distal apical dendrite (Larkum et al. 2001; Williams and Stuart 1999). Depolarization of the proximal apical dendrite facilitates the forward propagation of dendritic sodium and calcium spikes (Larkum et al. 2001). Thin tuft and basal dendrites preferentially initiate local N-methyl-d-aspartate (NMDA) and weak sodium spikes (Larkum et al. 2009; Nevian et al. 2007; Polsky et al. 2004; Schiller et al. 2000), whereas the main trunk of the apical dendrite is dominated by calcium spikes (Larkum et al. 1999b; Schiller et al. 1997). NMDA spikes have been shown to confer increased computational abilities to basal and tuft dendrites, allowing clustered synaptic inputs to define a local region of nonlinear synaptic integration (Larkum et al. 2009; Nevian et al. 2007; Polsky et al. 2004; Schiller et al. 2000). 
Thus the dendritic tree of layer 5 pyramidal neurons may be viewed as a multilayer, constantly reconfiguring computing device (Poirazi et al. 2003a, 2003b; Poirazi and Mel 2001). Over the years, many studies have attempted to simulate the complex physiology of layer 5 pyramidal neurons. Rather than review them all, we will draw a short time line of the past 20 years, taking three models as test cases.

The first model to which we direct our attention is the Mainen and Sejnowski (1996) model. It was among the first efforts to model (Rapp et al. 1996) layer 5 pyramidal neurons following the discovery of the back-propagating action potential (Stuart and Hausser 1994; Stuart and Sakmann 1994). The Mainen and Sejnowski (1996) model is a fully developed, hand-tuned compartmental model containing a real morphology of a cortical neuron and several voltage-gated channels. The morphology has about 400 compartments, each with several voltage-gated channels. This brings the number of differential equations defining the compartmental model to ∼3,000 and the total number of parameters to over 10,000. Using homogenizing assumptions, the model was hand-tuned using about 15 parameters (Mainen and Sejnowski 1996). It faithfully reproduced the back-propagation of the action potential by introducing a low density of voltage-gated sodium channels in the apical dendrite (Mainen and Sejnowski 1996). While the model does contain a voltage-gated calcium channel in the apical dendrite, it does not generate dendritic calcium spikes. Instead, back-propagating action potentials can generate calcium electrogenesis in the distal apical dendrite and lead to a burst of action potentials. An important feature of this model, one that many later models adopted, is the stylized axon initial segment replacing the natural one. This model of the spike initiation zone is highly robust, functioning well in a variety of compartmental models. The weakness of this model is the high density of voltage-gated sodium channels in the axon initial segment; this introduces an unrealistically large local shunt.

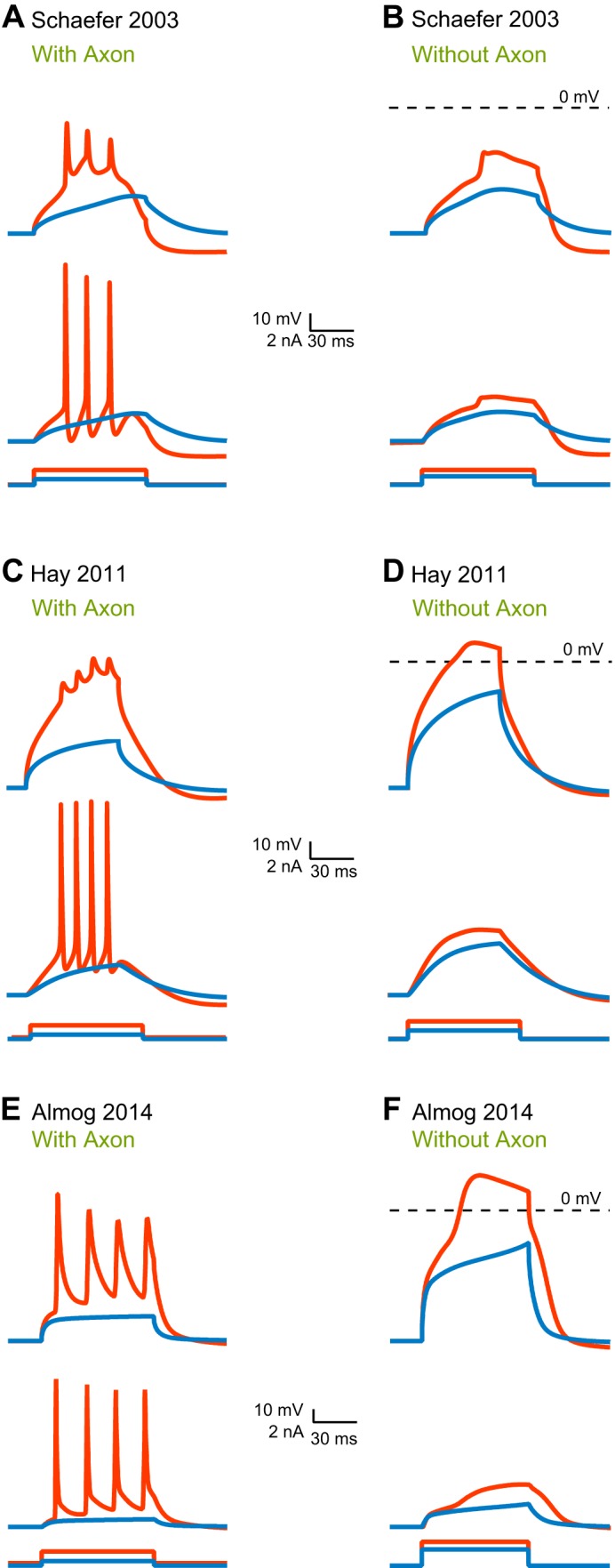

The Mainen and Sejnowski (1996) model was modified a few years later by Schaefer et al. (2003b). Like the Mainen and Sejnowski (1996) model, the Schaefer et al. (2003b) model (henceforth: the Schaefer model) uses a fully reconstructed morphology and inflates to over 10,000 parameters which are then reduced using standard assumptions to about 17 parameters of unknown value. It adds an ionic mechanism for dendritic calcium spikes in the distal apical dendrite (Schaefer et al. 2003b). Together with other models (Acker and Antic 2009; Grewe et al. 2010; Hay et al. 2013; Vetter et al. 2001), the Schaefer model has been instrumental in examining the relationship between morphology, excitation, and coupling of somatic and dendritic action potential initiation sites. By adding a hot-spot of T-type voltage-gated calcium channels in the distal apical dendrite, this model acquires a local low-threshold site for the initiation of dendritic spikes. It was also able to reproduce BAC-firing and to illustrate how variability in BAC-firing is linked to morphology and the number of oblique dendrites (Schaefer et al. 2003b). Similar to the Mainen and Sejnowski (1996) model, the Schaefer model was hand-tuned to phenomenologically reproduce a specific neuronal physiology. There are other hand-tuned models describing dendritic excitability of layer 5 pyramidal neurons (Larkum et al. 2009; Rapp et al. 1996). Instead of discussing all of them, we will proceed to discuss computer-tuned models.

The second contender in our computational challenge is the Hay et al. (2011) model (henceforth: the Hay model), a computer-optimized model designed to capture a wide range of dendritic and somatic properties. The Hay model was tuned using a multiobjective genetic algorithm (Deb et al. 2002), and the target data set comprised features extracted from somatic and dendritic membrane potential recordings (Hay et al. 2011). Similar to the Mainen and Sejnowski (1996) model, it uses a fully reconstructed morphology, and, similarly, it inflates to over 10,000 parameters, which were then reduced using standard assumptions to 22 parameters of unknown value. In their elegant modeling study, Hay et al. (2011) smartly extracted features of somatic and dendritic spikes from several data sets and, in the fitting process, took the variability of each feature into account. They were thus able to generate a family of possible models for layer 5 pyramidal neurons falling within the same range of physiological activity. In this model, the conductance densities of the high- and low-voltage activated calcium channels were elevated in the distal apical dendrite. In contrast with the Mainen and Sejnowski (1996) model and the Schaefer model, the Hay model did not model an initiation zone in detail; rather, it discarded the axon and loaded the soma with a high density of potassium and sodium conductances. The Hay model thus departs from physiology and generates action potentials at the soma. It faithfully reproduced BAC-firing and the somatic response to current injection (Hay et al. 2011).