Keywords: hippocampus, neural control, real-time decoding, replay, state-space models

Abstract

Sharp-wave ripple (SWR) events in the hippocampus replay millisecond-timescale patterns of place cell activity related to the past experience of an animal. Interrupting SWR events leads to learning and memory impairments, but how the specific patterns of place cell spiking seen during SWRs contribute to learning and memory remains unclear. A deeper understanding of this issue will require the ability to manipulate SWR events based on their content. Accurate real-time decoding of SWR replay events requires new algorithms that are able to estimate replay content and the associated uncertainty, along with software and hardware that can execute these algorithms for biological interventions on a millisecond timescale. Here we develop an efficient estimation algorithm to categorize the content of replay from multiunit spiking activity. Specifically, we apply real-time decoding methods to each SWR event and then compute the posterior probability of the replay feature. We illustrate this approach by classifying SWR events from data recorded in the hippocampus of a rat performing a spatial memory task into four categories: whether they represent outbound or inbound trajectories and whether the activity is replayed forward or backward in time. We show that our algorithm can classify the majority of SWR events in a recording epoch within 20 ms of the replay onset with high certainty, which makes the algorithm suitable for a real-time implementation with short latencies to incorporate into content-based feedback experiments.

NEW & NOTEWORTHY

We develop a novel clusterless decoding algorithm that can categorize hippocampal sharp-wave ripple replay events in real time. This work provides an approach for experimental neurophysiologists to identify the content of replay events using multiunit spiking activity, and to interrupt individual events based on their content, in a real-time, closed-loop experimental setting.

Recent developments in closed-loop experiments allow for causal manipulation of neural events in real time (Grosenick et al. 2015). Here we present a new statistical paradigm for closed-loop experimental investigation of memory and learning. We develop new methods that allow sequences of spiking events that occur during memory replay to be manipulated in a feature-specific manner. Specifically, we apply real-time decoding methods to replay events and then infer a replay feature by computing its posterior probability at each time step.

Because of the profound role memory plays in our lives, the study of the neural mechanisms that underlie memory processes, such as memory encoding, consolidation, and retrieval, has attracted substantial interest among neuroscientists. Memory is thought to depend on the reactivation of patterns of activity related to previous experience, and efforts to identify these patterns have focused, in part, on hippocampal sharp-wave ripple (SWR) events (Buzsaki 2015; Carr et al. 2011). As an animal moves through its environment, individual hippocampal neurons (place cells) are active when the animal occupies specific regions of space, known as the cells' place fields (Best et al. 2001; O'Keefe and Dostrovsky 1971). A traversal through a given environment results in the sequential activation of a series of these place cells. Subsequently, during slow movement, immobility, and sleep, time-compressed versions of these sequences can be replayed during SWRs (Diba and Buzsaki 2007; Foster and Wilson 2006; Ji and Wilson 2007; Lee and Wilson 2002; Redish 1999; Redish and Touretzky 1998). Sleep SWRs were originally proposed to support memory consolidation and related processes (Buzsaki 1989), and disrupting SWRs during sleep leads to subsequent performance deficits in a spatial memory task (Ego-Stengel and Wilson 2010; Girardeau et al. 2009).

Interestingly, replay during SWRs also occurs in the awake state, most prevalently during periods of slow movement and immobility (Cheng and Frank 2008; Csicsvari et al. 2007; Diba and Buzsaki 2007; Foster and Wilson 2006; Karlsson and Frank 2009; Pfeiffer and Foster 2013; Silva et al. 2015; Singer and Frank 2009; Wu and Foster 2014). Interruption of these awake SWRs leads to a specific learning and performance deficit (Jadhav et al. 2012). This result helped establish the importance of awake SWRs but does not establish that the specific pattern of spiking activity within SWRs contributes to memory processes. Individual SWRs can contain spiking patterns that replay different sequences, and, furthermore, these sequences can be replayed in both a temporally forward and a reverse order during awake SWRs (Ambrose et al. 2015; Csicsvari et al. 2007; Diba and Buzsaki 2007; Foster and Wilson 2006; Wu and Foster 2014). In particular, reverse replay is hypothesized to be an integral mechanism for learning about recent events, and insights into this type of replay could be critical to understanding how animals learn from experience (Foster and Wilson 2006; Pfeiffer and Foster 2013).

Therefore, a deeper examination of how replayed information contributes to learning and decision making requires the ability to manipulate SWR events based on their content. This presents a fundamental analysis challenge: to decode and characterize replay content in real time.

However, studies of SWR events have so far been largely limited to analyses of spiking data that were sorted offline. Currently, real-time spike-sorting algorithms tend not to be sufficiently accurate to allow for closed-loop interventions. Additionally, methods for categorizing replay content typically require waiting for the completion of a replay event, for example, to compute a correlation measure to study temporal replay order (Foster and Wilson 2006; Wu and Foster 2014). The lack of dynamic estimation methods makes it difficult to further probe any causal relationship between a specific replay sequence and memory function that may happen downstream of the replay event. Among the currently used methods, there is no consensus as to which classifies the replay content most accurately and reliably.

One approach that has been successful in decoding dynamic content from neural data is state-space modeling (Brown et al. 1998; Eden et al. 2004; Huang et al. 2009; Kemere et al. 2008; Koyama et al. 2010; Paninski et al. 2010; Smith and Brown 2003; Srinivasan et al. 2006; Truccolo et al. 2005; Wu et al. 2009). Here we incorporate state-space methods to develop a dynamic algorithm to determine whether the content of a replay event represents a specific sequence, which will then make it possible to interrupt events based on the type of spatial trajectory they represent.

Previously, we and others have developed decoding methods, based on the theory of marked point processes, that do not require multiunit spike waveforms to be sorted into single units (Chen et al. 2012; Deng et al. 2015; Kloosterman et al. 2014). Here we extend the state of our marked point process filter to include a discrete state variable that categorizes the content of hippocampal replays in real time. By including such a “decision state,” we make use of the underlying state process in a content-specific way by selectively including different sources of information. In particular, this allows us to incorporate into our model three fundamental features of the information content of individual replay events: the location where the trajectory begins, whether the sequence of locations represents a trajectory proceeding toward or away from the animal's current location, and whether the spiking pattern reflects place-field structure for a specific direction of movement.

In this report, we illustrate our approach by decoding experimental data recorded in the hippocampus of a rat performing a spatial memory task. We apply the discrete decision state filter to classify the replay content into four categories based on the direction and temporal order of the replay trajectory and compute our confidence about the classification. We show that our algorithm can classify the majority of replay events in a 15-min recording epoch within 20 ms of the replay onset with 80–95% certainty, suitable for future incorporation into real-time, content-based feedback experiments.

ALGORITHM DEVELOPMENT

Previous studies have used state-space models to track the actual position of a rat using spiking activity in hippocampus (Brown et al. 1998; Huang et al. 2009; Koyama et al. 2010). In those studies, spiking activity was first modeled as a point process, which can be fully characterized by its conditional intensity function (Daley and Vere-Jones 2003). The conditional intensity model then relates the firing activity to a state process that represents the animal's actual position.

In this section, we generalize this method in two ways. First, we extend the conditional intensity function to allow for multiunit activity. Second, we relate the firing activity to a new state process that represents features of a replay event rather than an actual movement trajectory. Here we posit that the dynamics of the replayed trajectory are similar to those of the actual movement, although with an accelerated time course. For this reason, we call this new state the “replay position.” We might loosely interpret this new state process as a kind of mental exploration or “mental time-travel” (Hasselmo 2009; Tulving and Markowitsch 1998). However, it is important to note that this new state does not necessarily represent any real or imagined position.

The goal of the proposed algorithm is to compute the posterior distribution of the new decision state process conditioned on the set of observations up until the current time, which allows for real-time categorization of replay content with a measure of uncertainty.

Discrete state point process filters.

Any point process representing neural spiking can be fully characterized by its conditional intensity function (Daley and Vere-Jones 2003). A conditional intensity function describes the instantaneous rate of spiking given the previous spiking history; multiplied by a small interval Δt, it approximates the probability of observing a spike in that interval. By relating the conditional intensity to specific biological and behavioral signals, we can specify a spike train encoding model.

The conditional intensity also generalizes to the marked case, in which a random vector, termed a mark, is attached to each point (Cox and Isham 1980). Here we use the mark to characterize features of the spike waveform. In the case of tetrode recordings, for example, the mark could be a length-four vector of the maximum amplitudes on each of the four electrodes at every spike time.
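As a concrete illustration of such a mark, the minimal sketch below computes it for one tetrode spike. It assumes each detected spike is stored as four lists of voltage samples (one per channel) and that "maximum amplitude" means the largest sample on each channel; the function name `spike_mark` is hypothetical and not part of any published implementation.

```python
from typing import List, Sequence


def spike_mark(waveform: Sequence[Sequence[float]]) -> List[float]:
    """Return the mark of one tetrode spike: the maximum amplitude on each channel.

    `waveform` is assumed to hold 4 channels, each a list of voltage samples
    (e.g., in microvolts) cut out around the detected spike time.
    """
    if len(waveform) != 4:
        raise ValueError("expected 4 channels for a tetrode spike")
    return [max(channel) for channel in waveform]


snippet = [
    [0.0, 35.0, 120.0, 40.0],  # channel 1
    [0.0, 20.0, 60.0, 10.0],   # channel 2
    [0.0, -5.0, 15.0, 5.0],    # channel 3
    [0.0, 50.0, 90.0, 30.0],   # channel 4
]
print(spike_mark(snippet))  # [120.0, 60.0, 15.0, 90.0]
```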

A marked point process is completely defined by its joint-mark intensity

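$$\lambda(t, \vec{m} \mid H_t) = \lim_{\Delta t \to 0} \frac{\Pr\big(N(t + \Delta t) - N(t) = 1,\ \vec{m} \in (\vec{m}, \vec{m} + \Delta\vec{m}] \mid H_t\big)}{\Delta t\, \Delta\vec{m}} \tag{1}$$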

where $H_t$ is the history of the spiking activity up to time t. $\lambda(t, \vec{m} \mid H_t)$ represents a joint stochastic model for the marks as well as the arrivals of the point process. The joint-mark intensity characterizes the instantaneous probability of observing a spike with mark $\vec{m}$ at time t as a function of any factors that may influence spiking activity.

Traditionally, we posit that the spiking activity depends on some underlying internal state variable x(t), such as an animal's location in space, that varies across time. Here, to allow for real-time decision making based on the information content of replay events, we extend the state variable from a single continuous state x(t) to a joint state variable {x(t), I}, where x(t) remains an underlying dynamic, continuous state process and I is a discrete, fixed state variable. The discrete variable I is constant in time but influences the dynamics of the continuous state x(t). For example, this discrete state could be a binary variable that describes whether a replay event is forward or reverse. In closed-loop experiments, we might make a decision to intervene based on an estimated value of this discrete state. For that reason, we use the term “decision state” to represent this variable. The goal of this subsection, then, is to derive an algorithm that computes the posterior probability of this decision state conditioned on the set of observations up until the current time, $\Pr(I \mid \Delta N(t), \vec{m}(t), H_t)$, where $\{\Delta N(t), \vec{m}(t)\}$ is the spiking activity with marks at time t and $H_t$ is the history of the spiking activity up to time t.

A multiunit encoding model is constructed by writing the joint-mark intensity as a function of all the variables that influence the neural spiking,

$$\lambda(t, \vec{m} \mid x(t), I, H_t) = g\big(t, \vec{m}; x(t), I, H_t\big) \tag{2}$$

Here g is the function that describes the instantaneous probability of observing a spike with a waveform that gives mark $\vec{m}$ at time t based on the joint state value. This joint conditional intensity models spiking activity across a population of neurons.

The conditional intensity of observing any spikes in this population can be computed by integrating the joint-mark intensity over the entire space of possible marks, $\mathcal{M}$,

$$\Lambda(t \mid H_t) = \int_{\mathcal{M}} \lambda(t, \vec{m} \mid H_t)\, d\vec{m} \tag{3}$$

Λ(t|Ht) can be understood as the conventional conditional intensity of a temporal point process that includes the spiking of all the neurons in the population. For a marked point process, Λ(t|Ht) is called the intensity of the ground process (Daley and Vere-Jones 2003). The mark space can be of any dimension.

A joint state point process filter applies the theory of state-space adaptive filters (Haykin 1996) to compute, at each time point, the posterior distribution of the joint state variable given the observed marked point process. To derive the filter in discrete time, we first partition the observation interval [0, T] into $\{t_k : 0 \le t_1 < \cdots < t_N \le T\}$ and let $\Delta_k = t_k - t_{k-1}$. The posterior density for the joint state variable {x(t), I} can then be derived using Bayes's rule,

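$$p(x_k, I \mid \Delta N_k, \vec{m}_k, H_k) = \frac{p(x_k, I \mid H_k)\, p(\Delta N_k, \vec{m}_k \mid x_k, I, H_k)}{p(\Delta N_k, \vec{m}_k \mid H_k)} \tag{4}$$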

where $x_k = x(t_k)$ is the continuous state variable at time $t_k$, $\Delta N_k$ is the number of spikes observed in the interval $(t_{k-1}, t_k]$, and $H_k$ is the history of spiking activity up to time $t_{k-1}$. $\vec{m}_k$ represents the collection of mark vectors $\vec{m}_k^i$, $i = 1, \ldots, \Delta N_k$, observed in the interval $(t_{k-1}, t_k]$.

The first term in the numerator on the right-hand side of Eq. 4, p(xk, I|Hk), is called the one-step prediction density for the joint state variable and is determined by the Chapman-Kolmogorov equation as

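$$p(x_k, I \mid H_k) = \int p(x_k \mid x_{k-1}, I)\, p(x_{k-1}, I \mid \Delta N_{k-1}, \vec{m}_{k-1}, H_{k-1})\, dx_{k-1} \tag{5}$$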

Equation 5 has two components: $p(x_k \mid x_{k-1}, I)$, which is given by a state transition model under the Markovian assumption conditioned on the discrete decision state, and $p(x_{k-1}, I \mid \Delta N_{k-1}, \vec{m}_{k-1}, H_{k-1})$, which is the posterior density from the last iteration step. We multiply the state transition density by the posterior distribution of the joint state variable at the previous time step $t_{k-1}$ and numerically integrate the product over all possible values of the continuous state at the previous time, $x_{k-1}$. The resulting integral is the one-step prediction density at the current time $t_k$.
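On a discretized position grid, the integral over $x_{k-1}$ in Eq. 5 becomes a sum over position bins. The sketch below illustrates this, assuming the posterior is stored as probability mass per bin for each value of I and that each transition matrix has rows that sum to 1; `one_step_prediction` is a hypothetical helper name, not the implementation used in this study.

```python
from typing import Dict, List

Matrix = List[List[float]]


def one_step_prediction(prev_posterior: Dict[str, List[float]],
                        transition: Dict[str, Matrix]) -> Dict[str, List[float]]:
    """Discretized Eq. 5, applied separately for every value of the decision state I.

    prev_posterior[i][j]: p(x_{k-1} = bin j, I = i | data up to t_{k-1})
    transition[i][j][n]:  p(x_k = bin n | x_{k-1} = bin j, I = i); each row sums to 1
    """
    predicted = {}
    for i, post in prev_posterior.items():
        T = transition[i]
        n_bins = len(post)
        # Sum over previous bins j replaces the integral over x_{k-1}
        predicted[i] = [sum(T[j][n] * post[j] for j in range(n_bins)) for n in range(n_bins)]
    return predicted


# Example: one decision state, three bins, replay drifting toward higher bins
T = [[0.5, 0.5, 0.0], [0.0, 0.5, 0.5], [0.0, 0.0, 1.0]]
print(one_step_prediction({"outbound": [1.0, 0.0, 0.0]}, {"outbound": T}))
# {'outbound': [0.5, 0.5, 0.0]}
```

Because each row of the transition matrix sums to 1, the prediction step preserves the total probability mass assigned to each value of I; only the likelihood of Eq. 6 changes the relative weights of the decision states.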

The second term in the numerator of Eq. 4, $p(\Delta N_k, \vec{m}_k \mid x_k, I, H_k)$, is the likelihood or observation distribution of the population spiking activity at the current time conditioned on the joint state and past spiking history, which can be fully characterized by the joint-mark intensity as defined in Eq. 2:

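$$p(\Delta N_k, \vec{m}_k \mid x_k, I, H_k) \propto \prod_{i=1}^{\Delta N_k} \big[\lambda(t_k, \vec{m}_k^i \mid x_k, I, H_k)\, \Delta_k\big] \exp\big[-\Delta_k \Lambda(t_k \mid H_k)\big] \tag{6}$$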

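We can interpret Eq. 6 by separating the product on the right-hand side into two terms. The term $\prod_{i=1}^{\Delta N_k} [\lambda(t_k, \vec{m}_k^i \mid x_k, I, H_k)\, \Delta_k]$ characterizes the distribution of firing $\Delta N_k$ spikes, such that the mark value of the ith spike in the interval $(t_{k-1}, t_k]$ is $\vec{m}_k^i$, where $i = 1, \ldots, \Delta N_k$. If no spike occurs, i.e., $\Delta N_k = 0$, this term equals 1. The $\exp[-\Delta_k \Lambda(t_k \mid H_k)]$ term gives the probability of not firing any other spikes in the observation interval, where $\Lambda(t_k \mid H_k) = \int_{\mathcal{M}} \lambda(t_k, \vec{m} \mid x_k, I, H_k)\, d\vec{m}$ as defined in Eq. 3.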
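Because products of many small intensities underflow quickly, a practical implementation would likely evaluate Eq. 6 in log space. The sketch below does so for one time step on a position grid, assuming the joint-mark intensity has already been evaluated at each observed mark and the ground intensity at each bin; `log_likelihood` is a hypothetical name, and the constant absorbed by the proportionality in Eq. 6 is ignored.

```python
import math
from typing import List, Sequence


def log_likelihood(spike_intensities: Sequence[Sequence[float]],
                   ground_intensity: Sequence[float],
                   dt: float) -> List[float]:
    """Log of the right-hand side of Eq. 6 at every position bin for one time step.

    spike_intensities[i][n]: lambda(t_k, m_k^i | x_k = bin n, I, H_k) for the i-th spike
                             in the bin, assumed strictly positive
    ground_intensity[n]:     Lambda(t_k | H_k) evaluated with x_k = bin n
    dt:                      bin width Delta_k in seconds
    """
    # exp[-Delta_k * Lambda] term: probability of no other spikes in the bin
    out = [-dt * g for g in ground_intensity]
    # Product over observed spikes; the loop is skipped when Delta N_k = 0 (term equals 1)
    for lam in spike_intensities:
        for n, value in enumerate(lam):
            out[n] += math.log(value * dt)
    return out


# One spike whose mark is far more likely if the replay position is in bin 0
print(log_likelihood([[100.0, 1.0]], [10.0, 20.0], dt=0.001))
```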

The observation distribution is then multiplied by the one-step prediction density to get the posterior of the joint state at the current time:

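$$p(x_k, I \mid \Delta N_k, \vec{m}_k, H_k) \propto \prod_{i=1}^{\Delta N_k} \big[\lambda(t_k, \vec{m}_k^i \mid x_k, I, H_k)\, \Delta_k\big] \exp\big[-\Delta_k \Lambda(t_k \mid H_k)\big] \int p(x_k \mid x_{k-1}, I)\, p(x_{k-1}, I \mid \Delta N_{k-1}, \vec{m}_{k-1}, H_{k-1})\, dx_{k-1} \tag{7}$$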

Note that we can drop the normalization term in the denominator of Eq. 4, $p(\Delta N_k, \vec{m}_k \mid H_k)$, because it is not a function of $\{x_k, I\}$.

Finally, because our goal is to estimate the discrete decision state, we integrate out the continuous state x(t) from the posterior of {x(t), I} in Eq. 7 to compute the posterior probability of the discrete decision state I:

$$\Pr(I \mid \Delta N_k, \vec{m}_k, H_k) = \int p(x_k, I \mid \Delta N_k, \vec{m}_k, H_k)\, dx_k \tag{8}$$

Substituting Eq. 7 into Eq. 8 yields a recursive expression for the estimation of the discrete decision state variable I:

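$$\Pr(I \mid \Delta N_k, \vec{m}_k, H_k) \propto \int \prod_{i=1}^{\Delta N_k} \big[\lambda(t_k, \vec{m}_k^i \mid x_k, I, H_k)\, \Delta_k\big] \exp\big[-\Delta_k \Lambda(t_k \mid H_k)\big] \int p(x_k \mid x_{k-1}, I)\, p(x_{k-1}, I \mid \Delta N_{k-1}, \vec{m}_{k-1}, H_{k-1})\, dx_{k-1}\, dx_k \tag{9}$$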

The solution we obtained for the joint state filter in Eq. 7 has a form similar to that of the solution derived for a marked point process filter with only a continuous state x(t) (Deng et al. 2015):

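$$p(x_k \mid \Delta N_k, \vec{m}_k, H_k) \propto \prod_{i=1}^{\Delta N_k} \big[\lambda(t_k, \vec{m}_k^i \mid x_k, H_k)\, \Delta_k\big] \exp\big[-\Delta_k \Lambda(t_k \mid H_k)\big] \int p(x_k \mid x_{k-1})\, p(x_{k-1} \mid \Delta N_{k-1}, \vec{m}_{k-1}, H_{k-1})\, dx_{k-1} \tag{10}$$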

This similarity allows for an alternative, more statistically intuitive interpretation of our new decision state filtering algorithm—a set of weighted parallel continuous state filters.

For each possible value i that the decision state I can take on, Eq. 7 represents a separate filtering algorithm with some initial condition on the joint state variable {x(t),I}:

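$$p(x_k, I = i \mid \Delta N_k, \vec{m}_k, H_k) \propto p(\Delta N_k, \vec{m}_k \mid x_k, I = i, H_k) \int p(x_k \mid x_{k-1}, I = i)\, p(x_{k-1}, I = i \mid \Delta N_{k-1}, \vec{m}_{k-1}, H_{k-1})\, dx_{k-1}, \quad \text{with } p(x_0, I = i) = p(x_0 \mid I = i)\Pr(I = i) \tag{11.1}$$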

We can therefore interpret our decision state filter solution in Eq. 9 instead as a system of equations describing d marked point process filters running in parallel, where d is the total number of values the decision state I can take on:

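$$\begin{aligned}
p(x_k, I = 1 \mid \Delta N_k, \vec{m}_k, H_k) &\propto p(\Delta N_k, \vec{m}_k \mid x_k, I = 1, H_k) \int p(x_k \mid x_{k-1}, I = 1)\, p(x_{k-1}, I = 1 \mid \Delta N_{k-1}, \vec{m}_{k-1}, H_{k-1})\, dx_{k-1}\\
&\;\;\vdots\\
p(x_k, I = d \mid \Delta N_k, \vec{m}_k, H_k) &\propto p(\Delta N_k, \vec{m}_k \mid x_k, I = d, H_k) \int p(x_k \mid x_{k-1}, I = d)\, p(x_{k-1}, I = d \mid \Delta N_{k-1}, \vec{m}_{k-1}, H_{k-1})\, dx_{k-1}
\end{aligned} \tag{11.2}$$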

The value of the posterior integrated over x for each category I = j relative to each of the others can be thought of as a weight, which describes the posterior probability for a particular value of the decision state:

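$$\Pr(I = j \mid \Delta N_k, \vec{m}_k, H_k) = \frac{\int p(x_k, I = j \mid \Delta N_k, \vec{m}_k, H_k)\, dx_k}{\sum_{i=1}^{d} \int p(x_k, I = i \mid \Delta N_k, \vec{m}_k, H_k)\, dx_k} \tag{11.3}$$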

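From Eqs. 11, we can see that the decision state filter obtains information from the following three distinct components, all of which depend on the value of the decision state I:

1) the initial condition, $p(x_0 \mid I)$;
2) the state transition model, $p(x_k \mid x_{k-1}, I)$; and
3) the observation model, $\lambda(t_k, \vec{m} \mid x_k, I, H_k)$.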

For example, we might let the continuous state, x(t), be the replay position and the discrete decision state, I, a binary indicator where I = 0 defines an outbound replay trajectory from point A to point B and I = 1 defines an inbound replay trajectory from point B to point A. Here differences in firing rate and place field structure associated with inbound and outbound motion (McNaughton et al. 1983) would inform the model and allow for estimates of the value of the decision state I.

Correspondingly, the three sources of information can be interpreted as the initial condition (where the replay trajectory starts); the state transition model (whether the replay path follows an outbound or inbound movement trajectory); and the observation model (whether the replay activity reflects spiking from inbound or outbound movements).

Thus, to estimate the decision state, we must define probability models for $p(x_0 \mid I)$, $p(x_k \mid x_{k-1}, I)$, and $\lambda(t_k, \vec{m} \mid x_k, I, H_k)$.
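To make the parallel-filter interpretation concrete, the following sketch runs Eqs. 11.1–11.3 on a discretized position grid. It is a simplified illustration, not the implementation used in this study: it assumes the three model components are supplied as a prior over position bins, a row-stochastic transition matrix, and precomputed log-likelihoods (Eq. 6) for each value of I, and all names are hypothetical.

```python
import math
from typing import Dict, List, Sequence

Matrix = List[List[float]]


def decision_state_filter(prior_x0: Dict[str, List[float]],
                          prior_I: Dict[str, float],
                          transition: Dict[str, Matrix],
                          log_lik: Sequence[Dict[str, List[float]]]) -> List[Dict[str, float]]:
    """Discretized Eqs. 11.1-11.3: one point process filter per decision state, run in parallel.

    prior_x0[i]   : p(x_0 | I = i) over position bins (sums to 1)
    prior_I[i]    : Pr(I = i)
    transition[i] : transition[i][j][n] = p(x_k = bin n | x_{k-1} = bin j, I = i)
    log_lik[k][i] : log p(Delta N_k, m_k | x_k = bin n, I = i, H_k) for every bin n (Eq. 6)
    Returns Pr(I = i | data up to t_k) after every step k.
    """
    # Eq. 11.1 initial condition: p(x_0, I = i) = p(x_0 | I = i) Pr(I = i)
    joint = {i: [prior_I[i] * p for p in prior_x0[i]] for i in prior_I}
    history = []
    for step in log_lik:
        updated = {}
        for i, post in joint.items():
            T, n_bins = transition[i], len(post)
            # One-step prediction (Eq. 5): a sum over previous bins replaces the integral
            pred = [sum(T[j][n] * post[j] for j in range(n_bins)) for n in range(n_bins)]
            updated[i] = (pred, step[i])
        # Subtract one shared constant from all log-likelihoods before exponentiating;
        # it cancels in the normalization below, like the dropped denominator of Eq. 4.
        shift = max(max(ll) for _, ll in updated.values())
        joint = {i: [p * math.exp(l - shift) for p, l in zip(pred, ll)]
                 for i, (pred, ll) in updated.items()}
        total = sum(sum(v) for v in joint.values())
        joint = {i: [p / total for p in v] for i, v in joint.items()}
        # Eq. 11.3: each filter's weight is its posterior mass summed over position
        history.append({i: sum(v) for i, v in joint.items()})
    return history


ident = [[1.0, 0.0], [0.0, 1.0]]
step = {"outbound": [math.log(0.1)] * 2, "inbound": [math.log(0.9)] * 2}
out = decision_state_filter({"outbound": [0.5, 0.5], "inbound": [0.5, 0.5]},
                            {"outbound": 0.5, "inbound": 0.5},
                            {"outbound": ident, "inbound": ident}, [step, step])
print(out)
```

With two equally likely decision states and evidence that is nine times more likely under one of them, a single update yields Pr(I) ≈ 0.9 for that state, and a second identical update raises it to about 0.988.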

DATA ANALYSES

To demonstrate the ability of our proposed algorithm to estimate and classify replay content, we applied it to the analysis of an example set of electrophysiological data from a rat performing an alternation task in an M-shaped maze.

We begin by defining probability models to capture the three sources of information discussed above ($p(x_0 \mid I)$, $p(x_k \mid x_{k-1}, I)$, and $\lambda(t_k, \vec{m} \mid x_k, I, H_k)$) that contribute to the decoding of the replay trajectory. We then apply our marked point process filter with a single continuous state variable x(t) to decode the full trajectory of four example replay events. Next, we extend the state variable to jointly include a discrete decision state, I, that identifies whether each replay event represents an outbound or inbound trajectory as well as whether the temporal order of activity occurs forward or backward in time. In our analyses, I is an indicator function for a replay event being “outbound, forward,” “outbound, reverse,” “inbound, forward,” or “inbound, reverse.” We categorize the content of the four example replay events using the marked point process filter with the joint state variable {x(t), I}. Finally, we investigate how thresholding the posterior probability of the decision state, Pr(I), at different values affects the classification results during a recording epoch with multiple replay events.
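One simple way to turn the running posterior Pr(I) into a classification with a latency, in the spirit of the thresholding analysis described above, is to report the first time bin at which any category's posterior reaches a chosen threshold. The sketch assumes 1-ms bins and a list of per-bin posteriors over the four categories; `first_confident_call` is a hypothetical name, and the exact decision rule used in our analyses may differ.

```python
from typing import Dict, List, Optional, Tuple

CATEGORIES = ("outbound, forward", "outbound, reverse", "inbound, forward", "inbound, reverse")


def first_confident_call(posteriors: List[Dict[str, float]], threshold: float,
                         bin_ms: float = 1.0) -> Optional[Tuple[str, float]]:
    """Return (category, latency in ms from event onset) for the first bin in which the
    most probable category reaches `threshold`, or None if none does during the event."""
    for k, post in enumerate(posteriors, start=1):
        best = max(post, key=post.get)
        if post[best] >= threshold:
            return best, k * bin_ms
    return None


uniform = {c: 0.25 for c in CATEGORIES}
later = {"outbound, forward": 0.05, "outbound, reverse": 0.05,
         "inbound, forward": 0.05, "inbound, reverse": 0.85}
print(first_confident_call([uniform, uniform, later], threshold=0.8))  # ('inbound, reverse', 3.0)
```

Raising the threshold trades latency and the fraction of classified events for confidence in each call, which is the trade-off examined later in the recording epoch.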

We show that our algorithm can classify the majority of replay events within 20 ms of the replay onset with 80–95% confidence. We additionally observe that there appears to be a difference in the frequency of outbound, forward vs. inbound, reverse replay events between the rat exploring a novel and a familiar environment.

Description of experimental data and algorithm implementation.

The experimental methods are described in detail in Karlsson and Frank (2008). In brief, data were taken from one male Long-Evans rat implanted with a microdrive array containing 30 independently movable tetrodes targeting CA1 and CA3. Tetrodes that never yielded clusterable units across the 8 days of experiments were excluded from analysis. Tetrodes are an established method for recording from the hippocampus and other brain regions, with well-characterized reliability and unit identification (Gray et al. 1995; Harris et al. 2000; Wilson and McNaughton 1993). Although other techniques, such as silicon probes, can also record from the hippocampus (Buzsaki 2004; Csicsvari et al. 2003), we expect our decoding algorithm to be compatible with data collected by any technique from which spike features that help isolate single units can be extracted. We limit this study to tetrode data because features that separate units well, such as the amplitudes of each spike on the four channels, are easy to identify.

Multiunit spiking activity recorded on a total of 18 tetrodes was used for the data analyses below. The multiunit activity recorded from one tetrode consists of all detectable spike waveforms that cross a minimum amplitude threshold, usually set between 40 and 100 μV. This activity includes action potentials from dozens of neurons per tetrode, and the typical decoding procedure involves first sorting these spikes into putative, well-isolated units before decoding from the population of sorted units (Brown et al. 1998). Through standard manual clustering techniques, between 0 and 10 well-isolated units were extracted per tetrode in the data set described here, for a total of 82 cells across the 18 simultaneously recorded tetrodes. Importantly, these sorted putative units account for only a small minority of the detectable spikes: 95.62% of spikes remain unclassified because they could not be confidently assigned to a single unit. Thus a large fraction of the data collected in these recordings would not be used for standard decoding analyses.

Ripples were detected on 11 of the 30 tetrodes, all in CA1, by using an aggregated measure of the root mean square (RMS) power in the 150–250 Hz band across these tetrodes (Csicsvari et al. 1999). The aggregated RMS power was then smoothed with a Gaussian kernel (4-ms standard deviation), and SWR events were detected as periods in which the smoothed power exceeded the mean by at least 2 standard deviations for at least 15 ms. Each SWR event was then extended to include the times immediately before and after this period during which the power remained above the mean.
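The detection rule above can be sketched as follows, assuming the aggregated 150–250 Hz RMS power has already been computed as a single trace sampled at `fs` Hz. The 4-ms smoothing, the mean + 2 SD threshold, the 15-ms minimum duration, and the extension back to the mean follow the text; the edge handling, the merging of overlapping events, and the function names are our own assumptions.

```python
import math
import statistics
from typing import List, Sequence, Tuple


def gaussian_smooth(x: Sequence[float], sigma: float) -> List[float]:
    """Smooth with a Gaussian kernel (sigma in samples) truncated at 4 sigma; the kernel
    is renormalized near the edges so the output keeps the input's scale."""
    half = int(math.ceil(4 * sigma))
    offsets = range(-half, half + 1)
    weights = [math.exp(-0.5 * (j / sigma) ** 2) for j in offsets]
    out = []
    for n in range(len(x)):
        pairs = [(w, x[n + j]) for j, w in zip(offsets, weights) if 0 <= n + j < len(x)]
        out.append(sum(w * v for w, v in pairs) / sum(w for w, _ in pairs))
    return out


def detect_swrs(power: Sequence[float], fs: float, sigma_ms: float = 4.0,
                n_sd: float = 2.0, min_ms: float = 15.0) -> List[Tuple[int, int]]:
    """Return [start, end) sample indices of candidate SWRs from aggregated ripple-band power."""
    p = gaussian_smooth(power, sigma_ms * fs / 1000.0)
    mu, sd = statistics.fmean(p), statistics.pstdev(p)
    high = mu + n_sd * sd
    min_len = int(round(min_ms * fs / 1000.0))
    events: List[Tuple[int, int]] = []
    n = 0
    while n < len(p):
        if p[n] <= high:
            n += 1
            continue
        m = n
        while m < len(p) and p[m] > high:  # contiguous run above mean + n_sd * SD
            m += 1
        if m - n >= min_len:
            start, end = n, m
            while start > 0 and p[start - 1] > mu:  # extend back until power falls to the mean
                start -= 1
            while end < len(p) and p[end] > mu:  # extend forward likewise
                end += 1
            if events and start <= events[-1][1]:  # merge events sharing an above-mean period
                events[-1] = (events[-1][0], max(end, events[-1][1]))
            else:
                events.append((start, end))
        n = m
    return events


# Synthetic trace at 1 kHz: flat baseline with one 30-ms burst of ripple-band power
trace = [1.0] * 1000
for idx in range(500, 530):
    trace[idx] = 10.0
print(detect_swrs(trace, fs=1000.0))
```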

The data were recorded while the rat ran a continuous alternation task on an M maze (76 cm × 76 cm with 7-cm-wide track) shown in Fig. 1A. The animal was rewarded each time it visited the end of an arm in the correct sequence, starting in the center and then alternating visits to each outer arm and returning to the center. The animal would start at the food well in position O and run toward the intersection, or “choice point” (position CP), at the top of the center stem, where a choice would need to be made. The correct choice is to alternate between left and right on successive turns. If a correct choice is made, for example, to turn right, the animal would continue to run toward the food well at position R to receive a reward, and then return to the center well at position O to move on to the next trial. If an error is made, the rat is not given a reward and must return to the center well to initiate the next trial. All animal procedures and surgery were reviewed and approved by the University of California San Francisco Institutional Animal Care and Use Committee and were in accordance with National Institutes of Health guidelines.

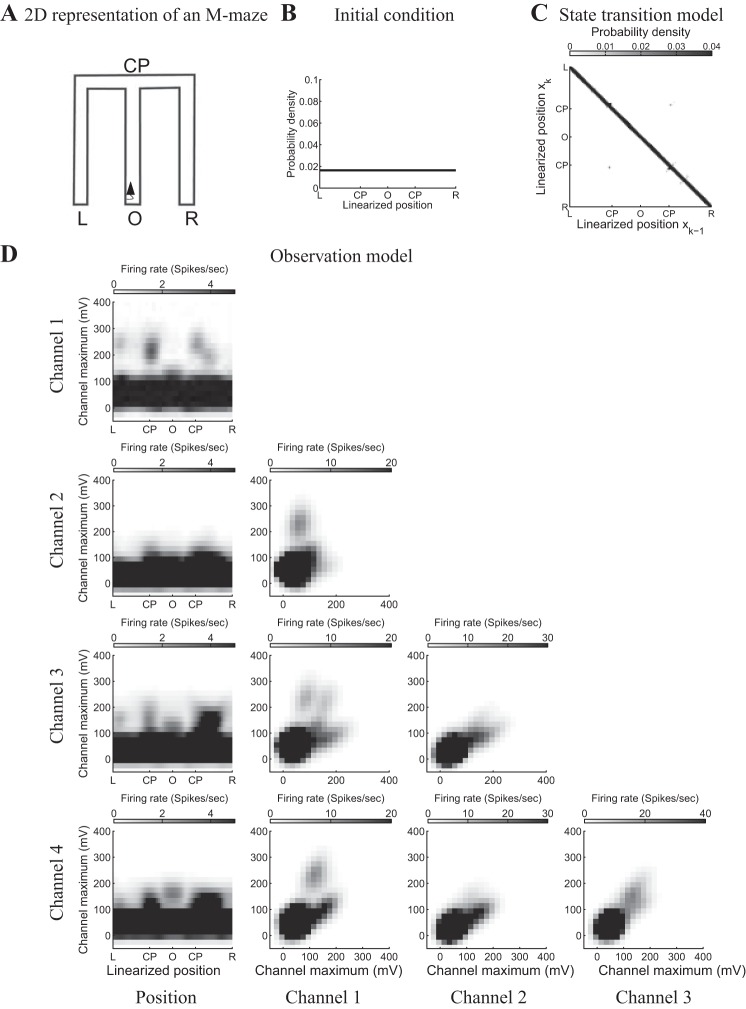

Fig. 1.

Linearization and encoding models for position. A: 2-dimensional representation of an M maze. The black triangle represents an animal whose head is oriented toward the choice point. B: initial condition of the state variable, linearized position x(t). Here we assume that a replay event is equally likely to start at any position on the maze. C: state transition probability matrix p(xk|xk−1) of transition probabilities from every possible replay position at the prior time step to each value at the current time. Here we posit that the dynamics of the replayed trajectory are similar to those of the actual movement and use the empirical movement data to compute the state transition model. D: observation model for an example tetrode. Here we plot the pairwise marginalizations of the 5-dimensional position-mark model. The panel at the intersection of each row and column plots the estimated joint-mark intensity function marginalized onto the row and column variables (linearized position or tetrode channel).

We linearized the actual two-dimensional coordinate position of the rat to a single coordinate. The one-dimensional coordinate indicates the total distance from the center well (position O) in centimeters, with negative numbers indicating trajectories that include a left turn and positive numbers indicating trajectories that include a right turn. When the rat was on the center arm of the maze, the region to which its position was mapped was determined by the direction from which the rat came during inbound trajectories and by the direction it would turn next when it reached the choice point (position CP) during outbound trajectories. Throughout this report, to facilitate ease of visualization, when plotting we label the linearized maze at position O (“origin”), CP (“choice point”), L (“left food well”), or R (“right food well”) instead of the corresponding signed one-dimensional coordinate.

The present algorithm is implemented in MATLAB R2015b and runs on a high-end workstation computer (Xeon E5-2643 with 128 GB RAM). Using only a single computation thread and decoding an example replay event in 1-ms time intervals (340 ms, 525 spikes across 18 tetrodes), estimating each tetrode's decision state posterior probability at all time bins takes on average 320 ms. This suggests that a parallelized implementation of the algorithm, in which each tetrode's decision state is evaluated simultaneously, would allow for real-time, content-based feedback.

Analysis of individual replay events: sources of information for decoding replay position.

Before extending to the decision state, we first estimate the replay trajectory x(t) on its own, without regard to any decision state I, from unsorted ensemble spiking activity using the marked point process filter, as defined in Eq. 10.

In this case, we assume a uniform probability model for the initial condition p(x0). For the state transition model p(xk|xk−1), we posit that the dynamics of the replayed trajectory are similar to those of the actual movement, although with an accelerated time course. We use the empirical movement data accelerated by a factor of 33× to compute the state transition model. We selected this speedup value based on our previous work (Deng et al. 2015), in which it was used successfully to decode replay trajectories. We repeated these analyses using a range of speedup factors between 15× and 33×, and the results were largely consistent (not shown). In an example epoch with 117 replay events, the classification of 90 events remained the same across every speedup factor between 15× and 33×. Of those that did change, most went from not being classified with certainty at 15× to a consistent classification for values above 25×. This suggests that the population spiking activity contains enough evidence for the decoding to be robust to moderate misspecification of the state evolution model.
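One way to build an empirical, accelerated state transition model is sketched below. It assumes the position track has been linearized and binned at the same 1-ms resolution as the decoder, and it interprets a 33× speedup as pairing each position with the position 33 samples later, so that one decoding step covers 33 ms of actual movement. The `reverse` option, which reads the track backward in time, is our illustrative assumption for a reverse-replay transition model rather than a detail taken from the text; function names are hypothetical.

```python
from typing import List, Sequence


def empirical_transition(pos_bins: Sequence[int], n_bins: int, speedup: int,
                         reverse: bool = False) -> List[List[float]]:
    """Row-stochastic estimate of p(x_k = n | x_{k-1} = j) from a binned position track.

    Assumes pos_bins is sampled at the decoder's bin width, so one decoding step of a
    replay accelerated `speedup` times spans `speedup` samples of actual movement.
    reverse=True reads the track backward in time (illustrative reverse-replay model).
    """
    track = list(reversed(pos_bins)) if reverse else list(pos_bins)
    counts = [[0.0] * n_bins for _ in range(n_bins)]
    for a, b in zip(track, track[speedup:]):
        counts[a][b] += 1.0
    matrix = []
    for j, row in enumerate(counts):
        total = sum(row)
        if total == 0.0:
            # Bin never visited: fall back to staying in place (an arbitrary choice)
            row, total = [1.0 if n == j else 0.0 for n in range(n_bins)], 1.0
        matrix.append([c / total for c in row])
    return matrix


# Toy track over three bins, accelerated so that one step spans two samples
print(empirical_transition([0, 0, 1, 1, 2, 2], n_bins=3, speedup=2))
```

Because movement along the track is mostly continuous, a matrix estimated this way concentrates its mass near the diagonal, as described for Fig. 1C.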

To estimate the joint-mark conditional intensity in the observation model $\lambda(t_k, \vec{m} \mid x_k, H_k)$, we use a Poisson, nonparametric, kernel-based encoding model:

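$$\lambda(t_k, \vec{m} \mid x_k, H_k) \approx \frac{N}{T} \cdot \frac{\frac{1}{N}\sum_{i=1}^{N} K_{B_x, \mathbf{B}_m}\big(x_k - x(u_i), \vec{m} - \vec{m}_i\big)}{\frac{1}{T}\int_0^T K_{b_x}\big(x_k - x(t)\big)\, dt} \tag{12}$$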

where N is the total number of spikes, $u_i$ is the time of the ith spike, $x(u_i)$ and $\vec{m}_i$ are the position and mark associated with that spike, and T is the total time of the experiment. $K_{B_x, \mathbf{B}_m}$ is a multivariate kernel in both the place field and the mark space whose smoothness depends on both the smoothing parameter $B_x$ and the bandwidth matrix $\mathbf{B}_m$ (Ramsay and Silverman 2010). $K_{b_x}$ is a univariate kernel on the spatial component only, with smoothing parameter $b_x$. Here we use Gaussian kernels for both $K_{B_x, \mathbf{B}_m}$ and $K_{b_x}$. Similarly,

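$$\Lambda(t_k \mid x_k, H_k) \approx \frac{N}{T} \cdot \frac{\frac{1}{N}\sum_{i=1}^{N} K_{b_x}\big(x_k - x(u_i)\big)}{\frac{1}{T}\int_0^T K_{b_x}\big(x_k - x(t)\big)\, dt} \tag{13}$$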
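The following sketch evaluates kernel estimates of the form of Eqs. 12 and 13 directly for a single tetrode. It assumes a product of univariate Gaussian kernels (that is, a diagonal mark bandwidth matrix), a position track sampled every `dt` seconds, and a Riemann sum for the occupancy integral; names and argument layout are hypothetical, and a real-time implementation would precompute and cache these quantities.

```python
import math
from typing import Sequence


def gauss(u: float, h: float) -> float:
    """Univariate Gaussian kernel with bandwidth h."""
    return math.exp(-0.5 * (u / h) ** 2) / (math.sqrt(2.0 * math.pi) * h)


def occupancy(x: float, track: Sequence[float], dt: float, b_x: float) -> float:
    """Riemann-sum estimate of the denominator integral, int_0^T K_bx(x - x(t)) dt."""
    return dt * sum(gauss(x - xt, b_x) for xt in track)


def joint_mark_intensity(x: float, mark: Sequence[float],
                         spike_pos: Sequence[float], spike_marks: Sequence[Sequence[float]],
                         track: Sequence[float], dt: float,
                         B_x: float, b_m: Sequence[float], b_x: float) -> float:
    """Eq. 12 sketch with a product Gaussian kernel (diagonal mark bandwidths b_m)."""
    total = 0.0
    for xi, mi in zip(spike_pos, spike_marks):
        w = gauss(x - xi, B_x)
        for mc, mic, h in zip(mark, mi, b_m):
            w *= gauss(mc - mic, h)
        total += w
    return total / occupancy(x, track, dt, b_x)


def ground_intensity(x: float, spike_pos: Sequence[float],
                     track: Sequence[float], dt: float, b_x: float) -> float:
    """Eq. 13 sketch: spatial kernel only, giving the mark-integrated rate in spikes/s."""
    return sum(gauss(x - xi, b_x) for xi in spike_pos) / occupancy(x, track, dt, b_x)


# Example: 1 s spent at position 0 (dt = 0.01 s), during which 10 spikes were recorded
track, spike_pos, spike_marks = [0.0] * 100, [0.0] * 10, [[50.0]] * 10
print(ground_intensity(0.0, spike_pos, track, dt=0.01, b_x=5.0))  # about 10 spikes/s
```

When $B_x = b_x$, integrating this version of Eq. 12 over marks recovers Eq. 13, which is the relationship Eq. 3 requires between the joint-mark intensity and the ground intensity.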

In addition to this classical two-step encoding and decoding approach, in which a well-fit encoding model judged to be accurate is used directly to decode replay events, we also describe in the appendix the construction of bootstrap confidence bounds on $\lambda(t_k, \vec{m} \mid x_k, H_k)$, which take into account the uncertainty in the estimation of the joint conditional intensity. For the data examples described here, we implemented both approaches and found that the decoding results were largely identical, further suggesting that our encoding model sufficiently captures the features of the spiking activity that are important for accurately decoding and classifying replay events.

Fig. 1, B–D, illustrate the three sources of information that contribute to the decode of replay position x(t).

Figure 1B plots the initial condition of the state variable, $p(x_0)$. Here we assume that a replay event is equally likely to start at any location (2.8-cm position bins) on the maze by defining a uniform probability model for $x_0$ over all position bins.

Figure 1C plots the state transition probability matrix p(xk|xk−1) of transition probabilities from every possible replay position at the prior time step to each value at the current time. The empirical state transition matrix has most of its probability mass near the diagonal, reflecting continuous trajectories within each track section, with a few off-diagonal elements that reflect the presence of the choice point. The state transition matrix describes the distribution of the change in the replay position x(t) between time bins of size Δk. Here we let Δk = 1 ms, so that the change tends to be small, leading to a state transition matrix with a narrow, nearly diagonal structure. With a larger time bin, the state transition matrix would still be nearly diagonal but more diffuse around the diagonal, allowing larger jumps in decoded position per bin and hence faster replay trajectories.

Figure 1D plots the estimated observation model for a single example tetrode. It characterizes the distribution of the spiking activity as a function of the replay position and features of the spike waveform (peak height on each channel), as determined by the joint conditional intensity $\lambda(t_k, \vec{m} \mid x_k, H_k)$.

Each panel in Fig. 1D plots a two-dimensional slice of the five-dimensional position-mark model, which consists of a four-dimensional vector of the maximum amplitudes of the spike waveform on each electrode of a single tetrode during a replay event plus the one-dimensional state variable x(t), i.e., the animal's position. We observe that this example tetrode recorded a large amount of low-amplitude spiking activity with peaks between −50 μV and 100 μV. There is also evidence for the presence of multiple place cells. For example, the panel showing “Channel 1” against “Position” shows that the ensemble spiking activity on this tetrode includes place-specific clusters with peaks above 100 μV. The firing rate is higher when the rat is at the choice point CP on a future left turn than on a future right turn. When the rat is on a left turn, the firing rate increases near the left food well (position L) but not at the right corner of the maze. Conversely, when the animal is on a right turn, the firing rate increases at the right corner but not near the right food well (position R). For the decoding analysis described below, we fit such marked point process models for a total of 18 tetrodes.

Analysis of individual replay events: decoding replay position.

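Because the data were recorded with a microdrive array, we can gain additional spatial information by combining tetrodes. In this case, we can augment the observation distribution to be

$$
p\left(\Delta N_k^{1:S}, \vec{m}_k^{1:S} \mid x_k\right) \propto \prod_{s=1}^{S} \left[\prod_{i=1}^{\Delta N_k^{s}} \lambda^{s}\left(t_k, \vec{m}_k^{s,i} \mid x_k\right)\Delta_k\right] \exp\left(-\Lambda^{s}(t_k \mid x_k)\,\Delta_k\right) \tag{14}
$$

where $S$ is the number of groups of recordings, in this case the number of tetrodes; $\Delta N_k^{s}$ is the number of spikes observed from neurons on tetrode $s$ during $(t_{k-1}, t_k]$, and $\vec{m}_k^{s,i}$ is the mark of the $i$th of those spikes. $\Lambda^{s}(t_k \mid x_k) = \int \lambda^{s}(t_k, \vec{m} \mid x_k)\, d\vec{m}$, where $\lambda^{s}(t, \vec{m} \mid x)$ defines the joint-mark intensity function for neurons on tetrode $s$, $s = 1, \ldots, S$.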
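To make the multi-tetrode combination concrete, the sketch below evaluates the log of a product-over-tetrodes marked point process likelihood on a grid of candidate replay positions, assuming the standard form in which each spike on tetrode s contributes log(λ^s(x, m⃗_i)Δk) and each tetrode contributes −Λ^s(x)Δk, with Λ^s the joint-mark intensity integrated over marks. The array layout and the function name are assumptions; the intensities themselves would come from a fitted encoding model.

```python
import numpy as np


def log_likelihood_all_tetrodes(mark_intensities, ground_intensities, dt):
    """Log of the product-over-tetrodes marked point process likelihood.

    mark_intensities: list over tetrodes; entry s has shape (n_spikes_s, n_grid)
        and holds lambda^s(x, m_i) for each spike i seen on tetrode s in the
        current bin, evaluated at every grid position x. Use shape (0, n_grid)
        for a tetrode with no spikes in the bin.
    ground_intensities: list over tetrodes; entry s has shape (n_grid,) and
        holds Lambda^s(x), the joint-mark intensity integrated over marks.
    Returns an (n_grid,) array of log-likelihoods, up to an additive constant.
    """
    log_lik = 0.0
    for lam_marks, lam_ground in zip(mark_intensities, ground_intensities):
        lam_marks = np.asarray(lam_marks, dtype=float)
        lam_ground = np.asarray(lam_ground, dtype=float)
        # Each observed spike adds log(lambda^s(x, m_i) * dt); tiny floor avoids log(0).
        log_lik = log_lik + np.sum(np.log(lam_marks * dt + 1e-300), axis=0)
        # Each tetrode adds -Lambda^s(x) * dt, whether or not it spiked.
        log_lik = log_lik - lam_ground * dt
    return log_lik
```

A tetrode with no spikes in a bin still contributes through −Λ^s(x)Δk, which penalizes positions where that tetrode would be expected to fire.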

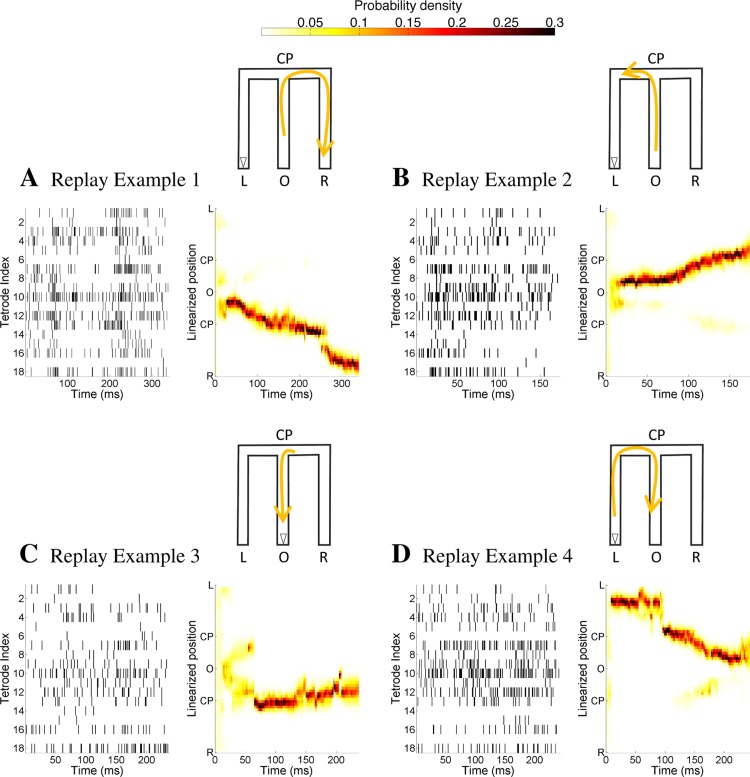

Figure 2 shows the decoded trajectories of four example replay events using a marked point process filter whose state variable is just the one-dimensional state variable x(t), i.e., the replay position. The schematic representation of the decoding results is illustrated by the M-shaped maze at the top right corner of each panel in Fig. 2, A–D, where the yellow arrowed line shows the decoded replay trajectory schematically and the open triangle represents the actual position and orientation of the rat during the replay example. Each panel on the left in Fig. 2, A–D, plots the unsorted ensemble spiking activity on each tetrode as a function of replay time. Each panel on the right in Fig. 2, A–D, plots the posterior density of the replay position during each replay event as a function of time, as computed from Eq. 10. Yellow regions in the heat plot mark positions where the estimated posterior density of the replay position at each time step is large.
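Eq. 10 is not restated in this section, so the following is a generic sketch of a grid-based filter of the kind described here, assuming the replay position is discretized on the linearized track: each time step predicts with the state transition matrix and then reweights by the observation likelihood. Inputs and names are illustrative.

```python
import numpy as np


def point_process_filter(log_likelihoods, transition, initial):
    """Grid-based filter for the replay position on a linearized track.

    log_likelihoods: (K, n_grid) array of log p(observations in bin k | x_k).
    transition: (n_grid, n_grid) array, transition[i, j] = p(x_k = j | x_{k-1} = i).
    initial: (n_grid,) prior over the replay position before the first bin.
    Returns (K, n_grid): row k is the posterior given all bins up to k.
    """
    log_likelihoods = np.asarray(log_likelihoods, dtype=float)
    transition = np.asarray(transition, dtype=float)
    posterior = np.asarray(initial, dtype=float)
    posterior = posterior / posterior.sum()
    out = np.empty_like(log_likelihoods)
    for k, log_lik in enumerate(log_likelihoods):
        # Prediction: propagate the previous posterior through the transition model.
        predicted = posterior @ transition
        # Update: multiply by the likelihood (shifted by its max for stability).
        weights = predicted * np.exp(log_lik - log_lik.max())
        posterior = weights / weights.sum()
        out[k] = posterior
    return out
```

Each row of the output corresponds to one column of a heat plot like those on the right of Fig. 2.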

Fig. 2.

Decoding replay trajectory from multiunit spiking activity using a marked point process filter with just a single state variable, the 1-dimensional replay position. A–D, left: unsorted ensemble spiking activity on each tetrode as a function of replay time. Right: replay trajectories of 4 examples decoded using clusterless methods where the heat plot shows the estimated posterior density at each time step. Top: schematic representation of the decoding results, in which the yellow arrowed line illustrates the evolution of the replay trajectory and the open triangle represents the actual position and orientation of the rat during the event.

We can see that replay example 1, plotted in Fig. 2A, shows a replay trajectory that begins at the center well (position O), moves up the center arm to the choice point (position CP), then turns right and proceeds to reach the right well (position R). Replay example 2, plotted in Fig. 2B, shows a replay trajectory that begins at the center well (position O), moves up the center arm to the choice point (position CP), and then turns left toward the left corner. The decoding results for replay example 4, plotted in Fig. 2D, show a replay trajectory that originates from the left well (position L) and moves up the left arm to the left corner and toward the choice point (position CP) and then down to the center well (position O).

The decoding results for replay example 3, plotted in Fig. 2C, show a replay trajectory that is first an outbound trajectory but then shifts to an inbound trajectory: the replay trajectory first begins from the center well (position O) and moves up the center stem to the choice point (position CP), but then at ∼70 ms into the replay, it moves inbound back to the center well (position O). However, we note that the outbound trajectory replayed during the first 70 ms has a lower posterior probability density at ∼0.1 (lighter shade of yellow) while the rest of the replay has a higher posterior probability density at ∼0.3 (darker shade of yellow).

Analysis of individual replay events: sources of information for decoding a decision state.

To categorize the content of hippocampal replays, our model requires an explicitly defined, fixed, discrete decision state I. Previous studies have reported the presence of both forward and reverse replay events in rat hippocampus (Ambrose et al. 2015; Csicsvari et al. 2007; Diba and Buzsaki 2007; Foster and Wilson 2006; Wu and Foster 2014). We therefore define a decision state I that consists of two components: movement direction with regard to the center well (“outbound” or “inbound”) and the temporal replay order of the actual spatial experience (“forward” or “reverse”).

We choose these specific values of decision state because we are interested in understanding the link between replay events and learning and memory.

An “outbound, forward” replay event can be interpreted as a spiking sequence that reflects spiking of place cells during an actual outbound movement being replayed in forward time. Loosely, we might envision this event as a mental exploration of the rat moving from the center well to a side arm with its head facing forward. An “inbound, forward” replay event can be interpreted in a similar way, except that the rat moves from a side arm to the center well.

We can regard an “outbound, reverse” replay event as a spiking sequence that represents neural activity during an actual outbound movement being replayed backward in time. We might envision this event as a movie of the same mental exploration of an “outbound, forward” event with the frames played backward in time. Watching such a movie, one might see the rat moving from a side arm to the center well with its head facing away from the center well with each backward step. An “inbound, reverse” replay event can be interpreted in a similar way, except that the rat moves from the center well to a side arm with its head facing toward the center well.

Recall that in Discrete state point process filters we defined three sources of information for our decoding algorithm. The first two sources of information, the initial condition of the state variable and the state transition model, contribute to the decoder's knowledge about the spatial evolution of the movement trajectory. The last source of information, the observation model, contributes to the decoder's knowledge about mark-place preference in ensemble spiking. Therefore, if a replay event is categorized as outbound, forward, both the spatial evolution of the replay movement and the spiking are consistent with those during an actual outbound movement. If a replay event is categorized as inbound, forward, both the spatial evolution of the replay movement and the spiking are consistent with those during an actual inbound movement. However, if a replay event is categorized as a reversely replayed outbound trajectory, or simply outbound, reverse, then although the spiking during the replay event is consistent with that during an actual outbound movement, the spatial evolution of the replay movement is more consistent with that of an inbound movement. Similarly, if a replay event is categorized as a reversely replayed inbound trajectory, or simply inbound, reverse, then although the spiking during the replay event is consistent with that during an actual inbound movement, the spatial evolution of the replay movement is more consistent with that of an outbound movement.

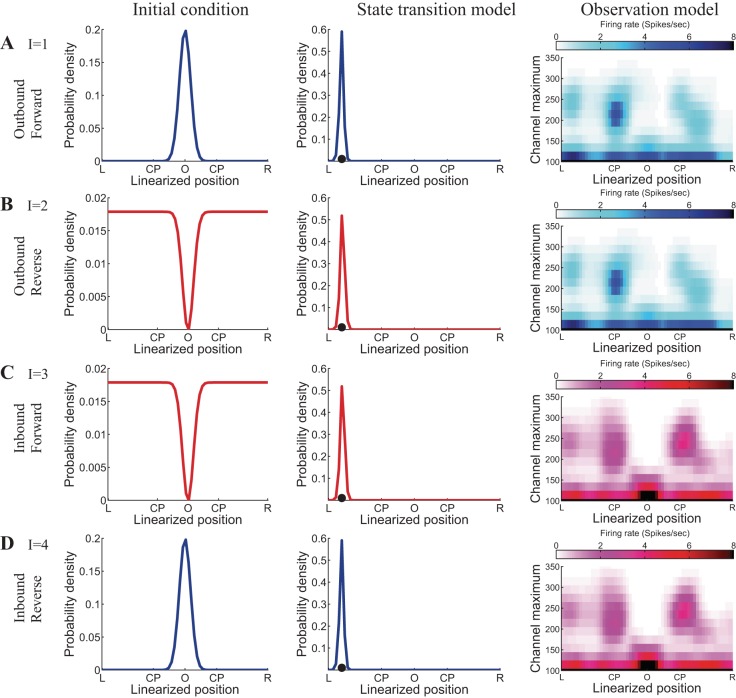

Figure 3 provides an example of the three different sources of information, as defined in Eqs. 11. As a visualization aid, we plot sources of information that are consistent with those during outbound movements in shades of blue and sources of information that are consistent with those during inbound movements in shades of red.

Fig. 3.

Examples of the 3 sources of information contributing to the decision state decoder. As a visualization aid, we plot sources of information that are consistent with those during actual outbound movement in shades of blue and sources of information that are consistent with those during actual inbound movement in shades of red. A–D, left: initial condition of replay position conditioned on the decision state. Center: state transition model of replay position conditioned on the decision state; a slice of the state transition matrix is plotted for a previous position on the left arm (black dot). Right: estimated observation or likelihood model of joint-mark intensity conditioned on the decision state.

In each of Fig. 3, A–D, the left panel plots p(x0|I), the probability model for the initial condition of the replay position conditioned on the discrete decision state. When I = 1 or I = 4, x0|I is the initial condition for all outbound trajectories, and we assume it to have a Gaussian distribution centered at the center well (position O), plotted in blue. When I = 2 or I = 3, x0|I is the initial condition for all inbound trajectories. Here we assume a distribution that is uniform away from the center well, with a Gaussian density subtracted near the center well, plotted in red.
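The two initial-condition shapes can be written down directly on a linearized position grid. This minimal sketch assumes the center well sits at linearized position 0 and uses a hypothetical width `sigma`; the inbound prior is a flat distribution with a peak-normalized Gaussian subtracted at the center well, then renormalized. The exact scaling used in the study is not given here.

```python
import numpy as np


def initial_conditions(grid, center_well=0.0, sigma=10.0):
    """Return (outbound_prior, inbound_prior) on a linearized position grid.

    Outbound prior: Gaussian bump centered at the center well.
    Inbound prior: constant everywhere minus a Gaussian dip at the center well,
    clipped at zero so it remains a valid distribution.
    """
    grid = np.asarray(grid, dtype=float)
    bump = np.exp(-0.5 * ((grid - center_well) / sigma) ** 2)  # peak value 1
    outbound = bump / bump.sum()
    inbound = np.clip(1.0 - bump, 0.0, None)
    inbound = inbound / inbound.sum()
    return outbound, inbound
```

Under the labeling used here, states I = 1 and I = 4 would share `outbound`, and I = 2 and I = 3 would share `inbound`.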

The center panels of Fig. 3 show a slice of the transition matrix of the replay position conditioned on the decision state, p(xk|xk−1, I), for a previous position on the left arm (black dot). When I = 1 or I = 4, p(xk|xk−1, I) is the state transition model for all outbound trajectories, plotted in blue. When I = 2 or I = 3, p(xk|xk−1, I) is the state transition model for all inbound trajectories, plotted in red. In this analysis, for both forward and reverse events, we use the empirical state transition matrix computed from actual movement data.

Visually, the center panels of Fig. 3 look very similar, because locally the difference between the empirical state transitions of actual outbound and inbound movements is too subtle to discern when plotted against the entire track length. By carefully comparing the center panels plotted in blue with those plotted in red, we can see that if the animal is in the middle of the left arm, it is more likely to move toward the left well (position L) during an outbound trajectory and toward the center during an inbound trajectory.

The right panels of Fig. 3 show a slice of the estimated joint-mark intensity for an example tetrode, marginalized over three tetrode channels, leaving the linearized position and one remaining channel. When I = 1 or I = 2, the estimated joint-mark intensity is the observation model for outbound movements, plotted in a blue shade. When I = 3 or I = 4, it is the observation model for inbound movements, plotted in a red shade. By comparing the observation models for outbound (in blue) and inbound (in red) trajectories, we can see that the ensemble firing activity observed on this example tetrode shows directional preference. For example, the outbound observation model (in blue) shows that the ensemble is most likely to spike with a peak height around 220 μV when the rat is near the choice point of a future left path. The inbound observation model (in red) shows that the ensemble is most likely to spike with a peak height around 240 μV when the rat is returning to the choice point from a right turn.

Analysis of individual replay events: decoding a decision state.

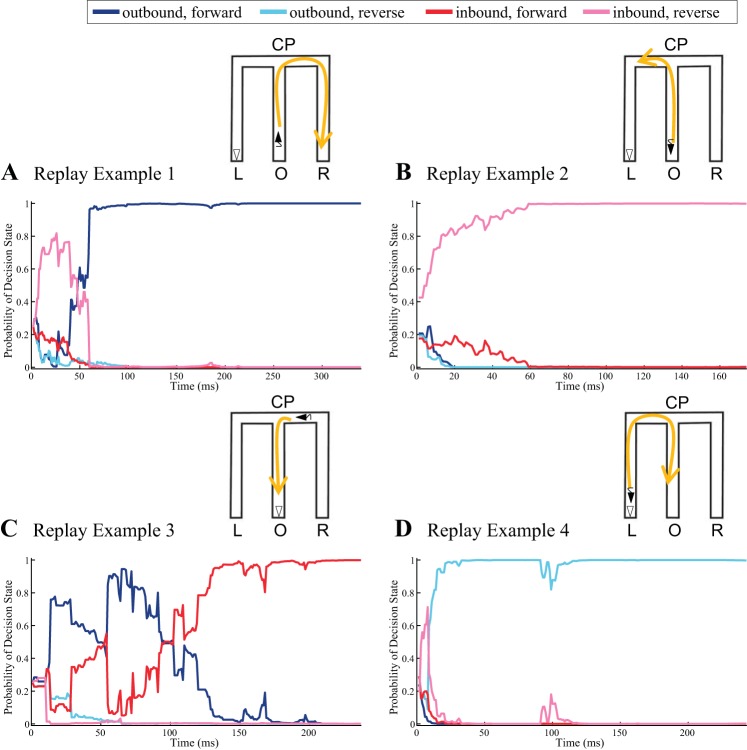

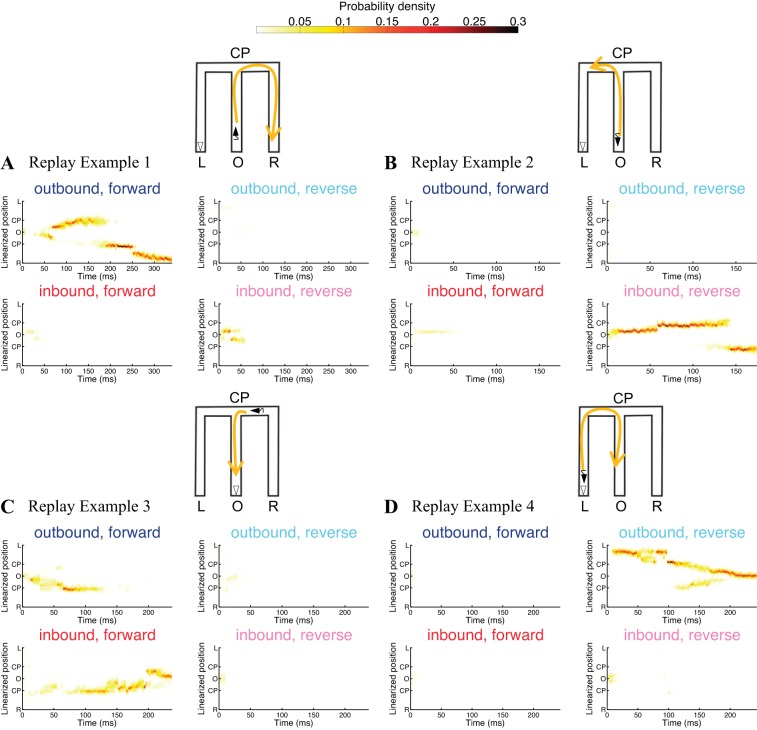

Figure 4 shows the decoding results of the decision state for the same four example replay events shown in Figure 2. The schematic representation of the decoding results is illustrated by the M-shaped maze at the top right corner of each panel in Fig. 4, where the yellow arrow represents the decoded replay trajectory and the open triangle represents the actual position and orientation of the rat during the event. The black triangle represents the replayed orientation of the rat at the beginning of an event, to aid visualization, especially in the case of reverse replay.

Fig. 4.

Decoding a discrete, fixed decision state. A–D: probability of a decision state of 4 example replay events as a function of time. Probability of the replay event representing an “outbound and forward” path, an “outbound and reverse” path, an “inbound and forward” path, or an “inbound and reverse” path is plotted in darker blue, lighter blue, darker red, and lighter red, respectively. Top: schematic representation of the decoding results, in which the yellow arrowed line represents the temporal evolution of the replay trajectory, the open triangle represents the actual position and orientation of the rat during the event, and the black triangle represents the replayed orientation of the rat at the beginning of the event.

Each main panel in Fig. 4, A–D, plots the estimated posterior probability of each of the four categories defined in the decision state as a function of replay time. The line in darker blue represents the probability, computed from Eq. 9, that the replay event is an “outbound, forward” trajectory; the line in lighter blue represents the probability that it is a reversely replayed outbound trajectory; the line in darker red represents the probability that it is an inbound and forward trajectory from the side well; and the line in lighter red represents the probability that it is a reversely replayed inbound trajectory from the side well.

Figure 4A shows that by 60 ms into replay example 1 the decision state decoder has determined the event to be an outbound, forward event with almost 100% certainty. Figure 4B shows that by 60 ms into replay example 2 the decoder has determined the event to be an inbound, reverse event with near 100% certainty. Figure 4C shows a decoding trajectory that initially suggests an outbound, forward event with high probability but after ∼100 ms increasingly suggests an inbound, forward event. Figure 4D shows that by 25 ms into replay example 4 the decision state decoder has determined the event to be an outbound, reverse event with near 100% certainty. Near 100 ms, this confidence is briefly reduced but quickly returns to almost 100% confidence.

For some of these examples, if we were to make a decision at the first time the posterior probability of the decision state passed a fixed confidence threshold, the resulting decision would depend on the threshold level. For example, for replay example 1, with a moderate confidence threshold of 0.8, we might have determined the replay to be an inbound, reverse event at ∼25 ms into the ripple. Similarly, for replay example 3, even with a relatively high threshold of 0.9, we would have determined the replay to be an outbound, forward event. These observations of switching between directionality and temporal replay order within a replay event are similar to previous findings that support the notion that replay captures the unique structure of the environment (encoding of the choice point) (Wu and Foster 2014).
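The threshold rule discussed above can be stated in a few lines: scan the posterior over decision states bin by bin and commit to the first state whose probability reaches the threshold. The function below is a hypothetical helper, assuming 1-ms bins and that bin k ends at (k + 1) ms. The toy posterior in the usage example is made up to show how the outcome can depend on the threshold; it is not taken from the recorded data.

```python
import numpy as np


def first_confident_decision(prob_I, threshold, dt_ms=1.0):
    """Return (state_index, time_ms) for the first bin in which any decision
    state's posterior probability reaches `threshold`, or (None, None).

    prob_I: (K, n_states) posterior probabilities over time within one event.
    Bin k is assumed to end at (k + 1) * dt_ms milliseconds into the event.
    """
    prob_I = np.asarray(prob_I, dtype=float)
    hits = np.flatnonzero((prob_I >= threshold).any(axis=1))
    if hits.size == 0:
        return None, None
    k = hits[0]
    return int(np.argmax(prob_I[k])), float((k + 1) * dt_ms)


# Made-up posterior over 4 ms; column 0 ~ I = 1, ..., column 3 ~ I = 4.
toy = [[0.25, 0.25, 0.25, 0.25],
       [0.10, 0.00, 0.05, 0.85],
       [0.50, 0.00, 0.00, 0.50],
       [0.99, 0.00, 0.00, 0.01]]
print(first_confident_decision(toy, 0.8))  # (3, 2.0)
print(first_confident_decision(toy, 0.9))  # (0, 4.0)
```

With the lower threshold the rule commits early to the fourth state (I = 4); with the higher threshold it waits and commits to the first state (I = 1), the same kind of threshold dependence described for replay example 1.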

As explained in Discrete state point process filters, the decision state decoder can be interpreted as a set of weighted, marked point process filters running in parallel. In Fig. 5, we illustrate this alternative interpretation by plotting the joint posterior density of the replay position and the decision state I = i, for i = 1, 2, 3, 4, for each example replay event, as computed from Eq. 11.3. The four panels in each subplot of Fig. 5, A–D, show the decoded replay trajectory conditioned on each of the four decision states.
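The parallel-filter interpretation can be sketched by carrying one weighted grid filter per decision state and normalizing them jointly, so that summing each filter's mass over position gives Pr(I | data). This minimal sketch assumes each state supplies its own initial condition, transition matrix, and observation log-likelihood (states sharing an outbound transition model would simply pass identical matrices); the function name and array layout are hypothetical, and it is not presented as the exact form of Eq. 11.3.

```python
import numpy as np


def decision_state_filter(log_liks, transitions, initials, prior_I=None):
    """Filter the joint posterior p(x_k, I | data) for a fixed discrete state I.

    log_liks: (n_states, K, n_grid) log-likelihood of bin k under each state's
        observation model (e.g., outbound vs. inbound joint-mark intensities).
    transitions: (n_states, n_grid, n_grid) state-specific p(x_k | x_{k-1}, I).
    initials: (n_states, n_grid) state-specific p(x_0 | I).
    Returns (prob_I, history): prob_I is (K, n_states) with Pr(I | data up to k);
    history is (K, n_states, n_grid), one weighted filter per decision state.
    """
    log_liks = np.asarray(log_liks, dtype=float)
    transitions = np.asarray(transitions, dtype=float)
    initials = np.asarray(initials, dtype=float)
    n_states, n_steps, n_grid = log_liks.shape
    if prior_I is None:
        prior_I = np.full(n_states, 1.0 / n_states)
    prior_I = np.asarray(prior_I, dtype=float)
    # Joint prior p(x_0, I) = Pr(I) * p(x_0 | I).
    joint = prior_I[:, None] * initials / initials.sum(axis=1, keepdims=True)
    history = np.empty((n_steps, n_states, n_grid))
    for k in range(n_steps):
        # I is fixed within an event, so each state predicts with its own transitions.
        predicted = np.einsum("si,sij->sj", joint, transitions)
        # One shared shift across states keeps their relative weights intact.
        ll = log_liks[:, k, :]
        joint = predicted * np.exp(ll - ll.max())
        joint /= joint.sum()
        history[k] = joint
    return history.sum(axis=2), history
```

Normalizing across all states at once is what makes each per-state filter "weighted": a state whose observation model explains the spikes poorly loses mass, which is why its panel in a figure like Fig. 5 fades.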

Fig. 5.

Interpreting the decision state filter as a set of weighted, parallel marked point process filters. A–D: decoded replay trajectory conditioned on each of the decision states for 4 example replay events, where the heat plot shows the estimated posterior density at each time step.

For each replay example shown in Fig. 5, when the posterior probability for a particular decision state is low, very little of the probability mass appears in the corresponding panel. For example, in Fig. 5A there is no visible probability mass in the outbound, reverse or inbound, forward panels and only a fleeting presence of probability mass in the inbound, reverse panel. After 50 ms, nearly all of the probability mass is contained in the outbound, forward panel: the replay trajectory moves from the center well to the choice point and then to the right well. This is consistent with Fig. 4A, which shows that this replay event is most consistent with an inbound, reverse movement during the first 50 ms but is eventually estimated to be an outbound, forward movement with high confidence.

The probability mass of the estimated replay trajectory plotted in Fig. 5B is primarily located in the inbound, reverse panel. The trajectory moves from the center well to the choice point and then to the right corner, a sequence of positions similar to the trajectory in Fig. 5A. However, the spiking sequence is more consistent with spiking during inbound movements, which gives us confidence that the trajectory in Fig. 5B is actually a reverse event. Similarly, the probability mass of the replay trajectory plotted in Fig. 5D is primarily located in the outbound, reverse panel. Although it shows a trajectory that moves from the left well to the choice point and then to the center well, the spiking sequence is more consistent with spiking during outbound movements, which gives us confidence that it is actually a reverse event.

The estimated trajectory in Fig. 5C has probability mass initially concentrated in the outbound, forward panel and then after 100 ms becomes concentrated in the inbound, forward panel. The replay trajectory first moves outbound from the center well to the choice point and then moves inbound back to the center well, consistent with the shift in decision state shown in Fig. 4C. This result suggests that our model, which assumes a constant decision state over an entire replay event, may be misspecified. We could augment the state model to allow for discrete transitions that occur in mixed replay events (Wu and Foster 2014).

Analysis of an entire recording epoch: decoding a decision state.

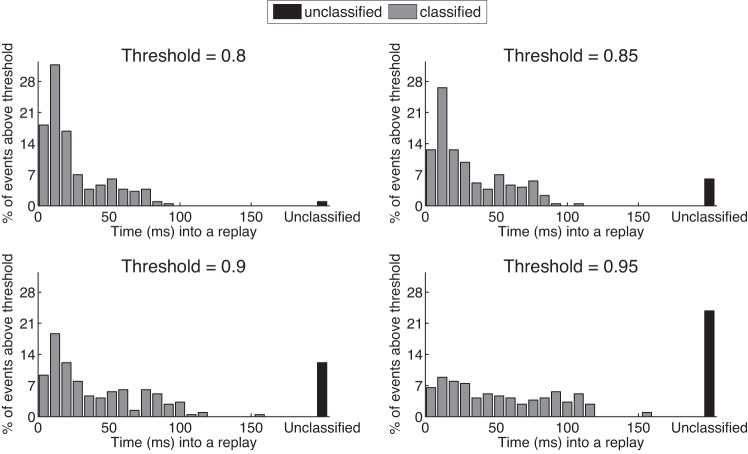

Because our algorithm was developed with the goal of real-time manipulation of SWR replays, we investigated the amount of time required to make a decision to interrupt a replay event based on our desired confidence in its classification. Figure 6 plots the histogram of the fraction of replay events that pass a specified confidence threshold on the estimated decision state as a function of time into a replay event for an entire recording epoch. Events that can be classified are plotted in gray. Events whose decision state probabilities did not pass the threshold by the end of a replay and cannot be classified are plotted in black. With a moderate threshold of 0.8, <2% of replay events failed to be categorized into any of the four categories, and the majority of the events were categorized within 20 ms of the replay onset. Increasing the threshold, which requires more confidence in the classification, leaves more replays uncategorized and lengthens the time into a replay before it can be categorized.
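A summary like Fig. 6 can be tabulated from per-event decision times: histogram the times at which events first cross the threshold and report the fraction of events that never cross. This sketch assumes the decision times (in ms, or None for unclassified events) have already been computed for a single threshold; the function name is hypothetical.

```python
import numpy as np


def classification_latency_summary(decision_times_ms, edges_ms):
    """Summarize when replay events first pass the confidence threshold.

    decision_times_ms: one entry per replay event; None if it never passed.
    edges_ms: histogram bin edges for time into the event.
    Returns (fraction_per_bin, fraction_unclassified), both relative to all events.
    """
    n_events = len(decision_times_ms)
    times = np.array([t for t in decision_times_ms if t is not None], dtype=float)
    counts, _ = np.histogram(times, bins=edges_ms)
    return counts / n_events, (n_events - times.size) / n_events
```

Repeating this for several thresholds reproduces the trade-off described above: a higher threshold shifts mass toward later bins and raises the unclassified fraction.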

Fig. 6.

Relative frequency histogram of decision state as a function of time into a replay event, with 4 different thresholds on Pr(I). Events that can be classified are plotted in gray. Events whose decision state probabilities did not pass the threshold and cannot be classified are plotted in black.

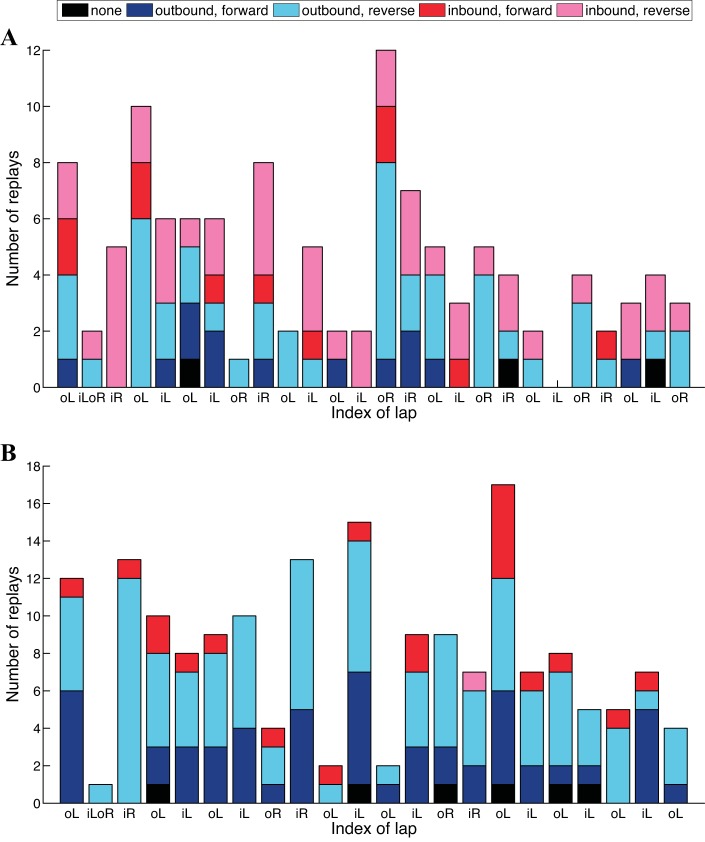

Figure 7 plots the decoding results for the decision state of all replay events, by lap, using a threshold of 0.9 on the classification confidence for two entire recording epochs on the same day, experiment day 8. On that day, the rat first ran its 3rd 15-min session on the novel track and then its 14th 15-min session on the familiar track, with a 30-min sleep session in between.

Fig. 7.

Decision state decoding results for 2 recording epochs on the same experiment day. Summary of decision state, by lap, with a threshold of 0.9. Replay event classified as an “outbound, forward” path, an “outbound, reverse” path, an “inbound, forward” path, or an “inbound, reverse” path is plotted in darker blue, lighter blue, darker red, and lighter red, respectively. Left and outbound lap, left and inbound lap, right and outbound lap, and right and inbound lap are denoted on the x-axis as “oL,” “iL,” “oR,” and “iR.” A: a novel track. B: a familiar track.

Figure 7A plots results when the rat explored a novel track, while Figure 7B shows results from a familiar track. Events that are classified into “outbound, forward,” “outbound, reverse,” “inbound, forward,” and “inbound, reverse” are plotted in darker blue, lighter blue, darker red, and lighter red, respectively. Events whose decision state probabilities did not pass the threshold by the end of a replay and cannot be classified are plotted in black. Laps are indexed on the x-axis. Left and outbound laps, left and inbound laps, right and outbound laps, and right and inbound laps are denoted on the x-axis as “oL,” “iL,” “oR,” and “iR,” respectively.

We can see from Fig. 7 that for both example epochs not only does the number of replay events on each lap vary but also the proportion of replay events that fall into each decision category. In Fig. 7A, which plots the decoding results when the rat was exploring a novel track, the majority of the replays are inbound, reverse events colored in lighter red. In contrast, in Fig. 7B, which plots the decoding results when the rat was running on a familiar track, the majority of the replays are either outbound, forward events colored in darker blue or outbound, reverse events colored in lighter blue, with only one inbound, reverse event colored in lighter red.

In this small data sample, there appears to be a difference in the frequency of outbound, forward vs. inbound, reverse replay events between novel and familiar conditions. While it is too early to draw any conclusions about the distribution of replay events in novel vs. familiar exposures to an environment, this example highlights the manner in which our decoding approach can be used to better understand the role of replay in learning and memory tasks.

DISCUSSION AND CONCLUSION

Previous work has shown that interruption of awake SWRs leads to a specific learning and performance deficit (Jadhav et al. 2012). However, outstanding questions about the role of specific replay sequences in learning and memory remain. Here we present an approach to efficiently extract information about the content of SWR replay events that can facilitate real-time experiments to manipulate neural activity in a content-specific manner. Such an experiment has the potential to improve our understanding of the causal role that replay has in learning and memory. We extend our previous work on marked point process filters to capture the tuning properties of multiunit activity without spike sorting (Deng et al. 2015). Our algorithms make use of three potential sources of information to classify replay events.

We illustrated the properties of the algorithm on four example replay events recorded as a rat performed a navigational memory task. We defined a decision state that captured both the movement direction (“outbound” or “inbound”) and the temporal order (“forward” or “reverse”) of the spatial experience being replayed. We demonstrated that our decoder provided a rapid classification of the replay content and a measure of classification uncertainty.

Our classification algorithm combines information from three sources: where the replay begins, the spatial sequence of the trajectory, and the movement direction with which the neural activity is consistent. The reliability of our algorithm depends both on the experimentalist's ability to define a decision state process about which these sources are informative and on the adequacy of the information in these neural signals. In the examples discussed here, the difference between inbound and outbound movement is evident in Fig. 3, and the fact that we can decode consistently suggests that these signals are reliable across replay events. Our algorithm allows us to express our confidence in our classification estimates based on the consistency of information across these sources, without the need for template matching for each category individually. For example, replay example 1 (Fig. 4A) showed a switch at ∼60 ms into the replay from being classified as a reverse, inbound trajectory (I = 4) with ∼80% confidence to a forwardly replayed outbound trajectory (I = 1) with full certainty. These observations of switching between directionality and temporal replay order within a replay event are similar to previous findings of mixed replay content that depends on environmental features such as choice points (Wu and Foster 2014).

A closer look at the sources of information that are combined to estimate the decision state for replay example 1 (Fig. 3, A and D) reveals that the switch can be attributed to a change in the spiking and waveform patterns. Throughout the replay event, the spatial evolution remains consistent with an outbound trajectory: both states I = 1 and I = 4 are consistent with an initial condition in the center arm and a state transition model that moves away from the center arm. However, I = 4 is defined by using an observation model that is consistent with actual inbound spatial experiences, while I = 1 is defined by using an observation model that is consistent with actual outbound spatial experiences. Therefore, our algorithm allows us both to estimate and classify replay trajectories rapidly and to study these replay events in a more feature-specific way.

We also applied our algorithm to decode the decision state in two longer recording epochs, each at least 15 min in length: one when the rat was exploring a novel track and the other when it was exploring a familiar track. Within each of these two example epochs (Fig. 7), the composition of replay classes showed a persistent pattern across laps. Between the two epochs, however, the distributions of replay classes were quite different. Specifically, inbound, reverse events were more common in our example novel epoch, whereas outbound events predominated in the familiar epoch.

There are a number of possible explanations for this difference in replay distribution. For example, Karlsson and Frank (2009) suggested that some replay events may represent a previously experienced environment, a situation that may occur more frequently in familiar environments. In future work, we plan to use these methods to explore replay of local vs. previously experienced environments.

There are a few additional caveats related to these methods and the example analyses above. We assumed that the joint-mark intensity model that we computed from the data was known with complete certainty. Incorporating the encoding model uncertainty, as described in the appendix, would be expected to decrease the confidence level for each decoded replay event. Additionally, our state model assumed that each replay event was one of four possible types. In fact, the discussion above highlights other possible origins for the observed replay sequences. Similarly, previous analyses have found evidence for mixed events (Wu and Foster 2014), which violate the model assumptions. The effect of model misspecification on these methods is an important direction for future research.

There are a number of directions in which this work may be extended. For example, one question is whether there is a multiunit receptive field model better suited for real-time estimation than the kernel models we used here. We are actively investigating the potential for Gaussian mixture models in describing the ensemble spiking activity. Another extension is to explore more features of the spike waveform as marks within our model, such as its width and slope.

Closed-loop experiments are increasingly common in neuroscience. They allow for real-time control over neural dynamics and animal behavior with the goal of probing the causal relationship between neural activity and behavior and the testing of hypotheses of the underlying neural systems that would be difficult or impossible to address in an open-loop setting (Grosenick et al. 2015; Siegle and Wilson 2014). Closing the loop demands novel data analysis methods that can guide informed experimental decisions—stimulation, inhibition, and modulation—using the neural activity on a physiologically relevant timescale.

This work provides an approach to decode continuous features and make discrete decisions based on the confidence gained from multiunit spiking activity with a short latency, on the order of milliseconds, which is suitable for closed-loop control. Components of our proposed state-space filtering algorithm can be formalized to test specific hypotheses, such as the causal role of specific activity patterns in generating subsequent activity patterns and behavior. We envision that this algorithm can help broaden and enhance our understanding of a wide range of neural systems.

GRANTS

This research was supported in part by a grant from the Simons Foundation (SCGB 320135 to L. M. Frank and SCGB 337036 to U. T. Eden), National Institutes of Health (NIH) Grant MH-105174 to L. M. Frank and U. T. Eden, National Science Foundation (NSF) GRFP Grant 1144247 to D. F. Liu, and NSF Grant IIS-0643995 and NIH Grant R01 NS-073118 to U. T. Eden.

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the author(s).

AUTHOR CONTRIBUTIONS

X.D., D.F.L., L.M.F., and U.T.E. conception and design of research; X.D. and U.T.E. analyzed data; X.D., D.F.L., L.M.F., and U.T.E. interpreted results of experiments; X.D. prepared figures; X.D. drafted manuscript; X.D., D.F.L., L.M.F., and U.T.E. edited and revised manuscript; X.D., D.F.L., M.P.K., L.M.F., and U.T.E. approved final version of manuscript; M.P.K. performed experiments.

ACKNOWLEDGMENTS

We thank Kenny Kay, Kensuke Arai, and Jason Chung for helpful discussions.

APPENDIX

Bootstrap Confidence Bounds for Estimated Joint-Mark Intensity

We can estimate the uncertainty associated with the joint-mark intensity model analytically or numerically. Here we describe a bootstrap algorithm for constructing confidence bounds on the joint-mark intensity. Over the encoding period, we resample the discrete time steps with replacement to generate a new set of positions, spikes, and marks. This set is the jth of B total bootstrap samples. For each bootstrap sample, we construct a separate joint-mark intensity estimate. The pointwise confidence bounds at any mark value can be computed from the quantiles of the collection of bootstrap estimates.
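The bootstrap procedure can be sketched as follows. To keep the example self-contained, it uses a deliberately simple joint-mark intensity estimator (Gaussian product kernel, a single scalar mark, fixed hypothetical bandwidths), assumed here for illustration only; any estimator could be substituted for the hypothetical `kernel_jmi`. Each replicate resamples encoding time steps with replacement, carrying along the position and the marks of any spikes in each resampled step, and `n_boot` plays the role of B.

```python
import numpy as np


def _gauss(d, bw):
    """One-dimensional Gaussian kernel."""
    return np.exp(-0.5 * (d / bw) ** 2) / (np.sqrt(2.0 * np.pi) * bw)


def kernel_jmi(x, m, occ_pos, spike_pos, spike_mark, dt, bw_x=5.0, bw_m=20.0):
    """Illustrative product-kernel estimate of lambda(x, m) for a scalar mark."""
    if spike_pos.size == 0:
        return 0.0
    rate = spike_pos.size / (occ_pos.size * dt)
    p_xm = np.mean(_gauss(x - spike_pos, bw_x) * _gauss(m - spike_mark, bw_m))
    return rate * p_xm / np.mean(_gauss(x - occ_pos, bw_x))


def bootstrap_jmi_bounds(x, m, occ_pos, marks_per_step, dt,
                         n_boot=200, alpha=0.05, seed=0):
    """Pointwise (1 - alpha) bootstrap bounds on lambda(x, m).

    occ_pos: (T,) position at each encoding time step.
    marks_per_step: length-T list; entry t holds the marks of spikes in step t.
    """
    rng = np.random.default_rng(seed)
    occ_pos = np.asarray(occ_pos, dtype=float)
    n_steps = occ_pos.size
    estimates = np.empty(n_boot)
    for j in range(n_boot):
        idx = rng.integers(0, n_steps, size=n_steps)  # resample time steps
        spike_pos = np.concatenate(
            [np.full(len(marks_per_step[t]), occ_pos[t]) for t in idx])
        spike_mark = np.concatenate(
            [np.asarray(marks_per_step[t], dtype=float) for t in idx])
        estimates[j] = kernel_jmi(x, m, occ_pos[idx], spike_pos, spike_mark, dt)
    lower, upper = np.quantile(estimates, [alpha / 2.0, 1.0 - alpha / 2.0])
    return lower, upper
```

Resampling whole time steps, rather than individual spikes, keeps each spike tied to the position and occupancy at which it was recorded.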

Incorporating model uncertainty into decoding algorithm.

In the analyses described in this report, we assumed that the joint-mark intensity was estimated with perfect confidence. To incorporate the uncertainty in the joint-mark encoding model, we can augment the likelihood in Eq. 9 by using the bootstrap joint-mark intensity estimates computed above. The likelihood now becomes

$$
p(\Delta N_k, \vec{m}_k \mid x_k) = \frac{1}{B} \sum_{j=1}^{B} p^{(j)}(\Delta N_k, \vec{m}_k \mid x_k)
$$

where $p^{(j)}(\Delta N_k, \vec{m}_k \mid x_k)$ represents the probability, as in Eq. 6, computed using the jth bootstrap joint-mark intensity estimate.

The filtering algorithm then proceeds as previously described using this augmented likelihood.
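Assuming the augmented likelihood is the average of the B per-bootstrap likelihoods, it is best computed in log space to avoid underflow when many spikes fall in a bin. The helper below is a hypothetical sketch that takes one row of log-likelihoods per bootstrap estimate on the position grid and returns the log of their mean, which can be passed directly to a grid-based filter.

```python
import numpy as np


def bootstrap_averaged_log_likelihood(log_liks_per_bootstrap):
    """Log of (1/B) * sum_j p_j(observations | x), computed stably.

    log_liks_per_bootstrap: (B, n_grid) log-likelihoods, one row per bootstrap
    joint-mark intensity estimate, evaluated on the position grid.
    """
    L = np.asarray(log_liks_per_bootstrap, dtype=float)
    top = L.max(axis=0)  # per-position shift (log-sum-exp trick)
    return top + np.log(np.mean(np.exp(L - top), axis=0))
```

Averaging likelihoods rather than log-likelihoods lets positions that only some bootstrap models support retain probability, which is why the decoded confidence would be expected to drop.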

REFERENCES

- Ambrose E, Ambrose BE, Foster DJ. Rate of reverse, but not forward hippocampal replay increases with a relative increase in reward (Abstract). Neuroscience Meeting Planner 2015: 631.04, 2015.

- Best PJ, White AM, Minai A. Spatial processing in the brain: the activity of hippocampal place cells. Annu Rev Neurosci 24: 459–486, 2001.

- Brown EN, Frank LM, Tang D, Quirk MC, Wilson MA. A statistical paradigm for neural spike train decoding applied to position prediction from ensemble firing patterns of rat hippocampal place cells. J Neurosci 18: 7411–7425, 1998.

- Buzsaki G. Two-stage model of memory trace formation: a role for “noisy” brain states. Neuroscience 31: 551–570, 1989.

- Buzsaki G. Large-scale recording of neuronal ensembles. Nat Neurosci 7: 446–451, 2004.

- Buzsaki G. Hippocampal sharp wave-ripple: a cognitive biomarker for episodic memory and planning. Hippocampus 25: 1073–1188, 2015.

- Carr MF, Jadhav SP, Frank LM. Hippocampal replay in the awake state: a potential substrate for memory consolidation and retrieval. Nat Neurosci 14: 147–153, 2011.

- Chen Z, Kloosterman F, Layton S, Wilson MA. Transductive neural decoding for unsorted neuronal spikes of rat hippocampus. Conf Proc IEEE Eng Med Biol Soc 2012: 1310, 2012.

- Cheng S, Frank LM. New experiences enhance coordinated neural activity in the hippocampus. Neuron 57: 303–313, 2008.

- Cox DR, Isham V. Point Processes. London: Chapman and Hall, 1980.

- Csicsvari J, Henze DA, Jamieson B, Harris KD, Sirota A, Bartho P, Wise KD, Buzsaki G. Massively parallel recording of unit and local field potentials with silicon-based electrodes. J Neurophysiol 90: 1314–1323, 2003.

- Csicsvari J, Hirase H, Czurko A, Mamiya A, Buzsaki G. Oscillatory coupling of hippocampal pyramidal cells and interneurons in the behaving rat. J Neurosci 19: 274–287, 1999.

- Csicsvari J, O'Neill J, Allen K, Senior J. Place-selective firing contributes to the reverse-order reactivation of CA1 pyramidal cells during sharp waves in open-field exploration. Eur J Neurosci 26: 704–716, 2007.

- Daley D, Vere-Jones D. An Introduction to the Theory of Point Processes. New York: Springer, 2003.

- Deng X, Liu DF, Kay K, Frank LM, Eden UT. Clusterless decoding of position from multiunit activity using a marked point process filter. Neural Comput 27: 1438–1460, 2015.

- Diba K, Buzsaki G. Forward and reverse hippocampal place-cell sequences during ripples. Nat Neurosci 10: 1241–1242, 2007.

- Eden UT, Frank LM, Barbieri R, Solo V, Brown EN. Dynamic analysis of neural encoding by point process adaptive filtering. Neural Comput 16: 971–998, 2004. [DOI] [PubMed] [Google Scholar]

- Ego-Stengel V, Wilson MA. Disruption of ripple-associated hippocampal activity during rest impairs spatial learning in the rat. Hippocampus 20: 1–10, 2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Foster DJ, Wilson MA. Reverse replay of behavioural sequences in hippocampal place cells during the awake state. Nature 440: 680–683, 2006. [DOI] [PubMed] [Google Scholar]

- Girardeau G, Benchenane K, Wiener SI, Buzsaki G, Zugaro MB. Selective suppression of hippocampal ripples impairs spatial memory. Nat Neurosci 12: 1222–1223, 2009. [DOI] [PubMed] [Google Scholar]

- Gray CM, Maldonado PE, Wilson M, McNaughton B. Tetrodes markedly improve the reliability and yield of multiple single-unit isolation from multi-unit recordings in cat striate cortex. J Neurosci Methods 63: 43–54, 1995. [DOI] [PubMed] [Google Scholar]

- Grosenick L, Marshel JH, Deisseroth K. Closed-loop and activity-guided optogenetic control. Neuron 86: 106–139, 2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Harris KD, Henze DA, Csicsvari J, Hirase H, Buzsaki G. Accuracy of tetrode spike separation as determined by simultaneous intracellular and extracellular measurements. J Neurophysiol 84: 401–414, 2000. [DOI] [PubMed] [Google Scholar]

- Hasselmo ME. A model of episodic memory: mental time travel along encoded trajectories using grid cells. Neurobiol Learn Mem 92: 559–573, 2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haykin S. Adaptive Filter Theory. Englewood Cliffs, NJ: Prentice-Hall, 1996. [Google Scholar]

- Huang Y, Brandon MP, Griffin AL, Hasselmo ME, Eden UT. Decoding movement trajectories through a T-maze using point process filters applied to place field data from rat hippocampal region CA1. Neural Comput 21: 3305–3334, 2009. [DOI] [PubMed] [Google Scholar]

- Jadhav SP, Kemere C, German PW, Frank LM. Awake hippocampal sharp-wave ripples support spatial memory. Science 336: 1454–1458, 2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ji D, Wilson MA. Coordinated memory replay in the visual cortex and hippocampus during sleep. Nat Neurosci 10: 100–107, 2007. [DOI] [PubMed] [Google Scholar]

- Karlsson MP, Frank LM. Network dynamics underlying the formation of sparse, informative representations in the hippocampus. J Neurosci 28: 14271–14281, 2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Karlsson MP, Frank LM. Awake replay of remote experiences in the hippocampus. Nat Neurosci 12: 913–918, 2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kemere C, Santhanam G, Yu BM, Afshar A, Ryu SI, Meng TH, Shenoy KV. Detecting neural-state transitions using hidden Markov models for motor cortical prostheses. J Neurophysiol 100: 2441–2452, 2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kloosterman F, Layton SP, Chen Z, Wilson MA. Bayesian decoding using unsorted spikes in the rat hippocampus. J Neurophysiol 111: 217–227, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Koyama S, Eden UT, Brown EN, Kass RE. Bayesian decoding of neural spike trains. Ann Inst Stat Math 62: 37–59, 2010. [Google Scholar]

- Lee AK, Wilson MA. Memory of sequential experience in the hippocampus during slow wave sleep. Neuron 36: 1183–1194, 2002. [DOI] [PubMed] [Google Scholar]

- McNaughton BL, Barnes CA, O'Keefe J. The contributions of position, direction, and velocity to single unit activity in the hippocampus of freely-moving rats. Exp Brain Res 52: 41–49, 1983. [DOI] [PubMed] [Google Scholar]

- O'Keefe J, Dostrovsky J. The hippocampus as a spatial map. Preliminary evidence from unit activity in the freely-moving rat. Brain Res 34: 171–175, 1971. [DOI] [PubMed] [Google Scholar]

- Paninski L, Ahmadian Y, Ferreira DG, Koyama S, Rad KR, Vidne M, Vogelstein J, Wu W. A new look at state-space models for neural data. J Comput Neurosci 29: 107–126, 2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pfeiffer BE, Foster DJ. Hippocampal place-cell sequences depict future paths to remembered goals. Nature 497: 74–79, 2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ramsay JO, Silverman BW. Functional Data Analysis (2nd ed). New York: Springer, 2010. [Google Scholar]

- Redish AD. Beyond the Cognitive Map: From Place Cells to Episodic Memory. Cambridge, MA: MIT Press, 1999. [Google Scholar]

- Redish AD, Touretzky DS. The role of the hippocampus in solving the Morris water maze. Neural Comput 10: 73–111, 1998. [DOI] [PubMed] [Google Scholar]

- Siegle JH, Wilson MA. Enhancement of encoding and retrieval functions through theta phase-specific manipulation of hippocampus. Elife 3: e03061, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Silva D, Feng T, Foster DJ. Trajectory events across hippocampal place cells require previous experience. Nat Neurosci 18: 1772–1779, 2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Singer AC, Frank LM. Rewarded outcomes enhance reactivation of experience in the hippocampus. Neuron 64: 910–921, 2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Smith AC, Brown EN. Estimating a state-space model from point process observations. Neural Comput 15: 965–991, 2003. [DOI] [PubMed] [Google Scholar]

- Srinivasan L, Eden UT, Willsky AS, Brown EN. A state-space analysis for reconstruction of goal-directed movement using neural signals. Neural Comput 18: 2465–2494, 2006. [DOI] [PubMed] [Google Scholar]

- Truccolo W, Eden UT, Fellows MR, Donoghue JP, Brown EN. A point process framework for relating neural spiking activity to spiking history, neural ensemble, and extrinsic covariate effects. J Neurophysiol 94: 1074–1089, 2005. [DOI] [PubMed] [Google Scholar]

- Tulving E, Markowitsch HJ. Episodic and declarative memory: role of the hippocampus. Hippocampus 8: 198–204, 1998. [DOI] [PubMed] [Google Scholar]

- Wilson MA, McNaughton BL. Dynamics of the hippocampal ensemble code for space. Science 261: 1055–1058, 1993. [DOI] [PubMed] [Google Scholar]

- Wu W, Kulkarni J, Hatsopoulos N, Paninski L. Neural decoding of goal-directed movements using a linear state-space model with hidden states. IEEE Trans Neural Syst Rehabil Eng 17: 370–378, 2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wu X, Foster DJ. Hippocampal replay captures the unique topological structure of a novel environment. J Neurosci 34: 6459–6469, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]