Abstract

The language production and perception systems rapidly learn novel phonotactic constraints. In production, for example, producing syllables in which /f/ is restricted to onset position (e.g. as /h/ is in English) causes one’s speech errors to mirror that restriction. We asked whether or not perceptual experience of a novel phonotactic distribution transfers to production. In three experiments, participants alternated hearing and producing strings of syllables. In the same condition, the production and perception trials followed identical phonotactics (e.g. /f/ is onset). In the opposite condition, they followed reverse constraints (e.g. /f/ is onset for production, but /f/ is coda for perception). The tendency for speech errors to follow the production constraint was diluted when the opposite pattern was present on perception trials, thus demonstrating transfer of learning from perception to production. Transfer only occurred for perceptual tasks that may involve internal production, including an error monitoring task, which we argue engages production via prediction.

Keywords: phonotactics, transfer of learning, speech errors, language perception, language production, implicit learning

Speakers learn to speak by listening. But how do acts of speech perception lead to change within the production system? Our ability to speak depends on the acquisition of general patterns such as the fact that, in English, adjectives precede nouns or that one says “an” before words beginning with vowels. This paper is concerned with perception-to-production transfer of a specific kind of generalization, phonotactic constraints. Phonotactics are constraints about the ordering of segments, typically within syllables. They are language specific and hence must be learned. For example, in English, /h/ must be a syllable onset (occur at the beginning of a syllable, e.g. /hum/) and /ng/ must be a syllable coda (occur at the end of a syllable, e.g. /song/). In Persian, though, /h/ can be a coda (e.g. /dah/ “ten”), and in Vietnamese, /ng/ can be an onset (e.g. /ngei/ “day”).

Knowledge of native-language phonotactics emerges in infancy (e.g. Jusczyk et al., 1993) and, throughout life, constrains language perception and production (e.g. Pitt, 1988). Production models assume that phonotactic constraints are consulted during the encoding of word forms, particularly during the construction of syllables (e.g. Levelt, Roelofs, & Meyer, 1999). Evidence for this assumption comes from speech errors. Just as Freud famously hypothesized that speech errors reveal unconscious wishes, modern psycholinguistics proposes that slips reflect the speaker’s implicit linguistic knowledge, including phonotactics. Specifically, slips exhibit the phonotactic regularity effect. “Nun” might slip to the phonotactically legal syllable “nung”, but not to the illegal “ngun” (Fromkin, 1971). Although the phonotactic regularity effect is not without exceptions, particularly when one examines the phonetic and articulatory details of slips (e.g. Goldstein et al., 2007), it is generally accepted that slip outcomes are strongly shaped by linguistic factors (see Frisch & Wright, 2002; Goldrick & Blumstein, 2006).

How does the production system acquire and modify its phonotactic knowledge in adulthood? Several studies have used a laboratory analogue to the phonotactic regularity effect to investigate the learning of phonotactic distributions (Dell et al., 2000). Participants recite strings of syllables that, unbeknownst to them, follow novel phonotactic constraints. For example, whenever a syllable contains the consonant /f/, it appears only in onset position. Although /f/ may occur in onset position in English, the absence of /f/ in a coda position is novel in that it represents a change in the phonotactics of everyday English. The learning of the novel constraint is revealed in the participant’s slips. When some other consonant is mispronounced as /f/, the slip occurs in onset, rather than coda, position 95–98% of the time. It is as if the errors “know” that /f/’s must be onsets. Another way to say this is that the slips are 95–98% “legal” (2–5% “illegal”) with respect to the experimental constraints, just as natural slips are legal with respect to language-wide phonotactics. Research using this speech-error paradigm has demonstrated that slips reflect the novel, experiment-specific constraints within minutes (e.g. Goldrick, 2004), sometimes in as few as 9 speaking trials (Taylor & Houghton, 2005). The strength of this influence depends on the frequency with which the constraints are experienced in production. That is, the strength of the tendency for slips of, say, /f/ to stick to, say, onset position, depends on the relative proportion of onset and coda /f/’s in the experiment (Goldrick & Larson, 2008).

We interpret the sensitivity of slips to the experimentally experienced phonotactic distributions as “learning” in the sense that it is change as a function of experience. Often, though, theorists distinguish between very temporary changes, referred to as “priming,” and longer-lasting effects that constitute true learning (e.g. Taylor & Houghton, 2005). For example, Bock and Griffin (2000) asked whether structural priming in language production is the result of learning or priming. Priming was assumed to be caused by the normal persistence of activation that occurs in the performance of a task, here language production. They estimated that the decay of activation during production was on the order of a few seconds and hence that structural priming, which persisted in their experiment for 10 minutes, was a learning effect. Some effects of altered phonotactics on slips have been demonstrated to persist for 7 days (Warker, 2013). Warker and Dell (2006) introduced a computational model of how changes in phonotactic distributions affect speech errors and attributed the effects to alterations in the weights of connections in a network, as opposed to persisting activation. Attributing the effects to weight changes means that the network retains the changes unless further learning degrades them. However, our manipulations do not include demonstrations of the persistence of phonotactic learning, and so when we speak of learning, we simply mean change as a result of experience, without a further commitment to whether this is best described as priming or learning.

Like the production system, the perceptual system can also learn phonotactic distributions from brief experience. Onishi, Chambers, and Fisher (2002) presented adults with syllables that followed artificial constraints, and found that participants then processed “legal” syllables more quickly than “illegal” ones, thus demonstrating perceptual phonotactic learning (see also Bernard, 2015; Chambers et al., 2010, 2011). But can a phonotactic generalization acquired from perceptual experience be transferred to the production system? We know that a single phonological form is easily transferred from perception to production through imitation. If we hear, but do not say, syllables in which /f/ is always an onset, will our speech errors obey that constraint?

Transfer of phonotactics from perception to production was sought in a study by Warker et al. (2009). Participants did the speech error task used by Dell et al. (2000) in pairs, taking turns producing or hearing their partner produce sequences such as “hes feng neg kem”. For half of the pairs, the produced and the perceived sequences followed the same constraint, such as /f/ is an onset and /s/ is a coda (which we abbreviate as the fes constraint). For the other pairs, the produced and perceived sequences followed opposite constraints. For example, one person’s sequences would follow the fes constraint, while the other person’s sequences would follow the opposite sef constraint. Slips of participants in the same condition should adhere to the constraint present in production trials whether or not there is transfer, because the perceived syllables follow the same constraint and could only reinforce it. It is the opposite condition that provides the critical test of transfer from perception to production: if heard syllables immediately affect production, oppositely distributed restricted consonants in perception should reduce the legality effect in production. That is, slips of participants in the opposite condition will not adhere as strongly to the production constraints, because the constraint experienced on perception trials will dilute the constraint present in production trials. If each heard syllable is as powerful as a spoken one, the legality of the restricted consonant slips in the opposite condition should be as low as that of unrestricted consonant (/n/, /g/, /k/, and /m/) slips. Slips of consonants that are not restricted to onset or coda are “legal” around 75% of the time – that is, they retain their syllable position around 75% of the time when they slip (Boomer & Laver, 1968).

Warker et al. (2009) found that in the same condition, as expected, the slips strongly adhered to the constraint present in the spoken sequences, with /f/ and /s/ slipping to their “legal” positions 94–100% of the time. However, there was no transfer at all in two experiments: restricted consonant slips of participants in the opposite condition looked very much like slips of participants in the same condition, almost always slipping to the positions that were “legal” in production. In a third experiment, there was robust transfer: in the opposite condition, the slips of experimentally restricted consonants were significantly less likely to adhere to the production constraints, compared to the same condition. The inconsistency in transfer across experiments was likely due to the task assigned during perception trials. For the two studies with no transfer, the perception task was to count the number of times the other person said “heng”. During perception trials in the experiment that found transfer, the participants engaged in monitoring for errors: they saw a printed representation of what the speaking partner was trying to say, and were told to circle any syllables in which they heard slips.

The results of these studies are clear, but their interpretation is equivocal. The fact that transfer was not found in the first two studies is perhaps not surprising: perception representations for the restricted consonants (/f/ and /s/) may not have been activated, because participants’ task (counting the number of times they heard the syllable “heng”) directed attention away from syllables containing the experimental constraints. This could have impaired learning, and hence transfer, of these constraints. The fact that there was transfer in just one study, however, makes it difficult to interpret the implications of the transfer effect. We illustrate this by describing three hypotheses of the relation between perception and production representations: the inseparable, the separate, and the separable hypotheses.

According to the inseparable view, the same representations are used in perception and production. Experience in one modality transfers immediately to the other because experience in one is experience for the other. Syntactic representations may be inseparable in this way (e.g. Bock et al., 2007). Moreover, if perceptual representations automatically and fully activate production representations and vice versa, representations in each modality are functionally inseparable. The motor theory of speech perception (Liberman & Mattingly, 1985) has this quality. The inseparable hypothesis holds that heard syllables that change phonotactic distributions should immediately affect production. For example, hearing /f/-onset syllables should make /f/’s slip to onset positions.

According to the separate hypothesis, production representations are used during production and perceptual representations are used during perception. Immediate transfer to production of a phonotactic constraint present in heard syllables is therefore impossible. For example, if someone hears but does not produce a surfeit of /f/-onsets, the altered distribution will not penetrate the production system and affect speech errors. Of course, if the listener actually repeats each heard item, the production system can then learn the new distribution by generalizing over these production experiences. But this is not true transfer: only the individual items are “transferred” from perception to explicit production. The ability to imitate – to repeat a single phonological form after hearing it – is an important ability (e.g. see Hickok, 2014; Nozari et al., 2010; Plaut & Kello, 1999), but its existence is not controversial.

According to the separable hypothesis, there are separate representations for perception and production, but some perceptual tasks activate the production system, while others do not. The separable hypothesis is perhaps the best supported of the three by evidence from brain imaging and neuropsychology. Perception and production activate distinct neural networks in normal individuals, but some areas of activation are shared (Heim et al., 2003; Hickok, Houde, & Rong, 2011; Wilson et al., 2004), and the extent to which they are shared may depend on the difficulty of the perceptual task (D’Ausilio et al., 2011; Fadiga et al., 2002; Hickok, Houde, & Rong, 2011). Moreover, although brain damage may selectively impair production or perception (e.g. Martin, Lesch, & Bartha, 1999; Romani, 1992; Shallice, Rumiati, & Zadini, 2000), these impairments tend to correlate with one another (Martin & Saffran, 2002; although see Nickels & Howard, 1995). The separable hypothesis predicts that when the production system is activated during perception, even though no speech is generated, transfer should occur.

The involvement of production in perception described by the separable hypothesis is largely uncontroversial for perceptual tasks that stimulate verbal rehearsal (e.g. Hickok & Poeppel, 2004), and hence experiencing altered phonotactic distributions while doing these tasks should transfer those distributions to production. A more controversial possibility is that production is engaged in perceptual tasks that involve prediction. For example, DeLong et al. (2005) demonstrated that, in a supportive context (e.g. “windy day,” “boy flew”), comprehenders anticipate the word kite, including its phonology. Several researchers have proposed and supported the claim that such anticipatory processes are carried out by the production system (e.g. Chang et al., 2006; Baus et al., 2014; Federmeier, 2007; Mani & Huettig, 2012; Pickering & Garrod, 2007). Dell and Kittredge (2013) and Dell and Chang (2014) refer to this claim as “prediction is production”. Specifically, Pickering and Garrod (2007) argued that, during language processing, the production system is used to construct an “emulator” or forward model, which can anticipate upcoming input at all linguistic levels. These anticipations then guide input analysis. Transfer of phonotactic learning from perception to production should then occur if the perceptual experience involves prediction, and prediction activates production representations. Importantly, this hypothesis does not suggest that the production system is activated by perceived speech; instead, the act of predicting upcoming speech during perception activates the production system.

Warker et al.’s (2009) finding of transfer when the perceptual task was error monitoring is intriguing, because it is consistent with the claim that “prediction is production”. Perhaps error monitoring involves prediction (I predict that I will hear “hes”, then “feng”, etc.), and if “prediction is production”, monitoring promotes transfer to production. As Warker et al. acknowledged, however, a plausible alternative is that the transfer was mediated through orthographic representations, as the listener saw both the other participant’s syllables and his/her own spoken syllables. In the opposite condition, transfer may have occurred as the orthographic forms of both fes and sef syllables were encoded. Moreover, the orthographic representations of syllables that participants heard may have activated output phonology through well-established orthography-to-phonology mappings (Damian & Bowers, 2009; Laszlo & Federmeier, 2007; Lupker, 1982; Rastle et al., 2011; Seidenberg & McClelland, 1989), causing perceived constraints to be represented in the production system. Given the distinct possibility of this alternative explanation, Warker et al. conservatively concluded in the title of their article: “Speech errors reflect the phonotactic constraints in recently spoken syllables, but not in recently heard syllables”.

We present three new experiments that show true perception-to-production transfer of phonotactics is possible, but is limited to perceptual tasks that may involve production. In the first experiment, we demonstrate that focused attention on restricted consonants during perception does not lead to any transfer. In the second, we demonstrate strong transfer from a perception task that engages internal articulation, showing that when perception stimulates production, transfer will occur. Finally, we show that error monitoring during perception trials creates transfer regardless of whether the perception task includes orthographic support. The results are consistent with the notion that error monitoring engages production, perhaps by encouraging prediction, and hence with the claim that “prediction is production”.

Experiment 1

In the Warker et al. (2009) experiment that found transfer, participants had to pay close attention to the phonemes of perceived syllables to monitor them for errors. Experiment 1 tested the hypothesis that heightened attention to phonemes drives transfer. This outcome is predicted by the inseparable hypothesis, and is also consistent with the separable hypothesis if strongly activated input phonology required for such a difficult task also activates output phonology (e.g. Hickok et al., 2011). We asked participants to monitor for the restricted phonemes /f/ and /s/ during the perception trials that were interleaved with their production trials. This task should cause heightened attention not only to the restricted phonemes, but also to their distribution. Phoneme monitoring reaction times increase when a phoneme that has been in one syllable position suddenly changes its position (Pitt & Samuel, 1990; Finney, Protopapas, & Eimas, 1996).

Participants

Fifty-six college students with normal or corrected-to-normal vision and hearing and no known linguistic or psychiatric disorders participated in the experiment. The participants were all native English speakers, as determined by their responses on a questionnaire at the end of the study that assessed familiarity with English and other languages. Each participant took part in only one of the three experiments, because the learning in these kinds of studies has been shown to persist over long periods of time (Warker, 2013). Nine additional participants were recruited for the experiment but were excluded from analysis because of evidence of non-native English (based upon their answers to the questionnaires), mispronouncing experimentally restricted consonants (for more details, see Stimuli and Procedure below), or technical difficulties resulting in incomplete data.

Stimuli and Procedure

Participants alternated between production and perception trials, completing 96 production trials and 192 perception trials to equate the number of produced and perceived syllables. Unlike in Warker et al. (2009), participants were run individually rather than in pairs, and hence the perceptual stimuli were prerecorded and delivered by computer.

During each production trial, the participant saw a sequence of four CVC syllables all with the vowel /ε/ (spelled ‘e’), with each of these 8 consonants: /h/ and /ng/, which were restricted to onset and coda positions respectively by English phonotactics; /m/, /n/, /k/, /g/, which could occur in either onset or coda position and hence were unrestricted; and /f/ and /s/, whose positions were restricted with either /f/ as onset and /s/ as coda, or the reverse. Aside from these restrictions, the consonants were distributed randomly in the sequence. An example sequence in the fes condition would be “kem neg feng hes”. The participant repeated this sequence twice, timing each syllable with the beat of a metronome set at 2.53 beats/sec. Participants were eliminated from the experiment if they consistently mispronounced restricted consonants, e.g. said /z/ when they saw /s/. Participants were told to prioritize producing all syllables, at the expense of accuracy if necessary.
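The sequence-construction rules above can be sketched as a small generator. This is a minimal sketch, not the authors' stimulus script: the function name `make_production_sequence`, the use of the letter "e" for /ε/, and the spelling "ng" for /ŋ/ follow the printed examples but are otherwise our assumptions. Each of the 8 consonants is used exactly once; /h/ and the experimentally restricted onset consonant fill two of the four onset slots, /ng/ and the restricted coda consonant fill two of the four coda slots, and the four unrestricted consonants fill the rest at random.

```python
import random

VOWEL = "e"  # orthographic stand-in for /ε/, as in the printed stimuli
UNRESTRICTED = ["m", "n", "k", "g"]  # may appear as onset or coda


def make_production_sequence(constraint, rng=random):
    """Return four CVC syllables that use each of the 8 consonants exactly once.

    constraint: "fes" (/f/ onset, /s/ coda) or "sef" (/s/ onset, /f/ coda).
    /h/ is always an onset and /ng/ always a coda, as in English.
    """
    if constraint == "fes":
        onsets, codas = ["h", "f"], ["ng", "s"]
    elif constraint == "sef":
        onsets, codas = ["h", "s"], ["ng", "f"]
    else:
        raise ValueError("constraint must be 'fes' or 'sef'")

    # The four unrestricted consonants fill the two remaining onset
    # slots and the two remaining coda slots at random.
    free = UNRESTRICTED[:]
    rng.shuffle(free)
    onsets = onsets + free[:2]
    codas = codas + free[2:]

    # Shuffling onsets and codas independently randomizes both which
    # onset pairs with which coda and the order of the syllables.
    rng.shuffle(onsets)
    rng.shuffle(codas)
    return [onset + VOWEL + coda for onset, coda in zip(onsets, codas)]
```

For example, `make_production_sequence("fes", random.Random(42))` returns a list of four syllables shaped like the example “kem neg feng hes”; on each trial the participant would recite such a sequence twice. The perception stimuli described next should not be generated this way, since their targets could be absent or appear twice.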

The syllables used for the perception trials were constructed according to the same rules, using the same vowel and consonants. On half of the perception trials, participants monitored for /f/, and on the other half for /s/; the order of these trials followed a random sequence. At the beginning of each perception trial, the participant saw either “F” or “S”, which designated the phoneme target for that trial. Then they heard four syllables (500 ms/syllable),¹ and the task was to press the spacebar if and when they heard the target phoneme, which could be absent or appear 1–2 times. This task should produce heightened attention to the positions of the restricted phonemes /f/ and /s/, thus enhancing learning of the altered phonotactic distribution on the perception trials.

For participants in the same condition, the production and perception trials both followed either the fes or the sef constraints. For participants in the opposite condition, production trials were fes and perception sef, or vice versa. Participants were randomly assigned to one of these 4 conditions (same - fes, same - sef, opposite - fes perception / sef production, opposite - sef perception / fes production).

After all of the production and perception trials, participants were given an unexpected recognition memory test for the syllables that they encountered during the perception trials. This test provided an additional assessment of the degree to which participants processed all of the syllables, particularly the syllables in the perception task. The memory test consisted of 49 spoken syllables: 22 syllables containing restricted consonants (11 following the sef constraint, and 11 following the fes constraint), and 27 syllables containing only unrestricted consonants. Test syllables were presented singly, and participants responded Y for “yes” if they judged that it occurred during a perception trial, and N for “no” otherwise. Participants also completed a questionnaire after the memory test, which asked them to report strategies used during the experiment.

Instructions for the perception trials, production trials, and memory test were displayed on the screen, and the experimenter provided explanations to supplement the written instructions as necessary. Participants practiced 6 perception and 6 production trials before the experiment, and received feedback on their performance from the experimenter. Participants were given 2 short breaks at regular intervals over the course of the experiment.

Analysis

Participants’ production trials were recorded and transcribed offline by one primary coder (blind to experimental condition²) and 2 secondary coders (blind to experimental condition and hypothesis). Slips during the production trials largely involved movements of consonants from one place to another, and these errors were classified as legal or illegal according to the original location of the errorful consonant in the target sequence. For example, given the target “hes meg fen keng”, and the spoken sequence “hes mek feng g-…keng”, the /ng/ in “feng” would be classified as a legal error (/ng/ kept its position as a coda), while the /k/ in “mek” would be classified as an illegal error (/k/ moved from onset position to coda position). Cutoff errors such as “g-…keng” were included in the analysis. Omissions, intrusions of consonants not present in the sequence (e.g. /t/), and unintelligible responses were excluded. As in Warker et al. (2009), two measures of coding reliability were calculated: (1) overall reliability, i.e. coders’ agreement on errors as well as correct repetitions, and (2) the percentage of errors for which the secondary coders agreed on the presence and nature of an error detected by the primary coder.
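The legality rule can be written down compactly, assuming a human coder has already identified which consonant surfaced in the wrong place and whether it surfaced as an onset or a coda. This is a hypothetical helper, not the coders' actual procedure: `classify_slip` and `consonant_positions` are our names, and the sketch relies on each consonant occurring only once per target sequence, so that its original position is unambiguous.

```python
def consonant_positions(target):
    """Map each consonant of a target sequence to 'onset' or 'coda'.

    Assumes the orthographic CVC format with the single vowel 'e',
    and that each consonant occurs once per target sequence.
    """
    positions = {}
    for syllable in target:
        onset, coda = syllable.split("e")
        positions[onset] = "onset"
        positions[coda] = "coda"
    return positions


def classify_slip(target, consonant, produced_position):
    """Classify a coded slip as 'legal', 'illegal', or None (excluded).

    consonant: the consonant that surfaced in the wrong place.
    produced_position: 'onset' or 'coda', where it surfaced in the error.
    """
    original = consonant_positions(target).get(consonant)
    if original is None:
        return None  # intrusion of a consonant not in the sequence, e.g. /t/
    return "legal" if original == produced_position else "illegal"
```

Applied to the worked example, `classify_slip(["hes", "meg", "fen", "keng"], "ng", "coda")` gives `"legal"` and the same target with `"k"` surfacing as a coda gives `"illegal"`, matching the classifications in the text.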

The hypotheses of interest dealt only with differences between experimentally restricted consonant (/f/, /s/) errors and unrestricted consonant (/m/, /n/, /k/, /g/) errors and hence only these slips were included in the regression analyses conducted for statistical inference. This eliminated the language-wide restricted consonant (/h/, /ng/) errors which, as expected, were almost never illegal (less than 1%) in this and in the other two experiments. To assess learning of the constraints, we compared the legality of restricted-consonant slips to the legality of unrestricted-consonant slips. Transfer of the constraints from perception to production was assessed by comparing the legality of restricted-consonant slips in the same condition to that in the opposite condition, with an increase in illegality in the opposite condition indicating transfer.
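The two descriptive comparisons (restricted versus unrestricted slips for learning; same versus opposite condition for transfer) start from cell percentages of illegal slips. The sketch below tabulates them from hypothetical coded records of the form `(condition, consonant, is_legal)`; the record format and function name are assumptions, and /h/ and /ng/ slips are skipped as described above. It is only a descriptive summary, not a substitute for the multilevel regression used for inference.

```python
from collections import defaultdict

EXPERIMENTAL_RESTRICTED = {"f", "s"}
UNRESTRICTED = {"m", "n", "k", "g"}


def illegal_percentages(slips):
    """Percent illegal slips per (condition, consonant type) cell.

    slips: iterable of (condition, consonant, is_legal) tuples, where
    condition is e.g. 'same' or 'opposite'. Slips of /h/ and /ng/ are
    skipped, mirroring their exclusion from the regression analyses.
    """
    counts = defaultdict(lambda: [0, 0])  # cell -> [n_illegal, n_total]
    for condition, consonant, is_legal in slips:
        if consonant in EXPERIMENTAL_RESTRICTED:
            consonant_type = "restricted"
        elif consonant in UNRESTRICTED:
            consonant_type = "unrestricted"
        else:
            continue
        cell = counts[(condition, consonant_type)]
        if not is_legal:
            cell[0] += 1
        cell[1] += 1
    return {cell: 100.0 * n_illegal / n_total
            for cell, (n_illegal, n_total) in counts.items()}
```

Under this tabulation, learning shows up as a lower restricted than unrestricted percentage within each condition, and transfer would show up as a higher restricted percentage in the opposite cell than in the same cell.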

To assess these differences in the illegality of consonant slips, we used multilevel logistic regression. Following Agresti (2012), we reasoned that model convergence obviated the need for empirical logit regression (Barr, 2008). The legality of participants’ speech errors (i.e. legal/illegal) was predicted from the participants’ experimental condition (i.e. same/opposite), the type of consonant on which the error was made (i.e. restricted/unrestricted), and the interaction of these variables. All independent variables were centered and contrast-coded for the regression analyses. Participant-specific variability was modeled with a random intercept, and participant-specific effects of consonant type (restricted/unrestricted) were modeled with a random slope (following Barr et al., 2013).³ The speech error data were analyzed four times: once with all participants, once with only the participants in the opposite condition, once with only the participants in the same condition, and once with only restricted consonant errors of all participants.
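“Centered and contrast-coded” can be made concrete with a short sketch of the fixed-effect predictors. The paper does not state the coding values or the software used, so the ±0.5 coding, the choice of which level is positive, and the helper names below are assumptions. The random intercept and random slope are not represented here; they would be handled by a mixed-model fitter (in R's lme4, for instance, something along the lines of `glmer(illegal ~ condition * consonant_type + (1 + consonant_type | participant), family = binomial)`).

```python
def center_contrast(values, positive_level):
    """Contrast-code a two-level factor as +0.5 / -0.5, then center it.

    Subtracting the mean makes the predictor average to zero even when
    the two levels are unequally frequent (e.g. if restricted slips are
    rarer than unrestricted ones).
    """
    raw = [0.5 if value == positive_level else -0.5 for value in values]
    mean = sum(raw) / len(raw)
    return [r - mean for r in raw]


def fixed_effect_rows(conditions, consonant_types):
    """Rows of (intercept, condition, consonant type, interaction)."""
    condition = center_contrast(conditions, "opposite")
    consonant_type = center_contrast(consonant_types, "restricted")
    # The interaction term is the product of the two centered predictors.
    return [(1.0, c, t, c * t) for c, t in zip(condition, consonant_type)]
```

With centered predictors, each main-effect coefficient is interpretable as an average effect across the levels of the other factor, which is the usual motivation for this coding in a model with an interaction.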

Performance on the memory task was assessed by analyzing participants’ acceptance of having heard the 22 syllables that included restricted consonants, comparing acceptance of syllables that followed the constraints they experienced in perception (fes or sef, depending on the participants’ condition) with that of syllables they did not experience in perception. All data from the memory tasks were analyzed using multilevel logistic regression as described above. Participants’ acceptance of syllables as having been heard (i.e. yes/no) was predicted from the participants’ constraint condition (i.e. same/opposite), the legality of each syllable according to the constraint that participant experienced in perception (i.e. legal/illegal), and their interaction. All independent variables were centered and contrast-coded. Participant-specific variability was modeled with a random intercept, and participant-specific effects of syllable legality were modeled with a random slope. The memory data were analyzed twice, once with all participants, and once with only the participants in the opposite condition. It is only the latter group that experiences some syllables in perception, but not in production, and hence checking these participants’ memory for perceived syllables provides important evidence that they internalized the perception constraints.

Participants’ responses on the phoneme monitoring task given during perception trials were considered correct if they detected the target immediately, or during the following syllable.
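The scoring window can be illustrated with a sketch that assumes spacebar press times are measured in milliseconds from the onset of the first syllable and that each syllable occupies exactly 500 ms. Both assumptions, the function name, and the treatment of the fourth syllable (whose “following syllable” is taken to be the 500 ms after the sequence ends) are ours, not details reported in the paper.

```python
SYLLABLE_MS = 500  # presentation rate of the perception syllables


def score_monitoring_trial(target_indices, press_times_ms):
    """Score one phoneme-monitoring trial.

    target_indices: 0-based positions (0-3) of syllables containing the target.
    press_times_ms: spacebar presses, in ms from the onset of the first syllable.
    A press counts as a hit if it falls during the target syllable or the
    syllable that follows it. Returns (hits, misses, false_alarms).
    """
    unused = sorted(press_times_ms)
    hits = 0
    for index in sorted(target_indices):
        window_start = index * SYLLABLE_MS
        window_end = (index + 2) * SYLLABLE_MS  # target + following syllable
        match = next((t for t in unused if window_start <= t < window_end), None)
        if match is not None:
            hits += 1
            unused.remove(match)  # each press can credit only one target
    misses = len(target_indices) - hits
    false_alarms = len(unused)
    return hits, misses, false_alarms
```

For a target in the second syllable, a press at 1200 ms is a hit, whereas a press at 1600 ms counts as both a miss and a false alarm; a target-absent trial is scored as correct here only when no key is pressed.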

Results and Discussion

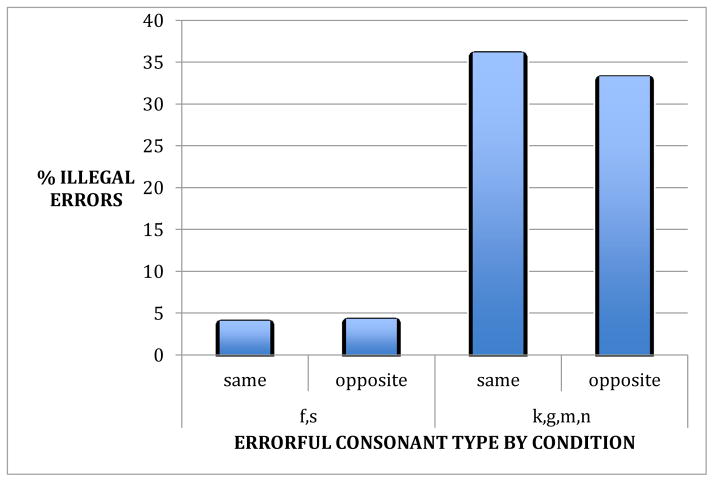

In the production task, participants made 5010 consonant errors, at a rate of 5.7% per consonant spoken (# consonant errors / total # consonants spoken). Coding reliability was high (overall agreement = 93.8%; 95% confidence interval = 93.0% – 94.7%), and reliability for errors was comparable to that in Warker et al. (2009) (agreement on errors = 78.6%; 95% confidence interval = 75.0% – 82.1%). The analysis of 2738 relevant consonant slips made during production trials revealed learning of the constraint. The proportion of illegal slips of the restricted consonants, /f/ and /s/, was much smaller than that for the unrestricted consonants (/m/, /n/, /k/, and /g/), in both the same constraint condition (4.1% f/s versus 36.2% k/g/m/n; coefficient = 2.83, SE = 0.39, p < .001; see Table 1) and opposite constraint condition (4.3% f/s versus 33.3% k/g/m/n; coefficient = 2.52, SE = 0.45, p < .001; see Table 2). Crucially, there was no transfer (Figure 1): the percent of illegal slips of restricted consonants was not larger in the opposite constraint condition, compared to the same constraint condition (coefficient = 0.37, SE = 0.60, p = .539; see Table 3). Another index of transfer was also negative: the difference between restricted and unrestricted consonant illegal slips was not significantly smaller in the opposite constraint condition than in the same constraint condition (coefficient = 0.31, SE = 0.59, p = .603; see Table 4).
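The paper does not state how the 95% confidence intervals for coder agreement were computed. One common choice is the normal-approximation (Wald) interval for a proportion, sketched below as an assumption; a Wilson interval would be a reasonable alternative, particularly for proportions near 0 or 1. Because the denominators (number of coded tokens, number of errors flagged by the primary coder) are not reported here, the sketch does not attempt to reproduce the published intervals.

```python
import math


def wald_interval(successes, total, z=1.96):
    """Normal-approximation (Wald) 95% interval for a proportion, clipped to [0, 1]."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    half_width = z * math.sqrt(p * (1 - p) / total)
    return max(0.0, p - half_width), min(1.0, p + half_width)
```

For instance, agreement on 50 of 100 tokens gives an interval of about 0.402 to 0.598; the interval narrows in proportion to the square root of the number of coded tokens.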

Table 1.

Results of logistic regression analysis predicting speech error illegality of same participants only, Experiment 1.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 1.85*** | 0.18 | < .001 |
| Consonant type (restricted/unrestricted) | 2.83*** | 0.39 | < .001 |
| Repetition (present/absent) | 0.06 | 0.31 | .844 |
| Consonant type*Repetition | −0.38 | 0.67 | .569 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.04 |

Interaction variables are denoted with a single asterisk (*). Significance with p < .001 of fixed effects is denoted with three asterisks (***).

Table 2.

Results of logistic regression analysis predicting speech error illegality of opposite participants only, Experiment 1.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 1.07*** | 0.13 | < .001 |
| Consonant type (restricted/unrestricted) | 2.52*** | 0.45 | < .001 |
| Repetition (present/absent) | −0.21 | 0.27 | .436 |
| Consonant type*Repetition | −0.75 | 1.04 | .471 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.11 |
| Slope (Consonant type by subject) | 1.38 |

Interaction variables are denoted with a single asterisk (*). Significance with p < .001 of fixed effects is denoted with three asterisks (***).

Figure 1.

Percentage of illegal slips of restricted and unrestricted consonants as a function of condition in Experiment 1.

Table 3.

Results of logistic regression analysis predicting restricted consonant speech error illegality for all participants, Experiment 1.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 3.45*** | 0.30 | < .001 |
| Condition (same/opposite) | 0.37 | 0.60 | .539 |
| Repetition (present/absent) | −0.38 | 0.60 | .531 |
| Condition*Repetition | 0.74 | 1.22 | .543 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.11 |

Interaction variables are denoted with a single asterisk (*). Significance with p < .001 of fixed effects is denoted with three asterisks (***).

Table 4.

Results of logistic regression analysis predicting speech error illegality of all participants, Experiment 1.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 1.13*** | 0.08 | < .001 |
| Condition (same/opposite) | 0.14 | 0.17 | .419 |
| Consonant type (restricted/unrestricted) | 2.89*** | 0.30 | < .001 |
| Repetition (present/absent) | −0.01 | 0.17 | .968 |
| Condition*Consonant type | 0.31 | 0.59 | .603 |
| Condition*Repetition | 0.38 | 0.34 | .265 |
| Consonant type*Repetition | −0.57 | 0.60 | .344 |
| Condition*Consonant type*Repetition | 0.54 | 1.21 | .657 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.11 |
| Slope (Consonant type by subject) | 0.48 |

Interaction variables are denoted with a single asterisk (*). Significance with p < .001 of fixed effects is denoted with three asterisks (***).

Despite the lack of transfer from perception to production, it was clear that the perceptual task engaged the participants. Participants demonstrated excellent phoneme monitoring accuracy in both the same (92.1%) and opposite (93.2%) constraint conditions. Moreover, on the strategy questionnaires, 23.2% of participants independently reported noticing the constraint. This suggests that even though participants only had to detect the phonemes /f/ and /s/, many of them were aware of the syllable positions of these phonemes, as in other phoneme monitoring studies (Finney et al., 1996; Pitt & Samuel, 1990). Participants’ good memory for heard syllables also suggests that they encoded the constraints present on perception trials: across both conditions, participants accepted more perception-legal syllables than perception-illegal syllables (68.5% perception-legal versus 27.1% perception-illegal; coefficient = 2.61, SE = 0.23, p < .001; see Table 5), and this pattern held within the opposite condition as well (69.8% perception-legal versus 48.1% perception-illegal; coefficient = 1.41, SE = 0.29, p < .001; see Table 6). Thus participants were generally able to make memory judgments specific to their perceptual experience, even when their production experience could have interfered with these judgments (as in the opposite constraint condition).
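The regression tables report coefficients on the log-odds (logit) scale. As a reading aid, the sketch below turns a coefficient and its standard error into a Wald z statistic, a two-sided p value, and an odds ratio. It assumes, without confirmation from the text, that the reported p values come from Wald z tests, as is common for logistic mixed models; the function names are illustrative. Because the tabled values are rounded, recomputed p values can differ slightly in the last digit, and the sign of a coefficient depends on how the factors were coded, which this section does not describe.

```python
import math


def wald_test(coefficient: float, se: float) -> tuple[float, float]:
    """Return the Wald z statistic and its two-sided p value.

    Assumption: p values come from a Wald z test, i.e. coefficient / SE
    referred to a standard normal distribution.
    """
    z = coefficient / se
    # Two-sided tail area of the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


def odds_ratio(coefficient: float) -> float:
    """A logit coefficient is a log odds ratio, so exponentiate it."""
    return math.exp(coefficient)


# Inputs copied from Table 5 (legality) and Table 8 (consonant type).
for label, b, se in [("Table 5, legality", 2.61, 0.23),
                     ("Table 8, consonant type", 0.95, 0.31)]:
    z, p = wald_test(b, se)
    print(f"{label}: z = {z:.2f}, p = {p:.3g}, odds ratio = {odds_ratio(b):.2f}")
```

For Table 8 this recovers p ≈ .002, matching the tabled value.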

Table 5.

Results of logistic regression analysis predicting acceptance of memory test items as heard, for all participants, Experiment 1.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | −0.18 | 0.16 | .245 |
| Condition (same/opposite) | −1.61*** | 0.31 | < .001 |
| Legality in perception (legal/illegal) | 2.61*** | 0.23 | < .001 |
| Condition*Legality | 2.36*** | 0.47 | < .001 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.93 |
| Slope (Legality by subject) | 1.36 |

Interaction variables are denoted with a single asterisk (*). Significance with p < .001 of fixed effects is denoted with three asterisks (***).

Table 6.

Results of logistic regression analysis predicting acceptance of memory test items as heard, for opposite participants only, Experiment 1.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 0.62*** | 0.18 | < .001 |
| Legality in perception (legal/illegal) | 1.41*** | 0.29 | < .001 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.71 |
| Slope (Legality by subject) | 1.25 |

Significance with p < .001 of fixed effects is denoted with three asterisks (***).

Experiment 1 allows us to conclude that transfer to production is not a necessary consequence of fully engaged perception of the target phonemes and their positions. This eliminates the inseparable hypothesis as an account of the relation between the representations that support phonotactic learning in perception and production. In Experiment 2, we test whether a perceptual task that unequivocally engages the production system is sufficient to produce transfer of learning. Inner speech represents the phoneme-level information that should be critical to phonotactic learning (Oppenheim & Dell, 2008, 2010), and thus inwardly producing perceived syllables should lead to transfer of perception constraints to production.

Experiment 2

Participants

Thirty-six college students with normal or corrected-to-normal vision and hearing and no known linguistic or psychiatric disorders participated in the experiment. The participants were all native English speakers, as determined by their responses to the same language questionnaire as in Experiment 1. Six additional participants were recruited for the experiment and excluded from analysis because of evidence of non-native English (based upon their answers to the questionnaires), mispronouncing experimentally restricted consonants, technical difficulties resulting in incomplete data, mouthing the syllables during perception trials, or failure to report using any auditory imagery on perception trials (for more details see Stimuli and Procedure below).

Stimuli and Procedure

As in Experiment 1, participants alternated between production and perception trials. Participants completed 96 production trials and 384 perception trials to equate the number of produced and perceived syllables. The stimuli for these trials were constructed in the same manner as for Experiment 1.

The procedure for the production trials and memory test was identical to that in Experiment 1. On perception trials, participants performed a task that required inner speech. On each trial, participants heard a pair of syllables drawn from the syllable set appropriate for their condition (e.g. “heng mef” might be a pair used in the sef condition). They were told to reverse the order of the syllables in their head (e.g. “mef heng”) and to rehearse the reversed syllables using inner speech. The presentation and rehearsal period together lasted 4450 milliseconds, allowing participants to rehearse the syllables approximately 3 times. Participants were monitored throughout the experiment to ensure that they did not silently mouth the syllables, as the goal was to activate only pre-articulatory phonology in the perception task (participants who did so on more than 3 perception trials were excluded from analysis). After the rehearsal period, participants were prompted to report one of the consonants of the syllables they rehearsed by typing it (e.g. reporting the first consonant of the second syllable they rehearsed, “h” for the example above).
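The expected response on a perception trial can be sketched as follows. This is an illustration only: the function names are hypothetical, and writing syllables as letter strings with a single vowel letter, plus the "first"/"last" probe labels, are simplifying assumptions, since the text gives just one example probe.

```python
VOWELS = set("aeiou")


def split_syllable(syllable: str) -> tuple[str, str, str]:
    """Split a CVC syllable such as "heng" into (onset, vowel, coda).

    Simplifying assumption: exactly one vowel letter, with everything before
    it treated as the onset and everything after it as the coda.
    """
    v = next(i for i, ch in enumerate(syllable) if ch in VOWELS)
    return syllable[:v], syllable[v], syllable[v + 1:]


def reverse_and_probe(pair: str, syllable_number: int, consonant: str) -> str:
    """Return the consonant a participant should type on one trial.

    pair: the heard syllables, e.g. "heng mef".
    syllable_number: 1 or 2, counted in the reversed (rehearsed) order.
    consonant: "first" for the onset, "last" for the coda.
    """
    rehearsed = pair.split()[::-1]  # "heng mef" is rehearsed as "mef heng"
    onset, _, coda = split_syllable(rehearsed[syllable_number - 1])
    return onset if consonant == "first" else coda


print(reverse_and_probe("heng mef", 2, "first"))  # → h
```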

In a questionnaire at the end of the experiment, participants were asked to report any strategies they had used during the perception trials, and whether they used their own inner speech to rehearse the words or simply “replayed” the speaker’s voice (participants who did not report using any auditory or articulatory imagery, e.g. only reported visualizing the words, were excluded from analysis).

Analysis

Participants’ responses to the perception trials were considered accurate only if the correct consonant was typed. Participants’ production trials were recorded and transcribed offline by one primary coder (blind to experimental condition⁴) and two secondary coders (blind to experimental condition and hypothesis). The same coding reliability measures were calculated as in Experiment 1. Statistical analyses of the speech error data and memory data were conducted in the same manner as for Experiment 1.

Results and Discussion

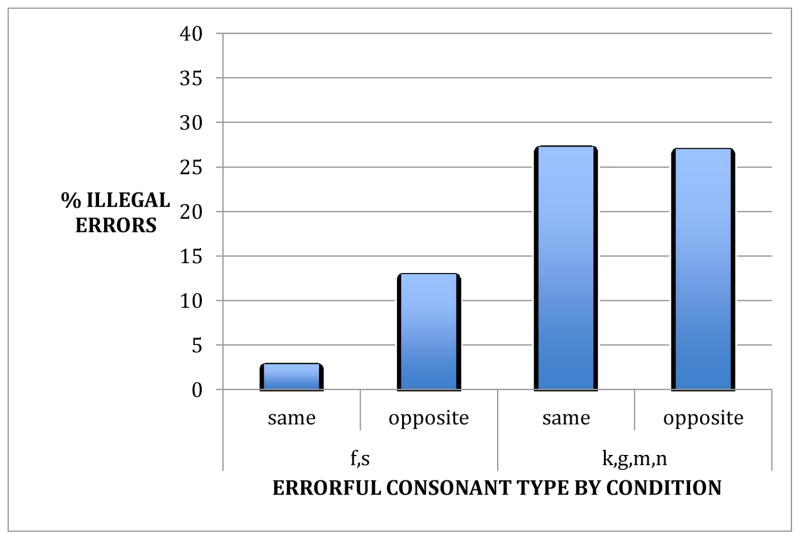

Participants made 3204 consonant errors, at a rate of 5.7% per consonant spoken. Coding reliability was high (overall agreement = 98.3%; 95% confidence interval = 97.8% – 98.9%), as was reliability for errors (agreement on errors = 91.8%; 95% confidence interval = 87.0% – 96.6%). Analysis of the legality of the 1829 relevant slips showed learning of the constraints in both the same condition (2.8% illegal slips for f/s versus 27.3% illegal slips for k/g/m/n; coefficient = 3.66, SE = 0.59, p < .001; see Table 7) and the opposite condition (13.0% illegal slips for f/s versus 27.0% illegal slips for k/g/m/n; coefficient = 0.95, SE = 0.31, p < .01; see Table 8), as well as robust transfer (Figure 2): in the opposite condition, illegal slips of the restricted consonants /f/ and /s/ were more than four times as frequent as in the same condition (13.0% opposite versus 2.8% same; coefficient = 2.43, SE = 0.83, p < .01; see Table 9). The opposite constraint experienced on perception trials clearly diluted, but did not completely remove, the tendency for restricted slips to stick to their syllable positions: the difference between illegal slips of restricted and unrestricted consonants was smaller in the opposite condition than in the same condition (coefficient = 2.24, SE = 0.86, p < .01; see Table 10), but it remained significant within the opposite condition (coefficient = 0.95, SE = 0.31, p < .01; see Table 8).

Participants’ good performance on the consonant report task and memory test, together with their questionnaire responses, further supports the conclusion that they internalized the constraint present on perception trials. All of the participants reported repeating the words to themselves in their head, and the majority (75.0%) reported reversing the syllables as requested. Participants were quite accurate at reporting the consonant from their inner speech (89.7% in the same and 89.3% in the opposite condition).
In the memory task, participants accepted more perception-legal syllables than perception-illegal syllables, both across conditions (74.0% perception-legal versus 25.0% perception-illegal; coefficient = 2.61, standard error = 0.20, p < .001; see Table 11) and within the opposite condition (71.7% perception-legal versus 43.4% perception-illegal; coefficient = 1.30, standard error = 0.22, p < .001; see Table 12).
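The illegal-slip percentages above can be tallied as in the sketch below. The restricted (/f/, /s/) and unrestricted (/k/, /g/, /m/, /n/) sets come from the text, but the coding rule is an assumption: this section does not define an illegal slip of an unrestricted consonant, so the sketch treats any slip that moves a consonant to a different syllable position as illegal, which for /f/ and /s/ amounts to violating the production constraint. The class and function names and the toy slips are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass

RESTRICTED = {"f", "s"}              # experimentally restricted consonants
UNRESTRICTED = {"k", "g", "m", "n"}  # consonants free to occur in either position


@dataclass
class Slip:
    consonant: str          # consonant that slipped, e.g. "f"
    intended_position: str  # "onset" or "coda" in the target syllable
    produced_position: str  # position in which it surfaced


def is_illegal(slip: Slip) -> bool:
    # Assumed coding rule: a slip is "illegal" if the consonant surfaces in a
    # different syllable position from its target. Because /f/ and /s/ only
    # appear in their legal position in the targets, for them this means
    # violating the production constraint.
    return slip.produced_position != slip.intended_position


def percent_illegal(slips: list[Slip]) -> dict[str, float]:
    """Percentage of illegal slips for restricted and unrestricted consonants."""
    totals, illegal = Counter(), Counter()
    for slip in slips:
        if slip.consonant in RESTRICTED:
            kind = "restricted"
        elif slip.consonant in UNRESTRICTED:
            kind = "unrestricted"
        else:
            continue  # other consonants (e.g. /h/, /ng/) are not tallied here
        totals[kind] += 1
        illegal[kind] += int(is_illegal(slip))
    return {kind: 100 * illegal[kind] / totals[kind] for kind in totals}


# Toy slips, not data from the experiments.
toy = [Slip("f", "onset", "coda"), Slip("f", "onset", "onset"),
       Slip("s", "coda", "coda"), Slip("f", "onset", "onset"),
       Slip("k", "coda", "onset"), Slip("m", "onset", "onset")]
print(percent_illegal(toy))  # → {'restricted': 25.0, 'unrestricted': 50.0}
```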

Table 7.

Results of logistic regression analysis predicting speech error illegality of same participants only, Experiment 2.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 2.07*** | 0.23 | < .001 |
| Consonant type (restricted/unrestricted) | 3.66*** | 0.59 | < .001 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.41 |

Significance with p < .001 of fixed effects is denoted with three asterisks (***).

Table 8.

Results of logistic regression analysis predicting speech error illegality of opposite participants only, Experiment 2.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 1.21*** | 0.12 | < .001 |
| Consonant type (restricted/unrestricted) | 0.95** | 0.31 | .002 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.07 |
| Slope (Consonant type by subject) | 0.31 |

Significance with p < .001 of fixed effects is denoted with three asterisks (***). Significance with p < .01 is denoted with two asterisks (**).

Figure 2.

Percentage of illegal slips of restricted and unrestricted consonants as a function of conditions in Experiment 2

Table 9.

Results of logistic regression analysis predicting restricted consonant speech error illegality for all participants, Experiment 2.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 3.80*** | 0.50 | < .001 |
| Condition (same/opposite) | 2.43** | 0.83 | .003 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.91 |

Significance with p < .001 of fixed effects is denoted with three asterisks (***). Significance with p < .01 is denoted with two asterisks (**).

Table 10.

Results of logistic regression analysis predicting speech error illegality for all participants, Experiment 2.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 1.72*** | 0.14 | < .001 |
| Condition (same/opposite) | 0.68** | 0.26 | .008 |
| Consonant type (restricted/unrestricted) | 2.59*** | 0.49 | < .001 |
| Condition*Consonant type | 2.24** | 0.86 | .009 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.18 |
| Slope (Consonant type by subject) | 1.29 |

Interaction variables are denoted with a single asterisk (*). Significance with p < .001 of fixed effects is denoted with three asterisks (***). Significance with p < .01 is denoted with two asterisks (**).

Table 11.

Results of logistic regression analysis predicting acceptance of memory test items as heard, for all participants, Experiment 2.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | −0.20 | 0.13 | .109 |
| Condition (same/opposite) | −1.11*** | 0.25 | < .001 |
| Legality in perception (legal/illegal) | 2.61*** | 0.20 | < .001 |
| Condition*Legality | 2.68*** | 0.40 | < .001 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.22 |

Interaction variables are denoted with a single asterisk (*). Significance with p < .001 of fixed effects is denoted with three asterisks (***).

Table 12.

Results of logistic regression analysis predicting acceptance of memory test items as heard, for opposite participants only, Experiment 2.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 0.36* | 0.18 | .050 |
| Legality in perception (legal/illegal) | 1.30*** | 0.22 | < .001 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.38 |

Significance with p < .001 of fixed effects is denoted with three asterisks (***). Significance with p < .05 is denoted with one asterisk (*).

This result demonstrates that robust transfer can be observed when the perceptual task has a production component, which suggests that other perceptual tasks that engage production should also produce transfer of learning. In the third experiment, we return to the possibility that error monitoring is such a task. When the intended sequence is known, error monitoring may entail active prediction of each syllable in advance of hearing it, and if prediction is a production process, then transfer should occur. Recall that Warker et al. (2009) found transfer with an error monitoring task; however, that result could have been mediated by listeners having access to the printed syllables that the speaker was supposed to say. Consequently, in the third experiment, we manipulated whether or not the monitoring task was carried out with orthographic support.

Experiment 3

Participants

Forty college students with normal or corrected-to-normal vision and hearing and no known linguistic or psychiatric disorders participated in the experiment. The participants were all native English speakers, as determined by their responses to the same language questionnaire as in Experiments 1–2. Seven additional participants were recruited for the experiment and excluded from analysis because of evidence of non-native English (based upon their answers to the questionnaires), mispronouncing experimentally restricted consonants (see Stimuli and Procedure of Experiment 1 for details), or technical difficulties resulting in incomplete data.

Stimuli and Procedure

As in Experiments 1 and 2, participants alternated between production and perception trials. Participants completed 96 production trials and 96 perception trials to equate the number of produced and perceived syllables. The stimuli for production trials were constructed in the same manner as for Experiments 1 and 2.

The procedure for the production trials and memory test was identical to that in Experiments 1–2. Each perception trial started with the numbers 1 2 3 4 appearing in a row on the screen. Participants then heard a four-syllable reference sequence (1 sec/syllable), in which each syllable corresponded to a number (e.g. 1st syllable = “1”, 2nd syllable = “2”, etc.). For participants in the orthography condition, a written version of each heard syllable appeared on the screen as it was spoken; participants in the no orthography condition did not see written syllables. After a pause of 750 milliseconds, all participants were cued to perform the error monitoring task. They saw only the numbers and listened to a second version of the sequence, again with each syllable corresponding to a number on the screen (e.g. 1st syllable = “1”), and checked it for any deviations from the reference sequence. Second versions contained 0, 1, or 2 consonant substitutions, which never involved the restricted consonants /f/ and /s/; deviant trials (those containing at least one substitution) made up 50% of all trials and were randomly distributed throughout the experiment. Other than the presence of these consonant substitutions, the stimuli for the perception trials were constructed in the same manner as for Experiments 1 and 2. Participants typed the numbers (1, 2, 3, 4) corresponding to any syllable(s) that contained errors, or 0 if there were no errors.
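A sketch of how the second, monitored version of a reference sequence could be derived. The only constraint taken from the text is that substitutions never involved /f/ or /s/. Everything else is a hypothetical detail: syllables written as 3-letter CVC strings, the `SWAPPABLE` set, at most one change per syllable, and a randomly chosen replacement consonant. The function name is also illustrative.

```python
import random

# Consonants eligible to be swapped in or out. The text says substitutions
# never involved /f/ or /s/; using exactly this set is our assumption.
SWAPPABLE = ["k", "g", "m", "n"]


def make_test_sequence(reference: list[str], n_substitutions: int,
                       rng: random.Random) -> tuple[list[str], list[int]]:
    """Build the monitored (second) version of a four-syllable reference.

    reference: CVC syllables written as 3-letter strings, e.g. "fek".
    Returns the test sequence and the 1-based numbers of the changed
    syllables, or [0] when nothing changed (the response convention).
    """
    # Every (syllable, letter) slot whose consonant may be substituted.
    slots = [(i, j) for i, syl in enumerate(reference)
             for j in (0, 2) if syl[j] in SWAPPABLE]
    chosen: list[tuple[int, int]] = []
    for i, j in rng.sample(slots, len(slots)):  # visit slots in random order
        if len(chosen) == n_substitutions:
            break
        if all(i != k for k, _ in chosen):  # at most one change per syllable
            chosen.append((i, j))
    if len(chosen) < n_substitutions:
        raise ValueError("not enough substitutable syllables")
    test = list(reference)
    for i, j in chosen:
        old = test[i][j]
        new = rng.choice([c for c in SWAPPABLE if c != old])
        test[i] = test[i][:j] + new + test[i][j + 1:]
    changed = sorted(i + 1 for i, _ in chosen)
    return test, changed or [0]


rng = random.Random(2024)
print(make_test_sequence(["fek", "meg", "kes", "fen"], 2, rng))
```

The returned syllable numbers double as the correct typed response for that trial.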

Participants were not given any strategy questionnaire after this experiment.

Analysis

Participants’ responses to the perception trials were considered correct if participants correctly detected the absence of errors, or the presence of any errors, regardless of whether the participant typed in the true errorful syllable(s). Participants’ production trials were recorded and transcribed offline by one primary coder (blind to experimental condition) and 3 secondary coders (blind to experimental condition and hypothesis). The same coding reliability measures were calculated as in Experiments 1 and 2. Statistical analyses of the speech error data and memory data were conducted in the same manner as for Experiments 1 and 2.
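The scoring rule for the monitoring task can be stated compactly in code. This is a minimal sketch under one assumption: the typed entry is a string of digits such as "0", "3", or "24", since the text does not specify the entry format. The function name is hypothetical.

```python
def score_monitoring_response(typed: str, deviant_syllables: set[int]) -> bool:
    """Score one error-monitoring trial under the rule described in the text.

    typed: the participant's entry, e.g. "0", "3" or "24" (entry format assumed).
    deviant_syllables: 1-based numbers of the syllables that actually changed.
    """
    reported = {int(ch) for ch in typed if ch in "01234"}
    reported_error = bool(reported - {0})  # any syllable number other than 0
    trial_had_error = bool(deviant_syllables)
    # Correct if the error/no-error judgment matches, even when the
    # participant names the wrong syllable(s).
    return reported_error == trial_had_error


print(score_monitoring_response("4", {2}))  # → True (wrong syllable, but error detected)
print(score_monitoring_response("0", {2}))  # → False (missed error)
```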

Results and Discussion

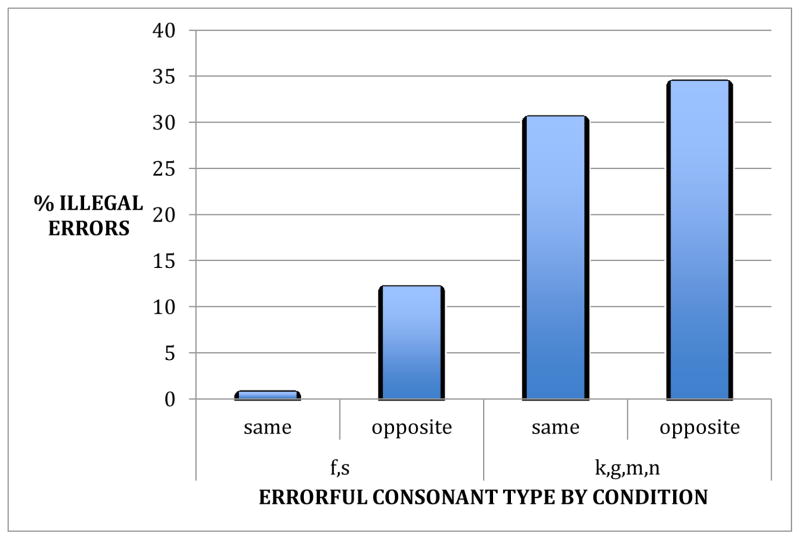

Participants made 2994 consonant errors, at a rate of 4.8% per consonant spoken. Coding reliability was high (overall agreement = 97%; 95% confidence interval = 97.4% – 98.5%), and reliability for errors was good (agreement on errors = 70.6%; 95% confidence interval = 61.3% – 79.9%). Analyses of the 1830 relevant slips showed learning of the constraints in both the same condition (0.8% illegal slips for f/s versus 30.6% illegal slips for k/g/m/n; coefficient = 3.72, SE = 0.72, p < .001; see Table 13) and the opposite condition (12.2% illegal slips for f/s versus 34.5% illegal slips for k/g/m/n; coefficient = 1.37, SE = 0.23, p < .001; see Table 14). There was also clear transfer from perception to production (Figure 3): there were far fewer illegal slips of restricted consonants in the same than in the opposite condition (0.8% same versus 12.2% opposite; coefficient = 2.69, SE = 1.06, p < .05; see Table 15), and the difference between restricted and unrestricted consonant slips was larger in the same than in the opposite condition (coefficient = 2.37, SE = 0.76, p < .01; see Table 16).
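The reliability figures pair a percent agreement with a 95% confidence interval. The text does not say how these intervals were computed; the sketch below uses a normal-approximation (Wald) interval for a proportion as one common choice, and a Wilson or bootstrap interval would give somewhat different bounds. The function name and the counts in the example are hypothetical. Under any such construction the point estimate lies inside its interval.

```python
import math


def agreement_with_ci(agreements: int, total: int, z: float = 1.96):
    """Percent agreement with a normal-approximation (Wald) 95% interval.

    Assumption: the text does not state its interval method; this is one
    common choice, clipped to the [0, 100] range.
    """
    p = agreements / total
    half_width = z * math.sqrt(p * (1 - p) / total)
    lower = max(0.0, p - half_width)
    upper = min(1.0, p + half_width)
    return 100 * p, 100 * lower, 100 * upper


# Hypothetical counts for illustration only.
print(agreement_with_ci(240, 340))
```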

Table 13.

Results of logistic regression analysis predicting speech error illegality of same participants only, Experiment 3.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 1.54*** | 0.17 | < .001 |
| Consonant type (restricted/unrestricted) | 3.72*** | 0.72 | < .001 |
| Orthography (orthography/no orthography) | 0.003 | 0.33 | .993 |
| Consonant type*Orthography | 0.12 | 1.44 | .932 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.04 |

Interaction variables are denoted with a single asterisk (*). Significance with p < .001 of fixed effects is denoted with three asterisks (***).

Table 14.

Results of logistic regression analysis predicting speech error illegality of opposite participants only, Experiment 3.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 0.94*** | 0.12 | < .001 |
| Consonant type (restricted/unrestricted) | 1.37*** | 0.23 | < .001 |
| Orthography (orthography/no orthography) | −0.14 | 0.24 | .552 |
| Consonant type*Orthography | −0.04 | 0.46 | .936 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.13 |

Interaction variables are denoted with a single asterisk (*). Significance with p < .001 of fixed effects is denoted with three asterisks (***).

Figure 3.

Percentage of illegal slips of restricted and unrestricted consonants as a function of conditions in Experiment 3

Table 15.

Results of logistic regression analysis predicting restricted consonant speech error illegality for all participants, Experiment 3.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 3.68*** | 0.50 | < .001 |
| Condition (same/opposite) | 2.69* | 1.06 | .011 |
| Orthography (orthography/no orthography) | 0.01 | 0.99 | .995 |
| Condition*Orthography | 0.22 | 2.10 | .917 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 1.60 |

Interaction variables are denoted with a single asterisk (*). Significance with p < .001 of fixed effects is denoted with three asterisks (***). Significance with p < .05 is denoted with one asterisk (*).

Table 16.

Results of logistic regression analysis predicting speech error illegality for all participants, Experiment 3.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 1.24*** | 0.10 | < .001 |
| Condition (same/opposite) | 0.67** | 0.21 | .002 |
| Consonant type (restricted/unrestricted) | 2.48*** | 0.36 | < .001 |
| Orthography (orthography/no orthography) | −0.08 | 0.21 | .712 |
| Condition*Consonant type | 2.37** | 0.76 | .002 |
| Condition*Orthography | 0.13 | 0.42 | .764 |
| Consonant type*Orthography | 0.04 | 0.73 | .957 |
| Condition*Consonant type*Orthography | 0.15 | 1.52 | .922 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.09 |

Interaction variables are denoted with a single asterisk (*). Significance with p < .001 of fixed effects is denoted with three asterisks (***). Significance with p < .01 is denoted with two asterisks (**).

Just as in Experiments 1 and 2, participants’ internalization of the constraint present on perception trials was supported by their good performance on the perception task and memory test. Participants were good at monitoring for errors across conditions (73.7% detection accuracy in the same and 71.5% in the opposite condition), and in the memory task, participants accepted more perception-legal syllables than perception-illegal syllables, both across conditions (67.0% perception-legal versus 23.6% perception-illegal; coefficient = 2.86, standard error = 0.26, p < .001; see Table 17) and within the opposite condition (60.9% perception-legal versus 44.5% perception-illegal; coefficient = 0.72, standard error = 0.21, p < .001; see Table 18).

Table 17.

Results of logistic regression analysis predicting acceptance of memory test items as heard, for all participants, Experiment 3.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | −0.61*** | 0.18 | < .001 |
| Condition (same/opposite) | −1.49*** | 0.36 | < .001 |
| Legality in perception (legal/illegal) | 2.86*** | 0.26 | < .001 |
| Condition*Legality | 4.29*** | 0.52 | < .001 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.65 |

Interaction variables are denoted with a single asterisk (*). Significance with p < .001 of fixed effects is denoted with three asterisks (***).

Table 18.

Results of logistic regression analysis predicting acceptance of memory test items as heard, for opposite participants only, Experiment 3.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 0.13 | 0.21 | .544 |
| Legality in perception (legal/illegal) | 0.72*** | 0.21 | < .001 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.69 |

Significance with p < .001 of fixed effects is denoted with three asterisks (***).

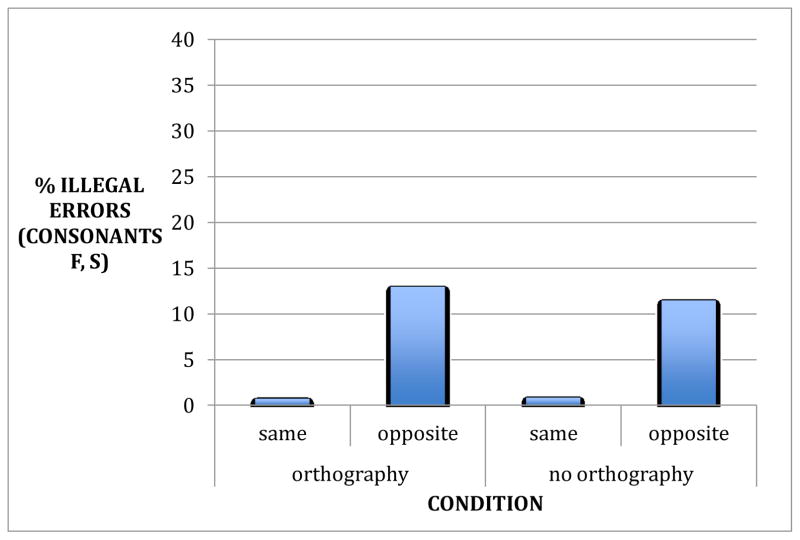

Although the presence of orthography slightly improved participants’ accuracy in monitoring for errors (76.3% in the orthography condition, compared to 68.9% in the no orthography condition), orthographic support did not reliably modulate transfer (Condition*Consonant type*Orthography: coefficient = 0.15, SE = 1.52, p = .922; see Table 16). This can be seen in Figure 4, which shows the illegality percentages for restricted consonants broken down by same/opposite and orthography/no orthography. Most importantly, a separate analysis of the no orthography condition revealed significant transfer: the difference between restricted and unrestricted consonant error legality was larger for participants in the same condition than for participants in the opposite condition (coefficient = 2.30, standard error = 1.08, p < .05; see Table 19).

Figure 4.

Percentage of illegal slips for the restricted consonants only, as a function of conditions in Experiment 3

Table 19.

Results of logistic regression analysis predicting speech error illegality for no orthography participants only, Experiment 3.

| Fixed effect | Coefficient | Standard error | p |
|---|---|---|---|
| Intercept | 1.20*** | 0.13 | < .001 |
| Condition (same/opposite) | 0.54* | 0.26 | .035 |
| Consonant type (restricted/unrestricted) | 2.47*** | 0.53 | < .001 |
| Condition*Consonant type | 2.30* | 1.08 | .033 |

| Random effect | Variance |
|---|---|
| Intercept (subject) | 0.03 |

Interaction variables are denoted with a single asterisk (*). Significance with p < .001 of fixed effects is denoted with three asterisks (***). Significance with p < .05 is denoted with one asterisk (*).

Discussion and Conclusions

Our experiments provide the first clear evidence of immediate transfer of a phonotactic generalization from perception to production. Crucially, this kind of transfer is possible without orthographic support (Experiments 2 and 3). We propose that such transfer is mediated by engaging the production system during perception. Engaging production is not a necessary consequence of every attention-demanding perceptual task, though: a task that focuses attention on the restricted consonants leads to no transfer (Experiment 1). In short, our results add to a significant body of evidence from neuroimaging and neuropsychology supporting what we called the separable hypothesis, which asserts that production and perception are separate, but capable of mutual influence (e.g. Hickok & Poeppel, 2004). Most importantly, we hypothesize that the mutual influence between perception and production arises in tasks for which perception induces production-like processes. Each syllable that is perceived in such a task then naturally alters the production system’s representation of the distributions of speech sounds, which in turn affects speech errors. So, we are not arguing that a phonotactic generalization is learned inside the perception system and then handed to the production system. Rather, the production system is independently learning the generalization from production-like events arising during perception.

Moreover, our results delineate which production-like events might make such transfer possible. Engaging the production system via internal articulation is sufficient for producing transfer: we found transfer when participants produced inner speech during perception (Experiment 2). This transfer mechanism may also have contributed to the transfer effect in Experiment 3. If participants monitor for errors by predicting upcoming syllables because the intended sequence is known in advance, and if “prediction is production” (Dell & Kittredge, 2013; Dell & Chang, 2014), the production system is engaged, allowing perceived syllables to immediately affect the phonotactic distributions that the production system experiences. Notice that it is error monitoring, not monitoring for a target (e.g. a particular phoneme, as in Experiment 1), that creates the transfer, suggesting that actively examining the heard syllables for deviations from an expected set of syllables drives the transfer. When one monitors for, say, /f/, one is not expecting to hear any particular syllable, but is matching input features to a representation of the target phoneme. We believe that the transfer in Experiment 3 arises from production via prediction, rather than from explicit generation of inner speech, because participants were not instructed to rehearse the syllables of the reference sequences. We acknowledge, though, that we cannot definitively rule out that they engaged in inner speech.

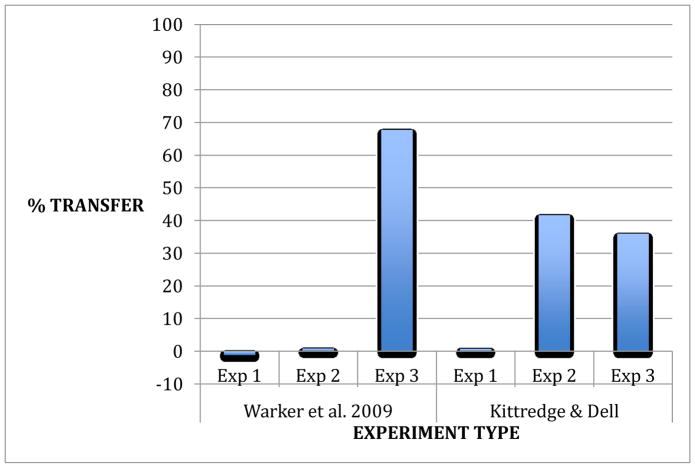

Figure 5 quantifies the amount of transfer in these experiments and in the studies of Warker et al. (2009). When transfer is present, it is substantial. At the same time, transfer is incomplete: Figure 5 suggests that internally producing a syllable is not as powerful a force in shaping the production system’s phonotactics as externally producing one. Crucially, transfer is incomplete even when participants produce at least as many syllables internally as externally (e.g. Experiment 2). In theory, this experience should equate the production system’s exposure to the constraints present in the perception and production sequences (Goldrick & Larson, 2008) and lead to full transfer, but it does not. Why is this the case? Although phonotactic constraints in the production system are more robustly encoded at the level of phoneme representations (Goldrick, 2004), these constraints are also likely represented at lower, articulatory levels of processing that cannot be trained through internal production. Patterns of these purely articulatory features may be registered only as syllables are spoken, and these patterns may influence speech errors in a way that perceptual experience cannot. If so, transfer of phonotactic learning from perception to production may never be more than partial. Full transfer may also be impeded by participants’ perception of their own productions, which adds perceptual tokens that reinforce the constraints experienced on production trials. Perhaps each spoken syllable provides two experiences (one produced and one heard), whereas each syllable on a perception trial provides only one. Hence, although learning from perception trials transfers, it can dilute, but not overcome, the more powerful experiences arising from production (see Remez, 2014).
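The two explanations for partial transfer can be illustrated with a toy tally of how often the production system experiences a restricted consonant in each position, for a participant in the opposite condition. The function name, both weights, and the token count of 384 are hypothetical; this is not a model fitted to the data. It only shows that either counting self-perception of overt speech or giving internal tokens less weight moves the balance away from 50/50, so the perception constraint dilutes the production constraint without cancelling it.

```python
def production_position_share(n_produced: int, n_perceived: int,
                              self_hearing: float = 0.0,
                              internal_weight: float = 1.0) -> float:
    """Share of a restricted consonant's weighted tokens that sit in its
    production-trial position, for a participant in the opposite condition.

    n_produced: tokens spoken aloud (all in the production position).
    n_perceived: tokens internally produced on perception trials (all in the
        opposite position).
    self_hearing: extra weight for hearing one's own overt speech.
    internal_weight: weight of an internal token relative to an overt one.
    """
    overt = n_produced * (1.0 + self_hearing)
    internal = n_perceived * internal_weight
    return overt / (overt + internal)


# Equal, equally weighted exposure would cancel the constraint (0.5), ...
print(production_position_share(384, 384))
# ... but counting self-hearing, or down-weighting internal tokens, does not.
print(production_position_share(384, 384, self_hearing=1.0))
print(production_position_share(384, 384, internal_weight=0.5))
```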

Figure 5.

Percentage of transfer of phonotactic learning from perception to production, defined as 1 − (Legality of Restricted Opposite − Pooled Legality of Unrestricted) / (Legality of Restricted Same − Pooled Legality of Unrestricted)
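The transfer measure in the Figure 5 caption can be written as a small function (the name is illustrative). It returns 0 when restricted consonants in the opposite condition behave as in the same condition and 100 when they fall to the unrestricted baseline; because it is a ratio of differences, it gives the same value whether legality or illegality percentages are used. The worked example uses the rates reported for Experiment 2 but approximates the pooled unrestricted baseline with an unweighted mean of the two conditions, since pooling requires slip counts not reported here, so its result need not match Figure 5 exactly.

```python
def percent_transfer(restricted_opposite: float, restricted_same: float,
                     unrestricted_pooled: float) -> float:
    """Percentage of transfer as defined in the Figure 5 caption.

    Arguments are legality percentages. 0 means the opposite condition's
    restricted consonants behave as in the same condition (no transfer);
    100 means they fall to the unrestricted baseline (full transfer).
    """
    return 100 * (1 - (restricted_opposite - unrestricted_pooled)
                  / (restricted_same - unrestricted_pooled))


# Experiment 2, from the illegality rates reported in the text
# (legality = 100 - illegality). The unrestricted baseline is approximated by
# the unweighted mean of the two conditions, not by pooling slip counts.
unrestricted = ((100 - 27.3) + (100 - 27.0)) / 2
print(round(percent_transfer(100 - 13.0, 100 - 2.8, unrestricted), 1))  # → 41.9
```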

The fact that there were clear limits to transfer of phonotactic learning is consistent with the literature on perception-production transfer of other phoneme-level learning. A substantial body of work documents imitation of perceived speech and acquisition of non-native phonological distinctions from perceptual experience alone, but these effects are inconsistently found and limited in scope (Goldinger, 1998; Fowler et al., 2003; Mitterer & Ernestus, 2008; Nielsen, 2011; Babel, 2012; Cooper, 1979; Kraljic, Brennan & Samuel, 2008; Pardo, 2006; Remez, 2014; Sancier & Fowler, 1997; Bradlow et al., 1999). It may be that transfer of such learning, too, depends on the extent to which people predict via internal production during perception.

Does prediction necessarily promote transfer? Or could transfer in these experiments be explained by internal production alone, regardless of whether prediction is involved? One argument for the role of prediction in transfer is the phenomenon of error-based learning, i.e. the learning that arises when a learner’s prediction is wrong. Error-based learning is a cornerstone of psychological theory (Elman, 1990; Holroyd & Coles, 2002; Rescorla & Wagner, 1972; Zacks et al., 2007) and is formalized in the delta rule (Rosenblatt, 1962), a learning algorithm used to train many connectionist networks. The network’s weights are updated as a function of how the network’s actual output deviates from the target output. Error-based learning has been invoked to explain a variety of psycholinguistic phenomena, including syntactic priming (Scheepers, 2003; Jaeger & Snider, 2008; Snider & Jaeger, 2009), syntax acquisition (Elman, 1990; Chang et al., 2006), and word frequency effects in semantic priming paradigms (Becker, 1979; Plaut & Booth, 2000). Low-frequency words and structures are less predictable, so they should generate a bigger error signal during language perception, leading to greater activation of low-frequency items and better learning of them. Prediction error may also be particularly important for driving statistical sequence learning in other modalities (Conway et al., 2010; Turk-Browne et al., 2010), learning which may share some mechanisms with phonotactic constraint acquisition. Thus error-based learning provides another mechanism, in addition to simple activation of production phonology, for transfer of learning in Experiment 3.
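The delta rule mentioned above can be sketched for a single linear output unit: each weight changes in proportion to the prediction error (target minus output) times its input. The toy below, with hypothetical one-hot "frequent" and "rare" items, illustrates the frequency argument: after training, the rare item is predicted less well and so yields a larger error signal. It is an illustration of the learning rule only, not a model of the experiments, and the function name is illustrative.

```python
def delta_rule_update(weights: list[float], inputs: list[float], target: float,
                      learning_rate: float = 0.1) -> tuple[list[float], float]:
    """One delta-rule step for a single linear output unit.

    The output is the weighted sum of the inputs; each weight moves in
    proportion to the prediction error (target - output) times its input,
    so a correct prediction produces no learning.
    """
    output = sum(w * x for w, x in zip(weights, inputs))
    error = target - output
    new_weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
    return new_weights, error


# Toy illustration (hypothetical): a frequent item A (90% of trials) and a
# rare item B (10%), both one-hot coded and both with target 1.
A, B = [1.0, 0.0], [0.0, 1.0]
weights = [0.0, 0.0]
for trial in range(100):
    item = B if trial % 10 == 9 else A
    weights, _ = delta_rule_update(weights, item, target=1.0)

# The rare item is less well predicted, so it yields a larger error signal.
_, error_frequent = delta_rule_update(weights, A, target=1.0)
_, error_rare = delta_rule_update(weights, B, target=1.0)
print(f"error on frequent item: {error_frequent:.5f}, on rare item: {error_rare:.3f}")
```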

It is possible that error monitoring in a social context, as in Warker et al.’s Experiment 3, would lead to even more robust transfer. When predictively activating the production system, people may do so by covertly imitating heard speech (Pickering & Garrod, 2013). When listeners view speakers positively, they may increase their phonetic imitation of the speaker (Babel, 2012; Giles & Coupland, 1991). Participants in Warker et al. were told to “bring a friend” who would serve as their experimental partner. Perhaps they felt more aligned with the speaker and engaged in more covert imitation than participants in Experiment 3, who listened to a recorded voice. To the extent that covert imitation activates the production system, a greater effort to imitate could lead to more transfer.

There is also another mechanism that could have contributed to transfer. In each case where transfer was found (Warker et al.’s Experiment 3, and our Experiments 2 and 3), participants paid careful attention to whole syllables to complete a challenging perception task. In our Experiment 1, by contrast, participants did not have to encode the whole syllable to detect the target phonemes. Although participants in Experiment 1 showed sensitivity to the positions of restricted phonemes in their performance on the memory task, memory for syllables that begin with specific phonemes does not depend on careful processing of those syllables. Detailed processing of whole syllables, however, may be required to activate the position-specific phoneme representations (e.g. f-onset, e-vowel, g-coda) that are thought to support phonotactic constraint learning in production (Warker & Dell, 2006), and so their activation during perception may be important for transfer of learning.
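The notion of position-specific phoneme units can be illustrated with a small sketch. It assumes, purely for illustration, that each syllable is a three-letter CVC string (so multi-letter phonemes such as /ng/ are not handled); the helpers `positional_features` and `tally` are hypothetical and are not the representation used by Warker and Dell (2006). The point is only that building onset, vowel, and coda units requires segmenting the whole syllable, whereas checking whether a target phoneme is present does not.

```python
from collections import Counter

POSITIONS = ("onset", "vowel", "coda")


def positional_features(syllable):
    """Map a CVC syllable such as 'feg' onto position-specific phoneme units."""
    if len(syllable) != 3:
        raise ValueError("this sketch assumes single-letter CVC syllables")
    return [f"{phoneme}-{position}" for phoneme, position in zip(syllable, POSITIONS)]


def tally(syllables):
    """Count how often each position-specific unit occurs across syllables."""
    counts = Counter()
    for syllable in syllables:
        counts.update(positional_features(syllable))
    return counts


# Hypothetical "opposite" condition: /f/ is an onset on production trials
# but a coda on perception trials.
production_syllables = ["fes", "fen", "fak"]
perception_syllables = ["sef", "nef", "kaf"]

production_counts = tally(production_syllables)
perception_counts = tally(perception_syllables)
print("production: f-onset", production_counts["f-onset"], "f-coda", production_counts["f-coda"])
print("perception: f-onset", perception_counts["f-onset"], "f-coda", perception_counts["f-coda"])
```

In this toy opposite condition, the perceived syllables supply f-coda units that the production syllables never do; if perception engages such units, this conflicting positional evidence could dilute the production constraint.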

Given that some forms of statistical sequence learning, such as word segmentation, can operate on the scale required for natural language acquisition (Frank et al., 2013), it is possible that the mechanisms of artificial constraint learning that we have investigated here may apply to real-life language learning. This interpretation is supported by data suggesting that participants in our experiments perceive the nonsense syllables as a continuation of their everyday experience with English: participants pronounced the syllables as English, and their errors with /h/ and /ng/ obeyed English constraints nearly 100% of the time. Warker and Dell (2006) modeled the relation between what is learned in the experiment and what had been known before by first training a connectionist network on English syllables, and then exposing the network to the particular distributions of syllables experienced by participants in the experiments, resulting in further weight changes. Warker (2013) then hypothesized that the changes resulting from experience with the experimental syllables are stored in a separate “mini-grammar” that is associated with the experimental context and hence is immune to interference from everyday English. She supported this proposal by demonstrating that the experimental learning was retained a week later. These notions, along with our findings about transfer, could in principle be applied to the learning of phonotactic systems that differ to a much greater extent from the language(s) a speaker already knows. Specifically, our results suggest that foreign phonotactic constraints are acquired by the production system through perceptual experience only if that perceptual experience somehow engages the production system. Furthermore, these new production abilities would be retained and associated with the perceptual contexts that engendered them.

More broadly, these results are consistent with theories in which perception and production phonologies are functionally connected when perception involves predictive processing (e.g. DeLong et al., 2005; Federmeier, 2007; Hickok et al., 2011; Pickering & Garrod, 2013). Given that people may differ in the extent to which they make predictions during language comprehension (Federmeier, Kutas, & Schul, 2010; Huettig, Singh & Mishra, 2011; Wlotko, Federmeier, & Kutas, 2012), this raises the intriguing possibility that such variation could lead to differences in the amount of transfer of learning. And if predictive processing is common in everyday language comprehension (Federmeier, 2007), this suggests that, strikingly, our production system is constantly adapting in the face of new perceptual experience.

Highlights.

We assessed transfer of phonotactic constraint learning from perception to production

We sought evidence of transfer in participants’ speech errors in three experiments

Speech errors reflected phonotactics experienced in perception, evincing transfer

Transfer only occurred for perceptual tasks that may involve internal production

We argue that error monitoring yields transfer by engaging production via prediction

Acknowledgments

Many thanks to Pamela Glosson, Cofi Aufmann, Ranya Hasan, Tessa McGirk, and Haley Wright for assistance recording and transcribing speech. We are very thankful to many people for their comments on this work: Aaron Benjamin, Amelie Bernard, Kara Federmeier, Simon Fischer-Baum, Cynthia Fisher, Maureen Gillespie, Bonnie Nozari, Sharon Peperkamp, Daniel Simons, three anonymous reviewers for the Annual Meeting of the Cognitive Science Society, and the members of the University of Illinois Phonotactic Learning Group and Language Production Lab. We are especially grateful to Jay Verkuilen for invaluable discussion and citations regarding the regression analyses. This research was made possible by a grant from the National Institutes of Health (NIH DC 000191).

Footnotes

Half of the participants in each condition were exposed to perception sequences that contained some syllable and phoneme repetition in the sequence, and the other half were exposed to sequences that consisted of syllables without this repetition. As can be seen in Tables 1–4, our analyses determined that this factor (called “Repetition”) had no influence on the results, and so it will not be further discussed.

The primary coder inadvertently became aware of two participants’ experimental conditions. It is unlikely that this affected the primary coder’s transcriptions, given that the inter-coder reliability was comparable to that in Experiment 3 (in which the primary coder was blind to condition for all participants).

In cases where the random slope model resulted in a very high correlation (e.g. 1) between the random intercept and slope, implying overparameterization of the model, the random slope was dropped from the model (e.g. Baayen, Davidson, & Bates, 2008). Although Baayen et al. (2008) recommend dropping random slopes only if a likelihood ratio test indicates no significant difference between the random slope-and-intercept model and the random intercept-only model, we did not perform likelihood ratio tests for models with high random-effects correlations, because log likelihoods may be unreliable in models exhibiting collinearity (Jay Verkuilen, personal communication; Agresti, 2012). Importantly, though, in all analyses for Experiments 1–3, random intercept-only models and random slope-and-intercept models produced estimates of the effects that were similar in directionality and significance.

Just as in Experiment 1, the primary coder inadvertently became aware of two participants’ experimental conditions. It is unlikely that this affected the primary coder’s transcriptions, given that the inter-coder reliability was comparable to that in Experiment 3 (in which the primary coder was completely blind to condition for all participants).


Contributor Information

Audrey K. Kittredge, Email: audreyk@andrew.cmu.edu.

Gary S. Dell, Email: gdell@illinois.edu.

References

- Agresti A. Categorical Data Analysis. 3rd ed. Wiley; 2012.

- Baayen RH, Davidson DJ, Bates DM. Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory and Language. 2008;59:390–412.

- Babel M. Evidence for phonetic and social selectivity in spontaneous phonetic imitation. Journal of Phonetics. 2012;40:177–189.

- Barr DJ. Analyzing ‘visual world’ eye tracking data using multilevel logistic regression. Journal of Memory and Language. 2008;59:457–474.

- Barr DJ, Levy R, Scheepers C, Tily HJ. Random effects structure for confirmatory hypothesis testing: Keep it maximal. Journal of Memory and Language. 2013;68:255–278. doi: 10.1016/j.jml.2012.11.001.

- Baus C, Sebanz N, de la Fuente V, Branzi FM, Martin C, Costa A. On predicting others’ words: Electrophysiological evidence of prediction in speech production. Cognition. 2014;133:395–407. doi: 10.1016/j.cognition.2014.07.006.

- Becker CA. Semantic context and word frequency effects in visual word recognition. Journal of Experimental Psychology: Human Perception and Performance. 1979;5:252–259. doi: 10.1037//0096-1523.5.2.252.

- Bernard A. An onset is an onset: Evidence from abstraction of newly learned phonotactic constraints. Journal of Memory and Language. 2015;78:18–32. doi: 10.1016/j.jml.2014.09.001.

- Bock JK, Dell GS, Chang F, Onishi KH. Persistent structural priming from language comprehension to language production. Cognition. 2007;104:437–458. doi: 10.1016/j.cognition.2006.07.003.

- Boomer DS, Laver JDM. Slips of the tongue. British Journal of Disorders of Communication. 1968;3:1–12. doi: 10.3109/13682826809011435.

- Bradlow A, Akahane-Yamada R, Pisoni D, Tohkura Y. Training Japanese listeners to identify English /r/ and /l/: Long-term retention of learning in perception and production. Perception & Psychophysics. 1999;61:977–985. doi: 10.3758/bf03206911.

- Chambers KE, Onishi KH, Fisher C. A vowel is a vowel: Generalizing newly-learned phonotactic constraints to new contexts. Journal of Experimental Psychology: Learning, Memory and Cognition. 2010;36:821–828. doi: 10.1037/a0018991.

- Chambers KE, Onishi KH, Fisher C. Representations for phonotactic learning in infancy. Language Learning and Development. 2011;7:287–308. doi: 10.1080/15475441.2011.580447.

- Chang F, Dell GS, Bock K. Becoming syntactic. Psychological Review. 2006;113(2):234–272. doi: 10.1037/0033-295X.113.2.234.

- Conway CM, Bauernschmidt A, Huang SS, Pisoni DB. Implicit statistical learning in language processing: Word predictability is the key. Cognition. 2010;114:356–371. doi: 10.1016/j.cognition.2009.10.009.

- Cooper WE. Speech perception and production: Studies in selective adaptation. Norwood, NJ: Ablex Publishing Company; 1979.

- Damian MF, Bowers JS. Assessing the role of orthography in speech perception and production: Evidence from picture–word interference tasks. European Journal of Cognitive Psychology. 2009;21:581–598.

- D’Ausilio A, Bufalari I, Salmas P, Fadiga L. The role of the motor system in discriminating normal and degraded speech sounds. Cortex. 2011;48:882–887. doi: 10.1016/j.cortex.2011.05.017.

- Dell GS, Chang F. The P-Chain: Relating sentence production and its disorders to comprehension and acquisition. Philosophical Transactions of the Royal Society B. 2014;369:20120394. doi: 10.1098/rstb.2012.0394.

- Dell GS, Kittredge AK. Prediction, Production, Priming, and imPlicit learning: A framework for psycholinguistics. In: Sanz M, Laka I, Tanenhaus M, editors. Understanding Language: Forty Years Down the Garden Path. Oxford University Press; 2013.

- Dell GS, Reed KD, Adams DR, Meyer AS. Speech errors, phonotactic constraints, and implicit learning: A study of the role of experience in language production. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2000;26:1355–1367. doi: 10.1037//0278-7393.26.6.1355.

- DeLong KA, Urbach TP, Kutas M. Probabilistic word pre-activation during language comprehension inferred from electrical brain activity. Nature Neuroscience. 2005;8:1117–1121. doi: 10.1038/nn1504.

- Elman JL. Finding structure in time. Cognitive Science. 1990;14(2):179–211.