Abstract

Attention to faces is a fundamental psychological process in humans, with atypical attention to faces noted across several clinical disorders. Although many clinical disorders have their onset in adolescence, there is a lack of well‐validated stimulus sets containing adolescent faces available for experimental use. Further, the images in most available sets are not controlled for high‐ and low‐level visual properties. Here, we present a cross‐site validation of the National Institute of Mental Health Child Emotional Faces Picture Set (NIMH‐ChEFS), comprising 257 photographs of adolescent faces displaying angry, fearful, happy, sad, and neutral expressions. All direct‐gaze facial images from the NIMH‐ChEFS set were adjusted for the location of facial features and standardized for luminance, size, and smoothness. Although overall agreement between raters in this study and the original development‐site raters was high (89.52%), agreement differed by group: it was lower for adolescents than for mental health professionals in the current study. These results suggest that future research using this face set, or other sets of adolescent or child faces, should base comparisons on validation data from similarly aged raters. Copyright © 2015 John Wiley & Sons, Ltd.

Keywords: adolescent development, face processing, methodology, emotion perception, face stimulus set

Introduction

The faces of others hold special significance. Early in development, infants prefer human faces to patterned stimuli that do not resemble faces (e.g. Goren et al., 1975; Kleiner and Banks, 1987; Maurer and Young, 1983). Atypical attention to faces can be measured experimentally using eye‐tracking methodology (Horley et al., 2004) and has been observed across various clinical disorders (Archer et al., 1992). For example, atypical eye contact is often noted clinically in children and adults with various psychological disorders, such as social anxiety disorder, autism spectrum disorder, specific phobia, generalized anxiety disorder, and depression (Greist, 1994; Cowart and Ollendick, 2010, 2011; Jones et al., 2008; Mathews and MacLeod, 2002; Pelphrey et al., 2002; Mogg et al., 2000; Gotlib et al., 2004; Hallion and Ruscio, 2011). Most of these clinical disorders typically have their onset in late childhood or early adolescence; however, nearly all studies of attention and face processing with child and adolescent samples use adult faces.

Several stimulus sets of adult faces are widely available and routinely used in behavioural and neuroscience research. These include the Ekman set (Ekman and Friesen, 1976), the Japanese and Caucasian Facial Expressions of Emotion (JACFEE; Biehl et al., 1997), the NimStim Set of Facial Expressions (Tottenham et al., 2009), the Karolinska Directed Emotional Faces (Lundqvist et al., 1998), and the Naples Computer Generated Face Stimulus set (Naples et al., 2014). Mazurski and Bond (1993) created a stimulus set that contains primarily adult faces, with an additional six child faces. These stimulus sets vary widely in the number of actors, emotions, and images provided per condition, in the posing characteristics of the actors, and in the method used to validate the emotions depicted, with most studies using samples of convenience and giving little regard to the populations that may view the images in clinical studies. To date, children and adolescents have not been involved in validating the images used in these studies and, to our knowledge, only one data set consists exclusively of images of children and adolescents: the recently developed National Institute of Mental Health Child Emotional Faces Picture Set (NIMH‐ChEFs; Egger et al., 2011). However, even this child stimulus set was validated only with adults.

It is critical to consider adolescent responses to age‐matched stimuli because of documented developmental differences in patterns of facial emotion perception. From a neuroscientific perspective, children and adolescents show altered activation when viewing faces of children compared to faces of adults (Hoehl et al., 2010; Marusak et al., 2013), and they show different patterns of activation than adults on functional magnetic resonance imaging (fMRI) tasks when viewing adult emotional faces (Blakemore, 2008). Developmental improvements in the N170, a face‐sensitive component observed in electroencephalography (EEG), occur steadily from childhood into adulthood, as indexed by decreasing latency to faces with age (Leppänen et al., 2007; Taylor et al., 2004; Taylor et al., 1999). Children and adults also respond differently to adult faces than to child and infant faces (Macchi Cassia, 2011; Macchi Cassia et al., 2012). Together, these results indicate that face perception may change across development. In addition, children show increased responsivity (faster reaction times) to child faces than to adult faces (Benoit et al., 2007). These findings illustrate the importance of using peer emotional faces when conducting research with children and adolescents. Furthermore, given the frequent use of adult stimuli, much of what we currently know about child and adolescent responses to facial stimuli may be incomplete and may not fully capture developmental effects in facial emotion recognition (Marusak et al., 2013). Collectively, these findings highlight the importance of validating adolescent stimuli in an adolescent sample.

The NIMH‐ChEFs stimulus set provides a valuable resource for investigators conducting clinical research with children and adolescents. These stimuli have already been used in recent studies, including work on attention retraining in adolescents with anxiety (De Voogd et al., 2014; Ferri et al., 2013) and neuroimaging studies of face processing in typical development (Marusak et al., 2013). However, it is important to consider features of the images themselves that may bias results, and to acknowledge that some improvements could make this stimulus set even more useful. For example, low‐level visual properties of the stimuli, such as variability in brightness (which produces visual “hot spots,” or areas of potentially increased salience due to luminance or spatial frequency), have not yet been controlled, and such variability could complicate research using neuroimaging tasks and pupillometric assessment (Porter et al., 2007; Rousselet and Pernet, 2011). Further, in some images the actor's head is tilted or rotated relative to the viewer, creating a mismatch between the orientation of the actor's face and the viewer's line of sight. Previous studies suggest that face identification accuracy is sensitive to variation in such features (Bachmann, 1991; Costen et al., 1994; Nasanen, 1999). As previously mentioned, this stimulus set has been validated only by adults; validation by adolescents themselves could therefore add to its usefulness. Just as it is important to standardize evidence‐based assessment tools with the clinical population for which they are intended (see McLeod et al., 2013), it is essential to validate facial stimulus sets with children and adolescents for clinical research.

Accordingly, our aims here are two‐fold: (1) to standardize the images in this set along various parameters (i.e. colour, luminance, spatial frequency, and other low‐level visual properties), and (2) to further validate the set of adolescent facial expressions with regard to inter‐rater agreement on perceived emotion and representativeness (the degree to which the stimuli appeared to be good representations of the emotions they were intended to depict) in samples of adolescents, parents of adolescents, and mental health professionals. With the sub‐sample of mental health professionals, we wished to replicate the findings of Egger et al. (2011), which were based on ratings from mental health professionals. Parents of adolescents were selected as raters because of their exposure to adolescent facial expressions, to provide validation data for researchers who wish to use the stimuli in caregiver research, and to address our exploratory aim of investigating whether adolescents, parents, and mental health professionals differ in their ratings.

Methods

Stimuli development and image processing

All stimuli were generated using procedures described by Egger et al. (2011; see this article for the original image development steps). The final image set from Egger et al. (2011) contained 482 images of 59 children and adolescents aged 10 to 17 years (20 males and 39 females) displaying five different expressions (happiness, anger, sadness, fear, and neutral) in two gaze conditions (direct and averted). As noted by Egger et al. (2011), most actors were Caucasian, with only one boy and four girls of non‐Caucasian ethnicities. The processed image set included direct gaze only (N = 257 of the 482 images).

Adjustment of low‐level visual properties

To facilitate comparability among image metrics at later steps, all images were first sampled into a common pixel space (1960 × 3008 pixels; Figure 1a) while preserving the original resolution of the Egger et al. (2011) images. To create visual landmarks, each face was manually bisected vertically and horizontally, such that the vertical axis ran through the centre of the bridge of the nose to the philtrum and the horizontal axis ran through the area just below the pupils, resulting in a four‐quadrant space whose origin lay at the midline of the face just above the bridge of the nose (Figure 1b). Where the actor had tilted his or her head, the image was rotated until the facial landmarks derived from the drawn vectors aligned with the horizontal and vertical grid of the background. An oval cutout of a standardized size (182 × 267 pixels) was then placed over the face, masking additional identifying information (clothes, hair; Figure 1c). Because faces varied in physical size, some images were scaled isotropically (i.e. by the same factor along both axes), either enlarging the face (increasing the visual angle it occupied) or reducing it to ensure that critical features (e.g. the eyebrows) remained visible. In either case, the resultant face filled the entire oval cutout so that non‐face pixels were minimal. Finally, pixels falling outside the oval were filled with black (see Figure 1c), resulting in a high‐resolution “face‐only” image.
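The rotation and oval‐masking steps described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the procedure actually used: it assumes two hypothetical eye‐centre landmarks are available for each face (the original alignment used manually drawn axes), represents an image as a list of rows of (R, G, B) tuples, and uses an ellipse inscribed in the cutout box. All function names are hypothetical.

```python
import math


def tilt_angle_degrees(left_eye, right_eye):
    """Angle of the line joining two (hypothetical) eye landmarks, in degrees.

    Points are (x, y) pixel coordinates with y increasing downward, as in most
    image libraries; a positive angle means the right eye sits lower.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def oval_mask(width, height):
    """Rows of booleans, True inside the ellipse inscribed in a width x height box."""
    cx, cy = (width - 1) / 2, (height - 1) / 2
    rx, ry = width / 2, height / 2
    return [[((x - cx) / rx) ** 2 + ((y - cy) / ry) ** 2 <= 1.0
             for x in range(width)]
            for y in range(height)]


def apply_oval(image, fill=(0, 0, 0)):
    """Keep pixels inside the inscribed oval and fill everything else with black."""
    height, width = len(image), len(image[0])
    mask = oval_mask(width, height)
    return [[px if inside else fill for px, inside in zip(row, mask_row)]
            for row, mask_row in zip(image, mask)]
```

For the 182 × 267 pixel cutout used here, `oval_mask(182, 267)` would give the corresponding mask. The rotation itself would normally be done with an imaging library; Pillow's `Image.rotate`, for instance, rotates counter‐clockwise by the given number of degrees, so passing the angle returned above should level the eyes when y increases downward.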

Figure 1.

Depicts each of the processing steps undertaken to standardize the stimulus set: (a) the original image; (b) the image rotated to place the eyes and nose in the exact centre of the screen; (c) an oval cutout placed over the face to obscure hair and clothing; (d) the image matched to the average brightness of the other images in its condition (e.g. all “happy” faces were matched to the mean brightness for happy); (e) a 3 mm kernel used to locate visual “hotspots” for removal; (f) high‐frequency content isolated and removed; (g) the final image.

Some applications may require extreme precision in the consistency of low‐level visual properties across the image set. Accordingly, we employed additional steps to further standardize visual features (brightness, spatial frequency). First, to correct variability in luminance (brightness), we adjusted all images to within ±0.0001% brightness using a combination of custom code and the publicly available Python Imaging Library (PIL, version 1.1.7; see Figure 1d). In our approach, the pixel‐wise brightness along each red–green–blue (RGB) channel was computed and aggregated within each image to a global value scaled to 80% of the original brightness (to allow some images to be adjusted upward and others downward if necessary), constrained to a tuning factor of ±0.0001 variability. We then minimized the influence of visual “hot spots” and high‐frequency data in the images by calibrating the images to each other based on overall spatial frequency (Figures 1e and 1f). This procedure had the dual purpose of eliminating bright pixels and edges in the images (which tend to attract the eyes during unrestricted viewing; Einhäuser and König, 2003), and thus eliminated unevenly distributed high‐frequency data as a potential source of noise in patterns of visual attention. We removed high‐frequency data using a two‐dimensional (2D) convolution in which each pixel was replaced by a weighted average of its 3 × 3 neighbourhood, with the weights given by a moving 3 × 3 Gaussian kernel (spatial smoothing). The ultimate goal of this process was to ensure that studies employing our “adjusted” images could rule out high‐frequency noise and unevenly distributed brightness as sources of noise in visual attention, leaving only the lower‐frequency components of the face (e.g. gross anatomy) to drive visual attention (see Figure 1g for the final image). We have made the total image set available at the stages corresponding to images c, d, e, and g of Figure 1.
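The luminance‐matching and 3 × 3 Gaussian smoothing steps can be sketched as follows. This is a simplified illustration, not the custom code used in this study: it assumes Rec. 601 luma weights as the brightness measure (the weighting actually used is not specified), represents images as lists of rows of (R, G, B) tuples, approximates the Gaussian with the common 1–2–1 binomial kernel, and replicates edge pixels at the borders. The ±0.0001% criterion corresponds to `rel_tol=1e-6`, and `target` stands in for the scaled global brightness value.

```python
# Rec. 601 luma weights: an assumption, since the brightness measure is not specified.
LUMA = (0.299, 0.587, 0.114)

# 3 x 3 binomial approximation of a Gaussian kernel; the weights sum to 16.
KERNEL = ((1, 2, 1), (2, 4, 2), (1, 2, 1))


def mean_luminance(image):
    """Mean luma of an image given as rows of (R, G, B) tuples."""
    total = sum(LUMA[0] * r + LUMA[1] * g + LUMA[2] * b
                for row in image for (r, g, b) in row)
    return total / (len(image) * len(image[0]))


def match_luminance(image, target, rel_tol=1e-6, max_iter=50):
    """Scale RGB values until mean luma is within rel_tol of target.

    Iterates because clipping at 255 can pull the mean below the target;
    max_iter bounds the loop if the target is unreachable.
    """
    out = [[tuple(float(c) for c in px) for px in row] for row in image]
    for _ in range(max_iter):
        current = mean_luminance(out)
        if current == 0 or abs(current - target) <= rel_tol * target:
            break
        k = target / current
        out = [[tuple(min(255.0, c * k) for c in px) for px in row] for row in out]
    return out


def gaussian_blur_3x3(channel):
    """Smooth one channel (rows of numbers) with KERNEL, clamping at the borders."""
    h, w = len(channel), len(channel[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)  # replicate edge rows
                    xx = min(max(x + dx, 0), w - 1)  # replicate edge columns
                    acc += KERNEL[dy + 1][dx + 1] * channel[yy][xx]
            out[y][x] = acc / 16.0
    return out
```

For a colour image, the smoothing would be applied to each channel separately. A binomial kernel is used here only because it is the usual small‐integer stand‐in for a 3 × 3 Gaussian; other Gaussian widths would give different degrees of smoothing.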

Validation of stimuli

Online surveys

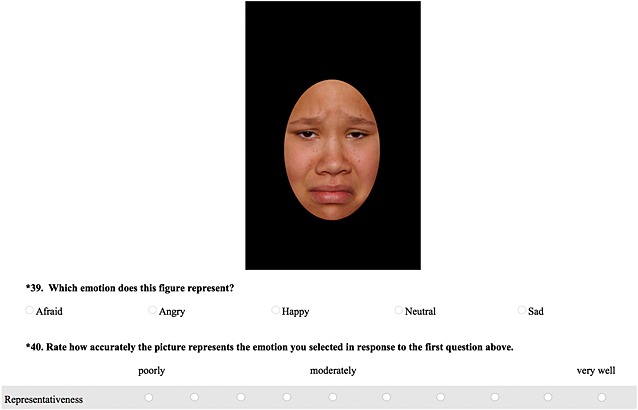

The total image set (k = 257 direct‐gaze faces; neutral = 56, angry = 52, happy = 50, sad = 48, fear = 51) was randomly split within gender into two separate surveys (Survey 1: 125 faces; Survey 2: 132 faces) and uploaded to a secure web‐based survey platform. We split the image set because validating the entire set was estimated to take a single user approximately 50 minutes, which we considered prohibitively long, especially for our adolescent sample. Each participant completed only one of the two surveys. The surveys were identical in format (i.e. each survey contained 8–10 male and 15–18 female faces from each expression group) and differed only in the specific faces portrayed. Within each survey, all participants saw the images in the same order, which had been randomized under the constraint that no emotion and no actor was presented more than twice in a row. The survey also included questions about the rater's sex, age category, race/ethnicity, and, for the mental health professionals, level of training in the practice of clinical or counselling psychology. The survey informed the raters that they would see pictures of faces portraying various expressions of emotion. Similar to Egger et al. (2011), raters were asked to answer two questions: (1) which emotion does this picture represent, and (2) how accurately does the picture represent the emotion you selected in response to the first question (see Figure 2 for a sample page). For the first question, raters could select one of five expressions (afraid, angry, happy, neutral, or sad), always presented in the same alphabetical order. For the second question, regarding the representativeness of the expression, raters selected a level on a scale from 1 (poorly) to 10 (very well).
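A fixed presentation order that satisfies the run constraint above can be generated by rejection sampling: shuffle, check the longest run of identical emotions and of identical actors, and reshuffle if either exceeds two. The sketch below is one possible way to do this, not necessarily how the survey order was produced; items are assumed to be hypothetical (actor_id, emotion) pairs, and a fixed seed makes the order reproducible so that every participant in a survey sees the same sequence.

```python
import random


def max_run(seq):
    """Length of the longest run of identical consecutive elements."""
    if not seq:
        return 0
    longest = current = 1
    for prev, nxt in zip(seq, seq[1:]):
        current = current + 1 if nxt == prev else 1
        longest = max(longest, current)
    return longest


def constrained_order(items, max_repeat=2, seed=0, max_tries=100_000):
    """Shuffle (actor_id, emotion) pairs until no emotion and no actor
    appears more than max_repeat times in a row (rejection sampling)."""
    rng = random.Random(seed)  # fixed seed -> same order for every participant
    order = list(items)
    for _ in range(max_tries):
        rng.shuffle(order)
        emotions_ok = max_run([emotion for _, emotion in order]) <= max_repeat
        actors_ok = max_run([actor for actor, _ in order]) <= max_repeat
        if emotions_ok and actors_ok:
            return order
    raise RuntimeError("no valid order found; relax the constraint or raise max_tries")
```

Rejection sampling is simple and yields an unbiased draw from the orders that satisfy the constraint; for very long lists or tighter constraints, a constructive method that backtracks on violations would be faster.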

Figure 2.

A sample of the webpage that raters viewed when making their ratings.

Recruitment of participants for image validation

Recruitment criteria for adolescents included being between the ages of 12 and 17 years, inclusive. There were no age restrictions for the other two groups. To be included in the parents of adolescents group, participants had to endorse having at least one child between the ages of 12 and 17 years living in the household. Mental health professionals included participants who reported having formal training in the practice of clinical or counselling psychology. Their level of training ranged from pre‐master's (in graduate school) to a completed terminal degree (e.g. PhD). To recruit adolescents and parents of adolescents, we used a variety of databases within our department, posted advertisements on our department's website, and posted flyers in the community. Mental health professionals were recruited via emails to the faculty, graduate students, and alumni of the Department of Psychology, and to mental health professionals in the community not affiliated with our university. For each survey, one rater from each group was chosen at random to receive a $20 cash prize.

A total of 129 raters completed the surveys: 41 adolescents [58.5% female, 90.2% Caucasian, mean age = 14.54 years, standard deviation (SD) = 1.70], 54 parents (83.33% female, 87.03% Caucasian, modal age range = 45–47 years), and 34 mental health professionals (82.4% female, 88.2% Caucasian, modal age range = 50 years and above). Modal age is reported for parents and mental health professionals because the survey asked for age within ranges: 33.3% of parents were between the ages of 24 and 41, 50.0% were between 42 and 50, and 16.6% were above 50 years; among the professionals, 67.6% were between 24 and 41, 5.8% were between 42 and 50, and 26.5% were above 50 years. The level of clinical training for the mental health professionals varied from pre‐master's degree (n = 2; 5.9%) to post‐master's degree (n = 12; 35.3%) to completed doctoral degree (n = 20; 58.8%).

Analytic approach

We computed Fleiss' kappa for multiple raters to estimate overall agreement, agreement for each emotion type, and agreement within each group. In addition, each image was scored in terms of the percentage of agreement between raters and the previously reported classification of the expression it was intended to convey (Egger et al., 2011). These analyses were performed for each expression and for each rater group. IBM SPSS Statistics 21 and R (R Development Core Team, 2010) were used for data analyses. An α level of 0.05 was used for all statistical tests.
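Fleiss' kappa and per‐image percentage agreement can both be computed from a table of rating counts. The pure‐Python sketch below follows the standard Fleiss formulation and is offered for illustration only; the analyses reported here were run in SPSS and R. It assumes every image in a survey was rated by the same number of raters, with `counts[i][j]` holding the number of raters who assigned image i to emotion category j; the function names are hypothetical.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for N items, each rated by the same n raters into k categories."""
    N = len(counts)
    n = sum(counts[0])
    if n < 2 or any(sum(row) != n for row in counts):
        raise ValueError("every item needs the same number (>= 2) of raters")
    k = len(counts[0])
    # Overall proportion of all assignments that fell into each category.
    p = [sum(row[j] for row in counts) / (N * n) for j in range(k)]
    # Per-item agreement: proportion of rater pairs that agree on that item.
    P_i = [(sum(c * c for c in row) - n) / (n * (n - 1)) for row in counts]
    P_bar = sum(P_i) / N              # mean observed agreement
    P_e = sum(pj * pj for pj in p)    # agreement expected by chance
    if P_e == 1.0:
        raise ValueError("kappa is undefined when all ratings fall in one category")
    return (P_bar - P_e) / (1 - P_e)


def percent_agreement(row, intended):
    """Percentage of raters who chose the intended (previously reported) category."""
    return 100.0 * row[intended] / sum(row)
```

For example, `fleiss_kappa([[3, 0], [0, 3]])` (two images, three raters, unanimous but different choices) returns 1.0. For an off‐the‐shelf implementation, statsmodels provides `statsmodels.stats.inter_rater.fleiss_kappa`.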

To examine differences between the two surveys, a one‐way analysis of variance (ANOVA) was conducted with survey (Survey 1 versus Survey 2) as a factor and overall agreement with the previously reported classification of emotions (Egger et al., 2011) as the dependent variable. To examine potential differences in participant characteristics between the two surveys, we conducted a series of t‐tests comparing the two surveys on each participant characteristic.

To examine group differences in agreement with the classification from Egger et al. (2011), across and within emotions, as well as in the representativeness of the emotions, one‐way ANOVAs were conducted with group (adolescent, parent, mental health professional) as a factor and accuracy (or representativeness) ratings for emotion as the dependent variable. When a significant group difference was found, pairwise comparisons using Tukey's post hoc test were used to identify the specific groups differing in accuracy or representativeness.
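The one‐way ANOVA F statistic used for the survey and group comparisons can be written out directly, as in the sketch below. It is an illustration only (the reported analyses used SPSS and R); it returns F with its degrees of freedom, and a p value could then be obtained from the F distribution, for example with SciPy's `scipy.stats.f.sf(F, df_between, df_within)`. Tukey's post hoc comparisons are not reimplemented here; recent SciPy versions provide `scipy.stats.tukey_hsd` for that purpose.

```python
def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way between-subjects ANOVA."""
    values = [v for g in groups for v in g]
    N, k = len(values), len(groups)
    grand_mean = sum(values) / N
    means = [sum(g) / len(g) for g in groups]
    # Between-groups sum of squares: group size times squared deviation of each group mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-groups sum of squares: deviations of scores from their own group mean.
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_between, df_within = k - 1, N - k
    F = (ss_between / df_between) / (ss_within / df_within)
    return F, df_between, df_within
```

The within‐groups degrees of freedom are N − k; for example, 129 raters split into three groups give df = (2, 126).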

As a secondary analysis, to examine gender differences in accuracy for rating emotional faces among adolescents, we conducted a series of t‐tests comparing means between the two gender groups for total accuracy and for each of the five emotions, for a total of six t‐tests. Additionally, to examine whether accuracy of emotion identification differed by age, we computed Spearman correlations between adolescents' ages and percentage accuracy for each emotion.
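The gender comparison (an independent‐samples t‐test with pooled variance, df = n₁ + n₂ − 2) and the age correlation (Spearman's rho, i.e. the Pearson correlation of ranks, with tied values given their average rank) can be sketched as below. This illustrative pure‐Python version assumes the classic pooled‐variance Student's t‐test was used (the variant is not stated); it computes the statistics only, and p values would come from the t distribution (e.g. `scipy.stats.t.sf`) or directly from `scipy.stats.ttest_ind` and `scipy.stats.spearmanr`.

```python
import math


def pooled_t(a, b):
    """Student's t for two independent samples with pooled variance; returns (t, df)."""
    na, nb = len(a), len(b)
    ma, mb = sum(a) / na, sum(b) / nb
    va = sum((x - ma) ** 2 for x in a) / (na - 1)
    vb = sum((x - mb) ** 2 for x in b) / (nb - 1)
    sp2 = ((na - 1) * va + (nb - 1) * vb) / (na + nb - 2)  # pooled variance
    t = (ma - mb) / math.sqrt(sp2 * (1 / na + 1 / nb))
    return t, na + nb - 2


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for m in range(i, j + 1):
            ranks[order[m]] = avg
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho computed as the Pearson correlation of average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)
```

With 41 adolescents, both the t‐test and the correlation have 39 degrees of freedom, matching the t(39) and r(39) values reported in the Results.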

Results

Survey differences

As indicated earlier, the survey was split into two sub‐sections to reduce participant burden. Overall kappa was κ = 0.81 for Survey 1 and κ = 0.84 for Survey 2. Kappa for adolescents was κ = 0.81 for Survey 1 and κ = 0.79 for Survey 2. Kappa for parents of adolescents was κ = 0.83 and κ = 0.84 for Surveys 1 and 2, respectively. Kappa for mental health professionals was κ = 0.81 for Survey 1 and κ = 0.89 for Survey 2. All kappa values indicated at least substantial agreement, and all p values were < 0.01. Further analyses of kappa by emotion type are displayed in Table 1.

Table 1.

Depicts kappa scores for Surveys 1 and 2 by group across each expression

| Expression | Survey | Adolescents Kappa score (p value) | Parents Kappa score (p value) | Professionals Kappa score (p value) | Total Kappa score (p value) |
|---|---|---|---|---|---|
| Fear | 1 | 0.79 (p < 0.01) | 0.75 (p < 0.01) | 0.83 (p < 0.01) | 0.64 (p < 0.01) |
| | 2 | 0.40 (p < 0.01) | 0.51 (p < 0.01) | 0.50 (p < 0.01) | 0.50 (p < 0.01) |
| Anger | 1 | 0.51 (p < 0.01) | 0.52 (p < 0.01) | 0.57 (p < 0.01) | 0.53 (p < 0.01) |
| | 2 | 0.43 (p < 0.01) | 0.48 (p < 0.01) | 0.50 (p < 0.01) | 0.46 (p < 0.01) |
| Happy | 1 | 0.87 (p < 0.01) | 0.85 (p < 0.01) | 0.87 (p < 0.01) | 0.89 (p < 0.01) |
| | 2 | 0.90 (p < 0.01) | 0.90 (p < 0.01) | 0.97 (p < 0.01) | 0.91 (p < 0.01) |
| Neutral | 1 | 0.60 (p < 0.01) | 0.62 (p < 0.01) | 0.66 (p < 0.01) | 0.40 (p < 0.01) |
| | 2 | 0.28 (p < 0.01) | 0.38 (p < 0.01) | 0.45 (p < 0.01) | 0.35 (p < 0.01) |
| Sad | 1 | 0.15 (p < 0.01) | 0.20 (p < 0.01) | 0.15 (p < 0.01) | 0.15 (p < 0.01) |
| | 2 | 0.37 (p < 0.01) | 0.45 (p < 0.01) | 0.55 (p < 0.01) | 0.42 (p < 0.01) |

Apart from the age of the mental health professionals, no differences between the two surveys were noted in the types of images shown or in rater characteristics; the results of both surveys were therefore combined for analytic purposes. There was no statistically significant difference between the two surveys in overall agreement with previously reported classifications [F(1, 127) = 2.21, p = 0.14]. See Supporting Information Table S1 for a breakdown of participant characteristics between the two surveys.

Agreement with previously reported classification

Table 2 indicates the percentage agreement between the respondents and the previously reported classifications established by Egger et al. (2011). The mean agreement rate across emotions for all three groups was 89.52%: mean (M) = 87.97% for adolescents, M = 89.74% for parents of adolescents, and M = 91.02% for mental health professionals. However, the overall agreement with previously reported classifications differed significantly between groups [F(2, 128) = 4.31, p = 0.02]. For total agreement, adolescents and the mental health professionals differed (Tukey test, p = 0.01) such that mental health professionals had higher overall agreement with previously reported classifications than adolescents. There was no significant difference between the parents of adolescents and the mental health professionals (Tukey test, p = 0.40) or adolescents and parents of adolescents (Tukey test, p = 0.15) in accuracy ratings.

Table 2.

Mean accuracy, standard deviation (SD), and range of accuracy by group across all expressions

| Group | Mean % (SD) | Range % |
|---|---|---|
| Adolescents | 87.97 (5.87) | 67.79–96.07 |
| Parents | 89.74 (4.00) | 77.16–97.63 |
| Professionals | 91.02 (3.39) | 80.75–95.22 |
| Total | 89.52 (4.66) | 67.79–97.63 |

For 72 of the 257 stimuli (28.02%), there was complete agreement among all raters with the previously reported emotion classification. Additionally, 194 images (75.49%) reached at least 90% agreement with the previously reported classification, and 220 stimuli (85.60%) reached at least 75% agreement. Therefore, only 37 of the 257 images (14.40% of stimuli) did not reach 75% agreement, a criterion suggested by Egger et al. (2011) to establish accuracy. See Supporting Information Table S1 for the agreement percentages for each of the specific images.

Agreement by emotion condition

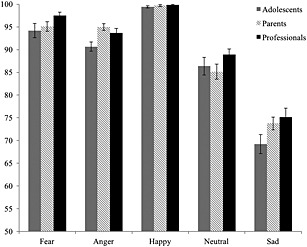

Table 3 indicates the percentage agreement between the respondents and previously reported classification for each emotion type. Stimuli expressing happiness showed highest agreement across all raters, followed by fear, anger, and neutral stimuli. Images displaying sadness showed the lowest agreement across rater groups. See Table 3 and Figure 3 for breakdown of agreement by emotion type for each of the rater groups.

Table 3.

Accuracy for each emotion by group

| Expression (number of images) | Adolescents Mean % (SD) Range | Parents Mean % (SD) Range | Professionals Mean % (SD) Range | Total Mean % (SD) Range |
|---|---|---|---|---|
| Fear (N = 51) | 94.18 (10.10) 50.00–100 | 95.09 (7.60) 58.33–100 | 97.53 (4.16) 83.33–100 | 95.44 (7.87) 50.00–100 |
| Anger (N = 52) | 90.68 (6.49) 73.08–100 | 94.97 (5.18) 80.77–100 | 93.66 (5.98) 76.92–100 | 93.26 (6.04) 73.08–100 |
| Happy (N = 50) | 99.44 (1.62) 92.31–100 | 99.72 (1.64) 88.46–100 | 99.89 (0.67) 96.15–100 | 99.67 (1.44) 88.46–100 |
| Neutral (N = 56) | 86.37 (12.53) 43.33–100 | 85.16 (12.44) 37.04–100 | 88.91 (7.14) 70.00–100 | 86.54 (11.34) 37.04–100 |
| Sad (N = 48) | 69.21 (13.50) 37.50–95.83 | 73.77 (10.29) 41.66–95.83 | 75.12 (11.63) 37.50–91.67 | 72.67 (11.90) 37.50–95.83 |

Figure 3.

A bar graph displaying mean accuracy rates by group. The black bars represent the mental health professionals, the hatched bars represent parents, and the grey bars represent adolescents.

The groups differed in rating agreement for anger [F(2, 128) = 6.46; p < 0.01]: parents of adolescents agreed with the previously reported classification of angry faces more often than adolescents did (Tukey test, p < 0.01). No other differences among groups were noted (see Figure 3 for accuracy by rater group). In particular, mental health professionals did not differ from adolescents in rating agreement for any of the expressions (Tukey test, p = 0.07–0.59).

Happiness was the emotion most often identified correctly across all three rater groups, with at least 97% agreement among raters with the previously reported classification for all 50 images expressing happiness. Sadness, however, was the emotion least likely to be identified correctly: there was perfect agreement among raters for only three of the 48 images of sadness. For images of anger, all raters agreed with the previously reported classification for 12 of the 52 images. For fear, complete agreement among raters occurred for nine of the 51 images. For neutral expressions, complete agreement was reached for five of the 56 images. See Table 4 for summary percentages of correctly identified stimuli for each emotion.

Table 4.

Percentage agreement across raters for individual faces (values are the percentage of images in each emotion category reaching each level of agreement)

| Agreement with intended emotion | Fear | Anger | Happy | Neutral | Sad |
|---|---|---|---|---|---|
| 100% | 17.65 | 23.08 | 86.00 | 8.93 | 6.25 |
| ≥ 85% | 94.12 | 86.54 | 100.00 | 69.64 | 47.92 |
| ≥ 75% | 96.08 | 90.38 | 100.00 | 80.36 | 60.42 |

Emotion misattribution

To evaluate misattributions (incorrectly identified expressions) in finer detail, we examined the types of errors made for misidentified faces. Table 5 shows the total number of times each group misidentified an expression, which expression was incorrectly chosen, and the proportion of errors accounted for by each incorrectly chosen expression. Misidentified fearful faces were most commonly labelled as angry, followed by neutral, sad, and happy. Misidentified angry faces were labelled as neutral, sad, fearful, and happy, in that order. Misidentified happy faces were labelled as angry, sad, fearful, and neutral. Misidentified neutral faces were labelled as angry most often, followed by sad, happy, and fearful. Misidentified sad faces were labelled as angry, followed by neutral, fearful, and happy.
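The misattribution breakdown in Table 5 amounts to tabulating only the incorrect responses and expressing each incorrectly chosen label as a percentage of all errors for that depicted expression. A minimal sketch, assuming the responses are available as hypothetical (depicted, chosen) label pairs:

```python
from collections import Counter


def misattribution_breakdown(responses):
    """Map depicted -> {chosen: (count, percent of that expression's errors)}.

    Correct responses (chosen == depicted) are ignored.
    """
    errors = Counter((d, c) for d, c in responses if d != c)
    totals = Counter()
    for (depicted, _), n in errors.items():
        totals[depicted] += n
    breakdown = {}
    for (depicted, chosen), n in errors.items():
        breakdown.setdefault(depicted, {})[chosen] = (n, 100.0 * n / totals[depicted])
    return breakdown
```

Running it separately on each rater group's responses would give the per‐group columns, and running it on the pooled responses would give the Total column.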

Table 5.

Overall emotion misattribution

| Expression depicted | Expression chosen | Adolescents (N) % | Parents (N) % | Clinicians (N) % | Total (N) % |
|---|---|---|---|---|---|
| Fear | Anger | (23) 34.33 | (29) 41.43 | (11) 52.38 | (63) 39.87 |
| | Happy | (12) 17.91 | (4) 5.71 | (1) 4.76 | (17) 10.76 |
| | Neutral | (20) 29.85 | (20) 28.5 | (3) 14.28 | (43) 27.22 |
| | Sad | (12) 17.91 | (17) 24.29 | (6) 28.57 | (35) 22.15 |
| Anger | Fear | (11) 11.22 | (16) 18.18 | (4) 7.41 | (31) 12.92 |
| | Happy | (1) 0.10 | (2) 0.14 | (0) 0 | (3) 1.25 |
| | Neutral | (48) 48.98 | (42) 47.72 | (22) 40.74 | (112) 46.67 |
| | Sad | (38) 38.76 | (28) 31.81 | (28) 51.85 | (94) 39.17 |
| Happy | Fear | (1) 16.67 | (0) 0 | (1) 100 | (2) 18.18 |
| | Anger | (4) 66.67 | (2) 0.14 | (0) 0 | (5) 45.45 |
| | Neutral | (2) 16.67 | (42) 47.72 | (0) 0 | (1) 9.10 |
| | Sad | (0) 0 | (28) 31.81 | (0) 0 | (3) 36.61 |
| Neutral | Fear | (10) 6.10 | (5) 2.13 | (3) 2.75 | (18) 3.54 |
| | Anger | (77) 46.95 | (131) 55.74 | (52) 47.71 | (260) 51.18 |
| | Happy | (18) 10.98 | (0) 0 | (7) 6.42 | (44) 8.66 |
| | Sad | (59) 35.96 | (80) 34.04 | (47) 43.12 | (186) 36.61 |
| Sad | Fear | (51) 16.83 | (41) 12.10 | (41) 20.20 | (133) 15.72 |
| | Anger | (145) 47.85 | (242) 71.18 | (125) 61.58 | (512) 60.52 |
| | Happy | (8) 2.64 | (1) 0.29 | (1) 0.50 | (10) 1.18 |
| | Neutral | (99) 32.67 | (56) 16.47 | (36) 17.73 | (191) 22.58 |

Representativeness ratings

Overall, the mean representativeness across all five emotions was 75.61% (SD = 11.29) across all raters. The ratings did not differ significantly across the three groups [F(2, 128) = 0.24, p = 0.78]. In addition, the ratings did not differ for individual expressions [F(2, 128) = 0.48–1.41, p values = 0.25–0.62]. Our findings were consistent with the results reported in Egger et al. (2011), such that images of happy faces had the highest representativeness rating, followed by neutral faces, angry faces, then fearful faces. Sad faces had the lowest representativeness rating. See Table 6 for breakdown of representativeness by emotion type for each of the three rater groups.

Table 6.

Representativeness rating for each emotion by group

| Expression (number of images) | Adolescents Mean % (SD) Range | Parents Mean % (SD) Range | Professionals Mean % (SD) Range | Total Mean % (SD) Range |
|---|---|---|---|---|
| Fear (N = 51) | 69.92 (16.33) 25.71–100 | 69.69 (16.14) 26.43–99.29 | 73.62 (21.11) 49.64–96.07 | 70.80 (15.23) 25.71–100 |
| Anger (N = 52) | 73.26 (14.95) 28.85–100 | 76.03 (14.78) 24.62–100 | 74.06 (12.04) 52.69–97.69 | 74.63 (14.11) 24.61–100 |
| Happy (N = 50) | 89.15 (11.81) 58.85–100 | 91.11 (9.48) 68.08–100 | 92.66 (7.92) 70.77–100 | 90.89 (9.94) 58.85–100 |
| Neutral (N = 56) | 75.17 (14.09) 40.67–100 | 75.52 (13.84) 43.33–100 | 72.35 (9.52) 53.67–95.56 | 74.70 (12.92) 40.67–100 |
| Sad (N = 48) | 66.43 (15.04) 25.00–100 | 69.33 (15.16) 36.25–100 | 64.13 (12.27) 37.92–88.33 | 67.04 (14.47) 25.00–100 |

Gender and age differences

Male and female adolescents did not differ significantly on accuracy across the different emotion types [t(39) = 0.61, p = 0.55] or for specific emotions [t(39) = 0.38–1.31, p values = 0.20–0.71].

There was no significant correlation of accuracies with age in the adolescent group for any of the expressions [Anger: r(39) = 0.21, p = 0.19; Happiness: r(39) = 0.06, p = 0.70; Sadness: r(39) = 0.23, p = 0.16; Fear: r(39) = −0.15, p = 0.35; Neutral: r(39) = −0.02, p = 0.91].

Discussion

In this study, we refined the child and adolescent facial stimulus set presented in Egger et al. (2011) and validated the revised set with a sample of adolescents, parents of adolescents, and mental health professionals. We found high accuracy overall across expressions, consistent with previously reported ratings, although accuracy was somewhat lower for faces expressing sadness and slightly lower among adolescents. We standardized the faces from Egger et al. (2011) for low‐ and high‐level visual properties by standardizing the stimuli for luminance, size, and smoothness, and we adjusted the stimuli for the location of facial features. Visual properties are one of the many factors that influence the choice of a stimulus set for clinical and research purposes. For instance, when measuring covert attention, low‐level visual properties within an image may contribute to spurious findings in neuroimaging or attention retraining research (Naples et al., 2014), which makes proper control of image luminance, smoothness, and size important. Although experiments that allow free viewing, such as eye‐tracking studies, are less susceptible to this type of noise and thus do not require the same degree of smoothing or luminance matching, these properties are nonetheless desirable (Einhäuser and König, 2003).

To our knowledge, this is the only facial stimulus set composed solely of adolescent faces that also accounts for the visual properties of the stimuli. From a neuroscience perspective, adolescents show different fMRI activation when viewing adult compared with adolescent faces (Hoehl et al., 2010; Marusak et al., 2013), and they respond differently from adults when viewing adult emotional faces (Blakemore, 2008). Moreover, the temporal ordering of face processing improves predictably from childhood into adulthood (Taylor et al., 2004; Taylor et al., 1999). A carefully developed and standardized set of adolescent stimuli is therefore needed to advance research in this domain, and we offer such a set in this study.

Our efforts to validate the stimulus set with adolescents, parents of adolescents, and mental health professionals yielded encouraging results. Agreement between our three groups of respondents and the emotion classifications previously reported by Egger et al. (2011) was approximately 90%. Across respondents, agreement was highest for happy faces (almost 100%) and lowest for sad faces (about 73%), with agreement for fearful, angry, and neutral faces falling in between. Neutral expressions are not always perceived as emotionally neutral (Thomas et al., 2001; Lobaugh et al., 2006), which is why some researchers include calm faces in addition to neutral faces (e.g. Tottenham et al., 2009); this should be taken into account when interpreting the ratings of neutral expressions. Our three groups of respondents differed minimally in accuracy, and their representativeness ratings paralleled their accuracy ratings. Parents of adolescents and mental health professionals did not differ in accuracy for any of the expressions, but parents of adolescents were more accurate than adolescents at recognizing angry faces. Even so, adolescents correctly identified approximately 91% of the angry faces (versus 95% for parents). Our finding that parents were more accurate at identifying angry adolescent faces suggests that parents may find such faces more salient. This interpretation is partly supported by Hoehl et al. (2010), who reported that adults show greater amygdala activation than children when viewing angry adolescent faces, and by Marusak et al. (2013), who reported reduced amygdala activation in children when viewing angry versus happy adolescent faces. Still, the group differences we found do not appear to be clinically meaningful, given that more than 90% of the adolescents correctly identified the angry and happy faces.
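The agreement figures discussed above are percentages of ratings that match the reference classification from Egger et al. (2011). A minimal Python sketch of that tally, grouped by reference emotion, is shown below; the `(image_id, reference_label, rater_label)` record layout and the function name are assumptions made for illustration, not the authors' analysis code.

```python
from collections import defaultdict


def agreement_by_emotion(ratings):
    """Percent of ratings matching the reference label, per reference emotion.

    `ratings` is an iterable of (image_id, reference_label, rater_label)
    tuples; this record layout is a hypothetical assumption.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for _image_id, reference, label in ratings:
        totals[reference] += 1
        if label == reference:
            hits[reference] += 1
    return {emotion: 100.0 * hits[emotion] / totals[emotion] for emotion in totals}


# Toy ratings (not study data): two raters judging two images.
toy = [
    ("img01", "sad", "sad"),
    ("img01", "sad", "angry"),
    ("img02", "happy", "happy"),
    ("img02", "happy", "happy"),
]
print(agreement_by_emotion(toy))  # {'sad': 50.0, 'happy': 100.0}
```

Pooling all ratings within an emotion weights each rater-image judgement equally; averaging per-image percentages instead would weight each image equally, and the two can differ when images receive different numbers of ratings.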

Sad faces were the least accurately identified by all three respondent groups: approximately 30% of adolescents and 26% of parents of adolescents and mental health professionals misclassified the sad faces. Relatively poor agreement for sad faces was also reported by Egger et al. (2011) and by other research groups using different face sets (e.g. Mazurski and Bond, 1993; Tottenham et al., 2009). Thus, the relatively poor recognition of sad faces in the current study likely reflects a general feature of sad facial displays rather than a unique feature of this stimulus set. Here, misidentified sad faces were classified as angry about 60% of the time, followed by neutral (23%), fearful (16%), and happy (1%). Recall that accuracy was defined solely against the classifications made by the 20 mental health professionals in the original Egger et al. (2011) study, who identified the emotions that the adolescent actors were expressing; no other accuracy criterion was used. In that study, 76% of the sad direct gaze faces were correctly identified by 75% of the raters, and 23 sad faces had to be eliminated from their final pool of 38 sad faces. In our study, we used only the faces that the mental health professionals in Egger et al. (2011) had identified accurately. Nevertheless, as noted, agreement among our respondents was lowest for the sad faces. For ease of use in research projects, we have included the accuracy and representativeness ratings of each image, by group, in the Supporting Information (Table S2), so that images of any expression can be selected using an acceptable percentage‐agreement criterion.
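Because per‐image ratings are available, a researcher can screen images against a chosen agreement threshold. The Python sketch below assumes hypothetical raw `(image_id, reference_label, rater_label)` records, computes per‐image agreement, keeps the images at or above a criterion, and tallies how misclassified faces of one emotion were labelled, mirroring the sad‐face breakdown above. The 75% default only echoes the rater criterion described for Egger et al. (2011) and is an assumption; choose a threshold suited to the study at hand.

```python
from collections import Counter, defaultdict


def per_image_agreement(ratings):
    """Percent of raters whose label matches the reference label, per image.

    `ratings`: iterable of (image_id, reference_label, rater_label) tuples
    (a hypothetical layout chosen for illustration).
    """
    hits, totals = defaultdict(int), defaultdict(int)
    for image_id, reference, label in ratings:
        totals[image_id] += 1
        hits[image_id] += int(label == reference)
    return {img: 100.0 * hits[img] / totals[img] for img in totals}


def select_images(ratings, criterion=75.0):
    """Sorted image ids whose percentage agreement meets or exceeds `criterion`."""
    agreement = per_image_agreement(ratings)
    return sorted(img for img, pct in agreement.items() if pct >= criterion)


def misclassification_breakdown(ratings, emotion):
    """Share (%) of each wrong label among misclassified faces of `emotion`."""
    wrong = Counter(
        label for _img, reference, label in ratings
        if reference == emotion and label != reference
    )
    n_wrong = sum(wrong.values())
    if n_wrong == 0:
        return {}
    return {label: 100.0 * count / n_wrong for label, count in wrong.items()}


# Toy ratings (not study data): four raters judging two sad faces.
toy = [
    ("sad01", "sad", "sad"), ("sad01", "sad", "sad"),
    ("sad01", "sad", "angry"), ("sad01", "sad", "sad"),
    ("sad02", "sad", "angry"), ("sad02", "sad", "neutral"),
    ("sad02", "sad", "sad"), ("sad02", "sad", "angry"),
]
print(select_images(toy))                       # ['sad01']
print(misclassification_breakdown(toy, "sad"))  # {'angry': 75.0, 'neutral': 25.0}
```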

Further research is needed to determine the sources of inaccuracy for the sad stimuli and to establish whether a more objective accuracy criterion than the judgements of mental health professionals provided by Egger et al. (2011) can be developed. Most importantly, our findings across adolescents, parents of adolescents, and mental health professionals support the validity of the happy, angry, fearful, and neutral faces. Further, our respondent groups judged the representativeness of the emotion faces to be satisfactory. Overall, the stimuli were successfully validated in the three populations examined (adolescents, parents of adolescents, and mental health professionals). This study set out to test differences in face perception, in terms of accuracy ratings, across our three groups of raters. Group differences emerged only partially, possibly because accuracy was generally high in all groups. The developmental difference we did observe in anger ratings between adolescents and parents may reflect the age‐related improvements in face perception observed in EEG studies (Taylor et al., 2004), although this was not tested in the current study.

Several limitations of our study should be noted, some of which were also evident in the prior study by Egger et al. (2011). First, neither study included disgusted or surprised faces. Both disgust and surprise have been identified as basic emotions (Ekman and Friesen, 1975, 1976) and need to be examined in future studies. Second, over 95% of the facial stimuli depicted Caucasian actors, and over 95% of our respondents were Caucasian. Our findings are therefore limited to this racial/ethnic group and may not generalize to other races and ethnicities. This is a notable limitation, and one in need of careful and systematic inquiry. Third, the sample size for each group in this study is comparatively small, and this limited number of raters should be kept in mind when interpreting the results.

These limitations notwithstanding, the current study provides useful data on a carefully refined set of facial emotion stimuli for basic and clinical research, and validates this set across adolescent, parent, and mental health professional groups. Given noted differences in developmental markers of face perception and expertise (e.g. Benoit et al., 2007), we suggest it is important for researchers to use age‐matched stimuli for the population under study. Our research group is currently using this stimulus set in an NIMH‐sponsored study with socially anxious adolescents who are receiving attention retraining via a dot‐probe paradigm to address their implicit attentional biases. Future studies will also need to examine the utility of these stimuli for exploring the potentially enhanced effects of viewing similar‐age emotional faces in brain‐based tasks, as suggested by Blakemore (2008), Hoehl et al. (2010), Marusak et al. (2013), and Taylor and colleagues (Taylor et al., 2004; Taylor et al., 1999).

Supporting information

Acknowledgements

This work was supported in part by the National Institute of Mental Health (NIMH) Grant # R34 MH096915 awarded to Thomas H. Ollendick. The authors gratefully acknowledge the NIMH for its support and the many colleagues who assisted them with various aspects of the present research, including Kathleen Driscoll who helped create the surveys. Finally, the authors thank the parents, teenagers, and colleagues who participated in the validation ratings.

Coffman, M. C., Trubanova, A., Richey, J. A., White, S. W., Kim‐Spoon, J., Ollendick, T. H., and Pine, D. S. (2015) Validation of the NIMH‐ChEFS adolescent face stimulus set in an adolescent, parent, and health professional sample. Int. J. Methods Psychiatr. Res., 24: 275–286. doi: 10.1002/mpr.1490.

Footnotes

The stimuli were developed by Ellen Leibenluft and Danny Pine at NIMH and first validated by Egger et al. (2011) with mental health professionals.

For more detail on the validation of our processing steps, please see Supporting Information.

Processing within each window uses the original pixel value, not the previously calculated values.

The image set is available at each of the processing steps (i.e., unadjusted colour, unadjusted grey scale, and all smoothing steps) at http://www.scanlab.org/downloads.html

Notably, the parents of adolescents did not necessarily include parents of the adolescents who participated in the survey; parents whose teenagers did not participate in the survey were included, as were teenagers whose parents did not participate in the survey. The anonymity of the survey did not allow for knowledge of parent–child dyads.

For parents and mental health professionals, the difference in age was calculated using the median age in each age group (e.g. for individuals between 24 and 26 years, age 25 was used for comparison analyses). The age ranges were equally spaced, allowing for a parametric comparison.
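The midpoint substitution described in this footnote can be sketched in a few lines of Python. The `(low, high)` tuple representation of an age bin and the function names are hypothetical, and the example bins other than 24 to 26 are illustrative rather than the survey's actual categories.

```python
from statistics import mean


def bin_midpoint(age_bin):
    """Midpoint of an inclusive (low, high) age bin, e.g. (24, 26) -> 25.0."""
    low, high = age_bin
    return (low + high) / 2


def mean_binned_age(age_bins):
    """Mean age of a group whose ages were reported only as bins."""
    return mean(bin_midpoint(b) for b in age_bins)


# Illustrative bins only; equally spaced bins keep the midpoints equally spaced.
group = [(24, 26), (27, 29), (27, 29)]
print(mean_binned_age(group))  # 27.0
```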

References

- Archer J., Hay D.C., Young A.W. (1992) Face processing in psychiatric conditions. British Journal of Clinical Psychology, 31(1), 45–61.

- Bachmann T. (1991) Identification of spatially quantized tachistoscopic images of faces: How many pixels does it take to carry identity? European Journal of Cognitive Psychology, 3(1), 87–103.

- Benoit K.E., McNally R.J., Rapee R.M., Gamble A.L., Wiseman A.L. (2007) Processing of emotional faces in children and adolescents with anxiety disorders. Behaviour Change, 24(04), 183–194.

- Biehl M., Matsumoto D., Ekman P., Hearn V., Heider K., Kudoh T., Ton V. (1997) Matsumoto and Ekman's Japanese and Caucasian Facial Expressions of Emotion (JACFEE): Reliability data and cross‐national differences. Journal of Nonverbal Behavior, 21(1), 3–21.

- Blakemore S.J. (2008) The social brain in adolescence. Nature Reviews Neuroscience, 9(4), 267–277.

- Costen N.P., Parker D.M., Craw I. (1994) Spatial content and spatial quantization effects in face recognition. Perception, 23, 129–146.

- Cowart M.J.W., Ollendick T.H. (2010) Attentional biases in children: Implication for treatment. In Hadwin J.A., Field A.P. (eds) Information Processing Biases and Anxiety: A Developmental Perspective, pp. 297–319, Oxford University Press: Oxford.

- Cowart M.J.W., Ollendick T.H. (2011) Attention training in socially anxious children: A multiple baseline design analysis. Journal of Anxiety Disorders, 25(7), 972–977.

- De Voogd E.L., Wiers R.W., Prins P.J.M., Salemink E. (2014) Visual search attentional bias modification reduced social phobia in adolescents. Journal of Behavior Therapy and Experimental Psychiatry, 45(2), 252–259.

- Egger H.L., Pine D.S., Nelson E., Leibenluft E., Ernst M., Towbin K.E., Angold A. (2011) The NIMH Child Emotional Faces Picture Set (NIMH‐ChEFS): A new set of children's facial emotion stimuli. International Journal of Methods in Psychiatric Research, 20(3), 145–156.

- Ekman P., Friesen W.V. (1975) Unmasking the face: A guide to recognizing emotions from facial cues, Palo Alto, CA: Consulting Psychologists Press.

- Ekman P., Friesen W.V. (1976) Pictures of Facial Affect, Palo Alto, CA: Consulting Psychologists Press.

- Einhäuser W., König P. (2003) Does luminance‐contrast contribute to a saliency map for overt visual attention? European Journal of Neuroscience, 17(5), 1089–1097.

- Ferri J., Bress J.N., Eaton N.R., Proudfit G.H. (2013) The impact of puberty and social anxiety on amygdala activation to faces in adolescence. Developmental Neuroscience, 36(3–4), 239–249.

- Goren C.C., Sarty M., Wu P.Y. (1975) Visual following and pattern discrimination of face‐like stimuli by newborn infants. Pediatrics, 56(4), 544–549.

- Gotlib I.H., Krasnoperova E., Yue D.N., Joormann J. (2004) Attentional biases for negative interpersonal stimuli in clinical depression. Journal of Abnormal Psychology, 113(1), 127.

- Greist J.H. (1994) The diagnosis of social phobia. The Journal of Clinical Psychiatry, 56(5), 5–12.

- Hallion L.S., Ruscio A.M. (2011) A meta‐analysis of the effect of cognitive bias modification on anxiety and depression. Psychological Bulletin, 137(6), 940.

- Hoehl S., Brauer J., Brasse G., Striano T., Friederici A.D. (2010) Children's processing of emotions expressed by peers and adults: An fMRI study. Social Neuroscience, 5(5–6), 543–559.

- Horley K., Williams L.M., Gonsalvez C., Gordon E. (2004) Face to face: Visual scanpath evidence for abnormal processing of facial expressions in social phobia. Psychiatry Research, 127(1), 43–53.

- Jones W., Carr K., Klin A. (2008) Absence of preferential looking to the eyes of approaching adults predicts level of social disability in 2‐year‐old toddlers with autism spectrum disorder. Archives of General Psychiatry, 65(8), 946–954.

- Kleiner K.A., Banks M.S. (1987) Stimulus energy does not account for 2‐month‐olds’ face preferences. Journal of Experimental Psychology: Human Perception and Performance, 13(4), 594.

- Leppänen J.M., Moulson M.C., Vogel‐Farley V.K., Nelson C.A. (2007) An ERP study of emotional face processing in the adult and infant brain. Child Development, 78(1), 232–245.

- Lobaugh N.J., Gibson E., Taylor M.J. (2006) Children recruit distinct neural systems for implicit emotional face processing. Neuroreport, 17(2), 215–219.

- Lundqvist D., Flykt A., Öhman A. (1998) The Karolinska directed emotional faces (KDEF). CD ROM from Department of Clinical Neuroscience, Psychology Section, Karolinska Institutet, 91‐630.

- Macchi Cassia V. (2011) Age biases in face processing: The effects of experience across development. British Journal of Psychology, 102(4), 816–829.

- Macchi Cassia V., Pisacane A., Gava L. (2012) No own‐age bias in 3‐year‐old children: More evidence for the role of early experience in building face‐processing biases. Journal of Experimental Child Psychology, 113(3), 372–382.

- McLeod B.D., Jensen‐Doss A., Ollendick T.H. (eds). (2013) Diagnostic and Behavioral Assessment in Children and Adolescents: A Clinical Guide, New York, Guilford Press.

- Marusak H.A., Carré J.M., Thomason M.E. (2013) The stimuli drive the response: An fMRI study of youth processing adult or child emotional face stimuli. NeuroImage, 83, 679–689.

- Mathews A., MacLeod C. (2002) Induced processing biases have causal effects on anxiety. Cognition and Emotion, 16(3), 331–354.

- Maurer D., Young R.E. (1983) Newborn's following of natural and distorted arrangements of facial features. Infant Behavior and Development, 6(1), 127–131.

- Mazurski E.J., Bond N.W. (1993) A new series of slides depicting facial expressions of affect: A comparison with the pictures of facial affect series. Australian Journal of Psychology, 45(1), 41–47.

- Mogg K., Millar N., Bradley B.P. (2000) Biases in eye movements to threatening facial expressions in generalized anxiety disorder and depressive disorder. Journal of Abnormal Psychology, 109(4), 695.

- Naples A.N., Nguyen‐Phuc A., Coffman M.C., Kresse A., Bernier R., McPartland C. (2014) A computer‐generated animated face stimulus set for psychophysiological research. Behavior Research Methods, 47(2), 562–570. DOI: 10.3758/s13428-014-0491-x.

- Nasanen R. (1999) Spatial frequency bandwidth used in the recognition of facial images. Vision Research, 39(23), 3824–3833.

- Pelphrey K.A., Sasson N.J., Reznick J.S., Paul G., Goldman B.D., Piven J. (2002) Visual scanning of faces in autism. Journal of Autism and Developmental Disorders, 32(4), 249–261.

- Porter G., Troscianko T., Gilchrist I.D. (2007) Effort during visual search and counting: Insights from pupillometry. The Quarterly Journal of Experimental Psychology, 60(2), 211–229.

- R Development Core Team (2010) R: A Language and Environment for Statistical Computing, Vienna, R Foundation for Statistical Computing; Retrieved from http://www.R-project.org [28 January 2014].

- Rousselet G.A., Pernet C.R. (2011) Quantifying the time course of visual object processing using ERPs: It's time to up the game. Frontiers in Psychology, 2(107), 2–6. DOI: 10.3389/fpsyg.2011.00107.

- Taylor M.J., Batty M., Itier R.J. (2004) The faces of development: A review of early face processing over childhood. Journal of Cognitive Neuroscience, 16(8), 1426–1442.

- Taylor M.J., McCarthy G., Saliba E., Degiovanni E. (1999) ERP evidence of developmental changes in processing of faces. Clinical Neurophysiology, 110(5), 910–915.

- Thomas K.M., Drevets W.C., Whalen P.J., Eccard C.H., Dahl R.E., Ryan N.D., Casey B.J. (2001) Amygdala response to facial expressions in children and adults. Biological Psychiatry, 49(2), 309–316.

- Tottenham N., Tanaka J.W., Leon A.C., McCarry T., Nurse M., Hare T.A., Marcus D.J., Westerlund A., Casey B., Nelson C. (2009) The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research, 168(3), 242–249.
