Abstract

Objectives

Empirical study of public behavioral health systems' use of data and their investment in evidence-based treatment (EBT) is limited. This study describes trends in state-level EBT investment and research supports from 2001 to 2012.

Methods

Multilevel models examined change over time related to state adoption of EBTs, numbers served, and penetration rates for six behavioral health EBTs for adults and children, based on data from the National Association for State Mental Health Program Directors Research Institute (NRI). State supports related to research, evaluation, and information management were also examined.

Results

Increasing percentages of states reported funding an external research center, promoting the adoption of EBTs through provider contracts, and providing financial incentives for EBTs. Decreasing percentages of states reported promoting EBT adoption through stakeholder mobilization, monitoring fidelity, and specific budget requests. There was greater reported use of adult-focused EBTs (65-80%) compared with youth EBTs (25-50%). Overall penetration rates of EBTs were low (1% - 3%) and EBT adoption by states showed flat or declining trends. SMHAs' investment in data systems and use of research showed little change.

Conclusion

SMHA investment in EBTs, implementation infrastructure, and use of research has declined. More systematic measurement and examination of these metrics may provide a useful approach for setting priorities, evaluating success of health reform efforts, and making future investments.

Over the past several decades, a steady stream of articles, reports, and national calls to action have concluded that public behavioral health systems are characterized by ineffectiveness (1-3). Many of these reports called for increasing the availability of evidence-based treatments (EBTs) in public behavioral health systems and systematic use of data and research for continuous quality improvement (1-5).

States are in a clear position to lead mental health service and system reform efforts, including investment in EBTs and application of research to improve outcomes (6-8). Thus, states represent a logical focus for research on public system investments in research and evidence. Overall, however, empirical study of state support for EBT implementation and data use is scant (9).

One potential metric for gauging use of research evidence is the extent to which states implement EBTs. EBT implementation has been widely promoted as a partial solution to improving efficiency and increasing the likelihood of effectively addressing the behavioral health needs of children and adults (10, 11). Because commitment to EBTs requires a commensurate commitment to practitioner and organizational capacity to deliver EBTs, state infrastructural supports (e.g., policy and fiscal incentives, workforce development supports, data systems, and research centers or centers of excellence) represent a second potential metric (12-14).

In 1987, a not-for-profit research center for the National Association of State Mental Health Program Directors (NASMHPD) was established. In 1993, the NASMHPD Research Institute (NRI) initiated regular surveys of state representatives about the characteristics of state mental health authorities (SMHAs), including (starting in 2001) the nature and extent of their investment in EBTs and support to research, evaluation, and data use. To date, these data have not been used to examine state trends in EBT implementation, EBT implementation supports, or methods for promoting data and research use, all potential indicators of “research-based decision-making.”

Using the NRI data, the current study examines the degree to which state systems invest in EBTs and research-based supports, and how such investments have changed over the period from 2001-2012. This period represents the initiation of NRI tracking of specific EBTs as well as a time of increased awareness of and federal initiatives to support behavioral health EBTs (3, 15-17). We address three research questions. First, how have the rates of use and penetration of EBTs by SMHAs changed from 2001-2012? Second, what kinds of support to EBTs are provided by SMHAs, and how has this changed over time? Third, what infrastructure is in place to support use of data by SMHAs, and how has this changed over time?

Methods

Data Source and Elements

NRI data originate from two publicly available sources. The State Profiles System (SPS) asks questions such as whether the SMHA conducted research on client outcomes, implemented a statewide client outcomes monitoring system, engaged in initiatives to build awareness of EBTs, and other similar items as displayed in Table 1. All of these were asked of the system in general and were not specifically tied to individual EBTs. Data elements from the Uniform Reporting System (URS) focus on counts of individuals receiving specific EBTs and estimates of youth with serious emotional disturbance and adults with serious mental illness. Prevalence estimates are overall estimates, not specific to the number of people eligible for each EBT. Additional details can be found in recent reports (18, 19). Because all data are publicly available, formal review by an Institutional Review Board was not required.

Table 1. Proportions of states endorsing activities to support research, data use, and evidence-based treatments.

| Item | 2001 N | 2001 % | 2002 N | 2002 % | 2004 N | 2004 % | 2007 N | 2007 % | 2009 N | 2009 % | 2010 N | 2010 % | 2012 N | 2012 % |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Does/Has the SMHA… | | | | | | | | | | | | | | |
| Conduct research/evaluations on client outcomes?³ | 13 | 29 | 32 | 84 | 36 | 75 | 42 | 88 | 40 | 83 | -- | -- | -- | -- |
| Implemented a statewide client outcomes monitoring system? | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | 29 | 58 | 29 | 64 |
| Integrated its client datasets with client datasets from other agencies? | -- | -- | 23 | 49 | 28 | 58 | -- | -- | 26 | 54 | 25 | 50 | 24 | 53 |
| Produce a directory of research and/or evaluation projects? | 11 | 23 | 11 | 23 | 7 | 15 | 9 | 19 | 9 | 19 | 11 | 23 | -- | -- |
| Operate a Research Center/Institute? | 8 | 17 | 8 | 17 | 7 | 15 | 7 | 15 | 7 | 15 | 4 | 8 | -- | -- |
| Fund a Research Center/Institute?¹ | 6 | 13 | 11 | 23 | 9 | 19 | 13 | 27 | 8 | 17 | 15 | 31 | -- | -- |
| What initiatives, if any, are you implementing to promote the adoption of EBTs? | | | | | | | | | | | | | | |
| Awareness/Training¹ | -- | -- | 38 | 75 | 34 | 71 | 41 | 84 | 43 | 86 | 44 | 88 | 37 | 86 |
| Consensus building among stakeholders² | -- | -- | 38 | 79 | 36 | 75 | 44 | 90 | 42 | 84 | 41 | 82 | 31 | 72 |
| Incorporation in contracts¹ | -- | -- | 20 | 42 | 21 | 44 | 30 | 61 | 29 | 58 | 37 | 74 | 29 | 67 |
| Monitoring of fidelity² | -- | -- | 25 | 52 | 27 | 56 | 34 | 69 | 36 | 72 | 35 | 70 | 29 | 67 |
| Financial incentives¹ | -- | -- | 8 | 17 | 15 | 31 | 14 | 29 | 18 | 37 | 19 | 38 | 15 | 35 |
| Modification of IT systems and data reports | -- | -- | 20 | 42 | 22 | 46 | 27 | 55 | 25 | 50 | 29 | 58 | 22 | 51 |
| Specific budget requests² | -- | -- | 14 | 29 | 19 | 40 | 25 | 51 | 19 | 39 | 19 | 38 | 12 | 28 |
| Does the SMHA conduct research/evaluations on… | | | | | | | | | | | | | | |
| Utilization Rates | -- | -- | 33 | 87 | 36 | 78 | 40 | 83 | 39 | 81 | -- | -- | -- | -- |
| Change in Functioning¹ | -- | -- | 26 | 68 | 29 | 63 | 35 | 73 | 37 | 77 | -- | -- | -- | -- |
| Penetration Rates | -- | -- | 28 | 74 | 30 | 65 | 35 | 73 | 36 | 75 | -- | -- | -- | -- |

¹ p < .05 for a linear time trend

² p < .05 for a quadratic time trend

³ p < .05 for a cubic time trend

Sample

Information was provided by SMHA representatives in all 50 states, the District of Columbia, Puerto Rico, and the Virgin Islands. Response rates by states and territories were high, ranging from 87% (46 of 53) in 2001 to 98% (52 of 53) in 2005.

EBTs tracked

EBTs tracked include three interventions for children with serious emotional disturbance: Therapeutic Foster Care (20), Multisystemic Therapy (21), and Functional Family Therapy (22); and three treatments for adults with serious mental illness: Supported Housing (23), Supported Employment (24), and Assertive Community Treatment (25). Because the NRI survey is intended to provide federal funding agencies with information on populations of specific interest (adults with serious mental illness, children with serious emotional disturbance), all of these interventions are intended for individuals with complex needs, and most are multimodal (i.e., include multiple strategies that address a range of factors that may influence individual and contextual needs).

Years examined

Data were collected for most variables in 2001, 2002, 2004, 2007, 2009, 2010, and 2012. Data on numbers and rates of individuals served by EBTs, however, are only available from 2007-2012, when the URS was implemented as a state-level accountability mechanism by the Substance Abuse and Mental Health Services Administration (SAMHSA).

Outliers and Missing Data

Continuous variables were examined for possible outliers or data entry errors, and 115 outliers (out of 1,543 total responses, 7%) were deleted or replaced. (For full information on identification and handling of outliers, please see Appendix.)

Data Analysis

Two-level multilevel models (MLM; 26), with time/year nested within state, were used to examine change over time. Three models were run for each dependent variable to test linear, quadratic, and cubic time trends, and the best-fitting parsimonious model was selected for each. (See the Appendix for more information on distributions used, determination of model fit, and software used.)
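As a rough sketch of the model structure described above, the cubic version of a two-level growth model for state $i$ at survey occasion $t$ could be written as follows. The notation ($g$, $\pi$, $\beta$, $r$, $T_{ti}$) is introduced here for illustration only, and $g$ stands for whichever link function matches the outcome distribution; the distributions actually used are reported in the Appendix, not here.

$$
\begin{aligned}
\text{Level 1 (occasions within state):}\quad & g\big(E[Y_{ti}]\big) = \pi_{0i} + \pi_{1i} T_{ti} + \pi_{2i} T_{ti}^{2} + \pi_{3i} T_{ti}^{3} \\
\text{Level 2 (states):}\quad & \pi_{0i} = \beta_{00} + r_{0i}, \qquad \pi_{1i} = \beta_{10} + r_{1i}, \qquad \pi_{2i} = \beta_{20}, \qquad \pi_{3i} = \beta_{30}
\end{aligned}
$$

Dropping the $T_{ti}^{3}$ term gives the quadratic model, and dropping $T_{ti}^{2}$ as well gives the linear model. Under this sketch, a "random" time trend would presumably correspond to retaining the state-level deviation $r_{1i}$, and a "fixed" time trend to setting $r_{1i} = 0$.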

Results

State Use of Evidence and Activities to Promote EBTs

Table 1 presents the percent of states that endorsed each specific practice concerning EBT implementation, training and workforce support, or data and research support. Time trend estimates are in Table 2. Significant linear time trends were found for five variables. Increasing percentages of states reported: Funding an external research center/institute, Initiatives to increase EBT awareness, Promoting the adoption of EBTs through provider contracts, Providing financial incentives for offering EBTs, and Conducting research or evaluation studies examining client change in functioning. The two most dramatic increases (based on the slope size) were in conducting research on change in client functioning, and promoting adoption of EBTs through contracts.

Table 2. Multi-level models testing longitudinal trends.

| Item | Time trend | Intercept | p | Linear slope | p | Quadratic slope | p | Cubic slope | p |
|---|---|---|---|---|---|---|---|---|---|
| Activities to support research, data use, and evidence-based practices. | | | | | | | | | |
| Does/Has the SMHA… | | | | | | | | | |
| Conduct research/evaluations on client outcomes? | Fixed | -.681 | .014 | 1.577 | <.001 | -.327 | .008 | .021 | .030 |
| Implemented a statewide client outcomes monitoring system? | Random | -.721 | .656 | .113 | .484 | | | | |
| Integrated its client datasets with client datasets from other agencies? | Random | .242 | .372 | -.012 | .744 | | | | |
| Produce a directory of research and/or evaluation projects? | Random | -1.303 | <.001 | .004 | .878 | | | | |
| Operate a Research Center/Institute? | Random | -1.412 | <.001 | -.028 | .188 | | | | |
| Fund a Research Center/Institute?¹ | Random | -1.464 | <.001 | .054 | .031 | | | | |
| What initiatives, if any, are you implementing to promote the adoption of EBTs? | | | | | | | | | |
| Awareness/Training | Random | 1.049 | <.001 | .073 | .029 | | | | |
| Consensus building among stakeholders | Random | .765 | .009 | .255 | .019 | -.023 | .022 | | |
| Incorporation in contracts | Random | -.419 | .141 | .119 | .008 | | | | |
| Monitoring of fidelity | Random | -.277 | .420 | .264 | .019 | -.016 | .066 | | |
| Financial incentives | Random | -1.287 | <.001 | .081 | .032 | | | | |
| Modification of IT systems and data reports | Random | -.269 | .318 | .470 | .220 | | | | |
| Specific budget requests | Random | -1.131 | .002 | .311 | .019 | -.027 | .013 | | |
| Does the SMHA conduct research/evaluations on… | | | | | | | | | |
| Utilization Rates | Random | 1.012 | .014 | .041 | .502 | | | | |
| Change in Functioning | Random | .089 | .779 | .134 | .005 | | | | |
| Penetration Rates | Random | .449 | .136 | .070 | .115 | | | | |
| Numbers of people receiving specific EBTs | | | | | | | | | |
| Therapeutic Foster Care | Random | 6.309 | <.001 | .107 | .019 | -.021 | .016 | | |
| Multisystemic Therapy | Random | 5.611 | <.001 | .089 | .020 | | | | |
| Functional Family Therapy | Random | 6.508 | <.001 | -.004 | .938 | | | | |
| Supported Housing | Random | 7.727 | <.001 | -.259 | .029 | .104 | .019 | -.011 | .021 |
| Supported Employment | Random | 6.948 | <.001 | .037 | .394 | | | | |
| Assertive Community Treatment | Random | 7.212 | <.001 | .033 | .163 | | | | |
| Rates of Adults with Serious Mental Illness and Children with Serious Emotional Disturbance Receiving Specific EBTs | | | | | | | | | |
| Therapeutic Foster Care | Fixed | -3.284 | <.001 | .303 | .015 | -.132 | .014 | .014 | .055 |
| Multisystemic Therapy | Fixed | -4.013 | <.001 | .552 | .010 | -.293 | .045 | .039 | .057 |
| Functional Family Therapy | Fixed | -3.874 | <.001 | .737 | .042 | -.311 | .051 | .035 | .066 |
| Supported Housing | Fixed | -.53 | <.001 | .004 | .849 | .045 | .004 | -.009 | .002 |
| Supported Employment | Random | -3.309 | <.001 | .005 | .906 | | | | |
| Assertive Community Treatment | Random | -3.41 | <.001 | -.013 | .459 | | | | |

¹ p < .05 for a linear time trend

² p < .05 for a quadratic time trend

³ p < .05 for a cubic time trend

Significant quadratic time trends were found for three variables. Significantly increasing and then decreasing percentages of states reported Promoting EBT adoption through Consensus building among stakeholders, Promoting EBT adoption through monitoring fidelity, and Promoting EBT adoption through specific budget requests. Reported use of these practices peaked in 2007-2009. A significant cubic trend was found for States conducting research/evaluation on client outcomes, which showed a sharp increase from 2001-2002, and then a slight decrease and slight increase over 2002-2009. No significant change over time was found for: Implementing a statewide outcomes monitoring system, Integrating client datasets with client datasets from other agencies, Producing a directory of research and/or evaluation projects, Operating a research center or institute, Modification of IT systems and data reports, Conducting research/evaluations on utilization rates, and Conducting research/evaluations on penetration rates.
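The timing of an inverted-U trend can be read off the fitted coefficients: a quadratic predictor b1·t + b2·t² with negative b2 turns over at t = -b1 / (2·b2), and this holds whatever monotone link function the model uses. The sketch below applies that formula to the linear and quadratic slopes in Table 2. The function name and dictionary are hypothetical, and the result is in model time units from the model's time origin, whose coding is described in the Appendix and is not assumed here.

```python
def quadratic_peak(linear_slope: float, quadratic_slope: float) -> float:
    """Return the time at which linear_slope*t + quadratic_slope*t**2 peaks."""
    if quadratic_slope >= 0:
        raise ValueError("an inverted-U trend needs a negative quadratic slope")
    return -linear_slope / (2.0 * quadratic_slope)


# (linear slope, quadratic slope) pairs copied from Table 2.
TABLE2_QUADRATIC = {
    "Consensus building among stakeholders": (0.255, -0.023),
    "Monitoring of fidelity": (0.264, -0.016),
    "Specific budget requests": (0.311, -0.027),
}

peaks = {item: quadratic_peak(b1, b2) for item, (b1, b2) in TABLE2_QUADRATIC.items()}

for item, t_peak in peaks.items():
    print(f"{item}: turning point about {t_peak:.1f} time units after the origin")
```

If time were coded in years from the first survey wave (an assumption), these turning points would fall roughly between 2006 and 2010, broadly in line with the reported peak.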

States with EBT Services Available

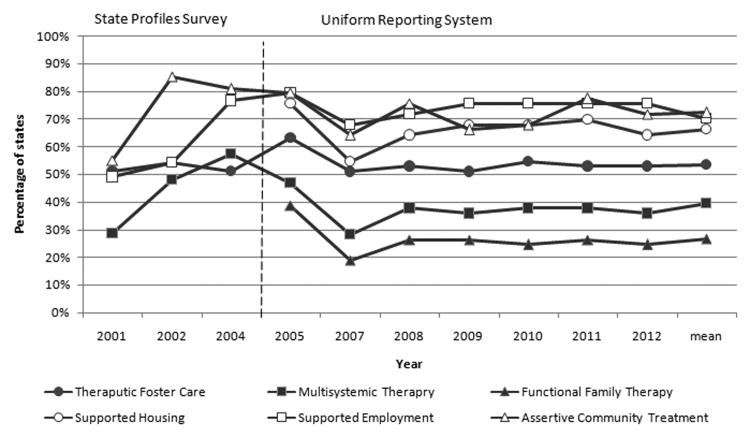

Figure 1 depicts the percentage of states that reported availability for the six EBTs tracked. Averaging across 2001-2012, EBTs serving adults were more commonly available; 72% of states reported the availability of Assertive Community Treatment, 70% reported Supported Employment, and 66% reported Supported Housing. For children and youth, 54% of states reported that Therapeutic Foster Care was available, 39% reported Multisystemic Therapy, and 27% reported Functional Family Therapy.

Figure 1. Percentage of states using specific evidence-based practices, 2001-2012.

Model fitting confirmed that piecewise linear time trends fit the data better than exponential time trends. Table 3 depicts the results of individual piecewise MLMs. For all EBTs with valid data from 2001-2012, there were significant increases in the proportion of states using Therapeutic Foster Care, Multisystemic Therapy, Supported Employment, and Assertive Community Treatment from 2001-2005, and then no significant increases or decreases from 2007-2012. Data on Functional Family Therapy and Supported Housing were not collected until 2005; tests for these practices found no significant increases or decreases from 2005-2012.

Table 3. Piecewise Multilevel Models of change in proportion of states using specific evidence-based practices.

| EBT | Time trend | Slope 2001-2005 | p | Slope 2007-2012 | p |
|---|---|---|---|---|---|
| Therapeutic Foster Care | Random | .347 | <.001 | .051 | .285 |
| Multisystemic Therapy | Fixed | .390 | .004 | .021 | .653 |
| Supported Employment | Random | .458 | <.001 | .043 | .349 |
| Assertive Community Treatment | Random | .133 | .020 | -.081 | .119 |
| | | Slope 2001-2004 | p | Slope 2005-2012 | p |
| Functional Family Therapy | Random | n/a | n/a | -.056 | .202 |
| Supported Housing | Random | n/a | n/a | -.010 | .826 |

Note. Data for Functional Family Therapy and Supported Housing were not collected from 2001-2004. Models were run as overdispersed Bernoulli population-average models with robust standard errors and full penalized quasi-likelihood estimation.
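A piecewise linear model of this kind is usually fitted by splitting time into two variables, one for each segment, so that each segment gets its own slope. The sketch below shows one common coding scheme. The function name, the 2001 start year, and the 2005 knot are assumptions chosen to match the column headings in Table 3; the coding actually used in the analysis is not stated in the text.

```python
from typing import Tuple


def piecewise_time(year: int, start: int = 2001, knot: int = 2005) -> Tuple[int, int]:
    """Split a calendar year into two linear time variables around a knot.

    The first value counts years elapsed from `start` up to the knot and then
    stays constant; the second counts years elapsed after the knot.
    """
    early = min(year, knot) - start
    late = max(year - knot, 0)
    return early, late


# Survey years listed in the "Years examined" section.
SURVEY_YEARS = [2001, 2002, 2004, 2007, 2009, 2010, 2012]
coded = {year: piecewise_time(year) for year in SURVEY_YEARS}

for year, (early, late) in coded.items():
    print(year, early, late)
```

With this coding, the coefficient on the first variable is the slope before the knot and the coefficient on the second is the slope after it, which is how the two slope columns in a table like Table 3 can be estimated in a single model.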

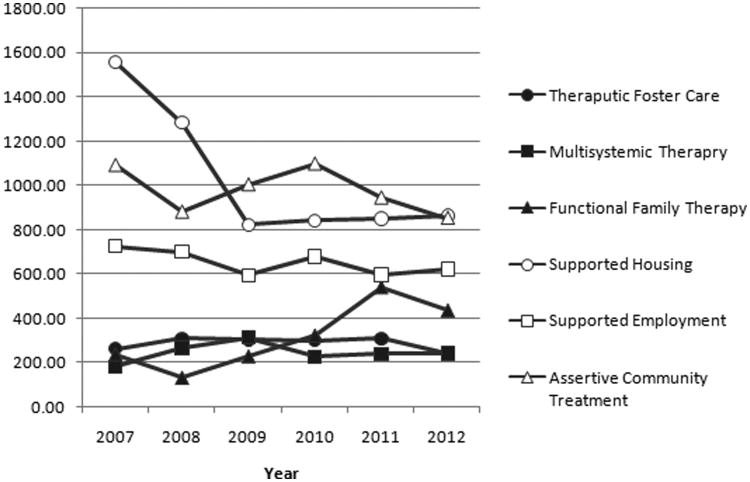

Number of clients served by specific EBTs

Due to positive skew (a few states reporting large numbers served), we examined state medians for clients served, and only used data from states that reported any availability of the relevant EBT. Averaging across 2007-2012, a median of 1,029 clients used Supported Housing, 950 used Assertive Community Treatment, and 669 used Supported Employment per year. By comparison, for EBTs serving children and youth, a median of 371 clients used Functional Family Therapy, 279 clients used Therapeutic Foster Care, and 230 clients used Multisystemic Therapy.
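The reason for preferring medians under positive skew can be seen in a small sketch. The counts below are invented for illustration and are not NRI data; the state labels and variable names are likewise hypothetical. States that do not offer the EBT are excluded before summarizing, as described above.

```python
from statistics import mean, median

# Hypothetical clients-served counts for one EBT in one year.
# None marks a state reporting that the EBT is not available.
clients_served = {"A": 220, "B": 310, "C": 450, "D": None, "E": 9800, "F": 640}

# Keep only states that reported any availability of the EBT.
reporting = [count for count in clients_served.values() if count is not None]

state_median = median(reporting)
state_mean = mean(reporting)

print(f"median = {state_median}, mean = {state_mean}")
```

In this made-up example a single large state pulls the mean far above what a typical state serves, while the median is unaffected.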

Figure 2 displays the median number served per state each year and Table 2 provides MLM estimates for best-fitting time trends. Results indicate a significant but small linear increase in the number of Multisystemic Therapy clients, a significant quadratic change in Therapeutic Foster Care clients (an increase followed by a decrease) and a significant cubic change in the number of Supported Housing clients (a decrease followed by a flattening). No time trends were found for Functional Family Therapy, Supported Employment, or Assertive Community Treatment.

Figure 2. Median numbers of people served by specific evidence-based practices, 2007-2012.

Rates of clients served

Rates of clients served by EBTs were calculated by dividing the reported numbers of clients served by the reported number of adults with serious mental illness or children and youth with serious emotional disturbance in the state. Averaging across 2007-2012, the median rates of clients served were, in descending order, 3% for Supported Housing, 2% for Functional Family Therapy, 2% for Assertive Community Treatment, 2% for Supported Employment, 1% for Therapeutic Foster Care, and 1% for Multisystemic Therapy.
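The rate calculation described above amounts to a single division per state and EBT. A minimal sketch follows; the function name and the input figures are hypothetical and are not drawn from the URS data.

```python
def penetration_rate(clients_served: int, estimated_population: int) -> float:
    """Share of the estimated target population that received the EBT."""
    if estimated_population <= 0:
        raise ValueError("estimated population must be positive")
    return clients_served / estimated_population


# Hypothetical state: 950 clients served, 47,500 adults estimated to have
# serious mental illness.
rate = penetration_rate(950, 47_500)
print(f"penetration rate = {rate:.1%}")
```

Because the denominator is the overall prevalence estimate rather than the number of people eligible for a given EBT, a rate computed this way is best read as a lower bound on reach among those actually eligible.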

Table 2 displays the MLM estimates for best fitting time trends for rates of clients served. Results indicated no significant changes for Supported Employment or Assertive Community Treatment, and significant cubic changes for Therapeutic Foster Care, Multisystemic Therapy, Functional Family Therapy, and Supported Housing. Therapeutic Foster Care and Supported Housing both showed flat trends followed by a substantial decrease and then another flat trend. Multisystemic Therapy showed an increase from 2007-2009 followed by a decrease and a brief increase. Functional Family Therapy showed a flat trend, a sharp increase, and then a slow downward trend from 2009-2012.

Discussion

While there is evidence that many states have invested in infrastructure supports to encourage and support EBT implementation, the impact on availability and reach of EBTs has been relatively small. In 2012, between 65% and 80% of states reported using the adult EBTs examined; yet median numbers of clients served by states were well below 1,000 for each EBT, and penetration rates ranged from only 2% to 3%. Uptake of child EBTs was lower: only 25% to 50% of states reported use of the interventions studied, and median numbers of clients served were only 250-400, reaching only 1%-3% of youth with serious emotional disturbance. Although it is not possible from these data to explain the reasons for the discrepancy in use of adult- versus child-focused EBTs, this could be an extension of the longstanding trend in state behavioral health systems to invest more heavily in services for adults (27).

The results also pointed to large gaps between need and available EBTs for both adults and children. Some of this observed gap may extend from study limitations. Because the NRI survey focuses on a small number of multi-modal interventions for individuals with complex needs, data for a range of well-known interventions for individuals with less complex or intensive behavioral health needs (e.g., cognitive behavioral therapies, behavioral parenting interventions) were not available. Thus, the actual number of EBTs being used across states is likely higher. Second, there have been significant changes since 2001 in states' authority, including increasing penetration of managed care leading to less direct state control over services (18, 28). Thus, states have less authority to oversee EBT initiatives and/or may be unaware of EBTs that are being used through managed care.

Nonetheless, the scant uptake of EBTs found here is consistent with other studies of specific EBT implementation, which demonstrate significant challenges in getting research-based services installed in real world systems (29-32). Results corroborate that the population entrusted to public mental health care is not deriving benefits from receipt of evidence-based therapeutic services. While each of the interventions examined has been proposed as a strategy to meet the behavioral health needs of individuals with the most complex presentations, and is tailored to clients served by specific public systems (e.g., foster care for Therapeutic Foster Care, juvenile justice involved for Multisystemic Therapy), only a very small portion of individuals actually receive one of the EBTs.

Perhaps more worrisome, adoption by states, numbers served, and penetration rates are flat or declining. Even if these interventions are merely considered indicators or proxies of EBT uptake in states, trends examined here suggest that after a burst of initial adoption and expansion in the early 2000s, EBT uptake has leveled off, if not diminished. This study also found similar “inverted-U” shaped trends in state EBT adoption drivers, including consensus building among stakeholders, state-led fidelity monitoring, and specific budget requests for EBTs.

Because research-based strategies require investment in resources, both types of downward trends are unsurprising given the magnitude of the budget shortages that hit most states from 2007 to 2012. In Fiscal Year 2013, 13 SMHAs still had expenditures below 2007 levels (33), and these recent cuts occurred on top of historical and long-standing budget shortfalls that have consistently impacted SMHAs (27).

Regardless, the trend in reduced state support to EBTs and their implementation is troubling, given that resources beyond standard fee-for-service payments are typically necessary for providers to implement EBTs well (34-36). Moreover, the goal of reducing overall public expenditures “downstream” (e.g., corrections, emergency room care, hospitalization) (37) is theoretically linked to maintaining investment in EBTs that reduce the need for those expensive services. Thus, the trend to limit EBT investment may ultimately result in increased state spending. In future planned analyses, we will use NRI along with other data to examine the relationship between fluctuations in state fiscal investments and use of research based strategies.

Some EBT implementation support strategies increased across the study period. Building EBTs into provider contracts showed the single greatest increase among the variables examined, from 43% of states in 2001-2004, to 70% in 2009-2012. A similar pattern was reported by Rieckmann et al. (38), who found that in 2007-2009 the majority of states opted to promote substance use disorder-related EBTs by encouraging providers to deliver these practices with contract funds.

States also reported increased use of financial incentives for provider EBT use over the study period. This may be an encouraging trend, as it has been highlighted as a proactive fiscal policy that encourages EBT adoption (38-40). However, the percentage of states reporting such incentives in 2012 was only 35%, barely more than the 31% that reported using the strategy in 2004.

Despite substantial improvements in information technology (IT) since the turn of the millennium, the percent of states implementing a statewide outcomes monitoring system, integrating client datasets across agencies, producing directories of research and/or evaluation projects, modifying IT systems to support EBT, and conducting research/evaluation did not change from 2001-2012. In 2012, only half of states reported having integrated data systems or a client outcomes monitoring system. This is very troubling given broad consensus that true accountability is achieved not merely through investment in EBTs (41), but through investment in data systems, data integration, and outcomes monitoring that promotes feedback, understanding of system functioning, and continuous improvement (2, 42, 43).

Unfortunately, however, to preserve services, SMHAs report taking their cuts in “administrative” expenses such as data and research use, IT systems, outcomes monitoring, evaluation, and fidelity monitoring. Among evaluation and data use variables, only “funding an external research center/institute” showed an increase over time, from 13% to 31%. While this could be interpreted as a positive trend, it is mirrored by a decrease in SMHAs' internal operation of research centers, from 17% to 8%.

Limitations

SMHAs are not the only systems that provide EBTs in a state. Vocational rehabilitation agencies may provide Supported Employment and child welfare and/or juvenile justice agencies may support Therapeutic Foster Care or Multisystemic Therapy. SMHA respondents may not have been fully informed about all state behavioral health initiatives when responding to NRI-administered surveys, or about localized efforts or pilot projects. In addition, estimates of the penetration rates for EBTs were based on general estimates provided by SMHA respondents of the overall number of youth with serious emotional disturbance or adults with serious mental illness, which may not accurately reflect actual rates of individuals eligible for these EBTs.

Another limitation is that, due to the NRI's funding mandate to focus on services funded by federal block grants, EBT data focus only on services for adults with serious mental illness or children with serious emotional disturbance, not populations with other conditions or less intensive needs. The extent to which these findings are applicable to EBT implementation for other conditions, less intensive needs, or early intervention and prevention is unknown. Relatedly, while most of the EBTs studied are well-defined and manualized, Therapeutic Foster Care is a broader service type, possibly reducing the accuracy and interpretability of utilization trends. Similarly, other important drivers and indicators of research and EBT use were not surveyed and thus could not be examined, including workforce support and training, the role of changing state leadership, differential costs associated with installing EBTs, and shifts over time to managed care. These are all important areas for future research.

Finally, the current study aimed to examine national trends, and thus we presented aggregate results across all states. While beyond the scope of the current study, future analyses should examine patterns of individual state trends and predictors of these variations.

Conclusions

State investment in EBT implementation infrastructure and use of data is critical, and only likely to grow in importance as healthcare reforms call for more effective services and greater quality and accountability. Results of the current study suggest, however, that SMHA investment in EBTs and implementation infrastructure is flat or declining in many areas. Consistent, reliable, and valid measurement of these constructs will assist states in ensuring that their systems of services are capable of meeting the needs of the populations for whom they are responsible. Such data may also serve as a basis for renewed investment, and a metric for reform efforts.

Supplementary Material

Acknowledgments

We would like to thank the hundreds of representatives of state mental health authorities for completing surveys over the years and thus making this study possible. We would also like to thank the William T. Grant Foundation, whose initiative to study the use of research evidence in public systems inspired these analyses.

Funding: This research was partially supported by the Advanced Center on Implementation and Dissemination Science in States for Children and Families (NIMH P30 MH090322).

Footnotes

Previous Presentation: This paper was originally presented at the Seventh Annual National Institutes of Health Dissemination and Implementation Conference, Bethesda, Maryland, December 2014.

Contributor Information

Eric J. Bruns, University of Washington - Psychiatry and Behavioral Sciences, Seattle, Washington

Suzanne E.U. Kerns, University of Washington - Psychiatry and Behavioral Sciences, Seattle, Washington

Michael D. Pullmann, University of Washington - Psychiatry and Behavioral Sciences, Seattle, Washington

Spencer W. Hensley, University of Washington - Psychiatry and Behavioral Sciences, 2815 Eastlake Ave E Suite 200, Seattle, Washington 98102

Ted Lutterman, NASMHPD Research Institute.

Kimberly Hoagwood, New York State Psychiatric Institute, Columbia - Psychiatry and Clinical Psychology.

References

- 1.Kazak AE, Hoagwood K, Weisz JR, et al. A meta-systems approach to evidence-based practice for children and adolescents. American Psychologist. 2010;65:85–97. doi: 10.1037/a0017784. [DOI] [PubMed] [Google Scholar]

- 2.Institute of Medicine. Ethical considerations for research involving prisoners. Washington, DC: National Academy Press; 2006. [Google Scholar]

- 3.U.S. Public Health Services. Mental Health: A Report of the Surgeon General. Washington, DC: Author; 1999. [Google Scholar]

- 4.Institute of Medicine. To err is human: Building a safer health system. Washington, DC: National Academy Press; 1999. [Google Scholar]

- 5.Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. National Academies Press; US: 2001. [PubMed] [Google Scholar]

- 6.Chor KHB, Olin SS, Weaver J, et al. Adoption of Clinical and Business Trainings by Child Mental Health Clinics in New York State. Psychiatric Services. 2014;65:1439–44. doi: 10.1176/appi.ps.201300535. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Bell NN, Shern DL. State mental health commissions: Recommendations for change and future directions. Washington, DC: National Technical Assistance Center for State Mental Health Planning; 2002. [Google Scholar]

- 8.Glisson C, Schoenwald S. The ARC organizational and community intervention strategy for implementing evidence-based children's mental health treatments. Mental Health Services Research. 2005;7:243–59. doi: 10.1007/s11020-005-7456-1. [DOI] [PubMed] [Google Scholar]

- 9.Wisdom JP, Chor KH, Hoagwood KE, et al. Innovation adoption: a review of theories and constructs. Adm Policy Ment Health. 2014;41:480–502. doi: 10.1007/s10488-013-0486-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Garland AF, Haine-Schlagel R, Brookman-Frazee L, et al. Improving community-based mental health care for children: translating knowledge into action. Administration and Policy in Mental Health. 2013;40:6–22. doi: 10.1007/s10488-012-0450-8.

- 11. Frueh BC, Ford JD, Elhai JD, et al. Evidence-Based Practice in Adult Mental Health; in Handbook of Evidence-Based Practice in Clinical Psychology. John Wiley & Sons, Inc; 2012.

- 12. Bertram RM, Blase KA, Fixsen DL. Improving Programs and Outcomes: Implementation Frameworks and Organization Change. Research on Social Work Practice. 2014.

- 13. Bruns EJ, Hoagwood KE. State Implementation of Evidence-Based Practice for Youth, part 1: Responses to the state of the evidence. Journal of the American Academy of Child & Adolescent Psychiatry. 2008;47:369–73. doi: 10.1097/CHI.0b013e31816485f4.

- 14. Fixsen DL, Blase KA, Naoom SF, et al. Core Implementation Components. Research on Social Work Practice. 2009;19:531–40.

- 15. Chambless DL, Hollon SD. Defining Empirically Supported Therapies. Journal of Consulting & Clinical Psychology. 1998;66:7–18. doi: 10.1037//0022-006x.66.1.7.

- 16. Drake RE, Goldman HE, Leff HS, et al. Implementing evidence-based practices in routine mental health service settings. Psychiatric Services. 2001;52:179–82. doi: 10.1176/appi.ps.52.2.179.

- 17. U.S. Department of Health and Human Services. New Freedom Initiative on Mental Health. 2003.

- 18. U.S. Department of Health and Human Services. 2011 Medicaid Managed Care Enrollment Report. Baltimore, MD: Center for Medicare and Medicaid Services; 2012.

- 19. Fisher WH, Rivard JC. The research potential of administrative data from state mental health agencies. Psychiatr Serv. 2010;61:546–8. doi: 10.1176/ps.2010.61.6.546.

- 20. Farmer EMZ, Burns BJ, Dubs MS, et al. Assessing Conformity to Standards for Treatment Foster Care. Journal of Emotional and Behavioral Disorders. 2002;10:213–22.

- 21. Henggeler SW. Efficacy studies to large-scale transport: the development and validation of multisystemic therapy programs. Annu Rev Clin Psychol. 2011;7:351–81. doi: 10.1146/annurev-clinpsy-032210-104615.

- 22. Sexton TL, Alexander JF. Functional family therapy: A mature clinical model for working with at-risk adolescents and their families. In: Sexton TL, Weeks GR, Robbins MS, editors. Handbook of family therapy: The science and practice of working with families and couples. New York, NY, US: Brunner-Routledge; 2003.

- 23. Tabol C, Drebing C, Rosenheck R. Studies of “supported” and “supportive” housing: A comprehensive review of model descriptions and measurement. Evaluation and Program Planning. 2010;33:446–56. doi: 10.1016/j.evalprogplan.2009.12.002.

- 24. Cook JA, Leff HS, Blyler CR, et al. Results of a multisite randomized trial of supported employment interventions for individuals with severe mental illness. Arch Gen Psychiatry. 2005;62:505–12. doi: 10.1001/archpsyc.62.5.505.

- 25. Drake RE, McHugo GJ, Clark RE, et al. Assertive Community Treatment for patients with co-occurring severe mental illness and substance use disorder: A clinical trial. American Journal of Orthopsychiatry. 1998;68:201–15. doi: 10.1037/h0080330.

- 26. Singer JD, Willett JB. Applied longitudinal data analysis. New York: Oxford University Press; 2003.

- 27. Frank RG, Glied SA. Better But Not Well: Mental Health Policy in the United States Since 1950. Baltimore, MD: Johns Hopkins University Press; 2006.

- 28. Allen KD, Pires SA. Improving Medicaid Managed Care for Youth with Serious Behavioral Health Needs: A Quality Improvement Toolkit. New Brunswick, NJ: Center for Health Care Strategies; 2009.

- 29. Catalano RF, Fagan AA, Gavin LE, et al. Worldwide application of prevention science in adolescent health. Lancet. 2012;379:1653–64. doi: 10.1016/S0140-6736(12)60238-4.

- 30. Hoagwood K, Atkins M, Ialongo N. Unpacking the Black Box of Implementation: The Next Generation for Policy, Research and Practice. Administration and Policy in Mental Health and Mental Health Services Research. 2013;40:451–5. doi: 10.1007/s10488-013-0512-6.

- 31. Brown CH, Kellam SG, Kaupert S, et al. Partnerships for the design, conduct, and analysis of effectiveness, and implementation research: experiences of the prevention science and methodology group. Adm Policy Ment Health. 2012;39:301–16. doi: 10.1007/s10488-011-0387-3.

- 32. Bickman L. Editorial: Facing reality and jumping the chasm. Administration and Policy in Mental Health. 2013;40:1–5. doi: 10.1007/s10488-012-0460-6.

- 33. Neylon K, Shaw R, Lutterman T. State Mental Health Agency-Controlled Expenditures for Mental Health Services, State Fiscal Year 2013, National Association of State Mental Health Program Directors. Alexandria, VA: National Association of State Mental Health Program Directors; 2014.

- 34. Bickman L. A Measurement Feedback System (MFS) Is Necessary to Improve Mental Health Outcomes. J Am Acad Child Adolesc Psychiatry. 2008;47:1114–9. doi: 10.1097/CHI.0b013e3181825af8.

- 35. de Beurs E, den Hollander-Gijsman ME, van Rood YR, et al. Routine outcome monitoring in the Netherlands: practical experiences with a web-based strategy for the assessment of treatment outcome in clinical practice. Clin Psychol Psychother. 2011;18:1–12. doi: 10.1002/cpp.696.

- 36. Holzner B, Giesinger J, Pinggera J, et al. The computer-based health evaluation software (CHES): A software for electronic patient-reported outcome monitoring. BMC Medical Informatics and Decision Making. 2012;12:126. doi: 10.1186/1472-6947-12-126.

- 37. Aos S, Lieb R, Mayfield J, et al. Benefits and costs of prevention and early intervention programs for youth. Olympia, WA: Washington State Institute for Public Policy; 2004.

- 38. Finnerty MT, Rapp CA, Bond GR, et al. The State Health Authority Yardstick (SHAY). Community Ment Health J. 2009;45:228–36. doi: 10.1007/s10597-009-9181-z.

- 39. Gilmore AS, Zhao Y, Kang N, et al. Patient outcomes and evidence-based medicine in a preferred provider organization setting: a six-year evaluation of a physician pay-for-performance program. Health Serv Res. 2007;42:2140–59. doi: 10.1111/j.1475-6773.2007.00725.x. discussion 294-323.

- 40. Bruns EJ, Hoagwood KE, Rivard JC, et al. State implementation of evidence-based practice for youth: Recommendations for research and policy. Journal of American Academy of Child and Adolescent Psychiatry. 2008;47:5. doi: 10.1097/CHI.0b013e3181684557.

- 41. Daleiden EL, Chorpita BF. From data to wisdom: Quality improvement strategies supporting large-scale implementation of evidence-based services. Child and Adolescent Psychiatric Clinics of North America. 2005;14:329–+. doi: 10.1016/j.chc.2004.11.002.

- 42. Cohen D. Effect of the Exclusion of Behavioral Health from Health Information Technology (HIT) Legislation on the Future of Integrated Health Care. The Journal of Behavioral Health Services & Research. 2014:1–6. doi: 10.1007/s11414-014-9407-x.

- 43. Office of the National Coordinator for Health Information Technology. Federal health information technology strategic plan, 2011-2015. Washington, DC: Department of Health and Human Services; 2011.
