Abstract

Medical evidence can be obtained through descriptive, analytic, and integrative approaches; it is ranked into levels of evidence, graded according to quality, and summarized into strengths of recommendation. Sources of evidence range from expert opinion through well-designed randomized controlled trials to meta-analyses. The conscientious, explicit, and judicious use of current best evidence in making decisions about the care of individual patients defines the concept of evidence-based neurosurgery (EBN). We reviewed reference books on clinical epidemiology and evidence-based practice, as well as previously published articles addressing the principles of evidence-based practice in neurosurgery. Drawing on existing theories and models and on our cumulative years of experience conducting research and promoting EBN, we synthesize and present a holistic overview of the concept of EBN. We also underscore the importance of clinical research and its relationship to EBN. Useful electronic resources are provided, and the concept of critical appraisal is introduced.

What is evidence-based neurosurgery?

Evidence-based medicine (EBM) has become one of the pillars of modern medicine, and neurosurgery is no exception. Advances in neuroepidemiology/neurostatistics and information technology have led to the emergence of the concept of “evidence-based neurosurgery” (EBN). Sackett et al1 defined EBM as “the conscientious, explicit, and judicious use of current best evidence in making decisions about the care of individual patients”.1 Alternatively, “EBN is the application of clinical neuroepidemiology to the care of patients with neurosurgical problems”. Evidence-based neurosurgery integrates clinical/surgical expertise and judgment, patient preferences and values, clinical circumstances, and the best available research evidence to provide a framework for patient care.2,3 In a domain as old as neurosurgery, other kinds of “evidence” have been, and still are, practiced in place of EBN, with considerable influence on decision-making. These include eminence-based, vehemence-based, eloquence-based, providence-based, diffidence-based, nervousness-based, and confidence-based neurosurgery.4 These parodies of EBN are less reliable alternatives; they can be very compelling at the emotional level and may provide a convenient way of coping with uncertainty, but they are very weak substitutes for research evidence.5 Although traditional paradigms based on pathophysiology and clinical experience remain necessary in evidence-based practice, they alone are inadequate guides for practice.3 The techniques of EBM are relevant in neurological surgery and should guide decision-making in neurosurgery as far as possible. Optimizing the translation of evidence into practice requires an understanding of the basics of “research methods”.

Illustrative case scenario

An astute resident attending an outpatient clinic with his teacher wanted to know on what grounds the teacher based his decision when an educated 50-year-old patient, recently diagnosed with a giant pituitary adenoma causing visual impairment, asked: Are you going to open my head or go through my nostrils? Will you use the microscope or the endoscope? The neurosurgeon told the patient that he would operate transsphenoidally using the endoscope, and told the resident that this was in accordance with current evidence-based guidelines on the management of giant pituitary adenomas. Seeking to understand this decision, the resident searched PubMed but found a heterogeneous flood of papers with confusing and contradictory conclusions. The surgeon, a proponent of EBM, then recommended a recent systematic review6 published in 2012 with the following conclusion: “The endoscopic endonasal approach can be safe and effective for the resection of giant pituitary adenomas without significant lateral extension. Endonasal approaches have a significantly higher rate of gross total resection, improved visual outcome, and fewer recurrences. Postoperative CSF leak rates are lower for endoscopic approaches than for transcranial approaches, and meningitis occurred more frequently in the open cohort than the transsphenoidal or endoscopic cohorts.”

Overview of neuroepidemiologic research designs

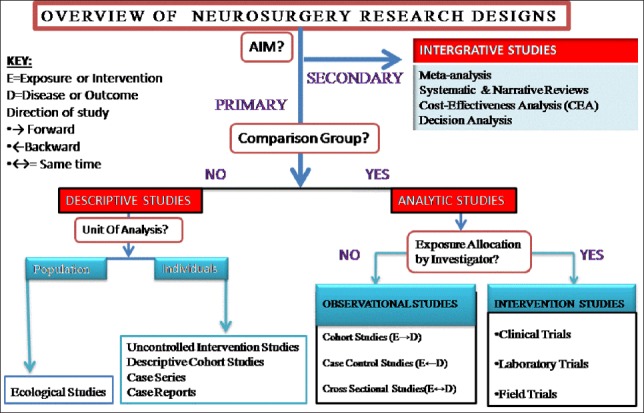

A sound knowledge of the different types of research methodologies in clinical neuroepidemiology is the first step to understanding the concept of EBN. Details have been discussed in “Part I” of this review series.7 A summary of the different types of research methodologies is shown in Figure 1.

Figure 1.

Common types of research designs.7

Case scenario (continuation)

The review article6 (secondary research) included 14 primary studies (478 patients): 4 case reports and 10 studies described as “retrospective studies”. This systematic review is therefore based on descriptive studies, with no analytic observational studies or randomized surgical trials included. The question that must be asked is: on what study types should neurosurgeons base their decision-making, and how solid and certain is the evidence obtained from these studies?

Study design and EBN

Evidence-based neurosurgery has become one of the pillars of modern neurosurgery. Randomized clinical trials (RCTs), which represent the ‘gold standard’ for evaluating the effects of interventions in most areas of medicine, may not be applicable in neurosurgery for several reasons.8 Conditions may be rare, and a long follow-up may be needed to assess outcomes (for example, recurrence of benign tumors such as most pituitary adenomas). Thus, information derived from case series (level 4 evidence) may be the best available data (as in our case scenario) on which to base clinical decision-making for patients with benign tumors and long life expectancies.8 Also, few patients are willing to participate in randomized trials in which one group undergoes open surgery (for example, a subfrontal approach) while the other is managed with a less invasive method such as endoscopic transsphenoidal surgery. Surgical therapies are expensive and may involve the insertion of costly hardware, so financial pressures abound, whether from industry promoting a new product or from government agencies trying to control costs.9 Surgical trials pose many methodological challenges often absent from trials of medical interventions, which, if not properly accounted for, may introduce significant bias and threaten the validity of the results.10 In an ideal RCT, Allocation of the intervention is Blinded and Controlled (the ABC of an ideal RCT), but blinding the patient and the surgeon/investigator to the intervention is often difficult, which increases the likelihood of bias. A truly blinded design requires sham surgery, which is rarely feasible, so novelty (new-treatment) and placebo effects abound.9 “Expertise bias” is a major problem of RCTs in neurosurgery: surgeons involved in a trial may have a high level of expertise with one procedure (usually the standard or older procedure, for example microscopic pituitary surgery) but only limited experience with the other, usually novel, procedure3 (for example endoscopic pituitary surgery). Surgery itself is variable and difficult to standardize or institutionalize.

Human disease is variable (compared with animal models), and most patients have additional illnesses and treatments that complicate the picture. Additionally, patients have their own ideas about the treatment they want, and so do surgeons, so true randomization is nearly impossible.9 The definitions of the study, alternative, and control treatments are very difficult to agree upon and are often deficient.9 Likewise, there is no agreement on the best way to analyze surgical study results statistically. Randomized surgical trials are often small and underpowered,11 and therefore subject to wide sampling variability. As a result, a single study often fails to detect, or to exclude with certainty, a modest but important difference in the effect of 2 therapies, and a trial may show no statistically significant treatment effect when a clinical effect in fact exists.12
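The point that small surgical trials often cannot detect a modest difference can be made concrete with a standard sample-size calculation. The sketch below uses the usual normal approximation for comparing two proportions; the 60% versus 75% gross-total resection rates and the 30 patients per arm are hypothetical numbers chosen only for illustration, not data from any trial.

```python
import math
from statistics import NormalDist


def power_two_proportions(p1: float, p2: float, n_per_arm: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-proportion z-test (normal approximation).

    The negligible chance of rejecting in the wrong direction is ignored.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    p_bar = (p1 + p2) / 2
    # Standard error of the difference under the null hypothesis (pooled proportion)
    se_null = math.sqrt(2 * p_bar * (1 - p_bar) / n_per_arm)
    # Standard error of the difference under the alternative hypothesis
    se_alt = math.sqrt((p1 * (1 - p1) + p2 * (1 - p2)) / n_per_arm)
    return z.cdf((abs(p1 - p2) - z_alpha * se_null) / se_alt)


def n_per_arm_for_power(p1: float, p2: float, power: float = 0.80, alpha: float = 0.05) -> int:
    """Patients needed per arm to reach the requested power (same approximation)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2)))
    return math.ceil((numerator / abs(p1 - p2)) ** 2)


if __name__ == "__main__":
    # Hypothetical: 60% vs 75% gross-total resection
    print(f"power with 30 per arm: {power_two_proportions(0.60, 0.75, 30):.2f}")
    print(f"patients per arm for 80% power: {n_per_arm_for_power(0.60, 0.75)}")
```

With 30 patients per arm the approximate power is only about one in four, whereas roughly 150 patients per arm would be needed for the conventional 80% power; a trial of the smaller size could easily return a non-significant result even if the 15-point difference were real.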

For these and many other reasons, neurosurgeons most often have to base their daily decision-making on “low level” or “poor quality” evidence.8 In fact, Yarascavitch et al,2 in their review of 660 eligible articles published in 3 journals in 2009 and 2010, found that only 14 (2.1%) were level I, while 54 (8.2%) were level II, 73 (11.1%) were level III, 287 (43.5%) were level IV, and 232 (35.2%) were level V. Therefore, the tyranny of RCTs over EBM should be overturned, as others have advocated,9 and the misconception that EBM relies purely on RCTs should be discarded: neurosurgery is a clear example of a field in which other research designs have been and are used for decision-making. So “WHERE” and “HOW” do we get evidence when challenged by a clinical problem?
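The percentages quoted from Yarascavitch et al can be checked with a few lines of arithmetic. The snippet below uses only the reported counts as input, recomputes each share, and confirms that the five levels account for all 660 articles.

```python
# Counts of articles by level of evidence, as reported by Yarascavitch et al
counts = {"I": 14, "II": 54, "III": 73, "IV": 287, "V": 232}

total = sum(counts.values())
percentages = {level: 100 * n / total for level, n in counts.items()}

for level, pct in percentages.items():
    print(f"Level {level}: {counts[level]} ({pct:.1f}%)")
print(f"Total: {total}")
# Combined share of the two highest levels of evidence
print(f"Levels I-II combined: {percentages['I'] + percentages['II']:.1f}%")
```

Levels I and II together make up only about a tenth of the sample, while levels IV and V account for nearly four fifths.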

Evidence-based medicine methodology

Evidence-based neurosurgery is a tool of considerable value for neurosurgical practice that provides a secure base for clinical practice and practice improvement.13 The EBN approach involves several steps. These steps include using experience to identify important knowledge gaps and information needs, formulating answerable questions, identifying potentially relevant research, assessing the validity of evidence and results, developing clinical policies that align research evidence and clinical circumstances, and applying research evidence to individual patients with their specific experiences, expectations, and values.3 These are summarized as the “5 As of EBN” (Table 1).3,14-16

Table 1.

Five basic steps to taking an evidence-based approach.

| Five basic steps to taking an evidence-based approach: the “5 Step Model” |
|---|
| 1. ASK: Formulate a focused, clinically pertinent question from a patient’s problem. A strategy for formulating specific questions is the P.I.C.O.T.S acronym: • Patient (the person presenting with the problem, or the Problem itself) • Intervention (action taken in response to the problem, for example endoscopic surgery) • Comparison (benchmark against which the intervention is measured, for example microscopic surgery) • Outcome (anticipated result of the intervention, for example visual outcome) • Time frame • Setting |
| 2. ACQUIRE: Search for and retrieve the appropriate literature (best available research evidence). |
| 3. APPRAISE: Critically review and grade this literature (evaluate and appraise the evidence for its validity and usefulness). |
| 4. APPLY: Summarize and formulate recommendations from the best available evidence. |
| 5. ACT: Integrate the recommendations from step 4 with the physician’s experience and patient factors to determine optimal care (that is, implement the findings in clinical practice). |

Practicing EBN is not easy: practitioners must know how to frame a clinical question so that the literature can be used to resolve it. Using the PICOTS format, a question should include the population/problem, the intervention, the comparison group, and the relevant outcome measures, and it ought to include the setting and timing, because the determinants of a disease (place and time) vary. In this way, the patient’s concern can be transformed into a clinical question.3,14-16
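To make the structure concrete, the sketch below models a PICOTS question as a small Python data class that flags missing core elements and renders the question as a sentence. The class name, field names, and sentence template are our own illustrative assumptions, not a standard tool; the example values come from the examples given in Table 1.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PicotsQuestion:
    """Hypothetical container for the elements of a PICOTS clinical question."""
    population: str                    # P: patient or problem
    intervention: str                  # I
    comparison: str                    # C
    outcome: str                       # O
    time_frame: Optional[str] = None   # T: often optional
    setting: Optional[str] = None      # S: often optional

    def missing_core_elements(self) -> list[str]:
        """Return the names of any empty P, I, C or O elements."""
        core = {"population": self.population, "intervention": self.intervention,
                "comparison": self.comparison, "outcome": self.outcome}
        return [name for name, value in core.items() if not value.strip()]

    def as_question(self) -> str:
        """Render the elements as a single therapy question."""
        question = (f"In {self.population}, is {self.intervention}, compared with "
                    f"{self.comparison}, associated with better {self.outcome}")
        if self.time_frame:
            question += f" over {self.time_frame}"
        if self.setting:
            question += f" in {self.setting}"
        return question + "?"


if __name__ == "__main__":
    q = PicotsQuestion(
        population="patients with giant pituitary adenoma",
        intervention="endoscopic surgery",
        comparison="microscopic surgery",
        outcome="visual outcome",
        time_frame="10 years",
    )
    print(q.missing_core_elements())
    print(q.as_question())
```

Checking for missing core elements before searching mirrors the advice that a question lacking a comparison or an outcome is hard to answer from the literature.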

Case Scenario (continuation)

The most important step is to formulate a clear, searchable clinical question. In our case scenario, the patient raised 2 concerns: the approach and the tool to be used. Naturally, 2 questions can be formulated: the effectiveness and safety of the transcranial versus the transsphenoidal approach, and of endoscopic versus microscopic pituitary adenoma surgery.

A question could be: “In patients presenting with giant pituitary adenoma (problem), what are the effectiveness and safety of the endoscopic (intervention) compared with the microscopic transnasal approach (comparison) in terms of tumor resection, biochemical remission, and complications (outcome)?” The question can be made more precise by specifying the “Setting” and long-term outcomes (for example, recurrence over 10 years [Time]). The question is answered by acquiring literature, usually from electronic databases (MEDLINE/PubMed, EMBASE, the Cochrane databases, and specialized databases such as CINAHL), and by searching other resources (bibliographies, hand searches of journals, personal communications, conference presentations, and grey literature such as unpublished studies, theses, and non-peer-reviewed journals). Other restrictions might include language (for example, English literature) and time frame. Acquisition is followed by appraisal using appraisal tools such as those listed in Table 3; the application of the results to the individual patient ought then to integrate the surgeon’s experience and the patient’s values through the process of “clinical decision-making”.
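In the ACQUIRE step, each PICOTS element is commonly turned into a block of synonyms joined with OR, and the blocks are then linked with AND. The sketch below only assembles such a Boolean string; the synonym lists are hypothetical, it does not query any database, and a real search would add controlled vocabulary (for example MeSH terms) and database-specific syntax.

```python
def or_block(terms: list[str]) -> str:
    """Join synonyms for one concept with OR; quote multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(concepts: list[list[str]]) -> str:
    """Link the concept blocks with AND; empty concepts are skipped."""
    return " AND ".join(or_block(terms) for terms in concepts if terms)


if __name__ == "__main__":
    # Hypothetical synonym lists for the P, I, C and O elements of the case question
    problem = ["pituitary adenoma", "pituitary neoplasms"]
    intervention = ["endoscopic", "endoscopy"]
    comparison = ["microscopic", "microsurgery"]
    outcome = ["gross total resection", "visual outcome", "complications"]
    print(build_query([problem, intervention, comparison, outcome]))
```

Linking the intervention and comparison blocks with AND restricts results to papers that mention both techniques, which suits a comparative question but may miss single-arm series; dropping the comparison block widens the search.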

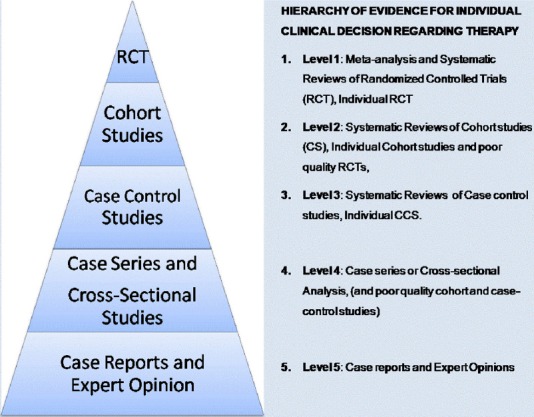

Clinical decision-making and EBM

In EBM, clinical decision-making is the process of delivering evidence-based medicine. Neurosurgeons who work under the EBN paradigm (EBN practitioners) regularly consult the original literature, including the “methods”, “results”, and “discussion” sections of research articles, and not just the “introduction” and “conclusion” sections, as many traditional practitioners do.3 Searching for evidence usually yields a plethora of clinical studies, many with conflicting conclusions, as in our case scenario. Obviously, not all of these conclusions can be correct. Correctly interpreting the literature on diagnosis, prognosis, treatment, and potentially harmful exposures (medication side effects, complications) requires an understanding of research methods as well as of the hierarchy of evidence.3 An EBN approach to sorting through the confusion involves ranking the evidence from clinical studies according to the type of study design and the methodological rigor of each individual study.5,8,16 This has led to the notion of a “hierarchy of evidence”, in which some research designs are ranked as more powerful than others in their ability to answer specific research questions. Research questions may be etiologic, diagnostic, therapeutic, prognostic, or economic/decision-analytic,2,17,18 and different hierarchies exist for these different types of question. Common to most of these schemes are “expert opinion” at the bottom and “systematic reviews/meta-analyses” at the top of the pyramid.

As one ascends from the bottom to the top of the pyramid, the research design becomes more rigorous, the quality of evidence increases, and the chance of bias decreases.3 The hierarchy provides a framework for ranking evidence that evaluates health care interventions (for questions on therapy) and indicates which studies should be given the most weight when the same question has been examined using different types of studies.19 A hierarchical “Pyramid of Evidence” that emphasizes RCTs has been promulgated as the approach to judging study design and quality (Figure 2).20 In what follows, we focus on the hierarchy of evidence for individual clinical decisions regarding “therapy” (intervention), since neurosurgery is an interventional, action-oriented medical subspecialty.

Figure 2.

Hierarchy of evidence for individual clinical decision regarding therapy.

Evaluation of study quality and classification of evidence

Evidence-based medicine emphasizes a hierarchy of evidence to help with clinical decision-making.3 Studies are usually evaluated for quality and categorized according to level of evidence. Methods of subject recruitment, group assignment, blinding, and the use of randomization are examples of considerations used in assigning each study’s level of evidence. The level of evidence determined for each study indicates the quality of the methodology used in the study. Levels of evidence are defined using commonly accepted standards in the literature (Figure 2).21

Case Scenario (continuation)

According to Komotar et al,6 4 of the studies were case reports (level 5) and 10 were “case series” (level 4), although by current definitions the latter 10 articles ought to be classified as retrospective descriptive cohorts22 (level 3 or 4, depending on their quality); this reassessment is beyond the scope of our review. The research question here is one of therapy, and the authors identified no observational analytic studies or RCTs.

Rating scheme for the strength of the evidence: “Three-class system”

A proposed hierarchy of published clinical evidence for making individual clinical decisions is presented in Table 2. It is based on a “3-class system”, which reorganizes the “5-tiered classification” shown in Figure 2 (a 5-tiered strategy as in the NASS scheme23). Figure 2 assigns separate levels to “case series” and “expert opinion”, whereas the 3-tiered system combines all lower levels of evidence.24-26 This distinction becomes relevant when grading recommendations. The hierarchy implies that, in searching for evidence on the effectiveness of interventions or treatments, properly conducted systematic reviews of RCTs (with or without meta-analysis) or properly conducted RCTs provide the most powerful form of evidence.19 However, less than 4% of the neurosurgical literature is Level I evidence.2

Table 2.

| Class of evidence | Study designs |
|---|---|
| I = Strong evidence | Good-quality (well-designed) randomized clinical trial* |
| II = Moderate evidence | Moderate-quality RCT; good-quality cohort study (CS); good-quality case-control study (CCS) |
| III = Weak evidence | Poor-quality RCT; moderate- or poor-quality CS or CCS; case series, case reports, anatomical studies, expert opinion |
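Table 2 amounts to a simple decision rule, which the sketch below encodes. The design labels and the three-level quality scale (“good”, “moderate”, “poor”) are our own simplifying assumptions; in practice, quality is judged with a formal appraisal tool rather than a single word.

```python
from enum import IntEnum


class EvidenceClass(IntEnum):
    I = 1    # strong evidence
    II = 2   # moderate evidence
    III = 3  # weak evidence


def classify(design: str, quality: str) -> EvidenceClass:
    """Assign a study to the 3-class system of Table 2 (simplified sketch)."""
    design, quality = design.strip().lower(), quality.strip().lower()
    if design == "rct":
        if quality == "good":
            return EvidenceClass.I
        if quality == "moderate":
            return EvidenceClass.II
        return EvidenceClass.III  # poor-quality RCT
    if design in {"cohort", "case-control"}:
        # Only good-quality observational analytic studies reach Class II
        return EvidenceClass.II if quality == "good" else EvidenceClass.III
    # Case series, case reports, anatomical studies, expert opinion
    return EvidenceClass.III


if __name__ == "__main__":
    # The 14 studies pooled by Komotar et al: 4 case reports and 10 case series
    included = [("case report", "n/a")] * 4 + [("case series", "n/a")] * 10
    print(sorted({classify(d, q).name for d, q in included}))
```

Applied to the review by Komotar et al, every included study falls into Class III, which is why the review cannot support more than weak evidence.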

If neither a systematic review nor individual randomized trials are available, the EBM practitioner will look for high-quality observational studies of relevant management strategies. If the desired evidence cannot be found in these searches, EBM practitioners fall back on the underlying biology and pathophysiology and on their own or their colleagues’ clinical experience.3 The review article by Komotar et al6 is considered level 4 evidence since it is based on “case reports” and “case series” (Figure 1).

Quality of evidence

Although study quality assessment is beyond the scope of our review, we underscore that the foundation of any evidence-based practice guideline rests on the meticulous assessment of medical evidence.23 Many classifications exist for assessing the quality of evidence obtained from studies. One weighting scheme, following the methods delineated by Sackett and colleagues,16 carefully assesses the methodology of each manuscript and of each study according to its relevant category (diagnosis, therapy, prognosis, or harm).25 Another classification, more useful to neurosurgeons, is the one used in the Brain Trauma Foundation guidelines for the management of severe traumatic brain injury; its methods24 derive from criteria developed by the United States Preventive Services Task Force, the National Health Service Centre for Reviews and Dissemination (United Kingdom), and the Cochrane Collaboration. We propose using the modified Downs and Black scale27 to assess quality because of its robustness in evaluating both randomized and nonrandomized methodologies, including observational studies. It provides an overall score for study quality and a profile of scores not only for the quality of reporting, internal validity (bias or systematic error and confounding), and power, but also for external validity.27

Resources for other quality assessment tools are listed in Table 3.

Table 3.

| Study design | Initiative | Meaning | Link |
|---|---|---|---|
| Meta-analyses and systematic reviews | PRISMA (replaced QUOROM)41,42 | Preferred reporting items for systematic reviews and meta-analyses (reporting checklist) | http://www.prisma-statement.org/ |
| | AMSTAR42,43 | Assessment of multiple systematic reviews (methodology checklist) | http://www.biomedcentral.com/content/pdf/1471-2288-7-10.pdf |
| Meta-analyses of observational studies | MOOSE40,42 | Meta-analysis of observational studies in epidemiology | https://www.editorialmanager.com/jognn/account/MOOSE.pdf |
| Randomized clinical trials | CONSORT36,37,42 | Consolidated standards of reporting trials | http://www.consort-statement.org/downloads |
| Observational studies | STROBE39,42 | Strengthening the reporting of observational studies in epidemiology | http://www.strobe-statement.org/ |
| Studies of diagnostic test accuracy | STARD42 | Standards for the reporting of diagnostic accuracy studies | http://www.stard-statement.org/ |
| Other resources | EQUATOR42 | Enhancing the quality and transparency of health research | http://www.equator-network.org/reporting-guidelines/stard/ |

Strength of recommendation

Evidence is usually described in terms of its “level” and “quality” and summarized in terms of the “strength” of recommendation.28 Three levels of recommendation (I, II, III) are derived from the corresponding classes of evidence (Class I, II, and III).24 Another rating scheme for the strength of recommendations uses levels A, B, C, and D.25 The North American Spine Society (NASS) scheme grades recommendations as A (good evidence), B (fair evidence), C (poor evidence), and I (insufficient evidence for a recommendation).23 Table 4 summarizes these schemes.

Table 4.

| Level of recommendation | Strength | Grade | Description |
|---|---|---|---|
| Level I: high degree of certainty | Standard | A | Based on consistent class I evidence (well-designed RCTs) |
| | | B | Single class I study or consistent class II evidence (especially when circumstances preclude RCTs) |
| Level II: moderate degree of certainty | Guideline | C | Class II evidence (less well-designed RCT or one or more observational studies) or a preponderance of class III evidence |
| Level III: unclear degree of certainty | Option | D (or I) | Class III evidence (case series, case reports, and expert opinion) |

In determining the strength of recommendations derived from systematic reviews and meta-analyses, one has to consider the quality of the included studies, their homogeneity, and the direction of the findings of the individual studies, as well as the results of analyses that examine potential confounding factors. It is thus possible for a meta-analysis containing only Class II studies to yield a level III recommendation if these considerations create uncertainty about the overall findings.24 Likewise, a meta-analysis of poor-quality studies does not yield high-quality data.29
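The derivation described above can be sketched as a small rule: start from the class of the pooled evidence, lower the recommendation when consistency, homogeneity, or control of confounding is in doubt, and never raise it above the class of the underlying studies. The data class, its field names, and the assumption that any single concern costs exactly one level are our own simplifications; guideline panels weigh these factors by judgment rather than by formula.

```python
from dataclasses import dataclass

# Labels follow the Standard/Guideline/Option terminology of Table 4
LABELS = {1: "Level I (Standard)", 2: "Level II (Guideline)", 3: "Level III (Option)"}


@dataclass
class BodyOfEvidence:
    evidence_class: int          # class (1-3) of the studies being pooled
    consistent_direction: bool   # do the individual studies point the same way?
    homogeneous: bool            # are the pooled studies sufficiently similar?
    confounding_addressed: bool  # do the analyses examine potential confounders?


def recommendation_level(evidence: BodyOfEvidence) -> int:
    """Derive a recommendation level from the class of evidence (hypothetical rule)."""
    if evidence.evidence_class not in LABELS:
        raise ValueError("evidence_class must be 1, 2 or 3")
    level = evidence.evidence_class
    concerns = (not evidence.consistent_direction,
                not evidence.homogeneous,
                not evidence.confounding_addressed)
    if any(concerns):
        # Assumption: any concern lowers the recommendation by one level, never below III
        level = min(level + 1, 3)
    # Pooling never raises the level above the class of the underlying studies
    return level


if __name__ == "__main__":
    # A meta-analysis of Class II studies with heterogeneous results
    pooled = BodyOfEvidence(evidence_class=2, consistent_direction=True,
                            homogeneous=False, confounding_addressed=True)
    print(LABELS[recommendation_level(pooled)])
```

The example reproduces the case described in the text: pooling only Class II studies with heterogeneous results yields a Level III recommendation rather than a Level II one.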

Some publications use the terms “Standards”, “Guidelines”, and “Options” for Class I, II, and III recommendations, respectively.30,31 Other terms used synonymously with “recommendations” are “practice parameters”, “practice guidelines”30-32 or “consensus statements”.15 “Standards” are accepted principles of patient management that reflect a high degree of clinical certainty; “Guidelines” are recommendations for a particular strategy or range of management strategies that reflect moderate clinical certainty; and “Options” are other strategies for patient management for which clinical certainty is unclear because of inconclusive or conflicting evidence or opinion.33

“Guideline” versus “Guidelines” confusion

Evidence-based guidelines rank recommendations by evidentiary quality as “standards”, “guidelines”, and “options”, hence the confusion surrounding the term guideline. In its broader, plural usage (“guidelines”), it refers to the entire set of recommendations; within that set, a “guideline” is a recommendation based on evidence of intermediate quality.15 Clinical guidelines are “systematically developed statements to assist practitioner and patient decisions about appropriate health care for specific clinical circumstances”.34

Critical appraisal

Critical appraisal is the assessment of evidence by systematically reviewing its relevance, validity, and results in relation to specific situations.35 The process can be very time-consuming, as it requires a careful reading of the whole article, especially the research methodology and statistical analysis, and not just the “easy” bits such as the introduction and conclusion sections. It is worth the effort, because it enables an understanding of the research process and thus a judgment of its trustworthiness, value, and contextual relevance.35 The critical analysis of published reports of clinical investigation is a fundamental skill of the physician. Without this ability, the physician will not be able to incorporate new clinical knowledge appropriately into his or her practice or to distinguish real advances from fads or overenthusiastic promotion.15 A critical analysis helps identify the strengths and weaknesses of a study and avoids taking the authors’ conclusions at face value.15

Several critical appraisal tools are available for the evaluation of quality of methods and reporting of different study designs.36-43 Critical appraisal methods form a central part of the systematic review process. They are used in evidence-based healthcare training to assist clinical decision-making, and are increasingly used in evidence-based social care and education provision.

A more recent scheme, not yet fully incorporated into neurosurgery, is the GRADE framework developed by the GRADE Working Group for rating the quality of evidence and grading the strength of recommendations in health care (GRADE = Grading of Recommendations Assessment, Development and Evaluation).44 It classifies a body of evidence as high, moderate, low, or very low quality (high and moderate [randomized trials], low [observational studies], and very low [any other evidence]) and grades recommendations at 2 levels: strong and weak. RCTs start as high-quality evidence but can be downgraded; likewise, observational studies start as low-quality evidence but can be upgraded. An advantage of the GRADE system is that it is supported by a computer program, “GRADEpro”. For details, see: http://www.gradeworkinggroup.org/publications/JCE_series.htm
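GRADE’s logic of a starting level followed by downgrading or upgrading can be sketched in a few lines. This is a simplification under stated assumptions: each concern (for example risk of bias, inconsistency, indirectness, imprecision, or publication bias) and each reason to upgrade (for example a large effect or a dose-response gradient) is passed in as a count of levels, whereas GRADE rests on explicit judgment and allows a very serious concern to cost two levels. It is not a substitute for GRADEpro.

```python
# Ordered from lowest to highest certainty, as in GRADE
LEVELS = ("very low", "low", "moderate", "high")


def grade_certainty(randomized: bool, downgrades: int = 0, upgrades: int = 0) -> str:
    """Simplified GRADE rating of a body of evidence.

    downgrades: levels lost (e.g. risk of bias, inconsistency, indirectness,
    imprecision, publication bias).
    upgrades: levels gained (e.g. large effect, dose-response gradient);
    GRADE usually reserves upgrading for observational evidence.
    """
    # Randomized trials start at "high", observational studies at "low"
    start = LEVELS.index("high") if randomized else LEVELS.index("low")
    index = start - downgrades + upgrades
    # Clamp to the ends of the scale
    return LEVELS[max(0, min(index, len(LEVELS) - 1))]


if __name__ == "__main__":
    # RCT evidence with one serious limitation (e.g. lack of blinding)
    print(grade_certainty(randomized=True, downgrades=1))
    # Observational evidence showing a large effect
    print(grade_certainty(randomized=False, upgrades=1))
```

Both example bodies of evidence end at “moderate”, which illustrates the point made above: study design sets only the starting point, and the final rating depends on the limitations and strengths of the evidence.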

Case Scenario (continuation)

The patient was scheduled for elective endoscopic transsphenoidal surgery (ETS), but 2 days before the operation he developed pituitary apoplexy with severe headache and deteriorating vision. The consultant on call had several years of experience with the microscopic approach but little experience with endoscopy. The patient underwent microscopic transsphenoidal surgery (MTS) with near-total excision, except for a remnant lateral to and encasing the carotid artery. EBN requires the integration of the best available research evidence, clinical/surgical expertise and judgment, patient preferences and values, and clinical circumstances to provide the best patient care.2,3 Although the best available research evidence favored ETS, the clinical circumstance was an emergency with no staff available to support ETS (lack of surgical expertise). The patient changed his preference from ETS to MTS for fear of losing his vision. Intraoperatively, the surgeon left behind a remnant (clinical judgment), which might have been excised more easily if an angled endoscope had been used.

Many authors have suggested that there is no clear evidence of the superiority of one technique over the other, since differences in outcome, if any, are small. Each technique has its own pros, cons, and specific indications. The systematic review by Komotar et al6 is based on poor-quality data (level 4 and 5 evidence) and therefore supports only a LEVEL III recommendation, that is, one with an unclear degree of certainty, so there is no justification for aggressively pushing one technique over the other. The absence of analytic (namely comparative) studies in the review makes it difficult to establish any causal associations. A randomized controlled trial may settle the question in the future.

Pros and cons of evidence-based medicine

Evidence-based neurosurgery is a tool of considerable value for neurosurgical practice that provides a secure base for clinical practice and practice improvement though it is not without inherent drawbacks, weaknesses or limitations.13

Much of accepted neurosurgical practice has never been validated in RCTs, and there will always be plausible interventions for which no evidence is (yet) available.8 Evidence-based medicine in general, EBN included, aims to address the persistent problem of variation in clinical practice with the help of various tools, including standardized practice guidelines. Critics contend that by discouraging idiosyncrasies in clinical technique, standards introduce disincentives for individual innovation in care and for healthy competition among practitioners. While advocates welcome the stronger scientific foundation of such guidelines, critics argue that EBM could stifle innovation in care and bring about stagnation and bland uniformity, derogatorily characterizing it as “cookbook medicine”.34 Because EBM was initially defined in opposition to clinical experience, it created fear among traditional professionals, but later definitions have emphasized its complementary character and have aimed to improve clinical experience with better evidence.34 Opponents of EBM dismiss it as lacking sufficient proven efficacy.45 They mistakenly suggest that EBM equates “lack of evidence of efficacy” with “evidence for the lack of efficacy”.3 Questions such as “Where is the evidence for evidence-based medicine?” have been asked.15 But absence of evidence should not be interpreted as evidence of absence; doing so is a classic logical fallacy.8 Other critics argue that EBM is not a tool for providing optimal patient care but merely a cost-containment tool.3

Opponents also argue that EBM relies on peer-reviewed literature as its primary source of evidentiary knowledge, and that this literature is itself flawed and has inherent limitations. In addition, RCTs, the pinnacle of the evidentiary hierarchy, are extremely expensive and time-consuming to perform, so rare diagnoses and uncommon interventions are unlikely ever to be studied using RCT methodology. “As a result, there will never be this level of evidence for those diseases or interventions”, and unfortunately many neurosurgical diseases and interventions fall into this category. One key point to underscore is that there is a major difference between the size of the effect of an intervention and the strength of the evidence supporting its use. A study design low in the evidentiary hierarchy can produce results with so strong an association that studies of higher rank become unnecessary. For example, an RCT was not needed to prove that the earliest possible intervention, rather than delayed intervention, was better for patients with epidural hematoma and pupillary asymmetry.46 The introduction of penicillin in the 1930s is another classic example.8 The dramatic effect of penicillin was obvious at the case report and case series level, and it would have been neither appropriate nor desirable to await RCT testing before recommending penicillin as standard therapy for pneumonia.8 It is also worth noting that EBM evidence hierarchies do not include qualitative studies, which are important in clinical decision-making.

Cost is also a driving factor in health care and should be taken into account. Medical and policy decisions are influenced by factors other than evidence, such as religion, politics, and a newer notion called “enthusiasm”. In summary, Poolman et al47 pointed out “7 common misconceptions” about EBM: EBM is not possible without RCTs; EBM disregards clinical proficiency; one needs to be a statistician to practice EBM; the usefulness of applying EBM to individual patients is limited; keeping up to date and finding the evidence is impossible for busy clinicians; EBM is a cost-reduction strategy; and EBM is not evidence-based. These misinterpretations, which stem from a lack of knowledge, are convenient distortions of the principles of EBM and contradict its true goal, which is to improve patient care.3

Although the impact of evidence-based practice parameter development on patient outcomes remains relatively understudied,48 there have been at least some suggestions of a beneficial effect.15,49,50 Supporters tend to see guidelines as a panacea for the problems of rising costs, inequity, and variability plaguing the health care field.34 Evidence-based neurosurgery and practice guidelines provide a stronger scientific foundation for clinical work and help achieve consistency, efficiency, effectiveness, quality, and safety in medical care. Evidence-based neurosurgery methods have produced guidelines that form the basis of evidence-based care. This is an attractive philosophy because it provides an objective, science-based rationale for healthcare policies.

In conclusion, EBN has become one of the pillars of modern neurosurgery. The purpose of EBN and practice guidelines is to provide a stronger scientific foundation for clinical work and to achieve consistency, efficiency, effectiveness, quality, and safety in medical care. Neurological surgery is an intervention- and action-oriented surgical subspecialty. Consequently, although decisions regarding etiology, diagnosis, prevention, and prognosis are important, decisions regarding interventions tend to be of greatest interest and are relevant to the majority of clinical decisions. Although RCTs are unsuitable for investigating many neurosurgical problems, this does not preclude practicing EBN, which requires consideration of the strongest evidence available at the time of clinical decision-making. The techniques of EBM are relevant to neurological surgeons and require periodic updating, because information and knowledge are time-dependent. Neurosurgeons and neurosurgical trainees should cultivate sound EBN practice and strive to improve the available evidence base for neurosurgery. This requires an understanding of the basic concepts of neurostatistics and neuroepidemiology.

References

1. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn’t. BMJ. 1996;312:71–72. doi: 10.1136/bmj.312.7023.71.
2. Yarascavitch BA, Chuback JE, Almenawer SA, Reddy K, Bhandari M. Levels of evidence in the neurosurgical literature: more tribulations than trials. Neurosurgery. 2012;71:1131–1137. doi: 10.1227/NEU.0b013e318271bc99.
3. Bhandari M, Joensson A. Clinical Research for Surgeons. Stuttgart (NY): Thieme; 2009.
4. Isaacs D, Fitzgerald D. Seven alternatives to evidence based medicine. BMJ. 1999;319:1618. doi: 10.1136/bmj.319.7225.1618.
5. Fletcher RH, Fletcher SW. Clinical Epidemiology: The Essentials. 5th ed. Philadelphia (PA): Lippincott Williams & Wilkins; 2005.
6. Komotar RJ, Starke RM, Raper DM, Anand VK, Schwartz TH. Endoscopic endonasal compared with microscopic transsphenoidal and open transcranial resection of giant pituitary adenomas. Pituitary. 2012;15:150–159. doi: 10.1007/s11102-011-0359-3.
7. Esene IN, El-Shehaby AM, Baeesa SS. Essentials of research methods in neurosurgery and allied sciences for research, appraisal and application of scientific information to patient care (Part I). Neurosciences (Riyadh). 2016;21:97–107. doi: 10.17712/nsj.2016.2.20150552.
8. Pollock BE. Guiding Neurosurgery by Evidence. Basel (CH): Karger Publishers; 2006.
9. Roitberg B. Tyranny of a “randomized controlled trials”. Surg Neurol Int. 2012;3:154. doi: 10.4103/2152-7806.104748.
10. Farrokhyar F, Karanicolas PJ, Thoma A, Simunovic M, Bhandari M, Devereaux PJ, et al. Randomized controlled trials of surgical interventions. Ann Surg. 2010;251:409–416. doi: 10.1097/SLA.0b013e3181cf863d.
11. Egger M, Smith GD, Sterne JA. Uses and abuses of meta-analysis. Clin Med. 2001;1:478–484. doi: 10.7861/clinmedicine.1-6-478.
12. Haidich AB. Meta-analysis in medical research. Hippokratia. 2010;14:29–37.
13. Linskey ME. Evidence-based medicine for neurosurgeons: introduction and methodology. Prog Neurol Surg. 2006;19:1–53. doi: 10.1159/000095175.
14. Bandopadhayay P, Goldschlager T, Rosenfeld JV. The role of evidence-based medicine in neurosurgery. J Clin Neurosci. 2008;15:373–378. doi: 10.1016/j.jocn.2007.08.014.
15. Haines SJ. Evidence-based neurosurgery. Neurosurgery. 2003;52:36–47. doi: 10.1097/00006123-200301000-00004.
16. Sackett DL, Straus SE, Richardson WS, Rosenberg W, Haynes RB. Evidence-Based Medicine: How to Practice and Teach It. 2nd ed. Edinburgh (Scotland): Churchill Livingstone; 2002.
17. Wupperman R, Davis R, Obremskey WT. Level of evidence in Spine compared to other orthopedic journals. Spine (Phila Pa 1976). 2007;32:388–393. doi: 10.1097/01.brs.0000254109.12449.6c.
18. Medina JM, McKeon PO, Hertel J. Rating the levels of evidence in sports-medicine research. Athletic Therapy Today. 2006;11:38–41.
19. Akobeng AK. Understanding randomised controlled trials. Arch Dis Child. 2005;90:840–844. doi: 10.1136/adc.2004.058222.
20. Ho PM, Peterson PN, Masoudi FA. Evaluating the evidence: is there a rigid hierarchy? Circulation. 2008;118:1675–1684. doi: 10.1161/CIRCULATIONAHA.107.721357.
21. Liu JC, Stewart MG. Teaching evidence-based medicine in otolaryngology. Otolaryngol Clin North Am. 2007;40:1261–1274. doi: 10.1016/j.otc.2007.07.006.
22. Esene IN, Ngu J, Elzoghby M, Solaroglu I, Sikod AM, Kotb A, et al. Case series and descriptive cohort studies in neurosurgery: the confusion and solution. Childs Nerv Syst. 2014;30:1321–1332. doi: 10.1007/s00381-014-2460-1.
23. Kaiser MG, Eck JC, Groff MW, Watters WC 3rd, Dailey AT, Resnick DK, et al. Guideline update for the performance of fusion procedures for degenerative disease of the lumbar spine. Part 1: introduction and methodology. J Neurosurg Spine. 2014;21:2–6. doi: 10.3171/2014.4.SPINE14257.
24. Brain Trauma Foundation. Guidelines for the management of severe traumatic brain injury. Methods. J Neurotrauma. 2007;24:S3–S6. doi: 10.1089/neu.2007.9996.
25. Anderson PA, Matz PG, Groff MW, Heary RF, Holly LT, Kaiser MG, et al. Laminectomy and fusion for the treatment of cervical degenerative myelopathy. J Neurosurg Spine. 2009;11:150–156. doi: 10.3171/2009.2.SPINE08727.
26. Feigin VL, Bennett DA. Handbook of Clinical Neuroepidemiology. New York (NY): Nova Science Publishers; 2006.
27. Downs SH, Black N. The feasibility of creating a checklist for the assessment of the methodological quality both of randomised and non-randomised studies of health care interventions. J Epidemiol Community Health. 1998;52:377–384. doi: 10.1136/jech.52.6.377.
28. Kochanek PM, Carney N, Adelson PD, Ashwal S, Bell MJ, Bratton S, et al. Guidelines for the acute medical management of severe traumatic brain injury in infants, children, and adolescents--second edition. Pediatr Crit Care Med. 2012;13:S1–S82. doi: 10.1097/PCC.0b013e31823f435c.
29. Garg AX, Hackam D, Tonelli M. Systematic review and meta-analysis: when one study is just not enough. Clin J Am Soc Nephrol. 2008;3:253–260. doi: 10.2215/CJN.01430307.
30. Eddy DM. Clinical decision making: from theory to practice. Designing a practice policy. Standards, guidelines, and options. JAMA. 1990;263:3077, 3081–3084. doi: 10.1001/jama.263.22.3077.
31. Knuth T, Letarte PB, Ling G, Moores LE. Methodology: Guideline Development Rationale and Process. 1st ed. New York (NY): Brain Trauma Foundation; 2005.
32. Greenberg M. Handbook of Neurosurgery. New York (NY): Thieme Medical Publishers; 2010.
33. Rosenberg J, Greenberg MK. Practice parameters: strategies for survival into the nineties. Neurology. 1992;42:1110–1115. doi: 10.1212/wnl.42.5.1110.
34. Timmermans S, Mauck A. The promises and pitfalls of evidence-based medicine. Health Aff (Millwood). 2005;24:18–28. doi: 10.1377/hlthaff.24.1.18.
35. Burls A. What is critical appraisal? Calgary (CAN): Hayward Medical Communications; 2012.
36. Moher D, Schulz KF, Altman DG. The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomized trials. Ann Intern Med. 2001;134:657–662. doi: 10.7326/0003-4819-134-8-200104170-00011.
37. Begg C, Cho M, Eastwood S, Horton R, Moher D, Olkin I, et al. Improving the quality of reporting of randomized controlled trials. The CONSORT statement. JAMA. 1996;276:637–639. doi: 10.1001/jama.276.8.637.
38. Tooth L, Ware R, Bain C, Purdie DM, Dobson A. Quality of reporting of observational longitudinal research. Am J Epidemiol. 2005;161:280–288. doi: 10.1093/aje/kwi042.
39. von Elm E, Altman DG, Egger M, Pocock SJ, Gotzsche PC, Vandenbroucke JP. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. Lancet. 2007;370:1453–1457. doi: 10.1016/S0140-6736(07)61602-X.
40. Stroup DF, Berlin JA, Morton SC, Olkin I, Williamson GD, Rennie D, et al. Meta-analysis of observational studies in epidemiology: a proposal for reporting. JAMA. 2000;283:2008–2012. doi: 10.1001/jama.283.15.2008.
41. Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. J Clin Epidemiol. 2009;62:1006–1012. doi: 10.1016/j.jclinepi.2009.06.005.
42. Vandenbroucke JP. STREGA, STROBE, STARD, SQUIRE, MOOSE, PRISMA, GNOSIS, TREND, ORION, COREQ, QUOROM, REMARK... and CONSORT: for whom does the guideline toll? J Clin Epidemiol. 2009;62:594–596. doi: 10.1016/j.jclinepi.2008.12.003.
43. Shea BJ, Grimshaw JM, Wells GA, Boers M, Andersson N, Hamel C, et al. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol. 2007;7:10. doi: 10.1186/1471-2288-7-10.
44. Guyatt G, Oxman AD, Akl EA, Kunz R, Vist G, Brozek J, et al. GRADE guidelines: 1. Introduction-GRADE evidence profiles and summary of findings tables. J Clin Epidemiol. 2011;64:383–394. doi: 10.1016/j.jclinepi.2010.04.026.
45. Charlton BG. Restoring the balance: evidence-based medicine put in its place. J Eval Clin Pract. 1997;3:87–98. doi: 10.1046/j.1365-2753.1997.00097.x.
46. Bricolo AP, Pasut LM. Extradural hematoma: toward zero mortality. A prospective study. Neurosurgery. 1984;14:8–12. doi: 10.1227/00006123-198401000-00003.
47. Poolman RW, Petrisor BA, Marti RK, Kerkhoffs GM, Zlowodzki M, Bhandari M. Misconceptions about practicing evidence-based orthopedic surgery. Acta Orthop. 2007;78:2–11. doi: 10.1080/17453670610013358.
48. Grol R. Between evidence-based practice and total quality management: the implementation of cost-effective care. Int J Qual Health Care. 2000;12:297–304. doi: 10.1093/intqhc/12.4.297.
49. Grimshaw JM, Russell IT. Effect of clinical guidelines on medical practice: a systematic review of rigorous evaluations. Lancet. 1993;342:1317–1322. doi: 10.1016/0140-6736(93)92244-n.
50. Shin JH, Haynes RB, Johnston ME. Effect of problem-based, self-directed undergraduate education on life-long learning. CMAJ. 1993;148:969–976.