Summary

In a randomized controlled study, we examined the effects of a one‐on‐one cognitive training program on memory, visual and auditory processing, processing speed, reasoning, attention, and General Intellectual Ability (GIA) score for students aged 8–14. Participants were randomly assigned either to an experimental group that completed 60 h of cognitive training or to a wait‐list control group. The purpose of the study was to examine changes in multiple cognitive skills after completing cognitive training with ThinkRx, a LearningRx program. Results showed statistically significant differences between groups on all outcome measures except attention. Implications, limitations, and suggestions for future research are discussed. © 2016 The Authors. Applied Cognitive Psychology published by John Wiley & Sons Ltd.

The modification of ‘IQ’ has been an elusive quest for many neuroplasticity researchers, who have found little transfer from targeted cognitive training interventions to general intelligence (Chein & Morrison, 2010; Dunning, Holmes, & Gathercole, 2013). Although transfer from working memory training to fluid intelligence has been documented in several small studies (Jaeggi, Buschkuehl, Jonides, & Perrig, 2008; Jaušovec & Jaušovec, 2012), skepticism continues to permeate the field (Redick et al., 2013). This skepticism is understandable given the number of non‐significant findings. Despite the controversy, the modern brain training movement has exploded with an assortment of programs designed to enhance cognitive function. The purpose of the present study was to examine the effects of a one‐on‐one cognitive training program on General Intellectual Ability (GIA) as well as on fluid reasoning, memory, visual and auditory processing, processing speed, and attention, all key cognitive skills that underlie the ability to learn.

Extant research supports the efficacy of cognitive training programs in improving individual cognitive skills (Holmes, Gathercole, & Dunning, 2009; Klingberg et al., 2005; Melby‐Lervåg & Hulme, 2013; Sonuga‐Barke et al., 2013; Wegrzyn, Hearrington, Martin, & Randolph, 2012). However, because each training program described in the literature targets different cognitive skills, the results are as varied as the programs themselves. Given the growing research base on the associations between working memory and intelligence (Cornoldi & Giofrè, 2014) and between working memory and learning (Alloway & Copello, 2013), it is easy to see why most cognitive training programs target working memory. Most of these studies report improvements in working memory (Beck, Hanson, & Puffenberger, 2010; Dunning et al., 2013; Gray et al., 2012; Holmes & Gathercole, 2014; Wiest, Wong, Minero, & Pumaccahua, 2014), but pretest to post‐test gains have also been documented in fluid reasoning (Barkl, Porter, & Ginns, 2012; Jaeggi et al., 2008; Mackey, Hill, Stone, & Bunge, 2011), processing speed (Mackey et al., 2011), reading (Loosli, Buschkuehl, Perrig, & Jaeggi, 2012; Shalev, Tsal, & Mevorach, 2007), computational accuracy (Witt, 2011), and attention (Rabiner, Murray, Skinner, & Malone, 2010; Tamm, Epstein, Peugh, Nakonezny, & Hughes, 2013).

Despite the assertion that fluid intelligence and individual cognitive skills can be trained (Sternberg, 2008), evidence that IQ scores can be modified by a training intervention is scarce. An intriguing gap in the literature is the dearth of cognitive training studies that specifically measure effects on IQ score, especially given the role of IQ scores in predicting reading ability (Naglieri & Ronning, 2000), academic achievement (Freberg, Vandiver, Watkins, & Canivez, 2008), the severity of children's mental health problems (Mathiassen et al., 2012), social mobility (Forrest, Hodgson, Parker, & Pearce, 2011), obesity (Chandola, Deary, Blane, & Batty, 2006), suicidality (Gunnell, Harbord, Singleton, Jenkins, & Lewis, 2009), early mortality (Maenner, Greenberg, & Mailick, 2015), income potential (Murray, 2002), and occupational performance (Hunter, 1986). The assessment of GIA, although standard practice in the formal diagnosis of learning disabilities, can also provide valuable information in a response‐to‐intervention context. Anastasi and Urbina (1997) suggest that intelligence tests should be used to assess strengths and weaknesses in order to plan how to bring people to their maximum level of functioning.

Studies using tests of fluid reasoning hint at an implicit measurement of general intelligence. Barkl et al. (2012) argue that the high correlation between fluid reasoning and general intelligence supports the assumption that interventions targeting fluid reasoning will necessarily target IQ score. Although their findings included significant improvements in inductive reasoning following reasoning training, the study did not include a comprehensive measure of GIA. Hayward, Das, and Janzen (2007) used the full scale score on the Das–Naglieri Cognitive Assessment System (CAS) in their study of the COGENT cognitive training program but did not find significant group differences on the measure. Dunning et al. (2013) included the Wechsler Abbreviated Scale of Intelligence among their measures of working memory training outcomes and found no evidence that training working memory enhances non‐verbal intelligence scores. This absence of an effect on IQ score was consistent with findings from a previous study of the same working memory training program (Holmes et al., 2010). Although Roughan and Hadwin (2011) did note significant group differences in IQ score as measured by Raven's Standard Progressive Matrices in a small study (n = 15), Mansur‐Alves and Flores‐Mendoza (2015) did not find significant post‐training differences between groups on the Raven's test in a larger study (n = 53). Thus, the lack of corroborating findings makes a weak case that working memory training alone is a tool for increasing IQ score.

Xin, Lai, Li, and Maes (2014) suggest that the mixed results from working memory training studies may stem from differences in the working memory tasks used in the interventions. Harrison, Shipstead, and Engle (2015) propose that the relationship between working memory and fluid intelligence is a function of the matrix tasks used to measure fluid intelligence. Specifically, the ability to keep solutions from prior Raven's items active in memory enhances performance on the test. Alternatively, the inconsistency in findings may be associated not with variations in tasks, the ability to recycle solutions, or working memory training efficacy per se, but with the narrow theoretical foundation on which working memory training programs may be based. With few exceptions, the commercially available programs are based on Baddeley's (1992) three‐component model of working memory, comprising the phonological loop, the visuo‐spatial sketchpad, and the central executive, which remains the most widely accepted theory of working memory. However, given that the development and revision of contemporary IQ tests are guided by the ever‐evolving Cattell–Horn–Carroll (CHC) theory of cognitive abilities (McGrew, 2009), it should follow that interventions grounded in a similar theoretical basis would have a larger impact on IQ score and on the multiple cognitive constructs that collectively determine a full scale IQ score. The CHC theory is a relatively new model of intelligence that merges the Gf‐Gc theory (fluid intelligence and crystallized intelligence, respectively) espoused by Cattell and Horn (1991) and the three‐stratum model of intelligence espoused by Carroll (1993).
The most recent update of the model (Schneider & McGrew, 2012) places the individual cognitive abilities in four categories: acquired knowledge (crystallized intelligence), domain‐independent general capacities (fluid reasoning and memory), sensory‐motor abilities (visual and auditory processing), and general speed (processing speed, reaction time, and psychomotor speed), all of which fall under the umbrella of GIA. Thus, it would be interesting to investigate whether comprehensive cognitive training interventions that target multiple cognitive abilities across these categories affect not only the individual cognitive constructs but also a GIA score (McGrew, Schrank, & Woodcock, 2007).

One such program has been developed to target multiple cognitive abilities. As described in a prior study (Gibson, Carpenter, Moore, & Mitchell, 2015), the ThinkRx cognitive training program targets and remediates seven general cognitive skills and 25 subskills through repeated engagement in game‐like mental tasks delivered one‐on‐one by a cognitive trainer (Table 1). The 60‐h program includes a 230‐page curriculum consisting of 23 different training procedures with more than 1000 total difficulty levels. The program components are sequenced and loaded by difficulty and intensity. Trainers use a metronome, a stopwatch, tangrams, shape and number cards, workboards, a trampoline, a footbag, and activity sheets to deliver the program to students. The training tasks emphasize visual or auditory processes that require attention and reasoning throughout each 60‐ to 90‐min training period. Training sessions are focused, demanding, intense, and tightly controlled by the trainer to push students just above their current cognitive skill levels. Deliberate distractions are built into the sessions to tax the brain's capacity for sorting incoming information and evaluating its importance. The metronome increases intensity and ensures there are no ‘mental breaks’ while a student completes a training task.

Table 1.

Descriptions of training tasks and skills targeted by each task, and the number of difficulty levels in each task

| Task description | Skills targeted | Levels |
|---|---|---|
| 1. Colored arrows or words are displayed. Participants call out colors, directions, or words. | DA, PS, SA, STA, VM, VN, WM | 48 |
| 2. Columns of numbers are displayed. Participants add a constant to, subtract a constant from, or multiply by a constant each number in the column. | PS, MC, DA, LTM, STA, WM | 35 |
| 3. A more difficult version of #2 using multiple operations and an optional trampoline. | PS, SF, MC, DA, LTM, STA, VS, WM | 44 |
| 4. Participants visually fixate on a pen while simultaneously completing a mental activity. | STA, VP, DA, VF, SM | 18 |
| 5. Participants perform actions on charts of numbers and letters. | PS, DA, MC, WM, SF, SA, SM, STA, VD, VS | 44 |
| 6. Participants are asked to paraphrase stories and represent concepts with concrete objects. | VN, C, SP, SSP, LR | 17 |
| 7. Participants listen to or read descriptors and select the object that matches the descriptions. | VN, C, LR, SP, WM | 32 |
| 8. Trainer and participant toss a hacky sack on the metronome beat. | AA, DA, MC, PS, SM, WM, SSA, VN | 5 |
| 9. Participant claps and taps in rhythm to the metronome with distractions. | AD, DA, SA, SM, SP, SSA | 13 |
| 10. Participant touches his thumb to his fingers on the beat while completing mental activities. | DA, PS, SM | 6 |
| 11. Participant studies numbers and their positions on a card and recalls the digits and positions on the beat. | DA, MC, WM, VP, VS, VN | 25 |
| 12. Trainer calls out numbers for the participant to perform a mathematical operation on n‐back numbers using a timer and metronome. | DA, C, PS, SA, SSA, WM, SP | 44 |
| 13. Participant studies patterns of shapes and reproduces them from memory. | WM, LTM, VD, VS, SSA, PS, SP | 35 |
| 14. Participant identifies three‐card groups sharing shape, color, orientation, and size characteristics. | LR, C, WM, PS, SA, SSA, VD | 40 |
| 15. Participant reasons through brain teaser cards. | LR, VN, C, SP, VM | 32 |
| 16. Trainer and participant visualize and verbally play tic‐tac‐toe activities. | DA, EP, PS, SP, VP, STM, VN | 32 |
| 17. Using a golf course map, participant studies the route to the hole and draws the route with his eyes closed. | VP, VN, SM | 32 |
| 18. Participant studies humorous images representing groups of related people, objects, numbers, and concepts and recalls the items from memory. | LTM, VN, AM, C, PS, SSA, WM, VP | 34 |
| 19. Participant recreates studied images with tangrams. | VN, LR, SP, SM, STM, SP, SSA, VM, WM | 38 |
| 20. Participant visualizes and spells words in the air. | VP, VN, WM | 6 |
| 21. Trainer drills participant on 17 sounds. | AA, AD, AP | 14 |
| 22. Participant segments sounds of words. | AA, AD, AP, AS | 14 |
| 23. Participant blends sounds to make words. | AA, AB, AD, AP | 14 |
| 24. Participant manipulates words by removing sounds. | AA, AD, AP | 14 |

Note. AA = auditory analysis, AB = auditory blending, AD = auditory discrimination, AP = auditory processing, AS = auditory segmenting, AM = associative memory, C = comprehension, DA = divided attention, EP = executive processing, LR = logic and reasoning, LTM = long‐term memory, MC = math computation, PS = processing speed, SF = saccadic fixation, SA = selective attention, SM = sensory‐motor integration, SP = sequential processing, STM = short‐term memory, SSP = simultaneous processing, STA = sustained attention, VP = visual processing, VD = visual discrimination, VF = visual fixation, VM = visual manipulation, VN = visualization, VS = visual span, WM = working memory.

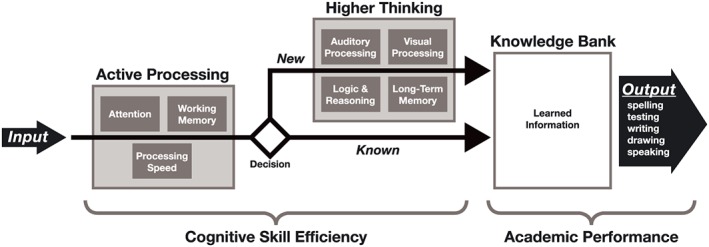

Each ThinkRx training procedure targets various combinations of skills such as working memory, processing speed, visualization, auditory discrimination, reasoning, sensory‐motor integration, and attention. The program itself is grounded in The Learning Model (Gibson, Hanson, & Mitchell, 2007; Gibson et al., 2015; Press, 2012), a pictorial representation of information processing shown in Figure 1. The Learning Model is based on CHC theory, which espouses a multiple‐construct view of intelligence. (For a complete description of CHC theory, see McGrew, 2005.) The Learning Model illustrates the role of individual cognitive abilities in cognitive skill efficiency and the direct influence of that efficiency on the ability to store and retrieve accumulated knowledge. In the model, information is acquired through the senses and must then be recognized and analyzed by a fluid or active processing system that includes working memory, processing speed, and attention. This executive control system determines which information is unimportant, easily handled, or requires more complex processing. If the information is novel or complex, higher‐order processes such as reasoning, auditory processing, and visual processing must occur in order to complete the task. With practice, higher‐order processing can be bypassed, which helps decrease the time between sensory input and output.

Figure 1.

Pictorial representation of The Learning Model

The one‐on‐one delivery method of the ThinkRx program is supported by Feuerstein's theory of structural cognitive modifiability, which posits that cognition is not static but malleable as a result of mediated experiences with the world (Feuerstein, Feuerstein, & Falik, 2010). This mediation represents the role of the adult in the student's ability to make sense of stimuli in the environment. It is not the impartation of knowledge to a student but, instead, the purposeful coaching of a student's interaction with a stimulus to bring about understanding and build cognitive capacity for learning. Research on Feuerstein's Instrumental Enrichment Basic (IE) cognitive training program suggests that fluid intelligence can be modified through these interactions (Kozulin et al., 2010). Like Feuerstein's program, the trainer‐delivered ThinkRx program is distinct from the computer‐based cognitive training programs that are ubiquitous in the extant literature.

Although statistically significant cognitive skill gains have been noted in four doctoral research studies on the ThinkRx program (Jedlicka, 2012; Luckey, 2009; Moore, 2015; Pfister, 2013), published research on the efficacy of ThinkRx has only recently begun to appear. Building on the results of a quasi‐experimental study of the ThinkRx program that used propensity‐matched controls (Gibson et al., 2015), we considered it important to conduct a randomized controlled trial so that the results would make a meaningful contribution to the existing literature on cognitive training for remediating deficits in multiple cognitive skills.

Method

To examine the effects of a one‐on‐one cognitive training program on children's cognitive skills, we conducted a randomized, pretest–posttest control group study using the ThinkRx cognitive training program delivered by cognitive trainers in two training locations. This study was guided by the following question: Is there a statistically significant difference in GIA score, Associative Memory, Visual Processing, Auditory Processing, Logic and Reasoning, Processing Speed, Working Memory, Long Term Memory, and Attention between those who complete ThinkRx cognitive training and those who do not?

Participants

The sample for the study (n = 39) was recruited from students aged 8–14 in a database of families who had requested information about LearningRx cognitive training in Colorado Springs during the three years prior to the study. A recruitment email was sent to all families in the database (n = 2241). Eligibility was limited to participants between the ages of 8 and 14 who lived within commuting distance of Colorado Springs and who scored between 70 and 130 at screening on the GIA composite of the Woodcock Johnson III—Tests of Cognitive Abilities. Of the 43 volunteers, 39 students met the criteria for participation. Using blocked random assignment, in which sibling sets were kept together and individual students were assigned singly, participants were randomly assigned to one of two groups: an experimental group that completed 60 h of one‐on‐one cognitive training or a wait‐list control group. Blocking by sibling or individual status was chosen to reduce the risk of attrition and contamination that could arise if siblings were assigned to different groups. The experimental group (n = 20) included 11 females and nine males, with a mean age of 11.3 years. In the experimental group, parent‐reported diagnoses included ADHD (n = 6), dyslexia (n = 3), learning disability (LD; n = 2), speech delay (n = 2), and traumatic brain injury (TBI; n = 1). The control group (n = 19) included seven females and 12 males, with a mean age of 11.1 years. In the control group, parent‐reported diagnoses included ADHD (n = 7), dyslexia (n = 3), LD (n = 1), and speech delay (n = 2). Diagnosis was not an exclusion criterion for several reasons. First, prior observational data from LearningRx reveal similar results across diagnostic categories. Next, random assignment is expected to distribute diagnoses evenly across groups, so diagnosis should not systematically bias the group comparison. Finally, it would be impossible to tease apart differences based on diagnosis in a small sample without losing statistical power.
A check of the random assignment indicated the groups were balanced, with no significant differences between groups on personal characteristics (age: t = −.407, p = .686; gender: χ² = 1.29, p = .26; race/ethnicity: χ² = 3.42, p = .06; ADD/ADHD: χ² = .21, p = .65; autistic: χ² = .98, p = .32; dyslexia: χ² = .01, p = .95; gifted: χ² = .42, p = .52; LD: χ² = .31, p = .58; none: χ² = .74, p = .39; physical: χ² = .00, p = .97; speech: χ² = .00, p = .96; TBI: χ² = .96, p = .32).
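Blocked assignment of this kind can be sketched in a few lines. The sketch below is a hypothetical illustration, not the study's actual procedure (which the text does not detail): each block is either a sibling set or a single student, the blocks are shuffled, and each block is placed whole into whichever arm currently has fewer participants, so siblings always share an arm. The function name, block labels, and greedy balancing rule are all assumptions.

```python
import random


def assign_blocks(blocks, seed=None):
    """Randomly assign whole blocks (sibling sets or single students) to two arms.

    Hypothetical procedure: shuffle the blocks, then give each block to the
    arm with fewer participants so far (coin flip on ties). Siblings in the
    same block therefore always end up in the same arm.
    """
    rng = random.Random(seed)
    order = list(blocks)
    rng.shuffle(order)
    sizes = {"treatment": 0, "control": 0}
    assignment = {}
    for block in order:
        if sizes["treatment"] == sizes["control"]:
            arm = rng.choice(["treatment", "control"])
        else:
            arm = min(sizes, key=sizes.get)  # the currently smaller arm
        sizes[arm] += len(block)
        for participant in block:
            assignment[participant] = arm
    return assignment


# Hypothetical roster: three sibling sets and three single students
roster = [["A1", "A2"], ["B1"], ["C1", "C2", "C3"], ["D1"], ["E1"], ["F1", "F2"]]
arms = assign_blocks(roster, seed=2016)
print(arms)
```

Because a whole block moves at once, the two arms can differ in size by at most the size of the largest sibling set.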

Training tasks

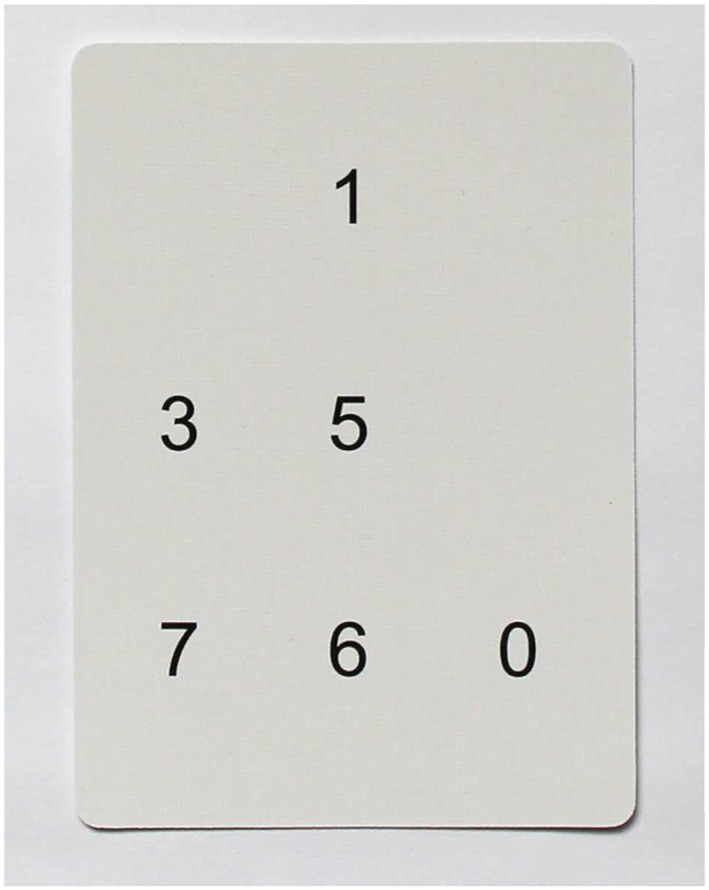

The ThinkRx cognitive training program includes 23 training tasks. Each task targets a primary cognitive ability as well as multiple cognitive skills. For example, the primary objective of training task #11 (Figure 2) is to develop working memory, but the task also develops visual span, visualization, and concentration. Descriptions of each training task are presented in Table 1. Trainers tracked participants' progress through each level using a dynamic assessment system. As participants mastered each level of task difficulty, the trainers documented the date and time in individual student workbooks. Trainers provided constant feedback and awarded points for mastery and effort. Participants were able to save and later exchange their points for small prizes or gift cards.

Figure 2.

Example of a memory training procedure. Participants study a card for 3 s and then recall the numbers in their correct positions on the grid. Oral responses must be given on the beat of the metronome. In this example, the response would be ‘Blank‐1‐blank‐3‐5‐blank‐7‐6‐0’.
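The response rule for this procedure can be made concrete with a small sketch. It assumes, hypothetically, that the card is a 3 × 3 grid read row by row, left to right, with `None` marking an empty cell; the grid layout and the helper name `spoken_response` are illustrative assumptions, and the sketch uses an ASCII hyphen as the separator.

```python
def spoken_response(grid):
    """Build the expected oral response for a recall grid.

    Assumed representation: a list of rows, None for an empty cell,
    read row by row from left to right.
    """
    tokens = ["blank" if cell is None else str(cell) for row in grid for cell in row]
    response = "-".join(tokens)
    # Capitalize only the first character, as in the figure caption.
    return response[:1].upper() + response[1:]


card = [[None, 1, None],
        [3, 5, None],
        [7, 6, 0]]
print(spoken_response(card))  # Blank-1-blank-3-5-blank-7-6-0
```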

Testing tasks

Associative memory test

The Visual–Auditory Learning subtest of the Woodcock Johnson III—Tests of Cognitive Abilities was administered to measure associative and semantic memory. The test requires encoding and retrieval of auditory and visual associations. The test administrator teaches the participant a rebus, a set of pictures that each represent a word. The participant must then recall the associations between the pictures and the words by reading the pictures aloud as sentences. For ages 5–19, this test has a median reliability of .81 (Mather & Woodcock, 2001).

Visual processing test

The Spatial Relations subtest of the Woodcock Johnson III—Tests of Cognitive Abilities measures visual processing skills by asking the student to match individual puzzle pieces to a completed shape. For ages 5–19, this test has a median reliability of .86 (Mather & Woodcock, 2001).

Auditory processing test

The Sound Blending subtest of the Woodcock Johnson III—Tests of Cognitive Abilities measures the ability to synthesize phonemes. The test administrator presents a series of phonemes (language sounds) and the student must blend them together to form a word. For ages 5–19, this test has a median reliability of .86 (Mather & Woodcock, 2001).

Logic and reasoning test

The Concept Formation subtest of the Woodcock Johnson III—Tests of Cognitive Abilities measures fluid reasoning by requiring the student to use inductive logic and apply rules to sets of shapes that share similarities and differences. The student must indicate the rule that differentiates one set of shapes from the others. For ages 5–19, this test has a median reliability of .94 (Mather & Woodcock, 2001).

Working memory test

The Numbers Reversed subtest of the Woodcock Johnson III—Tests of Cognitive Abilities measures working memory by asking the student to remember a span of numbers and repeat them in reverse order from how they were presented. For ages 5–19, this test has a median reliability of .86 (Mather & Woodcock, 2001).

Processing speed test

The Visual Matching subtest of the Woodcock Johnson III—Tests of Cognitive Abilities measures perceptual processing speed by asking the student to discriminate visual symbols. Within three minutes, the student identifies and circles the matching pair in each row of six numbers, which range from single‐digit to three‐digit numbers. For ages 5–19, this test has a median reliability of .89 (Mather & Woodcock, 2001).

Long‐term memory test

The Visual–Auditory Learning‐Delayed subtest of the Woodcock Johnson III—Tests of Cognitive Abilities repeats the verbal–visual associations learned during the Visual–Auditory Learning subtest administered earlier in the testing session. The test requires the student to read the rebus passages again as a measure of long‐term retention. For ages 5–19, this test has a median reliability of .92 (Mather & Woodcock, 2001).

Attention test

The Flanker Inhibitory Control and Attention Test from the NIH Toolbox Cognition Battery measures attention and inhibitory control. In this computer‐based test, the student focuses on a central arrow and identifies its direction while other arrows flank it. For this 3‐min test, scoring is based on a combination of accuracy and reaction time. For ages 8–15, the test has a convergent validity of .34 with the D‐KEFS Inhibition Test (Zelazo et al., 2013).

GIA score

GIA score is a cluster score on the Woodcock Johnson III—Tests of Cognitive Abilities (Woodcock, McGrew, & Mather, 2001). The score is an age‐based weighted composite of seven cognitive tests that measure verbal comprehension (20%), associative memory (17%), visual processing (9%), phonemic awareness (12%), fluid reasoning (19%), processing speed (19%), and working memory (13%). Attention and long‐term memory are not included in the GIA score.
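To illustrate what a weighted composite means, the sketch below averages subtest standard scores using the percentages listed above as weights. This is an illustrative simplification under stated assumptions: the listed percentages sum to 109, so the sketch rescales them to sum to one, and the published WJ III GIA is derived with age‐specific weights and norm tables that this sketch does not reproduce. The function and key names are hypothetical.

```python
# Weights as listed in the text (percent). They sum to 109, so
# weighted_composite() rescales them by their total.
GIA_WEIGHTS = {
    "verbal_comprehension": 20,
    "associative_memory": 17,
    "visual_processing": 9,
    "phonemic_awareness": 12,
    "fluid_reasoning": 19,
    "processing_speed": 19,
    "working_memory": 13,
}


def weighted_composite(scores, weights=GIA_WEIGHTS):
    """Weighted mean of subtest standard scores, weights rescaled to sum to 1.

    Illustrative only: this is not the normed WJ III GIA computation.
    """
    total_weight = sum(weights[name] for name in scores)
    return sum(scores[name] * weights[name] for name in scores) / total_weight


# Hypothetical profile: every subtest at 100 except fluid reasoning at 110
profile = {name: 100 for name in GIA_WEIGHTS}
profile["fluid_reasoning"] = 110
print(round(weighted_composite(profile), 2))  # 101.74
```

A 10‐point gain on a subtest carrying 19 of the 109 weight units moves this illustrative composite by about 1.7 points.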

Procedures

After obtaining parental consent, participants were pretested in quiet testing rooms. Under the supervision of a doctoral‐level educational psychologist, master's‐level test administrators who were blind to the experimental condition administered the Woodcock Johnson III—Tests of Cognitive Abilities (tests 1–7 and 10). Trained research assistants administered the Flanker Test from the NIH Toolbox Cognition Battery. The mean interval from pretest to post‐test was 14.4 weeks for the experimental group and 14.5 weeks for the control group. Participants in the experimental group attended three or four 90‐min training sessions per week during the 15‐week study period, for a total of 40 sessions. Training sessions were held at two locations: a cognitive training center and a cognitive science research facility with training rooms similar to those at the training center. LearningRx‐certified cognitive trainers who were not part of the research team delivered the ThinkRx program during the scheduled sessions. On‐site LearningRx master trainers monitored day‐to‐day program fidelity. The remaining phases of the study, including design and data analysis, were not performed by LearningRx. All students in the experimental group completed the required 60‐h protocol and attended all 40 training sessions. Control group participants did not begin their intervention until the experimental group had completed its 60 h of training. Post‐testing was completed within two weeks of the experimental group's program completion.

Statistical analysis

Data were analyzed using multivariate analysis of variance (MANOVA), with the pretest‐to‐post‐test difference (gain) score for each measure as the dependent variables. In other words, the study used a difference‐in‐differences analysis for all measures: each group's mean change was computed, and the two groups' mean changes were compared. Given the number of comparisons (nine, one for each measure), a Bonferroni correction was applied. Effect sizes were also calculated for all measures using Cohen's d. To address the potential for Lord's Paradox (Wainer, 1991), we also conducted a separate analysis of covariance (ANCOVA) for each measure, with the post‐test score as the dependent variable and the pretest score as the covariate, again applying a Bonferroni correction. Because the results were conceptually the same, we report the MANOVA findings, with two exceptions described below.
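With nine outcome measures and a familywise α of .05, the Bonferroni correction tests each measure at .05 / 9 ≈ .0056, or equivalently multiplies each p‐value by 9 (capped at 1) and compares the result with .05. A minimal sketch follows; the p‐values in the usage line are hypothetical, not the study's.

```python
def bonferroni(p_values, alpha=0.05):
    """Return the per-test alpha and the Bonferroni-adjusted p-values."""
    m = len(p_values)
    per_test_alpha = alpha / m
    adjusted = [min(1.0, p * m) for p in p_values]  # cap adjusted p at 1
    return per_test_alpha, adjusted


# Nine hypothetical p-values, one per outcome measure
threshold, adjusted = bonferroni(
    [0.001, 0.004, 0.010, 0.020, 0.030, 0.002, 0.003, 0.450, 0.0005]
)
print(f"per-test alpha = {threshold:.4f}")  # per-test alpha = 0.0056
significant = [p_adj < 0.05 for p_adj in adjusted]
print(significant)
```

Comparing an adjusted p with .05 gives the same decision as comparing the raw p with .05 / 9.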

Data screening indicated no missing data, and almost all variables were within tolerable ranges for skewness; only the Long Term Memory pretest showed a small positive skew. Comparisons of pretest scores indicated that the groups did not differ significantly on most measures (Associative Memory t = −.57, p = .57; Visual Processing t = −.21, p = .83; Auditory Processing t = .16, p = .87; Working Memory t = .66, p = .51; Long Term Memory t = −.35, p = .73; GIA t = .63, p = .54; Attention t = −.88, p = .39), with the exceptions of Logic and Reasoning (t = 2.33, p = .03) and Processing Speed (t = −2.04, p = .05). The treatment group had a lower mean pretest score on the former (treatment = 100.70, control = 111.95) and a higher mean pretest score on the latter (treatment = 87.35, control = 77.68). Because of these differences, we also report the ANCOVA results for these two measures below, as ANCOVA evaluates post‐test scores after controlling for pretest scores.
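Lord's Paradox arises because gain‐score and ANCOVA analyses can disagree when groups differ at pretest, as they did here on Logic and Reasoning and Processing Speed. The sketch below contrasts the two estimates on small, made‐up data constructed so that post‐test = 20 + 0.8 × pretest + 5 × treatment. The single‐covariate ANCOVA estimate (using the pooled within‐group slope) recovers the effect of 5, while the gain‐score difference is 7 because the treatment group started 10 points lower. The data and function names are hypothetical illustrations, not study data.

```python
from statistics import fmean


def gain_score_effect(pre_t, post_t, pre_c, post_c):
    """Treatment-minus-control difference in mean change (post - pre)."""
    gain_t = fmean(post - pre for pre, post in zip(pre_t, post_t))
    gain_c = fmean(post - pre for pre, post in zip(pre_c, post_c))
    return gain_t - gain_c


def ancova_effect(pre_t, post_t, pre_c, post_c):
    """Group effect on post-test adjusted for pretest, assuming equal
    within-group regression slopes (one-covariate ANCOVA)."""
    sxy = sxx = 0.0
    for pre, post in ((pre_t, post_t), (pre_c, post_c)):
        mx, my = fmean(pre), fmean(post)
        sxy += sum((x - mx) * (y - my) for x, y in zip(pre, post))
        sxx += sum((x - mx) ** 2 for x in pre)
    slope = sxy / sxx  # pooled within-group slope
    raw_diff = fmean(post_t) - fmean(post_c)
    return raw_diff - slope * (fmean(pre_t) - fmean(pre_c))


# Hypothetical data: post = 20 + 0.8 * pre + 5 * treatment (no noise)
pre_t, post_t = [90, 100, 110], [97, 105, 113]
pre_c, post_c = [100, 110, 120], [100, 108, 116]
print(round(gain_score_effect(pre_t, post_t, pre_c, post_c), 2))  # 7.0
print(round(ancova_effect(pre_t, post_t, pre_c, post_c), 2))      # 5.0
```

When the pretest slope is below 1, part of a group's pretest disadvantage shows up as extra "gain", which the ANCOVA adjustment removes.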

Results

As indicated in Table 2, participants in the treatment group showed greater mean change scores than the control group on all measures. The largest between‐group differences in change scores were on Logic and Reasoning and GIA, and the smallest were on Attention, Processing Speed, and Visual Processing. Moreover, participants in the treatment group showed growth on all measures, whereas control group participants showed decreasing mean scores on four measures (Auditory Processing, Logic and Reasoning, Working Memory, and GIA). The greatest growth in the treatment group was evident in Long Term Memory, Associative Memory, and Logic and Reasoning, with the smallest growth in Attention and Visual Processing.

Table 2.

Pre to post difference scores by group

| Measure | Control Mean | Control SD | Treatment Mean | Treatment SD | Difference (MT − MC) |
|---|---|---|---|---|---|
| GIA | −5.11 | 8.93 | 21.00 | 13.49 | 26.11 |
| Associative Memory | 7.68 | 14.77 | 22.95 | 13.61 | 15.27 |
| Visual Processing | 4.26 | 10.30 | 10.85 | 9.75 | 6.59 |
| Auditory Processing | −3.74 | 12.44 | 13.30 | 12.28 | 17.04 |
| Logic and Reasoning | −7.21 | 10.87 | 21.10 | 18.50 | 28.31 |
| Processing Speed | 6.53 | 7.24 | 12.95 | 9.53 | 6.42 |
| Working Memory | −7.68 | 19.66 | 13.05 | 15.11 | 20.73 |
| Long Term Memory | 6.95 | 13.05 | 28.20 | 22.38 | 21.25 |
| Attention | 3.17 | 7.34 | 5.06 | 8.12 | 1.89 |

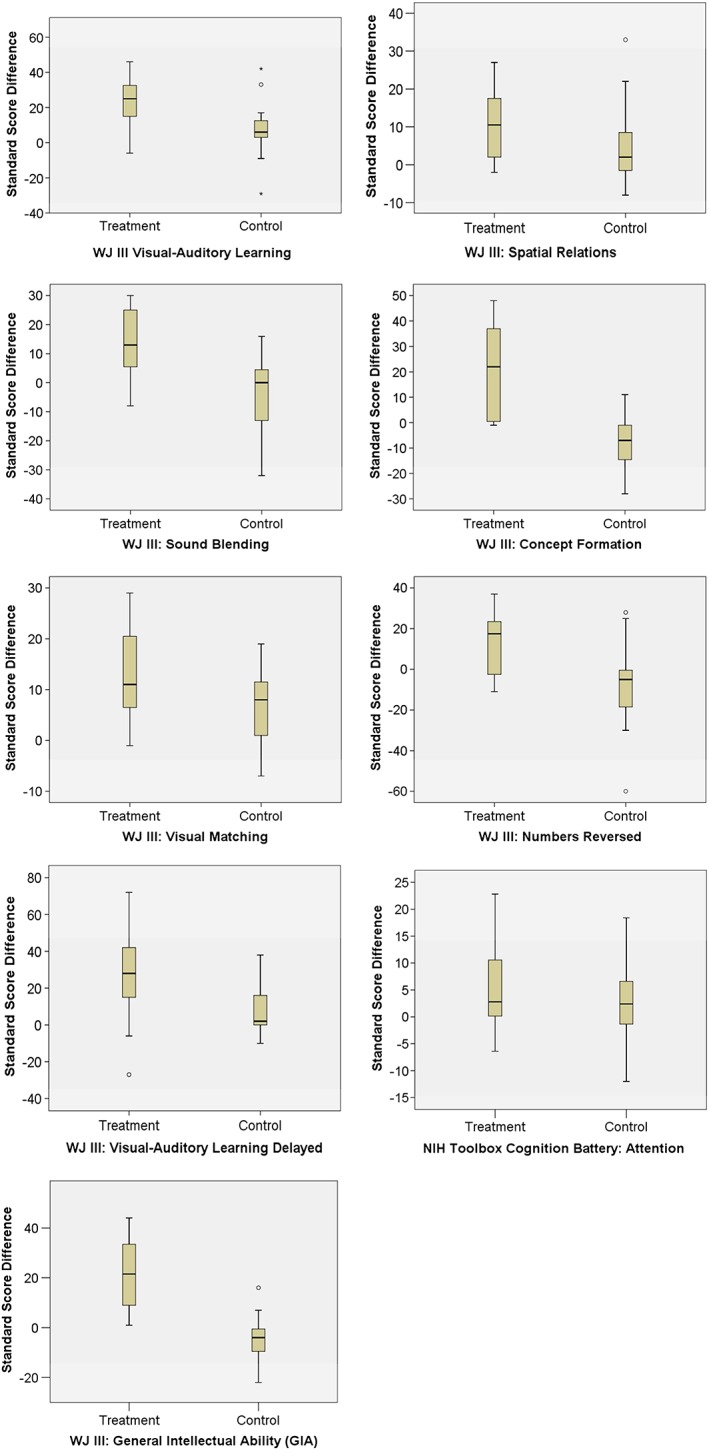

Table 3 offers another way to view the differences. These data represent participants in each group whose change scores were at or close to the group's mean difference score on each measure. As such, they can be thought of as average or representative participants for each group on each measure. These individual data show how much greater the growth was for treatment group participants than for those in the control group. Among these representative participants, treatment students typically saw growth two to three times greater than that of control students. Notably, this is so even though treatment pretest scores almost always exceeded control pretest scores. To illustrate group differences in change from pretest to post‐test, Figure 3 shows the distribution of change scores by group in the form of boxplots.

Table 3.

Pre and post scores for a representative sample of selected participants at approximately the average of each measure's difference score

| Measure | Treatment Pre | Treatment Post | Treatment Diff | Control Pre | Control Post | Control Diff |
|---|---|---|---|---|---|---|
| Associative Memory | 94 | 117 | 23 | 79 | 86 | 7 |
| Visual Processing | 96 | 107 | 11 | 93 | 97 | 4 |
| Auditory Processing | 116 | 133 | 17 | 105 | 104 | −1 |
| Logic and Reasoning | 118 | 138 | 20 | 99 | 92 | −7 |
| Processing Speed | 101 | 113 | 12 | 81 | 87 | 6 |
| Working Memory | 92 | 106 | 14 | 100 | 94 | −6 |
| Long Term Memory | 90 | 117 | 27 | 78 | 86 | 8 |
| GIA | 126 | 146 | 20 | 109 | 103 | −6 |
| Attention | 107 | 111 | 4 | 91 | 95 | 3 |
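The selection rule behind Table 3, picking the participant whose change score lies closest to the group mean, can be sketched as follows. The participant IDs and change scores are hypothetical, and ties (which the text does not discuss) are broken here by first occurrence.

```python
def representative(change_scores):
    """Return the participant ID whose change score is closest to the group mean."""
    mean_change = sum(change_scores.values()) / len(change_scores)
    # min() keeps the first ID encountered when distances tie.
    return min(change_scores, key=lambda pid: abs(change_scores[pid] - mean_change))


# Hypothetical treatment-group change scores (mean = 20)
example = {"T01": 30, "T02": 17, "T03": 22, "T04": 11}
print(representative(example))  # T03
```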

Figure 3.

Distribution of change scores by group

MANOVA results indicate an overall significant difference between treatment and control groups (F = 15.83, p < .01, partial η² = .83), with pairwise comparisons indicating significant differences between groups on eight of nine measures. Table 4 presents the significance testing results for each assessment measure. The one nonsignificant difference was in Attention. Turning to effect sizes, which indicate the magnitude of the differences, the greatest effect of the intervention was on GIA score, followed by Logic and Reasoning; both measures showed extremely large effects. All three measures of memory also showed very large effects. The smallest effect was on Attention, followed by Visual Processing and Processing Speed, both of which showed medium to large effects.
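For a two‐group MANOVA, the multivariate partial η² can be recovered from the reported F as F·df₁ / (F·df₁ + df₂). The degrees of freedom are not reported in the text; the sketch assumes the exact F for nine dependent variables and N = 39, giving df₁ = 9 and df₂ = 39 − 9 − 1 = 29. Under that assumption, F = 15.83 reproduces the reported partial η² of .83.

```python
def partial_eta_squared(f_stat, df_effect, df_error):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return (f_stat * df_effect) / (f_stat * df_effect + df_error)


# Assumed dfs: p = 9 dependent variables, N = 39, two groups -> (9, 39 - 9 - 1)
n_dvs, n_total = 9, 39
print(round(partial_eta_squared(15.83, n_dvs, n_total - n_dvs - 1), 2))  # 0.83
```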

Table 4.

Significance testing results for assessment measures

| Measure | F | p | d |
|---|---|---|---|
| GIA | 50.20 | 0.00 | 2.92 |
| Associative Memory | 11.28 | 0.00 | 1.03 |
| Visual Processing | 4.21 | 0.05 | 0.64 |
| Auditory Processing | 18.53 | 0.00 | 1.37 |
| Logic and Reasoning | 33.49 | 0.00 | 2.60 |
| Processing Speed | 5.57 | 0.02 | 0.89 |
| Working Memory | 13.72 | 0.00 | 1.05 |
| Long Term Memory | 12.95 | 0.00 | 1.63 |
| Attention | 0.58 | 0.45 | 0.26 |
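Table 4 reports Cohen's d, but the text does not state which formula was used. A common choice, assumed here, is the standardized mean difference with a pooled sample standard deviation; applying it to change scores would likewise be an assumption. The sketch below also encodes Cohen's conventional benchmarks (0.2 small, 0.5 medium, 0.8 large), under which Visual Processing (0.64) is medium and Processing Speed (0.89) is large. The function names are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(treatment, control):
    """Standardized mean difference (treatment - control) using the pooled sample SD."""
    n_t, n_c = len(treatment), len(control)
    # Pooled variance weights each group's sample variance by its degrees of freedom.
    pooled_var = ((n_t - 1) * variance(treatment) + (n_c - 1) * variance(control)) / (
        n_t + n_c - 2
    )
    return (mean(treatment) - mean(control)) / sqrt(pooled_var)


def cohen_label(d):
    """Classify |d| with Cohen's conventional benchmarks (0.2, 0.5, 0.8)."""
    magnitude = abs(d)
    if magnitude >= 0.8:
        return "large"
    if magnitude >= 0.5:
        return "medium"
    if magnitude >= 0.2:
        return "small"
    return "negligible"


if __name__ == "__main__":
    # Effect sizes as reported in Table 4.
    for measure, d in [("GIA", 2.92), ("Processing Speed", 0.89),
                       ("Visual Processing", 0.64), ("Attention", 0.26)]:
        print(f"{measure:<18} d = {d:.2f} ({cohen_label(d)})")
```

Cohen's scheme stops at "large"; the labels "very large" and "extremely large" used in the text describe values well beyond the 0.8 threshold.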

As for the ANCOVA analyses of Logic and Reasoning and Processing Speed, the two measures with nonhomogeneous pretest group means, results indicate that post‐test scores were significantly greater for treatment group participants after controlling for pretest scores. On Logic and Reasoning (F = 32.01, p < .001), the treatment group's adjusted mean was about 19.7 points greater than the control group's (treatment: M = 123.08, SE = 2.35; control: M = 103.39, SE = 2.41). On Processing Speed (F = 10.47, p = .003), the treatment group's adjusted mean was more than eight points greater (treatment: M = 96.67, SE = 1.81; control: M = 88.04, SE = 1.86).
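For readers unfamiliar with how ANCOVA produces adjusted (conditional) means, a minimal sketch of the standard one‐covariate computation follows: each group's post‐test mean is shifted by the pooled within‐group regression slope times that group's distance from the grand pretest mean. It assumes equal regression slopes across groups, uses made‐up toy scores rather than study data, and is not the software the authors used; `adjusted_means` is a hypothetical name.

```python
from statistics import mean


def adjusted_means(groups):
    """One-covariate ANCOVA adjusted means.

    groups: {name: (pre_scores, post_scores)} -> {name: adjusted post-test mean}
    Assumes homogeneous regression slopes across groups.
    """
    sxy = sxx = 0.0
    all_pre = []
    for pre, post in groups.values():
        mx, my = mean(pre), mean(post)
        # Accumulate within-group cross-products and sums of squares.
        sxy += sum((x - mx) * (y - my) for x, y in zip(pre, post))
        sxx += sum((x - mx) ** 2 for x in pre)
        all_pre.extend(pre)
    b_within = sxy / sxx          # pooled within-group slope of post on pre
    grand_pre = mean(all_pre)     # grand mean of the covariate
    return {
        name: mean(post) - b_within * (mean(pre) - grand_pre)
        for name, (pre, post) in groups.items()
    }


if __name__ == "__main__":
    toy = {  # hypothetical scores, not study data
        "treatment": ([100, 110, 120], [110, 120, 130]),
        "control": ([90, 100, 110], [90, 100, 110]),
    }
    print(adjusted_means(toy))
```

In the toy data the raw post‐test gap of 20 points shrinks to 10 (adjusted means 115 and 105) once the treatment group's 10‐point pretest advantage is taken into account.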

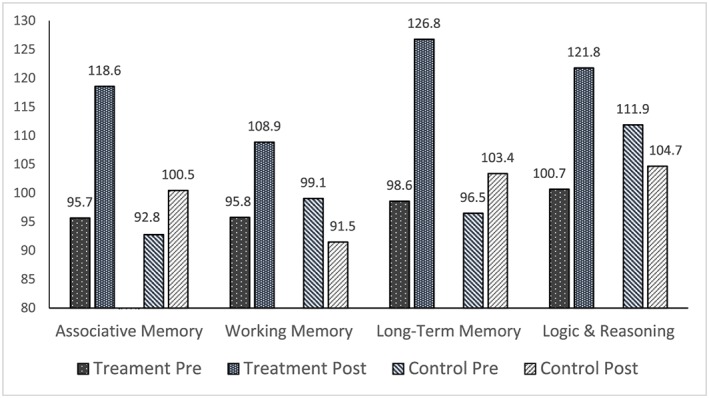

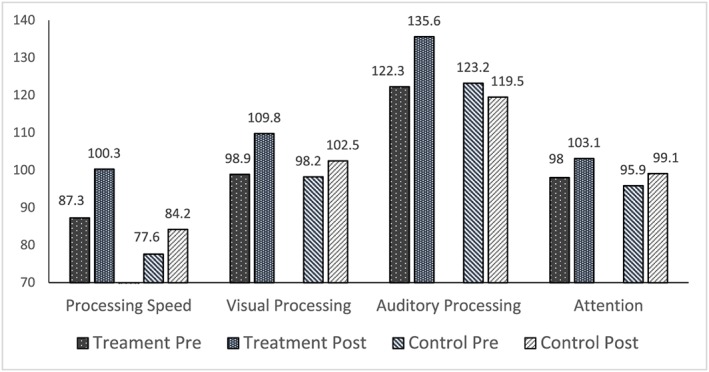

In summary, the intervention produced significantly greater growth on all measures except Attention. Participants who received the intervention consistently improved from pretest to post‐test, whereas control group participants declined on some measures. Effect sizes were extremely large for two measures, Logic and Reasoning and GIA, and large for all three memory measures, with associative and working memory nearly identical (d = 1.03 and 1.05) and long‐term memory larger (d = 1.63). Figures 4–6 show the between‐group differences from pretest to post‐test.

Figure 4.

Comparison of treatment and control group mean pretest and posttest scores on General Intellectual Ability (GIA)

Figure 5.

Comparison of treatment and control group mean pretest and posttest scores on memory and logic and reasoning

Figure 6.

Comparison of treatment and control group mean pretest and posttest scores on processing speed, visual processing, auditory processing, and attention

Discussion

In the current study, we tested the effects of a comprehensive cognitive training program delivered to children and adolescents in a one‐on‐one setting. Our research question asked whether GIA score, Associative Memory, Visual Processing, Auditory Processing, Logic and Reasoning, Processing Speed, Working Memory, Long Term Memory, and Attention differ significantly between those who complete cognitive training and those who do not. We investigated this program to address two gaps in the cognitive training literature: the effects of a multidimensional, one‐on‐one cognitive training program on multiple cognitive abilities, and the effects of such a program on GIA. Given the comprehensive nature of the intervention, we predicted that the treatment group would improve on all nine measures.

The results are consistent with the findings of an earlier quasi‐experimental study of the same program (Gibson et al., 2015) and extend the literature by including GIA and attention measures. Statistically significant between‐group differences were found on all three memory measures, on both auditory and visual processing, on processing speed, and on logic and reasoning. The change in GIA score also differed significantly between the two groups.

The positive effects of cognitive training on all three memory measures appear to reflect generalization beyond the trained tasks, because the training and testing tasks differ qualitatively. First, although Task 18 is an association task, it is also timed and includes a reverse‐sequence component. Second, unlike the WJ III associative memory test, Visual–Auditory Learning, the associative memory training sessions provide no visual prompt once the initial associations between pictures and concepts have been learned. Further, associative memory training is grounded in meaningful mnemonic learning of real‐world associations rather than in the arbitrary images presented in the testing task. Third, the WJ III test of working memory, Numbers Reversed, is an auditory backwards span task, whereas none of the 12 training procedures that target working memory uses a backwards span task. Notably, the backwards span task, which measures working memory capacity, is a powerful predictor of a student's ability to learn (Alloway & Copello, 2013). The generalization of working memory training effects to working memory capacity is therefore a vital gain.

Four of the same training procedures that target working memory also target long‐term memory. The WJ III test for long‐term memory is a delayed administration of the associative memory test, Visual–Auditory Learning‐Delayed. With the exception of Task 18 described above, there are no training tasks that use associative memory tasks to target the development of long‐term memory. Thus, the gains in associative, working, and long‐term memory are more likely a function of generalized improvement in memory abilities rather than task‐specific performance improvements.

The difference between groups on the measure of processing speed also suggests generalized improvement. Twelve training tasks target processing speed through speeded tasks, tasks using a metronome, visual search and span tasks, computation tasks, memory‐building procedures, and tasks requiring sustained attention. One of these, Task 5, uses attention and visual discrimination to identify patterns in large blocks of numbers or letters. At the more difficult levels, the participant must perform a set of operations on the items, such as circling one number, crossing out a different number, and drawing a triangle around a third number. Although this task is conceptually similar to the WJ III test of processing speed, Visual Matching, which requires the test‐taker to identify pairs of matching numbers on each line, its complexity engages multiple cognitive abilities and problem‐solving skills.

Between‐group differences in visual and auditory processing were also significant. The WJ III test used to measure transfer of visual processing training, Spatial Relations, engages the participant in solving puzzles through mental rotation of pieces printed on the test. In the ThinkRx training program, nine procedures target visual processing, three target visual discrimination, five target visual manipulation, and ten target visualization. Unlike the testing task, none of the training procedures requires mental rotation of shapes. For example, in Task 19, participants use tangrams to recreate visual patterns from memory, a task that also targets visual memory, logic and reasoning, and attention. In Task 17, participants visualize a path and draw the route with their eyes closed. Given these qualitative differences between the testing and training tasks, the gains in visual processing appear to reflect generalized improvement.

However, the difference between groups on auditory processing is probably best described as near transfer of the training effect. The WJ III testing task, Sound Blending, required participants to listen to individual sounds and specify the word the sounds make when blended together. In ThinkRx, there are six training tasks that target auditory processing. Because of the nature of the development of phonemic awareness, a primary way to learn sound blending is to practice blending sounds. Task 23 is the auditory processing training task that targets sound blending. Participants read the word, say the individual sounds, listen to a word, and say the individual sounds. Although the tasks seem similar, a key difference between the testing and training tasks is the use of nonsense words in training.

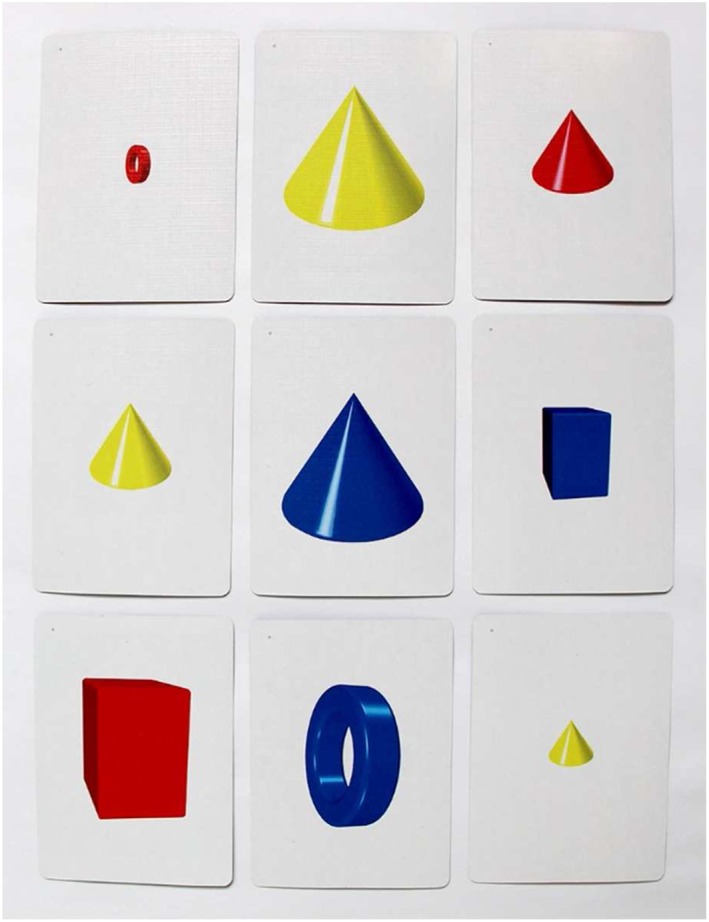

The significant difference between groups on logic and reasoning may be a function of task generalization. The WJ III test for logic and reasoning, Concept Formation, is an inductive reasoning task asking participants to derive a rule for each item in a stimulus set. There are five training tasks that target logic and reasoning, including Tasks 14 and 15, which target deductive reasoning, congruence, part–whole relations, and diagramming. For example, Task 14 uses a deck of 81 cards containing small, medium, and large cones, rings, and boxes with three positional variations and three colors. Participants must create sets of three based on shared characteristics of the items. One level of this task is presented in Figure 7.

Figure 7.

Example of a logic and reasoning training procedure. Participants must find a group of three cards in which each feature is either the same on all three cards or different on all three. In this example, the group consists of the three cards showing a vertical yellow cone: one small, one medium, and one large. All three cards share the same shape, color, and orientation, and each is a different size
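The rule in the Figure 7 caption can be stated precisely: three cards form a valid group when, for every feature, the three values are either all equal or all distinct. With four three‐valued features (size, shape, position, and color, per the Task 14 description), the deck has 3 × 3 × 3 × 3 = 81 cards, matching the deck size given in the text. The Python sketch below uses a hypothetical encoding (each feature value coded 0, 1, or 2) and hypothetical function names; it is not part of the ThinkRx materials.

```python
from itertools import combinations, product

FEATURES = ("size", "shape", "position", "color")  # per the Task 14 description

# Hypothetical coding: each feature takes one of three values, 0, 1, or 2.
DECK = list(product(range(3), repeat=len(FEATURES)))  # 3**4 = 81 cards


def is_valid_group(a, b, c):
    """True if every feature is all-same or all-different across the three cards."""
    # Exactly two distinct values means "two alike, one different", which is invalid.
    return all(len({x, y, z}) != 2 for x, y, z in zip(a, b, c))


def completing_card(a, b):
    """The unique card that forms a valid group with cards a and b."""
    # Values 0, 1, 2 sum to 0 mod 3, and three equal values also sum to 0 mod 3.
    return tuple((-(x + y)) % 3 for x, y in zip(a, b))


def count_valid_groups(deck):
    """Brute-force count of valid three-card groups in a deck."""
    return sum(1 for trio in combinations(deck, 3) if is_valid_group(*trio))


if __name__ == "__main__":
    # Figure 7 example (hypothetical codes): same shape, position, and color; three sizes.
    example = [(0, 0, 0, 0), (1, 0, 0, 0), (2, 0, 0, 0)]
    print(is_valid_group(*example))   # True
    print(count_valid_groups(DECK))   # 1080
```

Because any two cards determine exactly one completing card, the 81‐card deck contains 81 × 80 / 6 = 1,080 valid groups, which the brute‐force count reproduces.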

The between‐group difference in visual selective attention, as measured by the NIH Toolbox Flanker Test, was not statistically significant. One possible explanation is that an unrelated arrows task used during training of visual discrimination and selective attention confused treatment group participants during post‐testing. Alternatively, the result may reflect psychometric limitations of the measure. Although the NIH Toolbox Flanker Test was endorsed for use with ages 3–85 (Zelazo et al., 2013), its psychometric stability for ages 8–15 is not firmly established. A validation of the Flanker Test with a pediatric population found a convergent validity of just .34 with the D‐KEFS Inhibition test, along with significant practice effects from repeated testing (Zelazo et al., 2013). Further, Akshoomoff et al. (2014) found significant ceiling effects in older children in a large normative study of the cognition battery. Consequently, the NIH Toolbox Cognition Battery results do not support strong conclusions about the efficacy of the ThinkRx program on selective attention. Note, however, that the Numbers Reversed subtest of the WJ III is also a measure of broad attention, and the between‐group difference on this test was statistically significant.

The findings of the current study have applied implications. Cognitive training is applicable in both educational and clinical settings for remediating and strengthening the cognitive abilities necessary for learning. Because prior research found that trainers' educational and personality characteristics do not significantly influence training outcomes (Moore, 2015), the LearningRx Corporation trained eight new people to serve as trainers for the current study. New trainers can learn the program in 25 instructional hours, and the curriculum and materials for each participant fit in a backpack. This simple preparation and the portability of the materials suggest that the program can be delivered anywhere, including clinics, schools, afterschool programs, tutoring centers, and homes. Given that 40% of high school seniors are not academically prepared for college (U.S. Department of Education, 2013) and that 2.4 million American children were identified as learning disabled in 2014 (Cortiella & Horowitz, 2014), one‐on‐one cognitive training may be a viable option for addressing the multiple cognitive deficits associated with learning problems.

The present study has some limitations. First, the results do not include longitudinal data on the lasting effects of cognitive training. However, this was the first phase of a larger year‐long study in which researchers will collect follow‐up cognitive testing and academic achievement data. Next, some readers may be concerned that using a wait‐list control group rather than an active control group introduces the threat of expectancy effects. To mitigate this risk, participants were not told that there was a wait‐list control group; instead, they were told that they were being assigned to either a summer or a fall start for their training program. Further, prior research on expectancy effects in cognitive training studies suggests that this threat is minimal. Mahncke et al. (2006) tested for the effect by using two control groups and concluded that the lack of difference between them indicates no meaningful placebo effect in this type of study. Dunning et al. (2013) used a similar dual control group design and also concluded that experimental gains were unlikely to result from expectancy effects. Burki, Ludwig, Chicherio, and Ribaupierre (2014) reported comparable results, finding no significant differences in training outcomes between active controls and no‐contact controls. Finally, two recent meta‐analyses of 35 cognitive training studies found no difference in outcomes by type of control group. One found significant treatment group gains regardless of the type of control group (Au et al., 2015), and the other found that the type of control group did not significantly influence training effects (Peng & Miller, 2016).

A final limitation is that pretest group means on the logic and reasoning and processing speed measures were not homogeneous. We nonetheless retained these measures rather than dropping them, because MANOVA is robust to violation of the homogeneity of covariance assumption when group sizes are nearly equal (Tabachnick & Fidell, 2007; Warner, 2013), particularly when the significance value is not less than .001 (Field, 2009); both measures were also analyzed with ANCOVA, controlling for pretest scores.

In addition to gathering longitudinal data and functional outcomes, future research should incorporate neuroimaging data to assess how cognitive training affects neural connections between brain regions. Research with a larger sample and a like‐task comparison group might also be considered.

Conclusion

In summary, the results of the current study provide additional support for the efficacy of the ThinkRx cognitive training program in improving cognitive skills in children ages 8–14. There were significant generalized improvements in seven cognitive skills—associative memory, working memory, long‐term memory, visual and auditory processing, logic and reasoning, and processing speed—as well as in the GIA cluster score. These findings also support the use of CHC Theory in the design of cognitive training programs to ensure multiple cognitive skills are targeted in the training tasks. There is much work to be done in the field of cognitive training research, and this study offers an important contribution to the knowledge base on cognitive training effects in children.

Acknowledgements

The authors thank LearningRx Corporation for covering the costs of delivering the ThinkRx training program. Because training costs were covered, we were able to include students from all social strata, removing the need to treat socioeconomic status as a variable and increasing the generalizability of the findings. We also thank the children and families who participated in this research.

Carpenter, D. M., Ledbetter, C., and Moore, A. L. (2016) LearningRx Cognitive Training Effects in Children Ages 8–14: A Randomized Controlled Trial. Appl. Cognit. Psychol., 30: 815–826. doi: 10.1002/acp.3257.

References

- Akshoomoff, N., Newman, E., Thompson, W., McCabe, C., Bloss, C. S., Chang, L., … Jernigan, T. (2014). The NIH toolbox cognition battery: Results from a large normative developmental sample (PING). Neuropsychology, 28(1), 1–10. DOI:10.1037/neu0000001

- Alloway, T. P., & Copello, E. (2013). Working memory: The what, the why, and the how. The Australian Educational and Developmental Psychologist, 30(2), 105–118. DOI:10.1017/edp.2013.13

- Anastasi, A., & Urbina, S. (1997). Psychological testing (7th edn). Upper Saddle River, NJ: Prentice‐Hall.

- Au, J., Sheehan, E., Tsai, N., Duncan, G. J., Buschkuehl, M., & Jaeggi, S. M. (2015). Improving fluid intelligence with training on working memory: A meta‐analysis. Psychonomic Bulletin & Review, 22, 366–377. DOI:10.3758/s13423-014-0699-x

- Baddeley, A. D. (1992). Working memory. Science, 255(5044), 556–559.

- Barkl, S., Porter, A., & Ginns, P. (2012). Cognitive training for children: Effects on inductive reasoning, deductive reasoning, and mathematical achievement in an Australian school setting. Psychology in the Schools, 49(9), 828–842. DOI:10.1002/pits.21638

- Beck, S. J., Hanson, C. A., & Puffenberger, S. S. (2010). A controlled trial of working memory training for children and adolescents with ADHD. Journal of Clinical Child and Adolescent Psychology, 39(6), 825–836. DOI:10.1080/15374416.2010.517162

- Burki, C. N., Ludwig, C., Chicherio, C., & Ribaupierre, A. (2014). Individual differences in cognitive plasticity: An investigation of training curves in younger and older adults. Psychological Research, 78(6), 821–835. DOI:10.1007/s00426-014-0559-3

- Carroll, J. B. (1993). Human cognitive abilities: A survey of factor analytic studies. New York: Cambridge University Press.

- Chandola, T., Deary, I. J., Blane, D., & Batty, G. D. (2006). Childhood IQ in relation to obesity and weight gain in adult life: The National Child Development (1958) Study. International Journal of Obesity, 30(9), 1422–1432. DOI:10.1038/sj.ijo.0803279

- Chein, J. M., & Morrison, A. B. (2010). Expanding the mind's workspace: Training and transfer effects with a complex working memory span task. Psychonomic Bulletin & Review, 17(2), 193–199. DOI:10.3758/PBR.17.2.193

- Cornoldi, C., & Giofre, D. (2014). The crucial role of working memory in intellectual functioning. European Psychologist, 19(4), 260–268. DOI:10.1027/1016-9040-a000183

- Cortiella, C., & Horowitz, S. H. (2014). The state of learning disabilities: Facts, trends, and emerging issues. New York: National Center for Learning Disabilities. Retrieved November 1, 2015 from http://www.ncld.org/wp-content/uploads/2014/11/2014-State-of-LD.pdf

- Dunning, D. L., Holmes, J., & Gathercole, S. E. (2013). Does working memory training lead to generalized improvements in children with low working memory? A randomized controlled trial. Developmental Science, 16(6), 915–925. DOI:10.1111/desc.12068

- Feuerstein, R., Feuerstein, R. S., & Falik, L. H. (2010). Beyond smarter: Mediated learning and the brain's capacity for change. New York: Teacher's College Press.

- Field, A. (2009). Discovering statistics using SPSS (3rd edn). Thousand Oaks, CA: SAGE.

- Forrest, L. F., Hodgson, S., Parker, L., & Pearce, M. S. (2011). The influence of childhood IQ and education on social mobility in the Newcastle Thousand Families birth cohort. BMC Public Health, 11(1), 895–903. DOI:10.1186/1471-2458-11-895

- Freberg, M. M., Vandiver, B. J., Watkins, M. W., & Canivez, G. L. (2008). Significant factor score variability and the validity of the WISC‐III full scale IQ in predicting later academic achievement. Applied Neuropsychology, 15(2), 131–139. DOI:10.1080/09084280802084010

- Gibson, K., Carpenter, D., Moore, A. L., & Mitchell, T. (2015). Training the brain to learn: Augmenting vision therapy. Vision Development and Rehabilitation, 1(2), 119–128.

- Gibson, K., Hanson, K., & Mitchell, T. (2007). Unlock the Einstein inside: Applying new brain science to wake up the smart in your child. Colorado Springs, CO: LearningRx.

- Gray, S. A., Chaban, P., Martinussen, R., Goldberg, R., Gotlieb, H., Kronitz, R., … Tannock, R. (2012). Effects of a computerized working memory training program on working memory, attention, and academics in adolescents with severe LD and comorbid ADHD: A randomized controlled trial. Journal of Child Psychology and Psychiatry, 53(12), 1277–1284. DOI:10.1111/j.1469-7610.2012.02592.x

- Gunnell, D., Harbord, R., Singleton, N., Jenkins, R., & Lewis, G. (2009). Is low IQ associated with an increased risk of developing suicidal thoughts? Social Psychiatry and Psychiatric Epidemiology, 44(1), 34–38. DOI:10.1007/s00127-008-0404-3

- Harrison, T. L., Shipstead, Z., & Engle, R. (2015). Why is working memory capacity related to matrix reasoning tasks? Memory & Cognition, 43, 389–396. DOI:10.3758/s13421-014-0473-3

- Hayward, D., Das, J. P., & Janzen, T. (2007). Innovative programs for improvement in reading through cognitive enhancement: A remediation study of Canadian first nations children. Journal of Learning Disabilities, 40(5), 443–457.

- Holmes, J., & Gathercole, S. E. (2014). Taking working memory training from the laboratory into schools. Educational Psychology, 34(4), 440–450. DOI:10.1080/01443410.2013.797338

- Holmes, J., Gathercole, S. E., & Dunning, D. L. (2009). Adaptive training leads to sustained enhancement of poor working memory in children. Developmental Science, 12(4), 9–15. DOI:10.1111/j.1467-7687.2009.00848.x

- Holmes, J., Gathercole, S. E., Place, M., Dunning, D., Hilton, K., & Elliott, J. G. (2010). Working memory deficits can be overcome: Impacts of training and medication on working memory in children with ADHD. Applied Cognitive Psychology, 24, 827–836. DOI:10.1002/acp.1589

- Horn, J. L. (1991). Measurement of intellectual capabilities: A review of theory. In K. S. McGrew, J. K. Werder, & R. W. Woodcock (Eds.), Woodcock–Johnson technical manual (pp. 197–232). Chicago: Riverside.

- Hunter, J. E. (1986). Cognitive ability, cognitive aptitudes, job knowledge, and job performance. Journal of Vocational Behavior, 29(3), 340–362. DOI:10.1016/0001-8791(86)90013-8

- Jaeggi, S. M., Buschkuehl, M., Jonides, J., & Perrig, W. J. (2008). Improving fluid intelligence with training on working memory. Proceedings of the National Academy of Sciences, 105(19), 6829–6833. DOI:10.1073/pnas.0801268105

- Jaušovec, N., & Jaušovec, K. (2012). Working memory training: Improving intelligence—Changing brain activity. Brain & Cognition, 79(2), 96–106. DOI:10.1016/j.bandc.2012.02.007

- Jedlicka, E. J. (2012). The real‐life benefits of cognitive training (Doctoral dissertation). Retrieved from ProQuest Dissertations and Theses. (UMI No. 3519139)

- Klingberg, T., Fernell, E., Olesen, P. J., Johnson, M., Gustafsson, P., & Dahlstrom, K. (2005). Computerized training of working memory in children with ADHD—A randomized, controlled trial. Journal of the American Academy of Child and Adolescent Psychiatry, 44(2), 177–186. DOI:10.1097/00004583-200502000-00010

- Kozulin, A., Lebeer, J., Madella‐Noja, A., Gonzalez, F., Jeffrey, I., Rosenthal, N., & Koslowsky, M. (2010). Cognitive modifiability of children with developmental disabilities: A multicenter study using Feuerstein's Instrumental Enrichment‐Basic program. Research in Developmental Disabilities, 31(2), 551–559. DOI:10.1016/j.ridd.2009.12.001

- Loosli, S., Buschkuehl, M., Perrig, W. J., & Jaeggi, S. M. (2012). Working memory training improves reading processes in typically developing children. Child Neuropsychology, 18(1), 62–78. DOI:10.1080/09297049.2011.575772

- Luckey, A. J. (2009). Cognitive and academic gains as a result of cognitive training (Doctoral dissertation). Retrieved from ProQuest Dissertations and Theses. (UMI No. 3391981)

- Mackey, A., Hill, S., Stone, S., & Bunge, S. (2011). Differential effects of reasoning and speed training in children. Developmental Science, 14(3), 582–590. DOI:10.1111/j.1467-7687.2010.01005.x

- Maenner, M. J., Greenberg, J. S., & Mailick, M. R. (2015). Association between low IQ scores and early mortality in men and women: Evidence from a population‐based cohort study. American Journal on Intellectual and Developmental Disabilities, 120(3), 244–257, 270, 272.

- Mahncke, H. W., Connor, B. B., Appelman, J., Ahsanuddin, A. N., Hardy, J. L., Wood, R. A., … Merzenich, M. M. (2006). Memory enhancement in healthy older adults using a brain plasticity‐based training program: A randomized controlled study. Proceedings of the National Academy of Sciences, 103(33), 12523–12528. DOI:10.1073/pnas.0605194103

- Mansur‐Alves, M., & Flores‐Mendoza, C. (2015). Working memory training does not improve intelligence: Evidence from Brazilian children. Psicologia, Reflexão e Crítica, 28(3), 474–482. DOI:10.1590/1678-7153.201528306

- Mather, N., & Woodcock, R. (2001). Woodcock Johnson III Tests of Cognitive Abilities Examiner's manual: Standard and extended batteries. Itasca, IL: Riverside.

- Mathiassen, B., Brøndbo, P. H., Waterloo, K., Martinussen, M., Eriksen, M., Hanssen‐Bauer, K., & Kvernmo, S. (2012). IQ as a predictor of clinician‐rated mental health problems in children and adolescents. British Journal of Clinical Psychology, 51(2), 185–196. DOI:10.1111/j.2044-8260.2011.02023.x

- McGrew, K. (2005). The Cattell–Horn–Carroll theory of cognitive abilities. In D. P. Flanagan & P. L. Harrison (Eds.), Contemporary intellectual assessment: Theories, tests, and issues (pp. 151–179). New York: Guilford.

- McGrew, K. (2009). CHC theory and the human cognitive abilities project: Standing on the shoulders of the giants of psychometric intelligence research. Intelligence, 37, 1–10. DOI:10.1016/j.intell.2008.08.004

- McGrew, K., Schrank, F., & Woodcock, R. (2007). Technical manual. Woodcock–Johnson III normative update. Rolling Meadows, IL: Riverside Publishing.

- Melby‐Lervag, M., & Hulme, C. (2013). Is working memory training effective? A meta‐analytic review. Developmental Psychology, 49(2), 270–291. DOI:10.1037/a0028228

- Moore, A. (2015). Characteristics of cognitive trainers that predict outcomes for students with and without ADHD (Doctoral dissertation). Retrieved from ProQuest Dissertations and Theses. (UMI No. 3687613)

- Murray, C. (2002). IQ and income inequality in a sample of sibling pairs from advantaged family backgrounds. American Economic Review, 92(2), 339–343.

- Naglieri, J. A., & Ronning, M. (2000). The relationship between general ability using the NNAT and SAT reading achievement. Journal of Psychoeducational Assessment, 18, 230–239.

- Peng, P., & Miller, A. C. (2016). Does attention training work? A selective meta‐analysis to explore the effects of attention training and moderators. Learning and Individual Differences, 45, 77–87. DOI:10.1016/j.lindif.2015.11.012

- Pfister, B. E. (2013). The effect of cognitive rehabilitation therapy on memory and processing speed in adolescents (Doctoral dissertation). Retrieved from ProQuest Dissertations and Theses. (UMI No. 3553928)

- Press, L. J. (2012). Historical perspectives on auditory and visual processing. Journal of Behavioral Optometry, 23(4), 99–105.

- Rabiner, D. L., Murray, D. W., Skinner, A. T., & Malone, P. S. (2010). A randomized trial of two promising computer‐based interventions for students with attention difficulties. Journal of Abnormal Child Psychology, 38(1), 131–142. DOI:10.1007/s10802-009-9353-x

- Redick, T. S., Shipstead, Z., Harrison, T. L., Hicks, K. L., Fried, D. E., Hambrick, D. Z., & Engle, R. W. (2013). No evidence of intelligence improvement after working memory training: A randomized, placebo‐controlled study. Journal of Experimental Psychology: General, 142(2), 359–379. DOI:10.1037/a0029082

- Roughan, L., & Hadwin, J. A. (2011). The impact of working memory training in young people with social, emotional, and behavioural difficulties. Learning and Individual Differences, 21(6), 759–764. DOI:10.1016/j.lindif.2011.07.011

- Schneider, W. J., & McGrew, K. (2012). The Cattell–Horn–Carroll model of intelligence. In D. Flanagan & P. Harrison (Eds.), Contemporary intellectual assessment: Theories, tests, and issues (3rd edn, pp. 99–144). New York: Guilford.

- Shalev, L., Tsal, Y., & Mevorach, C. (2007). Computerized progressive attentional training (CPAT) program: Effective direct intervention for children with ADHD. Child Neuropsychology, 13(4), 382–388. DOI:10.1080/09297040600770787

- Sonuga‐Barke, E. J., Brandeis, D., Cortese, S., Daley, D., Ferrin, M., Holtmann, M., … Sergeant, J. (2013). Nonpharmacological interventions for ADHD: Systematic review and meta‐analysis of randomized controlled trials of dietary and psychological treatments. American Journal of Psychiatry, 170(3), 275–289.

- Sternberg, R. J. (2008). Increasing fluid intelligence is possible after all. Proceedings of the National Academy of Sciences, 105(19), 6791–6792. DOI:10.1073/pnas.0803396105

- Tabachnick, B., & Fidell, L. (2007). Using multivariate statistics (5th edn). Needham Heights, MA: Allyn & Bacon.

- Tamm, L., Epstein, J., Peugh, J., Nakonezny, P., & Hughes, C. (2013). Preliminary data suggesting the efficacy of attention training for school‐aged children with ADHD. Developmental Cognitive Neuroscience, 4, 16–28. DOI:10.1016/j.dcn.2012.11.004

- US Department of Education, Institute of Education Sciences, National Center for Education Statistics, National Assessment of Educational Progress. (2013). 2013 mathematics and reading: Grade 12 assessments. Retrieved November 1, 2015 from http://goo.gl/3UZ3tc

- Wainer, H. (1991). Adjusting for differential base‐rates: Lord's Paradox again. Psychological Bulletin, 109, 147–151.

- Warner, R. M. (2013). Applied statistics: From bivariate to multivariate techniques (2nd edn). Thousand Oaks, CA: SAGE.

- Wegrzyn, S. C., Hearrington, D., Martin, T., & Randolph, A. B. (2012). Brain games as a potential nonpharmaceutical alternative for the treatment of ADHD. Journal of Research on Technology in Education, 45(2), 107–130. Retrieved November 1, 2015 from International Society for Technology in Education website: http://www.iste.org/Store/Product?ID=2610

- Wiest, D. J., Wong, E. H., Minero, L. P., & Pumaccahua, T. T. (2014). Utilizing computerized cognitive training to improve working memory and encoding: Piloting a school‐based intervention. Education, 135(2), 264–270.

- Witt, M. (2011). School‐based working memory training: Preliminary finding of improvement in children's mathematical performance. Advances in Cognitive Psychology, 7, 7–15. DOI:10.2478/v10053-008-0083-3

- Woodcock, R. W., McGrew, K. S., & Mather, N. (2001). Woodcock‐Johnson III Tests of Cognitive Abilities. Rolling Meadows, IL: Riverside Publishing.

- Xin, Z., Lai, Z., Li, F., & Maes, J. (2014). Near and far transfer effects of working memory updating training in elderly adults. Applied Cognitive Psychology, 28, 403–408. DOI:10.1002/acp.3011

- Zelazo, P. D., Anderson, J. E., Richler, J., Wallner‐Allen, K., Beaumont, J. L., & Weintraub, S. (2013). II. NIH Toolbox Cognition Battery (CB): Measuring executive function and attention. Monographs of the Society for Research in Child Development, 78(4), 16–33. DOI:10.1111/mono.12032