Abstract

The Support Vector Regression (SVR) model has been broadly used for response prediction. However, few researchers have used SVR for survival analysis. In this study, a new SVR model is proposed and SVR with different kernels and the traditional Cox model are trained. The models are compared based on different performance measures. We also select the best subset of features using three feature selection methods: combination of SVR and statistical tests, univariate feature selection based on concordance index, and recursive feature elimination. The evaluations are performed using available medical datasets and also a Breast Cancer (BC) dataset consisting of 573 patients who visited the Oncology Clinic of Hamadan province in Iran. Results show that, for the BC dataset, survival time can be predicted more accurately by linear SVR than nonlinear SVR. Based on the three feature selection methods, metastasis status, progesterone receptor status, and human epidermal growth factor receptor 2 status are the best features associated to survival. Also, according to the obtained results, performance of linear and nonlinear kernels is comparable. The proposed SVR model performs similar to or slightly better than other models. Also, SVR performs similar to or better than Cox when all features are included in model.

1. Introduction

In the literature, different models have been proposed for survival prediction, such as Cox proportional hazard model and the accelerated failure-time model [1]. Although Cox proportional hazards model is the most popular survival model, its proportional hazards assumption may not always be realistic. Also, this model is applicable when the factors affect the outcome linearly. To overcome these issues, other models such as artificial neural networks and Support Vector Machines (SVM) are applied in the literature [2, 3].

The Support Vector Regression (SVR) model has been applied extensively for response prediction [4–6]. However, there are few studies that use SVM for survival analysis. The traditional SVM models require complete response values, whereas survival data usually include the censored response values. Different attempts have been made to extend the standard SVM model making it applicable in survival analysis. Shivaswamy et al. [7] proposed a modified SVR algorithm for survival analysis. Ding [8] discussed possible application of SVR for censored data. Van Belle et al. [3, 9] proposed a new SVR model using ranking and regression constraints. Another proposal regarding application of SVR in survival analysis was made by Khan and Bayer-Zubek [10]. The authors compared SVR with Cox for some clinical data sets. Van Belle et al. [11] proposed a new survival modeling technique based on least-squares SVR. Their proposed model was compared with different survival models on a clinical BC data set. Mahjub et al. evaluated and compared performance of different SVR models using data sets with different characteristics [12].

SVM does not include automatic feature selection. Feature selection methods for SVM are categorized into two types: filter and wrapper [13]. Wrapper methods are based on SVM, while filter methods are general and not specifically based on SVM.

When dealing with uncensored data, various studies use SVM for feature selection. Many of these studies use feature selection methods for SVM in classification problems [14–18]. For regression problems majority of available approaches propose filter methods [19, 20]. Few studies can be found which propose a wrapper feature selection method for regression [19, 21, 22]. Among these studies is the Recursive Feature Elimination (RFE) method proposed by Guyon et al. [23] for gene selection in cancer diagnosis. Camps-Valls et al. [24] used SVR for prediction and analysis of antisense oligonucleotide efficacy. They applied feature selection using three methods: correlation analysis, mutual information, and SVM-RFE. Becker et al. [18] developed some feature selection methods and used SVM-RFE as a reference model for comparison.

In survival analysis, different feature selection methods are applied in the literature [1, 25–30]. However, using SVR for identifying factors associated with response is rare and to the best of our knowledge, there is no previous study in which SVR is used for evaluating subsets of features. Liu et al. [31] applied SVR with L1 penalty for survival analysis on large datasets. They identified survival-associated prognosis factors for patients with prostate cancer. In costrast to current study, they directly used SVR weights to select the reduced features. Du and Dua [32] discussed the strength of SVR in BC prognosis. They used two feature selection methods neither of which are based on SVR.

The purpose of this study is two-fold. First, we predict the survival time for patients with BC using different SVR models including all available features. We propose a new SVR model using a two-sided loss function for censored data. Performance of Cox model is compared to SVR models by means of three performance measures.

Second, for feature selection, we assess the relationship between every feature and the predicted survival time using relevant statistical tests. We further employ univariate feature selection and the SVM-RFE method to select the best subset of features. Univariate feature selection based on concordance index is proposed in current study. To the best of our knowledge, in the field of survival analysis, there are no previous studies that employ SVM-based feature selection methods. In this paper, all employed feature selection methods are based on SVR.

2. Materials and Methods

In this section, first the BC dataset and two available datasets are introduced. Then, different SVR models, Cox regression for censored data, and three feature selection methods are described.

2.1. Dataset Description

From April 2004 to April 2011, a historical cohort study was performed on 573 patients with BC, who visited the Oncology Clinic in Institute of Diagnostic and Therapeutic Center orphanage of Hamadan province, in west of Iran. Inclusion criteria were as follows: (i) the patient must be woman, (ii) treated with chemotherapy and radiotherapy, (iii) has undergone one of the related surgery types: breast conservative surgery (lumpectomy), simple mastectomy, radical mastectomy, and partial removal of breast tissue (a quarter or less of the breast) (segmental mastectomy). 31 patients are not eligible and are eliminated from the study. 11 features are selected as independent variables. Table 1 displays the patients' characteristics. Survival time is defined as the time period from diagnosis to patient death. The patients who survived during the entire study period are considered as right censored. During our study, 201 (37.1%) cancer patients died and 341 (62.9%) patients survived.

Table 1.

Comparison of characteristics of dead and alive patients in the BC dataset, and the relation between the features and the estimated prognostic based on standard linear SVR.

| Independent variables | Category | Characteristics of patients in dataset | Estimated prognostic index in SVR model | |||||

|---|---|---|---|---|---|---|---|---|

| Alive | Dead | p value | ||||||

| Count | Mean (SD) | Count | Mean (SD) | Pearson correlation (with prognostic index) | ||||

| Age of diagnosis | 341 | 45.33 (10.26) | 201 | 47.30 (11.64) | −0.22 | 0.001∗∗ | ||

| Count | Percent | Count | Percent | Mean prognostic index | SD prognostic index | |||

| Tumor size (cm) | ≤2 | 230 | 70.12 | 98 | 29.88 | −15.98 | 12.18 | 0.313 |

| 2–5 | 103 | 56.28 | 80 | 43.72 | −16.47 | 12.59 | ||

| >5 | 8 | 25.80 | 23 | 74.2 | −23.18 | 8.12 | ||

| Involved lymph nodes (n) | <4 | 198 | 69.72 | 86 | 30.28 | −13.44 | 11.87 | <0.001∗∗∗ |

| ≥4 | 103 | 62.05 | 63 | 37.95 | −21.14 | 11.32 | ||

| Unknown | 40 | 43.48 | 52 | 56.52 | ||||

| Marital status | Married | 326 | 62.69 | 194 | 37.31 | −16.20 | 12.21 | 0.354 |

| Single | 15 | 68.18 | 7 | 31.82 | −20.29 | 12.93 | ||

| Family history | Yes | 300 | 62.11 | 183 | 37.89 | −16.46 | 7.12 | 0.418 |

| No | 41 | 69.49 | 18 | 30.51 | −14.94 | 12.58 | ||

| Type of surgery | Lumpectomy | 18 | 64.24 | 10 | 35.76 | −13.34 | 9.19 | 0.031∗ |

| Radical mastectomy | 250 | 61.27 | 158 | 38.73 | −15.47 | 12.13 | ||

| Segmental mastectomy | 26 | 63.41 | 15 | 36.59 | −18.64 | 15.79 | ||

| simple mastectomy | 47 | 72.30 | 18 | 27.70 | −22.62 | 9.75 | ||

| Histological type | Ductal | 301 | 62.57 | 180 | 37.43 | −17.18 | 12.26 | 0.022∗ |

| Lobular | 21 | 61.76 | 13 | 38.24 | −10.63 | 10.69 | ||

| Medullar | 19 | 70.37 | 8 | 29.63 | −10.76 | 10.81 | ||

| Metastases status | Present | 51 | 36.42 | 89 | 63.58 | −34.32 | 10.82 | <0.001∗∗∗ |

| Absent | 290 | 72.13 | 112 | 27.87 | −12.83 | 8.99 | ||

| HER2 | Positive | 110 | 65.47 | 58 | 34.53 | −20.89 | 11.62 | <0.001∗∗∗ |

| Negative | 84 | 60.00 | 56 | 40.00 | −10.02 | 10.09 | ||

| Unknown | 147 | 62.82 | 87 | 37.18 | ||||

| ER status | Positive | 106 | 63.85 | 60 | 36.15 | −17.54 | 12.35 | 0.148 |

| Negative | 126 | 68.10 | 59 | 31.90 | −15.24 | 12.06 | ||

| Unknown | 109 | 57.06 | 82 | 42.94 | ||||

| PR status | Positive | 85 | 59.44 | 58 | 40.56 | −20.39 | 11.57 | <0.001∗∗∗ |

| Negative | 142 | 71.71 | 56 | 28.29 | −13.37 | 11.88 | ||

| Unknown | 114 | 56.71 | 87 | 43.29 | ||||

∗ p value < 0.05, ∗∗ p value < 0.01, ∗∗∗ p value < 0.001.

Two publicly available datasets (data are available at https://vincentarelbundock.github.io/Rdatasets/datasets.html) are also used for further analysis. The first data set was derived from one of the trials of adjuvant chemotherapy for colon cancer [33]. The dataset includes data of 929 patients. In this experiment (CD), the event under study is recurrence. The variables are as follows: type of treatment, sex, age, time from surgery to registration, extent of local spread, differentiation of tumor, number of lymph nodes, adherence to nearby organs, perforation of colon, and obstruction of colon by tumor. The second data set was obtained from Mayo Clinic trial in primary biliary cirrhosis of the liver [34]. The data set includes 312 randomized participants. There are two events for each person. In a first experiment (PT), the event is transplantation and in a second one (PD) the event is death. There are 17 variables which are as follows: stage, treatment, alkaline phosphatase, edema, age, sex, albumin, bilirubin, cholesterol, aspartate aminotransferase, triglycerides, urine copper, presence of hepatomegaly or enlarged liver, platelet count, standardized blood clotting time, presence of ascites, and blood vessel malformations in the skin.

Some artificial experiments were also conducted to evaluate the effect of number of features included in the model. These experiments are generated with different number of features: 10,20,…, 120. 50 datasets with 200 training and 500 test observations are generated for each feature value. Features values are simulated from a standard normal distribution. Similar methods of dataset generation have been previously applied in the literature [9]. The event time is exponentially distributed with a mean equal to w T x, where x is the feature vector and w is the weight vector which is zero for half of features and is generated from a standard normal distribution for the rest of the features [31, 35, 36]. The censoring time is also exponentially distributed with a mean equal to cw T x, where c determines the censoring rate. Other studies applied similar methods of dataset simulation [35, 36].

2.2. Standard SVR for Censored Data (Model 1)

Throughout the current text, x i denotes a d-dimensional independent variable vector, y i is survival time, and δ i is the censorship status. If an event occurs δ i is 1, and if the observation is right censored δ i is 0. In standard SVR, the prognostic index, that is the model prediction, is formulated as

| (1) |

where w T φ(x) denotes a linear combination of a transformation of the variables φ(x) and b is a constant. To estimate the prediction, SVR is formulated as an optimization problem and loss function is minimized subject to some constraints [9]. Shivaswamy et al. [7] extended the SVR model for censored data by modifying the constraints of standard SVR. In this paper, the standard SVR model for censored data is named model 1 and formulated as follows.

Description of the Formulas Used in SVR Models

-

Model 1

(2) -

Model 2

(3) -

SVR-MRL

(4)

where γ and μ are positive regularization constants, ϵ i, ϵ i ∗, and ξ i are slack variables, and n is sample size.

The prognostic index for a new point x ∗ is computed as

| (5) |

where α i and α i ∗ indicate the Lagrange multipliers. φ(x i)T φ(x j) can be formulated as a positive definite kernel:

| (6) |

Commonly used kernels are linear, polynomial, and RBF [9]. Recently, an additive kernel has been used for clinical data defined as k(x, z) = ∑p=1 d k p(x p, z p) in which k p(·, ·) is separately defined for continuous and categorical variables [9, 37].

2.3. Survival-SVR Using Ranking and Regression Constraints (Model 2)

The SVR model proposed by Van Belle et al. [3, 9] which includes both ranking and regression constraints is called model 2 in this paper and is detailed in “Description of the Formulas Used in SVR Models”. Recall that the previous model (model 1) includes only regression constraints. In model 2, comparable pairs of observations are identified. A data pair is defined to be comparable whenever the order of their event times is known. Data in a pair are comparable if both of them are uncensored, or only one is censored with the censoring time being later than the event time. The number of comparisons is reduced by comparing each observation i with its comparable neighbor that has the largest survival time smaller than y i, which is indicated with y j(i) [9].

2.4. A New SVR Model Using Mean Residual Lifetime

The survival SVR models, discussed in previous subsections, uses a one-sided loss function for errors arising from prediction of censored observations. We propose a new SVR model which uses a two-sided loss function. This model assumes that the event time for a censored observation is equal to sum of its censoring time and mean residual lifetime (MRL). For individuals of age y, MRL measures their expected remaining lifetime using the following formula [1]:

| (7) |

where S(y) is the survival function. Kaplan–Meier estimator is a standard estimator of the survival function which is used in the current study. Suppose the ith individual is censored and y i shows her/his censoring time. Therefore, it is only known that she/he is alive until y i. Since MRLi measures her/his expected remaining lifetime from y i onwards, event time for this individual can be estimated using sum of censoring time and MRL. Thus, the model is also penalized for censored observations when they are predicted to be greater than this sum. In this paper, this model is called SSVR-MRL and is formulated in “Description of the Formulas Used in SVR Models”.

The parameters of SVR models described in this subsection and previous subsections are tuned using the three-fold cross-validation criterion. We compare different SVR models with the Cox model [1].

The different survival models are compared using three performance measures: concordance index (c-index) [3, 7, 9], log rank test χ 2 statistic [3, 9, 37], and hazard ratio [3, 9, 10].

Standard SVR (model 1) and SVR with ranking and regression constraints (model 2) based on different kernels are trained on all datasets. In each experiment 2/3 of the dataset is used as the training set and the rest is used as the test set. Each experiment is repeated 100 times with random division of training and testing subsets. The training set is used for estimating different models. The performance of models is evaluated based on the test data.

2.5. Feature Selection

The first feature selection method proposed in current study is not a feature subset selection method. Considering that the prognostic index in SVR regression is directly correlated to survival time the prognostic index is calculated for all patients and the relationship between this index and each feature is assessed using a relevant statistical test.

The second feature selection method used in this study is univariate feature selection, which employs a univariate standard linear SVR for evaluating the relationship between each feature and survival time. After implementing experiments for all features, they are arranged in increasing order of c-index. Then, we pick out the p top ranked variables and include them in a linear standard SVR model. In the BC dataset, to select the best value of p, both SVR and Cox models are fitted for every value of p (1 − 11), and a subset with highest performance is selected.

The SVM-RFE algorithm, proposed by Guyon et al. [23], is also used for feature selection. This method identifies a subset with size p among m features (p < m) which maximizes the performance measures of model. In this algorithm, the square of feature weight values in linear SVR was used as the ranking criterion [13, 23].

3. Results

All reported performance measures are obtained using the median performance on 100 randomizations between training and test sets for prediction and 50 randomizations for feature selection. All SVR models are implemented in Matlab using the Mosek optimization toolbox for Matlab and Yalmip toolbox. Also we use “R”, Version 3.1.2, for running the Cox model and calculating some performance measures.

3.1. Prediction of Survival

All measures for the BC dataset indicated that SVR with linear kernel outperformed SVR with nonlinear kernels. The linear SVR models outperformed other models. Using the Wilcoxon rank sum test, statistically significant differences between linear SVR (model 1) and other models are indicated in Table 2. SVR-MRL slightly outperformed linear SVR (model 1), but differences of performances between the two models were not significant.

Table 2.

Performance measures of Cox and SVR models using different kernels and datasets when all features are included in the model. Statistical significant differences between SVR based model 1 (indicated in >italic) and the other models are indicated based on the Wilcoxon rank sum test.

| Dataset | Model | Type of kernel | c-index | Log rank test statistic | Hazard ratio |

|---|---|---|---|---|---|

| BC | Cox model | — | 0.61 ± 0.03 | 1.38 ± 1.10 | 1.22 ± 0.11∗∗ |

| SVR based model 1 | Linear | 0.61 ± 0.03 | 1.85 ± 1.48 | 1.33 ± 0.14 | |

| SVR-MRL based model 1 | Linear | 0.62 ± 0.03 | 1.95 ± 1.44 | 1.33 ± 0.15 | |

| SVR based model 2 | Linear | 0.60 ± 0.03 | 1.76 ± 1.33 | 1.31 ± 0.14 | |

| SVR based model 1 | RBF | 0.56 ± 0.03∗∗∗ | 0.52 ± 0.46∗∗∗ | 1.13 ± 0.07∗∗∗ | |

| SVR based model 2 | RBF | 0.54 ± 0.05∗∗∗ | 0.64 ± 0.64∗∗∗ | 1.14 ± 0.11∗∗∗ | |

| SVR based model 1 | Polynomial | 0.59 ± 0.04∗ | 1.56 ± 1.56 | 1.23 ± 0.17∗∗ | |

| SVR based model 2 | Polynomial | 0.57 ± 0.08∗∗∗ | 0.88 ± 0.88∗∗∗ | 1.19 ± 0.23∗∗∗ | |

| SVR based model 1 | Clinical | 0.60 ± 0.02∗ | 1.47 ± 1.11 | 1.26 ± 0.14 | |

| SVR based model 2 | Clinical | 0.60 ± 0.04∗ | 1.14 ± 0.97∗∗ | 1.27 ± 0.14 | |

| CD | Cox model | — | 0.64 ± 0.01 | 20.81 ± 5.33 | 1.53 ± 0.07 |

| SVR based model 1 | Linear | 0.64 ± 0.01 | 21.59 ± 5.53 | 1.49 ± 0.07 | |

| SVR based model 2 | Linear | 0.64 ± 0.01 | 22.17 ± 6.41 | 1.51 ± 0.07 | |

| SVR-MRL based model 1 | Linear | 0.66 ± 0.01 | 22.60 ± 5.51 | 1.50 ± 0.09 | |

| SVR based model 1 | RBF | 0.61 ± 0.01∗∗∗ | 12.13 ± 3.49∗∗∗ | 1.46 ± 0.08∗∗ | |

| SVR based model 2 | RBF | 0.61 ± 0.01∗∗∗ | 11.98 ± 3.98∗∗∗ | 1.43 ± 0.07∗∗∗ | |

| SVR based model 1 | Polynomial | 0.63 ± 0.01∗ | 18.94 ± 7.05∗ | 1.56 ± 0.11 | |

| SVR based model 2 | Polynomial | 0.63 ± 0.02∗∗ | 14.72 ± 9.65∗∗ | 1.54 ± 0.17 | |

| SVR based model 1 | Clinical | 0.65 ± 0.01 | 22.63 ± 4.80 | 1.63 ± 0.07 | |

| SVR based model 2 | Clinical | 0.65 ± 0.01 | 22.28 ± 5.12 | 1.65 ± 0.07 | |

| PT | Cox model | — | 0.70 ± 0.05∗∗ | 2.13 ± 1.50∗∗ | 1.41 ± 0.24∗∗∗ |

| SVR based model 1 | Linear | 0.74 ± 0.06 | 3.18 ± 2.24 | 2.08 ± 0.49 | |

| SVR based model 2 | Linear | 0.74 ± 0.06 | 3.14 ± 1.92 | 2.14 ± 0.48 | |

| SVR-MRL based model 1 | Linear | 0.76 ± 0.06 | 3.91 ± 2.40 | 2.12 ± 0.49 | |

| SVR based model 1 | RBF | 0.68 ± 0.06∗∗∗ | 0.81 ± 0.75∗∗∗ | 1.45 ± 0.30∗∗∗ | |

| SVR based model 2 | RBF | 0.67 ± 0.05∗∗∗ | 0.84 ± 0.74∗∗∗ | 1.45 ± 0.28∗∗∗ | |

| SVR based model 1 | Polynomial | 0.74 ± 0.05 | 3.69 ± 2.03 | 2.15 ± 0.52 | |

| SVR based model 2 | Polynomial | 0.76 ± 0.06 | 3.76 ± 2.32 | 2.35 ± 0.49 | |

| SVR based model 1 | Clinical | 0.71 ± 0.07∗∗ | 1.85 ± 1.67∗∗ | 1.83 ± 0.46∗∗ | |

| SVR based model 2 | Clinical | 0.70 ± 0.07∗∗ | 1.60 ± 1.42∗∗∗ | 1.83 ± 0.48∗∗ | |

| PD | Cox model | — | 0.82 ± 0.02∗∗∗ | 23.11 ± 5.24∗∗ | 2.67 ± 0.31∗∗∗ |

| SVR based model 1 | Linear | 0.84 ± 0.01 | 26.31 ± 5.19 | 3.12 ± 0.55 | |

| SVR based model 2 | Linear | 0.83 ± 0.01 | 27.40 ± 5.59 | 3.07 ± 0.54 | |

| SVR-MRL based model 1 | Linear | 0.84 ± 0.01 | 26.09 ± 5.82 | 3.14 ± 0.52 | |

| SVR based model 1 | RBF | 0.84 ± 0.02 | 27.93 ± 4.46 | 3.02 ± 0.55 | |

| SVR based model 2 | RBF | 0.84 ± 0.02 | 28.51 ± 4.61 | 3.01 ± 0.59 | |

| SVR based model 1 | Polynomial | 0.84 ± 0.02 | 26.58 ± 4.52 | 3.02 ± 0.52 | |

| SVR based model 2 | Polynomial | 0.84 ± 0.02 | 26.61 ± 4.85 | 3.12 ± 0.49 | |

| SVR based model 1 | Clinical | 0.83 ± 0.01∗∗ | 23.92 ± 4.80∗ | 3.21 ± 0.56 | |

| SVR based model 2 | Clinical | 0.83 ± 0.01∗∗ | 25.11 ± 5.23∗ | 3.14 ± 0.54 |

∗ p value < 0.05, ∗∗ p value < 0.01, ∗∗∗ p value < 0.001 (Wilcoxon rank sum test).

As the linear standard SVR model significantly outperformed other models, the prognostic indices were obtained based on this model. By comparing the mean value of prognostic index of subgroups of variables, the following results were obtained (Table 1). The survival time decreased as the age increased. The patients who developed metastasis survived less than other patients. The mean of prognostic index in subgroup with smaller tumor size was higher than the mean of subgroup with larger tumor size. The relationship between other variables and survival time can be evaluated similarly.

The results of CD experiment showed that SVR-MRL and SVR with clinical kernel outperformed other models but the difference between their performances and linear SVR (model 1) was not significant. In the PD experiment, SVR-MRL and SVR with polynomial kernel slightly outperformed other models but their performance differences with Linear SVR were not significant. Linear SVR significantly outperformed Cox. In the PT experiment, performances of all models were almost similar except that Cox and SVR with clinical kernel performed significantly worse than linear SVR.

3.2. Feature Selection

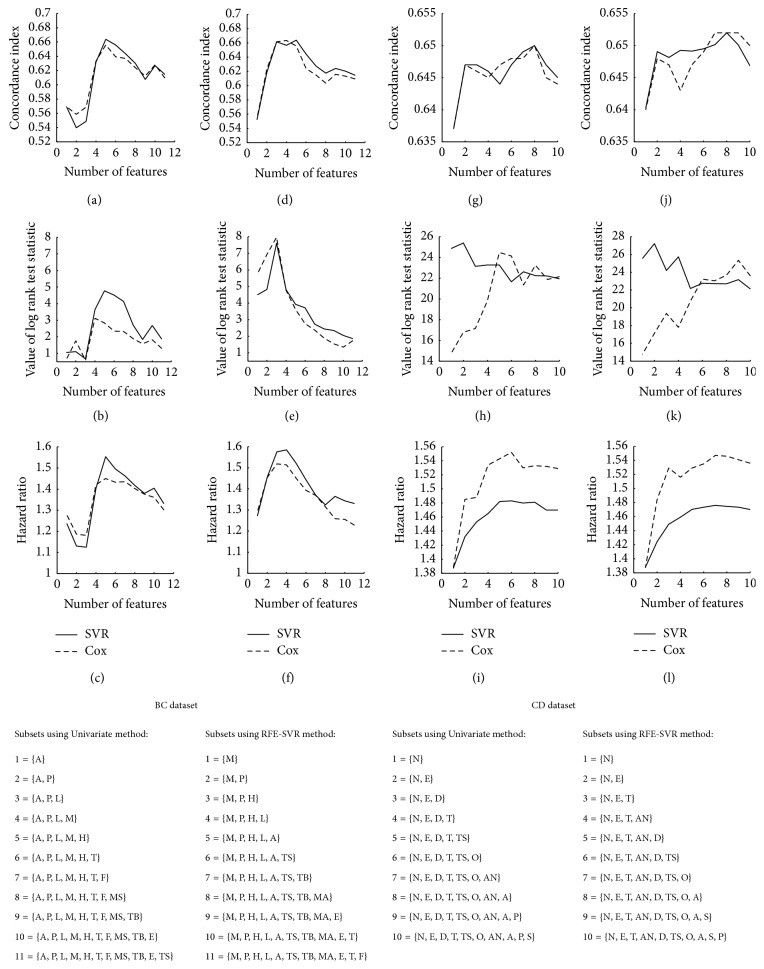

In the BC dataset, based on the first feature selection method, the relationship between the prognostic index and each feature was evaluated and p values of the tests are presented in Table 1. Seven variables were significant (p value < 0.05). For univariate feature selection, Figure 1 indicates that the subset with five features has the best performance. In SVR-RFE method, eleven replications were implemented for finding all subset of features. According to the performance measures of subsets, the subset with three features was selected as the best. In this figure, it is clearly shown that when the model involves large number of features, SVR outperforms Cox.

Figure 1.

Performance measures of standard linear SVR and Cox for different subsets of features. Horizontal axis shows the number of features included in the model. (a), (b), and (c): performance measures of univariate method for BC dataset. (d), (e), and (f): performance measures of RFE for BC dataset. (g), (h), and (i): performance measures of univariate method for CD dataset. (j), (k), and (l): performance measures of RFE for CD dataset. BC dataset's abbreviations: M: metastasis, P: PR status, H: HER2, L: number of involved lymph nodes, A: age, T: tumor size, TB: histological type of BC, MS: marital status, E: ER status, TS: type of surgery, and F: family history. CD dataset's abbreviations: S: sex, P: perforation of colon, A: age, AN: adherence to nearby organs, O: obstruction of colon by tumor, TS: time from surgery to registration, T: treatment, D: differentiation of tumor, E: extent of local spread, and N: number of lymph nodes.

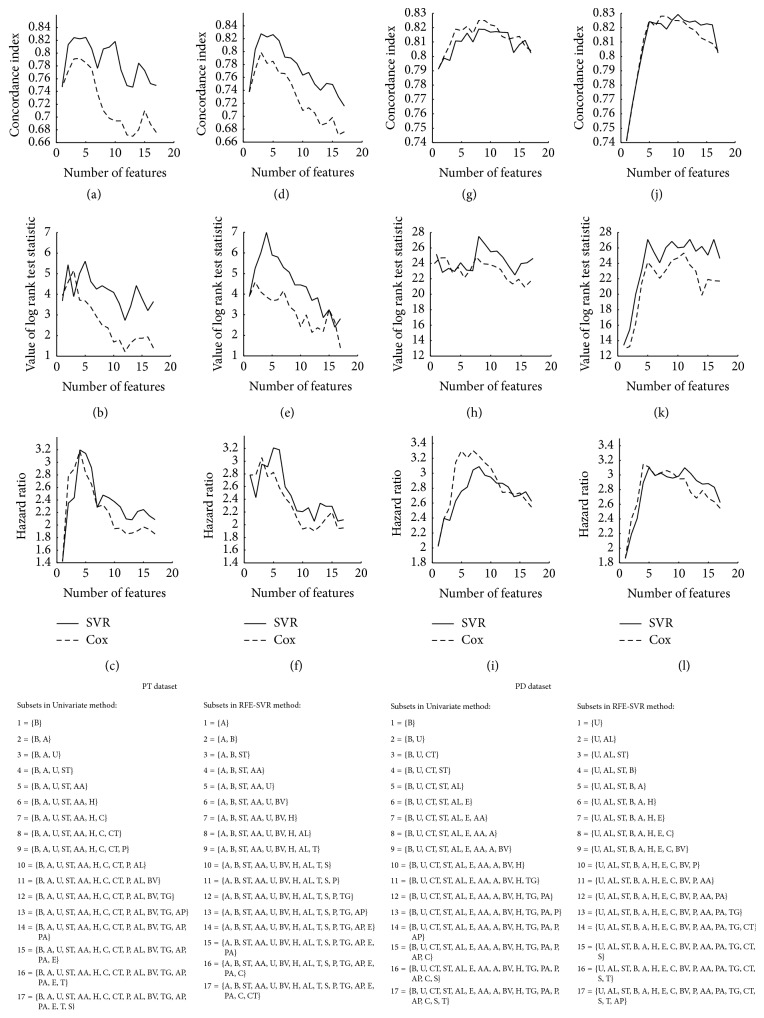

In the CD experiment, RFE and univariate methods selected subsets with six and five features respectively, which had five common features (Figure 1). In PT experiment, univariate method selected the subset of five features and RFE method selected the subset of three features among which two features were similar (Figure 2). In the PD experiment, univariate method selected the subset with eight features. RFE selected a subset with five features all of which were observed in the subset selected by RFE. However, in these experiments, the best subset may be not unified. The first feature selection method found most features as significantly associated with the survival time. The selected features had noticeable overlap with two other methods.

Figure 2.

Performance measures of linear SVR and Cox for different subsets of features. (a), (b), and (c): performance measures of univariate method for PT dataset. (d), (e), and (f): performance measures of RFE for PT dataset. (g), (h), and (i): performance measures of univariate method for PD dataset. (j), (k), and (l): performance measures of RFE for PD dataset. B: bilirubin, A: age, U: urine copper, ST: stage, AA: aspartate aminotransferase, H: presence of hepatomegaly, C: cholesterol, CT: standardized blood clotting time, P: platelet count, AL: albumin, BV: blood vessel malformations in the skin, TG: triglycerides, AP: alkaline phosphatase, PA: presence of ascites, E: edema, T: treatment, and S: sex.

It seemed that in subsets with large number of features, SVR outperformed Cox. The results of simulated datasets also indicated similar results so that when the number of features was more than 60, SVR significantly outperformed Cox (Table 3).

Table 3.

Performance measures of SVR and Cox models for simulated experiments with different number of features.

| Number of features | Model | c-index | Log rank test statistic | Hazard ratio |

|---|---|---|---|---|

| 10 | SVR | 0.562 ± 0.015 | 12.281 ± 4.955 | 1.240 ± 0.063 |

| Cox | 0.560 ± 0.013 | 10.662 ± 5.234 | 1.245 ± 0.060 | |

| 20 | SVR | 0.552 ± 0.013 | 8.589 ± 5.557 | 1.203 ± 0.048 |

| Cox | 0.552 ± 0.013 | 9.289 ± 5.990 | 1.194 ± 0.054 | |

| 30 | SVR | 0.540 ± 0.010 | 5.070 ± 2.275 | 1.156 ± 0.042 |

| Cox | 0.541 ± 0.011 | 5.510 ± 3.907 | 1.154 ± 0.041 | |

| 40 | SVR | 0.535 ± 0.011 | 4.900 ± 2.907 | 1.117 ± 0.029 |

| Cox | 0.538 ± 0.011 | 4.367 ± 2.573 | 1.125 ± 0.035 | |

| 50 | SVR | 0.540 ± 0.014 | 5.234 ± 3.557 | 1.136 ± 0.054 |

| Cox | 0.537 ± 0.013 | 3.874 ± 2.681 | 1.120 ± 0.047 | |

| 60 | SVR | 0.532 ± 0.012 | 3.474 ± 2.748 | 1.105 ± 0.039 |

| Cox | 0.528 ± 0.015 | 3.723 ± 2.662 | 1.112 ± 0.052 | |

| 70 | SVR | 0.535 ± 0.012 | 4.134 ± 2.304 | 1.123 ± 0.036 |

| Cox | 0.528 ± 0.016 | 2.774 ± 1.925∗ | 1.096 ± 0.044∗ | |

| 80 | SVR | 0.530 ± 0.010 | 3.171 ± 2.597 | 1.120 ± 0.036 |

| Cox | 0.526 ± 0.010∗ | 1.687 ± 1.323 | 1.079 ± 0.041∗∗ | |

| 90 | SVR | 0.526 ± 0.013 | 2.846 ± 2.219 | 1.098 ± 0.030 |

| Cox | 0.521 ± 0.012∗ | 1.720 ± 1.492∗ | 1.070 ± 0.041∗∗ | |

| 100 | SVR | 0.524 ± 0.012 | 1.594 ± 1.386 | 1.085 ± 0.045 |

| Cox | 0.517 ± 0.014∗∗ | 1.226 ± 1.033 | 1.054 ± 0.030∗ | |

| 110 | SVR | 0.535 ± 0.012 | 3.738 ± 3.335 | 1.101 ± 0.048 |

| Cox | 0.515 ± 0.008∗∗∗ | 0.870 ± 0.800∗∗∗ | 1.057 ± 0.024∗∗∗ | |

| 120 | SVR | 0.527 ± 0.012 | 1.863 ± 1.581 | 1.091 ± 0.035 |

| Cox | 0.515 ± 0.015∗∗ | 1.092 ± 0.964∗ | 1.058 ± 0.033∗∗ |

∗ p value < 0.05, ∗∗ p value < 0.01, ∗∗∗ p value < 0.001 (Wilcoxon rank sum test).

4. Discussion

The results indicated that, in the BC dataset, linear SVR models outperformed SVR with nonlinear kernel. For other datasets, linear SVR and SVR with nonlinear kernels were comparable. The SVR-MRL model performed similar to or slightly better than other models. This model is based on additivity assumption. The results of this study indicated that SVR model performed similar to or better than Cox when all features were included in model. When feature selection was used, the performance of SVR and Cox was comparable. Although Cox model is based on proportional hazards assumption, an extension of the model was proposed for time-dependent variables when this assumption is not satisfied [1, 38, 39]. However, the additivity of the proposed MRL-method is also a restriction.

Using BC, PT, and PD datasets, the results showed that when the number of included features in model was large, SVR outperformed the Cox model. The results of simulated datasets also indicated that, in models with large number of features, SVR significantly outperformed the Cox model. Du and Dua [32], also using a breast cancer dataset, indicated that SVR performs better than Cox on initial dataset and performs similar to Cox when feature selection is conducted. Van Belle et al. [3, 9] also indicated that the SVR model outperforms the Cox model for high-dimensional data, while for clinical data the models have similar performance.

The experiments indicated that the difference between the simple standard SVR model (model 1) and the complicated SVR model (model 2) was not significant. Using five clinical data sets, Van Belle et al. [9] also found that the differences between two models are not significant.

The results of BC dataset indicated that feature selection methods selected subsets of features with different sizes. However, they were subsets of each other. The three features selected by all methods were as follows: metastasis status, progesterone receptor (PR) status, and human epidermal growth factor receptor 2 (HER2) status. The results indicated that the patients that developed metastasis had shorter life time compared to patients who did not develop metastasis. Some other studies also indicated similar results [25, 27, 40, 41]. Also the results indicated patients with negative HER2 status survived longer than patients with positive HER2 status, which is consistent with results of some previous studies [40, 41]. In addition, the present study yielded that patients with positive PR status had less survival time than the patients with negative PR status. Some other studies indicated contrary result [40, 41] which may be due to the missing values for this variable. However, Faradmal et al. [28] presented similar result for the PR status.

There are other techniques for feature selection. Some studies used statistical characteristics such as correlation coefficients or Fisher score for feature ranking [15, 24, 32]. These criteria are simple, neither being based on training algorithm. In the current study, square of feature weight is used as the ranking criterion which is based on SVR. This criterion was previously applied in other studies [13, 23]. Chang and Lin [15] also ranked each feature by considering how the performance (accuracy) is affected without that. Their method is more time-consuming compared to the feature ranking method used in our study. Also, they found that feature ranking using weights of linear SVR outperforms the feature expelling method in terms of the final model performance. There are some techniques which ranked features based on their influence on the decision hyperplane and were able to also use nonlinear kernels for feature selection [14, 16].

In this study, subset selection methods were used to assess subsets of variables according to their performance in the SVR model. These methods are often criticized due to requiring massive amounts of computation. However, they are robust against overfitting [42]. Also, the better performance of SVM-RFE for feature selection compared with correlation coefficient and mutual information was shown in [23, 24].

Some feature selection methods incorporate variable selection as part of the training using a penalty function [18, 31]. Becker et al. [18] proposed a combination of SCAD and ridge penalties and found it being a robust tool for feature selection. Most of the mentioned techniques were applied for classification problems. It is suggested that, for future studies, these techniques are extended to survival regression models and different feature selection methods are used, evaluated, and compared.

To the best of our knowledge, there is no previous study employing feature selection based on SVR for survival analysis. The univariate method used in this study is a performance-based method which has the advantage of being applicable to all training algorithms. As opposed to this method, SVR-RFE takes into account possible correlation between the variables. For the BC dataset, SVR-RFE method yielded 11 subsets of features one of which was exactly the same as the subset obtained by the first method used in this study. RFE-SVR is an iterative procedure which requires much time for feature selection but the proposed feature selection method is trained once and the results can simultaneously be used for two purposes, prediction, and feature selection. SVR models have some limitations, as well. They require more time for tuning the parameters of the model and the required time for analysis increases with increasing the number of parameters of the model.

5. Conclusion

The results for BC data indicated that SVR with linear kernel outperformed SVR with nonlinear kernels. For other datasets, linear SVR and SVR with nonlinear kernels were comparable. Performance of the model improved slightly but not significantly by applying a two-sided loss function. However, the additivity of this model is a restriction.

SVR performed similar or better than Cox when all features were included in model. When feature selection was used, performance of two models was comparable. The result of simulated datasets indicated that when the number of features included in model was large, SVR model significantly outperformed Cox.

Univariate feature selection based on concordance index was proposed in current study which has the advantage of being applicable to all training algorithms. Another method (a combination of SVR and statistical tests) in contrast to univariate and RFE methods is not iterative and can be used for pilot feature selection. For all datasets, the employed methods selected subsets of features with different size, but with high overlaps. Based on the three feature selection methods, metastasis status, progesterone receptor status, and human epidermal growth factor receptor 2 status were the best features associated to survival.

Acknowledgments

The authors wish to thank the Vic-Chancellor of Research and Technology of Hamadan University of Medical Sciences, Iran, for approving the project and providing financial supports for conducting this research (Grant no. 9308204015).

Disclosure

The current study is a part of Ph.D. thesis in biostatistics field.

Competing Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

References

- 1.Klein J. P., Moeschberger M. L. Survival Analysis: Techniques for Censored and Truncated Data. New York, NY, USA: Springer Science & Business Media; 2003. [Google Scholar]

- 2.Giordano A., Giuliano M., De Laurentiis M., et al. Artificial neural network analysis of circulating tumor cells in metastatic breast cancer patients. Breast Cancer Research and Treatment. 2011;129(2):451–458. doi: 10.1007/s10549-011-1645-5. [DOI] [PubMed] [Google Scholar]

- 3.Van Belle V., Pelckmans K., van Huffel S., Suykens J. A. K. Improved performance on high-dimensional survival data by application of survival-SVM. Bioinformatics. 2011;27(1):87–94. doi: 10.1093/bioinformatics/btq617. [DOI] [PubMed] [Google Scholar]

- 4.Huang C.-F. A hybrid stock selection model using genetic algorithms and support vector regression. Applied Soft Computing Journal. 2012;12(2):807–818. doi: 10.1016/j.asoc.2011.10.009. [DOI] [Google Scholar]

- 5.Kazem A., Sharifi E., Hussain F. K., Saberi M., Hussain O. K. Support vector regression with chaos-based firefly algorithm for stock market price forecasting. Applied Soft Computing. 2013;13(2):947–958. doi: 10.1016/j.asoc.2012.09.024. [DOI] [Google Scholar]

- 6.Tien Bui D., Pradhan B., Lofman O., Revhaug I. Landslide susceptibility assessment in vietnam using support vector machines, decision tree, and nave bayes models. Mathematical Problems in Engineering. 2012;2012:26. doi: 10.1155/2012/974638.974638 [DOI] [Google Scholar]

- 7.Shivaswamy P. K., Chu W., Jansche M. A support vector approach to censored targets. Proceedings of the 7th IEEE International Conference on Data Mining (ICDM '07); October 2007; Omaha, Neb, USA. pp. 655–660. [DOI] [Google Scholar]

- 8.Ding Z. X. The application of support vector machine in survival analysis. Proceedings of the 2nd International Conference on Artificial Intelligence, Management Science and Electronic Commerce (AIMSEC '11); August 2011; Dengfeng, China. IEEE; pp. 6816–6819. [DOI] [Google Scholar]

- 9.Van Belle V., Pelckmans K., Van Huffel S., Suykens J. A. K. Support vector methods for survival analysis: a comparison between ranking and regression approaches. Artificial Intelligence in Medicine. 2011;53(2):107–118. doi: 10.1016/j.artmed.2011.06.006. [DOI] [PubMed] [Google Scholar]

- 10.Khan F. M., Bayer-Zubek V. Support vector regression for censored data (SVRc): a novel tool for survival analysis. Proceedings of the 8th IEEE International Conference on Data Mining (ICDM '08); December 2008; pp. 863–868. [DOI] [Google Scholar]

- 11.Van Belle V., Pelckmans K., Suykens J. A., Van Huffel S. Additive survival least-squares support vector machines. Statistics in Medicine. 2010;29(2):296–308. doi: 10.1002/sim.3743. [DOI] [PubMed] [Google Scholar]

- 12.Mahjub H., Goli S., Mahjub H., Faradmal J., Soltanian A.-R. Performance evaluation of support vector regression models for survival analysis: a simulation study. International Journal of Advanced Computer Science & Applications. 2016;1(7):381–389. [Google Scholar]

- 13.Iguyon I., Elisseeff A. An introduction to variable and feature selection. Journal of Machine Learning Research. 2003;3:1157–1182. [Google Scholar]

- 14.Mangasarian O. L., Kou G. Feature selection for nonlinear kernel support vector machines. Proceedings of the 7th IEEE International Conference on Data Mining Workshops (ICDMW '07); October 2007; Omaha, Neb, USA. IEEE; [DOI] [Google Scholar]

- 15.Chang Y.-W., Lin C.-J. WCCI Causation and Prediction Challenge. 2008. Feature ranking using linear SVM. [Google Scholar]

- 16.Su C.-T., Yang C.-H. Feature selection for the SVM: an application to hypertension diagnosis. Expert Systems with Applications. 2008;34(1):754–763. doi: 10.1016/j.eswa.2006.10.010. [DOI] [Google Scholar]

- 17.Nguyen M. H., De la Torre F. Optimal feature selection for support vector machines. Pattern Recognition. 2010;43(3):584–591. doi: 10.1016/j.patcog.2009.09.003. [DOI] [Google Scholar]

- 18.Becker N., Toedt G., Lichter P., Benner A. Elastic SCAD as a novel penalization method for SVM classification tasks in high-dimensional data. BMC Bioinformatics. 2011;12(1, article 138) doi: 10.1186/1471-2105-12-138. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Hochreiter S., Obermayer K. Feature Extraction. Springer; 2006. Nonlinear feature selection with the potential support vector machine; pp. 419–438. [Google Scholar]

- 20.Zhao H.-X., Magoulés F. Feature selection for support vector regression in the application of building energy prediction. Proceedings of the 9th IEEE International Symposium on Applied Machine Intelligence and Informatics (SAMI '11); January 2011; Smolenice, Slovakia. pp. 219–223. [DOI] [Google Scholar]

- 21.Li G.-Z., Meng H.-H., Yang M. Q., Yang J. Y. Combining support vector regression with feature selection for multivariate calibration. Neural Computing and Applications. 2009;18(7):813–820. doi: 10.1007/s00521-008-0202-6. [DOI] [Google Scholar]

- 22.Yang J.-B., Ong C.-J. Feature selection for support vector regression using probabilistic prediction. Proceedings of the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD '10); July 2010; Washington, DC, USA. ACM; pp. 343–351. [DOI] [Google Scholar]

- 23.Guyon I., Weston J., Barnhill S., Vapnik V. Gene selection for cancer classification using support vector machines. Machine Learning. 2002;46(1–3):389–422. doi: 10.1023/a:1012487302797. [DOI] [Google Scholar]

- 24.Camps-Valls G., Chalk A. M., Serrano-López A. J., Martín-Guerrero J. D., Sonnhammer E. L. L. Profiled support vector machines for antisense oligonucleotide efficacy prediction. BMC Bioinformatics. 2004;5(1, article 135) doi: 10.1186/1471-2105-5-135. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Faradmal J., Talebi A., Rezaianzadeh A., Mahjub H. Survival analysis of breast cancer patients using cox and frailty models. Journal of Research in Health Sciences. 2012;12(2):127–130. [PubMed] [Google Scholar]

- 26.Kim H., Bredel M. Feature selection and survival modeling in The Cancer Genome Atlas. International Journal of Nanomedicine. 2013;8(supplement 1):57–62. doi: 10.2147/ijn.s40733. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Faradmal J., Soltanian A. R., Roshanaei G., Khodabakhshi R., Kasaeian A. Comparison of the performance of log-logistic regression and artificial neural networks for predicting breast cancer relapse. Asian Pacific Journal of Cancer Prevention. 2014;15(14):5883–5888. doi: 10.7314/APJCP.2014.15.14.5883. [DOI] [PubMed] [Google Scholar]

- 28.Faradmal J., Kazemnejad A., Khodabakhshi R., Gohari M.-R., Hajizadeh E. Comparison of three adjuvant chemotherapy regimes using an extended log-logistic model in women with operable breast cancer. Asian Pacific Journal of Cancer Prevention. 2010;11(2):353–358. [PubMed] [Google Scholar]

- 29.Lagani V., Tsamardinos I. Structure-based variable selection for survival data. Bioinformatics. 2010;26(15):1887–1894. doi: 10.1093/bioinformatics/btq261.btq261 [DOI] [PubMed] [Google Scholar]

- 30.Choi I., Wells B. J., Yu C., Kattan M. W. An empirical approach to model selection through validation for censored survival data. Journal of Biomedical Informatics. 2011;44(4):595–606. doi: 10.1016/j.jbi.2011.02.005. [DOI] [PubMed] [Google Scholar]

- 31.Liu Z., Chen D., Tian G., Tang M.-L., Tan M., Sheng L. Advances in Computational Biology. Vol. 680. Berlin, Germany: Springer; 2010. Efficient support vector machine method for survival prediction with SEER data; pp. 11–18. (Advances in Experimental Medicine and Biology). [DOI] [PubMed] [Google Scholar]

- 32.Du X., Dua S. Cancer prognosis using support vector regression in imaging modality. World Journal of Clinical Oncology. 2011;2(1):44–49. doi: 10.5306/wjco.v2.i1.44. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Moertel C. G., Fleming T. R., Macdonald J. S., et al. Levamisole and fluorouracil for adjuvant therapy of resected colon carcinoma. The New England Journal of Medicine. 1990;322(6):352–358. doi: 10.1056/nejm199002083220602. [DOI] [PubMed] [Google Scholar]

- 34.Therneau T. M., Grambsch P. M. Modeling Survival Data: Extending the Cox Model. Springer Science & Business Media; 2000. [Google Scholar]

- 35.Van Belle V., Pelckmans K., Suykens J. A. K., Van Huffel S. Learning transformation models for ranking and survival analysis. The Journal of Machine Learning Research. 2011;12:819–862. [Google Scholar]

- 36.Shiao H.-T., Cherkassky V. SVM-based approaches for predictive modeling of survival data. Proceedings of the International Conference on Data Mining (DMIN '13); July 2013; The Steering Committee of the World Congress in Computer Science, Computer Engineering and Applied Computing (WorldComp); [Google Scholar]

- 37.Van Belle V., Pelckmans K., Suykens J. A. K., Van Huffel S. On the use of a clinical kernel in survival analysis. Proceedings of the 18th European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning (ESANN '10); April 2010; Bruges, Belgium. pp. 451–456. [Google Scholar]

- 38.Bellera C. A., MacGrogan G., Debled M., De Lara C. T., Brouste V., Mathoulin-Pélissier S. Variables with time-varying effects and the Cox model: some statistical concepts illustrated with a prognostic factor study in breast cancer. BMC Medical Research Methodology. 2010;10, article 20 doi: 10.1186/1471-2288-10-20. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Hartmann O., Schuetz P., Albrich W. C., Anker S. D., Mueller B., Schmidt T. Time-dependent Cox regression: serial measurement of the cardiovascular biomarker proadrenomedullin improves survival prediction in patients with lower respiratory tract infection. International Journal of Cardiology. 2012;161(3):166–173. doi: 10.1016/j.ijcard.2012.09.014. [DOI] [PubMed] [Google Scholar]

- 40.Bilal E., Dutkowski J., Guinney J., et al. Improving breast cancer survival analysis through competition-based multidimensional modeling. PLoS Computational Biology. 2013;9(5) doi: 10.1371/journal.pcbi.1003047.e1003047 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Karimi A., Delpisheh A., Sayehmiri K., Saboori H., Rahimi E. Predictive factors of survival time of breast cancer in kurdistan province of Iran between 2006–2014: a cox regression approach. Asian Pacific Journal of Cancer Prevention. 2014;15(19):8483–8488. doi: 10.7314/apjcp.2014.15.19.8483. [DOI] [PubMed] [Google Scholar]

- 42.Reunanen J. Overfitting in making comparisons between variable selection methods. Journal of Machine Learning Research. 2003;3:1371–1382. [Google Scholar]