Abstract

Intervention development can be accelerated by using wearable sensors and ecological momentary assessment (EMA) to study how behaviors change within a person. The purpose of this study was to determine the feasibility and acceptability of a novel, intensive EMA method for assessing physiology, behavior, and psychosocial variables utilizing two objective sensors and a mobile application (app). Adolescents (n = 20) enrolled in a 20-day EMA protocol. Participants wore a physiological monitor and an accelerometer that measured sleep and physical activity and completed four surveys per day on an app. Participants provided approximately 81 % of the expected survey data. Participants were compliant with the wrist-worn accelerometer (75.3 %), which is a feasible measurement of physical activity/sleep (74.1 % complete data). The data capture (47.8 %) and compliance (70.28 %) with the physiological monitor were lower than for other study variables. The findings support the use of an intensive assessment protocol to study real-time relationships between biopsychosocial variables and health behaviors.

Keywords: Feasibility, Ecological momentary assessment, Physical activity, Adolescents, Wearable sensors

Understanding how behaviors change within a person has the potential to inform intervention development. To date, however, health behavior data has not been assessed with enough precision or with a high enough sampling rate to use computational modeling approaches to understand dynamic processes [1]. In recent years, wearable sensors combined with subjective reports of internal states (e.g., ecological momentary assessment) have emerged as technologies that hold tremendous potential for identifying drivers of human behavior and accelerating behavioral medicine research. Ecological momentary assessment refers to the collection of behavioral, physiological, or self-reported data in nearly real time and in a person’s natural environment. Therefore, this kind of data capture is less susceptible to recall bias and is more sensitive to contextual factors that may influence variables of interest.

Wearable sensors provide precise and temporally dense information regarding the behavioral, physiological, and even affective states of individuals throughout the day [1]. With enough observations of intensive longitudinal data combining wearable sensors and ecological momentary assessment technologies, it may be possible to develop dynamical systems models of high value health behaviors such as sedentary activity, moderate to vigorous physical activity, sleep, and diet.

Dynamical system modeling, a computational approach to understanding relationships in measured data, allows for the study of the relationships between variables within a system (i.e., an individual) over time and provides a comprehensive analytic technique for testing theories of behavior change [2]. For dynamical systems models to be maximally effective, high-throughput data indicating the state of the system need to be available in high temporal density. This approach considers how system variables respond to changes in input variables and can answer questions regarding measurement targets, how often measures should be taken, and the function of the outcome response [3]. Such information is vital for making decisions regarding the timing, spacing, and dosage level of intervention components.

Previous attempts to capitalize on wearable sensors and ecological momentary assessment have examined physical activity patterns and both the social and physical context of activity [4, 5], affective states [6], and sleep [7]. While the articles to date have provided some evidence for the feasibility of collecting behavioral parameters from adolescents over a short period of time [8], the methodology commonly lacks physiological measurement and is limited to examining variables for a short duration (i.e., 4 days) [4]. The development of dynamical regulatory systems models will require long assessment windows or frequent repeated assessments; comprehensive assessment of behavioral, physiological, and subjective variables; and participants who are willing to comply with the methods necessary to develop intensive longitudinal data. Therefore, an understanding of the feasibility and acceptability of this type of data capture is necessary for the research literature to move forward.

To our knowledge, no studies have examined the utility and acceptability of complementary ecological momentary assessment methodologies (i.e., actigraphy, self-report questionnaires, and physiological variables) to measure dynamical systems in adolescents. Adolescents are a particularly important group for study from a feasibility perspective as many adult health habits are established in adolescence. The purpose of this study was to determine the feasibility and acceptability of a novel and intensive ecological momentary assessment method for assessing behavioral, psychosocial, and physiological variables utilizing two objective sensors and a mobile application (app). This paper addresses calls in the literature to assess variables in nearly real time, to measure behaviors objectively, and to assess the dynamic linkage between psychological variables, physiological variables, and health behaviors [9]. This study advances the research literature by describing an intensive assessment protocol for just-in-time capture of variables that stand to impact health behavior change.

METHODS

Participant characteristics

Participants enrolled in the study included 20 adolescents (60 % male) between 13 and 18 years of age (M = 15.67, SD = 1.75). Participants self-identified as Caucasian (80 %), Native American (10 %), Hispanic (5 %), and Asian (5 %). The sample was primarily middle class, with 73.7 % of the sample having an annual family income greater than $60,000; 10.5 % with income between $50,000 and $60,000; and 10.5 % between $30,000 and $50,000, with the remaining reporting a family income between $20,000 and $30,000 (5.3 %). One participant did not report on family income.

Feasibility and acceptability measures

Procedures

Participants were recruited from a small midwestern college town and were invited to complete the study for 20 consecutive days. Recruitment methods included flyers, direct emails, and a social media campaign (i.e., Facebook messages posted to a page associated with the authors’ research laboratory). Parental consent and participant assent were collected for all adolescents. Participants who were 18 years old at the time of the study provided consent. The local Institutional Review Board approved all study procedures prior to beginning the assessment. In order to participate, adolescents were required to be between 13 and 18 years of age and to have no visual impairments or chronic or acute physical maladies that would limit physical activity.

During an initial session, participants were equipped with a Zephyr BioHarness 3.0 (Zephyr Technology, Auckland, New Zealand) as well as an Actigraph wActiSleep-BT accelerometer (Actigraph LLC, Pensacola, FL). They were instructed to wear the Zephyr BioHarness for 12 h each day. Participants wore the BioHarness around the chest next to the skin and the accelerometer on the non-dominant wrist. The accelerometer was waterproof and did not require charging during the 20 days, so study staff instructed participants to wear it at all times. However, the BioHarness needed to be removed during activities involving water (i.e., swimming or showering), and required nightly charging.

Each participant received an Android smartphone (Google Nexus 4) that was equipped to deliver questionnaires via an application (PETE app). The PETE app prompted participants to report on context variables, mood, and dietary behaviors at four time points each day. Participants provided four times, based on their schedules, when they would be available to answer the questionnaires (e.g., 7 am, 12 pm, 4 pm, and 8 pm). At each designated time, an alarm sounded prompting participants to complete the questionnaires. Participants also completed a social support survey administered once a day. Adolescents were required to select two times in the morning and two times in the afternoon, and surveys were required to be at least 2 h apart. Beyond these restrictions, it was suggested, but not required, that participants respond to a survey before school, before lunch, after school, and in the early evening. These suggestions were made in an attempt to sample the dynamical systems assumed to underlie adolescent behavior at points far enough apart in time that the system could be expected to vary. Ultimately, participants chose times between 6:00 am and 9:30 pm. During the pilot testing, the questionnaires took approximately 3 min to complete, for a total of 12–15 min across the four assessment occasions.
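The scheduling restrictions described above can be illustrated with a short check. This is a minimal sketch, not the PETE app's actual logic: the function name `validate_schedule` is hypothetical, and treating 12:00 as the last "morning" time is an assumption made so that the example schedule in the text (7 am, 12 pm, 4 pm, 8 pm) passes.

```python
from datetime import time

def _minutes(t: time) -> int:
    """Minutes since midnight."""
    return t.hour * 60 + t.minute

def validate_schedule(times, min_gap_min=120):
    """Return a list of constraint violations (an empty list means valid).

    Assumption (not from the PETE app): "morning" means 12:00 or earlier,
    "afternoon" means later than 12:00.
    """
    if len(times) != 4:
        return ["exactly four survey times are required"]
    problems = []
    ordered = sorted(times)
    morning = [t for t in ordered if t <= time(12, 0)]
    if len(morning) != 2:
        problems.append("two morning and two afternoon times are required")
    # Consecutive surveys must be at least min_gap_min minutes apart.
    for earlier, later in zip(ordered, ordered[1:]):
        if _minutes(later) - _minutes(earlier) < min_gap_min:
            problems.append(
                f"{earlier:%H:%M} and {later:%H:%M} are less than "
                f"{min_gap_min} min apart"
            )
    return problems
```

For the example schedule in the text, `validate_schedule([time(7), time(12), time(16), time(20)])` returns an empty list.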

Following the first three prompts, participants received 36 questions regarding their location, mood, energy level, fruit and vegetable consumption, and high-fat high-sugar food consumption. On the fourth prompt, the participants received 47 questions, which included a social support for exercise questionnaire in addition to the other items. The smartphone had a touch screen so participants could easily record their responses on a 5-point Likert scale. After the 20-day study period, participants returned all equipment and completed post-assessment questionnaires. On some occasions, it was not possible to schedule a follow-up visit on the 20th day of the study. In those cases, surveys continued to be delivered, but participants were not required to provide valid data. Therefore, observations beyond the 80th from a participant (80 observations were expected given 4 surveys per day over 20 days) and data collected after the 20th day were excluded. At the exit session, study staff administered the feasibility and acceptability measures. Participants were compensated up to $40 for their participation in the study, based on their compliance with the protocol. Participants earned $0.75 each time they wore the physiological monitoring system (i.e., BioHarness 3.0) for 12 h ($15 over 20 days) and a bonus of $25 for completing all four of the surveys on 85 % of study days.
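The compensation arithmetic can be written out as a short worked example. This is an illustrative sketch only: the function name `compensation` is hypothetical, and reading "on 85 % of study days" as "on at least 85 % of study days" is an assumption.

```python
def compensation(days_worn_12h, days_all_surveys, study_days=20):
    """Total payment in dollars under the scheme described in the text.

    days_worn_12h: days the physiological monitor was worn for 12 h.
    days_all_surveys: days on which all four surveys were completed.
    """
    wear_pay = 0.75 * min(days_worn_12h, study_days)
    # Integer comparison avoids floating-point rounding of 0.85 * study_days.
    earned_bonus = days_all_surveys * 100 >= 85 * study_days
    return wear_pay + (25.0 if earned_bonus else 0.0)
```

Full wear on all 20 days earns 20 × $0.75 = $15, and with the $25 bonus the total reaches the stated maximum of $40.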

Before deployment, devices were initialized using the same lab computer. Internal clocks on each device were synchronized to the computer clock, and automatic time updates were disabled. This ensured that devices would produce timestamps that could be compared and would represent nearly the same time window at the unit of seconds. However, some clock drift is expected without additional synchronization.

Objective behavioral measures

Sleep and physical activity

The Actigraph wActiSleep-BT accelerometer (Actigraph LLC, Pensacola, FL) [10] is a validated wireless activity monitor that allows for objective physical activity and sleep/wake measurements. The device records movement and can sample at 1-s epochs. The Actigraph uses a triaxial accelerometer, which can measure accelerations in three planes of movement in the range of 0.05 to 2 G’s using a band-limited frequency of 0.25 to 2.5 Hz [10]. The use of this accelerometer worn on the non-dominant wrist is in accordance with the current National Health and Nutrition Examination Survey (NHANES) protocol [11]. The battery lasted throughout the 20-day protocol and did not require charging by the participants. Raw data were processed using the Actilife software v.6.10.2. Actigraph data were read into Actilife and scored using the Sadeh algorithm [12] to estimate sleep.

Physiological measures

Physiological variables

The Zephyr BioHarness 3.0 (Zephyr Technology, Auckland, New Zealand) recorded electrocardiogram, heart rate, respiration rate, body orientation (upright/prone), and activity, which were stored on the internal device memory. Following the 20-day protocol, data were downloaded in summary format from the BioHarness 3.0. This output provides observations at the level of once per second for the period that the sensor is worn. Data were processed using a custom Python program designed to screen for data quality (i.e., signal confidence values above 75 %) and aggregate the physiological variables assessed once per second in 15-min windows (i.e., sum of observations/900) from the time a survey was administered. If the device was not worn or the signal confidence value was below 75 %, data were considered missing.
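The windowing step can be sketched as follows. This is not the study's program, only a minimal illustration under stated assumptions: the sample layout `(timestamp, heart_rate, confidence)` is hypothetical, the window is taken to start at the survey time, samples at exactly 75 % confidence are kept, and the mean is taken over the samples that pass the screen (which equals the sum divided by 900 when all 900 seconds are valid).

```python
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=15)   # 900 one-second samples
MIN_CONFIDENCE = 75.0            # signal confidence threshold (percent)

def window_mean(samples, survey_time, window=WINDOW, min_conf=MIN_CONFIDENCE):
    """Mean heart rate in the window that starts at survey_time.

    samples: iterable of (timestamp, heart_rate, confidence) tuples, one
    per second while the sensor is worn (hypothetical layout).
    Returns None (missing) when no sample in the window passes the
    quality screen, e.g. because the device was not worn.
    """
    end = survey_time + window
    valid = [hr for ts, hr, conf in samples
             if survey_time <= ts < end and conf >= min_conf]
    if not valid:
        return None
    return sum(valid) / len(valid)
```

Because the window length is a parameter, the same function covers the point made later in the paper that the 15-min window can be reconfigured to any interval of interest.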

Self-report constructs

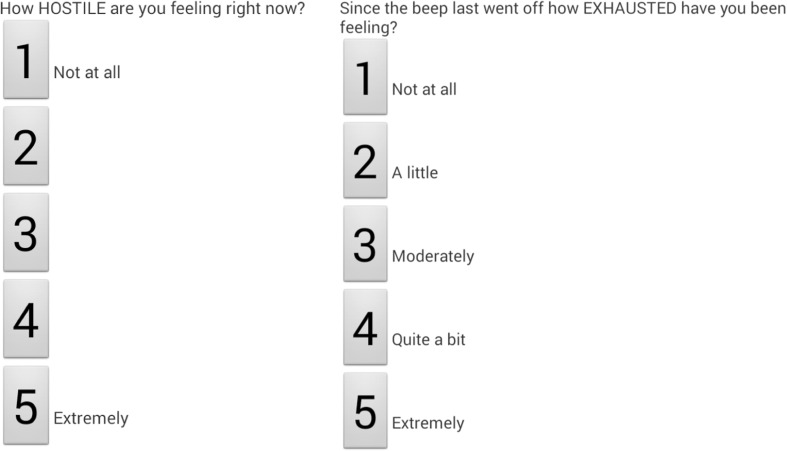

Self-report constructs were assessed using the PETE app, which was developed for this study. The PETE app is an ecological momentary assessment tool that allows researchers to trigger a questionnaire loaded on the participant’s Android phone. The app administers surveys multiple times per day at random intervals or at predefined times. Each questionnaire administration can deliver the entire bank or selected items. When a survey is triggered, an alarm sounds to prompt the participant to complete the survey and continues to sound until the first question has been answered. If the phone is powered on, the alarm sounds regardless of the volume level of the device. The app was designed to include customized item stems and supports questions that have up to five response options. The app uses the Android touch screen interface to allow participants to tap the screen to select their response. The data were stored on the Android device until being downloaded to a computer. Please see Fig. 1 for a screenshot of the PETE app.

Fig 1.

S-PETE screen shot example

Affective constructs

Eight affective constructs associated with physical activity [6] were identified using theory and a review of the empirical literature. Two broad constructs of positive and negative affect were measured by the 10-item International Positive and Negative Affect Schedule Short Form (I-PANAS-SF) [13]. Participants were instructed to rate the extent to which they experienced a particular emotion “since the last beep went off.” Six additional clinical constructs, (1) tension-anxiety, (2) vigor-activity, (3) depression-dejection, (4) fatigue-inertia, (5) anger-hostility, and (6) confusion-bewilderment, were each measured by including the three items with the highest factor loadings from the Profile of Mood States (POMS) [14]. Each item was scored on a 5-point Likert scale.

Social support

The Social Support for Exercise Survey [15] is a 13-item measure that assesses the level of support individuals making health-behavior changes felt they were receiving from family and friends. The original questionnaire was modified to assess the amount of social support received each day from friends and family members as one item. Respondents rated how often family and friends provided support for physical activity on a 5-point Likert scale ranging from “not at all” to “four or more times.” Example items include “Today one of my friends or family helped plan activities around my exercise,” and “Today one of my friends or family exercised with me.” Ratings were summed across items to determine an encouragement and discouragement score, with higher scores indicating higher levels of perceived social support.

Context and location questions

Five items were developed for the purpose of this study to examine the location and environment to determine the built environment of the participant at each of four time points each day. Example items include “How many trees and plants are there in the area where you are right now,” “How safe do you feel right now,” and “What is the weather like outside?” Respondents answered each question on a 5-point scale.

Diet items

The number of fruits and vegetables consumed throughout the day was assessed using two items. In accordance with the stoplight system [16], which divides all foods into three categories (green, yellow, and red) based on the fat and sugar content, respondents were asked to report the number of “red foods on the stoplight system” they had consumed throughout the day (i.e., foods with more than 7 g of fat or 12 g of sugar). Items included “How many servings of FRUITS and VEGETABLES (Green Foods on the Stoplight System) have you eaten today?” and “How many servings of HIGH FAT/HIGH SUGAR foods (Red Foods on the Stoplight System; e.g., cookies, candy, hamburgers, pizza) have you eaten today?” Response choices were 1–5. These items were administered at each observation with the goal of developing a cumulative record throughout the day. This data collection strategy allows for a daily total (i.e., value observed at the fourth survey) and total consumed between each occasion (i.e., T3 − T2 = amount consumed between the second and third observations) using the same item wording.
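The differencing of the cumulative record can be made concrete with a short sketch. The function name `interval_intake` and the handling of missed surveys (a missing value makes the adjacent differences missing) are assumptions for illustration, not part of the study's processing.

```python
def interval_intake(cumulative):
    """Convert running daily totals at T1..T4 into per-interval amounts.

    cumulative: list of running totals reported at each survey, with
    None for a missed survey. The first entry is returned unchanged
    (amount reported at the first survey); each later entry is
    T(k) - T(k-1), or None when either survey is missing.
    """
    if not cumulative:
        return []
    intervals = [cumulative[0]]
    for prev, cur in zip(cumulative, cumulative[1:]):
        if prev is None or cur is None:
            intervals.append(None)
        else:
            intervals.append(cur - prev)
    return intervals
```

For running totals of `[1, 2, 4, 5]`, the third entry of the result is T3 − T2 = 2, and the daily total is simply the last reported value, 5.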

Acceptability questionnaire

Obtaining longitudinal data to identify idiographic physical activity profiles involves capturing a large amount of data and is typically cumbersome to acquire. Therefore, the current study set out to demonstrate that participants are willing to provide these data and are happy with the support they receive for doing so (i.e., technical assistance, explanation of devices, etc.). To assess the acceptability of the protocol, participants were asked 14 items on a 7-point scale. Six of the items were unique to the current study and assessed specific issues such as the comfort of wearing the sensors (2 items) and the ease of answering questions on the smartphone (2 items). Participants were asked about their general enjoyment of the current study and whether they would participate in a similar protocol if asked to do so by their doctor (2 items). The remaining eight items were modified from the Client Satisfaction Questionnaire-8 (please see Table 1 for specific items, [17]).

Table 1.

Descriptive statistics for the acceptability measures

| Item | Mean (SD) |
|---|---|
| How do you rate the quality of service you received?<sup>a</sup> | 4.54 (0.51) |
| Did you get the kind of service you wanted?<sup>a</sup> | 3.55 (0.51) |
| To what extent has our program met your needs?<sup>a</sup> | 4.40 (0.82) |
| If a friend were in need of similar help, would you recommend our services?<sup>a</sup> | 3.27 (0.70) |
| How satisfied are you with the amount of help you received?<sup>a</sup> | 3.23 (0.87) |
| Have the services you received helped you to deal more effectively with your problem?<sup>a</sup> | 4.05 (0.61) |
| Overall, how satisfied are you with the services you have received?<sup>a</sup> | 4.45 (0.61) |
| If you were to seek help again, would you come back to our program?<sup>a</sup> | 3.27 (0.63) |
| I enjoyed participating in the study.<sup>b</sup> | 4.59 (1.87) |
| I thought the heart rate monitor was comfortable to wear each day for 20 days.<sup>b</sup> | 5.29 (3.39) |
| I thought the wrist strap was comfortable to wear each day for 20 days.<sup>b</sup> | 4.76 (2.44) |
| Answering the surveys on the smartphone was easy.<sup>b</sup> | 5.12 (2.23) |
| Answering the surveys on the smartphone took too much time.<sup>b</sup> | 6.41 (3.09) |
| If my doctor asked me to do a study like this to know more about my health I would do it.<sup>b</sup> | 4.94 (1.60) |

The terms “services” and “program” were in reference to the assessment method and technical support

<sup>a</sup>Scores were based on a 4-point Likert scale (higher scores indicate more acceptability)

<sup>b</sup>Scores were based on a 7-point Likert scale ranging from 1 (“not at all true”) to 7 (“very true”)

Data analysis

All statistical analyses were conducted using IBM SPSS Statistics Developer Version 22. Data from the PETE app were downloaded from the smartphone and converted to an SPSS database. Additionally, data from the BioHarness physiological monitoring system and Actigraph accelerometer were downloaded and imported into SPSS.

RESULTS

Attrition

Twenty participants were consented and enrolled in the study; however, two participants withdrew prior to completing the 20-day protocol. One participant reported that the protocol was too demanding and that he/she did not have the time. The second participant was admitted to a psychiatric inpatient facility and was withdrawn from the study. The data reported are based on the 18 participants that completed the 20-day protocol.

Objective behavioral measures

To determine the compliance rates for the accelerometer, the number of nights the participant fell asleep while wearing the device on their wrist was divided by 20 and multiplied by 100. At the end of the study, the compliance for the accelerometer was 75.3 %. In terms of feasibility, we had 74.1 % complete data for the accelerometers. On one occasion, an accelerometer was initialized incorrectly by study staff and was not able to collect data, which explains why the feasibility value is lower than the compliance value. To assess for a decline in usage, a logistic regression was conducted, with accelerometer wear as a dichotomous dependent variable (i.e., wore vs. did not wear) and study day as a continuous independent variable; the results indicated that this regression was significant (95 % CI for Exp(B) 0.86–0.93). Therefore, there was a significant decline in accelerometer wear during the study period.
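The two calculations in this paragraph can be sketched in plain Python. The study's analyses were run in SPSS; the code below is only an illustrative reimplementation under stated assumptions: the function names are hypothetical, the model is fitted by Newton-Raphson, and the interval is a Wald 95 % interval, which may differ in detail from the SPSS output.

```python
import math

def compliance_pct(nights_worn, study_days=20):
    """Percentage of study nights on which the device was worn."""
    return nights_worn / study_days * 100

def wear_decline_odds_ratio(days, wore, iterations=25):
    """Fit logit P(wore) = b0 + b1 * day by Newton-Raphson.

    days: study day for each observation; wore: 1 (wore) or 0 (did not).
    Returns (odds_ratio, ci_low, ci_high) for a one-day increase, i.e.
    Exp(B) with a Wald 95 % confidence interval.
    """
    b0 = b1 = 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, y in zip(days, wore):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
            w = p * (1.0 - p)
            g0 += y - p            # gradient of the log-likelihood
            g1 += (y - p) * x
            h00 += w               # information matrix entries
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        # Newton step: add (information matrix)^-1 times the gradient.
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    se_b1 = math.sqrt(h00 / det)   # slope variance from the inverse matrix
    return (math.exp(b1),
            math.exp(b1 - 1.96 * se_b1),
            math.exp(b1 + 1.96 * se_b1))
```

An interval for Exp(B) that lies entirely below 1, such as the 0.86–0.93 reported above, indicates that the odds of wearing the device fell with each additional study day.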

Eleven of 14 participants (79 %) who completed the acceptability questionnaires replied somewhat true to very true when asked to report if “the wrist strap was comfortable to wear each day for 20 days.” In the open-ended questions, one participant wrote that the accelerometer irritated the skin when the strap was wet.

Physiological measures

To calculate compliance with the physiological measures, the percentage of missing heart rate data for the first 80 observations was subtracted from 100 %. To assess for complete data from the physiological monitoring system, frequency data were calculated based on whether heart rate was captured within 15 min of a survey being triggered. Therefore, data could be listed as missing if the physiological monitoring system collected all day outside of that 15 min window. It is noteworthy that for this study, 15 min was chosen arbitrarily, but the data processing program can be configured to any time window of interest.

Compliance with the physiological monitoring system was 70.28 %. However, of the time that participants wore the physiological monitoring system, only 47.8 % of the expected values were captured. Missing data reflect times when the physiological monitoring system was not secured firmly enough against the skin to maintain contact and therefore was unable to record heart rate data. There were a small number of circumstances in which equipment malfunction resulted in data loss from the BioHarness 3.0 (n = 2). In these two instances, the BioHarness battery was completely depleted in storage, causing the internal clock to reset to a default time, and it was not possible to match timestamps with other study data. To assess for a decline in usage, a logistic regression was conducted, with BioHarness wear as a dichotomous dependent variable (i.e., wore vs. did not wear) and observation as a continuous independent variable; the results indicated that this regression was not significant (95 % CI for Exp(B) 1.00–1.01). Therefore, there was not a significant decline in BioHarness wear during the study period.

Eight of the 14 participants (57 %) who completed the acceptability questionnaires reported that the physiological monitoring system was comfortable to wear each day for 20 days (somewhat true to very true). Another three participants reported not at all true when asked to report if “the heart rate monitor was comfortable to wear.” See Table 1 for descriptive statistics.

Self-report measures

The percentage of missing questionnaire data from the smartphone was used to determine the compliance rates for the survey questions. Overall, 81.02 % of the self-report measures administered were completed by the participants. When asked if answering the surveys on the smartphone was easy, 12 participants (86 %) reported somewhat true to very true. However, when asked if answering the surveys took too much time, eight participants (57 %) reported somewhat true to very true. The average amount of time to complete each survey was 13.18 min (SD = 61:03), but this number was inflated by several surveys that were left open for hours at a time. The majority of surveys were completed in under 5 min (86 %), and nearly all were completed in less than 10 min (91 %).

To assess for a decline in usage, a logistic regression was conducted, with survey completion as a dichotomous dependent variable (i.e., completed vs. not completed) and observation as a continuous independent variable; the results indicated that this regression was not significant (95 % CI for Exp(B) 0.99–1.00). Additionally, a count variable was calculated based on the number of survey questions completed at each observation. A linear regression model was then used to examine whether compliance (number of survey questions answered) declined over the 20-day period. A non-significant regression equation revealed no such decline in compliance as the study progressed (F(1, 1438) = 0.089, p > .05), with an R² of 0.002. Therefore, there was not a significant decline in survey completion using the PETE app during the study period.

Program evaluation

Of all 20 participants, 19 (95 %) completed the evaluation questions.1 Generally, participants were satisfied with the program (95 % reported being very satisfied or mostly satisfied). Participants felt the quality of the services they received was good (42 %) or excellent (58 %) and would even recommend the program to a friend who was seeking to improve their physical activity (95 %). Therefore, the results suggest that participants may see the value in assessing behavior, psychosocial variables, and physiology in nearly real time. Participants also reported that if they were seeking out services to improve their level of physical activity engagement, the current program would meet their needs (95 %) and believed the services provided helped them to deal more effectively with increasing physical activity (84 %). Participants were asked to report on their willingness to complete a similar study if they were asked by their doctor, in an effort to know more about their health. All participants reported somewhat true to very true indicating that they see the protocol as acceptable for use in clinical care (see Table 1).

Qualitative responses

In addition to the questions provided above, a subsample of participants (n = 14) was given the opportunity to anonymously report on what they liked and disliked about the program. A third of participants (n = 5) reported the equipment was uncomfortable to wear each day for 20 days; most of them were referring to the physiological monitoring system. Example responses included “I didn’t like that the monitor was slightly uncomfortable and left a red spot,” “The heart rate monitor was very uncomfortable,” and “The heart rate monitor kept slipping off of me.” Other participants reported that the accelerometer, while waterproof, also proved to be uncomfortable when it got wet: “I didn’t like that you had to take a shower with the wristwatch on, because if I put a shirt on the wrist watch would make my shirt wet” or “When the accelerometer got wet it became uncomfortable and irritated my skin.” Additionally, while participants were provided the opportunity to choose the times at which the surveys were answered, eight participants wrote that the surveys came at unpredictable times or the surveys interfered with ongoing activities (“The amount of afternoon texts was occasionally annoying,” “Sometimes the questions interfered with parts of my day, but that would have not been foreseeable when this was started”).

Participants had a number of things they liked about the protocol, including being part of something bigger such as research or a project that was so interesting they were excited to tell their friends (n = 5). One participant replied, “(I liked) the fact that I got to help contribute data to a program that is attempting to reach out to my generation and curb its fitness problems.” A number of participants also enjoyed learning more about their health and challenging themselves to increase their physical activity (n = 6). Utilizing such novel technology was also mentioned as something participants liked as well as answering the questions, and the comfort of the equipment included in the protocol.

DISCUSSION

The results of this study suggest that current sensor and smartphone technologies are feasible for research and acceptable to participants for generating the kinds of data that could be used in a dynamical systems modeling framework. Adolescents are willing to complete a rigorous protocol to obtain this information. Compliance rates to the physiological monitoring system, accelerometer, and survey questions are similar to those reported in the literature [6] and should be sufficient to recover relationships in the data. In general, the participants were excited about the use of the equipment and reported they would be willing to comply with a similar protocol if requested to do so by their doctor.

A wearable sensor holds promise for linking subjective feeling states with physiological data and has the potential for informing intervention development. However, a number of adolescents in the study reported discomfort in wearing the physiological monitoring system for 12 h each day, which may have affected compliance with the protocol. In fact, this may introduce selection bias toward participants who are willing to engage in an intensive protocol that requires the use of equipment with minimal comfort. The current study was only able to retain 90 % of the sample (18 of 20 participants), due in part to the intensity of the assessment protocol. Even among that 90 %, the complete data rate was considerably lower for the physiological monitoring system relative to the accelerometer and ecological momentary assessment surveys. Ambulatory physiological monitoring is challenging and developments in sensor technology may be required to increase the percentage of complete data.

The high compliance with the PETE survey suggests that adolescents are willing to provide self-reported information at minimal cost and for a longer duration than previously documented in this age group [4, 18]. The majority of responses were completed within 10 min (i.e., 91 % of surveys). However, it is noteworthy that the current protocol did result in some extraordinarily long survey intervals on 9 % of responses. This is because participants occasionally responded to the first item to silence the phone and answered the remaining questions at a convenient time. As a result, our team has needed to build processing programs that identify whether a response occurs too far from the previous data point (i.e., greater than 10 min apart). Given that we are interested in capturing contemporaneous associations between affective variables, it is important that responses represent largely the same window in time. In the current example, 10 min is arbitrary and additional studies are needed to determine what time window results in an association between two constructs dropping out of significance. In the near future, protocols will be improved by adding a simple “snooze” button to the PETE app allowing the participant to delay the survey for a period of time. In an only slightly more distant future, it may be possible to use sensors or contextual variables to passively sense when it is a good or bad time to survey a participant.
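The kind of check described above, flagging a response that arrives too long after the previous one, might look like the following. This is a hypothetical sketch rather than the team's processing program; the function name `flag_late_items` and the input format (one timestamp per item response within a survey) are assumptions.

```python
from datetime import datetime, timedelta

MAX_GAP = timedelta(minutes=10)  # arbitrary threshold, as noted in the text

def flag_late_items(timestamps, max_gap=MAX_GAP):
    """Return one flag per item response within a single survey.

    A flag is True when the response arrived more than max_gap after
    the previous item. The first item is never flagged, because the app
    records no trigger time to compare it against.
    """
    if not timestamps:
        return []
    flags = [False]
    for prev, cur in zip(timestamps, timestamps[1:]):
        flags.append(cur - prev > max_gap)
    return flags
```

A survey in which the first item was answered to silence the alarm and the rest were completed an hour later would show a single True flag on the second item, marking where the responses stop describing the same window in time.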

The results of the study should be considered in light of the limitations. The sample included highly educated and middle-income families that self-selected to participate in the study. Therefore, there could be a potential self-selection bias, and the results of the study may not generalize to other demographic groups. There is also a concern that there may be too much lag between given responses to link them together if a participant answers the first question to silence the alarm and then answers other items at a later time (a pattern observed in the current data). Also related to timing of assessments, it is not possible with the current data to determine how long participants waited before responding to each survey; this limitation is because the PETE app timestamps responses, but does not provide a timestamp for the survey trigger. Therefore, if a survey was delayed because a device was turned off, it would not be possible to detect this using the current feature set. Finally, because participants are asked to report on their affect “since the beep last went off,” there is some potential for recall bias. Future studies should consider examining the optimal window for balancing participant burden and accurate reporting of affect.

The promise of sensor technology can only be realized if adolescents and other demographic groups are willing to wear this type of equipment. As the field continues to incorporate wearable sensors and other technology, advances in the comfort of the equipment and in the feasibility of data capture are warranted. Additionally, equipment malfunction and fit around the participant’s chest limited the completeness of data from the physiological monitoring system; these problems could potentially be remedied by improving the comfort and adherence of the chest strap. Given the feedback regarding the discomfort of the wearable sensors, the drop-out due to intensive longitudinal data capture, and the poor feasibility of data collection with the physiological monitor, future studies could advance the research literature by identifying the optimal number of observations needed to inform intervention development.

Overall, there has been increased interest in using smartphones to promote physical activity. However, currently available products are not well aligned with scientific findings [19]. Inferences about the experiences of an individual cannot be made without observing that individual when he or she is physically active or not. Employing a methodology that combines ecological momentary assessment and other objective measures can allow researchers to identify the dynamic moment-to-moment relationships of biological, psychological, and social variables that influence whether an adolescent will engage in a target behavior. A notable strength of the study was the ability to collect subjective affective states and additional variables of interest at multiple time points throughout the day. The amount of data captured opens the possibility of data analysis using dynamical systems modeling (as well as more common methods such as multilevel models).

To extend the findings from the current study, the next step in development of this protocol will be to expand the PETE app to allow triggering of surveys based on real-time physiological data (event-based triggers). For example, if heart rate variability decreases, as measured by the BioHarness 3.0, it would be an opportunity to assess whether a stressor has occurred. By tying the triggering event to a physiological variable rather than a time of day, it would be possible to develop real-time linkages between physiological states and affect. In an ideal case, an idiographic relationship between physiology and affect could be developed for a person. This is not to say that event-based triggers are superior to time-based triggers, but there may be use cases where triggering an assessment by using physiological data could be of benefit. Beyond the assessment phase, a precision medicine approach could be employed where, for example, a physiological state (known from the assessment phase to be highly correlated with subjective stress) could be used to trigger a relaxation intervention rather than a survey.
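An event-based trigger of this kind might be sketched as follows. This is only an illustration under stated assumptions, not a description of the PETE app or of any BioHarness interface: it assumes heart rate variability arrives as a stream of numeric summaries (for example, one value per epoch), it compares each new value with the person's own rolling baseline, and the class name `HrvTrigger`, the baseline length, and the 20 % drop criterion are all hypothetical choices.

```python
from collections import deque
from statistics import mean


class HrvTrigger:
    """Decide when a drop in heart rate variability should prompt a survey.

    Hypothetical sketch: a survey fires when a new HRV value falls more
    than `drop_fraction` below the mean of the last `baseline_size`
    non-triggering values for the same person (an idiographic baseline).
    """

    def __init__(self, baseline_size=12, drop_fraction=0.2):
        self.baseline_size = baseline_size
        self.drop_fraction = drop_fraction
        self.baseline = deque(maxlen=baseline_size)

    def update(self, hrv_value):
        """Process one HRV value; return True if a survey should fire."""
        fire = False
        # Only evaluate once a full baseline window has been collected.
        if len(self.baseline) == self.baseline_size:
            threshold = mean(self.baseline) * (1 - self.drop_fraction)
            fire = hrv_value < threshold
        # Values that trigger a survey are kept out of the baseline so that
        # a sustained low-HRV episode does not lower the reference level.
        if not fire:
            self.baseline.append(hrv_value)
        return fire


# Illustrative stream of HRV summaries (arbitrary units).
readings = [50, 52, 48, 45, 35]
trigger = HrvTrigger(baseline_size=3, drop_fraction=0.2)
fired = [trigger.update(value) for value in readings]
```

In this example only the final reading falls far enough below the running baseline to fire. The same decision rule could in principle launch a relaxation intervention rather than a survey, as discussed above, once the assessment phase has established that the physiological state tracks subjective stress for that person.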

The simultaneous use of ecological momentary assessment and physiological assessment can also be of great value to clinicians in practice. Ecological momentary assessment in particular has been recommended to increase the efficacy of interventions by clarifying antecedents of physical activity, by improving the accuracy of self-monitoring, and by specifying the temporal relationships of target behaviors [6]. Tools that simultaneously measure interrelated processes can significantly alter clinicians’ ability to inform treatment and provide feedback on engagement in health behaviors. One potential advantage of intensive assessment is to allow clinicians to specify appropriate targets for modifiable behaviors in the patient’s environment. Collecting and monitoring data in real time would allow clinicians to provide tailored ecological momentary interventions based on the data provided by adolescents (e.g., encourage physical activity based on location or encourage consumption of fruits and vegetables based on reported low levels). This information can not only streamline clinical visits but also improve clinical outcomes through the use of tailored self-regulation interventions [20]. In fact, Kanning and colleagues [21] report that ambulatory assessment interventions that provide tailored, moment-specific feedback have the potential to influence and support the individual when the unhealthy behavior actually occurs. Recent research also suggests that if smartphones help improve overall patient care by making clinical tasks more efficient to complete, clinicians would be more willing to adopt and use a smartphone in their clinical work [22]. Thus, this study has important implications for researchers and clinicians alike.

Compliance with ethical standards

Statement on prior publication

The findings reported here have not been previously published and the manuscript is not being simultaneously submitted elsewhere.

Previous reporting of data

These data have not been reported previously.

Full control of primary data

We have the data and will make them available if requested.

Funding sources

The current work was funded by institutional funds granted to the second author by Oklahoma State University.

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical disclosures and informed consent

Informed consent was obtained from a legal guardian for all participants and the child/adolescent also provided informed assent to participate. The authors are compliant with the American Psychological Association Code of Ethics.

Welfare of animals

No animals were used in this study.

IRB approval

The study was approved by the Institutional Review Board of Oklahoma State University.

Footnotes

One participant dropped out of the study due to time constraints but provided acceptability data before ending their participation.

Implications

Practice: Tools that have the capacity to capture physiology, behavior, and psychosocial processes in nearly real time are feasible and acceptable to adolescents given minimal cost and can help to streamline clinical encounters and interventions.

Policy: Efforts to increase collaboration among health psychologists, tech developers, and government agencies may facilitate the development of just-in-time interventions to target health behavior change.

Research: In order to move beyond the current established areas of assessment, research should examine the dynamic relationships between physiology, behavior, and psychosocial processes in nearly real time.

References

- 1. Riley WT, Martin CA, Rivera DE. The importance of behavior theory in control system modeling of physical activity sensor data. Paper presented at: 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society; 26–30 Aug. 2014; Chicago, IL.

- 2. Navarro-Barrientos JE, Rivera DE, Collins LM. A dynamical model for describing behavioural interventions for weight loss and body composition change. Math Comput Model Dyn Syst. 2011;17:183–203. doi: 10.1080/13873954.2010.520409.

- 3. Martin CA, Rivera DE, Riley WT, et al. A dynamical systems model of Social Cognitive Theory. 2014 American Control Conference; 4–6 June 2014; Portland, OR.

- 4. Dunton GF, Whalen CK, Jamner LD, Henker B, Floro JN. Using ecologic momentary assessment to measure physical activity during adolescence. Am J Prev Med. 2005;29:281–287. doi: 10.1016/j.amepre.2005.07.020.

- 5. Spook JE, Paulussen T, Kok G, Van Empelen P. Monitoring dietary intake and physical activity electronically: feasibility, usability, and ecological validity of a mobile-based ecological momentary assessment tool. J Med Internet Res. 2013;15:e214. doi: 10.2196/jmir.2617.

- 6. Rofey DL, Hull EE, Phillips J, Vogt K, Silk JS, Dahl RE. Utilizing ecological momentary assessment in pediatric obesity to quantify behavior, emotion, and sleep. Obesity. 2010;18:1270–1272. doi: 10.1038/oby.2009.483.

- 7. Guile JM, Huynh C, Desrosiers L, Bouvier H, MacKay J, Chevrier E, et al. Exploring sleep disturbances in adolescent borderline personality disorder using actigraphy: a case report. Int J Adolesc Med Health. 2009;21:123–126. doi: 10.1515/ijamh.2009.21.1.123.

- 8. Dunton GF, Whalen CK, Jamner LD, Floro JN. Mapping the social and physical contexts of physical activity across adolescence using ecological momentary assessment. Ann Behav Med. 2007;34:144–153. doi: 10.1007/BF02872669.

- 9. Kanning MK, Ebner-Priemer UW, Schlicht WM. How to investigate within-subject associations between physical activity and momentary affective states in everyday life: a position statement based on a literature overview. Front Psychol. 2013;29:187. doi: 10.3389/fpsyg.2013.00187.

- 10. Sadeh A, Sharkey KM, Carskadon MA. Activity-based sleep-wake identification: an empirical test of methodological issues. Sleep. 1994;17:201–207.

- 11. Thompson ER. Development and validation of an internationally reliable short-form of the positive and negative affect schedule (PANAS). J Cross Cult Psychol. 2007;38:227–242. doi: 10.1177/0022022106297301.

- 12. McNair PM, Lorr M, Droppleman LF. POMS Manual. 2nd ed. San Diego: Educational and Industrial Testing Service; 1981.

- 13. Sallis JF, Grossman RM, Pinski RB, Patterson TL, Nader PR. The development of scales to measure social support for diet and exercise behaviors. Prev Med. 1987;16:825–836. doi: 10.1016/0091-7435(87)90022-3.

- 14. Epstein LH, Squires S. The Stoplight Diet for Children. Boston, MA: Little, Brown and Company; 1988.

- 15. Attkisson CC, Greenfield TK. The UCSF Client Satisfaction Questionnaire (CSQ) Scales: the Client Satisfaction Questionnaire-8. In: Me M, ed. Psychological testing: Treatment planning and outcome assessment. 2nd ed. Mahwah, NJ: Erlbaum; 1999.

- 16. Dunton GF, Liao Y, Intille SS, Spruijt-Metz D, Pentz M. Investigating children’s physical activity and sedentary behavior using ecological momentary assessment with mobile phones. Obesity. 2011;19:1205–1212. doi: 10.1038/oby.2010.302.

- 17. Brannon EE, Cushing CC. Is there an app for that? Translational science of pediatric behavior change. J Pediatr Psychol. 2015;40:373–384. doi: 10.1093/jpepsy/jsu108.

- 18. Cushing CC, Steele RG. A meta-analytic review of eHealth interventions for pediatric health promoting and maintaining behaviors. J Pediatr Psychol. 2010;35:937–949. doi: 10.1093/jpepsy/jsq023.

- 19. Kanning MK, Ebner-Priemer UW, Schlicht WM. How to investigate within-subject associations between physical activity and momentary affective states in everyday life: a position statement based on a literature overview. Front Psychol. 2013;2013:4. doi: 10.3389/fpsyg.2013.00187.

- 20. Putzer GJ, Park Y. Are physicians likely to adopt emerging mobile technologies? Attitudes and innovation factors affecting smartphone use in the Southeastern United States. Perspect Health Inf Manag. 2012;9.

- 21. Larsen DL, Attkisson CC, Hargreaves WA, Nguyen TD. Assessment of client/patient satisfaction: development of a general scale. Eval Program Plann. 1979;2:197–207. doi: 10.1016/0149-7189(79)90094-6.

- 22. Cushing CC, Brannon EE, Suorsa KI, Wilson D. Health promotion interventions for children and adolescents: a meta-analytic review using an ecological framework. J Pediatr Psychol. 2014;39:949–962. doi: 10.1093/jpepsy/jsu042.