
Keywords: aging, electrophysiology, midbrain, cortex, hearing

Abstract

Humans have a remarkable ability to track and understand speech in unfavorable conditions, such as in background noise, but speech understanding in noise does deteriorate with age. Results from several studies have shown that in younger adults, low-frequency auditory cortical activity reliably synchronizes to the speech envelope, even when the background noise is considerably louder than the speech signal. However, cortical speech processing may be limited by age-related decreases in the precision of neural synchronization in the midbrain. To understand better the neural mechanisms contributing to impaired speech perception in older adults, we investigated how aging affects midbrain and cortical encoding of speech when presented in quiet and in the presence of a single-competing talker. Our results suggest that central auditory temporal processing deficits in older adults manifest in both the midbrain and in the cortex. Specifically, midbrain frequency following responses to a speech syllable are more degraded in noise in older adults than in younger adults. This suggests a failure of the midbrain auditory mechanisms needed to compensate for the presence of a competing talker. Similarly, in cortical responses, older adults show larger reductions than younger adults in their ability to encode the speech envelope when a competing talker is added. Interestingly, older adults showed an exaggerated cortical representation of speech in both quiet and noise conditions, suggesting a possible imbalance between inhibitory and excitatory processes, or diminished network connectivity that may impair their ability to encode speech efficiently.

NEW & NOTEWORTHY

We investigate the underlying neurophysiology of age-related auditory temporal processing deficits in normal-hearing listeners using natural speech (in noise). Two neurophysiological techniques are used—magnetoencephalography and EEG—to investigate two different brain areas—cortex and midbrain—within each subject. Older adults have more exaggerated cortical speech representations than younger adults in both quiet and noise. Midbrain speech representations depend more critically on noise level and synchronize more weakly in older adults than younger.

The ability to track and understand speech in the presence of interfering speakers is one of the most complex communication challenges experienced by humans. In a complex auditory scene, both humans and animals show an innate ability to detect and recognize individual auditory objects, an important component in the process of stream segregation. The ability to transform the noise-corrupted acoustic signal into a neural representation suitable for speech recognition may occur in the auditory cortex via adaptive neural encoding (Ding et al. 2014; Ding and Simon 2012, 2013). Specifically, low-frequency auditory cortical activity recorded with magnetoencephalography (MEG) reliably synchronizes to the slow temporal modulations of speech, even when the energy of the background noise is considerably higher than the speech signal and even when the background noise is also speech (Ding and Simon 2012). However, the accuracy of cortical speech processing may also be affected by the precision of neural synchronization in the auditory midbrain, as seen in studies that compare cortical responses with those using the frequency following response (FFR), believed to arise primarily from the midbrain (Chandrasekaran and Kraus 2010). For example, noise has a greater impact on the robustness of cortical speech processing in children (with learning impairments) who have delayed peak latencies in FFRs to a speech syllable (King et al. 2002). In normal-hearing young adults, earlier peak latencies in the FFR are associated with larger N1 amplitudes in cortical responses to speech in noise, and larger N1 amplitudes are associated with a better ability to recognize sentences in noise (Parbery-Clark et al. 2011). Furthermore, Bidelman et al. (2014) demonstrated that age-related temporal speech-processing deficits arising from the midbrain may be compensated by a stronger cortical response. Recent work from Chambers et al. (2016) showed that a combination of profound cochlear denervation and desynchronization can result in absence of wave I in the brain stem but not in the cortex, suggesting compensatory central gain increases that help restore the representation of the auditory object in auditory cortex. Whereas these studies examined age- and hearing loss-related changes in midbrain and cortical encoding of vowels and tones presented in quiet, the comparison between midbrain and cortical encoding of speech syllables and sentences presented in competing single-talker speech has not yet been investigated in either younger or older adults.

Such auditory temporal processing deficits are of great relevance, since communication difficulties for older adults have a significant social impact, with strong correlations seen between hearing loss and depression (Carabellese et al. 1993; Herbst and Humphrey 1980; Kay et al. 1964; Laforge et al. 1992) and cognitive impairment (Gates et al. 1996; Lin et al. 2013; Uhlmann et al. 1989). Although audibility is an important factor in the older adult's ability to understand speech (Humes and Christopherson 1991; Humes and Roberts 1990), the use of hearing aids often does not improve speech understanding in noise, perhaps because increased audibility cannot restore temporal precision degraded by aging. Several electrophysiological studies in humans and animals support the hypothesis that degraded auditory temporal processing may play a role in explaining speech-in-noise problems experienced by older adults (Alain et al. 2014; Anderson et al. 2012; Clinard and Tremblay 2013; Lister et al. 2011; Parthasarathy and Bartlett 2011; Presacco et al. 2015; Ross et al. 2010; Soros et al. 2009).

To investigate further the neural mechanisms underlying age-related deficits in speech-in-noise understanding, this current study evaluated the effects of aging on temporal synchronization of speech in the presence of a competing talker in both cortex and midbrain. To de-emphasize the effects of audibility, only clinically normal-hearing listeners were included in both the younger and older age groups. We posit several hypotheses. First, in responses arising from midbrain, we hypothesize that younger adults encode speech with greater neural fidelity, reflected by higher amplitude responses and higher stimulus-to-response and quiet-to-noise correlations than older adults when the signal is presented in quiet and in noise. This hypothesis was driven by the results of the above-mentioned studies, showing more robust and less jittered responses in quiet in younger adults (Anderson et al. 2012; Clinard and Tremblay 2013; Mamo et al. 2016; Presacco et al. 2015) and an age-related effect of noise (Parthasarathy et al. 2010). In contrast, for cortical responses, we hypothesize that older adults will show an over-representation of the response both in quiet and noise. This hypothesis is driven by evidence showing age-related increases in amplitude (Alain et al. 2014; Soros et al. 2009) and in latency (Tremblay et al. 2003) of the main peaks of auditory cortical responses. Finally, we hypothesize that within an individual, better speech-in-noise understanding (at the behavioral level) correlates with greater fidelity of neural encoding of speech, regardless of age.

MATERIALS AND METHODS

The experimental protocol and all procedures were reviewed and approved by the Institutional Review Board of the University of Maryland. Participants gave written, informed consent, according to principles set forth by the University of Maryland's Institutional Review Board, and were paid for their time.

Participants

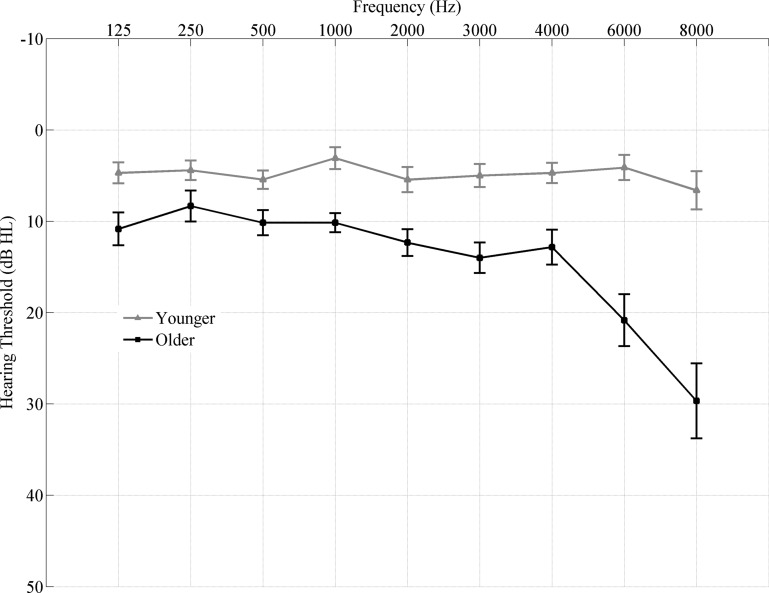

Participants comprised 17 younger adults (18–27 yr, means ± SD 22.23 ± 2.27, 3 men) and 15 older adults (61–73 yr, means ± SD 65.06 ± 3.30, 5 men), recruited from the Maryland; Washington, D.C.; and Virginia areas. All participants had clinically normal hearing (Fig. 1), defined as follows: 1) air conduction thresholds ≤ 25 dB hearing level from 125 to 4,000 Hz bilaterally and 2) no interaural asymmetry (>15 dB hearing-level difference at no more than 2 adjacent frequencies). Participants had a normal intelligence quotient [scores ≥85 on the Wechsler Abbreviated Scale of Intelligence (Zhu and Garcia 1999)] and were not significantly different on intelligence quotient [F(1,30) = 0.660, P = 0.423] and sex (Fisher's exact, P > 0.05). Because of the established effects of musicianship on subcortical auditory processing (Bidelman and Krishnan 2010; Parbery-Clark et al. 2012), professional musicians were excluded. In addition, the older adults were screened for dementia on the Montreal Cognitive Assessment (Nasreddine et al. 2005). All participants spoke English as their first language, and none of them were tonal language speakers.

Fig. 1.

Audiogram (mean ± 1 SE) of the grand averages of both ears of younger (gray) and older (black) adults. All participants have clinically normal hearing. HL, hearing level.

Speech Intelligibility

The Quick Speech-in-Noise test (QuickSIN) (Killion et al. 2004) was used to quantify the ability to understand speech presented in noise composed of four-talker babble.

EEG: Stimuli and Recording

A 170-ms /da/ (Anderson et al. 2012) was synthesized at a 20-kHz sampling rate with a Klatt-based synthesizer (Klatt 1980). The stimulus was presented at an 80 peak-dB sound-pressure level diotically with alternating polarities at a rate of 4 Hz through electromagnetically shielded insert earphones (ER·1; Etymotic Research, Elk Grove Village, IL) via Xonar Essence One (ASUS, Taipei, Taiwan) using Presentation software (Neurobehavioral Systems, Berkeley, CA). A single-competing female talker narrating A Christmas Carol by Charles Dickens was used as the background noise. FFRs were recorded in quiet and in noise [signal-to-noise ratios (SNRs): +3, 0, −3, and −6 dB] at a sampling frequency of 16,384 Hz using the ActiABR-200 acquisition system (BioSemi B.V., Amsterdam, Netherlands) with a standard vertical montage of five electrodes (Cz active, 2 forehead ground common mode sense/driven right leg electrodes, earlobe references) and with an online 100- to 3,000-Hz bandpass filter. During the recording session (∼1 h), participants sat in a recliner and watched a silent, captioned movie of their choice to facilitate a relaxed yet wakeful state. Artifact-free sweeps (2,300) were recorded for each condition from each participant.

Data analysis.

Data recorded with BioSemi B.V. were analyzed in MATLAB (version R2011b; MathWorks, Natick, MA) after being converted into MATLAB format with the function pop_biosig from EEGLab (Scott Makeig, Swartz Center for Computational Neuroscience, University of California, San Diego, CA) (Delorme and Makeig 2004). Sweeps with amplitude in the ±30-μV range were retained and averaged in real time and then processed offline. The time window for each sweep was −47 to 189 ms, referenced to the stimulus onset. Responses were digitally bandpass filtered offline from 70 to 2,000 Hz using a fourth-order Butterworth filter to minimize the effects of cortical low-frequency oscillations (Galbraith et al. 2000; Smith et al. 1975). A final average response was created by averaging the sweeps of both polarities to minimize the influence of cochlear microphonic and stimulus artifact on the response and simultaneously maximize the envelope response (Aiken and Picton 2008; Campbell et al. 2012; Gorga et al. 1985). Root-mean-square (RMS) values were calculated for the transition (18–68 ms) and steady-state (68–170 ms) regions. Correlation (Pearson's linear correlation) between the envelope response in quiet and noise was calculated for each subject to estimate the extent to which noise affects the FFR. Pearson's linear correlation was also used to quantify the stimulus-to-response correlation in the steady-state region, during which the response more reliably follows the stimulus. For this analysis, the envelope of the analytic signal of the stimulus was extracted and then band-pass filtered using the same filter as for the response. Average spectral amplitudes over 20 Hz bins were also calculated from each response using a fast Fourier transform (FFT) with zero padding and 1 Hz interpolated frequency resolution over the transition and steady-state regions for the fundamental frequency (F0) and the first two harmonics. 
An additional analysis signal was created by subtracting and then averaging the sweeps of the two polarities to enhance the temporal fine structure (TFS) (Aiken and Picton 2008). One younger adult was removed from the TFS analysis because of corruption by stimulus artifact. Average spectral amplitudes over 20 Hz bins were calculated for the TFS from each response using a FFT with zero padding and 1 Hz interpolated frequency resolution over the transition and steady-state regions for the frequencies of 400 and 700 Hz, which represent the two main peaks of interest from the two time regions (Anderson et al. 2012).
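
The polarity arithmetic and amplitude measures described above can be illustrated with a minimal Python sketch. It is not the MATLAB code used in the study: the function names, the halving of the sum and difference, and the choice of a band that spans the center frequency ± half the bin width are assumptions made for illustration. The direct DFT evaluated at integer frequencies stands in for a zero-padded FFT with 1-Hz interpolated resolution.

```python
import cmath
import math


def combine_polarities(pos_avg, neg_avg):
    """Return (envelope, tfs) from the averaged responses to each polarity.

    Adding the two polarities emphasizes the envelope response;
    subtracting them emphasizes the temporal fine structure (TFS).
    """
    envelope = [(p + n) / 2 for p, n in zip(pos_avg, neg_avg)]
    tfs = [(p - n) / 2 for p, n in zip(pos_avg, neg_avg)]
    return envelope, tfs


def window_rms(signal, fs, t0_ms, start_ms, stop_ms):
    """RMS of the samples between start_ms and stop_ms after stimulus onset.

    t0_ms is the time of the first sample relative to onset (for example,
    -47 for a sweep window that starts 47 ms before the stimulus).
    """
    i0 = round((start_ms - t0_ms) * fs / 1000)
    i1 = round((stop_ms - t0_ms) * fs / 1000)
    segment = signal[i0:i1]
    return math.sqrt(sum(x * x for x in segment) / len(segment))


def band_amplitude(segment, fs, center_hz, width_hz=20):
    """Mean spectral amplitude over a band around center_hz, in 1-Hz steps.

    Evaluating the DFT sum directly at integer frequencies gives the same
    values as zero padding the segment to fs samples before an FFT.
    """
    half = width_hz // 2
    n = len(segment)
    amplitudes = []
    for f in range(center_hz - half, center_hz + half + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * f * k / fs)
                    for k, x in enumerate(segment))
        amplitudes.append(2 * abs(coeff) / n)
    return sum(amplitudes) / len(amplitudes)
```

With the windows given in the text, `window_rms(envelope, 16384, -47, 18, 68)` would correspond to the transition region and `window_rms(envelope, 16384, -47, 68, 170)` to the steady-state region.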

MEG: Stimuli and Recording

Participants were asked to attend to one of two stories (foreground) presented diotically while ignoring the other one. The stimuli for the foreground consist of segments from the book, The Legend of Sleepy Hollow by Washington Irving, whereas the stimuli for the background were the same as were used in the EEG experiment. The foreground was spoken by a male talker, whereas the background story was spoken by a female talker. Additional stimuli using a background narration in an unfamiliar language were also presented, but the responses to those stimuli are not analyzed here. Each speech mixture was constructed, as described by Ding and Simon (2012), by digitally mixing two speech segments into a single channel with a duration of 1 min. Five different conditions were recorded: quiet and +3, 0, −3, and −6 dB SNR. Four different segments from the same foreground story were used to minimize the possibility that the clarity of the stories could affect the performance of the subjects. The same segment was played for quiet and −6 dB. To maximize the level of attention of the subject on the foreground segment, participants were asked beforehand to count the number of times a specific word or name was mentioned in the story. The sounds, ∼70 dB sound-pressure level when presented with a solo speaker, were delivered to the participants' ears with 50 Ω sound tubing (E-A-RTONE 3A; Etymotic Research), attached to E-A-RLINK foam plugs inserted into the ear canal. The entire acoustic delivery system was equalized to give an approximately flat transfer function from 40 to 4,000 Hz, thereby encompassing the range of the delivered stimuli. Neuromagnetic signals were recorded using a 157-sensor whole-head MEG system (Kanazawa Institute of Technology, Nonoichi Ishikawa, Japan) in a magnetically shielded room, as described in Ding and Simon (2012).
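
As an illustration of how a two-talker mixture at a given SNR can be built, the sketch below scales the background so that the foreground-to-background RMS ratio matches the requested value in dB before summing into a single channel. The RMS-based level definition and the function names are assumptions for illustration; the study followed the procedure of Ding and Simon (2012), which is not reproduced here.

```python
import math


def rms(samples):
    """Root-mean-square level of a sequence of samples."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))


def mix_at_snr(foreground, background, snr_db):
    """Sum foreground and background into one channel at the requested SNR.

    The background is scaled so that
    20 * log10(rms(foreground) / rms(scaled background)) equals snr_db.
    The foreground level is left unchanged (an assumption of this sketch).
    """
    gain = rms(foreground) / (rms(background) * 10 ** (snr_db / 20))
    return [f + gain * b for f, b in zip(foreground, background)]
```

For example, `mix_at_snr(fg, bg, -6)` makes the background talker 6 dB more intense than the foreground talker, which is the most difficult condition tested.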

Data analysis.

Three reference channels were used to measure and cancel the environmental magnetic field by using time shift-principal component analysis (de Cheveigné and Simon 2007). MEG data were analyzed offline using MATLAB. The 157 raw MEG data channel responses were first filtered between 2 and 8 Hz, with an order 700 windowed (Hamming) linear-phase finite impulse response filter, then decomposed using n spatial filters into n signal components (where n ≤ 157) using the denoising source separation (DSS) algorithm (de Cheveigné and Simon 2008; Särelä and Valpola 2005). The first six DSS component filters were then used for the analysis. The filtering range of 2–8 Hz was chosen based on previous results showing the absence of intertrial coherence above 8 Hz (Ding and Simon 2013) and the importance of the integrity of the modulation spectrum above 1 Hz to understand spoken language (Greenberg and Takayuki 2004). The signal components used for analysis were then re-extracted from the raw data for each trial, spatially filtered using the six DSS filters just constructed, band-pass filtered between 1 and 8 Hz (Ding and Simon 2012) with a second-order Butterworth filter, and averaged over trials. Reconstruction of the envelope was performed using a linear reconstruction matrix estimated via the Boosting algorithm (David et al. 2007; Ding et al. 2014; Ding and Simon 2013). Success of the reconstruction is measured by the linear correlation between the reconstructed and actual speech envelope. The reconstructed envelope was obtained from the unmixed speech of the single speaker to which the participant was instructed to attend, not from the acoustic stimulus mixture. The envelope was computed as the 1- to 8-Hz band pass-filtered magnitude of the analytic signal. Data were analyzed using three different time windows for this reconstruction model: 500, 350, and 150 ms. 
The choice to narrow the integration window down to 150 ms is based on previous results, showing that the ability to track the speech envelope substantially worsens as the window decreases down to 100 ms (Ding and Simon 2013). These values refer to the time shift imposed on our data with respect to the onset of the speech and to the corresponding integration window of our reconstruction matrix. Specifically, if processing time for younger and older adults is the same, then their performance should follow the same pattern as the integration window changes. Conversely, if older adults require more time to process the information because of the possible presence of temporal processing deficits, then the narrowing of the integration window should negatively affect their performance more than for younger adults. The noise floor was calculated by using the neural response recorded from each condition to reconstruct the speech envelope of a different stimulus than was used during this response.
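
The role of the integration window can be made concrete with a small sketch of how a linear decoder is applied and scored. It assumes that a decoder (one weight per component and lag) has already been estimated; the boosting estimation itself is not shown. The sampling rate, function names, and data layout are hypothetical. The noise floor here is simplified to scoring the same reconstruction against the envelope of a mismatched stimulus, which is only a stand-in for the procedure described above.

```python
import math


def pearson_r(x, y):
    """Pearson linear correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def lags_for_window(window_ms, fs_hz):
    """Number of decoder lags that span an integration window."""
    return max(1, round(window_ms * fs_hz / 1000))


def reconstruct_envelope(components, decoder):
    """Apply a linear decoder to multi-component neural responses.

    components[k][t] is the response of component k at sample t, and
    decoder[k][tau] is the weight for that component at lag tau, so that
    estimate[t] = sum over k and tau of components[k][t + tau] * decoder[k][tau].
    """
    n_lags = len(decoder[0])
    n_out = len(components[0]) - n_lags + 1
    return [
        sum(comp[t + tau] * weights[tau]
            for comp, weights in zip(components, decoder)
            for tau in range(n_lags))
        for t in range(n_out)
    ]


def accuracy_and_noise_floor(components, decoder, attended_env, mismatched_env):
    """Correlate the reconstruction with the attended and a mismatched envelope."""
    estimate = reconstruct_envelope(components, decoder)
    n = len(estimate)
    return (pearson_r(estimate, attended_env[:n]),
            pearson_r(estimate, mismatched_env[:n]))
```

Shortening the window from 500 to 150 ms reduces the number of lags returned by `lags_for_window`, so the decoder can draw on less of the response that follows each moment of the speech envelope.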

Statistical Analyses

All statistical analyses were conducted in SPSS version 21.0 (IBM, Armonk, NY). Fisher's z transformation was applied to all of the correlation values calculated for the midbrain and cortical analyses before any statistical analysis. Split-plot ANOVAs were used to test for age-group × condition interactions for the RMS values of the FFR in the time domain, for the stimulus-to-response correlations of the FFR, and for correlation values calculated for the cortical data. The Greenhouse-Geisser correction was applied when Mauchly's test indicated a violation of sphericity. Paired t-tests were used for within-group analyses of the correlation values and amplitudes for the cortical data, whereas one-way ANOVAs were used to analyze the RMS amplitude values of the FFR, stimulus-to-response correlation of the FFR, FFT of the FFR, quiet-to-noise correlations, and the correlation values for the cortical data. The nonparametric Mann-Whitney U-test was used in place of the one-way ANOVA when Levene's test indicated unequal variances. Two-tailed Spearman's rank correlation (ρ) was used to evaluate the relationships among speech-in-noise scores, midbrain, cortical parameters, and pure-tone average. The false discovery rate procedure (Benjamini and Hochberg 1995) was applied to control for multiple comparisons where appropriate.
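
Two of these steps are simple enough to sketch directly. The Python below shows Fisher's z transformation and the Benjamini-Hochberg step-up procedure. It is an illustration only, since the analyses were run in SPSS; the function names and the default rate `q = 0.05` are assumptions.

```python
import math


def fisher_z(r):
    """Fisher's z transformation of a correlation coefficient (-1 < r < 1)."""
    return math.atanh(r)


def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans marking which hypotheses are rejected.

    Step-up rule: find the largest rank k such that the k-th smallest
    P value is <= k * q / m, then reject every hypothesis whose rank is
    k or lower.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    max_rank = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank * q / m:
            max_rank = rank
    rejected = [False] * m
    for rank, i in enumerate(order, start=1):
        if rank <= max_rank:
            rejected[i] = True
    return rejected
```

Because the rule is step-up, a small P value can be rejected even if it exceeds its own rank threshold, provided that a larger P value further down the ordered list meets its threshold.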

RESULTS

Speech Intelligibility (QuickSIN)

Younger adults (means ± SD = −0.57 ± 1.13 dB SNR loss) scored significantly better [F(1,30) = 10.613, P = 0.003] than older adults (means ± SD = 0.8 ± 1.25 dB SNR loss) on the QuickSIN test, suggesting that older adults' performance in noise may decline compared with younger adults, even when audiometric thresholds are clinically normal.

Midbrain (EEG)

Amplitude analysis.

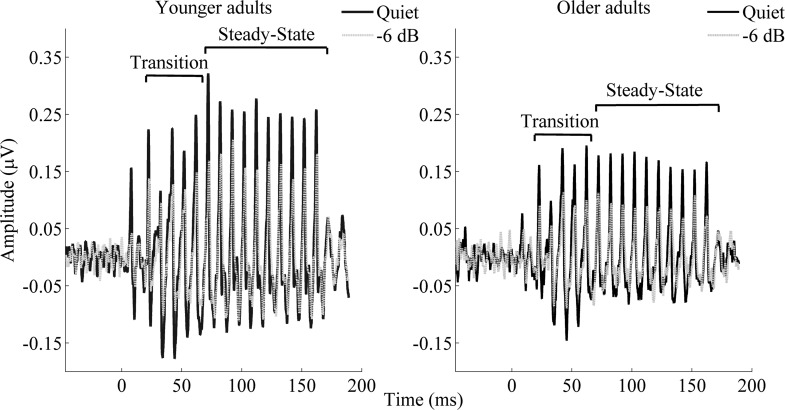

Figure 2 shows the grand average of FFRs of the stimulus envelope of younger and older adults in quiet and in one of the four noise conditions tested (−6 dB). Figure 3A displays the RMS values for each condition tested in younger and older adults in the transition and steady-state regions. In both regions, the RMS values of the responses in noise of younger and older adults are significantly higher than the RMS calculated for the noise floor (all, P < 0.007).

Fig. 2.

Grand average (n = 17 for younger and n = 15 for older adults) of the response to the stimulus envelope for younger (left) and older (right) adults. Dark lines show responses in quiet, and light lines show responses in noise (−6 dB). Statistical analyses carried out on individual subjects show that in both the transition and steady-state regions, noise resulted in a significant decrease (P < 0.01 and < 0.05 for the transition and steady-state region, respectively) in the amplitude response for both younger and older adults at all of the conditions tested. Higher RMS values were also found in younger adults in both regions (P < 0.05).

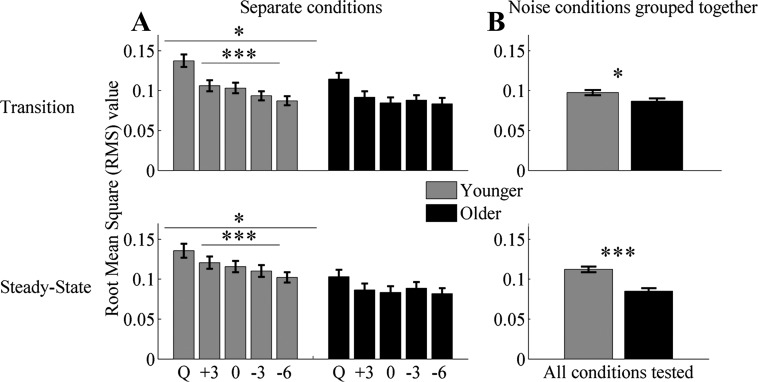

Fig. 3.

RMS values ± 1 SE of the envelope for the conditions (Q = Quiet, +3 = +3 dB, 0 = 0 dB, −3 = −3 dB, and −6 = −6 dB) tested in younger (gray bars) and older (black bars) adults. A: average RMS for each single condition. B: average RMS collapsed across all noise conditions tested. Younger adults had significantly higher RMS values in quiet in both the transition and the steady-state regions. An RMS × group-interaction effect was noted in the transition at −3 and −6 dB but not in the steady-state region. Repeated-measures ANOVA, applied to the 4 noise conditions, shows significant differences in younger adults in both the transition and steady-state regions but not in older adults. Noise minimally affects older adults, likely because their response in quiet is already degraded. *P < 0.05, ***P < 0.001.

Transition region.

A one-way ANOVA showed that younger adults have significantly higher RMS values in quiet [F(1,30) = 4.255, P = 0.048]. When all of the noise conditions were collapsed together, one-way ANOVA showed significant differences between younger and older adults [F(1,126) = 5.150, P = 0.025; Fig. 3B]. The follow-up results of paired t-tests suggest that noise significantly decreases response amplitude in both younger and older adults in all of the noise conditions tested (all, P < 0.01). Repeated-measures ANOVA showed a condition × age interaction between quiet and noise at −3 dB [F(1,30) = 6.264, P = 0.018] and −6 dB [F(1,30) = 6.696, P = 0.015] but not at the other conditions tested [F(1,30) = 1.125, P = 0.297 and F(1,30) = 0.333, P = 0.568 for +3 and 0 dB, respectively]. Repeated-measures ANOVA showed significant differences across noise conditions in younger [F(3,48) = 13.384, P < 0.001] but not in older [F(3,48) = 0.885, P = 0.457] adults (Fig. 3A).

Steady-state region.

A one-way ANOVA showed that younger adults have significantly higher RMS values than older adults in quiet [F(1,30) = 6.877, P = 0.014]. The follow-up results of paired t-tests suggest that noise significantly decreases response amplitude in both younger and older adults in all of the noise conditions tested (all, P < 0.05). Repeated-measures ANOVA showed no condition × age interaction between quiet and noise at any of the conditions tested [F(1,30) = 0.072, P = 0.791; F(1,30) = 0.000, P = 0.986; F(1,30) = 2.574, P = 0.119; and F(1,30) = 3.197, P = 0.084 for +3; 0; −3; and −6 dB, respectively]. Repeated-measures ANOVA showed significant differences across noise conditions in younger [F(3,48) = 19.847, P < 0.001] but not in older [F(3,48) = 0.874, P = 0.462] adults (Fig. 3A). When all of the noise conditions were collapsed together, a follow-up one-way ANOVA showed significant differences between younger and older adults [F(1,126) = 27.364, P < 0.001; Fig. 3B].

Correlation analysis.

To analyze the robustness of the response in noise, we linearly correlated (Pearson correlation) the average response obtained in quiet with that recorded in noise for both the transition and steady-state regions for each subject. Repeated-measures ANOVA showed no significant noise condition × age interaction in either the transition [F(3,90) = 1.129, P = 0.342] or the steady-state [F(3,90) = 1.015, P = 0.390] region. When all of the noise conditions were grouped together, a follow-up Mann-Whitney U-test showed significantly higher Fisher-transformed r values in younger adults in the steady-state [U(128) = 1,272, Z = −3.667, P < 0.001] but not in the transition [U(128) = 1,675, Z = −1.743, P = 0.081] region.

Stimulus-to-response correlation.

Repeated-measures ANOVA showed a significant noise condition × age interaction between quiet and noise at all of the noise conditions tested [F(1,30) = 5.915, P = 0.021; F(1,30) = 4.302, P = 0.047; F(1,30) = 5.786, P = 0.023; and F(1,30) = 8.318, P = 0.007 for +3; 0; −3; and −6 dB, respectively]. A one-way ANOVA showed that the younger adults' correlation values were significantly higher than those of older adults in all of the noise conditions tested [F(1,30) = 7.768, P = 0.009; F(1,30) = 5.535, P = 0.025; F(1,30) = 5.166, P = 0.030; and F(1,30) = 8.838, P = 0.006 for +3; 0; −3; and −6 dB, respectively] but not in quiet [U(62) = 114, Z = −0.510, P = 0.628].

Frequency analysis (envelope).

Transition region.

A one-way ANOVA showed no significant amplitude differences in the transition region at F0 and at the first two harmonics in all of the conditions tested (all, P > adjusted threshold), except the second harmonic at +3 dB [F(1,30) = 9.046, P = 0.005]. Repeated-measures ANOVA showed significant differences across noise conditions only in younger adults and only at the second harmonic [F(3,48) = 6.141, P = 0.007].

Steady-state region.

A one-way ANOVA showed that younger adults' F0 amplitude is significantly higher than that of older adults in all of the noise conditions tested [F(1,30) = 9.287, P = 0.005; F(1,30) = 9.598, P = 0.004; F(1,30) = 6.518, P = 0.016; and F(1,30) = 8.901, P = 0.006 for +3; 0; −3; and −6 dB, respectively] but not in quiet [F(1,30) = 3.390, P = 0.076]. No significant differences were found at the second harmonic (all, P > 0.05), whereas the amplitude of the third harmonic is significantly higher in younger adults than in older adults in all of the noise conditions tested [F(1,30) = 12.744, P = 0.001; F(1,30) = 9.259, P = 0.005; F(1,30) = 4.318, P = 0.046; and F(1,30) = 7.517, P = 0.010 for +3; 0; −3; and −6 dB, respectively] and in quiet [F(1,30) = 26.771, P < 0.001]. Repeated-measures ANOVA showed significant amplitude differences across noise conditions only in younger adults at the F0 [F(3,48) = 3.987, P = 0.013] and at the first [F(3,48) = 3.065, P = 0.037] and second [F(3,48) = 8.421, P < 0.001] harmonics.

Frequency analysis (TFS).

Transition region.

A one-way ANOVA showed no significant group differences in amplitude at 400 or 700 Hz at any of the conditions tested (all, P > 0.05). Repeated-measures ANOVA showed significant differences across noise conditions only in younger adults and only at 400 Hz [F(3,45) = 4.406, P = 0.008] but not for 700 Hz in the younger adults or for either frequency in the older adults (all, P > 0.05).

Steady-state region.

A one-way ANOVA showed that the 400-Hz amplitude in younger was significantly higher than that of older adults but only in quiet [F(1,29) = 12.908, P = 0.001] and at 0 dB SNR [F(1,29) = 9.654, P = 0.004]. No significant differences were found at 700 Hz at any SNR (all, P > 0.05) or in quiet (P > 0.05, Mann-Whitney test). Repeated-measures ANOVA showed significant amplitude differences across noise conditions in the younger adults at 400 Hz [F(3,45) = 4.802, P = 0.006] but not for 700 Hz in the younger adults or for either frequency in the older adults (all, P > 0.05).

Cortex (MEG)

Reconstruction of the attended speech envelope.

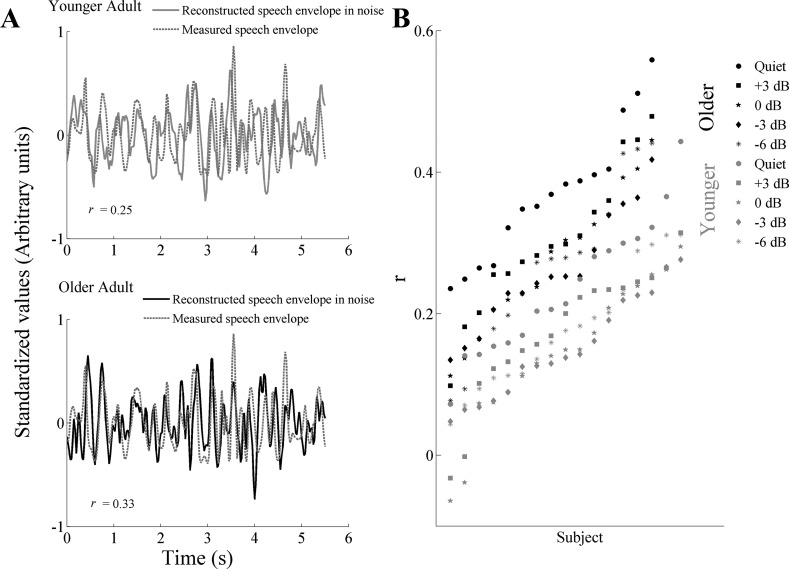

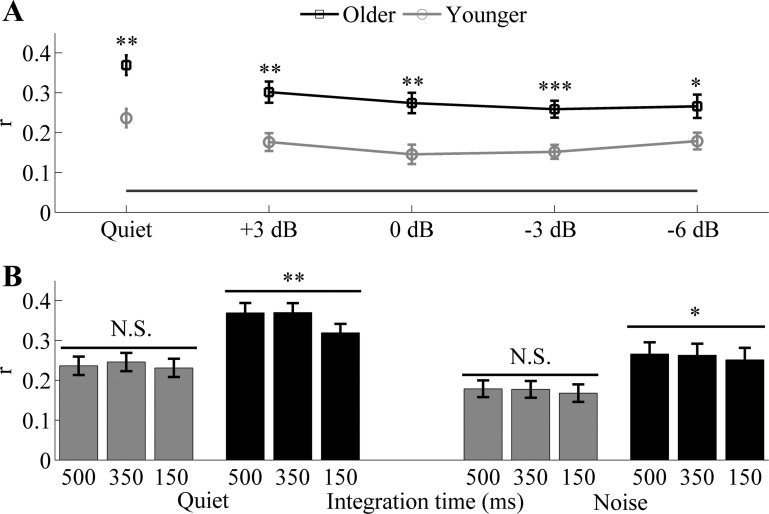

The ability to reconstruct the low-frequency speech envelope from cortical activity is a measure of the fidelity of the neural representation of that speech envelope (Ding and Simon 2012). Figure 4A shows an example of reconstruction of the speech envelope of the foreground in noise (−6 dB) from a representative younger and older adult. Figure 4B plots the r values for each participant, at each tested condition, in ascending order. Figure 5A displays the grand average ± SE of the reconstruction accuracy for younger and older adults for all of the conditions tested. One-way ANOVA showed significantly higher correlation values in older adults compared with younger adults in quiet [F(1,30) = 14.923, P = 0.001] and in all of the noise conditions tested [Fig. 5A; F(1,30) = 13.315, P = 0.001; F(1,30) = 13.374, P = 0.001; F(1,30) = 15.331, P < 0.001; and F(1,30) = 6.195, P = 0.019 for +3; 0; −3; and −6 dB, respectively]. All of the reconstruction values were significantly higher than the noise floor (all, P < 0.01). Since the difference between older and younger adults is minimized at −6 dB, this condition was used to analyze the effect of the integration window on the fidelity of the reconstruction of the speech envelope. Results from a split-plot ANOVA, applied to the three integration windows used (Fig. 5B) for the analysis, revealed a reconstruction window × age-group interaction in quiet [F(2,60) = 9.332, P = 0.004] but not in noise [F(2,60) = 0.105, P = 0.802]. Repeated-measures ANOVA, applied to 500, 350, and 150 ms integration windows, shows significant differences in older adults in both quiet [F(2,32) = 14.954, P = 0.001] and noise [F(2,32) = 5.048, P = 0.037] but not in younger adults [F(2,32) = 4.213, P = 0.048 and F(2,32) = 1.195, P = 0.302 in quiet and noise, respectively]. A follow-up paired t-test of the foreground reconstructed in quiet and noise at 500 vs. 350 ms and 500 vs. 150 ms showed that the reconstruction accuracy of younger adults is not significantly affected by the integration windows in noise [t(16) = 0.366, P = 0.719 and t(16) = 1.162, P = 0.262 for 500 vs. 350 ms and 500 vs. 150 ms, respectively], whereas in quiet, the 500-ms integration window had significantly lower values than 350 ms but not than 150 ms [t(16) = −3.722, P = 0.002 and t(16) = 0.973, P = 0.345 for 500 vs. 350 ms and 500 vs. 150 ms, respectively]. Conversely, older adults' ability to track the speech envelope of the foreground is significantly reduced at 350 and 150 ms in both quiet [t(14) = −0.248, P = 0.807 and t(14) = 3.779, P = 0.002 for 500 vs. 350 ms and 500 vs. 150 ms, respectively] and noise [t(14) = 2.064, P = 0.058 and t(14) = 2.512, P = 0.0248 for 500 vs. 350 ms and 500 vs. 150 ms, respectively].

Fig. 4.

A: example of the reconstruction of the speech envelope of the foreground for a representative younger (top) and older (bottom) adult in noise (−6 dB SNR). The waveforms have been standardized for visualization purposes. B: scatter plots of r values for each participant at each condition tested, plotted in ascending order.

Fig. 5.

Reconstruction accuracy ± 1 SE of the speech envelope of the foreground for younger and older adults. A: results in quiet and in all of the noise conditions tested. The black horizontal line shows the noise floor. Older adults' reconstruction accuracy is significantly higher in quiet (P = 0.001). However, as a competing talker is added to the task, the differences between the 2 age groups are reduced. B: reconstruction accuracy in quiet and at −6 dB for the 3 integration windows tested: 500, 350, and 150 ms. Significant differences across the 3 integration windows were found only in older adults in both quiet (P = 0.001) and noise (P < 0.05). *P < 0.05, **P < 0.01, ***P < 0.001.

Reconstruction of the unattended speech envelope.

Repeated-measures ANOVA showed a significant correlation × age interaction across the four noise conditions tested [F(3,90) = 2.909, P = 0.039]. A one-way ANOVA showed significantly higher reconstruction accuracy in older adults at all of the noise conditions tested except +3 dB [F(1,30) = 3.487, P = 0.072; F(1,30) = 4.99, P = 0.033; F(1,30) = 7.523, P = 0.01; and F(1,30) = 19.251, P < 0.001 for +3; 0; −3; and −6 dB, respectively]. All of the reconstruction values were significantly higher than the noise floor (all, P < 0.01).
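For readers who want to trace where the F(1,30) statistics come from, the following is a minimal sketch of a one-way ANOVA F computation for two groups. The group sizes (17 younger, 15 older) are those implied by the reported degrees of freedom; the r values are simulated and purely hypothetical, and the function name `one_way_anova` is an assumption. The P value is omitted because it requires the F distribution, which the Python standard library does not provide.

```python
import random


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    ss_within = sum(sum((v - m) ** 2 for v in g)
                    for g, m in zip(groups, means))
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical reconstruction accuracies (not the study's data).
rng = random.Random(1)
younger_r = [rng.gauss(0.10, 0.03) for _ in range(17)]
older_r = [rng.gauss(0.15, 0.03) for _ in range(15)]

f_stat, df_b, df_w = one_way_anova(younger_r, older_r)
print(f"F({df_b},{df_w}) = {f_stat:.3f}")
```

With only two groups, this F statistic equals the square of the independent-samples t statistic computed with pooled variance.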

Relationships among Behavioral, Midbrain, and Cortical Data

Two-tailed Spearman's rank correlation coefficient was used to study the correlations among the following measurements: speech-in-noise score, cortical decoding accuracy in quiet and in noise with an integration window of 500 ms, and the quiet-to-noise correlation value in the steady-state region of midbrain responses. No significant correlations were found in either younger or older adults in any of the relationships tested.
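As an illustration of the statistic used here, the sketch below computes Spearman's rank correlation with average ranks for ties, plus a two-tailed Monte Carlo permutation P value. The permutation approach is an assumption made for this example; the text does not state how P values were obtained. The function names and the example data are hypothetical.

```python
import math
import random


def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the rank-transformed data."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    return sxy / math.sqrt(sxx * syy)


def permutation_p(x, y, n_perm=2000, seed=0):
    """Two-tailed Monte Carlo permutation P value for Spearman's rho."""
    rng = random.Random(seed)
    observed = abs(spearman_rho(x, y))
    y_perm = list(y)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(y_perm)
        if abs(spearman_rho(x, y_perm)) >= observed - 1e-12:
            hits += 1
    return (hits + 1) / (n_perm + 1)


# Hypothetical paired measurements (not the study's data).
behavioral = [1, 2, 3, 4, 5]
cortical = [5, 6, 7, 8, 7]
rho = spearman_rho(behavioral, cortical)
print(f"rho = {rho:.4f}")
```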

DISCUSSION

The results of this study provide support for most, but not all, of the initial hypotheses. Behavioral data showed that older adults do have poorer speech understanding in noise than younger adults, despite their normal audiometric hearing thresholds. In midbrain, noise suppresses the response in younger adults to a greater extent than in older adults, whereas the fidelity of the reconstruction of speech in cortex is higher in older than in younger adults. Contrary to what was initially hypothesized, no significant associations were found either between behavioral and electrophysiological data or between midbrain and cortex.

Midbrain (EEG)

Amplitude response.

The greater amplitude decrease in noise in younger adults compared with older adults was unexpected. However, an RMS × age-group interaction was only significant in the transition region and may be explained by reduced audibility in the high frequencies in older adults, given that the transition region is characterized by the presence of a high-frequency burst. These results are consistent with an earlier study that suggested that older adults' high-frequency hearing loss might disrupt their ability to encode the high-frequency components of a syllable (Presacco et al. 2015).

Not surprisingly, in younger adults, the loss of amplitude between quiet and noise conditions was also larger in the steady-state region, although no significant RMS × age interaction was observed. The lack of significant differences observed in the steady-state region is consistent with results reported by Parthasarathy et al. (2010), where amplitude modulation following responses differed in younger and older rats only under specific SNR conditions. Specifically, they observed that at the highest SNR, there were no significant differences at any of the modulation frequencies tested, but with a 10-dB loss of SNR, the amplitude modulation following responses of younger rats tended to decrease substantially, whereas older rats' responses showed negligible changes. This is consistent with results showing significant differences across noise conditions only in younger adults. Additionally, previous studies have shown that hearing loss may lead to an exaggerated representation of the envelope in midbrain (Anderson et al. 2013; Henry et al. 2014). Despite having clinically normal, audiometric thresholds up to 4 kHz, most of our older adults have a mild, sensorineural hearing loss at higher frequencies (6 and 8 kHz). This mild hearing loss might have potentially contributed to generating an amplitude response big enough to reduce the RMS × age interaction in the steady-state region. Interestingly, the frequency domain analysis shows significant differences across noise conditions only in younger adults in both the transition (second harmonic in the envelope and 400 Hz in the TFS) and in the steady-state regions (fundamental and both harmonics in the envelope and 400 Hz in the TFS), consistent with observations of Parthasarathy et al. (2010).
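The amplitude comparisons discussed above rest on RMS values computed over fixed time regions of the response. The following is a minimal sketch of that computation on a simulated waveform. The sampling rate, the region boundaries (a 50-ms prestimulus interval and a 68 to 170 ms steady-state region), and the response amplitudes are hypothetical placeholders chosen for illustration, not values taken from this section.

```python
import math
import random


def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))


def region_rms(waveform, fs_hz, start_ms, end_ms, t0_ms=0.0):
    """RMS over [start_ms, end_ms) of a waveform whose first sample is at t0_ms."""
    start = round((start_ms - t0_ms) * fs_hz / 1000)
    end = round((end_ms - t0_ms) * fs_hz / 1000)
    return rms(waveform[start:end])


def simulate_response(amplitude, fs_hz=2000, t0_ms=-50.0, t_end_ms=170.0, seed=0):
    """Toy response: 100-Hz sinusoid after stimulus onset plus background noise."""
    rng = random.Random(seed)
    n = round((t_end_ms - t0_ms) * fs_hz / 1000)
    wave = []
    for i in range(n):
        t_ms = t0_ms + i * 1000 / fs_hz
        evoked = amplitude * math.sin(2 * math.pi * 100 * t_ms / 1000) if t_ms >= 0 else 0.0
        wave.append(evoked + rng.gauss(0, 0.05))
    return wave


fs = 2000
quiet = simulate_response(amplitude=1.0, seed=0)
noise = simulate_response(amplitude=0.6, seed=1)

rms_quiet = region_rms(quiet, fs, 68, 170, t0_ms=-50)
rms_noise = region_rms(noise, fs, 68, 170, t0_ms=-50)
rms_pre = region_rms(noise, fs, -50, 0, t0_ms=-50)
print(f"quiet = {rms_quiet:.3f}, noise = {rms_noise:.3f}, prestimulus = {rms_pre:.3f}")
```

Comparing the in-noise RMS with the prestimulus RMS, as in the last two lines, mirrors the noise-floor check described for the midbrain responses.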

Robustness of the envelope to noise.

The correlation analysis supported the initial hypothesis that younger adults' responses should be more robust to noise than those of older adults. Younger adults showed significantly higher correlations when all of the noise conditions were collapsed. The higher robustness of the envelope to noise in younger adults is also confirmed by the results of the stimulus-to-response correlation, which shows that the ability of older adults' responses to follow the stimulus is significantly worse than that of younger adults in noise. These differences between the two age groups may be due to disruption of periodicity in the encoded speech envelope, which has been suggested to cause a decrease in word identification (Pichora-Fuller et al. 2007).
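The two correlation measures mentioned here can be sketched as follows: a quiet-to-noise correlation between two responses, and a stimulus-to-response correlation maximized over a range of lags to allow for neural delay. This is an illustrative sketch on simulated signals. The lag range, sampling rate, delay, and noise levels are hypothetical, and the exact procedure used in the study may differ.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def best_lag_correlation(stimulus, response, max_lag):
    """Return (lag, r) maximizing the stimulus-to-response correlation.

    The response is assumed to trail the stimulus by 0..max_lag samples
    and to have the same length as the stimulus.
    """
    best_lag, best_r = 0, -2.0
    for lag in range(max_lag + 1):
        n = len(stimulus) - lag
        r = pearson_r(stimulus[:n], response[lag:lag + n])
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r


# Simulated stimulus: amplitude-modulated 100-Hz tone (assumption).
fs, n_samples, delay = 2000, 1000, 7
stimulus = [math.sin(2 * math.pi * 100 * i / fs)
            * (1 + 0.5 * math.sin(2 * math.pi * 10 * i / fs))
            for i in range(n_samples)]


def simulate(noise_sd, seed):
    """Delayed copy of the stimulus plus Gaussian noise."""
    rng = random.Random(seed)
    return [(stimulus[i - delay] if i >= delay else 0.0) + rng.gauss(0, noise_sd)
            for i in range(n_samples)]


resp_quiet = simulate(noise_sd=0.2, seed=0)
resp_noise = simulate(noise_sd=1.0, seed=1)

lag_quiet, r_quiet = best_lag_correlation(stimulus, resp_quiet, max_lag=15)
lag_noise, r_noise = best_lag_correlation(stimulus, resp_noise, max_lag=15)
quiet_to_noise_r = pearson_r(resp_quiet, resp_noise)
print(f"quiet: lag = {lag_quiet}, r = {r_quiet:.3f}; noise: r = {r_noise:.3f}")
print(f"quiet-to-noise r = {quiet_to_noise_r:.3f}")
```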

Cortex (MEG)

Reconstruction of the speech envelope.

The results of the reconstruction of the speech envelope show that older adults had higher correlation values both in quiet and in noise. An enhanced reconstruction in older adults, both in quiet and in noise, is consistent with studies showing an exaggerated representation of cortical responses in older adults, both with and without hearing loss. Specifically, Alain et al. (2014), Lister et al. (2011), and Soros et al. (2009) report abnormally higher amplitude for the P1 and N1 peaks in normal-hearing older adults compared with normal-hearing younger adults, in agreement with results from previous studies that showed that aging might alter inhibitory neural mechanisms in the cortex (de Villers-Sidani et al. 2010; Hughes et al. 2010; Juarez-Salinas et al. 2010; Overton and Recanzone 2016). The P1 and N1 peaks reflect different auditory mechanisms. Specifically, P1, occurring 50 ms after the stimulus onset, originates in Heschl's gyrus and can be modulated by stimulus rate, intensity, and modulation depth (Ross et al. 2000). Conversely, N1, occurring 100 ms after stimulus onset, originates in the planum temporale and has been shown to be modulated by attention (Okamoto et al. 2011). Therefore, this exaggerated response might be a reflection of changes in the way that the acoustical stimuli are processed and as a consequence, in the level of attention required to process them. Interestingly, Chambers et al. (2016) recently showed that recovery from profound cochlear denervation in rats leads to cortical spike responses higher than the baseline recorded before inducing auditory neuropathy; this finding reinforces the possibility that auditory neuropathy could play a critical role in the over-representation of an auditory stimulus. It is also possible that peripheral hearing loss contributes to problems in the speech-encoding process, as several studies have shown that this cortical neural enhancement is exacerbated by hearing loss (Alain et al. 2014; Tremblay et al. 2003). However, no significant correlation was found (two-tailed Spearman's rank correlation) between the pure-tone average for the frequencies between 2 and 8 kHz and cortical reconstruction (all, P > 0.05). The above-mentioned, exaggerated cortical response, which can take the form of both better cortical reconstruction and higher peak amplitude (P1 and N1), is perhaps counterintuitive and in disagreement with the concept of “stronger is better,” as observed in the midbrain. However, if we assume that a decrease of inhibition leads to larger neural currents, then we can hypothesize that this neural enhancement is mainly the result of imbalance between excitatory and inhibitory mechanisms.

As higher cognitive processes affect the cortical representation of the speech signal, the higher reconstruction in older adults may be related to an inefficient use of cognitive resources and an associated decrease in cortical network connectivity reported in older adults (Peelle et al. 2010). Decreased cortical network connectivity would result in neighboring cortical areas processing the same stimulus redundantly instead of cooperatively. Such an overuse of neural resources would lead to the over-representation observed here. Decreased connectivity may translate to using significantly more energy to accomplish a task that younger adults can complete with much less effort. This explanation would be in agreement with several studies showing that overuse of cognitive resources leads to poorer performance on a secondary task (Anderson Gosselin and Gagné 2011; Tun et al. 2009; Ward et al. 2016).

Importantly, the addition of a competing talker caused a substantial drop of decoding accuracy in older adults, who required a much longer integration time than younger adults. This finding is consistent with several psychoacoustic (Fitzgibbons and Gordon-Salant 2001; Gordon-Salant et al. 2006) and electrophysiological (Alain et al. 2012; Lister et al. 2011) studies, demonstrating that older adults' responses are affected to a greater degree than those of younger adults when temporal parameters are varied. Specifically, older adults required more time to process specific temporal acoustic cues, such as voice-onset time, vowel duration, silence duration, and transition duration (Gordon-Salant et al. 2008). The degradation of the cortical response from quiet to noise observed in both age groups is also consistent with previous results showing that the evoked response seen in quiet is affected by the presence of noise (Billings et al. 2015). Specifically, cortical response amplitude decreases as SNR decreases in both younger and older adults, consistent with the current findings showing a reduction in reconstruction accuracy within each group. Interestingly, even with an integration window as narrow as 150 ms, older adults still show evidence of enhanced reconstruction of the speech envelope. These results contribute to understanding the significant group differences observed in the reconstruction of the background noise. Older adults' difficulty in understanding speech in noise may also partially arise from the reduced ability to suppress unattended stimuli, as suggested by an over-representation also present in the reconstruction of the background talker. Note also that even when low, the r values in this study are unlikely to be tied to noise-floor effects: reconstruction values in both age groups are well above the noise floor and are consistent with previously published data (Ding and Simon 2012).

Effect of Hearing Threshold Differences and Cognitive Decline on Cortical Results

The possibility that the over-representation of the response of older adults in quiet might be due to significant differences in the hearing thresholds cannot be ruled out. In fact, even though the older adults that we tested had clinically normal hearing, all of their thresholds were significantly higher than younger adults (P < 0.05), a typical occurrence for the majority of aging studies. Cochlear synaptopathy has also been suggested to result from aging and to be a possible contributor to difficulties in understanding speech-in-noise (Sergeyenko et al. 2013). Furthermore, several studies have also shown the existence of age-related cognitive declines (Anderson Gosselin and Gagné 2011; Pichora-Fuller et al. 1995; Surprenant 2007; Tun et al. 2009) that may play an important role in compromising attentional resources believed to be critical for proper representation of the auditory object (Shamma et al. 2011).

Relationships Among Behavioral, Midbrain, and Cortical Data

The absence of correlations among behavioral and electrophysiological measurements suggests the possibility that our behavioral measurements might not completely account for the presence of temporal processing deficits in the central auditory system. Caution should be used when interpreting the results because of two important factors. 1) Behavioral data were collected with four-talker babble as the background noise, whereas cortical and subcortical data were recorded using a single-competing talker. A single-competing talker may draw the subjects' attention away from the target to a greater extent than would four-talker babble, given that multiple simultaneous talkers generate speech without intelligible meaning (little informational masking) (Larsby et al. 2008; Tun et al. 2002). 2) Several studies have also shown that the performance in a task varies depending on different features of the masker (e.g., spectral differences and SNR level) (Calandruccio et al. 2010; Larsby et al. 2008). The speech materials used for the electrophysiological recording were not equated for spectral differences with the speech material used for the speech-in-noise test.

No significant association was found between midbrain and cortical results. Even though previous results showed relationships between weak speech encoding in the midbrain (FFR) and an over-representation of the cortical response (Bidelman et al. 2014), a more recent animal study (Chambers et al. 2016) suggests that the absence of auditory brain stem response wave I does not necessarily lead to an absence of cortical spike response, suggesting compensatory central gain increases that could help restore the representation of the auditory object at the cortical level. This finding may also explain the lack of association between midbrain and cortex findings. It could also be argued that the absence of correlation between midbrain and cortex could be linked to the different stimuli used for the EEG (speech syllable /da/) and MEG (1 min of speech) tasks. Additionally, midbrain and cortical responses were filtered in different frequency ranges to reflect the frequency differences in the responses emerging from different parts of the auditory system. The use of different stimuli was necessitated by the larger number of trials required to obtain clear responses from midbrain. Whereas for the cortical analysis, three runs were sufficient to obtain a clear response above the noise floor, in the midbrain, a minimum of 2,000 runs was needed, making the use of stimuli longer than 170 ms not feasible for this long experiment. Finally, subjects were passively listening to the auditory stimuli in the EEG experiment, whereas in the MEG, subjects were actively engaged in listening to the target speaker.

Concluding Remarks

The results of our studies add compelling evidence to the notion that age-related temporal processing deficits are a key factor in explaining speech comprehension problems experienced by older adults, particularly in noisy environments. Auditory midbrain responses revealed an age-related failure to encode speech syllables in quiet, which reduces the ability to cope with the presence of a background talker. Whereas younger adults adapt to the presence of noise and changes in its loudness, older adults' midbrain responses seem to be less affected by different SNRs, suggesting a failure to encode properly both the target and the irrelevant speech. This result is likely not due to the noise-floor effect, as all of the RMS values calculated in noise were significantly higher than the RMS of the prestimulus. Our study also reveals an over-representation of the cortical response, consistent with previous studies (Alain et al. 2014; Lister et al. 2011; Soros et al. 2009); this neural enhancement is reduced with the addition of a competing talker, suggesting that larger cortical responses are not beneficial and might, in fact, represent a failure of the brain to process speech properly. Critically, we were unable to find any significant correlations between midbrain and cortex measures. We believe this result brings additional support to recent findings that suggest that cortical plasticity may partially restore temporal processing deficits at lower levels of the auditory system (Chambers et al. 2016), although we cannot exclude the possibility that a lack of correlation may reflect differences in the stimuli used to elicit the midbrain and cortical responses.

This apparent lack of relationship between midbrain and cortex further highlights the importance of simultaneously investigating different areas of the auditory system to better understand the mechanisms underlying age-related degradation of speech representation.

GRANTS

Funding for this study was provided by the University of Maryland College Park (UMCP) Department of Hearing and Speech Sciences, UMCP ADVANCE Program for Inclusive Excellence (NSF HRD1008117), and National Institute on Deafness and Other Communication Disorders (Grants R01DC008342, R01DC014085, and T32DC-00046).

DISCLOSURES

The authors declare no competing financial interests.

AUTHOR CONTRIBUTIONS

A.P., J.Z.S., and S.A. conception and design of research; A.P. performed experiments; A.P. analyzed data; A.P., J.Z.S., and S.A. interpreted results of experiments; A.P. and S.A. prepared figures; A.P., J.Z.S., and S.A. drafted manuscript; A.P., J.Z.S., and S.A. edited and revised manuscript; A.P., J.Z.S., and S.A. approved final version of manuscript.

ACKNOWLEDGMENTS

The authors are grateful to Natalia Lapinskaya for excellent technical support.

REFERENCES

- Aiken SJ, Picton TW. Envelope and spectral frequency-following responses to vowel sounds. Hear Res 245: 35–47, 2008.

- Alain C, McDonald K, Van Roon P. Effects of age and background noise on processing a mistuned harmonic in an otherwise periodic complex sound. Hear Res 283: 126–135, 2012.

- Alain C, Roye A, Salloum C. Effects of age-related hearing loss and background noise on neuromagnetic activity from auditory cortex. Front Syst Neurosci 8: 8, 2014.

- Anderson S, Parbery-Clark A, White-Schwoch T, Drehobl S, Kraus N. Effects of hearing loss on the subcortical representation of speech cues. J Acoust Soc Am 133: 3030–3038, 2013.

- Anderson S, Parbery-Clark A, White-Schwoch T, Kraus N. Aging affects neural precision of speech encoding. J Neurosci 32: 14156–14164, 2012.

- Anderson Gosselin P, Gagné JP. Older adults expend more listening effort than young adults recognizing speech in noise. J Speech Lang Hear Res 54: 944–958, 2011.

- Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Statist Soc B 57: 289–300, 1995.

- Bidelman GM, Krishnan A. Effects of reverberation on brainstem representation of speech in musicians and non-musicians. Brain Res 1355: 112–125, 2010.

- Bidelman GM, Villafuerte JW, Moreno S, Alain C. Age-related changes in the subcortical-cortical encoding and categorical perception of speech. Neurobiol Aging 35: 2526–2540, 2014.

- Billings CJ, Penman TM, McMillan GP, Ellis EM. Electrophysiology and perception of speech in noise in older listeners: effects of hearing impairment and age. Ear Hear 36: 710–722, 2015.

- Calandruccio L, Dhar S, Bradlow AR. Speech-on-speech masking with variable access to the linguistic content of the masker speech. J Acoust Soc Am 128: 860–869, 2010.

- Campbell T, Kerlin JR, Bishop CW, Miller LM. Methods to eliminate stimulus transduction artifact from insert earphones during electroencephalography. Ear Hear 33: 144–150, 2012.

- Carabellese C, Appollonio I, Rozzini R, Bianchetti A, Frisoni GB, Frattola L, Trabucchi M. Sensory impairment and quality of life in a community elderly population. J Am Geriatr Soc 41: 401–407, 1993.

- Chambers AR, Resnik J, Yuan Y, Whitton JP, Edge AS, Liberman MC, Polley DB. Central gain restores auditory processing following near-complete cochlear denervation. Neuron 89: 867–879, 2016.

- Chandrasekaran B, Kraus N. The scalp-recorded brainstem response to speech: neural origins and plasticity. Psychophysiology 47: 236–246, 2010.

- Clinard CG, Tremblay KL. Aging degrades the neural encoding of simple and complex sounds in the human brainstem. J Am Acad Audiol 24: 590–599; quiz 643–594, 2013.

- David SV, Mesgarani N, Shamma SA. Estimating sparse spectro-temporal receptive fields with natural stimuli. Network 18: 191–212, 2007.

- de Cheveigné A, Simon JZ. Denoising based on spatial filtering. J Neurosci Methods 171: 331–339, 2008.

- de Cheveigné A, Simon JZ. Denoising based on time-shift PCA. J Neurosci Methods 165: 297–305, 2007.

- de Villers-Sidani E, Alzghoul L, Zhou X, Simpson KL, Lin RC, Merzenich MM. Recovery of functional and structural age-related changes in the rat primary auditory cortex with operant training. Proc Natl Acad Sci USA 107: 13900–13905, 2010.

- Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J Neurosci Methods 134: 9–21, 2004.

- Ding N, Chatterjee M, Simon JZ. Robust cortical entrainment to the speech envelope relies on the spectro-temporal fine structure. Neuroimage 88: 41–46, 2014.

- Ding N, Simon JZ. Adaptive temporal encoding leads to a background-insensitive cortical representation of speech. J Neurosci 33: 5728–5735, 2013.

- Ding N, Simon JZ. Emergence of neural encoding of auditory objects while listening to competing speakers. Proc Natl Acad Sci USA 109: 11854–11859, 2012.

- Fitzgibbons PJ, Gordon-Salant S. Aging and temporal discrimination in auditory sequences. J Acoust Soc Am 109: 2955–2963, 2001.

- Galbraith GC, Threadgill MR, Hemsley J, Salour K, Songdej N, Ton J, Cheung L. Putative measure of peripheral and brainstem frequency-following in humans. Neurosci Lett 292: 123–127, 2000.

- Gates GA, Cobb JL, Linn RT, Rees T, Wolf PA, D'Agostino RB. Central auditory dysfunction, cognitive dysfunction, and dementia in older people. Arch Otolaryngol Head Neck Surg 122: 161–167, 1996.

- Gordon-Salant S, Yeni-Komshian G, Fitzgibbons P. The role of temporal cues in word identification by younger and older adults: effects of sentence context. J Acoust Soc Am 124: 3249–3260, 2008.

- Gordon-Salant S, Yeni-Komshian GH, Fitzgibbons PJ, Barrett J. Age-related differences in identification and discrimination of temporal cues in speech segments. J Acoust Soc Am 119: 2455–2466, 2006.

- Gorga M, Abbas P, Worthington D. Stimulus calibration in ABR measurements. In: The Auditory Brainstem Response, edited by Jacobsen J. San Diego: College Hill, 1985, p. 49–62.

- Greenberg S, Takayuki A. What are the essential cues for understanding spoken language? IEICE Trans Inf Syst 87: 1059–1070, 2004.

- Henry KS, Kale S, Heinz MG. Noise-induced hearing loss increases the temporal precision of complex envelope coding by auditory-nerve fibers. Front Syst Neurosci 8: 20, 2014.

- Herbst KG, Humphrey C. Hearing impairment and mental state in the elderly living at home. Br Med J 281: 903–905, 1980.

- Hughes LF, Turner JG, Parrish JL, Caspary DM. Processing of broadband stimuli across A1 layers in young and aged rats. Hear Res 264: 79–85, 2010.

- Humes LE, Christopherson L. Speech identification difficulties of hearing-impaired elderly persons: the contributions of auditory processing deficits. J Speech Hear Res 34: 686–693, 1991.

- Humes LE, Roberts L. Speech-recognition difficulties of the hearing-impaired elderly: the contributions of audibility. J Speech Hear Res 33: 726–735, 1990.

- Juarez-Salinas DL, Engle JR, Navarro XO, Recanzone GH. Hierarchical and serial processing in the spatial auditory cortical pathway is degraded by natural aging. J Neurosci 30: 14795–14804, 2010.

- Kay DW, Beamish P, Roth M. Old age mental disorders in Newcastle upon Tyne. I. A study of prevalence. Br J Psychiatry 110: 146–158, 1964.

- Killion MC, Niquette PA, Gudmundsen GI, Revit LJ, Banerjee S. Development of a quick speech-in-noise test for measuring signal-to-noise ratio loss in normal-hearing and hearing-impaired listeners. J Acoust Soc Am 116: 2395–2405, 2004.

- King C, Warrier CM, Hayes E, Kraus N. Deficits in auditory brainstem pathway encoding of speech sounds in children with learning problems. Neurosci Lett 319: 111–115, 2002.

- Klatt DH. Software for a cascade/parallel formant synthesizer. J Acoust Soc Am 67: 971–995, 1980.

- Laforge RG, Spector WD, Sternberg J. The relationship of vision and hearing impairment to one-year mortality and functional decline. J Aging Health 4: 126–148, 1992.

- Larsby B, Hallgren M, Lyxell B. The interference of different background noises on speech processing in elderly hearing impaired subjects. Int J Audiol 47, Suppl 2: S83–S90, 2008.

- Lin FR, Yaffe K, Xia J, Xue QL, Harris TB, Purchase-Helzner E, Satterfield S, Ayonayon HN, Ferrucci L, Simonsick EM. Hearing loss and cognitive decline in older adults. JAMA Intern Med 173: 293–299, 2013.

- Lister JJ, Maxfield ND, Pitt GJ, Gonzalez VB. Auditory evoked response to gaps in noise: older adults. Int J Audiol 50: 211–225, 2011.

- Mamo SK, Grose JH, Buss E. Speech-evoked ABR: effects of age and simulated neural temporal jitter. Hear Res 333: 201–209, 2016.

- Nasreddine ZS, Phillips NA, Bedirian V, Charbonneau S, Whitehead V, Collin I, Cummings JL, Chertkow H. The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. J Am Geriatr Soc 53: 695–699, 2005.

- Okamoto H, Stracke H, Bermudez P, Pantev C. Sound processing hierarchy within human auditory cortex. J Cogn Neurosci 23: 1855–1863, 2011.

- Overton JA, Recanzone GH. Effects of aging on the response of single neurons to amplitude modulated noise in primary auditory cortex of Rhesus macaque. J Neurophysiol 115: 2911–2923, 2016.

- Parbery-Clark A, Anderson S, Hittner E, Kraus N. Musical experience offsets age-related delays in neural timing. Neurobiol Aging 33: 1483.e1481–1483.e1484, 2012.

- Parbery-Clark A, Marmel F, Bair J, Kraus N. What subcortical-cortical relationships tell us about processing speech in noise. Eur J Neurosci 33: 549–557, 2011.

- Parthasarathy A, Bartlett EL. Age-related auditory deficits in temporal processing in F-344 rats. Neuroscience 192: 619–630, 2011.

- Parthasarathy A, Cunningham PA, Bartlett EL. Age-related differences in auditory processing as assessed by amplitude-modulation following responses in quiet and in noise. Front Aging Neurosci 2: 152, 2010.

- Peelle JE, Troiani V, Wingfield A, Grossman M. Neural processing during older adults' comprehension of spoken sentences: age differences in resource allocation and connectivity. Cereb Cortex 20: 773–782, 2010.

- Pichora-Fuller MK, Schneider BA, Daneman M. How young and old adults listen to and remember speech in noise. J Acoust Soc Am 97: 593–608, 1995.

- Pichora-Fuller MK, Schneider BA, Macdonald E, Pass HE, Brown S. Temporal jitter disrupts speech intelligibility: a simulation of auditory aging. Hear Res 223: 114–121, 2007.

- Presacco A, Jenkins K, Lieberman R, Anderson S. Effects of aging on the encoding of dynamic and static components of speech. Ear Hear 36: e352–e363, 2015.

- Ross B, Borgmann C, Draganova R, Roberts LE, Pantev C. A high-precision magnetoencephalographic study of human auditory steady-state responses to amplitude-modulated tones. J Acoust Soc Am 108: 679–691, 2000.

- Ross B, Schneider B, Snyder JS, Alain C. Biological markers of auditory gap detection in young, middle-aged, and older adults. PLoS One 5: e10101, 2010.

- Särelä J, Valpola H. Denoising source separation. J Mach Learn Res 6: 233–272, 2005.

- Sergeyenko Y, Lall K, Liberman MC, Kujawa SG. Age-related cochlear synaptopathy: an early-onset contributor to auditory functional decline. J Neurosci 33: 13686–13694, 2013.

- Shamma SA, Elhilali M, Micheyl C. Temporal coherence and attention in auditory scene analysis. Trends Neurosci 34: 114–123, 2011.

- Smith JC, Marsh JT, Brown WS. Far-field recorded frequency-following responses: evidence for the locus of brainstem sources. Electroencephalogr Clin Neurophysiol 39: 465–472, 1975.

- Soros P, Teismann IK, Manemann E, Lutkenhoner B. Auditory temporal processing in healthy aging: a magnetoencephalographic study. BMC Neurosci 10: 34, 2009.

- Surprenant AM. Effects of noise on identification and serial recall of nonsense syllables in older and younger adults. Neuropsychol Dev Cogn B Aging Neuropsychol Cogn 14: 126–143, 2007.

- Tremblay KL, Piskosz M, Souza P. Effects of age and age-related hearing loss on the neural representation of speech cues. Clin Neurophysiol 114: 1332–1343, 2003.

- Tun PA, McCoy S, Wingfield A. Aging, hearing acuity, and the attentional costs of effortful listening. Psychol Aging 24: 761–766, 2009.

- Tun PA, O'Kane G, Wingfield A. Distraction by competing speech in young and older adult listeners. Psychol Aging 17: 453–467, 2002.

- Uhlmann RF, Larson EB, Rees TS, Koepsell TD, Duckert LG. Relationship of hearing impairment to dementia and cognitive dysfunction in older adults. JAMA 261: 1916–1919, 1989.

- Ward CM, Rogers CS, Van Engen KJ, Peelle JE. Effects of age, acoustic challenge, and verbal working memory on recall of narrative speech. Exp Aging Res 42: 126–144, 2016.

- Zhu J, Garcia E. The Wechsler Abbreviated Scale of Intelligence (WASI). New York: Psychological Corp, 1999.