Abstract

In this article, I discuss some of the latest functional neuroimaging findings on the organization of object concepts in the human brain. I argue that these data provide strong support for viewing concepts as the products of highly interactive neural circuits grounded in the action, perception, and emotion systems. The nodes of these circuits are defined by regions representing specific object properties (e.g., form, color, and motion) and thus are property-specific, rather than strictly modality-specific. How these circuits are modified by external and internal environmental demands, the distinction between representational content and format, and the grounding of abstract social concepts are also discussed.

Keywords: Concepts and categories, Cognitive neuroscience of memory, Embodied cognition, Neuroimaging and memory

For the past two decades, my colleagues and I have studied the neural foundation for conceptual representations of common objects, actions, and their properties. This work has been guided by a framework that I have previously referred to as the “sensory–motor model” (Martin, 1998, Martin, Ungerleider, & Haxby, 2000), and that I will refer to here by the acronym GRAPES (standing for “grounding representations in action, perception, and emotion systems”). This framework is a variant of the sensory/functional model outlined by Warrington, Shallice, and colleagues in the mid-1980s (Warrington & McCarthy, 1987, Warrington & Shallice, 1984) that has dominated neuropsychological (e.g., Damasio, Tranel, Grabowski, Adolphs, & Damasio, 2004, Humphreys & Forde, 2001), cognitive (e.g., Cree & McRae, 2003), and computational (e.g., McClelland & Rogers, 2003, Plaut, 2002) models of concept representation (see also Allport, 1985).

In this article, I will describe the GRAPES model and discuss its implications for understanding how conceptual knowledge is organized in the human brain.

Preliminary concerns

For the present purposes, an object concept refers to the information an individual possesses that defines a basic-level object category (roughly equivalent to the name typically assigned to an object category, such as “dog,” “hammer,” or “apple”; see Rosch, Mervis, Gray, Johnson, & Boyes-Braem, 1976, for details). Concepts play a central role in cognition because they eliminate the need to rediscover or relearn an object’s properties with each encounter (Murphy, 2002). Identifying an object or a word as a “hammer” allows us to infer that this is an object that is typically made of a hard substance, grasped in one hand, used to pound nails—that it is, in fact, a tool—and so forth. It takes only brief reflection (or a glance at a dictionary) to realize that object information is not limited to perception-, action-, or emotion-related properties. We know, for example, that “dogs” like to play fetch, carpenters use “hammers,” and “apples” grow on trees. In fact, most of the information that we possess about objects is this type of associative or encyclopedic knowledge. This knowledge is typically expressed verbally, is unlimited (there is no intrinsic limit on how much information we can acquire), and is often idiosyncratic (e.g., some people know things about “dogs” that others do not). In contrast, another level of representation, often referred to as semantic or conceptual “primitives,” is accessed automatically, constrained in number, and universal to everyone who possesses the concept. Conceptual primitives are object-associated properties that underpin our ability to quickly and efficiently identify objects at the basic-category level (e.g., as a “dog,” “hammer,” or “apple”), regardless of the modality of presentation (visual, auditory, tactile, or internally generated) or the stimulus format (verbal, nonverbal). 
Conceptual primitives provide a scaffolding or foundation that supports both the explicit retrieval of object-associated information (e.g., enabling us to answer “orange” when asked, What color are carrots?) and access to our large stores of associative/encyclopedic object knowledge (e.g., allowing us to answer “rabbit” when asked, What animal likes to eat carrots?). For a more detailed discussion of this and related issues, see Martin (1998, 2007, 2009).

The GRAPES model

The central claim is that information about the salient properties of an object—such as what it looks like, how it moves, how it is used, as well as our affective response to it—is stored in our perception, action, and emotion systems. The use of the terminology “perception, action, and emotion systems” rather than “sensory-motor regions” is a deliberate attempt to guard against an unfortunate and unintended consequence of the sensory–motor terminology that has given some the mistaken impressions that concepts could be housed in primary sensory and motor cortices (e.g., V1, S1, A1, M1) and that an object concept could be stored in a single brain region (e.g., a “tool” region). Nothing could be further from the truth. As is described below, my position is, and has always been, that the regions where we store information about specific object-associated properties are located within (i.e., overlap with) perceptual and action systems, specifically excluding primary sensory–motor regions (Martin, Haxby, Lalonde, Wiggs, & Ungerleider, 1995; however, the role of primary sensory and motor regions in conceptual-processing tasks is an important issue that will be addressed below).

It is also assumed that this object property information is acquired and continually updated through innately specified learning mechanisms (for a discussion, see Caramazza & Shelton, 1998, Carey, 2009). These mechanisms allow for the acquisition and storage of object-associated properties—form, color, motion, and the like. Although the architecture and circuitry of the brain dictate where these learning mechanisms are located, they are not necessarily tied to a single modality of input (i.e., they are property-specific, not modality-specific). For example, a mechanism specialized for learning about object shape or form will typically work upon visual input because that is the modality through which object form information is commonly acquired. As a result, this mechanism will be located in the ventral occipitotemporal visual object-processing stream. However, as has been convincingly demonstrated by studies of typically developing (Amedi, Malach, Hendler, Peled, & Zohary, 2001, Amedi, Jacobson, Hendler, Malach, & Zohary, 2002) as well as congenitally blind (Amedi et al., 2007, Pietrini et al., 2004) individuals, this mechanism can work upon tactile input, as well. Thus, information about the physical shape or form of objects will be stored in the same place in both normally sighted and blind individuals (e.g., ventral occipitotemporal cortex), regardless of the modality through which that information was acquired (see also Mahon et al., 2009, Noppeney, Friston, & Price, 2003). Relatedly, this information can be accessed through multiple modalities as well (e.g., information about how dogs look is accessed automatically when we hear a bark, or when we read or hear the word “dog”; Tranel et al., 2003a).

There are two major consequences of this formulation. Firstly, from a cognitive standpoint, it provides a potential solution for the grounding problem: How do mental representations become connected to the things they refer to in the world (Harnad, 1990)? Within GRAPES and related frameworks, representations are grounded by virtue of their being situated within (i.e., partially overlapping with) the neural system that supports perceiving and interacting with our external and internal environments.

Secondly, from a neurobiological standpoint, it provides a strong, testable—and easily falsifiable—claim about the spatial organization of object information in the brain. Not only is object property information distributed across different locations, but also, these locations are highly predictable on the basis of our knowledge of the spatial organization of the perceptual, action, and affective processing systems. Conceptual information is not spread across the cortex in a seemingly random, arbitrary fashion (Huth, Nishimoto, Vu, & Gallant, 2012), but rather follows a systematic plan.

The representation of object-associated properties: The case of color

According to the GRAPES model, object property information is stored within specific processing streams, but downstream from primary sensory, and upstream from motor, cortices. The overwhelming majority of functional brain-imaging studies support this claim (Kiefer & Pulvermüller, 2012, Martin, 2009, Thompson-Schill, 2003). Here I will concentrate on a single property, color, to illustrate the main findings and points.

Early brain-imaging studies showed that retrieving the name of a color typically associated with an object (e.g., “yellow” in response to the word “pencil”), relative to retrieving a word denoting an object-associated action (e.g., “write” in response to the word “pencil”), elicited activity in a region of the fusiform gyrus in ventral temporal cortex anterior to the region in occipital cortex associated with perceiving colors (Martin et al., 1995; and see Chao & Martin, 1999, and Wiggs, Weisberg, & Martin, 1999, for similar findings). Converging evidence for this localization has come from studies of color imagery generation in control subjects (Howard et al., 1998) and of color–word synesthetes listening to spoken words (Paulesu et al., 1995).

Importantly, these findings were also consistent with clinical studies documenting a double dissociation between patients with achromatopsia—acquired color blindness concurrent with a preserved ability to generate color imagery (commonly associated with lesions of the lingual gyrus in the occipital lobe; e.g., Shuren, Brott, Schefft, & Houston, 1996)—and color agnosia—impaired knowledge of object-associated colors concurrent with normal color vision (commonly associated with lesions of posterior ventral temporal cortex, although these lesions can also include occipital cortex; e.g., Miceli et al., 2001, Stasenko, Garcea, Dombovy, & Mahon, 2014).

We interpreted our findings as supporting a grounded-cognition view based on the fact that the region active when retrieving color information was anatomically close to the region previously identified as underpinning color perception, whereas retrieving object-associated action words yielded activity in lateral temporal areas close to the site known to support motion perception (see Martin et al., 1995, for details). However, these data could just as easily be construed as being consistent with “amodal” frameworks that maintain that conceptual information is autonomous or separate from sensory processing (e.g., Wilson & Foglia, 2011). The grounded-cognition position maintains that the neural substrates for conceptual, perceptual, and sensory processing are all part of a single, anatomically broad system supporting both perceiving and knowing about object-associated information. Thus, evidence in support of grounded cognition would require showing functional overlap between, for example, the neural systems supporting sensory/perceptual and conceptual processing of color.

Despite the failure of early attempts to demonstrate such a link (Chao & Martin, 1999), other investigations have yielded strong, converging evidence to support that claim. Beauchamp, Haxby, Jennings, and DeYoe (1999) showed that when color-selective cortex was mapped by having subjects passively view colored versus grayscale stimuli, as had typically been done in previous studies (e.g., Chao & Martin, 1999, McKeefry & Zeki, 1997, Zeki et al., 1991), neural activity was restricted to the occipital cortex. However, when color-selective cortex was mapped using a more demanding task requiring subjects to make subtle judgments about differences in hue (modeled after the classic Farnsworth–Munsell 100-Hue Test used in the clinical evaluation of color vision), activity extended downstream from occipital cortex to the fusiform gyrus in ventral posterior temporal cortex (Beauchamp et al., 1999). We replicated this finding and further observed that this downstream region of the fusiform gyrus was also active when subjects retrieved information about object-associated color using a verbal, property-verification task (Simmons et al., 2007). These data offered the best of both worlds: continued support for the double dissociation between color agnosia and achromatopsia (because the color perceptual task, but not the color conceptual task, activated occipital cortex), now coupled with evidence consistent with grounded cognition (because both tasks activated the same downstream region of the fusiform gyrus) (Simmons et al., 2007; see Martin, 2009, and Stasenko et al., 2014, for discussions).

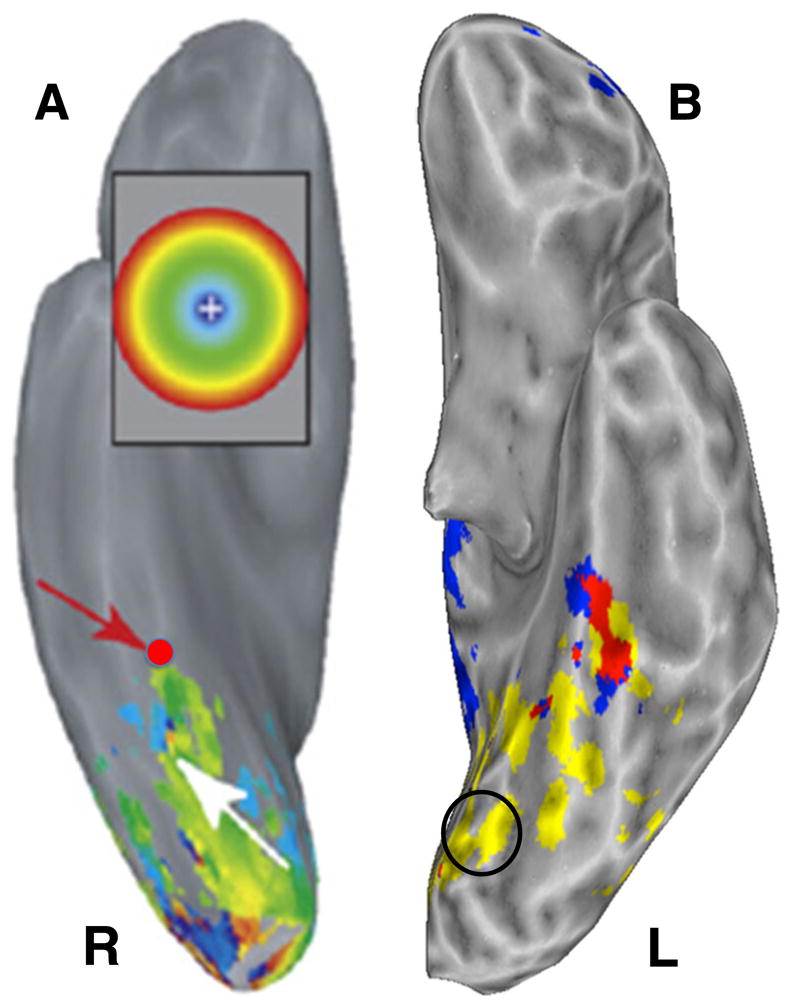

Additional supporting evidence has come from a completely different source—electrophysiological recording and stimulation of the human cortex. Recording from posterior brain regions prior to neurosurgery, Murphey, Yoshor, and Beauchamp (2008) identified a site in the fusiform gyrus that was not only color-responsive but also preferentially tuned to a particular blue-purple color. Moreover, when electrical stimulation was applied to that site, the patient reported vivid, blue-purple color imagery (see Murphey et al., 2008, for details). The location of this region corresponded closely to the region active in previous imaging studies of color information retrieval, and, as is illustrated in Fig. 1, corresponded remarkably well to the region active during both perceiving and retrieving color information in the Simmons et al. (2007) study.

Fig. 1.

Regions of ventral occipitotemporal cortex responsive to perceiving and knowing about color. (A) Ventral view of the right hemisphere of a single patient. The red dot shows the location of the electrode that responded most strongly to blue-purple color and that produced blue-purple visual imagery when stimulated (reprinted with permission; see Murphey et al., 2008, for details). (B) Ventral view of the left hemisphere from the group study on perceiving and knowing about color (Simmons et al., 2007). Regions active when distinguishing subtle differences in hue are shown in yellow. The black circle indicates the approximate location of the lingual gyrus region active when passively viewing colors. The region responding to both perceiving and retrieving information about color is shown in red. Note the close correspondence between that region and the location of the electrode in panel A.

Thus, in support of the grounded-cognition framework, these data indicate that the processing system supporting color perception includes both lower-level regions that mediate the conscious perception—or more appropriately, the “sensation” of color—and higher-order regions that mediate both perceiving and storing color information. Moreover, as will be discussed below, these posterior and anterior regions are in a dynamic, interactive state to support contextual, task-dependent demands.

The effect of context 1: Conceptual task demands influence responses in primary sensory (color) cortex

The Simmons et al. (2007) study using the modified version of the Farnsworth–Munsell 100-Hue Test demonstrated that increasing perceptual processing demands resulted in activity that extended downstream from low-level into higher-order color-processing regions. Thompson-Schill and colleagues have provided evidence that the reverse effect also holds (Hsu, Frankland, & Thompson-Schill, 2012); that is, increasing conceptual-processing demands can produce activity that feeds back upstream into early, primary-processing areas in order to solve the task at hand. These investigators also used the modified Farnsworth–Munsell 100-Hue Test to map color-responsive cortex. However, in contrast to the property-verification task used by Simmons and colleagues, which required a “yes/no” response to such probes as “eggplant–purple” (Simmons et al., 2007), the study by Hsu et al. (2012) used a conceptual-processing task requiring subjects to make subtle distinctions in hue, thereby more closely matching the demands of the color perception task (e.g., which object is “lighter”? lemon, basketball; see Hsu et al., 2012, for details). Under these conditions, both the color perception and color knowledge tasks yielded overlapping activity in a region of the lingual gyrus in occipital cortex associated with the sensory processing of color. Moreover, this effect seems to be tied to similarity in the demands of the perceptual and conceptual tasks, since previous work by these investigators had shown that simply making the conceptual task more attention-demanding increased activity in the fusiform, but not the lingual, gyri (Hsu, Kraemer, Oliver, Schlichting, & Thompson-Schill, 2011).
These findings suggest that, in order to meet specific task demands, higher-level regions in the fusiform gyrus that store information about object-associated color can reactivate early, lower-level areas in occipital cortex that underpin the sensory processing of color (and see Amsel, Urbach, & Kutas, 2014, for more evidence for the tight linkage between low-level perceptual and high-level conceptual processes in the domain of color).

As will be discussed next, low-level sensory regions can also show effects of conceptual processing when the modulating influence arises from the demands of our internal, rather than our external, environment.

The effect of context 2: The body’s homeostatic state influences responses in primary sensory (gustatory) cortex to pictures of appetizing food

A number of functional brain-imaging studies have shown that identifying pictures of appetizing foods activates a site located in the anterior portion of the insula (as well as other brain areas, such as orbitofrontal cortex; e.g., Killgore et al., 2003, Simmons, Martin, & Barsalou, 2005; see van der Laan, de Ridder, Viergever, & Smeets, 2011, for a review). Because the human gustatory system sends inputs to the insula, we interpreted this activity as reflecting inferences about taste generated automatically when viewing food pictures (Simmons et al., 2005). We have now obtained direct evidence in support of this proposal by mapping neural activity associated with a pleasant taste (apple juice, relative to a neutral liquid solution) and inferred taste (images of appetizing foods, relative to nonfood pictures) (Simmons et al., 2013). Juice delivery elicited activity in primary gustatory cortex, located in the mid-dorsal region of the insula (Small, 2010), as well as in the more anterior region of the insula identified in the previous studies of appetizing food picture identification (representing inferred taste). Viewing pictures of appetizing foods yielded activity in the anterior, but not mid-dorsal, insula. Thus, these results followed the same pattern as our study on perceiving and knowing about color (Simmons et al., 2007). Whereas gustatory processing activated both primary (mid-dorsal insula) and more anterior insula sites, higher-order representations associated with viewing pictures of food were limited to the more anterior region of insular cortex.

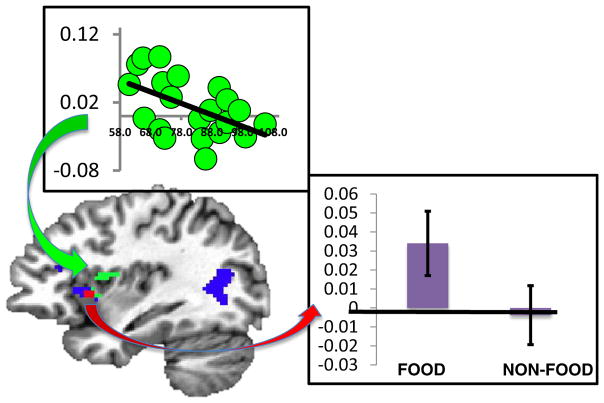

However, a unique feature of our study was that, because it was part of a larger investigation of dietary habits, we were able to acquire data on our subjects’ metabolic states immediately prior to the scanning session. Analyses of those data revealed that the amount of glucose circulating in peripheral blood was negatively correlated with the neural response to food pictures in the mid-dorsal, primary gustatory region of the insula—the lower the glucose level, the stronger the insula response. This unexpected finding indicated that bodily input could modulate the brain’s response to visual images of one category of objects (appetizing foods) but not others (nonfood objects; see Simmons et al., 2013, for details). When the body’s energy resources are low (as indexed by low glucose levels), pictures of appetizing foods become more likely to activate primary gustatory cortex, perhaps as a signal to act (i.e., to obtain food; more on this later). Moreover, this modulatory effect of glucose on the neural response to food pictures occurred in primary gustatory cortex—an area, like primary color-responsive cortex in the occipital lobe, assumed not to be involved in processing higher-order information (Fig. 2).
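The sign of this relationship can be made concrete with a Pearson correlation. The sketch below is illustrative only: the glucose values (in mg/dL) and insula responses (in arbitrary units) are invented for the example and are not data from Simmons et al. (2013), and `pearson_r` is a hypothetical helper, not the analysis code used in that study. Pairing lower glucose with a stronger response yields a negative r.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Co-deviation of x and y, and squared deviations of each around its mean.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical subjects: peripheral glucose (mg/dL) and mid-dorsal insula
# response to food pictures (arbitrary units). Invented for illustration only.
glucose = [70.0, 80.0, 90.0, 100.0, 110.0]
insula_response = [0.9, 0.7, 0.6, 0.4, 0.2]

r = pearson_r(glucose, insula_response)
print(f"r = {r:.2f}")  # prints r = -0.99 for these toy values
```

A single correlation says nothing about whether the food versus nonfood difference is reliable; comparing two correlations measured in the same subjects calls for a test designed for dependent correlations, which this sketch does not attempt.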

Fig. 2.

Regions of insular cortex responsive to perceived and inferred taste: Sagittal view of the left hemisphere showing regions in the insular cortex responsive to a pleasant taste (green) and viewing pictures of appetizing foods (blue). The histogram shows activation levels for food and nonfood objects in the anterior insula responsive to taste (red area). The graph shows the level of each subject’s response in primary gustatory cortex (mid-dorsal insula, green) as a function of peripheral blood glucose level. The correlation between glucose and the mid-dorsal insula response to food pictures was significant (r = –.51) and significantly stronger than the corresponding correlation for nonfood objects (r = –.04; see Simmons et al., 2013, for details).

Overall, these findings suggest a dynamic, interactive relationship between lower-level sensory and higher-order conceptual processing components of perceptual processing streams. Activity elicited in higher-order processing areas (fusiform gyrus for color, anterior insula for taste) may reflect the retrieval of properties associated with stable conceptual representations (invariant representations needed for understanding and communicating). In contrast, feedback from these regions to primary, low-level sensory processing areas may reflect contextual effects as a function of specific task requirements (as in the case of color) or bodily states (as in the case of taste). Neural activity elicited during conceptual processing is determined by both the content of the information retrieved and the demands of our external and internal environments.

What does overlapping activity mean?

The goal of these studies was to determine whether the neural activity selectively associated with retrieving object property information overlapped with the activity identified (independently localized) by a sensory or motor task. This approach has been used successfully multiple times. Some recent examples include showing that reading about motion activates motion-processing regions (Deen & McCarthy, 2010, Saygin, McCullough, Alac, & Emmorey, 2010), viewing pictures of graspable objects activates somatosensory cortex (Smith & Goodale, 2015), and viewing pictures of sound-implying objects (musical instruments, animals) activates auditory cortex (using an anatomical rather than a functional localizer; Meyer et al., 2010). The implication of these findings is that sensory/perceptual and conceptual processes are tightly linked. Demonstrating that retrieving information about color shows partial overlap with regions active when processing color licenses conclusions about where object property information is stored in the brain. This information is stored right in the processing system active when that information was acquired and updated. The alternative would be, for example, that we learn about the association between a particular object and its color in one place and then ship that information off to a different location for storage. The neuroimaging data provide clear evidence against that scenario.
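The overlap logic can be sketched as a conjunction of two thresholded statistical maps. This is a minimal illustration under stated assumptions: voxels are represented as a flat list of t-values, the threshold is arbitrary, and the minimum-statistic rule (a voxel counts only if it passes threshold in both contrasts) is one common conjunction criterion, not necessarily the one used in the studies cited above. `conjunction_mask` and `dice` are hypothetical helper names.

```python
from typing import List


def conjunction_mask(t_a: List[float], t_b: List[float], threshold: float) -> List[bool]:
    """Minimum-statistic conjunction: a voxel passes only if BOTH t-values exceed threshold."""
    if len(t_a) != len(t_b):
        raise ValueError("maps must cover the same voxels")
    return [min(a, b) > threshold for a, b in zip(t_a, t_b)]


def dice(mask_a: List[bool], mask_b: List[bool]) -> float:
    """Dice overlap 2|A and B| / (|A| + |B|); returns 0.0 if both masks are empty."""
    both = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    total = sum(mask_a) + sum(mask_b)
    return 2 * both / total if total else 0.0


# Hypothetical t-values for six voxels (not real data).
perception_t = [1.0, 3.5, 4.2, 5.0, 2.1, 0.3]  # e.g., a hue-discrimination localizer
concept_t = [0.5, 1.2, 3.8, 4.4, 3.9, 3.6]     # e.g., a color-knowledge task
threshold = 3.1                                # arbitrary illustrative cutoff

shared = conjunction_mask(perception_t, concept_t, threshold)
perception_mask = [t > threshold for t in perception_t]
concept_mask = [t > threshold for t in concept_t]
print(shared)                                          # [False, False, True, True, False, False]
print(round(dice(perception_mask, concept_mask), 3))   # 0.571
```

Overlap of this kind shows only that both tasks engage the same location; it does not show that the underlying representations are identical.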

The fact that overlapping activity is associated with perceptual and conceptual task performance does not mean, however, that the representations underpinning these processes—or their neural substrates—are identical. In fact, although functional brain-imaging data cannot address this issue,¹ it is highly likely that the representations are substantially different. Perceiving, imagining, and knowing, after all, are very different things, and so must be their neural instantiations. Even at the level of the neural column, bottom-up and top-down inputs show distinct patterns of laminar connectivity (e.g., Felleman & Van Essen, 1991, Foxworthy, Clemo, & Meredith, 2013) and rely on different oscillatory frequencies (Buffalo, Fries, Landman, Buschman, & Desimone, 2011, van Kerkoerle et al., 2014). Nevertheless, the fact that perceptual and conceptual representations differ leaves open the possibility that their formats are the same.

Content and format

There seems to be strong, if not unanimous, agreement about the content and relative location in the brain of perception- and action-related object property information. Hotly debated, however, is the functional significance of this information (see below), and, most especially, the format of this information. Is conceptual information stored in a highly abstract, “amodal,” language-like propositional format? Or is it stored in a depictive, iconic, picture-like format? The chief claim of many advocates of embodied and/or grounded cognition is that object and action concepts are represented exclusively in depictive, modality-specific formats (e.g., Barsalou, 1999, Glenberg & Gallese, 2012, Zwaan & Taylor, 2006; and see Carey, 2009, for a discussion from a nonembodied perspective of why the representational format of all of “core cognition” is likely to be iconic). Others have argued forcefully that the representations are abstract, amodal, and disembodied (although necessarily interactive with sensory–motor information; see, e.g., the “grounding by interaction” hypothesis proposed by Mahon & Caramazza, 2008).

The importance of the distinction between the content and format of mental representations was raised by Caramazza and colleagues (Caramazza, Hillis, Rapp, & Romani, 1990) in their argument against the “multiple, modality-specific semantics hypothesis” advocated by Shallice (Shallice, 1988; and see Shallice, 1993, for his reply, and Mahon, 2015, for more on the format argument). Before that, the question of format was, and it continues to be, the central focus of the long-running debate over whether mental imagery is propositional (e.g., Pylyshyn, 2003) or depictive (e.g., Kosslyn, Thompson, & Ganis, 2006).

The problem, however, is that we do not know how to determine the format of a representation (if we did, we would not still be debating the issue). And knowing where in the brain information is stored, and/or which regions are active when that information is retrieved, offers no help at all. Even in the earliest, lowest-level regions of the visual-processing stream, the format could be depictive on the way up, and propositional on the way back down. What we do know is that at the biological level of description, mental representations are in the format of the neural code. No one knows what that is, and no one knows how it maps onto the cognitive descriptions of representational formats (i.e., amodal, propositional, depictive, iconic, and the like), nor even whether those descriptions are appropriate for such mapping. What is missing from this debate are agreed-upon procedures for determining the format of a representation. Until such procedures exist, the format question will remain moot; it has no practical significance.

Object property information is integrated within category-specific neural circuits: The case of “tools”

Functional brain imaging has provided a major advance in our thinking about how the brain responds to the environment by showing that viewing objects triggers a cascade of activity in multiple brain regions that, in turn, represent properties associated with that category of objects. Viewing faces, for example, elicits activity that extends beyond the fusiform face area to regions associated with perceiving biological motion (the posterior region of the superior temporal sulcus) and affect (the amygdala), even when the face images are static and posed with neutral expressions (Haxby, Hoffman, & Gobbini, 2000). Similarly, viewing images of common tools (objects with a strong link between how they are manipulated and their function; Mahon et al., 2007) elicits activity that extends beyond the ventral object-processing stream to include left hemisphere regions associated with object motion (posterior middle temporal gyrus) and manipulation (intraparietal sulcus, ventral premotor cortex) (Beauchamp, Lee, Haxby, & Martin, 2002, 2003, Chao & Martin, 2000, Grafton, Fadiga, Arbib, & Rizzolatti, 1997, Kellenbach, Brett, & Patterson, 2003, Mahon et al., 2007, Mahon, Kumar, & Almeida, 2013; and see Chouinard & Goodale, 2010, for a review).

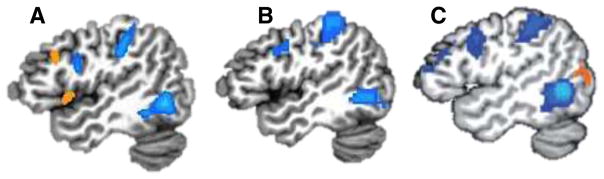

Thus, specific object categories are associated with unique networks or circuits composed of brain regions that code for different object properties. There are several important points to note about these circuits. Firstly, they reflect some, but certainly not all, of the properties associated with a particular category. Tools and animals, for example, have distinctive sounds (hammers bang, lions roar), yet the auditory system is not automatically engaged when viewing or naming tools or animals. Certain properties are more salient than others for representing a category of objects—a result that agrees well with behavioral data (e.g., Cree & McRae, 2003). Secondly, the regions comprising a circuit do not come online in piecemeal fashion as they are required to perform a specific task, but rather seem to respond in an automatic, all-or-none fashion, as if they were part of the intrinsic, functional neural architecture of the brain. Indeed, studies of spontaneous, slowly fluctuating neural activity recorded when subjects are not engaged in performing a task (i.e., task-independent or resting-state functional imaging) strongly support this possibility. These studies have shown that during the so-called resting state, there is strong covariation among the neural signals spontaneously generated from each of the regions active when viewing and identifying certain object categories, including faces (O’Neil, Hutchison, McLean, & Köhler, 2014, Turk-Browne, Norman-Haignere, & McCarthy, 2010), scenes (Baldassano, Beck, & Fei-Fei, 2013, Stevens, Buckner, & Schacter, 2010), and tools (Hutchison, Culham, Everling, Flanagan, & Gallivan, 2014, Simmons & Martin, 2012, Stevens, Tessler, Peng, & Martin, 2015; see Fig. 3). Certain object categories are associated with activity in a specific network of brain regions, and these regions are in constant communication, over and above the current task requirements.
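The resting-state logic can be illustrated with simulated time series. The sketch assumes nothing about real acquisition or preprocessing (band-pass filtering, nuisance regression, and motion correction are all omitted): one hypothetical seed shares a common signal with a hypothetical target region, while a second seed does not, so the target correlates more strongly with the first seed. This mirrors the form of the seed comparison described for Fig. 3B, not its data; all names and parameters are invented for illustration.

```python
import math
import random
from typing import List, Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length time series."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simulate_rest(n_timepoints: int = 200, seed: int = 0) -> Tuple[List[float], List[float], List[float]]:
    """Return (seed_a, seed_b, target); only seed_a shares a common signal with target."""
    rng = random.Random(seed)
    common = [rng.gauss(0.0, 1.0) for _ in range(n_timepoints)]
    seed_a = [c + rng.gauss(0.0, 1.0) for c in common]           # hypothetical in-circuit seed
    target = [c + rng.gauss(0.0, 1.0) for c in common]           # hypothetical in-circuit target
    seed_b = [rng.gauss(0.0, 1.0) for _ in range(n_timepoints)]  # hypothetical out-of-circuit seed
    return seed_a, seed_b, target


seed_a, seed_b, target = simulate_rest()
r_a = pearson_r(target, seed_a)  # expected near 0.5 by construction
r_b = pearson_r(target, seed_b)  # expected near 0
print(f"target vs seed_a: {r_a:.2f}; target vs seed_b: {r_b:.2f}")
```

Because the shared component and each noise term have equal variance, the expected correlation between in-circuit regions is 0.5; real resting-state correlations depend on many factors this toy model ignores.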

Fig. 3.

Intrinsic circuitry for perceiving and knowing about “tools.” (A) Task-dependent activations: Sagittal view of the left hemisphere showing regions in posterior middle temporal gyrus, posterior parietal cortex, and premotor cortex that are more active when viewing tools than when viewing animals (blue regions, N = 34) (Stevens et al., in press). (B) Task-independent data: Covariation of slowly fluctuating neural activity recorded at “rest” in a single subject (blue regions). Seeds were in the medial region of the left fusiform gyrus and in the right lateral fusiform gyrus (not shown), identified by the contrasts of tools versus animals and animals versus tools, respectively (independent localizer). Resting-state time series in the blue regions were significantly more correlated with fluctuations in the left medial fusiform gyrus than with those in the right lateral fusiform gyrus (for details, see Stevens et al., in press). (C) Covariation of slowly fluctuating neural activity recorded at “rest” in a group study (blue regions, N = 25). Seeds were in the left posterior middle temporal gyrus and the right posterior superior temporal sulcus, identified by independent localizer scans (see Simmons & Martin, 2012, for details).

Although the function of this slowly fluctuating, spontaneous activity remains largely unknown, one possibility is that it allows information about different properties to be shared across regions of the network. If so, then each region may act as a convergence zone (Damasio, 1989, Simmons & Barsalou, 2003) or “hub,” representing its primary property and, to a lesser extent, the properties of one or more of the other regions in the circuit—depending, perhaps, on its spatial relation to the other regions in the circuit (Power, Schlaggar, Lessov-Schlaggar, & Petersen, 2013). The more centrally located a region, the more hub-like its function. This seems to be the case for tools, for which a lesion of the most centrally located component of the circuitry, the posterior region of the left middle temporal gyrus, produces a category-specific knowledge deficit for tools and their associated actions (Brambati et al., 2006, Campanella, D’Agostini, Skrap, & Shallice, 2010, Mahon et al., 2007, Tranel, Damasio, & Damasio, 1997, Tranel, Manzel, Asp, & Kemmerer, 2008).²

According to this view, information about a property is not strictly localized to a single region (as the overlap approach suggests), but rather is a manifestation of local computations performed in that region as well as a property of the circuit as a whole (cf. Behrmann & Plaut, 2013). Moreover, regions vary in their global connectivity, or “hubness” (i.e., the extent to which a region is interconnected with other brain regions; see Buckner et al., 2009, Cole, Pathak, & Schneider, 2010, Gotts et al., 2012, and Power et al., 2013, for approaches and data on the brain’s hub structure).
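One simple way to operationalize “hubness” is the average connectivity of a region with every other region, loosely in the spirit of the global-connectivity measures cited above (e.g., Cole, Pathak, & Schneider, 2010). The sketch below assumes region-level resting-state time series, Fisher z-transformed Pearson correlations, and a plain mean over all other regions; the function names and toy signals are hypothetical, and the cited studies use more elaborate voxel-wise and graph-theoretic measures.

```python
from math import atanh, sqrt
from statistics import mean
from typing import Dict, List


def pearson_r(x: List[float], y: List[float]) -> float:
    """Pearson correlation between two equal-length time series."""
    if len(x) != len(y):
        raise ValueError("time series must have equal length")
    mx, my = mean(x), mean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    den = sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    if den == 0:
        raise ValueError("a constant time series has no defined correlation")
    return sum(a * b for a, b in zip(dx, dy)) / den


def fisher_z(r: float, eps: float = 1e-6) -> float:
    """Fisher r-to-z transform, clipped so that |r| = 1 stays finite."""
    r = max(-1.0 + eps, min(1.0 - eps, r))
    return atanh(r)


def global_connectivity(regions: Dict[str, List[float]]) -> Dict[str, float]:
    """Mean Fisher-z correlation of each region with every other region."""
    if len(regions) < 2:
        raise ValueError("need at least two regions")
    return {
        a: mean(fisher_z(pearson_r(regions[a], regions[b]))
                for b in regions if b != a)
        for a in regions
    }


# Toy circuit (hypothetical): two mutually uncorrelated "property" signals,
# plus a hub region whose activity mixes both of them.
node_a = [1, -1, 1, -1, 1, -1, 1, -1]
node_b = [1, 1, -1, -1, 1, 1, -1, -1]
hub = [x + y for x, y in zip(node_a, node_b)]

gc = global_connectivity({"hub": hub, "node_a": node_a, "node_b": node_b})
ranked = sorted(gc, key=gc.get, reverse=True)
print(ranked)
```

In this toy circuit the mixed region correlates moderately with both property signals, while each property signal correlates only with the hub, so the hub ends up with the highest mean connectivity. This mirrors, in a very simplified form, the idea that a centrally placed region shares information with the rest of the circuit.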

An advantage of this view is that it provides a framework for understanding how a lesion to a particular region or node of a circuit can sometimes produce a deficit for retrieving one type of category-related information, but not others, whereas other lesions seem to produce a true category-specific disorder characterized by a failure to retrieve all types of information about a particular category (Capitani, Laiacona, Mahon, & Caramazza, 2003). For example, in the domain of tools, some apraxic patients with damage to left posterior parietal cortex can no longer demonstrate an object’s use but can still name it, whereas other patients with damage to left middle temporal gyrus seem to have more general losses of knowledge about tools and their actions (e.g., Tranel, Kemmerer, Adolphs, Damasio, & Damasio, 2003b). These broader losses presumably result from disrupted connectivity between the damaged region and the rest of the circuit, or functional diaschisis (He et al., 2007, Price, Warburton, Moore, Frackowiak, & Friston, 2001; see Carrera & Tononi, 2014, for a recent review).

Once we accept that different forms of knowledge about a single object category (e.g., tools) can be dissociated, we are left with an additional puzzle. The neuropsychological evidence clearly shows that damage to left posterior parietal cortex can result in an inability to correctly use an object without affecting the ability to visually recognize and name that object (Johnson-Frey, 2004, Negri et al., 2007, Rothi, Ochipa, & Heilman, 1991). If parietal cortex is not needed for recognition and naming, then why is it active when subjects simply view and/or name tools? What is the functional role of that activity? One possibility is that this parietal activity serves no function at all. Rather, it simply reflects activity that automatically propagates from other parts of the circuit necessary to perform the task at hand. Naming tools requires activity in temporal cortex, so regions in posterior parietal cortex may become active merely as a byproduct of temporal–parietal connectivity, with no functional significance of their own. Although this account is logically possible, I do not think it is a serious contender. It takes a lot of metabolic energy to run a brain, and I doubt that systems have evolved to waste it (Raichle, 2006). Neural activity is never epiphenomenal; it always reflects some function, even though that function may not be readily apparent.

I think that there are two non-mutually exclusive purposes behind activity in the dorsal processing stream when naming tools. One possibility is that this activation is, in fact, part of the “full” representation of the concept of a tool (Mahon & Caramazza, 2008). Under that view, perception- and action-related properties are both constitutive, essential components of the full concept of a particular tool. Removal of one of these components (for example, action-related information) as a consequence of brain injury or disease would result in an impoverished concept. The concept of that tool would nevertheless remain grounded, but now by perceptual systems alone (for a different interpretation, see Mahon & Caramazza, 2008).

Another possibility is that parietal activity reflects the spread of activity to regions supporting a function that typically follows object identification. For example, I have previously argued that the hippocampus is active when we name objects not because it is necessary to name them (it is not), but rather because it is necessary to be able to recall having named them (Martin, 1999). In a similar fashion, and consistent with the well-established role of the dorsal stream in action representation (Goodale & Milner, 1992), parietal as well as premotor activity associated with viewing tools might reflect a prediction or prime for future action (Martin, 2009, Simmons & Martin, 2012). Experience has taught us that seeing some objects is followed by an action. Activating the dorsal stream when viewing a tool may be a prime to use it. Activating the insula when viewing an appetizing food may be a prime to eat it, a phenomenon of which the advertising industry has long been aware.

Concluding comment

The GRAPES model provides a framework for understanding how information about object-associated properties is organized in the brain. A central advance of this model over previous formulations is a deeper recognition and understanding of the role played by the brain’s large-scale, intrinsic circuitry in providing dynamic links between regions representing the salient properties associated with specific object categories.

Many of these properties are situated within the ventral and dorsal processing streams that play a fundamental role in object and action representation. An ever-increasing body of data from monkey neuroanatomy and neurophysiology, and from human neuroimaging, is providing a more detailed understanding of this circuitry. One major implication of these findings is that the notion of serial, hierarchically organized processing streams is no longer tenable. Instead, these large-scale systems are best characterized by discrete, yet highly interactive circuits, which, in turn, are composed of multiple, recurrent feedforward and feedback loops (see Kravitz, Saleem, Baker, & Mishkin, 2011, Kravitz, Saleem, Baker, Ungerleider, & Mishkin, 2013, for a detailed overview and compelling synthesis of these findings). This type of architecture is assumed to characterize the category-specific circuits discussed here and to underpin the dynamic interaction between higher-order conceptual, perceptual, and lower-order sensory regions in the service of specific task and bodily demands.

The emphasis on grounded circuitry may also inform our understanding of how abstract concepts are organized. Imaging studies of social and social–emotional concepts (such as “brave,” “honor,” “generous,” “impolite,” and “convince”) have consistently implicated the most anterior extent of the superior temporal gyrus/sulcus (STG/STS; Simmons, Reddish, Bellgowan, & Martin, 2010, Wilson-Mendenhall, Simmons, Martin, & Barsalou, 2013, Zahn et al., 2007; for reviews, see Olson, McCoy, Klobusicky, & Ross, 2013, Simmons & Martin, 2009, Wong & Gallate, 2012), as well as medial, especially ventromedial, prefrontal cortex (Mitchell, Heatherton, & Macrae, 2002, Roy, Shohamy, & Wager, 2012, Wilson-Mendenhall et al., 2013). One might think that any conceptual information represented in these very anterior brain regions would be disconnected from, rather than grounded in, action and perceptual systems. Yet the circuitry connecting these regions with other areas of the brain suggests otherwise.

Tract-tracing studies of the macaque brain (Saleem, Kondo, & Price, 2008) and task-based (Burnett & Blakemore, 2009) and resting-state (Gotts et al., 2012, Simmons & Martin, 2012, Simmons et al., 2010) functional connectivity studies of the human brain have revealed strong connectivity between these anterior temporal and prefrontal regions. For example, anterior STG/STS, but not anterior ventral temporal cortex, is strongly connected to medial prefrontal cortex (Saleem et al., 2008). In addition, human functional-imaging studies have shown that both of these regions are part of a broader circuit implicated in multiple aspects of social functioning in typically developing individuals (for reviews, see Adolphs, 2009, Frith & Frith, 2007) and in social dysfunction in autistic subjects (e.g., Ameis & Catani, 2015, Gotts et al., 2012, Libero et al., 2014, Uddin et al., 2011, Wallace et al., 2010).

So, how are these social and social–emotional concepts grounded? They are grounded by virtue of being situated within circuitry that includes regions for perceiving and representing biological form (lateral region of the fusiform gyrus) and biological motion (posterior STS) and for recognizing emotion (the amygdala) (Burnett & Blakemore, 2009, Gotts et al., 2012, Simmons & Martin, 2012, Simmons et al., 2010). Clearly, much work remains to be done in uncovering the roles of the anterior temporal and frontal cortices in representing our social world. Nevertheless, these data provide an example of how even abstract concepts may be grounded in our action, perception, and emotion systems.

Acknowledgments

I thank Chris Baker, Alfonso Caramazza, Anjan Chatterjee, Dwight Kravitz, Brad Mahon, and the members of my laboratory for their critical comments on earlier versions of this manuscript. This work was supported by the National Institute of Mental Health, National Institutes of Health, Division of Intramural Research (1 ZIA MH 002588-25; NCT01031407). The views expressed in this article do not necessarily represent the views of the NIMH, NIH, HHS, or the United States Government.

Footnotes

1. Multivariate pattern analysis methods (Haxby, Connolly, & Guntupalli, 2014) can be used to make claims about the degree of similarity in underlying representations, but they cannot determine whether the representations are identical.

2. It is of interest to note that this is one case in which the neuroimaging data preceded the lesion data; the prominence of the left posterior middle temporal gyrus for identifying tools and retrieving action knowledge was established prior to the neuropsychological findings. It is also noteworthy that these patient lesion data on loss of knowledge about tools stand as a serious challenge to proponents of a single, amodal semantic hub (e.g., Lambon Ralph, Sage, Jones, & Mayberry, 2010, Patterson, Nestor, & Rogers, 2007).

References

- Adolphs R. The social brain: Neural basis of social knowledge. Annual Review of Psychology. 2009;60:693–716. doi: 10.1146/annurev.psych.60.110707.

- Allport DA. Distributed memory, modular subsystems and dysphasia. In: Newman SP, Epstein R, editors. Current perspectives in dysphasia. New York, NY: Churchill Livingstone; 1985. pp. 32–60.

- Amedi A, Malach R, Hendler T, Peled S, Zohary E. Visuohaptic object-related activation in the ventral visual pathway. Nature Neuroscience. 2001;4:687–689. doi: 10.1038/85201.

- Amedi A, Jacobson G, Hendler T, Malach R, Zohary E. Convergence of visual and tactile shape processing in the human lateral occipital complex. Cerebral Cortex. 2002;12:1202–1212. doi: 10.1093/cercor/12.11.1202.

- Amedi A, Stern WM, Camprodon JA, Bermpohl F, Merabet L, Rotman S, … Pascual-Leone A. Shape conveyed by visual-to-auditory sensory substitution activates the lateral occipital complex. Nature Neuroscience. 2007;10:324–330. doi: 10.1038/nn1912.

- Ameis SH, Catani M. Altered white matter connectivity as a neural substrate for social impairment in Autism Spectrum Disorder. Cortex. 2015;62:158–181. doi: 10.1016/j.cortex.2014.10.014.

- Amsel BD, Urbach TP, Kutas M. Empirically grounding grounded cognition: The case of color. NeuroImage. 2014;99:149–157. doi: 10.1016/j.neuroimage.2014.05.025.

- Baldassano C, Beck DM, Fei-Fei L. Differential connectivity within the parahippocampal place area. NeuroImage. 2013;75:228–237. doi: 10.1016/j.neuroimage.2013.02.073.

- Barsalou LW. Perceptual symbol systems. Behavioral and Brain Sciences. 1999;22:577–660. doi: 10.1017/S0140525X99002149.

- Beauchamp MS, Haxby JV, Jennings JE, DeYoe EA. An fMRI version of the Farnsworth–Munsell 100-hue test reveals multiple color-selective areas in human ventral occipitotemporal cortex. Cerebral Cortex. 1999;9:257–263. doi: 10.1093/cercor/9.3.257.

- Beauchamp MS, Lee KE, Haxby JV, Martin A. Parallel visual motion processing streams for manipulable objects and human movements. Neuron. 2002;34:149–159. doi: 10.1016/s0896-6273(02)00642-6.

- Beauchamp MS, Lee KE, Haxby JV, Martin A. fMRI responses to video and point-light displays of moving humans and manipulable objects. Journal of Cognitive Neuroscience. 2003;15:991–1001. doi: 10.1162/089892903770007380.

- Behrmann M, Plaut DC. Distributed circuits, not circumscribed centers, mediate visual cognition. Trends in Cognitive Sciences. 2013;17:210–219. doi: 10.1016/j.tics.2013.03.007.

- Brambati SM, Myers D, Wilson A, Rankin KP, Allison SC, Rosen HJ, … Gorno-Tempini ML. The anatomy of category-specific object naming in neurodegenerative diseases. Journal of Cognitive Neuroscience. 2006;18:1644–1653. doi: 10.1162/jocn.2006.18.10.1644.

- Buckner RL, Sepulcre J, Talukdar T, Krienen FM, Liu H, Hedden T, … Johnson KA. Cortical hubs revealed by intrinsic functional connectivity: Mapping, assessment of stability, and relation to Alzheimer’s disease. Journal of Neuroscience. 2009;29:1860–1873. doi: 10.1523/JNEUROSCI.5062-08.2009.

- Buffalo EA, Fries P, Landman R, Buschman TJ, Desimone R. Laminar differences in gamma and alpha coherence in the ventral stream. Proceedings of the National Academy of Sciences. 2011;108:11262–11267. doi: 10.1073/pnas.1011284108.

- Burnett S, Blakemore SJ. Functional connectivity during a social emotion task in adolescents and adults. European Journal of Neuroscience. 2009;29:1294–1301. doi: 10.1111/j.1460-9568.2009.06674.x.

- Campanella F, D’Agostini S, Skrap M, Shallice T. Naming manipulable objects: Anatomy of a category specific effect in left temporal tumours. Neuropsychologia. 2010;48:1583–1597. doi: 10.1016/j.neuropsychologia.2010.02.002.

- Capitani E, Laiacona M, Mahon B, Caramazza A. What are the facts of semantic category-specific deficits? A critical review of the clinical evidence. Cognitive Neuropsychology. 2003;20:213–261. doi: 10.1080/02643290244000266.

- Caramazza A, Shelton JR. Domain-specific knowledge systems in the brain: The animate-inanimate distinction. Journal of Cognitive Neuroscience. 1998;10:1–34. doi: 10.1162/089892998563752.

- Caramazza A, Hillis AE, Rapp BC, Romani C. The multiple semantics hypothesis: Multiple confusions? Cognitive Neuropsychology. 1990;7:161–189.

- Carey S. The origin of concepts. New York, NY: Oxford University Press; 2009.

- Carrera E, Tononi G. Diaschisis: Past, present, future. Brain. 2014;137:2408–2422. doi: 10.1093/brain/awu101.

- Chao LL, Martin A. Cortical regions associated with perceiving, naming, and knowing about colors. Journal of Cognitive Neuroscience. 1999;11:25–35. doi: 10.1162/089892999563229.

- Chao LL, Martin A. Representation of manipulable man-made objects in the dorsal stream. NeuroImage. 2000;12:478–484. doi: 10.1006/nimg.2000.0635.

- Chouinard PA, Goodale MA. Category-specific neural processing for naming pictures of animals and naming pictures of tools: An ALE meta-analysis. Neuropsychologia. 2010;48:409–418. doi: 10.1016/j.neuropsychologia.2009.09.032.

- Cole MW, Pathak S, Schneider W. Identifying the brain’s most globally connected regions. NeuroImage. 2010;49:3132–3148. doi: 10.1016/j.neuroimage.2009.11.001.

- Cree GS, McRae K. Analyzing the factors underlying the structure and computation of the meaning of chipmunk, cherry, chisel, cheese, and cello (and many other such concrete nouns). Journal of Experimental Psychology: General. 2003;132:163–201. doi: 10.1037/0096-3445.132.2.163.

- Damasio AR. Time-locked multiregional retroactivation: A systems-level proposal for the neural substrates of recall and recognition. Cognition. 1989;33:25–62. doi: 10.1016/0010-0277(89)90005-x.

- Damasio H, Tranel D, Grabowski T, Adolphs R, Damasio A. Neural systems behind word and concept retrieval. Cognition. 2004;92:179–229. doi: 10.1016/j.cognition.2002.07.001.

- Deen B, McCarthy G. Reading about the actions of others: Biological motion imagery and action congruency influence brain activity. Neuropsychologia. 2010;48:1607–1615. doi: 10.1016/j.neuropsychologia.2010.01.028.

- Felleman DJ, van Essen DC. Distributed hierarchical processing in the primate cortex. Cerebral Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a.

- Foxworthy WA, Clemo HR, Meredith MA. Laminar and connectional organization of a multisensory cortex. Journal of Comparative Neurology. 2013;521:1867–1890. doi: 10.1002/cne.23264.

- Frith CD, Frith U. Social cognition in humans. Current Biology. 2007;17:R724–R732. doi: 10.1016/j.cub.2007.05.068.

- Glenberg AM, Gallese V. Action-based language: A theory of language acquisition, comprehension, and production. Cortex. 2012;48:905–922. doi: 10.1016/j.cortex.2011.04.010.

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends in Neurosciences. 1992;15:20–25. doi: 10.1016/0166-2236(92)90344-8.

- Gotts SJ, Simmons WK, Milbury LA, Wallace GL, Cox RW, Martin A. Fractionation of social brain circuits in autism spectrum disorders. Brain. 2012;135:2711–2725. doi: 10.1093/brain/aws160.

- Grafton ST, Fadiga L, Arbib MA, Rizzolatti G. Premotor cortex activation during observation and naming of familiar tools. NeuroImage. 1997;6:231–236. doi: 10.1006/nimg.1997.0293.

- Harnad S. The symbol grounding problem. Physica D. 1990;42:335–346.

- Haxby JV, Hoffman EA, Gobbini MI. A distributed human neural system for face perception. Trends in Cognitive Sciences. 2000;4:223–233. doi: 10.1016/s1364-6613(00)01482-0.

- Haxby JV, Connolly AC, Guntupalli JS. Decoding neural representational spaces using multivariate pattern analysis. Annual Review of Neuroscience. 2014;37:435–456. doi: 10.1146/annurev-neuro-062012-170325.

- He BJ, Snyder AZ, Vincent JL, Epstein A, Shulman GL, Corbetta M. Breakdown of functional connectivity in frontoparietal networks underlies behavioral deficits in spatial neglect. Neuron. 2007;53:905–918. doi: 10.1016/j.neuron.2007.02.013.

- Howard RJ, ffytche DH, Barnes J, McKeefry D, Ha Y, Woodruff PW, … Brammer M. The functional anatomy of imagining and perceiving colour. NeuroReport. 1998;9:1019–1023. doi: 10.1097/00001756-199804200-00012.

- Hsu NS, Kraemer DJM, Oliver RT, Schlichting ML, Thompson-Schill SL. Color, context, and cognitive style: Variations in color knowledge retrieval as a function of task and subject variables. Journal of Cognitive Neuroscience. 2011;23:2544–2557. doi: 10.1162/jocn.2011.21619.

- Hsu NS, Frankland SM, Thompson-Schill SL. Chromaticity of color perception and object color knowledge. Neuropsychologia. 2012;50:327–333. doi: 10.1016/j.neuropsychologia.2011.12.003.

- Humphreys G, Forde EME. Hierarchies, similarity, and interactivity in object recognition: “Category-specific” neuropsychological deficits. Behavioral and Brain Sciences. 2001;24:453–509.

- Hutchison RM, Culham JC, Everling S, Flanagan JR, Gallivan JP. Distinct and distributed functional connectivity patterns across cortex reflect the domain-specific constraints of object, face, scene, body, and tool category-selective modules in the ventral visual pathway. NeuroImage. 2014;96:216–236. doi: 10.1016/j.neuroimage.2014.03.068.

- Huth AG, Nishimoto S, Vu AT, Gallant JL. A continuous semantic space describes the representation of thousands of object and action categories across the human brain. Neuron. 2012;76:1210–1224. doi: 10.1016/j.neuron.2012.10.014.

- Johnson-Frey SH. The neural bases of complex tool use in humans. Trends in Cognitive Sciences. 2004;8:71–78. doi: 10.1016/j.tics.2003.12.002.

- Kellenbach ML, Brett M, Patterson K. Actions speak louder than functions: The importance of manipulability and action in tool representation. Journal of Cognitive Neuroscience. 2003;15:20–46. doi: 10.1162/089892903321107800.

- Kiefer M, Pulvermüller F. Conceptual representations in mind and brain: Theoretical developments, current evidence and future directions. Cortex. 2012;48:805–825. doi: 10.1016/j.cortex.2011.04.006.

- Killgore WDS, Young AD, Femia LA, Bogorodzki P, Rogowska J, Yurgelun-Todd DA. Cortical and limbic activation during viewing of high- versus low-calorie foods. NeuroImage. 2003;19:1381–1394. doi: 10.1016/s1053-8119(03)00191-5.

- Kosslyn SM, Thompson WL, Ganis G. The case for mental imagery. New York, NY: Oxford University Press; 2006.

- Kravitz DJ, Saleem KS, Baker CI, Mishkin M. A new neural framework for visuospatial processing. Nature Reviews Neuroscience. 2011;12:217–230. doi: 10.1038/nrn3008.

- Kravitz DJ, Saleem KS, Baker CI, Ungerleider LG, Mishkin M. The ventral visual pathway: An expanded neural framework for the processing of object quality. Trends in Cognitive Sciences. 2013;17:26–49. doi: 10.1016/j.tics.2012.10.011.

- Lambon Ralph MA, Sage K, Jones RW, Mayberry EJ. Coherent concepts are computed in the anterior temporal lobes. Proceedings of the National Academy of Sciences. 2010;107:2717–2722. doi: 10.1073/pnas.0907307107.

- Libero LE, DeRamus TP, Deshpande HD, Kana RK. Surface-based morphometry of the cortical architecture of autism spectrum disorders: Volume, thickness, area, and gyrification. Neuropsychologia. 2014;62:1–10. doi: 10.1016/j.neuropsychologia.2014.07.001.

- Mahon BZ. What is embodied about cognition? Language, Cognition and Neuroscience. 2015;30:420–429. doi: 10.1080/23273798.2014.987791.

- Mahon BZ, Caramazza A. A critical look at the embodied cognition hypothesis and a new proposal for grounding conceptual content. Journal of Physiology-Paris. 2008;102:59–70. doi: 10.1016/j.jphysparis.2008.03.004.

- Mahon BZ, Milleville SC, Negri GAL, Rumiati RI, Caramazza A, Martin A. Action-related properties shape object representations in the ventral stream. Neuron. 2007;55:507–520. doi: 10.1016/j.neuron.2007.07.011.

- Mahon BZ, Anzellotti S, Schwarzbach J, Zampini M, Caramazza A. Category-specific organization in the human brain does not require visual experience. Neuron. 2009;63:397–405. doi: 10.1016/j.neuron.2009.07.012.

- Mahon BZ, Kumar N, Almeida J. Spatial frequency tuning reveals interactions between the dorsal and ventral visual systems. Journal of Cognitive Neuroscience. 2013;25:862–871. doi: 10.1162/jocn_a_00370.

- Martin A. The organization of semantic knowledge and the origin of words in the brain. In: Jablonski NG, Aiello LC, editors. The origins and diversification of language. San Francisco, CA: California Academy of Sciences; 1998. pp. 69–88.

- Martin A. Automatic activation of the medial temporal lobe during encoding: Lateralized influences of meaning and novelty. Hippocampus. 1999;9:62–70. doi: 10.1002/(SICI)1098-1063(1999)9:1<62::AID-HIPO7>3.0.CO;2-K.

- Martin A. The representation of object concepts in the brain. Annual Review of Psychology. 2007;58:25–45. doi: 10.1146/annurev.psych.57.102904.190143.

- Martin A. Circuits in mind: The neural foundations for object concepts. In: Gazzaniga MS, editor. The cognitive neurosciences. 4th ed. Cambridge, MA: MIT Press; 2009. pp. 1031–1045.

- Martin A, Haxby JV, Lalonde FM, Wiggs CL, Ungerleider LG. Discrete cortical regions associated with knowledge of color and knowledge of action. Science. 1995;270:102–105. doi: 10.1126/science.270.5233.102.

- Martin A, Ungerleider LG, Haxby JV. Category specificity and the brain: The sensory–motor model of semantic representations of objects. In: Gazzaniga MS, editor. The cognitive neurosciences. 2nd ed. Cambridge, MA: MIT Press; 2000. pp. 1023–1036.

- McClelland JL, Rogers TT. The parallel distributed processing approach to semantic cognition. Nature Reviews Neuroscience. 2003;4:310–322. doi: 10.1038/nrn1076.

- McKeefry DJ, Zeki S. The position and topography of the human colour centre as revealed by functional magnetic resonance imaging. Brain. 1997;120:2229–2242. doi: 10.1093/brain/120.12.2229.

- Meyer K, Kaplan JT, Essex R, Webber C, Damasio H, Damasio A. Predicting visual stimuli on the basis of activity in auditory cortices. Nature Neuroscience. 2010;13:667–668. doi: 10.1038/nn.2533.

- Miceli G, Fouch E, Capasso R, Shelton JR, Tomaiuolo F, Caramazza A. The dissociation of color from form and function knowledge. Nature Neuroscience. 2001;4:662–667. doi: 10.1038/88497.

- Mitchell JP, Heatherton TF, Macrae CN. Distinct neural systems subserve person and object knowledge. Proceedings of the National Academy of Sciences. 2002;99:15238–15243. doi: 10.1073/pnas.232395699.

- Murphey DK, Yoshor D, Beauchamp MS. Perception matches selectivity in the human anterior color center. Current Biology. 2008;18:216–220. doi: 10.1016/j.cub.2008.01.013.

- Murphy GL. The big book of concepts. Cambridge, MA: MIT Press; 2002.

- Negri GAL, Rumiati RI, Zadini A, Ukmar M, Mahon BZ, Caramazza A. What is the role of motor simulation in action & object recognition? Evidence from apraxia. Cognitive Neuropsychology. 2007;24:795–816. doi: 10.1080/02643290701707412.

- Noppeney U, Friston KJ, Price CJ. Effects of visual deprivation on the organization of the semantic system. Brain. 2003;126:1620–1627. doi: 10.1093/brain/awg152.

- O’Neil EB, Hutchison RM, McLean DA, Köhler S. Resting-state fMRI reveals functional connectivity between face-selective perirhinal cortex and the fusiform face area related to face inversion. NeuroImage. 2014;92:349–355. doi: 10.1016/j.neuroimage.2014.02.005.

- Olson IR, McCoy D, Klobusicky E, Ross LA. Social cognition and the anterior temporal lobes: A review and theoretical framework. Social Cognitive and Affective Neuroscience. 2013;8:123–133. doi: 10.1093/scan/nss119.

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nature Reviews Neuroscience. 2007;8:976–987. doi: 10.1038/nrn2277.

- Paulesu E, Harrison J, Baron-Cohen S, Watson JD, Goldstein L, Heather J, … Frith CD. The physiology of coloured hearing. A PET activation study of colour–word synaesthesia. Brain. 1995;118:661–676. doi: 10.1093/brain/118.3.661.

- Pietrini P, Furey ML, Ricciardi E, Gobbini MI, Wu WHC, Cohen L, … Haxby JV. Beyond sensory images: Object-based representation in the human ventral pathway. Proceedings of the National Academy of Sciences. 2004;101:5658–5663. doi: 10.1073/pnas.0400707101.

- Plaut DC. Graded modality-specific specialization in semantics: A computational account of optic aphasia. Cognitive Neuropsychology. 2002;19:603–639. doi: 10.1080/02643290244000112.

- Power JD, Schlaggar BL, Lessov-Schlaggar CN, Petersen SE. Evidence for hubs in human functional brain networks. Neuron. 2013;79:798–813. doi: 10.1016/j.neuron.2013.07.035.

- Price CJ, Warburton EA, Moore CJ, Frackowiak RSJ, Friston KJ. Dynamic diaschisis: Anatomically remote and context-sensitive human brain lesions. Journal of Cognitive Neuroscience. 2001;13:419–429. doi: 10.1162/08989290152001853.

- Pylyshyn Z. Return of the mental image: Are there really pictures in the brain? Trends in Cognitive Sciences. 2003;7:113–118. doi: 10.1016/S1364-6613(03)00003-2.

- Raichle ME. The brain’s dark energy. Science. 2006;314:1249–1250. doi: 10.1126/science.1134405.

- Rosch E, Mervis CB, Gray WD, Johnson DM, Boyes-Braem P. Basic objects in natural categories. Cognitive Psychology. 1976;8:382–439. doi: 10.1016/0010-0285(76)90013-X.

- Rothi LJ, Ochipa ZC, Heilman KM. A cognitive neuropsychological model of limb praxis. Cognitive Neuropsychology. 1991;8:443–458.

- Roy M, Shohamy D, Wager TD. Ventromedial prefrontal–subcortical systems and the generation of affective meaning. Trends in Cognitive Sciences. 2012;16:147–156. doi: 10.1016/j.tics.2012.01.005.

- Saleem KS, Kondo H, Price JL. Complementary circuits connecting the orbital and medial prefrontal networks with the temporal, insular, and opercular cortex in the macaque monkey. Journal of Comparative Neurology. 2008;506:659–693. doi: 10.1002/cne.21577.

- Saygin AP, McCullough S, Alac M, Emmorey K. Modulation of BOLD response in motion-sensitive lateral temporal cortex by real and fictive motion sentences. Journal of Cognitive Neuroscience. 2010;22:2480–2490. doi: 10.1162/jocn.2009.21388.

- Shallice T. Specialization within the semantic system. Cognitive Neuropsychology. 1988;5:133–142. doi: 10.1080/02643298808252929.

- Shallice T. Multiple semantics: Whose confusions? Cognitive Neuropsychology. 1993;10:251–261. doi: 10.1080/02643299308253463.

- Shuren JE, Brott TG, Schefft BK, Houston W. Preserved color imagery in an achromatopsic. Neuropsychologia. 1996;34:485–489. doi: 10.1016/0028-3932(95)00153-0.

- Simmons WK, Barsalou LW. The similarity-in-topography principle: Reconciling theories of conceptual deficits. Cognitive Neuropsychology. 2003;20:451–486. doi: 10.1080/02643290342000032.

- Simmons WK, Martin A. The anterior temporal lobes and the functional architecture of semantic memory. Journal of the International Neuropsychological Society. 2009;15:645–649. doi: 10.1017/S1355617709990348.

- Simmons WK, Martin A. Spontaneous resting-state BOLD fluctuations map domain-specific neural networks. Social Cognitive and Affective Neuroscience. 2012;7:467–475. doi: 10.1093/scan/nsr018.

- Simmons WK, Martin A, Barsalou LW. Pictures of appetizing foods activate gustatory cortices for taste and reward. Cerebral Cortex. 2005;15:1602–1608. doi: 10.1093/cercor/bhi038.

- Simmons WK, Ramjee V, Beauchamp MS, McRae K, Martin A, Barsalou LW. A common neural substrate for perceiving and knowing about color. Neuropsychologia. 2007;45:2802–2810. doi: 10.1016/j.neuropsychologia.2007.05.002.

- Simmons WK, Reddish M, Bellgowan PSF, Martin A. The selectivity and functional connectivity of the anterior temporal lobes. Cerebral Cortex. 2010;20:813–825. doi: 10.1093/cercor/bhp149.

- Simmons WK, Rapuano KM, Kallman SJ, Ingeholm JE, Miller B, Gotts SJ, … Martin A. Category-specific integration of homeostatic signals in caudal but not rostral human insula. Nature Neuroscience. 2013;16:1551–1552. doi: 10.1038/nn.3535.

- Small DM. Taste representation in the human insula. Brain Structure and Function. 2010;214:551–561. doi: 10.1007/s00429-010-0266-9.

- Smith FW, Goodale MA. Decoding visual object categories in early somatosensory cortex. Cerebral Cortex. 2015;25:1020–1031. doi: 10.1093/cercor/bht292.

- Stasenko A, Garcea FE, Dombovy M, Mahon BZ. When concepts lose their color: A case of object color knowledge impairment. Cortex. 2014;58:217–238. doi: 10.1016/j.cortex.2014.05.013.

- Stevens WD, Buckner RL, Schacter DL. Correlated low-frequency BOLD fluctuations in the resting human brain are modulated by recent experience in category-preferential visual regions. Cerebral Cortex. 2010;20:1997–2006. doi: 10.1093/cercor/bhp270.

- Stevens WD, Tessler MH, Peng CS, Martin A. Functional connectivity constrains the category-related organization of human ventral occipitotemporal cortex. Human Brain Mapping. 2015. doi: 10.1002/hbm.22764.

- Thompson-Schill SL. Neuroimaging studies of semantic memory: Inferring “how” from “where”. Neuropsychologia. 2003;41:280–292. doi: 10.1016/S0028-3932(02)00161-6.

- Tranel D, Damasio H, Damasio AR. A neural basis for retrieving conceptual knowledge. Neuropsychologia. 1997;35:1319–1327. doi: 10.1016/s0028-3932(97)00085-7.

- Tranel D, Damasio H, Eichhorn GR, Grabowski T, Ponto LLB, Hichwa RD. Neural correlates of naming animals from their characteristic sounds. Neuropsychologia. 2003a;41:847–854. doi: 10.1016/S0028-3932(02)00223-3.

- Tranel D, Kemmerer D, Adolphs R, Damasio H, Damasio AR. Neural correlates of conceptual knowledge for actions. Cognitive Neuropsychology. 2003b;20:409–432. doi: 10.1080/02643290244000248.

- Tranel D, Manzel K, Asp E, Kemmerer D. Naming dynamic and static actions: Neuropsychological evidence. Journal of Physiology, Paris. 2008;102:80–94. doi: 10.1016/j.jphysparis.2008.03.008.

- Turk-Browne NB, Norman-Haignere SV, McCarthy G. Face-specific resting functional connectivity between the fusiform gyrus and posterior superior temporal sulcus. Frontiers in Human Neuroscience. 2010;4:176. doi: 10.3389/fnhum.2010.00176.

- Uddin LQ, Menon V, Young CB, Ryali S, Chen T, Khouzam A, … Hardan AY. Multivariate searchlight classification of structural magnetic resonance imaging in children and adolescents with autism. Biological Psychiatry. 2011;70:833–841. doi: 10.1016/j.biopsych.2011.07.014.

- van der Laan LN, de Ridder DTE, Viergever MA, Smeets PAM. The first taste is always with the eyes: A meta-analysis on the neural correlates of processing visual food cues. NeuroImage. 2011;55:296–303. doi: 10.1016/j.neuroimage.2010.11.055.

- van Kerkoerle T, Self MW, Dagnino B, Gariel-Mathis MA, Poort J, van der Togt C, Roelfsema PR. Alpha and gamma oscillations characterize feedback and feedforward processing in monkey visual cortex. Proceedings of the National Academy of Sciences. 2014;111:14332–14341. doi: 10.1073/pnas.1402773111.

- Wallace GL, Dankner N, Kenworthy L, Giedd JN, Martin A. Age-related temporal and parietal cortical thinning in autism spectrum disorders. Brain. 2010;133:3745–3754. doi: 10.1093/brain/awq279.

- Warrington EK, McCarthy R. Categories of knowledge. Further fractionations and an attempted integration. Brain. 1987;110:1273–1296. doi: 10.1093/brain/110.5.1273.

- Warrington EK, Shallice T. Category specific semantic impairments. Brain. 1984;107:829–853. doi: 10.1093/brain/107.3.829.

- Wiggs CL, Weisberg JA, Martin A. Neural correlates of semantic and episodic memory retrieval. Neuropsychologia. 1999;37:103–118. doi: 10.1016/s0028-3932(98)00044-x.

- Wilson A, Foglia L. Embodied cognition. In: Zalta EN, editor. The Stanford encyclopedia of philosophy (Fall 2011 ed.). 2011. Retrieved from http://plato.stanford.edu/archives/fall2011/entries/embodied-cognition/

- Wilson-Mendenhall C, Simmons WK, Martin A, Barsalou LW. Contextual processing of abstract concepts reveals neural representations of non-linguistic semantic content. Journal of Cognitive Neuroscience. 2013;25:920–935. doi: 10.1162/jocn_a_00361.

- Wong C, Gallate J. The function of the anterior temporal lobe: A review of the empirical evidence. Brain Research. 2012;1449:94–116. doi: 10.1016/j.brainres.2012.02.017.

- Zahn R, Moll J, Krueger F, Huey ED, Garrido G, Grafman J. Social concepts are represented in the superior anterior temporal cortex. Proceedings of the National Academy of Sciences. 2007;104:6430–6435. doi: 10.1073/pnas.0607061104.

- Zeki S, Watson JDG, Lueck CJ, Friston KJ, Kennard C, Frackowiak RSJ. A direct demonstration of functional specialization in human visual cortex. Journal of Neuroscience. 1991;11:641–649. doi: 10.1523/JNEUROSCI.11-03-00641.1991.

- Zwaan RA, Taylor LJ. Seeing, acting, understanding: Motor resonance in language comprehension. Journal of Experimental Psychology: General. 2006;135:1–11. doi: 10.1037/0096-3445.135.1.1.