Abstract

Although most studies of language learning take place in quiet laboratory settings, everyday language learning occurs under noisy conditions. The current research investigated the effects of background speech on word learning. Both younger (22‐ to 24‐month‐olds; n = 40) and older (28‐ to 30‐month‐olds; n = 40) toddlers successfully learned novel label–object pairings when target speech was 10 dB louder than background speech but not when the signal‐to‐noise ratio (SNR) was 5 dB. Toddlers (28‐ to 30‐month‐olds; n = 26) successfully learned novel words with a 5‐dB SNR when they initially heard the labels embedded in fluent speech without background noise, before they were mapped to objects. The results point to both challenges and protective factors that may impact language learning in complex auditory environments.

Children have an impressive ability to learn words quickly and with limited exposure (Carey & Bartlett, 1978). Young word learners are sensitive to the statistics of their auditory environment (Gerken, 2006; Saffran, Aslin, & Newport, 1996), can use the syntactic structure of a sentence to infer a word's meaning (Naigles, 1996; Yuan & Fisher, 2009), and are highly influenced by the social context in which they learn (e.g., Bannard & Tomasello, 2012; Hoff, 2006). Thus, the auditory environment in which children learn directly affects their language ability (e.g., Hoff, 2003; Hoff & Naigles, 2002; Weisleder & Fernald, 2013).

Although children's prodigious ability to learn words has been well established, most word‐learning experiments are conducted in quiet laboratory settings. This is quite different from the language learning environments that are likely typical for most young children: households or child‐care settings with multiple people talking at the same time, considerable environmental noise, and many other distractions (e.g., TV) that are likely to make the word‐learning task much more challenging. Nevertheless, in the absence of developmental delays or disorders, children's vocabularies grow rapidly over the first years of life, even though much of their word learning presumably occurs amid many distractions, auditory and otherwise. To fully understand how young children learn words and other aspects of their native language(s), we must account for the increased complexity created by extraneous auditory information. Ecologically motivated studies suggest that noise in a child's home or school affects the child both cognitively and psychophysiologically, as evidenced by poorer school performance and elevated cortisol levels and heart rate (Evans, Hygge, & Bullinger, 1995). Reading skills, speech perception, and memory appear to be particularly vulnerable to the effects of noise (Evans & Maxwell, 1997; Evans et al., 1995; Maxwell & Evans, 2000).

In a classic study, Cohen, Glass, and Singer (1973) measured interior and exterior noise levels at the Bridge Apartments, an income‐controlled housing project built over a highway in New York City, and examined the effects of environmental noise on children's reading ability and auditory discrimination. Average exterior noise levels were relatively high (84 dB), and interior noise levels ranged from 55 to 66 dB, decreasing as floor level increased. School‐aged children who lived on the lower, noisier floors showed poorer auditory discrimination, and children's auditory discrimination was significantly correlated with their reading ability. These findings suggest that chronic noise exposure may lead children to become inattentive to acoustic cues, which may in turn reduce their ability to discriminate phonemes and create subsequent challenges in reading. Interestingly, children who are exposed to intense environmental noise may initially show an increased ability to tune out auditory distractors, but this benefit disappears after 4 years of exposure (Cohen, Krantz, Evans, Stokols, & Kelly, 1981). Thus, although noise exposure may initially confer an advantage to listeners, this advantage does not persist when exposure continues over extended periods of time.

Studies such as the apartment noise study described above suggest that, by uncoupling learning from the complex environments in which it naturally occurs, laboratory testing may obscure the broader picture of how children learn their native language(s). Experimental studies of early language development and other aspects of infant cognition have generally used laboratory‐based methods that avoid the complicating effects of noise and other environmental variables. One laboratory method that approximates a dimension of naturalistic language exposure is to present speech superimposed over background noise. Using this technique, researchers have created auditory stimuli in the laboratory that simulate some of the complexity of everyday learning environments.

Infants’ ability to detect important information in a target speech stream within a multitalker environment develops with age. For instance, 13‐month‐old infants recognized their own name when it was presented in background babble at a relatively small signal‐to‐noise ratio (SNR) of +5 dB (i.e., with their name only slightly louder than the babble), whereas 5‐ and 9‐month‐old infants recognized their names only when the target voice was 10 dB higher in intensity than the background speech (Newman, 2005). Two‐year‐olds appear to be particularly adept at recognizing familiar words in background speech, tolerating background speech up to 5 dB louder than the target speech stream, that is, a −5 dB SNR (Newman, 2011).

These results demonstrate that familiar‐word recognition is susceptible to auditory interference and that this susceptibility changes as children get older. Further research has found that infants can overcome competing speech under certain circumstances, particularly when the target and background speech are spatially separated (Litovsky, 2005) and when they differ in talker gender, intensity, and number of talkers (Newman, 2009; Newman & Jusczyk, 1996). For example, Newman and Jusczyk (1996) investigated infants’ ability to recognize familiar words presented in noisy backgrounds consisting of multiple talkers. They found that infants can selectively attend to a specific talker in order to perceptually separate multiple speech streams, relying on talker intensity and gender as cues to the most pertinent stream. These results suggest that infants can exploit some of the same cues that adults use to decide which of several overlapping speech streams to attend to (Treisman, 1960). However, research in this area has largely focused on recognition of highly familiar words.

Recent studies have begun to examine the effect of background noise on children's comprehension and production of newly learned words. Comprehension of words learned in noise, relative to words learned in quiet, does not appear to be affected by the presence of noise in children ranging from 2.5 to 10 years of age (Blaiser, Nelson, & Kohnert, 2014; Riley & McGregor, 2012). The results of production studies are less clear. Riley and McGregor (2012) found that 9‐ to 10‐year‐olds produced less accurate utterances for words learned in speech‐shaped noise than for words learned in quiet. In contrast, in a study of 2.5‐ to 6‐year‐old children with and without hearing loss, multitalker babble did not appear to affect production of newly learned words (Blaiser et al., 2014). Interestingly, multiple opportunities to learn the novel words improved both comprehension and production for children with hearing loss. These data suggest that increased exposure may help children overcome the deleterious effects of noise during word learning.

Although some measures of word learning appear to be susceptible to interference from certain types of noise, it is important to probe the limits of children's burgeoning word‐learning abilities. Dombroski and Newman (2014) examined word learning in the context of background speech in 32‐ to 36‐month‐old children. Three groups of children were taught novel words at a +5 dB SNR, at a 0 dB SNR, or in quiet. Interestingly, although all groups showed evidence of learning, children in the +5 dB SNR condition performed more poorly than children in either the 0 dB SNR or the quiet condition. Although Dombroski and Newman (2014) found that noise did not prevent word learning, the children they tested were older than those typically studied in word‐recognition research. Because word‐recognition studies have shown that some 24‐month‐olds can recognize familiar words at a −5 dB SNR (Newman, 2011), it is possible that early word learning is less susceptible to noise than one might assume. However, it is also possible that children's ability to learn words in noise increases with age. Indeed, Blaiser et al. (2014) found that older children were less impeded by background speech than younger children.

Investigations of learning in noise are particularly pressing because children from low‐income households are disproportionately exposed to noisy environments compared with their more affluent peers (e.g., Evans, 2004; Evans & Kantrowitz, 2002). As reported by Evans (2004), children from low‐socioeconomic status (SES) households are more likely to live in crowded homes, defined as homes with more than one person per room (Myers, Baer, & Choi, 1996); to spend more time watching television (Larson & Verma, 1999); and to attend schools with higher levels of environmental noise (Haines, Stansfeld, Head, & Job, 2002). This increased noise exposure may have lasting effects on the language development of children from low‐SES families. Because early word learning is a dynamic process that is likely influenced by the child's auditory environment, it is important to understand how complex environments affect language development.

The question motivating the present experiments was whether toddlers are able to learn novel words in the presence of background speech. To address this question, we focused on toddlers younger than those included in prior studies of word learning in noise. The current studies combine the well‐established looking‐while‐listening method for investigating lexical processing with the use of multitalker babble to simulate a more complex auditory environment. Experiment 1 was designed to determine whether toddlers (22‐ to 24‐month‐olds) are able to learn novel words in the presence of background noise using two different intensities of background speech. Experiment 2 investigated whether older toddlers (28‐ to 30‐month‐olds) were better able to overcome the effects of background noise than younger toddlers. Finally, toddlers (28‐ to 30‐month‐olds) in Experiment 3 were exposed to novel word labels in clear speech prior to ostensive labeling as a possible way to ameliorate the negative effects of background speech. Together, the experiments examine factors that influence early word learning in complex auditory environments.

Experiment 1

This experiment explored whether 22‐ to 24‐month‐old toddlers are hampered by background speech when learning novel words. Participants were exposed to one of two intensity levels of competing speech, manipulated between subjects. We chose this age because toddlers of this age are adept at recognizing familiar words in the context of background speech (Newman, 2011). We used a three‐phase word‐learning paradigm designed to provide experience with the sounds of words prior to label–referent mapping (Lany & Saffran, 2010). In the first phase, auditory familiarization, toddlers heard the object labels (without referents) in simple English sentences. This phase was intended to give toddlers experience with the auditory forms of the labels before exposure to the label–referent pairs. Next, during the referent training phase, toddlers saw an object appear on the screen and heard its corresponding label (which they had previously heard without its referent during auditory familiarization). Finally, during the test phase, toddlers saw a pair of objects on each trial and heard a target label that corresponded to one of the two objects. To test the effect of background speech on word learning, two‐talker background speech was presented during both the auditory familiarization and referent training phases. Half of the participants experienced background speech at a 10‐dB SNR, and the other half at a 5‐dB SNR. For all toddlers, testing was conducted in quiet, without background speech. We predicted that although this learning task would normally be quite easy for toddlers in quiet conditions, background speech would have a deleterious effect, such that learners in the 10 dB SNR condition would outperform learners in the 5 dB SNR condition.

Method

Participants

Participants were 40 monolingual English learners (24 male) with a mean age of 23 months (range = 22.0–24.7 months). Participants were randomly assigned to either the 10 dB SNR condition (20 toddlers, 12 male, M age = 22.9 months, range = 22–24.7) or the 5 dB SNR condition (20 toddlers, 12 male, M age = 23.1 months, range = 22.1–24.7). Participants in the two conditions had comparable expressive vocabulary scores (M 10 dB = 60.3, range = 18–92; M 5 dB = 53.2, range = 16–90), as measured by the MacArthur‐Bates Communicative Development Inventory: Short‐Form Level II (MCDI II Short Form), t(38) = 1.017, p = .315. An additional 24 toddlers were excluded from the analyses due to parental interference (2), side bias (3), failure to reach the test phase due to inattentiveness (14), or failure to complete at least 50% of the test trials due to inattentiveness (5). All participants were full term and had no history of hearing or vision problems or current ear infections. Participants were recruited from a medium‐sized city with a large university community, located in the Midwestern United States. Data were collected from August 2011 through August 2013.

Materials

Two different audio streams, target and background speech, were used in this experiment. Target speech stimuli were digitally recorded by a native‐English‐speaking female in an infant‐directed register and were edited with Praat. All target stimuli were equalized to an average intensity of 65 dB for each phrase. Recordings of a native‐English male speaker producing sentences from the Harvard IEEE corpus (Rothauser et al., 1969) were randomly overlaid to produce two‐talker background speech (Grieco‐Calub, Saffran, & Litovsky, 2009). The background speech was then equalized to an intensity of either 55 dB (10 dB SNR condition) or 60 dB (5 dB SNR condition).
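The SNR manipulation is simple level arithmetic: SNR in dB is the target level minus the background level, so with target phrases fixed at 65 dB the background must sit at 55 dB for a 10‐dB SNR and at 60 dB for a 5‐dB SNR. The following is a minimal sketch, assuming both levels are expressed on the same dB scale; the function names are hypothetical and not part of the authors' stimulus pipeline. It also shows the target‐to‐background RMS amplitude ratio implied by each SNR, 10^(SNR/20).

```python
import math

def background_level_db(target_db: float, snr_db: float) -> float:
    """Background level (dB) needed to reach a given SNR for a fixed target level."""
    return target_db - snr_db

def snr_db(target_db: float, background_db: float) -> float:
    """SNR in dB is the level difference between target and background."""
    return target_db - background_db

def amplitude_ratio(snr: float) -> float:
    """Target-to-background RMS amplitude ratio implied by an SNR in dB."""
    return 10 ** (snr / 20)

TARGET_DB = 65.0  # reported average target level per phrase
for snr in (10.0, 5.0):
    bg = background_level_db(TARGET_DB, snr)
    print(f"SNR {snr:+.0f} dB -> background {bg:.0f} dB, "
          f"target/background amplitude ratio {amplitude_ratio(snr):.2f}")
```

On this arithmetic, the target's RMS amplitude is roughly 3.2 times the background's at a 10‐dB SNR but only about 1.8 times at a 5‐dB SNR.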

Auditory familiarization

Toddlers were first familiarized with the auditory forms of the two novel words (coro and tursey). Following Jusczyk and Aslin (1995), stimuli for the auditory familiarization phase consisted of a six‐sentence paragraph for each novel word (see Appendix for sentence lists). Each paragraph featured the novel word twice in sentence‐initial, twice in sentence‐medial, and twice in sentence‐final position. While listening to the familiarization sentences, toddlers watched a video of clouds to keep their attention on the front screen.

Referent training

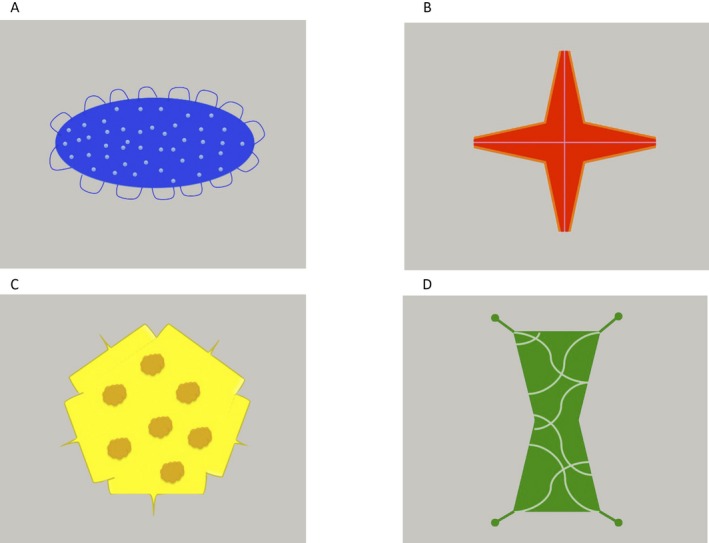

The stimuli consisted of the two novel labels previously heard during the auditory familiarization phase, each paired with an image of a single novel object (see Figure 1). Two familiar objects (a ball and a shoe) were also used during this phase to help children maintain interest in the task. The object labels were embedded in common naming carrier phrases (Fennell & Waxman, 2010; Fernald & Hurtado, 2006): “Look at the ___. There's a ___.” or “See the ___. This is a ___.”

Figure 1.

The four novel objects. Objects A and B were used in Experiments 1 and 2. Objects A–D were used in Experiment 3. (A) Tursey, (B) Coro, (C) Pif, and (D) Blicket.

Test

During the test phase, two stationary images appeared on the left and right sides of the screen. Target image locations were counterbalanced such that each object appeared as the target on the left and right sides an equal number of times. On each test trial, one object served as the target and the other as the distractor. To direct their attention to the target image, toddlers heard either “Find the ___.” or “Where's the ___?” paired with a generic attention‐getting phrase (e.g., “Wow!” “Check that out.” “That's cool.” “Do you like it?”). Mean duration of carrier phrases across sentences was 902 ms (range = 891–923), and mean target‐noun duration was 775 ms (range = 753–797). Test items were always presented in quiet, without background speech.

At the beginning of each trial, toddlers saw the image pair for 1,500 ms in silence before the auditory stimuli began. The carrier phrase began at 1,500 ms and was followed by the onset of the target word at 2,467 ms into the trial. Each trial ended 6,000 ms after picture onset. For data analyses, toddlers' looks during a baseline window from 967 to 2,467 ms were compared with their looks during a target window from 2,767 to 4,267 ms.
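Because looking was coded offline in 33‐ms frames, each analysis window corresponds to a range of frame indices. The sketch below assumes that frame i begins at i × 33 ms after picture onset and that a frame belongs to a window if its start time falls inside it; both the frame convention and the helper name are hypothetical, since the text does not say how frame boundaries were aligned with trial time.

```python
FRAME_MS = 33  # coding resolution reported for the offline video coding

def window_frames(start_ms: int, end_ms: int, frame_ms: int = FRAME_MS) -> range:
    """Indices of frames whose start time lies in [start_ms, end_ms).

    Assumes (hypothetically) that frame i begins at i * frame_ms ms after
    picture onset.
    """
    first = -(-start_ms // frame_ms)  # ceiling division: first frame starting at/after start_ms
    stop = -(-end_ms // frame_ms)     # first frame starting at/after end_ms (exclusive bound)
    return range(first, stop)

baseline = window_frames(967, 2467)  # 1,500-ms window ending at noun onset
target = window_frames(2767, 4267)   # 1,500-ms window starting 300 ms after noun onset
print(len(baseline), len(target))
```

Under this convention each 1,500‐ms window spans about 45 frames; a different alignment rule could shift the count by one frame.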

Procedure

The study took place in a double‐walled, sound‐attenuated booth. Visual stimuli were displayed on a 55‐inch high‐definition television. Toddlers were seated on their caregiver's lap 3 feet from the screen. Caregivers wore blacked‐out glasses to minimize their influence on the toddler's behavior. Background speech stimuli were presented in stereo via speakers located on either side of the chair, and target audio stimuli were presented through speakers located directly in front of the toddlers. Toddlers heard background speech simultaneously with target speech during both the auditory familiarization and the referent training phases; during the test phase, they heard only the target speech. A custom software program presented the visual and auditory stimuli. A digital camera mounted below the monitor recorded the session, and toddlers’ looks to the monitor were coded offline in 33‐ms frames.

The three phases of the word‐learning study occurred sequentially while the toddlers were seated on their caregiver's lap. During the auditory familiarization phase (1.25 min), toddlers were familiarized with two novel words (coro and tursey) embedded in six‐sentence passages. Each toddler heard each passage twice, in alternating order. While listening to the passages, toddlers watched a neutral, unrelated video of clouds on the center monitor to maintain their attention toward the front of the room.

During the referent training phase (2.5 min), toddlers were taught the referents for the two words they had heard during the auditory familiarization phase. During each naming event, a single object moved up and down on the left or right side of the screen as it was labeled. This phase consisted of six blocks of three trials each. Each block began with one familiar‐object naming trial (ball or shoe), followed by two novel‐object naming trials. Toddlers saw each novel object six times and heard it labeled twice during each presentation. A 7‐s cartoon filler trial was presented between blocks to keep toddlers engaged. The order of the novel words was counterbalanced across children.

The test phase immediately followed the referent training phase. On each trial, two images (yoked into either novel or familiar pairs) appeared on the screen for 1.5 s prior to the onset of the auditory stimuli, and each trial lasted 6 s. Each familiar word served as the target 4 times and each novel word served as the target 8 times, for a total of 24 trials. Trials were organized into blocks structured as in the referent training phase, and filler trials occurred with the same frequency as in the referent training phase. After the experiment, caregivers completed the MCDI II short form (Fenson et al., 2000).
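The trial counts follow from the block structure of one familiar‐word trial followed by two novel‐word trials. Six training blocks yield 18 trials, with each novel object named on 6 of them, and eight test blocks yield 24 trials, with each familiar word as target 4 times and each novel word 8 times. The sketch below reproduces those counts. Its ordering is hypothetical, because the exact trial orders were not reported: familiar words alternate across blocks, the order of the two novel words flips every other block, and each target's side alternates across its successive occurrences so that it appears equally often on the left and the right.

```python
from collections import Counter

FAMILIAR = ("ball", "shoe")
NOVEL = ("coro", "tursey")

def build_blocks(n_blocks: int) -> list[tuple[str, str]]:
    """Return (word, kind) trials; each block = 1 familiar trial + 2 novel trials.

    Hypothetical ordering: familiar words alternate across blocks and the
    order of the two novel words flips on every other block.
    """
    trials = []
    for b in range(n_blocks):
        trials.append((FAMILIAR[b % 2], "familiar"))
        novel = NOVEL if b % 2 == 0 else NOVEL[::-1]
        trials.extend((word, "novel") for word in novel)
    return trials

def assign_sides(trials: list[tuple[str, str]]) -> list[tuple[str, str, str]]:
    """Alternate each target's side on its successive occurrences (left/right balance)."""
    seen = Counter()
    out = []
    for word, kind in trials:
        side = "left" if seen[word] % 2 == 0 else "right"
        seen[word] += 1
        out.append((word, kind, side))
    return out

training = build_blocks(6)
test = assign_sides(build_blocks(8))
print(len(training), Counter(w for w, _ in training))
print(len(test), Counter(w for w, _, _ in test))
print(Counter((w, s) for w, _, s in test))
```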

Results and Discussion

Videos were coded offline by a trained coder using custom software (Swingley, Pinto, & Fernald, 1999). Children's looking behavior was coded frame by frame as a look to the left picture, a look to the right picture, or off‐screen. The coder was blind to the target word and the side of the target picture, and the videos did not capture the auditory stimulus. To assess coding reliability, 20% of the videos were coded by a second coder, yielding an intercoder reliability rate of 98.4%.
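Intercoder reliability for frame‐coded looking data is commonly reported as frame‐by‐frame percent agreement between two coders. The text does not state the exact formula, so the following is only a minimal sketch under that assumption, with each frame coded as "L", "R", or "off" (hypothetical labels).

```python
def percent_agreement(coder_a: list[str], coder_b: list[str]) -> float:
    """Percentage of frames on which two coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("coders must code the same, non-empty set of frames")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100 * matches / len(coder_a)

# Toy example: 10 frames; the coders disagree on one transition frame.
a = ["off", "L", "L", "L", "R", "R", "R", "R", "L", "L"]
b = ["off", "L", "L", "R", "R", "R", "R", "R", "L", "L"]
print(percent_agreement(a, b))
```

Simple percent agreement does not correct for chance agreement; a chance‐corrected statistic such as Cohen's kappa is a common alternative.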

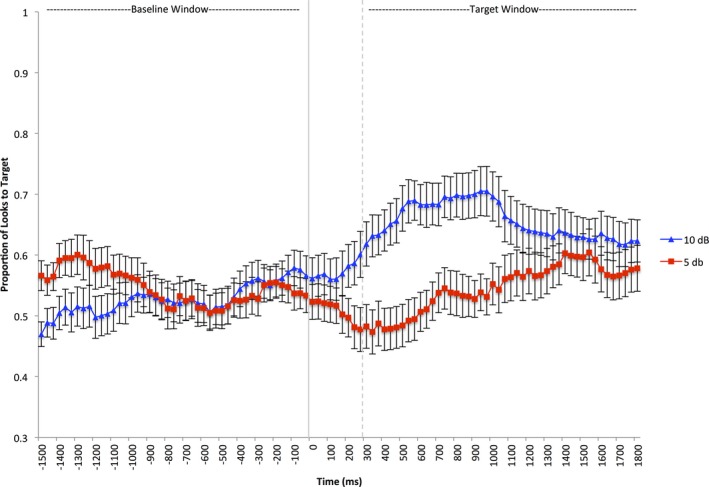

The primary question was whether background speech is detrimental to early word learning. Accuracy on each trial was calculated as the proportion of time the toddler looked at the target image out of the total time spent fixating either the target or the distractor. Given the elevated baselines across samples (Figure 2), we corrected for baseline looking by comparing looking behavior before and after the target image was labeled. Toddlers' proportion of looks to the target during a 1,500‐ms baseline window (from 967 to 2,467 ms) was compared with their proportion of looks to the target during a 1,500‐ms target window following noun onset. The target window spanned from 2,767 to 4,267 ms, beginning 300 ms after noun onset to allow time for planning eye movements (Fernald et al., 2008).

Figure 2.

Results from Experiment 1. Mean proportion of looks to the target object as a function of time and condition during the baseline and target windows. The solid vertical line marks the onset of the target word. The dashed vertical line marks the beginning of the target window (300 ms after the onset of the spoken word). Error bars depict standard errors.
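The accuracy measure can be made concrete. Within a window, the proportion of target looking is the number of frames on the target divided by the number of frames on either picture, with off‐screen frames excluded from the denominator. The minimal sketch below assumes frame codes "T", "D", and "off" (hypothetical labels) and computes the baseline‐to‐target change for a single hypothetical trial. How trials were aggregated into each child's window means is not specified beyond the text, so that step is omitted.

```python
from typing import Optional, Sequence

def prop_target(frames: Sequence[str]) -> Optional[float]:
    """Proportion of target looks out of target + distractor frames.

    Off-screen frames ("off") are excluded; returns None when the child never
    fixated either picture in the window (the trial contributes no data).
    """
    on_target = sum(f == "T" for f in frames)
    on_pictures = on_target + sum(f == "D" for f in frames)
    return on_target / on_pictures if on_pictures else None

# Hypothetical single trial: 45 baseline frames and 46 target-window frames.
baseline = ["T"] * 20 + ["D"] * 20 + ["off"] * 5
target = ["T"] * 30 + ["D"] * 10 + ["off"] * 6
b, t = prop_target(baseline), prop_target(target)
print(f"baseline {b:.2f}, target {t:.2f}, change {t - b:+.2f}")
```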

A paired‐sample t test was used to compare looking during the baseline and target windows separately for toddlers in each SNR condition (see Figure 2). In the 10 dB SNR condition, participants looked significantly more to the target object during the target window than during the baseline window (M baseline = .53, SE = .021; M target = .66, SE = .027), t(19) = 3.974, p < .001, d = .889, suggesting that toddlers in this condition successfully learned the words. However, in the 5 dB SNR condition, participants did not show a significant increase in looking during the target window relative to the baseline window (M baseline = .55, SE = .021; M target = .55, SE = .023), t(19) = 0.051, p = .960, d = .011. These results suggest that the intensity of the background speech relative to the target speech affects word learning. When the novel words were only 5 dB louder than the background speech, toddlers showed no evidence of word learning; when the background speech was 10 dB quieter than the target speech, they successfully learned the novel words.
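The reported effect sizes are consistent with Cohen's d for paired samples computed on the difference scores (d_z = mean difference / SD of differences), which equals t/√n. For example, 3.974/√20 ≈ .889 and 0.051/√20 ≈ .011. The effect‐size formula is not named in the text, so this is an inference from the numbers. The standard‐library sketch below computes both statistics from per‐child baseline and target proportions. The function names are hypothetical, and obtaining p values would additionally require the t distribution, for example via SciPy's `scipy.stats.ttest_rel`.

```python
import math
from statistics import mean, stdev

def paired_t_and_dz(baseline: list[float], target: list[float]) -> tuple[float, float]:
    """Paired-sample t statistic and Cohen's d_z for target vs. baseline looking."""
    if len(baseline) != len(target) or len(baseline) < 2:
        raise ValueError("need at least two paired observations")
    diffs = [t - b for b, t in zip(baseline, target)]
    n = len(diffs)
    sd = stdev(diffs)                      # sample SD (n - 1 denominator)
    t_stat = mean(diffs) / (sd / math.sqrt(n))
    d_z = mean(diffs) / sd                 # equivalently t_stat / sqrt(n)
    return t_stat, d_z

def dz_from_t(t_stat: float, n: int) -> float:
    """Recover d_z from a reported paired t and sample size."""
    return t_stat / math.sqrt(n)

# Check against the Experiment 1 values reported above (n = 20 per condition).
print(round(dz_from_t(3.974, 20), 3), round(dz_from_t(0.051, 20), 3))
```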

Previous studies have shown that children's ability to recognize familiar words in the presence of background noise improves with age and experience (Newman, 2005). Perhaps, as with word recognition, older toddlers are better at learning words in noise than younger toddlers. Not only are older toddlers more sophisticated language users than younger toddlers, but they may also have more experience with complex auditory environments. Indeed, Dombroski and Newman (2014) found that 32‐ to 36‐month‐olds succeed in learning novel words in the presence of background speech. Experiment 2 was designed to determine whether 28‐ to 30‐month‐olds could learn under the same conditions as in Experiment 1. In particular, we hypothesized that, unlike the younger toddlers in Experiment 1, these older toddlers would successfully learn words when there was only a 5‐dB difference between the target and background speech.

Experiment 2

Method

Participants

Participants were 40 monolingual English learners (25 male) with a mean age of 29.1 months (range = 27.7–30 months). Participants were randomly assigned to either the 10 dB SNR condition (20 toddlers, 13 male, M age = 29.1, range = 27.7–30) or the 5 dB SNR condition (20 toddlers, 12 male, M age = 29.1, range = 28–30). Participants in the two conditions had comparable expressive vocabulary scores (M 10 dB = 77.65, range = 38–97; M 5 dB = 77.40, range = 21–100), as measured by the MCDI II short form, t(38) = 0.044, p = .965. An additional 21 toddlers were excluded from the analyses due to side bias (2), failure to reach the test phase due to inattentiveness (12), or failure to complete at least 50% of the test trials due to inattentiveness (7). All participants were full term and had no history of hearing or vision problems or current ear infections. Participants were recruited from the same community as the participants in Experiment 1. Data were collected from August 2013 to April 2014.

Stimuli and Procedure

The stimuli and procedure in this experiment were identical to Experiment 1.

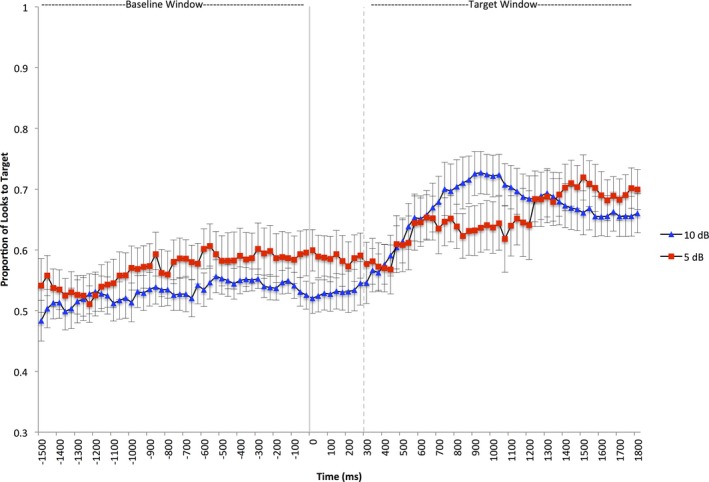

Results and Discussion

The data were analyzed in the same manner as in Experiment 1. Interrater reliability for video coding was 97.8%. The mean proportion of looks to the target image was calculated for the same baseline and target windows as in Experiment 1, and paired‐sample t tests were used to examine whether participants looked more toward the target object in the target window than in the baseline window in each condition. Toddlers in the 10 dB condition showed an increase in looking to the target object during the target window relative to the baseline window (M baseline = .53, SE = .018; M target = .67, SE = .029), t(19) = 4.256, p < .001, d = .952, suggesting successful word learning (see Figure 3). However, toddlers in the 5 dB condition did not significantly increase their proportion of looks to the target object (M baseline = .57, SE = .019; M target = .64, SE = .031), t(19) = 1.699, p = .106, d = .380, providing no reliable evidence that they learned the novel words.

Figure 3.

Results from Experiment 2. Mean proportion of looks toddlers made to the target object as a function of time and condition during the baseline and target windows. The solid vertical line marks the onset of the target word. The dashed vertical line marks the beginning of the target window (300 ms after the onset of the spoken word). Error bars depict standard errors.

The results of Experiment 2 closely mirror those of Experiment 1. Both groups of toddlers showed the same pattern of sensitivity to the intensity of background speech, despite a substantial difference in age. Although word‐recognition studies have shown that children's ability to recognize words spoken concurrently with background speech improves with development (Newman, 2005), the participants in the current study showed no such developmental change. One possibility is that our two age groups were not very different developmentally, as the children in Experiment 2 were only about 6 months older than the children in Experiment 1. However, a comparison of MCDI scores indicated that the older toddlers had significantly larger expressive vocabularies than the younger toddlers, t(78) = 4.670, p < .001, suggesting that a moderate increase in vocabulary size alone does not enable toddlers to overcome background speech.
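The cross‐experiment vocabulary comparison is an independent‐samples test. Its degrees of freedom, t(78), match Student's pooled‐variance t test with n1 + n2 − 2 = 40 + 40 − 2. The equal‐variance assumption is inferred from the df rather than stated. The standard‐library sketch below implements that test on hypothetical scores; Welch's unequal‐variance test would be the usual alternative if the group variances differed markedly.

```python
import math
from statistics import mean, variance

def student_t(x: list[float], y: list[float]) -> tuple[float, int]:
    """Student's two-sample t test with pooled variance; returns (t, df)."""
    nx, ny = len(x), len(y)
    if nx < 2 or ny < 2:
        raise ValueError("each group needs at least two scores")
    df = nx + ny - 2
    pooled = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / df
    se = math.sqrt(pooled * (1 / nx + 1 / ny))
    return (mean(x) - mean(y)) / se, df

# Hypothetical MCDI scores, for illustration only.
older = [80.0, 75.0, 90.0, 70.0]
younger = [60.0, 55.0, 65.0, 50.0]
t, df = student_t(older, younger)
print(f"t({df}) = {t:.3f}")
```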

Although both younger and older 2‐year‐olds had difficulty learning words when the background speech was louder (the 5‐dB SNR), the environment may provide useful cues to help overcome these difficulties. One such cue may be hearing labels in fluent speech in the absence of background speech. Before infants begin to map meanings onto labels, they have months of exposure to the sounds of words: Most words that infants learn have been present in the auditory input for months or years before they are reliably attached to meanings. The majority of the speech children hear consists of multiword utterances that provide opportunities for incidental learning, which children may use to extrapolate the meanings of individual words (Werker & Yeung, 2005). Additionally, experimental results suggest that infants can use prior experience with hearing fluent speech to map words onto meanings (Graf Estes, Evans, Alibali, & Saffran, 2007; Hay, Pelucchi, Graf Estes, & Saffran, 2011; Lany & Saffran, 2010) and that experience with fluent speech bolsters word representations (Swingley, 2007). Although participants in Experiments 1 and 2 did hear the object labels prior to learning the label–referent mappings, they did so under noisy conditions. Experiment 3 was designed to test whether experience with clear productions of a novel word prior to label–referent mapping can facilitate word learning. Crucially, the referent training materials in Experiment 3 were presented at a 5‐dB SNR, a level of background speech that impeded learning in Experiments 1 and 2.

Experiment 3

Method

This study used the same three‐phase word‐learning paradigm as Experiments 1 and 2 to test whether hearing the labels in fluent speech, without competing speech, acts as a protective factor when learning word–meaning mappings in the presence of background speech. During the auditory familiarization phase, toddlers in Experiment 3 were familiarized with two novel words in noise‐free, fluent speech, unlike in Experiments 1 and 2, in which the auditory familiarization phase included background speech. During the referent training phase, toddlers were taught object–label pairings for four novel words (the two they had previously heard during auditory familiarization and two new words) with concurrent background speech that was 5 dB quieter than the target words. Importantly, toddlers in the two prior studies failed to show evidence of word learning at a 5‐dB SNR. Testing was identical to the two prior studies, except that toddlers were tested on four novel words rather than two. Because all four words were trained under the same noisy conditions and only prior noise‐free exposure varied within participants, we were able to control for any idiosyncratic behavioral differences children might show during the referent training phase because of the background noise, allowing us to focus on the role of prior experience from the auditory familiarization phase. Additionally, using four words instead of two allowed us to yoke the test trials into familiarized and nonfamiliarized pairs, minimizing any biases that might have been created by the more frequent presentation of the familiarized labels.

We hypothesized that toddlers would be more successful at learning the two words that were heard in quiet during the auditory familiarization phase than the two words that were not, despite the fact that toddlers received equal training on the label–object mappings for all four words.

Participants

Participants were 26 monolingual English learners (12 male) with a mean age of 28.3 months (range = 27.7–30 months). Their expressive vocabulary scores (M = 82.23; range = 43–98), as measured by the MCDI II short form, were comparable to those of the participants in Experiment 2, t(64) = 1.154, p = .253. An additional 12 toddlers were excluded from the analyses due to experimental error (2), side bias (1), failure to reach the test phase due to inattentiveness (7), or failure to complete at least 50% of the test trials due to inattentiveness (2). All participants were full term and had no history of hearing or vision problems or current ear infections. Participants were recruited from the same community as in Experiments 1 and 2.

Stimuli

Stimuli were created using the same procedure as in Experiments 1 and 2.

Auditory Familiarization

Toddlers heard two novel words (either coro and tursey or blicket and pif) embedded in the same sentence frames as in Experiments 1 and 2. While listening to the familiarization sentences, toddlers watched a video of nature scenes to keep them engaged in the task. During this phase, the familiarization sentences were the only auditory stimuli, unlike in Experiments 1 and 2, in which the familiarization sentences were presented with background speech. Two conditions were created to counterbalance the familiarized and nonfamiliarized words: Half of the participants heard tursey and coro during the familiarization phase, and the other half heard blicket and pif. We chose these four words because previous work with these stimuli demonstrated that toddlers of this age can learn this set of four word–object pairings in quiet (Wojcik & Saffran, 2013). A single female speaker recorded all of the materials in an infant‐directed register.

Referent Training

Toddlers were taught the object–label pairings for four novel words (blicket, pif, coro, and tursey). Two of the labels had been heard during the auditory familiarization phase, and two of the labels were new to the toddlers. Each novel label was paired with a single novel object. The labels were embedded in the naming phrases used in Experiments 1 and 2. During each object‐labeling event, 60‐dB background speech played simultaneously with the 65‐dB target speech, creating a 5‐dB SNR, the same SNR that hindered word learning in Experiments 1 and 2.
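Because decibel levels are logarithmic, the SNR here is simply the level difference: 65 dB minus 60 dB gives 5 dB. The Python sketch below shows one way a masker waveform could be rescaled so that target and masker are mixed at a chosen SNR, using the RMS definition SNR = 20·log10(RMS_target / RMS_masker). It is a hypothetical illustration, not the authors' stimulus‐preparation procedure: the function names are ours, signals are assumed to be equal‐length lists of samples, and matching digital RMS only fixes the relative level, so absolute presentation levels such as 65 dB would still have to be calibrated at the loudspeaker.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def snr_db(target_level_db, masker_level_db):
    """SNR in dB: the difference between two levels already expressed in dB."""
    return target_level_db - masker_level_db


def scale_masker(target, masker, desired_snr_db):
    """Rescale `masker` so that 20*log10(rms(target) / rms(result)) equals desired_snr_db."""
    desired_masker_rms = rms(target) / 10 ** (desired_snr_db / 20)
    gain = desired_masker_rms / rms(masker)
    return [gain * x for x in masker]


def mix(target, masker):
    """Sample-by-sample sum of two equal-length signals (hypothetical helper)."""
    if len(target) != len(masker):
        raise ValueError("target and masker must have the same length")
    return [t + m for t, m in zip(target, masker)]
```

For example, `snr_db(65, 60)` returns 5, matching the 5‐dB SNR above, and `mix(target, scale_masker(target, babble, 5.0))` would produce a 5‐dB‐SNR mixture for hypothetical sample lists `target` and `babble`.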

Test

The test phase followed the same procedure as the test phases of Experiments 1 and 2. Toddlers saw two novel objects (yoked into familiarized and nonfamiliarized pairs) appear on the screen and heard a sentence directing their attention toward one of them. The mean duration of the carrier phrases was 904 ms (range = 891–928 ms), the onset of the target noun occurred at 2,467 ms, and the mean duration of the target noun was 730 ms (range = 654–797 ms).

Procedure

The procedure was identical to that of Experiments 1 and 2, with three exceptions: toddlers heard 60‐dB background speech simultaneously with the target speech only during the referent training phase; during the referent training phase, toddlers were taught object–label mappings for four words rather than two; and toddlers were tested on four novel words rather than two. During the auditory familiarization phase (1.25 min), toddlers were familiarized with two novel words (coro and tursey or blicket and pif) in the same manner as in Experiments 1 and 2, but without background speech. During the referent training phase (2.5 min), toddlers were taught the object–label pairings for the two words they had heard during the auditory familiarization phase as well as two new words. The referent training phase began with two familiar‐object trials (ball and shoe) to familiarize the toddlers with the task. Following the familiar‐object trials, toddlers saw each novel object on 4 trials and heard its label twice during each trial. Trials were organized into four blocks of four trials, for a total of 16 trials. The order of the novel words was randomized across children. The test phase immediately followed the referent training phase. Objects during the test phase were yoked into familiarized and nonfamiliarized pairs. The test phase differed from those of Experiments 1 and 2 in the number of trials: Each word served as the target 4 times, for a total of 18 trials (two familiar‐object filler trials and 16 novel‐object trials). Trials were organized into four blocks, with each block featuring one trial for each word. After the experiment, caregivers filled out the MCDI II short form (Fenson et al., 2000).
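The test‐phase structure described above (four blocks, each word serving as the target once per block, plus two familiar‐object fillers) can be sketched as a schedule generator. This Python sketch is hypothetical and rests on assumptions the text does not specify: the two fillers use the ball and shoe and come first, each familiarized word is yoked with a randomly chosen nonfamiliarized word, word order is shuffled independently within each block, and left/right placement of the objects is omitted.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    target: str
    distractor: str
    kind: str  # "filler" (familiar objects) or "novel"


def build_test_schedule(familiarized, nonfamiliarized, rng, n_blocks=4):
    """Hypothetical test-phase schedule for Experiment 3.

    Two familiar-object fillers come first (an assumption), followed by
    `n_blocks` blocks in which every novel word is the target exactly once.
    Each familiarized word is yoked with one nonfamiliarized word, and the
    two members of a pair always appear together on a trial.
    """
    if len(familiarized) != len(nonfamiliarized):
        raise ValueError("need equal numbers of familiarized and nonfamiliarized words")
    partners = list(nonfamiliarized)
    rng.shuffle(partners)  # random yoking (assumption)
    yoked = {}
    for fam, non in zip(familiarized, partners):
        yoked[fam] = non
        yoked[non] = fam

    schedule = [Trial("ball", "shoe", "filler"), Trial("shoe", "ball", "filler")]
    words = list(familiarized) + list(nonfamiliarized)
    for _ in range(n_blocks):
        block = words[:]
        rng.shuffle(block)  # independent random order within each block
        schedule.extend(Trial(w, yoked[w], "novel") for w in block)
    return schedule
```

For example, `build_test_schedule(["coro", "tursey"], ["blicket", "pif"], random.Random(1))` returns 18 trials in which every novel word is the target exactly four times and is always shown alongside its yoked partner.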

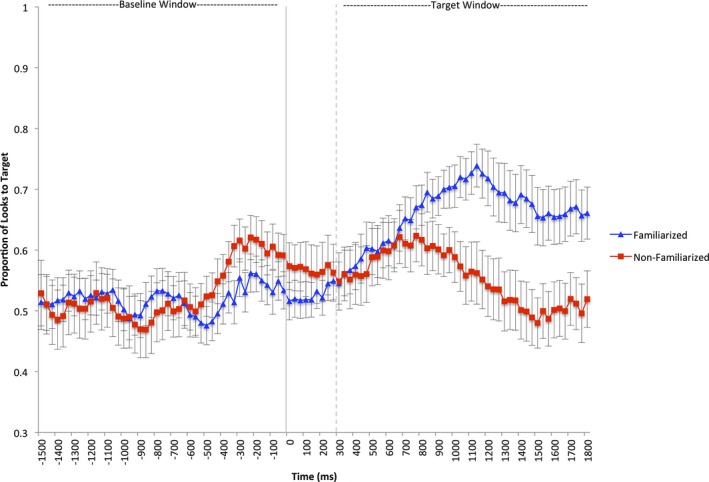

Results and Discussion

The data were coded as in Experiments 1 and 2, with an interrater reliability of 98.6%. The mean proportion of looking to the target image was calculated for the same baseline and target windows as in Experiments 1 and 2. For familiarized words, toddlers' looking to the target increased from the baseline to the target window (M baseline = .52, SE = .014; M target = .66, SE = .030). Toddlers did not show increased looking to the target for nonfamiliarized words (M baseline = .53, SE = .025; M target = .55, SE = .031). A 2 (word type: familiarized vs. nonfamiliarized) × 2 (looking window: baseline vs. target) repeated‐measures analysis of variance was used to determine whether auditory familiarization affected word learning. There was a significant main effect of word type, F(1, 25) = 9.214, p = .006, and a significant main effect of looking window, F(1, 25) = 4.907, p = .036. The Word Type × Looking Window interaction was also significant, F(1, 25) = 5.019, p = .034. This pattern suggests that word learning was more successful for the familiarized words, for which toddlers had heard clear word tokens before the object‐labeling events (see Figure 4).
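Because each factor in this design has only two levels, every effect in a 2 × 2 repeated‐measures ANOVA has one numerator degree of freedom, and its F(1, n − 1) equals the square of a one‐sample t test on a single contrast score per participant. The Python sketch below illustrates that equivalence. It assumes each participant contributes one mean target‐looking proportion per cell; the dictionary layout and function names are hypothetical, and no data from the study are included, so it does not reproduce the reported statistics. A p value could then be obtained from the F(1, n − 1) distribution, for example with SciPy's `scipy.stats.f.sf`.

```python
import math
from statistics import mean, stdev

# Hypothetical cell keys: (word type, looking window).
FAM_BASE, FAM_TARGET = ("fam", "base"), ("fam", "target")
NON_BASE, NON_TARGET = ("nonfam", "base"), ("nonfam", "target")


def one_sample_t(scores):
    """t statistic for the null hypothesis that the mean of `scores` is 0."""
    return mean(scores) / (stdev(scores) / math.sqrt(len(scores)))


def rm_anova_2x2(participants):
    """F(1, n - 1) for each effect of a 2 x 2 within-participants design.

    `participants` is a list of dicts mapping the four cell keys to one
    score per participant (e.g., mean proportion of target looking).
    Each F is the squared one-sample t on a per-participant contrast.
    """
    contrasts = {
        # Main effect of word type: familiarized cells minus nonfamiliarized cells.
        "word_type": [p[FAM_BASE] + p[FAM_TARGET] - p[NON_BASE] - p[NON_TARGET]
                      for p in participants],
        # Main effect of looking window: target-window cells minus baseline cells.
        "window": [p[FAM_TARGET] + p[NON_TARGET] - p[FAM_BASE] - p[NON_BASE]
                   for p in participants],
        # Interaction: baseline-to-target gain for familiarized minus nonfamiliarized words.
        "interaction": [(p[FAM_TARGET] - p[FAM_BASE]) - (p[NON_TARGET] - p[NON_BASE])
                        for p in participants],
    }
    df = (1, len(participants) - 1)
    return {effect: (one_sample_t(c) ** 2, df) for effect, c in contrasts.items()}
```

Framing the interaction as a paired comparison of baseline‐to‐target gains makes explicit what the significant interaction tests: whether the gain for familiarized words exceeds the gain for nonfamiliarized words.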

Figure 4.

Results from Experiment 3. Mean proportion of looks toddlers made to the target object as a function of time and condition during the baseline and target windows. The solid vertical line marks the onset of the target word. The dashed vertical line marks the beginning of the target window (300 ms after the onset of the spoken word). Error bars depict standard errors.
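The window‐based measure plotted in Figure 4 can be made concrete with a short sketch. It assumes frame‐by‐frame gaze coding in which each video frame is labeled as a look to the target ("T"), the distractor ("D"), or elsewhere, and computes the proportion of target looks among looks to either object, a common looking‐while‐listening measure (Fernald, Zangl, Portillo, & Marchman, 2008). Only the 300‐ms offset of the target window comes from the text; the frame rate, the baseline window (trial start to word onset), and the 2,000‐ms end of the target window are placeholder assumptions, since the windows used in Experiments 1 and 2 are not restated here.

```python
def proportion_target(frames, frame_ms, start_ms, end_ms):
    """Proportion of target looks among target + distractor looks.

    Only frames whose onset falls in [start_ms, end_ms) are counted.
    Frame codes: "T" = target, "D" = distractor, anything else = away.
    Returns None when the child looked at neither object in the window.
    """
    target = distractor = 0
    for i, code in enumerate(frames):
        onset = i * frame_ms
        if start_ms <= onset < end_ms:
            if code == "T":
                target += 1
            elif code == "D":
                distractor += 1
    looked = target + distractor
    return target / looked if looked else None


def window_proportions(frames, word_onset_ms, frame_ms=1000 / 30,
                       target_offset_ms=300, target_end_ms=2000):
    """Baseline and target-window proportions for one test trial.

    Assumptions (hypothetical): baseline = trial start up to word onset;
    target window = 300 ms after word onset (as in the text) up to
    `target_end_ms` after onset (placeholder value).
    """
    baseline = proportion_target(frames, frame_ms, 0, word_onset_ms)
    target = proportion_target(frames, frame_ms,
                               word_onset_ms + target_offset_ms,
                               word_onset_ms + target_end_ms)
    return baseline, target
```

Per‐trial proportions like these would then be averaged within each participant and word type before entering a repeated‐measures analysis. Assuming the 2,467‐ms target‐noun onset is measured from trial start, a trial would be scored with `window_proportions(frames, 2467)`.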

Experience with an auditory label before that label is used to explicitly name an object facilitates word learning in a complex auditory environment. In Experiments 1 and 2, toddlers failed to learn words when there was a 5‐dB SNR during both the auditory familiarization and referent training phases. In Experiment 3, however, providing toddlers with clear tokens of the labels in fluent speech during auditory familiarization promoted word learning despite background speech at a 5‐dB SNR during the referent training phase. Importantly, this effect cannot simply be due to hearing the familiarized words more often than the nonfamiliarized words: Toddlers in Experiments 1 and 2 were also familiarized with the target words before label–object mapping, but in the presence of noise. It thus appears that the benefit of prior exposure derives from having heard the familiarized words in quiet in Experiment 3 before label–object mapping in noise.

General Discussion

Using a three‐phase word‐learning paradigm, we examined the vulnerability of word learning in the presence of background speech. In Experiments 1 and 2, younger (22‐ to 24‐month‐olds) and older (28‐ to 30‐month‐olds) toddlers were first familiarized with novel auditory labels in fluent speech. They were then trained on mappings between these labels and novel objects. To examine the effect of background speech on word learning, toddlers heard multitalker background speech during both learning phases: auditory familiarization and label–object mapping. Toddlers in both age groups showed evidence of word learning only when the background speech was relatively quiet (a 10‐dB SNR rather than a 5‐dB SNR). Thus, it appears that although toddlers can contend with moderate levels of background speech during word learning, higher intensities of background speech hinder learning.

Several aspects of our design limit the generalizability of the current results. We exposed children to only one type of background noise: two‐talker babble, created by overlapping sentences produced by the same speaker. Multitalker babble provides both energetic and informational masking, which may have compounded the effects of our noise manipulation, and other types of multitalker babble may have produced different results (Tun & Wingfield, 1999). We selected two‐talker babble as our competitor stimulus based on previous findings that two‐talker babble at a 10‐dB SNR impedes familiar‐word recognition in 30‐month‐olds (Grieco‐Calub et al., 2009). In addition, children have a more difficult time recognizing familiar words when the background speech has fewer talkers (Newman, 2009). It is likely that the two‐talker babble used here is a more difficult form of competitor speech than the nine‐talker babble used by Dombroski and Newman (2014), which may have made it particularly challenging for these toddlers.

Additionally, the words selected for our tasks (tursey, coro, pif, and blicket) are highly dissimilar from one another, which may have affected children's ability to learn them. This was an intentional choice to make the task easier for toddlers. Indeed, these words have been shown to be learnable in other studies with a similar age group (Wojcik & Saffran, 2013). Had we increased the similarity of the words, we would likely have increased the processing demands of the task, which might in turn have led to a greater effect of noise on learning, even for children in the 10‐dB SNR condition (Swingley & Aslin, 2007; Werker, Fennell, Corcoran, & Stager, 2002).

Using a three‐phase word‐learning paradigm, we were able to simulate two distinct aspects of the word‐learning experience: exposure to the sounds of words in fluent speech and formation of a label–object mapping. Experience with fluent speech has been shown to directly affect children's word learning, helping them connect sound and meaning in their lexicon (Graf Estes et al., 2007; Lany & Saffran, 2010, 2011). In Experiment 3, we asked whether first hearing word labels in competitor‐free fluent speech would enable toddlers to subsequently learn mappings between the labels and objects in the presence of high‐intensity noise. Toddlers (28‐ to 30‐month‐olds) first heard two novel words in fluent speech with no background speech present. They were then taught the label–object pairings for the previously heard words, as well as two new label–object pairings. During this training phase, the target labels were presented with 60‐dB background speech, yielding the same 5‐dB SNR that hindered learning in Experiments 1 and 2. Although toddlers were unable to identify the correct referent for labels they heard only during training, they successfully identified the target object for the labels they had first heard during the familiarization phase. Providing toddlers with clear tokens of the auditory labels before a naming event facilitated word learning in Experiment 3, whereas the presence of competitor speech during auditory familiarization hindered learning in Experiments 1 and 2. Thus, in addition to helping children organize their lexicon, experience with clear fluent speech may also bolster their later perception and acquisition of words in difficult listening conditions.

A similar facilitation effect has been found for word recognition: School‐aged children and adults are better at identifying target words in a high‐noise trial if that trial is preceded by a low‐noise trial (Fallon, Trehub, & Schneider, 2000). Experience with the task under less demanding conditions allowed both adults and children to generalize to the higher demands created by increased background speech, though it is unclear which specific information led to the improvement in performance. Additionally, providing children with repeated exposures to a word helps them overcome the effects of background speech during word learning (Blaiser et al., 2014). Given the prevalence of noise, including both environmental sounds and background speech, it is likely that children rarely experience completely quiet environments when learning. However, even limited listening opportunities in quieter environments may help children overcome the deleterious effects of noisier environments.

Urban environments, such as those investigated by Cohen et al. (1973, 1981), may be particularly difficult for children because they often feature high levels of environmental noise. The noise in the settings studied by Cohen and colleagues may have directly interrupted the act of learning. Although those studies were limited in their ability to establish a causal link between the language environment and later reading abilities, the results of our experiments suggest that exposure to background noise may directly hinder language learning opportunities. On a more positive note, the results of Experiment 3 suggest that providing children with some opportunities to learn in quiet environments may help compensate for the effects of otherwise noisy environments.

A considerable amount of research has examined differences in the language environments of children growing up in households that differ in socioeconomic status (SES). Broadly speaking, this literature suggests that children from lower SES homes are exposed to less language, that the language they hear is less varied in both vocabulary and syntax, and that this variability is reflected in the child's own language development (Hart & Risley, 1995; Hoff, 2003; Huttenlocher et al., 2010). Hart and Risley (1995) estimated that by the age of 3, children in high‐SES households would have heard 30 million more words than children in low‐SES households. However, even within a given SES group there is a large amount of variability in the number of words a child hears (Weisleder & Fernald, 2013). To fully understand how the language environment shapes a child's language development, it may be important to consider the auditory environment more generally. The presence or absence of background noise in a child's home may be yet another factor that affects how children perceive and learn from the available language input.

Although we have only begun to study the effects of background noise on word learning in young children, previous studies have shown that even older children have difficulty processing speech embedded in noise (Stelmachowicz, Pittman, Hoover, & Lewis, 2004), and that a child's ability to overcome the sensory demands created by background noise does not reach adult levels until the late teenage years (Johnson, 2000). In addition to having a greater impact on younger listeners than on older listeners (Fallon et al., 2000), background noise has been shown to be particularly problematic for hearing‐impaired individuals. Toddlers who use cochlear implants have poorer word recognition than their hearing peers, especially when background speech is present (Grieco‐Calub et al., 2009). Although cochlear implants enable toddlers with hearing loss to experience the auditory world around them, users must still contend with perceptual limitations. Similarly, children with learning disabilities have more difficulty understanding sentences in noise than children without learning disabilities (Bradlow, Kraus, & Hayes, 2003). Given the prevalence of noise in children's environments, it is important to understand how these populations of learners may be differentially affected by complexity and acoustic distraction in their environments in order to identify appropriate interventions and learning strategies.

There are few studies of the effects of noise on learning in infants and toddlers, but background noise is clearly a real issue for school‐age children. Classrooms frequently exceed recommended noise levels (Knecht, Nelson, Whitelaw, & Feth, 2002), and children are sensitive to the variety of intrusive sound sources in their environment (Dockrell & Shield, 2004). The presence of white noise reduced school‐age children's accuracy in identifying and producing newly learned words (Riley & McGregor, 2012). However, the effects of background noise were ameliorated when students heard tokens produced with hyperarticulated speech, known as clear speech, rather than plain speech. Interestingly, mothers teaching their 2‐year‐old children new words change their infant‐directed speech in a manner similar to clear speech, greatly varying the prosody of their utterances, when multitalker speech is playing in the background (Newman, 2003). However, the greater pitch variability, higher overall pitch, and slower speaking rate did not appear to affect children's ability to learn words. In complex, noisy environments, speakers adapt their speaking style to lower the listening demands. To fully understand how children learn in complex auditory environments, future studies must account for both speaker variability and environmental variability, as learning in complex environments relies on an interaction between characteristics of the child and the acoustic setting created by overlapping sources of information.

Two‐year‐olds are particularly adept at extracting meaning from their world in order to learn language. The present studies begin to simulate real learners’ messy worlds by manipulating background noise. In this first look at early word learning when background speech is present, we have begun to identify some vulnerabilities of the mechanisms involved in word learning. Given the inherent complexity of the auditory environment, it is imperative that future studies examine both the real‐world environment and the processes that drive word learning in order to fully understand how children learn.

Sentences used during the auditory familiarization phase. Participants in Experiments 1 and 2 heard paragraphs A and B. Participants in Experiment 3 heard either paragraphs A and B or paragraphs C and D, depending on counterbalanced condition.

A. “The tursey is on the table. The girl picked up a pretty tursey. The cat swatted her tursey across the apartment. The teacher played with your tursey. A tursey rolled onto the ground. The doctor put a shiny tursey on the counter.”

B. “The coro is under the tree. The boy dropped the heavy coro. The bird tossed his coro into the air. The nurse gave me a big coro. A coro fell on the floor. The mailman brought a new coro into the house.”

C. “The blicket is on the table. The girl picked up a pretty blicket. The cat swatted her blicket across the apartment. The teacher played with your blicket. A blicket rolled onto the ground. The doctor put a shiny blicket on the counter.”

D. “The pif is under the tree. The boy dropped the heavy pif. The bird tossed his pif into the air. The nurse gave me a big pif. A pif fell on the floor. The mailman brought a new pif into the house.”

We would like to thank the participating families and the members of the Infant Learning Lab, especially Erica Wojcik, Annalisa Groth, Hilary Stein, and Ami Regele. We also thank Erica Wojcik and Casey Lew‐Williams for their comments on previous versions of this article. This research was funded by NICHD grants to Brianna T. M. McMillan (T32HD049899; PI Maryellen MacDonald), Jenny R. Saffran (R37HD037466), and the Waisman Center (P30HD03352); a Morse Society Fellowship to Brianna T. M. McMillan; and a grant from the James S. McDonnell Foundation to Jenny R. Saffran.

References

- Bannard, C., & Tomasello, M. (2012). Can we dissociate contingency learning from social learning in word acquisition by 24‐month‐olds? PLoS ONE, 7. doi:10.1371/journal.pone.0049881

- Blaiser, K. M., Nelson, P. B., & Kohnert, K. (2014). Effect of repeated exposures on word learning in quiet and noise. Communication Disorders Quarterly, 37, 25–35. doi:10.1177/1525740114554483

- Bradlow, A. R., Kraus, N., & Hayes, E. (2003). Speaking clearly for children with learning disabilities: Sentence perception in noise. Journal of Speech, Language, and Hearing Research, 46, 80–97. doi:10.1121/1.4743692

- Carey, S., & Bartlett, E. (1978). Acquiring a single new word. Papers and Reports on Child Language Development, 15, 17–29.

- Cohen, S., Glass, D. C., & Singer, J. E. (1973). Apartment noise, auditory discrimination, and reading ability in children. Journal of Experimental Social Psychology, 9, 407–422. doi:10.1016/S0022‐1031(73)80005‐8

- Cohen, S., Krantz, D. S., Evans, G. W., Stokols, D., & Kelly, S. (1981). Aircraft noise and children: Longitudinal and cross‐sectional evidence on adaptation to noise and the effectiveness of noise abatement. Journal of Personality and Social Psychology, 40, 331. doi:10.1037//0022‐3514.40.2.331

- Dockrell, J. E., & Shield, B. (2004). Children's perceptions of their acoustic environment at school and at home. The Journal of the Acoustical Society of America, 115, 2964–2973. doi:10.1121/1.1652610

- Dombroski, J., & Newman, R. S. (2014). Toddlers’ ability to map the meaning of new words in multi‐talker environments. The Journal of the Acoustical Society of America, 136, 2807–2815. doi:10.1121/1.4898051

- Evans, G. W. (2004). The environment of childhood poverty. American Psychologist, 59(2), 77. doi:10.1037/0003‐066x.59.2.77

- Evans, G. W., Hygge, S., & Bullinger, M. (1995). Chronic noise and psychological stress. Psychological Science, 6, 333–338. doi:10.1111/j.1467‐9280.1995.tb00522.x

- Evans, G. W., & Kantrowitz, E. (2002). Socioeconomic status and health: The potential role of environmental risk exposure. Annual Review of Public Health, 23, 303–331. doi:10.1146/annurev.publhealth.23.112001.112349

- Evans, G. W., & Maxwell, L. (1997). Chronic noise exposure and reading deficits: The mediating effects of language acquisition. Environment and Behavior, 29, 638–656. doi:10.1177/0013916597295003

- Fallon, M., Trehub, S. E., & Schneider, B. A. (2000). Children's perception of speech in multitalker babble. The Journal of the Acoustical Society of America, 108, 3023–3029. doi:10.1121/1.1323233

- Fennell, C. T., & Waxman, S. R. (2010). What paradox? Referential cues allow for infant use of phonetic detail in word learning. Child Development, 81, 1376–1383. doi:10.1111/j.1467‐8624.2010.01479.x

- Fenson, L., Pethick, S., Renda, C., Cox, J. L., Dale, P. S., & Reznick, J. S. (2000). Short‐form versions of the MacArthur Communicative Development Inventories. Applied Psycholinguistics, 21, 95–116. doi:10.1017/S0142716400001053

- Fernald, A., & Hurtado, N. (2006). Names in frames: Infants interpret words in sentence frames faster than words in isolation. Developmental Science, 9(3), F33–F40. doi:10.1111/j.1467‐7687.2006.00482.x

- Fernald, A., Zangl, R., Portillo, A. L., & Marchman, V. A. (2008). Looking while listening: Using eye movements to monitor spoken language. Developmental Psycholinguistics: On‐line Methods in Children's Language Processing, 113–132.

- Gerken, L. (2006). Decisions, decisions: Infant language learning when multiple generalizations are possible. Cognition, 98(3), B67–B74. doi:10.1016/j.cognition.2004.03.003

- Graf Estes, K., Evans, J. L., Alibali, M. W., & Saffran, J. R. (2007). Can infants map meaning to newly segmented words? Statistical segmentation and word learning. Psychological Science, 18, 254–260. doi:10.1111/j.1467‐9280.2007.01885.x

- Grieco‐Calub, T. M., Saffran, J. R., & Litovsky, R. Y. (2009). Spoken word recognition in toddlers who use cochlear implants. Journal of Speech, Language, and Hearing Research, 52, 1390–1400. doi:10.1044/1092‐4388(2009/08‐0154)

- Haines, M. M., Stansfeld, S. A., Head, J., & Job, R. F. (2002). Multilevel modelling of aircraft noise on performance tests in schools around Heathrow Airport London. Journal of Epidemiology and Community Health, 56(2), 139–144. doi:10.1136/jech.56.2.139

- Hart, B., & Risley, T. R. (1995). Meaningful differences in the everyday experience of young American children. Baltimore, MD: Paul H. Brookes Publishing.

- Hay, J. F., Pelucchi, B., Graf Estes, K., & Saffran, J. R. (2011). Linking sounds to meanings: Infant statistical learning in a natural language. Cognitive Psychology, 63, 93–106. doi:10.1016/j.cogpsych.2011.06.002

- Hoff, E. (2003). The specificity of environmental influence: Socioeconomic status affects early vocabulary development via maternal speech. Child Development, 74, 1368–1378. doi:10.1111/1467‐8624.00612

- Hoff, E. (2006). How social contexts support and shape language development. Developmental Review, 26(1), 55–88. doi:10.1016/j.dr.2005.11.002

- Hoff, E., & Naigles, L. (2002). How children use input to acquire a lexicon. Child Development, 73, 418–433. doi:10.1111/1467‐8624.00415

- Huttenlocher, J., Waterfall, H., Vasilyeva, M., Vevea, J., & Hedges, L. V. (2010). Sources of variability in children's language growth. Cognitive Psychology, 61, 343–365.

- Johnson, C. E. (2000). Children's phoneme identification in reverberation and noise. Journal of Speech, Language, and Hearing Research, 43, 144–157.

- Jusczyk, P. W., & Aslin, R. N. (1995). Infants’ detection of the sound patterns of words in fluent speech. Cognitive Psychology, 29, 1–23. doi:10.1006/cogp.1995.1010

- Knecht, H. A., Nelson, P. B., Whitelaw, G. M., & Feth, L. L. (2002). Background noise levels and reverberation times in unoccupied classrooms: Predictions and measurements. American Journal of Audiology, 11(2), 65–71. doi:10.1044/1059‐0899(2002/009)

- Lany, J., & Saffran, J. R. (2010). From statistics to meaning: Infants’ acquisition of lexical categories. Psychological Science, 21, 284–291. doi:10.1177/0956797609358570

- Lany, J., & Saffran, J. R. (2011). Interactions between statistical and semantic information in infant language development. Developmental Science, 14, 1207–1219. doi:10.1111/j.1467‐7687.2011.01073.x

- Larson, R., & Verma, S. (1999). How children and adolescents spend time across the world: Work, play, and developmental opportunities. Psychological Bulletin, 125, 701–736. doi:10.1037/0033‐2909.125.6.701

- Litovsky, R. Y. (2005). Speech intelligibility and spatial release from masking in young children. The Journal of the Acoustical Society of America, 117, 3091–3099. doi:10.1121/1.1873913

- Maxwell, L. E., & Evans, G. W. (2000). The effects of noise on pre‐school children's pre‐reading skills. Journal of Environmental Psychology, 20(1), 91–97. doi:10.1006/jevp.1999.0144

- Myers, D., Baer, W. C., & Choi, S. Y. (1996). The changing problem of overcrowded housing. Journal of the American Planning Association, 62(1), 66–84. doi:10.1080/0194439608975671

- Naigles, L. R. (1996). The use of multiple frames in verb learning via syntactic bootstrapping. Cognition, 58, 221–251. doi:10.1016/0010‐0277(95)00681‐8

- Newman, R. S. (2003). Prosodic differences in mothers’ speech to toddlers in quiet and noisy environments. Applied Psycholinguistics, 24, 539–560. doi:10.1017/S0142716403000274

- Newman, R. S. (2005). The cocktail party effect in infants revisited: Listening to one's name in noise. Developmental Psychology, 41, 352. doi:10.1037/0012‐1649.41.2.352

- Newman, R. S. (2009). Infants’ listening in multitalker environments: Effect of the number of background talkers. Attention, Perception, & Psychophysics, 71, 822–836. doi:10.3758/APP.71.4.822

- Newman, R. S. (2011). 2‐year‐olds’ speech understanding in multitalker environments. Infancy, 16, 447–470. doi:10.1111/j.1532‐7078.2010.00062.x

- Newman, R. S., & Jusczyk, P. W. (1996). The cocktail party effect in infants. Perception & Psychophysics, 58, 1145–1156. doi:10.3758/BF03207548

- Riley, K. G., & McGregor, K. K. (2012). Noise hampers children's expressive word learning. Language, Speech, and Hearing Services in Schools, 43, 325–337. doi:10.1044/0161‐1461(2012/11‐0053)

- Rothauser, E. H., Chapman, W. D., Guttman, N., Nordby, K. S., Silbiger, H. R., Urbanek, G. E., & Weinstock, M. (1969). IEEE recommended practice for speech quality measurements. IEEE Transactions on Audio and Electroacoustics, 17, 225–246.

- Saffran, J. R., Aslin, R. N., & Newport, E. L. (1996). Statistical learning by 8‐month‐old infants. Science, 274, 1926–1928. doi:10.1126/science.274.5294.1926

- Stelmachowicz, P. G., Pittman, A. L., Hoover, B. M., & Lewis, D. E. (2004). Novel‐word learning in children with normal hearing and hearing loss. Ear and Hearing, 25(1), 47–56. doi:10.1097/01.AUD.0000111258.98509.DE

- Swingley, D. (2007). Lexical exposure and word‐form encoding in 1.5‐year‐olds. Developmental Psychology, 43, 454. doi:10.1037/0012‐1649.43.2.454

- Swingley, D., & Aslin, R. N. (2007). Lexical competition in young children's word learning. Cognitive Psychology, 54, 99–132.

- Swingley, D., Pinto, J. P., & Fernald, A. (1999). Continuous processing in word recognition at 24 months. Cognition, 71, 73–108. doi:10.1016/S0010‐0277(99)00021‐9

- Treisman, A. M. (1960). Contextual cues in selective listening. Quarterly Journal of Experimental Psychology, 12, 242–248. doi:10.1080/17470216008416732

- Tun, P. A., & Wingfield, A. (1999). One voice too many: Adult age differences in language processing with different types of distracting sounds. Journal of Gerontology: Psychological Sciences, 54, P317–P327. doi:10.1093/geronb/54b.5.p317

- Weisleder, A., & Fernald, A. (2013). Talking to children matters: Early language experience strengthens processing and builds vocabulary. Psychological Science, 24, 2143–2152. doi:10.1177/0956797613488145

- Werker, J. F., Fennell, C. T., Corcoran, K. M., & Stager, C. L. (2002). Infants’ ability to learn phonetically similar words: Effects of age and vocabulary size. Infancy, 3(1), 1–30.

- Werker, J. F., & Yeung, H. H. (2005). Infant speech perception bootstraps word learning. Trends in Cognitive Sciences, 9, 519–527. doi:10.1016/j.tics.2005.09.003

- Wojcik, E. H., & Saffran, J. R. (2013). The ontogeny of lexical networks: Toddlers encode the relationships among referents when learning novel words. Psychological Science, 24, 1898–1905. doi:10.1177/0956797613478198

- Yuan, S., & Fisher, C. (2009). “Really? She blicked the baby?” Two‐year‐olds learn combinatorial facts about verbs by listening. Psychological Science, 20, 619–626. doi:10.1111/j.1467‐9280.2009.02341.x