Abstract

Objective

The present research reports on two randomized controlled trials evaluating TakeCARE, a video bystander program designed to help prevent sexual violence on college campuses.

Method

In Study 1, students were recruited from psychology courses at two universities. In Study 2, first-year students were recruited from a required course at one university. In both studies, students were randomly assigned to view one of two videos: TakeCARE or a control video on study skills. Just before viewing the videos, students completed measures of bystander behavior toward friends and ratings of self-efficacy for performing such behaviors. The efficacy measure was administered again after the video, and both the bystander behavior measure and the efficacy measure were administered at either one (Study 1) or two (Study 2) months later.

Results

In both studies, students who viewed TakeCARE, compared to students who viewed the control video, reported engaging in more bystander behavior toward friends and greater feelings of efficacy for performing such behavior. In Study 1, feelings of efficacy mediated effects of TakeCARE on bystander behavior; this result did not emerge in Study 2.

Conclusions

This research demonstrates that TakeCARE, a video bystander program, can positively influence bystander behavior toward friends. Given its potential to be easily distributed to an entire campus community, TakeCARE might be an effective addition to campus efforts to prevent sexual violence.

Keywords: bystander behavior, sexual violence, college students, prevention, randomized controlled trial

Sexual violence, which includes sexual coercion and assault, is a significant problem on college campuses due to its high prevalence and adverse consequences. Large surveys indicate that 19–25% of women experience sexual violence while they are in college (Fisher, Cullen, Turner, & Leary, 2000; Krebs, Lindquist, Warner, Fisher, & Martin, 2009). Moreover, victims of sexual violence are at increased risk for a range of mental health problems and adjustment difficulties, including trauma symptoms, eating disorders, diminished academic performance, and drug and alcohol abuse (Baker & Sommers, 2008; Banyard & Cross, 2008; Campbell, Dworkin, & Cabral, 2009; Littleton, Axsom, & Grills-Taquechel, 2009), as well as for future incidents of violence (Finkelhor, Ormrod, & Turner, 2007; Macy, 2008). The high prevalence and adverse consequences of sexual violence during the college years have prompted many campuses to make its prevention a high priority. The present research examines the efficacy of a novel strategy for increasing students’ bystander behavior, a strategy that has the potential to reduce sexual violence on college campuses.

Sexual violence prevention programs on college campuses

Many college sexual violence prevention programs focus on the penalties for perpetrating sexual violence, or strategies and skills for reducing risk for sexual victimization. That is, the programs address students as potential perpetrators or victims of sexual violence. Unfortunately, few of these programs have been rigorously evaluated and found to be effective in actually reducing rates of sexual violence (Anderson & Whiston, 2005; Morrison, Hardison, Mathew, & O’Neil, 2004). In addition, such programs have been criticized for failing to engage students, who typically do not consider themselves as either potential perpetrators or potential victims (Foubert, Langhinrichsen-Rohling, Brasfield, & Hill, 2010; Potter, Krider, & McMahon, 2000).

Bystander programs

Another strategy for reducing sexual violence on college campuses conceptualizes students as agents whose actions can reduce the risk that other students on campus will experience sexual violence. Programs adhering to this strategy, collectively referred to as bystander programs, share the common goal of engaging students in a community-wide effort to prevent sexual violence. A key component of such programs involves motivating students to become responsive bystanders, typically conceptualized and operationalized as engaging in behavior that: 1) interrupts situations that might result in sexual violence, 2) counters social norms that support sexual violence, and 3) supports those who have experienced sexual violence. Examples of responsive bystander behavior include: discouraging a friend from “hooking up” with someone who is intoxicated, expressing disagreement with someone who makes excuses for abusive behavior, and supporting a friend who believes he or she may have experienced sexual violence. Of course, the ultimate goal of campus bystander programs is to reduce sexual violence on campus by changing behavior and cognitions (e.g., confidence or perceived efficacy for intervening in situations) across a wide swath of students. It is not yet clear whether bystander programs reduce campus rates of sexual violence, but emerging evidence indicates that campus-wide reductions in rates of sexual violence can indeed be achieved (Coker et al., 2014).

A recent meta-analytic review indicates that bystander programs increase students’ sense of personal efficacy for engaging in bystander behavior, as well as their self-reports of bystander behavior (Katz & Moore, 2013). Unfortunately, despite these positive findings, it is challenging for universities to broadly disseminate most of the empirically-supported bystander programs. Most such programs require trained staff and typically are administered in a small-group format, making it difficult for universities to reach large groups of students at a reasonable cost. Furthermore, attempts to broadly disseminate and implement empirically-supported programs of all kinds often result in programs that are low in fidelity to the original program (Karlin & Cross, 2014), a potential problem with the dissemination of most bystander programs evaluated thus far.

TakeCARE – a Video Bystander Program

To address the need for an efficacious, easy-to-disseminate bystander program with the potential for broad reach, we worked in conjunction with groups of college students and administrators to develop a video bystander program (TakeCARE) that can be administered online. A video format eliminates many of the potential barriers to implementing bystander programs across large groups of students, including limited staff capacity to administer the program, limited staff knowledge and/or skills in program delivery, and the costs of staff recruitment, training, and supervision (Karlin & Cross, 2014). In addition, a video program eliminates the risk of low fidelity to the original program, because what is disseminated is the exact original program. Finally, a video program can be disseminated not only across an entire campus but also to any institution interested in offering a bystander program.

In addition to the video format, TakeCARE differs from other bystander programs in several important ways. It is much briefer than most, lasting less than 25 minutes, as opposed to one or more sessions of an hour or longer. The brief format was driven in part by student desires for a program that was short and to the point, and by administrator desires for a program that would not be perceived as burdensome by students. This prompted us to focus the content of the video tightly on a single outcome (responsive bystander behavior toward friends) and a single process for accomplishing that outcome (increasing feelings of efficacy for performing bystander behaviors). This focus differs from other bystander programs, which target multiple outcomes and multiple processes for accomplishing those outcomes (e.g., Banyard et al., 2007). The emphasis on friends taking care of friends is consistent with findings on the significant influence that friends can have on a wide range of individuals’ health-related behaviors (e.g., Cullum, O’Grady, Sandoval, Armeli, & Tennen, 2013; Fitzgerald, Fitzgerald, & Aherne, 2012; Lau, Quadrel, & Hartman, 1990). In addition, there are developmental as well as empirically based reasons to believe that encouraging students to take action to protect friends, as compared to generalized “others,” would contribute to successful intervention effects (e.g., Levine, Cassidy, Brazier, & Reicher, 2002). Specifically, the importance of peer relationships in late adolescence is likely to motivate college students to look out for the well-being of their friends. Also, because most sexual assaults and completed rapes on college campuses take place in the victim’s place of residence (Fisher et al., 2000), we reasoned that at least some of the individuals in close temporal or physical proximity to the event would be friends of the victim or the perpetrator.

The focus on perceived efficacy is consistent with theory and research on the bystander effect that relates greater efficacy to increased bystander behavior (e.g., Banyard, Moynihan, Cares, & Warner, 2014; Burn, 2009).

A small evaluation of an early iteration of TakeCARE was conducted with 96 college students (81% female) who were recruited from social psychology classes and randomly assigned to view either TakeCARE or a control program on study skills (Kleinsasser, Jouriles, McDonald, & Rosenfield, 2014). Compared to the control group, those who viewed TakeCARE reported engaging in more bystander behavior to protect their friends over the two months following the intervention. They also reported greater efficacy for engaging in bystander behavior, and efficacy partially mediated the effects of TakeCARE on bystander behavior. This initial evaluation provides preliminary empirical evidence for TakeCARE’s potential value; however, the evidence is arguably limited by the size and diversity of the sample.

Present research

To attempt to provide more compelling evidence for TakeCARE, we conducted two randomized controlled trials evaluating whether TakeCARE’s effects generalize across campuses and across a more diverse array of students than those in the initial study. The first trial was conducted across two universities, with a sample recruited from psychology courses. The second trial was conducted at a single university, but participants were recruited from a class that first-year students are required to take. In each of the trials, we hypothesized that students who viewed TakeCARE would report: (1) engaging in more responsive bystander behavior to protect friends, and (2) greater efficacy for intervening in situations in which friends may be at risk for sexual violence, than would students who viewed the control video. We also hypothesized that efficacy for intervening would: (3) predict bystander behavior during the follow-up period, and (4) mediate the effects of TakeCARE on bystander behavior. We also explored whether TakeCARE’s effects differed across universities, across male and female students, and across students who liked and disliked the video.

Study 1

Methods

Participants

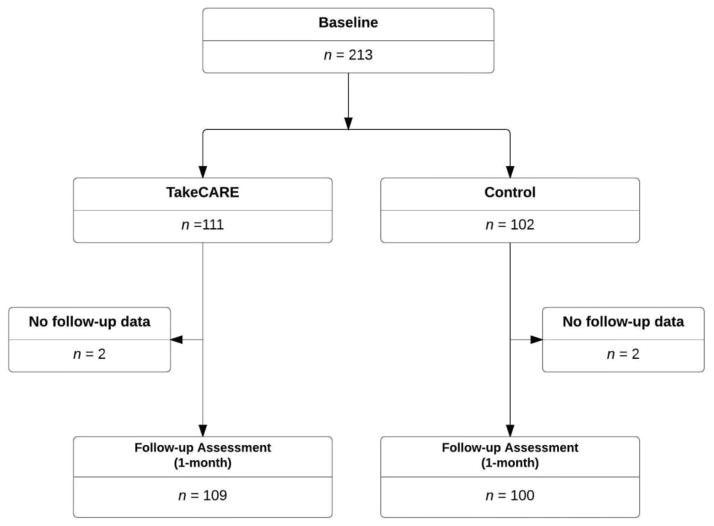

Participants were recruited from undergraduate psychology classes at two mid-sized, private universities in the United States, one located in the Southwest (SW) and the other in the northern Midwest (MW). Figure 1 displays the flow of participants through the project. Of the 213 students who volunteered to participate (SW n = 69; MW n = 144), four withdrew before the follow-up assessment, resulting in a sample of 209 students with complete data. The sample of 213 students was predominantly female (n = 172; 80.8%) and White (n = 179; 84.0%), but it also included Asian (n = 11; 5.2%), Black (n = 9; 4.2%), Bi- or Multi-racial (n = 9; 4.2%), and “Other” (n = 5; 2.3%) participants. Twenty-one participants (9.9%) were Hispanic. Participants ranged in age from 18 to 35 (M = 19.14, SD = 1.81); only four participants were older than 22. Students received extra credit in a psychology course for their participation. Those who did not wish to participate had the option to participate in another research study or to complete an alternative assignment for extra credit.

Figure 1.

Participant flow and retention for Study 1.

Procedures

The Institutional Review Boards at both universities approved all procedures. During the informed consent process, participants were told that their initial lab visit would involve completing questionnaires on a variety of topics, that they would be randomly assigned to view one of two brief videos (TakeCARE or the control video on study skills), and that they would complete several questionnaires immediately after viewing the video. Participants were also informed that they would receive an email link to additional self-report measures approximately one month later. All study measures were administered using Qualtrics survey software. Baseline and post-video assessments were conducted from September through October 2014; thus, one-month follow-up assessments were conducted in October and November 2014. In the computer lab where the baseline questionnaires, video programs, and post-video questionnaires were administered, the computers were set up to ensure participant privacy (i.e., barriers prevented students from seeing one another’s computer screens). In addition to bystander-related topics, baseline measures covered a variety of other topics (e.g., motivation to study, study concentration) to help disguise the purpose of the study and enhance the credibility of the control condition.

A random numbers table was used to assign participants to conditions. Participants in Study 1 (the 2-university study) were randomized within university. Those randomized to view TakeCARE (n = 111) did not differ from those randomized to view the control program (a video designed to improve study skills) (n = 102) on any of the measured demographic variables (sex, age, or race/ethnicity, ps > .60) or study variables (described below, ps > .28). The demographics for the two groups and means and standard deviations of the study variables at baseline are summarized in Tables 1 and 2, respectively. The average number of days between the initial lab visit and one-month follow-up was 30.4 (SD = 4.79), and did not differ across conditions (p > .48).
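The allocation step can be illustrated with a short Python sketch. It assumes a hypothetical input format (participant IDs paired with site labels) and uses a seeded pseudo-random generator in place of a printed random numbers table; the rule mapping digits 0 to 4 to TakeCARE and 5 to 9 to the control condition is an illustrative assumption, not a rule reported by the authors. Because each participant is assigned independently within their university, group sizes need not be equal, which is consistent with the unequal arms reported above (111 vs. 102).

```python
import random
from collections import defaultdict


def randomize_within_site(participants, seed=None):
    """Simple randomization carried out separately within each site.

    participants: iterable of (participant_id, site) pairs (hypothetical format).
    Each participant receives one digit 0-9, as if read from a random numbers
    table: 0-4 -> "TakeCARE", 5-9 -> "control" (an illustrative rule).
    """
    rng = random.Random(seed)  # stands in for the printed random numbers table
    by_site = defaultdict(list)
    for pid, site in participants:
        by_site[site].append(pid)

    assignment = {}
    for site in sorted(by_site):           # each university is its own stratum
        for pid in by_site[site]:
            digit = rng.randint(0, 9)      # one "table" digit per participant
            assignment[pid] = "TakeCARE" if digit <= 4 else "control"
    return assignment
```

Seeding the generator makes the allocation reproducible, which plays the same role as recording the starting point in a random numbers table.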

Table 1.

Sample Characteristics: Study 1

| Variable | Control (n = 102) | | TakeCARE (n = 111) | |
|---|---|---|---|---|
| | n | % | n | % |
| Sex | | | | |
| Male | 18 | 17.6 | 23 | 20.7 |
| Female | 84 | 82.4 | 88 | 79.3 |
| Race | | | | |
| White | 86 | 84.3 | 93 | 83.8 |
| Asian | 5 | 4.9 | 6 | 5.4 |
| Other | 11 | 10.8 | 12 | 10.8 |
| Ethnicity | | | | |
| Hispanic | 11 | 10.8 | 10 | 9.0 |
| Non-Hispanic | 91 | 89.2 | 101 | 91.0 |
| | M (SD) | | M (SD) | |
| Age (years) | 19.07 (1.16) | | 19.18 (2.24) | |

Table 2.

Means (Standard Deviations) of Study Variables at Baseline, Post-Video, and Follow-Up for Study 1

| Variable | Control: Baseline | Control: Post-Video | Control: Follow-up | TakeCARE: Baseline | TakeCARE: Post-Video | TakeCARE: Follow-up |
|---|---|---|---|---|---|---|
| Bystander behavior | 27.95 (19.02) | — | 21.35 (18.17) | 30.83 (19.83) | — | 28.50 (22.54) |
| Bystander efficacy | 75.23 (13.63) | 76.88 (14.83) | 72.43 (18.56) | 75.19 (13.88) | 84.88 (23.18) | 78.95 (13.80) |

Note. Bystander behavior scores range from 0 to 49, with higher scores indicating greater use of bystander behaviors. Bystander efficacy scores range from 0 to 100 with higher scores indicating greater feelings of efficacy.

Video Programs

TakeCARE

Participants viewed TakeCARE on a computer. TakeCARE opens by acknowledging the various demands placed on college students, such as balancing adult responsibilities with college social opportunities, and by noting that friends are often an important part of students’ lives. The program describes the likelihood that sexual violence or relationship abuse will happen to someone students know, and how they can help “take care” of their friends to prevent these negative experiences. TakeCARE then presents and discusses three vignettes designed to demonstrate ways in which students can intervene when they see sexual coercion or violence, or when they see risky situations that may lead to these outcomes. The vignettes depict college students encountering risky situations involving their friends and model effective bystander responses that 1) prevent the event, 2) stop it from continuing or escalating, or 3) provide support for a friend after an event takes place. For example, the opening vignette shows a male and a female together at a party, both intoxicated and about to go to a bedroom together. Another couple (the bystanders) sees what is happening. The vignette pauses while a narrator discusses the situation, indicating that it could result in problems for either or both of these individuals. The video then resumes and concludes with the bystanders redirecting the male to other ways of occupying his time at the party and taking the female home. The narrator then describes several other things friends could do in “situations like this” to prevent their friends from being harmed, indicating that “it’s not so important what you do, but that you do something” to protect your friends.

During the program, the narrator uses the phrase “TakeCARE,” linking the letters in the word “CARE” to the principles of successful bystander behavior. In each vignette, the bystanders demonstrate that they are:

C—Confident that they can help their friends avoid risky situations,

A—Aware that their friends could get hurt in these kinds of situations,

R—Responsible for helping, and,

E—Effective in how they help.

The CARE acronym is intended to provide a mnemonic for participants to use when thinking about how they might respond in risky situations, and to encourage participants to think of bystander behavior as simply “friends taking care of friends.”

Interspersed among the vignettes, the video also provides information about sexual pressure, relationship violence and dating abuse, and a definition of “consent” as it applies to sexual behavior. The TakeCARE video is 24 minutes long.

Control program

Participants also watched the control program on a computer. The program features videos from the Samford University Office of Marketing and Communication, entitled “How to Get the Most Out of Studying,” interspersed with information about study skills. Similar to the TakeCARE program, the control program presents video clips featuring scenes with college-aged students, narration providing information on the topic, and written text information. The program discusses common cognitive errors, presents ways to study most efficiently, highlights information about levels of processing, and introduces a particular note-taking method as a technique to aid deeper processing of information. The control program video is 20 minutes long.

Measures

As indicated in the procedures section, the study measures were embedded in a broader assessment, which included measures of school performance, motivation to study, and study concentration. Below are descriptions of the subset of measures used to evaluate TakeCARE.

Bystander behaviors

At the baseline and one-month follow-up assessments, students completed the 49-item Bystander Behaviors Scale for Friends (Banyard et al., 2014). This scale examines several dimensions of bystander intervention opportunities, including: 1) risky situations: identifying and interrupting situations in which risk for sexual and relationship abuse seemed to be escalating, 2) accessing resources: calling for professional help, 3) proactive behavior: making a plan in advance of being in a risky situation, and talking with others about issues of violence, and 4) party safety: behaviors for staying safe when going to parties. Participants reported whether or not they had engaged in each of the behaviors in the past month. Items include: “If I saw a friend taking a very intoxicated person to their room, I said something and asked what they were doing” and “I expressed disagreement with a friend who said having sex with someone who is passed out or very intoxicated is okay.” The number of “yes” responses was used as an index of responsive bystander behavior. Past research has found greater self-reported bystander behavior to be related to theorized determinants of bystander behavior, such as efficacy for engaging in bystander behavior (Banyard et al., 2014). Coefficient alpha at baseline and follow-up was .93 and .95 for Study 1, and .93 and .96 for Study 2.
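The scoring rule described above (a count of “yes” responses) and the coefficient alpha values can be sketched in Python as follows. This is a minimal illustration, assuming responses are coded 1 = yes and 0 = no and that item scores are stored one respondent per row; the function names are hypothetical and not part of the published scale.

```python
from statistics import variance


def bystander_score(responses):
    """Number of 'yes' answers, assuming items are coded 1 = yes, 0 = no."""
    return sum(1 for r in responses if r == 1)


def cronbach_alpha(item_matrix):
    """Coefficient alpha: k/(k-1) * (1 - sum of item variances / variance of totals).

    item_matrix: one list of k item scores per respondent.
    """
    k = len(item_matrix[0])
    items = list(zip(*item_matrix))                 # one tuple of scores per item
    item_var = sum(variance(col) for col in items)  # sample variance of each item
    total_var = variance([sum(row) for row in item_matrix])
    return (k / (k - 1)) * (1 - item_var / total_var)
```

For the 49-item scale, each respondent's score therefore ranges from 0 to 49, matching the range given in the notes to Tables 2 and 4.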

Efficacy for intervening

To assess participants’ confidence in their ability to perform bystander behaviors, participants completed the Bystander Efficacy Scale (Banyard, Plante, & Moynihan, 2005) at baseline, post-video, and follow-up. This questionnaire asks students to rate how confident they are that they could perform each of 14 behaviors, using a scale from 0 to 100 (0 = Can’t do, 100 = Very certain can do). Items include: “Do something to help a very drunk person who is being brought upstairs to a bedroom by a group of people at a party” and “Express my discomfort if someone says that rape victims are to blame for being raped.” Efficacy scores correlate with self-reported bystander behavior (Banyard et al., 2005). Coefficient alpha at baseline, post-video, and follow-up was .87, .93, and .93 for Study 1, and .87, .92, and .90 for Study 2. Because efficacy was examined as a mediator of the effects of TakeCARE on bystander behavior, we computed the average level of efficacy during the time interval for which bystander behavior was assessed ((post-video efficacy + follow-up efficacy) / 2).
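A minimal sketch of the efficacy scoring and of the averaged mediator follows. It assumes that a respondent's scale score is the mean of the 14 item ratings, which is consistent with the 0 to 100 range reported in the notes to Tables 2 and 4 but is not stated explicitly in the text; the function names are hypothetical.

```python
from statistics import mean


def efficacy_score(item_ratings):
    """Scale score as the mean of the item ratings (assumed scoring rule)."""
    if not all(0 <= r <= 100 for r in item_ratings):
        raise ValueError("efficacy ratings must lie between 0 and 100")
    return mean(item_ratings)


def mediator_efficacy(post_video, follow_up):
    """Average efficacy over the interval in which bystander behavior was assessed."""
    return (post_video + follow_up) / 2
```

Averaging the two assessments gives a single mediator value that spans the same window as the follow-up bystander behavior report.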

Consumer satisfaction

A brief consumer satisfaction survey was administered at follow-up. Participants rated on a 5-point scale (1 = Not at all, 3 = Somewhat, 5 = Very much) the extent to which they liked the video program they viewed, learned something new, found the video helpful, and thought it would be helpful to their friends. Coefficient alpha was .87 for Study 1, and .90 for Study 2.

Results

Effects of TakeCARE

We conducted analyses of covariance (ANCOVAs) to examine the effects of TakeCARE on bystander behavior (hypothesis 1) and efficacy (hypothesis 2). ANCOVA is the recommended approach for analyzing pre-post data (Tabachnick & Fidell, 2013) because it: 1) adjusts for pretest differences, 2) does not suffer from regression to the mean, and 3) has the lowest post-test variance (after adjusting for pretest scores). In addition to controlling for the baseline level of the outcome, we also controlled for university (SW, MW), race, Hispanic ethnicity (coded separately from race), sex, and age. Because some racial groups were small, race was coded as White, Asian, and “Other” and was represented by two dummy variables coding the difference between Asian and White and between “Other” and White.
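The ANCOVA described above is equivalent to a linear regression of the follow-up score on condition plus the baseline score and covariates, with the condition effect tested by comparing the model with and without the condition term. The Python sketch below illustrates that logic with a plain least-squares fit; it is not the authors' analysis code, and the dictionary keys and codings (e.g., female = 1, MW = 1) are hypothetical. Race enters as two dummy variables with White as the reference group, as described in the text.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(X, y):
    """Least-squares coefficients and residual sum of squares for y = X b."""
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    coef = solve(xtx, xty)
    sse = sum((yi - sum(c * v for c, v in zip(coef, row))) ** 2 for row, yi in zip(X, y))
    return coef, sse


def race_dummies(race):
    """Two dummy codes with White as reference: (Asian vs. White, Other vs. White)."""
    return [1.0 if race == "Asian" else 0.0, 1.0 if race == "Other" else 0.0]


def ancova_condition_effect(rows):
    """Adjusted TakeCARE-minus-control difference, its 1-df F, and error df."""
    def design(r, with_condition):
        x = [1.0, r["pre"], r["site_mw"], *race_dummies(r["race"]),
             r["hispanic"], r["female"], r["age"]]
        return x + [r["takecare"]] if with_condition else x

    y = [r["post"] for r in rows]
    coef, sse_full = ols([design(r, True) for r in rows], y)
    _, sse_reduced = ols([design(r, False) for r in rows], y)
    df_error = len(rows) - len(coef)
    f_stat = (sse_reduced - sse_full) / (sse_full / df_error)
    return coef[-1], f_stat, df_error
```

With nine parameters in this model (intercept, baseline, site, two race dummies, ethnicity, sex, age, and condition), 209 completers leave 200 error degrees of freedom, which matches the F(1, 200) reported for bystander behavior.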

Theoretically, it is possible that the data were correlated within sites (SW, MW). That is, characteristics of students and their campus experiences may be more similar within a particular university, as opposed to across universities. Thus, we performed our analyses twice: once using a mixed effects model with participants nested within sites, and once using standard ANCOVA. Results from both models were virtually identical. Specifically, all statistically significant effects in one analysis were significant in the other. Below we present the results from the standard ANCOVA models, since these results are slightly more conservative than the results from the mixed effects models.

Participants who viewed TakeCARE reported engaging in more bystander behavior in the month following the viewing (adjusted M = 27.92, SE = 1.71) than participants in the control condition (adjusted M = 21.98, SE = 1.78), F(1, 200) = 5.77, p = .017, partial η² = .028. Paired-sample t-tests showed that bystander behavior decreased from baseline to follow-up in the control group, t(99) = 3.53, p = .001, but remained stable in the TakeCARE condition, t(108) = .93, p = .36 (see Table 2).
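The paired-sample t statistic is the mean baseline-minus-follow-up difference divided by its standard error. A minimal Python sketch follows; it returns only the statistic and its degrees of freedom, because a p value requires the t distribution (available, for example, through `scipy.stats.t.sf`), which the standard library does not provide.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(before, after):
    """Paired-sample t statistic and df; positive t means scores decreased."""
    diffs = [b - a for b, a in zip(before, after)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))  # mean difference / its standard error
    return t, n - 1
```

With differences taken as baseline minus follow-up, a positive t corresponds to a decline, as in the control group's t(99) = 3.53.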

Similar results were found for efficacy. Participants who viewed TakeCARE reported higher efficacy (adjusted M = 82.04, SE = 1.02) than participants in the control condition (adjusted M = 74.61, SE = 1.06), F(1, 204) = 25.60, p < .001, partial η² = .112 (see Table 2).
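Because each condition effect has one numerator degree of freedom, partial η² can be recovered from a reported F and its error degrees of freedom as F·df_effect / (F·df_effect + df_error). The sketch below applies this identity; for the reported F values it gives about .028 and .111 for the two Study 1 outcomes and .023 for Study 2, consistent with the reported effect sizes once they are expressed as proportions (small discrepancies reflect rounding of F).

```python
def partial_eta_squared(f_stat, df_effect, df_error):
    """Partial eta squared implied by an F statistic and its degrees of freedom."""
    return (f_stat * df_effect) / (f_stat * df_effect + df_error)


for label, f_stat, df_error in [("Study 1, bystander behavior", 5.77, 200),
                                ("Study 1, efficacy", 25.60, 204),
                                ("Study 2, bystander behavior", 4.03, 172)]:
    print(label, round(partial_eta_squared(f_stat, 1, df_error), 3))
```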

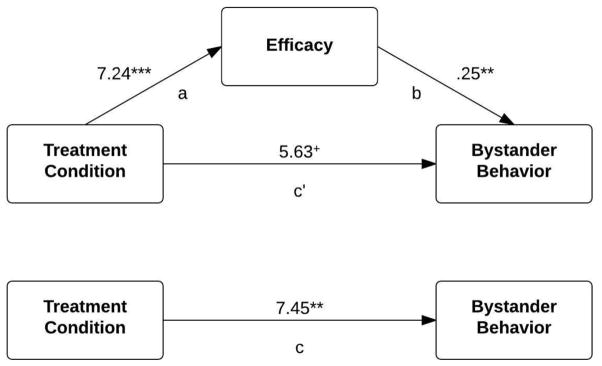

Relation between Efficacy and Bystander Behavior and Tests for Mediation

Bystander behavior during the month post-video was positively correlated with efficacy during that period, r(204) = .22, p = .001 (hypothesis 3). It was also positively correlated with efficacy at both the post-video and one-month follow-up assessments, ps < .05. Thus, we performed a mediation analysis to determine whether efficacy mediated the effect of TakeCARE on bystander behavior (hypothesis 4). As can be seen in Figure 2, intervention condition was related to efficacy, b = 7.24, t(200) = 3.41, p < .001 (the “a” path), which in turn was related to bystander behavior, controlling for intervention condition, b = .25, t(200) = 2.74, p < .01 (the “b” path). We tested the statistical significance of the indirect effect (a*b) using bias-corrected bootstrapping with 5000 bootstrap samples and found a*b = 1.89, 95% confidence interval (CI) [.47, 4.09]. Mediation can be inferred because the 95% CI did not include 0. As an indication of effect size, the proportion of the total effect of TakeCARE on bystander behavior that was mediated by efficacy was PM = 25.4%. Using post-video efficacy as the mediator (rather than average efficacy from post-video to follow-up) also yielded a mediating effect, a*b = 1.16, 95% CI [.02, 2.98], PM = 15.6%.
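The bias-corrected bootstrap test of the indirect effect can be sketched in Python as follows. This is a simplified illustration, not the authors' analysis: it omits the covariates, estimates the a path as the slope of the mediator on condition and the b path as the slope of the outcome on the mediator controlling for condition, and applies the standard bias correction based on the share of bootstrap estimates falling below the point estimate. The function names, the seed, and the index-based percentile lookup are assumptions made for the sketch.

```python
import random
from statistics import NormalDist, mean


def _centered_cross(u, v):
    """Sum of cross-products of deviations from the means of u and v."""
    mu, mv = mean(u), mean(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v))


def indirect_effect(x, m, y):
    """a*b, where a = slope of m on x and b = slope of y on m controlling for x."""
    sxx, smm = _centered_cross(x, x), _centered_cross(m, m)
    sxm, sxy, smy = _centered_cross(x, m), _centered_cross(x, y), _centered_cross(m, y)
    a = sxm / sxx
    b = (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)
    return a * b


def proportion_mediated(x, m, y):
    """Indirect effect divided by the total effect (slope of y on x)."""
    total = _centered_cross(x, y) / _centered_cross(x, x)
    return indirect_effect(x, m, y) / total


def bc_bootstrap_ci(x, m, y, n_boot=5000, level=0.95, seed=1):
    """Point estimate of a*b and its bias-corrected percentile bootstrap CI."""
    rng = random.Random(seed)
    n = len(x)
    est = indirect_effect(x, m, y)
    boots = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        boots.append(indirect_effect([x[i] for i in idx],
                                     [m[i] for i in idx],
                                     [y[i] for i in idx]))
    boots.sort()
    nd = NormalDist()
    # Bias-correction constant z0: normal quantile of the share of estimates below est.
    share = sum(b < est for b in boots) / n_boot
    share = min(max(share, 1 / n_boot), 1 - 1 / n_boot)  # keep inv_cdf finite
    z0 = nd.inv_cdf(share)
    z = nd.inv_cdf(0.5 + level / 2)
    lo_q, hi_q = nd.cdf(2 * z0 - z), nd.cdf(2 * z0 + z)  # adjusted percentiles
    return est, (boots[int(lo_q * (n_boot - 1))], boots[int(hi_q * (n_boot - 1))])
```

In this no-covariate setting the total effect equals the direct effect plus a*b, so the proportion mediated (PM) is simply a*b divided by the total effect.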

Figure 2.

Model evaluating efficacy as a mediator of TakeCARE’s effects on bystander behavior for Study 1.

Tests for Moderation

Follow-up analyses examined whether university (SW vs. MW), participant sex, or consumer satisfaction moderated the effect of TakeCARE on bystander behavior or efficacy. First, site and sex were added in separate analyses as between-subjects variables to the ANCOVAs reported above. No moderating effects were detected (for the Intervention × Site interactions, ps > .31; for Intervention × Sex, ps > .14, and for Intervention × Site × Sex, ps > .24).
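A moderation test of this kind adds a condition × moderator product term and asks whether it improves model fit. The sketch below shows the one-degree-of-freedom F test for that term, with the baseline score as the only covariate for brevity; it is an illustrative reduction of the ANCOVAs described above, and the function names and 0/1 codings are hypothetical.

```python
def lstsq_sse(X, y):
    """Residual sum of squares from an ordinary least-squares fit (normal equations)."""
    p = len(X[0])
    A = [[sum(r[i] * r[j] for r in X) for j in range(p)] +
         [sum(r[i] * v for r, v in zip(X, y))] for i in range(p)]
    for c in range(p):                       # Gauss-Jordan elimination
        piv = max(range(c, p), key=lambda k: abs(A[k][c]))
        A[c], A[piv] = A[piv], A[c]
        for k in range(p):
            if k != c:
                f = A[k][c] / A[c][c]
                A[k] = [a - f * b for a, b in zip(A[k], A[c])]
    beta = [A[i][p] / A[i][i] for i in range(p)]
    return sum((v - sum(b * x for b, x in zip(beta, r))) ** 2 for r, v in zip(X, y))


def interaction_F(pre, cond, mod, post):
    """1-df F for the cond x mod term in post ~ pre + cond + mod + cond*mod."""
    full = [[1.0, p, c, m, c * m] for p, c, m in zip(pre, cond, mod)]
    reduced = [row[:4] for row in full]      # same model without the product term
    sse_f, sse_r = lstsq_sse(full, post), lstsq_sse(reduced, post)
    df_error = len(post) - 5
    return (sse_r - sse_f) / (sse_f / df_error), df_error
```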

Regarding consumer satisfaction, means for the 4 consumer satisfaction items (rated on a 1–5 scale) were: Did you like the video? (M = 2.99, SD = .97); Did you learn anything new? (M = 2.86, SD = 1.08); Has the video been helpful to you? (M = 2.73, SD = 1.08); and Do you think the video would be helpful to your friends? (M = 3.04, SD = 1.05). We combined these 4 items into a scale of overall consumer satisfaction (coefficient α = .90), and an ANCOVA (controlling for university [SW, MW], race, Hispanic ethnicity, sex, and age) indicated no difference in consumer satisfaction between the TakeCARE and control conditions (p = .95). In addition, consumer satisfaction did not predict bystander behavior or efficacy, nor did it moderate the effect of intervention condition on bystander behavior or efficacy (ps > .20).

Study 2

In Study 1, students in psychology courses at two universities who viewed TakeCARE reported engaging in more bystander behavior toward friends and greater feelings of efficacy for engaging in bystander behavior than did students in the control group. Moreover, bystander behavior was positively associated with efficacy, and efficacy partially mediated TakeCARE’s effects on bystander behavior. Tests for moderation indicated that our results did not differ across the two universities or across male and female students. The extent to which students liked the video also did not moderate its effects.

Study 2 was designed to provide a complementary test of TakeCARE’s effects in a sample of first-year students recruited from a required university class. Our intent was to evaluate TakeCARE’s effects in a different type of university sample, rather than limiting ourselves to students who were enrolled in psychology classes and seeking extra credit (the sample used for Study 1, as well as for the evaluation of an early iteration of TakeCARE; Kleinsasser et al., 2014), and to extend the follow-up period for assessing these effects to 2 months.

Methods

Participants

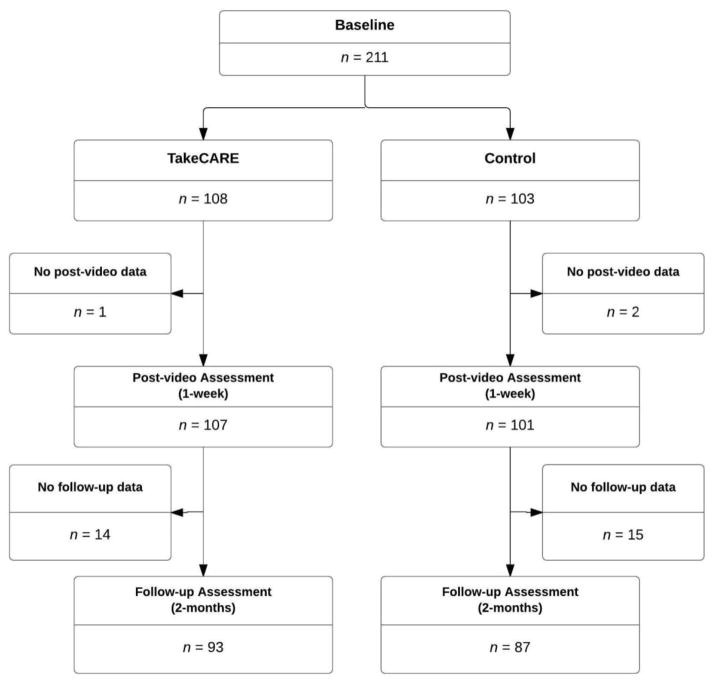

Participants were recruited from required first-year Wellness classes taught by four instructors at a midsize, private university in the southwestern United States (the SW university in Study 1). Students in all Wellness classes were required to complete “Out of Class” experiences, and participating in this study was one of several options available. None of the students who participated in Study 1 participated in Study 2. Of the 211 students who elected to participate, 31 dropped out before the follow-up assessment, resulting in a sample of 180 students with both baseline and follow-up data. Figure 3 displays the flow of participants through Study 2.

Figure 3.

Participant flow and retention for Study 2.

The sample of 211 students included an almost equal number of female and male participants (106 females, 50.2%). The distribution for participant race was: White (n = 144, 68.2%), Asian (n = 33, 15.6%), Black (n = 9, 4.3%), Bi- or Multi-racial (n = 19, 9.0%), and other categories (n = 6, 2.8%). Twenty-three (10.9%) of these participants were Hispanic. Participants ranged from 18 to 21 years old (M = 18.25, SD = 0.59). Our sampling strategy for this second study resulted in a sample that was fairly representative of the first-year students at the university where the study was conducted with respect to sex (the university is 50% female) and race/ethnicity (e.g., the university is 73% White, 10% Hispanic, 5% Black). Study completers (n = 180) did not differ from dropouts (n = 31) on any of the study variables (ps > .28) or demographic variables, although males showed a nonsignificant tendency to drop out more often (20/105, 19.0%) than females (11/106, 10.4%), Fisher’s exact test p = .083.
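The Fisher’s exact tests used in this section can be computed directly from hypergeometric probabilities. The Python sketch below implements the common two-sided definition (summing the probabilities of all tables with the observed margins that are no more likely than the observed table); statistical packages can differ slightly in how they define the two-sided p value, so this is an assumption rather than a description of the software the authors used.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p for the 2x2 table [[a, b], [c, d]]."""
    row1, col1, n = a + b, a + c, a + b + c + d
    denom = comb(n, col1)

    def prob(x):
        """Hypergeometric probability that the top-left cell equals x, margins fixed."""
        return comb(row1, x) * comb(n - row1, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - (n - row1)), min(row1, col1)
    # Small relative tolerance so tables tied with the observed one are included.
    return min(1.0, sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7)))
```

For the dropout comparison, the table is males (20 dropped, 85 completed) versus females (11 dropped, 95 completed), that is, `fisher_exact_two_sided(20, 85, 11, 95)`.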

Comparisons across the samples for Study 1 and Study 2 indicated that Study 1 had a higher proportion of females (80.8% vs. 50.2%, Fisher’s exact test p < .001) and Whites (84.0% vs. 68.2%, Fisher’s exact test p < .001), and a lower proportion of Asians (5.2% vs. 15.6%, Fisher’s exact test p < .001). As would be expected, students in Study 2 were younger than those in Study 1 (M = 18.3 vs. 19.1 years, F(1, 422) = 45.56, p < .001).

Procedures

The university’s Institutional Review Board approved all procedures. The procedures were identical to those described in Study 1, with two exceptions. First, the post-video questionnaires were administered approximately one week after the participants viewed the video (as opposed to immediately afterward in Study 1). Second, participants received an email link to the follow-up assessment questionnaires approximately 2 months after they viewed the video (as opposed to one month afterward in Study 1). The reference period for the Bystander Behaviors Scale for Friends (Banyard et al., 2014) was modified to reflect this change: at baseline and follow-up, respondents reported whether or not they had engaged in each of the bystander behaviors in the past 2 months. Baseline and post-video assessments were conducted from September through October 2014; thus, 2-month follow-up assessments were conducted during November and December 2014.

A random numbers table was used to randomize participants to condition. Those randomized to view TakeCARE (n = 108) did not differ from those randomized to view the control program (the same study skills video used in Study 1) (n = 103) on any of the measured demographic variables (sex, age, or race/ethnicity, ps > .54) or baseline study variables (ps > .28). In addition, attrition did not differ across the conditions (TakeCARE, n = 15, 13.9%; Control, n = 16, 15.5%). Table 3 summarizes the demographics of the two groups; Table 4 shows the means of the study variables at baseline. The average number of days between the initial lab visit (baseline assessment, randomization, and viewing the video) and the one-week post-video assessment was 7.66 (SD = 4.46); this did not differ across conditions (p > .23). The average number of days between baseline assessment and the two-month follow-up assessment was 63.61 (SD = 6.41) and did not differ across conditions (p > .43).

Table 3.

Sample Characteristics: Study 2

| Variable | Control (n = 103), n | Control, % | TakeCARE (n = 108), n | TakeCARE, % |
|---|---|---|---|---|
| Sex | | | | |
| Male | 49 | 47.6 | 56 | 51.9 |
| Female | 54 | 52.4 | 52 | 48.1 |
| Race | | | | |
| White | 70 | 68.0 | 74 | 68.5 |
| Asian | 16 | 15.5 | 17 | 15.7 |
| Other | 17 | 16.5 | 17 | 15.7 |
| Ethnicity | | | | |
| Hispanic | 12 | 11.7 | 11 | 10.2 |
| Non-Hispanic | 91 | 88.3 | 97 | 89.8 |
| Age (years), M (SD) | 18.27 (0.63) | | 18.22 (0.56) | |

Table 4.

Means (Standard Deviations) of Study Variables at Baseline, Post-Video, and Follow-Up for Study 2

| Variable | Control: Baseline | Control: Post-Video | Control: Follow-up | TakeCARE: Baseline | TakeCARE: Post-Video | TakeCARE: Follow-up |
|---|---|---|---|---|---|---|
| Bystander behavior | 34.13 (22.36) | — | 33.97 (25.00) | 31.12 (18.30) | — | 38.56 (26.09) |
| Bystander efficacy | 74.10 (15.47) | 73.74 (16.12) | 72.08 (20.06) | 74.08 (13.57) | 75.49 (17.35) | 75.29 (17.35) |

Note. Bystander behavior scores range from 0 to 49; higher scores indicate more bystander behavior. Bystander efficacy scores range from 0 to 100; higher scores indicate greater efficacy.

Results

Effects of TakeCARE

Again, students were recruited from the classes of four instructors, and it is possible that the data were correlated within instructors. Thus, we again performed our analyses twice: once using a mixed effects model with participants nested within instructors, and once using standard ANCOVA. Again, results from both models were virtually identical, and all significant effects in one were significant in the other. Below we present the results from the standard ANCOVA models, since these results are slightly more conservative than the results from the mixed effects models.

An ANCOVA, using the same covariates as those in Study 1 except for site (Study 2 involved only one site), showed that participants who viewed TakeCARE reported more bystander behavior during the follow-up period (adjusted M = 39.39, SE = 2.18) than did participants in the control condition (adjusted M = 33.08, SE = 2.26), F(1, 172) = 4.03, p = .046, partial η² = .023 (hypothesis 1). Paired-sample t-tests showed that bystander behavior did not change from baseline to follow-up for participants in the control condition, t(86) = .14, p = .89, but it increased for participants who viewed TakeCARE, t(92) = 2.89, p = .005 (see Table 4).
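The ANCOVA logic can be sketched as a comparison of two nested least-squares models: one containing only the covariates and one that adds a treatment indicator. The F for condition is the drop in residual sum of squares per added parameter, divided by the full model’s mean squared error. This is a minimal NumPy sketch under stated assumptions: the function name is hypothetical, the data are synthetic, a single baseline covariate stands in for the full Study 1 covariate set (not listed in this section), and the nesting of participants within instructors is ignored.

```python
import numpy as np


def ancova_condition_f(y, condition, covariates):
    """F test for a two-level condition effect, adjusting for covariates.

    Compares a reduced model (intercept + covariates) with a full model that
    adds a 0/1 condition indicator, using ordinary least squares.
    Returns (F, df_effect, df_error).
    """
    y = np.asarray(y, dtype=float)
    n = y.shape[0]
    covs = np.asarray(covariates, dtype=float).reshape(n, -1)
    cond = np.asarray(condition, dtype=float).reshape(n, 1)
    reduced = np.hstack([np.ones((n, 1)), covs])
    full = np.hstack([reduced, cond])

    def rss(design):
        # Residual sum of squares from an OLS fit of y on the design matrix.
        beta, *_ = np.linalg.lstsq(design, y, rcond=None)
        resid = y - design @ beta
        return float(resid @ resid)

    rss_reduced, rss_full = rss(reduced), rss(full)
    df_effect = full.shape[1] - reduced.shape[1]  # 1 for a two-arm trial
    df_error = n - full.shape[1]
    f_stat = ((rss_reduced - rss_full) / df_effect) / (rss_full / df_error)
    return f_stat, df_effect, df_error


# Synthetic illustration only (assumed data, not the study data).
rng = np.random.default_rng(1)
n = 180
condition = rng.integers(0, 2, n)   # 1 = TakeCARE, 0 = control
baseline = rng.normal(32, 20, n)    # hypothetical pre-video bystander behavior
follow_up = 5 + 0.8 * baseline + 6 * condition + rng.normal(0, 15, n)
f_stat, df1, df2 = ancova_condition_f(follow_up, condition, baseline)
print(f"F({df1}, {df2}) = {f_stat:.2f}")
```

With more covariates, each adds one column to both designs and removes one error degree of freedom, which is why the reported error df is smaller than the number of completers.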

Mean efficacy during the follow-up period (the average of efficacy at post-video and at follow-up) was higher for participants who viewed TakeCARE (adjusted M = 75.30, SE = .92) than for participants in the control condition (adjusted M = 72.62, SE = .94), F(1, 201) = 4.15, p = .043, partial η² = .020 (hypothesis 2).
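Partial η² for an effect can be recovered from a reported F and its degrees of freedom, because partial η² = SS_effect / (SS_effect + SS_error) = (F × df_effect) / (F × df_effect + df_error). Applied to the two F tests reported above, this identity gives values of about .023 and .020, that is, roughly 2% of the variance remaining after the covariates are partialled out. The function name below is illustrative.

```python
def partial_eta_squared(f_stat: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F ratio: SS_effect / (SS_effect + SS_error)."""
    return (f_stat * df_effect) / (f_stat * df_effect + df_error)


behavior = partial_eta_squared(4.03, 1, 172)   # bystander behavior ANCOVA
efficacy = partial_eta_squared(4.15, 1, 201)   # bystander efficacy ANCOVA
print(round(behavior, 3), round(efficacy, 3))  # 0.023 0.02
```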

Relation between Efficacy and Bystander Behavior and Tests for Mediation

Average efficacy over the two-month follow-up period was not correlated with bystander behavior, r(172) = .10, p = .20; post-video efficacy was not correlated with bystander behavior, but efficacy at follow-up was (p = .05) (hypothesis 3). Nor was average efficacy related to bystander behavior in either experimental condition when examined separately (ps > .19). Thus, the path between efficacy and bystander behavior was not statistically significant in the mediation model, nor was the mediated pathway significant, a*b = .34, 95% CI [-.21, 1.88]. The same pattern emerged when post-video efficacy was used as the mediator variable (hypothesis 4).
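A confidence interval for an indirect effect a*b is commonly obtained with a percentile bootstrap: resample participants with replacement, re-estimate a (condition to efficacy) and b (efficacy to bystander behavior, controlling for condition) in each resample, and take the 2.5th and 97.5th percentiles of the products. An interval that includes zero, as the one reported here does, indicates a nonsignificant mediated pathway. This section does not specify the exact procedure or covariates used, so the sketch below assumes a plain percentile bootstrap without covariates; the function names and synthetic data are illustrative only.

```python
import numpy as np


def indirect_effect(x, m, y):
    """a*b, with a from M ~ X and b from Y ~ X + M (OLS)."""
    ones = np.ones(x.shape[0])
    a = np.linalg.lstsq(np.column_stack([ones, x]), m, rcond=None)[0][1]
    b = np.linalg.lstsq(np.column_stack([ones, x, m]), y, rcond=None)[0][2]
    return a * b


def bootstrap_indirect_ci(x, m, y, n_boot=2000, level=0.95, seed=0):
    """Point estimate and percentile-bootstrap CI for the indirect effect."""
    x, m, y = (np.asarray(v, dtype=float) for v in (x, m, y))
    rng = np.random.default_rng(seed)
    n = x.shape[0]
    estimates = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, n)  # resample participants with replacement
        estimates[i] = indirect_effect(x[idx], m[idx], y[idx])
    tail = 100 * (1 - level) / 2
    lower, upper = np.percentile(estimates, [tail, 100 - tail])
    return indirect_effect(x, m, y), (lower, upper)


# Synthetic illustration only (assumed data with a built-in mediated path).
rng = np.random.default_rng(42)
n = 200
x = rng.integers(0, 2, n).astype(float)          # 1 = TakeCARE, 0 = control
m = 1.0 * x + rng.normal(0, 1, n)                # hypothetical efficacy
y = 1.0 * m + 0.5 * x + rng.normal(0, 1, n)      # hypothetical bystander behavior
ab, ci = bootstrap_indirect_ci(x, m, y)
print(f"a*b = {ab:.2f}, 95% CI [{ci[0]:.2f}, {ci[1]:.2f}]")
```

Because the bootstrap distribution of a product of coefficients is often skewed, percentile limits need not be symmetric around the point estimate.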

Tests for Moderation

Again using the same approach as in Study 1, we examined whether participant sex moderated the effects of TakeCARE on bystander behavior or efficacy; no moderating effects were observed (ps > .16).

For consumer satisfaction, the means of the four items (rated on a 1–5 scale) in the TakeCARE condition were: “Did you like the video?” M = 2.88, SD = .91; “Did you learn anything new?” M = 2.90, SD = 1.06; “Has the video been helpful to you?” M = 2.67, SD = 1.05; and “Do you think the video would be helpful to your friends?” M = 2.91, SD = 1.01. We combined these four items into a scale of overall consumer satisfaction (coefficient α = .87). An ANCOVA indicated that consumer satisfaction did not differ across the TakeCARE and control conditions (p = .50); consumer satisfaction also did not predict bystander behavior or efficacy, and it did not moderate the effect of TakeCARE on bystander behavior or efficacy (ps > .27).
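Coefficient α for a k-item scale is α = k/(k − 1) × (1 − Σ var(item_i) / var(total)), where var(total) is the variance of the summed score. A minimal sketch follows; the function name is hypothetical, rows are respondents and columns are items, and the ratings are invented for illustration rather than taken from the study.

```python
import numpy as np


def cronbach_alpha(items) -> float:
    """Coefficient alpha for a respondents x items matrix of scores."""
    scores = np.asarray(items, dtype=float)
    k = scores.shape[1]
    item_variances = scores.var(axis=0, ddof=1)      # variance of each item
    total_variance = scores.sum(axis=1).var(ddof=1)  # variance of the scale total
    return k / (k - 1) * (1 - item_variances.sum() / total_variance)


# Hypothetical 1-5 ratings on four satisfaction items (one row per respondent).
ratings = [
    [3, 3, 2, 3],
    [4, 4, 4, 5],
    [2, 2, 1, 2],
    [3, 4, 3, 3],
    [1, 2, 2, 1],
]
print(f"alpha = {cronbach_alpha(ratings):.2f}")
```

Alpha rises as the items covary more strongly relative to their individual variances; perfectly parallel items yield 1 and mutually uncorrelated items yield 0.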

Discussion

This research replicates and extends findings on TakeCARE (Kleinsasser et al., 2014), a bystander program designed to help prevent sexual violence on college campuses. Consistent with our first two hypotheses, students who viewed TakeCARE reported engaging in more bystander behavior on behalf of friends, and greater feelings of efficacy for engaging in bystander behavior, than did students in the control group. These results emerged in a sample of students at two different universities (Study 1), and in a single-university sample of first-year students (Study 2). Thus, there are now three randomized controlled trials (two reported in this manuscript and one reported in Kleinsasser et al.) indicating that TakeCARE can exert a positive influence on college student bystander behavior. Moreover, this video bystander program eliminates significant potential barriers to campus-wide implementation of traditional bystander programs, which typically are offered by trained facilitators in an in-person, small-group format and carry costs to train, supervise, and maintain a staff of facilitators. We thus view these findings as extremely encouraging.

Consistent with our third hypothesis, efficacy for intervening was related to bystander behavior in Study 1. Similarly, consistent with our fourth hypothesis, TakeCARE’s effects on bystander behavior were partially mediated by efficacy for intervening in Study 1. However, neither of these effects emerged in Study 2. This pattern of results suggests that efficacy may play a role in the effects of TakeCARE on bystander behavior, but indicates that other processes are operating as well. This research did not evaluate TakeCARE’s effects on other processes, but plausible hypotheses might include increased awareness of the vulnerability of friends to unwanted sexual experiences, decreased fear of adverse consequences for saying or doing something to help protect friends, and increased sense of responsibility for acting to help friends.

In both studies, participants who viewed TakeCARE reported engaging in more bystander behavior at the follow-up assessment than did participants in the control condition. However, the pattern of change in bystander behavior over time differed across the two studies. Specifically, in Study 1, the level of bystander behavior remained stable from baseline to follow-up for students in the TakeCARE condition, while it decreased in the control group. In Study 2, by contrast, the level of bystander behavior increased from baseline to follow-up for students in the TakeCARE condition, but remained constant for those in the control condition. Regarding the pattern of results in Study 1, it is important to note that others have documented declines in bystander behavior over time in prospective studies (e.g., Kleinsasser et al., 2014; Moynihan et al., 2015). Thus, preventing a decline in bystander behavior is still a positive effect, particularly when a decline is observed in a control group. However, the different pattern of results across studies is still curious.

One hypothesis for the different patterns of results in Study 1 and Study 2 involves the timing of the administration (e.g., first semester of the first year of college for students in Study 2), and the idea that aspects of campus environments may actually discourage responsive bystander behavior over time. That is, after students spend more time on campus and become affiliated with certain campus groups, bystander behavior may decrease because others discourage it. This hypothesis is consistent with anecdotal data obtained from members of campus fraternities and sororities, who have told us that intruding on members’ social interactions (some of which might lead to sexual coercion or assault) is viewed negatively and is actively discouraged. However, the power of situational forces to inhibit responsive bystander behavior has not been systematically investigated in research on campus bystander programs. It is also possible that first-semester, first-year students do not change their partying behavior as the semester progresses, and therefore encounter a similar number of opportunities to act as a responsive bystander at the beginning and end of the semester, whereas older and presumably more mature students tend to party less over the course of a semester and therefore encounter fewer such opportunities. Because most measures of bystander behavior conflate the opportunity to act as a bystander with actual bystander behavior (Jouriles, Kleinsasser, Rosenfield, & McDonald, 2014), declines in bystander behavior might be expected if opportunities diminish. Regardless of the reason for the different pattern of results across Studies 1 and 2, the different pattern suggests that the timing and context in which bystander programs are administered may have implications for program effects.

Limitations

Several limitations of the present research should be acknowledged. The follow-up periods in both of these studies were short, and it is unknown whether TakeCARE’s effects lasted beyond those periods. It might be argued that short-term effects on bystander behavior are still meaningful for campus efforts to reduce rates of sexual violence (short-term effects may still reduce a significant number of incidents of sexual violence, particularly on a large campus), and demonstrating that this can be achieved with a brief video program is very encouraging. However, at this point we do not know if the program leads to stable, longer-term behavioral changes. There were also some limitations with the two samples, which make it unclear how generalizable the effects of TakeCARE might be. Specifically, both samples were predominantly White. Research on the effectiveness of bystander programs across different racial/ethnic groups is limited, but available data suggest possible complex interactions between bystander program and race/ethnicity in predicting outcomes (Brown, Banyard, & Moynihan, 2014). Specifically, there may be cultural differences in the perceived acceptability of intervening in friends’ relationships, which raises the possibility that the effects of TakeCARE may not generalize widely. In addition, in both samples, students chose to participate in this study over completing an alternative assignment. It seems reasonable to think that students who elect to participate in a study that evaluates video programs, as opposed to another assignment, may be more responsive to the video program’s message.

The self-report measure of bystander behavior utilized in this study also has limitations. For example, it is sensitive only to the occurrence (presence/absence), and not to the frequency or quality of different types of bystander behavior. A more comprehensive assessment of bystander behavior, especially one that utilizes methods that go beyond self-report (Jouriles, Kleinsasser, Rosenfield, & McDonald, 2014; Parrott et al., 2012), might bolster confidence in the results. It would also be worthwhile to expand the measurement of outcomes. Some evaluations of bystander programs have found reductions in participants’ reports of their own sexual or physical violence victimization and perpetration (e.g., Gidycz, Orchowski, & Berkowitz, 2011; Miller et al., 2013). This would be an especially valuable outcome to document, given that the ultimate goal of bystander programs is to reduce rates of violence. Other possible outcomes that would be valuable to assess include risk awareness for violence on college campuses and possible iatrogenic effects of bystander interventions.

It should also be emphasized that students viewed TakeCARE in a monitored computer lab. This method of administration was used to help ensure that students actually viewed the video. It may also have prompted students to take the viewing of the video more seriously than they otherwise would. Although this method of administration has some potential advantages, it may not be as cost-effective as other methods, and it is possible that the positive effects of TakeCARE are yoked to this particular method of administration. That is, it is not clear if TakeCARE would still have the same positive effects if students viewed TakeCARE in a group setting (e.g., a classroom), or if students were sent a link to view TakeCARE on their own. The method of administration is an important issue to consider prior to advocating for widespread dissemination of TakeCARE as an effective bystander program.

Clinical and Policy Implications

Prominent organizations have recommended bystander programs for preventing sexual violence on college campuses (American College Health Association, 2011; Campus Sexual Violence Elimination Act, 2013). However, most empirically supported bystander programs require considerable resources to disseminate widely, especially at large college campuses. The ease of administering and distributing a video program allows for a greater number of individuals to be reached, potentially resulting in more widespread and/or intensified effects on a college campus. This study provides additional evidence for the efficacy of TakeCARE, a video bystander program designed to help prevent sexual violence on college campuses. As noted above, there are now three randomized controlled trials indicating that TakeCARE can have a positive effect on college student bystander behavior. However, due to some of the limitations noted above, caution still needs to be exercised in the dissemination and use of TakeCARE.

Research Implications

This evaluation of TakeCARE can be viewed as a promising step in the development of effective programs to promote responsive bystander behavior on college campuses. However, there is still much to be learned. For example, attention to the above-mentioned limitations will be important, particularly those involving the duration and generalizability of effects, and the importance of the method of administration. It will also be important to develop a more comprehensive knowledge base on the contexts in which bystander programs, such as TakeCARE, are likely to be most effective. For example, are such programs most effective when offered before students arrive on campus (e.g., during orientation sessions for new students), or after students have had some time to acclimate to life as a college student? Are they more effective when offered as part of a required class, or as part of some other campus experience? Theoretically, a wide variety of campus variables may influence program effects on bystander behavior (e.g., students’ connectedness to campus), and a more complete understanding of these possible moderators can contribute to our understanding of bystander programs.

In addition, a greater understanding of the processes by which TakeCARE influences bystander behavior can be key in replicating and building upon program effects in future prevention research. For example, one difference between TakeCARE and other bystander programs is TakeCARE’s emphasis on “friends taking care of friends.” Yet, it is not clear from this research how important this emphasis is for obtaining positive outcomes. Similarly, one of the processes by which TakeCARE is theorized to change bystander behavior is by increasing student efficacy for engaging in bystander behavior. However, efficacy only accounted for 25% of the change in bystander behavior in Study 1, and it was not a significant mediator of TakeCARE effects on bystander behavior in Study 2. Thus, other processes appear to be operating in addition to efficacy. Moreover, the potential for situational factors (e.g., attitudes of other students) to support or undermine both efficacy and bystander behavior needs to be examined. A more comprehensive understanding of processes and effects of situational factors will contribute to theory and research on determinants of bystander behavior.

One of the questions we asked ourselves in the development of TakeCARE was: How can we maximize the effects of this video on students? Even though TakeCARE appears to have a positive effect on bystander behavior, there still are likely to be ways to increase its effectiveness. For example, there may be advantages to moving beyond passive video viewing to more interactive student involvement. Such involvement may strengthen deep processing of content, helping participants retain and reinforce the information presented (Ritterfeld & Weber, 2006). As another example, modifications to TakeCARE to improve student satisfaction with the video might result in a greater likelihood that universities would adopt such a program, and an increased likelihood of social diffusion, such as students talking with others about the video.

Concluding Remarks

In conclusion, this research demonstrates the effective use of a video bystander program in increasing responsive bystander behavior on college campuses. Despite being only 24 minutes in length, TakeCARE influenced bystander behavior for friends in the one- to two-month period following its viewing. Although the present results point to the potential utility of a brief video bystander intervention, this should not imply that such a program is going to solve the problem of sexual violence on college campuses. Nor should it imply that video programs should supplant existing programs with demonstrated efficacy. In selecting a program to implement on college campuses, administrators must determine which types of programs best fit their goals and their campus community. Sexual assault on college campuses is a serious, longstanding, and complex problem with multiple determinants. Multiple types and levels of prevention and intervention programming, including efforts aimed at potential or actual perpetrators and victims, as well as bystanders, are likely necessary to combat sexual violence effectively.

Acknowledgments

This research was supported by a grant from the National Institutes of Health R21 HD075585.

Contributor Information

Ernest N. Jouriles, Department of Psychology, Southern Methodist University.

Renee McDonald, Department of Psychology, Southern Methodist University.

David Rosenfield, Department of Psychology, Southern Methodist University.

Nicole Levy, Department of Psychology, Southern Methodist University.

Kelli Sargent, Department of Psychology, Southern Methodist University.

Christina Caiozzo, Department of Psychology, Marquette University.

John H. Grych, Department of Psychology, Marquette University.

References

- American College Health Association. Position statement on preventing sexual violence on college and university campuses. Hanover, MD: 2011. Retrieved from http://www.acha.org/Publications/docs/ACHA_Statement_Preventing_Sexual_Violence_Dec2011.pdf.

- Anderson LA, Whiston SC. Sexual assault education programs: A meta-analytic examination of their effectiveness. Psychology of Women Quarterly. 2005;29:374–388. doi: 10.1111/j.1471-6402.2005.00237.x.

- Baker RB, Sommers MS. Relationship of genital injuries and age in adolescent and young adult rape survivors. Journal of Obstetric, Gynecologic, & Neonatal Nursing. 2008;37:282–289. doi: 10.1111/j.1552-6909.2008.00239.x.

- Banyard VL, Cross C. Consequences of teen dating violence: Understanding intervening variables in ecological context. Violence Against Women. 2008;14:998–1013. doi: 10.1177/1077801208322058.

- Banyard VL, Moynihan MM, Cares AC, Warner R. How do we know if it works? Measuring outcomes in bystander-focused abuse prevention on campuses. Psychology of Violence. 2014;4:101–115. doi: 10.1037/a0033470.

- Banyard VL, Plante EG, Moynihan MM. Rape prevention through bystander education: Bringing a broader community perspective to sexual violence prevention. Journal of Community Psychology. 2005;32:61–79. doi: 10.1002/jcop.10078.

- Banyard VL, Ward S, Cohn ES, Plante EG, Moorhead C, Walsh W. Unwanted sexual contact on campus: A comparison of women’s and men’s experiences. Violence and Victims. 2007;22:52–70. doi: 10.1891/vv-v22i1a004.

- Brown AL, Banyard VL, Moynihan MM. College students as helpful bystanders against sexual violence: Gender, race, and year in college moderate the impact of perceived peer norms. Psychology of Women Quarterly. 2014;38:350–362.

- Burn SM. A situational model of sexual assault prevention through bystander intervention. Sex Roles. 2009;60:779–792. doi: 10.1007/s11199-008-9581-5.

- Campbell R, Dworkin E, Cabral G. An ecological model of the impact of sexual assault on women’s mental health. Trauma, Violence, & Abuse. 2009;10:225–246. doi: 10.1177/1524838009334456.

- Campus Sexual Violence Elimination Act in Violence Against Women Reauthorization Act of 2013. Public Law 113–4. Available at: http://www.gpo.gov/fdsys/pkg/PLAW-113publ4/pdf/PLAW-113publ4.pdf.

- Coker AL, Fisher BS, Bush HM, Swan SC, Williams CM, Clear ER, DeGue S. Evaluation of the Green Dot bystander intervention to reduce interpersonal violence among college students across three campuses. Violence Against Women. 2014:1–21. doi: 10.1177/1077801214545284.

- Cullum J, O’Grady M, Sandoval P, Armeli S, Tennen H. Ignoring norms with a little help from my friends: Social support reduces normative influence on drinking behavior. Journal of Social and Clinical Psychology. 2013;32:17–33. doi: 10.1521/jscp.2013.32.1.17.

- Finkelhor D, Ormrod RK, Turner HA. Poly-victimization: A neglected component in child victimization. Child Abuse & Neglect. 2007;31:7–26. doi: 10.1016/j.chiabu.2006.06.008.

- Fisher BS, Cullen FT, Turner MG. The sexual victimization of college women (Report No. 182369) Washington DC: National Institute of Justice. Retrieved from the National Criminal Justice Reference Service; 2000. http://www.ncjrs.gov/txtfiles1/nij/182369.txt.

- Fitzgerald A, Fitzgerald N, Aherne C. Do peers matter? A review of peer and/or friends’ influence on physical activity among American adolescents. Journal of Adolescence. 2012;35:941–958. doi: 10.1016/j.adolescence.2012.01.002.

- Foubert JD, Langhinrichsen-Rohling J, Brasfield H, Hill B. Effects of a rape awareness program on college women: Increasing bystander efficacy and willingness to intervene. Journal of Community Psychology. 2010;38:813–827. doi: 10.1002/jcop.20397.

- Gidycz CA, Orchowski LM, Berkowitz AD. Preventing sexual aggression among college men: An evaluation of a social norms and bystander intervention program. Violence Against Women. 2011;17:720–742. doi: 10.1177/1077801211409727.

- Jouriles EN, Kleinsasser A, Rosenfield D, McDonald R. Measuring bystander behavior to prevent sexual violence: Moving beyond self reports. Psychology of Violence. 2014 doi: 10.1037/a0038230. Advance online publication.

- Karlin BE, Cross G. From the laboratory to the therapy room: National dissemination and implementation of evidence-based psychotherapies in the US Department of Veterans Affairs Health Care System. American Psychologist. 2014;69:19–33. doi: 10.1037/a0033888.

- Katz J, Moore J. Bystander education training for campus sexual assault prevention: an initial meta-analysis. Violence and Victims. 2013;28:1054–1067. doi: 10.1037/a0033888.

- Kleinsasser A, Jouriles EN, McDonald R, Rosenfield D. An online bystander intervention program for the prevention of sexual violence. Psychology of Violence. 2014;5:227–235. doi: 10.1037/a0037393.

- Krebs CP, Lindquist CH, Warner TD, Fisher BS, Martin SL. College women’s experiences with physically forced, alcohol-or other drug-enabled, and drug-facilitated sexual assault before and since entering college. Journal of American College Health. 2009;57:639–649. doi: 10.3200/JACH.57.6.639-649.

- Lau RR, Quadrel MJ, Hartman KA. Development and change of young adults’ preventive health beliefs and behavior: influence from parents and peers. Journal of Health and Social Behavior. 1990;31:240–259.

- Levine M, Cassidy C, Brazier G, Reicher S. Self-categorization and bystander non-intervention: Two experimental studies. Journal of Applied Social Psychology. 2002;32:1452–1463. doi: 10.1111/j.1559-1816.2002.tb01446.x.

- Littleton H, Grills-Taquechel A, Axsom D. Impaired and incapacitated rape victims: Assault characteristics and post-assault experiences. Violence and Victims. 2009;24:439–457. doi: 10.1891/0886-6708.24.4.439.

- Macy RJ. A research agenda for sexual revictimization: Priority areas and innovative statistical methods. Violence Against Women. 2008;14:1128–1147. doi: 10.1177/1077801208322701.

- Miller E, Tancredi DJ, McCauley HL, Decker MR, Virata MCD, Anderson HA, Silverman JG. One-year follow-up of a coach-delivered dating violence prevention program: a cluster randomized controlled trial. American Journal of Preventive Medicine. 2013;45:108–112. doi: 10.1016/j.amepre.2013.03.007.

- Morrison S, Hardison J, Mathew A, O’Neil J. An evidence-based review of sexual assault preventive intervention programs. Washington DC: U.S. Department of Justice. Retrieved from the National Criminal Justice Reference Service; 2004. Report No. 207262. https://www.ncjrs.gov/pdffiles1/nij/grants/207262.pdf.

- Moynihan MM, Banyard VL, Cares AC, Potter SJ, Williams LM, Stapleton JG. Encouraging responses in sexual and relationship violence prevention: What program effects remain 1 year later? Journal of Interpersonal Violence. 2015;30:110–132. doi: 10.1177/0886260514532719.

- Parrott DJ, Tharp AT, Swartout KM, Miller CA, Hall GCN, George WH. Validity for an integrated laboratory analogue of sexual aggression and bystander intervention. Aggressive Behavior. 2012;38:309–321. doi: 10.1002/ab.21429.

- Potter RH, Krider JE, McMahon PM. Examining elements of campus sexual violence policies: Is deterrence or health promotion favored? Violence Against Women. 2000;6:1345–1362. doi: 10.1177/10778010022183686.

- Ritterfeld U, Weber R. Video games for entertainment and education. In: Playing video games: Motives, responses, and consequences. Mahwah, NJ: Lawrence Erlbaum Associates; 2006. pp. 399–413.

- Tabachnick BG, Fidell LS. Using multivariate statistics. 6th ed. Boston: Pearson Education Inc; 2013. Discriminant analysis; pp. 377–438.