Abstract

Expressing one's preference via choice can be rewarding, particularly when decisions are made voluntarily rather than being forced. An open question is whether engaging in choices involving rewards recruits distinct neural systems as a function of sensitivity to reward. Reward sensitivity is a trait partly influenced by the mesolimbic dopamine system, which can shape an individual's neural and behavioral responses to reward cues. Here, we investigated how reward sensitivity contributes to neural activity associated with free and forced choices. Participants performed a simple decision-making task in the scanner consisting of free- and forced-choice trials. Each trial presented two cues predictive of points or information, both of which led to monetary reward at the end of the task. In free-choice trials, participants chose between different types of reward cues (e.g., points vs. information), whereas forced-choice trials required them to choose within a single type of reward cue (e.g., information vs. information, or points vs. points). We found enhanced ventrolateral prefrontal cortex (VLPFC) activation during free choice compared to forced choice in individuals with high reward sensitivity scores. Next, using the VLPFC as a seed, we conducted a psychophysiological interaction (PPI) analysis to identify brain regions whose connectivity with the VLPFC increased during free choice. This PPI analysis of free vs. forced choice revealed increased VLPFC connectivity with the posterior cingulate cortex and precentral gyrus in reward sensitive individuals. These findings suggest that reward sensitivity may recruit attentional control processes during free choice, potentially supporting goal-directed behavior and action selection.

Keywords: choice, perceived control, striatum, cognitive control

Introduction

Reward sensitivity refers to individual responsiveness to rewards and the positive affect derived from engaging in reinforcing behaviors (Gray, 1987). Sensitivity to reward is influenced by the mesocorticolimbic system (Di Chiara et al., 2004; Kelley et al., 2005) and by psychological traits (Cohen et al., 2005), and it varies considerably across individuals (Carver and White, 1994). Upon encountering stimuli with appetitive properties (e.g., food or drugs), reward sensitivity may predict responses aimed at obtaining them, reflected in heightened activation of reward-related brain regions (Volkow et al., 2002; Beaver et al., 2006; Carter et al., 2009). For instance, individuals with high reward sensitivity are more likely to experience greater cravings (Franken and Muris, 2005), recruit reward-related brain activity (Beaver et al., 2006; Hahn et al., 2009), and exhibit appetitive responses toward cues with greater reinforcement value (Volkow et al., 2002; Davis et al., 2004). These findings highlight how reinforcers tend to promote approach behavior that leads to the seeking and consumption of incentives, effects that are more pronounced in humans with greater reward sensitivity (Stephens et al., 2010).

An important way of understanding responses to reward cues is to compare decisions made voluntarily by the individual (i.e., free choice) with predetermined choices (i.e., forced choice). Expressing one's preference via choice can be rewarding, particularly when decisions are made freely rather than being forced (Lieberman et al., 2001; Sharot et al., 2009, 2010). For instance, participants who were prompted to smoke on a predetermined schedule (forced choice) experienced significantly weaker rewarding effects of smoking than those who were free to smoke on their own schedule (free choice) (Catley and Grobe, 2008). In such studies, free-choice behavior is compared with an experimenter-determined forced-choice procedure, and the resulting outcomes are measured. These studies converge on the idea that exerting control via choice enhances (a) motivation and performance (Ryan and Deci, 2000a; Patall, 2013) and (b) positive feelings and neural activity in reward-related brain regions such as the striatum when anticipating an opportunity to exert control (Leotti and Delgado, 2011, 2014). Importantly, these findings suggest that having the opportunity to choose (free choice), relative to being forced to choose (forced choice), between reward options may engage distinct behavioral and neural patterns in reward sensitive individuals. Whereas previous work examining the benefits of choice has focused on neural responses during the anticipation of choice (Sharot et al., 2009, 2010; Leotti and Delgado, 2011, 2014), the present study investigates the period of choice itself and asks whether engaging in choices involving rewards recruits distinct neural systems as a function of reward sensitivity.

Implementing control via choice also augments general motivation and performance (Ryan and Deci, 2000a; Patall, 2013), and prior research suggests that the ventrolateral prefrontal cortex (VLPFC) may be sensitive to manipulations of motivation and performance (Taylor et al., 2004; Baxter et al., 2009). The VLPFC receives input from the orbitofrontal cortex and from subcortical areas such as the midbrain and amygdala (Barbas and De Olmos, 1990) that convey motivational and affective information (Tremblay and Schultz, 1999; Paton et al., 2006). The VLPFC has also been associated with cognitive control processes that guide access to relevant information (Petrides et al., 1995; Duncan and Owen, 2000; Petrides, 2002; Bunge et al., 2004; Badre and Wagner, 2007) and is more strongly activated in conditions that require goal-directed behavior (Sakagami and Pan, 2007). Interestingly, the VLPFC interacts with motor regions to orient attention (Corbetta and Shulman, 2002), suggesting that increased VLPFC connectivity might be important for directing attention to relevant stimuli in reward sensitive individuals. Altogether, these findings suggest that responses to choice may vary with individual traits such as reward sensitivity, which can be tracked by the VLPFC (Mullette-Gillman et al., 2011).

Here, we investigated how reward sensitivity contributes to neural responses associated with free and forced choices. For instance, how does reward sensitivity interact with forced choices within a category (e.g., broccoli vs. Brussels sprouts) versus free choices across categories (e.g., a vegetable vs. a snack)? Will reward sensitive individuals demonstrate different patterns of brain activity during free relative to forced choices? In this study, each trial presented participants with two cues that were predictive of distinct classes of outcomes. Specifically, participants could earn points or information, both of which were tied to a monetary reward at the end of the experiment (Smith et al., 2016b). We presented these cues in two distinct formats. On free-choice trials, the cues were mixed (i.e., one predicted points and the other information), allowing participants to freely express their preference between points and information. On forced-choice trials, both cues were predictive of points or both were predictive of information, forcing the participant to choose within the given option (e.g., between two information cues) and thus limiting their freedom to choose across cue types. The goal of the task was to choose the option that maximized the monetary reward obtained at the end. We tested two key hypotheses. First, based on prior studies demonstrating striatal involvement in the value of choice, we hypothesized that reward sensitivity would be linked with reward signals in the striatum during free choice. Second, based on the motivational control literature, we expected the VLPFC to modulate reward-related circuitry during free-choice trials in reward sensitive individuals.

Methods

Participants

Thirty-three healthy subjects participated in the current study (mean age = 24, range: 18–39, 18 females). Written informed consent was obtained from each subject for a protocol approved by the Institutional Review Board of Rutgers University.

Stimuli and task

In an experiment preceding this task (Smith et al., 2016b), subjects performed a card task that involved learning which colors were associated with points or with information (Figure 1). A points trial presented three cold colors, each associated with a point value (1, 2, or 3). An information trial presented three warm colors, each probabilistically linked with a letter (D, K, or X). Upon selecting a color on each trial, participants received feedback indicating the value (either points or a letter) associated with that color. Participants performed 36 trials of each type (points and information). Both trial types were important because (1) subjects needed to accrue enough points to play a bonus game at the end to earn extra money, and (2) the bonus game presented a letter on each trial, and subjects needed to answer correctly to win money. This task allowed participants to develop preferences for either points or information as a means to acquire reward. Further methodological details and a discussion of results are provided in Smith et al. (2016b).

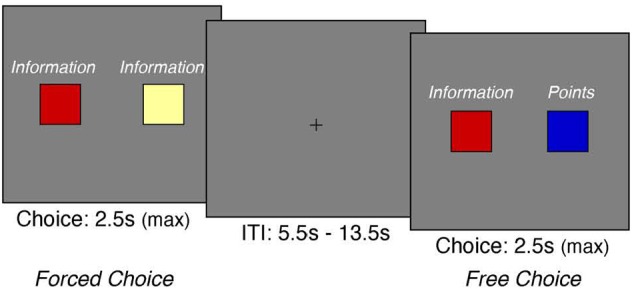

Figure 1.

Experimental Task Design. On each trial, we presented participants with two cues that were predictive of points (cold colors) and/or information (warm colors), both of which were critical for receiving monetary reward. We presented these cues in two distinct formats. During free-choice trials, the cues were mixed, allowing participants to freely express their preference between points and information. On forced-choice trials, both cues were predictive of points or both were predictive of information, forcing the participant to choose the given option. (Note that the words “information” and “points” were not presented to the participants during the task; they are shown here for illustrative purposes.)

Subsequently, on each trial of the choice task, we assessed preferences between points and information by asking participants to make a series of free and forced choices (Figure 1). On free-choice trials, participants chose freely between a cue that delivered points and a cue that delivered information. On forced-choice trials, participants were presented with two cues that both delivered points or both delivered information. This procedure prevented participants from choosing between points and information, effectively forcing them to choose the presented option (cf. Lin et al., 2012). The goal of the task was to maximize the monetary reward received at the end of the task. Each trial was presented for 2.5 s, during which participants could make a response. Trials were separated by a random intertrial interval ranging from 5.5 to 13.5 s. To ensure that participants cared about each decision, one randomly chosen trial from each subject's responses was presented at the end of the task with its associated monetary reward. The task was programmed using Psychophysics Toolbox 3 in MATLAB (Brainard, 1997; Kleiner et al., 2007).
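The trial structure of the choice task can be sketched as a simple schedule generator. This is a minimal Python illustration, not the original Psychophysics Toolbox code: the trial-type labels, the equal number of trials per type (`n_per_type`), and uniform sampling of the intertrial interval are assumptions; only the 2.5 s response window, the 5.5 to 13.5 s ITI bounds, and the random selection of one trial for payment come from the text.

```python
import random

TRIAL_DURATION = 2.5     # s, response window reported in the text
ITI_RANGE = (5.5, 13.5)  # s, reported ITI bounds; uniform sampling is an assumption

# Cue pairs per trial type (hypothetical labels, not the original task code)
CUE_PAIRS = {
    "free": ("points", "information"),
    "forced_points": ("points", "points"),
    "forced_information": ("information", "information"),
}


def build_schedule(n_per_type, seed=0):
    """Return a shuffled list of trials with onset (s), type, and cue pair."""
    rng = random.Random(seed)
    trial_types = [t for t in CUE_PAIRS for _ in range(n_per_type)]
    rng.shuffle(trial_types)
    schedule, onset = [], 0.0
    for trial_type in trial_types:
        schedule.append({"onset": onset, "type": trial_type,
                         "cues": CUE_PAIRS[trial_type]})
        # Next onset = this trial's response window + a jittered ITI
        onset += TRIAL_DURATION + rng.uniform(*ITI_RANGE)
    return schedule


def pick_payment_trial(schedule, seed=1):
    """Draw one trial at random to determine the monetary payout."""
    return random.Random(seed).choice(schedule)
```

Drawing a single trial for payment, rather than summing over trials, gives every decision the same expected stake, which is the rationale the text gives for the procedure.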

Temporal experience of pleasure scale (TEPS)

To probe individuals' sensitivity to rewards, we administered the Temporal Experience of Pleasure Scale (TEPS; Gard et al., 2006). The scale comprises two subscales measuring anticipatory (10 items) and consummatory (8 items) pleasure. Anticipatory pleasure reflects positive feelings derived from the anticipation of a reinforcer, whereas consummatory pleasure reflects in-the-moment feelings of enjoyment in response to pleasurable cues (Gard et al., 2006; Treadway and Zald, 2011). Based on previous literature suggesting that perceiving control via choice is rewarding (Leotti and Delgado, 2011, 2014), we focused on the consummatory subscale, because subjects were expected to experience increased feelings of enjoyment upon being presented with an opportunity for control.
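As an illustration of how TEPS subscale scores might be computed, the sketch below sums item responses per subscale. It assumes a 1 to 6 Likert response format and treats reverse keying as an optional, hypothetical parameter (`reverse_keyed`); the item-to-subscale assignment and any reverse-keyed items should be taken from Gard et al. (2006), not from this example.

```python
def score_subscale(responses, n_items, reverse_keyed=(), scale_max=6):
    """Sum Likert responses for one TEPS subscale.

    responses: item responses in subscale order (1..scale_max assumed).
    reverse_keyed: 0-based positions to reverse-score (hypothetical; check
    the published scoring key before use).
    """
    if len(responses) != n_items:
        raise ValueError(f"expected {n_items} responses, got {len(responses)}")
    total = 0
    for i, r in enumerate(responses):
        if not 1 <= r <= scale_max:
            raise ValueError(f"response {r} outside 1..{scale_max}")
        # Reverse-keyed items map 1 -> scale_max, scale_max -> 1
        total += (scale_max + 1 - r) if i in reverse_keyed else r
    return total


TEPS_A_ITEMS = 10  # anticipatory items, as stated in the text
TEPS_C_ITEMS = 8   # consummatory items, as stated in the text
```

Under these assumptions, an 8-item consummatory subscale can range from 8 to 48.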

fMRI data acquisition and preprocessing

Functional magnetic resonance imaging data were acquired on a 3T Siemens MAGNETOM Trio scanner using a 12-channel head coil at the Rutgers University Brain Imaging Center (RUBIC). Whole-brain functional images were collected using a T2*-weighted echo-planar imaging (EPI) sequence. The parameters for the functional measurement were as follows: GRAPPA with R = 2; repetition time (TR) = 2000 ms; echo time (TE) = 30 ms; flip angle = 90°; matrix size = 68 × 68; field of view (FOV) = 204 mm; voxel size = 3.0 × 3.0 × 3.0 mm; with a total of 37 slices (10% gap). High-resolution T1-weighted structural scans were collected using a magnetization-prepared rapid gradient echo (MPRAGE) sequence (TR: 1900 ms; TE: 2.52 ms; matrix 256 × 256; FOV: 256 mm; voxel size 1.0 × 1.0 × 1.0 mm; 176 slices; flip angle: 9°). B0 field maps were also obtained following the same slice prescription and voxel dimensions as the functional images (TR: 402 ms; TE1: 7.65 ms; TE2: 5.19 ms; flip angle: 60°).

Imaging data were preprocessed using Statistical Parametric Mapping software [SPM12; Wellcome Department of Cognitive Neurology, London, UK (http://www.fil.ion.ucl.ac.uk/spm/software/spm12)]. Each image was aligned to the anterior commissure-posterior commissure plane to improve registration. To correct for head motion, each time series was realigned to its first volume. Using the B0 field maps, we unwarped each dataset to remove distortions caused by susceptibility artifacts. Prior to normalization of the T1 anatomical image, the mean EPI image was coregistered to the anatomical scan. A unified segmentation-normalization was performed on the anatomical image, and the resulting transformation was used to reslice the EPI images into MNI stereotactic space using 3-mm isotropic voxels (Ashburner and Friston, 2005). Normalized images were spatially smoothed using a 4-mm full-width at half-maximum (FWHM) Gaussian kernel.

Because connectivity results are particularly vulnerable to distortion by head motion, additional motion corrections were applied using tools from FSL (FMRIB Software Library). Motion spikes were identified using two metrics computed relative to the reference volume: (1) the root-mean-square (RMS) intensity difference of each volume and (2) the mean RMS change in rotation/translation parameters. Volumes exceeding a boxplot threshold (i.e., the 75th percentile plus 1.5 times the interquartile range) were classified as motion spikes. Once identified, all spikes and the extended motion parameters (i.e., squares, temporal differences, and squared temporal differences) were removed via regression (Power et al., 2015). Next, non-brain tissue was removed using robust skull stripping with the Brain Extraction Tool (BET), and the 4D dataset was globally normalized with grand mean scaling. Low-frequency drift in the MR signal was removed using a high-pass temporal filter (Gaussian-weighted least-squares straight-line fit, with a cutoff period of 100 s).
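The spike-detection and motion-expansion steps can be sketched in plain Python. This is an illustrative reimplementation under stated assumptions, not FSL's code: the two spike metrics are taken as precomputed per-volume inputs, quartiles use linear interpolation (`statistics.quantiles` with `method="inclusive"`), a volume counts as a spike if it exceeds the threshold on either metric, and all function names are hypothetical.

```python
from statistics import quantiles


def boxplot_threshold(values):
    """75th percentile + 1.5 * interquartile range (linear interpolation)."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return q3 + 1.5 * (q3 - q1)


def find_spikes(*metrics):
    """Indices of volumes exceeding the boxplot threshold on any metric."""
    spikes = set()
    for metric in metrics:
        threshold = boxplot_threshold(metric)
        spikes.update(i for i, value in enumerate(metric) if value > threshold)
    return sorted(spikes)


def spike_regressors(spikes, n_vols):
    """One indicator column per spike: 1 at that volume, 0 elsewhere."""
    return [[1.0 if t == s else 0.0 for t in range(n_vols)] for s in spikes]


def expand_motion(params):
    """Expand per-volume realignment parameters into p, p^2, dp, dp^2.

    params: list of rows (one per volume) of six rigid-body parameters.
    The temporal difference for the first volume is set to zero.
    """
    rows, prev = [], params[0]
    for row in params:
        diff = [a - b for a, b in zip(row, prev)]
        rows.append(list(row) + [p * p for p in row] + diff + [d * d for d in diff])
        prev = row
    return rows
```

The spike columns and the 24 expanded motion columns would then enter a nuisance regression; a one-hot column per spike fits that volume exactly, so it no longer influences the remaining estimates.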

fMRI analyses

Imaging data were analyzed using FEAT (fMRI Expert Analysis Tool) in FSL version 6.0. We used a general linear model (GLM) with local autocorrelation correction (Woolrich et al., 2001). First, we generated a model to identify brain regions that showed increased BOLD signal as a function of free and forced choice conditions. Our GLM included five regressors: four modeling the two cue types (points and information) within each of the two conditions (free and forced choice), namely free choice (points), forced choice (points), free choice (information), and forced choice (information), plus one modeling missed responses. Thus, each condition (free or forced) comprised two regressors (points and information). Our main contrast of interest was free > forced choice. To test whether individual differences in reward sensitivity modulated distinct brain regions in response to free choice, individual TEPS scores were entered as a covariate in the group-level analysis.
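To make the first-level model concrete, the sketch below builds one HRF-convolved regressor from trial onsets and writes the free > forced contrast over the five regressors listed above. The double-gamma parameters are SPM-style values used here as an assumption (FEAT offers its own HRF options), the 2.5 s event duration is taken from the trial length, the 2 s TR from the acquisition parameters, and all names are hypothetical.

```python
import math


def double_gamma_hrf(t, peak=6.0, undershoot=16.0, ratio=1.0 / 6.0):
    """Difference of two gamma densities (unit scale); SPM-style defaults."""
    if t <= 0:
        return 0.0
    g1 = t ** (peak - 1) * math.exp(-t) / math.gamma(peak)
    g2 = t ** (undershoot - 1) * math.exp(-t) / math.gamma(undershoot)
    return g1 - ratio * g2


def condition_regressor(onsets, duration, n_vols, tr=2.0, dt=0.1, hrf_len=32.0):
    """Boxcar for one condition, convolved with the HRF, sampled once per TR."""
    n_fine = int(round(n_vols * tr / dt))
    boxcar = [0.0] * n_fine
    for onset in onsets:
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + duration) / dt)))
        for i in range(start, stop):
            boxcar[i] = 1.0
    # Discretized HRF kernel (Riemann sum, step dt)
    kernel = [double_gamma_hrf(k * dt) * dt for k in range(int(round(hrf_len / dt)))]
    response = [0.0] * n_fine
    for i, b in enumerate(boxcar):
        if b == 0.0:
            continue
        for k, h in enumerate(kernel):
            if i + k >= n_fine:
                break
            response[i + k] += b * h
    step = int(round(tr / dt))
    return [response[v * step] for v in range(n_vols)]


# Regressor order as listed in the text; free > forced pools over cue type
REGRESSORS = ["free_points", "forced_points", "free_info", "forced_info", "missed"]
FREE_GT_FORCED = [1, -1, 1, -1, 0]
```

Because each condition has a points and an information regressor, weighting both free regressors +1 and both forced regressors −1 tests free > forced pooled over cue type; the weights sum to zero, and missed responses receive no weight.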

Next, we used the VLPFC cluster identified by the main contrast of interest (free > forced) as the seed for a psychophysiological interaction (PPI) analysis (Friston et al., 1997). Recent meta-analytic work has demonstrated that PPI produces consistent and specific patterns of task-dependent brain connectivity across studies (Smith and Delgado, 2016; Smith et al., 2016a). For each individual, we extracted the BOLD time series from the peak voxel within a mask of the VLPFC cluster, which was defined by the free-choice vs. forced-choice contrast. Next, we generated a single-subject GLM consisting of the following regressors: the five main task regressors, a physiological regressor representing the time course of activation within the VLPFC ROI, and interaction terms between VLPFC activity and each of the four main condition regressors. Importantly, modeling PPI effects separately for each condition (i.e., a generalized PPI model) has been shown to improve sensitivity and specificity (McLaren et al., 2012). Missed responses during the decision-making phase were modeled as a nuisance regressor. All task regressors were convolved with the canonical hemodynamic response function. Group-level analyses used a mixed-effects model in FLAME 1 (FMRIB's Local Analysis of Mixed Effects), treating subjects as a random effect (Beckmann et al., 2003). All z-statistic images were thresholded and corrected for multiple comparisons using an initial cluster-forming threshold of z > 2.3 and a corrected cluster-extent threshold of p < 0.05 (Worsley, 2001).
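A generalized PPI design can be sketched as follows: the task regressors, the centered seed time course, and one interaction column per condition formed as the product of the centered condition regressor and the centered seed signal. This is a simplified illustration, not the FSL implementation used here: interactions are formed at the BOLD level without deconvolution to neural activity (an assumption), and the function and column names are hypothetical.

```python
def center(xs):
    """Subtract the mean so main effects and interactions are less collinear."""
    m = sum(xs) / len(xs)
    return [x - m for x in xs]


def gppi_design(task_regs, seed, interact_with):
    """Build an ordered list of (name, column) for a generalized PPI model.

    task_regs: dict of task regressors (already HRF-convolved), in model order.
    seed: seed-region time course (one value per volume).
    interact_with: names of task regressors that get a PPI term.
    """
    seed_c = center(seed)
    cols = list(task_regs.items())
    cols.append(("seed", seed_c))
    for name in interact_with:
        psy = center(task_regs[name])
        # Interaction term: psychological regressor x physiological signal
        cols.append(("ppi_" + name, [p * s for p, s in zip(psy, seed_c)]))
    return cols


def contrast_vector(cols, weights):
    """Map {column name: weight} onto the design's column order (others = 0)."""
    return [weights.get(name, 0) for name, _ in cols]
```

Contrasting the interaction columns (free +1, forced −1) then tests whether seed coupling differs between free and forced choice, while the task and seed columns act as covariates.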

Results

In the present study, we addressed whether individual differences in reward sensitivity are associated with behavioral and neural responses to free and forced choice. Our behavioral analyses first characterized individual differences in reward sensitivity using the TEPS and then tested for differences in reaction times between free- and forced-choice trials.

Individual differences in reward sensitivity

In the experiment preceding the choice task, subjects were more likely to prefer affective choices (M = 0.607, SD = 0.182) than informative choices (M = 0.393, SD = 0.182), t(32) = −3.367, p = 0.002. They were also successful at obtaining relevant information, as indicated by their performance in the bonus task (M = 69.36%, SE = 3.45%). The bonus task assessed the extent to which participants had learned the associations between colors and letters, which led to a monetary bonus.

Consistent with previous research (Carter et al., 2009; Chan et al., 2012), we quantified reward sensitivity with the TEPS (Gard et al., 2006). Given that our primary goal was to examine how reward sensitivity interacts with responses to free and forced choices during the decision-making phase, our subsequent analyses focused on the consummatory component of the TEPS. Consummatory reward sensitivity scores (TEPS-c) varied across individuals (mean ± SD = 34.91 ± 4.89; range 26–45) and were positively correlated with anticipatory scores (TEPS-a), r(31) = 0.42, p < 0.05.
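The correlation statistic reported for TEPS-c and TEPS-a can be reproduced from raw scores with a short sketch. It computes Pearson's r and the corresponding t statistic with n − 2 degrees of freedom, which is where the 31 in r(31) comes from for 33 participants; the function names are illustrative, and p-values are left to a statistics library.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two samples of equal length >= 3")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic for H0: rho = 0, with df = n - 2 (e.g., r(31) for n = 33)."""
    df = n - 2
    return r * math.sqrt(df / (1.0 - r * r)), df
```

For r = 0.42 and n = 33, `r_to_t` gives t ≈ 2.58 on 31 degrees of freedom, above the two-tailed 5% critical value of roughly 2.04.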

Free and forced choices might differ in decision difficulty, which could yield slower or faster reaction times in one condition. To test whether subjects perceived differences in difficulty between the two trial types, we conducted a paired-sample t-test comparing response times on free- and forced-choice trials. Reaction times did not differ significantly between free choice (M = 1.170 s, SD = 0.279 s) and forced choice (M = 1.166 s, SD = 0.270 s) [t(32) = −0.131, p = 0.45]. Hence, our reaction time results suggest that (1) participants perceived both trial types as similar in difficulty, and (2) subsequent differences in neural activity were not driven by differences in reaction times between free- and forced-choice trials. Nevertheless, individual differences in response times could be tied to reward sensitivity. We therefore examined whether response times for free and forced choice were correlated with individual reward sensitivity scores. We did not find a relationship between reward sensitivity scores and response times (TEPS-a and reaction time during free choice, r = −0.15, n.s.; TEPS-a and reaction time during forced choice, r = −0.08, n.s.; TEPS-c and reaction time during free choice, r = −0.18, n.s.; TEPS-c and reaction time during forced choice, r = −0.16, n.s.). Taken together, these observations suggest that differences in neural activation between trial types are unlikely to be attributable to differences in response times.
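The paired-sample comparison of reaction times can be sketched as below, computing t from per-subject differences with n − 1 degrees of freedom (32 for 33 participants). This is an illustrative helper with a hypothetical name; the sign of t depends on the order of the two inputs, and p-values would come from a t-distribution routine in a statistics package.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired-sample t statistic and df for per-subject measurements a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need paired samples of equal length >= 2")
    diffs = [x - y for x, y in zip(a, b)]
    se = stdev(diffs) / math.sqrt(len(diffs))  # standard error of the mean difference
    return mean(diffs) / se, len(diffs) - 1
```

Working on within-subject differences removes stable between-subject speed differences, which is why a paired test suits per-subject mean reaction times in two conditions.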

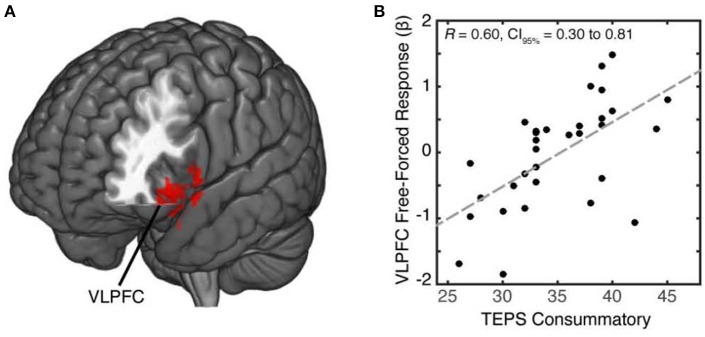

Reward sensitivity engages the VLPFC during choice

Individuals experience positive feelings when given the opportunity to choose freely (Leotti et al., 2010; Leotti and Delgado, 2011). We predicted that, for reward sensitive individuals, free-choice trials would enhance reward-related neural activation modulated by the VLPFC. Previous imaging studies have found that reward-motivated trials enhance responses in the VLPFC, a region associated with cognitive control, response selection, and reward motivation (Taylor et al., 2004; Badre and Wagner, 2007; Baxter et al., 2009). To test whether individual differences in reward sensitivity modulated distinct brain regions in response to free choice, we examined neural patterns that covaried with reward sensitivity during free vs. forced choice. In our whole-brain analysis of the free vs. forced choice contrast, we identified a cluster within the left VLPFC (MNI x,y,z = −48, 23, −19; 140 voxels, p = 0.011) (Figure 2A; Supplementary Table 1) that covaried with individuals' reward sensitivity scores (Figure 2B; see also Supplementary Figure 1). Specifically, individuals who reported greater reward sensitivity exhibited greater VLPFC activation during free-choice compared to forced-choice trials. No clusters from the forced vs. free contrast covaried with reward sensitivity scores, even at a more lenient, uncorrected threshold.
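The covariate analysis can be illustrated at a single voxel with an ordinary least-squares fit of each subject's free > forced estimate on a demeaned reward sensitivity score. This is a simplification, not FLAME 1: it ignores the within-subject variance that FSL's mixed-effects model incorporates, and the function name and inputs are hypothetical. Demeaning the covariate makes the intercept the group-mean effect and the slope the effect of reward sensitivity.

```python
def group_covariate_fit(contrast_estimates, covariate):
    """OLS fit of y = b0 + b1 * (covariate - mean) across subjects.

    b0: group-mean effect (covariate is demeaned).
    b1: change in the free > forced estimate per unit of the covariate.
    """
    n = len(contrast_estimates)
    if n != len(covariate) or n < 3:
        raise ValueError("need equal-length inputs with n >= 3")
    mc = sum(covariate) / n
    xc = [c - mc for c in covariate]
    sxx = sum(x * x for x in xc)
    b1 = sum(x * y for x, y in zip(xc, contrast_estimates)) / sxx
    b0 = sum(contrast_estimates) / n  # intercept equals mean(y) when x is centered
    return b0, b1
```

A positive slope at a voxel corresponds to the pattern plotted in Figure 2B, where higher reward sensitivity accompanies a larger free > forced response.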

Figure 2.

Increased activation of VLPFC in reward sensitive individuals during free choice relative to forced choice. (A) To identify brain regions whose activation increased during free choice (relative to forced choice) as a function of reward sensitivity, we conducted a whole-brain cluster analysis with a cluster-forming threshold of z > 2.3. We identified a cluster within the left VLPFC (MNI x,y,z = −45, 20, −22; 140 voxels) whose activation during free- relative to forced-choice trials correlated positively with individuals' reward sensitivity scores. (B) VLPFC activity showed a positive linear trend with reward sensitivity scores, with higher activation corresponding to higher reward sensitivity and lower activation corresponding to lower reward sensitivity.

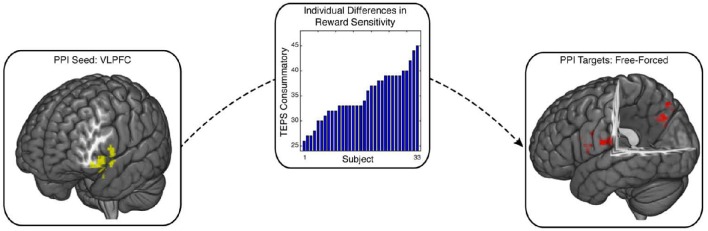

Enhanced connectivity between attentional systems and the ventrolateral prefrontal cortex during free choice

Our whole-brain cluster analysis suggests a role for the VLPFC in processing free choice in reward sensitive individuals. The VLPFC is densely connected with a number of structures associated with cognitive control and motivational systems (Petrides and Pandya, 2002; Sakagami and Pan, 2007). One possibility is that VLPFC connectivity with these regions supports response selection and goal-directed behavior during free-choice trials. We tested this idea using a psychophysiological interaction (PPI) analysis with the VLPFC cluster from the preceding analysis as the seed region (Friston et al., 1997). This analysis allowed us to identify regions whose connectivity with the VLPFC increased as a function of reward sensitivity during free relative to forced choice. Our PPI analysis identified two clusters, in the posterior cingulate cortex (PCC) (MNI x,y,z = 18, −73, 41; 103 voxels, p = 0.043) and the precentral gyrus (MNI x,y,z = −39, −19, 35; 156 voxels, p = 0.0035), that showed enhanced connectivity with the VLPFC as a function of individuals' reward sensitivity (Figure 3; see also Supplementary Figure 2). All coordinates are reported in Supplementary Table 1.

Figure 3.

Enhanced VLPFC connectivity with PCC and precentral gyrus during free choice compared to forced choice. A psychophysiological interaction (PPI) analysis was used to test whether free choice elicited greater VLPFC connectivity with other brain regions as a function of individuals' reward sensitivity scores. The seed region (VLPFC; left image) exhibited enhanced connectivity with the PCC and precentral gyrus (right image), contingent upon reward sensitivity (middle image). The PCC, along with the VLPFC, has previously been associated with cognitive control. This connectivity was enhanced in individuals who reported greater reward sensitivity.

Discussion

This paper investigated how individual differences in reward sensitivity relate to neural responses during free choice. When given free choice, individuals high in reward sensitivity showed enhanced activation of the VLPFC, a region known to be involved in attentional control and response selection. Further, our PPI analyses found increased VLPFC connectivity during free-choice trials in reward sensitive individuals with the PCC and with the precentral gyrus, a region implicated in motor processing. These observations suggest that reward sensitivity may recruit VLPFC-related attentional control processes during free choice that relate to goal-directed behavior and action selection.

Individuals high in reward sensitivity tend to engage in goal-directed behavior and to experience pleasure when exposed to cues of impending reward (Carver and White, 1994; Gard et al., 2006). When given an opportunity to choose freely between options, individuals sensitive to rewards may more readily orient to the option that maximizes their goal (i.e., increasing their chances of reward). The VLPFC has been associated with computing behavioral significance by integrating input from regions processing motivational and affective information (Sakagami and Pan, 2007). Experiencing control by exerting self-initiated choice is motivating and can be rewarding in and of itself (Ryan and Deci, 2000b; Bhanji and Delgado, 2014; Leotti et al., 2015). Given that the VLPFC has been linked with tracking reward expectancy (Pochon et al., 2002), its recruitment during free choice in reward sensitive individuals might reflect a role in increasing attentional control (Duncan and Owen, 2000; Bunge et al., 2004; Badre and Wagner, 2007) and guiding goal-directed behavior (Sakagami and Pan, 2007). Consistent with this idea, greater VLPFC activation is observed when individuals make consequential choices (e.g., choosing whom to date) relative to inconsequential choices (e.g., choosing between same-sex faces) (Turk et al., 2004). Our results suggest that an opportunity for free choice may enhance attentional biases toward maximizing goals (i.e., reward maximization) by increasing VLPFC activation in reward sensitive individuals. Exerting control by expressing one's choice has been linked with adaptive consequences (Bandura, 1997; Ryan and Deci, 2006; Leotti et al., 2010), and the ability to choose between alternatives modulates expectancies toward hedonic and/or aversive outcomes (Sharot et al., 2009, 2010; Leotti and Delgado, 2014). More broadly, our findings suggest that the motivational influence of free choice in reward sensitive individuals may operate by engaging cognitive control regions that facilitate goal-directed behavior.

A novel aspect of our study was that, when individuals chose freely between options, we observed increased VLPFC connectivity with the precentral gyrus and the PCC/precuneus. Although the PCC is commonly associated with the default-mode network (Buckner et al., 2008), recent findings suggest that the PCC can also participate in the cognitive control network (Leech et al., 2011, 2012; Utevsky et al., 2014). This observation has led some researchers to argue that the PCC is involved in detecting and responding to stimuli that demand behavioral modification (Pearson et al., 2011; Leech and Sharp, 2014). By interacting with regions involved in cognitive control, the PCC might help individuals orient more readily to options during free choice and increase the efficacy of behavioral responses that promote maximization of their goals (i.e., earning rewards). In line with this explanation, our results indicate that choosing between two attributes may be facilitated by increased VLPFC connectivity with the PCC. One potential interpretation is that free choice enhances connectivity between the VLPFC and target PCC and precentral regions to support motor responses in reward sensitive individuals. A recent meta-analysis of PPI studies found that the PCC was a reliable target in studies of cognitive control, but only when the dorsolateral prefrontal cortex was used as the seed region (Smith et al., 2016a). This discrepancy could arise because our results are based on individual differences in reward sensitivity, which that meta-analysis explicitly did not consider. In addition, because our analyses assess connectivity between regions but not its directionality, an alternative explanation is that the VLPFC modulates the PCC in response to context-specific factors (i.e., free choice) that depend on individual differences in reward sensitivity. The brain engages in multiple valuation processes when deciding between different options. Once sensory information is computed, signals are integrated with other motivational and contextual factors, which are then used to guide choices (Kable and Glimcher, 2009; Grabenhorst and Rolls, 2011). The interactions between the VLPFC and PCC increased during free choice, suggesting that cognitive resources are made accessible via enhanced connectivity of the PCC with the cognitive control network (Leech et al., 2012; Utevsky et al., 2014).

Contrary to our hypothesis, we did not observe an association between reward sensitivity and reward signals in the striatum during free choice. Studies suggest that striatal recruitment can depend on individual differences (e.g., preference for choice) and can be influenced by contextual factors (e.g., gain vs. loss contexts) (Leotti and Delgado, 2014). It is possible that individuals value the opportunity for choice because they believe such choice will give them access to the best available option. Consistent with this idea, previous studies found that choice-related striatal activation is limited to contexts in which free choice primarily predicts positive outcomes (Leotti and Delgado, 2011, 2014; Cockburn et al., 2014). Hence, it is possible that the striatum did not dissociate between free and forced choices because value was similar across both conditions. It is also noteworthy that our contrasts revealed no activation in regions involved in value computation that correlated with reward sensitivity scores. In our experimental design, points and information did not differ in value because both led to monetary rewards. Therefore, the absence of value-related modulation as a function of reward sensitivity may reflect the similar value associated with each option.

The purpose of the current study was to compare free vs. forced choices involving rewards that were not tied specifically to negative or positive outcomes; both types of choice trials were related to accomplishing the goal of the task (i.e., accruing monetary rewards). The opportunity to choose involves selecting a response necessary for obtaining one's goals, a process supported by the VLPFC (Duncan and Owen, 2000; Bunge et al., 2004; Badre and Wagner, 2007; Sakagami and Pan, 2007). Consistent with this perspective, the VLPFC emerged in our paradigm as a key region tracking the opportunity for choice in reward sensitive individuals. In sum, our results suggest that goal-directed behavior (i.e., increasing the chances of reward) might be facilitated in reward sensitive individuals by enhanced engagement of attentional and cognitive control networks on trials that afford free choice.

We note that our reward sensitivity findings may have important clinical implications. Psychiatric patients often show deficits in decision-making tasks involving rewards (Parvaz et al., 2012). For instance, substance abuse and psychopathy are related to high levels of responsiveness to rewards (Buckholtz et al., 2010; Schneider et al., 2012; Yau et al., 2012; Tanabe et al., 2013). Reward sensitivity is also associated with negative mental-health outcomes such as greater risk for addiction (Kreek et al., 2005), alcohol abuse (Franken, 2002), eating disorders (Davis et al., 2004; Franken and Muris, 2005), and depressive disorders (Alloy et al., 2016). Consistent with our reward sensitivity findings, bipolar patients show hypersensitivity to rewards and recruit the VLPFC when anticipating rewards (Whitton et al., 2015; Chase et al., 2016), whereas pathological gamblers tend to show hyposensitivity to rewards and fail to activate the VLPFC in response to monetary rewards (de Ruiter et al., 2009). The close association between reward sensitivity and psychiatric disorders may reflect failures of the executive control network during affective and motivational processing (Johnstone et al., 2007; Heatherton and Wagner, 2011). An exciting direction for future research is to characterize the networks, and the specific patterns of connectivity, that govern decision-making and are affected by reward sensitivity or insensitivity.

Although our results may have implications for clinical research, we note that a number of limitations accompany them. First, in our design, free choice offered individuals a choice between dissimilar options, whereas forced-choice trials provided participants with similar options. It is possible that subjects perceived forced-choice trials as easier than free-choice trials. However, analyses of reaction times did not reveal significant differences between free- and forced-choice trials, which suggests that our findings were not due to differences in perceived difficulty between trial types. Also, a human preference for forced choice could alternatively be explained by regret theory (Loomes and Sugden, 1982), which suggests that feelings of regret are enhanced when the option taken leads to a worse outcome than the alternative option. Humans may therefore show a status quo bias that minimizes feelings of regret (Nicolle et al., 2011). Moreover, although some aspects of our design did not measure forced choice explicitly in line with the conventional free vs. forced choice framework, reward-sensitive brain regions are context specific and vary with the range of possible options available (Nieuwenhuis et al., 2005); the current study therefore tested whether brain activations associated with reward sensitivity would dissociate responses to free choices between categories from responses to forced choices within a category. Second, the VLPFC has been implicated in a number of roles, including incentive motivation (Taylor et al., 2004; Baxter et al., 2009), cognitive regulation (Lopez et al., 2014), response inhibition (Aron et al., 2004), and task switching (Braver et al., 2003). Therefore, there may be alternative explanations for the VLPFC activation in reward sensitive individuals.
However, research has shown consistent VLPFC activation patterns in response to rewarding stimuli as a function of reward sensitivity (de Ruiter et al., 2009; Yokum et al., 2011; Whitton et al., 2015), suggesting a role for the region in modulating attentional control in rewarding contexts, particularly for reward sensitive individuals. Finally, we note that complex personality traits such as reward sensitivity can be difficult to quantify. Although the TEPS provides one validated approach for measuring reward sensitivity, other scales have also been used to relate brain responses to personality traits associated with reward and motivation. For instance, recent work has demonstrated that individual differences in regulatory focus are associated with PCC responses to promotion goals (Strauman et al., 2013) and ventral striatal responses to reward (Scult et al., 2016). These observations suggest that future work may be able to build on our findings by integrating regulatory focus theory and other personality measures with classical measures of reward sensitivity (e.g., TEPS and BIS/BAS).

Despite these caveats, our findings suggest that reward sensitivity may be an important factor in determining one's responses to rewarding stimuli. This may extend to intrinsically rewarding stimuli, such as the opportunity to exert control. When given an opportunity to make a free choice, individuals high in reward sensitivity might show enhanced reactivity and engage the greater attentional and motor control necessary for achieving their goals (i.e., accruing more money).

Author contributions

CC, DS, and MD designed the research; CC and DS performed research; CC and DS analyzed data; CC, DS, and MD wrote the manuscript.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This study was funded by National Institutes of Health grants R01-MH084081 (to MD) and F32-MH107175 (to DS). We are grateful to Ahmet Ceceli for helpful comments on previous drafts of the manuscript. We also thank Ana Rigney for assistance with data collection.

Supplementary material

The Supplementary Material for this article can be found online at: http://journal.frontiersin.org/article/10.3389/fnins.2016.00529/full#supplementary-material

References

- Alloy L. B., Olino T., Freed R. D. (2016). Role of reward sensitivity and processing in major depressive and bipolar spectrum disorders. Behav. Ther. 29, 1–68. 10.1016/j.beth.2016.02.014

- Aron A. R., Robbins T. W., Poldrack R. A. (2004). Inhibition and the right inferior frontal cortex. Trends Cogn. Sci. 8, 170–177. 10.1016/j.tics.2004.02.010

- Ashburner J., Friston K. J. (2005). Unified segmentation. Neuroimage 26, 839–851. 10.1016/j.neuroimage.2005.02.018

- Badre D., Wagner A. D. (2007). Left ventrolateral prefrontal cortex and the cognitive control of memory. Neuropsychologia 45, 2883–2901. 10.1016/j.neuropsychologia.2007.06.015

- Bandura A. (1997). Self-Efficacy: The Exercise of Control. New York, NY: Freeman.

- Barbas H., De Olmos J. (1990). Projections from the amygdala to basoventral and mediodorsal prefrontal regions in the rhesus monkey. J. Comp. Neurol. 300, 549–571. 10.1002/cne.903000409

- Baxter M. G., Gaffan D., Kyriazis D. A., Mitchell A. S. (2009). Ventrolateral prefrontal cortex is required for performance of a strategy implementation task but not reinforcer devaluation effects in rhesus monkeys. Eur. J. Neurosci. 29, 2049–2059. 10.1111/j.1460-9568.2009.06740.x

- Beaver J. D., Lawrence A. D., van Ditzhuijzen J., Davis M. H., Woods A., Calder A. J. (2006). Individual differences in reward drive predict neural responses to images of food. J. Neurosci. 26, 5160–5166. 10.1523/JNEUROSCI.0350-06.2006

- Beckmann C. F., Jenkinson M., Smith S. M. (2003). General multilevel linear modeling for group analysis in FMRI. Neuroimage 20, 1052–1063. 10.1016/S1053-8119(03)00435-X

- Bhanji J. P., Delgado M. R. (2014). Perceived control influences neural responses to setbacks and promotes persistence. Neuron 83, 1369–1375. 10.1016/j.neuron.2014.08.012

- Brainard D. H. (1997). The psychophysics toolbox. Spat. Vis. 10, 433–436. 10.1163/156856897X00357

- Braver T. S., Reynolds J. R., Donaldson D. I. (2003). Neural mechanisms of transient and sustained cognitive control during task switching. Neuron 39, 713–726. 10.1016/S0896-6273(03)00466-5

- Buckholtz J. W., Treadway M. T., Cowan R. L., Woodward N. D., Benning S. D., Li R., et al. (2010). Mesolimbic dopamine reward system hypersensitivity in individuals with psychopathic traits. Nat. Neurosci. 13, 419–421. 10.1038/nn.2510

- Buckner R. L., Andrews-Hanna J. R., Schacter D. L. (2008). The brain's default network: anatomy, function, and relevance to disease. Ann. N.Y. Acad. Sci. 1124, 1–38. 10.1196/annals.1440.011

- Bunge S. A., Burrows B., Wagner A. D. (2004). Prefrontal and hippocampal contributions to visual associative recognition: interactions between cognitive control and episodic retrieval. Brain Cogn. 56, 141–152. 10.1016/j.bandc.2003.08.001

- Carter R. M., MacInnes J. J., Huettel S. A., Adcock R. A. (2009). Activation in the VTA and nucleus accumbens increases in anticipation of both gains and losses. Front. Behav. Neurosci. 3, 21. 10.3389/neuro.08.021.2009

- Carver C. S., White T. L. (1994). Behavioral inhibition, behavioral activation, and affective responses to impending reward and punishment: the BIS/BAS Scales. J. Pers. Soc. Psychol. 67, 319–333. 10.1037/0022-3514.67.2.319

- Catley D., Grobe J. E. (2008). Using basic laboratory research to understand scheduled smoking: a field investigation of the effects of manipulating controllability on subjective responses to smoking. Health Psychol. 27, S189–S196. 10.1037/0278-6133.27.3(suppl.).s189

- Chan R. C., Shi Y. F., Lai M. K., Wang Y. N., Wang Y., Kring A. M. (2012). The temporal experience of pleasure scale (TEPS): exploration and confirmation of factor structure in a healthy Chinese sample. PLoS ONE 7:e35352. 10.1371/journal.pone.0035352

- Chase H. W., Phillips M. L., Friston K. J., Chen C. H., Suckling J., Lennox B. R., et al. (2016). Elucidating neural network functional connectivity abnormalities in bipolar disorder: toward a harmonized methodological approach. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 1, 288–298. 10.1016/j.bpsc.2015.12.006

- Cockburn J., Collins A. G. E., Frank M. J. (2014). A reinforcement learning mechanism responsible for the valuation of free choice. Neuron 83, 551–557. 10.1016/j.neuron.2014.06.035

- Cohen M. X., Young J., Baek J. M., Kessler C., Ranganath C. (2005). Individual differences in extraversion and dopamine genetics predict neural reward responses. Cogn. Brain Res. 25, 851–861. 10.1016/j.cogbrainres.2005.09.018

- Corbetta M., Shulman G. L. (2002). Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 3, 215–229. 10.1038/nrn755

- Davis C., Strachan S., Berkson M. (2004). Sensitivity to reward: implications for overeating and overweight. Appetite 42, 131–138. 10.1016/j.appet.2003.07.004

- de Ruiter M. B., Veltman D. J., Goudriaan A. E., Oosterlaan J., Sjoerds Z., van den Brink W. (2009). Response perseveration and ventral prefrontal sensitivity to reward and punishment in male problem gamblers and smokers. Neuropsychopharmacology 34, 1027–1038. 10.1038/npp.2008.175

- Di Chiara G., Bassareo V., Fenu S., De Luca M. A., Spina L., Cadoni C., et al. (2004). Dopamine and drug addiction: the nucleus accumbens shell connection. Neuropharmacology 47, 227–241. 10.1016/j.neuropharm.2004.06.032

- Duncan J., Owen A. M. (2000). Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends Neurosci. 23, 475–483. 10.1016/S0166-2236(00)01633-7

- Franken I. H. A. (2002). Behavioral approach system (BAS) sensitivity predicts alcohol craving. Pers. Individ. Dif. 32, 349–355. 10.1016/S0191-8869(01)00030-7

- Franken I. H. A., Muris P. (2005). Individual differences in reward sensitivity are related to food craving and relative body weight in healthy women. Appetite 45, 198–201. 10.1016/j.appet.2005.04.004

- Friston K. J., Buechel C., Fink G. R., Morris J., Rolls E., Dolan R. J. (1997). Psychophysiological and modulatory interactions in neuroimaging. Neuroimage 6, 218–229. 10.1006/nimg.1997.0291

- Gard D. E., Gard M. G., Kring A. M., John O. P. (2006). Anticipatory and consummatory components of the experience of pleasure: a scale development study. J. Res. Pers. 40, 1086–1102. 10.1016/j.jrp.2005.11.001

- Grabenhorst F., Rolls E. T. (2011). Value, pleasure and choice in the ventral prefrontal cortex. Trends Cogn. Sci. 15, 56–67. 10.1016/j.tics.2010.12.004

- Gray J. A. (1987). The neuropsychology of emotion and personality, in Cognitive Neurochemistry, eds Stahl S. M., Iverson S. D., Goodman E. C. (New York, NY: Oxford University Press), 171–190.

- Hahn T., Dresler T., Ehlis A. C., Plichta M. M., Heinzel S., Polak T., et al. (2009). Neural response to reward anticipation is modulated by Gray's impulsivity. Neuroimage 46, 1148–1153. 10.1016/j.neuroimage.2009.03.038

- Heatherton T. F., Wagner D. D. (2011). Cognitive neuroscience of self-regulation failure. Trends Cogn. Sci. 15, 132–139. 10.1016/j.tics.2010.12.005

- Johnstone T., van Reekum C. M., Urry H. L., Kalin N. H., Davidson R. J. (2007). Failure to regulate: counterproductive recruitment of top-down prefrontal-subcortical circuitry in major depression. J. Neurosci. 27, 8877–8884. 10.1523/JNEUROSCI.2063-07.2007

- Kable J. W., Glimcher P. W. (2009). The neurobiology of decision: consensus and controversy. Neuron 63, 733–745. 10.1016/j.neuron.2009.09.003

- Kelley A. E., Schiltz C. A., Landry C. F. (2005). Neural systems recruited by drug- and food-related cues: studies of gene activation in corticolimbic regions. Physiol. Behav. 86, 11–14. 10.1016/j.physbeh.2005.06.018

- Kleiner M., Brainard D., Pelli D., Ingling A., Murray R., Broussard C. (2007). What's new in Psychtoolbox-3. Perception 36, 1–16.

- Kreek M. J., Nielsen D. A., Butelman E. R., LaForge K. S. (2005). Genetic influences on impulsivity, risk taking, stress responsivity and vulnerability to drug abuse and addiction. Nat. Neurosci. 8, 1450–1457. 10.1038/nn1583

- Leech R., Braga R., Sharp D. J. (2012). Echoes of the brain within the posterior cingulate cortex. J. Neurosci. 32, 215–222. 10.1523/JNEUROSCI.3689-11.2012

- Leech R., Kamourieh S., Beckmann C. F., Sharp D. J. (2011). Fractionating the default mode network: distinct contributions of the ventral and dorsal posterior cingulate cortex to cognitive control. J. Neurosci. 31, 3217–3224. 10.1523/JNEUROSCI.5626-10.2011

- Leech R., Sharp D. J. (2014). The role of the posterior cingulate cortex in cognition and disease. Brain 137, 12–32. 10.1093/brain/awt162

- Leotti L. A., Cho C., Delgado M. R. (2015). The neural basis underlying the experience of control in the human brain, in The Sense of Agency, eds Haggard P., Eitam B. (Oxford University Press), 145.

- Leotti L. A., Delgado M. R. (2011). The inherent reward of choice. Psychol. Sci. 22, 1310–1318. 10.1177/0956797611417005

- Leotti L. A., Delgado M. R. (2014). The value of exercising control over monetary gains and losses. Psychol. Sci. 25, 596–604. 10.1177/0956797613514589

- Leotti L. A., Iyengar S. S., Ochsner K. N. (2010). Born to choose: the origins and value of the need for control. Trends Cogn. Sci. 14, 457–463. 10.1016/j.tics.2010.08.001

- Lieberman M. D., Ochsner K. N., Gilbert D. T., Schacter D. L. (2001). Do amnesics exhibit cognitive dissonance reduction? The role of explicit memory and attention in attitude change. Psychol. Sci. 12, 135–140. 10.1111/1467-9280.00323

- Lin A., Adolphs R., Rangel A. (2012). Social and monetary reward learning engage overlapping neural substrates. Soc. Cogn. Affect. Neurosci. 7, 274–281. 10.1093/scan/nsr006

- Loomes G., Sugden R. (1982). Regret theory: an alternative theory of rational choice under uncertainty. Econ. J. 92, 805–824. 10.2307/2232669

- Lopez R. B., Hofmann W., Wagner D. D., Kelley W. M., Heatherton T. F. (2014). Neural predictors of giving in to temptation in daily life. Psychol. Sci. 25, 1337–1344. 10.1177/0956797614531492

- McLaren D. G., Ries M. L., Xu G., Johnson S. C. (2012). A generalized form of context-dependent psychophysiological interactions (gPPI): a comparison to standard approaches. Neuroimage 61, 1277–1286. 10.1016/j.neuroimage.2012.03.068

- Mullette-Gillman O. A., Detwiler J. M., Winecoff A., Dobbins I., Huettel S. A. (2011). Infrequent, task-irrelevant monetary gains and losses engage dorsolateral and ventrolateral prefrontal cortex. Brain Res. 1395, 53–61. 10.1016/j.brainres.2011.04.026

- Nicolle A., Fleming S. M., Bach D. R., Driver J., Dolan R. J. (2011). A regret-induced status quo bias. J. Neurosci. 31, 3320–3327. 10.1523/JNEUROSCI.5615-10.2011

- Nieuwenhuis S., Heslenfeld D. J., von Geusau N. J., Mars R. B., Holroyd C. B., Yeung N. (2005). Activity in human reward-sensitive brain areas is strongly context dependent. Neuroimage 25, 1302–1309. 10.1016/j.neuroimage.2004.12.043

- Parvaz M. A., MacNamara A., Goldstein R. Z., Hajcak G. (2012). Event-related induced frontal alpha as a marker of lateral prefrontal cortex activation during cognitive reappraisal. Cogn. Affect. Behav. Neurosci. 12, 730–740. 10.3758/s13415-012-0107-9

- Patall E. A. (2013). Constructing motivation through choice, interest, and interestingness. J. Educ. Psychol. 105, 522. 10.1037/a0030307

- Paton J. J., Belova M. A., Morrison S. E., Salzman C. D. (2006). The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature 439, 865–870. 10.1038/nature04490

- Pearson J. M., Heilbronner S. R., Barack D. L., Hayden B. Y., Platt M. L. (2011). Posterior cingulate cortex: adapting behavior to a changing world. Trends Cogn. Sci. 15, 143–151. 10.1016/j.tics.2011.02.002

- Petrides M. (2002). The mid-ventrolateral prefrontal cortex and active mnemonic retrieval. Neurobiol. Learn. Mem. 78, 528–538. 10.1006/nlme.2002.4107

- Petrides M., Alivisatos B., Evans A. C. (1995). Functional activation of the human ventrolateral frontal cortex during mnemonic retrieval of verbal information. Proc. Natl. Acad. Sci. U.S.A. 92, 5803–5807.

- Petrides M., Pandya D. N. (2002). Comparative cytoarchitectonic analysis of the human and the macaque ventrolateral prefrontal cortex and corticocortical connection patterns in the monkey. Eur. J. Neurosci. 16, 291–310. 10.1046/j.1460-9568.2001.02090.x

- Pochon J. B., Levy R., Fossati P., Lehericy S., Poline J. B., Pillon B., et al. (2002). The neural system that bridges reward and cognition in humans: an fMRI study. Proc. Natl. Acad. Sci. U.S.A. 99, 5669–5674. 10.1073/pnas.082111099

- Power J. D., Schlaggar B. L., Petersen S. E. (2015). Recent progress and outstanding issues in motion correction in resting state fMRI. Neuroimage 105, 536–551. 10.1016/j.neuroimage.2014.10.044

- Ryan R. M., Deci E. L. (2000a). Intrinsic and extrinsic motivations: classic definitions and new directions. Contemp. Educ. Psychol. 25, 54–67. 10.1006/ceps.1999.1020

- Ryan R. M., Deci E. L. (2000b). Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. Am. Psychol. 55, 68–78. 10.1037/0003-066X.55.1.68

- Ryan R. M., Deci E. L. (2006). Self-regulation and the problem of human autonomy: does psychology need choice, self-determination, and will? J. Pers. 74, 1557–1585. 10.1111/j.1467-6494.2006.00420.x

- Sakagami M., Pan X. (2007). Functional role of the ventrolateral prefrontal cortex in decision making. Curr. Opin. Neurobiol. 17, 228–233. 10.1016/j.conb.2007.02.008

- Schneider S., Peters J., Bromberg U., Brassen S., Miedl S. F., Banaschewski T., et al. (2012). Risk taking and the adolescent reward system: a potential common link to substance abuse. Am. J. Psychiatry 169, 39–46. 10.1176/appi.ajp.2011.11030489

- Scult M. A., Knodt A. R., Hanson J. L., Ryoo M., Adcock R. A., Hariri A. R., et al. (2016). Individual differences in regulatory focus predict neural response to reward. Soc. Neurosci. 919, 1–11. 10.1080/17470919.2016.1178170

- Sharot T., De Martino B., Dolan R. J. (2009). How choice reveals and shapes expected hedonic outcome. J. Neurosci. 29, 3760–3765. 10.1523/JNEUROSCI.4972-08.2009

- Sharot T., Velasquez C. M., Dolan R. J. (2010). Do decisions shape preference? Evidence from blind choice. Psychol. Sci. 21, 1231–1235. 10.1177/0956797610379235

- Smith D. V., Delgado M. R. (2016). Meta-analysis of psychophysiological interactions: revisiting cluster-level thresholding and sample sizes. Hum. Brain Mapp. [Epub ahead of print]. 10.1002/hbm.23354

- Smith D. V., Gseir M., Speer M. E., Delgado M. R. (2016a). Toward a cumulative science of functional integration: a meta-analysis of psychophysiological interactions. Hum. Brain Mapp. 37, 2904–2917. 10.1002/hbm.23216

- Smith D. V., Rigney A. E., Delgado M. R. (2016b). Distinct reward properties are encoded via corticostriatal interactions. Sci. Rep. 6:20093. 10.1038/srep20093

- Stephens D. N., Duka T., Crombag H. S., Cunningham C. L., Heilig M., Crabbe J. C. (2010). Reward sensitivity: issues of measurement, and achieving consilience between human and animal phenotypes. Addict. Biol. 15, 146–168. 10.1111/j.1369-1600.2009.00193.x

- Strauman T. J., Detloff A. M., Sestokas R., Smith D. V., Goetz E. L., Rivera C., et al. (2013). What shall I be, what must I be: neural correlates of personal goal activation. Front. Integr. Neurosci. 6:123. 10.3389/fnint.2012.00123

- Tanabe J., Reynolds J., Krmpotich T., Claus E., Thompson L. L., Du Y. P., et al. (2013). Reduced neural tracking of prediction error in substance-dependent individuals. Am. J. Psychiatry 170, 1356–1363. 10.1176/appi.ajp.2013.12091257

- Taylor S. F., Welsh R. C., Wager T. D., Phan K. L., Fitzgerald K. D., Gehring W. J. (2004). A functional neuroimaging study of motivation and executive function. Neuroimage 21, 1045–1054. 10.1016/j.neuroimage.2003.10.032

- Treadway M. T., Zald D. H. (2011). Reconsidering anhedonia in depression: lessons from translational neuroscience. Neurosci. Biobehav. Rev. 35, 537–555. 10.1016/j.neubiorev.2010.06.006

- Tremblay L., Schultz W. (1999). Relative reward preference in primate orbitofrontal cortex. Nature 398, 704–708. 10.1038/19525

- Turk D. J., Banfield J. F., Walling B. R., Heatherton T. F., Grafton S. T., Handy T. C., et al. (2004). From facial cue to dinner for two: the neural substrates of personal choice. Neuroimage 22, 1281–1290. 10.1016/j.neuroimage.2004.02.037

- Utevsky A. V., Smith D. V., Huettel S. A. (2014). Precuneus is a functional core of the default-mode network. J. Neurosci. 34, 932–940. 10.1523/JNEUROSCI.4227-13.2014

- Volkow N. D., Wang G. J., Fowler J. S., Logan J., Jayne M., Franceschi D., et al. (2002). “Nonhedonic” food motivation in humans involves dopamine in the dorsal striatum and methylphenidate amplifies this effect. Synapse 44, 175–180. 10.1002/syn.10075

- Whitton A. E., Treadway M. T., Pizzagalli D. A. (2015). Reward processing dysfunction in major depression, bipolar disorder and schizophrenia. Curr. Opin. Psychiatry 28, 7–12. 10.1097/YCO.0000000000000122

- Woolrich M. W., Ripley B. D., Brady M., Smith S. M. (2001). Temporal autocorrelation in univariate linear modeling of FMRI data. Neuroimage 14, 1370–1386. 10.1006/nimg.2001.0931

- Worsley K. J. (2001). Statistical analysis of activation images, in Functional MRI: An Introduction to Methods, eds Jezzard P., Matthews P. M., Smith S. M. (New York, NY: Oxford University Press), 251–270.

- Yau W. Y., Zubieta J. K., Weiland B. J., Samudra P. G., Zucker R. A., Heitzeg M. M. (2012). Nucleus accumbens response to incentive stimuli anticipation in children of alcoholics: relationships with precursive behavioral risk and lifetime alcohol use. J. Neurosci. 32, 2544–2551. 10.1523/JNEUROSCI.1390-11.2012

- Yokum S., Ng J., Stice E. (2011). Attentional bias to food images associated with elevated weight and future weight gain: an fMRI study. Obesity (Silver Spring) 19, 1775–1783. 10.1038/oby.2011.168
