Abstract

The STROBE (Strengthening the Reporting of Observational Studies in Epidemiology) statement was first published in 2007 and again in 2014. The purpose of the original STROBE was to provide guidance for authors, reviewers, and editors to improve the comprehensiveness of reporting; however, STROBE has a unique focus on observational studies. Although much of the guidance provided by the original STROBE document is directly applicable, it was deemed useful to map those statements to veterinary concepts, provide veterinary examples, and highlight unique aspects of reporting in veterinary observational studies. Here, we present the examples and explanations for the checklist items included in the STROBE‐Vet statement. Thus, this is a companion document to the STROBE‐Vet statement methods and process document (JVIM_14575 “Methods and Processes of Developing the Strengthening the Reporting of Observational Studies in Epidemiology—Veterinary (STROBE‐Vet) Statement” undergoing proofing), which describes the checklist and how it was developed.

Keywords: Animal Health, Animal welfare, Food Safety, Observational studies, Production, Reporting guidelines

Abbreviation

- STROBE

Strengthening the Reporting of Observational Studies in Epidemiology

In veterinary research, observational studies are commonly used to describe the natural history of disease, assess etiology, and identify and investigate the effect of risk factors. To maximize the value of observational studies, it is critical that they are reported in a manner that facilitates internal and external validity assessment. Reporting guidelines allow researchers to appraise the published findings and potentially apply them to future research or decision making. Initially used for intervention (clinical trial) assessments, the CONSORT1, 2 and REFLECT statements3, 4 were developed to create an experimental and reporting framework for randomized controlled trials and to help authors, reviewers, and editors address concerns about incomplete reporting. The STROBE (Strengthening the Reporting of Observational Studies in Epidemiology) statement, first published in 2007 and again in 2014,5, 6, 7 provided a similar framework for observational studies. In this document, we provide the rationale behind the revision of STROBE for use in veterinary research and examples of data reporting under the revised guidelines. Although much of the STROBE material is directly relevant to veterinary studies, animal health investigations have sufficient unique features to warrant publishing a set of veterinary‐investigator‐specific guidelines (JVIM_14575 “Methods and Processes of Developing the Strengthening the Reporting of Observational Studies in Epidemiology—Veterinary (STROBE‐Vet) Statement” undergoing proofing). For example, multiple levels of organization are common in animal populations, and observational studies should account for this when reporting results. Given the importance of population structures when interpreting results, this issue features prominently in the STROBE‐Vet extension.

Omission or unclear reporting of important details is a common problem in all types of research reports. Some omissions can seriously limit the utility of the research by either hiding limitations or creating unwarranted doubt about the studies’ conclusions. These omissions, in turn, increase research wastage.8, 9, 10, 11, 12, 13 Study results are usually used by people other than the manuscript authors to make decisions. Hence, these users need as much information as possible to judge the validity of the results. Reporting guidelines are designed to reduce critical omissions by providing a checklist of important items to include in the report. Checklists improve author, editor, and reviewer compliance with respect to what information should be included in a comprehensive report, making them valuable research‐reporting tools.14, 15

How to Use This Document

Each item is presented in the same manner: first the item number (1–22) with subdivisions and a description of the item, followed by examples that illustrate the reporting approach for the item and a discussion of the rationale for their inclusion. Ideally, the examples chosen would illustrate all of the key concepts and only those concepts. However, it was not always possible to identify such specific real‐world examples from the veterinary literature. The working group decided not to use human healthcare or hypothetical examples. As a consequence, the examples sometimes include additional examples or several examples were needed to illustrate the key concepts. When the explanation for an item was the same as that reported in the original STROBE publication, we used the material ad verbatim, with permission from the original authors. Examples of poorly reported items were not included due to space considerations and the consensus that their inclusion would not substantially increase understanding or adoption of the guidelines. A table with the STROBE‐Vet checklist is included at the end of this document (Table 1).

Table 1.

STrengthening the Reporting of OBservational studies in Epidemiology statement checklist for Veterinary medicine (the STROBE‐Vet statement)

| Item | ||

|---|---|---|

| Title and Abstract | 1 | (a) Indicate that the study was an observational study and, if applicable, use a common study design term |

| (b) Indicate why the study was conducted, the design, the results, the limitations, and the relevance of the findings | ||

| Background/Rationale | 2 | Explain the scientific background and rationale for the investigation being reported |

| Objectives | 3 | (a) State specific objectives, including any primary or secondary prespecified hypotheses or their absence |

| (b) Ensure that the level of organizationa is clear for each objective and hypothesis | ||

| Study design | 4 | Present key elements of study design early in the paper |

| Setting | 5 | (a) Describe the setting, locations, and relevant dates, including periods of recruitment, exposure, follow‐up, and data collection |

| (b) If applicable, include information at each level of organization | ||

| Participantsb | 6 | Describe the eligibility criteria for the owners/managers and for the animals, at each relevant level of organization |

| Describe the sources and methods of selection for the owners/managers and for the animals, at each relevant level of organization | ||

| Describe the method of follow‐up | ||

| (d) For matched studies, describe matching criteria and the number of matched individuals per subject (eg, number of controls per case) | ||

| Variables | 7 | (a) Clearly define all outcomes, exposures, predictors, potential confounders, and effect modifiers. If applicable, give diagnostic criteria |

| (b) Describe the level of organization at which each variable was measured | ||

| (c) For hypothesis‐driven studies, the putative causal structure among variables should be described (a diagram is strongly encouraged) | ||

| Data Sources/Measurement | 8c | (a) For each variable of interest, give sources of data and details of methods of assessment (measurement). If applicable, describe comparability of assessment methods among groups and over time |

| (b) If a questionnaire was used to collect data, describe its development, validation, and administration | ||

| (c) Describe whether or not individuals involved in data collection were blinded, when applicable | ||

| (d) Describe any efforts to assess the accuracy of the data (including methods used for “data cleaning” in primary research, or methods used for validating secondary data) | ||

| Bias | 9 | Describe any efforts to address potential sources of bias due to confounding, selection, or information bias |

| Study Size | 10 | (a) Describe how the study size was arrived at for each relevant level of organization |

| (b) Describe how nonindependence of measurements was incorporated into sample‐size considerations, if applicable | ||

| (c) If a formal sample‐size calculation was used, describe the parameters, assumptions, and methods that were used, including a justification for the effect size selected | ||

| Quantitative Variables | 11 | Explain how quantitative variables were handled in the analyses. If applicable, describe which groupings were chosen, and why |

| Statistical Methods | 12 | (a) Describe all statistical methods for each objective, at a level of detail sufficient for a knowledgeable reader to replicate the methods. Include a description of the approaches to variable selection, control of confounding, and methods used to control for nonindependence of observations |

| (b) Describe the rationale for examining subgroups and interactions and the methods used | ||

| (c) Explain how missing data were addressed | ||

| (d) If applicable, describe the analytical approach to loss to follow‐up, matching, complex sampling, and multiplicity of analyses | ||

| (e) Describe any methods used to assess the robustness of the analyses (eg, sensitivity analyses or quantitative bias assessment) | ||

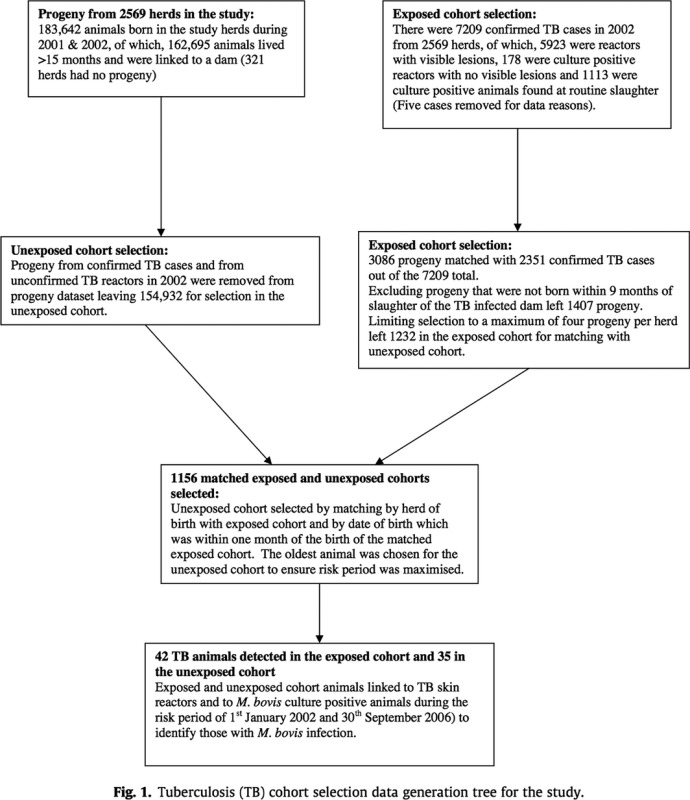

| Participants | 13c | (a) Report the numbers of owners/managers and animals at each stage of study and at each relevant level of organization—for example, numbers eligible, included in the study, completing follow‐up, and analyzed |

| (b) Give reasons for nonparticipation at each stage and at each relevant level of organization | ||

| (c) Consider use of a flow diagram, a diagram of the organizational structure, or both | ||

| Descriptive Data on Exposures and Potential Confounders | 14c | (a) Give characteristics of study participants (eg, demographic, clinical, social) and information on exposures and potential confounders by group and level of organization, if applicable |

| (b) Indicate number of participants with missing data for each variable of interest and at all relevant levels of organization | ||

| (c) Summarize follow‐up time (eg, average and total amount), if appropriate to the study design | ||

| Outcome Data | 15c | (a) Report outcomes as appropriate for the study design and summarize at all relevant levels of organization |

| (b) For proportions and rates, report the numerator and denominator | ||

| (c) For continuous outcomes, report the number of observations and a measure of variability | ||

| Main Results | 16 | (a) Give unadjusted estimates and, if applicable, adjusted estimates and their precision (eg, 95% confidence interval). Make clear which confounders and interactions were adjusted. Report all relevant parameters that were part of the model |

| (b) Report category boundaries when continuous variables were categorized | ||

| (c) If relevant, consider translating estimates of relative risk into absolute risk for a meaningful time period | ||

| Other Analyses | 17 | Report other analyses done, such as sensitivity/robustness analysis and analysis of subgroups |

| Key Results | 18 | Summarize key results with reference to study objectives |

| Strengths and Limitations | 19 | Discuss strengths and limitations of the study, taking into account sources of potential bias or imprecision. Discuss both direction and magnitude of any potential bias |

| Interpretation | 20 | Give a cautious overall interpretation of results considering objectives, limitations, multiplicity of analyses, results from similar studies, and other relevant evidence |

| Generalizability | 21 | Discuss the generalizability (external validity) of the study results |

| Transparency | 22 |

(a) Funding—Give the source of funding and the role of the funders for the present study and, if applicable, for the original study on which the present article is based (b) Conflicts of interest—Describe any conflicts of interest, or lack thereof, for each author (c) Describe the authors’ roles—Provision of an author's declaration of transparency is recommended (d) Ethical approval—Include information on ethical approval for use of animal and human subjects (e) Quality standards—Describe any quality standards used in the conduct of the research |

Level of organization recognizes that observational studies in veterinary research often deal with repeated measures (within an animal or herd) or animals that are maintained in groups (such as pens and herds); thus, the observations are not statistically independent. This nonindependence has profound implications for the design, analysis, and results of these studies.

The word “participant” is used in the STROBE statement. However, for the veterinary version, it is understood that “participant” should be addressed for both the animal owner/manager and for the animals themselves.

Give such information separately for cases and controls in case‐control studies and, if applicable, for exposed and unexposed groups in cohort and cross‐sectional studies.

Title and Abstract

The purpose of the abstract and title is to quickly allow the reader to identify the topic of the research, the general design of the study, the main results, and the implications of the findings.

1(a) Indicate that the Study was an Observational Study and, If Applicable, Use a Common Study Design Term

Example 1

Title: “An observational study with long‐term follow‐up of canine cognitive dysfunction: Clinical characteristics, survival, and risk factors”.16

Example 2

Title: “Case‐control study of risk factors associated with Brucella melitensis on goat farms in Peninsular Malaysia”.17

Explanation

Including the study design term in the title or abstract when a standard study design is used, or at least identifying that a study is observational, allows the reader to easily identify the design and helps to ensure that articles are correctly indexed in electronic databases.18 In STROBE, item 1a only requests that a common study design term be used. However, in veterinary research, not all observational studies are easily categorized into cohort, case‐control, or cross‐sectional study designs. Therefore, we recommend including that the study was observational and, if possible, the study design or important design characteristics, for example, longitudinal, in the title.

1(b) Indicate Why the Study was Conducted, the Approach, the Results, the Limitations, and the Relevance of the Findings

Example

Methicillin‐resistant Staphylococcus pseudintermedius (MRSP) has emerged as a highly drug‐resistant small animal veterinary pathogen. Although often isolated from outpatients in veterinary clinics, there is concern that MRSP follows a veterinary‐hospital associated epidemiology. This study's objective was to identify risk factors for MRSP infections in dogs and cats in Germany. Clinical isolates of MRSP cases (n = 150) and methicillin‐susceptible S. pseudintermedius (MSSP) controls (n = 133) and their corresponding host signalment and medical data covering the six months prior to staphylococcal isolation were analysed by multivariable logistic regression. The identity of all MRSP isolates was confirmed through demonstration of S. intermedius‐group specific nuc and mecA. In the final model, cats (compared to dogs, OR: 18.5, 95% CI: 1.8–188.0, P = .01), animals that had been hospitalised (OR: 104.4, 95% CI: 21.3–511.6, P < .001), or visited veterinary clinics more frequently (>10 visits OR: 7.3, 95% CI: 1.0–52.6, P = .049) and those that had received topical ear medication (OR: 5.1, 95% CI: 1.8–14.9, P = .003) or glucocorticoids (OR: 22.5, 95% CI: 7.0–72.6, P < .001) were at higher risk of MRSP infection, whereas S. pseudintermedius isolates from ears were more likely to belong to the MSSP group (OR: 0.09, 95% CI: 0.03–0.34, P < .001). These results indicate an association of MRSP infection with veterinary clinic/hospital settings and possibly with chronic skin disease. There was an unexpected lack of association between MRSP and antimicrobial therapy; this requires further investigation…

Explanation

The abstract provides key information that enables readers to understand the key aspects of the study and decide whether to read the article. In STROBE, item 1b recommended that authors provide an informative and balanced summary of what experiments were done, what results were found, and the implications of the findings in the abstract. In STROBE‐Vet, this item was modified to provide more guidance on the key components that should be addressed. The study design should be stated; however, if the study does not correspond to a named study design such as case‐control, cross‐sectional, and cohort study, then the author should describe the key elements of the study design such as incident versus prevalent cases, and whether or not the selection was based on outcome status.20 The abstract should succinctly describe the study objectives, including the primary objective and primary outcome, the exposure(s) of interest, relevant population information such as species and the purpose (or uses) of the animals, the study location and dates, and the number of study units. In addition, including the organizational level at which the outcome was measured (eg, herd, pen, or individual) is recommended. The presented results should include summary outcome measures (eg, frequency or appropriate descriptor of central tendency such as mean or median) and, if relevant, a clear description of the association direction along with accompanying association measures (eg, odds ratio) and measures of precision (eg, 95% confidence interval) rather than P‐value alone. We discourage stating that an exposure is or is not significantly associated with an outcome without appropriate statistical measures. Finally, because many veterinary observational studies evaluate multiple potential risk factors, the abstract should provide the number of exposure‐outcome associations tested to alert the end user to potential type I error in the study. When multiple outcomes are observed, provide the reader with a rationale for the outcomes presented in the abstract, for example, only statistically significant results or the outcome of the primary hypothesis is presented.

Introduction

The aim of the introduction is to allow the reader to understand the study's context and the results’ potential to contribute to current knowledge.

2 Background/Rationale: Explain the Scientific Background and Rationale for the Investigation Being Reported

Example

The syndesmochorial placenta of cattle prevents the bovine fetus from receiving immunoglobulins in utero; therefore, calves are born essentially agammaglobulinemic []1. Calves acquire passive immunity by consuming colostrum in the first 24–36 h of life []. Inadequate colostrum consumption leads to failure of passive transfer (FPT), which has detrimental effects on calf health and survival. As many as 40% of dairy calves experience FPT []. However, beef and dairy calf management is considerably different, as beef calves generally remain with the cow post‐calving and nurse ad libitum, while dairy producers often separate calves from their dams and then provide the colostrum. Hence, the prevalence of and risk factors for FPT in beef calves may vary substantially from those in reports describing dairy calves….

Explanation

The scientific background provides important context for readers. It describes the focus, gives an overview of what is known on a topic and what gaps in current knowledge are addressed by the study. Background material should note recent pertinent studies and any reviews of pertinent studies. The background section should also include the anticipated impact of the work.

3(a) Objectives: State Specific Objectives, Including any Primary or Secondary Prespecified Hypotheses or their Absence

Example

The objective of this study was to investigate the effect of track way distance and cover on the probability for lameness in Danish dairy herds using grazing. We hypothesised that short track distances with added cover would be associated with the lowest lameness prevalence.

Explanation

Objectives are the detailed aims of the study. Well‐crafted objectives specify populations, exposures and outcomes, and parameters that will be estimated. They might be formulated as specific hypotheses or as questions that the study was designed to address. In some situations, objectives might be less specific, for example, in early discovery phases. Regardless, the report should clearly reflect the investigators’ original intentions.

3(b) Ensure that the Level of Organization is Clear for Each Objective and Hypothesis

Example

There were three objectives for this study: (1) to quantify the standing and lying behavior, with particular emphasis on post‐milking standing time, of dairy cows milked 3×/d, (2) to determine the cow‐ and herd‐level factors associated with lying behavior, and (3) to relate these findings to the risk of experiencing an elevation in somatic cell count (SCC).

Explanation

A full explanation is provided in Box 4: Organization structures in animal populations.

Methods

The aim of the methods section is to describe what experiments were planned and performed in sufficient detail for the reader to understand them; judge whether they were adequate with respect to providing reliable, valid answers to the objectives and hypotheses; and assess whether deviations from the original research plan were justified.

4 Study Design: Present Key Elements of Study Design Early in the Paper

Example

A cohort study was performed on two farrow‐to‐finish farms (A and B) in two farrowing rooms (cohorts) per farm. Sows were examined for the presence of A. pleuropnemoniae infection by collection of blood and tonsil brush samples approximately 3 weeks before parturition. The proportions of colonization at litter and individual piglet level were determined 3 days before weaning and associations with dam parity and sow serum and brush sample results were evaluated.

Explanation

We advise presenting key elements of study design early in the methods section (or at the end of the introduction) so that readers can understand the basics of the study. For example, if the authors used a cohort study design, which followed animals or animal groups over a particular time period, they should describe the group that comprised the cohort and their exposure status. Similarly, if the investigation used a case‐control design, the cases and controls and their source population(s) should be described.

If a study is a variant of the three main study types (cohort, case‐control, or cross‐sectional), there is an additional need for clarity. Authors can provide a clear description of the study design by including the following key elements: (1) the timing of study population enrollment with respect to the occurrence of the outcome such as after or prior to, (2) the role of exposure status on enrollment such as enrolled based on exposure or not, (3) the role of outcome status on enrollment such as enrolled based on outcome or not, (4) the timing of outcome and exposure determination such as outcome determined before, after, or concurrent to exposure determination, and (5) if the outcome is a disease, condition, or behavior, whether the outcome represents incidence or prevalence. If the study only estimates prevalence or incidence in a single group, then the authors need to clarify whether the outcome represents incidence or prevalence. This item is intended to give the reader a general idea of the study design. The design specifics are described in detail in subsequent items.

We recommend that authors refrain from calling a study “prospective” or “retrospective” because these terms are ill defined.25 One usage sees cohort and prospective as synonymous and reserves the word retrospective for case‐control studies. A second usage distinguishes prospective and retrospective cohort studies according to the timing of data collection relative to when the idea for the study was developed.26 A third usage distinguishes prospective and retrospective case‐control studies depending on whether the data about the exposure of interest existed when cases were selected.27

In STROBE‐Vet, we do not use the words prospective and retrospective, nor alternatives such as concurrent and historical. We recommend that, whenever authors use these words, they define what they mean. Most importantly, we recommend that authors describe exactly how and when data collection took place.

5(a) Setting: Describe the Setting, Locations, and Relevant Dates, Including Periods of Recruitment, Exposure, Follow‐Up, and Data Collection 5(b) If Applicable, Include Information at Each Level of Organization

Example

This study was conducted in Afar and Tigray regions in north‐eastern Ethiopia. Two administrative zones (Zone‐1 and Zone‐4) out of five zones of Afar region were included in the study, and then one district from each zone was selected (Asiyta and Yallo, respectively). Asayita district was selected to include an agro‐pastoral production system where irrigation farming is widely prevalent. … Yallo was selected for its location interfacing with the highland agro‐climate in Alamata and Raya Azebo districts where the livestock are moved for grazing and watering during dry season []. There were two distinct agro‐ecological climates prevailing in the Afar study area: lowland (<1,500 m) and highland (>2,300 m)…

A cross‐sectional study was carried out between October 2011 and February 2012 to assess epidemiological factors associated with observed [lumpy skin disease] in the previous 2 years (September 2009 to October 2011). Three to four Kebeles (the lowest administrative unit next to district in order of hierarchy in Ethiopia) were selected randomly from each district, and 20–30 herds were randomly selected from each Kebele. Herd‐owners were selected based on willingness to complete the questionnaire.

Explanation

Readers must understand the clinical, demographic, managerial, geographic, and temporal contexts in which the study was conducted, so readers will be able to determine the populations to which the study's inferences can be applied. Data from research herds or kennels might not extrapolate to commercial or home settings. Dates are required to understand the historical context of the research, because medical, sociological, and agricultural practices can change over time, which, in turn, can affect the prevalence of risk factors, potential confounders, diseases, and study methods. Knowing when a study took place and over what period participants were recruited and followed places the study in historical context and is important for the interpretation of results.

6 Participants 6(a) Describe the Eligibility Criteria for the Owners/Managers and for the Animals, at Each Relevant Level of Organization

Example

Counties were chosen based on the proportion of registered backyard flock owners and location of commercial industries and auction markets. In May 2011, the Maryland Department of Agriculture (MDA) confidentially mailed 1,000 informational letters and return postcards to poultry owners enrolled in the Maryland Poultry Registration Program. Participants were eligible for the study if they lived in Maryland, owned domesticated fowl, and maintained a flock size fewer than 1,000 birds.

Explanation

Eligibility criteria might be presented as inclusion and exclusion criteria, although this distinction is not always necessary or useful. Regardless, we advise authors to report all eligibility criteria and also to describe the group from which the study population was selected (eg, the general population of a region or country), and the method of recruitment (eg, referral or self‐selection through advertisements). Authors of studies involving animal populations should describe the eligibility criteria at all organizational levels (eg, farm, pen, stable, or clinic) for the animals included, and for smaller units within included animals, such as limbs or mammary quarters, if applicable (see Box 4: Organization structures in animal populations).

6(b) Describe the Sources and Methods of Selection for the Owners/Managers and for the Animals, at Each Relevant Level of Organization

Example

All MRSP isolates identified between October 2010 and October 2011 inclusive were considered. MSSP isolates were selected throughout the study period using simple randomization on www.randomizer.org.

Data and pedigree information were obtained from the Swedish Dairy Association (Stockholm, Sweden), and the Swedish organic certification organization (KRAV; Uppsala, Sweden) contributed information about dairy farms with organic plant production. … The initial data set contained records from 402 organic herds (all herds with available data) and 5,335 …. conventional herds (herds with an even last number in the herd identity).

Explanation

There are many ways eligible study units can be selected, and when multiple organizational levels are used, the selection approach might differ based on the level. For example, random selection might be used at one level and convenience sampling at another. Clear and transparent descriptions of the selection approach for eligible study units enable identification of the population to which the study results can be inferred and any potential selection biases. When nonprobability sampling (eg, convenience, haphazard, or snowball methods) is used, indicate this explicitly and provide a rationale for its use.

6(c) Describe the Method of Follow‐Up

Example 1

After surgery, the owners of the dogs were instructed to monitor for any signs of new mammary tumors and notify the principal investigator (PI) if any signs of recurrence or new tumors were noted. In addition, they were contacted by the PI (VK) every 6 months through phone to ensure this information. … Dogs with reported/suspected new tumors were requested to return for clinical examination and confirmation.

Example 2

Table 1 Possible outcomes of horses on cohort32

| Possible outcome | Action |

|---|---|

| No further colic during study | Censored |

| Colic resolves without medication | Horse returns to population at risk 48 h after colic episode |

| Colic requires medical attention—clinical records obtained | Horse returns to population at risk 48 h after colic episode |

| Colic requires surgery | Surgical diagnosis and end of contribution to time at risk |

| Death from other causes | Censored |

| Dropout of cohort | Censored/loss to follow‐up |

Explanation

The potential for loss to follow‐up differs between studies; therefore, follow‐up monitoring approaches might differ between studies. For example, companion animal populations that rely on client return visits are prone to loss to follow‐up, analogous to the human population studies discussed in STROBE. The authors of these studies often make several attempts to contact animal owners to determine their pet's outcome. In other animal populations, data might be collected from computerized systems, such as herd inventory at the start and end of the study, where relevant records (eg, the reasons for losses) might or might not be available. Reporting the approach used by the authors to minimize loss to follow‐up will allow users to assess the potential for bias related to this loss.

6(d) For Matched Studies, Describe Matching Criteria and Number of Matched Individuals per Subject (eg, Number of Controls per Case)

Example 1

Two to 4 control farms matched to each case farm on the basis of type of farm (dairy or beef) and location (inside or outside the TB core area) were included in the study.

Example 2

Each time a herd was recorded as a ‘case’, a randomly selected at‐risk herd was identified as a ‘control’. Each control herd was selected with probability proportional to their time at risk (incidence density sampling) during the study period…

Explanation

Matching is more common in case‐control studies, but occasionally, investigators use matching in cohort studies. Matching in cohort studies makes groups directly comparable for potential confounders (Box 5: Confounding) and presents fewer intricacies than with case‐control studies. For example, it is not necessary to take the matching into account for the estimation of the relative risk. Because matching in cohort studies might increase statistical precision, investigators might allow for the matching in their analyses and thus obtain narrower confidence intervals.

Box 1. Bias in Observational Studies.

Bias is a systematic deviation of a study's results from a true value. Typically, it is introduced during the design or implementation of a study and its effects cannot be eliminated later or correct analytically. Bias and confounding are not synonymous. Bias arises from flawed information or subject selection so that a wrong association is found. Confounding produces relations that are factually correct, but they cannot be interpreted causally because some underlying, unaccounted for factor is associated with both exposure and outcome (see Box 5: Confounding). Bias differs from random or chance error such as a deviation from a true value caused by random fluctuations in the measured data in either direction. Many potential sources of bias have been described and a various terms have been used.132 We find that it is helpful to separate them into two simple categories: information bias and selection bias.

Information bias occurs when systematic differences in data completeness or accuracy lead to animal misclassification with respect to exposures, outcomes, or measurement errors of values recorded on a continuous scale. Detection bias in cohort studies, interviewer bias, and recall bias are all forms of information bias. For example, in a case‐control study of risk factors for horse falls, poor dressage performers were less likely to report accurate dressage scores than good performers, thereby introducing information bias.133

Selection bias exists when the association between the exposure and outcome among study‐eligible participants is different from those participants included at any stage of the study, from entry to the study to inclusion in the analysis. Various types of selection bias include bias introduced when selecting the control group in a case‐control study, differential loss to follow‐up, incidence‐prevalence bias, volunteer bias, healthy worker bias, and nonresponse bias.134 Detection bias also acts as a form of selection bias in case‐control studies.135

In case‐control studies, matching is done to increase a study's efficiency by ensuring similarity in the distribution of variables between cases and controls, in particular the distribution of potential confounding variables.35, 36 Example 1 illustrates this type of matching description by matching on farm type and location. Because matching can be done in various ways, with one or more controls per case, the rationale for the choice of matching variables and the details of the method used should be described. Commonly used forms of matching are frequency matching (also called group matching) and individual matching. In frequency matching, investigators choose controls so that the distribution of matching variables becomes identical or similar to that of cases. Individual matching involves matching one or several controls to each case. Matching is not always appropriate in case‐control studies, but if used, it needs to be taken into account in the analysis (see Box 2: Matching in case‐control studies).

Although matching is generally considered to be based on potentially confounding population characteristics, in some case‐control studies, the term matching is also used to describe a means of controlling selection from the risk set based on the case occurrence timing such as in an incidence density sampling design. Example 2 provides a description of a time‐matched selection control approach.

7(a) Clearly Define all Outcomes, Exposures, Predictors, Potential Confounders, and Effect Modifiers. If Applicable, Give Diagnostic Criteria

Example 1

…the explanatory variable of interest was IBK status. Other explanatory variables included in each model as potential effect modifiers or confounders of the association between IBK and weight at ultrasonographic evaluation were birth weight, season, sex of calves after weaning (bull, heifer, or steer), ADG (weaning to yearling weight), preweaning management group, postweaning management group, year of calving, season of calving, the interaction between year and season, and age at ultrasonographic evaluation

Example 2

Refer to Section 6(c) for a good description of the outcome event(s) in a cohort study.

Example 3

Body condition was scored from 1 (emaciated) to 5 (obese) using standard methods described by DAFF []. Faecal consistency was scored as described by Alberta Dairy Management [] from 1, representing a liquid consistency, to 4, representing a dry sample. Hide cleanliness was scored following the guidelines of the Food Standards Agency [], where 1 = clean and dry, and 5 = filthy and wet.

Explanation

Authors should define all variables considered for and included in the analysis, including outcomes, exposures, predictors, potential confounders, and potential effect modifiers. Disease outcomes require adequately detailed description of the diagnostic criteria. This applies to criteria for cases in a case‐control study, disease events during follow‐up in a cohort study, and prevalent disease in a cross‐sectional study.

We advise that authors should declare all “candidate variables” considered for statistical analysis, rather than selectively reporting only those included in the final models (see also item 16a).39, 40 Authors should report whether exposures are consistent or change over the study period. For studies involving follow‐up, authors should describe how study subjects were uniquely identified, allowing research personnel to correctly record observations at follow‐up visits.

7(b) Describe the Level of Organization at Which Each Variable was Measured

Example

Fixed explanatory variables considered for inclusion in the PA‐MNT model were assessment day (d –4, +1, +3, +6, +8, and +10), eye‐level IBK‐associated corneal ulceration status (present or absent), calf‐level IBK‐associated corneal ulceration status (present or absent), and landmark (7 levels).

Explanation

Animal populations commonly have multiple organizational levels, so authors should clarify the organizational level at which each variable was measured. For more information, see Box 4: Organization structures in animal populations.

7(c) For Hypothesis‐Driven Studies, the Putative Causal Structure Among Variables Should be Described (A Diagram is Strongly Encouraged)

Example

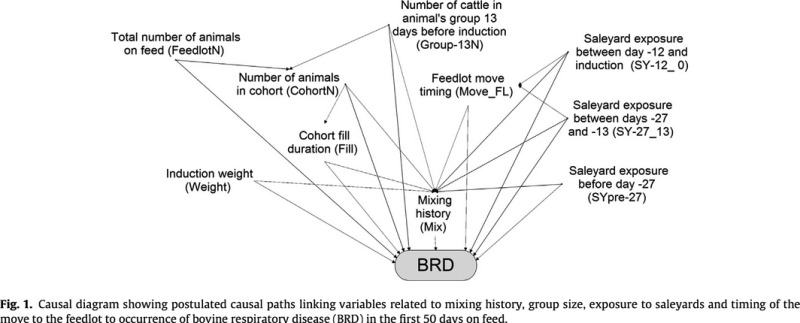

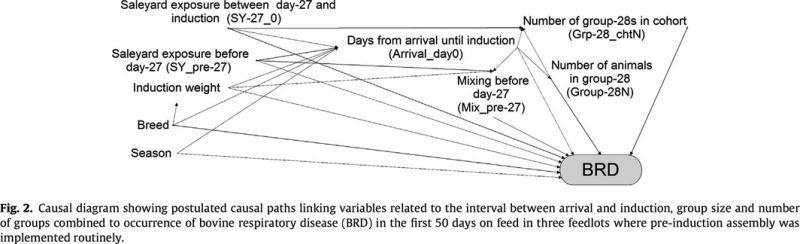

Causal diagrams were constructed to describe postulated links between measured exposure variables and between exposure variables and occurrence of BRD in the first 50 days at risk. As this resulted in a very complex diagram, a simplified version (only including variables relevant to the assessment of the risk factors included in the analyses reported in this paper) is shown in Fig. 1. Figure 2 shows the causal diagram used to inform the analyses restricted to the three feedlots that routinely used pre‐induction assembly. Additional variables included as potential confounders in either of these diagrams were cohort fill duration (all animals added to their cohort within a single day or over a longer period), total number of animals on feed in the animal's feedlot (average for the animal's induction month), number of animals in the animal's cohort, induction weight, breed and season in which the animal was inducted. …The DAGitty® software [] was used to identify minimal sufficient adjustment sets to assess total and direct effects of the exposure variable of interest on the occurrence of BRD.

Figures extracted from publication42

Explanation

For hypothesis‐driven studies, it is extremely useful to the end user if the a priori hypothesis and the variable relationships envisioned by the authors are clear and understandable. There are various means available for articulating causal assumptions,43 including directed acyclic graphs (DAGs).44 Including a causal assumption diagram is strongly recommended. Understanding the underlying causality being explored is important when identifying potential confounding variables and interpreting the results of multivariable analyses. If variables are controlled unnecessarily in a regression model, the power is reduced, and the association between the exposure of interest and the outcome might be biased.43, 45, 46

Box 2. Matching in Case‐Control Studies.

In any case‐control study, choices need to be made on whether to use matching of controls to cases, and if so, what variables to match on, the precise method of matching to use, and the appropriate method of statistical analysis. Although confounding can be adjusted for in the analysis, there could be a major loss in statistical efficiency. The use of matching in case‐control studies and its interpretation are fraught with difficulties, especially if matching is attempted on several risk factors, some of which might be linked to the exposure of prime interest.27, 136 For example, in a case‐control study of a Salmonella outbreak, investigators could match based on factors, such as sex, that are related to the consumption of various food products. However, this control group would no longer represent food consumption choices in the general population and has several implications. A crude analysis of the data will produce odds ratios that are usually biased toward unity if the matching factor is associated with the exposure. The solution is to perform a matched or stratified analysis (see item 12d). In addition, because the matched control group ceases to be representative for the population at large, the exposure distribution among the controls can no longer be used to estimate the population attributable fraction (see Box 6: Measures of Association and measures of impact).137 Also, the effect of the matching factor can no longer be studied. If matching is done on multiple factors, the search for well‐matched controls can be cumbersome and a nonmatched control group might be preferable.

Overmatching is another problem, which might reduce the efficiency of matched case‐control studies and, in some situations, introduce bias.

Information is lost and the power of the study is reduced if the matching variable is closely associated with the exposure. Then, many individuals in the same matched sets will tend to have identical or similar levels of exposures and therefore not contribute relevant information.

The complexities involved with matching have caused some methodologists to advise against routine matching in case‐control studies. Instead, they recommend judicious consideration of each potential matching factor, recognizing that it could potentially be measured and used as an adjustment variable. As a result, studies are reducing the number of matching factors employed, and increasing the use of frequency matching, which avoids some of the problems discussed above. In addition, case‐control studies are increasingly abandoning potential confounder matching.138 Currently, matching remains advisable, or even necessary, when confounder distributions differ radically between the unmatched comparison groups (eg, age).35, 36

8(a) For Each Variable of Interest, Give Sources of Data and Details of Methods of Assessment (Measurement). If Applicable, Describe Comparability of Assessment Methods Among Groups and Over Time

Example

Each tumour was examined independently by two specialist veterinary pathologists and, to be included, had to have a minimum of 7 (out of a possible 10) features identified as part of the histopathology study. The 10 features included the presence of: aggregates of lymphocytes, infiltrative margins, intralesional necrosis, perilesional scarring,/inflammation, adjuvant‐like material in macrophages, medium‐high mitotic rate, giant cells and types of cellular differentiation []. To be included in the estimate of incidence the FISS (‘Feline Injection Site Sarcomas’ added by authors) had to be diagnosed at the practices for which denominator information was available.

Explanation

The way in which exposures, confounders, and outcomes were measured affects the reliability and validity of a study. Measurement error and misclassification of exposures or outcomes can make it more difficult to detect cause‐effect relationships, or might produce spurious relationships. Error in measurement of potential confounders can increase the risk of residual confounding.48, 49 It is helpful, therefore, if authors report the findings of any studies of the validity or reliability of assessments or measurements, including details of the reference standard that was used. Rather than simply citing validation studies, we advise that authors give the estimated validity or reliability, which can then be used for measurement error adjustment or sensitivity analyses (see items 12 and 17).

In addition, it is important to know if groups being compared differed with respect to the way in which the data were collected. For instance, if an interviewer first questions all the cases and then the controls, or vice versa, bias is possible because of the learning curve; solutions such as randomizing the order of interviewing might avoid this problem. Information bias might also arise if the compared groups are not given the same diagnostic

8(b) If a Questionnaire was Used to Collect Data, Describe Its Development, Validation, and Administration

Example

Questionnaire designs were the collective effort of five veterinarians (including four epidemiologists) and a biostatistician. Included in the design group was the Veterinary Officer for Poultry Diseases, who had an in‐depth knowledge of each farm as a result of working with the producers to eradicate Salmonella from poultry. There were several questionnaires, the main one designed to record independent variables acting at the various levels of broiler production such as at the flock, house and farm levels. During the interval between flocks in each broiler house, a field technician employed by the Veterinary Officer for Poultry Diseases visited each farm to record responses from face‐to‐face interviews with the person most closely associated with the hands‐on management of the broiler flocks and houses, and to record observations of cleaning and disinfection procedures between flocks. The design team reviewed all questions and the method of recording with the field technician to ensure clear understanding. The Veterinary Officer for Poultry Diseases accompanied the field technician on all farm visits and questionnaire recording for the first full month of sampling. During the course of the study, two university‐educated field technicians were employed. The first technician was employed for 2 years, and trained the second technician for 1 month prior to leaving the project. Interview times varied from 10 to 15 min per questionnaire, depending on whether the producer needed to verify records. To ensure consistency in responses, data collected at the previous visit were reviewed with the producer. All questions pertaining to our analysis were closed.

Explanation

For STROBE‐VET, we needed to draw attention to the descriptions of questionnaire development and administration, because questionnaires are a common data source for veterinary observational studies. Occasionally, authors provide information documenting their questionnaire validation methods, sometimes as a separate publication.51, 52 If previous validation information is not available, then the authors should describe their approach for developing and testing the questionnaire in the manuscript. Like any diagnostic test, unless validated, the diagnostic characteristics of the questionnaire and its ability to accurately measure the variables are unclear. The questionnaire(s) should also be included as supplementary data, or in an open access, permanent site preferably with a digital object identifier (DOI).

8(c) Describe Whether or Not Individuals Involved in Data Collection were Blinded, When Applicable

Example

This was an observational study of 292 uniquely identified Bovelder cows born in either 2002 or 2003 (2002 and 2003 cohorts) that were followed from just prior to their first breeding season until they had weaned up to five calves. … Farm management and staff were blinded to RTS (reproductive tract scoring) data throughout the study.

Explanation

Although blinding is commonly associated with randomized controlled trials, in observational studies, there is potential for information bias in measurement of exposure arising from knowledge of the outcome of interest (case‐control studies) or information bias in measurement of the outcome arising for knowledge of the exposure of interest (cohort studies).3, 4 For example, if researchers conduct a case‐control study determining factors associated with a tick‐borne disease such as Lyme disease (the outcome of interest) and an owner is interviewed about indoor or outdoor exercise (the exposure of interest), the owners of case animals might recall outdoor exercise more easily, because they are familiar with the disease and its causes. This prior knowledge is a potential source of bias. Thus, information about blinding is critical for the reader to assess the impact of bias on the study result. Similar to clinical trials, the use of the terms single and double blinding should be avoided. Rather, the author should specify the task, caregiver, or outcome assessor who is blinded.54

8(d) Describe Any Efforts to Assess the Accuracy of the Data (Including Methods Used for “Data Cleaning” in Primary Research, or Methods Used for Validating Secondary Data)

Example

Selections of dogs from the entire hospital records were made using Oracle programming languages … []. First, an in‐house hospital code for laboratory‐confirmed diagnosis of urolithiasis was used to isolate all the eligible dogs within the boundaries of the study population. … Afterwards, urolith laboratory results or medical notes of the identified dogs were manually reviewed to isolate those whose urolith composed of at least 70% monohydrate or dehydrate forms of CaOx (case dogs). Urolith composition was determined at one of two commercial laboratories … by means of optical crystallography or infrared spectroscopy as described elsewhere [].

Explanation

Reporting the measurement approach is frequently insufficient to ensure validity; therefore, when efforts are made to ensure the data are valid (eg, the case validity in the example above), these methods should be documented. This documentation enables the end user to identify potential information bias. In the example above, there could have been concern that the electronic medical records were inaccurate; therefore, the authors validated the electronic medical records by examining the physical medical records, giving the end user greater confidence in the variable measured. In addition, when data are used for multiple different studies, the data could have been collected for a different purpose initially than that described in the later study. In this case, the original purpose should be described. A description of data validation approaches has recently been published.56

9 Bias: Describe Any Efforts to Address Potential Sources of Bias Due to Confounding, Selection, or Information Bias

Example

The responses were collected through face‐to‐face interviews conducted by four experienced interviewers (two teams each comprising two interviewers) between October 2011 and March 2012. As there are different dialects in the Philippines, the questionnaire was written in English and translated to the appropriate dialect at the interview. To reduce information bias the questionnaire was pretested on experts in the Philippines pig production systems comprising regional and provincial veterinary officers and animal health advisors. All questions in the questionnaire were clarified with all interviewers before the study date. The interviewers were instructed to ask questions exactly as stated in the questionnaire and provide only non‐directive guidance. To minimize inter‐observer variability in conducting the interview, all observers and PVO [Provincial Veterinary Office] personnel met after the questionnaire was piloted on the six farms to agree on a common interpretation of the findings. If there was disagreement, the interpretation of the PVO was chosen. To minimize information (misclassification) and selection biases, the interviewers were asked to verify the trader's identity, dates when the pigs were sold and number of pigs sold for slaughter before an interview was conducted. … The validity of the collected questionnaire data was confirmed during follow‐up visits to six farms (three in each province) by the first author, the interviewers and Provincial Veterinary Officers personnel. To reduce misclassification bias that could arise from coding errors, the interviewers and the first author checked and corrected impossible coding of categorical variables (n = 80) and unreliable outlier values for continuous variables (n = 3).

Explanation

Bias causes study results to differ systematically from the truth. It is important for a reader to know what measures were taken during the conduct of a study to reduce the potential of bias. Ideally, investigators carefully consider potential sources of bias when they plan their study. At the stage of reporting, we recommend that authors always assess the likelihood of relevant biases. Specifically, the direction and magnitude of bias should be discussed and, if possible, estimated. When investigators have set up quality control programs for data collection to counter a possible “drift” in measurements of variables in longitudinal studies, or to keep variability at a minimum when multiple observers are used, these should be described. In veterinary medicine, euthanasia or animal culling is a unique potential form of attrition bias, and authors should describe any methods used to account for this bias. Recently, an overview of approaches for addressing bias, including quantitative bias analysis and the use of bias parameters in data analysis, with accompanying veterinary examples was published.58

A discussion about selection bias, information bias, and confounding as well as their impact on observational studies is provided in Box 1: Bias in observational studies and Box 5: Confounding.

10(a) Study Size: Describe How the Study Size was Arrived at for Each Relevant Level of Organization

Example

A sample size of 36 cases and 108 controls was calculated to provide a 95% level of confidence for detecting an odds ratio of 3 with 80% statistical power, assuming a 1:3 ratio of case to control farmers and a random notification process such as a 50% probability of reporting observed oyster mortality. Sample size was increased by 15% to account for non‐participation rate observed in previous and recent studies conducted in the same population [], leading to a total of 41 cases and 124 controls, out of 165 and 703 eligible oyster farmers, respectively.

Explanation

A study should be large enough to obtain a point estimate with a sufficiently narrow confidence interval to meaningfully answer a research question. Large samples are needed to distinguish a small association from no association. Small studies often provide valuable information, but wide confidence intervals might indicate that they contribute less to current knowledge in comparison with studies providing estimates with narrower confidence intervals. Also, small studies that show “interesting” or “statistically significant” associations are published more frequently than small studies that do not have “significant” findings. Although these studies might provide an early signal in the context of discovery, readers should be informed of their potential weaknesses.

Box 3. Grouping/Categorization.

There are several reasons why continuous data might be grouped. 139 When collecting data, it might be better to use an ordinal variable than to seek an artificially precise continuous measure for an exposure based on recall over several years. Categories might also be helpful for presentation, for example, to present all variables in a similar style, or to show a dose‐response relationship.

Grouping might also be done to simplify the analysis, for example, to avoid an assumption of linearity or when investigating interactions between two continuous variables. However, grouping loses information and might reduce statistical power140 especially when dichotomization is used.69, 141, 142 If a continuous confounder is grouped, residual confounding might occur, whereby some of the variable's confounding effect remains unadjusted (see Box 5: Confounding).48, 143 Increasing the number of categories can diminish power loss and residual confounding, and is especially appropriate in large studies. Small studies might use few groups because of limited numbers.

Investigators might choose cut points for groupings based on commonly used values that are relevant for diagnosis or prognosis, for practicality, or on statistical grounds. They might choose equal numbers of individuals in each group using quantiles.144 On the other hand, one might gain more insight into the association with the outcome by choosing more extreme outer groups and having the middle group(s) larger than the outer groups.145 In case‐control studies, deriving a distribution from the control group is preferred because it is intended to reflect the source population. Readers should be informed if cut points were selected post hoc. In particular, if the cut points were chosen to minimize a P‐value, the true strength of an association will be exaggerated.68

When analyzing grouped variables, it is important to recognize their underlying continuous nature. For instance, a possible trend in risk across ordered groups can be investigated. A common approach is to model the rank of the groups as a continuous variable. Such linearity across group scores will approximate an actual linear relation if groups are equally spaced but not otherwise. Il'ysova et al146 recommend publication of both the categorical and the continuous estimates of effect, with their standard errors, to facilitate meta‐analysis, as well as providing intrinsically valuable information on dose‐response. One analysis might inform the other and neither is assumption‐free. Authors often ignore the ordering and consider the estimates (and P‐values) separately for each category compared to the reference category. This might be useful for description, but might fail to detect a real trend in risk across groups. Recent method developments, such as fractional polynomials that fit a wide range of nonlinear relationships,147 and the availability of software to implement these methods in standard software packages reduce the need to routinely categorize variables.

The importance of sample‐size determination in observational studies depends on the context. If an analysis is performed on data that were already available for other purposes, the main question is whether the analysis of the data will produce results with sufficient statistical precision to contribute substantially to the literature. Formal a priori calculation of sample size might be useful when planning a new study.60, 61 Such calculations are associated with more uncertainty than implied by the single number that is generally produced. For example, estimates of the rate of the event of interest or other assumptions central to calculations are commonly imprecise, if not guesswork.62 The precision obtained in the final analysis can often not be determined beforehand because it will be reduced by inclusion of confounding variables in multivariable analyses,63 the degree of precision with which key variables can be measured, and the exclusion or nonselection of some individuals.64

Sample‐size determination can be complicated further by studies with multiple objectives. Studies frequently have multiple objectives, largely to maximize the amount of data that can be collected from a research study. For instance, a cross‐sectional study might estimate an outcome frequency and evaluate the association between one or more exposures on that outcome. It should be clear to the reader which objective was used for sample‐size determination or, if both objectives were considered, how the final sample size was derived.

In animal health, observational studies might not be hypothesis‐driven. These studies are not conducted to detect a specific effect size magnitude for an a priori identified exposure of interest. Instead, a large number of association measures are calculated with varying levels of precision. This type of study is hypothesis generating. This factor should be discussed specifically, and the rationale for the sample size should be provided. Often, studies do not use formal sample‐size calculations. For example, when a small number of cases are available for a case‐control study, the investigators might choose to include all eligible cases. In this case, the reader still needs to understand how the sample size was derived such as selection of all available cases to evaluate the potential for selection bias or identify an underpowered study.

10(b) Describe How Nonindependence of Measurements was Incorporated into Sample‐Size Considerations, If Applicable

Example 1

The expected prevalence of MRSA was estimated to be considerably lower at 1–2% [], with a much lower between cluster T variance estimated at 0.0001, meaning a total of 800 nasal swab samples would be required to estimate prevalence with a precision of 1% and 95% confidence. To allow for an overall compliance proportion of approximately 60%, each practice was asked to recruit the next 20 horses seen on visits (a total of 1,300 horses).

Example 2

Researchers adjusted this sample size for clustering of stillbirth risk in a herd by using the formula n* = n[1 + (m − 1)p], where m is the average herd size, p is the intra‐ class correlation coefficient (ICC), and n is the unadjusted sample size necessary to determine the difference between 2 proportions. Expected herd size was approximately 150 cows and the ICC was estimated to be 0.09.66

Explanation

Given the frequency of nonindependent study units in animal populations (see Box 4: Organization structures in animal populations), authors should adjust sample‐size calculations to account for nonindependence. Failure to account for nonindependence in sample‐size determinations might result in studies that are underpowered when analyzed correctly using methods that account for clustering. The ethics of conducting underpowered studies are less obvious for observational studies, because study units are observed rather than purposefully assigned to a group. However, resources are potentially wasted when studies are underpowered; therefore, adjustment for nonindependence in sample‐size determinations should be conducted for prospectively planned observational studies.

10(c) If a Formal Sample‐Size Calculation was Used, Describe the Parameters, Assumptions, and Methods that were Used, Including a Justification for the Effect Size Selected

Example

…prior to conducting the analysis, sample size calculations were performed to determine whether it was likely to obtain a data set of sufficient size to detect a difference of 7.5 kg (16.5 lb) in the primary outcome, live weight, in a population with 33% of calves in the IBK group and 67% in the unaffected group, with a type I error probability of 0.05, a type II error probability of 0.8, and a 1:2 ratio for case and control calves. The rationale for use of these parameters was that results of a prior study suggested that calves with IBK weighed approximately 7.5 kg less at weaning than unaffected calves, and the prevalence of IBK was approximately 33% in the study herd.

Explanation

Samples sizes should be calculated based on realistic estimates. Although statistical power can be determined using the effect estimate precision and low power affects precision not bias, providing the rationale and assumptions used in the calculations allows the reader to infer the impact of those assumptions on the sample size. For example, what constitutes a meaningful difference might vary between different regions, and the assumed level of nonindependence can vary between populations.

11 Quantitative Variables: Explain How Quantitative Variables were Handled in the Analyses. If Applicable, Describe Which Groupings were Chosen, and Why

Examples

Age was grouped on a biological basis into less than 2.5 years, between 2.5 and 4.5 and >4.5 years. This categorisation was decided upon as 2.5 and 4.5 years approximately coincide with ages at first and second parturition in llamas.

Explanation

Investigators make choices regarding how to collect and analyze quantitative data about exposures, effect modifiers, and confounders. Grouping choices might have important consequences for later analyses.68, 69 We advise that authors explain why and how they grouped quantitative data, including the number of categories, the cut points, and category mean or median values (as appropriate). Whenever data are reported in tabular form, the counts of cases, noncases or controls, animals at risk, animal time at risk, etc. should be given for each category. Tables should not consist solely of effect‐measure estimates or results of model fitting. Authors should state whether categories were selected a priori or based on the collected data.

Investigators might model an exposure as continuous to retain all the information. In making this choice, one needs to consider the nature of the relationship of the exposure to the outcome. Investigators should report how departures from linearity were investigated, for example, using log transformation, quadratic terms, or spline functions. Several methods exist for fitting a nonlinear relation between the exposure and outcome.69, 70, 71 Also, it might be informative to present both continuous and grouped analyses for a quantitative exposure of prime interest.

Box 4. Organization Structures in Animal Populations.

Many animal populations occur in organizational structures, which results in individual animals (or groups of animals) not being independent from one another.4, 148 These organizational structures might be hierarchical, such as those related to housing (animals within barns, barns within farms, farms within production systems, production systems within regions) or genetics (piglets within sows, calves within dams, daughters within sires). Animal populations can also be nonindependent but not hierarchical. For example, beef calves from several cow‐calf farms might be transported to multiple feedlots, where calves from multiple farms commingle in pens. Calves from the same farm or housed in the same pen or feedlot probably have more exposures in common than calves at a different farm or in a different pen or feedlot. Such organizational structures imply nonindependence, which will influence the actual number of observational units in the study and power in the statistical analyses. Therefore, the nonindependence must be accounted for in the study design or adjusted for in the data analysis.149

Further, the study's end users might be interested in different hierarchy levels. Thus, it is essential that the authors clearly state what level is being studied. For example, for a particular disease, producers and veterinarians might focus on the disease prevalence within herds and factors associated with individual risk of developing disease.150 However, company officials might be interested in the prevalence of positive herds within a production system and factors associated with a herd being positive or with high or low prevalence.150 Government officials might concentrate on differences in the prevalence of positive herds across regions of a country or among countries. It is also possible to report the outcomes of interest at different organizational structure levels in a single study.151, 152, 153 Given this complexity, authors must ensure that readers are aware of the organizational level(s) that exist within the study population and the level at which variables are measured and summarized. This information allows the reader to (1) decide whether the paper is of interest and (2) assess experimental approaches for biases, which might differ based on the hierarchy level summarized. A diagram showing the organizational structure might be helpful to convey this information.

The organizational structure is relevant to numerous parts of a publication. In particular, we advise providing information about the study population's organizational structure in items 3, 6, 7, 12, 13, 14, and 15. Here, we provide two study examples along with a description of how to report organization structures in items 3, 6, 7, and 12.

Example 1. A Hypothetical Multiclinic Study of Demographic Factors Affecting Survival of dogs with Osteosarcoma

Item 3 would describe the study objective: to understand demographic factors that impact a dog's survival time. For this theoretical example, the hypothesis is that dog age is associated with reduced survival time in individual dogs.

Item 5 would describe the clinics and clinicians participating in the study and indicate that they are a likely source of nonindependence.

Item 6 would describe the eligibility criteria for selecting clinics, clinicians, and clients and dogs for the study.

Item 7 would define the outcome and other variables, as well as the organizational level for each variable. For this hypothetical example, the measurement level for the outcome was at the individual level such as a dog's survival time. The exposure factors of interest were also at the individual level such as the dog age, dog weight, and dog breed.

Item 8 would describe how each of the variables listed in item 7 was measured and state that all of these measurements were performed at the individual level (the dog level).

Item 12 would describe how the analysis approach accounted for the impact of the organization structure such as dog nonindependence, nested within clinics and clinicians.

Example 2. A Hypothetical Multifarm Study of Factors Affecting the Prevalence of Salmonella in Swine Barns

Item 3 would describe the study objective: to understand barn‐, site‐, and company‐level characteristics associated with the prevalence of Salmonella in swine barns. In the example study, the hypothesis was that the prevalence of Salmonella is higher in barns where birds are observed.

Item 5 would state that the pigs are nested within barns, the barns are nested within sites, and the sites within companies. Other possible sources of nonindependence (eg, if farms are nested geographically) should also be stated.

Item 6 would describe the criteria for selecting the companies, the sites within each company, the barns within each site, and the pigs within each barn. It would include at what organizational levels, convenience sampling was used. For example, in our hypothetical study, researchers used a relationship with a production company to gain access to a production site. They also used convenience to decide which production sites to study and selected all barns on each site to be surveyed. Then, they randomly selected 30 pigs within each barn to obtain barn‐level estimates of Salmonella prevalence.

Item 7 would define the outcome and other variables, ensuring the organizational level is stated clearly. For this study, the outcome of interest was the prevalence of Salmonella in each barn. The exposure variables of interest were feed type (a site‐level variable), and potential confounders included the feed mill used (a site‐level variable) and the presence of birds in barns (a barn‐level variable).

Item 8 would describe how each of the variables defined in item 7 was measured. In this study, it would be important to clarify that Salmonella status was measured in pigs (an individual‐level variable) and the prevalence was summarized as a proportion, so it could be expressed as a barn‐level outcome. The laboratory approach for determining Salmonella status should be described here. This item should state that data regarding the feed mills used at each site were obtained from company records and the presence of birds was determined using a questionnaire administered to the site manager. The validity of that questionnaire should be described as a component of this item.

Item 12 would describe how the analysis approach accounted for the organizational structure such as nonindependence of barns within farms, farms within regions, farms within the production system. Because the outcome was measured at the barn level (as clarified in item 7), authors would need to account for the clustering of pigs within barns.

12 Statistical Methods 12(a) Describe All Statistical Methods for Each Objective, at a Level of Detail Sufficient for a Knowledgeable Reader to Replicate the Methods. Include a Description of the Approaches to Variable Selection, Control of Confounding, and Methods used to Control for Nonindependence of Observations

Example 1

Collinearity between the variables was investigated by χ2 analysis. The risk factors initially offered to the model were excluded from the model with a conditional backward elimination procedure; the possible interaction terms were then investigated with a forward conditional selection procedure. A factor was entered in the model at P ≤ .05 and removed at P ≥ .10. The likelihood ratio test was used to assess the overall significance of the model (two‐tailed significance level P ≤ .05). Confounding was monitored by evaluating the change in the coefficient of a factor after removing another factor; if the change exceeds 25% of the coefficient value, the removed factor is considered a potential confounder. The significance of each term in the model was tested by Wald's χ2. In the final model, biologically plausible interaction between factors was investigated by significance. Estimated OR and 95% Wald's confidence interval (CI) were obtained as measures of predictor effect.

Example 2

To account for the hierarchical structure of the data, a cross‐classification of feedlot‐years (11 feedlots in 2000, 13 in 2001–2002…) was included as a random intercept to model the overdispersion arising from the lack of independence of cohorts nested within feedlots, and of feedlots nested within arrival years. In addition, arrival month … was modeled as a random intercept using a first‐order autoregressive covariance structure to account for the repeated measures of cohorts, within feedlot‐years, over months with decay in correlation with increasing distance between observations [] Lastly, arrival week … within a month was modeled as a random intercept to control for the correlation of weeks within arrival months.

Explanation

Describing statistical methods can be challenging, because the level of detail sufficient for a knowledgeable reader to replicate the methods is open to interpretation. (http://cdn.elsevier.com/promis_misc/AMEPRE_gfa_mar2015.pdf). The author should focus on clearly describing the approach rather than listing statistical tests. Inclusion of a diagram or flowchart to explain a complex analytical process might be helpful. One applicable resource for reporting statistical methods are the SAMPL guidelines.74 Based on the SAMPL guidelines, the description of the analysis approach can be split into three components: (1) the preliminary analysis, (2) the primary analysis, and (3) any supplementary analysis. Authors are encouraged to make the data and their software coding available as supplementary material or in data depositories.

In general, there is no one correct statistical analysis but, rather, several possibilities that might address the same question, but make different assumptions. Regardless, investigators should predetermine analyses at least for the primary study objectives in a study protocol. Often additional analyses are needed, either instead of, or as well as those originally envisaged, and these might sometimes be motivated by the data. Authors should tell readers whether particular analyses were suggested by data inspection. Even though the distinction between prespecified and exploratory analyses might sometimes be blurred, authors should clarify reasons for particular analyses.

Box 5. Confounding.

Confounding literally means the confusion of effects. A study might seem to show either an association or no association between an exposure and the risk of a disease. In reality, the seeming association or lack of association is due to another factor that determines the occurrence of the disease but that is also associated with the exposure. The other factor is called the confounding factor or confounder. Confounding thus gives a wrong assessment of the potential “causal” association of an exposure. For example, an apparent positive association between dogs attending obedience classes and dog bites could occur if specific, large‐breed dogs that are prone to biting were more likely to attend the observed obedience classes. In this instance, breed would confound the relationship between obedience class attendance and biting.

Investigators should think beforehand about potential confounding factors, a process that could be enhanced by constructing a causal diagram (see item 7c). An a priori consideration of potential confounding variables will inform the study design and allow proper data collection by identifying the confounders for which detailed information should be sought. Restriction, matching, or analytical adjustment might also control confounding. In the example above, the study might be restricted to specific breeds. Matching on breed might also be possible, although not necessarily desirable (see Box 2: Matching in case‐control studies). There are a number of analytic approaches for identifying confounding variables, which can be broadly grouped into knowledge‐based and statistical.154, 155