Abstract

There is accumulating correlational evidence that the effect of specific types of reading instruction depends on children’s initial language and literacy skills, a pattern called child characteristics × instruction (C×I) interactions. There is, however, no experimental evidence beyond first grade. This randomized controlled study examined whether C×I interactions might present an underlying and predictable mechanism for explaining individual differences in how students respond to third-grade classroom literacy instruction. To this end, we designed and tested an instructional intervention (Individualizing Student Instruction [ISI]). Teachers (n = 33) and their students (n = 448) were randomly assigned to the ISI intervention or to a vocabulary intervention, which was not individualized. Teachers in both conditions received professional development. Videotaped classroom observations conducted in the fall, winter, and spring documented the instruction that each student in the classroom received. Teachers in the ISI group were more likely than teachers in the vocabulary group to provide differentiated literacy instruction that considered C×I interactions. Students in the ISI intervention made greater gains on a standardized assessment of reading comprehension than did students in the vocabulary intervention. Results indicate that C×I interactions likely contribute to students’ varying responses to literacy instruction with regard to their reading comprehension achievement and that the association between students’ profiles of language and literacy skills and recommended instruction is nonlinear and dependent on a number of factors. Hence, dynamic and complex theories about the impact of classroom instruction and environment on student learning appear to be warranted and should inform more effective literacy instruction in third grade.

Students’ ability to read and understand text is a key skill required for their academic and life success in our global and information-driven society. Yet, an alarming percentage of students, more than 70%, reach fourth grade unable to read and comprehend text at or above proficient levels, and this rate is higher for students who attend higher poverty schools (Lee, Grigg, & Donahue, 2007). Reading comprehension has been defined as the active extraction and construction of meaning from all kinds of text (Snow, 2002). One of the more important sources of influence on students’ literacy development is the classroom instruction they receive (Morrison, Bachman, & Connor, 2005), and thus, finding ways to improve teachers’ effectiveness with regard to reading comprehension instruction may prove a powerful tool in ensuring student achievement overall, especially for students living in poverty.

Whereas the field has been generally successful in identifying mechanisms for improving students’ basic word reading skills, the anticipated growth in comprehension skills has not been realized (Gamse, Jacob, Horst, Boulay, & Unlu, 2008). One reason may be that, in general, teachers provide insufficient amounts of the types of instruction that are associated with stronger growth in reading comprehension (Block, Parris, Reed, Whiteley, & Cleveland, 2009; Connor, Morrison, & Petrella, 2004; Improving America’s Schools Act, 1994; National Institute of Child Health and Human Development [NICHD], 2000; Snow, 2002). Moreover, teachers’ task is complicated by accumulating evidence that the effect of particular types of instruction on reading gains may depend on students’ reading and oral language skills; that is, there are child characteristics by instruction (C×I) interactions, also called aptitude by treatment interactions (Connor et al., 2004; Connor, Jakobsons, & Granger, 2006; Connor, Piasta, et al., 2009; Cronbach & Snow, 1969; Juel & Minden-Cupp, 2000). Thus, specific instructional activities that are effective for students with typical reading and language skills may be ineffective for students with weaker or above-average skills, and vice versa.

Although there is a general consensus in the educational community that differentiated reading instruction is a good thing (e.g., Tomlinson, 2001), there is surprisingly little empirical evidence or examination of the underlying mechanisms that might warrant such claims, particularly for reading comprehension. There is accumulating evidence that the impact of explicit instruction in the alphabetic principle, phonological awareness, and phonics depends on students’ vocabulary and reading skills. This evidence is both correlational (Foorman, Francis, Fletcher, Schatschneider, & Mehta, 1998; Juel & Minden-Cupp, 2000; Morrison et al., 2005) and experimental (Connor et al., in press; Connor, Morrison, Fishman, Schatschneider, & Underwood, 2007). To date, however, there is virtually no experimental evidence of C×I interactions for the arguably more complex construct of third graders’ reading comprehension, and only limited correlational evidence (Connor et al., 2004).

The purpose of this study was to explicitly consider whether C×I interactions represent an underlying mechanism that helps explain individual differences among third graders in their response to reading instruction as their reading skills move beyond basic decoding and increasingly toward reading for understanding. We did this by conducting a field experiment in which teachers and their students were randomly assigned to one of two interventions: one incorporating differentiated reading instruction, called Individualizing Student Instruction (ISI), and the other an undifferentiated vocabulary intervention.

A DEVELOPMENTAL MODEL OF READING COMPREHENSION

As Perfetti, Landi, and Oakhill (2005) aptly noted, there are numerous reasons why many students have difficulty achieving proficient reading comprehension, which requires students to decode fluently and then understand what they are reading (Rapp, van den Broek, McMaster, Kendeou, & Espin, 2007; Scarborough, 2001). Proficient reading comprehension is defined as the ability “to demonstrate an overall understanding of the text…to extend the ideas in the text by making inferences, drawing conclusions, and making connections to their own experiences” (National Assessment Governing Board, 2006, p. 24). Basic processes underlying reading comprehension are complex and call on the oral language system and a conscious understanding of this system (i.e., metalinguistic awareness) at all levels, from semantic and morphosyntactic to pragmatic awareness (Morrison et al., 2005). Higher order metacognitive skills also appear to contribute to comprehension (Rapp et al., 2007; Willson & Rupley, 1997).

Thus, there is accumulating research on the underlying knowledge, skills, and strategies related to comprehension (NICHD, 2000; Rayner, Foorman, Perfetti, Pesetsky, & Seidenberg, 2001; Willson & Rupley, 1997). These include semantic knowledge and vocabulary (Biemiller & Boote, 2006), comprehension strategy use (NICHD, 2000; van den Broek, Risden, Fletcher, & Thurlow, 1996; Willson & Rupley, 1997), awareness of text structure (Williams, Stafford, Lauer, Hall, & Pollini, 2009), background knowledge (Rapp et al., 2007; Willson & Rupley, 1997), and self-regulation, including attention (McClelland et al., 2007).

Building on the work of Perfetti and colleagues (2005), Scarborough (1990), Catts and Kamhi (2004), and other researchers (e.g., Locke, 1993), as well as our own work (Connor, Piasta, et al., 2009), the current study relied on a developmental model of reading comprehension. The first assumption in this model is that the ability to read proficiently for understanding is built on students’ developing social, cognitive, and linguistic systems. As these systems mature and increase in sophistication, so too does students’ ability to co-opt these systems in the service of reading. In addition to decoding and letter/word reading skills, we consider two kinds of comprehension processes: largely automatic and unconscious higher order processes identified in the cognitive psychology literature (Perfetti, 2008; Rapp et al., 2007), and reflective or interrogative comprehension processes, which include conscious efforts to understand text and are largely identified in the education literature (NICHD, 2000; Pressley & Wharton-McDonald, 1997).

In this model, reading comprehension requires fluent decoding and word-level skills and fluent, automatic higher order processes, as well as the ability to use these automatic skills actively and consciously when the reading task demands it (i.e., reflective comprehension processes). The developmental model identifies key skills that students bring to the task of learning that may moderate the impact of the reading instruction they receive on their comprehension gains (i.e., C×I interactions). These include students’ basic word reading and decoding skills; their oral language skills, specifically vocabulary; and their comprehension skills.

Because reading comprehension is a complex construct, there is ongoing debate regarding the best way to assess reading comprehension (Keenan, Betjemann, & Olson, 2008; Sabatini & Albro, in press; Woodcock, McGrew, & Mather, 2001). In this study, we relied on well-regarded and psychometrically strong measures that are widely used in schools. The differentiated reading instruction intervention presented in this study used scores from the Woodcock-Johnson III (WJ–III) Passage Comprehension Test (Woodcock et al., 2001).

Although widely used, such assessments have been criticized by Keenan and colleagues (2008), who argued that the various comprehension assessments are not measuring the same skills. This conclusion is based on modest intercorrelations among the measures. Moreover, students’ decoding and not their listening comprehension skills accounted for most of the variance on the WJ–III passage comprehension task. In our judgment, however, this cloze task requires students to utilize implicit understanding of the semantic and morphosyntactic systems to select the correct word that is missing from the sentence or passage. Thus, it assesses skills specifically identified in our developmental model. At the same time, we were cognizant of these concerns when we selected our outcome measure, the Gates–MacGinitie Reading Tests (GMRTs). The GMRT comprehension task, which requires students to read a fairly long passage and answer questions that are increasingly abstract (described more fully in the Methods section), arguably requires more inferencing and attention to text structure and has greater face validity than the WJ–III passage comprehension task, which is why we selected the GMRT comprehension task as our outcome for this study.

CHARACTERIZING READING INSTRUCTION IN THIRD GRADE

The goal of reading instruction is to help students acquire the skills “that enable learning from, understanding, and enjoyment of written language” (Torgesen, 2002, p. 9). Increasingly, researchers and educators are finding that combinations of instructional activities and strategies are generally more effective than one method used to the exclusion of others (Block et al., 2009; NICHD, 2000). Indeed, when classrooms are observed, evidence reveals that effective teachers use a variety of strategies and types of lessons (Connor, Morrison, et al., 2009; Wharton-McDonald, Pressley, & Hampston, 1998). Moreover, with emerging evidence of C×I interactions, it is unlikely that we will find one single method of instruction that is optimal for all students. Thus, in this study, instruction is described across three dimensions focusing on (1) the content of the reading instruction, including phonological awareness, word decoding and encoding, text structure, vocabulary, and comprehension, (2) who is managing or focusing the students’ attention on the learning activity at hand, the teacher or the student individually or with peers, and (3) grouping (i.e., whole class, small group, individual; Connor, Morrison, et al., 2009). These dimensions operate simultaneously to define any evidence-based literacy activity (see Table 1). We discuss each below.

Table 1.

Examples of Instructional Strategies Defined by Content and Management of Instruction

| Dimension | Teacher/student-managed strategy | Student-managed strategy |
|---|---|---|
| Code-focused | The teacher works with a small group of students on an activity designed to help them decode and spell multisyllabic words by using similar root words with different prefixes and suffixes (morphological awareness). | Students work in small peer groups to practice spelling and decoding multisyllabic words (word encoding). |
| Meaning-focused | The teacher, working with a small group of students, asks them to make inferences between two or more stories read in class in order to make connections and build background knowledge (listening and reading comprehension). | Students work on a multiple-meaning vocabulary worksheet with the following words: bark, story, and track (print vocabulary). Other students write a summary of a story that they have recently read (writing). |

Content of Instruction: Code- Versus Meaning-Focused Instruction

Content of instruction can be defined at different grain sizes, from a fairly coarse curricular level (e.g., SRA/McGraw-Hill’s Reading Mastery; Crowe, Connor, & Petscher, 2009) to a fairly fine level (e.g., teaching students to summarize). Following our developmental model of reading comprehension, in which proficient reading is a function of fluent decoding and strategic and flexible use of oral language (including semantic, morphosyntactic, and pragmatic skills) and background/academic knowledge to build coherent representations of the meanings of the text (Rapp et al., 2007), components of the content of literacy instruction can be defined. For this study, we use a coding system that examines instruction at grain sizes ranging from very fine (e.g., types of morphemic awareness and types of listening and reading comprehension instruction; see Appendix A for definitions) to the larger grain size used in the ISI intervention, which classifies instruction as either code- or meaning-focused. The advantage of the larger grain size was that it gave teachers more flexibility in selecting instructional activities based on their professional judgment and the scope and sequence of their core literacy curriculum.

Code-focused instruction is any instructional activity that builds students’ grasp of the alphabetic principle, orthographic knowledge, and fluent decoding. This instruction includes phonics, phonological awareness, letter and word fluency, and spelling (see Table 1). In third grade, code-focused or word study instruction might include decoding multisyllabic words, morphological awareness, and other encoding strategies. Importantly, code-focused instruction in third grade should likely focus on higher order and more complex decoding and encoding strategies than those observed in the earlier grades, depending on students’ decoding skills.

Meaning-focused instruction is any instructional activity that is intended to improve students’ ability to understand what they are reading and build coherent mental representations of the information in the text (Perfetti, 2008). Examples are provided in Table 1. Converging evidence reveals that from first through third grade, greater time in meaning-focused activities is associated with students’ gains in reading comprehension (Connor, Piasta, et al., 2009; Guthrie et al., 2004; Williams et al., 2009). Meaning-focused activities include a wide range of activities, such as comprehension strategy instruction and practice, discussion, text reading, writing, and vocabulary, that may explicitly or implicitly affect reading comprehension gains.

Research has revealed that explicit instruction of comprehension strategies is associated with gains in reading comprehension and reading more generally (NICHD, 2000). Comprehension strategies include predicting, questioning, monitoring, highlighting, summarizing, using context clues, retelling, using prior knowledge, comparing and contrasting, and sequencing ideas (Block et al., 2009; NICHD, 2000; Pressley & Wharton-McDonald, 1997). In the direct and inferential mediation model (Cromley & Azevedo, 2007), for example, such comprehension strategies, in combination with students’ decoding, oral language skills, and background knowledge, allow them to make appropriate inferences about the content of the text they are reading regarding information that is not explicitly stated in the text but can be inferred from information already conveyed in the text, their background knowledge, or other texts (Cain, Oakhill, & Lemmon, 2004). Of note, Cromley and Azevedo observed that students’ ability to use strategies may not directly predict their comprehension but, instead, may support their ability to make inferences, which directly predicts their comprehension. Hence, in our coding system, we capture listening and reading comprehension instruction in great detail (see Appendix A).

The link between vocabulary and reading comprehension has been documented for over two decades, and correlational studies have shown a positive association between students’ vocabulary knowledge and reading comprehension outcomes (Anderson & Freebody, 1981; Biemiller & Boote, 2006; Duke & Pearson, 2002; Storch & Whitehurst, 2002). In addition, vocabulary interventions have been associated with improved comprehension (Duke & Pearson, 2002; NICHD, 2000). The National Reading Panel’s meta-analysis of over 50 studies of best practices in vocabulary instruction and their relation to reading comprehension suggested that when instruction focused on building vocabulary, students’ reading skills improved (NICHD, 2000). The panel stated that “reading vocabulary is crucial to the comprehension processes of a skilled reader” (NICHD, 2000, p. 4–3).

Teacher/Student- Versus Student-Managed Instruction

An important dimension of instruction, but one that is frequently overlooked, is who is focusing students’ attention on the learning activity at hand (Connor, Morrison, et al., 2009). Are the students working independently or with peers (i.e., student managed)? Or is the teacher actively interacting with students and focusing their attention on the learning activity (i.e., teacher/student managed)? Examples of teacher/student-managed (TSM) and student-managed (SM) instruction are provided in Table 1. A teacher working with students to reach a consensus on the definition of a new vocabulary word is an example of a TSM activity. Students completing a vocabulary worksheet at their desks or in pairs is an example of an SM activity.

Grouping: Whole-Class, Small-Group, and Individually Delivered Instruction

Another dimension of instruction captures the grouping context of instruction: whether it is delivered to all of the students in the classroom (i.e., whole class), to small groups of students, or individually. Many teachers use whole-class instruction, which is encouraged by several core literacy curricula (Block et al., 2009) and literacy approaches (Dahl & Freppon, 1995). However, research on effective schools (Wharton-McDonald et al., 1998) and differentiated or individualized instruction (Connor, Piasta, et al., 2009; Gersten et al., 2009) has indicated that the use of smaller, flexible learning groups based on students’ current skills and learning needs may be more effective than whole-class instruction. Correlational evidence suggests that instruction provided in small groups may be up to four times as effective as instruction delivered to the entire class (Connor, Morrison, & Slominski, 2006). This is possibly because teachers may be more sensitive to students’ response to what is being taught and can change instructional strategies and activities more flexibly to optimize learning.

In this study, teachers in the ISI intervention group were specifically taught to work with small, flexible learning groups formed on the basis of students’ reading skills while the other students worked in small peer groups or independently. During small-group time, teachers in the ISI intervention group were specifically taught to focus on providing instruction aligned with students’ skills and abilities, following recommendations based on C×I interaction research. The teachers in the vocabulary group were not specifically taught about using flexible learning groups, although, of course, they were free to do so. Again, these dimensions operate simultaneously (Connor, Morrison, et al., 2009). Thus, the teacher working with a small group of students on decoding multisyllabic words would be defined as a TSM, small-group, code-focused activity (i.e., in the coding system, the content would be a type of morphological awareness). In the same way, a small group of students writing together in the publishing corner and discussing a story that they are writing would be an SM, small-group, meaning-focused activity.
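Because the three dimensions combine to define each activity, an observed activity can be thought of as a record with one value per dimension. The sketch below encodes the two examples from the preceding paragraph that way. The class and field names are hypothetical and are not taken from the study’s coding system (Appendix A), which also codes content at a much finer grain.

```python
from dataclasses import dataclass
from enum import Enum


class Content(Enum):
    CODE_FOCUSED = "CF"
    MEANING_FOCUSED = "MF"


class Management(Enum):
    TEACHER_STUDENT = "TSM"  # teacher actively focuses students' attention
    STUDENT = "SM"           # students work independently or with peers


class Grouping(Enum):
    WHOLE_CLASS = "whole class"
    SMALL_GROUP = "small group"
    INDIVIDUAL = "individual"


@dataclass(frozen=True)
class Activity:
    """One literacy activity, defined jointly by all three dimensions (hypothetical schema)."""
    description: str
    content: Content
    management: Management
    grouping: Grouping

    def label(self) -> str:
        # Combine management and content into the shorthand used in the text.
        return f"{self.management.value}-{self.content.value}, {self.grouping.value}"


decoding = Activity(
    "Teacher and a small group decode multisyllabic words",
    Content.CODE_FOCUSED, Management.TEACHER_STUDENT, Grouping.SMALL_GROUP,
)
writing = Activity(
    "Peer group writes and discusses a story in the publishing corner",
    Content.MEANING_FOCUSED, Management.STUDENT, Grouping.SMALL_GROUP,
)
print(decoding.label())
print(writing.label())
```

Keeping the dimensions as separate fields, rather than a single combined code, mirrors the point that they vary independently: the same content can be delivered under either management type and in any grouping.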

C×I INTERACTIONS FOR READING COMPREHENSION

The correlational evidence for C×I interactions in third grade, although limited, has consistently shown that more time in explicit, TSM, meaning-focused (TSM-MF) instruction is associated with stronger reading comprehension gains and that the effect is greater for students with weaker initial reading comprehension skills (Connor, Jakobsons, Crowe, & Meadows, 2009; Connor et al., 2004). At the same time, greater amounts of SM, code-focused (SM-CF) instruction appear to be associated with weaker gains in reading comprehension overall. Effective amounts of TSM, code-focused (TSM-CF) instruction depended on students’ word decoding, vocabulary, and comprehension skills, and such instruction was generally effective only for students whose word reading skills fell below grade expectations (Connor et al., 2004). It is these C×I interactions that the present study was designed to test.
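One way to see what such an interaction means is a toy regression with a product term: when the coefficient on minutes × fall score is negative, each added minute of TSM-MF instruction predicts a larger gain for students who start lower. The model form and all coefficients below are invented for illustration; they are not the estimates from the studies cited above, which used multilevel models with additional predictors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToyCxIModel:
    """Hypothetical fixed-effects model with one C×I term; coefficients are invented."""
    intercept: float
    b_fall: float         # fall reading comprehension GE
    b_minutes: float      # TSM-MF minutes/day (main effect)
    b_interaction: float  # minutes × fall GE (the C×I interaction)

    def predict_spring(self, fall_ge: float, minutes: float) -> float:
        """Predicted spring reading comprehension GE."""
        return (self.intercept + self.b_fall * fall_ge + self.b_minutes * minutes
                + self.b_interaction * minutes * fall_ge)

    def effect_per_minute(self, fall_ge: float) -> float:
        """Slope of the prediction with respect to minutes at a given fall GE."""
        return self.b_minutes + self.b_interaction * fall_ge


model = ToyCxIModel(intercept=1.0, b_fall=0.8, b_minutes=0.06, b_interaction=-0.012)
# A negative interaction makes each minute of TSM-MF worth more for weaker readers.
for fall_ge in (1.5, 3.0, 4.5):
    print(fall_ge, round(model.effect_per_minute(fall_ge), 3))
```

For any single child the toy prediction is linear in minutes; the C×I term changes only the slope, and it is this child-specific slope that makes one amount of instruction unsuitable for everyone.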

To test these C×I interactions, we created algorithms based on the hierarchical linear models (HLMs) used in the correlational studies. These HLM equations were, so to speak, reverse engineered. The original equations could be used to predict a student’s spring reading comprehension outcome based on fall scores, the amounts and types of reading instruction that the student received, and identified C×I interactions. To create the C×I algorithms, we set an outcome target, defined as reaching grade level by the end of the year or achieving a school year’s growth in reading comprehension, whichever was greater. Using grade equivalent (GE) as the metric, 3.9 would represent the minimum end-of-year target in third grade. We then solved for the amount of each type of instruction (e.g., TSM-MF) using the student’s assessed vocabulary, word reading, and passage comprehension GEs. The equations function somewhat like meteorologists’ dynamical system forecasting models, which are used to predict, for example, the trajectory of hurricanes (National Hurricane Center, 2009). The key difference is that the models used in this study predict the amounts of each type of reading instruction that are required for students to reach their optimal trajectory of learning (Raudenbush, 2007); these models have been called dynamical forecasting intervention models.
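The inversion step can be illustrated with a deliberately simplified stand-in for the HLMs. The sketch below assumes a single-level linear prediction with one C×I term (minutes × fall comprehension GE) and a vocabulary term centered on 8.6 years, the sample mean age equivalent reported in the Figure 1 note. Every coefficient and the 60-minute cap are invented, and the actual A2i equations (which also use word reading scores) are not reproduced. Setting the predicted spring GE equal to the target outcome and solving for minutes yields the recommendation.

```python
def target_outcome(fall_ge: float) -> float:
    """Grade level by spring (GE 3.9) or one year's growth, whichever is greater."""
    return max(3.9, fall_ge + 0.9)


def recommended_tsm_mf(fall_rc_ge: float, fall_vocab_ae: float = 8.6,
                       max_minutes: float = 60.0) -> float:
    """Solve a toy prediction equation for TSM-MF minutes/day (hypothetical coefficients).

    Toy model: spring = baseline + (0.05 - 0.006 * F) * m, where
    baseline = 0.75 + 1.05 * F + 0.05 * (V - 8.6). Setting spring equal to the
    target and solving for m gives m = gap / per_minute.
    """
    # Predicted spring GE with no added TSM-MF time; weaker vocabulary lowers it.
    baseline = 0.75 + 1.05 * fall_rc_ge + 0.05 * (fall_vocab_ae - 8.6)
    gap = target_outcome(fall_rc_ge) - baseline
    if gap <= 0:
        return 0.0  # target already predicted without extra TSM-MF time
    per_minute = 0.05 - 0.006 * fall_rc_ge  # C×I term: payoff depends on fall GE
    if per_minute <= 0:
        raise ValueError("toy model predicts no benefit from more minutes")
    return min(gap / per_minute, max_minutes)


# Rows: fall comprehension GE; columns: vocabulary AE of 5, 8.6, and 11 years.
for rc_ge in (1.5, 2.0, 2.5, 3.5):
    print(rc_ge, [round(recommended_tsm_mf(rc_ge, ae), 1) for ae in (5.0, 8.6, 11.0)])
```

Because minutes come out as a gap divided by a per-minute effect, the recommendation is a nonlinear function of the fall score even though the toy prediction is linear in minutes, and lower vocabulary widens the gap and so raises the recommended time.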

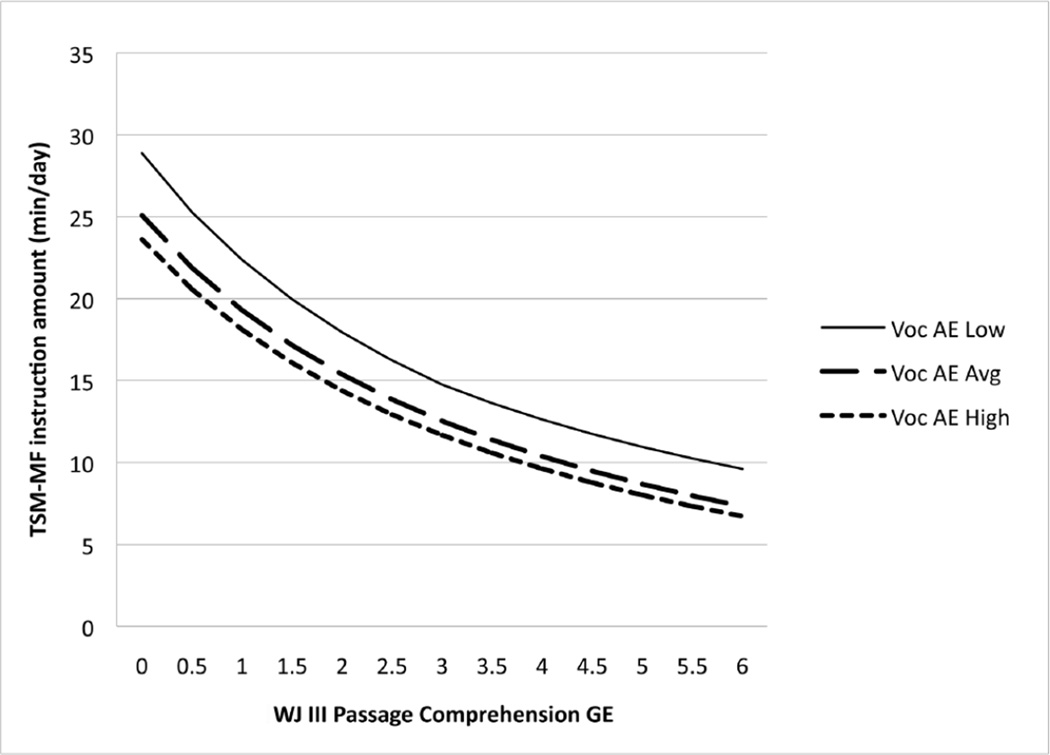

The computer algorithm for TSM-MF instruction is based on the following equation, and the recommended amounts, charted as a function of students’ reading comprehension GEs, are provided in Figure 1.

FRCGE = fall reading comprehension GE on the WJ III Passage Comprehension Test.

FVAE = fall vocabulary age equivalent on the WJ III Picture Vocabulary Test.

TO = target outcome of 3.9 or (FRCGE + 0.9), whichever is greater.
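The target-outcome definition can be written compactly as follows. The two worked cases use illustrative fall scores, not data from the study, and show when each branch of the rule applies.

```latex
\mathrm{TO} = \max\left(3.9,\ \mathrm{FRCGE} + 0.9\right)
% FRCGE = 2.4:  TO = \max(3.9,\ 3.3) = 3.9   (grade-level target applies)
% FRCGE = 3.6:  TO = \max(3.9,\ 4.5) = 4.5   (one year's growth applies)
```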

Figure 1.

Recommended Minutes/Day of Teacher/Student-Managed, Meaning-Focused Instruction as a Function of Students’ Woodcock-Johnson III Passage Comprehension Grade-Equivalent Score

Note. AE = age expectation. GE = grade equivalent. TSM-MF = teacher/student-managed, meaning-focused. Voc = vocabulary. WJ III = Woodcock-Johnson III. Students with vocabulary scores falling below AEs (top solid line, AE = 5 years) would be provided more time in TSM-MF instruction, for example, than would students with more typical vocabulary skills (middle dashed line, AE = 8.6 years, mean of the sample) or students with stronger vocabulary (bottom dotted line, AE = 11 years).

As can be seen in Figure 1, the function is nonlinear and recommends exponentially more TSM-MF instruction as students’ reading comprehension skills decrease. Relatively more time is recommended for students with weaker vocabulary scores. The recommended amounts are computed by the A2i (assessment to instruction) Web-based software, using these dynamical forecasting intervention models. A2i is described in the next section.

INTERVENTIONS

Both interventions, ISI and vocabulary, were provided by the schools’ general education classroom teachers as an integral part of the 90-minute block of time dedicated to literacy instruction during the 2008–2009 school year. All teachers used their school’s core literacy curriculum, Open Court Reading, which encourages the use of small groups during workstation time and during the teaching of vocabulary. The interventions focused on improving teachers’ practice for instruction that they were already expected to provide.

The ISI Intervention

The goal of the ISI intervention was to support teachers’ efforts to differentiate reading instruction so that we could investigate the role of C×I interactions in understanding individual differences in students’ literacy learning. The ISI intervention has three key components:

Assessment: All students receive vocabulary, word reading, and passage comprehension assessments three times per year, and the scores are used in the A2i algorithms.

Assessment–instruction links that explicitly consider C×I interactions: The A2i software uses the dynamical forecasting intervention models (i.e., computer algorithms) to provide teachers with specific recommended amounts and types of literacy instruction for each student, computed from his or her vocabulary and reading scores. It also provides assessment and skill progress monitoring and online training resources.

Professional development: Teachers’ use of A2i and implementation of differentiated instruction in the classroom are supported through professional development provided by research-funded teacher mentors, called research partners.

The professional development, coupled with the A2i software, is designed to provide teachers with explicit support and recommendations as they organize, plan, and differentiate literacy instruction. Teachers use their school’s curriculum and other materials that they are currently using. Thus, ISI and A2i do not constitute a literacy curriculum per se. Rather, they provide a framework for differentiated instruction that relies on valid and ongoing assessment of three literacy skills (word reading, reading comprehension, and vocabulary knowledge) and on empirical evidence regarding how instruction interacts with these skills to affect student outcomes. A2i might be described as an instructional decision support system (Landry, Anthony, Swank, & Monseque-Bailey, 2000), analogous to what is described in the medical field as a clinical decision support system (Garg et al., 2005; Kawamoto, Houlihan, Balas, & Lobach, 2005). In Response to Intervention parlance, the instruction provided would be considered differentiated Tier 1 or a hybrid Tier 1/Tier 2 intervention (Al Otaiba et al., in press; Gersten et al., 2009).

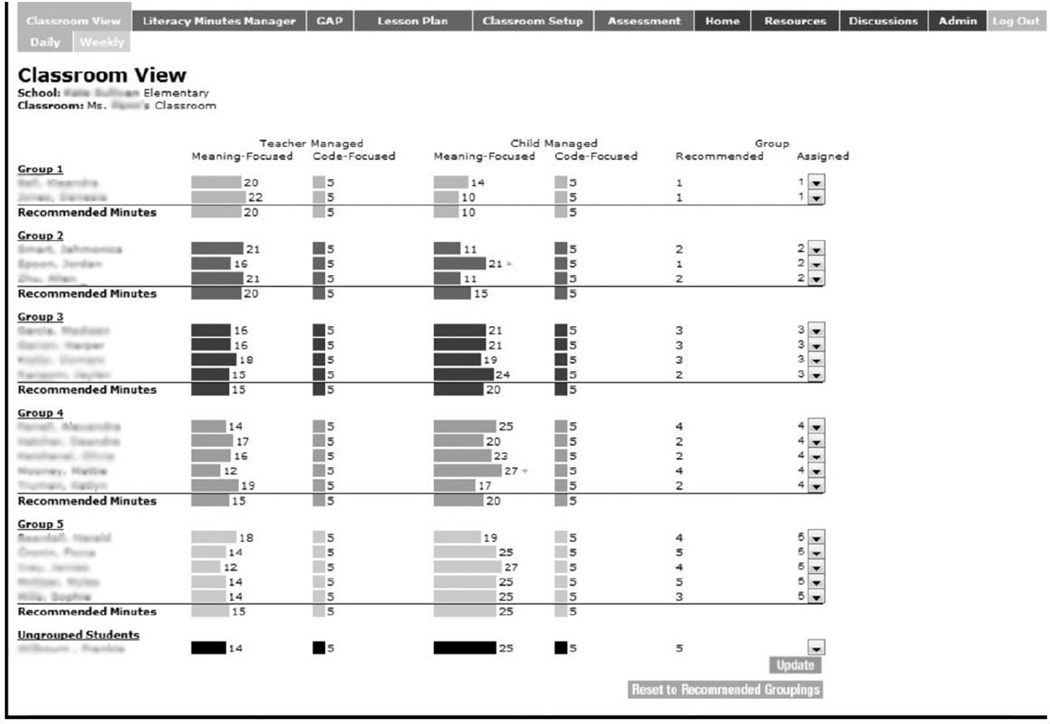

To access A2i (see isi.fcrr.org), teachers log on to the password-protected system and are taken to their homepage, where they can access text and video training materials (e.g., using assessment to guide instruction, using workstations and center activities effectively) and the planning components of A2i. The classroom view (see Figure 2) provides the recommended amounts of each type of literacy instruction (e.g., TSM-MF, TSM-CF) and recommended groupings based on students’ reading comprehension skills. Teachers were encouraged to use the recommended groupings but could change them. Teachers were expected to provide the group mean recommended amounts in a flexible learning group format, attending to content and student skill level using their professional judgment. The literacy core curriculum, Open Court Reading, was indexed to the four types of instruction, as were the teacher-developed and Florida Center for Reading Research (www.fcrr.org) activities.
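Teachers were asked to deliver the group mean of their students’ recommended amounts. The sketch below shows that averaging step on invented per-student recommendations; the student labels, group numbers, and minutes are all hypothetical (loosely patterned on the approximate amounts reported below for groups 1, 3, and 5), and the code is not taken from A2i.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical per-student recommendations in minutes/day.
recommendations = [
    {"student": "A", "group": 1, "TSM-MF": 22.0, "SM-MF": 10.0},
    {"student": "B", "group": 1, "TSM-MF": 18.0, "SM-MF": 10.0},
    {"student": "C", "group": 3, "TSM-MF": 15.0, "SM-MF": 20.0},
    {"student": "D", "group": 3, "TSM-MF": 14.0, "SM-MF": 21.0},
    {"student": "E", "group": 5, "TSM-MF": 11.0, "SM-MF": 25.0},
]


def group_means(rows, types=("TSM-MF", "SM-MF")):
    """Average each instruction type within each flexible learning group."""
    by_group = defaultdict(list)
    for row in rows:
        by_group[row["group"]].append(row)
    return {
        group: {t: mean(member[t] for member in members) for t in types}
        for group, members in sorted(by_group.items())
    }


for group, amounts in group_means(recommendations).items():
    print(group, amounts)
```

Averaging within groups is what lets one small-group session stand in for several individual recommendations; teachers could still regroup students, in which case the means would simply be recomputed.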

Figure 2.

A2i Classroom View Showing the Recommended Amounts (minutes/day) of Each Type of Instruction

Note. Student-managed code-focused instruction was set to a constant of five minutes per day. Teacher/student-managed, code-focused amounts depended on students’ Woodcock-Johnson III letter/word identification grade-equivalent (GE) score (see Figure 3). None of the students in this classroom had letter/word GE scores that fell more than one GE below grade-level expectations.

A computer screenshot showing a third-grade classroom with recommended amounts for each student is provided in Figure 2. Charts showing the algorithm-recommended amounts of instruction as a function of students’ skills are provided in Figures 1 and 3. For students with generally weaker reading comprehension skills, more time daily in TSM-MF small-group instruction was recommended (see Figures 1 and 2), about 20 minutes per day for group 1, which included the students with the weakest reading and vocabulary scores. Fairly small amounts of SM, meaning-focused (SM-MF) instruction, about 10 minutes in the fall for group 1, with increasing amounts over the course of the school year were recommended.

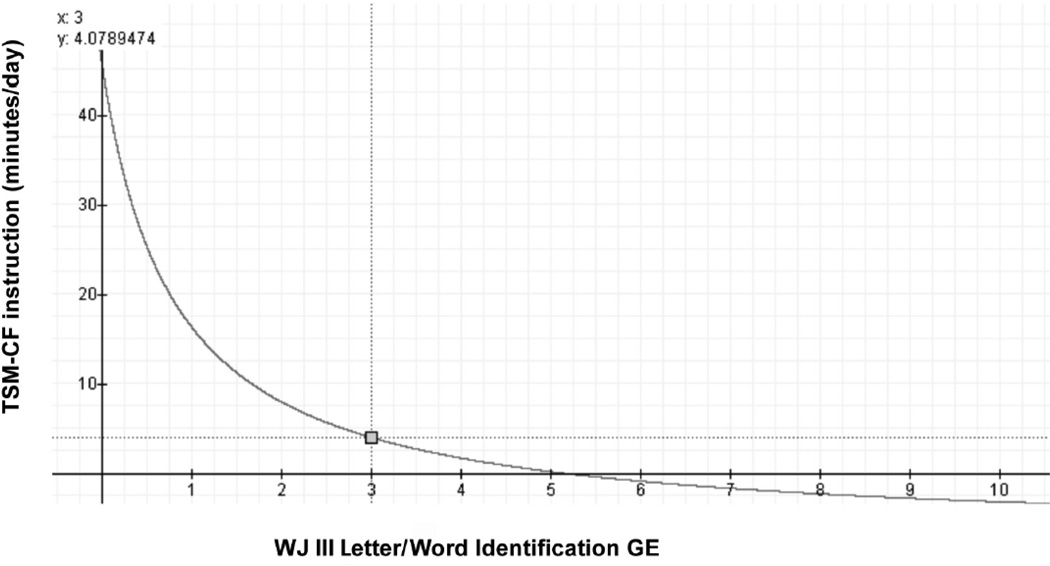

Figure 3.

Recommended Minutes/Day of Teacher/Student-Managed, Code-Focused Instruction as a Function of Students’ Woodcock-Johnson III Letter/Word Identification Grade Equivalent

Note. GE = grade equivalent. TSM-CF = teacher/student-managed, code-focused. WJ III = Woodcock-Johnson III. A minimum of five minutes was set in the A2i software (see Figure 1).

For students with more typical reading comprehension skills (e.g., groups 3 and 4), about 15 minutes per day of small-group TSM-MF instruction was recommended, along with fairly substantial amounts of SM-MF instruction (about 20 minutes per day). For the strongest readers (group 5), about 10–15 minutes per day of TSM-MF and about 25 minutes per day of SM-MF instruction were recommended.

The A2i algorithms recommended very small amounts of TSM-CF instruction (5 minutes) unless students were reading well below grade expectations based on their word reading skills (see Figure 3). Indeed, in the classroom depicted in Figure 2, none of the students were reading more than half a grade below grade level, and five minutes per day were recommended for all. However, there were students in other classrooms for whom substantial amounts of TSM-CF instruction were recommended, and this amount increased exponentially as students’ initial reading comprehension skills fell further below grade level. SM-CF instruction was held constant at five minutes per day, because there was no reliable correlational evidence regarding the contribution of this type of instruction to students’ reading comprehension skills, with some indication of a negative association.
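The two code-focused rules just described (a five-minute floor on TSM-CF time that rises steeply only for students well below grade level, and a constant five minutes of SM-CF time) can be sketched as follows. The exponential form, the expected GE of 3.0, and the `scale` and `rate` parameters are assumptions chosen only to mimic the qualitative shape in Figure 3; they are not the A2i equation.

```python
import math

SM_CF_MINUTES = 5.0  # student-managed, code-focused time held constant


def tsm_cf_minutes(letter_word_ge: float, expected_ge: float = 3.0,
                   scale: float = 4.0, rate: float = 1.1) -> float:
    """Hypothetical TSM-CF minutes/day from a WJ III letter/word identification GE."""
    # How far the student's word reading falls below the assumed expectation.
    deficit = max(0.0, expected_ge - letter_word_ge)
    # Grows exponentially with the deficit (assumed form and parameters).
    raw = scale * (math.exp(rate * deficit) - 1.0)
    return max(5.0, raw)  # floor of five minutes per day


for ge in (3.2, 2.5, 1.5, 1.0):
    print(ge, round(tsm_cf_minutes(ge), 1), SM_CF_MINUTES)
```

Under these assumed parameters, students near grade level stay at the five-minute floor, and recommended time climbs quickly only once word reading falls well below expectations.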

Teachers received intensive training using a coaching model (Bos, Mather, Narr, & Babur, 1999), which focused on using the A2i software and implementing the recommended amounts and types of instruction in flexible learning groups. Other topics included planning, classroom management strategies, and using assessment results to guide instruction. ISI group teachers participated in a half-day workshop in the fall, attended monthly one-hour meetings in communities of practice with other teachers in the ISI treatment group (Wenger, McDermott, & Snyder, 2002), and received biweekly classroom-based support during the literacy block (Bos et al., 1999).

The Alternative Vocabulary Treatment

For this study, the alternative vocabulary intervention focused implicitly on building students’ comprehension by supporting teachers’ efforts to provide effective vocabulary instruction, using an adaptation of a teacher study group model (Bos et al., 1999; Gersten, Dimino, Jayanthi, Kim, & Santoro, 2007) in which teachers read the book Bringing Words to Life: Robust Vocabulary Instruction (Beck, McKeown, & Kucan, 2002). We selected this intervention because it might support students’ vocabulary and comprehension growth while contrasting with the ISI intervention: it was not differentiated by students’ skill levels, nor was it intended to be. Before each monthly meeting, teachers read the assigned chapter in Bringing Words to Life. During the meeting, they discussed the chapter and, based on what they learned, collaboratively designed vocabulary lessons with a group of other teachers.

The grouping of teachers varied each month. Before the next meeting, each teacher implemented the lessons in his or her classroom. At the next meeting, the teachers discussed the implementation and shared student work samples. Then, the next chapter was discussed and new lessons developed. The procedure continued for each chapter throughout the school year. The research assistant leading the teacher study group was a certified teacher working on her master’s degree in reading and language arts.

In the book that the teachers read, discussed, and used as a guide for designing lessons, Beck et al. (2002) argued that for vocabulary instruction to be effective, instruction must be robust and explore information about target vocabulary words. The authors suggested that students’ vocabulary will improve when teachers build students’ background knowledge, provide them with multiple meanings of words across diverse contexts, and offer opportunities for them to read and listen to words. Such vocabulary instruction includes, but is not limited to, antonyms/synonyms, homonyms, classifying words, class discussion, and defining.

As described in Bringing Words to Life, words may have different levels of utility. The book describes three tiers of words: Tier 1, Tier 2, and Tier 3. Words that would be considered basic would be placed at Tier 1 (e.g., baby, clock, happy, walk). Words found in this tier rarely require instructional attention, according to Beck et al. (2002). Tier 1 also includes sight words and other words commonly found in a young student’s environmental print and includes approximately 8,000 word families. Tier 2 words are considered high-frequency and high-utility words that are critical for understanding a specific text (Beck et al., 2002) and are used across multiple domains (e.g., coincidence, absurd, industrious, fortunate). Beck et al. suggested that a rich knowledge of Tier 2 words can have a positive impact on verbal functioning. Thus, instruction of Tier 2 words can be highly productive in supporting students’ vocabulary and reading comprehension skills.

Beck et al. (2002) described Tier 3 words as occurring less frequently in written and spoken language and as genre specific, such as for science, math, and social sciences (e.g., isotope, peninsula, lathe, refinery). These words may be best learned when the need arises, such as introducing peninsula during a geography lesson, according to Beck et al. The professional development for the vocabulary intervention focused on supporting teachers’ efforts to follow the Beck et al. approach to robust vocabulary instruction, as described in the book, as closely as possible.

PURPOSE OF THE STUDY

Again, the purpose of this study was to examine whether C×I interactions are causally implicated in students’ varying reading comprehension outcomes in response to third-grade reading instruction. The research questions and hypotheses were the following:

Research question 1—What is the effect of differentiating third-grade students’ literacy instruction, using the ISI intervention, on their reading comprehension skill gains compared to the gains of students whose teachers were randomly assigned to the vocabulary intervention? If C×I instruction interactions are causally related to students’ reading comprehension outcomes, we hypothesize that students whose teachers were in the ISI group would demonstrate stronger reading comprehension skill gains than would students whose teachers were in the vocabulary group. This is because, although both interventions seek to improve students’ comprehension, the ISI intervention explicitly considers C×I interactions and the vocabulary intervention does not.

Research question 2—What is the effect of the vocabulary intervention on students’ vocabulary gains compared to gains for students in ISI classrooms? We anticipated that students whose teachers were in the vocabulary intervention group would demonstrate stronger vocabulary gains than would students in ISI classrooms.

Research question 3—To further explore the role of C×I instruction interactions in students’ learning, we asked, What was the nature and variability of the quality, amounts, and types of literacy instruction that third graders received during the dedicated block of time devoted to literacy? How precisely did teachers provide the A2i-recommended amounts and types of instruction? We anticipated that instruction would vary both within and between classrooms generally and that there would be systematic differences in amounts and types of instruction depending on the intervention condition. We predicted that students in ISI intervention classrooms would be more likely to receive the A2i-recommended amounts of small-group differentiated literacy instruction than would students in the vocabulary intervention classrooms. At the same time, we expected students in the vocabulary intervention classrooms to spend more time in oral language and print vocabulary instruction compared to students in ISI classrooms.

METHODS

Participants

This study used a cluster-randomized treated control design in which third-grade teachers (n = 33) within schools (n = 7) were randomly assigned to implement either the ISI intervention or an alternative treatment, the vocabulary intervention: 16 teachers were assigned to the ISI group and 17 to the vocabulary group. No teachers withdrew; however, three teachers (n = 2 in the ISI group and 1 in the vocabulary group) went on leave and did not teach the last month of the study. These teachers and their students were included in all analyses. All teachers in both groups met state certification requirements. All 33 teachers reported having a bachelor’s degree in an education-related field, and seven had certifications or degrees beyond a bachelor’s degree (n = 3 in the ISI group and 4 in the vocabulary group). Teachers’ classroom teaching experience ranged from 0 to 30 years, with a mean of 10.9 years (M = 11.2 years for the ISI teachers and 10.6 years for the vocabulary teachers). There were no significant differences in any of these teacher characteristics between the ISI and vocabulary teachers.

The schools were located in a large school district in the southeastern United States and included suburban, urban, and rural communities. Schoolwide percentages of students qualifying for the federal free and reduced lunch program (FARL), which we used as a proxy for family socioeconomic status, ranged from high poverty (92%) to affluent (4%), with a mean of 47% of students qualifying for FARL study-wide. The literacy block in these schools was about 90 minutes each day, and teachers used the school-adopted Open Court Reading curriculum (2008; Crowe et al., 2009).

Students were automatically assigned to the condition to which their teacher was assigned; thus, 219 students were in the ISI condition, and 229 were in the vocabulary condition (total n = 448). According to school records and parent reports, approximately 36% of the students were white, 51% were African American/black, 3% were Hispanic, 3% were Asian/Asian American, 3% were multiracial, and the remaining 4% belonged to other ethnic groups. The two intervention groups did not differ in the percentages of students by race/ethnicity or in the percentage qualifying for FARL. Of the 448 students, 100 had participated in ISI randomized control trials in first and second grade: 7 were in control/alternative treatment classrooms for all three grades, 27 were in ISI classrooms for one of the grades, 42 were in ISI classrooms for two grades, and 24 were in ISI classrooms all three years. Their fall reading and vocabulary total scores did not differ significantly from those of the sample as a whole (p = .266). Of these 100 students, 58 were in ISI classrooms and 42 were in vocabulary classrooms in the present study.

Student Assessments

ISI Intervention Assessments

Students were assessed on a battery of language and literacy skills in fall, winter, and spring. GEs from the WJ–III Passage Comprehension, Letter/Word Identification, and Picture Vocabulary Tests (Woodcock et al., 2001) were used by the A2i software algorithms to compute recommended amounts and types of instruction. These scores were available to teachers in the ISI group throughout the school year. Paper reports of the scores were provided to teachers in the vocabulary condition.

Outcome Assessments

Reading comprehension and vocabulary were assessed in the fall and spring using alternate forms of the level 3 GMRTs. These scores were not used by the A2i software, although they were provided to teachers after administration and scoring were completed. Both are multiple-choice, group-administered assessments. In the comprehension assessment, students read a variety of passages, including both narrative and expository text excerpted from books widely used in schools, and then answer questions requiring increasing levels of inference by selecting the best of four responses. For example, after reading a passage about emperor penguins, students answer four questions, including, “Why does the mother penguin juggle the egg?”

In the reading vocabulary assessment, students select the meaning of a word presented in a short phrase (e.g., “a perfect grace”) from among four possible responses (“dance, grade, beauty of movement, lawn”). Internal consistency estimates (Cronbach’s α) of 0.96, construct validity estimates of about 0.80 (i.e., evidence that the test actually assesses the construct of reading comprehension), and test–retest reliability ranging from 0.85 to 0.90 were reported for the 2006 standardization sample; these values are acceptable (MacGinitie, MacGinitie, Maria, & Dreyer, 2006). Several types of scores are provided, including extended scale scores, GEs, percentile ranks, and normal curve equivalents. Extended scale scores were used in all analyses because, like a Rasch score (Winsteps version 3.30), they have equal intervals between points and can therefore show gains in scores.

Instruction

For both conditions, instruction and the classroom environment were investigated in two ways. First, using rating scales from 1 (low) to 6 (high) with detailed rubrics, we captured the general fidelity of teachers’ implementation of ISI and vocabulary instruction on four scales; these scales specifically targeted key aspects of the two interventions and have generally been associated with more effective (i.e., higher quality) instruction in the extant literature (Brophy, 1979; Cameron, 2004; NICHD Early Child Care Research Network, 2004; Pianta, La Paro, Payne, Cox, & Bradley, 2002; Snow, Burns, & Griffin, 1998; Wharton-McDonald et al., 1998). Second, we captured the amount and type of literacy instruction provided across three dimensions: management, context or grouping, and content.

Both systems relied on videotaped observations of instruction obtained during the literacy block in the fall, winter, and spring. Video was captured using two digital camcorders with wide-angle lenses. During the live observations, trained research assistants recorded detailed field notes regarding the activities and materials used, including careful descriptions of target students and activities of students who might be off camera (Bogdan & Biklen, 1998). Observations were scheduled at the teachers’ convenience.

Fidelity

The fidelity of implementation was evaluated using four scales (see Appendix B):

Classroom implementation of individualized instruction—The extent to which teachers actually differentiated instruction in the classroom using small groups and centers, focusing on the content and level of the types of instruction (e.g., TSM-CF, SM-MF)

Classroom orienting, organization, and planning—The extent to which teachers planned center activities and small groups and used lesson plans (either their own or the A2i lesson plan) and in-classroom organizational strategies that supported effective and efficient use of instructional time (e.g., chart for students depicting centers and group membership)

Robust vocabulary instruction—The extent to which teachers provided robust vocabulary instruction

Warmth and responsiveness, control, and discipline—The extent to which teachers’ classroom use of appropriate ways to redirect students’ behavior, including warmth and responsiveness to students, supported effective and efficient instruction

Teachers received scores from 1 (low) to 6 (high) for each dimension. Each scale was considered separately for purposes of this study. The scales and rating rubrics are provided in Appendix B. Rubrics and scores were not shared with the participating teachers.

The scales were completed by research assistants who were certified teachers and, to the extent possible, blind to teachers’ treatment group assignment. They observed video collected in late winter and early spring when, based on previous research, teachers are most likely to have mastered new ways of teaching (Hamre, Pianta, Downer, & Mashburn, 2007). Before beginning to rate instruction, coders worked together to achieve adequate levels of inter-rater reliability (Cohen’s Kappa = 0.73). Approximately 10% of the coded videos were chosen at random and recoded. Inter-rater reliability remained at acceptable levels (Cohen’s Kappa = 0.73) based on Landis and Koch (1977) criteria.
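Cohen’s kappa corrects raw percent agreement for the agreement two coders would reach by chance, and Landis and Koch (1977) attach verbal labels to kappa ranges. The sketch below shows the standard two-rater calculation on categorical ratings. The ratings are hypothetical, the helper names are illustrative, and this is not necessarily how the Noldus software computes agreement for timed event codes.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: for each category, the product of the two raters'
    # marginal proportions, summed over categories.
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n**2
    if p_expected == 1:
        return 1.0  # both raters used one identical category throughout
    return (p_observed - p_expected) / (1 - p_expected)


def landis_koch(kappa):
    """Verbal label from Landis and Koch's (1977) benchmarks."""
    if kappa < 0:
        return "poor"
    for upper, label in ((0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")):
        if kappa <= upper:
            return label
    return "almost perfect"


# Hypothetical 1-6 fidelity ratings of the same ten videos by two coders.
coder_1 = [4, 5, 3, 4, 6, 2, 4, 5, 3, 4]
coder_2 = [4, 5, 3, 4, 6, 3, 4, 5, 3, 4]
k = cohens_kappa(coder_1, coder_2)
print(f"kappa = {k:.2f} ({landis_koch(k)})")  # kappa = 0.86 (almost perfect)
```

Here the coders agree on 9 of 10 videos (90%), but because chance agreement is 27%, kappa is lower than raw agreement.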

Amounts and Types of Instruction

Amounts and types of instruction were obtained using three classroom observation videos for each classroom: fall, winter, and spring. The instruction that each student received was coded across the three dimensions: management, grouping, and content (Connor, Morrison, et al., 2009). An excerpt of the coding manual is provided in Appendix A. Using Noldus Observer Video-Pro software (XT version 8.0), any activity (i.e., both instruction and noninstruction, e.g., transitions) that lasted at least 15 seconds was coded directly from video so that all of the instructional and noninstructional time that individuals spent during the literacy block was identified by content, management, and context. The output for one videotape from a classroom observed in spring 2009 is provided in Appendix C.

Literacy instruction was coded for a randomly selected subset of students (n = 364). Missing data analyses with fall and spring reading comprehension scores as outcomes revealed no significant differences between the full sample of 448 students and the selected sample of 364 students (Wilks’s lambda = 0.996, F[2, 445] = 0.89, p = .411). Of the selected students, 184 were in the ISI intervention classrooms and 180 in the vocabulary intervention classrooms.
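With two groups, Wilks’s lambda converts exactly to an F statistic with df = (p, N − p − 1), where p is the number of outcomes. The sketch below assumes the comparison was coded versus not-coded students (N = 448) on two outcomes (fall and spring comprehension), which is consistent with the reported F[2, 445]. It also uses the closed-form upper tail of F(2, df2), which is valid only when df1 = 2.

```python
def wilks_two_group_f(wilks_lambda, n_total, n_outcomes):
    """Exact F for Wilks's lambda when comparing two groups on p outcomes."""
    df1 = n_outcomes
    df2 = n_total - n_outcomes - 1
    f = (1 - wilks_lambda) / wilks_lambda * df2 / df1
    return f, df1, df2


def f_upper_tail_df1_2(f, df2):
    """P(F > f) for an F(2, df2) distribution (closed form for df1 = 2 only)."""
    return (1 + 2 * f / df2) ** (-df2 / 2)


f, df1, df2 = wilks_two_group_f(0.996, n_total=448, n_outcomes=2)
p = f_upper_tail_df1_2(f, df2)
print(f"F({df1}, {df2}) = {f:.2f}, p = {p:.3f}")
```

Because lambda is reported to only three decimals, the recovered p is approximately .41 rather than exactly .411.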

For the purposes of this study, we focused on the amount and type of instruction provided individually or in small groups to students during the literacy block, as well as whole-class TSM instruction. Video coding was conducted by trained research assistants. All coders were required to attain acceptable inter-rater reliability (computed by the Noldus software) with a series of training videos of third-grade classrooms (Cohen’s Kappa > 0.7). Inter-rater reliability during the coding process was obtained for about 10% of videos selected at random (mean Cohen’s Kappa = 0.72), which is considered acceptable based on Landis and Koch (1977) criteria. The mean length of observation was 85 minutes in the fall (standard deviation [SD] = 30), 73 minutes in the winter (SD = 35), and 79 minutes in the spring (SD = 23).

The fine-grained coding system identifies over 200 instruction variables, and a sample of the coded output is provided in Appendix C. There were five code-focused types of instruction: phonological awareness, morpheme awareness, word decoding, word encoding, and fluency. There were six meaning-focused types of instruction coded: print and text concepts, oral language (including oral vocabulary), print vocabulary, listening and reading comprehension, text reading, and writing (spelling was coded as word encoding).

Within each content area, we considered specific activities. For example, in listening and reading comprehension, there were 19 different types of listening and reading comprehension activities (see Appendixes A and C). For this study, we considered only the duration of the principal content areas. Of note, instruction was coded at the level of the individual student (see Appendix C) rather than at the classroom level, unlike the quality ratings, which were global teacher/classroom-level ratings (see Appendix B). Thus, we could determine with some precision how much of each type of instruction each student received even if this varied within classrooms. Returning to the multiple dimensions of instruction, we considered whether the instruction was TSM or SM and whether it was provided to the whole class, in small groups, or individually.

To obtain a single value for each student, we first summed results within each season (when there were multiple videotapes) and then computed the mean amount of each type of instruction for each student across the three observations.
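The two-step aggregation (sum within a season, then average across seasons) can be sketched as follows. The record layout `(student_id, season, content_area, seconds)` and the segment values are hypothetical. The sketch also assumes every student was observed in all three seasons, so a season with no coded time for a content area contributes 0 to the mean.

```python
from collections import defaultdict
from statistics import mean

SEASONS = ("fall", "winter", "spring")


def aggregate_instruction(segments):
    """Map (student, content) -> mean seconds per seasonal observation.

    segments: iterable of (student_id, season, content_area, seconds).
    Step 1 sums within a season (covering multiple videotapes);
    step 2 averages the three seasonal totals.
    """
    per_season = defaultdict(float)
    cells = set()
    for student, season, content, seconds in segments:
        per_season[(student, content, season)] += seconds
        cells.add((student, content))
    # Assumption: every student was observed in all three seasons, so a season
    # with no coded time for a content area contributes 0 to the mean.
    return {
        (student, content): mean(per_season.get((student, content, s), 0.0) for s in SEASONS)
        for student, content in cells
    }


# Hypothetical coded segments for one student.
segments = [
    ("s01", "fall", "text reading", 300),
    ("s01", "fall", "text reading", 120),   # from a second fall videotape
    ("s01", "winter", "text reading", 240),
    ("s01", "spring", "text reading", 360),
    ("s01", "spring", "writing", 90),
]
result = aggregate_instruction(segments)
print(result[("s01", "text reading")])  # 340.0
print(result[("s01", "writing")])       # 30.0
```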

To assess how precisely teachers provided the A2i-recommended amounts, we computed difference scores by subtracting the amount (in minutes) of each type of instruction observed (TSM-CF and TSM-MF, SM-MF, and small-group/individual instruction) from the A2i-recommended amount at the time of the observation. Thus, scores closer to 0 indicate that the recommended amount of each type of instruction was provided more precisely.
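A difference score is simply recommended minus observed minutes for each type of instruction. In the sketch below, the recommended amounts follow those described for groups 3 and 4, the observed amounts are hypothetical, and the function name is illustrative. The sign shows direction: a positive score means less instruction than recommended, and a negative score means more.

```python
def difference_scores(recommended, observed):
    """Recommended minus observed minutes for each instruction type.

    0 = recommendation met exactly; positive = less than recommended;
    negative = more than recommended. Types never observed count as 0 minutes.
    """
    return {kind: recommended[kind] - observed.get(kind, 0.0) for kind in recommended}


# Recommended amounts like those described for groups 3 and 4; observed amounts are hypothetical.
recommended = {"TSM-CF": 5.0, "TSM-MF": 15.0, "SM-MF": 20.0}
observed = {"TSM-CF": 2.5, "TSM-MF": 18.0, "SM-MF": 20.0}
print(difference_scores(recommended, observed))
# {'TSM-CF': 2.5, 'TSM-MF': -3.0, 'SM-MF': 0.0}
```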

Analytic Strategies

For the first two research questions regarding the impact of the different interventions, we created a single model using HLMs (Raudenbush & Bryk, 2002) because students were nested in classrooms that are nested in schools, and failing to account for shared classroom and school variance may lead to misestimation of standard errors and, hence, effect sizes. We built the model systematically, starting with an unconditional model, which was used to compute intraclass correlations (ICCs). The ICC is the classroom-level variance divided by the total model variance (student-level + classroom-level variance) and represents the proportion of variance that lies between classrooms. Preliminary three-level models (students nested in classrooms that are nested in schools) revealed no significant between-school variance, so we used more parsimonious two-level models (students nested in classrooms) and included schoolwide FARL as a classroom-level predictor. The model is provided here:

$$Y_{ij} = \gamma_{00} + \gamma_{10}\,\mathrm{Fall}_{ij} + \gamma_{01}\,\mathrm{ISI}_{j} + \gamma_{02}\,\mathrm{FARL}_{j} + \gamma_{11}\,(\mathrm{Fall}_{ij} \times \mathrm{FARL}_{j}) + u_{0j} + r_{0ij}$$

where Yij is the predicted spring score for child i in classroom j and is a function of the grand mean (γ00), the students’ fall score (γ10), the effect of treatment (γ01)—where 1 = the ISI intervention, and 0 = the vocabulary intervention—and school FARL (γ02) and the fall score × school FARL interaction (γ11). r0ij represents the student-level variance, and u0j represents classroom-level variance.

For all analyses, continuous variables were grand mean centered.
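The ICC defined above is a ratio of variance components from the unconditional model. Those components are not reported in this section, so the values below are hypothetical. Substituting the conditional variances from Table 3 would not reproduce the reported ICCs, because those models already include the fall score, treatment, and FARL predictors.

```python
def icc(classroom_variance, student_variance):
    """Share of total variance lying between classrooms in a two-level model."""
    return classroom_variance / (classroom_variance + student_variance)


# Hypothetical variance components from an unconditional (intercept-only) model.
tau_00 = 300.0     # classroom-level (u0j) variance
sigma_sq = 1200.0  # student-level (r0ij) variance
print(round(icc(tau_00, sigma_sq), 2))  # 0.2
```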

Effect sizes were computed by dividing the coefficient of the treatment effect (γ01) by the square root of the student-level variance, which is the standard deviation of the model outcome. Other analyses are described in the results.
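Applying this rule to the treatment coefficients (γ01) and student-level variances reported in Table 3 reproduces the effect sizes reported under Research Questions 1 and 2. The dictionary layout and function name are illustrative.

```python
import math

# (treatment coefficient gamma01, student-level variance) from Table 3.
TABLE3 = {
    "total": (3.40, 316.51),
    "comprehension": (4.95, 640.48),
    "vocabulary": (0.75, 396.97),
}


def effect_size(treatment_coef, student_variance):
    """d = treatment coefficient / square root of the student-level variance."""
    return treatment_coef / math.sqrt(student_variance)


effect_sizes = {name: effect_size(coef, var) for name, (coef, var) in TABLE3.items()}
for name, d in effect_sizes.items():
    print(f"{name}: d = {d:.2f}")
# total: d = 0.19
# comprehension: d = 0.20
# vocabulary: d = 0.04
```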

To answer the third research question regarding the literacy instruction that the third graders received, we computed amounts of instruction (in seconds) that each student received for the fall, winter, and spring observations. For each season, students’ data were aggregated by summing the observed amounts across multiple videotapes to obtain a single amount for each student in each of the major content area types for TSM and SM, small-group and whole-class instruction. To examine differences in instructional content (e.g., vocabulary, listening and reading comprehension) and the difference scores (i.e., precision) by condition, we used multilevel multivariate models (HMLM2; Raudenbush & Bryk, 2002; Raudenbush, Bryk, Cheong, Congdon, & du Toit, 2004).

RESULTS

Across the conditions, students generally made grade-appropriate gains in reading comprehension from fall to spring. Their normal curve equivalents (NCEs), which take students’ age into account and so should remain stable under expected growth, did not change significantly (fall reading comprehension NCE = 51.8; spring reading comprehension NCE = 50.9; t[447] = 0.56, p = .574). NCEs have a standard mean of 50 and are similar to percentile ranks, except that they have equal intervals and can be averaged. On the total score, students gained more than 2 NCE points. This significant increase suggests greater than expected growth in reading comprehension and vocabulary combined (fall total NCE = 52.7; spring total NCE = 55.1; t[447] = 4.85, p < .001).
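NCEs are normal scores rescaled to a mean of 50 and a standard deviation of 21.06, which makes them coincide with percentile ranks at 1, 50, and 99 while keeping equal intervals. The sketch below converts between the two scales using the Python standard library. The function names are illustrative.

```python
from statistics import NormalDist

NCE_SD = 21.06  # NCEs are normal scores scaled to mean 50, SD 21.06


def percentile_to_nce(percentile_rank):
    """Convert a percentile rank (strictly between 0 and 100) to an NCE."""
    z = NormalDist().inv_cdf(percentile_rank / 100)
    return 50 + NCE_SD * z


def nce_to_percentile(nce):
    """Convert an NCE back to a percentile rank."""
    return 100 * NormalDist().cdf((nce - 50) / NCE_SD)


for pr in (1, 25, 50, 75, 99):
    print(pr, round(percentile_to_nce(pr), 1))
```

Because the scale has equal intervals, a gain such as the 2.4-point rise in the total NCE can be averaged across students, whereas percentile-rank gains cannot.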

Extended scale scores’ descriptive statistics are provided by condition in Table 2. Extended scale scores, which were used in the analyses, increased as expected from fall to spring. These scores are similar to raw scores except that they have equal intervals and have been scaled so that they can be used in statistical analyses and to model across grades. Comparison of fall scores for the ISI and vocabulary group students revealed that students in the ISI condition began the year with significantly lower reading comprehension and total scores on the GMRTs than did the students in the vocabulary condition (t[446] = −2.98, p = .003; t[446] = −2.01, p = .045, respectively). There were no significant differences between groups for fall reading vocabulary (t[446] = −0.330, p = .742).

Table 2.

Descriptive Statistics for the Gates–MacGinitie Comprehension, Reading Vocabulary, and Total Scores (extended scale scores) by Treatment Condition

| Assessment | Condition | Mean | Standard deviation |
|---|---|---|---|
| Fall total | Vocabulary | 468.45 | 35.17 |
| | ISI | 461.27 | 40.34 |
| | Total | 464.94 | 37.92 |
| Fall reading comprehension | Vocabulary | 470.35 | 39.37 |
| | ISI | 458.77 | 43.01 |
| | Total | 464.69 | 41.55 |
| Fall reading vocabulary | Vocabulary | 468.14 | 37.93 |
| | ISI | 466.85 | 45.00 |
| | Total | 467.51 | 41.49 |
| Spring total | Vocabulary | 483.26 | 37.34 |
| | ISI | 480.47 | 37.58 |
| | Total | 481.90 | 37.44 |
| Spring reading comprehension | Vocabulary | 480.89 | 42.35 |
| | ISI | 476.25 | 43.27 |
| | Total | 478.62 | 42.82 |
| Spring reading vocabulary | Vocabulary | 487.75 | 37.91 |
| | ISI | 487.31 | 39.56 |
| | Total | 487.53 | 38.68 |

Note. ISI = Individualizing Student Instruction.
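The fall group comparisons reported above can be approximately recovered from the summary statistics in Table 2. The sketch below uses a pooled-variance (Student’s) two-sample t test, which is consistent with the reported df = 446 (219 + 229 − 2). This is an assumption about the test used. Small differences in the second decimal are expected because the table’s means and SDs are rounded.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's two-sample t with pooled variance; df = n1 + n2 - 2."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


N_ISI, N_VOCAB = 219, 229  # group sizes from the Participants section
# ((ISI mean, ISI SD), (vocabulary mean, vocabulary SD)) for fall, from Table 2.
FALL = {
    "reading comprehension": ((458.77, 43.01), (470.35, 39.37)),
    "total": ((461.27, 40.34), (468.45, 35.17)),
    "reading vocabulary": ((466.85, 45.00), (468.14, 37.93)),
}

results = {}
for name, ((m_isi, sd_isi), (m_voc, sd_voc)) in FALL.items():
    t, df = pooled_t(m_isi, sd_isi, N_ISI, m_voc, sd_voc, N_VOCAB)
    results[name] = (t, df)
    print(f"{name}: t[{df}] = {t:.2f}")
```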

Research Question 1

Three models were built using HLMs: one for the total GMRT score, one for comprehension, and another for reading vocabulary (see Table 3). Again, we hypothesized that if C×I interactions were causally implicated in students’ varying reading comprehension outcomes in response to reading instruction, the ISI intervention would have a greater positive effect on students’ reading comprehension skill growth than would the undifferentiated vocabulary intervention.

Table 3.

Hierarchical Linear Model Results for Gates–MacGinitie Reading Tests (GMRTs)—Total Score, Reading Comprehension, and Reading Vocabulary Scores (extended scale scores)—Comparing Effects Between Treatment Conditions

| Fixed effect | GMRT total coefficient | p value | Comprehension coefficient | p value | Vocabulary coefficient | p value |
|---|---|---|---|---|---|---|
| Fitted spring score | 478.11 | <.001 | 473.97 | <.001 | 485.04 | <.001 |
| Student | | | | | | |
| Fall score | 0.81 | <.001 | 0.76 | <.001 | 0.74 | <.001 |
| Classroom | | | | | | |
| ISI = 1 | 3.40 | .049 | 4.95 | .044 | 0.75 | .724 |
| School FARL | −0.14 | <.001 | −0.23 | <.001 | −0.14 | <.001 |
| Child × classroom interactions | | | | | | |
| Fall score × school FARL | −0.001 | .260 | −0.001 | .487 | −0.001 | .287 |
| Random effects | Variance | p value | Variance | p value | Variance | p value |
| Student (r0ij) | 316.51 | | 640.48 | | 396.97 | |
| Classroom (u0j) | 1.01 | .180 | 20.88 | .190 | 8.09 | .095 |
| Fall score (u1j) | 0.01 | .341 | | | | |

Note. FARL = free and reduced lunch. ISI = Individualizing Student Instruction. ISI classroom = 1, and the vocabulary classroom = 0. Approximate degrees of freedom for fixed effects are 30 and 444 for GMRTs’ total and vocabulary scores. Degrees of freedom for fixed effects are 30 and 31, respectively, for GMRTs’ comprehension scores. Results control for the fall score and percentage of students qualifying for the school’s FARL program. All continuous variables are grand mean centered.

Supporting our hypothesis, HLM results revealed that students in the ISI intervention demonstrated significantly greater gains (i.e., residualized change) on the total GMRTs’ score and on the reading comprehension assessment (see Table 3) compared with students who participated in the vocabulary intervention. The ISI effect size (d) for the total score was 0.19 and for reading comprehension was 0.20, which are relatively small effect sizes, using criteria suggested by Rosenthal and Rosnow (1984). The models explained 77% and 64% of the total variance, respectively. ICCs were 0.27 for the total score and 0.23 for comprehension. This indicates that 27% of the variability in students’ total score and 23% of the variability in comprehension scores were explained by which classroom they attended. In all three models, as the schoolwide percentage of students qualifying for the school FARL program increased, students’ outcome gains generally decreased. There was not a significant treatment × fall score interaction (comprehension × treatment coefficient = 0.053, p = .304; total GMRT × treatment coefficient = −0.036, p = .547).

Research Question 2

We anticipated that students whose teachers were in the vocabulary intervention group would demonstrate stronger vocabulary gains than would students in the ISI condition, but this was not supported by the results. HLM analyses revealed no significant differences in students’ reading vocabulary gains (i.e., residualized change) whether their teachers were assigned to the vocabulary or the ISI intervention condition (d = 0.04; see Table 3). The model explained 73% of the total variance, and the ICC was 0.24. Nor was there a treatment × fall vocabulary score interaction (vocabulary × treatment coefficient = −0.001, p = .301).

Research Question 3

It is possible that the ISI intervention effect resulted from the professional development provided rather than from differentiated instruction informed by C×I interactions. To substantiate the claim that ISI represented instruction based on C×I interactions, we hypothesized that students in ISI intervention classrooms would be more likely to receive the A2i-recommended amounts of TSM, small-group, differentiated meaning-focused and code-focused instruction than would students in the vocabulary intervention classrooms. We first examined the amounts and types of instruction that third graders received overall in these classrooms. We then examined the overall fidelity of implementation to determine whether qualitative differences in fidelity could explain the ISI treatment effect. Finally, we compared the amounts and types of instruction each group received and the precision with which teachers provided the A2i-recommended amounts.

Overall Description of Third-Grade Literacy Instruction

Amounts (in seconds) of each type of instruction for TSM, small-group and individual instruction are provided in Table 4 and for TSM, whole-class instruction, aggregated to the classroom level, in Table 5. For these third graders, very little TSM-CF, small-group instruction was observed compared with TSM-MF, small-group instruction: less than 3 minutes per day (SD = 5) in TSM-CF, small-group activities, compared with more than 20 minutes per day (SD = 17) spent in meaning-focused activities. Amounts of small-group instruction ranged widely among classrooms from 0 to 50 minutes of TSM-CF instruction during the literacy block and 0 to almost 75 minutes of TSM-MF instruction.

Table 4.

Means and Standard Deviations in Seconds/Day of Teacher/Student-Managed, Small-Group and Individual Instruction by Content Area for 347 Target Students

| Content area | Condition | Mean | Standard deviation |
|---|---|---|---|
| Phonological awareness | Vocabulary | 17.69 | 58.23 |
| | ISI | 1.74 | 9.91 |
| | Total | 9.42 | 41.73 |
| Morphological awareness | Vocabulary | 32.51 | 100.76 |
| | ISI | 129.19 | 294.87 |
| | Total | 82.66 | 228.46 |
| Word identification and decoding | Vocabulary | 11.66 | 33.92 |
| | ISI | 23.96 | 72.59 |
| | Total | 18.04 | 57.58 |
| Word identification and encoding | Vocabulary | 4.49 | 40.32 |
| | ISI | 71.00 | 154.97 |
| | Total | 38.99 | 119.63 |
| Grapheme-phoneme correspondence | Vocabulary | 5.48 | 32.51 |
| | ISI | 8.74 | 64.36 |
| | Total | 7.17 | 51.50 |
| Fluency | Vocabulary | 14.80 | 58.31 |
| | ISI | 33.27 | 66.88 |
| | Total | 24.38 | 63.49 |
| Print and text concepts | Vocabulary | 49.98 | 208.45 |
| | ISI | 50.79 | 158.93 |
| | Total | 50.40 | 184.16 |
| Oral language | Vocabulary | 1.53 | 6.95 |
| | ISI | 20.43 | 45.43 |
| | Total | 11.34 | 34.35 |
| Print vocabulary | Vocabulary | 136.41 | 277.71 |
| | ISI | 236.05 | 401.12 |
| | Total | 188.10 | 350.32 |
| Listening and reading comprehension | Vocabulary | 292.74 | 281.04 |
| | ISI | 428.83 | 425.44 |
| | Total | 363.33 | 369.00 |
| Text reading | Vocabulary | 335.70 | 590.06 |
| | ISI | 481.59 | 472.19 |
| | Total | 411.38 | 536.40 |
| Writing | Vocabulary | 218.07 | 353.52 |
| | ISI | 79.14 | 189.22 |
| | Total | 146.00 | 288.64 |

Note. ISI = Individualizing Student Instruction.

Table 5.

Means and Standard Deviations in Seconds/Day of Teacher/Student-Managed, Whole-Class Instruction by Content Area

| Content area | Condition | Mean | Standard deviation |
|---|---|---|---|
| Phonological awareness | Vocabulary | 94.63 | 126.75 |
| | ISI | 42.50 | 98.55 |
| | Total | 68.56 | 114.78 |
| Morphological awareness | Vocabulary | 255.13 | 303.94 |
| | ISI | 278.38 | 432.35 |
| | Total | 266.75 | 367.82 |
| Word identification and decoding | Vocabulary | 192.69 | 215.13 |
| | ISI | 178.88 | 171.48 |
| | Total | 185.78 | 191.50 |
| Word identification and encoding | Vocabulary | 157.75 | 259.22 |
| | ISI | 244.31 | 336.36 |
| | Total | 201.03 | 298.65 |
| Grapheme-phoneme correspondence | Vocabulary | 34.81 | 51.96 |
| | ISI | 30.88 | 41.30 |
| | Total | 32.84 | 46.21 |
| Fluency | Vocabulary | 102.31 | 171.95 |
| | ISI | 62.94 | 85.93 |
| | Total | 82.63 | 135.20 |
| Print and text concepts | Vocabulary | 349.75 | 467.25 |
| | ISI | 188.75 | 148.97 |
| | Total | 269.25 | 350.81 |
| Oral language | Vocabulary | 150.25 | 184.34 |
| | ISI | 100.50 | 110.53 |
| | Total | 125.38 | 151.63 |
| Print vocabulary | Vocabulary | 906.56 | 925.52 |
| | ISI | 625.69 | 479.04 |
| | Total | 766.13 | 738.83 |
| Listening and reading comprehension | Vocabulary | 1065.00 | 599.10 |
| | ISI | 779.75 | 737.10 |
| | Total | 922.38 | 676.44 |
| Text reading | Vocabulary | 919.44 | 489.26 |
| | ISI | 751.50 | 470.33 |
| | Total | 835.47 | 479.73 |
| Writing | Vocabulary | 119.94 | 166.97 |
| | ISI | 229.94 | 471.47 |
| | Total | 174.94 | 352.38 |

Note. ISI = Individualizing Student Instruction.

Overall, about three times as much time was spent in TSM-CF, whole-class instruction (13 minutes/day, SD = 11, range = 0–44 minutes) as in small-group and individual instruction. Even more time was spent in TSM-MF, whole-class instruction (53 minutes/day, SD = 27, range = 14–155 minutes).

TSM-CF activities generally focused on the more complex skills of morphological awareness, word decoding, and word encoding; very little time was spent on phonological awareness and grapheme–phoneme correspondence. Most TSM-MF, small-group instruction time was spent in text reading (about 7 minutes/day) or listening and reading comprehension activities (about 6 minutes/day), and only about 2 minutes per day were spent in writing activities. TSM-MF, whole-class instruction was spent mainly in listening and reading comprehension, text reading, and print vocabulary instruction.
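The text reading, comprehension, and writing figures in this paragraph appear to be conversions of the Total rows of the preceding small-group and individual instruction table, which reports seconds per day. A minimal sketch of that conversion, assuming those Total rows are the source; the dictionary and helper names are ours, and rounding to whole minutes mirrors how the prose reports the values:

```python
# Total rows (seconds/day) for three meaning-focused content areas, copied
# from the preceding small-group and individual instruction table.
SMALL_GROUP_TOTAL_SECONDS = {
    "Text reading": 411.38,
    "Listening and reading comprehension": 363.33,
    "Writing": 146.00,
}


def seconds_to_minutes(seconds: float) -> float:
    """Convert seconds/day to minutes/day."""
    return seconds / 60.0


def summarize(totals: dict[str, float]) -> dict[str, int]:
    """Round each converted value to whole minutes, as the prose does."""
    return {area: round(seconds_to_minutes(s)) for area, s in totals.items()}


if __name__ == "__main__":
    for area, minutes in summarize(SMALL_GROUP_TOTAL_SECONDS).items():
        print(f"{area}: about {minutes} minutes/day")
```

Running it reports about 7, 6, and 2 minutes/day, matching the values in the text.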

Summing small-group, individual, and whole-class instruction, students spent, on average, about 35 minutes per day (SD = 22) in TSM and SM literacy instruction, ranging from as little as 2 minutes for one student to more than 105 minutes for another. This total excludes time spent in transitions and other noninstructional activities and time when students were not in the classroom.

Comparing Instruction for the Two Conditions

Fidelity

Again, the fidelity of implementation was rated at the classroom level for four aspects of the classroom environment: individualization, organization and planning, use of robust vocabulary strategies, and teacher warmth and responsiveness. We hypothesized that teachers in the ISI group would receive higher ratings on the individualization and organization and planning scales, whereas teachers in the vocabulary group would receive higher ratings on the robust vocabulary scale. We expected no differences in teacher warmth and responsiveness. Ratings for the ISI and vocabulary intervention classrooms did not differ significantly on any of the four scales (see Table 6).

Table 6.

Means and Standard Deviations for Teacher Fidelity Quality Scales by ISI and Vocabulary Intervention Groups

| Scale | Condition | Mean | Standard deviation |
|---|---|---|---|
| Individualized instruction | Vocabulary | 2.30 | 1.58 |
| | ISI | 2.94 | 1.91 |
| | Total | 2.62 | 1.75 |
| Organization and planning | Vocabulary | 4.32 | 1.17 |
| | ISI | 4.91 | 1.00 |
| | Total | 4.61 | 1.12 |
| Use of robust vocabulary strategies | Vocabulary | 3.71 | 1.17 |
| | ISI | 3.56 | 1.14 |
| | Total | 3.64 | 1.14 |
| Warmth and responsiveness to students, control, and discipline | Vocabulary | 4.26 | 1.46 |
| | ISI | 4.38 | 1.06 |
| | Total | 4.32 | 1.26 |

Note. 1 = low, and 6 = high. ISI = Individualizing Student Instruction. There were no significant differences by condition overall or by scale.

We used multivariate ANOVA (MANOVA; PASW version 17.0.3) because fidelity was judged at the level of the classroom, so the data were not nested. Results revealed no overall significant difference in the fidelity of observed instruction between teachers in the two intervention groups (Wilks’s lambda = 0.880, F[4, 28] = 1.137, p = .359). Post hoc analyses revealed that ISI and vocabulary intervention teachers did not differ significantly on any of the four scales, and all effect sizes were small (partial eta squared ≤ 0.07). Differences may nonetheless exist that we lacked the power to detect: given the teacher sample size and the parameters of the model, the smallest detectable effect size was 0.30 (G*Power version 3.1). In general, trends were in the anticipated direction, with teachers in the ISI intervention group receiving slightly higher mean scores on the individualization and the organization and planning scales and teachers in the vocabulary intervention group receiving a slightly higher mean score on the robust vocabulary scale. Overall, teachers received the highest ratings on the organization and planning scale and the lowest ratings for individualized instruction.

Amount of Each Type of Instruction

Two-level HLMs (Raudenbush & Bryk, 2002) examining TSM, small-group code- and meaning-focused instruction (minutes/day) revealed substantial within- and between-classroom variability. HLMs were used because small-group instruction was observed at the level of the individual student, so students were nested in classrooms. For TSM-CF, small-group instruction, the within-classroom variance (i.e., student level, r) was 1.32, and the between-classroom variance (u0) was 2.07 (χ2[31] = 519.45, p < .001). The intraclass correlation (ICC) was 0.61, indicating that more than half of the variability in the amount of instruction students received was attributable to the classroom they attended. For TSM-MF, small-group instruction, the within-classroom variance (r) was 15.66, and the between-classroom variance (u0) was 70.13 (χ2[31] = 1688.03, p < .001). The ICC was 0.81. Both ICCs represent very high levels of between-classroom variance (Hedges & Hedberg, 2007).
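For a two-level random-intercept model, the ICC is the between-classroom variance divided by the total variance, ICC = u0 / (u0 + r). A minimal sketch that plugs in the variance components reported in this paragraph; the function name is ours. It reproduces 0.61 for TSM-CF and gives about 0.82 for TSM-MF, close to the reported 0.81:

```python
def icc(between_var: float, within_var: float) -> float:
    """Intraclass correlation for a two-level random-intercept model:
    the share of total variance lying between classrooms (u0 / (u0 + r))."""
    return between_var / (between_var + within_var)


# Variance components from the text (u0 = between, r = within).
icc_cf = icc(between_var=2.07, within_var=1.32)    # TSM-CF, small group
icc_mf = icc(between_var=70.13, within_var=15.66)  # TSM-MF, small group

print(f"TSM-CF ICC = {icc_cf:.3f}")
print(f"TSM-MF ICC = {icc_mf:.3f}")
```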

Multivariate multilevel analyses (HMLM2; Raudenbush et al., 2004) were used to account for the nested structure of the observation data (individual students nested in classrooms) and for the significant between-classroom variance in the amounts of each instruction type (see Table 7). The outcome variables were the types of TSM, small-group and individual instruction; code- and meaning-focused models were run separately to preserve parsimony in these highly complex models. For both models, the unrestricted model provided the best fit (see Raudenbush & Bryk, 2002). Results revealed that students in the ISI intervention spent significantly more time in TSM, small-group and individual meaning- and code-focused instruction than did students in the vocabulary intervention group.

Table 7.

Results of Multilevel Multivariate Models Comparing Teacher/Student-Managed (TSM), Meaning- and Code-Focused, Small-Group and Individual Instruction for Students in ISI and Vocabulary Intervention Classrooms

| Variable | TSM, meaning-focused instruction: coefficient | TSM, meaning-focused instruction: p value | TSM, code-focused instruction: coefficient | TSM, code-focused instruction: p value |
|---|---|---|---|---|
| Fixed effects | | | | |
| Fitted mean (intercept) | −2.33 | .657 | 14.44 | <.001 |
| ISI = 1 | 14.95 | .045 | 30.21 | <.001 |
| Random effect τ (variance) | 340.75 | | 1309.36 | |

Note. ISI = Individualizing Student Instruction. Degrees of freedom for intercept and ISI = 30.

A MANOVA comparing amounts of TSM, whole-class instruction in ISI and vocabulary classrooms (see Table 5 for means) revealed that students in the two conditions spent similar amounts of time in whole-class instruction (Wilks’s lambda = 0.705, F[12, 19] = 0.663, p = .765). MANOVA is appropriate here because whole-class instruction is aggregated to the classroom level, so the data do not have a nested structure. Between-subjects effects for TSM, whole-class instruction revealed no significant differences between conditions in any content area (partial eta squared = 0.001–0.053). Thus, teachers in both groups appeared to provide comparable amounts of whole-class literacy instruction overall.
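With only two groups, the F statistic for Wilks’ lambda has an exact closed form, F = ((1 − Λ) / Λ) × (df2 / df1), where df1 is the number of outcome variables (here the 12 content areas) and df2 = N − df1 − 1. The sketch below checks the reported whole-class statistic; the function name is ours, and a p value would additionally need the F distribution’s survival function (for example, `scipy.stats.f.sf`), omitted here to keep the example dependency-free:

```python
def wilks_to_f_two_groups(wilks_lambda: float, df1: int, df2: int) -> float:
    """Exact F transformation of Wilks' lambda for a two-group MANOVA
    (one hypothesis degree of freedom): F = (1 - L) / L * df2 / df1,
    with df1 = number of outcome variables and df2 = N - df1 - 1."""
    if not 0.0 < wilks_lambda <= 1.0:
        raise ValueError("Wilks' lambda must lie in (0, 1].")
    return (1.0 - wilks_lambda) / wilks_lambda * df2 / df1


# Whole-class MANOVA from the text: 12 content areas, F[12, 19].
f_value = wilks_to_f_two_groups(0.705, df1=12, df2=19)
print(f"F(12, 19) = {f_value:.3f}")
```

The result, about 0.663, matches the reported F[12, 19].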

Precision

The A2i software used in the ISI intervention provided recommended amounts (in minutes/day) of each type of small-group and individual instruction, reflecting predicted C×I interactions, and these recommendations were used to compute difference scores. Again, a difference score was calculated by subtracting a student’s A2i-recommended minutes from the student’s observed minutes of small-group and individual instruction for each instruction type (e.g., TSM-MF). A negative difference score indicates that the student received less than the recommended amount, a positive score indicates more, and a score closer to 0 indicates that the student received more nearly the recommended amount. Because the recommended amounts varied by month and were recalculated after the winter assessments, we computed the difference score for each season separately and then computed the mean difference score for each student. Means, standard deviations, and ranges are provided in Table 8.
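The difference-score procedure described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: each season has one observed and one recommended value per instruction type (how monthly recommendations were matched to observation dates is not specified here), and the student data and function names are hypothetical:

```python
from statistics import mean

SEASONS = ("fall", "winter", "spring")


def difference_score(observed_min: float, recommended_min: float) -> float:
    """Observed minus A2i-recommended minutes/day for one instruction type.
    Negative = less than recommended; positive = more; 0 = exact match."""
    return observed_min - recommended_min


def mean_difference_score(observed: dict[str, float],
                          recommended: dict[str, float]) -> float:
    """Compute a difference score for each season, then average them."""
    return mean(difference_score(observed[s], recommended[s]) for s in SEASONS)


# Hypothetical student: TSM-MF minutes/day observed vs. recommended.
observed = {"fall": 10.0, "winter": 4.0, "spring": 12.0}
recommended = {"fall": 15.0, "winter": 15.0, "spring": 12.0}
print(mean_difference_score(observed, recommended))
```

For this hypothetical student the seasonal scores are −5, −11, and 0, so the mean difference score is about −5.33 minutes/day: on average, the student received less TSM-MF instruction than recommended.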

Table 8.

Difference Scores (minutes/day) by Condition

| Type of instruction | Condition | Mean | Standard deviation | Minimum | Maximum |
|---|---|---|---|---|---|
| TSM code-focused difference score | Vocabulary | −5.08 | 1.37 | −11.71 | 0.28 |
| | ISI | −4.28 | 2.23 | −11.30 | 8.15 |
| TSM meaning-focused difference score | Vocabulary | −9.18 | 8.81 | −22.39 | 29.36 |
| | ISI | −6.14 | 10.56 | −21.94 | 18.38 |
| SM meaning-focused difference score | Vocabulary | −11.17 | 7.13 | −22.97 | 14.33 |
| | ISI | −10.34 | 6.78 | −25.08 | 8.25 |

Note. Difference scores closer to 0 indicate greater precision meeting the A2i recommended amounts. ISI = Individualizing Student Instruction. SM = student-managed. TSM = teacher/student-managed.

HLM analyses, controlling for fall reading comprehension (centered at the sample grand mean) and the schoolwide percentage of students qualifying for free and reduced lunch (FARL), revealed that TSM-CF difference scores were, on average, about 1 minute closer to 0, and hence more precise, for students in the ISI intervention than for students in the vocabulary intervention (see Table 9).

Table 9.

Hierarchical Linear Model Results Comparing Difference Scores by Condition (ISI = 1; vocabulary = 0), Controlling for Fall Reading Comprehension and School Free and Reduced Lunch

| Variable | TSM, code-focused difference score: coefficient | TSM, code-focused difference score: standard error | TSM, meaning-focused difference score: coefficient | TSM, meaning-focused difference score: standard error |
|---|---|---|---|---|
| Intercept or fitted mean | −5.08*** | 0.33 | −9.85*** | 2.20 |
| Student level | | | | |
| Fall RC | 0.009** | 0.003 | 0.03*** | 0.009 |
| Classroom level | | | | |
| ISI | 0.97* | 0.47 | 4.12 | 3.10 |
| School FARL | 0.005 | 0.007 | −0.02 | 0.05 |
| Child × classroom | | | | |
| Fall RC × ISI | 0.001 | 0.004 | 0.03* | 0.01 |
| Fall RC × FARL | −0.0003 | 0.0002 | | |
| Random effects | Variance | Chi-square | Variance | Chi-square |
| Classroom | 1.58 | 262.93 | 75.32*** | 1,479.08 |
| Student | 2.05 | | 17.58 | |

Note. FARL = free and reduced lunch. ISI = Individualizing Student Instruction. RC = reading comprehension. TSM = teacher/student-managed. All continuous variables are grand mean centered.

*p < .05. **p < .01. ***p ≤ .001.
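Because every continuous predictor in Table 9 is grand-mean centered, the model-implied TSM-CF difference score for a student at the sample mean of fall reading comprehension, in a school at the mean FARL rate, reduces to the intercept plus the ISI coefficient. That is where the "about 1 minute" difference comes from. A minimal sketch that evaluates the fixed-effects part of the TSM-CF model; the dictionary and function names are ours, and the random effects are set to 0 (an average classroom):

```python
# TSM-CF fixed effects from Table 9. All continuous predictors are grand-mean
# centered, so a value of 0 means "at the sample (or school) mean".
TSM_CF = {
    "intercept": -5.08,
    "fall_rc": 0.009,
    "isi": 0.97,
    "school_farl": 0.005,
    "fall_rc_x_isi": 0.001,
    "fall_rc_x_farl": -0.0003,
}


def predict_cf(isi: int, fall_rc_c: float = 0.0, farl_c: float = 0.0) -> float:
    """Fixed-effects prediction of a TSM-CF difference score (minutes/day).

    isi: 1 for ISI classrooms, 0 for vocabulary classrooms.
    fall_rc_c, farl_c: centered fall reading comprehension and school FARL.
    Classroom and student random effects are set to 0.
    """
    b = TSM_CF
    return (
        b["intercept"]
        + b["fall_rc"] * fall_rc_c
        + b["isi"] * isi
        + b["school_farl"] * farl_c
        + b["fall_rc_x_isi"] * fall_rc_c * isi
        + b["fall_rc_x_farl"] * fall_rc_c * farl_c
    )


vocab_score = predict_cf(isi=0)
isi_score = predict_cf(isi=1)
print(f"vocabulary {vocab_score:.2f}, ISI {isi_score:.2f}, "
      f"gap {isi_score - vocab_score:.2f} minutes/day")
```

At the means, the sketch gives −5.08 for vocabulary classrooms and −4.11 for ISI classrooms, a gap of 0.97 minutes/day.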

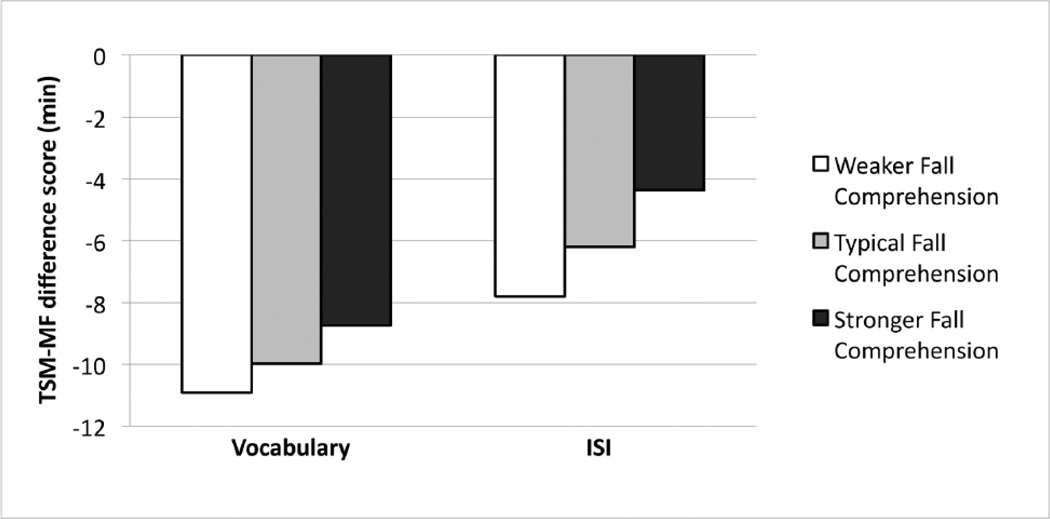

Teachers in the ISI intervention were also more precise than teachers in the vocabulary intervention in providing the recommended amounts of TSM-MF, small-group instruction. That is, the instruction received by students in the ISI group was generally closer to the A2i-recommended amounts (i.e., more precise), by about 4 minutes per day, than was the instruction received by students in the vocabulary group. In addition, the precision of TSM-MF, small-group instruction was greater for students with higher fall reading comprehension scores, and this association was stronger in ISI classrooms (see Figure 4). There were no significant differences in SM-MF difference scores across conditions.

Figure 4.

Difference Scores for Students in the Vocabulary and ISI Classrooms as a Function of Their Fall Reading Comprehension Scores

Note. Fall comprehension = Fall Gates–MacGinitie Reading Tests extended scale scores (ESSs). ISI = Individualizing Student Instruction. TSM-MF = teacher/student-managed, meaning-focused. Reading comprehension is modeled at the 25th (white = 435 ESS), 50th (gray = 462 ESS), and 75th (black = 489 ESS) percentiles of the sample. Difference scores closer to 0 indicate greater precision in providing the A2i-recommended amounts of TSM, meaning-focused, small-group instruction (difference score = observed amount − A2i-recommended amount).
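The lines in Figure 4 can be approximated from the TSM-MF column of Table 9. A minimal sketch, assuming that the sample median of 462 ESS stands in for the grand mean used for centering (the grand mean is not reported in this section) and that school FARL is held at its mean; the names are ours, and random effects are ignored:

```python
# TSM-MF fixed effects from Table 9 (this model has no Fall RC x FARL term).
TSM_MF = {"intercept": -9.85, "fall_rc": 0.03, "isi": 4.12,
          "school_farl": -0.02, "fall_rc_x_isi": 0.03}

# ASSUMPTION: center fall RC at the sample median (462 ESS) as a stand-in
# for the grand mean, which is not reported here.
CENTER_ESS = 462
PERCENTILES_ESS = {"25th": 435, "50th": 462, "75th": 489}


def predict_mf(isi: int, fall_rc_ess: float, farl_c: float = 0.0) -> float:
    """Fixed-effects TSM-MF difference score (minutes/day) for one condition
    (isi = 1 for ISI, 0 for vocabulary) at a given fall RC score in ESS."""
    rc = fall_rc_ess - CENTER_ESS
    b = TSM_MF
    return (b["intercept"] + b["fall_rc"] * rc + b["isi"] * isi
            + b["school_farl"] * farl_c + b["fall_rc_x_isi"] * rc * isi)


for label, ess in PERCENTILES_ESS.items():
    vocab_score = predict_mf(0, ess)
    isi_score = predict_mf(1, ess)
    print(f"{label} ({ess} ESS): vocabulary {vocab_score:.2f}, "
          f"ISI {isi_score:.2f}")
```

Under these assumptions, predicted difference scores move toward 0 as fall reading comprehension rises, and the ISI advantage widens from about 3.3 minutes/day at the 25th percentile to about 4.9 at the 75th. This widening reflects the positive Fall RC × ISI coefficient.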

Using HLMs, we then examined the association between the precision of TSM-MF, small-group instruction and reading comprehension outcomes. We included all students because some drift of the ISI intervention was likely; notably, some vocabulary teachers used small-group instruction. Results, controlling for schoolwide FARL and fall reading comprehension scores, revealed that the more precisely teachers provided the A2i-recommended amounts (i.e., the closer the difference score was to 0 minutes), the greater were students’ reading comprehension gains (coefficient = 0.734, standard error = 0.02, p = .001). Across the range of difference scores observed for students in ISI classrooms (from −21.95 to 0 minutes), the effect size (d) was 0.64.

DISCUSSION

The results of this study revealed that the ISI intervention designed to explicitly consider C×I interactions was generally more effective in improving students’ reading comprehension than was instruction of similar quality that did not take into account C×I interactions. Supporting our hypothesis, we found that, on average, students in the ISI condition demonstrated greater gains in reading comprehension overall than did students in the vocabulary condition. Moreover, teachers in the ISI condition, as compared with the vocabulary condition, were more likely to provide the amounts of each type of small-group and individual instruction recommended by A2i, as evidenced by significantly smaller differences between the observed and recommended amounts of instruction (i.e., difference scores that were generally closer to 0).