Abstract

In this study, we evaluated the effects of positional prompts on teaching receptive identification to six children diagnosed with autism spectrum disorder (ASD). The researchers implemented a most-to-least prompting system using a three-level hierarchy to teach receptive picture identification. Within the prompting hierarchy, only positional prompts were used. The most assistive prompt was placing the target stimulus 12 in. closer to the participant, the less assistive prompt was placing the target stimulus 6 in. closer to the participant, and no prompt was placing the target stimulus in line with the alternative stimuli. A non-concurrent multiple baseline design across behaviors was used to evaluate the effectiveness of the positional prompt. Results indicated that, with positional prompts, the majority of the participants reached the mastery criterion and maintained skills at follow-up. The results of the study have both clinical and research implications.

Keywords: Autism, Most-to-least prompting, Prompts, Positional prompts, Transfer of stimulus control

Prompts are often used throughout the course of instruction provided for individuals diagnosed with an autism spectrum disorder (ASD) (Green, 2001; Leaf et al., 2014a; MacDuff, Krantz, & McClannahan, 2001). Effective prompts are antecedent manipulations that alter the stimulus conditions in a manner that increases the likelihood of the desired response (Green, 2001; Grow & LeBlanc, 2013; MacDuff et al., 2001; Krantz & McClannahan, 1998; Wolery, Ault, & Doyle, 1992a). For instance, in the case of teaching receptive labels, the teacher may physically guide the learner’s hand to the correct stimulus in an array after delivering an instruction to “touch apple.” Prompts should be faded in a way that gradually shifts stimulus control from the auxiliary, extra, or artificial stimulus (MacDuff et al., 2001) to the stimulus that should occasion the learner’s response in the criterion environment.

Today, there are numerous prompt types utilized by individuals providing treatment for children diagnosed with ASD (Green, 2001; MacDuff et al., 2001). These include but are not limited to the following: verbal, modeling, manual, gestural, photographs and line drawings, and textual (see MacDuff et al., 2001 for a review). It can be difficult to determine when to provide a prompt, when to fade a prompt, and what level of assistance to provide. Therefore, several prompt fading systems have been developed which include, but are not limited to, constant time delay (e.g., Walker, 2008), progressive time delay (e.g., Walker, 2008), simultaneous prompting (e.g., Leaf, Sheldon, & Sherman, 2010), no-no prompting (e.g., Leaf et al., 2010), flexible prompt fading (e.g., Soluaga, Leaf, Taubman, McEachin, & Leaf, 2008), and least-to-most prompting (e.g., Libby, Weiss, Bancroft, & Ahearn, 2008). Another common prompt fading system is most-to-least prompting (e.g., Libby et al., 2008). In most-to-least prompting, the therapist begins by providing the most assistive prompt, usually a controlling prompt, which should guarantee a correct response (Wolery, Holcombe, Werts, & Cipolloni, 1992), and systematically fades to a less assistive prompt (e.g., a non-controlling prompt which increases the likelihood of a correct response). Over time, the goal is to transfer stimulus control from the most assistive prompt to the desired controlling stimulus while limiting errors.

When using only one or a variety of prompt types, there is often a risk of prompt dependence or failure to transfer stimulus control to the desired stimuli. When this occurs, a learner might not engage in an approximation of the terminal response without the provision of a prompt or fail to respond correctly when prompts are completely faded. As a result of these two common concerns, some authors have recommended against the use of particular prompt types altogether. For example, Grow and LeBlanc (2013) recommend against the use of extra-stimulus prompts, such as position prompts, to avoid establishing faulty stimulus control during teaching.

To use position prompts, the target item is moved closer to the student while the other item(s) in the array remain farther away, which increases the likelihood of selecting the correct, closer item (Lovaas, 2003). For example, in a match-to-sample task using three stimuli, the teacher may place the target stimulus closer to the participant than the other stimuli and gradually move the target back to the original field. Transfer of stimulus control is displayed when the learner responds correctly with all of the stimuli equidistant from the learner. Despite recommendations against their use, position prompts have been commonly implemented in clinical settings, recommended in curricular books (e.g., Lovaas, 1981, 2003), and evaluated in empirical investigations when combined with other prompt types (e.g., Leaf et al., 2014a; Soluaga et al., 2008).

Soluaga et al. (2008) compared the use of a time delay prompting procedure to flexible prompt fading to teach receptive identification to five children diagnosed with ASD. The authors evaluated five different prompts (i.e., physical, gestural, 2D, reduction of the field, and positional) to determine what controlling prompt to use with the time delay prompting procedure. Additionally, positional prompts were used as part of teaching in the flexible prompt fading condition. The results of the study indicated that both prompting procedures were effective, and the efficiency was idiosyncratic to the learner. Although the results showed the effectiveness of the two prompting procedures, there were no data on how frequently positional prompts were implemented. Furthermore, data were not reported on the accuracy of the participants’ responses when positional prompts were used.

In 2014, Leaf and colleagues compared most-to-least prompting to error correction for two young children diagnosed with ASD. A four-step prompt hierarchy was used in the most-to-least procedure for both participants. A positional prompt was the second most assistive prompt used for one of the participants and the least assistive prompt for the other participant. The results of the study showed that both of the procedures were effective in teaching the participants the receptive tasks. The authors evaluated participant responding during teaching but did not specifically evaluate the rate of correct responding when a positional prompt was provided. Similarly, Leaf, Leaf, Taubman, McEachin, and Delmolino (2014b) compared flexible prompt fading to error correction to teach five children, diagnosed with ASD, to vocally state pictures of Muppet© characters. Once again, positional prompts were used as part of the flexible prompt fading procedures. Results of the study showed that across two different sites, participants learned the skills taught using the flexible prompt fading procedure. However, as in the two previous studies, there were no data indicating participant responding when a positional prompt was presented.

While the use of positional prompts in combination with other prompt types (e.g., flexible prompt fading) has been shown to be an effective teaching tool, it is unclear if position prompts alone would yield similar results. Additionally, professional recommendations against the use of positional prompts (e.g., Grow & LeBlanc, 2013) may result in professionals avoiding the use of potentially effective prompt types. Therefore, research evaluating the use of positional prompts in the absence of other prompt types is warranted to determine if positional prompts can be effective and to evaluate best practice recommendations (e.g., Grow & LeBlanc, 2013). The purpose of the present study was to evaluate the effects of positional prompts implemented in a most-to-least prompting system, in the absence of other prompt types, on receptive label acquisition with six children diagnosed with ASD.

Method

Participants

Six children all independently diagnosed with ASD participated in this study. All participants had a previous history with discrete trial teaching (DTT). Each participant had a learning history with flexible prompt fading (Soluaga et al., 2008) in which multiple prompt types were used (e.g., vocal prompts, reduction of the field prompts, physical prompts, model prompts, and multiple alternatives). However, each participant had minimal experience with positional prompts prior to this study. At the time of the study, all participants were receiving behavioral intervention which included programming for receptive labels. Table 1 provides the Peabody Picture Vocabulary Standard Score and Expressive One Word Standard Score for each of the six participants.

Table 1.

Participant demographic information

| Participant | Expressive One Word Standard Score | Peabody Picture Vocabulary Standard Score |
|---|---|---|
| Michael | 90 | 85 |
| Dwight | 97 | 109 |
| Andy | 145 | 120 |
| Pam | 145 | 132 |
| Jim | 106 | 90 |
| Angela | 84 | 81 |

Michael was a 9-year-old male who was placed in a general education classroom with behavioral supports. Michael could expressively label over 1000 items but rarely displayed spontaneous language. He could sustain attending for approximately 5 min prior to the start of the study. Michael displayed high rates of stereotypic behavior, including hand flapping, rocking, and making vocal noises. Michael had no previous history with positional prompting but had 6 years of experience with DTT.

Dwight was a 7-year-old male who was placed in a special education classroom. He could expressively label over 1000 words, displayed spontaneous language, could attend for up to 15 min in duration, and displayed moderate rates of stereotypic behavior. Dwight had no previous history with positional prompting but had 5 years of experience with DTT.

Andy was a 4-year-old male who was placed in a regular education classroom with supports. He communicated using full sentences, had age typical play skills, and displayed low rates of stereotypic behavior but frequently engaged in non-compliant behavior. Andy had minimal experience with positional prompts and had 1 year of experience with DTT.

Pam was a 4-year-old female who was receiving applied behavior analysis (ABA) intervention as her primary form of education. She communicated using full sentences, had age typical play skills, and displayed low rates of stereotypic behavior but frequently engaged in non-compliant behavior and tantrums. Pam had minimal experience with positional prompts and had 1 year of experience with DTT.

Jim was a 4-year-old male who was receiving ABA-based intervention as his primary form of education. He communicated using full sentences, had limited play skills, and displayed low rates of stereotypic behavior but frequently engaged in non-compliant behavior. Jim had no previous experience with positional prompts and had 6 months of experience with DTT.

Angela was a 4-year-old female who was receiving ABA-based intervention as her primary form of education. She was fully conversational, had limited play skills, and displayed low rates of stereotypic behavior but frequently engaged in non-compliant behavior. Angela had minimal experience with positional prompts and had 6 months of experience with DTT.

Setting

All sessions occurred in a small research room at a private clinic located in Southern California that provided comprehensive behavioral intervention for individuals diagnosed with ASD. The room contained a table and chairs as well as other furniture and educational materials (e.g., books). The table was marked with a minimally visible grid for use by the interventionists during the prompting condition. The grid was intended to keep the position of the stimuli consistent across days, trials, and interventionists. Sessions occurred once a day up to 5 days per week and lasted approximately 15 min.

Targets

Prior to baseline, the researchers met with the participants’ parents or clinical supervisor (i.e., the person in charge of developing programming) to determine the targets for the study. The researchers, parents, and/or clinical supervisors selected targets in which the participant’s peers showed interest so that the participant could participate in conversations about the targets and/or play appropriately with the targets. As such, the researchers selected unknown pictures of either cartoon characters, comic book characters, sports teams, or athletes for use during the intervention (see Table 2). Three sets of stimuli were introduced for each participant, with each set consisting of three different stimuli (i.e., nine pictures in total), with the exception of Angela, with whom only two sets were used. None of the targets were available for teaching outside of research sessions.

Table 2.

Skills taught to each participant

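| Participant | Set one | Set two | Set three |
|---|---|---|---|
| Michael | Ohio State<sup>a</sup>, Notre Dame<sup>b</sup>, Florida State<sup>c</sup> | USC<sup>a</sup>, Oregon<sup>b</sup>, Alabama<sup>c</sup> | TCU<sup>a</sup>, Michigan State<sup>b</sup>, Baylor<sup>c</sup> |
| Dwight | Oregon<sup>a</sup>, USC<sup>b</sup>, Alabama<sup>c</sup> | Dwight Howard<sup>a</sup>, Carmelo Anthony<sup>b</sup>, Tim Duncan<sup>c</sup> | Mike Trout<sup>a</sup>, Peyton Manning<sup>b</sup>, Kobe Bryant<sup>c</sup> |
| Andy | Colossus<sup>a</sup>, Sabertooth<sup>b</sup>, Sentinel<sup>c</sup> | Galactus<sup>a</sup>, Apocolypse<sup>b</sup>, Thanos<sup>c</sup> | Modok<sup>a</sup>, Task Master<sup>b</sup>, Baron Zemo<sup>c</sup> |
| Pam | Magneto<sup>a</sup>, Professor X<sup>b</sup>, Wolverine<sup>c</sup> | Modok<sup>a</sup>, Baron Zemo<sup>b</sup>, Task Master<sup>c</sup> | Mirror Master<sup>a</sup>, Bane<sup>b</sup>, Sinesto<sup>c</sup> |
| Jim | Raptor<sup>a</sup>, Trex<sup>b</sup>, Stegosaurs<sup>c</sup> | Galactus<sup>a</sup>, Thanos<sup>b</sup>, Apocolypse<sup>c</sup> | Mirror Master<sup>a</sup>, Bane<sup>b</sup>, Sinesto<sup>c</sup> |
| Angela | Raptor<sup>a</sup>, Trex<sup>b</sup>, Stegosaurs<sup>c</sup> | Glimmer<sup>a</sup>, Terence<sup>b</sup>, Merida<sup>c</sup> |  |

<sup>a</sup>Represents target 1 for each set

<sup>b</sup>Represents target 2 for each set

<sup>c</sup>Represents target 3 for each set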

Behavior Coding

The researchers implemented conventional DTT within both probe sessions (see below) and teaching sessions (see below). A response was defined as the first stimulus the participant touched after the instruction. The participants’ hands were not allowed to be in contact with any of the stimuli at the onset of the trial. On each trial during probe sessions, the participants’ responses were categorized as correct, incorrect, or no response. A correct response was defined as anytime the participant touched the target stimulus within 5 s of the instruction. An incorrect response was defined as anytime the participant touched a stimulus that did not correspond with the interventionist’s instruction, touched two stimuli simultaneously, or stated she/he did not know the correct stimulus (e.g., “I don’t know”). No response was defined as anytime the participant did not touch any stimulus within 5 s of the interventionist’s instruction.

On each trial during teaching, participant responding was also categorized as correct, incorrect, or no response as defined above. In addition to these measures, prompted correct and incorrect responses were measured during teaching sessions. A prompted correct response was defined as anytime the participant touched the target stimulus within 5 s of the instruction when the target stimulus was 0 or 6 in. from the participant. A prompted incorrect response was defined as anytime the participant touched any stimulus that did not correspond with the interventionist’s instruction, touched two stimuli simultaneously, touched none of the stimuli, or stated she/he did not know the correct stimulus (e.g., “I don’t know”) when the target stimulus was placed 0 or 6 in. away from the participant.

The data sheets used for scoring trials during daily probe and teaching sessions were designed based on Grow and LeBlanc’s (2013) best practice recommendations for receptive language instruction (see Fig. 1 in Grow and LeBlanc for a detailed example). The data sheets were designed to counterbalance, in a semi-randomized fashion, the positions of the three stimuli within each comparison array and the stimulus designated as correct on each trial.

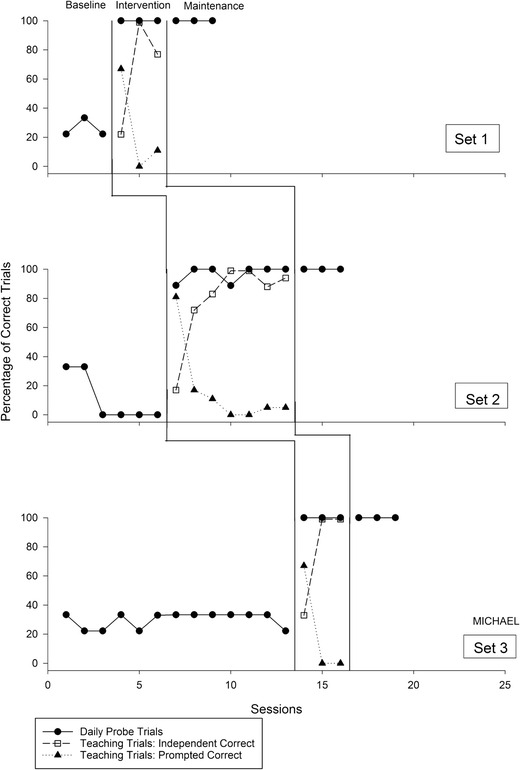

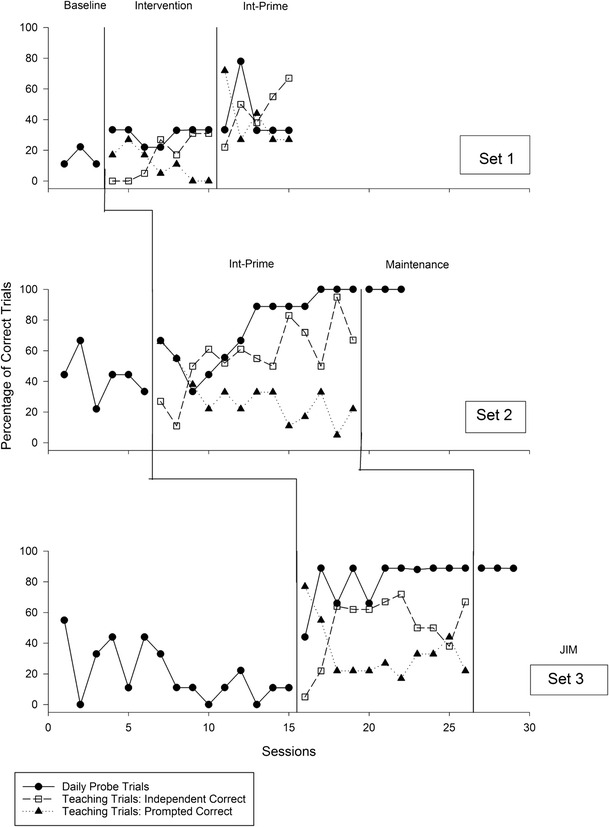

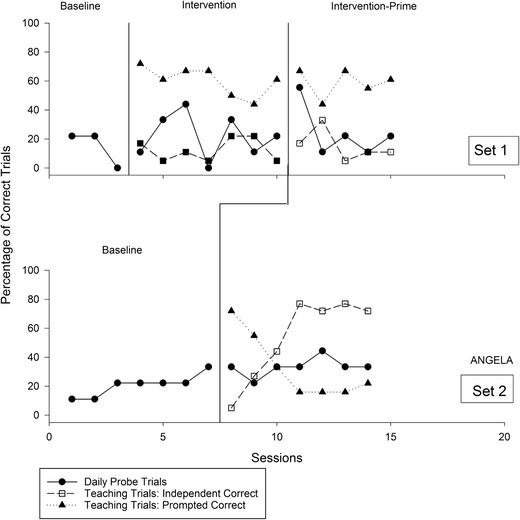

Fig. 1.

Closed circles indicate the percentage of trials with correct responses during daily probe sessions; open squares and closed triangles indicate the percentage of trials with independent and prompted correct responses during teaching sessions, respectively

Dependent Measures

The primary dependent variable was the percentage of trials with correct responding during daily probe sessions (described below). The researchers took the total number of correct trials and divided by the total number of trials to determine the percentage of correct responses per session. Daily probe sessions were used to determine baseline levels, mastery criterion, and maintenance. The mastery criterion was defined as 100 % correct on all targets within a set across three consecutive daily probe sessions.
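The probe calculation and mastery rule described above can be sketched in a few lines of Python. This is only an illustrative sketch: the function names and the string labels for trial outcomes are hypothetical and are not taken from the study's data sheets.

```python
from typing import Optional, Sequence


def percent_correct(outcomes: Sequence[str]) -> float:
    """Percentage of trials scored 'correct' in one daily probe session.

    Outcome labels ('correct', 'incorrect', 'no response') are assumed here.
    """
    if not outcomes:
        raise ValueError("a session needs at least one trial")
    n_correct = sum(1 for outcome in outcomes if outcome == "correct")
    return 100.0 * n_correct / len(outcomes)


def session_of_mastery(session_percentages: Sequence[float],
                       run_length: int = 3) -> Optional[int]:
    """Return the 1-based session that completes the first run of
    `run_length` consecutive 100 % probe sessions, or None if no run occurs."""
    streak = 0
    for session_number, pct in enumerate(session_percentages, start=1):
        streak = streak + 1 if pct == 100.0 else 0
        if streak >= run_length:
            return session_number
    return None
```

For example, a set probed at 33.3, 100, 100, 88.9, 100, 100, and 100 % would meet the criterion on the seventh session, because the run of perfect sessions restarts after the 88.9 % session.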

The second measure evaluated within this study was the total percentage of correct and prompted correct responses during teaching sessions. To calculate the percentage of correct responses, the researchers summed the correct responses, divided by the total number of trials, and multiplied by 100. To calculate the percentage of prompted correct responses, the researchers summed the prompted correct responses, divided by the total number of trials, and multiplied by 100. Percentage of prompted correct responses was calculated for each level of prompt (i.e., 0, 6, 12 in.). We also evaluated the total percentage of correct responding across all prompt levels and participants.
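As a minimal sketch of these teaching-session percentages, the following Python function tallies independent and prompted correct responses from a list of trials. The trial representation (a distance in inches paired with a correct/incorrect flag) and all names are assumptions made for illustration, and the denominator is the total number of trials in the session, as the text states.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Each trial: (distance of target from table edge in inches, response correct?)
# 0 and 6 in. are prompted trials; 12 in. is the unprompted position.
Trial = Tuple[int, bool]


def teaching_percentages(trials: List[Trial]) -> Dict[str, float]:
    """Percentage of independent and prompted correct responses in a session."""
    if not trials:
        raise ValueError("a session needs at least one trial")
    total = len(trials)
    # Count correct responses separately at each target distance.
    correct_by_distance = Counter(inches for inches, ok in trials if ok)
    return {
        "independent_correct": 100.0 * correct_by_distance[12] / total,
        "prompted_correct_0in": 100.0 * correct_by_distance[0] / total,
        "prompted_correct_6in": 100.0 * correct_by_distance[6] / total,
    }
```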

The third measure was the overall percentage of correct responding across the three prompting levels (see below) for each set and the overall percentage correct across all teaching sessions. The final measure of the study was a trial-by-trial analysis of the prompt level provided for each target, for each participant, across all sets. The researchers analyzed each trial during teaching sessions to determine which prompt level was provided.

Daily Probe Sessions

The interventionists implemented daily probe sessions during baseline, intervention, and maintenance. Daily probe sessions consisted of nine total trials; three for each stimulus. The comparison array was counterbalanced across trials so that the correct comparison was present in each location (i.e., right, center, left) an equal number of times. To begin a trial, the interventionist presented the comparison array 12 in. from the edge of the table where the participant was seated. The interventionist then delivered an instruction to select one of the stimuli (e.g., “Touch Sabertooth”). The interventionist provided 5 s for the participant to respond. If the participant did not respond within 5 s, the interventionist instructed the participant to make a selection (e.g., “You need to try”). Following a response, regardless of accuracy, the interventionist responded with a neutral statement (e.g., “Thanks” or “Thank you”). No programmed reinforcement was delivered for correct, incorrect, or no responses; however, praise was delivered for general compliance (e.g., sitting at the table, touching rather than picking up the correct stimulus) and not engaging in any other aberrant behavior.
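One way to build a nine-trial probe that satisfies the counterbalancing described above is sketched below. The study used pre-designed data sheets, so this generator is only a hypothetical illustration; the function name, the dictionary layout, and the use of random shuffling are all assumptions.

```python
import random
from typing import Dict, List, Optional, Sequence

POSITIONS = ("left", "center", "right")


def build_probe_trials(stimuli: Sequence[str],
                       rng: Optional[random.Random] = None) -> List[Dict]:
    """Return nine trials: each stimulus is the target three times,
    once in each of the left, center, and right positions."""
    if len(stimuli) != 3 or len(set(stimuli)) != 3:
        raise ValueError("expected three distinct stimuli")
    if rng is None:
        rng = random.Random()
    trials = []
    for target in stimuli:
        distractors = [s for s in stimuli if s != target]
        for slot in range(len(POSITIONS)):
            rng.shuffle(distractors)       # vary which distractor goes where
            array = list(distractors)
            array.insert(slot, target)     # place the target in this position
            trials.append({"target": target, "array": tuple(array)})
    rng.shuffle(trials)                    # semi-randomize trial order
    return trials
```

Calling `build_probe_trials(["Colossus", "Sabertooth", "Sentinel"])` yields nine trials in which the correct comparison appears in each location three times.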

Baseline

Baseline consisted of one daily probe (described above) per session. After the daily probe, the interventionist returned the participant to his or her regularly planned treatment session.

Intervention

No daily probe session was conducted on the first day of intervention for each set. All intervention sessions following the first day of intervention started with a daily probe, followed by a short break before teaching. Trials during teaching sessions were similar to trials during daily probe sessions in that the comparison array and the item targeted were counterbalanced across trials. Each stimulus within the set was the target for six trials with a total of 18 trials during each intervention session.

Positional prompts served as the only prompt type during intervention. To help ensure procedural fidelity, the interventionists lightly marked the table to identify the location of the stimuli and where the positional prompt should be provided (the same table with marks was used during both baseline and the maintenance conditions). The marks were provided so the position of the stimuli remained consistent across days, trials, and interventionists. On each level, there were three marks provided to identify the target on the left, center, and right positions. The three positions were marked X, Y, and Z. The X position was placed on the edge of the table (0 in. away from the participant) closest to where participants were sitting and represented the most assistive prompt provided within teaching sessions. The Y position was placed 6 in. from the edge of the table. The Z position was the location the stimuli were located on each trial during daily probe sessions (i.e., 12 in. away from the edge of the table where the participant was seated). When the target stimuli were 12 in. from the participant (i.e., the Z position), no prompt was provided.

The interventionist used a most-to-least prompting procedure (MacDuff et al., 2001) during all teaching sessions. The most-to-least prompting procedure consisted of moving the target stimulus closer or farther away from the participant. The interventionists used a three-level prompting hierarchy: when the target was 0 in. from the participant, it was considered the most assistive prompt; when the target was 6 in. from the participant, it was considered the second most assistive prompt; and when the target was 12 in. from the participant, there was no prompt provided. Across all three levels, only the target stimulus was placed closer (i.e., 0 or 6 in.) while the other two stimuli were always placed 12 in. from the participant.

A prompting hierarchy was applied to each stimulus such that each stimulus could move up and down the hierarchy regardless of performance with the other stimuli. The criterion to move to a less assistive prompt was the participant engaging in two correct prompted responses in a row. For example, if the participant selected the correct stimulus on two consecutive trials with the stimulus 0 in. from the participant, that stimulus would be presented 6 in. from the participant on the following trial with that stimulus. The criterion to move to a more assistive prompt was the participant engaging in a single incorrect or prompted incorrect response. For example, if the participant selected an incorrect stimulus when the target stimulus was 12 in. from the participant (i.e., no prompt), the target stimulus would be placed 6 in. from the participant on the next trial with that stimulus. On the first teaching session, the target stimulus always started 0 in. from the participant (i.e., most-to-least prompting).
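The movement rules above can be expressed as a small per-stimulus tracker. The Python sketch below is illustrative only: the class name is hypothetical, and it assumes that the count of consecutive correct responses resets whenever the prompt level changes or an error occurs, a detail the text does not specify.

```python
LEVELS = (0, 6, 12)  # inches from the table edge; 12 in. means no prompt


class PromptTracker:
    """Tracks the prompt level for one stimulus across teaching trials."""

    def __init__(self) -> None:
        self.index = 0    # start at 0 in., the most assistive prompt
        self.streak = 0   # consecutive correct responses at the current level

    @property
    def distance(self) -> int:
        return LEVELS[self.index]

    def record(self, correct: bool) -> int:
        """Record one trial outcome; return the distance for the next trial."""
        if correct:
            self.streak += 1
            # Two correct responses in a row: fade to a less assistive level.
            if self.streak >= 2 and self.index < len(LEVELS) - 1:
                self.index += 1
                self.streak = 0   # assumption: streak restarts at new level
        else:
            # A single error: return to the next more assistive level.
            self.streak = 0
            self.index = max(0, self.index - 1)
        return self.distance
```

One tracker would be kept for each of the three stimuli in a set, so that each stimulus moves through the hierarchy independently of the others.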

To begin a trial, the interventionist presented the stimulus array with the correct stimulus located in the predetermined position both horizontally (i.e., left, middle, or right) and vertically (i.e., 0, 6, or 12 in. away from the participant). Then the interventionist delivered an instruction to select the targeted stimulus for that trial (e.g., “Where is Thanos?”). If the participant selected the correct stimulus from the array, the interventionist provided praise (e.g., “That’s right!”) and moved on to the next trial. Praise was selected because it had been demonstrated to be an effective reinforcer during clinical sessions. Moreover, conditioning social praise as a reinforcer was part of all of the participants’ daily programming (Leaf et al., 2016). The names of the stimuli were not provided during feedback. If the participant selected the incorrect stimulus on any trial, the interventionist provided informational feedback (e.g., “That’s not it.”) and moved on to the next trial with a more assistive prompt.

Intervention Prime

After seven sessions with the first set, Jim and Angela were responding at levels similar to baseline that could still be considered chance levels. The researchers hypothesized that praise may not have served as a reinforcer, so a token reinforcement system was implemented. A token paired with praise was delivered contingent on a correct response. The completed token board was then exchanged for access to a treasure chest that contained various toys (e.g., swords, cars, putty).

Maintenance

Maintenance was identical to baseline (see above). Maintenance data were taken an average of 15 days (range, 2 to 23), 4 days (range, 2 to 6), and 4 days (range, 2 to 7) after teaching had concluded for Michael on sets 1, 2, and 3, respectively. For Dwight, maintenance data were taken an average of 7 days (range, 3 to 19), 10 days (range, 7 to 14), and 9 days (range, 5 to 14) after teaching had concluded on sets 1, 2, and 3, respectively. For Andy, maintenance data were taken an average of 9 days (range, 2 to 15), 8 days (range, 2 to 13), and 6 days (range, 4 to 7) after teaching had concluded on sets 1, 2, and 3, respectively. For Pam, maintenance data were taken an average of 6 days (range, 4 to 9), 24 days (range, 14 to 29), and 7 days (range, 3 to 10) after teaching had concluded on sets 1, 2, and 3, respectively.

Because Jim did not reach mastery criterion on set 1, maintenance data were not taken. Maintenance data were taken an average of 15 days (range, 13 to 17) and 12 days (range, 9 to 14) after teaching had concluded for Jim for sets 2 and 3, respectively. Because Angela never reached mastery criterion on set 1 or set 2, maintenance data were not collected.

Experimental Design

A non-concurrent multiple baseline design across stimuli (Harvey, May, & Kennedy, 2004; Watson & Workman, 1981), replicated across participants, was used to evaluate the effects of positional prompts on the acquisition of the different targets with each of the participants. Sessions lasted up to 15 min and occurred 2 to 5 days a week dependent upon participant availability (e.g., if the participant had a session in the clinical office).

Interobserver Agreement and Treatment Fidelity

The interventionist recorded responding on each trial during each daily probe session and teaching session. A second observer independently recorded responding on each trial during 36.2 % of daily probe sessions (range, 33 to 40.9 % across participants) and 36.4 % of teaching sessions (range, 28.5 to 36.8 % across participants). Agreement was defined as both observers marking the same response occurring on the same trial. Interobserver agreement was calculated by dividing the total number of agreements by the total number of agreements plus disagreements and multiplying by 100. Percentage agreement across probes was 99.6 % (range, 97.7 to 100 % across participants) and 100 % for teaching sessions.
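The trial-by-trial agreement calculation described above can be written as a short Python function. This is a minimal sketch; the function name and the outcome labels in the usage example are hypothetical.

```python
from typing import Sequence


def interobserver_agreement(primary: Sequence[str],
                            secondary: Sequence[str]) -> float:
    """Trial-by-trial agreement: agreements / (agreements + disagreements) x 100."""
    if len(primary) != len(secondary) or not primary:
        raise ValueError("both observers must score the same non-empty set of trials")
    # An agreement is a trial on which both observers recorded the same response.
    agreements = sum(1 for a, b in zip(primary, secondary) if a == b)
    disagreements = len(primary) - agreements
    return 100.0 * agreements / (agreements + disagreements)
```

For instance, if the two observers match on eight of nine probe trials, the function returns roughly 88.9.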

Treatment fidelity was assessed to ensure the interventionist implemented daily probe sessions correctly across baseline, intervention, and maintenance conditions. An independent observer recorded the interventionists’ implementation of daily probe sessions in 31.1 % of sessions across the baseline, intervention, and maintenance conditions. Correct interventionist behaviors were (1) placing the comparison array in the correct positions as indicated by the data sheet, (2) providing the correct instruction, (3) providing 5 s for the participant to respond, and (4) providing neutral feedback regardless of accuracy. The interventionists implemented trials during daily probe sessions correctly during 99.8 % of sessions (range, 99.3 to 100 % across participants).

Treatment fidelity was also assessed to ensure the interventionist implemented the trials during teaching sessions correctly during the intervention condition. An independent observer recorded the interventionists’ implementation of the positional prompting hierarchy during 31.4 % of teaching sessions. Correct interventionist behaviors were (1) placing the comparison array in the correct positions as indicated by the data sheet, (2) placing the correct comparison in the correct position (i.e., X, Y, or Z) on the table, (3) providing 5 s for the participant to respond, (4) providing praise, or a token during sessions with Jim and Angela, following a correct response, and (5) providing corrective feedback following an incorrect response. The interventionists implemented the positional prompting procedure correctly during 99.6 % of trials (range, 97.2 to 100 % across participants).

Results

Performance on Daily Probe Sessions

Figures 1, 2, 3, 4, 5, and 6 display the percentage of correct trials during daily probe sessions for each of the six participants. These data are depicted by closed circles. Michael reached the mastery criterion across all three sets of stimuli. During the baseline condition, Michael responded correctly on a low percentage of trials during probe sessions, near chance levels. Michael reached mastery criterion in 3, 7, and 3 daily probe sessions for the first, second, and third set of stimuli, respectively. Michael responded correctly on all trials during teaching (i.e., 100 % of trials) in the maintenance condition across all three sets of stimuli.

Fig. 2.

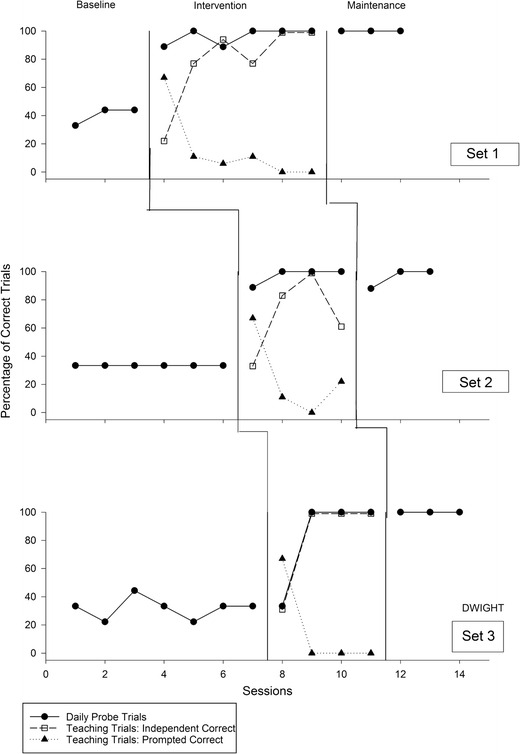

Closed circles indicate the percentage of trials with correct responses during daily probe sessions; open squares and closed triangles indicate the percentage of trials with independent and prompted correct responses during teaching sessions, respectively

Fig. 3.

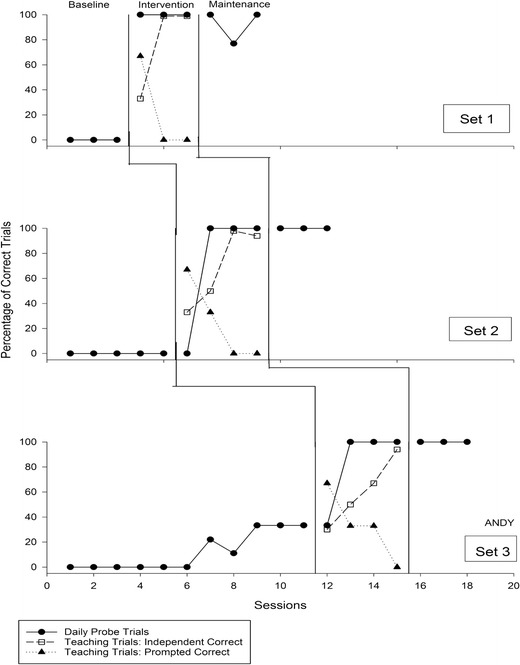

Closed circles indicate the percentage of trials with correct responses during daily probe sessions; open squares and closed triangles indicate the percentage of trials with independent and prompted correct responses during teaching sessions, respectively

Fig. 4.

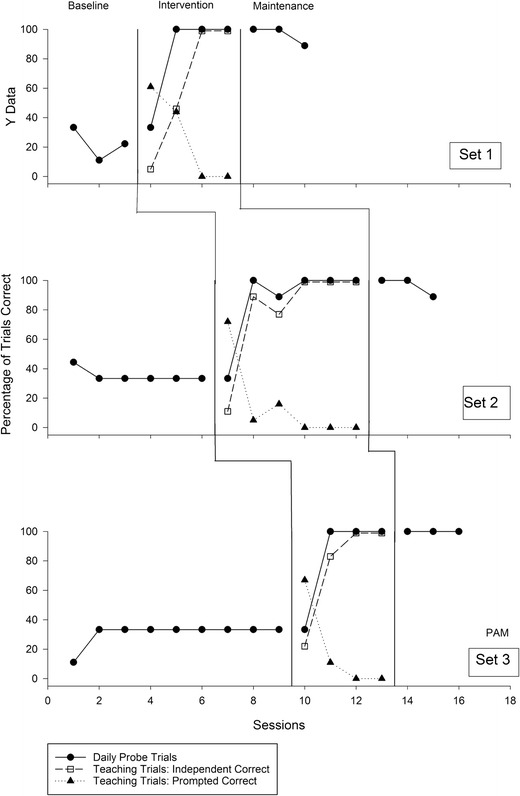

Closed circles indicate the percentage of trials with correct responses during daily probe sessions; open squares and closed triangles indicate the percentage of trials with independent and prompted correct responses during teaching sessions, respectively

Fig. 5.

Closed circles indicate the percentage of trials with correct responses during daily probe sessions; open squares and closed triangles indicate the percentage of trials with independent and prompted correct responses during teaching sessions, respectively

Fig. 6.

Closed circles indicate the percentage of trials with correct responses during daily probe sessions; open squares and closed triangles indicate the percentage of trials with independent and prompted correct responses during teaching sessions, respectively

Dwight also reached the mastery criterion on all three sets of stimuli. During the baseline condition, Dwight responded correctly on a low percentage of trials during probe sessions, near chance levels. Dwight reached mastery criterion in 6, 4, and 4 daily probe sessions for the first, second, and third set of stimuli, respectively. Dwight responded correctly on 100 % of trials on all but one daily probe session during the assessment of maintenance.

Andy reached mastery criterion on all three sets of stimuli. During the baseline condition, Andy responded correctly on a low percentage of trials during probe sessions. Although there was an increase in correct responding during baseline with set 3, performance was still at chance levels. Andy reached mastery criterion in 3, 4, and 4 daily probe sessions for the first, second, and third set of stimuli, respectively. Andy responded correctly on 100 % of trials on all but one daily probe session during the assessment of maintenance.

Pam reached mastery criterion on all three sets of stimuli. During the baseline condition, Pam responded correctly on a low percentage of trials during probe sessions, near chance levels. Pam reached mastery criterion in 4, 6, and 4 daily probe sessions for the first, second, and third set of stimuli, respectively. Pam responded correctly on 100 % of trials on all but two daily probe sessions during the assessment of maintenance.

Jim had varied levels of correct responding across the three sets. For the first set, Jim responded correctly on a low percentage of trials during the baseline condition. After seven sessions of intervention, no improvement was observed and a token reinforcement system was implemented (i.e., intervention-prime). After five sessions within this condition, Jim continued to show no improvement and due to ethical reasons (e.g., not prolonging unsuccessful intervention) intervention was discontinued. For the second set, Jim displayed variable levels of correct responding during the baseline condition. Jim reached mastery criterion on the 13th session in the intervention-prime condition. Jim continued to respond correctly on 100 % of trials during all sessions in the maintenance condition. For the third set of stimuli, Jim again displayed variable levels of correct responding in the baseline condition. During the intervention-prime condition, Jim did not reach mastery criterion; however, he did respond correctly on a high percentage of trials. During the assessment of maintenance, he responded incorrectly on one trial during each session.

Angela did not reach the mastery criterion with either set of stimuli. No improvement in correct responding was observed with the first set of stimuli in either the intervention or intervention-prime condition, and due to ethical considerations (e.g., not prolonging unsuccessful intervention), intervention was discontinued. However, to ensure the results were not idiosyncratic to the first set of stimuli, a second set of stimuli was introduced. After seven sessions of intervention-prime, no improvement in correct responding was observed and a third set was not introduced.

Responding During Intervention

Figures 1, 2, 3, 4, 5, and 6 also report the percentage of trials during teaching sessions in which each participant responded correctly and independently (open squares) and correctly with the provision of a prompt (closed triangles). For Michael, Dwight, Andy, and Pam, two trends emerge. First, there is an inverse relationship in which correct independent responding increases with a corresponding decrease in prompted correct responses. Second, there is a quick increase in correct independent responding following the first session of intervention.

Different patterns of responding were observed for Jim and Angela. For Jim, low levels of correct responding with or without a prompt throughout intervention were observed with set 1. However, there was an increase in correct independent responding as intervention continued with sets 2 and 3. For Angela, correct responding was typically only observed when a prompt was used during intervention with set 1. With the introduction of set 2, however, Angela responded correctly without prompting on a high percentage of trials throughout intervention-prime.

Table 3 displays the overall percentage of correct responding during teaching and the percentage of correct responding when the target was placed 0, 6, and 12 in. from the participant. Michael, Dwight, Andy, and Pam all responded correctly on a high percentage of trials across all prompt levels (i.e., above 95, 98, 96, and 95 % across all three sets, respectively). Pam also responded incorrectly on a higher percentage of trials when the most assistive prompt was provided. Jim responded correctly on around 75 % of trials across all teaching sessions. Jim also responded incorrectly on a high percentage of trials with set 1 when the most assistive prompt was provided. Angela responded correctly on around 78 % of trials across all teaching sessions; however, correct responding typically only occurred when a prompt was provided.

Table 3.

Participant percentage of correct responding at each prompt level for each stimulus set during teaching

| Participant | Prompt level (in.) | Set 1 (%) | Set 2 (%) | Set 3 (%) | Total (%) |
|---|---|---|---|---|---|
| Michael | 0 | 75.0 | 85.7 | 100.0 | 85.7 |
| | 6 | 100.0 | 100.0 | 100.0 | 100.0 |
| | 12 | 94.7 | 95.2 | 100.0 | 96.2 |
| | Total | 92.5 | 95.2 | 100.0 | 95.7 |
| Dwight | 0 | 100.0 | 100.0 | 100.0 | 100.0 |
| | 6 | 77.0 | 100.0 | 100.0 | 93.1 |
| | 12 | 99.3 | 94.4 | 96.2 | 98.2 |
| | Total | 98.7 | 95.8 | 97.1 | 98.0 |
| Andy | 0 | 100.0 | 100.0 | 100.0 | 100.0 |
| | 6 | 100.0 | 100.0 | 93.7 | 97.0 |
| | 12 | 100.0 | 94.4 | 95.7 | 96.5 |
| | Total | 100.0 | 95.8 | 95.8 | 96.4 |
| Pam | 0 | 64.7 | 72.7 | 75.0 | 69.4 |
| | 6 | 80.0 | 100.0 | 100.0 | 92.5 |
| | 12 | 100.0 | 97.8 | 97.3 | 98.4 |
| | Total | 88.8 | 95.4 | 94.2 | 93.6 |
| Jim | 0 | 14.4 | 100.0 | 100.0 | 25.2 |
| | 6 | 97.5 | 100.0 | 98.3 | 98.7 |
| | 12 | 83.3 | 80.9 | 79.2 | 80.9 |
| | Total | 55.1 | 86.7 | 85.8 | 75.3 |
| Angela | 0 | 100.0 | 100.0 | – | 100.0 |
| | 6 | 96.2 | 97.3 | – | 96.5 |
| | 12 | 36.4 | 81.2 | – | 56.8 |
| | Total | 73.8 | 87.3 | – | 78.3 |
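The kind of summary reported in Table 3 can be illustrated with a minimal Python sketch. It assumes, hypothetically, that each teaching trial is logged as a pair of prompt level (in inches) and whether the response was correct; the function name `percent_correct_by_level` and the example records are illustrative only and are not data or procedures from the study. The sketch pools trials before dividing, so a total is weighted by the number of trials at each level rather than being a simple mean of percentages. This would be consistent with totals in Table 3 that sit far from the mean of the set values (e.g., Jim at 0 in.), although the source does not state how the totals were computed.

```python
from collections import defaultdict


def percent_correct_by_level(trials):
    """Return percent correct per prompt level plus a pooled total.

    `trials` is an iterable of (prompt_level_in, correct) pairs.
    This record format is an assumption made for illustration.
    """
    counts = defaultdict(lambda: [0, 0])  # level -> [n_correct, n_trials]
    for level, correct in trials:
        counts[level][0] += int(correct)
        counts[level][1] += 1

    # Percent correct at each prompt level, rounded to one decimal place.
    result = {
        level: round(100.0 * n_correct / n_trials, 1)
        for level, (n_correct, n_trials) in counts.items()
    }

    # Pooled total: all correct trials divided by all trials.
    total_correct = sum(n_correct for n_correct, _ in counts.values())
    total_trials = sum(n_trials for _, n_trials in counts.values())
    result["Total"] = (
        round(100.0 * total_correct / total_trials, 1) if total_trials else None
    )
    return result


# Hypothetical trial records (NOT data from the study).
example_trials = (
    [(12, True)] * 9 + [(12, False)]
    + [(6, True)] * 4
    + [(0, True)] * 3 + [(0, False)]
)
summary = percent_correct_by_level(example_trials)
print(summary)
```

With these made-up records, the pooled total (16 of 18 trials) differs from the unweighted mean of the three level percentages, which is the reason for pooling before dividing.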

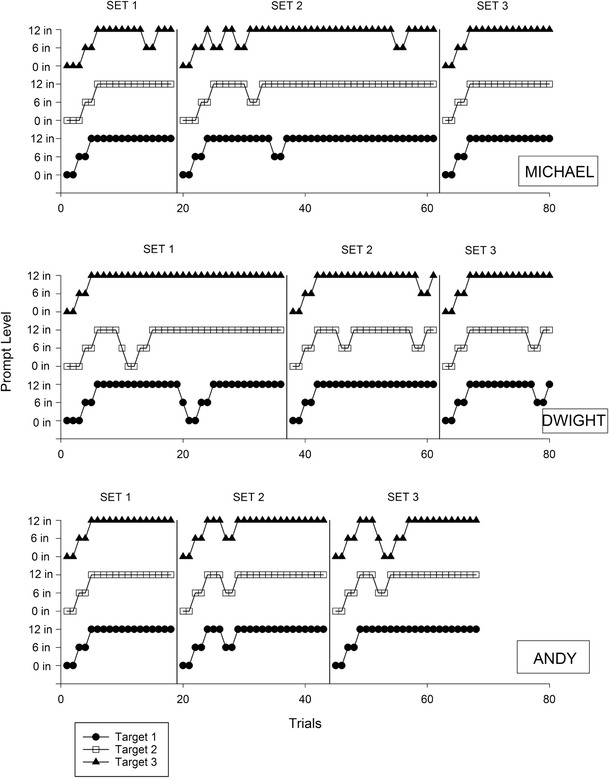

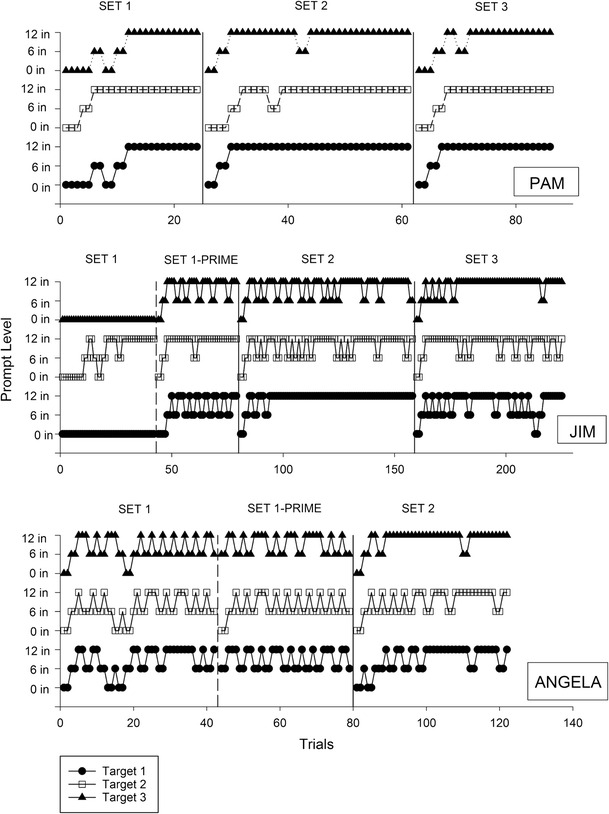

Trial-by-Trial Analysis of Prompts During Teaching

Figures 7 and 8 provide a trial-by-trial analysis of when a prompt was provided for each of the targets across all sets and participants. Each panel represents a different participant, and the phase change lines represent when a different set was introduced. The x-axis represents each trial during teaching sessions, and the y-axis represents the prompt level that was provided. All three targets are represented in the panel, with target 1 (closed circles) on the bottom, target 2 (open squares) in the middle, and target 3 (closed triangles) on the top. There are three different prompt levels per target (i.e., 0, 6, and 12 in.) along the y-axis. Thus, upward movement indicates the prompt was faded, and downward movement indicates that a more assistive prompt was provided.

Fig. 7.

Each data path represents one stimulus in the set. Upward movement indicates fading to a less assistive prompt, while downward movement indicates moving to a more assistive prompt

Fig. 8.

Each data path represents one stimulus in the set. Upward movement indicates fading to a less assistive prompt, while downward movement indicates moving to a more assistive prompt

For Michael, Dwight, Andy, and Pam, the data display a quick progression from the most assistive prompt to no prompt across all targets and sets, with few trials in which an incorrect response occurred. For Jim, the data display several occurrences in which the assistance of the prompt was faded; however, once the prompt was faded completely for the targets in sets 1 and 2, incorrect responding occurred and prompts were reintroduced. For Angela, the data display a pattern of responding similar to Jim's across all targets and sets, with consistent incorrect responding when prompts were completely faded.
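The reading convention for the trial-by-trial plots (a move to a less assistive prompt counts as fading, a move to a more assistive prompt counts as reintroduction) can be sketched in Python. The sketch assumes, for illustration only, that each target's prompt history is stored as a list of positional offsets in inches, with 12 as the most assistive level and 0 as no prompt, following the hierarchy described in the abstract. The function name `classify_transitions` and the example sequence are hypothetical and do not reproduce any participant's data.

```python
def classify_transitions(levels):
    """Label each trial-to-trial change in prompt level.

    `levels` is a list of prompt offsets in inches for one target
    (assumed encoding: 12 = most assistive, 6 = less assistive, 0 = no prompt).
    """
    labels = []
    for previous, current in zip(levels, levels[1:]):
        if current < previous:
            labels.append("faded")           # less assistance than before
        elif current > previous:
            labels.append("more assistive")  # prompt reintroduced or increased
        else:
            labels.append("unchanged")
    return labels


# Hypothetical prompt history for one target (NOT data from the study).
example_levels = [12, 12, 6, 0, 6, 6, 0, 0]
transitions = classify_transitions(example_levels)
reintroductions = transitions.count("more assistive")
print(transitions, reintroductions)
```

Counting the "more assistive" labels gives a rough index of how often a prompt had to be reintroduced after fading, which is the pattern described above for Jim and Angela.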

Discussion

This study examined the effectiveness of positional prompts when used to teach receptive labels for six individuals diagnosed with ASD. Four of the six participants (i.e., Michael, Dwight, Andy, and Pam) reached the mastery criterion with all three sets of stimuli. Furthermore, these four participants responded correctly on a high percentage of trials during the maintenance condition. With the addition of a token reinforcement system, Jim reached the mastery criterion with the stimuli in set 2 and reached high levels of correct responding with set 3. Angela did not reach the mastery criterion with either set of stimuli that was introduced and, as a result, a third set of stimuli was not introduced. The results also indicated a high percentage of correct responding (independent and prompted) for five of the six participants in the study. Thus, the results of the study showed that the implementation and fading of positional prompts were effective in skill acquisition for most participants. This finding provides clinicians with some additional evidence that positional prompt types could be implemented in clinical practice.

Although positional prompts were effective for the majority of participants, these results were not observed for Jim and Angela. Several potential variables may have prevented Jim and Angela from reaching the mastery criterion on some of the stimulus sets. First, it is possible that an effective reinforcer (i.e., praise or the token economy) was not identified. Future researchers may wish to first conduct preference assessments (Fisher et al., 1992) or in-the-moment reinforcer analysis (Leaf et al., in press) to determine effective reinforcers prior to intervention.

Second, the failure to reach mastery criterion may be due to the manner in which skill acquisition was measured. In this study, the researchers implemented probe sessions to determine mastery of each set. No feedback (i.e., reinforcement or punishment) was provided during probe sessions, which may have had an extinction effect for both Jim and Angela. The case for extinction can especially be made for Angela, as she responded incorrectly on the majority of trials during probe sessions but responded correctly on the majority of trials during teaching. Another reason Angela and Jim may have responded differently is that the interventionists followed strict, prescribed rules for movement between the different prompt levels. It is possible that transfer of stimulus control may have occurred if the fading of the prompt had been varied or had occurred more slowly (e.g., Soluaga et al., 2008).

Third, it could be that Jim and Angela had a shorter history of receiving ABA services (i.e., 6 months compared to 12 months or more for the other participants). This difference could have resulted in Jim and Angela missing some component skills required for the intervention under investigation. Finally, it could be that the use of positional prompts in a most-to-least prompt fading system failed to transfer stimulus control from the prompt to the instruction alone. Future researchers may wish to evaluate positional prompts in other prompt fading systems (e.g., constant time delay, flexible prompt fading, or no-no prompt) to determine if transferring stimulus control could be achieved.

Despite the encouraging results of this study, several limitations could be addressed by future research. First, the interventionists were governed by a strict set of rules for when to move up and down the prompt hierarchy, which may not align with typical clinical practices. The strict rules prevented the interventionists from making needed adjustments to the prompt level based on the participants’ behavior in the moment. Researchers have previously argued for the use of more flexible prompting systems which may result in better learning (Leaf et al., 2014b, 2016). For prompts to be successfully faded, different learners may require individualized fading steps and future researchers may examine potential predictors for when prompts should be faded quickly or more slowly. Second, the authors elected to use a non-concurrent multiple baseline design across stimulus sets as opposed to a concurrent multiple baseline design. The authors selected a non-concurrent design, as it was more practical in this particular setting because it took less time away from clinical sessions per day. Although this design does control for threats to internal validity (Harvey, May, & Kennedy, 2004; Watson & Workman, 1981) and is commonly implemented in research studies, the use of a concurrent multiple baseline design may be desired by future researchers.

The failure of some learners to acquire the targeted skill using positional prompts, as in the case of Jim and Angela, may lead some researchers to suggest avoiding extra-stimulus prompts, such as positional prompts, altogether (e.g., Grow & LeBlanc, 2013). The suggestion that teachers avoid these prompts is based on the assumption that their use inherently leads to faulty stimulus control. Although Jim and Angela failed to learn the targeted labels, there was no evidence of the type of faulty stimulus control that has been discussed in the literature (e.g., Koegel & Rincover, 1976; Green, 2001). Furthermore, the results from the other four participants (i.e., Michael, Dwight, Andy, and Pam) indicate that positional prompts can be an effective means of developing the desired stimulus control. It is important to note that transfer of stimulus control occurs during the fading of the prompt (Etzel & LeBlanc, 1979; Touchette, 1971; Zygmont, Lazar, Dube, & McIlvane, 1992). Perhaps future research should examine best practices for successfully fading various prompt types to develop the desired stimulus control, rather than determining which prompt type is itself the best practice.

Compliance with Ethical Standards

No funding was received for this study. All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. Informed consent was obtained from the parents of all individual participants included in the study.

Contributor Information

Justin B. Leaf, Email: Jblautpar@aol.com

Joseph H. Cihon, Email: Jcihonautpar@aol.com

Donna Townley-Cochran, Email: dcochranautpar@aol.com

Kevin Miller, Email: k_millerautpar@aol.com

Ronald Leaf, Email: Rlautpar@aol.com

John McEachin, Email: jmautpar@aol.com

Mitchell Taubman, Email: Mtautpar@aol.com

References

- Etzel BC, LeBlanc JM. The simplest treatment alternative: the law of parsimony applied to choosing appropriate instructional control and errorless-learning procedures for the difficult-to-teach child. Journal of Autism and Developmental Disorders. 1979;9(4):361–382. doi: 10.1007/BF01531445.

- Fisher W, Piazza CC, Bowman LG, Hagopian LP, Owens JC, Slevin I. A comparison of two approaches for identifying reinforcers for persons with severe and profound disabilities. Journal of Applied Behavior Analysis. 1992;25:491–498. doi: 10.1901/jaba.1992.25-491.

- Green G. Behavior analytic instruction for learners with autism: advances in stimulus control technology. Focus on Autism and Other Developmental Disabilities. 2001;16(2):72–85. doi: 10.1177/108835760101600203.

- Grow L, LeBlanc L. Teaching receptive language skills: recommendations for instructors. Behavior Analysis in Practice. 2013;6(1):56–75. doi: 10.1007/BF03391791.

- Harvey MT, May ME, Kennedy CH. Nonconcurrent multiple baseline designs and the evaluation of educational systems. Journal of Behavioral Education. 2004;13:267–276. doi: 10.1023/B:JOBE.0000044735.51022.5d.

- Koegel RL, Rincover A. Some detrimental effects of using extra stimuli to guide learning in normal and autistic children. Journal of Abnormal Child Psychology. 1976;4:59–71. doi: 10.1007/BF00917605.

- Krantz PJ, McClannahan LE. Social interaction skills for children with autism: a script-fading procedure for beginning readers. Journal of Applied Behavior Analysis. 1998;31(2):191–202.

- Leaf JB, Alcalay A, Leaf JA, Tsuji K, Kassardjian A, Dale S, et al. Comparison of most-to-least to error correction for teaching receptive labelling for two children diagnosed with autism. Journal of Research in Special Educational Needs. 2014.

- Leaf JB, Leaf R, Leaf JA, Alcalay A, Ravid D, Dale S, Kassardjian A, Tsuji K, Taubman M, McEachin J, Oppenheim-Leaf ML. Comparing paired-stimulus preference assessments to the in-the-moment reinforcer analysis on skill acquisition: a preliminary analysis. Focus on Autism and Other Developmental Disabilities. In press.

- Leaf JB, Leaf R, McEachin J, Taubman M, Ala’i-Rosales S, Ross RK, et al. Applied behavior analysis is a science and, therefore, progressive. Journal of Autism and Developmental Disorders. 2016;46(2):720–731. doi: 10.1007/s10803-015-2591-6.

- Leaf JB, Leaf R, Taubman M, McEachin J, Delmolino L. Comparison of flexible prompt fading to error correction for children with autism spectrum disorder. Journal of Developmental and Physical Disabilities. 2014;26(2):203–224. doi: 10.1007/s10882-013-9354-0.

- Leaf JB, Sheldon JB, Sherman JA. Comparison of simultaneous prompting and no-no prompting in two-choice discrimination learning with children with autism. Journal of Applied Behavior Analysis. 2010;43:215–228. doi: 10.1901/jaba.2010.43-215.

- Libby ME, Weiss JS, Bancroft S, Ahearn WH. A comparison of most-to-least and least-to-most prompting on acquisition of solitary play skills. Behavior Analysis in Practice. 2008;1:37–43. doi: 10.1007/BF03391719.

- Lovaas OI. Teaching developmentally disabled children: the me book. Austin, TX: PRO-ED Books; 1981.

- Lovaas OI. Teaching individuals with developmental delays: basic intervention techniques. Austin, TX: PRO-ED Books; 2003.

- MacDuff GS, Krantz PJ, McClannahan LE. Prompts and prompt-fading strategies for people with autism. In: Maurice C, Green G, Foxx RM, editors. Making a difference: behavioral intervention for autism. 1st ed. Austin, TX: Pro Ed; 2001. pp. 37–50.

- Soluaga D, Leaf JB, Taubman M, McEachin J, Leaf R. A comparison of flexible prompt fading and constant time delay for five children with autism. Research in Autism Spectrum Disorders. 2008;2:753–765. doi: 10.1016/j.rasd.2008.03.005.

- Touchette PE. Transfer of stimulus control: measuring the moment of transfer. Journal of the Experimental Analysis of Behavior. 1971;15(3):347–354. doi: 10.1901/jeab.1971.15-347.

- Walker G. Constant and progressive time delay procedures for teaching children with autism: a literature review. Journal of Autism and Developmental Disorders. 2008;38:261–275. doi: 10.1007/s10803-007-0390-4.

- Watson PJ, Workman EA. The non-concurrent multiple baseline across-individuals design: an extension of the traditional multiple baseline design. Journal of Behavior Therapy and Experimental Psychiatry. 1981;12:257–259. doi: 10.1016/0005-7916(81)90055-0.

- Wolery M, Ault MJ, Doyle PM. Teaching students with moderate to severe disabilities: use of response prompting strategies. White Plains, NY: Longman; 1992a.

- Wolery M, Holcombe A, Werts MG, Cipolloni RM. Effects of simultaneous prompting and instructive feedback. Early Education & Development. 1992;4(1):20–31. doi: 10.1207/s15566935eed0401_2.

- Zygmont DM, Lazar RM, Dube WV, McIlvane WJ. Teaching arbitrary matching via sample stimulus-control shaping to young children and mentally retarded individuals: a methodological note. Journal of the Experimental Analysis of Behavior. 1992;57(1):109–117. doi: 10.1901/jeab.1992.57-109.