Abstract

Fourier ptychography (FP) is a recently proposed computational imaging technique for high space-bandwidth product imaging. In real setups such as endoscope and transmission electron microscope, the common sample motion largely degrades the FP reconstruction and limits its practicability. In this paper, we propose a novel FP reconstruction method to efficiently correct for unknown sample motion. Specifically, we adaptively update the sample’s Fourier spectrum from low spatial-frequency regions towards high spatial-frequency ones, with an additional motion recovery and phase-offset compensation procedure for each sub-spectrum. Benefiting from the phase retrieval redundancy theory, the required large overlap between adjacent sub-spectra offers an accurate guide for successful motion recovery. Experimental results on both simulated data and real captured data show that the proposed method can correct for unknown sample motion with its standard deviation being up to 10% of the field-of-view scale. We have released our source code for non-commercial use, and it may find wide applications in related FP platforms such as endoscopy and transmission electron microscopy.

OCIS codes: (170.3010) Image reconstruction techniques, (110.1758) Computational imaging, (170.0180) Microscopy

1. Introduction

Fourier ptychography (FP) is a novel computational imaging technique for high space-bandwidth product (SBP) imaging [1, 2]. This technique captures a set of low-resolution (LR) images, which correspond to different Fourier sub-spectra of the sample. By stitching these sub-spectra together in Fourier space using a reconstruction algorithm, a large field-of-view (FOV) and high-resolution (HR) image of the scene can be obtained. It has been successfully demonstrated in optical microscopy as Fourier ptychographic microscopy (FPM) [1], where the incident light is assumed to be a plane wave, and the LR images are captured under different incident angles from the LEDs placed at different locations. The measurements correspond to different Fourier sub-spectra of the sample, as shown in Fig. 1. The synthetic numerical aperture (NA) of the FPM setup reported in ref. [1] is ∼0.5, and the FOV reaches ∼120 mm2, which greatly improve the throughput of existing microscope. Due to its simple setup and super performance, FPM has been widely applied in 3D imaging [3,4], fluorescence imaging [5,6], mobile microscope [7,8], and high-speed in vitro imaging [9,10].

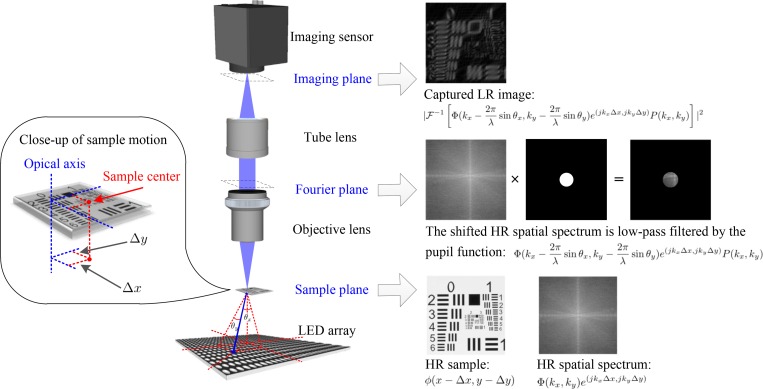

Fig. 1.

The FPM system and its image formation in the case with sample motion.

Fourier ptychographic reconstruction is a typical phase retrieval optimization process, which needs to recover a complex function given the intensity measurements of its linear transforms (Fourier transform in FP) [11]. Existing FP algorithms can be classified into three categories including the alternating projection (AP) method [1,2,12], the semi-definite programming (SDP) based method [13], and the non-convex gradient descent method [14–16]. AP adds constraints alternately in spatial space (captured intensity images) and Fourier space (pupil function), to stitch the LR sub-spectra together. Ou et al. [12] added an additional pupil function update procedure into AP to correct pupil function error. AP is easy to implement and fast to converge, but is sensitive to measurement noise and system errors, which arise from numerous factors such as short camera exposure time [14] and LED misalignment. To tackle measurement noise, Bian et al. [14] proposed the Wirtinger flow optimization for Fourier ptychography (WFP), which uses the gradient-descent scheme and Wirtinger calculus [17] to minimize the intensity errors between estimated LR images and measurements. WFP is robust to Gaussian noise, and produces better reconstruction results than AP in low-exposure imaging scenarios. Thus it can largely decrease the required image acquisition time. However, it needs careful initialization since the optimization is non-convex. Later, Yeh et al. [15] tested different objective functions (intensity based, amplitude based and Poisson maximum likelihood) under the gradient-descent optimization scheme for FP reconstruction. The results show that the amplitude-based and Poisson maximum-likelihood objective functions produce better results than the intensity-based objective function. To address the LED misalignment, the authors added a simulated annealing optimization procedure into each iteration to search for the optimal pupil locations. Recently, Bian et al. [16] proposed a novel FP reconstruction method termed truncated Poisson Wirtinger Fourier ptychographic reconstruction (TPWFP), which utilizes Poisson maximum likelihood for better signal modeling, and truncated Wirtinger gradient for effective aberration removal. The method can efficiently handle pupil location error and various measurement noise including Poisson noise, Gaussian noise and speckle noise. To make the FP reconstruction convex, Horstmeyer et al. [13] proposed an SDP [18,19] based optimization method to recover the HR spatial spectrum. The method is robust to Gaussian noise and guarantees a global optimum, but converges slow which makes it impractical in real applications.

The methods discussed above tackle different challenges in FP reconstruction. However, none of them is able to correct for common sample motion during the multiple image acquisition process. For endoscopy applications, hand-held endoscope probes may move during multiple acquisitions of the same sample [20,21]. In transmission electron microscopy (TEM), sample drift is a common problem for multiple image acquisitions [22,23]. Therefore, tackling the sample-motion problem is a crucial step for applying the FP scheme to high-resolution endoscopy [24,25] and TEM (aperture scanning FP [3]). Also, as shown in the following experiments, sample motion degrades the conventional reconstruction a lot. To tackle this problem, we propose a novel FP reconstruction method in this paper, termed motion-corrected Fourier ptychography (mcFP). This technique adaptively updates the HR spatial spectrum loop by loop from low spatial-frequency regions towards high spatial-frequency ones, similar to ref. [26]. Required by the phase retrieval redundancy theory that measurements should be much more (at least 6 times) than the to-be-recovered signals [14,17,27], there is a large overlap between adjacent sub-spectra in the last and the current loop (typically ∼ 65% of the sub-spectrum). Benefiting from this overlap region which is already updated in the last loop, for each sub-spectrum to be updated in the current loop, we can successfully search for its unknown motion shift by minimizing the difference between the captured image and corresponding reconstruction. Then the obtained motion shift is utilized to compensate the phase offset of the sub-spectrum. Until all the sub-spectra are updated, the current iteration is completed. After several such iterations (2 iterations are enough for convergence as the experimental results indicate), we can recover the high-quality HR spatial spectrum without sample-motion degeneration. To validate the effectiveness of mcFP, we test it on both simulated data and real captured data. Both experimental results show that mcFP can correct for sample motion with its standard deviation being up to 10% of the field-of-view scale. As far as we know, this is the first study that addresses sample motion in FP. It may provide new insights and find important applications in various Fourier ptychographic imaging platforms such as endoscopy and TEM.

2. Methods

In the following, we use FPM as an example to demonstrate the mathematical model of the proposed mcFP method for better understanding. We first derive the image formation of FPM in the case with sample motion, and then introduce the proposed mcFP in detail.

2.1. Image formation of FPM

FPM is a coherent imaging system. For a relatively thin sample [28], its different spatial spectrum regions can be accessed by angularly varying coherent illumination. Specifically, under the assumption that the incident light is a plane wave, we can describe the light field transmitted from the sample as with no sample motion, where ϕ is the sample’s complex spatial map, (x, y) are the 2D spatial coordinates in the sample plane, j is the imaginary unit, λ is the wavelength of illumination, and θx and θy are the incident angles, as shown in Fig. 1. Then the light field is Fourier transformed to the pupil plane when it travels through the objective lens, and subsequently low-pass filtered by the pupil. This process can be represented as , where P(kx, ky) is the pupil function for low-pass filtering, (kx, ky) are the 2D spatial frequency coordinates in the pupil plane, and ℱ is the Fourier transform operator. Afterwards, the light field is Fourier transformed again when it passes through the tube lens to the imaging sensor. Since real imaging sensors can only capture light’s intensity, the image formation of FPM with no sample motion follows

| (1) |

where I is the captured intensity image, ℱ−1 is the inverse Fourier transform operator, and Φ is the spatial spectrum of the sample.

In the case with sample motion, which is denoted as (Δx, Δy) in the sample plane, the light field transmitted from the sample becomes . Assuming that the sample motion of the field of view (FOV) is ideally circular which means that the sample region moving outside of the FOV is the same as that moving inside, the spatial spectrum of the shifted sample correspondingly becomes according to the shift property of Fourier transform, and the captured image is rewritten as

| (2) |

Visual explanation of the above image formation process is diagrammed in Fig. 1. From Eqs. (2) we can see that the sample motion introduces a frequency-dependent phase offset to the sample’s spatial spectrum. The proposed mcFP method targets to compensate the phase offset and eliminate its negative influence on the final reconstruction.

2.2. Motion-corrected Fourier ptychographic reconstruction (mcFP)

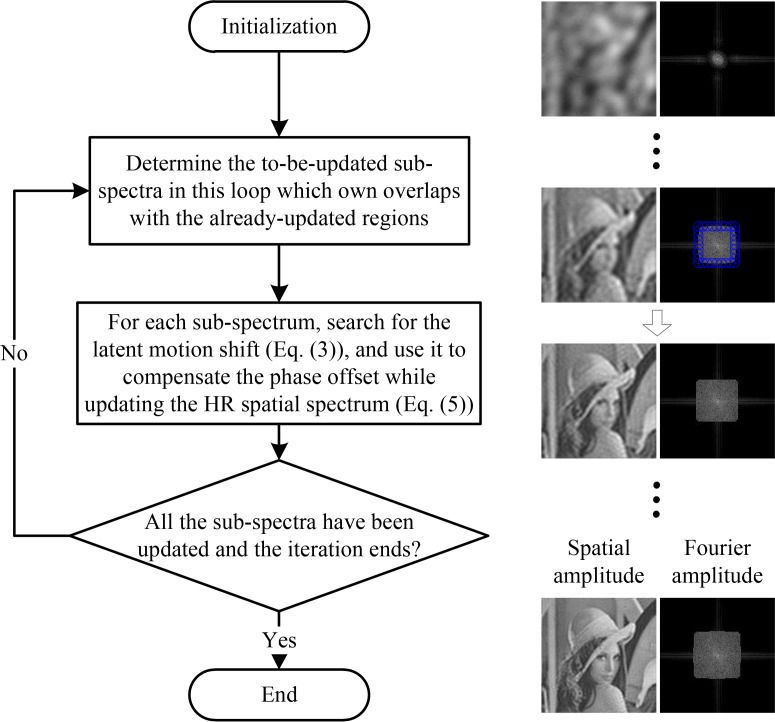

In this subsection, we begin to introduce the proposed motion-corrected Fourier ptychographic reconstruction technique in detail. The algorithm’s flow chart is diagrammed in Fig. 2. Overall, we adaptively update the HR spatial spectrum in an iterative manner similar to ref. [26]. Each iteration includes several loops of motion recovery and spectrum updating from low spatial-frequency regions to high spatial-frequency regions.

Fig. 2.

The algorithmic scheme of the proposed mcFP method.

For initialization, similar to ref. [14,16,26], we use the up-sampled version of the LR image captured under normal incident illumination as an initial guess of the sample. This is necessary for the proposed mcFP because the LR image contains important information of the sample in the low spatial frequency region, which offers a benchmark of the sample’s basic structure and guarantees the precision of the following motion recovery procedure for each to-be-updated sub-spectrum.

Then we move on to the loops of spectrum updating. At the beginning of each loop, we extend the updated sub-spectra of the last loop towards the high-frequency direction, with a fixed step size (determined by the user-defined spectrum overlapping ratio), to determine the sub-spectra to be updated in this loop. As shown on the right side in Fig. 2, the blue circles in the second-row spatial spectrum indicates the to-be-updated sub-spectra of the current loop. Then, for each sub-spectrum , we search for the latent motion shift (Δxopt, Δyopt) by minimizing the difference between the captured image Imotion and corresponding reconstruction . Mathematically, the searching procedure can be described as

| (3) |

In practice, we use an annealing method similar to ref. [29] to realize the optimization in Eq. (3). Specifically, in the first iteration, we roughly choose a number of shift guesses (Δx1,...,m, Δy1,...,m) from a relatively large shift range (for example 30% of the FOV scale), and calculate the image difference of each guess. We select the guess that corresponds to the minimal difference as the optimal shift (Δxopt_1, Δyopt_1) of the first iteration. Then in the second iteration, we choose a number of shift guesses (Δx′1,...,m, Δy′1,...,m) from a relatively small shift range (for example 10% of the FOV scale) around the optimal shift guess (Δxopt_1, Δyopt_1) in the last iteration. Similarly, we select the guess that corresponds to the minimal difference as the optimal shift (Δxopt_2, Δyopt_2) of this iteration. For the following iterations, we can gradually narrow the shift guess range, and finally find the optimal shift guess (Δxopt, Δyopt) that minimizes the image difference in Eq. (3).

The obtained motion shift guess is utilized to compensate the phase offset of the HR spatial spectrum during its updating. The updating procedure is similar to that in ref. [12]. Specifically, we use the square-root of the captured image to replace the amplitude of corresponding reconstruction as

| (4) |

Then we transform ϕ′ to Fourier space and obtain Φ′ = ℱ(ϕ′), and use it to update the sample’s HR spatial spectrum with the phase compensation e(−jkxΔxopt,−jkyΔyopt) as

| (5) |

After all the sub-spectra in the current loop are updated, the loop ends and we move on to the next loop. Until all the sub-spectra corresponding to the captured images are updated, the current iteration is completed. After several such iterations, we can successfully recover the latent motion shift and the high-quality HR spatial spectrum without sample-motion degeneration. Demo code of the proposed mcFP method is available at http://www.sites.google.com/site/lihengbian for non-commercial use.

3. Results

In this section, we test the proposed mcFP method on both simulated and real captured data, to show its advantages over conventional FP reconstruction.

3.1. Quantitative metric

To quantitatively evaluate reconstruction quality in the following simulation experiments, we utilize the relative error (RE) [16,30] metric defined as

| (6) |

This metric describes the difference between two complex functions z and ẑ. We use it here to compare the reconstructed HR complex field of the sample with its ground truth in the simulation experiments.

3.2. Simulation experiments

In the simulation experiments, we simulate the FPM setup with its hardware parameters as follows: the NA of the objective lens is 0.08, and corresponding pupil function is an ideal binary function (all ones inside the NA circle and all zeros outside); the height from the LED plane to the sample plane is 84.8mm, the distance between adjacent LEDs is 4mm, and 15 × 15 LEDs are used to provide a synthetic NA of ∼0.5, with the overlap ratio between adjacent sub-spectra being ∼ 65%; the wavelength of incident light is 625nm; and the pixel size of captured images is 1.6um. We use the ’Lena’ and ’Aerial’ image (512 × 512 pixels) from the USC-SIPI image database [31] as the latent HR amplitude and phase map, respectively. The captured LR images are synthesized based on the image formation in Eqs. (2). Their pixel numbers are set to be one eighth of the HR image along both dimensions, meaning that the pixel size of the HR image is 0.2um. We repeat 20 times for each of the following simulation experiments and average their evaluations to produce final statistical results.

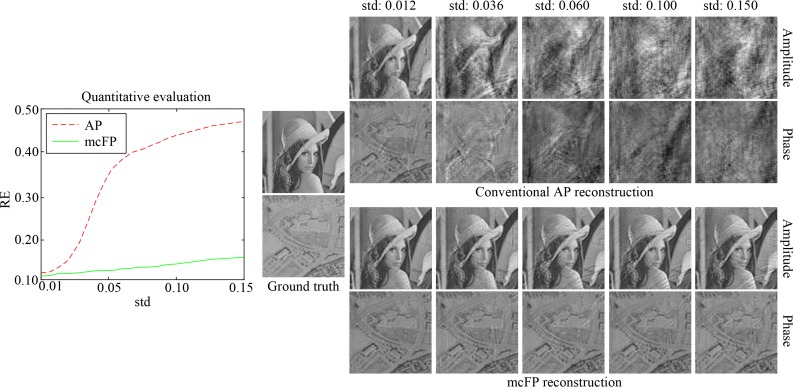

First, we test the proposed mcFP method and conventional AP algorithm on simulated data in the case of ideal circular sample motion, which conforms to the assumption of mcFP in Eqs. (2). Random Gaussian-distributed motion shift is added to the sample while capturing each LR image. For the AP algorithm, 100 iterations are enough to converge as proved in ref. [1]. We set 2 iterations for the proposed mcFP method which is experimentally validated and works well for successful reconstruction (note that in each iteration, we update each sub-spectrum for 10 times after the optimal motion shift guess is obtained), and it needs ∼5 min to reconstruct the HR image using Matlab on a laptop with a 2.5 GHz Intel Core i7 processor and 16 GB RAM. The reconstruction results are shown in Fig. 3, where the left sub-figure plots the quantitative evaluations, and the reconstructed images are shown on the right side. The standard deviation (std) of the motion shift is normalized by the scale of the system’s FOV. From the results, we can see that conventional AP reconstruction degrades a lot as the sample motion becomes severe. Instead, mcFP works well to correct for the sample motion, and is barely affected even in the severe case (e.g., see the results when the std of motion shift is 15% of the FOV scale). This benefits from the additional motion recovery procedure and corresponding phase offset compensation, as well as the complete match between the image formation model (Eqs. (2)) and the reconstruction model (Eq. (5)).

Fig. 3.

Reconstruction comparison between the proposed mcFP method and conventional AP algorithm, in the case with ideal circular sample motion. The quantitative evaluation is plotted on the left side, and the reconstructed amplitude and phase images by the two methods are shown on the right side.

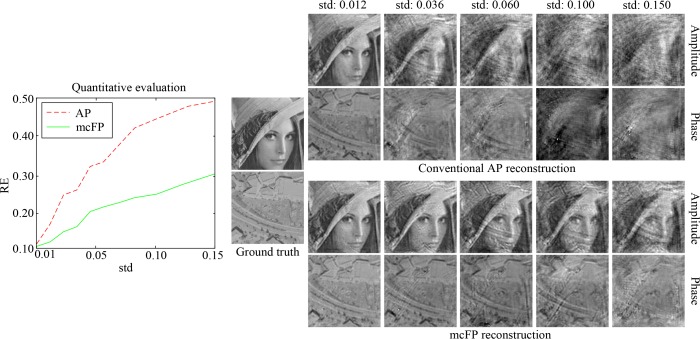

However, the assumption of ideal circular sample motion is usually not valid for real applications, where the sample region moving outside of the FOV is not the same as that moving inside. Next, we consider the real case. To simulate this, we set the FOV as the central region of the sample (central 256 × 256 pixels out of the entire 512 × 512 pixels), and simulate the sample motion by shifting the entire HR image. Corresponding reconstruction results by conventional AP and the proposed mcFP are shown in Fig. 4, from which we can see that mcFP still works well. Even in the case with severe sample motion (e.g. std = 0.1), though mcFP produces some unpleasant artifacts, it still outperforms conventional AP a lot with much more image details. This validates the robustness and effectiveness of mcFP. Note that when sample motion becomes severer than 10% of the FOV scale (e.g. std = 0.15), there exist unpleasant artifacts and noise that bury a lot of image details. In this sense, we draw the conclusion that the proposed mcFP method can correct for unknown sample motion with its standard deviation being up to 10% of the FOV.

Fig. 4.

Reconstruction comparison between the proposed mcFP method and conventional AP algorithm, in the case with non-ideal circular sample motion (the sample region moving outside of the FOV is not the same as that moving inside).

3.3. Real experiment

To further validate the effectiveness of the proposed mcFP method, we test it on two real captured datasets including a USAF target and a red blood cell sample. Similar to the previous reported FPM setup [1], we used an optical microscope platform with a 2X, 0.1 NA Nikon objective lens in this experiment. A 15 × 15 LED array was used for sample illumination, and the incident wavelength is 632 nm. The distance between adjacent LED elements is 4 mm, and that between the LED array and the sample is 88 mm, corresponding to a maximum illumination NA of ∼0.45. In the acquisition process, we randomly translated the sample to different x–y positions and captured corresponding LR images using a monochromatic CCD (GS3-U3-91S6M, Point Grey) with a pixel size of 3.69um (∼1.85um at the object plane). The random x–y motions of the sample range from 0 to 10 microns, and they were treated as unknown parameters in the recovery process. It took ∼6 min to acquire the entire dataset containing 225 low-resolution images. We would like to refer readers to ref. [10] for a much faster laser-illuminated FPM system, which needs only several seconds for capturing. The captured 225 low-resolution images were then used to recover the HR sample image and the 225 x–y motion parameters utilizing the proposed mcFP scheme.

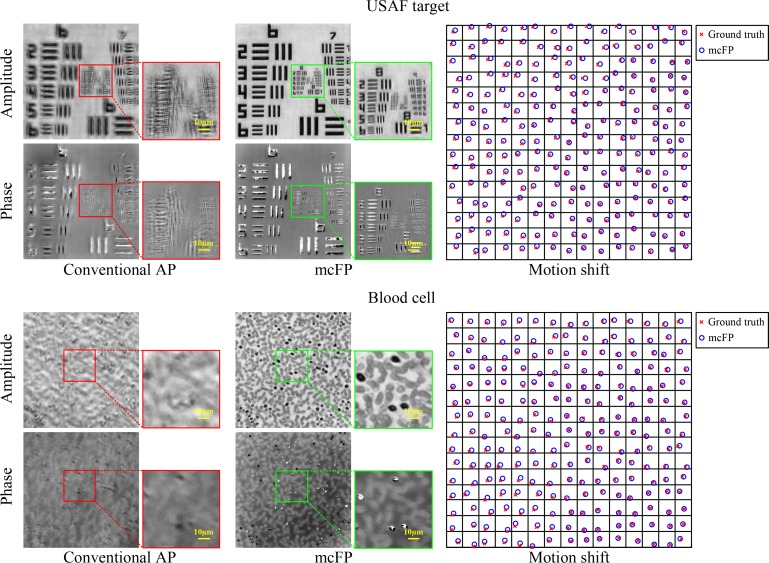

The reconstruction results are shown in Fig. 5. From the results we can see that AP can not work well and produces many unpleasant artifacts in the reconstructed images. mcFP obtains much better results than AP. For example, AP can only resolve the feature of group 6 on the USAF target, while mcFP can even resolve the group 9. The large resolving gap also exists in the results of the blood cell sample. Note that there are several phase discontinuity regions in the reconstructed phase maps of mcFP. This is because in these regions the reconstructed amplitude is close to zero, and the phase can be any value without affecting the final successful convergence. Besides, we also visualize the ground-truth motion shift and corresponding reconstruction of each captured LR image on the right side in the figure, where the precise match between them validates mcFP’s ability to successfully recover unknown sample motion shift. To conclude, mcFP can effectively correct for sample motion and reconstruct high-quality samples with much less artifacts, higher image contrast and more image details.

Fig. 5.

Reconstruction results by conventional AP and the proposed mcFP on real captured datasets (USAF target and red blood cell) by an FPM setup. We also show the ground-truth motion shift and corresponding reconstruction of each measurement on the right, where each sub-square stands for the motion space of the sample when capturing corresponding LR image, and the boundary represents the maximum motion shift being 4% of the system’s FOV scale.

4. Discussion

In this paper, we propose a novel FP reconstruction method termed motion-corrected Fourier ptychography (mcFP), which adds an additional sample-motion recovery and phase-offset compensation procedure to conventional AP reconstruction, and adaptively updates the HR spatial spectrum from low spatial-frequency regions to high spatial-frequency regions. Results on both simulated data and real captured data show that the proposed mcFP method can successfully recover unknown sample shift, as well as high-quality HR amplitude and phase of the sample. It may find wide applications in related FP platforms such as endoscopy and transmission electron microscopy.

The reason that the proposed mcFP method works well for motion correction lies in two aspects. First, it utilizes the adaptive updating manner loop by loop from low spatial-frequency regions to high spatial-frequency regions. Since the overlap region of the spatial spectrum between the previous and the current loop has been updated in the previous loop, it offers an accurate guide for the precise motion shift guess of the current loop. Second, mcFP does not directly search for the solution to the phase aberration of each spatial frequency caused by sample motion, in which case a lot of unknown variables need to be determined. Instead, it searches for the precise sample motion shift in spatial space that owns only two unknown variables (namely Δx and Δy). This largely narrows the variable space and guarantees successful recovery.

We note that the aberration caused by sample motion is different from that of pupil location error [15,16]. For pupil location error which may be introduced by LED misalignment in FPM, it produces the shift of the sample’s spatial spectrum in Fourier space (the pupil plane), namely Δkx and Δky. Thus we obtain an intensity image corresponding to a different sub-region of the sample’s spatial spectrum. Differently, the sample motion we target to correct for in this paper is the shift of the sample in spatial space. It causes phase aberration of the sample’s spatial spectrum instead of (Δkx, Δky) shift.

mcFP can be widely extended. First, the pupil-function updating procedure of the EPRY-FPM algorithm [12] can be incorporated into mcFP to obtain corrected pupil function and better reconstruction. Second, the conjugate gradient descent method or the genetic method [32] can be applied to the motion shift search procedure (Eq. (3)) in mcFP, to accelerate the algorithm and help obtain more precise motion shift. Third, the current AP updating method (Eq. (4) and Eq. (5)) can be replaced with more robust methods such as WFP [14] and TPWFP [16], which would further improve the reconstruction quality, especially in the case with severe sample motion. Fourth, in the above we assume that the sample is fixed during the exposure time of each individual acquisition, because the sample motion is not continuous for the above mentioned systems including endoscope and TEM. Besides, the exposure time can be very short [10]. However, there still exists possibility that the sample moves during the exposure time, which causes motion blur in the captured image. In this case, we can add an additional deblurring procedure to mcFP by either the blind deconvolution methods [33–35] or other methods [36]. Thus we can first find the latent blur-free image, and then perform the above spectrum updating for FP reconstruction. This would help when we apply FP for imaging live samples.

Funding

National Natural Science Foundation of China (NSFC) (61120106003, 61327902); National Institutes of Health (NIH 1R21EB022378-01).

References and links

- 1.Zheng G., Horstmeyer R., Yang C., “Wide-field, high-resolution Fourier ptychographic microscopy,” Nat. Photonics 7, 739–745 (2013). 10.1038/nphoton.2013.187 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Ou X., Horstmeyer R., Yang C., Zheng G., “Quantitative phase imaging via Fourier ptychographic microscopy,” Opt. Lett. 38, 4845–4848 (2013). 10.1364/OL.38.004845 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Dong S., Horstmeyer R., Shiradkar R., Guo K., Ou X., Bian Z., Xin H., Zheng G., “Aperture-scanning Fourier ptychography for 3D refocusing and super-resolution macroscopic imaging,” Opt. Express 22, 13586–13599 (2014). 10.1364/OE.22.013586 [DOI] [PubMed] [Google Scholar]

- 4.Tian L., Waller L., “3D intensity and phase imaging from light field measurements in an LED array microscope,” Optica 2, 104–111 (2015). 10.1364/OPTICA.2.000104 [DOI] [Google Scholar]

- 5.Dong S., Nanda P., Shiradkar R., Guo K., Zheng G., “High-resolution fluorescence imaging via pattern-illuminated Fourier ptychography,” Opt. Express 22, 20856–20870 (2014). 10.1364/OE.22.020856 [DOI] [PubMed] [Google Scholar]

- 6.Chung J., Kim J., Ou X., Horstmeyer R., Yang C., “Wide field-of-view fluorescence image deconvolution with aberration-estimation from Fourier ptychography,” Biomed. Opt. Express 7, 352–368 (2016). 10.1364/BOE.7.000352 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Dong S., Guo K., Nanda P., Shiradkar R., Zheng G., “FPscope: a field-portable high-resolution microscope using a cellphone lens,” Biomed. Opt. Express 5, 3305–3310 (2014). 10.1364/BOE.5.003305 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Phillips Z. F., D’Ambrosio M. V., Tian L., Rulison J. J., Patel H. S., Sadras N., Gande A. V., Switz N. A., Fletcher D. A., Waller L., “Multi-contrast imaging and digital refocusing on a mobile microscope with a domed led array,” PLoS ONE 10, e0124938 (2015). 10.1371/journal.pone.0124938 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Tian L., Liu Z., Yeh L.-H., Chen M., Zhong J., Waller L., “Computational illumination for high-speed in vitro Fourier ptychographic microscopy,” Optica 2, 904–911 (2015). 10.1364/OPTICA.2.000904 [DOI] [Google Scholar]

- 10.Chung J., Lu H., Ou X., Zhou H., Yang C., “Wide-field Fourier ptychographic microscopy using laser illumination source,” arXiv preprint arXiv:1602.02901 (2016). [DOI] [PMC free article] [PubMed]

- 11.Shechtman Y., Eldar Y., Cohen O., Chapman H., Miao J., Segev M., “Phase retrieval with application to optical imaging: A contemporary overview,” IEEE Signal Proc. Mag. 32, 87–109 (2015). 10.1109/MSP.2014.2352673 [DOI] [Google Scholar]

- 12.Ou X., Zheng G., Yang C., “Embedded pupil function recovery for Fourier ptychographic microscopy,” Opt. Express 22, 4960–4972 (2014). 10.1364/OE.22.004960 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Horstmeyer R., Chen R. Y., Ou X., Ames B., Tropp J. A., Yang C., “Solving ptychography with a convex relaxation,” New J. Phys. 17, 053044 (2015). 10.1088/1367-2630/17/5/053044 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Bian L., Suo J., Zheng G., Guo K., Chen F., Dai Q., “Fourier ptychographic reconstruction using Wirtinger flow optimization,” Opt. Express 23, 4856–4866 (2015). 10.1364/OE.23.004856 [DOI] [PubMed] [Google Scholar]

- 15.Yeh L.-H., Dong J., Zhong J., Tian L., Chen M., Tang G., Soltanolkotabi M., Waller L., “Experimental robustness of Fourier ptychography phase retrieval algorithms,” Opt. Express 23, 33214–33240 (2015). 10.1364/OE.23.033214 [DOI] [PubMed] [Google Scholar]

- 16.Bian L., Suo J., Chung J., Ou X., Yang C., Chen F., Dai Q., “Fourier ptychographic reconstruction using Poisson maximum likelihood and truncated Wirtinger gradient,” Sci. Rep. 6, 27384 (2016). 10.1038/srep27384 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Candes E., Li X., Soltanolkotabi M., “Phase retrieval via Wirtinger flow: Theory and algorithms,” arXiv preprint arXiv:1407.1065 (2014).

- 18.Candes E. J., Strohmer T., Voroninski V., “Phaselift: Exact and stable signal recovery from magnitude measurements via convex programming,” Commun. Pure Appl. Math. 66, 1241–1274 (2013). 10.1002/cpa.21432 [DOI] [Google Scholar]

- 19.Waldspurger I., d’Aspremont A., Mallat S., “Phase recovery, maxcut and complex semidefinite programming,” Math. Program. pp. 1–35 (2012).

- 20.Swift D., Birdsill L., “Method and system for automatic correction of motion artifacts,” (2005). US Patent 6,842,196.

- 21.Vercauteren T., Perchant A., Malandain G., Pennec X., Ayache N., “Robust mosaicing with correction of motion distortions and tissue deformations for in vivo fibered microscopy,” Med. Image Anal. 10, 673–692 (2006). 10.1016/j.media.2006.06.006 [DOI] [PubMed] [Google Scholar]

- 22.Li X., Mooney P., Zheng S., Booth C. R., Braunfeld M. B., Gubbens S., Agard D. A., Cheng Y., “Electron counting and beam-induced motion correction enable near-atomic-resolution single-particle cryo-EM,” Nat. Methods 10, 584–590 (2013). 10.1038/nmeth.2472 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Liao M., Cao E., Julius D., Cheng Y., “Structure of the TRPV1 ion channel determined by electron cryo-microscopy,” Nature 504, 107–112 (2013). 10.1038/nature12822 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Pacheco S., Zheng G., Liang R., “Reflective Fourier ptychography,” J. Biomed. Opt. 21, 026010 (2016). 10.1117/1.JBO.21.2.026010 [DOI] [PubMed] [Google Scholar]

- 25.Guo K., Dong S., Zheng G., “Fourier ptychography for brightfield, phase, darkfield, reflective, multi-slice, and fluorescence imaging,” IEEE J. Sel. Top. Quantum Electron. 22, 1–12 (2016). 10.1109/JSTQE.2015.2504514 [DOI] [Google Scholar]

- 26.Bian L., Suo J., Situ G., Zheng G., Chen F., Dai Q., “Content adaptive illumination for Fourier ptychography,” Opt. Lett. 39, 6648–6651 (2014). 10.1364/OL.39.006648 [DOI] [PubMed] [Google Scholar]

- 27.Dong S., Bian Z., Shiradkar R., Zheng G., “Sparsely sampled Fourier ptychography,” Opt. Express 22, 5455–5464 (2014). 10.1364/OE.22.005455 [DOI] [PubMed] [Google Scholar]

- 28.Ou X., Horstmeyer R., Zheng G., Yang C., “High numerical aperture Fourier ptychography: principle, implementation and characterization,” Opt. Express 23, 3472–3491 (2015). 10.1364/OE.23.003472 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Maiden A., Humphry M., Sarahan M., Kraus B., Rodenburg J., “An annealing algorithm to correct positioning errors in ptychography,” Ultramicroscopy 120, 64–72 (2012). 10.1016/j.ultramic.2012.06.001 [DOI] [PubMed] [Google Scholar]

- 30.Chen Y., Candès E. J., “Solving random quadratic systems of equations is nearly as easy as solving linear systems,” arXiv preprint arXiv: 1505.05114 (2015).

- 31.Weber A. G., “The USC-SIPI image database version 5,” USC-SIPI Rep. 315, 1–24 (1997). [Google Scholar]

- 32.Luenberger D. G., Introduction to Linear and Nonlinear Programming, vol. 28 (Addison-Wesley; Reading, MA, 1973). [Google Scholar]

- 33.Fish D., Walker J., Brinicombe A., Pike E., “Blind deconvolution by means of the Richardson–Lucy algorithm,” J. Opt. Soc. Am. A 12, 58–65 (1995). 10.1364/JOSAA.12.000058 [DOI] [Google Scholar]

- 34.Shan Q., Jia J., Agarwala A., “High-quality motion deblurring from a single image,” ACM Trans. Graphic. 27, 73 (2008). 10.1145/1360612.1360672 [DOI] [Google Scholar]

- 35.Cho S., Lee S., “Fast motion deblurring,” ACM Trans. Graphic. 28, 145 (2009). 10.1145/1618452.1618491 [DOI] [Google Scholar]

- 36.Hansen P. C., Nagy J. G., O’leary D. P., Deblurring Images: Matrices, Spectra, and Filtering, vol. 3 (Siam, 2006). 10.1137/1.9780898718874 [DOI] [Google Scholar]