Summary

The ability to genetically identify and manipulate neural circuits in the mouse is rapidly advancing our understanding of visual processing in the mammalian brain [1,2]. However, studies investigating the circuitry that underlies complex ethologically-relevant visual behaviors in the mouse have been primarily restricted to fear responses [3–5]. Here, we show that a laboratory strain of mouse (Mus musculus, C57BL/6J) robustly pursues, captures and consumes live insect prey, and that vision is necessary for mice to perform the accurate orienting and approach behaviors leading to capture. Specifically, we differentially perturbed visual or auditory input in mice and determined that visual input is required for accurate approach, allowing maintenance of bearing to within 11 degrees of the target on average during pursuit. While mice were able to capture prey without vision, the accuracy of their approaches and capture rate dramatically declined. To better explore the contribution of vision to this behavior, we developed a simple assay that isolated visual cues and simplified analysis of the visually guided approach. Together, our results demonstrate that laboratory mice are capable of exhibiting dynamic and accurate visually-guided approach behaviors, and provide a means to estimate the visual features that drive behavior within an ethological context.

Results

C57BL/6J mice robustly perform prey capture

Several species of rodents employ vision to guide prey capture behavior [6–8], but it remains unclear whether the commonly used laboratory species of mouse (Mus musculus) can capture prey using vision. We first tested for prey capture behavior in M. musculus by presenting live crickets (Acheta domesticus) to cricket-naïve C57BL/6J mice in their home-cage in the presence of standard mouse chow. Within 24 hours of placing crickets in the home-cage of group-housed mice, all of the crickets were captured and consumed. When the mice were subsequently housed individually, 96.5% (55/57) of the mice captured and consumed crickets. This demonstrates that mice have both the inclination and the ability to capture live prey.

To quantify prey detection and pursuit behavior, we next recorded prey capture performance in an open-field arena (Figure 1A, top panel). For mice to perform reliable prey capture under our recording conditions, it was necessary to acclimate them to the setup (Figure 1A, training timeline). First, mice were acclimated to their handler and fed crickets once per day in their home-cage for three days. Then, following 24 hours of food restriction, they were exposed to the arena and to crickets within the arena. On the first day of hunting in the arena (D1), mice approached crickets but often fled, leading to prolonged capture times or failure to capture (Figure 1B). This behavior is consistent with the natural tendencies of mice to suppress eating behaviors in novel environments [9] and to find lit open fields inherently aversive [10].

Figure 1. C57BL/6J mice reliably perform prey capture in a laboratory setting.

(A) Top, schematic diagram of the prey capture arena. Left inset: an example video still of the mouse and cricket. Bottom, timeline of the experimental paradigm. Grey lines indicate the days that are relevant to the above label; arrows indicate that food deprivation began at the end of exposures on Acclimation day 3 (A3) and that sensory manipulations were performed after testing trials ended on D4. (B) Top, likelihood of successful capture within 10 min of exposure to a cricket in the arena. By D3, mice reach 100% capture success. Bottom, mean capture times averaged over three trials per mouse, per day, for successful capture trials on days 1–5 within the arena (D1–D5). Inset, the mean capture times on the final three days are plotted on an expanded time scale because of the 10-fold reduction in capture times from the first day of arena exposure. Data are median ± bootstrapped SEM, n = 47 mice; * = p<0.05, *** = p<0.01, one-way ANOVA with Tukey-Kramer HSD posthoc, and χ² goodness-of-fit test applied to p(Capture success) data. (C) Frames from a movie of a prey capture trial depicting a mouse orienting towards (T = −2.25 sec), approaching (T = −1.25 sec) and intercepting (T = 0) a prey target. Times relative to prey interception are shown in the upper right-hand corner of each panel. The blue line shows the path of the mouse and the green line shows the path of the cricket.

Importantly, avoidance behavior quickly receded with repeated exposure to the arena. After three days of capture trials, nearly all the mice tested (96.4%, 53/55) captured prey reliably (Figure 1B and 1C). Capture performance was deemed reliable if 3 sequential trials each ended in capture of the cricket in under 30 seconds (see Supplemental Video 1 and Supplemental Experimental Procedures). Prey capture performance, as measured by time-to-capture, reached asymptote at 13 ± 1.1 sec on D4 of hunting in the arena and was stable on D5 (Figure 1B, lower panel). Together, these results demonstrate that the commonly employed C57BL/6J strain of mice exhibits robust prey capture behavior, and that only three days of exposure to insects plus three days of contextual acclimation are required to optimize the behavior for measurement in a controlled setting.
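Group capture times in this section are reported as median ± bootstrapped SEM. The resampling details are not given here, so the following is only a minimal Python sketch of one common approach, assuming the SEM is the standard deviation of the medians of many resamples drawn with replacement. The function name, the number of resamples and the example capture times are hypothetical and are not data from this study.

```python
import random
import statistics


def bootstrap_sem_of_median(values, n_boot=2000, seed=0):
    """Return (median, bootstrapped SEM of the median) for a list of numbers.

    Assumption: the SEM is estimated as the standard deviation of the medians of
    n_boot resamples, each drawn with replacement and the size of the original sample.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    n = len(values)
    boot_medians = []
    for _ in range(n_boot):
        resample = [values[rng.randrange(n)] for _ in range(n)]
        boot_medians.append(statistics.median(resample))
    return statistics.median(values), statistics.stdev(boot_medians)


# Hypothetical per-mouse capture times (s); illustrative values only
capture_times = [9.5, 11.0, 12.2, 13.0, 13.4, 14.1, 15.8, 17.0]
med, sem = bootstrap_sem_of_median(capture_times)
print(f"{med:.1f} ± {sem:.1f} s")
```

Reporting the median with a bootstrapped spread avoids assuming that capture times are normally distributed, which matters for skewed measures such as time-to-capture.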

Vision is necessary for efficient prey capture performance

We next sought to establish which senses C57BL/6J mice use to detect, pursue and capture live prey. We compared prey capture performance under conditions where we either eliminated visual cues by allowing mice to capture prey in darkness (Supplemental Video 2), or eliminated auditory cues by implanting ear plugs [11] (Supplemental Video 3). We also tested performance when we eliminated both hearing and vision by testing ear plugged animals in the dark (Supplemental Video 4). Testing was performed on Day 5, after four days of acclimation to capturing crickets in the arena (Figure 1A).

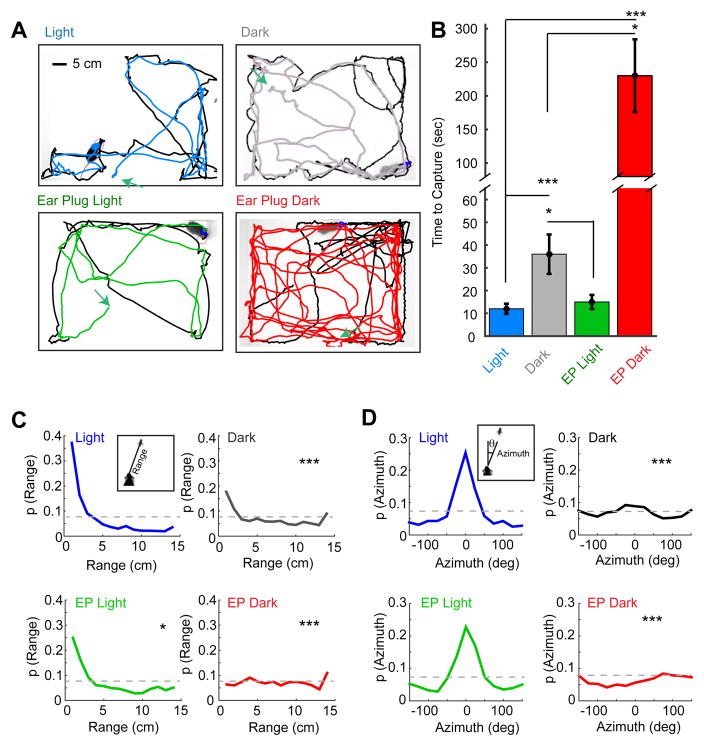

We observed striking impairments in prey capture behavior in the dark (Dark) and ear plug dark (EP Dark) conditions relative to the baseline light (Light) and ear plug only (EP Light) conditions (Figure 2A). Mice took over three times as long to capture a cricket in the Dark condition as in the Light condition (Figure 2B; Light: 11 ± 2 s, Dark: 36 ± 9 s, p<0.001). Capture time in the EP Dark condition was dramatically longer than in the Dark condition (230 ± 56 s vs. 36 ± 9 s, p<0.05). This difference confirms the effectiveness of the ear plug manipulation and demonstrates that hearing can contribute to prey capture behavior in the absence of vision. However, loss of hearing alone in the EP Light condition had little effect on capture performance relative to the Light condition (Figure 2B; EP Light: 15 ± 3 s, Light: 11 ± 2 s, p>0.05).

Figure 2. Selective sensory perturbation demonstrates that vision is necessary for efficient prey capture performance.

(A) Representative paths traveled by the mouse and cricket during a single capture trial performed under each of four sensory conditions. The paths of the mice are colored by condition and prey trajectories are black. Green arrows depict starting locations for the mouse in each trial. Scale bar equals 5 cm. (B) Capture time for four groups of mice differentially tested in each of the four sensory conditions. (C–D) Trial-averaged probability density functions for range (C) and azimuth (D) in the four sensory conditions. Insets depict definitions of range and azimuth. Grey lines indicate chance performance. Time-to-capture group data are median ± bootstrapped SEM, n=16, 10, 8 and 8 mice and n=23, 20, 16 and 16 trials for the Light, Dark, EP Light and EP Dark conditions, respectively; *=p<0.05, ***=p<0.001, one-way ANOVA with Tukey-Kramer HSD posthoc. Differences between each sensory manipulation and the baseline condition (Light, D5) were tested with two-sample Kolmogorov-Smirnov tests. The difference between Dark and EP Dark was also tested (range: p<0.01; azimuth: p<0.05; two-sample Kolmogorov-Smirnov test; n=20 and 16 trials, respectively).

See also Figure S1, Figure S2, Movie S1, Movie S2, Movie S3 and Movie S4.

Importantly, we observed no significant difference across conditions in the amount of time mice spent in a stationary state (Light: 1.8 ± 0.75%, Dark: 1.5 ± 0.53%, EP Light: 2.4 ± 0.82%, EP Dark: 2.1 ± 0.42%, p>0.05) or in the average locomotor speed (Light: 16.4 ± 2.6 cm/sec, Dark: 16.0 ± 2.1 cm/sec, EP Light: 14.6 ± 2.2 cm/sec, EP Dark: 15.7 ± 2.1 cm/sec) when the mouse was not contacting the cricket. In addition, all mice consumed the cricket following capture. This suggests that impaired performance is not due to differences in level of activity or motivation.

To more clearly understand how vision contributes to efficient prey capture, we quantified the orienting behaviors of the mouse. We measured the distance between the mouse’s head and the cricket, termed “range”, and the angular position of the cricket relative to the bearing of the mouse’s head, termed “azimuth”, across the entirety of each capture trial (Figure 2C and 2D). Consistent with the capture-time data, we found that mice spent significantly less time at close range (< 4 cm) under conditions where vision was impaired (Figure 2C). In particular, mice had nearly randomly distributed range to the target when they could neither see nor hear. This suggests a limited role for olfactory and tactile cues in supporting distal orienting behaviors during prey capture under our testing conditions. In the absence of vision, hearing does allow mice to increase the likelihood of being within contact range (< 4 cm), compared to the absence of both vision and hearing (p<0.01, χ², n=20 and 16).
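The range and azimuth definitions can be made concrete with a short Python sketch. It assumes tracked positions in cm in a fixed arena frame and a head bearing measured counterclockwise from the +x axis; how head bearing was actually estimated from the video is not described here, and the function name is hypothetical.

```python
import math


def range_and_azimuth(head_xy, heading_deg, cricket_xy):
    """Return (range, azimuth in degrees) of the cricket relative to the mouse's head.

    Assumptions: positions are in cm in a fixed arena frame and heading_deg is the
    head bearing measured counterclockwise from the +x axis. Azimuth is wrapped to
    [-180, 180); 0 means the cricket lies straight ahead.
    """
    dx = cricket_xy[0] - head_xy[0]
    dy = cricket_xy[1] - head_xy[1]
    distance = math.hypot(dx, dy)
    bearing_to_cricket = math.degrees(math.atan2(dy, dx))
    azimuth = (bearing_to_cricket - heading_deg + 180.0) % 360.0 - 180.0
    return distance, azimuth


r, az = range_and_azimuth((0.0, 0.0), 0.0, (3.0, 4.0))
print(round(r, 2), round(az, 1))  # 5.0 53.1
```

Wrapping the angle difference keeps a cricket just to one side of a mouse heading near 0°/360° at a small azimuth instead of one near ±360°.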

One of the most striking observations is that mice maintained a precise bearing centered on the target in lighted conditions. In particular, prey azimuths were sharply centered on 0° when mice could see (Figure 2D). In contrast, when mice could neither see nor hear, the relative angular position of the prey appeared random and this distribution was significantly different from all the other conditions tested. The distributions of range and azimuth were significantly different between Dark and EP Dark conditions demonstrating that hearing facilitates prey capture orienting behaviors when vision is absent. As with the capture-time data, the range and azimuth data suggest that vision is the primary modality driving efficient prey capture behavior, and that hearing may play a role when vision is absent.
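The comparisons between range and azimuth distributions use the two-sample Kolmogorov-Smirnov test (Figure 2 legend). As a minimal sketch, the test statistic is the largest vertical gap between the two empirical cumulative distribution functions; the example azimuth samples below are hypothetical, and how samples were pooled across frames or trials in the original analysis is not stated here.

```python
def ks_2samp_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap between
    the empirical CDFs of samples a and b."""
    a, b = sorted(a), sorted(b)
    na, nb = len(a), len(b)
    i = j = 0
    d = 0.0
    while i < na and j < nb:
        x = min(a[i], b[j])
        # Step both empirical CDFs past every value equal to x (handles ties)
        while i < na and a[i] <= x:
            i += 1
        while j < nb and b[j] <= x:
            j += 1
        d = max(d, abs(i / na - j / nb))
    # Once one sample is exhausted its CDF equals 1 and the gap can only shrink,
    # so no further points need to be checked.
    return d


# Hypothetical azimuths (deg): tightly centred on 0 versus widely spread
light = [-8, -3, 0, 2, 5, 9]
dark = [-80, -45, -10, 20, 60, 85]
print(ks_2samp_statistic(light, dark))  # 0.5
```

In practice a library routine such as `scipy.stats.ks_2samp` returns both this statistic and a p-value; the hand-written version only shows what is being measured.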

To determine the cues available during prey capture, we made audio recordings of the crickets in the arena. Acoustic analysis showed that crickets never chirped, but did produce audible cues approximately 5–10 dB above the background when they moved over the substrate (see Supplemental Experimental Procedures and Supplemental Figure 2). We also tested whether motion was necessary for capture in the light by measuring performance with immobile targets (fresh-frozen crickets). Under lit conditions, mice contacted immobile targets in times comparable to their first contact with live crickets (8.06 ± 1.08 vs. 7.6 ± 1.34 seconds, N=8, p>0.05, Mann-Whitney U). Therefore, motion was not necessary to produce optimal prey capture behavior in the light, but auditory cues were available to the mouse when prey moved.
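To put the 5–10 dB figure in perspective, a level difference in decibels can be converted to a ratio. Assuming the values are sound pressure level differences (20·log10 of the pressure ratio), 5–10 dB corresponds to roughly 1.8–3.2 times the background pressure amplitude, or about 3–10 times the acoustic power. The helper names in this Python sketch are hypothetical.

```python
def db_to_pressure_ratio(db):
    """Sound-pressure (amplitude) ratio for a level difference in dB: 10**(dB/20)."""
    return 10 ** (db / 20)


def db_to_power_ratio(db):
    """Acoustic power (intensity) ratio for a level difference in dB: 10**(dB/10)."""
    return 10 ** (db / 10)


for level in (5, 10):
    print(level, round(db_to_pressure_ratio(level), 2), round(db_to_power_ratio(level), 2))
# 5 1.78 3.16
# 10 3.16 10.0
```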

To verify that darkness specifically disrupts performance via visual impairment of the mouse, we also sutured the mouse’s eyelids closed. We saw no significant difference among the three conditions in which vision was impaired: Dark, eye-sutured light, and eye-sutured dark (Supplemental Figure 1A & B). This demonstrates that the impairment of prey capture performance in the dark was not due to factors such as changes to cricket behavior, and it confirms the effectiveness of our visual manipulations.

Vision guides accurate and precise orienting behaviors

Given the profound effect vision had on overall prey capture efficiency throughout the duration of a trial, we next sought to quantify how visual information was driving orienting behavior during individual approach events. By examining range, azimuth and mouse speed prior to target contact, we identified behavioral criteria that successfully detected approach epochs. The co-occurrence of a consistent decrease in range and an absolute azimuth of less than 90 degrees, while the mouse was moving at speeds greater than 5 cm/sec, predicted 100% of target interceptions under baseline conditions (Figure 3A). We therefore used these as criteria for defining an approach start. Importantly, many interceptions do not result in a final capture. Therefore, we also differentiate between an “interception”, where the mouse successfully reaches the location of the prey, and a “capture”, where the mouse successfully grabs and consumes the prey.
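The approach criteria above can be sketched as a per-frame filter followed by a search for runs of qualifying frames. This Python sketch is illustrative only: it interprets "consistent decrease in range" as range decreasing on every frame for at least `min_frames` consecutive frames, and `min_frames`, the function name and the example traces are hypothetical.

```python
def find_approach_epochs(range_cm, azimuth_deg, speed_cm_s,
                         speed_thresh=5.0, max_abs_az=90.0, min_frames=3):
    """Return (start, end) frame indices, inclusive, of candidate approach epochs.

    A frame qualifies when range decreased since the previous frame, the absolute
    azimuth is below max_abs_az and speed exceeds speed_thresh. Runs of at least
    min_frames qualifying frames are reported; min_frames is a hypothetical stand-in
    for "consistent decrease in range".
    """
    n = len(range_cm)
    qualifies = [False] * n  # frame 0 has no previous frame to compare against
    for t in range(1, n):
        qualifies[t] = (range_cm[t] < range_cm[t - 1]
                        and abs(azimuth_deg[t]) < max_abs_az
                        and speed_cm_s[t] > speed_thresh)
    epochs = []
    start = None
    for t, ok in enumerate(qualifies):
        if ok and start is None:
            start = t  # a qualifying run begins
        elif not ok and start is not None:
            if t - start >= min_frames:
                epochs.append((start, t - 1))
            start = None
    if start is not None and n - start >= min_frames:
        epochs.append((start, n - 1))  # run continues to the last frame
    return epochs


# Hypothetical per-frame traces: range (cm), azimuth (deg), speed (cm/s)
rng_cm = [30, 28, 25, 21, 18, 18, 17, 15, 13, 11]
az_deg = [10] * 10
spd = [20] * 10
print(find_approach_epochs(rng_cm, az_deg, spd))  # [(1, 4), (6, 9)]
```

Requiring all three conditions at once excludes frames where the mouse drifts toward the cricket slowly or while facing away from it.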

Figure 3. Accurate approach behavior during prey capture requires vision.

(A) From left to right: range, azimuth and mouse speed over the time preceding the prey contact event shown in Figure 1C. The “approach epoch” is shown as a blue trace overlaid on each exemplary behavioral trace. (B) The median number of approaches per minute, averaged over trials. (C) Probability that an approach leads to prey interception, averaged over trials. (D) Probability that an interception will end in a final capture, averaged over trials. (E) The mean azimuth at a given range during the mouse’s approach under the four different sensory conditions. (F) The mean absolute azimuth at the end of each approach for each sensory condition. Azimuth data are mean ± SEM and all other grouped data are median ± bootstrapped SEM, n=23, 20, 16 and 16 trials and n=47, 100, 46 and 119 approaches for the Light, Dark, EP Light and EP Dark conditions, respectively; *=p<0.05, **=p<0.01, ***=p<0.001, one-way ANOVA with Tukey-Kramer HSD posthoc.

See also Figure S1.

We found that the average frequency of initiating approaches, measured as the number of approaches per minute, was significantly greater under both sighted conditions as compared to both dark conditions (Figure 3B). Further, the likelihood that an approach would end with target interception was significantly higher in the light conditions (Figure 3C). Taken together, these results indicate that vision is important for both prey detection and successful targeting during approaches. Surprisingly, we saw no significant differences in these measures of approach between the Dark and EP Dark conditions, further suggesting that hearing does not play a significant role in the long distance approach phase of prey capture.

Although hearing did not affect the accuracy of individual approaches, the total capture time increased dramatically when hearing was removed in the dark. To understand this, we analyzed the probability of a successful capture given an interception event, p(Capture | Interception) (Figure 3D). This analysis showed that mice performed equally well in the control condition and with either visual or auditory deprivation alone. However, when both visual and auditory cues were removed, the probability of capture following an interception dropped threefold (Figure 3D). Moreover, when we compared the duration of each interception that did not lead to capture, mice stayed in contact (within 4 cm) significantly longer when hearing was available (Dark: 1.1 ± 0.2 sec, EP Dark: 0.5 ± 0.1 sec, p < 0.05, Kruskal-Wallis with Tukey-Kramer HSD posthoc). These data argue that either visual or auditory cues can facilitate successful near-range pursuit and final capture after the target has been contacted. They also explain the increase in total time to capture when both sensory modalities are removed.
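The conditional probability p(Capture | Interception) is the fraction of interceptions that end in capture. The Python sketch below computes it per trial and summarizes trials by their median, matching the "median ± bootstrapped SEM" convention of the Figure 3 legend; whether the original analysis averaged per-trial ratios or pooled counts is an assumption, and the counts and function name are hypothetical.

```python
import statistics


def p_capture_given_interception(trials):
    """Median over trials of (captures / interceptions).

    trials: list of (n_interceptions, n_captures) per trial. Trials with no
    interceptions are skipped because the conditional probability is undefined.
    """
    per_trial = [caps / ints for ints, caps in trials if ints > 0]
    return statistics.median(per_trial)


# Hypothetical counts; each trial ends in at most one capture
trials = [(2, 1), (1, 1), (4, 1), (0, 0)]
print(p_capture_given_interception(trials))  # 0.5
```

Conditioning on interception separates the final grab from the approach itself, which is why this measure can drop in EP Dark even though approach accuracy is similar to Dark.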

We next sought to determine the distance at which approaches are triggered and to measure the accuracy of each approach. The trajectories of approaches were determined by calculating the azimuth as a function of range for each sensory condition. At ranges of 15–20 cm or less, prey azimuth decreased significantly under the lit conditions (Figure 3E). Furthermore, in the light, mice maintained a precise bearing as they closed in on the target (11.4 ± 1.4° at end of approach, Figure 3F). The accuracy of targeting in the dark (41.1 ± 1.2°, Figure 3F) was near chance: because the absolute azimuth must be less than 90° for a movement to qualify as an approach, a bearing that is random within that range would give a mean absolute azimuth of 45°. Altogether, the analysis of approaches demonstrates that vision is necessary for highly accurate targeting during the approach.
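The 45° chance level can be written out explicitly. Assuming that, without useful cues, the azimuth θ at the end of an approach is uniformly distributed over the range allowed by the approach criterion (|θ| < 90°), its expected absolute value is:

```latex
\mathbb{E}\left[\,|\theta|\,\right]
  = \frac{1}{180^\circ}\int_{-90^\circ}^{90^\circ} |\theta|\,d\theta
  = \frac{2}{180^\circ}\cdot\frac{(90^\circ)^2}{2}
  = 45^\circ
```

Under this assumption, the 41.1° measured in the dark lies close to the 45° expected from an undirected bearing, whereas the 11.4° measured in the light lies far below it.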

Vision is necessary for prey detection and accurate approach in a modified prey capture paradigm

The demonstration that C57BL/6J mice use vision to perform prey capture opens up the potential to study the visual neural circuitry that underlies this behavior. To facilitate these efforts, we developed a simplified approach paradigm that relies on vision. In this assay, we placed live crickets behind a clear acrylic barrier that attenuated non-visual cues (Figure 4A). Furthermore, restricting the cricket to a 1-dimensional path simplified the analysis of approaches. We quantified the horizontal distance between mouse head position and cricket position during approaches, which we term “lateral error” (Figure 4B, inset). Performance in this modified paradigm was assessed on day 5 of the training protocol, identical to the timeline used in the open-field condition. Therefore, the mice were experienced in prey capture but naïve to encountering prey behind an acrylic barrier.
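Lateral error and the "within 4 cm" success criterion can be summarized as in the Python sketch below. It assumes x is measured in cm along the barrier and increases toward the mouse's left as it faces the barrier, so that positive errors mean the prey was to the mouse's left, as in the Figure 4 legend; the function name and the example positions are hypothetical.

```python
def summarize_barrier_contacts(mouse_x, cricket_x, window_cm=4.0):
    """Signed lateral errors at barrier contact, their mean absolute value, and the
    fraction of contacts within window_cm of the cricket.

    Assumption: x is measured in cm along the barrier and increases toward the
    mouse's left as it faces the barrier, so positive errors mean the prey was to
    the mouse's left (the sign convention of the Figure 4 legend).
    """
    errors = [c - m for m, c in zip(mouse_x, cricket_x)]
    mean_abs = sum(abs(e) for e in errors) / len(errors)
    frac_within = sum(abs(e) < window_cm for e in errors) / len(errors)
    return errors, mean_abs, frac_within


# Hypothetical head and cricket x positions (cm) at three barrier contacts
errors, mean_abs, frac = summarize_barrier_contacts([10.0, 20.0, 5.0], [11.0, 17.0, 15.0])
print(errors, round(mean_abs, 2), round(frac, 2))  # [1.0, -3.0, 10.0] 4.67 0.67
```

Keeping the signed errors allows left/right biases to be checked, while the absolute errors measure overall accuracy.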

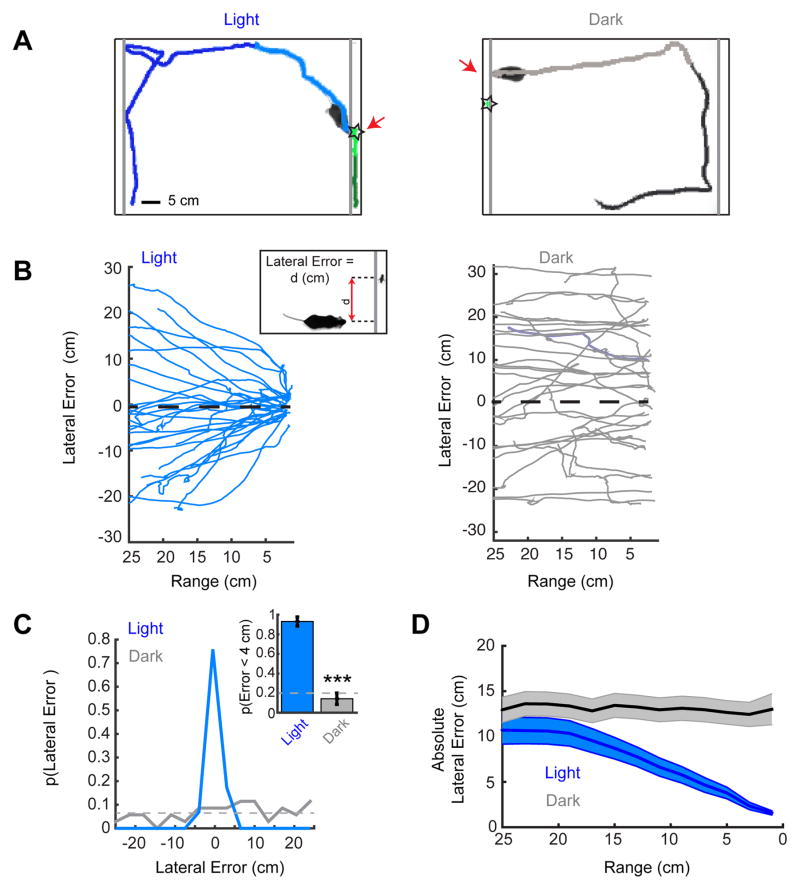

Figure 4. An approach paradigm restricted to the visual modality.

(A) Representative trials with the prey behind an acrylic barrier for the light (left) and dark (right) conditions. Grey lines indicate clear, acrylic barrier locations. The approach sequences are highlighted as lighter shading of the mouse’s path. Stars indicate the prey’s location when the mouse contacts the barrier. Scale bar equals 5 cm. (B) Lateral error as a function of range from the cricket for all of the mouse’s approaches to the barrier in the Light (left) and Dark (right). Inset, lateral error was defined as the horizontal distance (d) that separated the location of the mouse’s head from the location of the cricket. Positive differences denote that prey was to the left of the mouse and negative values to the right of the mouse. (C) Probability density functions of lateral errors at barrier contact in Light and Dark conditions. Inset, the probability that the mouse will contact the barrier at a location with a lateral error of less than 4 cm (~2 cricket body lengths) from cricket. Grey dashed lines indicate chance performance levels. (D) The mean absolute lateral error as a function of range during approach for Light and Dark conditions. Group absolute error data are mean ± SEM, n=13 and 13 mice and n=29 and 35 trials/approaches for the Light and Dark conditions respectively; ***=p<0.001, Mann-Whitney U.

In the light, when mice were on the side of the arena with the cricket they nearly always directly approached and investigated the cricket’s location (Figure 4B, left panel and Supplemental Video 5), even though they could not actually contact or capture the cricket. On average, mice contacted the barrier within a lateral error of 1.6 ± 0.2 cm of the target, or approximately the length of a cricket (Figure 4C). Further, 93 ± 5% of mice made contact at the barrier with a lateral error of less than 4 cm from target (Figure 4C, inset).

In contrast, when mice were placed in the arena in the dark, their paths appeared directed to random locations along the acrylic barrier (Figure 4B, right panel and Supplemental Video 6). They contacted the barrier on average 13.5 ± 1.4 cm from the target (Figure 4C). This is almost half the length of the barrier, suggesting that barrier contact locations arose randomly. Furthermore, the mice made a “successful” contact on only 14 ± 6% of approaches, compared to the 20% predicted by chance based on geometry (p > 0.05, χ², n=35, Figure 4C, inset). They also failed to modify their approach trajectory relative to the prey as they approached the barrier (Figure 4D). Thus, vision is necessary for successful approach behavior in this paradigm, as no other sensory modality could substitute for vision to allow performance above chance levels. Furthermore, the targeting we observed in the light is highly accurate. This demonstrates that the paradigm produces robust, quantifiable visual orienting behaviors in the mouse.
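The chance levels above depend on barrier geometry that is not specified in this section. Purely as an illustration, the Python sketch below uses one simple null model, assuming the mouse's contact point and the cricket's position are independent and uniformly distributed along a barrier of length L. Under that model P(|error| < w) = 1 − (1 − w/L)² and the mean absolute error is L/3. The 40 cm barrier length and the function names are hypothetical, not the arena's actual dimensions or the authors' calculation.

```python
import random


def chance_within_window(barrier_len_cm, window_cm=4.0):
    """Closed-form P(|X - Y| < w) for X, Y independent and Uniform(0, L)."""
    if window_cm >= barrier_len_cm:
        return 1.0
    return 1.0 - (1.0 - window_cm / barrier_len_cm) ** 2


def simulate_chance(barrier_len_cm, window_cm=4.0, n=100_000, seed=1):
    """Monte Carlo check of the same null model; returns (P(|error| < w), mean |error|)."""
    rng = random.Random(seed)
    hits = 0
    total = 0.0
    for _ in range(n):
        err = abs(rng.uniform(0, barrier_len_cm) - rng.uniform(0, barrier_len_cm))
        hits += err < window_cm
        total += err
    return hits / n, total / n


L = 40.0  # hypothetical barrier length (cm)
print(round(chance_within_window(L), 3))  # 0.19
print(simulate_chance(L))  # close to (0.19, L / 3)
```

A different null model (for example, a cricket fixed at the barrier centre) gives a different chance level, so the geometry behind any quoted chance value should be stated alongside it.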

Examining the lateral error during approaches shows that mice began deviating toward the target at a range of 15–20 cm, similar to the range at which they deviated toward the target in the open arena (Figure 3E). Given that the crickets are approximately 2 cm in length, a rough estimate of the angular size at which vision begins to guide approach at 15–20 cm is 6–8°. This is consistent with the receptive field diameter for many retinal ganglion cell types, although larger than the limits of visual acuity as assessed with grating stimuli [12–15]. Thus, this assay provides an estimate of the operating range of mouse vision that drives accurate approach behavior in a natural context. In future experiments it will be possible to further explore the visual parameter space that drives prey detection and accurate orientation.
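The 6–8° estimate follows from the visual angle subtended by an object of size s at distance d, 2·atan(s / 2d). This Python sketch assumes the cricket is seen broadside, so its full 2 cm length is perpendicular to the line of sight; any other orientation would subtend a smaller angle. The function name is hypothetical.

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Full angle (deg) subtended by an object of a given size viewed face-on at a distance."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


cricket_len_cm = 2.0  # approximate cricket length from the text
for d in (15.0, 20.0):
    print(d, round(visual_angle_deg(cricket_len_cm, d), 1))
# 15.0 7.6
# 20.0 5.7
```

At these distances the small-angle approximation s/d (in radians) gives nearly the same values, since the cricket is small relative to the range.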

Discussion

Here, we demonstrate that C57BL/6J mice pursue and capture live insect prey. Quantification of behavior under differing sensory conditions established that vision was necessary for accurate and efficient approaches. Most previous visual behavioral paradigms with laboratory mice have either relied on non-ethological operant training [1,16–19] or navigation, including in virtual reality [20,21]. A few recent studies of ethological mouse visual behavior have focused on visually-driven fear responses to simple stimuli such as looming or light flashes [3,4]. Therefore, our demonstration that mice use vision to detect prey stimuli and drive precise visually-guided motor output significantly advances our understanding of mouse visual neuroethology.

This study also revealed that auditory cues play a role in mouse prey capture behavior at short target distances, although they were not sufficient for accurate long-range approaches in our paradigm. This may be because the auditory cues generated by spontaneous cricket movement were relatively weak at distances where vision could guide approaches (Supplemental Figure 2). It remains possible that mice could use audition more effectively after learning through extended experience in the dark, or when pursuing targets that generate more salient sounds [22,23]. Different environmental conditions could further affect the use of auditory cues, as the flooring substrate and acrylic walls of our arena may attenuate or reflect the sounds made by the cricket. Our results also suggest that olfactory and tactile cues are insufficient for effective orientation behavior at a distance. However, olfaction may still play a role in motivation, and mouse strains with poor visual acuity have demonstrated relatively enhanced olfactory capabilities [24,25]. Thus, it will be interesting in future experiments to explore the conditions under which the various distance senses may modulate laboratory mouse prey capture performance.

Although prey capture is more ethological than other recent operant visual tasks, freely-moving behaviors introduce additional challenges to visual neurophysiology and imaging experiments relative to behaviors that can be performed in a head-fixed configuration. However, the development of head-mounted imaging systems [26] and eye-tracking [27] may address these challenges. It may also be possible to establish head-fixed prey capture tasks using a spherical treadmill with virtual stimuli [28].

Visual control of prey capture across species

The investigation of prey capture behavior in many species has advanced our understanding of the neural basis of visual processing and sensory-motor integration. However, we have yet to obtain a detailed circuit-level understanding of the visual processing that underlies prey capture in the mammalian brain. Despite the advantages provided by the genetic tools available in the mouse, laboratory mice have not previously been used to study prey capture. Of note, a carnivorous species of mouse, the grasshopper mouse (Onychomys leucogaster), has been well studied and is known to use vision for prey capture [8]. However, this wild species has been subject to extreme environmental selective pressure and has acquired several physical and behavioral adaptations that are unique to the species and specifically aid prey capture. In contrast, M. musculus is more often considered to be a nocturnal prey species rather than a predator [29]. Nevertheless, house mice do consume invertebrates in the wild [30] and are active at dawn and dusk as well as at night, consistent with our finding that they use vision for prey capture.

Studies of prey capture in non-mammalian species suggest a role for the superior colliculus in the behavior we observe here. Classic work investigating prey capture versus object avoidance in toads demonstrated that distinct visual pathways are required to produce the two types of visually guided behaviors: the optic tectum (non-mammalian homolog of the superior colliculus) mediates prey capture and the pre-tectum mediates avoidance [31–33]. In addition, recent work in the zebrafish, a genetically tractable species, has established a role for specific retinal ganglion cell types in triggering approach, as well as defined inhibitory cell types within the optic tectum that provide the size tuning that distinguishes prey versus predator stimuli [34].

In rodents, lesion and microstimulation experiments in the superior colliculus have shown striking effects on orienting and alerting behavior [6,35,36]. These behaviors are thought to underlie prey capture, and these findings argue for significant conservation of visual system structure and function across vertebrate species. Moreover, recent studies of predator avoidance behavior in mice have revealed that cortex can modulate the processing of visual stimuli that drive innate behaviors in the superior colliculus [4,5]. Therefore, an important future goal will be to understand how defined neural circuits within both the superior colliculus and cortex contribute to prey capture, from stimulus detection to visually-guided locomotor output.


Acknowledgments

We thank Dolly Zhen, Greg Smithers and Emily Galletta for their work on behavioral data collection and tracking. We are grateful to Dr. Judith Eisen, Dr. Sunil Gandhi, Dr. Clifford Keller, and Dr. Matt Smear for their careful review of manuscript drafts, and to members of the Niell, Wehr, and Takahashi labs for helpful discussions. This work was supported by NIH grants F32EY24179 (JLH) and R01EY023337 (CMN).

Footnotes

Author Contributions

J.L.H. conceived the project. J.L.H., M.W. and C.M.N. designed the experiments. J.L.H. and I.Y. refined experimental approaches. J.L.H. performed and supervised behavioral experiments, scoring and tracking. J.L.H., C.M.N. and M.W. analyzed and interpreted the data. J.L.H. and C.M.N. co-wrote the manuscript. J.L.H., I.Y., M.W. and C.M.N. finalized the manuscript.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Carandini M, Churchland AK. Probing perceptual decisions in rodents. Nat Neurosci. 2013;16:824–31. doi: 10.1038/nn.3410.

- 2. Huberman AD, Niell CM. What can mice tell us about how vision works? Trends Neurosci. 2011;34:464–473. doi: 10.1016/j.tins.2011.07.002.

- 3. Yilmaz M, Meister M. Rapid innate defensive responses of mice to looming visual stimuli. Curr Biol. 2013;23:2011–2015. doi: 10.1016/j.cub.2013.08.015.

- 4. Liang F, Xiong XR, Zingg B, Ji XY, Zhang LI, Tao HW. Sensory Cortical Control of a Visually Induced Arrest Behavior via Corticotectal Projections. Neuron. 2015;86:755–767. doi: 10.1016/j.neuron.2015.03.048.

- 5. Zhao X, Liu M, Cang J. Visual cortex modulates the magnitude but not the selectivity of looming-evoked responses in the superior colliculus of awake mice. Neuron. 2014;84:202–213. doi: 10.1016/j.neuron.2014.08.037.

- 6. Dean P, Redgrave P. The superior colliculus and visual neglect in rat and hamster. I. Behavioural evidence. Brain Res Rev. 1984;8:129–141. doi: 10.1016/0165-0173(84)90002-x.

- 7. Polsky R. Influence of Eating Dead Prey on Subsequent capture of live Prey in Golden hamsters. Physiol Behav. 1978;20:677–680. doi: 10.1016/0031-9384(78)90291-3.

- 8. Langley WM. Grasshopper mouse’s use of visual cues during a predatory attack. Behav Processes. 1989;19:115–125. doi: 10.1016/0376-6357(89)90035-1.

- 9. Tennant KA, Asay AL, Allred RP, Ozburn AR, Kleim JA, Jones TA. The vermicelli and capellini handling tests: simple quantitative measures of dexterous forepaw function in rats and mice. J Vis Exp. 2010:1–6. doi: 10.3791/2076.

- 10. Bailey KR, Crawley JN. Anxiety-Related Behaviors in Mice. Methods Behav Anal Neurosci. 2009:13.

- 11. Karcz A, Allen PD, Walton J, Ison JR, Kopp-Scheinpflug C. Auditory deficits of Kcna1 deletion are similar to those of a monaural hearing impairment. Hear Res. 2015;321:45–51. doi: 10.1016/j.heares.2015.01.003.

- 12. Prusky GT, Douglas RM. Characterization of mouse cortical spatial vision. Vision Res. 2004:3411–3418. doi: 10.1016/j.visres.2004.09.001.

- 13. Bleckert A, Schwartz GW, Turner MH, Rieke F, Wong ROL. Visual space is represented by nonmatching topographies of distinct mouse retinal ganglion cell types. Curr Biol. 2014;24:310–315. doi: 10.1016/j.cub.2013.12.020.

- 14. Jeon CJ, Strettoi E, Masland RH. The major cell populations of the mouse retina. J Neurosci. 1998;18:8936–8946. doi: 10.1523/JNEUROSCI.18-21-08936.1998.

- 15. Farrow K, Masland RH. Physiological clustering of visual channels in the mouse retina. J Neurophysiol. 2011;105:1516–1530. doi: 10.1152/jn.00331.2010.

- 16. Andermann ML, Kerlin AM, Reid RC. Chronic cellular imaging of mouse visual cortex during operant behavior and passive viewing. Front Cell Neurosci. 2010;4:3. doi: 10.3389/fncel.2010.00003.

- 17. Zhang S, Xu M, Kamigaki T, Hoang Do JP, Chang WC, Jenvay S, Miyamichi K, Luo L, Dan Y. Selective attention. Long-range and local circuits for top-down modulation of visual cortex processing. Science. 2014;345:660–665. doi: 10.1126/science.1254126.

- 18. Busse L, Ayaz A, Dhruv NT, Katzner S, Saleem AB, Scholvinck ML, Zaharia AD, Carandini M. The detection of visual contrast in the behaving mouse. J Neurosci. 2011;31:11351–11361. doi: 10.1523/JNEUROSCI.6689-10.2011.

- 19. Keller GB, Bonhoeffer T, Hübener M. Sensorimotor Mismatch Signals in Primary Visual Cortex of the Behaving Mouse. Neuron. 2012;74:809–815. doi: 10.1016/j.neuron.2012.03.040.

- 20. Morris RGM. Spatial localization does not require the presence of local cues. Learn Motiv. 1981;12:239–260.

- 21. Youngstrom IA, Strowbridge BW. Visual landmarks facilitate rodent spatial navigation in virtual reality environments. Learn Mem. 2012;19:84–90. doi: 10.1101/lm.023523.111.

- 22. Whitchurch EA, Takahashi TT. Combined auditory and visual stimuli facilitate head saccades in the barn owl (Tyto alba). J Neurophysiol. 2006;96:730–745. doi: 10.1152/jn.00072.2006.

- 23. Knudsen EI. Instructed learning in the auditory localization pathway of the barn owl. Nature. 2002;417:322–328. doi: 10.1038/417322a.

- 24. Brown RE, Wong AA. The influence of visual ability on learning and memory performance in 13 strains of mice. Learn Mem. 2007;14:134–144. doi: 10.1101/lm.473907.

- 25. Felsen G, Mainen ZF. Neural Substrates of Sensory-Guided Locomotor Decisions in the Rat Superior Colliculus. Neuron. 2008;60:137–148. doi: 10.1016/j.neuron.2008.09.019.

- 26. Barretto RPJ, Ko TH, Jung JC, Wang TJ, Capps G, Waters AC, Ziv Y, Attardo A, Recht L, Schnitzer MJ. Time-lapse imaging of disease progression in deep brain areas using fluorescence microendoscopy. Nat Med. 2011;17:223–8. doi: 10.1038/nm.2292.

- 27. Wallace DJ, Greenberg DS, Sawinski J, Rulla S, Notaro G, Kerr JND. Rats maintain an overhead binocular field at the expense of constant fusion. Nature. 2013;498:65–9. doi: 10.1038/nature12153.

- 28. Dombeck DA, Harvey CD, Tian L, Looger LL, Tank DW. Functional imaging of hippocampal place cells at cellular resolution during virtual navigation. Nat Neurosci. 2010;13:1433–40. doi: 10.1038/nn.2648.

- 29. Jensen P. The ethology of domestic animals. Etol los Anim Domest. 2004:250.

- 30. Whitaker JO. Food of Mus musculus, Peromyscus maniculatus bairdi and Peromyscus leucopus in Vigo County, Indiana. J Mammal. 1966;47:473–486.

- 31. Borchers HW, Burghagen H, Ewert JP. Key stimuli of prey for toads (Bufo bufo L.): Configuration and movement patterns. J Comp Physiol A. 1978;128:189–192.

- 32. Ewert JP. Quantitative analysis of stimulus-reaction relations in the visually released orienting component of the prey-catching behavior of the common toad (Bufo bufo L.). Pflugers Arch. 1969;308:225–43. doi: 10.1007/BF00586556.

- 33. Ingle DJ, Sprague JM. Sensori-motor functions of the midbrain tectum. Neurosci Res Progr Bull. 1975;13:169–288.

- 34. Filosa A, Barker AJ, Dal Maschio M, Baier H. Feeding State Modulates Behavioral Choice and Processing of Prey Stimuli in the Zebrafish Tectum. Neuron. 2016;90:596–608. doi: 10.1016/j.neuron.2016.03.014.

- 35. Westby GWM, Keay KA, Redgrave P, Dean P, Bannister M. Output pathways from the rat superior colliculus mediating approach and avoidance have different sensory properties. Exp Brain Res. 1990;81:626–638. doi: 10.1007/BF02423513.

- 36. Overton P, Dean P, Redgrave P. Detection of visual stimuli in far periphery by rats: possible role of superior colliculus. Exp Brain Res. 1985;59:559–569. doi: 10.1007/BF00261347.
