Abstract

Background

The aim of this study was to evaluate the current state of two publication practices, reporting guideline requirements and clinical trial registration requirements, by analyzing the “Instructions for Authors” of emergency medicine journals.

Methods

We performed a web-based data abstraction from the “Instructions for Authors” of the 27 emergency medicine journals catalogued in the Expanded Science Citation Index of the 2014 Journal Citation Reports and the Google Scholar Metrics h5-index to identify whether each journal required, recommended, or made no mention of the following reporting guidelines: EQUATOR Network, ICMJE, ARRIVE, CARE, CONSORT, STARD, TRIPOD, CHEERS, MOOSE, STROBE, COREQ, SRQR, SQUIRE, PRISMA-P, SPIRIT, PRISMA, and QUOROM. We also extracted whether journals required or recommended trial registration. Raters were blinded to one another’s ratings until data validation was complete. Cross-tabulations and descriptive statistics were calculated using IBM SPSS 22.

Results

Of the 27 emergency medicine journals, 11 (11/27, 40.7%) did not mention a single guideline within their “Instructions for Authors,” while the remaining 16 (16/27, 59.3%) mentioned one or more guidelines. The QUOROM statement and SRQR were not mentioned by any journal, whereas the ICMJE guidelines (18/27, 66.7%) and CONSORT statement (15/27, 55.6%) were mentioned most often. Of the 27 journals, 15 (15/27, 55.6%) did not mention trial or review registration, while the remaining 12 (12/27, 44.4%) mentioned at least one of the two. Trial registration through ClinicalTrials.gov was mentioned by seven (7/27, 25.9%) journals, while registration through a WHO registry was mentioned by four (4/27, 14.8%). Twelve (12/27, 44.4%) journals mentioned trial registration through any registry platform.

Discussion

The aim of this study was to evaluate the current state of two publication practices, reporting guideline requirements and clinical trial registration requirements, by analyzing the “Instructions for Authors” of emergency medicine journals. In this study, CONSORT was the only study-design reporting guideline mentioned by more than half of the journals (15/27, 55.6%). Such inconsistent endorsement may undermine the efforts of other journals to improve the completeness and transparency of research reporting.

Conclusions

Reporting guidelines are infrequently required or recommended by emergency medicine journals. Furthermore, few require clinical trial registration. These two mechanisms may limit bias and should be considered for adoption by journal editors in emergency medicine.

Trial registration

UMIN-CTR, UMIN000022486

Electronic supplementary material

The online version of this article (doi:10.1186/s13049-016-0331-3) contains supplementary material, which is available to authorized users.

Keywords: Reporting guidelines, EQUATOR network, ICMJE, CONSORT, PRISMA, STROBE, STARD, Clinical trial registry, ClinicalTrials.gov, WHO

Background

Reporting guidelines have been developed to help authors improve the quality of research reporting, encourage transparency, and discourage methodological aspects of study design that contribute to bias [1, 2]. Hirst and Altman call the poor reporting that plagues medical research “unethical, wasteful of scarce resources and even potentially harmful” [3] and suggest that guideline adoption may help mitigate these problems. Evidence suggests that guideline adoption improves the quality of research reporting [4, 5], in part by minimizing the omission of critical information in methods sections, inadequate reporting of adverse events, and misleading presentation of results [6]. The EQUATOR (Enhancing the QUAlity and Transparency Of health Research) Network is an international initiative devoted to advancing high-quality reporting of health research studies [7]. To date, EQUATOR has catalogued 308 guidelines for all types of study design [7]. The CONSORT guidelines for randomized controlled trials, PRISMA guidelines for systematic reviews, and STROBE guidelines for observational studies are among the most widely used guidelines in the EQUATOR library.

Clinical trial registration has also been recognized as a means to limit bias [8, 9]. A large body of evidence suggests, for example, that selective reporting of outcomes is a common problem across many areas of medicine [10]. The International Committee of Medical Journal Editors (ICMJE) requires that all journals within its network accept manuscripts for publication only if the trial was registered before the first patient was enrolled [11]. The World Health Organization has also released a position statement supporting trial registration [12], and the United States government enacted the FDA Amendments Act of 2007, which requires such registration. Therefore, journal requirements to register clinical trials, coupled with guidance on the use of reporting guidelines, may lead to improved study design and reporting. Here, we investigate the policies of emergency medicine journals concerning guideline adoption and clinical trial registration to understand the extent to which journals use these mechanisms to improve reporting practices.

Methods

We conducted a review of journal policies and “Instructions for Authors” concerning guideline adherence and trial registration requirements. This study did not meet the regulatory definition of human subject research as defined in 45 CFR 46.102(d) and (f) of the Department of Health and Human Services’ Code of Federal Regulations and, therefore, was not subject to Institutional Review Board oversight. We applied relevant SAMPL guidelines for reporting descriptive statistics [13]. This study is registered on the University Hospital Medical Information Network Clinical Trial Registry (UMIN-CTR, UMIN000022486), and data from this study are publicly available on figshare (https://dx.doi.org/10.6084/m9.figshare.3406477.v6).

We searched two journal indexing databases: the Expanded Science Citation Index of the 2014 Journal Citation Reports [14–23] (Thomson Reuters; New York, NY), accessed on February 17, 2016, and the emergency medicine subcategory of the Google Scholar Metrics h5-index [23–27] (Google Inc; Mountain View, CA), accessed on October 11, 2016. Between the two databases, we selected 27 emergency medicine journals; Additional file 1: Table S1 details journal selection. The second author (NMH) performed web-based searches for each journal and located the “Instructions for Authors” information (on June 4, 2016 and October 11, 2016). The first (MTS) and last (MV) authors together reviewed each journal’s website to determine the types of articles accepted for publication. In many cases, the descriptions were vague and required further clarification, so these authors emailed the Editor-in-Chief of each journal to ask which study designs were considered for publication (systematic reviews/meta-analyses, clinical trials, diagnostic accuracy studies, case reports, epidemiological studies, and animal studies). Following Dillman’s suggestions for improving response rates [28], we emailed non-responding editors three times at one-week intervals. A second round of emails was sent to the Editors-in-Chief to ask about an additional set of study designs (qualitative research, quality improvement studies, economic evaluations, and study protocols). Non-responding editors again received follow-up emails at one-week intervals to keep data collection consistent.

Prior to the study, all authors met to plan the study design and discuss any anticipated problems. Follow-up meetings were held to pilot test the process and address any issues that arose after evaluating a subset of journal guidelines. Adjustments were made as necessary based on consensus of the authors, and a final extraction manual was created to standardize the process.

The second author (NMH) first catalogued the journal title, impact factor, and geographic location (defined as the primary location of the journal’s editorial office as indexed in the Expanded Science Citation Index) for each journal. Following the classification of Meerpohl et al. [22], we classified geographic location as North America (United States and Canada), the United Kingdom, Europe (excluding the United Kingdom), or other countries. Next, this author combined keyword searches with review of the full-text “Instructions for Authors,” Submission Guidelines, Editorial Policies, and other sections relevant to manuscript submission (hereafter collectively referred to as “Instructions for Authors”). Policy statements for the following guidelines were extracted: Consolidated Standards of Reporting Trials (CONSORT), Meta-Analysis of Observational Studies in Epidemiology (MOOSE), Quality of Reporting of Meta-analyses (QUOROM), Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA), Standards for Reporting Diagnostic Accuracy Studies (STARD), Strengthening the Reporting of Observational Studies in Epidemiology (STROBE), Animal Research: Reporting of In Vivo Experiments (ARRIVE), Case Reports (CARE), Consolidated Health Economic Evaluation Reporting Standards (CHEERS), Standards for Reporting Qualitative Research (SRQR), Standards for Quality Improvement Reporting Excellence (SQUIRE), Standard Protocol Items: Recommendations for Interventional Trials (SPIRIT), Consolidated Criteria for Reporting Qualitative Research (COREQ), Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD), Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols (PRISMA-P), and the International Committee of Medical Journal Editors (ICMJE) guidelines.
When provided, information on trial and review registration requirements and on acceptable or recommended trial and review registries was also extracted. Statements in a language other than English were translated using Google Translate (Google Inc; Mountain View, CA).
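The four-way region rule borrowed from Meerpohl et al. can be sketched as a simple lookup. This is a minimal illustration, not the authors’ procedure (classification in the study was done by hand); the function names `classify_region` and `tally_regions` are hypothetical, and the set of non-UK European countries is an assumed, deliberately incomplete subset.

```python
from collections import Counter
from typing import Iterable

# Region categories follow the Meerpohl et al. scheme described in the Methods.
NORTH_AMERICA = {"united states", "canada"}
UNITED_KINGDOM = {"united kingdom"}
# Assumption: an illustrative, incomplete set of non-UK European countries.
EUROPE_EXCL_UK = {
    "belgium", "denmark", "france", "germany", "italy",
    "netherlands", "norway", "spain", "sweden", "switzerland",
}


def classify_region(country: str) -> str:
    """Map an editorial-office country to one of the four region categories."""
    key = country.strip().lower()
    if key in NORTH_AMERICA:
        return "North America"
    if key in UNITED_KINGDOM:
        return "United Kingdom"
    if key in EUROPE_EXCL_UK:
        return "Europe (excluding the United Kingdom)"
    # Anything not matched above falls into the residual category.
    return "Other countries"


def tally_regions(countries: Iterable[str]) -> Counter:
    """Count journals per region from their editorial-office countries."""
    return Counter(classify_region(c) for c in countries)


print(tally_regions(["United States", "Canada", "Germany", "Japan"]))
```

Because the European set is incomplete, a European country missing from it would be counted as “Other countries”; a real application would need the full list.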

Following data extraction, the first (MTS) and third (CCW) authors examined each extracted statement and, using a Google Form, rated whether the journal required, recommended, or failed to mention each reporting guideline. Statements about trial registration were rated the same way. During the pilot session, it was agreed that words or phrases such as “should,” “prefer,” “encourage,” or “in accordance to the recommendation of” would be rated as recommended, while words or phrases such as “must,” “need,” or “manuscripts won’t be considered for publication unless” would be rated as required/compulsory. If the raters (MTS and CCW) could not determine whether a journal required or recommended a particular guideline, the statement was marked as unclear. Each rater was blinded to the other’s ratings. MTS and CCW met after completing the rating process to compare ratings and resolve any disagreements. Cross-tabulations and descriptive statistics were calculated using IBM SPSS 22 (IBM Corporation; Armonk, NY). If a journal did not publish a particular type of study, that journal was excluded from the denominator when computing proportions for the corresponding guideline. For example, if a journal did not publish preclinical animal studies, the ARRIVE guidelines were not relevant to that journal.
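The rating rule agreed in the pilot session can be expressed as a keyword heuristic. The sketch below only illustrates that rule: in the study, statements were rated by two blinded human raters. The function name `rate_statement`, the prefix-matching strategy, and the choice to treat statements containing both kinds of cue (or neither) as unclear are assumptions of this example.

```python
import re
from typing import Optional

# Cue phrases agreed during the pilot session (quoted in the Methods).
REQUIRED_CUES = ("must", "need", "won't be considered for publication unless")
RECOMMENDED_CUES = ("should", "prefer", "encourage", "in accordance to the recommendation of")


def _has_cue(text: str, cue: str) -> bool:
    # Match at the start of a word so inflections such as "preferred" or "encouraged" count.
    return re.search(r"\b" + re.escape(cue), text) is not None


def rate_statement(statement: Optional[str]) -> str:
    """Classify a policy statement as required, recommended, unclear, or not mentioned."""
    if statement is None or not statement.strip():
        return "not mentioned"
    # Normalize case and typographic apostrophes before matching.
    text = statement.lower().replace("\u2019", "'")
    required = any(_has_cue(text, cue) for cue in REQUIRED_CUES)
    recommended = any(_has_cue(text, cue) for cue in RECOMMENDED_CUES)
    if required and not recommended:
        return "required"
    if recommended and not required:
        return "recommended"
    # Both or neither cue type present: defer to a human rater.
    return "unclear"


print(rate_statement("Authors must complete the CONSORT checklist."))
print(rate_statement("We encourage authors to follow PRISMA."))
```

A heuristic like this would misfire on negations such as “authors do not need to,” which is one reason human raters remain necessary.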

Results

Our sample comprised 27 emergency medicine journals. Their impact factors ranged from 0.200 to 4.695 [x̄ = 1.504 (SD = 1.145)]. Editorial offices were located in North America (15/27, 55.6%), Europe (6/27, 22.2%), the United Kingdom (4/27, 14.8%), and other countries (2/27, 7.4%). The appropriate guideline(s) for each study type are listed in Table 1. Following review of the “Instructions for Authors” and email inquiries to editors-in-chief (response rate = 13/27, 48.1%), journals that did not accept a given study type were excluded when computing proportions for the corresponding guideline. The numbers of journals excluded were: CARE statement (7/27, 25.9%), STARD (1/27, 3.7%), TRIPOD statement (1/27, 3.7%), MOOSE checklist (1/27, 3.7%), STROBE checklist (1/27, 3.7%), and SRQR (2/27, 7.4%) (Table 2).
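The denominator rule (journals that do not accept a study type are dropped before computing proportions) and the “k/n, p%” notation used throughout can be reproduced with a small helper. This is a hypothetical sketch, not the SPSS procedure the authors used; `proportion_label` is an assumed name, and rounding relies on Python’s standard one-decimal float formatting.

```python
def proportion_label(count: int, total_journals: int, excluded: int = 0) -> str:
    """Return 'count/n, p%' where n excludes journals not accepting the study type."""
    denominator = total_journals - excluded
    if denominator <= 0:
        raise ValueError("no eligible journals remain after exclusions")
    if not 0 <= count <= denominator:
        raise ValueError("count must lie between 0 and the eligible denominator")
    percent = 100 * count / denominator
    return f"{count}/{denominator}, {percent:.1f}%"


# Figures reported in the Results, rebuilt from raw counts.
print(proportion_label(13, 27))  # editor response rate -> 13/27, 48.1%
print(proportion_label(7, 27))   # journals excluded for CARE -> 7/27, 25.9%
```

For a guideline such as CARE, passing `excluded=7` shrinks the denominator from 27 to 20, so any count of journals mentioning CARE would be reported over 20 rather than 27.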

Table 1.

Reporting guidelines by study type

| Study type | Reporting guideline(s) |
|---|---|
| Animal Research | ARRIVE Guidelines |
| Case Reports | CARE Guidelines |
| Clinical Trials | CONSORT Statement |
| Diagnostic Accuracy Studies | STARD; TRIPOD Statement |
| Economic Evaluations | CHEERS Statement |
| Observational Studies in Epidemiology | MOOSE Statement; STROBE Statement |
| Qualitative Research | COREQ Checklist; SRQR |
| Quality Improvement Studies | SQUIRE Checklist |
| Study Protocols | PRISMA-P Statement; SPIRIT Statement |
| Systematic Reviews & Meta-Analyses | PRISMA Statement; QUOROM Checklist |

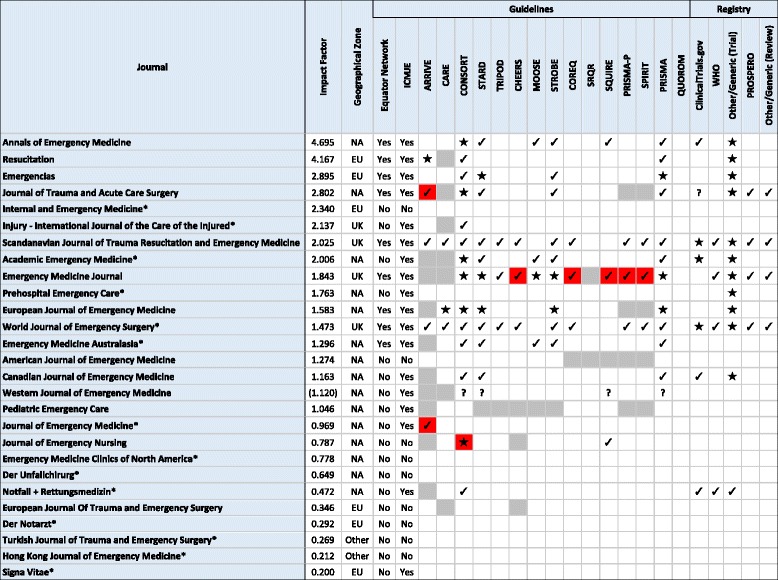

Table 2.

Use of reporting guidelines and study registration by journal

★ = Required/Compulsory, ✔ = Recommended, ? = Unclear, grey boxes = journal does not accept the study design requiring these guidelines, red boxes = journal does not accept the study design yet its “Instructions for Authors” mention the guideline, * = editor-in-chief did not respond to email inquiry. (Impact Factor) = impact factor self-calculated by the journal. All data were extracted on June 4, 2016, except for Journal of Trauma and Acute Care Surgery, Internal and Emergency Medicine, and Western Journal of Emergency Medicine, for which data were extracted on October 27, 2016.

Reporting guidelines

The “Instructions for Authors” of nine (9/27, 33.3%) journals referenced the EQUATOR Network, and those of 18 (18/27, 66.7%) journals referenced the ICMJE guidelines. Of the 27 emergency medicine journals, 11 (11/27, 40.7%) did not mention a single guideline within their “Instructions for Authors,” while the remaining 16 (16/27, 59.3%) mentioned one or more guidelines.

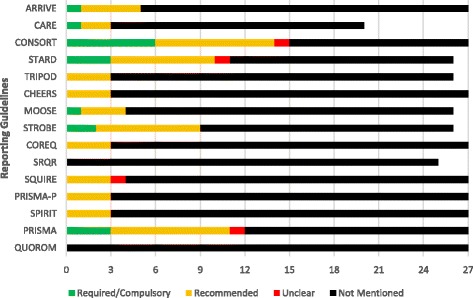

Guideline usage is presented in Table 2. Across reporting guidelines, the CONSORT statement (mentioned by 15/27, 55.6%) was the most frequently required (6/15, 40.0%) and recommended (8/15, 53.3%) guideline in our sample, followed by the PRISMA guidelines (12/27, 44.4%) and STARD guidelines (11/27, 40.7%). The QUOROM statement and SRQR were not mentioned by any journal (Fig. 1).
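Splitting the CONSORT mentions into required and recommended statements is a simple cross-tabulation of rating categories. The sketch below assumes one rating per journal and uses counts consistent with the CONSORT figures reported above: 6 required, 8 recommended, and (by subtraction, since these are the only remaining category) 1 unclear among the 15 mentioning journals, plus 12 non-mentioning journals. `summarize_guideline` is a hypothetical name; the authors performed this step in SPSS.

```python
from collections import Counter
from typing import Dict, List

CATEGORIES = ("required", "recommended", "unclear")


def summarize_guideline(ratings: List[str]) -> Dict[str, str]:
    """Cross-tabulate one guideline's per-journal ratings into 'k/n, p%' labels.

    Mentions are expressed over all eligible journals; each rating category is
    expressed over the journals that mention the guideline.
    """
    if not ratings:
        raise ValueError("at least one eligible journal is required")
    counts = Counter(ratings)
    total = len(ratings)
    mentioned = sum(counts[c] for c in CATEGORIES)
    summary = {"mentioned": f"{mentioned}/{total}, {100 * mentioned / total:.1f}%"}
    if mentioned:
        for category in CATEGORIES:
            share = 100 * counts[category] / mentioned
            summary[category] = f"{counts[category]}/{mentioned}, {share:.1f}%"
    return summary


consort = ["required"] * 6 + ["recommended"] * 8 + ["unclear"] + ["not mentioned"] * 12
for key, label in summarize_guideline(consort).items():
    print(key, label)
```

Using the mentioning journals as the denominator for each category mirrors how the 6/15 and 8/15 figures are reported above.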

Fig. 1.

Frequency of reporting guideline adherence across journals. This figure depicts adherence to each guideline reviewed in this study. Journals that did not accept a given study type were excluded from the denominator for the corresponding guideline.

Each of the following guidelines was mentioned in the “Instructions for Authors” of some emergency medicine journals even though the editor-in-chief of the respective journal reported that the corresponding study type was not accepted. The ARRIVE statement was mentioned by four such journals: one (1/4, 25.0%) required adherence and three (3/4, 75.0%) recommended adherence. The SQUIRE checklist was mentioned by four journals: three (3/4, 75.0%) recommended adherence and one (1/4, 25.0%) had an unclear adherence statement. The CHEERS statement, SPIRIT checklist, COREQ checklist, and PRISMA-P statement were each mentioned by three journals, all of which recommended adherence (Table 2).

Study registration

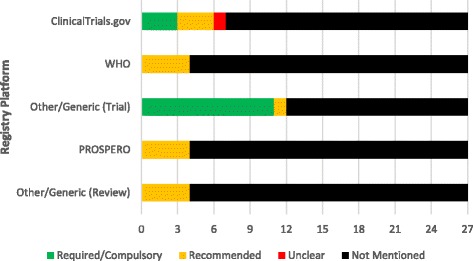

Of the 27 emergency medicine journals, 15 (15/27, 55.6%) did not mention trial or review registration, while the remaining 12 (12/27, 44.4%) mentioned at least one of the two. Trial registration through ClinicalTrials.gov was required by three (3/27, 11.1%) journals and recommended by three (3/27, 11.1%), and one (1/27, 3.7%) journal had an unclear statement. Registration through a World Health Organization (WHO) registry was recommended by four (4/27, 14.8%) journals. Eleven (11/27, 40.7%) journals required trial registration through any trial registry, and one (1/27, 3.7%) journal recommended registration. Review registration through the PROSPERO platform was recommended by four (4/27, 14.8%) journals, as was review registration through any review registry platform (4/27, 14.8%) (Fig. 2).

Fig. 2.

Frequency of registration adherence across journals. This figure depicts adherence to each type of trial and/or review registration.

Discussion

The aim of this study was to evaluate the current state of two publication practices, reporting guideline requirements and clinical trial registration requirements, by analyzing the “Instructions for Authors” of emergency medicine journals. In this study, CONSORT was the only study-design reporting guideline mentioned by more than half of the journals (15/27, 55.6%). Such inconsistent endorsement may undermine the efforts of other journals to improve the completeness and transparency of research reporting. As the 2001 CONSORT revision states, “…inadequate reporting borders on unethical practice when biased results receive false credibility” [29]. Furthermore, it could be argued that researchers and reviewers have a moral duty to report findings as clearly as possible so that readers can accurately judge the validity of the results [29–33]. The scientific process demands transparency; without it, authors are blinding their readers [29, 31, 32]. While blinding participants and researchers is an important aspect of study quality, blinding readers impairs scientific inquiry [29, 31, 32].

In this study, we assessed whether journals continued to mention the QUOROM statement even though it was superseded by the PRISMA statement in 2009 [34]. None of the emergency medicine journals we analyzed mentioned the QUOROM statement, although evidence from high-impact medical journals suggests that a few journals continue to refer to it [18].

In 2006, the EQUATOR Network was developed to improve the value and reliability of the research literature by promoting accurate and transparent reporting [7]. The EQUATOR Network provides access to guidelines including CONSORT, STROBE, PRISMA, CARE, STARD, ARRIVE, and others. With the support of major editorial groups, guidelines such as CONSORT, STROBE, and PRISMA are recognized by high-impact journals [30]. Hopewell et al. [35] reported that between 2000 and 2006, journals that adopted these guidelines showed improved reporting of critical elements. While trial quality has improved across the research field, evidence suggests that journals adopting the CONSORT guidelines have improved at a faster pace than journals that did not [36].

While reporting guidelines are invaluable for achieving accurate, complete, and transparent reporting, trial registration is also a major contributor. In a recent British Medical Journal (BMJ) publication, medical journal editors expressed their firm belief that required trial registration is the single most valuable tool for ensuring unbiased reporting [37]. In 2007, clinical trial registration became a legal requirement for investigators in the United States (US) [Public Law 110–85, Title VIII, also called the FDA Amendments Act (FDAAA) of 2007, Section 801] [38, 39]. However, a significant number of researchers and journal editors are reluctant to fully endorse trial registration [40–42] and to enforce the existing legislation [43, 44]. In an attempt to reduce publication bias, the ICMJE and the CONSORT statement require trial registration for a manuscript to be considered for publication [45, 46]. Despite these requirements and US legislation, one in four published studies is not registered, allowing for potential selective reporting bias [47]. Trial registration therefore remains a problem: recent studies have indicated that many published RCTs are unregistered or retrospectively registered, and that rates of trial registration vary by clinical specialty [48–55]. These practices might improve if journals developed and adhered to policies requiring prospective registration prior to submission. Our study found that most emergency medicine journals do not have such policies.

In some fields of medical research, such as rehabilitation and disability, journals have collaborated to enhance research reporting standards [56]. As of 2014, 28 major rehabilitation and disability journals had formed a group requiring adherence to reporting guidelines, with the aim of improving the quality of research reporting not only in their own journals but across their field as a whole. This collective policy makes it difficult for authors whose manuscripts receive unfavorable reviews for inadequate guideline adherence to simply resubmit to a journal with laxer reporting requirements. It would be reasonable for emergency medicine journal editors to consider forming a similar collaboration, especially if evidence shows that such collaborations improve the quality of research reporting.

Study limitations

Although we attempted to contact editors when the “Instructions for Authors” were unclear, some did not respond to our inquiries (response rate 13/27, 48.1%), so we could not verify or clarify whether these journals published certain types of study designs.

Future research

In future studies, researchers could evaluate the practical aspects of using guidelines from both the authors’ and editors’ perspectives. Here, we discuss the use of these reporting guidelines as a means to mitigate bias and provide more complete reporting of study information. The practical aspects of guideline reporting and monitoring, however, are not well understood, and it is conceivable that active monitoring of guideline adherence is not feasible given limited resources. Another interesting line for future research would be to investigate whether guideline adherence improves quality within other study designs such as systematic reviews. Researchers could investigate differences in quality between journals that require guideline adherence, recommend guideline adherence, and omit information about reporting guidelines.

Conclusion

In conclusion, reporting guidelines are infrequently required or recommended by emergency medicine journals. Furthermore, few require clinical trial registration. These two mechanisms may limit bias and should be considered for adoption by journal editors in emergency medicine. Emergency medicine journals might recommend, rather than mandate, these mechanisms as first steps toward adoption. Additional research is needed to determine the effectiveness of these mechanisms.

Acknowledgements

Not applicable.

Funding

This project was not funded.

Availability of data and material

Data from this study are publicly available on figshare (https://dx.doi.org/10.6084/m9.figshare.3406477.v6).

Authors’ contributions

MTS, NMH, CCW and MV made substantial contributions to the conception and design of the work; the acquisition, analysis, and interpretation of data for the work. MTS, NMH, CCW and MV contributed to drafting the work and revising it critically for important intellectual content. MTS, NMH, CCW and MV approved the final version to be published. MTS, NMH, CCW and MV agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable.

Ethics approval and consent to participate

This study did not meet the regulatory definition of human subject research as defined in 45 CFR 46.102(d) and (f) of the Department of Health and Human Services’ Code of Federal Regulations and, therefore, was not subject to Institutional Review Board oversight.

Abbreviations

- ARRIVE

Animal Research: Reporting of In Vivo Experiments

- BMJ

British Medical Journal

- CARE

CAse REports

- CHEERS

Consolidated Health Economic Evaluation Reporting Standards

- CONSORT

Consolidated Standards of Reporting Trials

- COREQ

Consolidated Criteria for Reporting Qualitative Research

- EQUATOR

Enhancing the QUAlity and Transparency Of health Research

- ICMJE

International Committee of Medical Journal Editors

- MOOSE

Meta-Analysis of Observational Studies in Epidemiology

- PRISMA

Preferred Reporting Items for Systematic Reviews and Meta-Analyses

- PRISMA-P

Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols

- QUOROM

Quality of Reporting of Meta-analyses

- SPIRIT

Standard Protocol Items: Recommendations for Interventional Trials

- SQUIRE

Standards for Quality Improvement Reporting Excellence

- SRQR

Standards for Reporting Qualitative Research

- STARD

Standards for Reporting Diagnostic Accuracy Studies

- STROBE

Strengthening the Reporting of Observational Studies in Epidemiology

- TRIPOD

Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis

- US

United States

Additional file

Journal selection, with exclusions. (DOCX 13 kb)

Contributor Information

Matthew T. Sims, Phone: 469-667-8099, Email: matt.sims@okstate.edu

Nolan M. Henning, Email: nhenning6@gmail.com

C. Cole Wayant, Email: cole.wayant@okstate.edu

Matt Vassar, Email: matt.vassar@okstate.edu

References

- 1. Smith TA, Kulatilake P, Brown LJ, Wigley J, Hameed W, Shantikumar S. Do surgery journals insist on reporting by CONSORT and PRISMA? A follow-up survey of “instructions to authors”. Ann Med Surg. 2015;4:17–21. doi:10.1016/j.amsu.2014.12.003.

- 2. Simera I, Moher D, Hoey J, Schulz KF, Altman DG. The EQUATOR Network and reporting guidelines: Helping to achieve high standards in reporting health research studies. Maturitas. 2009;63:4–6. doi:10.1016/j.maturitas.2009.03.011.

- 3. Hirst A, Altman DG. Are peer reviewers encouraged to use reporting guidelines? A survey of 116 Health Research Journals. PLoS ONE. 2012;7(4):e35621. doi:10.1371/journal.pone.0035621.

- 4. Plint AC, Moher D, Morrison A, Schulz K, Altman DG, Hill C, et al. Does the CONSORT checklist improve the quality of reports of randomised controlled trials? A systematic review. Med J Aust. 2006;185:263–267. doi:10.5694/j.1326-5377.2006.tb00557.x.

- 5. Prady SL, Richmond SJ, Morton VM, Macpherson H. A systematic evaluation of the impact of STRICTA and CONSORT recommendations on quality of reporting for acupuncture trials. PLoS ONE. 2008;3(2):e1577. doi:10.1371/journal.pone.0001577.

- 6. Simera I, Moher D, Hirst A, Hoey J, Schulz KF, Altman DG. Transparent and accurate reporting increases reliability, utility, and impact of your research: reporting guidelines and the EQUATOR Network. BMC Med. 2010;8:24. doi:10.1186/1741-7015-8-24.

- 7. The EQUATOR Network. Enhancing the QUAlity and Transparency Of health Research [Internet]. Oxford: Centre for Statistics in Medicine. Available at http://www.equator-network.org/. Accessed 18 Mar 2015.

- 8. Bonati M, Pandolfini C. Trial registration, the ICMJE statement, and paediatric journals. Arch Dis Child. 2006;91(1):93. doi:10.1136/adc.2005.085530.

- 9. Rivara FP. Registration of Clinical Trials. Arch Pediatr Adolesc Med. 2005;159(7):685. doi:10.1001/archpedi.159.7.685.

- 10.Jones CW, Keil LG, Holland WC, Caughey MC, Platts-Mills TF. Comparison of registered and published outcomes in randomized controlled trials: a systematic review. BMC Med. 2015;13:282. doi:10.1186/s12916-015-0520-3. [DOI] [PMC free article] [PubMed]

- 11.International Committee of Medical Journal Editors (ICMJE). Clinical Trial Registration. Available at http://www.icmje.org/recommendations/browse/publishing-and-editorial-issues/clinical-trial-registration.html. Accessed 18 Mar 2016.

- 12.World Health Organization (WHO); International Clinical Trials Registry Platform (ICTRP). WHO Statement on Public Disclosure of Clinical Trial Results. Available at http://www.who.int/ictrp/results/WHO_Statement_results_reporting_clinical_trials.pdf?ua=1. Accessed on 18 Mar 2016.

- 13.Lang TA, Altman DG. Basic statistical reporting for articles published in biomedical journals: The “Statistical Analyses and Methods in the Published Literature” or The “SAMPL Guidelines.” In: Smart P, Maisonneuve H, Polderman A (eds). Science Editors’ Handbook. Oxford: European Association of Science Editors; 2013. [DOI] [PubMed]

- 14.Onishi A, Furukawa TA. Publication bias is underreported in systematic reviews published in high-impact-factor journals: metaepidemiologic study. J Clin Epidemiol. 2014;67(12):1320–6. doi: 10.1016/j.jclinepi.2014.07.002. [DOI] [PubMed] [Google Scholar]

- 15.Bonnot B, Yavchitz A, Mantz J, Paugam-Burtz C, Boutron I. Selective primary outcome reporting in high-impact journals of anaesthesia and pain. Br J Anaesth. 2016;117(4):542–3. doi: 10.1093/bja/aew280. [DOI] [PubMed] [Google Scholar]

- 16.Helfer B, Prosser A, Samara MT, Geddes JR, Cipriani A, Davis JM, Mavridis D, Salanti G, Leucht S. Recent meta-analyses neglect previous systematic reviews and meta-analyses about the same topic: a systematic examination. BMC Med. 2015;13(1):1. doi: 10.1186/s12916-015-0317-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Uhlig C, Krause H, Koch T, de Abreu MG, Spieth PM. Anesthesia and monitoring in small laboratory mammals used in anesthesiology, respiratory and critical care research: a systematic review on the current reporting in top-10 impact factor ranked journals. PLoS One. 2015;10(8):e0134205. doi: 10.1371/journal.pone.0134205. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Tao KM, Li XQ, Zhou QH, Moher D, Ling CQ, Yu WF. From QUOROM to PRISMA: a survey of high-impact medical Journals’ instructions to authors and a review of systematic reviews in anesthesia literature. PLoS One. 2011;6(11):e27611. doi: 10.1371/journal.pone.0027611. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Tunis AS, McInnes MD, Hanna R, Esmail K. Association of study quality with completeness of reporting: have completeness of reporting and quality of systematic reviews and meta-analyses in major radiology journals changed since publication of the PRISMA statement? Radiology. 2013;269(2):413–26. doi: 10.1148/radiol.13130273. [DOI] [PubMed] [Google Scholar]

- 20.Panic N, Leoncini E, de Belvis G, Ricciardi W, Boccia S. Evaluation of the endorsement of the preferred reporting items for systematic reviews and meta-analysis (PRISMA) statement on the quality of published systematic review and meta-analyses. PLoS One. 2013;8(12):e83138. doi: 10.1371/journal.pone.0083138. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Bigna JJ, Um LN, Nansseu JR. A comparison of quality of abstracts of systematic reviews including meta-analysis of randomized controlled trials in high-impact general medicine journals before and after the publication of PRISMA extension for abstracts: a systematic review and meta-analysis. Syst Rev. 2016;5(1):174. doi: 10.1186/s13643-016-0356-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Meerpohl JJ, Wolff RF, Niemeyer CM, Antes G, von Elm E. Editorial policies of pediatric journals: survey of instructions for authors. Arch Pediatr Adolesc Med. 2010;164(3):268–72. doi: 10.1001/archpediatrics.2009.287. [DOI] [PubMed] [Google Scholar]

- 23.Harzing AWK, van der Wal R. Google Scholar as a new source for citation analysis. Ethics Sci Environ Polit. 2008;8:61–73. doi: 10.3354/esep00076. [DOI] [Google Scholar]

- 24.Delgado-Lopez-Cozar E, Cabezas-Clavijo A. Ranking journals: could Google scholar metrics be an alternative to journal citation reports and Scimago journal rank? Learn Publ. 2013;26(2):101–13. doi: 10.1087/20130206. [DOI] [Google Scholar]

- 25.Delgado E, Repiso R. The impact of scientific journals of communication: comparing Google scholar metrics, web of science and scopus. Comunicar. 2013;21(41):45. doi: 10.3916/C41-2013-04. [DOI] [Google Scholar]

- 26.Truex DP III, Cuellar MJ, Takeda H. Assessing scholarly influence: using the Hirsch indices to reframe the discourse. J Assoc Inf Syst. 2009;10(7):560–594.

- 27.Mingers J, Macri F, Petrovici D. Using the h-index to measure the quality of journals in the field of business and management. Inform Process Manag. 2012;48(2):234–41. doi: 10.1016/j.ipm.2011.03.009.

- 28.Dillman DA, Smyth JD, Christian LM. Internet, Phone, Mail, and Mixed-Mode Surveys: The Tailored Design Method. 2013.

- 29.Moher D, Schulz KF, Altman DG. The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomised trials. Lancet. 2001;357:1191–1194. doi: 10.1016/S0140-6736(00)04337-3.

- 30.Vandenbroucke JP. STREGA, STROBE, STARD, SQUIRE, MOOSE, PRISMA, GNOSIS, TREND, ORION, COREQ, QUOROM, REMARK… CONSORT: for whom does the guideline toll? J Clin Epidemiol. 2009;62:594–596. doi: 10.1016/j.jclinepi.2008.12.003.

- 31.Moher D, Schulz KF, Altman D, CONSORT Group (Consolidated Standards of Reporting Trials). The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomized trials. JAMA. 2001;285:1987–1991. doi: 10.1001/jama.285.15.1987.

- 32.Moher D, Schulz KF, Altman DG, CONSORT Group (Consolidated Standards of Reporting Trials). The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomized trials. Ann Intern Med. 2001;134:657–662. doi: 10.7326/0003-4819-134-8-200104170-00011.

- 33.Moher D. Reporting research results: a moral obligation for all researchers. Can J Anaesth. 2007;54:331–335. doi: 10.1007/BF03022653.

- 34.Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JPA, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. PLoS Med. 2009;6(7):e1000100. doi: 10.1371/journal.pmed.1000100.

- 35.Hopewell S, Dutton S, Yu LM, Chan AW, Altman DG. The quality of reports of randomised trials in 2000 and 2006: comparative study of articles indexed in PubMed. BMJ. 2010;340:c723. doi: 10.1136/bmj.c723.

- 36.Moher D, Jones A, Lepage L, CONSORT Group (Consolidated Standards for Reporting of Trials). Use of the CONSORT statement and quality of reports of randomized trials: a comparative before-and-after evaluation. JAMA. 2001;285:1992–1995. doi: 10.1001/jama.285.15.1992.

- 37.Weber WEJ, Merino JG, Loder E. Trial registration 10 years on. BMJ. 2015;351:h3572. doi: 10.1136/bmj.h3572.

- 38.National Institutes of Health (NIH); National Institute of Neurological Disorders and Stroke. ClinicalTrials.gov Registration and Reporting. Modified on September 18, 2015. Available at http://www.ninds.nih.gov/research/clinical_research/basics/clinicaltrials_required_registration.htm. Accessed 26 May 2016.

- 39.ClinicalTrials.gov. FDAAA 801 Requirements. Modified on November 1, 2015. Available at https://clinicaltrials.gov/ct2/manage-recs/fdaaa. Accessed 26 May 2016.

- 40.Malicki M, Marusic A, OPEN (to Overcome failure to Publish nEgative fiNdings) Consortium. Is there a solution to publication bias? Researchers call for changes in dissemination of clinical research results. J Clin Epidemiol. 2014;67:1103–1110. doi: 10.1016/j.jclinepi.2014.06.002.

- 41.Reveiz L, Krleza-Jeric K, Chan AW, de Aguiar S. Do trialists endorse clinical trial registration? Survey of a PubMed sample. Trials. 2007;8:30. doi: 10.1186/1745-6215-8-30.

- 42.Wager E, Williams P, Project Overcome failure to Publish nEgative fiNdings Consortium. “Hardly worth the effort”? Medical journals’ policies and their editors’ and publishers’ views on trial registration and publication bias: quantitative and qualitative study. BMJ. 2013;347:f5248.

- 43.Jones CW, Handler L, Crowell KE, Keil LG, Weaver MA, Platts-Mills TF. Non-publication of large randomized clinical trials: cross sectional analysis. BMJ. 2013;347:f6104. doi: 10.1136/bmj.f6104.

- 44.Prayle AP, Hurley MN, Smyth AR. Compliance with mandatory reporting of clinical trial results on ClinicalTrials.gov: cross sectional study. BMJ. 2012;344:d7373.

- 45.De Angelis C, Drazen JM, Frizelle FA, et al. International Committee of Medical Journal Editors. Clinical trial registration: a statement from the International Committee of Medical Journal Editors. N Engl J Med. 2004;351:1250–1251. doi: 10.1056/NEJMe048225.

- 46.Schulz KF, Altman DG, Moher D, CONSORT Group. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. PLoS Med. 2010;7(3):e1000251.

- 47.Mathieu S, Boutron I, Moher D, Altman DG, Ravaud P. Comparison of registered and published primary outcomes in randomized controlled trials. JAMA. 2009;302:977–984. doi: 10.1001/jama.2009.1242.

- 48.Van de Wetering FT, Scholten RJPM, Haring T, Clarke M, Hooft L. Trial registration numbers are underreported in biomedical publications. PLoS One. 2012;7(11):e49599.

- 49.Hardt JLS, Metzendorf MI, Meerpohl JJ. Surgical trials and trial registers: a cross-sectional study of randomized controlled trials published in journals requiring trial registration in the author instructions. Trials. 2013;14:407. doi: 10.1186/1745-6215-14-407.

- 50.Killeen S, Souralious P, Hunter IA, Hartley JE, Grady HL. Registration rates, adequacy of registration, and a comparison of registered and published primary outcomes in randomized controlled trials published in surgery journals. Ann Surg. 2014;259(1):193–6. doi: 10.1097/SLA.0b013e318299d00b.

- 51.Hooft L, Korevaar DA, Molenaar N, Bossuyt PM, Scholten RJ. Endorsement of ICMJE’s clinical trial registration policy: A survey among journal editors. Neth J Med. 2014;72(7):349–55.

- 52.Anand V, Scales DC, Parshuram CS, Kavanagh BP. Registration and design alterations of clinical trials in critical care: A cross-sectional observational study. Intensive Care Med. 2014;40(5):700–22. doi: 10.1007/s00134-014-3250-7.

- 53.Kunath F, Grobe HR, Keck B, Rucker G, Wullich B, Antes G, Meerpohl JJ. Do urology journals enforce trial registration? A cross-sectional study of published trials. BMJ Open. 2011;1(2):e000430.

- 54.Mann E, Nguyen N, Fleischer S, Meyer G. Compliance with trial registration in five core journals of clinical geriatrics: A survey of original publications on randomised controlled trials from 2008 to 2012. Age Ageing. 2014;43(6):872–6. doi: 10.1093/ageing/afu086.

- 55.Huser V, Cimino JJ. Evaluating adherence to the International Committee of Medical Journal Editors’ policy of mandatory, timely clinical trial registration. J Am Med Inform Assoc. 2013;20:e169–e174. doi: 10.1136/amiajnl-2012-001501.

- 56.The EQUATOR Network. Collaborative initiative involving 28 rehabilitation and disability journals. 2016. Available at http://www.equator-network.org/2014/04/09/collaborative-initiative-involving-28-rehabilitation-and-disability-journals/. Accessed 26 July 2016.