Abstract

The centrality of quality as a strategy to achieve impact within the U.S. President’s Emergency Plan for AIDS Relief (PEPFAR) has been widely recognized. However, monitoring program quality remains a challenge for many HIV programs, particularly those in resource-limited settings, where human resource constraints and weaker health systems can pose formidable barriers to data collection and interpretation. We describe the practicalities of monitoring quality at scale within a very large multicountry PEPFAR-funded program, based largely at health facilities. The key elements include the following: supporting national programs and strategies; developing a conceptual framework and programmatic model to define quality and guide the provision of high-quality services; attending to program context, as well as program outcomes; leveraging existing and routinely collected data whenever possible; developing additional indicators for judicious use in targeted, in-depth assessments; providing hands-on support for data collection and use at the facility, sub-national, and national levels; utilizing web-based databases for data entry, analysis, and dissemination; and multidisciplinary support from a large team of clinical and strategic information advisors.

Keywords: HIV, informatics, Kenya, monitoring and evaluation, PEPFAR, quality, quality assurance, quality management, resource-limited settings

Introduction

The quality of health services drives both utilization and outcomes, and is an essential component of effective and efficient health systems [1]. In the context of HIV programs, high-quality treatment of people living with HIV (PLWH) leads not only to decreased morbidity and mortality but also to decreased transmission of HIV to others [2]; achieving broad coverage with high-quality HIV services is a key element of epidemic control.

The importance of enhancing program quality to achieve impact against HIV has been emphasized by diverse national and international agencies, and an increasing number of countries now have national HIV quality strategies, including Tanzania, Kenya, and Haiti – all profiled in this Supplement. Quality assurance, including defining quality, setting standards, and developing performance indicators, is a critical step towards identifying and addressing quality deficits. Implementing quality assurance remains an important challenge for HIV programs, particularly those in resource-limited settings, where human resource constraints and weaker health systems can pose formidable barriers to data collection and interpretation [3]. Quality improvement is a second key component of quality management, and building site-level and program-level capacity to identify quality barriers and to rapidly test interventions is a high priority in many countries.

We describe the practicalities of monitoring quality at scale within a large multicountry program funded by the U.S. President’s Emergency Plan for AIDS Relief (PEPFAR), and describe a country-specific case study from Kenya. ICAP at Columbia University has been a PEPFAR implementing partner since 2004, and has supported more than 4000 health facilities in 15 countries to implement HIV prevention, care, and treatment services. Over the past decade, ICAP has supported the enrollment of 2.3 million adults and children in HIV care, and the initiation of 1.4 million people on antiretroviral therapy (ART). Working hand in hand with national governments and local partners, ICAP supports a wide range of complex HIV and health programs, including those targeting HIV prevention, HIV diagnosis, prevention of mother-to-child transmission (PMTCT), care and treatment of adults, adolescents and children, HIV services for key populations [including men who have sex with men (MSM), people who inject drugs, sex workers, prisoners, and migrant and displaced populations], tuberculosis (TB), malaria, noncommunicable diseases, reproductive health, and maternal/child health. In all of its programs, ICAP works to strengthen health systems, to build capacity of healthcare workers and program managers, and to support national policies, strategies, and guidelines.

Drawing on its years of experience supporting the design, implementation, evaluation, and analysis of HIV programs in low-resource settings, as well as resources from national partners, PEPFAR, and multilateral agencies, ICAP has developed an approach to quality management that includes both quality assurance and quality improvement. Quality assurance – the focus of this article – starts with ICAP’s ‘Model of Care’, which is a quality framework characterized by the delivery of comprehensive, integrated, family-focused services along the HIV prevention and care continuum for adults, adolescents, children, and pregnant women. This model defines a comprehensive ‘Package of Care’ for PLWH, encompassing the full continuum of HIV care, including: linkage to health services for people testing positive for HIV; support for retention in care; provision of appropriate clinical and laboratory monitoring; prompt initiation of ART for eligible patients; ongoing adherence support; and services to enhance psychosocial well-being and secondary prevention of HIV [4].

Within this comprehensive package, ICAP highlights 10 ‘Core Interventions’ – domains that should be available at all health facilities (Table 1). These evidence-based interventions were selected on the basis of their impact on incidence, morbidity and mortality, and each includes multiple components. ICAP programs are not limited to the Core Interventions; additional services are provided as needed within specific countries and contexts. For example, country programs are responsive to national epidemics, providing appropriate interventions targeted at local key populations, such as MSM and/or people who inject drugs.

Table 1. ICAP’s Core Interventions.

| Core Intervention | Components |
|---|---|
| HIV testing and counseling | Includes provider-initiated testing and counseling (PITC) and testing for partners and family members |
| Linkage to HIV care for those testing positive for HIV | Linkage from all HIV testing entry points |
| Cotrimoxazole (CTX) prophylaxis | CTX prophylaxis for all eligible people living with HIV (PLWH) |
| Tuberculosis screening | Regular screening with a symptom-based checklist; additional evaluation and treatment for those who screen positive; isoniazid preventive therapy (IPT) for those who screen negative |
| Assessment of ART eligibility, with prompt ART initiation, if eligible | WHO staging at every visit, including assessment and treatment of opportunistic illnesses; monitoring of child growth and development; CD4+ testing at enrollment in care and every 6 months thereafter |
| Prevention of mother-to-child transmission of HIV | Family planning services to prevent unintended pregnancy; ART for all pregnant and breastfeeding women with HIV; prompt provision of antiretroviral and other prophylaxis at birth; ongoing care for HIV-infected mothers and HIV-exposed infants |
| Medication monitoring and management | Monitoring of medication adherence and provision of adherence support; monitoring for and management of medication-related side effects and toxicity; monitoring for treatment response and treatment failure, with regimen adjustment, as needed |
| Monitoring and supporting retention in HIV care | Appointment reminders; monitoring of appointment attendance; community-based patient groups for ART refills; outreach and tracking of those who miss appointments and/or are lost to follow-up |
| Access to laboratory testing for HIV diagnosis, determining ART eligibility, monitoring treatment response, and diagnosing TB | HIV diagnosis, including rapid HIV testing and dried blood spot-based HIV-1 DNA PCR for early infant diagnosis; CD4+ T-cell testing for assessing ART eligibility and monitoring treatment response; routine or targeted viral load monitoring to detect treatment failure, if available; microscopic or molecular TB diagnosis |
| Psychosocial support | Patient education; counseling to support disclosure and to manage stigma and discrimination; support groups; financial support and microfinance projects |

ART, antiretroviral therapy; TB, tuberculosis.

Once Core Interventions were defined, ICAP developed a strategy to routinely measure and monitor program quality across all ICAP-supported health facilities, mapping indicators to Core Interventions and their key components while minimizing the collection of extraneous data. This approach to quality assurance, which leverages existing and routinely collected data as much as possible, is described below.

Methods

ICAP assesses the extent to which the Core Interventions are implemented across 15 countries and thousands of health facilities, utilizing routinely collected PEPFAR indicators that allow comparisons over time and across countries. In addition, ICAP developed program-specific indicators [‘Standards of Care’ (SOC)] for targeted, in-depth assessment of quality, and periodic health facility surveys (‘PFaCTS’, the Program and Facilities Characteristics Tracking System) to describe and monitor facility-level services, infrastructure, context, and capacity. Whenever possible, ICAP uses existing national tools and systems to monitor program quality.

Routinely collected PEPFAR indicators

PEPFAR indicators are designed by the US Office of the Global AIDS Coordinator (OGAC) to be efficient and practical, and to meet the U.S. government’s (USG’s) minimum needs in demonstrating progress towards its key legislative goals: treatment of six million people; 80% coverage of HIV testing and counseling among pregnant women; 85% coverage of antiretroviral prophylaxis for HIV-positive pregnant women; care for 12 million people; and professional training for 140 000 healthcare workers (www.pepfar.gov). As a PEPFAR-implementing partner, ICAP collects a standard set of programmatic indicators for all of its programs and health facilities, and reports these quarterly to USG agency country offices.

PEPFAR indicators have evolved over time; the latest set includes 61 major indicators, many of which are also disaggregated by patient age and sex [5]. In addition to submitting these indicators to USG agency country offices, each ICAP country team submits 30 indicators – a subset of the 61 major indicators – to ICAP headquarters on a quarterly basis via a web-based reporting and dissemination tool called the ICAP Unified Reporting System (URS) (see below). Twenty-one of these 30 indicators map to ICAP’s Core Interventions and their key components, and ICAP teams use these 21 ‘Priority Indicators’ for quality assessment purposes. ICAP established targets for these Priority Indicators against which all country programs assess their achievements (Table 2).

Table 2. Sample Priority Indicators and ICAP minimum targets.

| Priority Indicator and minimum target |
|---|
| 95% of patients in HIV care and treatment receive cotrimoxazole (CTX) prophylaxis |
| 90% of patients still alive and on treatment 12 months after initiating ART |
| 95% of patients in HIV care and treatment screened for TB at their last clinical visit |
| 95% of HIV-positive pregnant women receive multidrug antiretrovirals (including ART) in ANC to reduce MTCT |

ANC, antenatal care; ART, antiretroviral therapy; MTCT, mother-to-child transmission; TB, tuberculosis.
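As an illustration of how performance might be checked against these minimum targets, the following is a minimal Python sketch. The target values come from Table 2; the indicator keys, the function names, and the facility counts are hypothetical and do not represent the URS implementation.

```python
# Minimum targets (percent) taken from Table 2; the short keys are illustrative names.
MIN_TARGETS = {
    "ctx_prophylaxis": 95.0,
    "retention_12m_art": 90.0,
    "tb_screening": 95.0,
    "pmtct_multidrug_arv": 95.0,
}


def percent(numerator, denominator):
    """Return coverage as a percentage, or None when the denominator is zero."""
    if denominator == 0:
        return None
    return 100.0 * numerator / denominator


def below_target(facility_counts, targets=MIN_TARGETS):
    """Return indicators whose coverage falls below the minimum target.

    facility_counts maps indicator name -> (numerator, denominator).
    """
    shortfalls = {}
    for name, target in targets.items():
        if name not in facility_counts:
            continue  # indicator not reported by this facility
        value = percent(*facility_counts[name])
        if value is not None and value < target:
            shortfalls[name] = round(value, 1)
    return shortfalls


# Hypothetical quarterly counts for one facility
example = {
    "ctx_prophylaxis": (480, 500),    # 96.0%
    "retention_12m_art": (170, 200),  # 85.0%
    "tb_screening": (450, 500),       # 90.0%
}
print(below_target(example))  # {'retention_12m_art': 85.0, 'tb_screening': 90.0}
```

Keeping numerators and denominators separate, rather than storing percentages, allows the same counts to be re-aggregated at sub-national or country level.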

Each ICAP-supported health facility submits an average of 200 data elements [interquartile range (IQR) 28–227] for the 30 indicators, including data stratified by age, sex, and point of service. As a result, approximately 700 000 data points from nearly 3400 health facilities must be submitted, verified, collated, and summarized every quarter. Accurate and timely collection of these data to facilitate effective monitoring of program quality requires intense and ongoing work by a large team of approximately 180 data collectors and informatics and strategic information experts. The median size of the ICAP country office Strategic Information teams is 13 people (IQR 7–20), supported by a headquarters team of 15 staff members with expertise in monitoring and evaluation (M&E), epidemiology, data analysis, and surveillance.

Data collection

To collect and manage this large volume of data every quarter, ICAP provides technical assistance and hands-on support to health facilities as they implement the national standardized monthly summary forms developed by Ministries of Health (MoH). ICAP clinical and M&E advisors provide routine supportive supervision at monthly to quarterly intervals, helping health facility staff use these forms to report on performance each month. Data from standardized registers, appointment log books, and patient treatment cards are summarized on these forms and sent to the district and provincial health offices for aggregation, as illustrated in the case study from Kenya below.

In several countries, including Kenya, the MoH uses an open source database program called the District Health Information Software (DHIS2) (https://www.dhis2.org/) to aggregate data from facilities at district, province, and national levels. In six country programs, ICAP uses DHIS2 to store performance data for supported facilities, so as to harmonize with MoH DHIS2 databases. ICAP also actively supports MoH to adopt and implement national database platforms to streamline data management and promote data use by program managers. For example, ICAP is currently supporting the MoH in Lesotho to implement an ambitious plan to build a national data warehouse for all health data in a web-based DHIS2 platform. This initiative will harmonize data currently collected via disparate legacy systems that are not linked or interoperable.

To ensure that assessment of program quality is based on high-quality data, ICAP country offices also conduct regular data quality assurance (DQA) exercises using a standard approach, selecting a random sample of patient files, and comparing completeness and consistency across various data sources, including registers, monthly summary forms, and facility-based or ICAP country databases. Each country sets targets for completeness and accuracy, and monitors facility performance on data quality over time. Data quality generally improves over time as facilities receive continued, on-site mentoring from ICAP advisors and gain experience in completing and reviewing data.
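The DQA steps described above (random sampling of patient files, then comparison across data sources) can be sketched as follows. This is a minimal illustration under stated assumptions: the record layout, field names, and the definitions of completeness and consistency used here are hypothetical, and each country's actual DQA tools and targets may differ.

```python
import random


def sample_patient_ids(patient_ids, sample_size, seed=None):
    """Draw a simple random sample of patient IDs without replacement."""
    rng = random.Random(seed)
    ids = sorted(patient_ids)
    return rng.sample(ids, min(sample_size, len(ids)))


def dqa_summary(sampled_ids, patient_file, register, fields):
    """Compare sampled patient files against the register, field by field.

    patient_file and register map patient ID -> {field: value}.
    Completeness: share of expected fields that are filled in the register.
    Consistency: share of filled register fields that match the patient file.
    """
    expected = filled = matching = 0
    for pid in sampled_ids:
        source = patient_file.get(pid, {})
        entry = register.get(pid, {})
        for field in fields:
            expected += 1
            value = entry.get(field)
            if value in (None, ""):
                continue  # missing in the register
            filled += 1
            if value == source.get(field):
                matching += 1
    completeness = 100.0 * filled / expected if expected else None
    consistency = 100.0 * matching / filled if filled else None
    return completeness, consistency


# Hypothetical records for two sampled patients
patient_file = {
    "A1": {"art_start": "2013-02-01", "ctx": "yes"},
    "A2": {"art_start": "2013-05-10", "ctx": "yes"},
}
register = {
    "A1": {"art_start": "2013-02-01", "ctx": "yes"},
    "A2": {"art_start": "2013-06-10", "ctx": ""},
}
completeness, consistency = dqa_summary(
    ["A1", "A2"], patient_file, register, ["art_start", "ctx"]
)
print(round(completeness, 1), round(consistency, 1))  # 75.0 66.7
```

The same comparison could be repeated for each pair of sources (for example, register versus monthly summary form) to locate where in the reporting chain discrepancies arise.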

Unified reporting system

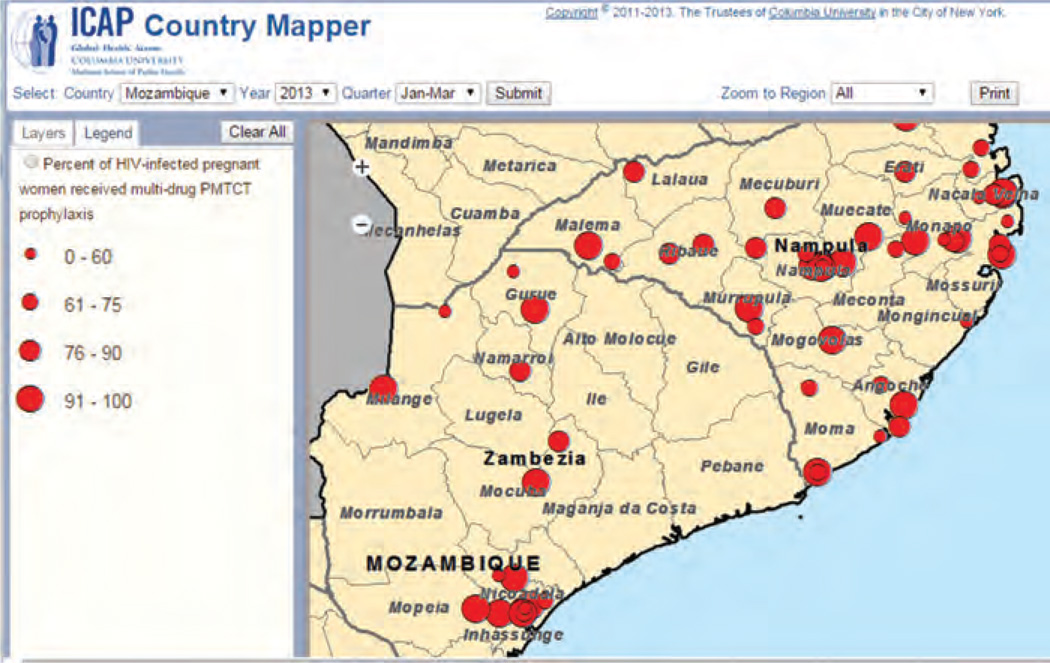

In 2005, ICAP developed a database platform called the Unified Reporting System (URS) to collect, archive, and disseminate aggregate program data. The URS is designed to help keep track of sites, indicators, and achievements by different funding mechanisms – a functionality critical to effectively respond to funder requirements. The URS is fully interoperable with DHIS and other databases used by ICAP country programs, whether they are developed in house or by host governments, allowing smooth data exchange. Automated consistency checks flag potential errors, ensuring that high-quality data are submitted. The URS also provides ‘real-time’ data feedback in graphical and tabular formats via dashboards that display data at the country, sub-national, and health-facility levels. Dashboards can be organized by programmatic areas and priority indicators, and can be stratified by time and geographic regions. It also has an on-line mapper that allows users to view data spatially and create user-defined maps. Figure 1 shows the URS mapper showing performance of a specific Priority Indicator in two provinces of Mozambique. Coverage of multidrug regimens for PMTCT of HIV amongst HIV-positive pregnant women is spatially represented, with each dot representing a health facility, and the size of the dot representing performance for the quarter. ICAP field staff use the URS to identify and track quality challenges, as well as to access aggregate data and to generate reports.

Fig. 1. URS mapper showing performance of a specific Priority Indicator in two provinces of Mozambique.

URS, Unified Reporting System.
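The article does not specify which automated consistency checks the URS applies. As a purely illustrative sketch, two checks commonly applied to aggregate program data are shown below: disaggregated counts should sum to the reported total, and a numerator should not exceed its denominator. The report layout and function name are assumptions, not the URS design.

```python
def consistency_errors(report):
    """Return messages describing internally inconsistent values.

    report is a dict with a 'total' entry and optional 'by_sex', 'by_age',
    'numerator', and 'denominator' entries (a hypothetical layout).
    """
    errors = []
    total = report["total"]
    for key in ("by_sex", "by_age"):
        parts = report.get(key)
        if parts is None:
            continue  # this disaggregation was not reported
        subtotal = sum(parts.values())
        if subtotal != total:
            errors.append(f"{key} sums to {subtotal}, expected {total}")
    numerator = report.get("numerator")
    denominator = report.get("denominator")
    if numerator is not None and denominator is not None and numerator > denominator:
        errors.append(f"numerator {numerator} exceeds denominator {denominator}")
    return errors


# Hypothetical submission with one disaggregation error
submission = {
    "total": 120,
    "by_sex": {"female": 70, "male": 50},
    "by_age": {"<15": 20, "15+": 95},
    "numerator": 110,
    "denominator": 120,
}
print(consistency_errors(submission))  # ['by_age sums to 115, expected 120']
```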

Standards of care

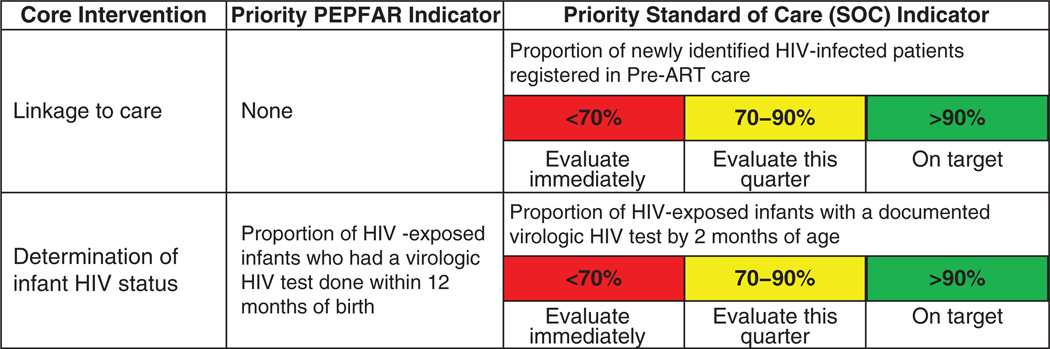

Although the 21 Priority Indicators are used to track program quality, some Core Interventions have no corresponding PEPFAR indicators (e.g., linkage to care), and some may require a more granular evaluation of facility performance (e.g. determination of infants’ HIV status). In these cases, ICAP has developed ‘SOC’ indicators, which combine additional indicators with performance standards. There are 18 priority SOCs. Unlike the Priority Indicators, SOCs often require manual data collection from patient charts and are generally collected from a small subset of patients at selected health facilities. Figure 2 shows two Core Interventions, with corresponding priority PEPFAR indicators and priority SOC indicators. ICAP color-codes performance on SOC indicators for easy interpretation; performance that falls into a red zone requires immediate attention, whereas ‘yellow’ indicators can be addressed with slightly less urgency, and green zone performance corresponds to high quality of care.

Fig. 2. Examples of Priority Indicators and Standards of Care Indicators for selected core interventions.
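The color-coding of SOC performance described above can be expressed as a simple classification rule. The sketch below is illustrative only: the article does not report the cut-offs that separate the red, yellow, and green zones, so the threshold values used here are placeholders.

```python
def soc_zone(percent_meeting_standard, red_below=70.0, green_from=90.0):
    """Classify SOC performance into a color zone.

    The default cut-offs are placeholders, not ICAP's actual thresholds.
    """
    if not 0.0 <= percent_meeting_standard <= 100.0:
        raise ValueError("performance must be a percentage between 0 and 100")
    if percent_meeting_standard < red_below:
        return "red"     # requires immediate attention
    if percent_meeting_standard < green_from:
        return "yellow"  # can be addressed with slightly less urgency
    return "green"       # corresponds to high quality of care


# Hypothetical SOC results for one facility (percent of sampled patients meeting the standard)
results = {"linkage_to_care": 62.0, "infant_hiv_status_determined": 84.0, "ctx_prescribed": 97.0}
for indicator, value in results.items():
    print(indicator, soc_zone(value))
```

Passing the cut-offs as parameters allows each standard to carry its own thresholds if a program defines them separately.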

ICAP country teams are encouraged to select Core Interventions that require more attention due to MoH priorities and/or performance challenges, and to assess relevant SOC indicators as needed. Decisions regarding which SOC indicators to prioritize are made judiciously, based on context and national priorities, recognizing the additional effort required to measure program performance above and beyond routinely collected PEPFAR indicators. The concept of targeted, in-depth assessment of quality using a standardized approach has been well received by the MoH with whom ICAP collaborates. In Mozambique, ICAP’s SOC work substantially contributed to the development of a ‘National Quality Improvement Initiative’ (Iniciativa de Melhoria de Qualidade) developed by the MoH and partners [6].

Standards of Care are measured manually during supportive supervision visits, by reviewing facility-based records (patient cards and/or registers) for a sample (e.g. 10%) of eligible patients. ICAP Clinical and M&E Advisors work closely with facility-level providers to collect and analyze data, and to develop action plans to address deficiencies. Standard operating procedures, as well as data collection tools and facility feedback forms, have been developed to streamline this process. SOC measurement, although only assessed on subsets of patients, is useful for identifying gaps in the quality of care when relevant routinely collected data are not available, and offers an opportunity to engage clinic providers in the quality assurance process and provide immediate feedback on program performance.

Facility surveys (PFaCTS)

While routine indicators, Priority Indicators, and SOC indicators describe program performance, they provide minimal information about the context in which ICAP-supported programs operate. As context can impact performance, it is critical to collect this information regularly. Often, contextual information helps to explain poor performance and to focus activities to address gaps. To this end, ICAP conducts periodic facility surveys at all supported HIV care and treatment facilities and laboratories. The Program and Facilities Characteristics Tracking System (PFaCTS) assessment collects information on the facility type and context, staffing structure, data systems, available services, and tests. Survey results are used to assess whether the Core Interventions are available at ICAP-supported health facilities, and whether there are any major gaps. For example, the facility survey tool can track power outages and stock-outs that could disrupt services, as well as the availability of CD4+ T-cell testing to assess ART eligibility and monitor treatment response, family planning services to prevent unintended pregnancies in women with HIV infection, psychosocial support services, and isoniazid preventive therapy (IPT). Importantly, PFaCTS assesses availability of interventions, but does not measure the degree of coverage among eligible patients.

Aggregation, interpretation, and use of data to support quality

ICAP uses several different approaches and platforms to communicate outcomes and priorities at headquarters, country offices, sub-national offices, and health facilities. At each level, ICAP staff critically examine routine, priority, and SOC indicators to identify quality challenges and to inform corrective action when needed. To reduce manual work as much as possible, the URS as well as the country databases contain online and dynamic dashboards and data query modules that help track progress towards targets using data visualizations, as described above. The dashboards enable ICAP staff to drill down from country to sub-national to facility levels and to compare trends across these units and over time, so that users can visually identify areas that are falling short of targets.

ICAP Clinical and M&E Advisors share facility-level data with facility staff on a regular basis. Quarterly data are reviewed during supportive supervision visits, and wall charts showing performance against targets are created and displayed at health facilities to facilitate discussion and strategic planning. Regular data review meetings are also held between Clinical and M&E teams to inform supportive supervision strategies.

Results

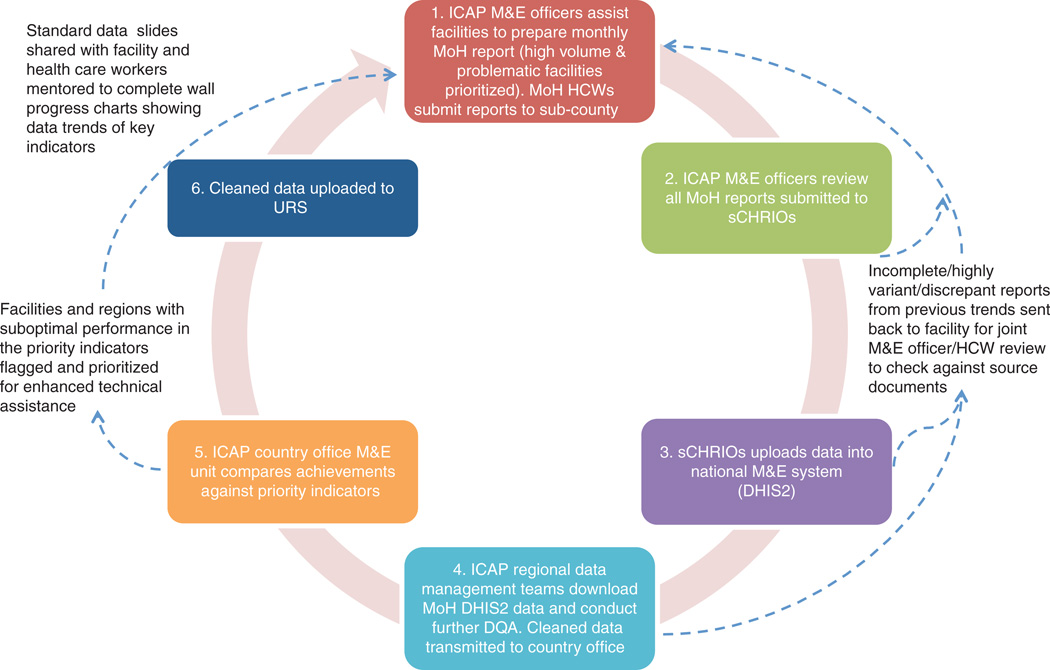

A case study from Kenya illustrates the results of this approach to quality assurance. In 2006, ICAP initiated direct support for HIV care and treatment services at four health facilities in Kenya. Over the ensuing years, ICAP in Kenya has expanded its support to 398 health facilities offering HIV prevention, care, and treatment, TB diagnosis and treatment, and HIV testing and counseling services, as well as to an additional 755 facilities offering PMTCT services. These facilities include referral hospitals, county hospitals, and sub-district hospitals, spread over a geographic area of roughly 42 929 km², across five administrative counties. As of September 2014, 787 470 clients had cumulatively been tested for HIV; 277 711 had enrolled in HIV care; and 150 293 had initiated ART. ICAP in Kenya supports the ‘Three Ones’ principle – one national HIV action framework, one national AIDS coordinating authority, and one national M&E system – and provides technical assistance and hands-on support for the national HIV program database using DHIS2. Figure 3 illustrates the support provided for routine data collection by ICAP in Kenya.

Fig. 3. Support for Routine Data Collection at ICAP in Kenya. HCWs, Health care workers.

ICAP M&E officers assist facilities to prepare monthly MoH reports, which are due by the fifth day of each month. As each M&E officer supports 13–21 facilities, and it takes between 20 min and 4 h to travel from facility to facility on rural roads, it is not possible to be physically present at each facility to assist staff in preparing all the required reports. Instead, ICAP M&E officers prioritize visits to high-volume facilities with a history of data quality challenges to assist them in preparing the MoH reports. By the second week of the month, the M&E officers move on to the sub-county health offices where they obtain draft facility-level MoH data from sub-county health records and information officers.

M&E officers review the reports from their assigned health facilities and conduct a data variance analysis. Here, facilities with notable variations in key indicators (greater than ±10% change from previous report) are flagged and prioritized for more in-depth DQA. During these in-depth DQAs, M&E officers return to the targeted facilities and re-tally the data together with the facility staff. Observed discrepancies are immediately corrected, and the revised MoH report is shared with the sub-county health records and information officers (sCHRIOs). All completed and verified facility-level MoH monthly reports without discrepancies are keyed into the national DHIS2 database by the 15th of each month, and are available to all stakeholders countrywide.
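The data variance analysis described above can be sketched in a few lines of Python. The ±10% threshold comes from the text; the indicator names, the counts, and the handling of a previous value of zero are assumptions made for illustration.

```python
def flag_variances(previous, current, threshold_pct=10.0):
    """Return indicators whose value changed by more than threshold_pct.

    previous and current map indicator name -> reported count.
    Flagged values are the percent change from the previous report.
    """
    flagged = {}
    for name, new_value in current.items():
        old_value = previous.get(name)
        if old_value is None:
            continue  # no earlier report to compare against
        if old_value == 0:
            if new_value != 0:
                flagged[name] = None  # change from zero: percentage undefined
            continue
        change = 100.0 * (new_value - old_value) / old_value
        if abs(change) > threshold_pct:
            flagged[name] = round(change, 1)
    return flagged


# Hypothetical monthly reports for one facility
last_month = {"currently_on_art": 400, "newly_enrolled": 50, "tb_screened": 380}
this_month = {"currently_on_art": 410, "newly_enrolled": 62, "tb_screened": 330}
print(flag_variances(last_month, this_month))
# {'newly_enrolled': 24.0, 'tb_screened': -13.2}
```

A facility with any flagged indicator would then be prioritized for the in-depth DQA visit. Note that a percent-change rule is sensitive at low volumes, where a small absolute change can exceed the threshold.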

After the 15th of each month, ICAP regional data management teams download the required MoH data from DHIS2 into the regional ICAP aggregate database. Here, further checks on data trends are conducted to ensure that reports are consistent across time, and that no obvious data transcription errors occurred as the data were transferred from paper to electronic records at the sub-county level. Thereafter, data are transmitted to the ICAP Kenya central office, where the M&E Unit checks performance against the ICAP Priority Indicators. Facilities that have underperformed are flagged and discussed with the ICAP Clinical Advisors, enabling them to better target support. Finally, clean data are uploaded to the URS.

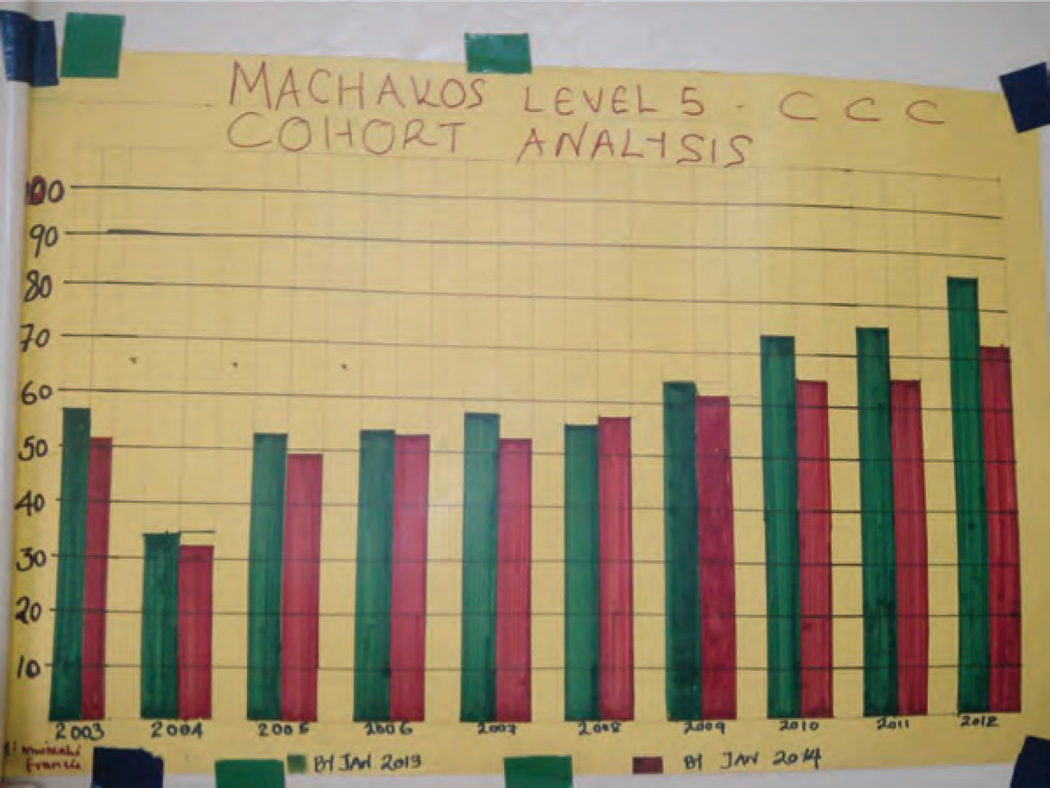

Fostering demand for data and the routine use of data for decision-making is an important step towards creating a culture of quality in health systems. To facilitate data consumption at the facility level, the ICAP M&E team generates standard charts that serve as routine quarterly feedback reports, showcasing the performance of each supported facility by quarter over the span of two years. These charts are mounted strategically at relevant service delivery points within the facility, as illustrated in Fig. 4. ICAP M&E officers use these wall charts and graphs to generate discussion, which often identifies poorly understood or problematic indicators that can be addressed during their routine mentorship visits. The data are also reviewed at multidisciplinary team meetings, enabling different facility-level providers to contribute to problem-solving and quality improvement activities.

Fig. 4. Wall chart in use at Machakos District Hospital in Eastern Province of Kenya.

The wall chart tracks retention on ART by ART initiation year. The X-axis represents different cohorts of ART patients by the year they initiated ART. The Y-axis represents % retained of all patients initiated on ART in that year. The green bar represents retention by January 2013, while the red bar represents retention by January 2014. ART, antiretroviral therapy.

Discussion

Monitoring program quality across a large and heterogeneous program in multiple resource-limited countries requires an overall quality framework and carefully targeted data collection, cognizant of the constraints of working within weak health systems with limited human resources and largely paper-based data systems. A parsimonious and efficient approach to data collection requires programs to leverage existing routinely collected data as much as possible, while also developing additional indicators that can be used for targeted, in-depth quality assessment. Equally critical are investments in electronic systems with automated processes, as well as human resources to support aggregation, verification, dissemination, and utilization of hundreds of thousands of data points every quarter to support data-driven decisions for program quality improvement.

The strength of the ICAP quality assurance model is its ability to leverage innovation, technology, and on-the ground presence to support national M&E systems. ICAP’s approach has been designed to align with national systems and to strengthen the culture of data verification, data review, and data use to enhance program quality. These quality assurance systems also provide the foundation for quality improvement initiatives, which are critical elements of ongoing program improvement.

While the intent is to build sustainable local capacity, enabling ICAP’s support to be transferred to District Health Management Teams and other local partners over time, this has not yet happened in all countries. In some contexts, despite continual efforts to build M&E capacity, high staff turnover at the facility and sub-national levels can combine with ICAP’s availability, expertise, and hands-on approach to create dependency on ICAP staff for routine M&E activities. Although this is uncommon in more mature national HIV programs, there are some contexts in which M&E remains largely dependent on external support.

ICAP’s experience suggests the need for robust investment in quality assurance and M&E by both national governments and external donors in order to effectively implement and monitor large and complex public health initiatives, such as national HIV programs. Investments in well-designed electronic data management systems, pre-service education, in-service training, and supportive supervision in quality assurance and M&E, as well as inclusion and capacity building of key cadres such as data entry staff and M&E managers within the national health systems, are critical to ensure routine collection, aggregation, and review of program performance data.

Acknowledgments

S.S., A.A.H., and M.R. conceptualized the paper. S.S., A.A.H., and M.R. drafted the paper with key inputs from D.C., T.E., L.A., and B.E. All authors reviewed the final manuscript and approved submission.

Funding for ICAP’s work to support PEPFAR programs is provided by the US Centers for Disease Control and Prevention, the United States Agency for International Development, and the Health Resources and Services Administration.

Footnotes

Conflicts of interest

There are no conflicts of interest.

References

1. Heiby J. The use of modern quality improvement approaches to strengthen African health systems: a 5-year agenda. Int J Qual Health Care. 2014;26:117–123. doi: 10.1093/intqhc/mzt093.

2. Cohen MS, Chen YQ, McCauley M, Gamble T, Hosseinipour MC, Kumarasamy N, et al. Prevention of HIV-1 infection with early antiretroviral therapy. N Engl J Med. 2011;365:493–505. doi: 10.1056/NEJMoa1105243.

3. Nash D, Elul B, Rabkin M, Tun M, Saito S, Becker M, Nuwagaba-Biribonwoha H. Strategies for more effective monitoring and evaluation systems in HIV programmatic scale-up in resource-limited settings: implications for health systems strengthening. J Acquir Immune Defic Syndr. 2009;52:S58–S62. doi: 10.1097/QAI.0b013e3181bbcc45.

4. McNairy ML, El-Sadr WM. The HIV care continuum: no partial credit given. AIDS. 2012;26:1735–1738. doi: 10.1097/QAD.0b013e328355d67b.

5. PEPFAR Monitoring, Evaluation, and Reporting Indicator Reference Guide, version 2.0. 2014 Nov.

6. República de Moçambique Ministério da Saúde. Manual de Melhoria de Qualidade de Cuidados e Tratamentos e de Prevenção da Transmissão Vertical do HIV. 2013 Jul.