Abstract

Integrating research experiences into undergraduate life sciences curricula in the form of course-based undergraduate research experiences (CUREs) can meet national calls for education reform by giving students the chance to “do science.” In this article, we provide a step-by-step practical guide to help instructors assess their CUREs using best practices in assessment. We recommend that instructors first identify their anticipated CURE learning outcomes, then work to identify an assessment instrument that aligns to those learning outcomes and critically evaluate the results from their course assessment. To aid instructors in becoming aware of what instruments have been developed, we have also synthesized a table of “off-the-shelf” assessment instruments that instructors could use to assess their own CUREs. However, we acknowledge that each CURE is unique and instructors may expect specific learning outcomes that cannot be assessed using existing assessment instruments, so we recommend that instructors consider developing their own assessments that are tightly aligned to the context of their CURE.

INTRODUCTION

There have been national recommendations to integrate research experiences into the undergraduate biology curriculum (1, 49). While independent research in a faculty member’s lab is one way to meet this recommendation, course-based undergraduate research experiences (CUREs) are a way to scale the experience of doing research to a much broader population of students, thereby increasing the accessibility of these experiences (4). In contrast to traditional “cookbook” lab courses where students complete a pre-determined series of activities with a known answer, CUREs are lab courses where students work on novel scientific problems with unknown answers that are potentially publishable (3, 11). These research experiences have been shown to benefit both students and the instructors of the CUREs (45, 54, 55). As such, CUREs are growing in popularity as an alternative to the traditional laboratory course, particularly in biology lab classes (3, 10, 12, 36, 37, 51, 54, 62). The in-class research projects often last the duration of a semester or quarter, although sometimes they are implemented as shorter modules. CUREs vary in topics, techniques, and research questions—with the common thread being that the scientific questions addressed are novel, and perhaps most significantly, of interest to the scientific research community beyond the scope of the course. CUREs meet national calls for reforming biology education and may provide unique learning outcomes for students, as emphasized in Vision and Change: “learning science means learning to do science” (1).

Studies have reported various student outcomes resulting from CUREs, including increased student self-confidence (6, 40), improved attitudes toward science (35, 36), improved ability to analyze and interpret data (10, 13), more sophisticated conceptions of what it means to think like a scientist (10), and increased content knowledge (34, 46, 62). Although there is general consensus that CUREs can have a positive impact on students, it is often unclear what specific aspect of a CURE leads to a measured outcome (17). Because each CURE is unique, instructors and evaluators may also struggle to identify how to effectively assess particular elements of their CURE. A combination of assessment techniques may yield the most holistic understanding of course outcomes, especially for CUREs that are already being implemented (3, 29).

While an ideal assessment of outcomes from any experimental pedagogy would include an analysis of a matched comparison course, logistical constraints will often prevent an instructor from executing a randomized controlled study or even a quasi-experimental design that compares students in a CURE with students in a non-CURE course (but see 13, 31, 36 for examples). Further, finding an appropriate comparison group of students can be difficult; in particular, if students choose to take the CURE, then there is a possible selection bias (13). However, one can account for known differences and similarities among students by using multiple linear regression models that control for student demographics and for ability, using measures such as incoming grade point average (GPA) (e.g., 59). Although these are all factors to take into consideration, our aim in this article is to provide instructors with fundamental guidelines on how to get started assessing their own CUREs, without necessarily needing a comparison group.
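As a rough illustration of the regression approach mentioned above, the following Python sketch fits an ordinary least squares model in which a post-course score is predicted from a CURE indicator and incoming GPA. The student records, variable names, and the choice to solve the normal equations by hand are all assumptions made for illustration; they are not taken from any of the cited studies, and a real analysis would normally use a statistics package and report standard errors.

```python
def fit_ols(rows, outcomes):
    """Ordinary least squares via the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    # Build X'X and X'y from the design-matrix rows.
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, outcomes)) for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting on the augmented matrix.
    aug = [xtx[i] + [xty[i]] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


# Hypothetical records: (enrolled_in_cure, incoming_gpa, post_course_score).
students = [
    (1, 3.0, 75.0), (1, 3.5, 80.0), (1, 2.5, 70.0), (1, 4.0, 85.0),
    (0, 3.0, 70.0), (0, 3.5, 75.0), (0, 2.5, 65.0), (0, 4.0, 80.0),
]

# Design matrix columns: intercept, CURE indicator, incoming GPA.
design = [(1.0, float(cure), gpa) for cure, gpa, _ in students]
scores = [score for _, _, score in students]

intercept, cure_effect, gpa_slope = fit_ols(design, scores)
print(f"CURE coefficient, holding GPA constant: {cure_effect:.2f}")
print(f"GPA coefficient: {gpa_slope:.2f}")
```

The coefficient on the CURE indicator estimates the difference in score between CURE and non-CURE students with the same incoming GPA. It does not remove selection bias arising from characteristics that were not measured.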

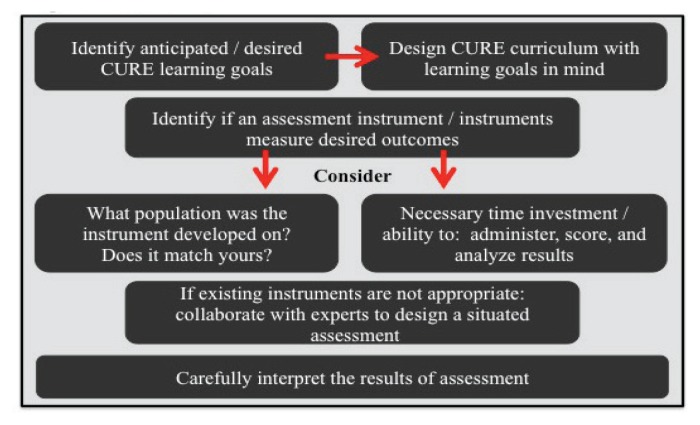

We propose that instructors assessing their CURE begin with their intended learning outcomes. Once an instructor’s learning outcomes have been identified, they can align assessments with those outcomes. We suggest that instructors browse existing assessments that may align with their learning outcomes to see whether any present appropriate ways to evaluate their CURE. If not, instructors should consider designing their own assessment situated in the specific context of their CURE, which may require collaboration with an education researcher or group of researchers with expertise in assessment. Finally, instructors need to critically evaluate the results of the assessment, being cautious in their interpretation. Taken together, these steps provide a “best practices” model of how to effectively assess CURE learning environments (Fig. 1).

FIGURE 1. Guide to assessing course-based undergraduate research experiences (CUREs).

Step 1: Identify learning outcomes

The first step in evaluating a CURE is to identify learning goals so that an instructor can assess how successful the CURE was at attaining those learning goals. There are a number of resources available to help instructors identify and establish learning goals (33) and design a course based on these learning goals, i.e., “backward design” (22, 64). We use the terms “learning goals” and “learning outcomes” as discussed by Handelsman et al. in Scientific Teaching (2004): “Learning goals are useful as broad constructs, but without defined learning outcomes, goals can seem unattainable and untestable. The outcome is to the goal what the prediction is to the hypothesis” (33). Thus, the first question to ask is not, “How can I assess my CURE?”, but “What learning outcomes do I want to measure?” Learning outcomes can vary, ranging from technical skills (e.g., “students will be able to micropipette small amounts of substances accurately”), to content knowledge (e.g., “students will be able to explain the steps of the polymerase chain reaction”), to high-level analytical skills (e.g., “students will be able to adequately design a scientific experiment”). More general learning goals for a CURE may also include affective gains such as self-efficacy or improved attitude toward science (6, 36). These affective gains may be more difficult for traditionally trained biologists to measure and interpret, particularly for those less familiar with the theoretical frameworks that define these constructs. Collaboration with experts in education to understand affective gains may be particularly appropriate if these are the anticipated outcomes for one’s CURE. These learning outcomes are intended to serve as examples of the diversity of conceivable student outcomes from a CURE but reflect only a fraction of those possible.
Frameworks to identify learning outcomes from CUREs have been developed elsewhere (e.g., 11, 16) and could be used in conjunction with the present article as a starting point for CURE assessment.

While it is possible for CUREs to lead to gains in various domains, instructors may want to focus the learning goals on those with potentially measurable learning outcomes that are either not feasible in a lecture course, or are best-suited for a lab course. For example, any biology course can cover content, but in addition to other possible gains afforded by the CURE format, a lab course focusing on novel data is uniquely positioned to teach students about the process of science or perhaps the importance of repeating experiments. However, if the CURE is a required course in a department or if CUREs are being taught parallel to traditional lab courses, there may be already-established departmental learning goals and specific outcomes that must be targeted by the course.

Step 2: Select an assessment aligned with your learning outcomes

Once the anticipated learning outcomes for the CURE have been identified, the next step is to find an assessment strategy that aligns with the learning goals and anticipated learning outcomes. For some of the more common learning outcomes from CUREs, assessment instruments may either already exist or have been developed specifically for CUREs. These are sometimes referred to as “off-the-shelf” assessments because they have previously been published and instructors could in theory grab one “off the shelf” and administer it in the CURE classroom. However, it is important to consider how well these assessment instruments measure an instructor’s specific intended learning outcomes. Tight alignment of the assessment instrument with the desired learning outcomes is essential to accurately interpret CURE results. We further encourage instructors to critically evaluate these instruments in terms of: administration (e.g., How much class time does it require to administer? Is it a pre-post-course comparison?), time required to score the assessment (e.g., multiple choice, which can be auto-graded vs. open-ended responses, which need to be evaluated with a rubric), and what validation has been conducted on the instrument and how appropriate it is for an instructor’s specific population of students (e.g., has the instrument been previously administered to high school students but not to undergraduates?).

Previously developed assessments

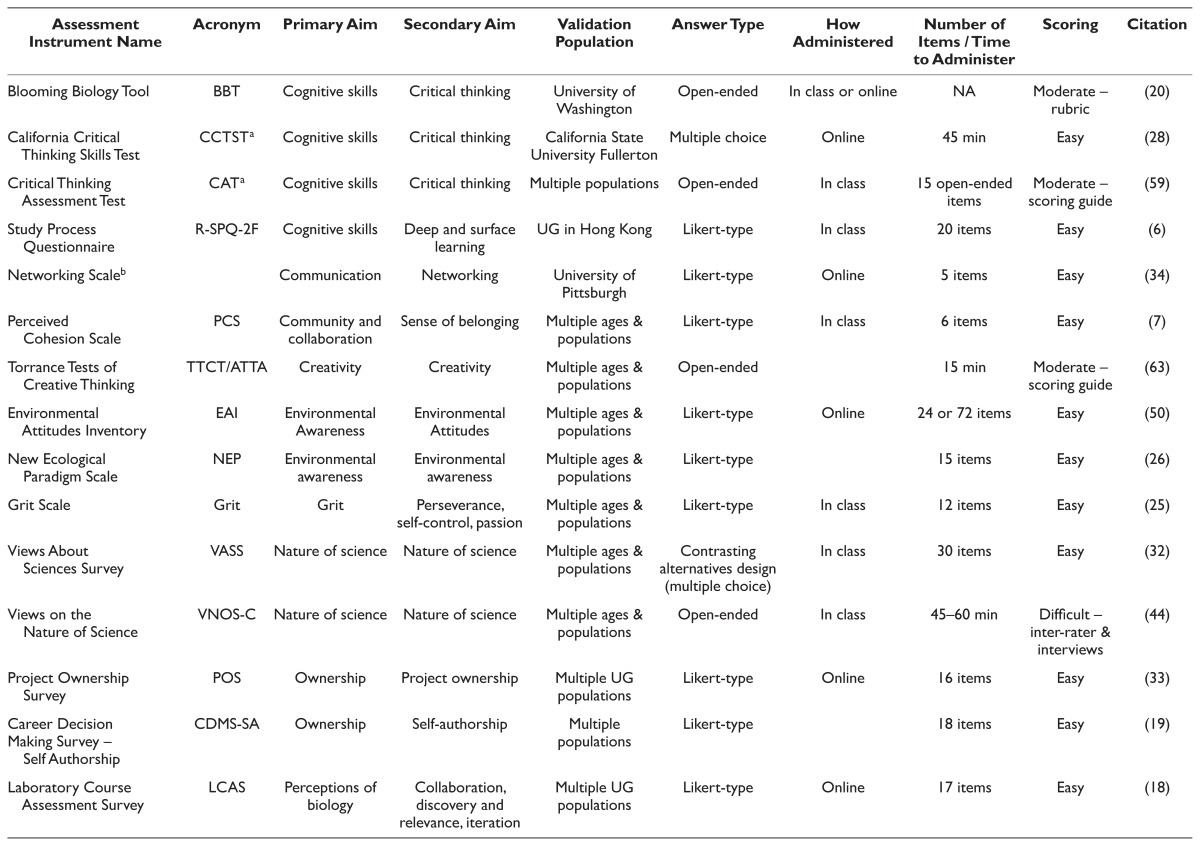

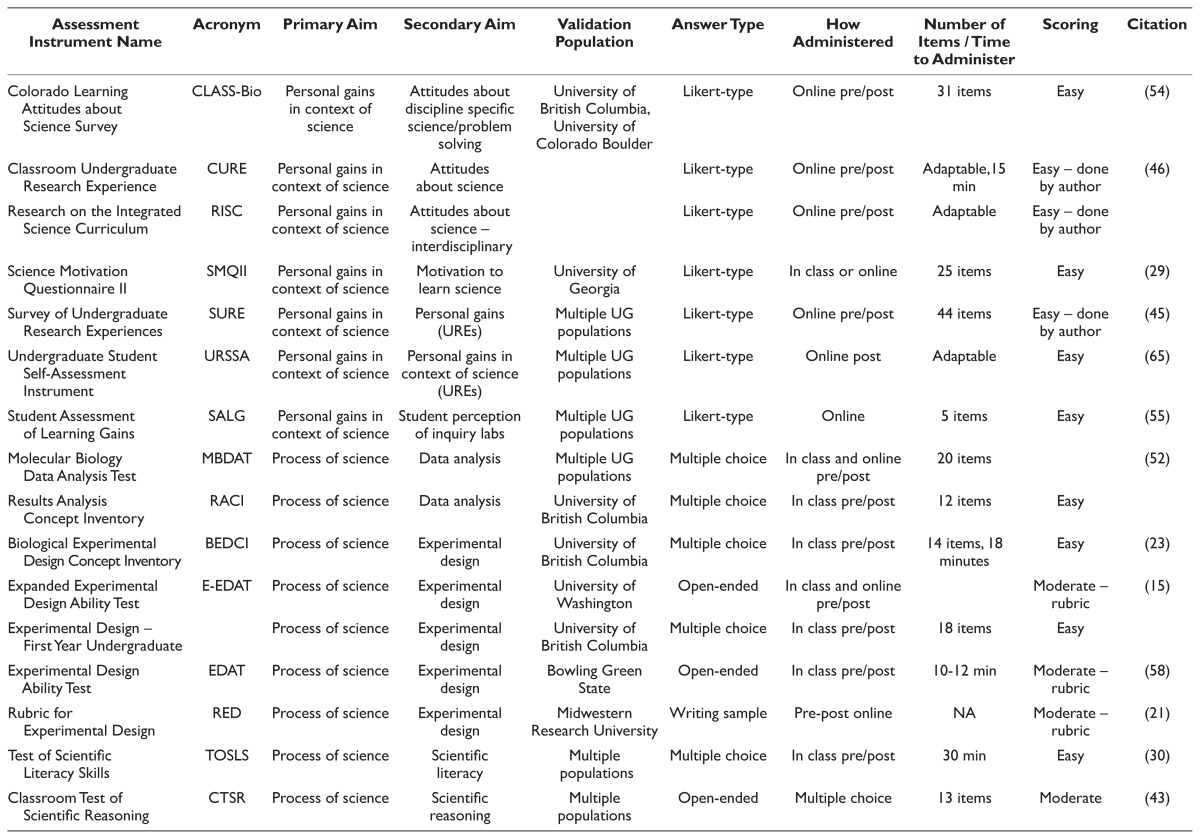

Table 1 outlines assessment instruments that instructors could potentially use to evaluate their CURE, ordered by their primary aims. The table includes details of the format of the assessment, ease of administration and grading, and the population(s) on which each instrument was developed. This list is not intended to be comprehensive, and there are likely other assessment instruments that can be used to assess CUREs, but we hope that it will be helpful for CURE instructors who are at the beginning stages of thinking about assessment.

TABLE 1. Assessment instrument table.

| Assessment Instrument Name | Acronym | Primary Aim | Secondary Aim | Validation Population | Answer Type | How Administered | Number of Items/Time to Administer | Scoring | Citation |
|---|---|---|---|---|---|---|---|---|---|
| Blooming Biology Tool | BBT | Cognitive skills | Critical thinking | University of Washington | Open-ended | In class or online | NA | Moderate – rubric | (20) |
| California Critical Thinking Skills Test | CCTST a | Cognitive skills | Critical thinking | California State University Fullerton | Multiple choice | Online | 45 min | Easy | (28) |
| Critical Thinking Assessment Test | CAT a | Cognitive skills | Critical thinking | Multiple populations | Open-ended | In class | 15 open-ended items | Moderate – scoring guide | (59) |
| Study Process Questionnaire | R-SPQ-2F | Cognitive skills | Deep and surface learning | UG in Hong Kong | Likert-type | In class | 20 items | Easy | (6) |
| Networking Scale b | | Communication | Networking | University of Pittsburgh | Likert-type | Online | 5 items | Easy | (34) |
| Perceived Cohesion Scale | PCS | Community and collaboration | Sense of belonging | Multiple ages & populations | Likert-type | In class | 6 items | Easy | (7) |
| Torrance Tests of Creative Thinking | TTCT/ATTA | Creativity | Creativity | Multiple ages & populations | Open-ended | | 15 min | Moderate – scoring guide | (63) |
| Environmental Attitudes Inventory | EAI | Environmental awareness | Environmental attitudes | Multiple ages & populations | Likert-type | Online | 24 or 72 items | Easy | (50) |
| New Ecological Paradigm Scale | NEP | Environmental awareness | Environmental awareness | Multiple ages & populations | Likert-type | | 15 items | Easy | (26) |
| Grit Scale | Grit | Grit | Perseverance, self-control, passion | Multiple ages & populations | Likert-type | In class | 12 items | Easy | (25) |
| Views About Sciences Survey | VASS | Nature of science | Nature of science | Multiple ages & populations | Contrasting alternatives design (multiple choice) | In class | 30 items | Easy | (32) |
| Views on the Nature of Science | VNOS-C | Nature of science | Nature of science | Multiple ages & populations | Open-ended | In class | 45–60 min | Difficult – inter-rater & interviews | (44) |
| Project Ownership Survey | POS | Ownership | Project ownership | Multiple UG populations | Likert-type | Online | 16 items | Easy | (33) |
| Career Decision Making Survey – Self Authorship | CDMS-SA | Ownership | Self-authorship | Multiple populations | Likert-type | | 18 items | Easy | (19) |
| Laboratory Course Assessment Survey | LCAS | Perceptions of biology | Collaboration, discovery and relevance, iteration | Multiple UG populations | Likert-type | Online | 17 items | Easy | (18) |
| Colorado Learning Attitudes about Science Survey | CLASS-Bio | Personal gains in context of science | Attitudes about discipline specific science/problem solving | University of British Columbia, University of Colorado Boulder | Likert-type | Online pre/post | 31 items | Easy | (54) |
| Classroom Undergraduate Research Experience | CURE | Personal gains in context of science | Attitudes about science | | Likert-type | Online pre/post | Adaptable, 15 min | Easy – done by author | (46) |
| Research on the Integrated Science Curriculum | RISC | Personal gains in context of science | Attitudes about science – interdisciplinary | | Likert-type | Online pre/post | Adaptable | Easy – done by author | |
| Science Motivation Questionnaire II | SMQII | Personal gains in context of science | Motivation to learn science | University of Georgia | Likert-type | In class or online | 25 items | Easy | (29) |
| Survey of Undergraduate Research Experiences | SURE | Personal gains in context of science | Personal gains (UREs) | Multiple UG populations | Likert-type | Online pre/post | 44 items | Easy – done by author | (45) |
| Undergraduate Student Self-Assessment Instrument | URSSA | Personal gains in context of science | Personal gains in context of science (UREs) | Multiple UG populations | Likert-type | Online post | Adaptable | Easy | (65) |
| Student Assessment of Learning Gains | SALG | Personal gains in context of science | Student perception of inquiry labs | Multiple UG populations | Likert-type | Online | 5 items | Easy | (55) |
| Molecular Biology Data Analysis Test | MBDAT | Process of science | Data analysis | Multiple UG populations | Multiple choice | In class and online pre/post | 20 items | | (52) |
| Results Analysis Concept Inventory | RACI | Process of science | Data analysis | University of British Columbia | Multiple choice | In class pre/post | 12 items | Easy | |
| Biological Experimental Design Concept Inventory | BEDCI | Process of science | Experimental design | University of British Columbia | Multiple choice | In class pre/post | 14 items, 18 minutes | Easy | (23) |
| Expanded Experimental Design Ability Test | E-EDAT | Process of science | Experimental design | University of Washington | Open-ended | In class and online pre/post | | Moderate – rubric | (15) |
| Experimental Design – First Year Undergraduate | | Process of science | Experimental design | University of British Columbia | Multiple choice | In class pre/post | 18 items | Easy | |
| Experimental Design Ability Test | EDAT | Process of science | Experimental design | Bowling Green State | Open-ended | In class pre/post | 10–12 min | Moderate – rubric | (58) |
| Rubric for Experimental Design | RED | Process of science | Experimental design | Midwestern Research University | Writing sample | Pre-post online | NA | Moderate – rubric | (21) |
| Test of Scientific Literacy Skills | TOSLS | Process of science | Scientific literacy | Multiple populations | Multiple choice | In class pre/post | 30 min | Easy | (30) |
| Classroom Test of Scientific Reasoning | CTSR | Process of science | Scientific reasoning | Multiple populations | Open-ended | Multiple choice | 13 items | Moderate | (43) |
| The Rubric for Science Writing | Rubric | Process of science | Scientific reasoning/science communication | University of South Carolina | Writing sample | Out of class | NA | Moderate – rubric | (62) |
| National Survey of Student Engagement | NSSE a | Student engagement | Student engagement | Multiple ages & populations | Likert-type | Online | 70 items | Easy | (41) |

a Indicates that the instrument has a fee for use.

b Instrument is to be used in conjunction with the POS.

Blank cells indicate that the information was not specified.

NA = not applicable; UG = undergraduate; URE = undergraduate research experience.

Included in the table are primary references for each instrument so instructors can find more information on how the instrument was developed, as well as the efforts made by the assessment developers to ensure that the instruments produce data that have been shown to be valid and reliable (25, 47, 58). There is a set of best-practice standards for educational and psychological measures outlined by the American Educational Research Association (2), and assessment instruments ideally adhere to these standards and provide evidence in support of the validity and reliability of the resulting data. It is important to note that no assessment instrument is generally validated; it is only valid for the specific population on which it was tested. An assessment instrument that was developed on a high school population may not perform the same way on a college-level population, and even an assessment instrument developed on a population of students at a research-intensive institution may not perform the same way on a community college student population. There is no “one size fits all” for assessment, nor is there ever a perfect assessment instrument (5). There are pros and cons to each depending on one’s specific intentions for using the assessment instrument, which is why it is critical for instructors to judiciously evaluate the differences among instruments before choosing to use one.

If no existing assessment fits, then design your own

Existing assessment instruments may not be specific enough to align with an instructor’s anticipated learning outcomes. Instructors may want to measure learning outcomes that are specific to the CURE, and an instrument that is unrelated to the specific context of the CURE may not be able to capture them. We recommend that instructors consider working with education researchers to design their own assessments that are situated in the context of the CURE (e.g., 38), and/or use standard quizzes and exams as a measure of expected student CURE outcomes (e.g., 10). The choices instructors make will depend on the intention of their assessment efforts: is the intent to make a formative or summative assessment? What does the instructor intend to learn from and do with the measured outcome data? For example, do they wish to use the results to advance their own knowledge of the course success, for a research study, or for a programmatic evaluation?

Step 3: Interpret the results of the assessment

Once instructors administer an assessment of their CURE, it is important to be careful in interpreting the results. In a CURE, students are often doing many different things and it is difficult to attribute a learning gain to one particular aspect of the course (16). Further, a survey that asks students about how well they think they can analyze data is measuring student perception of their ability to analyze data (e.g., 12), which could be different from their actual ability (e.g., 38) or the instructor’s perception of that student’s ability to analyze data (e.g., 55). Thus, it is important that instructors not overgeneralize the results of their assessment, and that they are aware of the limitations of student self-reported gains (9, 40). Yet, student perceptions are not always limitations, as student self-report can be the best way to measure learning goals such as confidence, sense of belonging, and interest in pursuing research; here it is appropriate to document how a student feels (15). Further, the instructor may want to know what the student thinks they are gaining from the course. For example, if an instructor’s expected learning outcome is for students to learn to interpret scientific graphs, they could assess it using a multi-pronged approach, measuring student perception of ability paired with a measure of actual ability. To achieve this, an instructor could use or design an assessment that asks students to self-report on their perceived ability to interpret scientific graphs. The instructor could then pair the self-report instrument with an assessment testing their actual ability to interpret scientific graphs. Using this approach, an instructor could learn whether there is alignment between what the instructor thinks the students are learning, what the students think they are learning, and whether the students are actually learning the skill.
Thus, the attributes and limitations of assessment instruments and strategies are dependent on both the learning outcomes one wants to measure and the conclusions one wants to draw from the data.
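To make the paired approach concrete, the short Python sketch below compares self-reported ability with a measured score for the same students. The data, the 1–5 Likert scale, the 10-point test, and the cutoffs used to flag a mismatch are hypothetical values chosen only for illustration; the Pearson correlation is one simple way to summarize alignment and is not a method prescribed by the instruments discussed here.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical records: (student_id, self-rated ability 1-5, test score 0-10).
records = [
    ("s1", 5, 9), ("s2", 4, 7), ("s3", 4, 8),
    ("s4", 2, 3), ("s5", 3, 6), ("s6", 5, 4),
]

perceived = [p for _, p, _ in records]
actual = [a for _, _, a in records]
r = pearson_r(perceived, actual)

# Flag students who rate themselves highly but score low on the test.
# The cutoffs (>= 4 on the survey, < 5 on the test) are arbitrary assumptions.
overestimators = [sid for sid, p, a in records if p >= 4 and a < 5]

print(f"Correlation between perceived and measured ability: {r:.2f}")
print("Students whose self-report exceeds measured ability:", overestimators)
```

A weak correlation, or a long list of flagged students, would suggest that the self-report survey and the performance measure are capturing different things and should be interpreted separately.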

Putting the steps in action: An example of alignment of goals and assessment

Here we present guiding questions for instructors to ask when approaching an assessment instrument. These steps meet minimum expectations for using best practices in evaluating CURE outcomes.

a) How is this instrument aligned with the learning goals of my CURE? Is it specifically aimed at measuring this particular outcome?

b) What populations has it been previously administered to? Does that student population reasonably match mine?

c) What is the time needed to use, administer, and analyze the results of the instrument? Is this feasible within my course timeline and personal availability?

Possible follow-up question:

d) Do I aim to use the assessment results outside of my own classroom and/or try to publish them? If no, then validity and reliability measures may be less critical for an interpretation of the results. If yes, what validity and reliability measures have been performed and reported on for this instrument, and should I consider collaborating with an education researcher?

Assessing student understanding of experimental design

To help instructors determine how to assess their CUREs, we have identified one of the most commonly expected learning outcomes from CUREs: Students will learn how to design scientific experiments. We conducted phone surveys with faculty members whom we had previously interviewed regarding their experiences developing and teaching their own CURE (55). We asked them to identify whether they thought students gained particular outcomes as a result of participating in their CURE (see Appendix 1 for details). Of the 35 surveys conducted, 86% of faculty participants reported that they perceived that students learned to design scientific experiments as a result of the CURE. Using the steps outlined in this essay, we provide an example of how to begin to assess this learning outcome by considering the pros and cons of different assessment instruments (all cited in Table 1). The instruments we discuss below have the explicit primary aim of evaluating student understanding of the “Process of Science” and the secondary aim of evaluating student understanding of “Experimental Design.” Further, the instruments were developed using undergraduate students at large, public research universities.

One of the first instruments to be developed to measure students’ ability to design biology experiments was the Experimental Design Ability Test (EDAT) (56). The EDAT is a pre-post instrument, intended to be administered at the beginning and end of a course or module to evaluate gains in student ability. The EDAT consists of open-ended prompts asking students to design an experiment: the pretest prompt is focused on designing an investigation into the benefits of ginseng supplements, and the posttest prompt asks students to design an investigation into the impact of iron supplements on women’s memory. Student written responses to both prompts are evaluated using a rubric. This assessment was developed using a nonmajors biology class and has since been adapted for a majors class; the revised instrument is the Expanded-Experimental Design Ability Tool (E-EDAT) (14). The E-EDAT has the advantage that the revised rubric gives a more detailed report of student understanding, as it allows for intermediate evaluation of student ability to design experiments. However, the open-ended format of both these assessments means that grading student responses using the designated rubrics may be too time-consuming for many instructors. Additionally, the prompts of the EDATs are specific to human-focused medical scenarios, which may not reflect the type of experimental design that students are learning in their CURE.

Another pre-post assessment instrument, the Rubric for Experimental Design (RED), is a way to measure changes in student conceptions about experimental design (20). The RED is a rubric that can be used to evaluate student writing samples on experimental design, but is not associated with specific predetermined questions (20). Since many CUREs adopt a model where students write a final paper taking the form of a grant proposal or journal article, and the RED requires the instructor to have some sort of student writing sample already in place, the RED may be appropriate. Yet, similar to the EDAT/E-EDAT, the scoring of this instrument is time-consuming and the writing samples will need to be coded by more than one rater to achieve inter-rater reliability, which may be a limitation for some instructors. However, instructors using the RED have the advantage of a rubric that targets five common areas where students traditionally struggle regarding experimental design, thus potentially helping an instructor to disaggregate specific areas of student misconceptions and understanding of experimental design principles.
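When two raters score the same writing samples with a rubric, agreement between them can be summarized with a statistic such as Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is one possible way to compute it in Python. The scores and the three-level scale (0, 1, 2) are hypothetical and are not the RED's actual scoring levels, and kappa is offered here as a common choice rather than the statistic used by the RED authors.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning one category per sample."""
    n = len(rater_a)
    # Proportion of samples on which the two raters gave the same score.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's category frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    # Note: undefined (division by zero) if both raters use a single category.
    return (observed - expected) / (1 - expected)


# Hypothetical rubric scores (0, 1, or 2) for ten student writing samples.
rater_a = [2, 1, 0, 2, 1, 2, 0, 1, 2, 1]
rater_b = [2, 1, 0, 1, 1, 2, 0, 2, 2, 1]

kappa = cohens_kappa(rater_a, rater_b)
print(f"Cohen's kappa: {kappa:.4f}")  # prints 0.6875 for these scores
```

Here the raters agree on 8 of 10 samples, but because some of that agreement would occur by chance, kappa is lower than the raw 80% agreement. Raters typically discuss disagreements and refine their shared reading of the rubric before scoring the full set of samples.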

A pre-post, multiple-choice concept inventory, the Biological Experimental Design Concept Inventory (BEDCI), was developed to test student ability to design experiments (21). The BEDCI has the advantage that it is easy to score since it can be automated and the instructor can quickly identify student gains on the test, but a disadvantage is that the BEDCI consists of a fixed set of questions. The specific context of each question could impact how students perform on the assessment, and the context of these questions may not overlap with the context of the CURE. Additionally, the BEDCI is to be presented as a PowerPoint during class, so instructors need to allocate in-class time for administration.
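Because a multiple-choice pre-post instrument can be scored automatically, computing student gains takes only a few lines of code. The following Python sketch scores pretest and posttest responses against an answer key and reports each student's raw and normalized gain. The five-item key and the responses are invented for illustration and do not correspond to BEDCI items, and the normalized gain (the fraction of the possible improvement that was achieved) is one common summary that is assumed here rather than drawn from the BEDCI documentation.

```python
# Hypothetical answer key for a five-item multiple-choice test.
ANSWER_KEY = ["B", "D", "A", "C", "A"]


def score(responses, key=ANSWER_KEY):
    """Number of responses that match the answer key."""
    return sum(given == correct for given, correct in zip(responses, key))


def normalized_gain(pre, post, max_score):
    """Fraction of the possible improvement achieved; None if pre is perfect."""
    if pre == max_score:
        return None
    return (post - pre) / (max_score - pre)


# Hypothetical pretest and posttest responses keyed by student ID.
pre_responses = {
    "s1": ["B", "A", "A", "C", "B"],
    "s2": ["A", "D", "B", "C", "C"],
}
post_responses = {
    "s1": ["B", "D", "A", "C", "B"],
    "s2": ["B", "D", "A", "C", "A"],
}

results = {}
for student in pre_responses:
    pre = score(pre_responses[student])
    post = score(post_responses[student])
    results[student] = (pre, post, normalized_gain(pre, post, len(ANSWER_KEY)))

mean_gain = sum(post - pre for pre, post, _ in results.values()) / len(results)

for student, (pre, post, gain) in results.items():
    print(f"{student}: pre={pre}, post={post}, normalized gain={gain}")
print(f"Mean raw gain: {mean_gain}")
```

Matching responses by student ID, rather than comparing class averages, keeps the analysis restricted to students who completed both the pretest and the posttest.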

These instruments may help an instructor to understand whether their students have achieved some level of experimental design ability, but the majority of these instruments are not specific to the context of any given CURE. Thus, there may be specific learning goals related to the experimental design context of the particular CURE that an instructor wants to probe. An additional and/or alternative approach is to design a test of experimental design ability using the specific context of the CURE. While we often use the term “experimental design” to include any aspect of designing an experiment in science, aspects of experimental design in a molecular biology CURE are different from those in a field ecology CURE. Further, even if students can design an experiment in one context, this does not mean that they can design an appropriate experiment in another context, nor should they necessarily be expected to do so, particularly if understanding nuances of both experimental systems was not a predetermined learning goal. Instructors may miss important gains in their students’ abilities to design relevant experiments if they are using a generic experimental design assessment instrument (38). Perhaps students can design experiments in the specific context of their CURE (e.g., design an experiment to test the levels of protein in yeast cells in a molecular biology CURE versus design an experiment to identify abiotic factors influencing the presence of yeast in a flowering plant’s nectar in an ecology CURE), but they are unable to effectively design experiments in the converse scientific context. Even skilled scientists can have difficulty in designing an experiment in an area that is not in their specific domain of biological expertise. It may be important to test students using their specific CURE context in order to maximize the chance of seeing an outcome effect that can be credibly attributed to the CURE.
It is unlikely that a previously developed assessment instrument will be directly aligned with expected outcomes from one’s CURE, so we encourage instructors to work with education researchers to develop situated assessments that are appropriate for each specific CURE context (e.g., 38).

CONCLUSION

As more CUREs are developed and implemented in biology lab courses across the country, instructors are becoming increasingly interested in assessing the impact of their CUREs. Although there is complexity in assessment, the aim of this paper is not to overwhelm instructors, but instead to offer a basic assessment strategy: identify anticipated CURE learning outcomes, select an assessment instrument that is aligned with the learning outcomes, and cautiously interpret the results of the assessment instrument. We also present a table of previously developed assessment instruments that could be of use to CURE instructors depending on their learning goals, student populations, and course context. While this is only the tip of the iceberg as far as how instructors can assess their CUREs, and we anticipate that many more assessment instruments will be developed in the coming years, we hope that this table can provide instructors with a starting point for considering how to assess their CUREs. We encourage instructors to be thoughtful and critical in their assessment of their CUREs as we continue to learn more about the impact of these curricula on students.

SUPPLEMENTAL MATERIALS

Appendix 1: Faculty perceptions of student gains from participation in CUREs

ACKNOWLEDGMENTS

The authors would like to thank J. Barbera, K. McDonald, and K. Stedman for their thoughtful comments on an early version of the manuscript. We also thank the faculty members who participated in the study referenced in the Appendix. The authors declare that there are no conflicts of interest.

Footnotes

Supplemental materials available at http://asmscience.org/jmbe

REFERENCES

- 1.American Association for the Advancement of Science. Vision and change in undergraduate biology education: a call to action: a summary of recommendations made at a national conference organized by the American Association for the Advancement of Science; July 15–17, 2009; Washington, DC. 2011. [Google Scholar]

- 2.American Educational Research Association, American Psychological Association, National Council on Measurement in Education. Standards for educational and psychological testing. AERA Publication Series; Washington, DC: 1999. [Google Scholar]

- 3.Auchincloss LC, et al. Assessment of course-based undergraduate research experiences: a meeting report. CBE Life Sci Educ. 2014;13:29–40. doi: 10.1187/cbe.14-01-0004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Bangera G, Brownell SE. Course-based undergraduate research experiences can make scientific research more inclusive. CBE Life Sci Educ. 2014;13:602–606. doi: 10.1187/cbe.14-06-0099.

- 5. Barbera J, VandenPlas J. All assessment materials are not created equal: the myths about instrument development, validity, and reliability. In: Bunce DM, editor. Investigating Classroom Myths through Research on Teaching and Learning. American Chemical Society; Washington, DC: 2011. pp. 177–193.

- 6. Bascom-Slack CA, Arnold AE, Strobel SA. Student-directed discovery of the plant microbiome and its products. Science. 2012;338:485–486. doi: 10.1126/science.1215227.

- 7. Biggs J, Kember D, Leung DY. The revised two-factor study process questionnaire: R-SPQ-2F. Brit J Educ Psychol. 2001;71:133–149. doi: 10.1348/000709901158433.

- 8. Bollen KA, Hoyle RH. Perceived cohesion: a conceptual and empirical examination. Soc Forces. 1990;69(2):479–504. doi: 10.1093/sf/69.2.479.

- 9. Bowman NA. Validity of college self-reported gains at diverse institutions. Educ Res. 2011;40:22–24. doi: 10.3102/0013189X10397630.

- 10. Brownell SE, et al. A high-enrollment course-based undergraduate research experience improves student conceptions of scientific thinking and ability to interpret data. CBE Life Sci Educ. 2015;14:ar21. doi: 10.1187/cbe.14-05-0092.

- 11. Brownell SE, Kloser MJ. Toward a conceptual framework for measuring the effectiveness of course-based undergraduate research experiences in undergraduate biology. Studies Higher Educ. 2015;40:525–544. doi: 10.1080/03075079.2015.1004234.

- 12. Brownell SE, Kloser MJ, Fukami T, Shavelson R. Undergraduate biology lab courses: comparing the impact of traditionally based “cookbook” and authentic research-based courses on student lab experiences. J Coll Sci Teach. 2012;41:18–27.

- 13. Brownell SE, Kloser MJ, Fukami T, Shavelson RJ. Context matters: volunteer bias, small sample size, and the value of comparison groups in the assessment of research-based undergraduate introductory biology lab courses. J Microbiol Biol Educ. 2013;14(2):176–182. doi: 10.1128/jmbe.v14i2.609.

- 14. Brownell SE, et al. How students think about experimental design: novel conceptions revealed by in-class activities. BioScience. 2013:bit016.

- 15. Chan D. So why ask me? Are self-report data really that bad? In: Lance CE, Vandenberg RJ, editors. Statistical and methodological myths and urban legends: doctrine, verity and fable in the organizational and social sciences. Routledge; New York, NY: 2009. pp. 309–336.

- 16. Corwin LA, Graham MJ, Dolan EL. Modeling course-based undergraduate research experiences: an agenda for future research and evaluation. CBE Life Sci Educ. 2015;14:es1. doi: 10.1187/cbe.14-10-0167.

- 17. Corwin LA, Runyon C, Robinson A, Dolan EL. The laboratory course assessment survey: a tool to measure three dimensions of research-course design. CBE Life Sci Educ. 2015;14:ar37. doi: 10.1187/cbe.15-03-0073.

- 18. Creamer EG, Magolda MB, Yue J. Preliminary evidence of the reliability and validity of a quantitative measure of self-authorship. J Coll Student Devt. 2010;51:550–562. doi: 10.1353/csd.2010.0010.

- 19. Crowe A, Dirks C, Wenderoth MP. Biology in bloom: implementing Bloom’s taxonomy to enhance student learning in biology. CBE Life Sci Educ. 2008;7:368–381. doi: 10.1187/cbe.08-05-0024.

- 20. Dasgupta AP, Anderson TR, Pelaez N. Development and validation of a rubric for diagnosing students’ experimental design knowledge and difficulties. CBE Life Sci Educ. 2014;13:265–284. doi: 10.1187/cbe.13-09-0192.

- 21. Deane T, Nomme K, Jeffery E, Pollock C, Birol G. Development of the biological experimental design concept inventory (BEDCI). CBE Life Sci Educ. 2014;13:540–551. doi: 10.1187/cbe.13-11-0218.

- 22. Dolan EL, Collins JP. We must teach more effectively: here are four ways to get started. Mol Biol Cell. 2015;26:2151–2155. doi: 10.1091/mbc.E13-11-0675.

- 23. Duckworth AL, Quinn PD. Development and validation of the short grit scale (GRIT–S). J Pers Assess. 2009;91:166–174. doi: 10.1080/00223890802634290.

- 24. Dunlap R, Liere K, Mertig A, Jones RE. Measuring endorsement of the new ecological paradigm: a revised NEP scale. J Soc Iss. 2000;56:425–442. doi: 10.1111/0022-4537.00176.

- 25. Ebel R, Frisbie D. Essentials of educational measurement. Prentice-Hall; Englewood Cliffs, NJ: 1979.

- 26. Facione PA. Using the California Critical Thinking Skills Test in Research, Evaluation, and Assessment. 1991. [Online.] http://www.insightassessment.com/Products/Products-Summary/Critical-Thinking-Skills-Tests/California-Critical-Thinking-Skills-Test-CCTST.

- 27. Glynn SM, Brickman P, Armstrong N, Taasoobshirazi G. Science motivation questionnaire II: validation with science majors and nonscience majors. J Res Sci Teach. 2011;48:1159–1176. doi: 10.1002/tea.20442.

- 28. Gormally C, Brickman P, Lutz M. Developing a test of scientific literacy skills (TOSLS): measuring undergraduates’ evaluation of scientific information and arguments. CBE Life Sci Educ. 2012;11:364–377. doi: 10.1187/cbe.12-03-0026.

- 29. Gurel DK, Eryılmaz A, McDermott LC. A review and comparison of diagnostic instruments to identify students’ misconceptions in science. Eurasia J Math Sci Technol Educ. 2015;11:989–1008.

- 30. Halloun I, Hestenes D. Interpreting VASS dimensions and profiles for physics students. Sci Educ. 1998;7:553–577. doi: 10.1023/A:1008645410992.

- 31. Hanauer DI, Dolan EL. The project ownership survey: measuring differences in scientific inquiry experiences. CBE Life Sci Educ. 2014;13:149–158. doi: 10.1187/cbe.13-06-0123.

- 32. Hanauer DI, Hatfull G. Measuring networking as an outcome variable in undergraduate research experiences. CBE Life Sci Educ. 2015;14:ar38. doi: 10.1187/cbe.15-03-0061.

- 33. Handelsman J, et al. Scientific teaching. Science. 2004;304:521–522. doi: 10.1126/science.1096022.

- 34. Hark AT, Bailey CP, Parrish S, Leung W, Shaffer CD, Elgin SC. Undergraduate research in the genomics education partnership: a comparative genomics project exploring genome organization and chromatin structure in Drosophila. FASEB J. 2011;25:576.573.

- 35. Harrison M, Dunbar D, Ratmansky L, Boyd K, Lopatto D. Classroom-based science research at the introductory level: changes in career choices and attitude. CBE Life Sci Educ. 2011;10:279–286. doi: 10.1187/cbe.10-12-0151.

- 36. Jordan TC, et al. A broadly implementable research course in phage discovery and genomics for first-year undergraduate students. MBio. 2014;5:e01051-13. doi: 10.1128/mBio.01051-13.

- 37. Kloser MJ, Brownell SE, Chiariello NR, Fukami T. Integrating teaching and research in undergraduate biology laboratory education. PLoS Biol. 2011;9:e1001174. doi: 10.1371/journal.pbio.1001174.

- 38. Kloser MJ, Brownell SE, Shavelson RJ, Fukami T. Effects of a research-based ecology lab course: a study of nonvolunteer achievement, self-confidence, and perception of lab course purpose. J Coll Sci Teach. 2013;42:90–99.

- 39. Kuh GD. The national survey of student engagement: conceptual and empirical foundations. New Direct Inst Res. 2009;2009:5–20. doi: 10.1002/ir.283.

- 40. Kuncel NR, Credé M, Thomas LL. The validity of self-reported grade point averages, class ranks, and test scores: a meta-analysis and review of the literature. Rev Educ Res. 2005;75:63–82. doi: 10.3102/00346543075001063.

- 41. Lawson AE, Clark B, Cramer-Meldrum E, Falconer KA, Sequist JM, Kwon YJ. Development of scientific reasoning in college biology: do two levels of general hypothesis-testing skills exist? J Res Sci Teach. 2000;37:81–101. doi: 10.1002/(SICI)1098-2736(200001)37:1<81::AID-TEA6>3.0.CO;2-I.

- 42. Lederman NG, Abd-El-Khalick F, Bell RL, Schwartz RS. Views of nature of science questionnaire: toward valid and meaningful assessment of learners’ conceptions of nature of science. J Res Sci Teach. 2002;39:497–521. doi: 10.1002/tea.10034.

- 43. Lopatto D. Survey of undergraduate research experiences (SURE): first findings. Cell Biol Educ. 2004;3:270–277. doi: 10.1187/cbe.04-07-0045.

- 44. Lopatto D. Classroom Undergraduate Research Experiences Survey (CURE). 2008. [Online.] https://www.grinnell.edu/academics/areas/psychology/assessments/cure-survey.

- 45. Lopatto D, et al. A central support system can facilitate implementation and sustainability of a classroom-based undergraduate research experience (CURE) in Genomics. CBE Life Sci Educ. 2014;13:711–723. doi: 10.1187/cbe.13-10-0200.

- 46. Makarevitch I, Frechette C, Wiatros N. Authentic research experience and “big data” analysis in the classroom: maize response to abiotic stress. CBE Life Sci Educ. 2015;14. doi: 10.1187/cbe.15-04-0081.

- 47. Messick S. Meaning and values in test validation: the science and ethics of assessment. Educ Res. 1989;18:5–11. doi: 10.3102/0013189X018002005.

- 48. Milfont TL, Duckitt J. The environmental attitudes inventory: a valid and reliable measure to assess the structure of environmental attitudes. J Environ Psychol. 2010;30:80–94. doi: 10.1016/j.jenvp.2009.09.001.

- 49. President’s Council of Advisors on Science and Technology. Engage to excel: producing one million additional college graduates with degrees in science, technology, engineering, and mathematics. PCAST; Washington, DC: 2012.

- 50. Rybarczyk BJ, Walton KL, Grillo WH. The development and implementation of an instrument to assess students’ data analysis skills in molecular biology. J Microbiol Biol Educ. 2014;15(2):259–267. doi: 10.1128/jmbe.v15i2.703.

- 51. Sanders ER, Hirsch AM. Immersing undergraduate students into research on the metagenomics of the plant rhizosphere: a pedagogical strategy to engage civic-mindedness and retain undergraduates in STEM. Front Plant Sci. 2014;5:157. doi: 10.3389/fpls.2014.00157.

- 52. Semsar K, Knight JK, Birol G, Smith MK. The Colorado learning attitudes about science survey (CLASS) for use in biology. CBE Life Sci Educ. 2011;10:268–278. doi: 10.1187/cbe.10-10-0133.

- 53. Seymour E, Wiese D, Hunter A, Daffinrud SM. Creating a better mousetrap: on-line student assessment of their learning gains. Paper presented at the National Meeting of the American Chemical Society; San Francisco, CA. 2000.

- 54. Shaffer CD, et al. A course-based research experience: how benefits change with increased investment in instructional time. CBE Life Sci Educ. 2014;13:111–130. doi: 10.1187/cbe-13-08-0152.

- 55. Shortlidge EE, Bangera G, Brownell SE. Faculty perspectives on developing and teaching course-based undergraduate research experiences. BioScience. 2016;66:54–62. doi: 10.1093/biosci/biv167.

- 56. Sirum K, Humburg J. The experimental design ability test (EDAT). Bioscene J Coll Biol Teach. 2011;37:8–16.

- 57. Stein B, Haynes A, Redding M, Ennis T, Cecil M. Assessing critical thinking in STEM and beyond. In: Innovations in e-learning, instruction technology, assessment, and engineering education. Springer; Netherlands: 2007. pp. 79–82.

- 58. Stiggins RJ. Classroom assessment for student learning: doing it right—using it well. Assessment Training Institute Inc.; Portland, OR: 2004.

- 59. Theobald R, Freeman S. Is it the intervention or the students? Using linear regression to control for student characteristics in undergraduate STEM education research. CBE Life Sci Educ. 2014;13:41–48. doi: 10.1187/cbe-13-07-0136.

- 60. Timmerman BEC, Strickland DC, Johnson RL, Payne JR. Development of a ‘universal’ rubric for assessing undergraduates’ scientific reasoning skills using scientific writing. Assess Eval Higher Educ. 2011;36:509–547. doi: 10.1080/02602930903540991.

- 61. Torrance EP. Torrance tests of creative thinking: norms-technical manual: figural (streamlined) forms A & B. Scholastic Testing Service Inc.; Bensenville, IL: 1998.

- 62. Ward JR, Clarke HD, Horton JL. Effects of a research-infused botanical curriculum on undergraduates’ content knowledge, STEM competencies, and attitudes toward plant sciences. CBE Life Sci Educ. 2014;13:387–396. doi: 10.1187/cbe.13-12-0231.

- 63. Weston TJ, Laursen SL. The undergraduate research student self-assessment (URSSA): validation for use in program evaluation. CBE Life Sci Educ. 2015;14(3):ar33. doi: 10.1187/cbe.14-11-0206.

- 64. Wiggins GP, McTighe J, Kiernan LJ, Frost F. Understanding by design. Association for Supervision and Curriculum Development; Alexandria, VA: 1998.
