Short abstract

Measurement of outcomes from medical or surgical interventions is part of good practice, but publication of individual doctors' results remains controversial. The authors discuss this issue in the context of cardiothoracic surgery.

After the General Medical Council hearings and the subsequent Bristol Royal Infirmary Inquiry into paediatric cardiac deaths, cardiac surgeons expected a stinging attack on British cardiac surgical practice. What emerged instead, in 2001, was a comprehensive report highlighting many of the difficulties facing frontline clinicians and managers in the NHS.1

The story of the paediatric cardiac surgical service in Bristol is not an account of bad people. Nor is it an account of people who did not care, nor of people who wilfully harmed patients. It is an account of people who cared greatly about human suffering, and were dedicated and well-motivated. Sadly, some lacked insight and their behaviour was flawed. Many failed to communicate with each other, and to work together effectively for the interests of their patients. There was a lack of leadership, and of teamwork. It is an account of healthcare professionals who were victims of a combination of circumstances which owed as much to general failings in the NHS at the time than to any individual failing.1

The report included 198 recommendations, of which two stated that patients must be able to obtain information on the relative performance of the trust and of consultant units within the trust. This led to an increasing belief that the interests of the public and patients would be served by publication of individuals' surgical performance in the form of postoperative mortality. A precedent for this existed in the United States, where in 1990, the New York Department of Health published mortality statistics for coronary surgery for all hospitals in the state, and has published comparable data each year since.2 A newspaper, Newsday, successfully sued the department under the state's Freedom of Information Law to gain access to surgeon specific data on mortality, which the newspaper published in December 1991, evoking a hostile response from surgeons. New Jersey and Pennsylvania states have also started publishing mortality data, but the practice has not yet spread to any other state or country.

Cardiac surgeons had seen this coming, so during the Bristol Royal Infirmary Inquiry the Society of Cardiothoracic Surgeons of Great Britain and Ireland tried to redress perceived deficiencies in surgeons' approach to national data collection and audit3 by producing unambiguous guidelines on data collection and clinical audit in cardiac surgical units (see www.scts.org) and by debating how to measure their clinical performance.

After detailed discussion, the society agreed to institute the collection of data on surgeon specific activity and in-hospital mortality for several index procedures and to use a stringent set of limits to initiate an internal assessment. An annual mortality of greater than 2 SD above the mean was set as the trigger for a review by local clinical governance. This was intended to be a constructive process, not a trigger for criticism, blame, or ill considered actions. The problem with this approach is that there will always be 2.5% of consultants under review.
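The fixed proportion under review follows from the shape of the distribution rather than from anyone's performance. The sketch below is illustrative only: it assumes that surgeons' annual mortality rates are roughly normally distributed (an assumption made here, not stated in the text), and the function name is hypothetical. Under that assumption the 2.5% figure corresponds to the conventional 1.96 SD cut-off, while a cut-off of exactly 2 SD flags slightly fewer.

```python
import math


def upper_tail_fraction(z: float) -> float:
    """Fraction of a standard normal distribution lying more than z SD above the mean."""
    return 0.5 * math.erfc(z / math.sqrt(2))


# Assumption: annual mortality rates are approximately normally distributed.
for z in (1.96, 2.0):
    share = 100 * upper_tail_fraction(z)
    print(f"cut-off at {z} SD: about {share:.2f}% of surgeons flagged by chance alone")
```

Whatever the cut-off, some surgeons will cross it in any year purely through random variation, which is why the trigger was framed as a prompt for review rather than a judgment.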

In-hospital mortality was chosen as a performance measure because it was understandable, easy to measure, could be validated, and included all patients who died in hospital (not just those within a certain time frame). Furthermore, it was used by all public reporting systems in the United States.

Index procedures of isolated, first time coronary surgery, lobectomy for lung cancer,4 and correction of aortic coarctation or isolated ventricular septal defect repair were identified. But the collected data were only for activity and mortality, which did not allow for casemix adjustment.

In 1998, when the decision to collect these data was taken, cardiac surgeons were anxious. They were fearful that in the shadow of what had happened at Bristol chief executives would have a low threshold for suspension, which could unjustly derail the careers of perfectly competent surgeons. Nevertheless, such was the recognition of the importance of this venture that voluntary compliance among consultant surgeons for individual data submission has been 100% from that time.

From individual surgeons' data and subsequent reviews, two things have been learnt. Firstly, little practical relation exists between volume and mortality. Detailed statistical analysis shows a statistically significant volume effect in which a 20% increase in workload is associated with a reduction of one 20th in operative mortality (5% relative reduction, 95% confidence interval 2% to 8%). This translates to a reduction in operative mortality from 2% to 1.9%, which is negligible in practical terms. Secondly, when surgeons have been reviewed, issues of process and organisation, rather than technical surgical ability, have usually been the underlying problem.
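The arithmetic behind the volume effect can be checked directly. The sketch below applies the quoted relative reduction and its confidence bounds to a 2% baseline; the function name is hypothetical, and applying the confidence bounds to the same baseline is an illustrative step not taken in the text.

```python
def mortality_after_relative_reduction(baseline_pct: float, relative_reduction: float) -> float:
    """Apply a relative reduction (0.05 means 5%) to a baseline mortality given in percent."""
    return baseline_pct * (1.0 - relative_reduction)


BASELINE_PCT = 2.0  # baseline operative mortality quoted in the text

for label, reduction in (
    ("point estimate", 0.05),
    ("lower confidence bound", 0.02),
    ("upper confidence bound", 0.08),
):
    new_pct = mortality_after_relative_reduction(BASELINE_PCT, reduction)
    # Absolute difference expressed as deaths per 1000 operations.
    avoided_per_1000 = (BASELINE_PCT - new_pct) * 10
    print(f"{label}: {new_pct:.2f}% mortality, {avoided_per_1000:.1f} fewer deaths per 1000 operations")
```

At the point estimate, a 20% increase in workload corresponds to about one fewer death per 1000 operations, which is why the effect is statistically detectable but of little practical weight.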

Why publish results on individual surgeons?

A detailed analysis by the Nuffield Trust has shown that the arguments for and against publication are finely balanced.5 The reason for publication determines the way such data are presented. The two key reasons are to facilitate patient choice and to demonstrate safety. Publishing for patient choice requires detailed, risk adjusted tables of outcome published in a comparative fashion. Publishing to indicate whether a surgeon is safe or not requires agreeing a threshold of unacceptable mortality and then showing where each individual surgeon's results lie relative to that threshold. This is analogous to the blood alcohol level test for driving: a driver is either above or below the agreed or legal limit.

The comparative cardiac surgery reporting programmes in Pennsylvania, New Jersey, and New York have been well publicised. The claims are that these systems are transparent and that in New York the associated scrutiny has resulted in a demonstrable reduction in postoperative mortality.6-9 Counter claims suggest that this reduction in mortality is no greater than that seen across the rest of the United States and that in a litigious climate the data require protracted, detailed auditing and validation with the result that, when finally published three years later, the data are no longer relevant. Furthermore, there is a feeling in the US cardiac surgery community that an unintended negative consequence of public disclosure is that surgeons may be protecting their results by avoiding higher risk cases if they feel that their results are drifting into a range that might attract unnecessary yet easily avoidable scrutiny.10-13 The improvement in mortality is easy to show. The avoidance of high risk surgery is less easy to show because of the subjective and immeasurable nature of the clinical decision making process in these complex patients. This is a real irony because the evidence suggests that patients are the one group who pay little attention to these data. What they really want is an operation in a hospital close to home and as soon as possible.14-16

Although the surgeon plays an important role in surgical outcome, so do the anaesthetist, the intensive care physician, and the intensive care nurse. Surgical results are also influenced by the socioeconomic status of the local population; severity of cardiac illness; prevalence of comorbidities; threshold of referral from both the general practitioner and the cardiologist; threshold of acceptance by the surgeons; standards of anaesthesia, surgery, and intensive care; adequacy of facilities and staffing levels; attitude to training; interpersonal relationships between staff; and the geographical layout of the unit (for example, in some units the wards are so far from the theatre and intensive care unit that surgeons have no time to check up on ward patients between surgery cases). So the concept of blaming the surgeon was perceived as unfair.17

These concerns have been reflected in the decision by the Veterans Administration (the biggest US healthcare provider) to discourage the generation of surgeon specific outcomes. The administration believes the performance of a surgeon cannot be separated from that of his or her institution as quality is highly dependent on institutional systems.18,19 Others argue that it is the doctors who are best placed to change institutional processes that influence outcome and they are therefore a logical target.20

Public disclosure of hospital and surgeon specific data in other specialties has not been well publicised but will gain increasing prominence.21,22

We thought carefully about ways to present the data in the United Kingdom to avoid some of the pitfalls of the US models. We agreed we would base any risk adjusted comparative analyses on lower risk cases alone, leaving surgeons able to tackle more complex and difficult cases without unnecessary apprehension. The wisdom of this strategy was recently highlighted by a study in the BMJ confirming that risk stratification systems that may be good at predicting risk in large institutional groups of patients are much less reliable in high risk cases at the level of an individual surgeon because they tend to “under-predict” for higher risk groups. More importantly this study defined the level of predicted risk above which we should exclude patients from comparative analyses.23

The national service framework for coronary heart disease

The national service framework for coronary heart disease, launched in early 2000, included clear recommendations for comparative audit based on the Society of Cardiothoracic Surgeons' clinical dataset.24 The framework led to a national coronary heart disease information strategy,25 which released funds and mandated collection of this dataset through the National Clinical Audit Support Programme under the jurisdiction of the Commission for Health Improvement (now the Healthcare Commission).26 The vision was to harmonise data collection between cardiology, cardiac surgery, and other administrative systems so that everyone had ownership of, and was working from, the same base dataset and the same definitions.

Since 1996 the society has also been collecting comprehensive data on anonymised individual patients from an increasing number of units throughout the United Kingdom that would allow for casemix adjustment. But the data are not yet good enough to allow for meaningful comparisons of units, let alone surgeons. The Nuffield Trust (United Kingdom) and Rand (United States) did a rigorous, independent review of the quality of data in the clinical databases of 10 units in England, and this showed serious but remediable deficiencies in data quality. The review has led to a series of recommendations on data collection, including the requirement for a “permanent cycle of independent external monitoring” and “validation by an independent source” before release.27,28

As part of the national service framework, data collection in England would shift from the Society of Cardiothoracic Surgeons to the central cardiac audit database, part of the National Clinical Audit Support Programme in the NHS Information Authority. The added value would be that this system would provide mortality tracking through the Office for National Statistics. This would enable the society to start analysing and understanding the factors influencing long term survival rather than focusing solely on early postoperative mortality. This is particularly relevant given the observation that the hazard of early death after coronary artery surgery remains raised for 60-90 days.29 This should lead to an understanding of which kinds of patient benefit most from which operation and so contribute substantially to the overall quality of care and more specifically to the basis of informed consent.

The price the surgical community had to pay for these long term benefits was the publication of individual surgeons' results: the first set of results would be released in some form by the end of 2004. But to retain the confidence of all parties—surgeons, the public, and the healthcare regulators—the project would be overseen jointly by the surgical community, the then Commission for Health Improvement, and the Department of Health.

This was an ambitious programme. The society's dataset had to be changed to accommodate standards on NHS data; units had to be connected to the central cardiac audit database through secure connections; and the transmission specifications for the clinical data required standardisation and testing. Locally, data managers were appointed, and networked computer systems were put in place. The first data trickled into the central cardiac audit database in October 2003, too late for the production of validated, risk adjusted, surgeon specific results in 2004.

So the society began to consider other options. In October 2002, it had published unadjusted mortality for coronary and aortic valve surgery for every unit in the United Kingdom. But it had also been collecting individual surgeons' unadjusted mortality data for some years as part of its quality assurance programme. Could it analyse and present these data constructively?

Can crude mortality be usefully presented?

Tables 1 and 2 show that most deaths in coronary surgery occur in high risk patients, however they are stratified. Because of this casemix influence it would not be sensible to publish unadjusted mortality by surgeon. But crude mortality could be used to show that surgeons lie within or outside a certain predefined limit.

Table 1.

Observed versus predicted mortality for 2001-3 using a nine variable Bayes model built on 2000 data

| Bayes score (%)* | Observed: alive | Observed: deaths | Observed: total | Observed mortality (%) | Predicted deaths | Predicted mortality (%) |
|---|---|---|---|---|---|---|
| <1.0% | 20 467 | 91 | 20 558 | 0.4 | 144.7 | 0.7 |
| 1.0-1.9% | 23 834 | 271 | 24 105 | 1.1 | 339.2 | 1.4 |
| 2.0-2.9% | 9913 | 217 | 10 130 | 2.1 | 245.7 | 2.4 |
| 3.0-4.9% | 7656 | 262 | 7918 | 3.3 | 302.3 | 3.8 |
| 5.0-9.9% | 4288 | 293 | 4581 | 6.4 | 307.8 | 6.7 |
| >9.9% | 1765 | 333 | 2098 | 15.9 | 373.7 | 17.8 |
| All | 67 923 | 1467 | 69 390 | 2.1 | 1713.3 | 2.5 |

The model takes account of age, body surface area, diabetes, hypertension, left ventricular function, the presence of left main coronary disease, renal disease, and previous heart surgery.

The higher the score, the higher the risk of death for the patient.
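How strongly deaths concentrate in the higher risk strata can be read off table 1 with a few lines of arithmetic. In the sketch below the counts are copied from table 1; treating a Bayes score of 3.0% or more as "high risk" is an assumption made here for illustration, and the function and variable names are hypothetical.

```python
# (stratum label, observed deaths, total patients), copied from table 1
TABLE_1 = [
    ("<1.0%", 91, 20558),
    ("1.0-1.9%", 271, 24105),
    ("2.0-2.9%", 217, 10130),
    ("3.0-4.9%", 262, 7918),
    ("5.0-9.9%", 293, 4581),
    (">9.9%", 333, 2098),
]

# Assumption for illustration: strata with a Bayes score of 3.0% or more count as high risk.
HIGH_RISK_STRATA = {"3.0-4.9%", "5.0-9.9%", ">9.9%"}


def high_risk_shares(rows, high_risk_labels):
    """Return (share of all patients, share of all deaths) falling in the high risk strata."""
    total_deaths = sum(deaths for _, deaths, _ in rows)
    total_patients = sum(total for _, _, total in rows)
    hr_deaths = sum(deaths for label, deaths, _ in rows if label in high_risk_labels)
    hr_patients = sum(total for label, _, total in rows if label in high_risk_labels)
    return hr_patients / total_patients, hr_deaths / total_deaths


patient_share, death_share = high_risk_shares(TABLE_1, HIGH_RISK_STRATA)
print(f"High risk strata: {patient_share:.1%} of patients, {death_share:.1%} of deaths")
```

On this definition roughly a fifth of patients account for about three fifths of deaths, so a surgeon's crude mortality depends heavily on how many such patients he or she accepts.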

Table 2.

Observed versus predicted mortality for 2001-3 using a modified EuroSCORE* to maximise the number of scorable patients

| EuroSCORE | Observed: alive | Observed: deaths | Observed: total | Observed mortality (%) | Predicted deaths | Predicted mortality (%) |
|---|---|---|---|---|---|---|
| 0-1 | 11 998 | 53 | 12 051 | 0.4 | 60.3 | 0.5 |
| 2-3 | 14 450 | 134 | 14 584 | 0.9 | 367.4 | 2.5 |
| 4-5 | 10 784 | 194 | 10 978 | 1.8 | 486.6 | 4.4 |
| 6-7 | 4989 | 189 | 5178 | 3.7 | 330.2 | 6.4 |
| 8-9 | 1819 | 140 | 1959 | 7.1 | 163.6 | 8.4 |
| >9 | 953 | 195 | 1148 | 17.0 | 133.8 | 11.7 |
| All | 44 993 | 905 | 45 898 | 2.0 | 1542.0 | 3.4 |

EuroSCORE is an additive score based on logistic regression, which takes account of age, sex, previous heart surgery, pulmonary disease, extracardiac arteriopathy, neurological dysfunction, serum creatinine concentration, left ventricular function, unstable angina or recent myocardial infarction, urgency, and a critical preoperative state such as mechanical ventilation, inotropic support, intra-aortic balloon counterpulsation, or acute renal failure. The higher the score, the higher the risk of death for the patients.
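One way to read table 2 is as a ratio of observed to predicted deaths in each stratum, where a ratio below 1 means the score predicted more deaths than occurred and a ratio above 1 means it predicted fewer. The sketch below computes that ratio from the counts in table 2; the variable and function names are hypothetical, and the ratio itself is a standard summary that the text does not report.

```python
# (EuroSCORE stratum, observed deaths, predicted deaths), copied from table 2
TABLE_2 = [
    ("0-1", 53, 60.3),
    ("2-3", 134, 367.4),
    ("4-5", 194, 486.6),
    ("6-7", 189, 330.2),
    ("8-9", 140, 163.6),
    (">9", 195, 133.8),
]


def observed_to_predicted(rows):
    """Return a dict of observed/predicted death ratios per stratum, plus the overall ratio."""
    ratios = {label: observed / predicted for label, observed, predicted in rows}
    total_observed = sum(observed for _, observed, _ in rows)
    total_predicted = sum(predicted for _, _, predicted in rows)
    ratios["All"] = total_observed / total_predicted
    return ratios


for label, ratio in observed_to_predicted(TABLE_2).items():
    direction = "fewer deaths than predicted" if ratio < 1 else "more deaths than predicted"
    print(f"EuroSCORE {label}: observed/predicted = {ratio:.2f} ({direction})")
```

In these data the modified EuroSCORE predicts more deaths than were observed in every stratum except the highest, where it predicts fewer. This mismatch illustrates why a risk model that works for large groups can mislead when applied to an individual surgeon's high risk cases.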

It is reasonable that the threshold should be considerably higher when risk adjustment is not used than when it is. So how has the society set the limits? In industry, 99.9% confidence limits (3 SD) are commonly used for quality control processes for manufacturing, where there is control of raw materials. Sadly, this level of standardisation does not hold for cardiac surgery patients, who can be very heterogeneous. So the limits were widened to 99.99% (4 SD) to take this additional, inherent variation into consideration. The society proposes to use these limits as its basis for publication of individual surgeons' results. So, for the purposes of safety it will consider that any surgeon whose mortality is within the 99.99% (4 SD) limits over an aggregated three year period will have met transparent and defined standards. This means that any outlier is likely to be real: there is less than a 1 in 10000 chance that the society would assert that any particular surgeon with average case mix did not meet its standard. The deficiency is that these limits become very wide at lower volumes, opening the way to accusations of professional protectionism for surgeons with lower volumes.
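The coverage of limits set at a given number of standard deviations can be checked numerically. The sketch below assumes a normal distribution, which is an assumption made here for illustration; the function names are hypothetical. Under that assumption the quoted figures are rounded: 3 SD limits cover about 99.73% and 4 SD limits about 99.994% of values when both tails are counted.

```python
import math


def two_sided_coverage(k: float) -> float:
    """Probability that a normally distributed value lies within k SD of the mean."""
    return math.erf(k / math.sqrt(2))


def one_sided_exceedance(k: float) -> float:
    """Probability that a normally distributed value lies more than k SD above the mean."""
    return 0.5 * math.erfc(k / math.sqrt(2))


# Assumption: results vary around the mean approximately normally.
for k in (3.0, 4.0):
    coverage = two_sided_coverage(k)
    false_alarm = one_sided_exceedance(k)
    print(
        f"{k:.0f} SD limits: two-sided coverage {coverage:.5%}, "
        f"chance of falsely flagging high mortality about 1 in {1 / false_alarm:,.0f}"
    )
```

On this approximation the chance that a surgeon with truly average results crosses the upper 4 SD limit is well under 1 in 10 000, consistent with the claim above.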

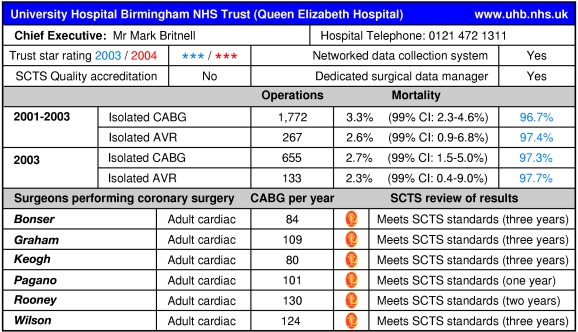

Surgeons who have been in post for fewer than three years will be analysed similarly for one or two years. Those whose mortality lies within 99.99% confidence limits will be said to “meet” the society's standards; those whose mortality lies below and outside these limits will be said to “exceed” the standards; and those whose mortality lies above these limits will be described as “not meeting” the standards (figure 1).

Figure 1.

Proposed method of presentation of surgeon specific data in cardiac surgery. Pagano and Rooney were recently appointed, so the full three years of data are not available
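The three categories described above, and the widening of the limits at low volumes, can be sketched as follows. This is an illustration under stated assumptions, not the society's method: the text does not say how the limits are calculated, so the sketch uses a normal approximation to the binomial distribution, a hypothetical national mean mortality of 2%, and hypothetical function names.

```python
import math


def control_limits(mean_rate: float, n_operations: int, z: float = 4.0):
    """Lower and upper limits at z SD around mean_rate (normal approximation, assumed)."""
    se = math.sqrt(mean_rate * (1.0 - mean_rate) / n_operations)
    return max(0.0, mean_rate - z * se), min(1.0, mean_rate + z * se)


def classify(deaths: int, n_operations: int, mean_rate: float, z: float = 4.0) -> str:
    """Place a surgeon's crude mortality in one of the three categories used in the text."""
    lower, upper = control_limits(mean_rate, n_operations, z)
    rate = deaths / n_operations
    if rate > upper:
        return "not meeting"
    if rate < lower:
        return "exceed"
    return "meet"


MEAN_RATE = 0.02  # hypothetical national mean mortality, for illustration only

for n in (100, 300, 600, 2000):
    lower, upper = control_limits(MEAN_RATE, n)
    print(f"{n:>4} operations: limits {lower:.2%} to {upper:.2%}")

print(classify(deaths=6, n_operations=300, mean_rate=MEAN_RATE))
print(classify(deaths=30, n_operations=300, mean_rate=MEAN_RATE))
```

With these assumptions the lower limit is zero until the caseload is large, so at typical three year volumes a surgeon cannot "exceed" the standard, and the upper limit for a low volume surgeon sits several times above the mean. Both features reflect the deficiency the authors acknowledge.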

The Healthcare Commission is taking a similarly cautious statistical approach in its clinical indicator of “deaths following a heart bypass operation,” which contributes to the balanced scorecard component of the annual star ratings for hospitals. From this year the indicator will be based on three years' data derived from hospital episode statistics, with possible expansion of the control limits to allow for any observed “overdispersion” arising from inadequate risk adjustment.30

The use of data that are not risk adjusted is still very controversial, but their value is being increasingly recognised. The use of a single risk adjusted number to summarise a surgeon's results runs the risk of lending a level of spurious credibility to an analysis that does not take into account the impact of influences that are not patient related. To many, the number will simply represent the final analysis.31,32 On the other hand, data that are not risk adjusted simply say, “Take a closer look at the bigger picture.” They inevitably invoke a review process of which detailed, risk stratified analysis is only a part. No conclusions can be drawn until a full review has taken place.

This sort of data cannot contribute to patient choice. So patient choice will be driven by other considerations, but patients will know the society is constantly reviewing its results without fear or favour.

When these results are published later this year, medicine in the United Kingdom will have crossed a threshold into a new era. Cardiothoracic surgeons will have shown that it is possible for a surgical specialty to review its own performance at an individual clinician level by professional consensus. This system is not perfect; it is a first step, which, in the words of Alan Milburn in 2003, when he was secretary of state for health, has “opened a door which other branches of medicine will need to enter.” Most importantly, cardiac surgeons will have opened a more general debate that will revolve around the balance between the relative influence of individual physicians and institutional influences on patient outcomes and how this relation translates to transparent public accountability.

The final question is whether, with transparent systems in place to maintain standards, it is necessary to publish a list of names, or whether the public good can be served just as well by the knowledge that appropriate mechanisms are in place and independently regulated.

Summary points

Measurement of outcomes from medical or surgical interventions is part of good practice

Knowing where those outcomes lie with respect to others is an individual professional responsibility

Professional bodies can help by providing benchmarking

Publication of national and institutional results is right and proper

Publication of individuals' results remains controversial because of the potential, unintended negative effects and increasing recognition that individuals' results are strongly influenced by institutional influences that may impinge differently on different individuals

The utility of such publications depends on the relation of the outcome to quality of care, the ability to cater for casemix, and whether the publication is designed to facilitate patient choice or show consistency of standards

BK is consultant cardiothoracic surgeon at Queen Elizabeth Hospital, Birmingham, and coordinator of the national adult cardiac surgical database. DS is a senior statistician at the MRC Biostatistics Unit, Institute of Public Health, Cambridge. JR is consultant cardiothoracic surgeon at St Thomas' Hospital, London. PM is consultant cardiothoracic surgeon at the London Chest Hospital, London. CH is consultant cardiothoracic surgeon at the Freeman Hospital, Newcastle upon Tyne.

Contributors: BK drafted the manuscript, managed the collection of the surgeon specific data, and helped with analysis. DS gave statistical advice on how to analyse the surgeon specific data. AB merged and checked the data and helped with analysis. JR, PM, and CH helped design the methodology of analysis and the presentation of surgeon specific data and refine the manuscript. BK is the guarantor.

Funding: This work was funded entirely through membership subscription to the Society of Cardiothoracic Surgeons of Great Britain and Ireland.

Competing interests: BK is a Commissioner on the Healthcare Commission and coordinator for the National Adult Cardiac Surgical Database and UK Cardiac Surgical Register for the Society of Cardiothoracic Surgeons of Great Britain and Ireland. DS is a statistical adviser to the Healthcare Commission.

References

- 1. Learning from Bristol: the report of the public inquiry into children's heart surgery at the Bristol Royal Infirmary 1984-1995. www.bristolinquiry.org.uk/final_report/index.htm (accessed 9 August 2004).

- 2. Chassin MR, Hannan EL, DeBuono BA. Benefits and hazards of reporting medical outcomes publicly. New Engl J Med 1996;334: 394-8.

- 3. Keogh B, Dussek J, Watson D, Magee P, Wheatley D. Public confidence and cardiac surgical outcome. BMJ 1998;316: 1759-60.

- 4. Treasure T, Utley M, Bailey A. Assessment of whether in-hospital mortality for lobectomy is a useful standard for the quality of lung cancer surgery: retrospective study. BMJ 2003;327: 73-5.

- 5. Marshall M, Shekelle P, Brook R, Leatherman S. Dying to know: public release of information about quality of healthcare. London: Nuffield Trust and Rand, 2000.

- 6. Hannan EL, Kilburn H Jr, Racz M, Shields E, Chassin MR. Improving the outcomes of coronary artery bypass surgery in New York state. JAMA 1994;271: 761-6.

- 7. Hannan EL, Siu AL, Kumar D, Kilburn H Jr, Chassin MR. The decline in coronary artery bypass graft surgery mortality in New York state: the role of surgeon volume. JAMA 1995;273: 209-13.

- 8. Hannan EL, Kumar D, Racz M, Siu AL, Chassin MR. New York state's cardiac surgery reporting system: four years later. Ann Thorac Surg 1994;58: 1852-7.

- 9. Chassin MR, Hannan EL, DeBuono BA. Benefits and hazards of reporting medical outcomes publicly. New Engl J Med 1996;334: 394-8.

- 10. Omoigui N, Annan K, Brown K, Miller D, Cosgrove D, Loop F. Potential explanation for decreased CABG related mortality in New York state: outmigration to Ohio. Circulation 1994;90: I93 [abstract].

- 11. Schneider E, Epstein A. Influence of cardiac surgery performance reports on referral practices and access to care. New Engl J Med 1996;335: 251-6.

- 12. Dranove D, Kessler D, McClellan M, Satterthwaite M. Is more information better? The effects of report cards on health care providers. National Bureau of Economic Research. (Working paper 8697.) www.nber.org/papers/w8697 (accessed 9 August 2004).

- 13. Burack J, Impellizzeri P, Homel P, Cunningham J. Public reporting of surgical mortality: a survey of New York state cardiothoracic surgeons. Ann Thorac Surg 1999;68: 1195-200.

- 14. Schneider E, Epstein A. Use of public performance reports. A survey of patients undergoing cardiac surgery. JAMA 1998;279: 1638-42.

- 15. Shahian D, Yip W, Westcott G, Johnson J. Selection of a cardiac surgery provider in the managed care era. J Thorac Cardiovasc Surg 2000;120: 978-89.

- 16. Schneider EC, Lieberman T. Publicly disclosed information about the quality of healthcare: response of the US public. Qual Health Care 2001;10: 96-103.

- 17. Keogh B. Facts of life the figures can hide. Times 2001. Nov 19.

- 18. Khuri SK. Quality, advocacy, healthcare policy and the surgeon. Ann Thorac Surg 2002;74: 641-94.

- 19. Albert A, Walter J, Arnrich B, Hassanein W, Rosendahl U, Bauer S, et al. On-line variable life-adjusted displays with internal and external risk-adjusted mortalities. A valuable method for benchmarking and early detection of unfavourable trends in cardiac surgery. Eur J Cardiothorac Surg 2004;25: 312-9.

- 20. Chassin MR. Improving the quality of care. Part 3: improving the quality of care. N Engl J Med 1996;335: 1060-3.

- 21. Marshall M, Shekelle P, Leatherman S, Brook R. The public release of performance data. What do we expect to gain? A review of the evidence. JAMA 2000;283: 1866-74.

- 22. Hannan E, Radzyner M, Rubin D, Dougherty J, Brennan M. The influence of hospital and surgeon volume on in-hospital mortality for colectomy, gastrectomy and lung lobectomy in patients with cancer. Surgery 2002;131: 6-15.

- 23. Bridgewater B, Grayson A, Jackson M, Brooks N, Grotte G, Keenan D, et al. Surgeon specific mortality in adult cardiac surgery: comparison between crude and risk stratified data. BMJ 2003;327: 13-7.

- 24. Department of Health. Coronary heart disease: national service framework for coronary heart disease: modern standards and service models. London: DoH, 2000. (Search at www.dh.gov.uk/).

- 25. Department of Health. Coronary heart disease information strategy. London: DoH, 2001. www.dh.gov.uk/PolicyAndGuidance/InformationPolicy/InformationSupportingNSFAndNCASP/InformationPolicyCoronaryHeartDisease/fs/en?CONTENT_ID=4015656&chk=HUnWUQ (accessed 9 August 2004).

- 26. National clinical audit support programme: support for national service frameworks and clinical governance. www.dh.gov.uk/assetRoot/04/05/95/61/04059561.pdf (accessed 9 August 2004).

- 27. Fine L, Keogh B, Orlando M, Cretin S, Gould M. Improving the credibility of information on healthcare outcomes. London: Nuffield Trust, 2003.

- 28. Fine L, Keogh B, Cretin S, Orlando M, Gould M. How to evaluate and improve the quality and credibility of an outcomes database: validation and feedback study on the UK Cardiac Surgery Experience. BMJ 2003;326: 25-8.

- 29. Sergeant P, Blackstone E, Meyns B. Validation and interdependence with patient-variables of the influence of procedural variables on early and late survival after CABG. KU Leuven coronary surgery program. Eur J Cardiothorac Surg 1997;12: 1-19.

- 30. Healthcare Commission. Clinical indicator. Deaths following a heart bypass operation. 2004. http://ratings.healthcarecommission.org.uk/indicators_2004/trust/indicator/indicatordescriptionshort.asp?indicatorid=1400 (accessed 9 August 2004).

- 31. Shahian D, Normand S, Torchiana D, Lewis SM, Pastore JO, Kuntz RE, et al. Cardiac surgery report cards: comprehensive review and statistical critique. Ann Thorac Surg 2001;72: 2155-68.

- 32. Iezzoni L. The risks of risk adjustment. JAMA 1997;278: 1600-11.