Keywords: saccade, remapping, space constancy, motion integration

Abstract

Object tracking across eye movements is thought to rely on presaccadic updating of attention between the object's current and its “remapped” location (i.e., the postsaccadic retinotopic location). We report evidence for a bifocal, presaccadic sampling between these two positions. While preparing a saccade, participants viewed four spatially separated random dot kinematograms, one of which was cued by a colored flash. They reported the direction of a coherent motion signal at the cued location while a second signal occurred simultaneously either at the cue's remapped location or at one of several control locations. Motion integration between the signals occurred only when the two motion signals were congruent and were shown at the cue and at its remapped location. This shows that the visual system integrates features between both the current and the future retinotopic locations of an attended object and that such presaccadic sampling is feature specific.

NEW & NOTEWORTHY

We show behavioral evidence for motion integration between the current and the future retinotopic locations of a salient object just before a saccade. This presaccadic integration occurs only when the features at the two locations are congruent, in other words, only when the information from the target's future location matches the value it is expected to have once the target actually gets there. These results suggest the existence of a feature-gated integration occurring before saccades.

Our visual system needs to integrate information about different features and locations to form a continuous and comprehensive experience of our surrounding world (Treisman and Gelade 1980). Elements of the visual scene are represented by different feature-specific receptive fields in retinotopically organized visual areas (Gardner et al. 2008; Sereno et al. 1995; Tanigawa et al. 2010; Van Essen and Zeki 1978; Van Essen et al. 1981). Therefore, every time the eyes move, the visual system has to update the locations of objects of interest in retinotopic coordinates to keep track of the objects' features. Earlier work has demonstrated that humans can track spatial (Boi et al. 2011; Deubel et al. 1998; Jonikaitis et al. 2013; Jonikaitis and Belopolsky 2014; Parks and Corballis 2010; Pertzov et al. 2010; Szinte et al. 2012) and feature-based information (Deubel 1991; Deubel et al. 2002; Harrison and Bex 2014; Hollingworth et al. 2008; Verfaillie et al. 1994) across eye movements. However, whether and how we integrate feature information from different locations across eye movements is debated (Knapen et al. 2010; Mathôt and Theeuwes 2013; Melcher 2007; Melcher and Morrone 2003; Morris et al. 2010).

Mechanisms proposed to explain transsaccadic feature integration rely on either presaccadic predictions (Cavanagh et al. 2010a) or top-down postsaccadic strategies (Boi et al. 2011; Deubel et al. 1998; Tatler and Land 2011). Postsaccadic strategies can be thought of as a search for task-relevant features or objects after each eye movement. Presaccadic predictions, on the other hand, propose that the visual system anticipates the consequences of impending saccades by predicting where task-relevant targets will be after an eye movement (Cavanagh et al. 2010a; Duhamel et al. 1992; Wurtz 2008). This prediction mechanism, called remapping, focuses on tracking the spatial location of targets across eye movements, but the extent to which feature information is carried over from the target's current to its remapped location is less clear. Some investigators have suggested that this feature transfer may occur even before the saccade (Harrison et al. 2013; Melcher 2007), reporting an influence of the presaccadic target's features on postsaccadic probes presented at the target's predicted location (the remapped or spatiotopic location). Although these results are variable, they suggest that transsaccadic memory for visual content is abstract and limited (Carlson-Radvansky and Irwin 1995; Melcher and Morrone 2003; Pollatsek et al. 1984; Verfaillie et al. 1994). Moreover, other studies have either failed to find such feature-specific interactions (Knapen et al. 2010; Mathôt and Theeuwes 2013; Morris et al. 2010) or found only limited feature-specific effects (for example, Subramanian and Colby 2014; Yao et al. 2016).

In the current study, we measured the integration of feature-based information (motion direction) presented at two locations, the current and the remapped location of an attended target, before the execution of the saccade (rather than across the saccade, as in Melcher and Morrone 2003). Integration in this case refers to the summation of visual signals across different retinotopic positions, similar to the integration across sources modeled with Bayesian theories in cue combination (Landy et al. 1995) or multisensory integration studies (Ernst and Banks 2001; Hillis et al. 2002). This contrasts with the previous reports described above, which consider integration as a transfer of feature information from the target's location to its remapped location. We report evidence for a bifocal sampling of feature information by showing that an attended motion signal can be integrated presaccadically with another motion signal presented at its remapped location. Critically, this integration is both spatially specific and feature specific, suggesting that the visual system activates a feature-specific integration process in anticipation of the retinal consequences induced by the impending saccade. This constitutes the first evidence of a mechanism simultaneously integrating features across different positions in space, provided they are expected to originate from the same object across the saccade.
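To make the cue-combination analogy concrete, the sketch below implements the standard reliability-weighted (maximum-likelihood) rule for combining two independent Gaussian estimates, together with the sensitivity gain an ideal observer would obtain by optimally summing two independent signals (sensitivities add in quadrature). It illustrates the general principle under the assumption of independent Gaussian noise; it is not an analysis performed in this study, and the function names and inputs are hypothetical.

```python
import math


def combine_cues(mu1, sigma1, mu2, sigma2):
    """Reliability-weighted (maximum-likelihood) combination of two independent
    Gaussian estimates of the same quantity. Returns (mean, SD)."""
    r1, r2 = 1.0 / sigma1**2, 1.0 / sigma2**2  # reliability = inverse variance
    w1, w2 = r1 / (r1 + r2), r2 / (r1 + r2)     # weights sum to 1
    mu = w1 * mu1 + w2 * mu2
    sigma = math.sqrt(1.0 / (r1 + r2))          # never larger than either input SD
    return mu, sigma


def ideal_dprime_gain(d1, d2):
    """Gain over d1 alone when two independent signals are summed optimally:
    d'_combined = sqrt(d1**2 + d2**2), expressed as a ratio to d1."""
    return math.hypot(d1, d2) / d1
```

For two equally reliable signals, the ideal gain is √2 ≈ 1.41; the more reliable estimate always receives the larger weight.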

MATERIAL AND METHODS

Participants

Eleven students of the Ludwig-Maximilians-Universität (LMU) München (9 in the saccade task and 8 in the fixation task, with 6 participating in both tasks; age 21–31 yr, 6 women, 10 right-eye dominant, 2 authors) participated in the experiments and received 9 euros per hour of testing. All participants except the two authors were naive as to the purpose of the study, and all had normal or corrected-to-normal vision. The experiments were undertaken with the understanding and written informed consent of all participants and were carried out in accordance with the Declaration of Helsinki. Experiments were designed according to the ethical requirements specified by the LMU München and in line with an institutional review board ethics approval for experiments involving eye tracking.

Setup

Participants sat in a quiet and dimly illuminated room, with their head positioned on a chin and forehead rest. The experiment was controlled by an Apple iMac Intel Core i5 computer (Cupertino, CA). Manual responses were recorded via a standard keyboard. The dominant eye's gaze position was recorded and made available online with the use of an EyeLink 1000 with desktop mount (SR Research, Osgoode, ON, Canada) at a sampling rate of 1 kHz. The experimental software controlling the display and the response collection as well as the eye tracking was implemented in MATLAB (The MathWorks, Natick, MA) using the Psychophysics (Brainard 1997; Pelli 1997) and EyeLink toolboxes (Cornelissen et al. 2002). Stimuli were presented at a viewing distance of 60 cm, on a 21-in. gamma-linearized SONY GDM-F500R CRT screen (Tokyo, Japan) with a spatial resolution of 1,024 × 768 pixels and a vertical refresh rate of 120 Hz.
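The display parameters translate into frame counts and on-screen distances with simple arithmetic. The sketch below uses only the 120-Hz refresh rate and the 60-cm viewing distance given above and assumes a flat screen viewed perpendicularly at its center; converting to pixels would additionally require the visible screen width, which is not reported here.

```python
import math

REFRESH_HZ = 120       # CRT vertical refresh rate (Setup)
VIEW_DIST_CM = 60.0    # viewing distance (Setup)


def ms_to_frames(ms, refresh_hz=REFRESH_HZ):
    """Nearest whole number of video frames for a duration in ms."""
    return round(ms * refresh_hz / 1000.0)


def eccentricity_to_cm(deg, dist_cm=VIEW_DIST_CM):
    """On-screen distance from the fixation point for a given eccentricity,
    assuming a flat screen viewed perpendicularly at its center."""
    return dist_cm * math.tan(math.radians(deg))
```

For instance, the 100-ms motion signal spans 12 frames, the 83-ms base dot lifetime corresponds to about 10 frames, and the 11° saccade target lies roughly 11.7 cm from the ft on the screen.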

Procedure

The study was composed of a saccade and a fixation task tested in 4–5 experimental sessions (on different days) of about 60–90 min each (including breaks). These main tasks were preceded by their respective threshold tasks for each experimental session. Participants started with a training phase in which they were familiarized with the stimuli and the two different tasks. After the training phase, participants started each experimental session with one to two threshold blocks followed by four to five blocks of the saccade or fixation task. Participants ran a total of 3 blocks of the fixation task and 16 blocks of the saccade task. All participants who ran both tasks first completed the saccade task before starting the fixation task.

Saccade task.

Each trial began with participants fixating a central fixation target (ft) forming a black (∼0 cd/m2) and white (60 cd/m2) “bull's eye” (0.45° radius) on a gray background (30 cd/m2). Once the participant's gaze had been detected within a 2.0°-radius virtual circle centered on the ft for at least 200 ms, a random fixation period followed (500–750 ms in steps of 50 ms), after which a black (∼0 cd/m2) circle replaced the ft and the bull's eye jumped to one of four possible saccade target positions (st) located 11° right, left, up, or down from the ft. Participants were instructed to follow the bull's eye with their gaze as quickly and accurately as possible.

In addition to the ft and st, the display contained four random dot kinematograms (RDKs) centered halfway between the horizontal and vertical potential st locations (Fig. 1, eccentricity of the RDK center ∼8.5°). Each RDK was composed of half black (∼0 cd/m2) and half white (60 cd/m2) dots (10' radius), restricted to 2.5°-radius apertures. Dots moved in random directions at a constant speed of 5°/s (limited lifetime of 83 ms plus an exponentially distributed jitter with a mean of 67 ms). At different times following the appearance of the st (0–250 ms in steps of 50 ms), one or two of the RDKs became coherent for 100 ms, moving in one of the four cardinal directions (right, 0°; up, 90°; left, 180°; or down, 270°). The direction of each signal was selected randomly and independently, so that when two signals were presented, their directions were congruent on 1/4 of the trials and incongruent on 3/4 of the trials. We drew the motion direction of each dot from a circular normal (von Mises) distribution centered on the main motion direction, with a concentration κ analogous to the inverse of the variance of a normal distribution (as in Williams and Sekuler 1984, except for the limited lifetime of our random dots). κ was 0 (uniform distribution across all directions) while the RDKs moved randomly, and two values were chosen for the coherent motion signals: a lower level, s1, and a higher level, s2. These two κ values were determined in threshold tasks separately for the fixation and saccade conditions and individually for each participant: s1 produced 50% correct discrimination when presented in isolation at the cued location, whereas s2, also at the cued location, produced 87.5% correct discrimination (see Saccade and fixation threshold tasks). In the main tasks, s1 was always presented at the cued location; in two-signal trials, s2 was always presented at an uncued location.
Finally, in additional control trials during the saccade and fixation tasks, either s1 or s2 was presented alone at the cued location to check that the signal levels from the threshold trials produced the expected performance.
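The dot-direction and lifetime rules described above can be sketched with Python's standard library, which provides a von Mises sampler (`random.vonmisesvariate`) and an exponential sampler (`random.expovariate`). This is a hypothetical illustration, not the authors' MATLAB code: the number of dots per RDK is not reported, so `n_dots` is a free parameter, and rendering is omitted.

```python
import math
import random


def sample_dot_directions(n_dots, main_dir_deg, kappa, rng=random):
    """Draw dot directions (deg) from a von Mises distribution centered on the
    main direction; kappa = 0 gives a uniform distribution (incoherent motion)."""
    mu = math.radians(main_dir_deg)
    return [math.degrees(rng.vonmisesvariate(mu, kappa)) % 360.0 for _ in range(n_dots)]


def sample_lifetime_ms(base_ms=83.0, mean_jitter_ms=67.0, rng=random):
    """Limited dot lifetime: a fixed base plus an exponentially distributed jitter."""
    return base_ms + rng.expovariate(1.0 / mean_jitter_ms)


def circular_mean_deg(angles_deg):
    """Circular mean of a set of directions, in degrees within [0, 360)."""
    s = sum(math.sin(math.radians(a)) for a in angles_deg)
    c = sum(math.cos(math.radians(a)) for a in angles_deg)
    return math.degrees(math.atan2(s, c)) % 360.0
```

Setting `kappa=0` yields the incoherent background motion, whereas the participant-specific s1 and s2 values would be passed for the coherent signals.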

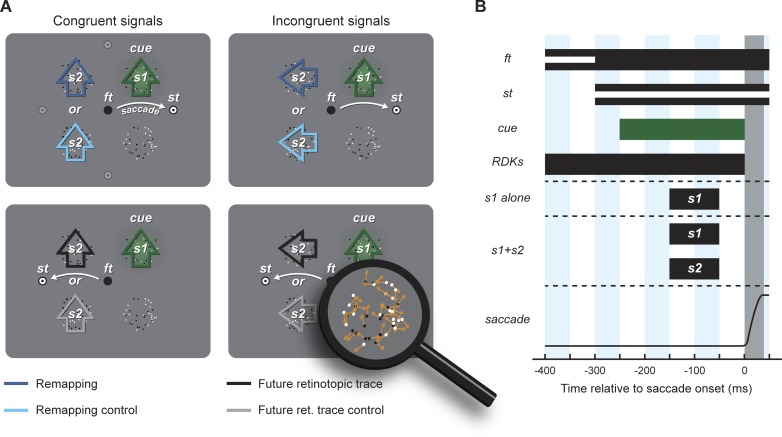

Fig. 1.

Experimental procedure. A: display setup and main conditions. Participants were instructed to saccade from the fixation target (ft) to the saccade target (st) that appeared at 1 of 4 possible cardinal directions (during a trial a single st was shown; light gray targets are shown here for illustration). At 4 locations equidistant from ft and the potential saccade targets, we presented 4 random dot kinematograms (RDKs) showing incoherent motion (see zoomed image, bottom right). At different times before saccade onset, motion briefly became coherent either at the cued location only (using signal level s1) or simultaneously at the cued location (s1) and another location (using signal level s2). One hundred milliseconds before the onset of the motion signal(s), one of the RDKs was cued by a green blob (cue). At the end of each trial, participants reported the motion direction at the cued location. Relative to the location of the cue and to the saccade direction, s2 appeared at the remapping location of the cue (dark blue arrow), at the future retinotopic trace location of the cue (black arrow), or at their respective control locations mirrored relative to the saccade vector (light blue and gray arrows, respectively). Moreover, because directions of signals were selected randomly and independently, s1 and s2 could have either congruent (left) or incongruent (right) directions. B: stimulus timing. At different times after a random fixation duration, the bull's eye at ft was replaced by a black dot together with the onset of the bull's eye at the st (see white lines). At different times after the appearance of the st (0–250 ms in steps of 50 ms), one (s1 alone) or two (s1+s2) RDKs became coherent simultaneously for 100 ms. One hundred milliseconds before the motion signal(s), one location was cued by a green blob. Everything except ft and st was erased upon online saccade detection.

One hundred milliseconds before the onset of the motion signal(s), we cued one location with a green Gaussian blob (5° radius, σ ∼1.7°, 80% contrast, mean luminance 30 cd/m2) presented in the background of one randomly selected RDK (i.e., the RDK partially occluded the blob). Stimulus onset asynchronies between the st and the onset of the motion signal(s) were selected to maximize the number of trials in which the signal(s) ended before saccade onset (mean saccade latency across participants, 385.6 ± 9.8 ms; median saccade latency, 367.3 ± 12.1 ms; means ± SE). All stimuli except ft and st were erased upon online saccade detection, which lagged the offline-detected saccade onset by 18.6 ± 0.5 ms on average, with all RDKs disappearing from the screen 41.4 ± 2.0 ms before saccade offset. Note that saccade latencies in this task were relatively long, reflecting both the difficulty of our dual task (motion discrimination during saccade preparation) and the experimental settings: 1) the ft did not jump to the st location but was replaced by a black dot; 2) the saccade target appeared unpredictably at one of four possible locations at a relatively large eccentricity (11°); and 3) the RDKs were composed of limited-lifetime dots, which increased the number of transients on the screen and therefore reduced the saliency of the st onset.
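The timing of a saccade-task trial, and the criterion later used to keep only signals ending shortly before the saccade (see Behavioral Data Analysis), can be summarized as follows. Times are in ms relative to st onset; the function names and the inclusive window boundaries are assumptions made for illustration.

```python
import random

FIX_PERIODS_MS = list(range(500, 751, 50))  # random fixation period before st onset
SOAS_MS = list(range(0, 251, 50))           # st onset -> motion-signal onset
CUE_LEAD_MS = 100                           # cue precedes motion onset by 100 ms
SIGNAL_DUR_MS = 100                         # coherent motion lasts 100 ms


def trial_timeline(rng=random):
    """Event times (ms) relative to saccade-target (st) onset for one trial."""
    soa = rng.choice(SOAS_MS)
    return {
        "fixation_period": rng.choice(FIX_PERIODS_MS),
        "cue_on": soa - CUE_LEAD_MS,   # negative when the cue precedes st onset
        "signal_on": soa,
        "signal_off": soa + SIGNAL_DUR_MS,
    }


def in_analysis_window(signal_off_ms, saccade_latency_ms, window_ms=150):
    """True if the motion signal ended within the last `window_ms` before saccade onset."""
    lead = saccade_latency_ms - signal_off_ms
    return 0 <= lead <= window_ms
```

With the median latency of about 367 ms, for example, a signal with a 200-ms SOA ends at 300 ms and falls inside the analysis window, whereas one with a 0-ms SOA does not.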

At the end of each trial, participants reported the main direction of the motion signal presented at the cued location using the keyboard (using the right, up, left, or down arrow key), followed by a positive-feedback sound in case of a correct response. They were told to ignore any motion signals they might detect at the uncued locations, but, interestingly, they consistently reported that they rarely or never experienced coherent motion signals anywhere other than at the cued location.

Around 83% of the trials contained two signals. In these trials, s1 was presented at the cue location and s2 was presented (with equal probability) at the remapping, the remapping control, the future retinotopic trace, or the future retinotopic trace control location. In a single trial, only two of these positions could be tested, depending on the saccade direction and the cue position (see Fig. 1A); s2 never appeared at the location mirroring the cue relative to the saccade direction (i.e., below the cue in Fig. 1A). Around 17% of the trials contained only one signal (s1 alone at the cue location, or s2 alone at the cue, remapping, remapping control, future retinotopic trace, or future retinotopic trace control location), each with the same probability. We included more two-signal than single-signal trials because we were particularly interested in comparing two-signal trials with one another (e.g., s1+s2 at remapping vs. s1+s2 at remapping control, see Fig. 2B), which we consider the fairest comparison because it rules out effects of probability summation. However, because some previous studies of motion integration (e.g., Melcher and Morrone 2003) compared single- with two-signal trials, we also included a substantial proportion of single-signal trials.
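The test locations follow from vector arithmetic on the cue position and the saccade vector: the remapped location lies at cue − saccade (where the cue will fall on the retina after the saccade), the future retinotopic trace at cue + saccade, and each control location is mirrored across the saccade axis. The sketch below assumes RDK centers at (±6°, ±6°), which gives the ∼8.5° eccentricity reported above, and snaps each computed point to the nearest RDK within one aperture radius; both choices, and the function names, are our assumptions.

```python
import math

RDK_CENTERS = [(6.0, 6.0), (-6.0, 6.0), (-6.0, -6.0), (6.0, -6.0)]  # assumed, deg
SACCADES = {"right": (11.0, 0.0), "up": (0.0, 11.0),
            "left": (-11.0, 0.0), "down": (0.0, -11.0)}


def nearest_rdk(point, tol=2.5):
    """RDK center closest to `point`, or None if none lies within `tol` deg."""
    best = min(RDK_CENTERS, key=lambda c: math.dist(c, point))
    return best if math.dist(best, point) <= tol else None


def mirror_across_saccade(point, saccade):
    """Reflect a point across the axis defined by the saccade vector (through ft)."""
    x, y = point
    return (x, -y) if saccade[1] == 0 else (-x, y)


def candidate_s2_locations(cue, saccade_dir):
    """Return the s2 positions that exist on screen for this cue and saccade."""
    sx, sy = SACCADES[saccade_dir]
    cx, cy = cue
    out = {}
    remap = nearest_rdk((cx - sx, cy - sy))  # postsaccadic retinotopic location of the cue
    trace = nearest_rdk((cx + sx, cy + sy))  # location in the direction of the saccade
    if remap:
        out["remapping"] = remap
        out["remapping control"] = mirror_across_saccade(remap, (sx, sy))
    if trace:
        out["future retinotopic trace"] = trace
        out["future retinotopic trace control"] = mirror_across_saccade(trace, (sx, sy))
    return out
```

With a rightward saccade, a cue at the upper-right RDK yields only the remapping pair, and a cue at the upper-left RDK yields only the future retinotopic trace pair, consistent with only two positions being testable per trial.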

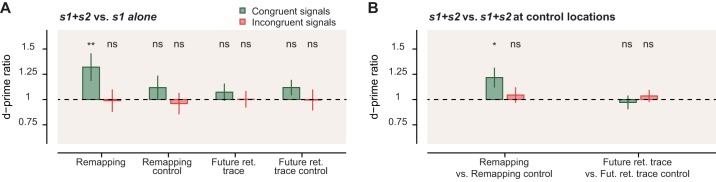

Fig. 2.

Motion integration. A: motion sensitivity gain (d′ ratio) for congruent (green) and incongruent (red) signal directions. Ratios were computed for each participant by dividing sensitivity (d′) in trials with 2 signals (e.g., s1 at the cue and s2 at remapping) by the sensitivity in single-signal trials (see material and methods). B: motion sensitivity gain computed as the ratio between trials with s1 and s2 presented together at the relevant location (“remapping” or “future retinotopic trace” of the cue) and s1 and s2 at their control locations (“remapping control” or “future retinotopic trace control”). Error bars represent SE (statistical significance: *P < 0.05; **P < 0.01; ns, nonsignificant).

Participants completed between 3,153 and 3,727 trials of the saccade task. Correct fixation as well as correct saccade landing within a 2.0°-radius virtual circle centered respectively on the ft and st were checked online. We also ensured that saccade trajectories did not cross the RDK locations. Trials with fixation breaks or incorrect saccades were immediately discarded and repeated at the end of each block in a random order (each participant repeated a total of 81 to 655 trials across all sessions).

Fixation task.

In the fixation task, participants were instructed to keep their eyes on the central ft throughout the trial. This task was identical to the saccade task except that the st never appeared. After a random period of fixation (400–650 ms), one randomly selected location was cued, followed 100 ms later by the presentation of one or two concurrent motion signals. Moreover, to match the saccade task, all stimuli except the ft were blanked between 0 and 150 ms after motion signal offset (approximately matching the stimulus offset times in the saccade task). As in the saccade task, participants reported the main direction of the motion signal presented at the cued location at the end of each trial.

Around 57% of the trials contained two signals. In these trials, s1 was presented at the cued location and s2 was equally likely to be presented at another location rotated by ±90° or 180° from the cued patch. Around 43% of the trials contained one signal only (s1 alone at the cue location, or s2 alone at the cue or at a location ±90° or 180° relative to the cued patch, with each of these having the same probability). In the fixation task, we did not expect any interaction when the two signals were presented at separate locations, and we therefore increased the proportion of trials with only a single signal to get a more stable baseline for the comparison of single-signal and two-signal trials.

Participants completed between 648 and 704 trials of the fixation task. Correct fixation within a 2.0°-radius virtual circle centered on the ft was checked online. Trials with fixation breaks were immediately discarded and repeated at the end of each block in a random order (each participant repeated a total of 0 to 56 trials).

Saccade and fixation threshold tasks.

To account for possible effects of task learning and to equate baseline performance for both s1 and s2 across participants, threshold blocks preceded the main task blocks (saccade or fixation task) at the beginning of each experimental session. The saccade and fixation threshold tasks matched their respective main tasks (same stimuli, timing, and instructions), except that in all trials only one motion signal was presented, always at the cued location. As in the main tasks, participants reported the main direction of the cued motion patch. Following a method of constant stimuli, the concentration κ of dot directions around the main motion direction varied randomly across trials from 0.1 (very dispersed) to 10 (very coherent) in nine linearly spaced steps.

Participants completed 2 blocks of 192 trials each in the saccade threshold task and 1 block of 192 trials in the fixation threshold task. As in the main tasks, correct fixation and saccade execution (in the saccade threshold task) were checked online, and incorrect trials were repeated at the end of each threshold block in a random order.

For each participant and experimental session individually, we determined two threshold values: the κ values leading to correct discrimination of the main motion direction in 50% (s1) and in 87.5% (s2) of the trials. To do so, we fitted cumulative Gaussian functions to the performance measured in the threshold blocks. The two threshold levels were then used in the main tasks. The s1 threshold level (50%) was chosen to allow either an increase or a decrease of discrimination performance without easily reaching ceiling levels. The second threshold level (87.5%) was set higher because s2 would be presented at uncued locations in s1+s2 trials, where performance was expected to be lower. Moreover, in the saccade threshold task, thresholds were defined separately for the two possible cue locations: in between the ft and st or in the direction opposite to the saccade. We used these values in the corresponding conditions of the saccade task.
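The threshold procedure can be sketched as fitting a cumulative Gaussian to proportion correct across the nine κ levels and inverting the fit at 50% and 87.5%. Two choices here are our assumptions rather than reported details: the function is scaled to run from chance (25% for four alternatives) to 100% with no lapse rate, and the fit is a coarse least-squares grid search standing in for whatever fitting routine was actually used.

```python
from statistics import NormalDist

KAPPA_LEVELS = [0.1 + i * (10.0 - 0.1) / 8 for i in range(9)]  # nine linear steps, 0.1 to 10
STD_NORMAL = NormalDist()


def psychometric(kappa, mu, sigma, guess=0.25):
    """Proportion correct: cumulative Gaussian scaled between chance (guess) and 1."""
    return guess + (1.0 - guess) * STD_NORMAL.cdf((kappa - mu) / sigma)


def fit_psychometric(kappas, p_correct, guess=0.25):
    """Least-squares grid search over (mu, sigma); a simple stand-in for a proper fit."""
    best = None
    for i in range(1, 201):      # mu from 0.05 to 10.0
        mu = i * 0.05
        for j in range(1, 101):  # sigma from 0.05 to 5.0
            sigma = j * 0.05
            sse = sum((psychometric(k, mu, sigma, guess) - p) ** 2
                      for k, p in zip(kappas, p_correct))
            if best is None or sse < best[0]:
                best = (sse, mu, sigma)
    return best[1], best[2]


def kappa_at(p_target, mu, sigma, guess=0.25):
    """Invert the fitted function: kappa giving the target proportion correct."""
    return mu + sigma * STD_NORMAL.inv_cdf((p_target - guess) / (1.0 - guess))
```

In this sketch, `kappa_at(0.5, ...)` and `kappa_at(0.875, ...)` return candidate s1 and s2 concentration levels for one participant and session.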

Data Preprocessing

Before analyzing the behavioral results, we inspected the recorded eye-position data offline. Saccades were detected on the basis of their velocity distribution (Engbert and Mergenthaler 2006) using a moving average over 20 subsequent eye-position samples. Saccade onset was defined as the time at which velocity exceeded the median of the moving average by 3 SDs for at least 20 ms. We included trials if correct fixation was maintained within a 2.0°-radius circle centered on the ft (all tasks), if a correct saccade started at the ft and landed within a 2.0°-radius circle centered on the st (saccade and saccade threshold tasks only), and if no blink occurred during the trial (all tasks). In total, we included 22,958 trials (88.4% of the online selected trials, 74.9% of all trials played) in the saccade task and 5,178 trials (97.1% of the online selected trials, 97.0% of all trials played) in the fixation task.
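A simplified version of the velocity-based onset criterion is sketched below. It computes sample-to-sample speed at 1 kHz, smooths it with a centered 20-sample moving average, and marks onset at the first sample of a run that stays above the median plus 3 SDs of the smoothed velocity for at least 20 ms. This is a simplification under our own assumptions (centered alignment, SD of the smoothed trace, the `within_radius` landing check), not a reimplementation of the Engbert and Mergenthaler (2006) algorithm.

```python
import math
import statistics


def detect_saccade_onset(x, y, fs_hz=1000, win=20, n_sd=3.0, min_dur_ms=20):
    """Sample index of saccade onset, or None. x, y: gaze position in deg."""
    # Sample-to-sample speed in deg/s.
    speed = [math.hypot(x[i + 1] - x[i], y[i + 1] - y[i]) * fs_hz
             for i in range(len(x) - 1)]
    # Centered moving average over `win` samples (truncated at the edges).
    h = win // 2
    smooth = []
    for i in range(len(speed)):
        seg = speed[max(0, i - h): i + h]
        smooth.append(sum(seg) / len(seg))
    thresh = statistics.median(smooth) + n_sd * statistics.stdev(smooth)
    min_samples = int(min_dur_ms * fs_hz / 1000)
    run = 0
    for i, v in enumerate(smooth):
        run = run + 1 if v > thresh else 0
        if run >= min_samples:
            return i - min_samples + 1  # first sample of the supra-threshold run
    return None


def within_radius(pos, center, radius=2.0):
    """Fixation/landing check: is `pos` within `radius` deg of `center`?"""
    return math.dist(pos, center) <= radius
```

Because of the smoothing, the detected onset can deviate by a few samples from the true movement onset.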

Behavioral Data Analysis

For each participant, we computed performance (percentage of correct discrimination of the cued motion signal) across trials in which the coherent motion signals ended in the last 150 ms before saccade onset in the saccade task, and across all trials in the fixation task. We next transformed performance to sensitivity (d′): d′ = z(hit rate) − z(false alarm rate), where hits were trials in which observers reported the correct signal direction (e.g., rightward response for rightward stimulus) and false alarms were trials in which observers reported that direction for any other signal direction (e.g., rightward response for leftward, upward, or downward stimulus). From these values we computed d′ ratios by dividing the sensitivity in one condition by that in another: for example, d′(s1+s2)/d′(s1 alone) to compare sensitivity in two-signal trials with sensitivity in single-signal trials, and d′(s1+s2 remapping)/d′(s1+s2 remapping control) to compare two-signal trials with s2 at the remapping vs. the remapping control location. Figures 2, 3, and 5B show averages across participants of the individual d′ ratios computed in this way. Figure 5A shows the average across participants of individual sensitivity (d′).
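The d′ definition above can be written for the four-alternative direction task as follows. Pooling false alarms over the three non-presented directions of every trial is one possible reading of the definition and is our assumption, as is the 1/(2N) correction that keeps rates of 0 or 1 from producing infinite z scores.

```python
from statistics import NormalDist

Z = NormalDist().inv_cdf  # z transform (inverse standard normal CDF)


def _clip(rate, n):
    # Keep rates away from 0 and 1 so z() stays finite (assumed 1/(2N) correction).
    return min(max(rate, 0.5 / n), 1.0 - 0.5 / n)


def dprime_4afc(stimuli, responses, n_alternatives=4):
    """d' = z(hit rate) - z(false-alarm rate), pooled over directions.

    Hit: response equals the stimulus direction. False alarm for direction d:
    response d on a trial whose stimulus was not d. Pooled over the
    (n_alternatives - 1) non-presented directions, FA = errors / (n * (k - 1)).
    """
    n = len(stimuli)
    hits = sum(s == r for s, r in zip(stimuli, responses))
    n_fa = n * (n_alternatives - 1)
    hit_rate = _clip(hits / n, n)
    fa_rate = _clip((n - hits) / n_fa, n_fa)
    return Z(hit_rate) - Z(fa_rate)


def dprime_ratio(d_num, d_den):
    """Sensitivity gain, e.g. d'(s1+s2) / d'(s1 alone); 1 means no change."""
    return d_num / d_den
```

With this pooling, chance performance (25% correct) gives d′ = 0, and 50% correct gives d′ ≈ 0.97.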

Fig. 3.

Motion integration relative to saccade onset. A: motion sensitivity gain (d′ ratio) computed by dividing sensitivity (d′) in trials with 2 signals by the sensitivity in single-signal trials for congruent (green) and incongruent (red) signal directions and for different signal offset times relative to the onset of the saccade (gray bar). B: motion sensitivity gain computed as the ratio between trials with s1 and s2 presented together at the relevant location (“remapping” or “future retinotopic trace” of the cue) and s1 and s2 at their control locations (“remapping control” or “future retinotopic trace control”) for congruent (green) and incongruent (red) signal directions and for different signal offset times relative to the onset of the saccade. Error bands represent SE; lines show cubic spline interpolation between the data points. Filled dots indicate statistical significance (*P < 0.05; **P < 0.01; ***P < 0.001).

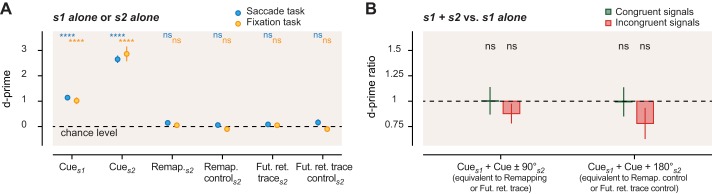

Fig. 5.

Single-signal and fixation control trials. A: motion sensitivity (d′) for the presentation of a single signal (s1 alone or s2 alone) at the cue (Cue s1, Cue s2), remapping (Remap. s2), remapping control (Remap. control s2), future retinotopic trace (Fut. ret. trace s2), or future retinotopic trace control (Fut. ret. trace control s2) location in both the saccade (blue dots) and the fixation task (orange dots). Note that s2 was presented at a higher signal level to compensate for reduced performance at the uncued locations in the s1+s2 trials of the main tasks (see material and methods), but it was also tested at the cued location to verify the threshold settings. For the fixation task, results are displayed for signals presented at spatial positions equivalent to those in the saccade task, now defined relative to the cue location, because without a saccade there is no true remapping or future retinotopic trace location. Under these conditions, the remapping and future retinotopic trace locations correspond to signals presented at ±90° of rotation from the cue location, and the remapping control and future retinotopic trace control locations correspond to signals presented at 180° of rotation from the cue location. B: motion sensitivity gain (d′ ratio) in the fixation task with two- vs. one-signal trials, computed for congruent (green) and incongruent (red) signal directions. Ratios are computed by dividing sensitivity (d′) in trials with 2 signals (e.g., s1 at the cue and s2 presented at ±90° from the cue location) by the sensitivity in single-signal trials. Error bars represent SE (statistical significance: ****P < 0.0001; ns, nonsignificant).

Next, we drew (with replacement) 10,000 bootstrap samples from the original d′ ratios and computed the mean of each sample. We determined the significance of d′ ratios by comparing the bootstrapped means with the parity value (d′ ratio = 1). To do so, we subtracted the parity value from each bootstrapped mean and derived two-tailed P values from the distribution of these differences. An equivalent procedure was used to test sensitivity (d′) against chance level (d′ = 0) for different control conditions (see results and Fig. 5A).
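The bootstrap test can be sketched as follows. Doubling the smaller tail proportion to obtain a two-tailed P value is a common convention that we assume here; the seed and function name are illustrative.

```python
import random
from statistics import fmean


def bootstrap_p(values, null_value=1.0, n_boot=10_000, seed=0):
    """Two-tailed bootstrap test of a group mean against a null value.

    Resamples participants' values with replacement, takes the mean of each
    resample, subtracts the null value, and doubles the smaller tail proportion.
    """
    rng = random.Random(seed)
    n = len(values)
    diffs = [fmean(rng.choices(values, k=n)) - null_value for _ in range(n_boot)]
    below = sum(d <= 0 for d in diffs) / n_boot
    above = sum(d >= 0 for d in diffs) / n_boot
    return min(1.0, 2.0 * min(below, above))
```

Passing `null_value=0.0` gives the corresponding test of d′ against chance level.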

For incongruent s1+s2 trials, we tested whether the observed proportions of incorrect reports of the cued direction differed from those expected if incorrect reports were randomly distributed across the possible options. To do this, we first computed the expected frequencies for each combination of incorrect report direction and s2 direction (right, 0°; up, 90°; left, 180°; or down, 270°). The expected frequency for choosing the s2 direction was ∼8.33% (e.g., reporting “right” if s2 was right), whereas it was ∼5.56% for all other combinations (see Saarela and Landy 2015 for the probability equations). We next tested the observed frequencies against these expected frequencies for the different directions of s2 signals using the bootstrapping procedure described above (see results and Fig. 4).
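The expected frequencies quoted above can be checked by enumeration, assuming that s1 is equally likely to take any of the four directions, that s2 is equally likely to take any of the three directions different from s1 (incongruent trials), and that an incorrect report is equally likely to be any of the three directions different from s1. Each (s1, s2, report) triple then has probability 1/36.

```python
from fractions import Fraction
from itertools import product

DIRS = ("right", "up", "left", "down")


def expected_error_table():
    """Expected joint probability of (reported direction, s2 direction) among
    incorrect reports in incongruent s1+s2 trials, under uniform assumptions."""
    table = {(r, s2): Fraction(0) for r, s2 in product(DIRS, DIRS)}
    for s1 in DIRS:
        others = [d for d in DIRS if d != s1]
        for s2, r in product(others, others):
            # P(s1) * P(s2 | s1) * P(report | s1), each uniform
            table[(r, s2)] += Fraction(1, 4) * Fraction(1, 3) * Fraction(1, 3)
    return table
```

Reporting the s2 direction has probability 3/36 = 1/12 ≈ 8.33% (three possible s1 directions), whereas any other combination has probability 2/36 = 1/18 ≈ 5.56% (two possible s1 directions); the 16 cells sum to 1.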

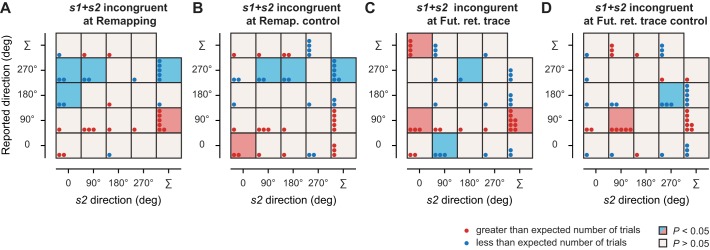

Fig. 4.

Analysis of incorrect report trials for incongruent trials. Cells display dots indicating the number of trials above (red) or below (blue) the expected number for each combination of reported direction and s2 direction (assuming randomly allocated incorrect reports). These differences are computed across participants and rounded to the nearest integer for incongruent signals presented at the remapping (A), the future retinotopic trace location (C), and their respective control locations (B and D). The top row and right column of each panel show the marginal distributions. Colored boxes indicate statistical significance (red or blue boxes, P < 0.05; open boxes, P > 0.05).

RESULTS

Our goal was to determine whether presaccadic motion integration occurred between an attended location and its remapped location (i.e., the location on the retina that the cued location will occupy after an imminent saccade). To this end, we presented four random dot kinematograms (RDKs) and cued one of them with a salient, attention-capturing color cue during saccade preparation (Fig. 1). Following the onset of the cue and preceding the saccade, we presented a coherent motion signal (s1) for 100 ms at the cued location. In the s1 alone condition, we presented this signal alone. In the s1+s2 condition, we presented s1 simultaneously with a second coherent motion signal, s2, at one of four locations relative to s1: at the remapped location of the cue (dark blue arrows in Fig. 1A), at a remapping control location (light blue arrows), at the retinotopic location where the presaccadic cue will be after the saccade (i.e., in the direction of the saccade), which we call the future retinotopic trace of the cue (black arrows; Golomb et al. 2008, 2014; Jonikaitis et al. 2013), or at the future retinotopic trace control location (light gray arrows). Furthermore, the motion direction of s2 was either congruent or incongruent (Fig. 1A, left and right, respectively) with the direction of s1. At the end of each trial, participants reported the cardinal direction of motion at the cued location (s1: up, down, left, or right). We measured the influence of s2 on participants' ability to discriminate the direction of the cued motion signal, s1, and quantified motion integration as a motion sensitivity ratio (d′ ratio): a participant's sensitivity (d′) to the cued signal s1 in s1+s2 trials divided by their sensitivity in s1 alone trials. A d′ ratio of 1 indicates the absence of integration, whereas a d′ ratio larger or smaller than 1 indicates an increase or a decrease in motion sensitivity in s1+s2 trials, respectively.

We observed that motion sensitivity at the cue location s1 increased by 32% (1.32 ± 0.14, P = 0.008) if a congruent motion signal s2 was presented at the remapping location (Fig. 2A). Interestingly, motion sensitivity remained unaffected when motion signals at the cue and remapping locations were incongruent (0.99 ± 0.11, P = 0.89). Moreover, the presaccadic integration of the motion signals was specific to the remapping location. It did not occur when a second signal s2 was presented at the remapping control location (congruent: 1.12 ± 0.12, P = 0.32; incongruent: 0.96 ± 0.11, P = 0.66), the future retinotopic trace location (congruent: 1.07 ± 0.09, P = 0.39; incongruent: 1.00 ± 0.08, P = 0.97), or the future retinotopic trace control location (congruent: 1.12 ± 0.08, P = 0.09; incongruent: 1.00 ± 0.10, P = 0.93).

Next, we quantified the spatial specificity of motion integration by comparing s1+s2 trials in which s2 was presented at the remapping vs. the remapping control location. Because this analysis compares two s1+s2 conditions with each other, it rules out spatially unspecific probability summation effects (i.e., where participants could respond on the basis of 2 independent decisions made on each signal irrespective of its position; Meese and Williams 2000; Watson 1979). Motion sensitivity was 22% higher when s2 was presented at the remapped location of the cue than when it was presented at its control location (Fig. 2B; 1.22 ± 0.10, P = 0.014). Again, motion integration was limited to congruent motion directions, and we did not observe motion integration when comparing the future retinotopic trace location with the future retinotopic trace control location (0.97 < d′ ratios < 1.04; 0.52 < P < 0.67).

We also computed motion integration with trials binned as a function of the motion signal offset relative to saccade onset, using a 100-ms-wide moving window (corresponding to the motion signal duration) advanced in 50-ms steps for signal offsets of −100 to 0 ms before saccade onset (Fig. 3). Most notably, in the last 50 ms preceding a saccade, a congruent motion signal (s2) at the remapped location of the cue was significantly integrated with the cued (s1) motion signal both when compared with discrimination of a single signal at the cued location (Fig. 3A; signal ending between −150 and −50 ms before saccade: 1.33 ± 0.14, P = 0.013; signal ending between −100 and 0 ms before saccade: 1.38 ± 0.16, P = 0.001) and when compared with two signals at the cued and at the remapping control location (Fig. 3B; signal ending between −150 and −50 ms before saccade: 1.30 ± 0.16, P = 0.028; signal ending between −100 and 0 ms before saccade: 1.32 ± 0.14, P = 0.009). These effects follow the typical time course of remapping; we only found significant motion integration if the motion signals ended within 50 ms of saccade onset (Jonikaitis et al. 2013; Kusunoki and Goldberg 2003; Rolfs et al. 2011; Szinte et al. 2015) and not for motion signals presented earlier during saccade preparation (all Ps > 0.08). Nevertheless, in all main analyses we collapsed trials in which motion signals ended in the last 150 ms before saccade onset, to maximize the statistical power of our data set. Note that all conclusions presented above were confirmed when we restricted the analyses to the last 50 ms preceding the saccade.
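
The moving-window binning can be illustrated as follows. This is a hypothetical sketch: it assumes each trial is summarized by the offset of its motion signal in ms relative to saccade onset (negative values before the saccade), uses the two window edges reported in the text as defaults, and treats each window as open on the left and closed on the right so that a signal ending exactly at saccade onset is kept. Because the windows overlap, one trial can contribute to two bins.

```python
def moving_windows(first_start=-150, last_end=0, width=100, step=50):
    """Return (lo, hi) edges of overlapping windows from first_start to last_end."""
    windows = []
    lo = first_start
    while lo + width <= last_end:
        windows.append((lo, lo + width))
        lo += step
    return windows


def bin_trials(offsets_ms, windows):
    """Map each window to the indices of trials whose motion-signal offset
    (ms relative to saccade onset; negative = before) lies in (lo, hi]."""
    return {
        (lo, hi): [i for i, t in enumerate(offsets_ms) if lo < t <= hi]
        for lo, hi in windows
    }


if __name__ == "__main__":
    windows = moving_windows()
    print(windows)
    print(bin_trials([-140, -90, -60, -10, 0, -200], windows))
```

The trials in each bin would then enter the same d′ ratio computation as the collapsed analysis.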

Together, these results indicate that presaccadic motion integration is robust and highly spatially specific, because the cued motion signal s1 was only integrated with a second motion signal s2 presented at the location on the retina that the cue will occupy after the saccade (i.e., the cue's remapped location). Moreover, the results suggest that motion integration before the saccade is feature specific, because we found enhancement of motion sensitivity only when we presented matching motion directions at the cued and remapped locations. Interestingly, when motion signal directions did not match, one might have expected that sensitivity would drop, because both directions would be integrated into a third one. Instead, we found that the presentation of an incongruent motion signal at the remapped location of the cue had no effect on the discrimination of the cued motion signal.

Next, we verified that the motion integration effects observed for congruent trials were not due to participants simply reporting the second signal's direction. To do this, we evaluated the trials in which participants did not correctly report the direction of the cued signal. Figure 4 shows the difference between the expected (assuming random report) and the observed number of incorrect direction reports for the four possible directions of s2 in incongruent s1+s2 trials. If participants simply reported the s2 direction, the right diagonal would systematically show a much higher than expected number of trials (Saarela and Landy 2015). This did not happen (Fig. 4A). Instead, we found that when an incongruent s2 signal was presented at the remapped location of the cue, incorrect reports were randomly distributed across the possible options (all Ps > 0.06). Moreover, s2 rarely influenced any of the responses (see colored boxes in Fig. 4), suggesting that participants tended to ignore the s2 signal when its direction was incongruent with the cued signal.
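
A simplified, one-dimensional version of this check can be written as an exact binomial test. It assumes (an assumption made for illustration, not necessarily the analysis behind Fig. 4) that on an error trial with an incongruent s2, a guessing participant picks each of the three wrong directions with probability 1/3; if participants were instead reporting s2, errors would land on the s2 direction well above that rate. The function name and inputs are hypothetical.

```python
from math import comb


def s2_capture_test(s1_dirs, s2_dirs, reports):
    """Test whether errors on incongruent trials favor the s2 direction.

    Returns (k, expected, p): k = error trials whose report equals s2,
    expected = k under uniform guessing among the 3 wrong directions,
    p = one-sided exact binomial P(X >= k) with success rate 1/3.
    """
    # keep only incongruent trials (s2 != s1) that were answered incorrectly
    errors = [(s2, r) for s1, s2, r in zip(s1_dirs, s2_dirs, reports)
              if s2 != s1 and r != s1]
    n = len(errors)
    k = sum(r == s2 for s2, r in errors)
    expected = n / 3
    p = sum(comb(n, i) * (1 / 3) ** i * (2 / 3) ** (n - i) for i in range(k, n + 1))
    return k, expected, p


if __name__ == "__main__":
    s1 = ["up"] * 9
    s2 = ["down"] * 9
    print(s2_capture_test(s1, s2, ["down"] * 9))                   # errors all follow s2
    print(s2_capture_test(s1, s2, ["down", "left", "right"] * 3))  # errors spread evenly
```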

We also presented s2 alone at uncued locations in a fraction of the trials, without s1 at the cued location (s2 alone condition). We found that motion discrimination was at chance level (Fig. 5A) for s2 presented alone at any uncued location: at the remapped location (d′ = 0.15 ± 0.11, P = 0.14), at the future retinotopic trace location (d′ = 0.09 ± 0.11, P = 0.40), and at their respective control locations (remapping control: d′ = 0.06 ± 0.08, P = 0.38; future retinotopic trace control: d′ = 0.16 ± 0.12, P = 0.16). The fact that the direction of s2 alone at the uncued location was not reportable at better than chance levels indicates that the contribution of s2 when both signals are present must reflect predecision integration of the two signals.

Finally, we investigated whether our effects were contingent on saccade preparation or reflected motion integration biases present even in fixation conditions. In a control task, participants maintained fixation while judging the direction of motion in the cued patch. We did not find any significant motion integration (d′ ratio) between the cued signal and a signal presented at any other location (s1+s2 compared with s1 alone), either for congruent or for incongruent trials (Fig. 5B; all Ps > 0.12). In particular, and in contrast with the saccade task, we did not find any marked increase of sensitivity when we presented a congruent s2 at a position that would have corresponded to the remapping location in the saccade task (location ±90° from s1: 1.00 ± 0.14, P = 1.00).

Importantly, for both the fixation and the saccade tasks reported above, we presented the signal(s) when the participants' gaze was resting in the center of the display. Although the execution of the saccades introduced a small additional delay between the presentation of the motion signals and the participants' report of the cued direction, the crucial difference between the two tasks was the saccade preparation and execution.

DISCUSSION

We have demonstrated that two motion signals are partially integrated when they are presented before a saccade and occur at two specific retinotopic locations: the location of an attended object and the location that the same object will occupy after the saccade (remapping location). This presaccadic motion integration was spatially specific (it occurred only between these 2 locations), feature specific (it occurred only for matching features), and contingent on the preparation and execution of a saccade (it was not found for the fixation task). As described below, these effects cannot be explained by probability summation or by visual priming. They constitute the first evidence of a mechanism that integrates matching visual features across different positions in space when these features are located at the two retinotopic locations that an attended object occupies across a saccade. Whereas others have shown previously that some visual features can be integrated over different positions in space either after (Golomb et al. 2014) or across a saccade (Melcher and Morrone 2003; but see Ganmor et al. 2015; Morris et al. 2010; Oostwoud Wijdenes et al. 2015; Wolf and Schütz 2015), our study is the first to show motion integration over two spatially and retinotopically distinct positions implicated in the presaccadic predictions of remapping.

We suggest that the motion direction at the cued location, which is detectable on its own at significantly above-chance levels, acts as a filter or prior, selecting the signal from the remapped location when it matches the signal at the cued location and integrating the two, improving detection in this context only. The signal at the remapped location on its own cannot be detected above chance levels, and it is only in combination with the cued signal, when the two match, that it has an influence. This bi-local, feature-gated integration is functionally plausible, since the same object will fill these two locations with matching features before and after an eye movement. Given the imperfect timing in switching selection from the pre- to the postsaccadic location (Jonikaitis et al. 2013; Kusunoki and Goldberg 2003; Nummela and Krauzlis 2011; Rolfs 2015), it is plausible that sampling from the two locations overlaps in time. It would then be useful to limit the concurrent sampling to matching features, because this would avoid picking up features from irrelevant (i.e., incongruent) items that happen to be at the remapped location.

Our observation that, before a saccade, the visual system samples the retinotopic location an attended object will occupy after a saccade is in line with earlier work reporting remapping of spatial attention (Jonikaitis et al. 2013; Rolfs et al. 2011; Szinte et al. 2015). In particular, it has been proposed that spatial attention shifts toward retinotopic postsaccadic target locations even before the onset of an eye movement (Cavanagh et al. 2010a). These findings can be linked to electrophysiological studies (see Rolfs and Szinte 2016 for potential mechanisms) showing that neurons activate predictively if a saccade will bring a target into their receptive fields (Duhamel et al. 1992; Goldberg and Bruce 1990; Sommer and Wurtz 2006; Umeno and Goldberg 1997; Walker et al. 1995). These studies proposed that the visual system uses an efference copy of the forthcoming saccade (Holst and Mittelstaedt 1950; Sommer and Wurtz 2002; Sperry 1950) to predict the future locations of stimuli of interest. This “remapping” occurs for salient and behaviorally relevant objects (Berman and Colby 2009; Gottlieb et al. 1998; Kusunoki et al. 2000) and is well-suited to contribute to the transsaccadic processing of attended object locations (Cavanagh et al. 2010a; Jonikaitis et al. 2013; Rolfs 2015; Wurtz 2008; Yao et al. 2016). Our current findings constitute further evidence for the existence of processes sampling information at attended and remapped locations.

Although the remapping process is consistent with our results, we must also consider the possible effects of other consequences of saccade preparation, specifically, the biased spatial processing surrounding the saccade target itself (Moore et al. 1998; Tolias et al. 2001; Zirnsak et al. 2014). Indeed, large biases in sensory processing at the saccade target location are evident during saccade preparation resulting in improved detection of visual information (Baldauf and Deubel 2008; Deubel and Schneider 1996; Rolfs et al. 2011), increased perceived contrast (Rolfs and Carrasco 2012), and reduced visual crowding (Harrison and Bex 2014). If our observed motion integration were due to such biased processing around the target of the saccade, or to the allocation of attention toward locations close to the saccade target (Zirnsak and Moore 2014), we might have observed motion integration effects at those two locations (Fig. 1A, “future retinotopic trace” and its control). Instead, we observed integration with signals at the location farthest from the saccade target (Fig. 1A, “remapping”), consistent with a recent physiological report (Neupane et al. 2016).

The link between remapping and the presaccadic shift of attention we observed in the present and previous studies (Jonikaitis et al. 2013; Rolfs et al. 2011; Szinte et al. 2015) relies on the similarities in both the timing and spatial characteristics. Nevertheless, a debate exists on whether single-cell receptive field shifts could really support the remapping of attention we found. In particular, different authors have pointed out that the size of the receptive fields in remapping areas is too big to account for the precision of observed attentional shifts (Mayo and Sommer 2010; Zirnsak and Moore 2014). In a previous response to this point, it was suggested that localization cannot be a function of individual receptive fields, but rather of populations (Cavanagh et al. 2010b). In this study we show spatially specific effects that, again, could only be explained by a profile of activity across many responding units given the large size of receptive fields in areas known to be involved in remapping.

We observed integration of motion signals presented at the attended and its remapped location only if they had congruent directions. Although these effects are feature specific, they differ markedly from earlier reports of feature-based attention that operates throughout the whole visual field (Jonikaitis and Theeuwes 2013; Liu and Mance 2011; Martinez-Trujillo and Treue 2004; Maunsell and Treue 2006; Melcher et al. 2005; Wegener et al. 2008; White and Carrasco 2011). Our effects instead rely on a feature-specific mechanism that is also spatially localized. One earlier study found that motion signals were integrated across eye movements when they occurred at the same spatial but different retinotopic locations, separated in time (Melcher and Morrone 2003). In contrast to this earlier report, we investigated the integration that occurs between two locations presented simultaneously and before the onset of the saccade. In addition, we addressed two issues concerning integration that may have been problematic in the Melcher and Morrone (2003) study. First, Morris et al. (2010) showed that when comparing single- and two-signal trials to evaluate integration in the two-signal case, temporal uncertainty of the motion signal onsets can cause the sensitivity to the single motion signal to be underestimated. This, in turn, leads to an overestimation of signal integration when the single- and two-signal trials are compared. To deal with this in our study, the spatial cue exactly predicted the onset time of the simultaneously presented motion signals, greatly reducing temporal uncertainty. Second, as Morris et al. (2010) noted, a performance improvement with two signals that may appear to be evidence of integration can often be explained by the probability summation of two independent detection events (Meese and Williams 2000; Watson 1979). 
To determine how probability summation might explain our results, consider first that we found a performance improvement only when the same signal was present at the cue and at its remapped location just before the execution of a saccade. For a probability summation stage that pools independent detections to account for this, the pooling would have to be limited to the cued and remapped locations, and to the saccade task. In this case, however, detections would be pooled from the cue and its remapped location before a saccade regardless of whether congruent or incongruent signals were shown, which would improve performance for congruent signals and degrade it for incongruent ones. Instead, we found significant improvement only for congruent signals presented at these locations, whereas incongruent signals were simply ignored, as shown by the analyses of both incorrect trials (Fig. 4) and trials in which a signal was presented alone at the remapped location of the cue (Fig. 5). These results argue against probability summation as a possible mechanism for our observed spatially and feature-specific integration effects. Moreover, because the two signals were presented simultaneously, we can also rule out priming and serial dependence as possible contributors to our effects (Fischer and Whitney 2014; Maljkovic and Nakayama 1994, 1996).
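
The probability summation argument can be made concrete with a small Monte Carlo sketch of a location-blind max rule, one common way to model the pooling of independent detections (an illustrative model, not the authors' analysis). Each location produces four noisy direction channels, with the signal strength added to the channel of the direction shown there, and the simulated observer reports whichever channel is largest across both locations. The signal strengths and the function name are arbitrary, hypothetical choices. Such an observer gains from a congruent s2 and loses from an incongruent one, which is the symmetric pattern the data do not show.

```python
import random

N_DIRS = 4  # up, down, left, right coded as 0..3


def max_rule_pc(d1, d2=None, congruent=True, n_trials=20000, seed=1):
    """Proportion correct for a max-rule observer (hypothetical model).

    d1: signal strength at the cued location (s1).
    d2: signal strength at the second location; None means s1 alone.
    congruent: whether s2 moves in the same direction as s1.
    """
    rng = random.Random(seed)
    n_correct = 0
    for _ in range(n_trials):
        s1 = rng.randrange(N_DIRS)
        locations = [(s1, d1)]
        if d2 is not None:
            # an incongruent s2 takes one of the three other directions
            s2 = s1 if congruent else (s1 + 1 + rng.randrange(N_DIRS - 1)) % N_DIRS
            locations.append((s2, d2))
        best_dir, best_val = None, float("-inf")
        for signal_dir, strength in locations:
            for d in range(N_DIRS):
                # unit-variance Gaussian noise on every channel, plus signal
                val = rng.gauss(0.0, 1.0) + (strength if d == signal_dir else 0.0)
                if val > best_val:
                    best_dir, best_val = d, val
        n_correct += best_dir == s1  # the reported direction ignores location
    return n_correct / n_trials


if __name__ == "__main__":
    print("s1 alone   ", max_rule_pc(1.5))
    print("congruent  ", max_rule_pc(1.5, 1.5, congruent=True))
    print("incongruent", max_rule_pc(1.5, 1.5, congruent=False))
```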

Previous studies have shown presaccadic interactions for visual features other than motion. First, strong interference effects on an attended target were reported if its remapped location was either filled with a mask or flanked by distractors before the saccade (Harrison et al. 2013; Hunt and Cavanagh 2011). Interestingly, we did not observe such masking effects in the present study (no decrease in performance when incongruent signals were at the remapped location), probably because we used faint, below-threshold stimuli at the remapping location (the direction of s2 alone was unreportable) rather than the high-contrast stimuli that previous studies placed at or around the remapped location. Second, studies of another form of presaccadic feature transfer found that orientation adaptation transferred from the fixation location to the saccade target location before the execution of the eye movement (Melcher 2007; Zirnsak et al. 2011). These positions are analogous to the “cue” and “future retinotopic trace” locations in our stimulus layout (ours were offset into the periphery), where we never found any significant integration. Additionally, because the location tested in those studies was the saccade target location, their results are hard to interpret, given that saccade preparation strongly biases saccade target processing (Baldauf and Deubel 2008; Deubel and Schneider 1996; Harrison and Bex 2014; Rolfs and Carrasco 2012). Finally, a recent single-cell recording study showed shape selectivity in remapping neurons of the lateral intraparietal area (LIP), which remap both spatial and shape information (Subramanian and Colby 2014). The spatial and feature specificity of the motion integration that we show in this report suggests that our effects may rely on such cells.

Physiological studies have also reported that when a target is presented just before a saccade, it activates cells with receptive fields at both the current retinal location and the remapped location of the target (Kusunoki and Goldberg 2003). Our results suggest that these two populations of cells are linked together to feed information into a single, object-specific representation. However, integration occurs only when the remapped location has the same features as the target location, as it would if both originated from the same object across the saccade. This suggests that the features sampled from the target location serve as strong priors for evaluation of signals from the remapped location (e.g., a Bayesian-biased integration). Such a mechanism could explain the fact that incongruent signals presented at the cue and at its remapped location were largely ignored (Fig. 4). This predictive spatially and feature-based selection may also be related to the earlier observations that the visual system constructs object-centered, spatial representations across eye movements (Boi et al. 2011), because these would be facilitated by the presaccadic selection of object features from their expected postsaccadic location (Cavanagh et al. 2010a; Lisi et al. 2015).

We consider that the occurrence of these effects even before the saccade reflects the imperfect timing of the predictions that keep track of attended objects across saccades (see also Jonikaitis et al. 2013; Nummela and Krauzlis 2011; Rolfs 2015). In addition, given the inevitable delays and slow speed in implementing the remapping, it is plausible that it has to begin before the saccade for it to be in place when it is needed, as the saccade lands. In any case, our results show that the effect of this premature selection would not be detrimental to everyday vision, because the integration that occurs would be limited to situations in which the two locations contain the same features.

Altogether, our results show that before saccades, motion information is sampled and integrated from both the current location and the predicted postsaccadic retinotopic location of a salient and behaviorally relevant object. These results provide the first evidence for a mechanism integrating features across nonoverlapping locations in space, provided they are expected to come from the same object before and after an eye movement.

GRANTS

This research was supported by an Alexander von Humboldt Foundation Fellowship (to M. Szinte), by funding from the European Research Council under the European Union's Seventh Framework Program FP7/2007–2013 ERC Grant Agreement No. AG324070 (to P. Cavanagh), Deutsche Forschungsgemeinschaft (DFG) Emmy Noether Grant RO 3579/2-1 (to M. Rolfs), and DFG Temporary Position for Principal Investigator Awards JO 980/1-1 (to D. Jonikaitis) and SZ343/1 (to M. Szinte).

DISCLOSURES

No conflicts of interest, financial or otherwise, are declared by the authors.

AUTHOR CONTRIBUTIONS

M.S., D.J., M.R., P.C., and H.D. conception and design of research; M.S. performed experiments; M.S. analyzed data; M.S., D.J., M.R., P.C., and H.D. interpreted results of experiments; M.S. prepared figures; M.S. and D.J. drafted manuscript; M.S., D.J., M.R., P.C., and H.D. edited and revised manuscript; M.S., D.J., M.R., P.C., and H.D. approved final version of manuscript.

ACKNOWLEDGMENTS

We are grateful to the members of the Deubel laboratory in Munich, the Centre Attention and Vision in Paris, and the Rolfs laboratory in Berlin for helpful comments and discussions, and to Elodie Parison and Alice and Clémence Szinte for invaluable support.

REFERENCES

- Baldauf D, Deubel H. Properties of attentional selection during the preparation of sequential saccades. Exp Brain Res 184: 411–425, 2008.

- Berman RA, Colby C. Attention and active vision. Vision Res 49: 1233–1248, 2009.

- Boi M, Vergeer M, Öğmen H, Herzog MH. Nonretinotopic exogenous attention. Curr Biol 21: 1732–1737, 2011.

- Brainard DH. The Psychophysics Toolbox. Spat Vis 10: 433–436, 1997.

- Carlson-Radvansky LA, Irwin DE. Memory for structural information across eye movements. J Exp Psychol Learn Mem Cogn 21: 1441–1458, 1995.

- Cavanagh P, Hunt AR, Afraz A, Rolfs M. Visual stability based on remapping of attention pointers. Trends Cogn Sci 14: 147–153, 2010a.

- Cavanagh P, Hunt AR, Afraz A, Rolfs M. Attention pointers: response to Mayo and Sommer. Trends Cogn Sci 14: 390–391, 2010b.

- Cornelissen FW, Peters EM, Palmer J. The EyeLink Toolbox: eye tracking with MATLAB and the Psychophysics Toolbox. Behav Res Methods Instrum Comput 34: 613–617, 2002.

- Deubel H. Adaptive control of saccade metrics. In: Perspectives in Vision Research, edited by Obrecht G, Stark L. Boston, MA: Springer, 1991, p. 93–100.

- Deubel H, Bridgeman B, Schneider WX. Immediate post-saccadic information mediates space constancy. Vision Res 38: 3147–3159, 1998.

- Deubel H, Schneider WX. Saccade target selection and object recognition: evidence for a common attentional mechanism. Vision Res 36: 1827–1837, 1996.

- Deubel H, Schneider WX, Bridgeman B. Transsaccadic memory of position and form. Prog Brain Res 140: 165–180, 2002.

- Duhamel JR, Colby CL, Goldberg ME. The updating of the representation of visual space in parietal cortex by intended eye movements. Science 255: 90–92, 1992.

- Engbert R, Mergenthaler K. Microsaccades are triggered by low retinal image slip. Proc Natl Acad Sci USA 103: 7192–7197, 2006.

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415: 429–433, 2002.

- Van Essen DC, Zeki SM. The topographic organization of rhesus monkey prestriate cortex. J Physiol 277: 193–226, 1978.

- Fischer J, Whitney D. Serial dependence in visual perception. Nat Neurosci 17: 738–743, 2014.

- Ganmor E, Landy MS, Simoncelli EP. Near-optimal integration of orientation information across saccades. J Vis 15: 1–12, 2015.

- Gardner JL, Merriam EP, Movshon JA, Heeger DJ. Maps of visual space in human occipital cortex are retinotopic, not spatiotopic. J Neurosci 28: 3988–3999, 2008.

- Goldberg ME, Bruce CJ. Primate frontal eye fields. III. Maintenance of a spatially accurate saccade signal. J Neurophysiol 64: 489–508, 1990.

- Golomb JD, Chun MM, Mazer JA. The native coordinate system of spatial attention is retinotopic. J Neurosci 28: 10654–10662, 2008.

- Golomb JD, L'heureux ZE, Kanwisher N. Feature-binding errors after eye movements and shifts of attention. Psychol Sci 25: 1067–1078, 2014.

- Gottlieb JP, Kusunoki M, Goldberg ME. The representation of visual salience in monkey parietal cortex. Nature 391: 481–484, 1998.

- Harrison WJ, Bex PJ. Integrating retinotopic features in spatiotopic coordinates. J Neurosci 34: 7351–7360, 2014.

- Harrison WJ, Retell JD, Remington RW, Mattingley JB. Visual crowding at a distance during predictive remapping. Curr Biol 23: 793–798, 2013.

- Hillis JM, Ernst MO, Banks MS, Landy MS. Combining sensory information: mandatory fusion within, but not between, senses. Science 298: 1627–1630, 2002.

- Hollingworth A, Richard AM, Luck SJ. Understanding the function of visual short-term memory: transsaccadic memory, object correspondence, and gaze correction. J Exp Psychol Gen 137: 163–181, 2008.

- Holst von E, Mittelstaedt H. Das Reafferenzprinzip. Wechselwirkungen zwischen Zentralnervensystem und Peripherie. Naturwissenschaften 37: 464–476, 1950.

- Hunt AR, Cavanagh P. Remapped visual masking. J Vis 11: 1–8, 2011.

- Jonikaitis D, Belopolsky AV. Target-distractor competition in the oculomotor system is spatiotopic. J Neurosci 34: 6687–6691, 2014.

- Jonikaitis D, Szinte M, Rolfs M, Cavanagh P. Allocation of attention across saccades. J Neurophysiol 109: 1425–1434, 2013.

- Jonikaitis D, Theeuwes J. Dissociating oculomotor contributions to spatial and feature-based selection. J Neurophysiol 110: 1525–1534, 2013.

- Knapen T, Rolfs M, Wexler M, Cavanagh P. The reference frame of the tilt aftereffect. J Vis 10: 8.1–13, 2010.

- Kusunoki M, Goldberg ME. The time course of perisaccadic receptive field shifts in the lateral intraparietal area of the monkey. J Neurophysiol 89: 1519–1527, 2003.

- Kusunoki M, Gottlieb JP, Goldberg ME. The lateral intraparietal area as a salience map: the representation of abrupt onset, stimulus motion, and task relevance. Vision Res 40: 1459–1468, 2000.

- Landy MS, Maloney LT, Johnston EB, Young M. Measurement and modeling of depth cue combination: in defense of weak fusion. Vision Res 35: 389–412, 1995.

- Lisi M, Cavanagh P, Zorzi M. Spatial constancy of attention across eye movements is mediated by the presence of visual objects. Atten Percept Psychophys 77: 1159–1169, 2015.

- Liu T, Mance I. Constant spread of feature-based attention across the visual field. Vision Res 51: 26–33, 2011.

- Maljkovic V, Nakayama K. Priming of pop-out: I. Role of features. Mem Cognit 22: 657–672, 1994.

- Maljkovic V, Nakayama K. Priming of pop-out: II. The role of position. Percept Psychophys 58: 977–991, 1996.

- Martinez-Trujillo JC, Treue S. Feature-based attention increases the selectivity of population responses in primate visual cortex. Curr Biol 14: 744–751, 2004.

- Mathôt S, Theeuwes J. A reinvestigation of the reference frame of the tilt-adaptation aftereffect. Sci Rep 3: 1152, 2013.

- Maunsell JHR, Treue S. Feature-based attention in visual cortex. Trends Neurosci 29: 317–322, 2006.

- Mayo JP, Sommer MA. Shifting attention to neurons. Trends Cogn Sci 14: 389, 2010.

- Meese TS, Williams CB. Probability summation for multiple patches of luminance modulation. Vision Res 40: 2101–2113, 2000.

- Melcher D. Predictive remapping of visual features precedes saccadic eye movements. Nat Neurosci 10: 903–907, 2007.

- Melcher D, Morrone MC. Spatiotopic temporal integration of visual motion across saccadic eye movements. Nat Neurosci 6: 877–881, 2003.

- Melcher D, Papathomas TV, Vidnyánszky Z. Implicit attentional selection of bound visual features. Neuron 46: 723–729, 2005.

- Moore T, Tolias AS, Schiller PH. Visual representations during saccadic eye movements. Proc Natl Acad Sci USA 95: 8981–8984, 1998.

- Morris AP, Liu CC, Cropper SJ, Forte JD, Krekelberg B, Mattingley JB. Summation of visual motion across eye movements reflects a nonspatial decision mechanism. J Neurosci 30: 9821–9830, 2010.

- Neupane S, Guitton D, Pack CC. Two distinct types of remapping in primate cortical area V4. Nat Commun 7: 10402, 2016.

- Nummela SU, Krauzlis RJ. Superior colliculus inactivation alters the weighted integration of visual stimuli. J Neurosci 31: 8059–8066, 2011.

- Oostwoud Wijdenes L, Marshall L, Bays PM. Evidence for optimal integration of visual feature representations across saccades. J Neurosci 35: 10146–10153, 2015.

- Parks NA, Corballis PM. Human transsaccadic visual processing: presaccadic remapping and postsaccadic updating. Neuropsychologia 48: 3451–3458, 2010.

- Pelli DG. The Video Toolbox software for visual psychophysics: transforming numbers into movies. Spat Vis 10: 437–442, 1997.

- Pertzov Y, Zohary E, Avidan G. Rapid formation of spatiotopic representations as revealed by inhibition of return. J Neurosci 30: 8882–8887, 2010.

- Pollatsek A, Rayner K, Collins WE. Integrating pictorial information across eye movements. J Exp Psychol Gen 113: 426–442, 1984.

- Rolfs M. Attention in active vision: a perspective on perceptual continuity across saccades. Perception 44: 900–919, 2015.

- Rolfs M, Carrasco M. Rapid simultaneous enhancement of visual sensitivity and perceived contrast during saccade preparation. J Neurosci 32: 13744–13752, 2012.

- Rolfs M, Jonikaitis D, Deubel H, Cavanagh P. Predictive remapping of attention across eye movements. Nat Neurosci 14: 252–256, 2011. [DOI] [PubMed] [Google Scholar]

- Rolfs M, Szinte M. Remapping attention pointers: linking physiology and behavior. Trends Cogn Sci 20: 399–401, 2016. [DOI] [PubMed] [Google Scholar]

- Saarela TP, Landy MS. Integration trumps selection in object recognition. Curr Biol 25: 920–927, 2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sereno MI, Dale AM, Reppas JB, Kwong KK, Belliveau JW, Brady TJ, Rosen BR, Tootell RB. Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science 268: 889–893, 1995. [DOI] [PubMed] [Google Scholar]

- Sommer MA, Wurtz RH. A pathway in primate brain for internal monitoring of movements. Science 296: 1480–1482, 2002.

- Sommer MA, Wurtz RH. Influence of the thalamus on spatial visual processing in frontal cortex. Nature 444: 374–377, 2006.

- Sperry RW. Neural basis of the spontaneous optokinetic response produced by visual inversion. J Comp Physiol Psychol 43: 482–489, 1950.

- Subramanian J, Colby CL. Shape selectivity and remapping in dorsal stream visual area LIP. J Neurophysiol 111: 613–627, 2014.

- Szinte M, Carrasco M, Cavanagh P, Rolfs M. Attentional trade-offs maintain the tracking of moving objects across saccades. J Neurophysiol 113: 2220–2231, 2015.

- Szinte M, Wexler M, Cavanagh P. Temporal dynamics of remapping captured by peri-saccadic continuous motion. J Vis 12: 1–18, 2012.

- Tanigawa H, Lu HD, Roe AW. Functional organization for color and orientation in macaque V4. Nat Neurosci 13: 1542–1548, 2010.

- Tatler BW, Land MF. Vision and the representation of the surroundings in spatial memory. Philos Trans R Soc Lond B Biol Sci 366: 596–610, 2011.

- Tolias AS, Moore T, Smirnakis SM, Tehovnik EJ, Siapas AG, Schiller PH. Eye movements modulate visual receptive fields of V4 neurons. Neuron 29: 757–767, 2001.

- Treisman AM, Gelade G. A feature-integration theory of attention. Cogn Psychol 12: 97–136, 1980.

- Umeno MM, Goldberg ME. Spatial processing in the monkey frontal eye field. I. Predictive visual responses. J Neurophysiol 78: 1373–1383, 1997.

- Van Essen DC, Maunsell JHR, Bixby JL. The middle temporal visual area in the macaque: myeloarchitecture, connections, functional properties and topographic organization. J Comp Neurol 199: 293–326, 1981.

- Verfaillie K, De Troy A, Van Rensbergen J. Transsaccadic integration of biological motion. J Exp Psychol Learn Mem Cogn 20: 649–670, 1994.

- Walker MF, Fitzgibbon EJ, Goldberg ME. Neurons in the monkey superior colliculus predict the visual result of impending saccadic eye movements. J Neurophysiol 73: 1988–2003, 1995.

- Watson AB. Probability summation over time. Vision Res 19: 515–522, 1979.

- Wegener D, Ehn F, Aurich MK, Galashan FO, Kreiter AK. Feature-based attention and the suppression of non-relevant object features. Vision Res 48: 2696–2707, 2008.

- White AL, Carrasco M. Feature-based attention involuntarily and simultaneously improves visual performance across locations. J Vis 11: 2011.

- Williams DW, Sekuler R. Coherent global motion percepts from stochastic local motions. Vision Res 24: 55–62, 1984.

- Wolf C, Schütz AC. Trans-saccadic integration of peripheral and foveal feature information is close to optimal. J Vis 15: 1–18, 2015.

- Wurtz RH. Neuronal mechanisms of visual stability. Vision Res 48: 2070–2089, 2008.

- Yao T, Treue S, Krishna BS. An attention-sensitive memory trace in macaque MT following saccadic eye movements. PLoS Biol 14: e1002390, 2016.

- Zirnsak M, Gerhards RGK, Kiani R, Lappe M, Hamker FH. Anticipatory saccade target processing and the presaccadic transfer of visual features. J Neurosci 31: 17887–17891, 2011.

- Zirnsak M, Moore T. Saccades and shifting receptive fields: anticipating consequences or selecting targets? Trends Cogn Sci 18: 621–628, 2014.

- Zirnsak M, Steinmetz NA, Noudoost B, Xu KZ, Moore T. Visual space is compressed in prefrontal cortex before eye movements. Nature 507: 504–507, 2014.