Abstract

Sleep is important for memory consolidation and contributes to the formation of new perceptual categories. This study examined sleep as a source of variability in typical learners’ ability to form new speech sound categories. We trained monolingual English speakers to identify a set of non-native speech sounds at 8PM, and assessed their ability to identify and discriminate between these sounds immediately after training, and at 8AM on the following day. We tracked sleep duration overnight, and found that light sleep duration predicted gains in identification performance, while total sleep duration predicted gains in discrimination ability. Participants obtained an average of less than 6 hours of sleep, pointing to the degree of sleep deprivation as a potential factor. Behavioral measures were associated with ERP indexes of neural sensitivity to the learned contrast. These results demonstrate that the relative success in forming new perceptual categories depends on the duration of post-training sleep.

Keywords: memory consolidation, speech perception, perceptual learning

Introduction

Perceptual categories are the foundation for mental organization of the external world and underlie complex cognitive operations such as object recognition and linguistic communication. The capacity to acquire new perceptual categories is fundamental to learning. A core question in cognitive science is what kinds of experience facilitate category acquisition. Recent work has pointed to the importance of memory consolidation during sleep in certain types of perceptual learning. The present study asks whether sleep behavior can account for the variability that exists in the ability to acquire new speech sound categories.

Memory processes that are involved in capturing and retaining acoustic information aggregate one’s experience with spoken language into functional acoustic-phonetic units, speech sounds such as /d/ or /a/. Variations in the quality of these speech sound representations have been linked to differences in language and reading ability [1,2]. While differences in linguistic input are often cited as the source of such perceptual variability [3], we propose that variance in the representation of speech sounds amongst typical learners can in part be attributed to differences in sleep. Sleep’s importance in memory consolidation has been documented across many domains [4], and sleep appears to play a role in the organization of speech information as well. Prior work suggests that a period of sleep following perceptual training is associated with generalization of speech information to unfamiliar lexical contexts in mapping synthetic tokens to native categories [5]. In the acquisition of nonnative speech sounds, the effect of sleep appears to be in protecting phonetic features from conflicting acoustic information [6], and in the generalization of acoustic-phonetic features to an unfamiliar talker [7]. In these prior investigations, we have found the magnitude of the effect of offline consolidation on nonnative category acquisition to be highly variable between individuals. One possibility is that the magnitude of overnight gain is dependent on the amount of learning achieved during training. Another possibility, based on a study of word learning that showed that larger gains in learning were associated with longer sleep duration [8], is that the length of post-training sleep determines the degree of improvement. Non-rapid eye movement (NREM) sleep is considered critically important for the consolidation of hippocampal-encoded memory [9]. 
Specifically, it has been suggested that light sleep (NREM stages 1 and 2) is responsible for active potentiation of wake-state memory, in contrast with deep sleep’s (NREM stage 3) role in maintaining homeostatic regulation [10].

The aim of the current study is to test the relationship between sleep behavior and non-native speech sound learning. At 8PM on day 1, we trained monolingual English speakers with typical language and reading ability to identify voiced Hindi dental and retroflex consonants. The dental sound is produced with the tongue tip placed behind the teeth, and the retroflex with the tongue curled behind the alveolar ridge, and both sounds perceptually assimilate to the English (alveolar) /d/ for most monolingual speakers of English. Participants were trained by the experimenter to use a sleep-monitoring headband [11] prior to leaving the lab on the first day (see [12] for reliability of the device), wore the device overnight, and returned at 8AM the next morning for reassessment. We measured learning of the non-native contrast through two tasks: discrimination and identification. In discrimination, the listener compares two tokens to determine if the speech features are categorically similar or dissimilar, but this skill does not require awareness of category identity. In contrast, identification requires an explicit recall of a category label for tokens falling within a specific distribution of auditory stimuli. Therefore, the changes in performance on these perceptual tasks reflect different types of perceptual learning that are both commonly used to describe the representational quality of speech sounds. We tracked performance in each task on days 1 and 2 (see Figure 1 for scheduling of tasks). We predicted that sleep duration would be positively associated with gains in behavioral performance.

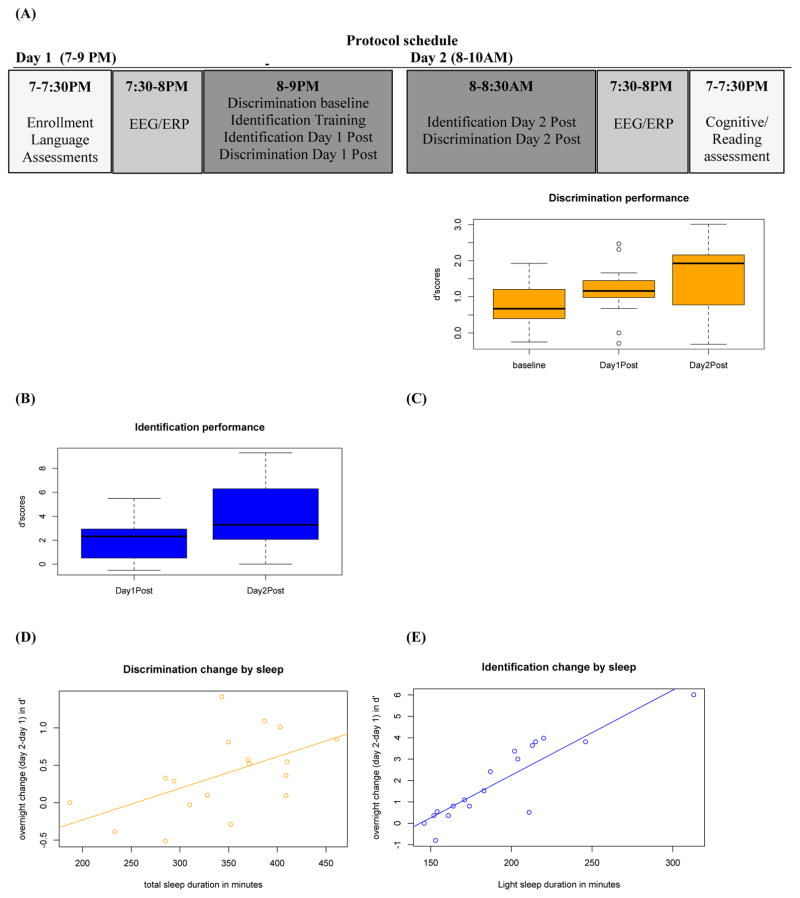

FIGURE 1. Perceptual performance over time and relationships with sleep duration.

(A) Schedule of tasks in the study protocol.

(B, C) Mean perceptual task performance by time point: before training on day 1 (baseline), immediately following training on day 1 (day 1 post), and 12 hours later (day 2 post). Performance is measured by d′ scores (y-axis).

(D, E) Relationships between individual differences in sleep duration and the overnight change to perceptual task performance (day 2 – day 1 d′ scores on y-axis).

It is possible that discrimination and identification tasks underestimate participants’ ability to perceive differences among the trained sounds, or that motivational factors that vary as a function of fatigue might affect task performance. Thus, in order to obtain a task-independent measure of changes to perceptual sensitivity, we also collected electrophysiological recordings during an oddball paradigm to determine each participant’s mismatch negativity (MMN) response to the trained contrast immediately before training on day 1, and after behavioral reassessment on day 2. The MMN component of the event-related potential (ERP) reflects pre-attentive responses to stimulus change, and has been used to measure phonological processing in both typical and atypical populations [13]. We predicted that overnight changes in the MMN response would be positively associated with changes in behavioral performance.

Materials and Methods

Participants

All participants provided informed written consent in accordance with our human subjects research protocol approved by the University of Connecticut Institutional Review Board prior to participation. The data presented in this manuscript are a subset of a larger dataset collected from monolingual, native speakers of American English, 18–24 years of age, who grew up in a household with only native speakers of American English. The participants included in the current report met additional criteria as follows. Participants reported no neurological, socio-emotional, or attention disorder, and a history of typical language, reading, and cognitive development. In addition, participants passed a pure tone hearing screening and obtained scores at or above the 25th percentile on the following standardized assessments of reading and nonverbal cognition at the time of the study: Woodcock Reading Mastery Test-Revised [14], Test of Word Reading Efficiency [15], Wechsler Abbreviated Scale of Intelligence [16]. These participants were further identified as ‘typical’ in language by the Modified Token Test and the 15-word spelling test, which are non-standardized measures devised to identify adults with a history of language impairment [17]. Twenty-seven participants (Mean: 20 years, 5 months; SD: 16 months; 17 female, 25 right-handed) met all criteria and completed the EEG recording and the perceptual training. Of these, data from two participants were excluded from the current analyses because their behavioral performance immediately after training was greater than 3 standard deviations above the mean; this brought their scores close to task ceiling (equal to or greater than 95% accurate), so a meaningful measure of overnight improvement on perceptual tasks could not be obtained. The remaining 25 participants contributed behavioral and EEG measures.
For analyses involving sleep data, we excluded data points that revealed missing segments due to signal dropout during the recording period (n=7). The remaining 18 (Mean: 20 years, 4 months; SD: 17 months; 11 female, 16 right-handed) completed sleep data collection.

Standardized assessments were administered and scored by the first author or one other graduate student, and rescored by one of two trained undergraduate students. Any discrepancies in scoring were flagged by the second scorer and resolved by the first author.

Stimuli

Nonnative phonetic training/assessment

A set of 10 naturally spoken tokens (5 each: /d̪ug/ [dental], /ɖug/ [retroflex]) were produced by a male, native speaker of Hindi in a sound attenuated audiology booth and recorded by an Edirol digital recorder [18]. Praat software [19] was used to cut tokens to the burst onset and to adjust mean amplitudes to 70 dB. For training, two novel visual objects [20] were used, each corresponding to one ‘word’ within the minimal pair /d̪ug/-/ɖug/.

Mismatch Negativity (MMN) response

Two tokens from the above training set (one dental and one retroflex) were further modified in Praat to equate burst, vowel, and token duration to total 250ms from onset to offset. The dental token was used as the frequently occurring Standard, and the retroflex token was used as the infrequently occurring Deviant.

Procedure

Participants were scheduled for the experiment on two consecutive days: from 7–9PM on day 1, and 8–10AM on day 2 (Figure 1). At the end of the first session, participants were instructed on the use of the sleep-monitoring headband [11,12], and were sent home with the device. Participants returned at 8AM the next day and were first reassessed behaviorally on their perception of the nonnative contrast. This was followed by a second EEG/ERP recording session, and then by administration of the remaining language and cognitive assessments.

Nonnative phonetic training/assessment

On day 1, participants completed a baseline assessment of discrimination, training using an identification task, identification post-training assessment, and a post-training discrimination assessment. On day 2, participants completed a post-training identification assessment, followed by a post-training discrimination assessment. E-Prime 2.0 software [21] was used for stimulus presentation and recording participant response. Participants were presented the auditory stimuli through SONY MDR-7506 Hi-Fi digital Sound Monitor headphones, at an average listening level of 70 dB SPL.

During training, participants were first exposed to a familiarization sequence of each auditory token presented with the corresponding picture. They were then instructed to choose which of two pictures of novel objects corresponded to each of the two ‘words.’ Participants completed 200 trials of this task with a 2-minute break halfway through, and were provided with written feedback (‘correct’ or ‘incorrect’) after every trial. During each post-training assessment, participants completed 50 trials of the same identification task without feedback. For the discrimination task, participants were presented two tokens in sequence (stimulus onset asynchrony [SOA] of 1 second), and instructed to indicate whether the sounds at the beginning of the two words belonged to the same or different speech sound categories. During each assessment, participants completed 128 discrimination trials (64 ‘same’ and 64 ‘different’) without feedback.

Performance accuracy for each task was converted to d′ scores [22]. In order to avoid infinite values, proportion accuracy scores of 0 or 1 were adjusted to a ceiling z-value of 4.65 [23].
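The d′ conversion can be illustrated with a minimal sketch. It assumes the standard signal detection definition, d′ = z(hit rate) − z(false alarm rate), and it assumes that extreme proportions are clipped to 0.01 and 0.99, which is one common adjustment and happens to give a maximum d′ of about 4.65. The function name `d_prime`, the `floor` parameter, and the example trial counts are hypothetical and are not taken from the study.

```python
from statistics import NormalDist


def d_prime(hit_rate: float, false_alarm_rate: float, floor: float = 0.01) -> float:
    """Return d' = z(H) - z(F), clipping both rates to [floor, 1 - floor].

    The clipping rule is an assumption made for illustration; it keeps
    proportions of 0 or 1 from producing infinite z-scores.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF

    def clip(p: float) -> float:
        return min(max(p, floor), 1.0 - floor)

    return z(clip(hit_rate)) - z(clip(false_alarm_rate))


# Hypothetical listener: 52 of 64 'different' trials correctly called
# different (hits), 20 of 64 'same' trials called different (false alarms).
example = d_prime(52 / 64, 20 / 64)

# Perfect performance is capped rather than infinite.
ceiling = d_prime(1.0, 0.0)
```

With this clipping rule, a listener at chance (equal hit and false alarm rates) scores 0, and a perfect listener scores the capped maximum rather than infinity.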

ERP recording and analysis

E-Prime 2.0 software was used for stimulus presentation. Stimuli were presented over two wall-mounted free-field speakers approximately two feet in front and two feet above the participants at an average listening level of 75dB SPL. Participants watched a muted nature documentary during the entire recording. In each recording session, participants were presented with a train of 667 stimuli (15%, or 100, deviant tokens) with an SOA of 650ms in two blocks. Stimuli were arranged in a pseudorandom order such that deviant tokens were presented no fewer than 3, and no more than 8, standard tokens apart.
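The spacing constraint on the oddball sequence can be sketched as follows. This is an illustrative generator only, since the randomization procedure actually used is not described: it assumes every deviant is preceded by a run of 3 to 8 standards and ignores the split into two blocks. The function name `oddball_sequence` and its parameters are hypothetical.

```python
import random


def oddball_sequence(n_total=667, n_deviant=100, min_gap=3, max_gap=8, seed=0):
    """Build a standard/deviant sequence in which each deviant follows
    a run of min_gap..max_gap standards (an assumed reading of the constraint)."""
    rng = random.Random(seed)
    n_standard = n_total - n_deviant
    if not n_deviant * min_gap <= n_standard <= n_deviant * max_gap:
        raise ValueError("constraints cannot be satisfied with these counts")

    # Start every run at the minimum length, then hand out the remaining
    # standards one at a time to randomly chosen runs that are not yet full.
    gaps = [min_gap] * n_deviant
    for _ in range(n_standard - n_deviant * min_gap):
        open_runs = [i for i, g in enumerate(gaps) if g < max_gap]
        gaps[rng.choice(open_runs)] += 1

    sequence = []
    for gap in gaps:
        sequence.extend(["standard"] * gap)
        sequence.append("deviant")
    return sequence


seq = oddball_sequence()
```

With the default values the sequence contains 567 standards and 100 deviants, so the runs of standards average about 5.7 tokens, which sits inside the 3 to 8 range.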

The EEG was recorded continuously using a 128-channel Hydrocel geodesic sensor net [24] with a Cz reference. Data were sampled at 500 Hz/channel with hardware filter settings 0.1–100 Hz. Using Netstation software [24], the recordings were bandpass filtered (0.3–30 Hz) and segmented (−100 to 800 ms from stimulus onset) to obtain ERPs. Segments containing artifacts (defined as min-max voltage > 200 μV over a moving average of 80 ms for eye blinks, and min-max voltage > 100 μV over a moving average of 80 ms for eye movements) were removed, and bad channels (defined as channels with greater than 40% of segments marked bad) were spline interpolated before averaging. The data were re-referenced to the average reference and baseline corrected to the 100 ms pre-stimulus interval. Across participants, the percentages of segments retained for statistical analyses were as follows: for day 1, 72% deviant, 74% standard; for day 2, 71% deviant, 71% standard.

Only Standard tokens that immediately preceded Deviant tokens were included in statistical analyses. Area under the curve from 150 to 200ms post-stimulus onset (where the MMN has been identified for auditory stimuli with only a subtle difference [25]) was calculated for the response waveforms at the electrode corresponding to Fz in the 10/20 system for each condition separately, and subjected to further statistical analysis. Individual MMN values were computed by calculating the difference between the Deviant and Standard waveforms for the specified time window.
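The MMN measure described above can be sketched in a few lines. The sketch assumes averaged waveforms sampled every 2 ms (500 Hz) and a signed trapezoidal integral of the Deviant minus Standard difference over 150 to 200 ms; the exact area computation performed by the analysis software is not specified, so this is an assumption. The function name `mmn_area` and the toy waveforms are hypothetical.

```python
def mmn_area(deviant, standard, times_ms, start_ms=150.0, end_ms=200.0):
    """Signed area (in microvolt-milliseconds) under the Deviant - Standard
    difference wave between start_ms and end_ms, using the trapezoid rule."""
    difference = [d - s for d, s in zip(deviant, standard)]
    window = [(t, v) for t, v in zip(times_ms, difference) if start_ms <= t <= end_ms]
    area = 0.0
    for (t0, v0), (t1, v1) in zip(window, window[1:]):
        area += 0.5 * (v0 + v1) * (t1 - t0)
    return area


# Toy data: a segment from -100 to 800 ms at 2 ms steps, a flat Standard,
# and a Deviant that sits 1 microvolt below it throughout the window.
times = [-100 + 2 * i for i in range(451)]
standard = [0.0] * len(times)
deviant = [-1.0 if 150 <= t <= 200 else 0.0 for t in times]

example_area = mmn_area(deviant, standard, times)  # -50.0 for this toy input
```

Because integration is linear, integrating the difference wave gives the same value as subtracting the Standard area from the Deviant area when both are computed over the same window.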

Results

Association between behavior and sleep

We tracked performance in each perceptual task (discrimination and identification) on days 1 and 2 (Figure 1). An initial repeated measures analysis of variance (ANOVA) with time as the within-subjects factor, and subsequent paired samples t-tests, determined that participants improved in discrimination performance at each time point (Time main effect: F(2,34) = 12.09; p<.001, η2=.42; baseline to day 1 post, t(17)=2.74, p=.014; day 1 to day 2 post, t(17)=3.02, p=.008; all pairwise comparisons significant at .05 after Bonferroni correction). A paired samples t-test determined that participants improved in identification performance between days 1 and 2 (t(17)=4.51, p<.001). The increase in performance from day 1 to day 2 in the absence of further training is consistent with previous findings showing that an intervening period of sleep facilitates improvement on perceptual tasks [5].

The average sleep duration across participants was 351 minutes (SD 45 min, range [285, 410]). Different stages of sleep are associated with consolidation of different types of memory [26]; therefore, the two perceptual tasks likely differ in their respective relationships to sleep as well, depending on the type of memory on which performance relies. Because the durations of sleep stages are highly collinear, total sleep, rapid eye movement (REM) sleep, light sleep (NREM stages 1 and 2), and deep sleep (NREM stages 3 and 4) durations were entered stepwise as predictors into a multiple regression model using SPSS software [27] with performance gain (the difference between day 2 and day 1 posttest scores) as the dependent variable for each task. Total sleep duration accounted for the largest proportion of variance in overnight discrimination gain (F(1,16) = 6.94, p = .018, r2 = .302), and light sleep duration accounted for the largest proportion of variance in identification gain (F(1,16) = 8.01, p = .012, r2 = .334).
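As a consistency check on the regression statistics, the proportion of variance explained can be recovered from the F statistic. This assumes that each final stepwise model retained a single predictor, as the degrees of freedom (1, 16) suggest. For the total sleep model of discrimination gain:

```latex
r^2 = \frac{F}{F + df_{\mathrm{error}}} = \frac{6.94}{6.94 + 16} \approx .302
```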

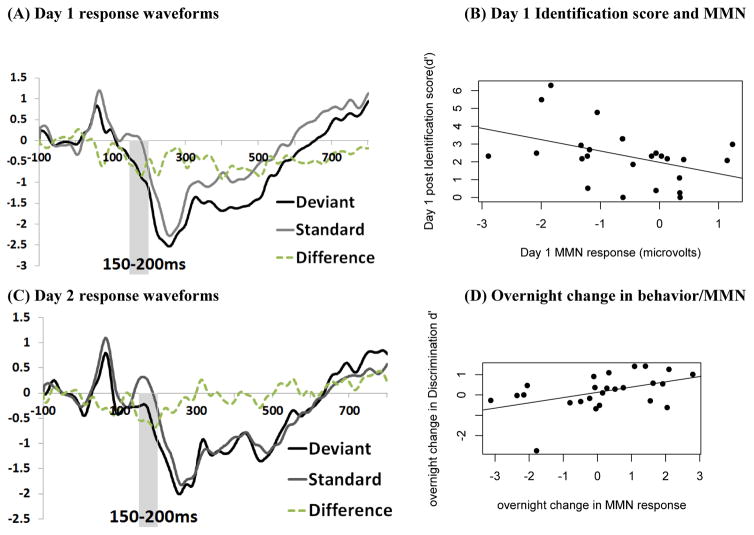

The significant MMN response on day 1 (F(1,23) = 4.96, p = .007, η2 = .27) was found to be associated with identification performance on day 1 (F(1,23) = 4.84, p = .038, r2 = .17). While the MMN response on day 2 was marginal (F(1,23) = 3.98, p = .057, η2 = .14), we found that individual changes in the MMN between days were significantly correlated with the magnitude of behavioral performance gain on the discrimination task (F(1,23) = 6.10, p = .021, r2 = .21), with a larger increase in MMN associated with improved discrimination performance from day 1 to day 2 (Figure 2).

FIGURE 2. ERP responses to the target contrast and relationships with behavioral performance.

(A, C) ERP waveforms for each stimulus condition: Standard, Deviant, and Difference (Deviant minus Standard) recorded at the Fz electrode. Amplitude (y-axis) is in microvolts.

(B, D) Relationships between MMN amplitude (area under the curve 150–200 ms of the difference waveform) and behavior.

Discussion

The present findings lend support to the hypothesis that some differences in perceptual speech category learning are attributable to differences in sleep behavior. The observation that light sleep duration is associated with category identification performance is consistent with the argument that light sleep plays an active role in the consolidation of hippocampal memory [10].

The finding that discrimination performance is associated with total sleep duration allows for several interpretations. For one, category discrimination may be facilitated by a sleep-mediated process (or a combination of processes) that is not confined to a particular stage of sleep. A second possibility is that improvement in perceptual discrimination is facilitated by the absence of conflicting speech input (i.e., silence) that periods of sleep indirectly provide. This interpretation would be consistent with our previous findings [6] that discrimination performance is vulnerable to degradation following exposure to conflicting phonetic information, while identification performance is not. Finally, all participants obtained less than 7 hours of sleep between sessions, raising the possibility that sleep deprivation may have played a role in performance, particularly in those who showed the smallest performance gains. We note that, because the discrimination task was not explicitly trained, performance in category discrimination is an indirect measure of learning. This may explain the discrepancy in the magnitude of training-induced gain between the two tasks.

Future investigations will address the need to disambiguate the subtle distinctions between different perceptual tasks as a measure of perceptual learning. Nonetheless, the relationship between learning and sleep duration suggests that, among typical individuals with comparable linguistic experience, sleep duration accounts for differences in the encoding of new speech features, necessary in the formation of new perceptual categories. Furthermore, electrophysiological results support the interpretation that the observed changes in perceptual performance reflect changes to neural structure and/or physiological changes.

Conclusions

This study demonstrated that relative success in the acquisition of new speech sounds is determined by post-exposure sleep duration. Over time, habitual differences in sleep may lead incrementally to differences in the quality of the resultant linguistic units. This work prompts further investigation into the relationship between habitual sleep behaviors and language function.

Highlights

- Sleep duration predicts improvement in measures of speech sound learning

- Behavioral gains are associated with changes in ERP response magnitude overnight

- Duration of sleep determines relative success of forming new perceptual categories

Acknowledgments

F.S.E. conceived the study, and together with E.B.M. designed the experiment. F.S.E. carried out the data collection and analysis with assistance from N.L. in analysis and interpretation of EEG data; F.S.E., E.B.M., and N.L. co-wrote the paper. We thank Nina Gumkowski for EEG technical support, Stephanie Del Tufo for assistance in participant classification, and Megan Speed and Jessica Joseph for rescoring standardized assessments.

Funding

This work was supported by NIH NIDCD grants R01 DC013064 to EBM, F31 DC014194 to FSE, and NIH NICHD grant P01 HD001994 (Rueckl, PI). FSE was supported by an ASHFoundation scholarship, and the Fund for Innovation in Science Education at the University of Connecticut. The content is the responsibility of the authors and does not necessarily represent official views of our funding sources.

Contributor Information

F. Sayako Earle, Department of Speech, Language, and Hearing Sciences, University of Connecticut, Storrs, CT, USA. Department of Communication Sciences and Disorders, University of Delaware, Newark, DE, USA.

Nicole Landi, Department of Psychology, University of Connecticut, Storrs, CT, USA. Haskins Laboratories, New Haven, CT, USA.

Emily B. Myers, Department of Speech, Language, and Hearing Sciences, University of Connecticut, Storrs, CT, USA. Haskins Laboratories, New Haven, CT, USA.

References

- 1. Nation K, Snowling MJ. Individual differences in contextual facilitation: Evidence from dyslexia and poor reading comprehension. Child development. 1998;69(4):996–1011.

- 2. Joanisse MF, Manis FR, Keating P, Seidenberg MS. Language deficits in dyslexic children: Speech perception, phonology, and morphology. Journal of experimental child psychology. 2000;77(1):30–60. doi: 10.1006/jecp.1999.2553.

- 3. Hartas D. Inequality and the home learning environment: predictions about seven-year-olds’ language and literacy. British Educational Research Journal. 2012;38(5):859–879.

- 4. Rasch B, Born J. About sleep’s role in memory. Physiological reviews. 2013;93(2):681–766. doi: 10.1152/physrev.00032.2012.

- 5. Fenn KM, Nusbaum HC, Margoliash D. Consolidation during sleep of perceptual learning of spoken language. Nature. 2003;425(6958):614–616. doi: 10.1038/nature01951.

- 6. Earle FS, Myers EB. Sleep and native language interference affect non-native speech sound learning. Journal of Experimental Psychology: Human Perception and Performance. 2015;41(6):1680–1695. doi: 10.1037/xhp0000113.

- 7. Earle FS, Myers EB. Overnight consolidation promotes generalization across talkers in the identification of nonnative speech sounds. The Journal of the Acoustical Society of America. 2015;137(1):EL91–EL97. doi: 10.1121/1.4903918.

- 8. Lahl O, Wispel C, Willigens B, Pietrowsky R. An ultra short episode of sleep is sufficient to promote declarative memory performance. Journal of sleep research. 2008;17(1):3–10. doi: 10.1111/j.1365-2869.2008.00622.x.

- 9. Diekelmann S, Born J. The memory function of sleep. Nature Reviews Neuroscience. 2010;11(2):114–126. doi: 10.1038/nrn2762.

- 10. Genzel L, Kroes MC, Dresler M, Battaglia FP. Light sleep versus slow wave sleep in memory consolidation: a question of global versus local processes? Trends in neurosciences. 2014;37(1):10–19. doi: 10.1016/j.tins.2013.10.002.

- 11. Zeo, Inc., Boston, MA.

- 12. Shambroom JR, Fabregas SE, Johnstone J. Validation of an automated wireless system to monitor sleep in healthy adults. Journal of sleep research. 2012;21(2):221–230. doi: 10.1111/j.1365-2869.2011.00944.x.

- 13. Näätänen R, Paavilainen P, Rinne T, Alho K. The mismatch negativity (MMN) in basic research of central auditory processing: a review. Clinical Neurophysiology. 2007;118(12):2544–2590. doi: 10.1016/j.clinph.2007.04.026.

- 14. Woodcock RW. Woodcock Reading Mastery Tests – Revised/Normative Update (WRMT-R/NU). Circle Pines, MN: American Guidance Service; 1998.

- 15. Torgesen JK, Wagner R, Rashotte C. Test of Word Reading Efficiency (TOWRE). Austin, TX: Pro-Ed; 1999.

- 16. Wechsler D. Wechsler Abbreviated Scale of Intelligence. 3. San Antonio, TX: The Psychological Corporation; 1999.

- 17. Fidler LJ, Plante E, Vance R. Identification of adults with developmental language impairments. American Journal of Speech-Language Pathology. 2011;20(1):2–13. doi: 10.1044/1058-0360(2010/09-0096).

- 18. Roland Corporation, Los Angeles, CA.

- 19. Boersma P, Weenink D. Praat: Doing phonetics by computer [Computer program]. 2011. Version 5.3.03.

- 20. “fribbles”; Stimulus images courtesy of Michael J. Tarr. Center for the Neural Basis of Cognition and Department of Psychology, Carnegie Mellon University; http://www.tarrlab.org/

- 21. Psychology Software Tools, Pittsburgh, PA.

- 22. Macmillan NA, Creelman CD. Detection theory: A user’s guide. Cambridge, England: Cambridge University Press; 1991.

- 23. Iverson P, Kuhl PK. Influences of phonetic identification and category goodness on American listeners’ perception of /r/ and /l/. The Journal of the Acoustical Society of America. 1996;99(2):1130–1140. doi: 10.1121/1.415234.

- 24. Electrical Geodesics, Inc., Eugene, OR, USA.

- 25. Sallinen M, Kaartinen J, Lyytinen H. Processing of auditory stimuli during tonic and phasic periods of REM sleep as revealed by event-related brain potentials. Journal of sleep research. 1996;5(4):220–228. doi: 10.1111/j.1365-2869.1996.00220.x.

- 26. Fogel SM, Smith CT, Cote KA. Dissociable learning-dependent changes in REM and non-REM sleep in declarative and procedural memory systems. Behavioural brain research. 2007;180(1):48–61. doi: 10.1016/j.bbr.2007.02.037.

- 27. IBM Corp. IBM SPSS Statistics for Windows, Version 22.0. Armonk, NY: IBM Corp; Released 2013.