Abstract

The objective of this work was to assess the functional utility of new display concepts for an emergency department information system created using cognitive systems engineering methods, by comparing them to similar displays currently in use. The display concepts were compared to standard displays in a clinical simulation study during which nurse-physician teams performed simulated emergency department tasks. Questionnaires were used to assess the cognitive support provided by the displays, participants’ level of situation awareness, and participants’ workload during the simulated tasks. Participants rated the new displays significantly higher than the control displays in terms of cognitive support. There was no significant difference in workload scores between the display conditions. There was no main effect of display type on situation awareness, but there was a significant interaction; participants using the new displays showed improved situation awareness from the middle to the end of the session. This study demonstrates that cognitive systems engineering methods can be used to create innovative displays that better support emergency medicine tasks, without increasing workload, compared to more standard displays. These methods provide a means to develop emergency department information systems—and more broadly, health information technology—that better support the cognitive needs of healthcare providers.

Keywords: cognitive systems engineering, design evaluation, healthcare delivery, human in the loop simulation

INTRODUCTION

Background

Hospital emergency departments (EDs) are unique clinical environments characterized by high acuity patients, intense time pressure, and unpredictable patient arrivals. Providers (physicians and advanced-practice providers) working in the ED must cope with high levels of patient activity that create high cognitive workloads and high decision density (Croskerry & Sinclair, 2001; Schenkel, 2000). In addition, patient information is exchanged in an environment with frequent interruptions, distractions, and multitasking. Tasks including patient hand-offs, procedures, documentation, teaching, and consulting must be conducted while maintaining situation awareness of patient flow and individual patient status (Chisholm, Collison, Nelson, & Cordell, 2000). Thus, ED team communication and coordination are critical for delivering safe, high-quality care (Bagnasco et al., 2013; Fairbanks, Bisantz, & Sunm, 2007; Kilner & Sheppard, 2010; Redfern, Brown, & Vincent, 2009), with teamwork and communication also playing a role in improving both patient and staff satisfaction (Kilner & Sheppard, 2010).

ED patient status boards (also known as patient tracking boards) are one tool commonly used to manage the challenges and cognitive stresses present in the ED (Laxmisan et al., 2007). ED status boards are used by multiple ED staff members, such as physicians, nurses, and technicians, in order to track the demographic information, health status, plans, and assigned caregivers for each patient in the ED. ED status boards have been shown to provide individual memory support, facilitate scheduling and shared cognition between team members, and allow for asynchronous communication events (Hertzum & Simonsen, 2015; Xiao, Schenkel, Faraj, Mackenzie, & Moss, 2007). Furthermore, observational studies in EDs have shown that a wide variety of information is displayed by status boards and frequent provider communication events occur at these boards, establishing their role as critical information artifacts in the ED (Bisantz et al., 2010; Wears, Perry, Wilson, Galliers, & Fone, 2006).

As part of the widespread implementation of computerized health records and processes, ED patient status boards have transitioned from dry-erase whiteboards to electronic emergency department information systems (EDISs). The benefits of these systems include the integration of information from other electronic medical record systems, increased information storage, information recovery, and the ability to use the system from any computer terminal (Bisantz et al., 2010). Although the implementation of electronic health systems is aimed at improving care, there have been unforeseen consequences that have limited the anticipated benefits (Hertzum & Simonsen, 2013). Current EDISs often exhibit a similar format to that of previous whiteboard versions (Bisantz et al., 2010). These electronic systems may lack important features present in the manual versions, particularly with respect to implicit communication among providers and tracking of work and patient progress (Bisantz et al., 2010). These limitations in the new electronic systems may lead to new sources of error (Fairbanks et al., 2008). For instance, previous work (Bisantz et al., 2010) that documented the transition from manual to electronic patient status boards identified unanticipated effects, including changes in communication and coordination. The design of the new electronic status board made it difficult to document and track patient progress. As a result, physicians carried personal notes regarding patient status, resulting in a loss of information that was previously visible and easily shared with other clinical staff. An unanticipated use for the new system was its use to track patients’ dietary needs and provide lists of diets to meal delivery staff. This new use was a benefit to some, but constraints on space meant that there was less space for clinical information (Bisantz et al., 2010; Pennathur et al., 2007; Pennathur et al., 2008).

Cognitive Systems Engineering (CSE) in Healthcare

Cognitive systems engineering supports human performance in complex, dynamic environments by providing a better understanding of human-technology systems and by providing insight for design (Bisantz, 2008; Bisantz & Roth, 2008; Rasmussen, Pejtersen, & Goodstein, 1994). CSE methods have been successfully applied to interface design in a variety of safety-focused industries including defense (Naikar, Moylan, & Pearce, 2006), process control (Jamieson, Miller, Ho, & Vicente, 2007), and aviation (Ahlstrom, 2005; Seamster, Redding, & Kaempf, 1997).

Within healthcare, CSE methods have been used to characterize the complexities and demands of the environment and to address the design of new technology and the impact of its implementation (Bisantz, 2008). One such complexity is communication and coordination within healthcare teams. Information displays (e.g., EDISs) are used to support this collaboration by fulfilling administrative and management needs (e.g., providing information about personnel, workload distribution, resource status), providing decision-making support (e.g., care algorithms), and sharing information among people (Parush, 2015). Gaining an understanding of the environment in terms of workflow, communication patterns, and information needs provides a clearer picture of the design requirements for any type of information display and should motivate their design. For example, Parush (2015) and Parush et al. (2011) used CSE methods to create information displays in support of cardiac surgical teams. An evaluation showed that the displays depicted information effectively, that clinicians understood the information, and that situation awareness was supported. Other work has also been completed using CSE methods, with the goal of improving the healthcare environment, for example in burn intensive care (Nemeth et al., 2015) and at the healthcare organizational level to demonstrate a framework for use of CSE concepts to improve patient safety (Xiao & Probst, 2015).

Cognitive work analysis, a particular CSE method, has been applied to the healthcare domain for over 20 years (Jiancaro, Jamieson, & Mihailidis, 2013). “Its goal is to help designers of complex sociotechnical systems create computer-based information support that helps workers adapt to the unexpected and changing demands of their jobs. In short, cognitive work analysis is about designing for adaptation” (Vicente, 1999, p. xiv). Previous work in applying cognitive work analysis to healthcare has focused on acute care settings such as the intensive care unit (Effken, 2002), anesthesiology (Hajdukiewicz, Vicente, Doyle, Milgram, & Burns, 2001), and trauma resuscitation (Sarcevic, Lesk, Marsic, & Burd, 2008). Specific cognitive work analysis research has focused on issues related to medical informatics, error investigation (Lim, Anderson, & Buckle, 2008), and decision support (Effken, Brewer, Logue, Gephart, & Verran, 2011). Ecological interface design, a methodology that relies on cognitive work analyses, has also been applied in healthcare. Displays created using ecological interface design methods make apparent the various constraints and complexities of the system in a way that facilitates effective action for users to complete objectives in their environment (Burns & Hajdukiewicz, 2004). Various studies applying ecological interface design to healthcare interfaces have shown improved results when compared to standard displays. For example, application of ecological interface design in the intensive care unit demonstrated greater user satisfaction and potentially greater efficiency with the ecologically designed displays, although there was little change regarding recognition speed and overall cognitive workload (Effken, Loeb, Kang, & Lin, 2008). A study using ecological interface design methods in a neonatal intensive care unit found that physician performance improved with the new interface (Sharp & Helmicki, 1998), and a study developing interfaces on mobile devices for diabetes management demonstrated that performance was better and user satisfaction was greater on the ecological interface compared to the standard interface (Kwok & Burns, 2005).

Thus, although CSE methods have been successfully applied to design interfaces in a range of industries, including healthcare, there has been limited application of CSE methods in emergency medicine or to design aspects of health information systems. Using CSE methods may support design of information displays that provide necessary information more effectively. Our research goals were (a) to perform a CSE analysis of the ED and use the results to design novel information displays and (b) to evaluate the success of these methods using a clinical simulation study by comparing the new displays to those based on a currently implemented system. The remainder of this paper is organized as follows: an overview of the CSE analysis and display design process, followed by the evaluation study.

DISPLAY DESIGN

CSE Analysis and Display Design Process

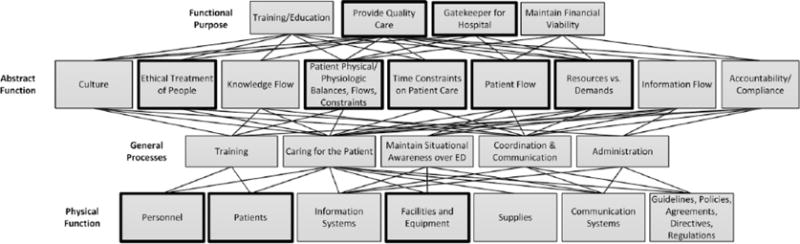

The prototype displays were developed over a 2-year period using CSE, ecological interface design, and user-centered design methods. Responses from semistructured interviews with ED personnel along with information from three subject matter experts (R.J.F., R.L.W., S.P.) on the research team were used to create an abstraction hierarchy of the ED work system (Figure 1). Abstraction hierarchies represent relationships among system purposes, constraints, processes, and physical components of complex systems and can be used to identify information required to monitor and control such systems (Bisantz & Burns, 2008). For extensive descriptions on these methods and how to create such models, see Bisantz, Burns, and Fairbanks (2014), Bisantz and Roth (2008), Burns and Hajdukiewicz (2004), Naikar (2013), and Vicente (1999).

Figure 1.

Abstraction hierarchy. Highlighted nodes made up the primary information displayed in the prototype screens. Copyright Ann Bisantz, University at Buffalo, The State University of New York.

Information requirements identified from the model addressed the following:

goals of providing quality care and serving as the gateway or gatekeeper to the hospital;

constraints related to limited time, space, personnel, and equipment;

processes involved with caring for patients, maintaining situation awareness over the ED, and communication and coordination among care providers; and

system components such as staff, patients, facilities, and equipment.

Key insights derived from the modeling exercise included needs to:

represent patients as they moved through phases of care from triage to disposition, both individually and across all patients;

support quick assessment and comparison of waiting room patients;

support identification of bottlenecks in care (such as unusual waits for testing or delays in reassessing patients); and

support balancing workload across care providers.

See Guarrera et al. (2015) and Guarrera et al. (2012) for more details on the abstraction hierarchy models and information requirements identified.

These information requirements were next used as part of a multistep, iterative design process to develop innovative ED display concepts. A series of brainstorming and review sessions was held with members of the research team in order to develop and refine display concepts and to create a set of semifunctioning displays using Adobe Flash Builder (Version 4.6; Adobe, 2010). Of particular note were several new information concepts and system variables identified through the CSE analysis that had not typically been shown in patient tracking systems. One advantage of using CSE methods that include models of the work domain is that new variables necessary for monitoring and control can be identified, including those that are not currently included in system interfaces and, in some cases, those that are not currently being measured (Burns, Bisantz, & Roth, 2004). The new displays included variables such as patient pain level, the time beds have been occupied, and wait times for laboratory tests. These variables may be available but may not be synthesized or salient in current systems. For instance, the ED status display (one of the final prototype displays) combined eight indicator variables to give an at-a-glance indication of whether the ED was meeting high-level goals. Other concepts, such as explicit representation of the phase of patient care, were novel. This concept represented patients as they moved through five care phases (in waiting room, in ED bed waiting to be seen, assessment and treatment, orders complete/needs reassessment, and ready for discharge or admission) and was used in several of the display panels to support care coordination and flow (e.g., by conveying that orders are complete [therefore, time to recheck the patient], or which patients are discharged but still in a bed).

The initial display concepts were evaluated by ED physicians and nurses who used the displays to perform think-aloud tasks, including an orientation (i.e., after returning from a resuscitation) and planning (i.e., transitioning to a new shift) task. Assessments were generally positive, and feedback was used to create a final set of displays (Clark et al., 2014).

Final Prototype Displays

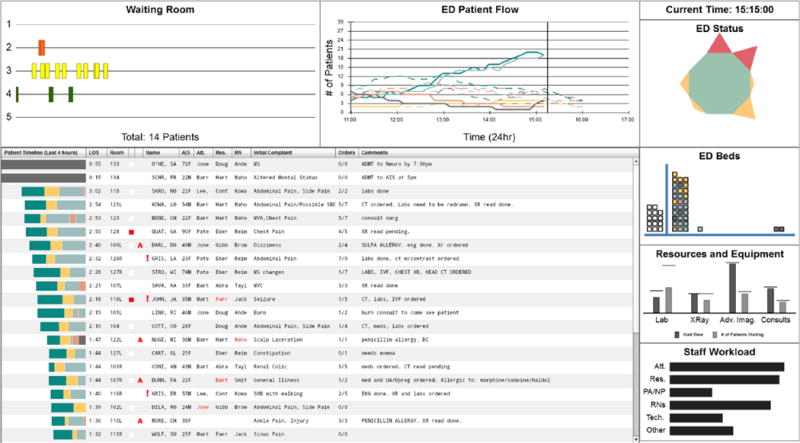

The final set of displays comprised seven display areas, with condensed/miniaturized views of each display provided on an overview display (Figure 2). The overview display was used to navigate to the seven detailed, full-screen displays.

Figure 2.

The prototype overview display used to present a condensed/miniaturized version of the seven display areas and to navigate into those displays. Copyright Ann Bisantz, University at Buffalo, The State University of New York.

The seven display areas were as follows (clockwise from upper left of Figure 2):

Waiting Room: information about waiting room patients, including triage acuity score, wait duration, demographics, initial complaint, as well as pending ED arrivals. The detailed display had two primary components: (a) a “timeline” view, in which small bars representing individual patients moved along a horizontal axis representing time, and (b) detailed patient views, where information on up to four patients at a time could be viewed and compared. Five color-coded timelines stacked vertically correspond to five triage acuity score levels, and patient details could be obtained by hovering or clicking on the small bars.

ED Patient Flow: line graphs of number of patients, over time, in the five phases of treatment (e.g., waiting room, being treated, dispositioned), accompanied by historical trends (dashed lines).

ED Status: overview of ED state based on the following eight key indicator variables: number of patients in the waiting room, number of patients in the ED, percentage of patients boarding, average patient pain level, average time to first evaluation by a physician, average time to first medication, number of patients who left without being seen, and average patient length of stay. Variables were arranged in a “spider” chart format and color-coded according to whether their values were acceptable (green), approaching unacceptable (yellow), or unacceptable (red). If all variables were in the acceptable range, the entire chart collapsed to a small green octagon; approaching unacceptable and unacceptable variable values distorted the shape and added yellow and red triangular areas, respectively. The detailed view provided variable names as well as the current and various threshold values for each variable.

ED Beds: display of available and unavailable beds in the ED and inpatient units (e.g., Floor, ICU). ED beds were represented as stacked squares and were categorized as empty, unavailable, or in use. In-use beds were further categorized according to the length of time they had been occupied and color-coded to indicate the occupying patient’s phase of care. Patient details could be obtained by hovering on the squares.

Resources and Equipment: bar graphs representing queue length and waiting time (current and historical averages) for laboratory, imaging, and consultant resources.

Staff Workload: Information about the number of patients assigned to each staff member was presented as a series of segmented bar graphs—one graph per staff member, one segment per patient. Segment length corresponded to the estimated workload associated with that patient (in this study, it was directly proportional to the patient’s triage acuity score) and the length of the whole bar corresponded to a particular staff member’s total workload.

Patient Progress Overview: Evoking the traditional ED patient status boards, this display showed patient demographics, chief complaint, staff assignments, vital signs, and order information for all patients in tabular form. A color-coded timeline bar to the left of each patient represented the length of time they had spent in each of five phases of treatment. Clicking on a single patient would bring up a detailed view of patient information including order details and results, as well as staff comments.
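Two of the encodings described above, the color coding of the ED Status chart and the segment lengths of the Staff Workload bars, can be illustrated with a minimal sketch. It is not the prototype's implementation: the threshold values, indicator names, and function names are hypothetical, and the sketch assumes for simplicity that a higher value is worse for every indicator. The actual thresholds are not reported here.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    """One ED Status variable with two hypothetical thresholds."""
    name: str
    value: float
    warn_at: float  # assumed boundary for "approaching unacceptable"
    fail_at: float  # assumed boundary for "unacceptable"


def status_color(ind: Indicator) -> str:
    """Map a value to green / yellow / red (assumes higher is worse)."""
    if ind.value >= ind.fail_at:
        return "red"
    if ind.value >= ind.warn_at:
        return "yellow"
    return "green"


def chart_is_collapsed(indicators) -> bool:
    """True when every indicator is acceptable, i.e. the chart would
    collapse to the small green octagon described in the text."""
    return all(status_color(i) == "green" for i in indicators)


def workload_bar(acuity_scores, units_per_point=1.0):
    """Return (segment lengths, total bar length) for one staff member.

    Each segment is directly proportional to the patient's triage
    acuity score, as in this study; units_per_point is an arbitrary
    illustrative scale factor.
    """
    segments = [units_per_point * score for score in acuity_scores]
    return segments, sum(segments)
```

For example, a staff member assigned three patients with acuity scores 2, 3, and 4 would get three segments of lengths 2, 3, and 4 and a bar of total length 9 under the default scale.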

Overall, the displays represented information related to purposes of providing quality care and serving as the “gatekeeper” or entrance to the hospital (essentially, supporting activities related to the management of patient care and patient flow through the ED); constraints related to timeliness of treatment/appropriate triaging, patient physical constraints, time constraints, flows of patients, and various resource-demand balances (testing, staff, etc.); processes related to caring for the patient, maintaining situation awareness, and communication/coordination; and system components such as patient states, personnel workloads, and availability of required laboratory and imaging facilities.

For additional information regarding the displays as well as the design process, see Guarrera et al. (2015).

EVALUATION STUDY: METHODS

Participants

Sixteen physicians and 16 nurses with emergency medicine experience were recruited from EDs within an academic healthcare system. Participants were recruited individually and then scheduled as nurse-physician teams based on availability. Data from the first two teams (four participants) were not analyzed due to technical problems with the simulation software, which resulted in lost data. Self-reported demographic data were obtained, with incomplete data obtained for nine participants (Table 1). All participants provided written consent and were compensated US$250.00 for their time. Research procedures were approved by the relevant institutional review boards.

TABLE 1.

Participant Demographic Information

| Clinician Role | Gender: Male (7) | Gender: Female (21) | Age: M | Age: SD | Age: n |
|---|---|---|---|---|---|
| Physician - Attending | 1 | 5 | 38.0 | 9.1 | 6 |
| Physician - Resident | 2 | 4 | 27.2 | 1.3 | 6 |
| Physician - Unknown type | 2 | 0 | — | | |
| Nurse | 2 | 12 | 34.6 | 10.9 | 7 |

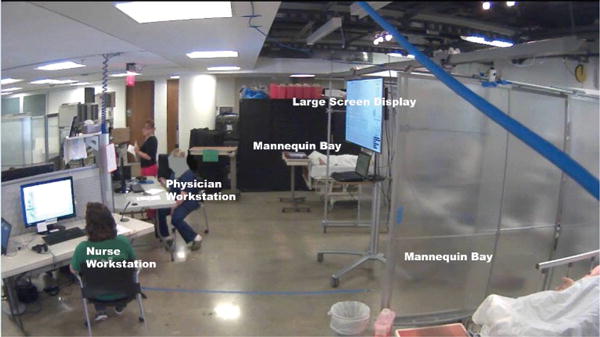

Study Setting

The study was conducted at a clinical simulation center that was part of an academic hospital system. The set-up included two computer workstations showing the displays (one each for the physician and nurse), one large screen monitor showing the displays, and two bays where patient mannequins could be treated. Figure 3 shows the experimental set-up.

Figure 3.

Experimental set-up.

Independent Variables

There were two independent variables included: participant role (nurse vs. physician) and display type (prototype vs. control).

Prototype display

The prototype displays consisted of the seven display areas described above.

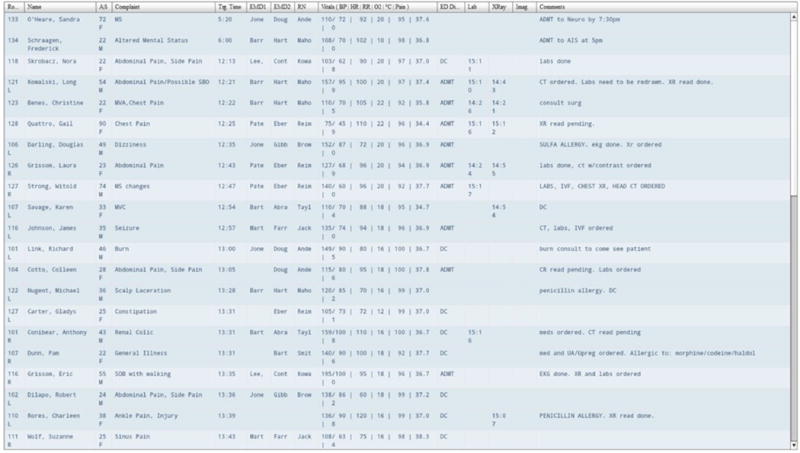

Control display

The control display closely mimicked the primary display of an existing information system used in hospitals where participants worked. This control display showed mock patient information in rows and columns, including content related to patient demographic, chief complaint, vital sign, order/result information, and comments (Figure 4). Participants could obtain additional information about orders by clicking in the lab, imaging, or x-ray order columns. A pop-up box containing information about all orders and their results would then be shown. This feature varied slightly from the actual clinical system that was in use by the healthcare workers, but this information was provided so participants in the control condition had the same access to order details as in the prototype condition.

Figure 4.

The control display, closely mimicking the primary display of an existing information system used in hospitals. It shows mock patient information in a row and column format. Copyright Ann Bisantz, University at Buffalo, The State University of New York.

Task Scenarios

Patient and ED data

Representative, fictional patient cases and ED characteristics were created to populate the displays and were identical between display conditions. Demographic and clinical information about each patient (e.g., name, age, gender, initial complaint, triage acuity score) along with time-stamped events and data related to the patient’s ED visit (e.g., arrival and triage, orders, results, disposition) were generated by combining fictional patient details used in a previous study (Pennathur et al., 2010) with additional information (e.g., additional labs and test results, information about the size of the ED, staffing levels) added by four members of the research team with emergency medicine experience. General characteristics (e.g., rate of patient arrivals, percentage of patients with different incoming triage scores) were based on empirical studies in an actual ED (Pennathur et al., 2010). The study displays did not have any links to more detailed charts or hospital systems.

Information content

Information content was controlled so that similar data about patients were present in both display conditions. As is typical with CSE-informed displays, the prototype displays provided integrated and derived measures, often in graphical formats, which could be inferred or computed from information on the control display. For instance, the prototype displays showed “average” pain level across the ED, whereas within the control display only individual pain values were provided. Comments in the control condition were used to indicate patient information such as allergies that were present graphically on the prototype. Some ED and hospital-level information identified through the CSE methods is not typically found in current systems and was therefore not included in the control condition. This information included historical data about patient flows and wait times for resources, hospital bed availability, average times to first doctor assessment or medication administration, number of patients that left without being seen, and incoming or anticipated patients (though careful monitoring of patients in the control condition could have provided some sense of these variables, which is the method used to currently track these types of measures in many systems).

Study Design

Participants performed the study as a nurse-physician team (each team consisted of one nurse and one physician; participants did not assume alternate “roles” for study purposes). Teams were randomly assigned to either the prototype or control display condition.

Dependent Measures

Cognitive support objectives ratings

The ability of the displays to support various cognitive objectives was assessed using 19 questions developed by the research team regarding the ability of the displays to support high-level objectives in ED oversight and patient care. This questionnaire was based on one developed to evaluate military command-and-control displays (Truxler, Roth, Scott, Smith, & Wampler, 2012). Responses were made on a 9-point rating scale from not at all effective (1) to extremely effective (9), with the additional response option of not experienced during session (NA).

Situation awareness

The Situation Awareness Global Assessment Technique (SAGAT; Endsley, 2000) was used to measure participants’ level of situation awareness. This method employs a “simulation freeze” in which simulated tasks are stopped, information regarding the tasks is removed, and participants are queried regarding task-relevant information to assess their level of awareness of various aspects of the task and situation. Questions were developed to measure three levels of situation awareness: (a) information perception, (b) state comprehension, and (c) planning/projection into the future. Questions were administered at Phase 4 and Phase 8 (see “Experimental Session” subsection for experimental session phase descriptions). Each set (two different sets of questions, referred to as “question set” in analysis) included 15 multiple-choice questions (unique for each set) and one rating scale question (same for each set; range from 1 to 10). Example questions include the following: “Which of these patients is most likely to need an ICU bed?”, “Which patient has a same name alert?”, “Which nurse has the lowest patient workload right now?”, and “Who might you guess will be most likely to have the next disposition decision?” Correct answers to the questions were determined by one of the subject matter experts (A.Z.H.) using the interface and information content displayed at the times of the simulation freezes. The entire SAGAT questionnaire is included (Supplemental Digital Content).
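As an illustration of how the multiple-choice SAGAT items could be scored against an expert-determined answer key, the following minimal sketch computes the proportion of correct responses for one question set. The scoring rule (proportion correct, with unanswered items counted as incorrect), the function name, and the example key are assumptions made for illustration; the exact scoring procedure is not specified in this section.

```python
def score_sagat(responses, answer_key):
    """Return the proportion of multiple-choice items answered correctly.

    responses  -- participant's answers, one per item (None = unanswered,
                  counted as incorrect under this sketch's assumption)
    answer_key -- expert-determined correct answers, same order
    """
    if len(responses) != len(answer_key):
        raise ValueError("responses and answer key must have the same length")
    correct = sum(
        1 for given, expected in zip(responses, answer_key) if given == expected
    )
    return correct / len(answer_key)


# Hypothetical 15-item key and one participant's responses.
example_key = list("ABCDABCDABCDABC")
example_responses = list("DAADABCDABCDABC")  # first three items wrong
example_score = score_sagat(example_responses, example_key)  # 12 of 15 correct
```

The single rating scale item in each set would be handled separately, since it has no correct answer.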

Workload

The NASA Task Load Index (NASA-TLX) was used to measure subjective workload based on six categories (physical demand, mental demand, temporal demand, performance, effort, and frustration) (Hart, 2006; Hart & Staveland, 1988).

Data Collection

SAGAT questions were administered halfway through and at the end of the session, and the cognitive support objectives questionnaire and NASA-TLX were administered at the end of the session. Additionally, three overhead cameras, four microphones (one at each computer workstation and one on each participant), and screen capture software were used to capture activities during the session. The physician also wore a portable eye tracking unit that captured both audio and gaze data. This paper only presents results from the questionnaires (situation awareness, workload, and cognitive support ratings).

Procedure

Orientation

Upon arrival participants provided written informed consent, received an introduction to the goals of the study, were given a tour of the experimental set-up, and were oriented to how the patient mannequins functioned and the placement of supplies available for mannequin treatment. Participants were fitted with microphones and the eye tracker (for the physician).

Subsequently, participants heard a brief description of study components and watched a prerecorded computer demonstration showing how the displays worked, including available information and methods of interaction. Simulated patient and ED data used during this orientation were different from those used during data collection.

Experimental session

After orientation, the experimental session, which simulated a portion of an ED shift, started. During the session, a computer simulation was run to populate the displays with information about the ED and simulated patients, in real time. Staff assignments were included in the data profiles created to populate the displays, and the nurse-physician participant team was assigned to a set of patients for the session. These patients included the two patient mannequins and five additional “virtual” patients with whom participants did not directly interact but were able to monitor and submit orders for using the displays. At the start of the experimental session, participants listened to a 4-minute audio recording simulating provider sign-out that presented participants with information about their assigned patients. During this introductory period, participants could view and interact with the displays.

After the simulated sign-out, participants had 5 additional minutes to view and interact with the displays in order to learn about patients in the system. Then, the 45-minute computer simulation started. The computer simulation was used to update the displays with information about the patients in real time.

The 45-minute session had eight conceptual phases (Table 2), although participants experienced these phases as a continuous session. During Phases 1, 3, and 5, an experimenter provided participants with a variety of interruptions (e.g., phone calls, requests from colleagues) regarding patients represented within the displays in the form of paper requests; they responded by writing answers on the paper request. At the start of Phases 2 and 6, an experimenter announced that a patient who was either experiencing shortness of breath (Phase 2) or chest pain (Phase 6) had been brought in for treatment and directed participants to one of the two patient bays where they proceeded to provide care to the patient simulation mannequins, using typical patient simulation methods. After care was complete, participants returned to their workstations as they normally would during a shift in the ED. During Phase 7, the experimenter announced that there had been a multiple vehicle collision on a nearby highway and that the ED needed to prepare for a large number of incoming patients. This mass casualty incident interruption was intended to cause participants to interact with all ED patients within the displays and not just with those to whom the participants were assigned.

TABLE 2.

Conceptual Phases During Experimental Session

| Phase | Time | Content |
|---|---|---|
| 1 | 5 min | Interface use and interruptions |
| 2 | 10 min | Treat patient mannequin with shortness of breath |
| 3 | 5 min | Interface use and interruptions |
| 4 | 10 min | Situation awareness questions |
| 5 | 5 min | Interface use and interruptions |
| 6 | 15 min | Treat patient mannequin with chest pain |
| 7 | 5 min | Interface use and mass casualty motor vehicle collision notification |
| 8 | 10 min | Situation awareness questions, then workload and cognitive support questions |

As previously mentioned, at two points (Phases 4 and 8) the simulation was paused and participants were presented with 16 situation awareness questions. After the first set of situation awareness questions, simulation resumed. After the second set of situation awareness questions, the experimental simulation session ended and participants completed the workload and cognitive support questionnaires.
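The phase durations in Table 2 sum to more than the 45-minute simulation. One reading that reconciles the two figures is that the simulation clock does not advance during the two questionnaire phases. The short sketch below checks that arithmetic; the `running` flag encodes this interpretation and is an assumption, not something stated explicitly in the table.

```python
# (phase, minutes, running) with durations taken from Table 2.
# "running" is an assumed flag: False where the simulation is paused or
# has ended and questionnaires are administered (Phases 4 and 8).
PHASES = [
    (1, 5, True),
    (2, 10, True),
    (3, 5, True),
    (4, 10, False),
    (5, 5, True),
    (6, 15, True),
    (7, 5, True),
    (8, 10, False),
]


def total_minutes(phases, running_only=False):
    """Sum phase durations, optionally counting only running phases."""
    return sum(
        minutes for _, minutes, running in phases if running or not running_only
    )


simulated = total_minutes(PHASES, running_only=True)  # simulation clock time
elapsed = total_minutes(PHASES)                       # all phases combined
```

Under this reading, the six running phases account for the 45 simulated minutes, and the two questionnaire phases add a further 20 minutes to the session.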

While at their workstations, participants talked with their team member about patient care, wrote orders, used the displays to review patient and ED information, and entered notes and comments for both mannequin and virtual patients.

Analysis

Statistical analyses were conducted on data from seven teams in each display condition using SAS 9.4. Analysis of variance (ANOVA) was conducted for the SAGAT, cognitive support objectives ratings, and NASA-TLX survey responses using a mixed model including the following factors: display type (prototype/control, between subjects), clinician role (physician/nurse, between subjects), survey item (question set, question or subscale, for SAGAT, cognitive support objectives ratings, and NASA-TLX, respectively, within-subjects), and subject nested within team (nurse-physician pair). Post hoc results are reported using Least Square Difference method and two-sample t test 95% confidence intervals. Additional characteristics of the SAGAT responses are also presented.

For the cognitive support objectives ratings, three of the physician participants (two prototype and one control condition) responded “NA” to one or more of the questions. For the NASA-TLX, one nurse participant using the control displays did not answer the questionnaire. Analysis was completed both using averages for these cells (within clinician role, display type, and question/subscale) and with these cases excluded from analysis; statistical results were the same in both cases. Results will be presented using the exclusion case.
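The two ways of handling missing responses described above can be sketched in a few lines of Python. This is a minimal illustration, not the SAS procedure used in the study. The record layout, the function names, and all ratings are hypothetical. The text does not state whether exclusion removed single responses or whole participants, so the sketch drops only the missing responses.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical long-format records: (subject, role, display, item, rating).
# A rating of None stands for an "NA" or unanswered item. All values are
# invented for illustration and are not study data.
RECORDS = [
    ("P1", "physician", "prototype", "Q1", 6),
    ("P2", "physician", "prototype", "Q1", None),
    ("P3", "physician", "prototype", "Q1", 4),
    ("N1", "nurse", "prototype", "Q1", 7),
    ("P4", "physician", "control", "Q1", 5),
]


def impute_cell_means(records):
    """Fill missing ratings with the mean of the same role/display/item cell."""
    cells = defaultdict(list)
    for _, role, display, item, rating in records:
        if rating is not None:
            cells[(role, display, item)].append(rating)
    filled = []
    for subj, role, display, item, rating in records:
        if rating is None:
            # Raises StatisticsError if the cell has no observed ratings.
            rating = mean(cells[(role, display, item)])
        filled.append((subj, role, display, item, rating))
    return filled


def exclude_missing(records):
    """Drop every record whose rating is missing."""
    return [r for r in records if r[4] is not None]


imputed = impute_cell_means(RECORDS)
excluded = exclude_missing(RECORDS)
print(imputed[1])     # P2's missing rating filled from the cell mean
print(len(excluded))  # one record fewer than the original list
```

Running the same analysis on both data sets and comparing the conclusions is the sensitivity check the paragraph describes.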

RESULTS

Cognitive Support Objectives Ratings

Results for the cognitive support objectives ratings show significant main effects of display type (F(1, 426) = 5.68, p = .018) and question (F(18, 426) = 4.60, p < .0001). The interaction was not significant (F(18, 426) = 1.55, p = .068). Support for cognitive objectives was significantly higher for the prototype compared to the control displays (5.72 vs. 4.53, respectively). At the question level, the prototype displays had higher cognitive support ratings for all except one question (Question 13), and post hoc testing indicated significant differences between the prototype and control displays for 7 of the 19 questions (Table 3).

TABLE 3.

Cognitive Support Objectives, Rating Results for Each Question

| Cognitive Support Objectives Question | Prototype Mean Rating | Control Mean Rating | 95% Confidence Interval (Difference) |
|---|---|---|---|
| 1. Assess the overall state of the ED (is it a good day or a bad day?) | 5.62 | 5.07 | (−2.02, 0.93) |
| 2. Assess whether you have the resources required (e.g. beds, staffing) for the current patient demand | 5.75 | 5.29 | (−1.93, 1.00) |
| 3. Project whether you will have the resources required (e.g., beds, staffing) to meet demands for the next few hours (e.g., anticipating arrivals, discharges, admissions, etc.) | 4.83 | 4.50 | (−2.01, 1.35) |
| 4. Support effective communication and coordination among ED staff | 5.86 | 5.00 | (−2.20, 0.49) |
| 5. Maintain awareness of overall acuity of patients waiting and currently being treated (do we have lots of sick patients, or are they mostly nonacute?) | 5.93 | 4.36 | (−3.14, 0.00)* |
| 6. Identify which patients are most critical | 5.71 | 3.64 | (−3.56, −0.59)* |
| 7. Maintain awareness of acuity and changes in acuity of individual ED patients | 4.79 | 3.21 | (−2.86, −0.28)* |
| 8. Identify which patients have been in the ED the longest | 7.36 | 6.14 | (−2.71, 0.28) |
| 9. Identify where patients are in the care process (across all patients) | 7.21 | 4.29 | (−4.36, −1.50)* |
| 10. Identify where only my patients are in the care process | 5.21 | 5.07 | (−2.03, 1.75) |
| 11. Identify bottlenecks or hold-ups preventing overall patient flow through the ED | 4.64 | 3.57 | (−2.44, 0.30) |
| 12. Identify hold-ups in the care of an individual patient | 5.36 | 4.21 | (−2.42, 0.13) |
| 13. Support effective communication and coordination among ED staff, in regard to an individual patient and that patient’s treatment plan | 5.21 | 5.21 | (−1.65, 1.65) |
| 14. Support effective planning for individual patient care | 6.14 | 5.21 | (−2.41, 0.55) |
| 15. Provide support for prioritizing your tasks | 5.43 | 3.79 | (−3.25, −0.04)* |
| 16. Identify the next patient I should sign up for (e.g., patients that need to be seen out of order or seen from waiting room) | 6.15 | 4.00 | (−3.20, 0.38) |
| 17. Understand whether individual patients are waiting for you to assess or treat them (i.e., if you are the hold-up) | 5.50 | 4.07 | (−3.24, 0.38) |
| 18. Assess the current state of the ED with respect to balancing patients and workload across providers | 6.43 | 4.79 | (−3.17, −0.12)* |
| 19. Support effective communication and coordination among ED staff, in regard to balancing patients and workload across providers | 6.14 | 4.36 | (−3.27, −0.30)* |

*p < .05.
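As a reading aid for Table 3, the sketch below checks a few rows under two assumptions that are inferred from the table rather than stated in the text: that the reported interval is for the control mean minus the prototype mean (so negative values favor the prototype), and that a row is significant when its interval excludes zero. The means and intervals are copied from the table. The function name and the rounding tolerance are illustrative choices.

```python
# (question, prototype mean, control mean, reported 95% CI) copied from Table 3.
ROWS = [
    (1, 5.62, 5.07, (-2.02, 0.93)),
    (6, 5.71, 3.64, (-3.56, -0.59)),
    (9, 7.21, 4.29, (-4.36, -1.50)),
    (13, 5.21, 5.21, (-1.65, 1.65)),
]


def summarize(row, tol=0.02):
    """Return (difference, centered?, excludes_zero?) for one table row."""
    _, prototype, control, (low, high) = row
    diff = control - prototype              # sign convention inferred from the table
    midpoint = (low + high) / 2
    centered = abs(diff - midpoint) <= tol  # tolerance allows for table rounding
    excludes_zero = high < 0 or low > 0
    return round(diff, 2), centered, excludes_zero


for row in ROWS:
    diff, centered, excludes_zero = summarize(row)
    print(f"Q{row[0]}: diff={diff:+.2f} centered={centered} "
          f"excludes_zero={excludes_zero}")
```

For these rows, each interval is centered on the control minus prototype difference, and the two rows whose intervals lie entirely below zero are the ones marked as significant.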

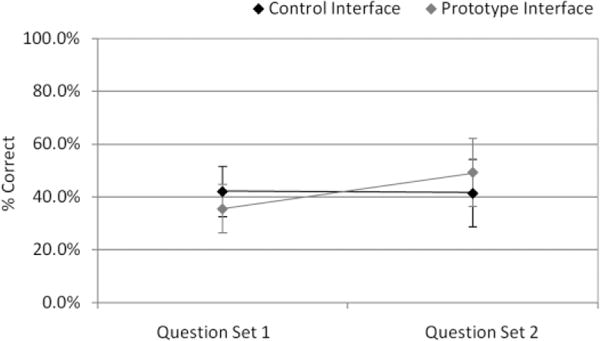

Situation Awareness

There was a significant interaction between question set and display type for SAGAT scores (F(1, 21) = 4.56, p = .045), but there were no main effects (Figure 5). Post hoc testing showed a significant difference in the percent of correct responses between question sets for the prototype displays (t(21) = −2.80, p = .011). The average percent of correct responses increased from 35.6% on Question Set 1 to 49.2% on Question Set 2. Note that chance performance (based on the number of multiple choice answers) was 28%.

Figure 5.

Situation awareness results, significant interaction between question set and display type.
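To put the SAGAT percentages in context, the sketch below shows one common way a chance level can be derived from the number of answer options per question (uniform guessing), and how far the reported prototype scores sit above the reported 28% chance level. The option counts are hypothetical, since the individual SAGAT items are not listed here, and the function name is illustrative. Only the three percentages are taken from the text.

```python
def chance_accuracy(option_counts):
    """Expected proportion correct when guessing uniformly on each question."""
    return sum(1 / k for k in option_counts) / len(option_counts)


# Hypothetical option counts for illustration only; the actual SAGAT items
# and their numbers of answer choices are not given in this section.
example_counts = [4, 4, 4, 3, 5, 2]

REPORTED_CHANCE = 28.0     # percent, from the text
SET_1, SET_2 = 35.6, 49.2  # prototype percent correct, from the text

print(f"Example chance level: {100 * chance_accuracy(example_counts):.1f}%")
print(f"Set 1 margin over chance: {SET_1 - REPORTED_CHANCE:.1f} points")
print(f"Set 2 margin over chance: {SET_2 - REPORTED_CHANCE:.1f} points")
print(f"Gain from Set 1 to Set 2: {SET_2 - SET_1:.1f} points")
```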

The rating question in each question set asked participants to choose a number from 1 (running smoothly) to 10 (out of control) to rate the current state of the ED. Responses on this question showed a significant display type main effect (F(1, 24) = 4.91, p = .036). Prototype display scores (6.23) were significantly greater (i.e., the ED was rated more “out of control”) than control display scores (5.11).

Workload

There were no significant effects due to display type for NASA-TLX scores, but subscale was a significant main effect (F(5, 115) = 27.05, p < .0001). Physicians had higher mean workload than nurses (50.96 vs. 40.22), but this effect was not significant (F(1, 115) = 3.31, p = .072). Post hoc pairwise comparisons showed physical demand had significantly lower scores than the other five factors (all p values < .0001), reflecting the primarily cognitive nature of the tasks. The performance subscale was also significantly lower than the remaining four subscales (p values ≤ .026).
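For readers unfamiliar with NASA-TLX scoring, the sketch below computes an unweighted ("raw") overall score as the mean of the six subscale ratings. Whether the study used raw or weighted scoring is not stated in this section, so the raw form is an assumption. The ratings are invented and the function name is illustrative. The six subscales also explain the numerator degrees of freedom reported for the subscale effect.

```python
from statistics import mean

SUBSCALES = (
    "mental demand", "physical demand", "temporal demand",
    "performance", "effort", "frustration",
)


def raw_tlx(ratings):
    """Unweighted (raw) NASA-TLX score: the mean of the six subscale ratings."""
    missing = [s for s in SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return mean(ratings[s] for s in SUBSCALES)


# Hypothetical ratings on a 0-100 scale, invented for illustration.
example = {
    "mental demand": 70, "physical demand": 15, "temporal demand": 65,
    "performance": 35, "effort": 60, "frustration": 45,
}

print(f"Raw TLX: {raw_tlx(example):.1f}")
print(f"Numerator df for the subscale effect: {len(SUBSCALES) - 1}")
```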

DISCUSSION

The primary purpose of this study was to assess the efficacy of innovative displays, developed using systematic CSE methods, for supporting the work of ED clinicians. In particular, prototype displays were compared to standard displays through a controlled experiment conducted in a clinical simulation environment. Although there has been continued discussion regarding the usability of health information technology systems, the focus is often on the basic user-interface design, such as the number of clicks, font, layout, and color (Perry, 2004). Although these factors are important, providing higher level support of the cognitive work of the end user is a critical and often underrecognized component of the overall usability of a system.

There were several important findings. First, the prototype displays were rated better with respect to the cognitive support objectives than the control displays, providing support for the use of systematic CSE design methods that incorporate extensive knowledge elicitation and analysis of clinician work activities, information needs, and system constraints in the development of health information technology systems. Although CSE methods have been applied in other medical contexts (Jiancaro et al., 2013), to our knowledge this is the first application of these methods to the design of EDISs as well as the first to demonstrate the benefits of this approach through a clinical simulation study.

The concepts demonstrating improved support while using the prototype displays included those related to patient acuity, identifying the most critical patients, monitoring patient status in the care process, and balancing workload across providers. Providing better support regarding these concepts is critical for improving the function of the ED, resulting in potentially better patient care and a generally more efficient ED.

The only cognitive support objective with a rating score not greater for the prototype compared to the control related to helping clinicians track individual patients under their care. There was no view within the prototype displays that showed only “your patients,” merely a view of all ED patients that could be sorted by provider. Had a “your patients” view been available, this support objective might have shown improvement with the prototype.

Second, although there was no overall difference in situation awareness between display conditions, there was a significant interaction between the display type (prototype/control) and question set (half-way through/at end of the 45-min session). Situation awareness for the prototype displays significantly improved from the first to second question set. This result suggests there was an effect of experience with the prototype displays. At first, participants using the prototype may have been at a disadvantage using the new displays, but with experience, situation awareness improved. Thus, participants were able to learn and take advantage of the new displays in a relatively short amount of time. This may imply that displays designed using CSE methods would better support situation awareness in the ED.

Participants using the prototype displays rated the ED as more “out of control” than participants using the control displays did (though both scores were greater than the neutral point on the scale), demonstrating different overall perspectives on the state of the ED. Although there is no objective measure of “out of control” for comparison, this result may have arisen because the prototype displays provided more explicit, graphic information that was particularly useful to the participants’ clinical work in the ED, such as information about the waiting time, severity of patients in the waiting room, status of beds as opened or filled, as well as an explicit representation of the ED status (the “spider” display). Taken together, this information may have allowed users a more direct assessment of the ED state.

Finally, despite the fact that the prototype included very different information representations and organization than the control display, there was no increase in workload as measured by NASA-TLX. This result is even more compelling given that the control display was very similar to displays that had been in use in the hospital system from which the participants had been recruited. Therefore, they were likely familiar with it. Thus, using the unfamiliar prototype displays did not result in increased workload in this study.

Limitations

The results from this study are based on a relatively small sample of participants. Gender demographics of the physicians in our sample differed from national statistics (75% male nationally vs. 36% male reported in our sample) (Association of American Medical Colleges, Center for Workforce Studies Colleges, 2012) but were similar for nurses (9% male nationally vs. 14% male reported in our sample) (U.S. Census Bureau, 2013); we did not attempt to balance gender due to the difficulty in recruiting experienced personnel for a relatively time-consuming study. Some participant teams may have worked together in real ED shifts, which could have affected the results. Participant scheduling was done randomly within the constraints of participants’ schedules to mitigate this issue. The scenario used was shorter in duration than a typical ED shift and therefore may not have been representative of all activity levels (i.e., lulls vs. busy periods), which may have affected the degree to which participants monitored the systems looking for changes. This could be investigated through additional study. Additionally, the study took place in a simulated environment, and the displays developed for this study were not designed to emulate a complete EDIS or full electronic health record and did not link to detailed patient charts. The use of an advanced clinical simulation center, as well as the development of detailed patient cases and sign-over information by a team of experienced emergency medicine physicians, helped to mitigate these limitations.

CONCLUSIONS

The present study demonstrated that the CSE methodology used to design these prototype displays was effective in creating displays that support the work of ED clinicians without increasing workload, with the additional potential for improved situation awareness. These results have important implications for the design of IT systems that support emergency medicine as well as other aspects of healthcare. Further analysis of participants’ interaction with the prototype could be conducted to determine how and when the specific features and display areas were used. Such an analysis could guide future research to enhance the usability and usefulness of the different features of the displays. This study represents a rare comparison of a current type of EDIS with a novel, CSE-derived prototype for the ED, and as such provides valuable directions for future research and design.

Acknowledgments

The project described was supported by Agency for Healthcare Research and Quality (AHRQ) Grant R18HS020433. Its contents are solely the responsibility of the authors and do not necessarily represent the official views of AHRQ. During the time of this study, Dr. Fairbanks was supported by National Institute for Biomedical Imaging and Bioengineering Grant 1K08EB009090. The authors acknowledge research team members Vicki Lewis and Angelica Hernandez for their various contributions throughout the project.

Biographies

Nicolette McGeorge, MS, is a graduate student in Industrial and Systems Engineering at the University at Buffalo, State University of New York (SUNY). Her research interests include application of cognitive engineering in healthcare and health information technology as well as decision making in complex, dynamic environments. She has a master’s degree in industrial and systems engineering from Rochester Institute of Technology.

Sudeep Hegde, PhD, is a postdoctoral research fellow in the Department of Anesthesia, Critical Care and Pain Medicine at Beth Israel Deaconess Medical Center. His research interests include applying human factors methods and resilience engineering principles to patient safety and healthcare in general. He has a doctorate in industrial and systems engineering from the University at Buffalo, SUNY.

Rebecca L. Berg, BS, is a graduate student in industrial and systems engineering at the University at Buffalo, SUNY. Her research interests include human factors and ergonomics in the healthcare setting. She has a bachelor’s degree in biomedical engineering from the University at Buffalo, SUNY.

Theresa K. Guarrera-Schick, MS, is a senior user experience designer specializing in the application of human factors engineering methods in product design and user interface design. She has a master’s degree in industrial and systems engineering from the University at Buffalo, SUNY.

David T. LaVergne, MS, is a graduate student in industrial and systems engineering at the University at Buffalo, SUNY. His research interests include sonification and the design of auditory information displays. He has a master’s degree in industrial and systems engineering from the University at Buffalo, SUNY.

Sabrina N. Casucci, PhD, MBA, is a research scientist in the School of Nursing and Department of Industrial and Systems Engineering at the University at Buffalo, SUNY. Her research interests include healthcare process modeling and the design of consumer and clinical health information technologies. She has a doctorate in industrial and systems engineering and a master’s degree in business administration from the University at Buffalo, SUNY.

A. Zachary Hettinger, MD, MS, is an emergency physician, assistant professor of emergency medicine in the School of Medicine at Georgetown University, and a clinical informaticist. He applies human factors engineering principles to improving healthcare. He has a medical degree from the University of Rochester, School of Medicine.

Lindsey N. Clark, MA, is a human factors research specialist whose research focuses on user-centered design processes for medical devices and social network analysis in healthcare settings. She has a master’s degree in mass communications from the University of Florida, Gainesville.

Li Lin, PhD, is a professor in the Department of Industrial and Systems Engineering at the University at Buffalo, SUNY. His research interests are in manufacturing and healthcare systems, including computer simulation, workflow analysis and improvement, and efficient healthcare deliveries in ED, OR, and home care. He has a doctorate in industrial engineering from Arizona State University.

Rollin J. Fairbanks, MD, MS, is an emergency physician; associate professor of emergency medicine in the School of Medicine at Georgetown University; adjunct associate professor in the Department of Industrial and Systems Engineering at the University at Buffalo, SUNY; and human factors engineer. His research interests include applying the science of safety to medical systems. He has a medical degree from Virginia Commonwealth University, School of Medicine.

Natalie C. Benda, BS, is a graduate student in industrial and systems engineering at the University at Buffalo, SUNY. Her research interests involve improving health information technology. She has a bachelor’s degree in industrial engineering from Purdue University.

Longsheng Sun, MS, is a graduate student in industrial and systems engineering at the University at Buffalo, SUNY. His research interests include optimization theories and their applications. He has a master’s degree in industrial and systems engineering from the University at Buffalo, SUNY.

Robert L. Wears, MD, PhD, is a professor in the Department of Emergency Medicine at the University of Florida and a visiting professor in the Clinical Safety Research Unit at Imperial College London. His research interests lie in technical work studies, joint cognitive systems, and particularly the impact of information technology on safety and resilient performance. He has a medical degree from Johns Hopkins University, School of Medicine.

Shawna Perry, MD, is an associate professor in the Department of Emergency Medicine at the University of Florida. Her research interest is patient safety, with an emphasis in human factors/ergonomics and system failure. She has published widely on topics related to these areas as well as transitions in care, the impact of information technology on clinical care, and naturalistic decision making. She has a medical degree from Case Western Reserve University, School of Medicine.

Ann Bisantz, PhD, is a professor in the Department of Industrial and Systems Engineering at the University at Buffalo, SUNY. Her research interests focus on cognitive engineering methods, decision-making, and information display, in complex settings such as healthcare and military command-and-control. She has a doctorate in industrial and systems engineering from Georgia Institute of Technology. She is a fellow of the Human Factors and Ergonomics Society.

Footnotes

SUPPLEMENTARY MATERIAL

The online supplementary material is available at http://edm.sagepub.com/supplemental.

Contributor Information

Nicolette McGeorge, Department of Industrial and Systems Engineering, University at Buffalo, State University of New York.

Sudeep Hegde, Department of Industrial and Systems Engineering, University at Buffalo, State University of New York.

Rebecca L. Berg, Department of Industrial and Systems Engineering, University at Buffalo, State University of New York.

Theresa K. Guarrera-Schick, Department of Industrial and Systems Engineering, University at Buffalo, State University of New York.

David T. LaVergne, Department of Industrial and Systems Engineering, University at Buffalo, State University of New York.

Sabrina N. Casucci, Department of Industrial and Systems Engineering, University at Buffalo, State University of New York.

A. Zachary Hettinger, National Center for Human Factors in Healthcare, MedStar Institute for Innovation, and Department of Emergency Medicine, Georgetown University.

Lindsey N. Clark, National Center for Human Factors in Healthcare, MedStar Institute for Innovation.

Li Lin, Department of Industrial and Systems Engineering, University at Buffalo, State University of New York.

Rollin J. Fairbanks, National Center for Human Factors in Healthcare, MedStar Institute for Innovation; Department of Industrial and Systems Engineering, University at Buffalo, State University of New York; Department of Emergency Medicine, Georgetown University; and Simulation Training & Education Lab (SiTEL), MedStar Health.

Natalie C. Benda, Department of Industrial and Systems Engineering, University at Buffalo, State University of New York, and National Center for Human Factors in Healthcare, MedStar Institute for Innovation.

Longsheng Sun, Department of Industrial and Systems Engineering, University at Buffalo, State University of New York.

Robert L. Wears, Department of Emergency Medicine, University of Florida, and Clinical Safety Research Unit, Imperial College London.

Shawna Perry, Department of Emergency Medicine, University of Florida.

Ann Bisantz, Department of Industrial and Systems Engineering, University at Buffalo, State University of New York.

References

- Adobe. Adobe Flash Builder. San Jose, CA: Adobe Systems Inc; 2010. (Version 4.6). [Google Scholar]

- Ahlstrom U. Work domain analysis for air traffic controller weather displays. Journal of safety research. 2005;36(2):159–169. doi: 10.1016/j.jsr.2005.03.001. [DOI] [PubMed] [Google Scholar]

- Association of American Medical Colleges, Center for Workforce Studies Colleges. 2012 physician specialty data book. Washington, DC: Author; 2012. [Google Scholar]

- Bagnasco A, Tubino B, Piccotti E, Rosa F, Aleo G, Di Pietro P, Gambino L. Identifying and correcting communication failures among health professionals working in the Emergency Department. International Emergency Nursing. 2013;21(3):168–172. doi: 10.1016/j.ienj.2012.07.005. [DOI] [PubMed] [Google Scholar]

- Bisantz AM. Cognitive engineering applications in health care. Paper presented at the Frontiers of Engineering: Reports on Leading-Edge Engineering from the 2008 Symposium; San Diego, CA. 2008. [Google Scholar]

- Bisantz AM, Burns CM. Applications of cognitive work analysis. Boca Raton, FL: CRC Press; 2008. [Google Scholar]

- Bisantz AM, Burns CM, Fairbanks RJ. Cognitive systems engineering in health care. Boca Raton, FL: CRC Press, Taylor & Francis; 2014. [Google Scholar]

- Bisantz AM, Pennathur PR, Guarrera TK, Fairbanks RJ, Perry SJ, Zwemer F, Wears RL. Emergency department status boards: A case study in information systems transition. Journal of Cognitive Engineering and Decision Making. 2010;4(1):39–68. [Google Scholar]

- Bisantz AM, Roth E. Analysis of cognitive work. Paper presented at the Reviews of Human Factors and Ergonomics; Santa Monica, CA. 2008. [Google Scholar]

- Burns CM, Bisantz AM, Roth EM. Lessons from a comparison of work domain models: Representational choices and their implications. Human Factors: The Journal of the Human Factors and Ergonomics Society. 2004;46(4):711–727. doi: 10.1518/hfes.46.4.711.56810. [DOI] [PubMed] [Google Scholar]

- Burns CM, Hajdukiewicz J. Ecological interface design. Boca Raton, FL: CRC Press; 2004. [Google Scholar]

- Chisholm CD, Collison EK, Nelson DR, Cordell WH. Emergency department workplace interruptions are emergency physicians “interrupt-driven” and “multitasking”? Academic Emergency Medicine. 2000;7(11):1239–1243. doi: 10.1111/j.1553-2712.2000.tb00469.x. [DOI] [PubMed] [Google Scholar]

- Clark LN, Guarrera TK, McGeorge NM, Hettinger AZ, Hernandez A, LaVergne DT, Bisantz AM. Usability evaluation and assessment of a novel emergency department IT system developed using a cognitive systems engineering approach. Paper presented at the Proceedings of the 2014 International Symposium on Human Factors and Ergonomics in Healthcare: Leading the Way; Chicago. 2014. [Google Scholar]

- Croskerry P, Sinclair D. Emergency medicine: A practice prone to error? Canadian Journal of Emergency Medicine. 2001;3(4):271. doi: 10.1017/s1481803500005765. [DOI] [PubMed] [Google Scholar]

- Effken JA. Different lenses, improved outcomes: A new approach to the analysis and design of healthcare information systems. International Journal of Medical Informatics. 2002;65(1):59–74. doi: 10.1016/s1386-5056(02)00003-5. http://dx.doi.org/10.1016/S1386-5056(02)00003-5. [DOI] [PubMed] [Google Scholar]

- Effken JA, Brewer BB, Logue MD, Gephart SM, Verran JA. Using Cognitive Work Analysis to fit decision support tools to nurse managers’ work flow. International Journal of Medical Informatics. 2011;80(10):698–707. doi: 10.1016/j.ijmedinf.2011.07.003. http://dx.doi.org/10.1016/j.ijmedinf.2011.07.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Effken JA, Loeb RG, Kang Y, Lin ZC. Clinical information displays to improve ICU outcomes. International Journal of Medical Informatics. 2008;77(11):765–777. doi: 10.1016/j.ijmedinf.2008.05.004. [DOI] [PubMed] [Google Scholar]

- Endsley MR. Theoretical underpinnings of situation awareness: A critical review. In: Endsley MR, Garland DJ, editors. Situation awareness analysis and measurement. Mahwah, NJ: Lawrence Erlbaum Associates; 2000. pp. 3–32. [Google Scholar]

- Fairbanks RJ, Bisantz AM, Sunm M. Emergency department communication links and patterns. Annals of Emergency Medicine. 2007;50(4):396–406. doi: 10.1016/j.annemergmed.2007.03.005. [DOI] [PubMed] [Google Scholar]

- Fairbanks R, Karn K, Caplan S, Guarrera T, Shah M, Wears R. Use error hazards from a popular emergency department information system. Paper presented at the Usability Professionals Association 2008 International Conference; Baltimore. 2008. [Google Scholar]

- Guarrera TK, McGeorge NM, Clark LN, LaVergne DT, Hettinger ZA, Fairbanks RJ, Bisantz AM. Cognitive engineering design of an emergency department information system. In: Bisantz AM, Burns CM, Fairbanks RJ, editors. Cognitive systems engineering in healthcare. Boca Raton, FL: CRC Press, Taylor & Francis; 2015. pp. 43–74. [Google Scholar]

- Guarrera TK, Stephens RJ, Clark LN, McGeorge NM, Fairbanks RJT, Perry SJ, Bisantz AM. Engineering better health IT: Cognitive systems engineering of a novel emergency department IT system. Paper presented at the Proceedings of the 2012 Symposium on Human Factors and Ergonomics in Health Care; Baltimore. 2012. [Google Scholar]

- Hajdukiewicz JR, Vicente KJ, Doyle DJ, Milgram P, Burns CM. Modeling a medical environment: An ontology for integrated medical informatics design. International Journal of Medical Informatics. 2001;62(1):79–99. doi: 10.1016/s1386-5056(01)00128-9. http://dx.doi.org/10.1016/S1386-5056(01)00128-9. [DOI] [PubMed] [Google Scholar]

- Hart S. NASA-task load index (NASA-TLX); 20 years later. Paper presented at the Proceedings of the Human Factors and Ergonomics Society Annual Meeting; Santa Monica, CA. 2006. [Google Scholar]

- Hart S, Staveland LE. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. Human Mental Workload. 1988;1(3):139–183. [Google Scholar]

- Hertzum M, Simonsen J. Work-practice changes associated with an electronic emergency department whiteboard. Health Informatics Journal. 2013;19(1):46–60. doi: 10.1177/1460458212454024. [DOI] [PubMed] [Google Scholar]

- Hertzum M, Simonsen J. Visual overview, oral detail: The use of an emergency-department whiteboard. International Journal of Human-Computer Studies. 2015;82(0):21–30. http://dx.doi.org/10.1016/j.ijhcs.2015.04.004. [Google Scholar]

- Jamieson G, Miller CA, Ho WH, Vicente KJ. Integrating task- and work domain-based work analyses in ecological interface design: A process control case study. Systems, Man and Cybernetics, Part A: Systems and Humans, IEEE Transactions on. 2007;37(6):887–905. [Google Scholar]

- Jiancaro T, Jamieson GA, Mihailidis A. Twenty years of cognitive work analysis in health care: A scoping review. Journal of Cognitive Engineering and Decision Making. 2013;8(1):3–22. [Google Scholar]

- Kilner E, Sheppard LA. The role of teamwork and communication in the emergency department: A systematic review. International Emergency Nursing. 2010;18(3):127–137. doi: 10.1016/j.ienj.2009.05.006. [DOI] [PubMed] [Google Scholar]

- Kwok J, Burns CM. Usability evaluation of a mobile ecological interface design application for diabetes management. Paper presented at the Proceedings of the Human Factors and Ergonomics Society Annual Meeting.2005. [Google Scholar]

- Laxmisan A, Hakimzada F, Sayan OR, Green RA, Zhang J, Patel VL. The multitasking clinician: Decision-making and cognitive demand during and after team handoffs in emergency care. International Journal of Medical Informatics. 2007;76(11–12):801–811. doi: 10.1016/j.ijmedinf.2006.09.019. [DOI] [PubMed] [Google Scholar]

- Lim R, Anderson J, Buckle P. Analysing care home medication errors: A comparison of the London protocol and work domain analysis. Proceedings of the Human Factors and Ergonomics Society Annual Meeting. 2008;52(4):453–457. doi: 10.1177/154193120805200453. [DOI] [Google Scholar]

- Naikar N. Work domain analysis: Concepts, guidelines, and cases. Boca Raton, FL: CRC Press; 2013. [Google Scholar]

- Naikar N, Moylan A, Pearce B. Analysing activity in complex systems with cognitive work analysis: Concepts, guidelines and case study for control task analysis. Theoretical Issues in Ergonomics Science. 2006;7(4):371–394. [Google Scholar]

- Nemeth C, Anders S, Brown J, Grome A, Crandall B, Pamplin JC. Support for ICU Clinician Cognitive Work through CSE. In: Bisantz AM, Burns CM, Fairbanks RJ, editors. Cognitive systems engineering in healthcare. Boca Raton, FL: CRC Press, Taylor & Francis; 2015. pp. 127–152. [Google Scholar]

- Parush A. Displays for health care teams: A conceptual framework and design methodology. In: Bisantz AM, Burns CM, Fairbanks RJ, editors. Cognitive systems engineering in health care. Boca Raton, FL: CRC Press; 2015. pp. 75–95. [Google Scholar]

- Parush A, Kramer C, Foster-Hunt T, Momtahan K, Hunter A, Sohmer B. Communication and team situation awareness in the OR: Implications for augmentative information display. Journal of Biomedical Informatics. 2011;44(3):477–485. doi: 10.1016/j.jbi.2010.04.002. [DOI] [PubMed] [Google Scholar]

- Pennathur PR, Bisantz AM, Fairbanks RJ, Perry SJ, Zwemer F, Wears RL. Assessing the impact of computerization on work practice: Information technology in emergency departments. Paper presented at the Proceedings of the Human Factors and Ergonomics Society Annual Meeting; Santa Monica, CA. 2007. [Google Scholar]

- Pennathur PR, Cao D, Sui Z, Lin L, Bisantz AM, Fairbanks RJ, Wears RL. Development of a simulation environment to study emergency department information technology. Simulation in Healthcare. 2010;5(2):103–111. doi: 10.1097/SIH.0b013e3181c82c0a. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pennathur PR, Guarrera TK, Bisantz AM, Fairbanks RJ, Perry SJ, Wears RL. Cognitive artifacts in transition: An analysis of information content changes between manual and electronic patient tracking systems. Paper presented at the Proceedings of the Human Factors and Ergonomics Society Annual Meeting; Santa Monica, CA. 2008. [Google Scholar]

- Perry SJ. An overlooked alliance: Using human factors engineering to reduce patient harm. Joint Commission Journal on Quality and Patient Safety. 2004;30(8):455–459. doi: 10.1016/s1549-3741(04)30052-3.

- Rasmussen J, Pejtersen AM, Goodstein LP. Cognitive systems engineering. New York: John Wiley & Sons, Inc; 1994.

- Redfern E, Brown R, Vincent C. Identifying vulnerabilities in communication in the emergency department. Emergency Medicine Journal. 2009;26(9):653–657. doi: 10.1136/emj.2008.065318.

- Sarcevic A, Lesk ME, Marsic I, Burd RS. Quantifying adaptation parameters for information support of trauma teams. Paper presented at the CHI ’08 Extended Abstracts on Human Factors in Computing Systems; Florence, Italy. 2008.

- Schenkel S. Promoting patient safety and preventing medical error in emergency departments. Academic Emergency Medicine. 2000;7(11):1204–1222. doi: 10.1111/j.1553-2712.2000.tb00466.x.

- Seamster TL, Redding RE, Kaempf GL. Applied cognitive task analysis in aviation. Aldershot, UK: Ashgate; 1997.

- Sharp TD, Helmicki AJ. The application of the ecological interface design approach to neonatal intensive care medicine. Paper presented at the Proceedings of the Human Factors and Ergonomics Society Annual Meeting; Santa Monica, CA. 1998.

- Truxler R, Roth E, Scott R, Smith S, Wampler J. Designing collaborative automated planners for agile adaptation to dynamic change. Paper presented at the Proceedings of the Human Factors and Ergonomics Society Annual Meeting; Santa Monica, CA. 2012.

- U.S. Census Bureau. Men in nursing occupations: American Community Survey highlight report. Washington, DC: Author; 2013.

- Vicente KJ. Cognitive work analysis: Toward safe, productive, and healthy computer-based work. Boca Raton, FL: CRC Press; 1999.

- Wears RL, Perry SJ, Wilson S, Galliers J, Fone J. Emergency department status boards: User-evolved artefacts for inter- and intra-group coordination. Cognition, Technology & Work. 2006;9(3):163–170. doi: 10.1007/s10111-006-0055-7.

- Xiao Y, Probst CA. Engagement and macroergonomics: Using cognitive engineering to improve patient safety. In: Bisantz AM, Burns CM, Fairbanks RJ, editors. Cognitive systems engineering in healthcare. Boca Raton, FL: CRC Press, Taylor & Francis; 2015. pp. 175–198.

- Xiao Y, Schenkel S, Faraj S, Mackenzie CF, Moss J. What whiteboards in a trauma center operating suite can teach us about emergency department communication. Annals of Emergency Medicine. 2007;50(4):387–395. doi: 10.1016/j.annemergmed.2007.03.027.
