Abstract

Over the past two decades the number of opioid pain relievers sold in the United States rose dramatically. This rise in sales was accompanied by an increase in opioid-related overdose deaths. In response, forty-nine states (all but Missouri) created prescription drug monitoring programs to detect high-risk prescribing and patient behaviors. Our objectives were to determine whether the implementation or particular characteristics of the programs were effective in reducing opioid-related overdose deaths. In adjusted analyses we found that a state’s implementation of a program was associated with an average reduction of 1.12 opioid-related overdose deaths per 100,000 population in the year after implementation. Additionally, states whose programs had robust characteristics—including monitoring greater numbers of drugs with abuse potential and updating their data at least weekly—had greater reductions in deaths, compared to states whose programs did not have these characteristics. We estimate that if Missouri adopted a prescription drug monitoring program and other states enhanced their programs with robust features, there would be more than 600 fewer overdose deaths nationwide in 2016, preventing approximately two deaths each day.

The number of prescriptions written for opioid pain relievers has risen across the United States.1 In 2012, 259 million prescriptions were written for opioid pain relievers—more than one for every US adult.2 As the number of prescriptions increased, so did complications from the drugs’ use and misuse, including neonatal opioid withdrawal,3–5 treatment facility admissions,1 and overdose deaths.1,6

In 2014, 47,055 people died in the United States from a drug overdose, and 61 percent of those deaths were related to opioids.7 Several high-risk behaviors have been associated with increased rates of overdose deaths among people who use opioids, including obtaining multiple prescriptions for opioid pain relievers from different providers.8 Furthermore, recent studies suggest that inappropriate prescribing patterns9 lead to overuse of long-acting preparations of the drugs that, in turn, are linked to increased risk of overdose death.10

In an effort to curb high-risk patient and prescriber behaviors, many states have implemented prescription drug monitoring programs. These programs collect data from pharmacies on the prescribing of controlled substances, review and analyze the data, and report them to prescribers. The aim of the programs is to identify high-risk behaviors on the part of patients (for example, obtaining a prescription from multiple providers, known as “doctor shopping”) and providers (such as prescribing abnormally high doses of the substances) that are associated with poor outcomes.8,11,12 The programs can also facilitate referrals to substance abuse treatment and inform prevention strategies by providing population-level data on opioid use.13 By 2014 forty-nine states had implemented a prescription drug monitoring program.14 Only Missouri does not now have a program either planned or implemented.15

Several studies of the programs’ effectiveness found that they improved clinicians’ confidence in opioid prescribing, identified and reduced doctor shopping, decreased overall opioid prescribing and treatment facility admissions, reduced diversion of opioids (that is, the transfer for illegal use or distribution of opioids that were received legally), and improved clinicians’ ability to monitor opioid dependency treatment.16 However, studies evaluating the programs’ effectiveness at reducing opioid-related overdose deaths are limited17 or are several years old, having been conducted before many of the programs were implemented.18,19

Furthermore, research evaluating the effectiveness of specific characteristics of prescription drug monitoring programs remains limited, despite variability in those characteristics across states. For example, states vary in the types of drugs with abuse potential that they monitor. The Drug Enforcement Administration (DEA) categorizes drugs by abuse potential using a system called Controlled Substance Schedules. The system includes five schedules that range from one for substances with no medical purposes and high addiction potential, such as heroin (Schedule I), to a schedule for substances that have a medical purpose and low addiction potential, such as codeine cough syrup (Schedule V). States vary in the number of DEA schedules they monitor, and some states monitor additional substances not included in the DEA classification.

Building on the previous literature about prescription drug monitoring programs, we used publicly available data from all of the states that implemented a program in the period 1999–2013 to determine whether implementation or different characteristics of a program were associated with decreases in opioid-related overdose deaths.

Study Data And Methods

STUDY DESIGN

We used an interrupted time-series design to examine the association of both implementation and characteristics of prescription drug monitoring programs with the rate of opioid-related overdose deaths. Our unit of analysis was the state-year pair. The study design was strengthened by the variation across states in the timing of programs’ enactment and implementation (that is, when a program began collecting data), and the implementation of specific features.

We obtained data from multiple public sources (described below) for the period 1999–2013. Our analysis focused on the thirty-five states that implemented programs during the study period.

This was a study of aggregate-level deidentified death data. Accordingly, the Vanderbilt University Medical Center Institutional Review Board considered it exempt from review.

DATA

To evaluate the effectiveness of state prescription drug monitoring programs, we gathered data on the programs’ year of legislative enactment and year of implementation. Data for the year of enactment were obtained from LawAtlas,20 a database that contains a historical review of laws about the programs. Data on enactment were included to account for changes in prescriber or patient behavior that might have occurred after enactment but that would be independent of the implementation of a program. Data for the year of implementation were obtained from a technical assistance website managed by Brandeis University.21

Data on the characteristics of the programs—namely, how many drug schedules were monitored, how frequently the data were updated, and whether or not registration or use of the program was mandated—were also obtained from the LawAtlas. These data were reported only through 2011.20 For 2012 and 2013, we used data compiled from the National Alliance for State Model Drug Laws.22,23

A set of time-varying indicator variables was constructed for each state to capture when a prescription drug monitoring program statute passed and when the program was implemented. We also tested the interaction of the year a program was implemented with time, to determine if the association between opioid-related overdose deaths and implementation of a program changed over time. In addition, a set of indicators was created to identify program features, including the number of drug schedules monitored, the frequency of data updates, and whether or not registration or use was mandatory.
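The indicator construction described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name, the dictionary keys, and the choice to code the interaction as the implementation indicator multiplied by a linear time index counted from 1999 are all assumptions.

```python
def program_indicators(year, enacted_year, implemented_year, start_year=1999):
    """Build time-varying indicators for one state-year (hypothetical layout).

    enacted_year / implemented_year may be None for a state that never
    enacted or implemented a program during the study period.
    """
    # 1 from the year the statute passed onward, 0 before
    enacted = int(enacted_year is not None and year >= enacted_year)
    # 1 from the year the program began collecting data onward, 0 before
    implemented = int(implemented_year is not None and year >= implemented_year)
    # Linear time index; counting from start_year is an assumption
    time = year - start_year
    return {
        "enacted": enacted,
        "implemented": implemented,
        "time": time,
        # Assumed coding of the implementation-by-time interaction
        "implemented_x_time": implemented * time,
    }


# Usage: a hypothetical state that enacted in 2003 and implemented in 2004
panel = [program_indicators(y, 2003, 2004) for y in range(1999, 2014)]
```

Each state contributes one such row per year, which gives the state-year unit of analysis used in the regressions.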

Because social disadvantage, such as unemployment24,25 and limited education,26 may affect an individual’s risk of overdose death, our models included variables that captured these factors. Annual state unemployment data (not adjusted for season) were obtained from the Bureau of Labor Statistics.27 Educational attainment data (the percentage of a state’s population ages twenty-five and older with at least a college degree) were obtained from the decennial census for 200028 and the American Community Survey (ACS) for the period 2005–13.29

Opioid prescribing rates at the state level have been associated with opioid-related overdose deaths.30 However, given that opioid prescribing rates are in the causal pathway between those deaths and the implementation of a prescription drug monitoring program, prescribing data were not included to avoid overadjustment bias.31 In other words, the mechanism by which programs likely reduce opioid-related overdose deaths is through patterns of opioid prescribing and use. Therefore, including opioid prescribing rates in our model would have biased our estimate of the effect of the programs to the null.

The outcome of interest was the annual rate of opioid-related overdose deaths per 100,000 population in each state. Data for each state and year of interest were abstracted from the Wide-Ranging Online Data for Epidemiologic Research (WONDER) database of multiple causes of death maintained by the Centers for Disease Control and Prevention (CDC).32 As was done in previous studies,24,33 we defined opioid-related overdose deaths as deaths in which the underlying cause was drug overdose, whether accidental or intentional,34 using the International Statistical Classification of Diseases and Related Health Problems, Tenth Revision (ICD-10), codes X40–X44, X60–X64, and Y10–Y14, when a code for an opioid analgesic (T40.2–T40.4) was also present. This methodology captured all deaths involving prescription opioids, including methadone.35
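The case definition above can be expressed as a short rule. The sketch below is illustrative only: the record layout (one underlying-cause code plus a list of contributing-cause codes) and the function name are assumptions, and CDC WONDER itself is queried through its own interface rather than record by record.

```python
# Underlying-cause codes for drug overdose: X40-X44, X60-X64, Y10-Y14
OVERDOSE_UNDERLYING = {
    f"{letter}{start + offset}"
    for letter, start in (("X", 40), ("X", 60), ("Y", 10))
    for offset in range(5)
}

# Contributing-cause codes for opioid analgesics: T40.2-T40.4
OPIOID_ANALGESIC = {"T40.2", "T40.3", "T40.4"}


def is_opioid_overdose_death(underlying_cause, contributing_causes):
    """Return True when a death meets the study's case definition."""
    # Compare on the three-character category (for example "X42")
    underlying = underlying_cause.strip().upper()[:3]
    has_opioid = any(
        code.strip().upper() in OPIOID_ANALGESIC for code in contributing_causes
    )
    return underlying in OVERDOSE_UNDERLYING and has_opioid
```

A death coded X42 with T40.2 present would count, whereas the same underlying cause with only heroin (T40.1) recorded would not count under the main definition.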

The mechanism by which an individual obtained the opioid (either legally through a licensed prescriber or illicitly through diversion) could not be determined. The WONDER database suppressed state-level data if there were fewer than ten deaths in a given year. Overall, 3.3 percent of state-year combinations were suppressed or missing outcome data. We used linear imputation and the data from the years before and after the missing observations to account for these missing data in our sample, and we conducted our analyses both with and without imputed data (see online Technical Appendix Exhibit 1).36 One state (North Dakota) was dropped because of its high rate of suppressed data, which left thirty-four states in our sample.
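The linear imputation step can be sketched as below. This is an assumed reading of the procedure, in which a suppressed year is filled on the straight line between the nearest observed years on either side; the function name is hypothetical, and gaps at the start or end of a series are left unfilled.

```python
def impute_linear(rates):
    """Fill None entries by linear interpolation between observed neighbours.

    rates is a list of annual death rates for one state, in year order,
    with None marking suppressed or missing years.
    """
    filled = list(rates)
    observed = [i for i, rate in enumerate(rates) if rate is not None]
    # Walk over each pair of consecutive observed years
    for left, right in zip(observed, observed[1:]):
        step = (rates[right] - rates[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = rates[left] + step * (i - left)
    return filled


# Usage: one suppressed year between two observed rates
print(impute_linear([1.0, None, 3.0]))
```

Running the analysis on both `rates` and `impute_linear(rates)` mirrors the with-and-without-imputation comparison described above.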

ANALYSIS

We examined bivariate and multivariable results for our outcome of interest during our study period. In the first phase of our analysis, we sought to determine whether there was an association between implementing a prescription drug monitoring program and reductions in opioid-related overdose deaths among all states that implemented a program in the study period. Linear regression models with state fixed effects were employed to account for unmeasured variation at the state level and for specific time-varying state-level factors (unemployment and educational attainment rate) that might have an effect on prescription opioid use.

Because legislators might react to an increase in deaths by implementing a program, we included both the year of legislative enactment and the year of implementation to isolate the effect of the latter. We also examined the interaction of program implementation with time to capture any modification of that effect. Our data were nested within states and years. Therefore, data were treated as a time-series panel with appropriate precision adjustment of our estimates.
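One way to write the first-phase model, consistent with the variables listed in Exhibit 3 (model 1), is shown below. The notation is an assumption introduced here for illustration; the authors' exact specification is given in their Technical Appendix.

```latex
y_{st} = \alpha_s
       + \beta_1\,\mathrm{Impl}_{st}
       + \beta_2\,(\mathrm{Impl}_{st} \times t)
       + \beta_3\,\mathrm{Enact}_{st}
       + \beta_4\,\mathrm{Unemp}_{st}
       + \beta_5\,\mathrm{Educ}_{st}
       + \gamma\,t
       + \varepsilon_{st}
```

Here $y_{st}$ is the opioid-related overdose death rate per 100,000 population in state $s$ and year $t$, $\alpha_s$ is the state fixed effect, $\mathrm{Impl}_{st}$ and $\mathrm{Enact}_{st}$ are the implementation and enactment indicators, and $\gamma$ is the linear time trend. Under this reading, $\beta_1$ is the implementation estimate and $\beta_2$ captures how that association changes over time.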

In the second phase of our analysis, we sought to determine if specific program characteristics were associated with greater changes in rates of opioid-related overdose deaths, compared to other characteristics. For this analysis, we excluded West Virginia because it was an extreme outlier, with an opioid-related overdose death rate nearly twice as high as that of the next highest state (Utah), and because it implemented a program early in our study period.

We added indicators for specific features of programs designed to broaden a program’s scope or improve the quality of its data. The details of our regression analysis can be found in the Technical Appendix.36

We then used our regression models to predict rates of opioid-related overdose deaths for states that had implemented a program and for those that had not, as well as for states whose programs had certain features. For the predicted death rates, the median year in our study period (2006) was set to zero, and predicted values for three years before and after implementation were generated to ensure adequate time to see trends in our regression model.

To ensure that our analyses were robust, we conducted a series of sensitivity analyses. We conducted analyses with and without West Virginia for the reasons stated above, and with and without Florida, given the potential impact of the state’s recent and unique legislation designed to close “pill mills” (operations in which a provider prescribes or dispenses controlled substances without a legitimate medical purpose)37 (for results of these sensitivity analyses, see Appendix Exhibit 2).36 Lastly, because some prescription opioid-related deaths may also involve opium or heroin, we conducted a sensitivity analysis that included deaths attributed to opioids (ICD-10 codes T40.2–T40.4), opium (T40.0), or heroin (T40.1) (for results of this sensitivity analysis, see Appendix Exhibit 3).36

All statistical analysis was done using Stata, version 13.1. Geographic information systems processing was done using ArcGIS, version 10.3.

LIMITATIONS

Our study had some limitations. First, each source of data had its own potential sources of error. For example, opioid-related overdose deaths may be underreported if medical examiners do not have a suspicion of opioid use and do not perform toxicology testing. However, unless the reporting of opioid-related overdose deaths decreased in each state only after the implementation of that state’s prescription drug monitoring program, this should not confound our results.

Second, while states generally follow the DEA schedules, this is not universally true. If, for example, a state were to combine two DEA schedules, this might have biased our results toward the null.

Third, although we attempted to adjust for important state-level confounders, it is possible that we did not include some important time-varying, state-level factors associated with both our outcome and the predictor of interest.

Fourth, because prescription drug monitoring programs were not implemented in isolation, several other state and federal policy changes might have influenced our results. For example, Florida, in partnership with the federal government, was effective in closing pill mills, and this action was associated with a decrease in opioid-related overdose deaths.38 However, the results of a sensitivity analysis in which we excluded Florida did not differ from those of our main analysis (Appendix Exhibit 2).36

Lastly, several agencies in the US Department of Health and Human Services have been working together to improve access to substance abuse treatment and mitigate the risk of deaths related to opioid pain relievers.39 Other innovations, including expanding the use of the opioid-reversal agent naloxone for first responders and families40 and abuse-deterrent formulations of opioids,41 were not captured in our data. But changes in federal practices or innovations in drug development or delivery would likely have affected all states at the same time and would likely be independent of our variables that captured program implementation or features in specific states and years.

Study Results

By 2013, forty-eight states had created a prescription drug monitoring program, leaving only Missouri and New Hampshire42 without a program (Exhibit 1). For additional data on growth of the programs by state, see Appendix Exhibit 4.36

EXHIBIT 1.

Characteristics of states in the study sample with and without prescription drug monitoring programs (PDMPs), selected years 1999–2013

| | With PDMP | Without PDMP | Overall mean |
|---|---|---|---|
| 1999 | | | |
| Number of states | —a | 34 | 34 |
| Opioid-related overdose deaths per 100,000 population | —a | 1.36 | 1.36 |
| Unemployment rate (%) | —a | 3.99 | 3.90 |
| Educational attainment | —a | 23.24 | 23.20 |
| 2007 | | | |
| Number of states | 11 | 23 | 34 |
| Opioid-related overdose deaths per 100,000 population | 6.88 | 4.75 | 5.44 |
| Unemployment rate (%) | 4.27 | 4.69 | 4.55 |
| Educational attainment | 24.36 | 27.24 | 26.31 |
| 2013 | | | |
| Number of states | 32 | 2 | 34 |
| Opioid-related overdose deaths per 100,000 population | 6.19 | 6.50 | 6.21 |
| Unemployment rate (%) | 6.58 | 5.90 | 6.54 |
| Educational attainment | 28.03 | 29.95 | 28.14 |

SOURCE Authors’ analysis of data from the National Center for Health Statistics, National Vital Statistics System, and Detailed Mortality File, all accessed through CDC WONDER (see Note 32 in text); American Community Survey; the censuses of 2000 and 2010; Bureau of Labor Statistics; Brandeis University’s Prescription Drug Monitoring Program Training and Technical Assistance Center; and LawAtlas. NOTE “Educational attainment” is the percentage of the population ages twenty-five and older with at least a college degree.

a Not applicable.
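The "Overall mean" column in Exhibit 1 appears consistent with an average of the two group means weighted by the number of states in each group. The short check below reproduces the 2007 and 2013 death-rate values under that assumption; the function name is hypothetical, and this is a reader's arithmetic check rather than the authors' stated method.

```python
def overall_mean(with_n, with_value, without_n, without_value):
    """State-count-weighted average of the two group means, to 2 decimals."""
    total_states = with_n + without_n
    weighted_sum = with_n * with_value + without_n * without_value
    return round(weighted_sum / total_states, 2)


# Opioid-related overdose deaths per 100,000 population, from Exhibit 1
print(overall_mean(11, 6.88, 23, 4.75))  # 2007 row
print(overall_mean(32, 6.19, 2, 6.50))   # 2013 row
```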

Throughout our study period, rates of opioid-related overdose deaths increased across the United States, albeit with great variability (see Appendix Exhibit 5).36 For example, Nebraska’s opioid-related overdose death rate increased from 0.4 per 100,000 population in 1999 to 2.3 in 2013, while Maryland’s death rate increased from 0.3 per 100,000 population in 1999 to 8.9 in 2013.

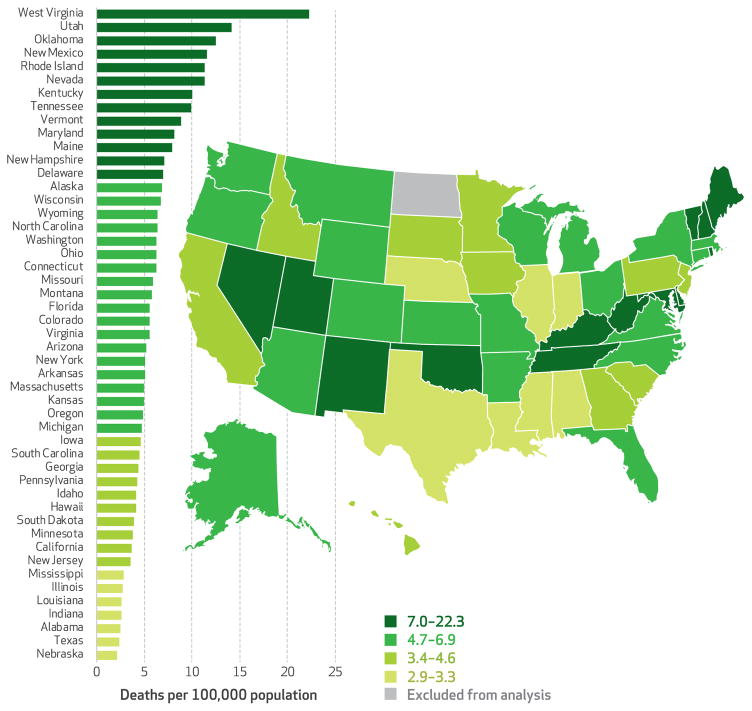

In 2013 seven states (Kentucky, Nevada, New Mexico, Oklahoma, Rhode Island, Utah, and West Virginia) had opioid-related overdose death rates of at least ten per 100,000 population (Exhibit 2). In the same year, seven other states (Alabama, Illinois, Indiana, Louisiana, Mississippi, Nebraska, and Texas) had death rates of fewer than three per 100,000 population.

EXHIBIT 2. Prescription opioid–related deaths in the United States, 2013.

SOURCE Authors’ analysis of data from the National Center for Health Statistics, National Vital Statistics System, and Detailed Mortality File, all accessed through CDC WONDER (see Note 32 in text). NOTES Appendix Exhibit 1 shows the growth of these deaths and the spread of prescription drug monitoring programs in the thirty-four states in our sample over the entire study period (see Note 36 in text). Appendix Exhibit 5 consists of a graphical representation of the change in opioid-related overdose deaths for the study period (see Note 36 in text). North Dakota value suppressed as explained in the text.

The average opioid-related overdose death rate for the thirty-four states in our sample rose from 1.4 per 100,000 population in 1999 to 6.2 per 100,000 population in 2013 (Exhibit 1). States that implemented a prescription drug monitoring program during the study period were similar to those that did not in terms of educational attainment and unemployment rates in the population. However, by the end of the study period, states that implemented a program had a lower opioid-related overdose death rate, compared to those that did not (6.19 per 100,000 population versus 6.50 per 100,000 population) (Exhibit 1). Data for each year in our study period can be found in Appendix Exhibit 6.36

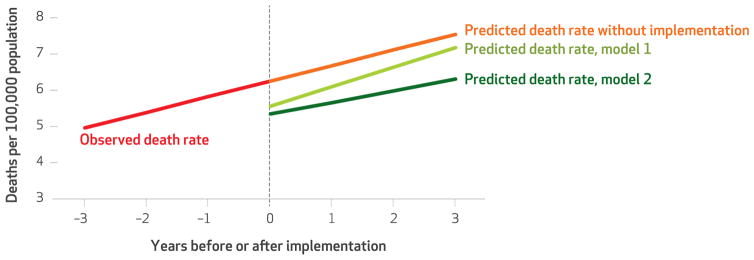

In multivariable analyses that accounted for state program legislation enactment and implementation, educational attainment, unemployment rate, state fixed effects, and a linear time trend, we found that the implementation of a program was associated with a decrease of 1.12 opioid-related overdose deaths per 100,000 population annually after implementation (Exhibit 3, model 1).

EXHIBIT 3.

Associations between use and features of prescription drug monitoring programs (PDMPs) and opioid-related overdose death rates, 1999–2013

Deaths per 100,000 population

| | Unadjusted: deaths | Unadjusted: 95% CI | Model 1 (adjusted): deaths | Model 1 (adjusted): 95% CI | Model 2 (adjusted): deaths | Model 2 (adjusted): 95% CI |
|---|---|---|---|---|---|---|
| Explanatory variables | | | | | | |
| PDMP implementation | 2.35**** | 1.91, 2.80 | −1.12**** | −1.68, −0.55 | −0.18 | −0.65, 0.28 |
| Interaction of PDMP implementation with time | 0.62**** | 0.52, 0.73 | 0.11* | 0.00, 0.23 | −0.11** | −0.20, −0.02 |
| Four or more drug schedules monitored | 1.76**** | 1.19, 2.32 | —a | —a | −0.55** | −1.02, −0.08 |
| Data updated at least weekly | 1.76**** | 1.31, 2.21 | —a | —a | −0.82**** | −1.25, −0.38 |
| Mandatory use or registration | 2.06**** | 1.25, 2.88 | —a | —a | 0.30 | −0.27, 0.87 |
| State-level and time-trend controls | | | | | | |
| PDMP legislation enactment | 2.66**** | 2.28, 3.04 | 0.17 | −0.40, 0.74 | −0.08 | −0.47, 0.31 |
| Educational attainment | 1.06**** | 0.95, 1.16 | −0.08 | −0.40, −0.24 | −0.08 | −0.30, 0.15 |
| Unemployment rate | 0.59**** | 0.49, 0.70 | −0.08 | −0.19, 0.03 | 0.00 | −0.08, 0.07 |
| Linear time trend | 0.37**** | 0.34, 0.40 | 0.43**** | 0.33, 0.52 | 0.46**** | 0.36, 0.55 |

SOURCE Authors’ analysis of data from the sources listed in Exhibit 1. NOTES The explanatory variables, as well as the PDMP legislation enactment, are per year of implementation. State fixed effects were used for all models. Model 1 includes data for all states that implemented a PDMP during our study period. Model 2 excludes West Virginia. For each bivariate and multivariable regression, the outcome of interest is the opioid-related overdose death rate per 100,000 population. For example, model 1 shows that PDMP implementation was associated with a reduction of 1.12 opioid-related overdose deaths per 100,000 population per year. “Educational attainment” is the percentage of the population ages twenty-five and older with at least a college degree. CI is confidence interval.

a Not applicable. * p < 0.10; ** p < 0.05; **** p < 0.001.

Programs that monitored four or more drug schedules and updated their data at least weekly were associated with greater reductions in opioid-related overdose deaths than programs without these characteristics (Exhibit 3, model 2). A state newly implementing a program with both of those features was predicted to have 1.55 fewer opioid-related overdose deaths per 100,000 population annually than a state without a program. State requirements for registration with or use of a program were not common during our study period, and our estimates did not show those features as having a significant effect.
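The 1.55 figure matches the sum of three model 2 coefficients in Exhibit 3: implementation, monitoring four or more schedules, and updating data at least weekly. The sketch below shows that arithmetic. It is a reader's reconstruction, with hypothetical names, and it leaves out the implementation-by-time interaction term.

```python
# Model 2 coefficients from Exhibit 3 (deaths per 100,000 population)
MODEL_2 = {
    "implementation": -0.18,
    "four_or_more_schedules": -0.55,
    "data_updated_weekly": -0.82,
    "mandatory_use_or_registration": 0.30,
}


def combined_effect(features):
    """Sum the model 2 coefficients for the listed program features."""
    return round(sum(MODEL_2[name] for name in features), 2)


# A newly implemented program with both robust features
robust = combined_effect(
    ["implementation", "four_or_more_schedules", "data_updated_weekly"]
)
print(robust)
```

Because the implementation coefficient alone is small and not statistically significant in model 2, most of the predicted reduction comes from the two program features.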

Exhibit 4 graphically depicts the predicted death rates for a simulated three-year period after the implementation of a prescription drug monitoring program and after the implementation of a program with robust characteristics.

EXHIBIT 4. Opioid-related overdose death rates, by use and features of prescription drug monitoring programs.

SOURCE Authors’ analysis of data from the sources listed in Exhibit 1. NOTES Model 1 includes data for all states that implemented a PDMP during our study period. Model 2 excludes West Virginia.

Discussion

In this retrospective study of states, we found that the implementation of a prescription drug monitoring program was associated with a subsequent decrease in opioid-related overdose deaths. In adjusted analyses, states with programs that monitored four or more drug schedules and updated data at least weekly were found to have lower opioid-related overdose death rates, compared to states whose programs lacked these characteristics.

This study adds to a growing body of evidence that describes the effectiveness of prescription drug monitoring programs in reducing adverse events associated with the use and misuse of opioid pain relievers. Previous studies found the use of a program to be effective in reducing doctor shopping, opioid diversion, and inappropriate prescribing.16 Some previous research18,19 found little to no association between program implementation and deaths related to opioid pain relievers. Our study enhances these analyses by using the most recent data available on such deaths and employing state fixed effects to account for state-specific differences in the use of opioid pain relievers at baseline.

Interestingly, we found that the interaction of time with program implementation was positive and nearly significant (p = 0.06) when West Virginia was included in our analyses (Exhibit 3). West Virginia is an extreme outlier in terms of opioid-related overdose deaths, having a death rate nearly twice as high as that of the next highest state in 2013. Furthermore, West Virginia implemented its program in 2002, early in our study period. It appears that the association of program implementation in West Virginia with a decrease in opioid-related overdose deaths became attenuated over time (that is, there was a subsequent increase in deaths). However, excluding West Virginia from the analyses suggested that program implementation in all other states was associated with a reduction of opioid-related overdose deaths—an effect that grew slightly over time (Appendix Exhibit 2).36

We found that prescription drug monitoring programs that reported data for a broader range of drug schedules and that were updated with greater frequency were associated with greater declines in opioid-related overdose deaths, compared to other programs. Notably, however, states vary greatly in program structure, including in the program’s ease of use for prescribers, frequency of reporting, interoperability with programs in neighboring states, number of drug schedules monitored, and requirements for pharmacies and physicians to register with and use the program.20 As the prescription opioid epidemic grew, some states implemented more stringent program features to mitigate the epidemic’s growth (Appendix Exhibit 7).36

Future research should evaluate additional innovations to prescription drug monitoring programs, such as interoperability between states’ programs and requiring prescribers to register with and use a program, and it should exploit natural experiments in program characteristics to understand the impact of these innovations. For example, though we found no effect of requiring providers to register with or use a program, as recently implemented by a number of states, some evidence suggests that mandating program use by prescribers significantly decreased doctor shopping and the number of prescriptions for opioid pain relievers written in specific states.43 These policies should be evaluated as additional years of data become available and as more states implement them.

Missouri, a state whose opioid-related overdose death rate grew faster than the national average (data not shown), remains the only state without a prescription drug monitoring program. States have been moving toward more comprehensive monitoring of drug schedules and more frequent updating of data. In 2015, thirty-three states monitored at least four drug schedules, and forty-eight states updated program data at least weekly.44,45

Our data suggest that policy makers in the states whose programs do not include these features could consider increasing their frequency of updating and monitoring all schedules of drugs that have a medical use and abuse potential as a strategy for reducing opioid-related overdose deaths. We estimate that if these remaining states did so and Missouri implemented an equally robust monitoring program, over 600 deaths would be avoided in 2016.46

Conclusion

In 2011 the Executive Office of the President of the United States12 set forth the goal of establishing a prescription drug monitoring program in every state and improving interstate interoperability of the programs. Similarly, the Centers for Disease Control and Prevention’s Prevention for States program, which has made large-scale grants to sixteen states to enhance existing programs, set forth the goal of making data from the programs more timely47—a key characteristic we found to be associated with enhanced effectiveness. While these goals have gained widespread support, funding for the programs is frequently inconsistent and at risk, which jeopardizes their ability to remain operational.48–50

Our findings provide support for bolstering prescription drug monitoring programs and establishing a consistent and predictable funding source for them. Additional funding could be provided to increase the number of drug schedules monitored by the programs and the frequency of data updating—both features associated in our study with a reduction in opioid-related overdose deaths. It also may be valuable to tailor programs for easy use by physicians, who often find the programs’ data difficult to access,51 and to streamline interoperability between states.52

It is also important to note that prescription drug monitoring programs are just one tool that could limit the complications of overuse of opioid pain relievers. A comprehensive approach to the prescription drug abuse epidemic is needed, including improving education of providers and the public and improving and augmenting the ability of law enforcement officials to target illegal activities such as operating pill mills.12

That said, implementation of a prescription drug monitoring program with advanced features was strongly associated with a reduction in opioid-related overdose deaths. Research is needed to determine the importance of other improvements to program operations, such as increasing rates of registration, requiring all providers to use the programs, and increasing the identification and investigation of high-risk providers and patients. As the use of these programs becomes more common and consistent, their effect on decreasing the prescription opioid epidemic is likely to grow.

Acknowledgments

The authors thank Corey Davis for assisting them with information on dates of enactment and operation of prescription drug monitoring programs. Research reported in this publication was supported by the National Institute on Drug Abuse of the National Institutes of Health (NIH; Award No. K23DA038720). The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH or the Tennessee Department of Health.

Footnotes

Findings reported in this article were presented at the AcademyHealth Annual Research Meeting, Minneapolis, Minnesota, June 2015, and the Robert Wood Johnson Foundation Clinical Scholars Program National Meeting, Seattle, Washington, November 2015.

Contributor Information

Stephen W. Patrick, Email: stephen.patrick@vanderbilt.edu, Assistant professor of pediatrics and health policy, Division of Neonatology, Vanderbilt University School of Medicine, Nashville, Tennessee

Carrie E. Fry, Health policy and data analyst, Department of Health Policy, Vanderbilt University School of Medicine

Timothy F. Jones, State epidemiologist, Tennessee Department of Health, Nashville

Melinda B. Buntin, Professor and chair, Department of Health Policy, Vanderbilt University Medical Center, Nashville

NOTES

- 1. Centers for Disease Control and Prevention. Vital Signs: overdoses of prescription opioid pain relievers—United States, 1999–2008. MMWR Morb Mortal Wkly Rep. 2011;60(43):1487–92.

- 2. Paulozzi LJ, Mack KA, Hockenberry JM. Vital signs: variation among states in prescribing of opioid pain relievers and benzodiazepines—United States, 2012. MMWR Morb Mortal Wkly Rep. 2014;63(26):563–8.

- 3. Patrick SW, Schumacher RE, Benneyworth BD, Krans EE, McAllister JM, Davis MM. Neonatal abstinence syndrome and associated health care expenditures: United States, 2000–2009. JAMA. 2012;307(18):1934–40. doi: 10.1001/jama.2012.3951.

- 4. Patrick SW, Davis MM, Lehman CU, Cooper WO. Increasing incidence and geographic distribution of neonatal abstinence syndrome: United States 2009 to 2012. J Perinatol. 2015;35(8):667. doi: 10.1038/jp.2015.63.

- 5. Patrick SW, Dudley J, Martin PR, Harrell FE, Warren MD, Hartmann KE, et al. Prescription opioid epidemic and infant outcomes. Pediatrics. 2015;135(5):842–50. doi: 10.1542/peds.2014-3299.

- 6. Centers for Disease Control and Prevention. Vital signs: overdoses of prescription opioid pain relievers and other drugs among women—United States, 1999–2010. MMWR Morb Mortal Wkly Rep. 2013;62(26):537–42.

- 7. Rudd RA, Aleshire N, Zibbell JE, Gladden RM. Increases in drug and opioid overdose deaths—United States, 2000–2014. MMWR Morb Mortal Wkly Rep. 2016;64(50):1378–82. doi: 10.15585/mmwr.mm6450a3.

- 8. Hall AJ, Logan JE, Toblin RL, Kaplan JA, Kraner JC, Bixler D, et al. Patterns of abuse among unintentional pharmaceutical overdose fatalities. JAMA. 2008;300(22):2613–20. doi: 10.1001/jama.2008.802.

- 9. Mack KA, Zhang K, Paulozzi L, Jones C. Prescription practices involving opioid analgesics among Americans with Medicaid, 2010. J Health Care Poor Underserved. 2015;26(1):182–98. doi: 10.1353/hpu.2015.0009.

- 10. Miller M, Barber CW, Leatherman S, Fonda J, Hermos JA, Cho K, et al. Prescription opioid duration of action and the risk of unintentional overdose among patients receiving opioid therapy. JAMA Intern Med. 2015;175(4):608–15. doi: 10.1001/jamainternmed.2014.8071.

- 11. Government Accountability Office. Prescription drugs: state monitoring programs provide useful tool to reduce diversion [Internet]. Washington (DC): GAO; 2002 May [cited 2016 May 12]. (Publication No. GAO-02-634). Available from: http://www.gao.gov/new.items/d02634.pdf.

- 12. Executive Office of the President of the United States. Epidemic: responding to America’s prescription drug abuse crisis [Internet]. Washington (DC): White House; 2011 [cited 2016 May 12]. Available from: https://www.whitehouse.gov/sites/default/files/ondcp/policy-and-research/rx_abuse_plan.pdf.

- 13. Drug Enforcement Administration, Office of Diversion Control. State prescription drug monitoring programs [Internet]. Springfield (VA): The Office; [cited 2016 May 12]. Available from: http://www.deadiversion.usdoj.gov/faq/rx_monitor.htm.

- 14. Prescription Drug Monitoring Program Training and Technical Assistance Center. Status of prescription drug monitoring programs (PDMPs) [Internet]. Waltham (MA): The Center; 2014 [cited 2016 May 12]. Available from: http://www.pdmpassist.org/pdf/PDMPProgramStatus2014.pdf.

- 15. Schwarz A. Missouri alone in resisting prescription drug database. New York Times. 2014 Jul 20.

- 16. Prescription Drug Monitoring Program Center of Excellence at Brandeis. Briefing on PDMP effectiveness [Internet]. Waltham (MA): The Center; [updated 2013 Apr; cited 2016 May 12]. Available from: http://www.pdmpexcellence.org/sites/all/pdfs/briefing_PDMP_effectiveness_april_2013.pdf.

- 17. Haegerich TM, Paulozzi LJ, Manns BJ, Jones CM. What we know, and don’t know, about the impact of state policy and systems-level interventions on prescription drug overdose. Drug Alcohol Depend. 2014;145:34–47. doi: 10.1016/j.drugalcdep.2014.10.001.

- 18. Paulozzi LJ, Kilbourne EM, Desai HA. Prescription drug monitoring programs and death rates from drug overdose. Pain Med. 2011;12(5):747–54. doi: 10.1111/j.1526-4637.2011.01062.x.

- 19. Li G, Brady JE, Lang BH, Giglio J, Wunsch H, DiMaggio C. Prescription drug monitoring and drug overdose mortality. Inj Epidemiol. 2014;1(9). doi: 10.1186/2197-1714-1-9.

- 20. LawAtlas. The policy surveillance portal [Internet]. Philadelphia (PA): LawAtlas; [cited 2016 May 12]. Available from: http://lawatlas.org/query?dataset=corey-matt-pmp.

- 21. Prescription Drug Monitoring Program Training and Technical Assistance Center. PDMP legislation and operational dates [Internet]. Waltham (MA): The Center; [cited 2016 May 25]. Available from: http://www.pdmpassist.org/content/pdmp-legislation-operational-dates.

- 22. O’Connell S. SJR 20: prescription-drug abuse: state prescription drug monitoring program practices [Internet]. Helena (MT): Montana Legislature; 2013 Jan [cited 2016 May 12]. Available from: http://leg.mt.gov/content/Committees/Interim/2013-2014/Children-Family/Committee-Topics/SJR20/sjr20-prescription-drug-monitoring-program-practices-jan2014.pdf.

- 23. National Alliance for Model State Drug Laws. Compilation of prescription monitoring program maps [Internet]. Charlottesville (VA): NAMSDL; c2015 [cited 2016 May 12]. Available from: http://www.namsdl.org/library/F2582E26-ECF8-E60A-A2369B383E97812B/

- 24. Bachhuber MA, Saloner B, Cunningham CO, Barry CL. Medical cannabis laws and opioid analgesic overdose mortality in the United States, 1999–2010. JAMA Intern Med. 2014;174(10):1668–73. doi: 10.1001/jamainternmed.2014.4005.

- 25. Rönkä S, Karjalainen K, Vuori E, Mäkelä P. Personally prescribed psychoactive drugs in overdose deaths among drug abusers: a retrospective register study. Drug Alcohol Rev. 2015;34(1):82–9. doi: 10.1111/dar.12182.

- 26. Lanier WA, Johnson EM, Rolfs RT, Friedrichs MD, Grey TC. Risk factors for prescription opioid-related death, Utah, 2008–2009. Pain Med. 2012;13(12):1580–9. doi: 10.1111/j.1526-4637.2012.01518.x.

- 27. Department of Labor, Bureau of Labor Statistics. Local area unemployment statistics [Internet]. Washington (DC): BLS; [cited 2016 May 12]. Available from: http://www.bls.gov/lau/

- 28. American FactFinder. Community facts [Internet]. Washington (DC): Census Bureau; [cited 2016 May 12]. Available from: http://factfinder.census.gov/

- 29. Census Bureau. American Community Survey (ACS) [home page on the Internet]. Washington (DC): Census Bureau; [cited 2016 May 12]. Available from: http://www.census.gov/acs/www/

- 30. Paulozzi LJ, Ryan GW. Opioid analgesics and rates of fatal drug poisoning in the United States. Am J Prev Med. 2006;31(6):506–11. doi: 10.1016/j.amepre.2006.08.017.

- 31. Schisterman EF, Cole SR, Platt RW. Overadjustment bias and unnecessary adjustment in epidemiologic studies. Epidemiology. 2009;20(4):488–95. doi: 10.1097/EDE.0b013e3181a819a1.

- 32. Centers for Disease Control and Prevention. CDC WONDER: about underlying cause of death, 1999–2014 [Internet]. Atlanta (GA): CDC; [cited 2016 May 12]. Available from: http://wonder.cdc.gov/ucd-icd10.html.

- 33. Jones CM, Mack KA, Paulozzi LJ. Pharmaceutical overdose deaths, United States, 2010. JAMA. 2013;309(7):657–9. doi: 10.1001/jama.2013.272.

- 34. While we recognize that prescription drug monitoring programs may have a greater effect in reducing accidental deaths than in reducing intentional deaths, we chose to include both accidental and intentional deaths because program implementation may also affect the availability of opioids for intentional overdose.

- 35. Ray WA, Chung CP, Murray KT, Cooper WO, Hall K, Stein CM. Out-of-hospital mortality among patients receiving methadone for noncancer pain. JAMA Intern Med. 2015;175(3):420–7. doi: 10.1001/jamainternmed.2014.6294.

- 36. To access the Appendix, click on the Appendix link in the box to the right of the article online.

- 37. Rutkow L, Chang H, Daubresse M, Webster D, Stuart EA, Alexander GC. Effect of Florida’s prescription drug monitoring program and pill mill laws on opioid prescribing and use. JAMA Intern Med. 2015;175(10):1642–9. doi: 10.1001/jamainternmed.2015.3931.

- 38. Johnson H, Paulozzi L, Porucznik C, Mack K, Herter B. Decline in drug overdose deaths after state policy changes—Florida, 2010–2012. MMWR Morb Mortal Wkly Rep. 2014;63(26):569–74.

- 39. Volkow ND, Frieden TR, Hyde PS, Cha SS. Medication-assisted therapies—tackling the opioid-overdose epidemic. N Engl J Med. 2014;370(22):2063–6. doi: 10.1056/NEJMp1402780.

- 40. Bagley SM, Peterson J, Cheng DM, Jose C, Quinn E, O’Connor PG, et al. Overdose education and naloxone rescue kits for family members of individuals who use opioids: characteristics, motivations, and naloxone use. Subst Abus. 2015;36(2):149–54. doi: 10.1080/08897077.2014.989352.

- 41. Cassidy TA, DasMahapatra P, Black RA, Wieman MS, Butler SF. Changes in prevalence of prescription opioid abuse after introduction of an abuse-deterrent opioid formulation. Pain Med. 2014;15(3):440–51. doi: 10.1111/pme.12295.

- 42. New Hampshire passed legislation to create a prescription drug monitoring program in 2012, and its program became operational in 2014.

- 43. Prescription Drug Monitoring Program Center of Excellence at Brandeis. Mandating PDMP participation by medical providers: current status and experience in selected states [Internet]. Waltham (MA): The Center; 2014 Feb [cited 2016 May 12]. (COE Briefing). Available from: http://www.pdmpexcellence.org/sites/all/pdfs/COE%20briefing%20on%20mandates%20revised_a.pdf.

- 44. Prescription Drug Monitoring Program Training and Technical Assistance Center. Drug schedules monitored [Internet]. Waltham (MA): The Center; [cited 2016 May 25]. Available from: http://www.pdmpassist.org/content/drug-schedules-monitored.

- 45. Prescription Drug Monitoring Program Training and Technical Assistance Center. PDMP data collection frequency [Internet]. Waltham (MA): The Center; [cited 2016 May 25]. Available from: http://www.pdmpassist.org/content/pdmp-data-collection-frequency.

- 46. These estimates were obtained by applying our regression model to 2015 data, assuming that Missouri would enact a program and that other states without at least weekly updating of data or monitoring of at least four drug schedules would adopt these features in 2016.

- 47. Centers for Disease Control and Prevention. Prevention for states [Internet]. Atlanta (GA): CDC; [last updated 2016 Mar 15; cited 2016 May 13]. Available from: http://www.cdc.gov/drugoverdose/states/state_prevention.html.

- 48. Prescription Drug Monitoring Program Training and Technical Assistance Center. Technical assistance guide No. 04-13: funding options for prescription drug monitoring programs [Internet]. Waltham (MA): The Center; 2013 Jul 3 [cited 2016 May 13]. Available from: http://www.pdmpassist.org/pdf/PDMP_Funding_Options_TAG.pdf.

- 49. American Medical Association. Florida prescription drug monitoring program in jeopardy. amednews.com [serial on the Internet]. 2010 Sep 28 [cited 2016 May 13]. Available from: http://www.amednews.com/article/20100928/business/309289997/8/

- 50. Hinman M. Bondi saves Fasano’s prescription drug program with $2M pledge. Laker/Lutz News [serial on the Internet]. 2014 May 5 [cited 2016 May 13]. Available from: http://lakerlutznews.com/lln/?p=18469.

- 51. Rutkow L, Turner L, Lucas E, Hwang C, Alexander GC. Most primary care physicians are aware of prescription drug monitoring programs, but many find the data difficult to access. Health Aff (Millwood). 2015;34(3):484–92. doi: 10.1377/hlthaff.2014.1085.

- 52. Deyo RA, Irvine JM, Millet LM, Beran T, O’Kane N, Wright DA, et al. Measures such as interstate cooperation would improve the efficacy of programs to track controlled drug prescriptions. Health Aff (Millwood). 2013;32(3):603–13. doi: 10.1377/hlthaff.2012.0945.
