Abstract

Objective: Do-not-resuscitate (DNR) orders and advance directives are increasingly prevalent and may affect medical interventions and outcomes. Simple, automated techniques to identify patients with DNR orders do not currently exist but could help avoid costly and time-consuming chart review. This study hypothesized that a decision to withhold cardiopulmonary resuscitation would be included in a patient's dictated reports. The authors developed and validated a simple computerized search method, which screens dictated reports to detect patients with DNR status.

Methods: A list of concepts related to DNR order documentation was developed using emergency department, hospital admission, consult, and hospital discharge reports of 665 consecutive, hospitalized pneumonia patients during a four-year period (1995–1999). The list was validated in an independent group of 190 consecutive inpatients with pneumonia during a five-month period (1999–2000). The reference standard for the presence of DNR orders was manual chart review of all study patients. Sensitivity, specificity, predictive values, and nonerror rates were calculated for individual and combined concepts.

Results: The list of concepts included: DNR, Do Not Attempt to Resuscitate (DNAR), DNI, NCR, advanced directive, living will, power of attorney, Cardiopulmonary Resuscitation (CPR), defibrillation, arrest, resuscitate, code, and comfort care. As determined by manual chart review, a DNR order was written for 32.6% of patients in the derivation and for 31.6% in the validation group. Dictated reports included DNR order–related information for 74.5% of patients in the derivation and 73% in the validation group. If mentioned in the dictated report, the combined keyword search had a sensitivity of 74.2% in the derivation group (70.0% in the validation group), a specificity of 91.5% (81.5%), a positive predictive value of 80.9% (63.6%), a negative predictive value of 88.0% (85.5%), and a nonerror rate of 85.9% (77.9%). DNR and resuscitate were the most frequently used and power of attorney and advanced directives the least frequently used terms.

Conclusion: Dictated hospital reports frequently contained DNR order–related information for patients with a written DNR order. Using an uncomplicated keyword search, electronic screening of dictated reports yielded good accuracy for identifying patients with DNR order information.

Resuscitating people with sudden cardiac or respiratory arrest using closed-chest compression and mouth-to-mouth ventilation was introduced formally in 1960.1 Since then, cardiopulmonary resuscitation efforts have become part of standard clinical care for hospitalized patients who suffer a cardiac or respiratory arrest. However, clinicians, patients, and families decide to forego resuscitation in certain situations.2

Discussion about end-of-life issues has gained increased attention in medical and lay publications. Medical societies and other organizations have issued consensus statements, and legislation has codified patient autonomy in end-of-life decisions in the Patient Self-Determination Act.3,4 Patients can specify their medical care for end-of-life situations in an advance directive document, such as a living will or a durable power of attorney for health care. Physicians may honor a patient's request to limit aggressive treatment by writing a do-not-resuscitate (DNR) order. Cardiopulmonary resuscitation may be the only medical procedure that does not require a physician's order, but requires an order to withhold it.5 Because of the public's increased awareness of end-of-life issues, advance directives and DNR orders are increasingly discussed and documented in the hospital setting.6 DNR orders are written for 18% to 28% of hospitalized patients and for up to 70% of hospitalized patients at the time of death.5,6

As advance directives and DNR orders have fundamental implications for patient care, the discussions about end-of-life treatment options between the physician and the patient or the patient's family are documented in the patient's chart. The documentation of a DNR order usually consists of hand-written notes on order sheets, handwritten progress notes, designated procedure-specific paper-based forms, or references to discussions and decisions in unstructured dictated reports.

Retrospective research studies often need to identify and exclude patients with DNR orders, because these patients may receive different care and experience different outcomes, which can bias study results. Studies that focus on end-of-life issues should identify all DNR patients in the study population. As long as electronic documentation of advance directives and DNR orders remains the exception,7,8,9 identifying patients with DNR orders remains challenging and generally requires costly and time-consuming manual chart review.

Due to the common use of DNR orders and a heightened focus on appropriate documentation, we hypothesized that DNR orders are mentioned in the dictated reports that enter the computerized patient record. This study examined whether a simple, computerized keyword search of dictated reports is able to identify patients with DNR orders.

Materials and Methods

The study was performed at LDS Hospital, Salt Lake City, Utah, a tertiary care, university-affiliated hospital with 540 beds. LDS Hospital uses an integrated clinical information system (HELP System) for documenting, reporting, and supporting physicians with a variety of computerized clinical decision support systems.10 This analysis was based on information collected for two studies, the primary goal of which was to examine processes related to the delivery of pneumonia care and the evaluation of a computerized pneumonia decision support system.11 The study included all adult patients with pneumonia who were seen initially in the emergency department and subsequently admitted to the hospital during two separate study periods. Patients from the first, four-year-long study period (June 1995–June 1999) were used in the derivation group. Patients from the second, five-month study period (November 1999–April 2000) were included in the validation group. For patients in the derivation group, the definition of community-acquired pneumonia included an ICD-9 code of pneumonia and a chest x-ray report compatible with pneumonia as determined by the majority vote of three physician reviewers. In the validation group, patients with community-acquired pneumonia were identified using a three-step review process, which has been described previously.12 In summary, the process reviewed all emergency department encounters during the study period and applied increasingly stringent criteria at each step to exclude patients with only a remote chance of having pneumonia. The third step included a panel of three physicians who reviewed each patient's chart and radiology films and determined whether pneumonia was present or absent. The study was approved by the local Institutional Review Board and Research and Human Rights Committee.

Do-Not-Resuscitate Orders

To establish a reference standard for resuscitation status, one author manually reviewed the paper charts of all patients during the two study periods using a standardized abstraction form. Data abstraction included whether a written DNR order was present and whether DNR order information was mentioned in dictated reports. During the first study period, the Division of Medical Ethics instituted a procedure-specific paper-based DNR form and promoted its use through educational instruction.13 The DNR form was slightly larger than regular-sized paper and had a colored edge to make it visible and easy to locate in a patient's chart. A DNR order was considered present if there was a written order in the patient's medical chart during the hospitalization. The mentioning of a DNR order in a dictated report was insufficient if a written DNR order was not actually present in the chart.

Among patients without a DNR order, we identified those patients who had actions described in the chart consistent with a DNR order. An example would be a patient without an explicit DNR order but with documentation that describes withdrawing of life-sustaining interventions and providing comfort measures only.

The performance of an electronic screening method can only be evaluated if information is available in computable format. For example, if DNR status information is noted in handwritten progress notes only and unavailable in electronic format, a keyword search will not be able to detect patients with a DNR order. In these instances, electronic screening for DNR orders in dictated reports will fail. However, failure to detect such cases does not provide information about the quality of electronic screening of concepts but rather reflects the documentation practices. To evaluate the keywords used to identify patients with a DNR order, we performed a second analysis. For this second analysis, the reference standard included patients who had DNR order information both in paper-based (e.g., DNR order form, progress report) and electronic form (dictated reports).

Selection of Keywords

A list of DNR-related keywords was developed using the derivation population and reviewed in group discussions. The list of keywords included terms that are directly and indirectly related to DNR order information. In addition, the list of keywords was submitted to a Unified Medical Language System (UMLS) search to identify possible abbreviations and synonyms.14 For common abbreviations, the fully spelled term, such as do not resuscitate for DNR, was included. Fully spelled terms were searched in truncated format (indicated by using the symbol “$”) to allow for different derivations of a root term. For example, the truncated term resusc$ includes concepts such as resuscitate, resuscitation, resuscitated, or resuscitative. Variants of keywords, such as concatenation of keywords by dashes (Do Not Resuscitate and Do-Not-Resuscitate), or the use of punctuation (DNR and D.N.R.), were also considered. We did not consider variants that may have occurred due to misspelled terms in dictated reports. For abbreviations, we evaluated the shortest term possible but added leading or trailing blank spaces if the abbreviation was a substring of other frequently used terms. This approach was used for the keyword NCR (no cardiopulmonary resuscitation), which is a substring of the term iNCRease$ or paNCReat$, and the keyword POA (power of attorney), which is a substring of hyPOActiv$ or hyPOAlbumin$. Because the concept resuscitation appeared frequently in the context of fluid resuscitation, we specifically excluded the concept fluid resuscitation. Similarly, we excluded the term full code when searching for the concept code. The evaluated keywords are listed in Table 1.

Table 1.

Concepts, Search Terms, and Variations Used to Identify DNR Patients

| Concept | Search Term | Variants |
|---|---|---|
| DNR | DNR | Includes punctuation |
| | Do not resusc$ | Includes dashes |
| | To be resusc$ | Includes “not to be resusc$” |
| | To be not resusc$ | Includes dashes |
| | To not be resusc$ | Includes dashes |
| DNAR | DNAR | Includes punctuation |
| | Do not attempt | Includes dashes |
| DNI | DNI | Includes punctuation |
| | Do not intub$ | Includes dashes |
| | Not be intub$ | Includes dashes |
| | Not to be intub$ | Includes dashes |
| | Be not intub$ | Includes dashes |
| NCR | _NCR or NCR_ | Includes punctuation |
| | No cardiopulmonary | |
| | No-cardiopulmonary | |
| | No cardio-pulmonary | |
| | No-cardio-pulmonary | |
| | No cardio pulmonary | |
| | No chest compr$ | |
| | No cardiac compr$ | |
| Advanced directives | $ced dire$ | |
| Living will | Living will | |
| Power of attorney | _POA or POA_ | Includes punctuation |
| | $er of att$ | Includes dashes |
| CPR | CPR | Includes punctuation |
| | Cardio pulmonary resusc$ | Includes dashes |
| | Cardiac resusc$ | |
| | Cardiac compr$ | |
| | Chest compr$ | |
| Defibrillation | Defibrill$ | |
| Arrest | Arrest | |
| | $ac arres$ | |
| | $ory arres$ | |
| Resuscitate | Resusc$ | Excludes “fluid resusc$” |
| Code | Code status | Excludes “full code” |
| Comfort care | Comfort care | |

NOTE: The $ symbol indicates the truncation of a term. The underscore symbol (“_”) indicates the explicit use of a leading or trailing blank space.

DNR = do not resuscitate; DNAR = do not attempt to resuscitate; DNI = do not intubate; NCR = no cardiopulmonary resuscitation; CPR = cardiopulmonary resuscitation.
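The search-term notation above can be sketched in a few lines of Python. This is an illustration only, not the authors' implementation: the concept subset, the regular expressions, and the names `CONCEPTS`, `EXCLUSIONS`, `concepts_in`, and `patient_flagged` are all assumptions made here. Truncation ("$") is modeled as an open-ended substring match, the explicit blank ("_") as a whitespace character, and exclusions are handled by removing the excluded phrase before searching, following the description in the text.

```python
import re

# Hypothetical, simplified subset of the keyword list, written as regular
# expressions. Blanks between words may also be dashes; optional periods
# cover punctuation variants such as "D.N.R.".
CONCEPTS = {
    "DNR": [r"d\.?n\.?r", r"do[\s-]+not[\s-]+resusc"],
    "NCR": [r"\sncr", r"ncr\s"],  # leading or trailing blank required
    "Resuscitate": [r"resusc"],
    "Code": [r"code"],
}

# Phrases removed from the text before the concept is searched.
EXCLUSIONS = {
    "Resuscitate": [r"fluid[\s-]+resusc"],
    "Code": [r"full[\s-]+code"],
}


def concepts_in(report):
    """Return the set of concepts whose patterns occur in one report."""
    text = " " + report.lower() + " "  # pad so \s can match at the edges
    found = set()
    for concept, patterns in CONCEPTS.items():
        scrubbed = text
        for excluded in EXCLUSIONS.get(concept, []):
            scrubbed = re.sub(excluded, " ", scrubbed)
        if any(re.search(pattern, scrubbed) for pattern in patterns):
            found.add(concept)
    return found


def patient_flagged(reports):
    """Combined search: flag a patient if any report contains any concept."""
    return any(concepts_in(report) for report in reports)
```

The padding with blanks is a design choice of this sketch so that an abbreviation at the very start or end of a report still satisfies the leading or trailing blank requirement.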

In addition, we examined whether claims data could be used to identify patients with DNR orders and augment the identification rate when combined with keywords. Although ICD-9 codes do not exist for DNR information (the explicit withholding of a procedure), surrogate codes exist that might indicate that a life-supporting discussion has occurred with the patient or the family. We applied a previously used list of ICD-9 codes15: 427.5 (cardiac arrest), 427.41 (ventricular fibrillation), 427.1 (ventricular tachycardia), 99.62 (defibrillation), and 99.60 (cardiopulmonary resuscitation).

Dictated Reports

All dictated reports are stored electronically in the clinical information system. Due to their likelihood of mentioning a DNR order, the following dictated reports were examined for DNR order status: emergency department reports (including addenda), hospital admission reports, consultations, and hospital discharge notes (including death summaries).

Outcome Variables

We computed standard test characteristics for the derivation and validation groups. The test characteristics included sensitivity, specificity, positive and negative predictive values, and nonerror rates. They were determined for each term individually and for the combination of all DNR order–related keywords. Sensitivity was determined by dividing the number of patients who had a DNR order and a keyword present by the total number of patients with a DNR order. Specificity was calculated by dividing the number of patients without a DNR order and without the keyword present by the total number of patients without a DNR order. Positive predictive value was determined by dividing the number of patients with a DNR order and a keyword present by the number of all patients with the keyword present. Negative predictive value was calculated by dividing the number of all patients without a DNR order and without the keyword present by the total number of patients without the keyword present. The nonerror rate provides an overall accuracy measure: it is the sum of patients with a DNR order and the keyword present (true positives) and patients without a DNR order and without the keyword present (true negatives), divided by all patients.
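As a minimal sketch, the definitions above translate directly into code on a 2 x 2 table. The function name `screening_metrics` and the example counts are assumptions: the counts are not taken from the article's tables but are back-calculated from figures reported in the text for the combined search in the derivation group (665 patients, 217 with a DNR order, 199 patients with a keyword, sensitivity 74.2%).

```python
def screening_metrics(tp, fp, fn, tn):
    """Test characteristics from a 2 x 2 table of keyword vs. DNR order.

    tp: DNR order and keyword present    fp: keyword present, no DNR order
    fn: DNR order, no keyword            tn: no DNR order and no keyword
    """
    total = tp + fp + fn + tn
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "nonerror_rate": (tp + tn) / total,
    }


# Back-calculated (assumed) counts for the combined search, derivation group.
combined = screening_metrics(tp=161, fp=38, fn=56, tn=410)
for name, value in combined.items():
    print(f"{name}: {100 * value:.1f}%")
```

With these assumed counts the five measures round to the percentages reported for the combined keyword search in the derivation group.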

We calculated 95% confidence intervals for the differences of proportions between the derivation and validation group. Because values in the 2 x 2 matrices included small numbers and resulted in proportions smaller than 10% and larger than 90%, we calculated confidence intervals following the methods proposed by Newcombe.16
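The text cites Newcombe without stating which of his interval methods was applied. The sketch below assumes the hybrid Wilson score method without continuity correction, which is commonly recommended for proportions near 0% or 100%; the function names and the example counts (161 of 217 and 42 of 60, back-calculated from the reported sensitivities) are likewise assumptions.

```python
from math import sqrt

Z95 = 1.959964  # standard normal quantile for a two-sided 95% interval


def wilson_interval(successes, n, z=Z95):
    """Wilson score interval for a single proportion."""
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half


def newcombe_difference(x1, n1, x2, n2, z=Z95):
    """Interval for p1 - p2 built from the two Wilson intervals."""
    p1, p2 = x1 / n1, x2 / n2
    l1, u1 = wilson_interval(x1, n1, z)
    l2, u2 = wilson_interval(x2, n2, z)
    diff = p1 - p2
    lower = diff - sqrt((p1 - l1) ** 2 + (u2 - p2) ** 2)
    upper = diff + sqrt((u1 - p1) ** 2 + (p2 - l2) ** 2)
    return lower, upper


# Assumed counts: combined-search sensitivity, derivation vs. validation.
low, high = newcombe_difference(161, 217, 42, 60)
print(f"{low:.3f} to {high:.3f}")
```

Under these assumptions the interval comes out at roughly −0.077 to 0.178, in line with the confidence interval reported for the difference in combined sensitivity.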

Results

The derivation group included 665 pneumonia patients (female, 46.8%; mean age, 66.7 ± 19.8 years; in-hospital mortality rate, 8.1%) and the validation group included 190 patients (female, 50%; mean age, 66.9 ± 17.2 years; in-hospital mortality rate, 8.9%). Five patients were excluded because their charts were not available. The number and category of dictated reports are shown in Table 2.

Table 2.

Availability of Dictated Reports

| Type of Report | Derivation (n = 665) | Validation (n = 190) |
|---|---|---|
| Emergency department report | 97.1% | 98.9% |
| Hospital admission report | 92.2% | 94.2% |
| Consultation report | 22.0% | 31.1% |
| Hospital discharge report | 95.0% | 94.7% |

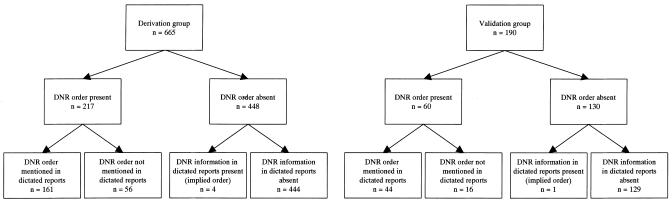

Figure 1 shows the categorization of patients for both groups as determined by manual chart review. In the derivation group, a DNR order was written for 217 (32.6%) patients (female, 54.4%; mean age, 79.9 ± 12.8 years; mortality rate, 24.9%). DNR order information was present in dictated reports for 161 patients (74.5%). Of the 54 patients who died, 46 (85%) had a DNR order written, and 171 (28.0%) DNR orders were written for the 611 patients who survived. Compared with the derivation population, the patients in the validation group had similar descriptive DNR order characteristics. In the validation group, a DNR order was written for 60 (31.6%) patients (female, 52%; mean age, 78.1 ± 10.7 years; mortality rate, 23.3%). There were 44 DNR orders (73%) mentioned in the dictated reports. Of the 17 patients who died, 14 (82%) had a DNR order written, and 46 (26.6%) DNR orders were written for the 173 patients who survived. Among patients without a DNR order, four patients in the derivation and one patient in the validation group had an implied DNR order. In these patients, clinical care was compatible with following a DNR order, but no DNR order was written.

Figure 1.

Flow diagram of patients, DNR order status, and order documentation in dictated reports.

In the derivation group, keywords appeared 444 times in 199 different patients. The number of different concepts per patient ranged from one to nine and averaged 2.2. In the validation group, keywords were mentioned 168 times in 66 different patients. The number of different concepts per patient ranged from one to eight and averaged 2.5. DNR and resuscitate were the most frequently dictated and power of attorney and advanced directives the least frequently dictated terms in both groups. Although DNAR (do-not-attempt-to-resuscitate) is explicitly used on the institutional DNR order form, it was never mentioned in either group. The overall occurrence of concepts was comparable between the derivation and validation group.

Performance measures for individual concepts are shown in Table 3. They varied considerably when compared against the reference standard, which included patients without DNR order information in the dictated reports. DNR, resuscitation, and code were the most sensitive terms. DNI and comfort care had the highest positive predictive value in both groups. In both groups, the term arrest never occurred in isolation and did not increase the number of identified patients with a DNR order. The nonerror rate for individual concepts ranged between 68.1% and 79.5% for the derivation (with power of attorney being an exception) and between 66.8% and 82.6% for the validation group (Table 3).

Table 3.

Performance Measures of Concepts if a DNR Order Was Present

| Concept | Frequency (Deriv) | Frequency (Valid) | Sensitivity (Deriv) | Sensitivity (Valid) | Sensitivity (CI) | Specificity (Deriv) | Specificity (Valid) | Specificity (CI) | Positive Predictive Value (Deriv) | Positive Predictive Value (Valid) | Positive Predictive Value (CI) | Negative Predictive Value (Deriv) | Negative Predictive Value (Valid) | Negative Predictive Value (CI) | Nonerror Rate (Deriv) | Nonerror Rate (Valid) | Nonerror Rate (CI) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DNR | 85 | 37 | 38.2 | 53.3 | −0.286 to −0.01 | 99.6 | 96.2 | 0.009 to 0.083 | 97.6 | 86.5 | −0.016 to 0.257 | 76.9 | 81.7 | −0.112 to 0.028 | 79.5 | 82.6 | −0.088 to 0.039 |
| DNI | 14 | 15 | 6.5 | 23.3 | −0.293 to −0.071 | 100 | 99.2 | −0.003 to 0.042 | 100 | 93.3 | −0.156 to 0.298 | 68.8 | 73.7 | −0.119 to 0.029 | 69.5 | 75.3 | −0.124 to 0.016 |
| NCR | 62 | 11 | 26.3 | 16.7 | −0.03 to 0.192 | 98.9 | 99.2 | −0.019 to 0.032 | 91.9 | 90.9 | −0.11 to 0.3 | 73.5 | 72.1 | −0.057 to 0.092 | 75.2 | 73.2 | −0.047 to 0.094 |
| Advance directives | 7 | 3 | 2.8 | 3.3 | −0.087 to 0.034 | 99.8 | 99.2 | −0.007 to 0.04 | 85.7 | 66.7 | −0.269 to 0.664 | 67.9 | 69.0 | −0.082 to 0.067 | 68.1 | 68.9 | −0.08 to 0.069 |
| Living will | 14 | 9 | 5.5 | 13.3 | −0.189 to −0.003 | 99.6 | 99.2 | −0.01 to 0.038 | 85.7 | 88.9 | −0.304 to 0.308 | 68.5 | 71.3 | −0.099 to 0.05 | 68.9 | 72.1 | −0.101 to 0.043 |
| CPR | 49 | 16 | 19.4 | 20.0 | −0.133 to 0.094 | 98.4 | 96.9 | −0.01 to 0.062 | 85.7 | 75.0 | −0.086 to 0.362 | 71.6 | 72.4 | −0.08 to 0.07 | 72.6 | 72.6 | −0.068 to 0.075 |
| Defibrillation | 10 | 9 | 3.7 | 11.7 | −0.186 to −0.012 | 99.6 | 98.5 | −0.005 to 0.05 | 80.0 | 77.8 | −0.326 to 0.378 | 68.1 | 70.7 | −0.098 to 0.052 | 68.3 | 71.1 | −0.098 to 0.048 |
| Arrest | 38 | 11 | 10.1 | 6.7 | −0.064 to 0.097 | 96.4 | 94.6 | −0.017 to 0.073 | 57.9 | 36.4 | −0.108 to 0.471 | 68.9 | 68.7 | −0.072 to 0.081 | 68.3 | 66.8 | −0.058 to 0.092 |
| Resuscitate | 84 | 34 | 31.8 | 30.0 | −0.12 to 0.138 | 96.7 | 87.7 | 0.039 to 0.158 | 82.1 | 52.9 | 0.109 to 0.467 | 74.5 | 73.1 | −0.059 to 0.096 | 75.5 | 69.5 | −0.01 to 0.136 |
| Code | 54 | 19 | 21.7 | 25.0 | −0.165 to 0.076 | 98.4 | 96.9 | −0.01 to 0.062 | 87.0 | 78.9 | −0.089 to 0.313 | 72.2 | 73.7 | −0.086 to 0.063 | 73.4 | 74.2 | −0.075 to 0.066 |
| Power of attorney | 5 | 0 | 1.8 | 0.0 | −0.043 to 0.046 | 99.2 | 100 | −0.046 to 0.021 | 80.0 | N/A | N/A | 35.5 | 68.4 | −0.409 to −0.242 | 36.1 | 68.4 | −0.402 to −0.236 |
| Comfort care | 22 | 4 | 10.1 | 6.7 | −0.064 to 0.097 | 100 | 100 | −0.009 to 0.029 | 100 | 100 | −0.149 to 0.049 | 69.7 | 69.9 | −0.074 to 0.075 | 70.7 | 70.5 | −0.069 to 0.078 |
| ICD-9 code | 35 | 6 | 6.0 | 1.7 | −0.033 to 0.085 | 95.1 | 96.2 | −0.043 to 0.04 | 37.1 | 16.7 | −0.216 to 0.419 | 67.6 | 67.9 | −0.077 to 0.076 | 66.0 | 66.3 | −0.076 to 0.075 |

Values are in percent. The concept “DNAR” (do-not-attempt-to-resuscitate) was never used and, therefore, not included in the table.

Deriv = derivation group; Valid = validation group; CI = 95% confidence interval around the difference between the proportions of the derivation group and the validation group; DNR = do not resuscitate; DNI = do not intubate; NCR = no cardiopulmonary resuscitation; CPR = cardiopulmonary resuscitation.

With respect to individual concepts, ICD-9 codes had relatively poor performance characteristics (Table 3). Based on the sole presence of an ICD-9 code, an additional three patients with a DNR order were identified in the derivation group; in the validation group the ICD-9 codes did not identify any additional patients.

When all keywords were combined and compared against the reference standard, the sensitivities in the derivation and validation group were 74.2% and 70.0% (95% confidence interval of difference [CI]: −0.077–0.178), the specificities 91.5% and 81.5% (CI: 0.035–0.178), the positive predictive values 80.9% and 63.6% (CI: 0.051–0.303), the negative predictive values 88.0% and 85.5% (CI: −0.036–0.102), and the nonerror rates 85.9% and 77.9% (CI: 0.019–0.148).

The results of the second analysis, which examined the ability of the keywords to identify DNR patients when DNR order information was available in electronic format, are shown in Table 4. The nonerror rate for individual concepts ranged between 76.1% and 88% for the derivation group and between 75.3% and 91.1% for the validation group.

Table 4.

Performance Measures of Concepts if a DNR Order Was Mentioned in the Dictated Reports

| Concept | Frequency (Deriv) | Frequency (Valid) | Sensitivity (Deriv) | Sensitivity (Valid) | Sensitivity (CI) | Specificity (Deriv) | Specificity (Valid) | Specificity (CI) | Positive Predictive Value (Deriv) | Positive Predictive Value (Valid) | Positive Predictive Value (CI) | Negative Predictive Value (Deriv) | Negative Predictive Value (Valid) | Negative Predictive Value (CI) | Nonerror Rate (Deriv) | Nonerror Rate (Valid) | Nonerror Rate (CI) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DNR | 85 | 37 | 51.0 | 72.7 | −0.345 to −0.047 | 99.6 | 96.2 | 0.008 to 0.074 | 97.6 | 86.5 | 0.016 to 0.257 | 86.6 | 81.0 | −0.101 to 0.003 | 88.0 | 91.1 | −0.073 to 0.023 |
| DNI | 14 | 15 | 6.7 | 31.8 | −0.383 to −0.101 | 100 | 99.3 | −0.003 to 0.038 | 100 | 93.3 | −0.156 to 0.298 | 77.4 | 82.9 | −0.114 to 0.015 | 77.9 | 83.7 | −0.114 to 0.008 |
| NCR | 62 | 11 | 35.4 | 22.7 | −0.032 to 0.252 | 99.0 | 99.3 | −0.017 to 0.028 | 91.9 | 90.9 | −0.11 to 0.3 | 82.8 | 81.0 | −0.043 to 0.087 | 83.6 | 81.6 | −0.037 to 0.087 |
| Advance directives | 7 | 3 | 3.7 | 4.5 | −0.116 to 0.045 | 99.8 | 99.3 | −0.006 to 0.036 | 85.7 | 66.7 | −0.269 to 0.664 | 76.4 | 77.5 | −0.075 to 0.061 | 76.5 | 77.4 | −0.072 to 0.063 |
| Living will | 14 | 9 | 7.5 | 18.2 | −0.249 to −0.007 | 99.6 | 99.3 | −0.009 to 0.034 | 85.7 | 88.9 | −0.304 to 0.308 | 77.1 | 80.1 | −0.092 to 0.041 | 77.3 | 80.5 | −0.092 to 0.037 |
| CPR | 49 | 16 | 24.2 | 27.3 | −0.188 to 0.1 | 98.0 | 97.3 | −0.016 to 0.049 | 79.6 | 75.0 | −0.153 to 0.307 | 80.2 | 81.6 | −0.075 to 0.057 | 80.2 | 81.1 | −0.068 to 0.059 |
| Defibrillation | 10 | 9 | 4.3 | 13.6 | −0.226 to −0.008 | 99.4 | 97.9 | −0.003 to 0.053 | 70.0 | 66.7 | −0.337 to 0.4 | 76.5 | 79.0 | −0.088 to 0.047 | 76.4 | 78.4 | −0.083 to 0.051 |
| Arrest | 38 | 11 | 12.4 | 9.1 | −0.095 to 0.115 | 96.4 | 95.2 | −0.019 to 0.062 | 52.6 | 36.4 | −0.159 to 0.422 | 77.5 | 77.7 | −0.066 to 0.072 | 76.1 | 75.3 | −0.057 to 0.081 |
| Resuscitate | 84 | 34 | 41.0 | 40.9 | −0.157 to 0.16 | 96.6 | 89.0 | 0.03 to 0.138 | 79.8 | 52.9 | 0.084 to 0.446 | 83.8 | 83.3 | −0.055 to 0.077 | 83.3 | 77.9 | 0.007 to 0.124 |
| Code | 54 | 19 | 0.6 | 34.1 | −0.216 to 0.088 | 98.4 | 97.3 | −0.011 to 0.053 | 85.2 | 78.9 | −0.011 to 0.296 | 81.2 | 83.0 | −0.078 to 0.051 | 81.5 | 82.6 | −0.068 to 0.055 |
| Power of attorney | 5 | 0 | 2.5 | 0.0 | −0.057 to 0.062 | 99.8 | 100 | −0.011 to 0.024 | 80.0 | N/A | N/A | 76.2 | 76.8 | −0.07 to 0.066 | 76.2 | 76.8 | −0.07 to 0.066 |
| Comfort care | 22 | 4 | 12.4 | 9.1 | −0.095 to 0.115 | 99.6 | 100 | −0.014 to 0.022 | 90.9 | 100 | −0.278 to 0.403 | 78.1 | 78.5 | −0.067 to 0.067 | 78.5 | 78.9 | −0.066 to 0.065 |
| ICD-9 code | 35 | 6 | 5.6 | 0.0 | −0.029 to 0.103 | 94.8 | 96.1 | −0.043 to 0.035 | 25.7 | 0 | −0.15 to 0.421 | 75.9 | 76.8 | −0.074 to 0.063 | 73.2 | 74.5 | −0.078 to 0.06 |

All values in percent. The concept “DNAR” (do-not-attempt-to-resuscitate) was never used and, therefore, not included in the table.

Deriv = derivation group; Valid = validation group; CI = 95% confidence interval around the difference between the proportions of the derivation group and the validation group; DNR = do not resuscitate; DNI = do not intubate; NCR = no cardiopulmonary resuscitation; CPR = cardiopulmonary resuscitation.

For the second analysis, the combined search using all keywords resulted in sensitivities of 96.3% in the derivation and 93.2% in the validation group (CI: −0.03–0.147), specificities of 91.3% and 89.7% (CI: −0.033–0.079), positive predictive values of 77.9% and 73.2% (CI: −0.07–0.185), negative predictive values of 98.7% and 97.8% (CI: −0.011–0.051), and nonerror rates of 92.5% and 90.5% (CI: −0.021–0.073). The combined keyword search missed only six (false-negative cases) of 161 patients in the derivation and three of 44 patients in the validation group. Examples of missed DNR order–related information included: “withdraw life support measures,” “[the patient] desired no heroic measures or mechanical support measures including ventilation or chest compressions,” and “they [the family members] decided to withdraw support.” Among the five patients with an implied DNR order (DNR order information mentioned in the dictated report, but no written order), the combined keyword search identified three.

Discussion

Our evaluation of a large pneumonia population showed that an uncomplicated search algorithm can identify patients with a documented DNR order in a dictated report. The test characteristics for single concepts varied considerably and were relatively low, indicating that different concepts are used to describe DNR orders in dictated reports. However, a combined search using multiple keywords had high overall accuracy in identifying patients with DNR order information in electronically available reports. The list of keywords represents a practical and cost-effective approach to screening dictated reports for the presence of DNR order information.

The high specificity and high negative predictive value of the combined search indicate that DNR order information is more frequently available in dictated reports when DNR orders are written. In the absence of a documented patient–provider discussion about end-of-life issues, full life support remains an implicit notion of the patient's preference. For certain applications, high sensitivity is needed to find all patients with DNR order information. In such situations, the list of keywords may include additional terms or combinations of terms that may indicate the presence of DNR order–related information but are more frequently used in a different context and may result in many false-positive results. An example of such a term includes the concept withdraw$, which is frequently used in the context of alcohol abuse.

Documentation, reporting, and communication of DNR order information and patient preferences vary among clinicians. For about 25% of patients in both cohorts, DNR orders were written during the patient's hospitalization but not mentioned in any dictated reports. For these patients, electronic screening of dictated reports needs to be augmented by manual chart review.

Documentation of patients' end-of-life preferences occurs throughout the spectrum of health care. A feasible and simple approach to easily identify such patients retrospectively does not currently exist but is necessary in a variety of situations. Patients with DNR orders frequently need to be identified and excluded in retrospective studies. These patients represent a population with different characteristics that may confound outcome variables. In prospective studies, a prevalence estimate of patients with DNR orders who subsequently need to be excluded from the study cohort is useful for study planning purposes. The examination of large clinical datasets may benefit from a feasible and cost-effective approach to electronically screen for DNR patients. Studies that focus on end-of-life and DNR order–related issues could identify patients across different diseases and settings. Lastly, the keyword search may support audit functions to monitor and understand the patterns of DNR order documentation within an institution.

We believe that our methodology to identify DNR orders from text-based documents is primarily applicable to retrospective data analyses. Although it is conceivable to create a real-time decision support system that is based on a keyword search of available narrative documents and prompts clinicians to place or initiate a discussion with the patient about a DNR order, there are important ethical and legal implications that require careful consideration about pursuing such an approach. For clinical purposes, it is not possible to access the most current DNR status information through an electronic search if the most current document is only paper based.

Natural language understanding tools have been successfully applied for mining and extracting information from text-based documents.17,18,19 They are particularly helpful in situations that require the semantic interpretation of a phrase, sentence, lexical variant, or negated finding to infer the presence or absence of a concept.20 Despite their advantages over simple keyword searches, natural language understanding tools require specialized parsing programs that are capable of applying domain knowledge to determine whether a term is represented in a text-based document. In our study, the keyword search missed a few cases in which the semantic capabilities of a natural language understanding method may have succeeded. Our keyword-based approach, however, is a simple, cost-effective, yet quite accurate method that can be implemented by researchers using a commonly available database or word processing tools.

Our study has several limitations. We considered only pneumonia patients for this study. As DNR order rates vary among different diseases,21,22 an alternative study design may have examined a DNR keyword search for all hospitalized patients independent of their disease status. Although it remains to be demonstrated, we do not believe that the terminology used to document DNR status varies among different diseases during a certain time period, which would lead to a change in performance of the DNR keyword search and bias our results in an unknown direction. We believe that pneumonia is a reasonable choice for the purpose of our study as the frequency of DNR orders is relatively high in pneumonia patients compared with other diseases.21,22 In addition, patients with pneumonia can have a variety of concomitant chronic diseases or conditions that may warrant end-of-life discussions. It is not uncommon that a DNR status discussion between patient and physician is initiated as a result of the patient's underlying disease and general condition, rather than the pneumonia episode.

Physician-based documentation bias may be present, as this study included reports from only a single institution; however, the long study period during which many different physicians provided and documented care makes documentation bias less likely. For comparison, pneumonia patients from a study that included four different hospitals (1991–1994) had a comparable average age for DNR patients, a lower DNR order prevalence (22.1%), and a slightly lower mortality rate (6.6%).22 DNR order prevalence was similar in another study (28.9%), which examined 23,709 pneumonia patients over a seven-year period (1991–1997).23

We did not examine additional factors such as the timing of DNR orders (early versus late DNR orders), the particular treatment preferences of patients, or the reasons a DNR order was written. Such detailed analyses are beyond the capabilities of an uncomplicated keyword search and may continue to require detailed abstraction from chart review.

Conclusion

This study examined the ability of an uncomplicated, electronic search of dictated reports to identify patients with a DNR order written during their hospitalization. If DNR order information is documented in the patients' dictated reports, the keyword search is a feasible and accurate approach to electronically detect patients with a written DNR order. It may provide a minimum estimate of the patients in a study with DNR orders and enable investigators to evaluate bias or confounding attributable to the inclusion of these patients. The method may reduce the workload of a manual chart review, which remains necessary for DNR patients whose dictated reports do not mention DNR status.

Dr. Aronsky was supported by grant 31-44406.95 from the Deseret Foundation.

References

- 1. Kouwenhoven WB, Jude JR, Knickerbocker GG. Closed-chest cardiac massage. JAMA. 1960;173:1064–7.

- 2. Rabkin MT, Gillerman G, Rice NR. Orders not to resuscitate. N Engl J Med. 1976;295:364–6.

- 3. Eliasson AH, Parker JM, Shorr AF, et al. Impediments to writing do-not-resuscitate orders. Arch Intern Med. 1999;159:2213–8.

- 4. Omnibus Budget Reconciliation Act of 1990; 42 USC §§ 1395cc(f)(1) and 1396a(a): “Patient Self-Determination Act” (P.L. 101-508 sec. 4206 & 4751).

- 5. Rubenfeld GD. Do-not-resuscitate orders: a critical review of the literature. Respir Care. 1995;40:528–35.

- 6. Wenger NS, Pearson ML, Desmond KA, Brook RH, Kahn KL. Outcomes of patients with do-not-resuscitate orders. Toward an understanding of what do-not-resuscitate orders mean and how they affect patients. Arch Intern Med. 1995;155:2063–8.

- 7. Heffner JE, Barbieri C, Fracica P, Brown LK. Communicating do-not-resuscitate orders with a computer-based system. Arch Intern Med. 1998;158:1090–5.

- 8. Sulmasy DP, Marx ES. A computerized system for entering orders to limit treatment: implementation and evaluation. J Clin Ethics. 1997;8:258–63.

- 9. Dexter PR, Wolinsky FD, Gramelspacher GP, et al. Effectiveness of computer-generated reminders for increasing discussions about advance directives and completion of advance directive forms. A randomized, controlled trial. Ann Intern Med. 1998;128:102–10.

- 10. Gardner RM, Pryor TA, Warner HR. The HELP hospital information system: update 1998. Int J Med Inf. 1999;54:169–82.

- 11. Aronsky D, Haug PJ. Automatic identification of patients eligible for a pneumonia guideline. Proc AMIA Symp. 2000:12–6.

- 12. Aronsky D, Chan KJ, Haug PJ. Evaluation of a computerized diagnostic decision support system for patients with pneumonia: study design considerations. J Am Med Inform Assoc. 2001;8:473–85.

- 13. Jacobson JA, Kasworm E. May I take your order: a user-friendly resuscitation status and medical treatment plan form. Qual Manag Health Care. 1999;7:13–20.

- 14. Humphreys BL, Lindberg DA, Schoolman HM, Barnett GO. The Unified Medical Language System: an informatics research collaboration. J Am Med Inform Assoc. 1998;5:1–11.

- 15. Kernerman P, Cook DJ, Griffith LE. Documenting life-support preferences in hospitalized patients. J Crit Care. 1997;12:155–60.

- 16. Newcombe RG. Interval estimation for the difference between independent proportions: comparison of eleven methods. Stat Med. 1998;17:873–90.

- 17. Hripcsak G, Friedman C, Alderson PO, DuMouchel W, Johnson SB, Clayton PD. Unlocking clinical data from narrative reports: a study of natural language processing. Ann Intern Med. 1995;122:681–8.

- 18. Friedman C, Hripcsak G. Natural language processing and its future in medicine. Acad Med. 1999;74:890–5.

- 19. Fiszman M, Chapman WW, Aronsky D, Evans RS, Haug PJ. Automatic detection of acute bacterial pneumonia from chest X-ray reports. J Am Med Inform Assoc. 2000;7:593–604.

- 20. Chapman WW, Bridewell W, Hanbury P, Cooper FG, Buchanan BG. A simple algorithm for identifying negated findings and diseases in discharge summaries. J Biomed Inform. 2001;34:301–10.

- 21. Wenger NS, Pearson ML, Desmond KA, et al. Epidemiology of do-not-resuscitate orders. Disparity by age, diagnosis, gender, race, and functional impairment. Arch Intern Med. 1995;155:2056–62.

- 22. Baker DW, Einstadter D, Husak S, Cebul RD. Changes in the use of do-not-resuscitate orders after implementation of the Patient Self-Determination Act. J Gen Intern Med. 2003;18:343–9.

- 23. Marrie TJ, Fine MJ, Kapoor WN, Coley CM, Singer DE, Obrosky DS. Community-acquired pneumonia and do not resuscitate orders. J Am Geriatr Soc. 2002;50:290–9.