Abstract

Background: Neuropsychological testing is a central aspect of stroke research because it provides critical information about the cognitive-behavioral status of stroke survivors and informs the diagnosis and treatment of stroke-related disorders. Standard neuropsychological methods rely upon face-to-face interactions between a patient and researcher, which creates geographic and logistical barriers that impede research progress and treatment advances. Introduction: To overcome these barriers, we created a flexible and integrated system for the remote acquisition of neuropsychological data (RAND). The system we developed has a secure architecture that permits collaborative videoconferencing. The system supports shared audiovisual feeds that can provide continuous virtual interaction between a participant and researcher throughout a testing session. Shared presentation and computing controls can be used to deliver auditory and visual test items adapted from standard face-to-face materials or to execute computer-based assessments. Spoken and manual responses can be acquired, and the components of the session can be recorded for offline data analysis. Materials and Methods: To evaluate its feasibility, our RAND system was used to administer a speech-language test battery to 16 stroke survivors with a variety of communication, sensory, and motor impairments. The sessions were initiated virtually without prior face-to-face instruction in the RAND technology or test battery. Results: Neuropsychological data were successfully acquired from all participants, including those with limited technology experience and those with a communication, sensory, or motor impairment. Furthermore, participants indicated a high level of satisfaction with the RAND system and the remote assessment that it permits. 
Conclusions: The results indicate the feasibility of using the RAND system for virtual home-based neuropsychological assessment without prior face-to-face contact between a participant and researcher. Because our RAND system architecture uses off-the-shelf technology and software, it can be duplicated without specialized expertise or equipment. In sum, our RAND system offers a readily available and promising alternative to face-to-face neuropsychological assessment in stroke research.

Keywords: behavioral health, cardiology/cardiovascular disease, telemedicine, telepsychiatry, teleneurology

Introduction

Stroke is a leading cause of adult disability in the United States.1,2 Clinical and basic researchers rely on neuropsychological testing to document the natural course of stroke recovery, permit causal inferences about human brain structure–function relationships, reveal factors that predict positive outcomes, and test the efficacy of interventions.3–15 Because neuropsychological testing is a central aspect of stroke research, factors that impede its use negatively impact research progress and treatment advances. One critical impediment is that neuropsychological testing typically involves face-to-face interactions between researchers and participants. This makes it difficult for researchers to work with participants who do not live in the same local area,16,17 and it diminishes the participation of individuals with limited physical mobility or unreliable sources of transportation.18–20 The ability to remotely acquire neuropsychological data from stroke survivors located in their homes would, therefore, be an attractive alternative to face-to-face testing.19,21–23 This motivates the present effort to develop a flexible and integrated system for the remote acquisition of neuropsychological data (RAND).

The utility of telehealth and related applications for poststroke care and research was evaluated in a 2013 systematic review,17 which identified 24 peer-reviewed publications that examined two-way audiovisual communication for poststroke care. The review found that all of the primary data articles were small and preliminary in scope, and that most focused on the rehabilitation of adults. The authors concluded that the use of telemedicine in stroke is a nascent area of study. For similar conclusions, see Refs.24,25 The feasibility of remote assessment has been shown in actual or simulated satellite clinical testing sites. For review, see Refs.18,20,26–28 Furthermore, videoconferencing has been extended into home settings to deliver treatment for stuttering without face-to-face researcher contact.29–31 However, none of these prior works has involved a system suitable for the flexible acquisition of neuropsychological data in home-based settings.

There are a number of challenges associated with the development of a suitable system. One is that many neuropsychological tests require participants to interact with and respond to stimulus materials, making an audiovisual connection alone insufficient.20,27,32 A solution is to combine videoconferencing with accessories for collaborative stimulus delivery and response monitoring.20,33,34 However, past solutions of this type have involved special-purpose equipment and software, so these systems could not easily be deployed in home-based settings without considerable technical assistance.

Related work has involved computer-based test administration, which diminishes the need for administration expertise.21,35,36 A few studies have coupled computerized testing with remote assessment.21 For instance, Uysal et al.22 had a portion of their postsurgery patient sample complete an online neurocognitive battery in their homes without prior researcher contact. In a handful of other studies, face-to-face training allowed individuals with neurogenic disorders to subsequently complete home treatment or rehabilitation.37–41 One unmet challenge is the use of computerized test administration to acquire verbal responses, an essential capability for many language tests.21,42 It is also unclear whether approaches of this type would be suitable for individuals with stroke, who may have language, cognitive, motor, and sensory impairments that limit the effectiveness and usability of computer-based testing. This may be particularly true when the initial instructions for technology use and test administration are provided remotely, and not in a face-to-face introductory session.

Overall, past work highlights both the feasibility of remote assessment and the challenges associated with home-based testing environments. In the current work, these challenges are addressed through the integrated use of off-the-shelf technology and commercial software for collaborative videoconferencing. The system offers a flexible and fully featured method for home-based remote neuropsychological testing that can be readily adapted and adopted by other investigators. Results from a feasibility study indicate that it has the potential to meet a diverse set of research needs.

Materials and Methods

We developed a neuropsychological testing system for the RAND. The system integrates off-the-shelf technology and software, which allows it to be easily duplicated with minimal technical expertise and support, and deployed at a modest cost per system. It is suitable for use in home-based settings without preexisting Internet service, computing hardware, technical support, or prior contact between a participant and researcher.

RAND System Architecture

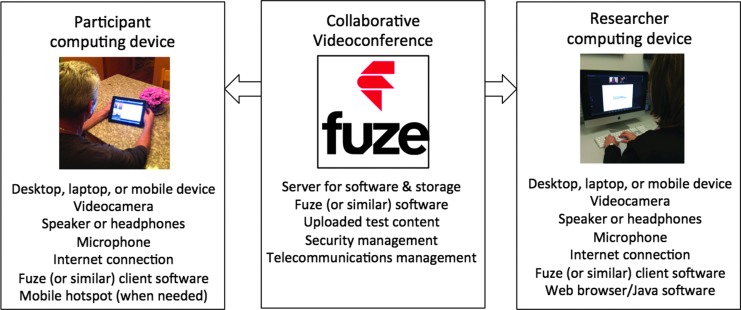

The basic architecture of our RAND system consists of a secure commercial cloud-based server and two Internet-enabled computing devices (one for the researcher and one for the participant) (Fig. 1). A variety of computing devices can be used (e.g., desktops, laptops, and mobile devices running either Macintosh or Windows operating systems). The RAND equipment allows neuropsychological data to be acquired through the use of collaborative videoconferencing software. Our system uses the Fuze43 software product to connect both devices to a cloud-based server for hosting and recording collaborative videoconferences.

Fig. 1.

RAND system architecture. The architecture for our RAND system consists of a secure commercial cloud-based server and two Internet-enabled computing devices (one for the researcher and one for the participant). A variety of computing devices can be used (e.g., desktops, laptops, and mobile devices running either Macintosh or Windows operating systems). For the specific system that we developed, the participant device was a Wi-Fi-enabled iPad with a mobile broadband data plan. The researcher device was an Apple iMac with a wired 100 Mbps Ethernet connection. The RAND equipment allows neuropsychological data to be acquired through collaborative videoconferencing. For the system we developed, this functionality is supported by Fuze, a commercially available software product. The Fuze client software is installed on both computing devices. The Fuze software connects both devices to a cloud-based server, which is able to host and record collaborative videoconferences. Neuropsychological test content can be stored and presented using the Fuze server and software, or the researcher device can be used to execute a computer-based neuropsychological assessment. In either case, the test content becomes accessible to the participant through a collaborative videoconference portal. RAND, remote acquisition of neuropsychological data.

RAND System Capabilities

Internet connectivity

The RAND system depends on Internet connectivity between the researcher and participant. To achieve this, the devices can be connected (1) through a wired Ethernet connection, (2) through a wireless network already available at the respective device location(s), or (3) through mobile broadband Internet access, either via a provider account linked to the respective device(s) or via an attached mobile hotspot.

Collaborative videoconferencing

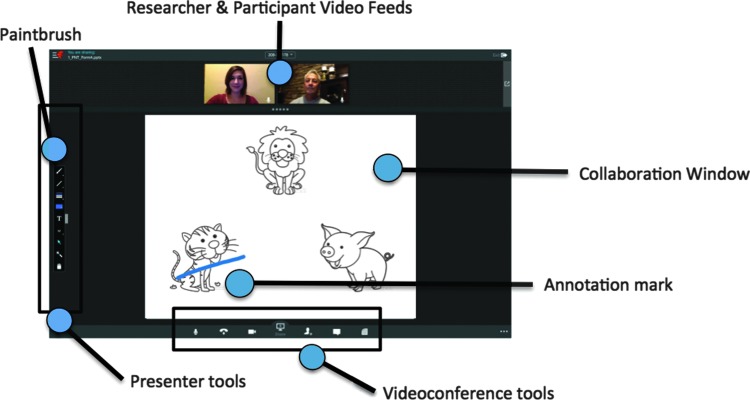

A researcher can initiate a collaborative videoconference by using Fuze to host a virtual meeting. The built-in cameras and microphones on the researcher and participant computing devices provide for a continuous audiovisual feed of the researcher and the participant, respectively, both of which can be displayed in real time on the researcher and participant device screens. In addition, both the researcher and the participant can view and interact with audiovisual content presented through a shared collaboration window using shared presentation tools (Fig. 2).

Fig. 2.

Representative RAND screen display. A typical display on the researcher computing device during a Fuze testing session. The small images at the top show the audiovisual feeds from the participant and researcher computing devices, a set of presenter tools is arranged along the left side of the screen, a set of videoconference tools is shown along the bottom of the screen, and the large collaboration window displays a test trial with an annotation mark made by the participant using an activated paintbrush tool.

Neuropsychological testing through shared content

Neuropsychological testing typically requires the delivery of test items. This can be accomplished within the RAND system by sharing a whiteboard or a presentation that has been uploaded onto the Fuze server (Fig. 3). Alternatively, the collaboration window can be used for shared computing. In this case, the desktop of the researcher device or a software application is shared with the participant device (Fig. 3). Computer-based testing can then be performed either by using a browser to navigate to an online testing Web site or by executing testing software housed on the researcher computing device.

Fig. 3.

Overview of RAND videoconference toolbar in sharing mode. The videoconference toolbar, which is enlarged in this figure, is located at the bottom of the RAND screen display. Before or during a RAND testing session, the researcher can select a sharing tool on the videoconference toolbar. This activates a sharing mode in which a whiteboard or presentation file can be selected for sharing, or the researcher's desktop or an active software application can be selected for shared computing.

Videoconference quality

Fuze is robust under a wide range of Internet connection conditions. The software uses wideband Voice over Internet Protocol (VoIP) audio to provide twice the quality of a standard phone call, together with a low-latency global architecture to reduce VoIP chop (choppy, broken-up audio). It also automatically and adaptively adjusts audiovisual delivery based on location and available bandwidth.

Session archives

Fuze can be used to record the audiovisual feeds from the researcher and participant computing devices, as well as any annotations made to the presentation content. The recorded content is password protected and stored using a cloud-based server.

Security

The researcher has control over meeting access, including the ability to grant access on a per user basis. Importantly, Fuze maintains a high level of infrastructure security. Customer passwords are not stored in the Fuze database, all traffic between sites is on private lines, all traffic to and from Fuze over the Internet is encrypted using the standards required by government agencies and the healthcare industry, and data are stored using a secure storage provider.

Neuropsychological Test Battery

A neuropsychological test battery was developed to probe the usability of our RAND system. Since a large percentage of stroke survivors have a communication disorder,44–46 we selected speech-language tasks that draw upon a wide range of the RAND system capabilities.

For the first five tests in the battery, test materials are embedded in a PowerPoint file. The first test is a short form of the Philadelphia Naming Test,47 which is freely available in digital form.48 For each trial, a line drawing appears and the participants attempt to name aloud the depicted object. Next is a custom-created short form of the Pyramids and Palm Trees Test49 and then a short form of the Camel and Cactus Test.50,51 Permission to adapt and digitally administer the former was obtained from the publisher, Pearson Clinical Assessment (UK), while the latter is freely available in digital form.52 For each trial, a probe stimulus appears on the screen along with a set of choice stimuli. The participants manually mark the choice stimulus most meaningfully related to the probe stimulus. Next, a word naming and then a nonword naming test are administered. For each trial, participants attempt to read aloud the printed stimulus. The items for these tests were taken from previous studies.53,54

The battery concludes with the Peabody Picture Vocabulary Test.55 It is administered through shared computing, using an online form of the test on Pearson's Q-global interface.56 For each trial, a set of images is displayed on the browser screen along with a spoken word. Participants select the image that best represents the spoken word. A license to administer this task was obtained from Pearson Clinical, Q-global (U.S.).

Usability Evaluation

We conducted two usability studies. For an in-laboratory usability study, the RAND system was used to administer our speech-language battery to eight participants with a history of stroke. The testing for this initial study was performed in a research facility. A subsequent at-home usability study involved the deployment of the RAND system in the homes of eight additional participants with a history of stroke.

Participants were recruited from a registry of stroke survivors interested in research (the Western Pennsylvania Patient Registry). Eligibility criteria for both usability studies included premorbid right-handedness, left unilateral brain damage from a single stroke event, being a native English speaker with no history of a learning disability or developmental neurological disorder, and the ability to provide informed consent and understand simple spoken sentences. Participants provided informed consent and received monetary compensation ($50). Participant details are provided in Table 1.

Table 1.

Participant Demographics, Neurologic Status, and Internet Connectivity

| PARTICIPANT | AGE | SEX | ED | TECH USE | APHASIA STATUS | MOTOR LOSS | VISION LOSS | HEARING LOSS | CONNECTION METHOD | CONNECTION QUALITY |
|---|---|---|---|---|---|---|---|---|---|---|
| IL-1 | 59 | M | 15 | 5.5 | 0 | 1 | 1 | 0 | Wi-Fi | Fair |
| IL-2 | 59 | M | 14 | 6 | 3 | 3 | 0 | 0 | Data plan | Poor |
| IL-3 | 78 | M | 12 | 2 | 0 | 3 | 1 | 0 | Data plan | Good |
| IL-4 | 68 | F | 15 | 3 | 2 | 3* | 1 | 1 | Data plan | Good |
| IL-5 | 69 | F | 12 | 6 | 3 | 3 | 2 | 0 | Data plan | Poor |
| IL-6 | 52 | M | 18 | 8 | 0 | 2 | 0 | 0 | Wi-Fi | Fair |
| IL-7 | 61 | F | 12 | 3 | 0 | 3 | 1 | 0 | Data plan | Fair |
| IL-8 | 50 | M | 12 | 6 | 1 | 3* | 0 | 1 | Wi-Fi | Good |
| AH-1 | 67 | M | 12 | 3 | 0 | 2* | 0 | 0 | Hotspot | Good |
| AH-2 | 60 | F | 18 | 7.5 | 1 | 2* | 0 | 0 | Hotspot | Good |
| AH-3 | 78 | M | 20 | 9 | 2 | 3 | 0 | 0 | Hotspot | Good |
| AH-4 | 74 | M | 16 | 5 | 0 | 1 | 0 | 2 | Data plan | Fair |
| AH-5 | 51 | F | 14 | 5 | 0 | 3 | 0 | 0 | Data plan | Good |
| AH-6 | 58 | F | 13 | 5.5 | 1 | 2* | 0 | 2 | Wi-Fi | Good |
| AH-7 | 52 | M | 14 | 8 | 1 | 0 | 1 | 1 | Data plan | Fair |
| AH-8 | 79 | F | 16 | 2 | 0 | 3 | 1 | 0 | Hotspot | Good |

Note: Age, sex (M = male, F = female), and years of education (Ed) for the in-laboratory (IL-1 to IL-8) and at-home (AH-1 to AH-8) participants. Technology use (Tech Use) is the cumulative score on a technology use survey; scores can range from 0 to 9 depending upon an individual's history of using various communication and computing technologies and Internet-based software (e.g., Skype, FaceTime). Individuals with scores of 2–3 typically have little to no experience with mobile computing technology and Internet software. Aphasia Status was determined through observation by a trained speech-language pathologist (0 = none, 1 = mild, 2 = moderate, 3 = severe). Motor Loss was based upon finger tapping performance (0 = none, 49+ taps; 1 = mild, 45–48 taps; 2 = moderate, 37–44 taps; 3 = severe, 0–36 taps; * indicates poststroke use of the previously nondominant hand). Vision Loss was determined using an Amsler grid (0 = none; 1 = mild, with peripheral loss or distortion in one or both eyes; 2 = moderate, with central blurring, peripheral loss in both eyes, and brightness sensitivity). Hearing Loss was determined using an audiometer (0 = none; 1 = slight, with loss of 16–25 dB in one or both ears; 2 = mild, with loss of 26–40 dB in one or both ears). Connection Method describes the Internet connection method used to deliver the assessment battery. For the at-home study, the researcher in most cases first tried to establish a mobile broadband connection using the provider data plan on the iPad, switching to mobile hotspot technology as necessary. For one individual with no cellular service, a home wireless network was used. Connection Quality, in the final column, describes the connection during delivery of the assessment battery (approximate criteria: Good = packet loss < 5% and/or jitter < 60 ms; Fair = packet loss of 5–10% and/or jitter of 60–90 ms; Poor = packet loss > 10% and jitter > 90 ms).
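The Motor Loss and Connection Quality codes in Table 1 are threshold rules, so they can be expressed as small functions. The Python sketch below is illustrative only and is not the scoring code used in the study; the function names are hypothetical. Motor Loss follows the tap-count bands in the note. For Connection Quality, the published bands are approximate and mix "and/or" with "and", so the sketch assumes that packet loss and jitter are banded separately and that the worse of the two bands is reported.

```python
"""Hypothetical helpers encoding two of the Table 1 rating rules."""

QUALITY_LABELS = ("Good", "Fair", "Poor")


def motor_loss_grade(taps: int) -> int:
    """Map a finger-tapping count to the Table 1 Motor Loss grade (0-3)."""
    if taps < 0:
        raise ValueError("tap count cannot be negative")
    if taps >= 49:
        return 0  # none: 49+ taps
    if taps >= 45:
        return 1  # mild: 45-48 taps
    if taps >= 37:
        return 2  # moderate: 37-44 taps
    return 3  # severe: 0-36 taps


def _band(value: float, good_below: float, fair_up_to: float) -> int:
    """Return 0 (Good), 1 (Fair), or 2 (Poor) for a single metric."""
    if value < good_below:
        return 0
    if value <= fair_up_to:
        return 1
    return 2


def connection_quality(packet_loss_pct: float, jitter_ms: float) -> str:
    """Approximate Table 1 Connection Quality label.

    Assumption: packet loss and jitter are banded separately and the
    worse band wins, because the published criteria are only approximate.
    """
    loss_band = _band(packet_loss_pct, good_below=5.0, fair_up_to=10.0)
    jitter_band = _band(jitter_ms, good_below=60.0, fair_up_to=90.0)
    return QUALITY_LABELS[max(loss_band, jitter_band)]


if __name__ == "__main__":
    print(motor_loss_grade(46))            # 1 (mild)
    print(connection_quality(3.0, 75.0))   # Fair: jitter is in the 60-90 ms band
```

Taking the worse of the two metrics is a conservative reading; a session with low packet loss but high jitter is labeled Poor rather than Good.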

Our neuropsychological battery, a technology use survey, and a usability survey were administered to all participants. The technology use survey focused on prior experience with wireless and cellular phones, computing devices (desktops, laptops, smartphones, tablet computers), and Internet-enabled virtual communication (e.g., use of Skype and FaceTime). The usability survey, adapted from Parmanto et al.,34 focused on the simplicity and learnability of the RAND system, the quality of audiovisual interactions with the researcher, the ease of producing motor responses, comparison with face-to-face interactions, satisfaction with the testing, and likelihood of future use. The responses to each item were provided using a seven-point Likert scale where 1 signified that the participant disagreed with the statement and 7 signified agreement.

Results

Feasibility of the RAND System

The in-laboratory usability study of the RAND system was conducted within a research facility using a protocol that simulated a home-based testing scenario. To begin, the researcher escorted participants to a quiet conference room equipped with an appropriately configured iPad, a telephone, and a printed instruction manual. The researcher then went to her office. For the at-home usability study, the researcher mailed the participant an iPad, a mobile hotspot device (if necessary), the instruction manual, and the usability questionnaire.

The researcher initiated each RAND session by calling the conference room or the participant's home at a prearranged time. She then talked the participant through turning on the iPad, launching Fuze, and signing on to a meeting. The instruction manual complemented the spoken instructions. The researcher then used the RAND system to administer the neuropsychological test protocol. She first shared the PowerPoint file for the Philadelphia Naming Test, which caused the video feed windows to shrink and a collaboration window with the slide content to appear (Fig. 2). The researcher used presentation control buttons to advance the slide show when appropriate. The subsequent two tests (the Pyramids and Palm Trees Test and the Camel and Cactus Test) also involved a shared PowerPoint presentation, but with manual rather than spoken responses. To enable manual responding, the researcher explained how to activate a paint tool (Fig. 2), so that whenever and wherever the participant touched the collaboration window on the iPad screen, it left an annotation mark. The Word and Nonword Naming Tests followed and involved shared PowerPoint content and spoken responses. During the last test, the researcher shared the Q-global Web site for the Peabody Picture Vocabulary Test.56 In this case, the collaboration window displayed the Web site content. With some RAND system configurations, such as those using Windows software, the Q-global system would automatically register the touch responses of the participant. For the system we used, this was not possible. Instead, the participants used the paint tool to annotate a selected drawing, and the researcher entered the corresponding response using her keyboard.
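The session protocol above pairs each test with a content delivery method and a response mode. A minimal Python sketch of that pairing, which could serve as a checklist when preparing a session, might look as follows. The class, enum, and function names are hypothetical, and nothing here interacts with Fuze or Q-global.

```python
from dataclasses import dataclass
from enum import Enum


class Delivery(Enum):
    SHARED_PRESENTATION = "shared PowerPoint file"
    SHARED_COMPUTING = "shared researcher browser (Q-global)"


class Response(Enum):
    SPOKEN = "spoken"
    PAINT_TOOL = "paint-tool annotation"


@dataclass(frozen=True)
class BatteryStep:
    name: str
    delivery: Delivery
    response: Response


# Administration order as described in the text.
BATTERY = (
    BatteryStep("Philadelphia Naming Test", Delivery.SHARED_PRESENTATION, Response.SPOKEN),
    BatteryStep("Pyramids and Palm Trees Test", Delivery.SHARED_PRESENTATION, Response.PAINT_TOOL),
    BatteryStep("Camel and Cactus Test", Delivery.SHARED_PRESENTATION, Response.PAINT_TOOL),
    BatteryStep("Word Naming Test", Delivery.SHARED_PRESENTATION, Response.SPOKEN),
    BatteryStep("Nonword Naming Test", Delivery.SHARED_PRESENTATION, Response.SPOKEN),
    # The participant marks a picture; the researcher keys the choice into Q-global.
    BatteryStep("Peabody Picture Vocabulary Test", Delivery.SHARED_COMPUTING, Response.PAINT_TOOL),
)


def paint_tool_tests(battery):
    """Names of tests for which the participant must activate the paint tool."""
    return [step.name for step in battery if step.response is Response.PAINT_TOOL]


if __name__ == "__main__":
    for number, step in enumerate(BATTERY, start=1):
        print(f"{number}. {step.name}: {step.delivery.value}, {step.response.value} response")
```

Listing the paint-tool tests, for example, tells the researcher at which points in the session the participant needs instructions for activating the annotation tool.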

Evaluation of RAND System Usability

Our RAND system was used to administer the neuropsychological test battery to eight participants in an in-laboratory usability study and eight participants in an at-home usability study. All 16 participants successfully used the RAND technology to initiate the collaborative videoconference and participate in neuropsychological testing. These RAND sessions occurred without prior face-to-face instruction in the RAND technology, which is notable because many of the participants had limited experience with computer technology, mobile devices, and virtual videoconferencing (Table 1). In addition, even participants with a diagnosed communication disorder, a hearing or vision impairment, or poor fine motor control (Table 1) were able to comprehend and follow the virtually delivered instructions for operating the RAND technology and to perceive and respond to neuropsychological test items. Overall, these results indicate that a diverse range of participants can use the RAND system without special training or expertise.

The RAND system requires both the researcher and participant devices to be connected to the Internet. For the researcher device, a wired Ethernet connection was used for both the in-laboratory and at-home studies. A variety of connection methods were explored for the participant device. For the in-laboratory study, a connection was achieved using either a local wireless network or a mobile broadband data plan on the iPad. With both methods, there were instances in which bandwidth limitations caused mild, moderate, or severe disruptions in videoconference quality (Table 1). These problems did not prevent the researcher from acquiring neuropsychological data from any of the participants, although in three cases the researcher returned to the conference room to troubleshoot the Fuze software. The connection issues experienced in the in-laboratory study led us to add mobile hotspot technology as a connection option for the at-home study (Table 1). Furthermore, continued development of the Fuze software improved its alert system for videoconference quality. This allowed the researcher to determine whether the bandwidth was sufficient to initiate a high-quality videoconference (a minimum of 4 Mbps download and 1 Mbps upload capacity) and to switch to an alternative connection method if necessary. These changes improved Internet connectivity for the at-home study, with good to fair videoconference quality achieved throughout testing for all participants (Table 1).
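The bandwidth rule and the fallback between connection methods can be sketched in Python as follows. This is an illustration under stated assumptions, not part of the RAND system: the study relied on Fuze's built-in quality alert, whereas this sketch assumes that download and upload speeds have already been measured by some other means (for example, a speed test). The preference order of data plan first and then mobile hotspot follows the at-home procedure described for Table 1; placing home Wi-Fi last, and all function names, are assumptions.

```python
from typing import Mapping, Optional, Sequence, Tuple

# Minimum capacity stated in the text for a high-quality videoconference.
MIN_DOWNLOAD_MBPS = 4.0
MIN_UPLOAD_MBPS = 1.0

# Assumed order: provider data plan, then mobile hotspot, then home Wi-Fi.
DEFAULT_PREFERENCE = ("Data plan", "Hotspot", "Wi-Fi")


def is_sufficient(download_mbps: float, upload_mbps: float) -> bool:
    """True if both directions meet the stated minimum capacity."""
    return download_mbps >= MIN_DOWNLOAD_MBPS and upload_mbps >= MIN_UPLOAD_MBPS


def choose_connection(
    measured: Mapping[str, Tuple[float, float]],
    preference: Sequence[str] = DEFAULT_PREFERENCE,
) -> Optional[str]:
    """Return the first available method whose measured (download, upload)
    speeds in Mbps are sufficient, or None if no method qualifies."""
    for method in preference:
        speeds = measured.get(method)
        if speeds is not None and is_sufficient(*speeds):
            return method
    return None


if __name__ == "__main__":
    # Hypothetical measurements: the data plan is too slow, the hotspot is not.
    print(choose_connection({"Data plan": (2.5, 0.8), "Hotspot": (6.0, 1.5)}))  # Hotspot
```

Returning None rather than a weak connection mirrors the idea of checking capacity before initiating the videoconference, leaving the researcher to decide how to proceed.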

Five of the six neuropsychological tests were administered successfully to all 16 participants. The exception was the Peabody Picture Vocabulary Test, which requires Web-based access to the Q-global online administration system.56 At present, Q-global targets its development efforts at Windows operating systems and Windows-compatible Internet browsers and Java software. This created recurrent and unpredictable compatibility issues between the Q-global system and the Macintosh-compatible browser and Java software installed on the RAND system.

As another component of the usability evaluation, participants provided feedback through a survey form. The survey evaluated the ease of use and learnability of the RAND system. The in-laboratory and at-home groups gave it average overall scores of 6.13 and 6.88 (out of 7), respectively. Detailed results for the at-home participants are listed in Table 2. These survey results indicate that participants were very satisfied with the RAND system and their remote assessment experience.

Table 2.

Average User Ratings of RAND System and Testing Experience

| QUESTION | AVERAGE RATING (SD) |
|---|---|
| 1. It was simple to use the iPad. | 6.5 (1.1) |
| 2. It was simple to learn to use the iPad. | 6.6 (0.5) |
| 3. I liked using the iPad. | 6.9 (0.4) |
| 4. It was simple to use the testing software. | 6.8 (0.5) |
| 5. I liked using the software. | 6.8 (0.5) |
| 6. The iPad made it difficult to hear the researcher. | 3.0 (2.6) |
| 7. The iPad made it difficult to see the researcher. | 1.3 (0.5) |
| 8. The iPad made it difficult to hear the spoken items. | 1.0 (0.0) |
| 9. The iPad made it difficult to see the visual items. | 1.1 (0.4) |
| 10. The iPad made it difficult to verbally respond to items. | 1.0 (0.0) |
| 11. The iPad made it difficult to respond to items using my finger. | 1.6 (1.4) |
| 12. The iPad made it difficult to express myself. | 1.1 (0.3) |
| 13. The iPad made it difficult for me to perform my best. | 1.8 (2.1) |
| 14. Using the iPad was as good as having the researcher in the room with me. | 5.6 (1.5) |
| 15. The iPad is an acceptable way to participate in testing. | 6.2 (1.2) |
| 16. If there was a problem, the researcher was able to walk me through the solution. | 7.0 (0.0) |
| 17. I would participate in a testing session with this iPad again. | 7.0 (0.0) |
| 18. Overall, I was satisfied with the testing with the iPad. | 6.9 (0.4) |

RAND, remote acquisition of neuropsychological data.

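Note: Items 6–13 are negatively phrased, so low ratings on these items indicate satisfaction. When these items were combined with the positively phrased items, they were reverse scored (i.e., subtracted from 8), since the phrasing of these questions indicates a rating of dissatisfaction rather than satisfaction.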
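Reverse scoring maps a rating r on the 1–7 scale to 8 - r, so that higher values indicate greater satisfaction for every item. The Python sketch below applies that rule to items 6–13 and averages the scored items. Averaging all 18 items into a single overall score is an assumption made for illustration; the text does not specify exactly how the overall scores of 6.13 and 6.88 were computed, and the function names are hypothetical.

```python
from typing import Mapping

SCALE_MAX = 7                              # seven-point Likert scale (1-7)
REVERSED_ITEMS = frozenset(range(6, 14))   # items 6-13 are negatively phrased


def score_item(item: int, rating: int) -> int:
    """Return the satisfaction-oriented score for one survey item."""
    if not 1 <= rating <= SCALE_MAX:
        raise ValueError(f"rating {rating} is outside 1-{SCALE_MAX}")
    # Reverse scoring: subtract the rating from 8 (SCALE_MAX + 1).
    return SCALE_MAX + 1 - rating if item in REVERSED_ITEMS else rating


def overall_score(ratings: Mapping[int, int]) -> float:
    """Mean of all scored items (an assumed definition of the overall score)."""
    if not ratings:
        raise ValueError("no ratings supplied")
    scores = [score_item(item, rating) for item, rating in ratings.items()]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Hypothetical participant: agrees fully with positive items (7) and
    # disagrees fully with negative items (1), the most favorable pattern.
    ideal = {item: 1 if item in REVERSED_ITEMS else 7 for item in range(1, 19)}
    print(overall_score(ideal))  # 7.0
```

Keeping the raw ratings in the table and reversing only at aggregation time lets the negatively phrased items be read as written while still contributing correctly to a combined score.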

Discussion

We developed a system for the RAND, using off-the-shelf technology and commercial software. The system we developed can be duplicated and deployed with little technical expertise, for a modest cost. The capabilities of our RAND system were broadly sampled and included home-based neuropsychological testing of eight individuals by a geographically distant investigator. Across the set of tests, auditory and visual stimuli were successfully presented, and verbal and manual responses were successfully acquired. Secure session archives were recorded and stored on a cloud-based server for later access. The protocol administration proceeded smoothly for most tests. These results indicate that our RAND system is suitable for the virtual acquisition of neuropsychological data without prior face-to-face interaction with a researcher.

The participants in the usability evaluation were individuals with a history of stroke who varied in their self-reported technology use. They included individuals with a diagnosed communication disorder, vision or hearing impairment, loss of fine motor control, and/or limited history of technology use. Despite the potential for these challenges to cause participant-related testing failures, none was observed in the current study.

Furthermore, participants were satisfied with the RAND system. For both the in-laboratory and at-home usability studies, the mean scores were substantially higher than the scale midpoint for positively phrased items and substantially lower for negatively phrased items. The primary goal of our RAND development effort was to produce a flexible system for remote neuropsychological assessment in home-based settings without preexisting computer hardware and Internet connectivity. The high rate of testing success and the high usability scores suggest that this goal has been met.

However, it is important to recognize that the results address the feasibility but not the generalizability and reliability of RAND-delivered assessment. This is because the study sample was small and contained few individuals with low levels of education or severe physical limitations. A larger evaluation study, involving a more diverse range of participants, is currently underway. This study will extend the current work by comparing test results obtained through the RAND system with those obtained through face-to-face testing, to establish the validity of RAND-delivered assessments. It is possible that this larger study will reveal instances of testing failure, in which the characteristics of a participant make use of a RAND system a poor alternative to face-to-face testing.

While the overall pattern of results is positive, evaluation of our RAND system did reveal some technological limitations. Most notably, administration of an online computer-based test failed for the majority of test sessions. The problems did not arise from the core system technology, but rather from software incompatibilities between the test administration Web site and the Internet browser and Java software installed on the researcher computing device. To avoid or minimize such problems, careful choice of online computer-based test platforms and of the RAND software is warranted. Alternatively, computer-based assessment software could be installed on the researcher computing device, with test administration using application sharing. In sum, the results indicate that compatibility issues may compromise the utility of the RAND system for computer-based assessment, but that these issues can be overcome and are not inherent limitations of a RAND system.

Finally, it should be noted that there is considerable flexibility in potential RAND system architectures, due to the interoperability of the Fuze software and other commercially available collaborative videoconferencing software. We chose a tablet computing device for the participant (an iPad) because of its ease of use, relatively low cost, and portability. We chose the Fuze software because, at the time of study initiation, we judged it to stand above other products due to its easy-to-operate and intuitive interface, attention to security and privacy issues, and the broad range of collaborative videoconference functions it supports. Peripheral devices, such as high-definition Webcams and audio headsets, could be used to further enhance audiovisual quality. The particular needs of each research study, in combination with an evaluation of available technology and software, should be considered in designing the most suitable RAND system architecture for a given study. We caution, however, that evaluation results obtained using our specific RAND system configuration might not apply to RAND systems with different technology and collaborative videoconferencing software components.

In conclusion, our feasibility results indicate that a RAND system can provide a flexible and robust solution to the problem of obtaining neuropsychological assessment data remotely. This solution can decrease the burdens associated with participation in clinical and basic research on stroke-related disorders, and it can remove geographical barriers to neuropsychological assessment for both research and clinical purposes. Removing these impediments will help facilitate progress in stroke research and treatment.

Acknowledgments

We gratefully acknowledge the support of the American Heart Association (AHA-RMT31010862) and the National Institute on Deafness and Other Communication Disorders (NIH R21DC013568A).

Disclosure Statement

No competing financial interests exist.

References

1. Mendis S. Stroke disability and rehabilitation of stroke: World Health Organization perspective. Int J Stroke 2013;8:3–4.

2. US Burden of Disease Collaborators. The state of US health, 1990–2010: Burden of diseases, injuries, and risk factors. J Am Med Assoc 2013;310:591–608.

3. Schwartz MF, Kimberg DY, Walker GM, Faseyitan OK, Brecher A, Dell GS, Coslett HB. Anterior temporal lobe involvement in semantic word retrieval: Voxel-based lesion-symptom mapping evidence from aphasia. Brain 2009;132:3411–3427.

4. Rorden C, Karnath HO. Opinion: Using human brain lesions to infer function: A relic from a past era in the fMRI age? Nat Rev Neurosci 2004;5:812–819.

5. Chatterjee A. A madness to the methods in cognitive neuroscience? J Cogn Neurosci 2005;17:847–849.

6. Schwartz M, Brecher A, Whyte J, Klein M. A patient registry for cognitive rehabilitation research: A strategy for balancing patients' privacy rights with researchers' need for access. Arch Phys Med Rehabil 2005;86:1807–1814.

7. Fellows LK, Heberlein AS, Morales DA, Shivde G, Waller S, Wu DH. Method matters: An empirical study of impact in cognitive neuroscience. J Cogn Neurosci 2005;17:850–858.

8. Wolf MS, Bennett CL. Local perspective on the impact of the HIPAA privacy rule on research. Cancer 2006;106:474–479.

9. Kable JW. The cognitive neuroscience toolkit for the neuroeconomist: A functional overview. J Neurosci Psychol Econ 2011;4:63–84.

10. Fiez JA. Bridging the gap between neuroimaging and neuropsychology: Using working memory as a case-study. J Clin Exp Neuropsychol 2001;23:19–31.

11. Harvey PD. Clinical applications of neuropsychological assessment. Dialogues Clin Neurosci 2012;14:91–99.

12. Barrett AM. Rose-colored answers: Neuropsychological deficits and patient-reported outcomes after stroke. Behav Neurol 2009;22:17–23.

13. Cumming TB, Marshall RS, Lazar RM. Stroke, cognitive deficits, and rehabilitation. Int J Stroke 2013;8:38–45.

14. Wagle J, Farmer L, Flekkoy K, Bruun WT, Sandvik L, Fure B, Stensrod B, Engedal K. Early post-stroke cognition in stroke rehabilitation patients predicts functional outcome at 13 months. Dement Geriatr Cogn Disord 2011;31:379–387.

15. Suzuki M, Sugimura Y, Yamada S, Omori Y, Miyamoto, Yamamoto J. Predicting recovery of cognitive function soon after stroke: Differential modeling of logarithmic and linear regression. PLoS One 2013;8:e53488.

16. Levine SR, Gorman M. "Telestroke": The application of telemedicine for stroke. Stroke 1999;30:464–469.

17. Rubin MN, Wellik KE, Channer DD, Demaerschalk BM. Systematic review of telestroke for post-stroke care and rehabilitation. Curr Atheroscler Rep 2013;15:343.

18. Grosch MC, Gottlieb MC, Cullum CM. Initial practice recommendations for teleneuropsychology. Clin Neuropsychol 2011;25:1119–1133.

19. Bauer RM, Iverson GL, Cernich AN, Binder LM, Ruff RM, Naugle RI. Computerized neuropsychological assessment devices: Joint position paper of the American Academy of Clinical Neuropsychology and the National Academy of Neuropsychology. Arch Clin Neuropsychol 2012;27:362–373.

20. Brennan DM, Mawson S, Brownsell S. Telerehabilitation: Enabling the remote delivery of healthcare, rehabilitation, and self-management. Stud Health Technol Inform 2009;145:231–248.

21. Schatz P, Browndyke J. Applications of computer-based neuropsychological assessment. J Head Trauma Rehabil 2002;17:395–410.

22. Uysal S, Mazzeffi M, Lin H-M, Fischer GW, Griepp RB, Adams DH, Reich DL. Internet-based assessment of postoperative neurocognitive function in cardiac and thoracic aortic surgery patients. J Thorac Cardiovasc Surg 2011;141:777–781.

23. Winters JM. Telerehabilitation research: Emerging opportunities. Annu Rev Biomed Eng 2002;4:287–320.

24. Hailey D, Roine R, Ohinmaa A, Dennett L. Evidence of benefit from telerehabilitation in routine care: A systematic review. J Telemed Telecare 2011;17:281–287.

25. Rogante M, Grigioni M, Cordella D, Giacomozzi C. Ten years of telerehabilitation: A literature overview of technologies and clinical applications. NeuroRehabilitation 2010;27:287–304.

26. McCue M, Cullum CM. Telerehabilitation and teleneuropsychology: Emerging practices. In: Noggle C, Dean R, Barisa MT, eds. Neuropsychological rehabilitation. New York: Springer, 2013.

27. Mashima PA, Doarn CR. Overview of telehealth activities in speech-language pathology. Telemed J E Health 2008;14:1101–1117.

28. Caltagirone C, Zannino GD. Telecommunications technology in cognitive rehabilitation. Funct Neurol 2008;23:195–199.

29. Lewis C, Packman A, Onslow M, Simpson JM, Jones M. A phase II trial of telehealth delivery of the Lidcombe Program of Early Stuttering. Am J Speech Lang Pathol 2008;17:139–149.

30. O'Brian S, Packman A, Onslow M. Telehealth delivery of the Camperdown program for adults who stutter: A phase I trial. J Speech Lang Hear Res 2008;51:184–195.

31. Carey B, O'Brian S, Onslow M, Block S, Jones M, Packman A. Randomized controlled non-inferiority trial of a telehealth treatment for chronic stuttering: The Camperdown Program. Int J Lang Commun Disord 2010;45:108–120.

32. Brennan D, Georgeadis A, Baron C. Telerehabilitation tools for the provision of remote speech-language treatment. Top Stroke Rehabil 2002;8:71–78.

33. Theodoros D, Hill A, Russell T, Ward E, Wootton R. Assessing acquired language disorders in adults via the internet. Telemed J E Health 2008;14:552–559.

34. Parmanto B, Pulantara IW, Schutte JL, Saptono A, McCue MP. An integrated telehealth system for remote administration of an adult autism assessment. Telemed J E Health 2012;19:88–94.

35. Schlegel RE, Gilliland K. Development and quality assurance of computer-based assessment batteries. Arch Clin Neuropsychol 2007;22S:S49–S61.

36. Wild K, Howieson D, Webbe F, Seelye A, Kaye J. Status of computerized cognitive testing in aging: A systematic review. Alzheimers Dement 2008;4:428–437.

37. Bergquist T, Gehl C, Lepore S, Holzworth N, Beaulieu W. Internet-based cognitive rehabilitation in individuals with acquired brain injury: A pilot feasibility study. Brain Inj 2008;22:891–897.

38. Becker JT, Dew MA, Aizenstein HA, Lopez OL, Morrow L, Saxton J, Tarraga L. A pilot study of the effects of internet-based cognitive stimulation on neuropsychological function in HIV disease. Disabil Rehabil 2012;34:1848–1852.

39. Linder SM, Buchanan S, Sahu K, Rosenfeldt AB, Clark C, Wolf SL, Alberts JL. Incorporating robotic-assisted telerehabilitation in a home program to improve arm function following stroke. J Neurol Phys Ther 2013;37:125–132.

40. Huijgen BC, et al. Feasibility of a home-based telerehabilitation system compared to usual care: Arm/hand function in patients with stroke, traumatic brain injury and multiple sclerosis. J Telemed Telecare 2008;14:249–256.

41. Durfee WK, Weinstein SA, Bhatt E, Nagpal A, Carey JR. Design and usability of a home telerehabilitation system to train hand recovery following stroke. J Med Device 2009;3:041003-1–041003-8.

42. Letz R. Continuing challenges for computer-based neuropsychological tests. Neurotoxicology 2003;24:479–489.

43. Fuzebox. Fuze. 2015. Available at www.fuze.com (last accessed July 28, 2015).

44. Kauhanen ML, Korpelainen JT, Hiltunen P, Maatta R, Mononen H, Brusin E, Sotaniemi KA, Myllyla VV. Aphasia, depression, and non-verbal cognitive impairment in ischaemic stroke. Cerebrovasc Dis 2000;10:455–461.

45. Pedersen PM, Jorgensen HS, Nakayama H, Raaschou HO, Olsen TS. Aphasia in acute stroke: Incidence, determinants, and recovery. Ann Neurol 1995;38:659–666.

46. Engelter ST, Gostynski M, Papa S, Frei M, Born C, Ajdacic-Gross V, Gutzwiller F, Lyrer PA. Epidemiology of aphasia attributable to first ischemic stroke: Incidence, severity, fluency, etiology, and thrombolysis. Stroke 2006;37:1379–1384.

47. Roach A, Schwartz MF, Martin N, Grewal RS, Brecher A. The Philadelphia Naming Test: Scoring and rationale. Clin Aphasiol 1996;24:121–133.

48. Moss Rehabilitation Research Institute. Philadelphia Naming Test (PNT)-175 items. 2015. Available at www.mrri.org/philadelphia-naming-test (last accessed June 1, 2014).

49. Howard D, Patterson K. Pyramids and palm trees: A test of semantic access from pictures and words. Bury St. Edmunds, UK: Thames Valley Test Company, 1992.

50. Adlam AL, Patterson K, Bozeat S, Hodges JR. The Cambridge Semantic Memory Test Battery: Detection of semantic deficits in semantic dementia and Alzheimer's disease. Neurocase 2010;16:193–207.

51. Bozeat S, Lambon Ralph MA, Patterson K, Garrard P, Hodges JR. Non-verbal semantic impairment in semantic dementia. Cogn Neuropsychol 2000;11:617–660.

52. Neuroscience Research Australia. Test downloads. 2015. Available at www.neura.edu.au/frontier/research/test-downloads/ (last accessed June 1, 2014).

53. Fiez JA, Tranel D, Seager-Frerichs D, Damasio H. Specific reading and phonological processing deficits are associated with damage to the left frontal operculum. Cortex 2006;42:624–643.

54. Fiez JA, Balota DA, Raichle ME, Petersen SE. Effects of lexicality, frequency, and spelling-to-sound consistency on the functional anatomy of reading. Neuron 1999;24:205–218.

55. Dunn LM, Dunn DM. Peabody picture vocabulary test, fourth edition (PPVT-4). San Antonio, TX: Pearson, 2007.

56. Pearson Clinical Assessment. Q-global. 2015. Available at http://images.pearsonclinical.com/images/qglobal/ (last accessed July 28, 2015).