Abstract

This paper presents a new framework for visual-semantic 3D video search and retrieval. Existing 3D retrieval applications concentrate on shape analysis tasks such as object matching, classification and retrieval, and do not address video retrieval as such. In this context, we explore the 3D-CBVR (Content Based Video Retrieval) concept for the first time. For this purpose, we apply BOVW and MapReduce within a 3D framework. Instead of conventional shape-based local descriptors, we combine shape, color and texture for feature extraction: a combination of geometric and topological features for shape, and a 3D co-occurrence matrix for color and texture. After the local descriptors are extracted, the TB-PCT (Threshold Based Predictive Clustering Tree) algorithm is used to generate the visual codebook, and a histogram is produced. Matching is then performed using a soft weighting scheme with the L2 distance function. As a final step, the retrieved results are ranked according to the index value and returned to the user as feedback. To handle large amounts of data and retrieve efficiently, we incorporate HDFS into our design. Using a 3D video dataset, we evaluate the performance of the proposed system and show that it gives accurate results and reduces time complexity.

Keywords: Bag Of Visual Words, Predictive Clustering Tree, Hadoop Distributed File System, video retrieval, local descriptors

I Introduction

Content based video retrieval (CBVR) plays an important role in multimedia retrieval applications. In [1], the concept of STAR is used for efficient video retrieval. Most researchers use distance functions and clustering algorithms for retrieval applications, but these limit the amount of data that can be handled. Everyday applications such as Facebook and other social media involve large-scale sharing of multimedia files such as images, video and audio [9]. Coping efficiently with multimedia sharing at this scale is a challenging problem. To address it, a programming paradigm such as MapReduce is required. MapReduce is a parallel programming model that can process large-scale data and is widely known through Hadoop, an open source cloud computing implementation that reduces time complexity by handling very large amounts of data in parallel [6]. A series of operations is performed, each consisting of a Map stage and a Reduce stage, to process an enormous number of independent data items [12]. At the same time, there is growing interest in improving the efficiency of 3D retrieval applications [4]. Applications of 3D models include medical imaging, films, industrial design and gaming, but existing work is basically focused on object matching, classification and retrieval [2]. For this purpose, various strategies and methodologies are implemented in [4] [2] [5]. To perform these tasks, the visual features of images must be extracted effectively. Based on the extracted features, 3D model retrieval methods are classified into three major categories: graph-based, geometry-based and view-based.

For retrieval, we consider two different shape features, namely topology and geometry. Shape matching is explained in detail in [17]. Using frame conversion tools, the video is divided into a number of frames. Frames in a video are often largely redundant, so key frames must be identified within the collection of video frames. A number of algorithms are used for key frame selection [20]. To improve the accuracy and time complexity of 3D model retrieval applications, new mechanisms are needed.

One effective data mining technique is the BoF (Bag of Features) extraction procedure. The underlying idea is most commonly used in text classification, where it works by counting the number of occurrences of each word in a sentence. Unlike other approaches, it is conceptually simple, computationally cheap and gives good results for classification and retrieval applications. The algorithm treats an image as an orderless collection of local key points and generates a visual vocabulary by clustering them. Each key point cluster is then considered a visual word in the visual vocabulary. Features are extracted from these key points of the images. Here, the features are color, texture and shape.
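The word-counting idea behind BoF can be illustrated with a minimal Python sketch. The vocabulary and the sentence below are made up for illustration and are not taken from our dataset; in the visual case the tokens would be quantized key points rather than text words.

```python
from collections import Counter


def bag_of_words(tokens, vocabulary):
    """Count how often each vocabulary word occurs in the token list."""
    counts = Counter(tokens)
    # One histogram bin per vocabulary word, in vocabulary order.
    return [counts[word] for word in vocabulary]


# Illustrative (hypothetical) vocabulary and sentence.
vocabulary = ["shape", "color", "texture"]
sentence = "shape color shape texture shape".split()
histogram = bag_of_words(sentence, vocabulary)
```

The resulting histogram discards word order, which is what makes the representation an orderless "bag".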

However, 3D models focus mainly on shape, which includes topological features, geometric features or a combination of both. The combination of color and shape features for 3D models is explained in [21]. A feature vector maps the visual vocabulary to key points by counting the number of occurrences of each visual word in each image, just as words are counted in each sentence. For vocabulary construction, clustering algorithms [2], [3], [9], [10] and tree formation algorithms [15] can be used.

To carry out matching and classification, supervised learning approaches [27] [2] are used. The proposed MapReduce based BoF retrieval uses a soft weighting scheme [10] or pyramid matching methodologies for matching. In short, the idea is to implement BOVW (Bag of Visual Words) in a 3D model retrieval application to obtain good results [2] [4]. Search engines for 3D models are discussed in [7], which gives a detailed explanation of a web based search engine that supports queries based on 2D sketches, text keywords, 3D sketches and/or 3D models. To attain scalability and handle huge amounts of data efficiently, the combination of BoF with the MapReduce framework is introduced in [3] [9] [12] and [13]. Surveys of 3D model retrieval applications are presented in [25] and [26]. Various shape matching procedures such as model graph, global feature, global feature distribution, deformation, local feature, weighted point set, spatial map, volumetric error, skeleton, view model and Reeb graph are discussed in [25], and object based shape retrieval applications are presented in [26]. The latter classifies 3D object models into view based, statistics based, graph based and geometry based, and explains each in detail. The aim of this article is to develop a 3D CBVR (Content Based Video Retrieval) application using Bag of Visual Words (BoVW) in the MapReduce framework. The main contributions of our proposed framework are:

➢ A new approach that brings the 3D CBVR (Content Based Video Retrieval) application into the MapReduce framework using the Bag of Visual Words (BoVW) methodology.

➢ The initial step converts the video into frames, from which key frames are selected using the Temporally Maximum Occurrence Frame (TMOF). BOVW is applied to the selected frames, and local descriptors for shape, color and texture are extracted. For shape, a combined feature (topological and geometric) is used, and for color and texture a 3D co-occurrence matrix is used.

➢ Fork Strategy based Medial Surface Extraction is a novel method for extracting shape without affecting its geometry.

➢ TB-PCT (Threshold Based - Predictive Clustering Tree) is used to generate the visual vocabulary. For matching, a soft weighting scheme with the L2 distance function is adopted as a new approach.

➢ The construction of visual words sometimes introduces noise through repeated visual words. To overcome this, we introduce Numerical Semantic Analysis.

➢ Finally, the matched results are ranked and returned to the user.

All of the above is discussed in detail in the following sections. Section 2 reviews related work. Section 3 presents the proposed MapReduce framework for the 3D CBVR (Content Based Video Retrieval) application. Section 4 details the experimental results, including comparisons with prior approaches. Finally, Section 5 concludes the paper.

II Related Work

In past decades, several approaches have been proposed for efficient retrieval of video and images, but they provided less effective results. We have considered all of these for this research. [27] discusses video instance retrieval (in two dimensions), where the object of interest (OOI) is taken from the query image given as input. Query-adaptive multiple instance learning (q-MIL) is used to identify the video frames containing the OOI, addressing the problems of MIL (frame-level feature representation with window proposals, and the optimization problem of learning the OOI detector). In the q-MIL algorithm, the video frames should contain the OOI with a visually distinct appearance.

Several multimedia applications for 3D shape retrieval, classification and matching are discussed in [2]. All of these applications use shape as the basic feature. For matching, SPD matrices are used. A covariance matrix works better with combined features than with an individual feature. 3D shape retrieval methods are surveyed in [25], which considers feature based methods, graph based methods and other methods. Feature based shape matching is not well suited to indexing, because indexing is not straightforward when the dissimilarity measure does not satisfy the triangle inequality. Graph based methods have proven efficient for small-volume models.

In search engine based 3D model retrieval [7], inputs to the search engine are given in various formats such as 3D sketches, 2D sketches and 3D models. Shape is used as the feature descriptor. The newly created shape descriptor uses spherical harmonics to provide discriminating and robust results. This model requires improvement in terms of matching models, indexing algorithms, modeling tools and the query interface.

For 3D model retrieval applications, many state-of-the-art techniques are described in [4], [5] and [21]. Shape is used as the basic feature to produce efficient results for Bag-of-View-Words. [4] explains codebook generation using the k-means algorithm and performs matching using histograms. In [5], classification based 3D model retrieval is achieved by combining multiple SVM classifiers with D-S evidence theory, which is used to fuse the classifiers. For this task, the authors tried two kinds of shape descriptor, namely shape distribution and visual similarity. D-S evidence theory enhances the similarity between the 3D query model and its relevant 3D models in the database. However, this approach does not address how to establish reasonable measures for evaluating the classifiers. In [21], combined feature extraction is performed for 3D model retrieval: shape and color features are extracted based on the D2 shape descriptor and color similarity measurements, and a multi-feature similarity measure combines the color and shape weight values.

Other feature extraction procedures are discussed in [17], [18], [23], [28] and [29]. Geometric and topological features are combined in [17], which also discusses the reason for combining them, but it considers shape features only. The remaining papers [18], [23], [28] and [29] consider texture features and their combination with color features. For texture feature extraction, most researchers describe co-occurrence matrix and GLCM techniques, because texture is among the most important features in image processing applications. Some authors process 2-dimensional images and others 3-dimensional volumetric data, with 3D volumetric data providing more effective and efficient results. Combined color and texture descriptors process the color feature first and then the texture. Although this gives useful results, it does not provide good accuracy for several applications.

The authors of [22] discuss the codebook generation process in depth using clustering. Codebook generation maps low level features to a fixed-length vector in histogram space. The codebook models presented include the category specific clustered codebook, the globally clustered codebook and the semantic codebook. Clustering is used to build the visual codebook and to group similar values. The paper describes several clustering algorithms clearly, but the k-means algorithm is the one most often used; it groups similar values based on their distance to a centroid. Another clustering method is randomized forests clustering [16]. This algorithm is mainly used in image processing for classification. For visual codebook generation it gives good results, and it also supports vector quantization of local visual features. However, it is appropriate only for image recognition, object detection and segmentation.

The Kirsch descriptor based video retrieval process explained in [19] uses the k-nearest neighbor search algorithm for sequential matching. Key frames are used to ease retrieval on the TRECVID video database. Before that paper, key frame extraction methods had been proposed in [24], [20] and [30]. [24] describes a key frame extraction method for video segmentation. It applies a set of rules to extract key frames from shot types, and the key frame with the highest probability under those rules is preferred. [20] gives a detailed explanation of video segmentation, and [30] introduces a key frame extraction process based on a similarity measure. It requires calculating the rate, the distortion, their weighted sum and a conciseness cost to obtain the optimal key frames. This leads to a shortest path problem, whose solution determines the optimal key frame selection automatically. The major drawback of this kind of process is the time taken for graph construction and key frame selection. Image retrieval can also be done through feature propagation on image webs. Content based image retrieval based on Bag-of-Features is clearly explained in [13].

It provides adequate results but, as far as dissimilarity ranking is concerned, it does not ensure enough heterogeneity for large clusters. The survey paper [26] discusses several 3D CBOR methods. It focuses on retrieval methods classified as view based, graph based, geometry based, statistics based and a few general methods. The core problem with the view based approach is the high cost of the large storage space needed to acquire a sufficient number of views. The graph based approach faces a major challenge in the computational cost of constructing a consistent graph and computing its subgraphs. Geometry based approaches are computationally efficient only for global methods; for local methods they do not give good results. For smaller datasets, statistical methods do not provide results with high precision.

III Proposed System

In this section, we summarize our overall proposed work. Fig. 1 presents an overview of the proposed work.

Fig. 1.

Overview of Proposed System

We have divided our work into two modes, namely offline and online. The admin performs all work in offline mode (Of), while the user works in online mode (On). To handle a huge number of videos and make retrieval more efficient, we have included Hadoop MapReduce. The overall working procedure of MapReduce in the retrieval application is shown in Fig. 2. It works by dividing each process into Map and Reduce functions.

Fig. 2.

Architecture of MapReduce

As an initial step, videos uploaded by the admin are converted into frames via a frame conversion tool and are considered as the dataset. Next, a key frame selection process is used to make searching, browsing and video retrieval more efficient. Key frames represent the entire frame sequence [19]. From the entire frame sequence, we need to select particular key frames. For this purpose, we use TMOF (Temporally Maximum Occurrence Frame) [20]. BOVW (Bag of Visual Words) is then applied to the selected key frames to extract local descriptors such as shape, color and texture. By integrating geometric and topological features for shape with a 3D co-occurrence matrix for color and texture, we give a new direction to our proposed work. Our work differs from conventional systems, which are based only on shape.

Next, we construct the visual vocabulary using BOVW. TB-PCT (Threshold Based - Predictive Clustering Tree) is applied to the resulting descriptors to generate the visual codebook. The number of occurrences of each visual word from the vocabulary is then counted, and a histogram is generated as the final step of this process. On the other side, the user provides a query image in online mode, and BOVW is computed for it. Matching is then performed between the query image and the dataset produced in offline mode. To make matching more effective, we use a soft weighting scheme with the L2 distance function. Finally, the index value is used to rank the matched images, which are returned to the user as feedback. The following subsections give detailed information on our proposed system.
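The matching step can be sketched in Python as follows. This is a minimal sketch and not our exact formulation: it assumes the commonly used soft weighting in which each descriptor votes for its `top_n` nearest visual words with weight 1/2^(rank), and the similarity factor 1/(1 + distance) is an assumption introduced here for illustration. The function names and the toy codebook are hypothetical.

```python
import numpy as np


def soft_weighted_histogram(descriptors, codebook, top_n=4):
    """Soft-assign each descriptor to its top_n nearest visual words."""
    hist = np.zeros(len(codebook))
    for d in descriptors:
        dist = np.linalg.norm(codebook - d, axis=1)  # L2 distance to every word
        nearest = np.argsort(dist)[:top_n]
        for rank, k in enumerate(nearest):
            # Weight halves with each rank; similarity 1/(1+dist) is an assumption.
            hist[k] += (0.5 ** rank) / (1.0 + dist[k])
    total = hist.sum()
    return hist / total if total > 0 else hist


def l2_distance(h1, h2):
    """L2 distance between two histograms."""
    return float(np.linalg.norm(h1 - h2))


def rank_frames(query_hist, frame_hists):
    """Return frame indices ordered from best to worst match."""
    scores = [l2_distance(query_hist, h) for h in frame_hists]
    return sorted(range(len(frame_hists)), key=lambda i: scores[i])


# Toy example with a two-word codebook (illustrative values only).
codebook = np.array([[0.0, 0.0], [10.0, 10.0]])
query = soft_weighted_histogram(np.array([[0.0, 0.0], [1.0, 0.0]]), codebook, top_n=2)
frame_a = soft_weighted_histogram(np.array([[0.0, 1.0]]), codebook, top_n=2)
frame_b = soft_weighted_histogram(np.array([[10.0, 9.0]]), codebook, top_n=2)
order = rank_frames(query, [frame_b, frame_a])
```

In this toy case the query descriptors lie near the first visual word, so `frame_a` (index 1) is ranked ahead of `frame_b` (index 0).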

3.1 Key Frame Selection

Key frame selection plays a vital role in video retrieval applications, as the key frames represent the whole video content. Several key frame selection approaches were discussed in the related work, but these approaches overlook some temporal information. To overcome this problem, our proposed work adopts the key frame selection procedure based on TMOF [20]. The first step is to provide the video as input to the compute nodes. The video is then converted into frames using a frame conversion tool, and key frames are extracted from the frames using TMOF in the corresponding Map task. The output frames are saved in HDFS. TMOF is constructed from the probability of occurrence of each pixel value at each pixel position across all frames. The outcome of TMOF is the maximum occurred frames, which have multiple entities.

From the Group of Frames (GoF), a histogram is formed from the pixel values at each corresponding pixel position. A Gaussian function is applied to smooth the histogram. The pixels of the TMOF are computed using the following equation,

| (1) |

where I'*J'*K' is the size of a TMOF, I, J and K represent the width, height and depth, and bopt is chosen as follows:

| (2) |

By using Gaussian filter, smoothed histogram is determined as follows,

| (3) |

Where G(σ,b) is a Gaussian function with variance σ

| (4) |

Ka,b,c represents the histogram formed by the pixels at position (a, b, c), fn(a, b, c) represents the pixel level at coordinates (a, b, c) in frame n, N is the total number of frames in the GoF and B is the number of bins in the histogram. Generally, the number of bins in the histogram equals the number of pixel intensity levels. The final values are the selected key frames, which are represented as Max Frame (a, b, c).
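The TMOF procedure described above can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions: frames are given as an integer array of shape (N, ...) with values below `bins`, the Gaussian smoothing is applied along the bin axis with a truncated kernel, and the function names `gaussian_kernel` and `tmof` are our own illustrative choices rather than part of [20].

```python
import numpy as np


def gaussian_kernel(sigma, radius):
    """Normalized 1-D Gaussian kernel truncated at +/- radius bins."""
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def tmof(frames, bins=256, sigma=1.0):
    """Most frequently occurring (smoothed) pixel level at every position."""
    frames = np.asarray(frames)
    n = frames.shape[0]
    flat = frames.reshape(n, -1).astype(np.intp)  # (N frames, P pixel positions)
    num_pixels = flat.shape[1]

    # Temporal histogram: one row of B bins per pixel position.
    hist = np.zeros((num_pixels, bins))
    for frame in flat:
        hist[np.arange(num_pixels), frame] += 1

    # Smooth each histogram along the bin axis.
    kernel = gaussian_kernel(sigma, radius=max(1, int(3 * sigma)))
    smoothed = np.apply_along_axis(
        lambda h: np.convolve(h, kernel, mode="same"), 1, hist
    )

    # Pick the bin with the maximum smoothed count for each position.
    b_opt = smoothed.argmax(axis=1)
    return b_opt.reshape(frames.shape[1:])


# Toy group of 5 frames of size 2x2 (illustrative values only).
frames = np.full((5, 2, 2), 50, dtype=np.uint8)
frames[:, 0, 0] = [10, 10, 10, 200, 10]
key = tmof(frames)
```

In the toy example, position (0, 0) takes the value 10 in four of five frames, so the outlier value 200 does not appear in the resulting frame.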

3.2 BOVW (Bag of Visual Word) Generation

Bag of Visual Words is an extension of the Bag of Words concept. It is an effective and scalable approach for performing Content Based Video Retrieval (CBVR). Usually, BOVW is used to extract a set of visual words from a very large visual vocabulary. There are four basic steps in BOVW: (i) feature extraction, (ii) construction of the visual vocabulary, (iii) quantization of each image's features as discrete visual words, and (iv) construction of an inverted index using the visual words, on which matching is performed. The overall architecture of BOVW is presented in Fig. 3, which shows the sequence of work performed by Bag of Visual Words.

Fig. 3.

BOVW procedure

Commonly, a single local descriptor is used to extract image features in a 3D model. Here, for the first time, we utilize three different image features (shape, color and texture) for feature extraction. In particular, we consider a combined feature (topological and geometric) for shape. MapReduce is used as the underlying platform for the BOVW implementation. The following sections explain the four steps above in detail.

3.2.1 Feature Extraction

The initial stage of BOVW is feature extraction from the large dataset. For this purpose, several local descriptors have been used in conventional systems. In most previous work [3], [7], [9], SIFT is used as the local descriptor. The feature extraction process is shown briefly in Fig. 4.

For feature extraction, we consider three different features of the image. In MapReduce, tasks can be handled in parallel. This makes feature extraction much simpler, since one image does not depend on another and the tasks are easily broken down. Therefore, an individual image is the input to a map task and the extracted local features of that image are the output. The Map and Reduce functions are stated as follows,

| (5) |

| (6) |

where fl(n) is the key, which represents the image filename or unique identifier, and I(n) is the nth of the N images in the dataset. The keys assigned to the map tasks are unique identifiers. Each map task handles one image pair, i.e. <fl(n), I(n)>. A single map task emits all local features F(I(n)) of image I(n). Note that the reducer here is a null reducer, since the resulting <key, value> pair is the same as the mapper's output.

In this manner, we avoid the additional execution of a reducer, and the output of the mapper is given directly as input to the next pipeline stage. In our work, the shape feature is extracted first, followed by the color and texture features. The resulting feature is a combination of all of the above features.
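The map-only job described above can be simulated in plain Python as a rough illustration. This sketch does not use the Hadoop API; `extract_local_features` is a hypothetical stand-in for the shape, color and texture descriptors, and the frame identifiers are made up.

```python
import numpy as np


def extract_local_features(image):
    """Hypothetical stand-in for the shape/color/texture descriptors."""
    return [float(image.mean()), float(image.std())]


def map_task(key, image):
    """Map: <fl(n), I(n)> -> <fl(n), F(I(n))>. One image per task."""
    yield key, extract_local_features(image)


def run_map_only_job(dataset):
    """Null reducer: the mapper output is written out unchanged."""
    output = {}
    for key, image in dataset.items():
        for out_key, features in map_task(key, image):
            output[out_key] = features
    return output


# Illustrative dataset keyed by frame identifier.
dataset = {
    "frame_001": np.zeros((4, 4)),
    "frame_002": np.full((4, 4), 2.0),
}
result = run_map_only_job(dataset)
```

Because each image is processed independently, the loop in `run_map_only_job` is what the framework would distribute across nodes.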

a. Shape Feature Extraction

Shape is considered one of the prime features of an image. In general, 3D models use geometrical and topological features for shape extraction. Previous works used either one of these features [25] or a combination of the two [17]. However, previous work has several problems, which we explained in the related work. To overcome them, we define rules to combine the topological feature with a highly biased geometrical feature, building on [17]. The overall steps of shape feature extraction are:

Medial surface extraction & segmentation

Re-adjustment of segmentation

Use super ellipsoid to approximate the segmentation

Use 3D DFD (Distance Field Descriptor)

To perform medial surface segmentation, we first need to extract the medial surface (MS). In [17], medial surface extraction is executed using the Hamilton-Jacobi equations, on the basis of which topological thinning is performed. The result obtained from the key frame selection process is given to shape feature extraction as follows,

| (7) |

To extract the MS, we use the Fork Strategy based Medial Surface Extraction. Medial surface extraction is modeled using topology, the distance transform and the Voronoi diagram method. In [17], topological thinning was performed for medial surface extraction based on the Average Outward Flux (AOF) of the Euclidean distance, but it does not guarantee that the geometry is preserved. In our proposed fork strategy, images are split based on the points present in them. We split the plane into cells, edges and vertices. Each point should be equidistant from at least two edges. The corner cells are then eliminated until the central point has a single neighboring cell. If there are n points, every cell is the intersection of (n–1) half-planes. All the cells are convex and have up to (n–1) edges in their boundary. The time required to split n points in the plane is computed as,

| (8) |

The next step is MS segmentation, which classifies every voxel on the medial surface. The classes are Simple, Surface Voxel, Line Voxel and Junction. After classification, the following rules are applied to segment the MS:

All the neighboring line voxels along with the neighboring simple voxels form a line segment.

All the neighboring surface voxels along with the neighboring simple voxels form a surface segment.

Using a few criteria, the segmentation is re-adjusted to overcome the issues of traditional systems. The criteria are the Correction Criterion, Elimination Criterion, Over-segmentation Criterion and Merging Criterion. The MS is now segmented into meaningful parts, while its topology is preserved. After the re-adjustment process, the following definitions are used to allocate the voxels of the object's boundary surface to the segments.

The set of medial surface segments is {Si | i = 1, ..., n}, where n is the number of medial surface segments.

The set of medial surface voxels that belong to the segment Si is Si = {xi[k] | k = 1, ..., Ni}, where xi[k] are the coordinates of the center of the k-th medial surface voxel assigned to the i-th segment and Ni is the number of medial surface voxels assigned to the i-th segment.

The set of boundary voxels is P = {p[l] | l = 1, ..., L}, where p[l] are the coordinates of the center of the l-th boundary voxel, P ⊂ UA, UA is the set of voxels lying inside the object A, and L is the number of boundary voxels of the object.

The Euclidean distance of the l-th boundary voxel from the k-th medial surface voxel of the segment Si is d(p[l], xi[k]).

Di[l] = min over 1 ≤ k ≤ Ni of d(p[l], xi[k]) is the minimum Euclidean distance of the l-th boundary voxel from the segment Si.

Pi = {pi[l] | l = 1, ..., Li} is a subset of P that represents the set of boundary voxels assigned to segment Si, where pi[l] are the coordinates of the center of the l-th boundary voxel assigned to the i-th segment and Li is the number of boundary voxels assigned to the i-th segment.

A boundary voxel p[l] is assigned to the segment Si,

| (9) |

where wi is a weight factor for the segment Si, given by the following equation:

| (10) |

Let σi be the standard deviation of Di[l] over all boundary voxels pi[l] assigned to the segment Si. Every extracted segment of the 3D object is approximated using superellipsoids in order to extract the shape without loss. Using the attributed graph representation, topological and geometrical information are combined. The result obtained from the medial surface extraction and re-adjustment procedures is treated as topological information, whereas the meaningful parts assigned to each surface segment are treated as geometrical information.

The graph is represented as,

| (11) |

where V is the non-empty set of vertices, E is the set of edges and A is the binary symmetric adjacency matrix. According to the mapping definition, an undirected vertex-attributed graph is constructed. In the equation below, the arguments (x, y, z) of the function F are the coordinates of the 3D point.

| (12) |

Function (12) is commonly called the inside-outside function, since for a 3-D point with coordinates (x, y, z):

| (13) |

The problem of modeling a 3-D object using superquadrics can be reduced to the least squares minimization of the nonlinear inside-outside function F(x, y, z) with respect to several shape parameters,

| (14) |

where Φ, θ, χ are the Euler angles, tx, ty, tz are the translation vector coefficients, a1, a2, a3, ε1, ε2, ε3 are the superquadric shape parameters and (x, y, z) are the coordinates of the 3-D object. The parameters are estimated by minimizing the mean-square error,

| (15) |

where, N is the number of points of the 3-D object.
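As a rough illustration of the inside-outside test and the error being minimized, the following Python sketch assumes the widely used two-exponent superellipsoid form with the points already expressed in the superquadric's local frame (that is, after the rotation and translation have been applied). The two-exponent form, the function names and the unit-sphere parameters are assumptions made for this sketch; it does not reproduce the exact parameterization of equations (12) to (15).

```python
import numpy as np


def inside_outside(points, a1, a2, a3, eps1, eps2):
    """F < 1 inside, F = 1 on the surface, F > 1 outside (assumed standard form)."""
    x, y, z = np.abs(np.asarray(points, dtype=float)).T
    xy = (x / a1) ** (2.0 / eps2) + (y / a2) ** (2.0 / eps2)
    return xy ** (eps2 / eps1) + (z / a3) ** (2.0 / eps1)


def mean_square_error(points, a1, a2, a3, eps1, eps2):
    """Mean squared deviation of F from 1 over the N object points."""
    f = inside_outside(points, a1, a2, a3, eps1, eps2)
    return float(np.mean((f - 1.0) ** 2))


# Unit sphere: a1 = a2 = a3 = 1 and eps1 = eps2 = 1 (illustrative values).
probe = np.array([[1.0, 0.0, 0.0], [0.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
f_values = inside_outside(probe, 1.0, 1.0, 1.0, 1.0, 1.0)

surface = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
error = mean_square_error(surface, 1.0, 1.0, 1.0, 1.0, 1.0)
```

A fitting procedure would search over the shape, rotation and translation parameters for the values that make this error smallest on the boundary points of a segment.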

As stated in the over-segmentation criterion, all the parts of the image are merged into a single part if the number of resulting parts is comparable to the number of medial surface voxels. In case of merging, only the geometry feature is considered and the topology information is ignored. The 3-D DFD is used to compute the difference between the surface of an ellipsoid and the surface of an object. Using the following scaling procedure, the 3-D DFD is extracted from every segment of the 3-D object,

| (16) |

| (17) |

| (18) |

| (19) |

di,j is the signed distance between the surface of the object and the surface of the ellipsoid at the same (θi, Φj).

The equation for the 3D Distance Field Descriptor is obtained as the result of shape based feature extraction,

| (20) |

The above equation is the 3D Distance Field Descriptor, which is obtained using the maximum occurred frames. This value is further used in the color and texture feature extraction process.

b. Color & Texture Feature Extraction

Color and texture are also significant features of an image. Having extracted the shape feature, we now need to extract color and texture, which can be done using the approximated superellipsoid 3-D object of an image. We use the 3D co-occurrence matrix as the feature descriptor for both color and texture. As an initial step, the RGB color components are converted into HSI color components in order to separate color from intensity. The HSI color components are quantized into 128 (8 × 4 × 4) bins. Next, the image is split into square windows. Our approach allows the windows to overlap, which is an added advantage. Nine orientations are employed in each window to define the neighborhood of each pixel along the HSI planes. From each 3D co-occurrence matrix, six textural features are calculated. In total, 54 measures are generated, since 9 matrices are calculated.

The 3D co-occurrence matrix is formulated using the orientation angle ‘θ’ between the pair of gray levels and the axis. We consider four directions: θ = 0°, 45°, 90°, 135°. The corresponding gray-level co-occurrence matrix is given as,

| (21) |

Where,

The above equation is rewritten for the remaining three angles by changing the orientation ‘θ’. Next, the gray level co-occurrence is defined as p(i, j|d, θ) with respect to the distance ′d′ and the angle ‘θ’. The probability value of the gray level co-occurrence matrix is given as,

| (22) |

From the estimated p(i, j|d, θ), we obtain the features used in the extraction process. The texture features are calculated as follows.

Angular second moment (ASM)

ASM measures the number of repeated pairs; a homogeneous scene contains only a limited number of gray levels. ASM is calculated as follows,

| (23) |

Contrast (CN)

Contrast measures the local intensity variation of an image and favors contributions from P(i, j) away from the diagonal, i.e. i ≠ j. It is calculated using the equation,

| (24) |

Entropy (ET)

This parameter calculates the randomness of gray-level distribution.

| (25) |

Correlation (CR)

It provides the correlation between two pixels present in the pixel pair.

| (26) |

Where μ and σ indicate mean and standard deviation.

Mean (MN)

This parameter is the mean gray level of an image. It is computed as,

| (27) |

Variance (VN)

Variance is used to explain the overall distribution of gray level. The following equation is used to calculate the variance,

| (28) |

The above equations are applied to the nine texture matrices and the results are combined into a single entity. The extracted feature is the combination of all the features described in our work, which is given as,

| (29) |

Equation (29) gives the extracted feature (Etdf) from all three features taken into account (shape, color, texture).
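The six texture measures can be illustrated with the following Python sketch. It is a simplification under stated assumptions: it builds a 2D gray-level co-occurrence matrix for a single offset instead of the 3D HSI matrices used in our work, and it uses the standard Haralick-style definitions with the natural logarithm for entropy, which may differ in detail from equations (23) to (28). The function names and the test image are illustrative.

```python
import numpy as np


def glcm(image, levels, offset=(0, 1)):
    """Normalized co-occurrence matrix p(i, j) for one pixel offset (row, col)."""
    dr, dc = offset
    rows, cols = image.shape
    counts = np.zeros((levels, levels))
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r, c], image[r2, c2]] += 1
    return counts / counts.sum()


def texture_features(p):
    """ASM, contrast, entropy, correlation, mean and variance of p(i, j)."""
    i, j = np.indices(p.shape)
    mu_i, mu_j = (i * p).sum(), (j * p).sum()
    var_i = ((i - mu_i) ** 2 * p).sum()
    var_j = ((j - mu_j) ** 2 * p).sum()
    nonzero = p[p > 0]  # skip empty cells so log() is defined
    denom = np.sqrt(var_i * var_j)
    corr = ((i - mu_i) * (j - mu_j) * p).sum() / denom if denom > 0 else 0.0
    return {
        "asm": float((p ** 2).sum()),
        "contrast": float(((i - j) ** 2 * p).sum()),
        "entropy": float(-(nonzero * np.log(nonzero)).sum()),
        "correlation": float(corr),
        "mean": float(mu_i),
        "variance": float(var_i),
    }


# Small 4-level test image, offset d = 1 at theta = 0 degrees.
image = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 2, 2, 2],
                  [2, 2, 3, 3]])
p = glcm(image, levels=4, offset=(0, 1))
features = texture_features(p)
```

Repeating `glcm` with other offsets gives one matrix per orientation, and concatenating the six values from each matrix yields the combined texture vector.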

3.2.2 Visual vocabulary Generation using TB-PCT

Construction of the visual vocabulary (Vc) is a central part of the CBVR system. It is the next stage of BOVW.

In previous work, several clustering algorithms were used for vocabulary generation [2][4][9]. The k-means clustering algorithm is a frequently used technique, but its usefulness is limited when handling large datasets. To overcome this problem, we use PCT (Predictive Clustering Tree) [15] in our work, modified to suit our needs.

To construct a PCT, we combine the concepts of predictive clustering and randomized tree construction. A PCT can be induced using a standard top-down induction of decision trees (TDIDT) algorithm. During tree construction, the local descriptor values are used in a random manner. Algorithm 1 shows the TDIDT algorithm. In our proposed work, we introduce TB-PCT (Threshold Based - Predictive Clustering Tree), which is a slight modification of PCT. Algorithm 2 depicts the overall procedure for constructing the visual vocabulary using a threshold.

Algorithm 1.

TDIDT

| procedure PCT(E) returns tree | |

| 1: | (t*,h*,p*) = BestTest(E) |

| 2: | if t*≠ none then |

| 3: | for each Ei ∈ p* do |

| 4: | treei = PCT(Ei) |

| 5: | return node(t*, ∪i {treei}) |

| 6: | else |

| 7: | return leaf(Prototype(E)) |

| procedure BestTest(E) | |

| 1: | (t*,h*,p*) = (none, 0, ∅) |

| 2: | for each possible test t do |

| 3: | p= partition induced by t on E |

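| 4: | $h = \mathrm{Var}(E) - \sum_{E_i \in p} \frac{\lvert E_i \rvert}{\lvert E \rvert}\,\mathrm{Var}(E_i)$ |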

| 5: | if (h > h*) ∧ Acceptable(t, p) then |

| 6: | (t*,h*,p*) = (t,h,p) |

| 7: | return (t*,h*,p*). |
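The TDIDT procedure of Algorithm 1 can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: tests are axis-aligned thresholds on one descriptor dimension, the heuristic is variance reduction, `Acceptable` is interpreted as a minimum leaf size (`min_leaf`), and the prototype of a leaf is the mean descriptor. All function names are hypothetical.

```python
def variance(points):
    """Sum of per-dimension variances of a list of equal-length vectors."""
    n = len(points)
    if n == 0:
        return 0.0
    total = 0.0
    for k in range(len(points[0])):
        mean = sum(p[k] for p in points) / n
        total += sum((p[k] - mean) ** 2 for p in points) / n
    return total


def prototype(points):
    """Mean vector of the points stored in a leaf (one visual word)."""
    n = len(points)
    return [sum(p[k] for p in points) / n for k in range(len(points[0]))]


def best_test(points, min_leaf):
    """Return (t*, h*, p*): best test, its heuristic value and its partition."""
    best = (None, 0.0, None)
    base = variance(points)
    for dim in range(len(points[0])):
        values = sorted({p[dim] for p in points})
        # Candidate thresholds: midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            thr = (lo + hi) / 2
            parts = ([p for p in points if p[dim] <= thr],
                     [p for p in points if p[dim] > thr])
            h = base - sum(len(e) / len(points) * variance(e) for e in parts)
            acceptable = min(len(e) for e in parts) >= min_leaf
            if h > best[1] and acceptable:
                best = ((dim, thr), h, parts)
    return best


def pct(points, min_leaf=1):
    """Recursively build the tree; leaves hold prototypes."""
    test, _, parts = best_test(points, min_leaf)
    if test is None:
        return {"leaf": prototype(points)}
    return {"test": test, "children": [pct(e, min_leaf) for e in parts]}


def leaves(tree):
    """Collect leaf prototypes from left to right."""
    if "leaf" in tree:
        return [tree["leaf"]]
    return [leaf for child in tree["children"] for leaf in leaves(child)]


# Hypothetical 2-D descriptors forming two well-separated groups.
points = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]]
tree = pct(points, min_leaf=2)
codebook = leaves(tree)
```

Raising `min_leaf` acts as pre-pruning: fewer splits are acceptable, so the tree has fewer leaves and the resulting vocabulary is smaller.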

Algorithm 2.

Construction of visual vocabulary using TB-PCT

| Input: set of subsets of local descriptors as |

| Si = {s1, s2 ......sn} |

| Output: visual vocabulary. |

| Begin |

| Choose si based on threshold(t) |

| Set TS = si |

| Assign da = ta = ca = 128-dimensional descriptor vector |

| Perform cluster c using PCT |

| Construct tree(T) using c based on randomized tree |

| leaf (l) = Vw |

| Vc = Σ l; |

| End |

The construction of visual words suffers from noise, which occurs when the same visual word exists more than once. These noisy visual words are referred to as unusable visual words, and they create difficulty during retrieval because analyzing repeated visual words across multiple queries takes more computation time. To overcome this problem, we introduce an approach of numerical semantic analysis in which a number is assigned to each visual word. Unique numbers are assigned to unique visual words and the same number is assigned to similar visual words. With this technique, a visual word that occurs more than once can be found because its occurrences share the same number. Hence we can reduce noise, which in turn reduces the computation time for visual words.
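The numbering idea described above can be sketched as follows. The text does not specify how similarity between visual words is decided, so this example assumes that two words are "similar" when they are equal after rounding to a fixed precision; the function names and the `precision` parameter are hypothetical.

```python
def number_visual_words(words, precision=3):
    """Assign an integer to each visual word; repeated words share a number."""
    ids = {}
    numbers = []
    for word in words:
        # Assumed similarity rule: equality after rounding each component.
        key = tuple(round(x, precision) for x in word)
        if key not in ids:
            ids[key] = len(ids)
        numbers.append(ids[key])
    return numbers


def remove_repeats(words, numbers):
    """Keep only the first visual word seen for each assigned number."""
    seen = set()
    unique = []
    for word, number in zip(words, numbers):
        if number not in seen:
            seen.add(number)
            unique.append(word)
    return unique


# Hypothetical visual words; the first and third are repeats of each other.
words = [(1.0, 2.0), (3.0, 4.0), (1.0, 2.0)]
numbers = number_visual_words(words)
clean = remove_repeats(words, numbers)
```

Because repeats are detected through a dictionary lookup on the assigned number, the cleanup runs in time linear in the number of visual words.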

As a first step, the local descriptors gathered in Section 3.2.1 are partitioned into subsets (Si) using a machine learning algorithm and predictive clustering [17]. The resulting subsets are given as input for vocabulary construction, and the output is used to construct Vc. A subset of local descriptors (si) is selected using the threshold value (t), and the selected values (si) constitute the training set (TS). To construct the tree (T), we set the descriptive attribute (da) as both the target attribute (ta) and the clustering attribute (ca). The value of the descriptive attribute (da) is a 128-dimensional vector. This is one of the unique characteristics of the PCT. The root node maintains the overall information of the image and is recursively partitioned to construct the tree. Pre-pruning is applied to control the size of the visual vocabulary. Pre-pruning requires the minimum number of descriptors in each tree leaf (l). For a given dataset, the number of instances required per leaf can easily be chosen to obtain the desired number of leaves in the tree. Each leaf ‘l’ of the tree is a separate visual word (Vw). All the leaves are combined to form Vc, i.e. the visual codebook (VCb) for a frame. These processes are managed in parallel by the Map and Reduce functions [3]. The Map and Reduce functions are given as,

| (30) |

The map task holds the information of the leaf nodes. TB-PCTs are computationally efficient. Here we resolve the problem of handling a small amount of data by using a random forest of trees. The final codebook is obtained by concatenating the individual VCb from each TB-PCT. The formula for evaluating TB-PCT is given below,

| (31) |

After the VCb computation is complete, the next step is to represent each 3D model (a frame in the dataset or the query) as a histogram of occurrences of the codebook elements. For this purpose, we sort all the descriptors down the tree.

As a further step, we count the number of descriptors that fall in a given leaf ‘l’, which gives the count for the corresponding visual word. The frame is thus represented as a histogram of descriptors per visual word.
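The histogram step described above can be sketched as follows: each descriptor is sorted down the tree until it reaches a leaf, and the leaf counters form the histogram. The tree layout (a dictionary with a `test` of the form (dimension, threshold) and two `children`, with leaves carrying a `leaf_id`) is a hypothetical representation chosen for this example.

```python
def assign_leaf(tree, descriptor):
    """Follow the split tests from the root until a leaf is reached."""
    node = tree
    while "leaf_id" not in node:
        dim, thr = node["test"]
        branch = 0 if descriptor[dim] <= thr else 1
        node = node["children"][branch]
    return node["leaf_id"]


def bovw_histogram(tree, descriptors, n_words):
    """Count how many descriptors of one frame fall into each visual word."""
    hist = [0] * n_words
    for descriptor in descriptors:
        hist[assign_leaf(tree, descriptor)] += 1
    return hist


# Hypothetical two-leaf tree splitting on dimension 0 at 2.5.
tree = {"test": (0, 2.5), "children": [{"leaf_id": 0}, {"leaf_id": 1}]}
frame_descriptors = [[0.1, 0.0], [0.2, 1.0], [4.0, 4.0]]
hist = bovw_histogram(tree, frame_descriptors, n_words=2)
```

Each lookup costs one comparison per tree level, so the cost per descriptor grows with the depth of the tree rather than with the size of the vocabulary.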

3.2.3 Matching (soft-weighting schemes)

Matching is the final stage of CBVR, where we use a distance function and a similarity scheme to retrieve relevant results. A distance function is needed to measure the similarity between two images. For this task we use the L2 (Euclidean) distance function and the soft weighting scheme [10]. The MapReduce task is divided into a Map function and a Reduce function.

a. Map stage

In the Map stage, the distance between a key point and its neighboring visual words is measured to compute the similarity function, and from this the top-k nearest neighbors are found. The key point is then mapped to its top-k nearest neighbors. Each mapper outputs a number of pairs (Ix(n), vtk(n)). Here, the key Ix(n) is the index of the ith-ranked visual word in the top-k results, and the value vtk(n) is the similarity score, partially weighted according to the proximity rank of the visual word. Priority is given to the highest similarity score during the process.

b. Reduce stage

In the Reduce stage, the histogram is computed by grouping the (key, value) pairs (Ix(n), vtk(n)) to produce the video representation. In particular, the Reduce stage accumulates the partial weight of each (key, value) pair (Ix(n), vtk(n)) at its corresponding key index.

Soft-weighting scheme

The soft-weighting scheme determines the weight of each visual code word. For each key point in the image (query image and dataset frame), we select the top-k nearest visual words instead of only the single nearest visual word. Given a visual vocabulary of k visual words, we use a k-dimensional vector VT = [vt1, vt2, ...vtk] with each component vtk representing the weight of visual word k in an image. It is calculated as follows,

| $vt_k = \sum_{i=1}^{N} \sum_{j=1}^{M_i} \frac{1}{2^{i-1}}\,\mathrm{sim}(j,k)$ | (32) |

| (33) |

Here Mi represents the number of key points whose ith nearest neighbor is visual word k, and sim(j,k) is the similarity between key point j and visual word k. In (32), the contribution of a key point depends on its similarity to word k, weighted by 1/2^(i−1) because the word is its ith nearest neighbor. Setting N = 4 is usually reasonable. The component disj,k represents the distance between two images. To compute the distance, we use the L2 distance function.
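The Map and Reduce stages of the soft-weighting scheme can be sketched as two plain functions. This is a single-process illustration, not a Hadoop job. The rank weight 1/2^(i−1) follows the soft-weighting scheme of [10]; the similarity sim(j,k) = 1/(1 + distance) is an assumption made for this example because equation (33) is not reproduced here, and the function names are hypothetical.

```python
import math
from collections import defaultdict


def l2(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def map_keypoint(keypoint, codebook, top_n=4):
    """Map stage: emit (word index, partial weight) for the top-N nearest words."""
    ranked = sorted(range(len(codebook)), key=lambda k: l2(keypoint, codebook[k]))
    pairs = []
    for rank, k in enumerate(ranked[:top_n]):  # rank 0 is the nearest word
        sim = 1.0 / (1.0 + l2(keypoint, codebook[k]))  # assumed similarity
        pairs.append((k, sim / (2 ** rank)))           # weight 1/2^(i-1), i = rank + 1
    return pairs


def reduce_pairs(pairs, n_words):
    """Reduce stage: sum partial weights per word index into the vector VT."""
    weights = defaultdict(float)
    for k, value in pairs:
        weights[k] += value
    return [weights[k] for k in range(n_words)]


# Hypothetical 1-D codebook with three visual words and one key point.
codebook = [[0.0], [1.0], [3.0]]
pairs = map_keypoint([0.0], codebook, top_n=2)
vt = reduce_pairs(pairs, n_words=len(codebook))
```

In a distributed run, each mapper would process a share of the key points and the framework would group the emitted pairs by word index before the reducers sum them.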

The occurrences of visual words are organized in a tree-like index structure, which proves to be computationally efficient.

The L2 distance measures the similarity between the input image and a stored (database) image. The distance is measured between any two pixels in the image.

We fetch the weights from the visual vector of the query image and of each image retrieved from the nearest neighbors, and then estimate the distance between the query image and the retrieved image. The formula for the L2 distance is given as,

| (34) |

Where,

Based on the calculated visual vector similarity, ranking is performed over the k nearest neighboring visual vectors. This makes the proposed system efficient and effective. Finally, the matched results are ranked according to the index value of the images and returned to the querying user as feedback.
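Ranking by L2 distance can be sketched as follows. The frame identifiers, the example vectors and the function names are hypothetical; the distance itself is the standard Euclidean form.

```python
import math


def l2_distance(u, v):
    """Euclidean (L2) distance between two visual vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def rank_frames(query_vector, frame_vectors, top_k=3):
    """Return the ids of the top_k frames closest to the query vector."""
    scored = sorted(frame_vectors.items(),
                    key=lambda item: l2_distance(query_vector, item[1]))
    return [frame_id for frame_id, _ in scored[:top_k]]


# Hypothetical weighted visual vectors for three stored frames.
frames = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 0.5]}
ranking = rank_frames([1.0, 0.0], frames, top_k=2)
```

A smaller distance means a closer match, so the ranked list is ordered from most to least similar.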

IV Experimental Evaluation

4.1 Dataset and Tools

The proposed system is evaluated on a 3D video dataset. Experimental datasets used in earlier research include text documents, images and audio files, but video files are generally much larger than such files. In our experiment, video files are converted into frames using frame conversion tools, and the converted frames are treated as the dataset. The 3D videos were gathered from web sources such as YouTube, Wikipedia and Flickr, as well as other web pages that host videos. Such sites contain thousands of video files. The proposed system retrieves the videos related to a user's request from this collection.

The assessment also depends on the computer systems used, in terms of storage capacity, speed and the number of systems. Table 1 shows the hardware configuration of our implementation.

Table 1.

Hardware configuration of our implementation

| Specifications | N1 | N2 | N3 | N4 | N5 |
|---|---|---|---|---|---|
| Processor | Intel Core i3 | Dual core | Intel Pentium Quad-Core Processor | AMD FX-6100 | Intel Core i5 |
| CPU cores | 4 | 2 | 4 | 6 | 4 |
| CPU speed | 2.53 GHz | 3 GHz | 2.2 GHz | 3.9 GHz | 3.7 GHz |
| RAM | 4 GB | 2 GB | 2 GB | 8 GB | 8 GB |

We use five computer systems with different specifications, named Node 1 to Node 5. Node 1 acts as the master, and the other systems work under its supervision. The node specifications are those listed in Table 1.

The architecture of the running system is shown in Figure 5. It consists of a single master node and four slave nodes. The master node is denoted N1 and the slave nodes are denoted N2 to N5. The user submits a 3D image as the query and the system retrieves a number of relevant 3D videos. A single user is expected to search for only one image at a time. With remote access, multiple users can submit 3D image queries to the search engine from different systems. When a user submits a 3D image, the system starts running and retrieves the available related 3D videos.

Fig. 5.

Architecture of the running systems on the MapReduce framework

4.2 Performance Evaluation

For comparative analysis, we define three scenarios for each process that takes place in the proposed MapReduce framework: (i) Scenario 1 uses Node 1 and Node 2, (ii) Scenario 2 uses Node 1, Node 2 and Node 3, and (iii) Scenario 3 uses all five nodes, Node 1 to Node 5. Our implementation runs on Ubuntu 14.04 with Hadoop 2.7.0 and JDK 1.7.

We evaluate the 3D video retrieval of the proposed system using the following parameters: Precision-Recall, E-Measure, Discounted Cumulative Gain and MapReduce time.

4.2.1 Precision-Recall

Precision is the fraction of the retrieved 3D models that are relevant to the specified query. Recall is the fraction of the 3D models relevant to the specified query that are successfully retrieved. The formulas for precision and recall are as follows,

| (35) |

| (36) |

The Precision-Recall estimate is obtained using the above formulas, where Q represents the 3D models relevant to the query and R represents the retrieved 3D models.
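As a worked illustration of the two ratios, the sketch below uses the standard set-based definitions of precision and recall. The video identifiers are invented for the example, and the function name is hypothetical.

```python
def precision_recall(relevant, retrieved):
    """Return (precision, recall) for one query using set overlap."""
    relevant, retrieved = set(relevant), set(retrieved)
    hits = len(relevant & retrieved)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical query: four relevant videos, three retrieved, two of them relevant.
precision, recall = precision_recall(
    relevant={"v1", "v2", "v3", "v4"},
    retrieved={"v1", "v2", "v9"},
)
```

Here two of the three retrieved videos are relevant (precision 2/3) and two of the four relevant videos are found (recall 1/2).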

4.2.2 E-Measure

E-Measure is a composite measure of precision and recall for a fixed number of retrieved 3D models. It is defined by the following equation,

| (37) |

Using the above equation, we estimate the E-Measure for the entire proposed system.
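A commonly used form of the E-Measure in 3D retrieval benchmarks is E = 2 / (1/P + 1/R), computed over a fixed number of retrieved results. Since equation (37) is not reproduced in this text, the sketch below assumes that form; the function name is hypothetical.

```python
def e_measure(precision, recall):
    """Composite of precision and recall: E = 2 / (1/P + 1/R)."""
    if precision <= 0.0 or recall <= 0.0:
        return 0.0  # the measure is taken as zero when either ratio is zero
    return 2.0 / (1.0 / precision + 1.0 / recall)


# Hypothetical values of precision and recall for one query.
balanced = e_measure(0.5, 0.5)
skewed = e_measure(1.0, 0.5)
```

The measure equals the common value when precision and recall agree and is pulled toward the smaller of the two when they differ.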

4.2.3 Discounted Cumulative Gain

Discounted cumulative gain (DCG) is the total gain accumulated up to a particular rank. It measures the gain of the estimated ranking list. It is especially useful for search engine applications, since it indicates how well the ranking list places relevant results. The general formula for DCG is as follows,

| (38) |

Where,

The value of ‘reli’ in the equation indicates whether a result is good or bad and lies in the range 0 ≤ reli ≤ 1. This parameter is used to show that our ranking procedure places relevant results first. We use it to compare the relevance of our ranking list with previous work.
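The sketch below computes DCG in one widely used form, DCG_p = rel_1 + Σ_{i=2..p} rel_i / log2(i), together with a normalized variant. Equation (38) is not reproduced in this text, so this form and the normalization by the ideal ordering are assumptions; the function names and the example relevance list are hypothetical.

```python
import math


def dcg(relevances):
    """DCG with rel_1 undiscounted and rel_i / log2(i) for ranks i >= 2."""
    total = 0.0
    for rank, rel in enumerate(relevances, start=1):
        total += rel if rank == 1 else rel / math.log2(rank)
    return total


def normalized_dcg(relevances):
    """DCG divided by the DCG of the same relevances in the ideal order."""
    ideal = dcg(sorted(relevances, reverse=True))
    return dcg(relevances) / ideal if ideal > 0 else 0.0


# Hypothetical ranked list: 1 marks a relevant result, 0 an irrelevant one.
score = dcg([1, 1, 0, 1])
ratio = normalized_dcg([1, 1, 0, 1])
```

A relevant result placed lower in the list contributes less, so the normalized value falls below one whenever an irrelevant result precedes a relevant one.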

4.2.4 Map-Reduce

Map-Reduce is a combination of the Map and Reduce procedures discussed in the earlier sections. The map tasks run in parallel on splits of the input data. Similarly, the reducers work in parallel on different intermediate keys. The overall processing time is measured over the complete Map-Reduce process.

4.3 Experimental Results

Our dataset is composed of 12890 videos, from which 399015 key frames are generated. One set of key frames is taken as the training set and a smaller set is taken for testing.

The Precision-Recall performance is analyzed experimentally using a graph. A Precision-Recall curve is plotted for each of the three scenarios described in Section 4.2. The overall precision and recall values indicate the retrieval accuracy. From Figure 6, we observe that there is a slight difference among the three scenarios, with Scenario 3 performing better than the other two.

The processing times (in seconds) taken in the three scenarios are tabulated in Table 2 and Table 3. The three scenarios differ in their processing time. The values in the tables are obtained for the 399015 key frames mentioned above.

Table 2.

Training results for three scenarios (processing time in seconds)

| Processing Steps (Training) | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|
| Shape Feature Extraction | 702 | 421.2 | 210.6 |
| Overall Feature Extraction | 1367.5 | 820.25 | 407.65 |
| Codebook Generation | 1277 | 892.2 | 446.1 |

Table 3.

Testing results for three scenarios (processing time in seconds)

| Processing Steps (Testing) | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|
| Overall Feature Extraction | 1510.5 | 963.28 | 550.79 |
| Shape Feature Extraction | 836 | 555 | 345 |

This analysis shows how the processing speed differs across the scenarios. Comparing the scenarios, we conclude that Scenario 3 performs better than the other two.

Figure 6 shows the Precision-Recall plots for the three scenarios when Hadoop is used.

Fig. 6.

Precision-Recall for three scenarios

Figure 6 implies that Scenario 3 performs better in terms of Precision-Recall than the other scenarios. Both parameters are defined above with their own formulas. The overall E-Measure obtained for the proposed system is around 64%, and the DCG obtained is 69.4%, based on the ranking list of the retrieved 3D videos.

Table 4 shows the processing time taken for the overall Map-Reduce process. Here too, the training and testing times vary for each scenario.

Table 4.

Experimental results obtained for overall MapReduce

| Overall MapReduce | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|
| Training | 2003 | 1202 | 601 |
| Testing | 3677 | 2206 | 1103 |
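The processing times reported in Table 4 can be turned into relative speedups with respect to Scenario 1. The short sketch below only performs this arithmetic on the tabulated values; the dictionary layout and the function name are choices made for this example.

```python
# Overall MapReduce times from Table 4, keyed by scenario.
training = {"Scenario 1": 2003, "Scenario 2": 1202, "Scenario 3": 601}
testing = {"Scenario 1": 3677, "Scenario 2": 2206, "Scenario 3": 1103}


def speedups(times, baseline="Scenario 1"):
    """Ratio of the baseline time to each scenario's time, rounded to 2 places."""
    base = times[baseline]
    return {name: round(base / t, 2) for name, t in times.items()}


training_speedup = speedups(training)
testing_speedup = speedups(testing)
```

With these values, Scenario 2 is about 1.67 times faster and Scenario 3 about 3.33 times faster than Scenario 1, for both training and testing.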

Figure 7 plots the overall Map-Reduce processing time for the 1000 samples considered in our process. This result represents the entire processing time taken for the number of samples considered in our MapReduce framework.

Fig. 7.

Overall mapreduce time for training and testing set

Table 5 shows the average matching time with and without Hadoop. The process that uses Hadoop gives better results in terms of both time and retrieval.

Table 5.

Comparison of BOVW in matching and retrieval

| Bag Of Visual Words | Average Matching Time | Average Retrieval (%) |
|---|---|---|
| Without Hadoop | 0.002972 | 75 |
| With Hadoop | 0.00014 | 97 |

Figure 8 illustrates the comparison between PCT [31] and TB-PCT. Here, accuracy refers to the retrieval of relevant results for the given number of queries. In the normal PCT process the local descriptors are selected at random, whereas in TB-PCT the local descriptors are selected based on the threshold.

Fig. 8.

Comparison between PCT and TB-PCT

Table 6 shows the relevant and irrelevant results retrieved for the queries given by multiple users, with and without Hadoop. With Hadoop, we retrieve the maximum number of positive results even as the number of users increases. This result shows that the proposed system performs better than the conventional process without Hadoop.

Table 6.

Comparison of Relevant and Irrelevant results

| Number of queries | With Hadoop: Positive Results | With Hadoop: Negative Results | Without Hadoop: Positive Results | Without Hadoop: Negative Results |
|---|---|---|---|---|
| 1000 | 1000 | 0 | 988 | 12 |
| 1500 | 1500 | 0 | 1487 | 13 |
| 2000 | 1997 | 3 | 1980 | 20 |
| 2500 | 2498 | 2 | 2475 | 25 |

Figure 9 plots the number of queries given by multiple users against the number of positive results retrieved for those queries. The figure compares the system with Hadoop and without Hadoop. We conclude that the system using Hadoop returns positive results for nearly all of its queries.

Fig. 9.

Comparison of positive results retrieved

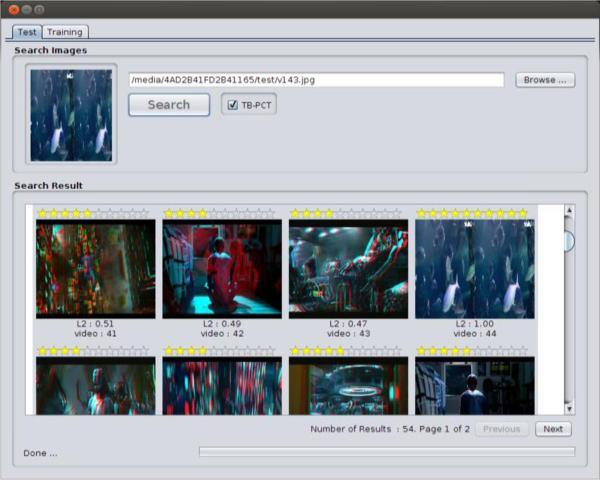

Figure 10 shows the result obtained when the user submits a query image to retrieve the relevant videos. From this, we can calculate the overall Map-Reduce time for testing and training. The processing time differs for each query image, and the variation depends on the videos available for the given query.

Fig. 10.

Resulted Output

From Figure 10, we can see that the retrieved 3D videos resemble the given query image. The total number of results shown is the number of relevant 3D videos retrieved for the given 3D query.

V Conclusion

We have included the MapReduce framework in our system to speed up the 3D video retrieval process. Our approach focuses mainly on scalability and fast processing of large datasets. The MapReduce pipeline has the following processing stages: key frame selection, feature extraction, visual codebook generation and matching. All these processes run in the background under MapReduce. Our experimental results show that the system reduces the processing time. Our 3D-CBVR system processes 399015 key frames and still produces efficient results. The 3D-CBVR system has different precision and recall rates for different scenarios. The Hadoop based 3D-CBVR system has the added advantage that it takes less time for clustering (generating the visual codebook), because we use the TB-PCT method, which proves to be efficient for large datasets. We have combined all the features (shape, color and texture) to improve precision, so that relevant videos in the dataset are not missed. We have shown that our BOVW and MapReduce based 3D-CBVR system takes less time for the video retrieval process. In future work, we will incorporate duplicate video detection to further reduce the processing time.

Fig. 4.

Flow of Feature Extraction

Contributor Information

C.Ranjith Kumar, Bharathiar University, Coimbatore, Coimbatore District, cranjithkumarscholar@gmail.com.

S. Suguna, Dept of Computer Science, Sri Meenakshi Govt Arts College, Madurai, kt.suguna@gmail.com.

REFERENCES

- 1. Ranjith Kumar C, Naga Nadhini S. STAR: semi supervised-clustering technique with applications for retrieval of video. International Conference on Intelligent Computing Applications. 2014:223–22.

- 2. Tabia Hedi, Laga Hamid. Covariance-based descriptors for efficient 3D shape matching, retrieval and classification. IEEE Transactions on Multimedia. 2015;17:1591–1603.

- 3. Hare Jonathon S., Samangooei Sina, Lewis Paul H. Practical scalable image analysis and indexing using Hadoop. Multimedia Tools and Applications. 2012;71:1215–1248.

- 4. Ding Ke, Wang Wei, Liu Yunhui. 3D model retrieval using bag of view words. Multimedia Tools and Applications. 2013;72:2701–2722.

- 5. Li Zongmin, Wu Zijian, Kuang Zhenzhong, Chen Kai, Gan Yongzhou, Fan Jiapping. Evidence-based SVM fusion for 3D model retrieval. Multimedia Tools and Applications. 2013;72:1731–1749.

- 6. Wang Hanli, Zhu Fengkuangtian, Xiao Bo, Wang Lei, Jiang Yu-Gang. GPU based map-reduce for large-scale near duplicate video retrieval. Multimedia Tools and Applications. 2014;74:10515–10534.

- 7. Funkhouser Thomas, Min Patrick, Kazhdan Michael, Chen Joyce, Halderman Alex, Dobkin David. A search engine for 3D models. ACM Transactions on Graphics. 2003;22:83–105.

- 8. Umesh KK, Suresha. Web image retrieval using visual dictionary. International Journal on Web Service Computing. 2012;3.

- 9. White Brandyn, Yeh Tom, Lin Jimmy, Davis Larry. Web-scale computer vision using map reduce for multimedia data mining. 10th ACM International Workshop on Multimedia Data Mining. 2010.

- 10. Jiang Yu-Gang, Ngo Chong-Wah, Yang Jun. Towards optimal bag-of-features for object categorization and semantic video retrieval. 6th ACM International Conference on Image and Video Retrieval. 2007:494–501.

- 11. Liu Jialu. Image retrieval based on bag-of-words model. Information Retrieval. 2013.

- 12. Wang Hanli, Shen Yun, Wang Lei, Zhufeng Kuangtian, Wang Wei, Cheng Cheng. Large scale multimedia data mining using map reduce framework. IEEE 4th International Conference on Cloud Computing Technology and Science. 2012:287–292.

- 13. Brachmann Eric, Spehr Marcel, Stefan G. Feature propagation on image webs for enhanced image retrieval. 3rd ACM International Conference on Multimedia Retrieval. 2013:25–32.

- 14. Guo Kehua, Pan Wei, Lu Mingming, Zhou Xiaoke, Ma Jianhua. An effective and economical architecture for semantic-based heterogeneous multimedia big data retrieval. Journal of System and Software. 2014;102:207–216.

- 15. Dimitrovski Ivica, Kocev Dragi, Loskovska Suzana, Džeroski Sašo. Fast and scalable image retrieval using clustering trees. Lecture Notes in Computer Science. 2013;8140:33–48.

- 16. Moosmann Frank, Nowak Eric, Jurie Frederic. Randomized Clustering Forests for Image Classification. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2008;30:1632–1646. doi: 10.1109/TPAMI.2007.70822.

- 17. Mademlis Athanasios, Daras Petros, Axenopoulos Apostolos, Tzovaras Dimitrios, Strintzis Michael G. Combining Topological and Geometrical Features for Global and Partial 3-D Shape Retrieval. IEEE Transactions on Multimedia. 2010:819–831.

- 18. Robson Schwartz William, Pedrini Hélio. Color textured image segmentation based on spatial dependence using 3D co-occurrence matrices and Markov random fields. Journal of Science. 2012;53:693–702.

- 19. Sheka BH, Raghurama Holla K, Sharmila Kumari M. Video retrieval: An accurate approach based on Kirsch descriptor. IEEE International Conference on Contemporary Computing and Informatics. 2014:1203–1207.

- 20. Sze Kin-Wai, Lam Kin-Man, Qiu Guoping. A new key frame representation for video segment retrieval. IEEE Transactions on Circuits and Systems for Video Technology. 2005;15:1148–1155.

- 21. Ruiz Conrado R., Jr, Cabredo Rafael, Jones Monteverde Levi, Huang Zhiyong. Combining shape and color for retrieval of 3D models. IEEE 5th International Joint Conference. 2009:1295–1300.

- 22. Ramanan Amirthalingam, Niranjan Mahesan. A Review of Codebook Models in Patch-Based Visual Object Recognition. Journal of Signal Processing System. 2012;68:333–352.

- 23. Kurani Arati S., Xu Dong-Hui, Furst Jacob, Stan Raicu Daniela. Co-occurrence matrices for volumetric data. 7th International Conference on Computer Graphics and Imaging. 2014.

- 24. Calic J, Thomas BT. Spatial analysis in key-frame extraction using video segmentation. Workshop on Image Analysis for Multimedia Interactive Services. 2004.

- 25. Tangelder Johan WH, Veltkamp Remco C. A survey of content based 3D shape retrieval methods. IEEE Proceedings of Shape Modeling Applications. 2004:145–156.

- 26. ElNaghy Hanan, Hamad Safwat, Essam Khalifa M. Taxonomy for 3D content-based object retrieval methods. Journal of Research and Reviews in Applied Sciences. 2013:412–446.

- 27. Lin Ting-Chu, Yang Min-Chun, Tsai Chia-Yin, Frank Wang Yu-Chiang. Query-adaptive multiple instance learning for video instance retrieval. IEEE Transactions on Image Processing. 2015;24:1330–1340. doi: 10.1109/TIP.2015.2403236.

- 28. Yan Chunlai. Accurate Image Retrieval Algorithm Based on Color and Texture Feature. Journal of Multimedia. 2013;8:277–283.

- 29. Baharul Islam Md., Kundu Krishanu, Ahmed Arif. Texture feature based image retrieval algorithms. International Journal of Engineering and Technical Research (IJETR). 2014;2:71–75.

- 30. Huang Peng, Hilton Adrian, Starck Jonathan. Automatic 3D video summarization: key frame extraction from self-similarity. Fourth International Symposium on 3D Data Processing, Visualization and Transmission. 2008.

- 31. Zenko Bernard, Dzeroski Saso, Struyf Jan. Learning predictive clustering rules. Knowledge Discovery in Inductive Databases. 2006;3933:234–250.