Summary

A wide range of human factors approaches have been developed and adapted to healthcare for detecting and mitigating negative unintended consequences of health technology (i.e. technology-induced errors). However, greater knowledge and wider dissemination of human factors methods are needed to ensure more usable and safer health information technology (IT) systems.

Objective

This paper reports on work done by the IMIA Human Factors Working Group and discusses successful approaches that have applied human factors to mitigate negative unintended consequences of health IT. The paper also addresses challenges in bringing human factors approaches into mainstream health IT development.

Results

A framework for bringing human factors into the improvement of health IT is described. It involves a multi-layered, systematic approach to detecting technology-induced errors at all stages of an IT system development life cycle (SDLC). Such an approach has been shown to be needed and can reduce the risk of negative unintended consequences when health IT systems are released into live use.

Conclusion

Negative unintended consequences of the introduction of IT into healthcare (i.e. potential for technology-induced errors) continue to be reported. It is concluded that methods and approaches from the human factors and usability engineering literatures need to be more widely applied, both in the vendor community and in local and regional hospital and healthcare settings. This will require greater efforts at dissemination and knowledge translation, as well as greater interaction between the academic and vendor communities.

Keywords: Human factors, usability, electronic health records, safety, error, human-computer interaction, technology-induced error

1 Introduction

The usability of health information systems has been recognized as a major issue in the deployment and adoption of health information technologies (IT) internationally. Although several decades of work have been spent developing and applying methods for improving the usability of health IT, there are still frequent and numerous reports of health IT systems that are unusable. Such systems have inadvertently disrupted healthcare workflow and have been associated with serious negative unintended consequences. In some cases, systems have been reported to be so unusable that they could be considered safety hazards [1]. In this paper we discuss the unintended negative consequences of health IT [2] that have come to be known as technology-induced errors [3]. The paper then explores the link documented in the literature between poor usability and technology-induced errors. We then discuss how human factors methods and approaches can be used to improve the situation, including methods that proactively identify unintended human factors issues prior to widespread system release. Thoughts on the current state of health IT usability are presented, along with the working group’s position on what can and needs to be done to achieve more usable and safer health IT systems that are free from negative unintended consequences. Applying human factors approaches has clearly allowed some previous negative unintended consequences of health IT to be detected and rectified. However, new ones are appearing, and greater knowledge about and application of human factors methods are still needed.

1.1 What Are Technology-induced Errors and How Can They Be Dealt with?

Over the past decade, a variety of papers have documented the existence of a category of negative unintended consequences now known as technology-induced errors (a subclass of unintended consequences). Technology-induced errors arise from the complex interplay between health IT and the end users interacting with that technology in real-world settings such as clinics and hospitals [4]. They may be difficult to prevent because they can arise at any stage of the system development life cycle (SDLC), remain latent, and be detected only after the system has been released. Examples include clinicians failing to attend to computer-based alerts or drug allergy information because of complex screen interactions, or having difficulty navigating through a user interface to find information during an emergency. Such systems may have passed traditional software testing, yet technology-induced errors may appear only once the system is in use in real healthcare settings. Other technology-induced errors result from the inability of systems to adapt to complex workflows or emergency situations (e.g. when an emergency override is needed but that function was not anticipated before the system was released). Since the first papers on this topic appeared, an increasing number of technology-induced errors have been reported [5, 6]. One approach to dealing with them has been to develop error-reporting systems that allow end users to indicate when they believe a technology-induced error has occurred after a system has been deployed [7]. A complementary stream of research, borrowing heavily from human factors, involves setting up usability tests and clinical simulations to detect technology-induced errors before health information systems are released [8]. This more proactive approach, which is the focus of this article, attempts to identify such errors earlier in the SDLC, before the system is released for routine use rather than after.

1.2 What is the Link between Poor Usability and Technology-induced Errors?

Starting in 2004, work began to demonstrate a statistical relationship between the presence of serious usability problems and the occurrence of specific types of technology-induced errors. In one study, clinicians using a handheld prescription-writing application were video recorded while entering medication data [6]. Usability problems were recorded and statistically related to occurrences of technology-induced errors (e.g. entry of a wrong dose for a medication), and every detected technology-induced error was found to be associated with one or more usability problems. The usability problems included difficulties navigating through the user interface, entering medical data, and finding relevant sections of an electronic medical record, among other issues. The medical errors included entry of a wrong medication or an incorrect dosage, and missed medication alerts or reminders. A statistical link between poor usability and technology-induced errors has thus been reported, with serious usability problems linked to unintended medication errors. This has led to recognition of the importance of identifying and preventing this kind of error by applying human factors approaches, such as usability testing and inspection, prior to widespread system release.
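To make the idea of a statistical link concrete, the following Python sketch cross-tabulates observed data-entry events by whether a usability problem and a technology-induced error occurred, and computes a one-sided Fisher's exact test on the resulting 2x2 table. This is an illustrative assumption, not the analysis reported in [6]: the event data below are invented, and Fisher's exact test is simply one standard choice for small 2x2 tables.

```python
from math import comb


def contingency(events):
    """Cross-tabulate (usability_problem, error) flags into a 2x2 table.

    Returns (a, b, c, d):
      a = problem and error, b = problem only,
      c = error only,        d = neither.
    """
    a = b = c = d = 0
    for problem, error in events:
        if problem and error:
            a += 1
        elif problem:
            b += 1
        elif error:
            c += 1
        else:
            d += 1
    return a, b, c, d


def fisher_one_sided(a, b, c, d):
    """One-sided Fisher's exact test: P(X >= a) under the hypergeometric
    null that errors are independent of usability problems (fixed margins)."""
    with_problem = a + b
    with_error = a + c
    n = a + b + c + d
    total = comb(n, with_error)
    p = 0.0
    for x in range(a, min(with_problem, with_error) + 1):
        # math.comb returns 0 when k > n, so impossible tables contribute nothing
        p += comb(with_problem, x) * comb(n - with_problem, with_error - x) / total
    return p


# Hypothetical observations: (usability problem observed?, error observed?)
events = [(True, True)] * 8 + [(True, False)] * 12 + [(False, False)] * 40

a, b, c, d = contingency(events)
share_of_errors_with_problem = a / (a + c)  # 1.0 here: every error co-occurred with a problem
p_value = fisher_one_sided(a, b, c, d)
print((a, b, c, d), share_of_errors_with_problem, p_value)
```

With real data, the unit of analysis (e.g. per task or per session) and repeated observations from the same clinician would need more careful handling than this sketch gives them.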

2 Usability Engineering Approaches for Identifying and Preventing Technology-induced Errors in Healthcare

Although current work on providing better mechanisms for reporting serious usability problems and related technology-induced errors is important, there is a need to apply human factors approaches earlier in the SDLC. Doing so can prevent such errors from occurring in the first place and help ensure that the systems we release are as safe from error as possible before going live.

2.1 Usability Testing and Assessment Methods

Usability problems with health IT systems have been reported in the literature for several decades. They include a wide range of issues such as problems navigating through complex screen sequences, inconsistent user interfaces, lack of appropriate feedback for user actions, failure of systems to provide meaningful guidance to users, lack of visibility of critical information, poor training, and poor integration into the clinician workflow [8, 9]. Usability inspection methods have been employed to identify such problems during system design [10]. The inspection method known as heuristic evaluation involves one or more analysts systematically stepping through a user interface and noting usability issues [10]. A cognitive walkthrough involves an analyst working through a user interface to carry out tasks, noting the steps taken by users, the system responses, and potential user problems [10]. Usability testing methods, which involve observing (and video recording) representative end users (e.g. physicians or nurses) as they interact with a system to carry out representative tasks (e.g. entry of patient data), have also been widely used [10]. The health informatics literature contains hundreds of studies that use these methods and reveal a wide range of usability problems associated with health IT, across applications including electronic health records, decision support systems, mobile applications, and interoperable health IT networks. Since the early 1990s, a wide range of studies have documented these approaches to addressing usability issues, including variations of usability testing in which representative users are observed while carrying out representative tasks with the technology under study.
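Because heuristic evaluation is often carried out by several analysts whose findings must later be merged and prioritized, the following Python sketch shows one way to consolidate them. It assumes each analyst rates each problem on a 0 to 4 severity scale of the kind Nielsen describes (0 = not a usability problem, 4 = usability catastrophe); the problem identifiers, the averaging rule, and the tie-breaking order are hypothetical choices, not a prescribed procedure.

```python
from collections import defaultdict
from statistics import mean


def consolidate(findings):
    """Merge heuristic-evaluation findings from several analysts.

    findings: iterable of (analyst, problem_id, severity) with severity in 0..4.
    Returns a list of (problem_id, mean_severity, n_analysts), most severe first;
    ties are broken by how many analysts reported the problem, then by id.
    """
    ratings = defaultdict(dict)
    for analyst, problem_id, severity in findings:
        if not 0 <= severity <= 4:
            raise ValueError(f"severity out of range: {severity}")
        # Keep one rating per analyst per problem (a later rating replaces an earlier one).
        ratings[problem_id][analyst] = severity
    ranked = [(pid, mean(r.values()), len(r)) for pid, r in ratings.items()]
    ranked.sort(key=lambda item: (-item[1], -item[2], item[0]))
    return ranked


# Hypothetical findings from three analysts.
findings = [
    ("analyst A", "allergy-info-hidden", 4),
    ("analyst B", "allergy-info-hidden", 3),
    ("analyst A", "inconsistent-labels", 2),
    ("analyst B", "inconsistent-labels", 2),
    ("analyst C", "inconsistent-labels", 3),
    ("analyst C", "no-feedback-on-save", 3),
]
for problem_id, severity, n_analysts in consolidate(findings):
    print(f"{problem_id}: mean severity {severity:.2f}, reported by {n_analysts}")
```

Averaging is only one option; a team might instead rank by the maximum rating so that a single analyst's "catastrophe" rating is never diluted.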

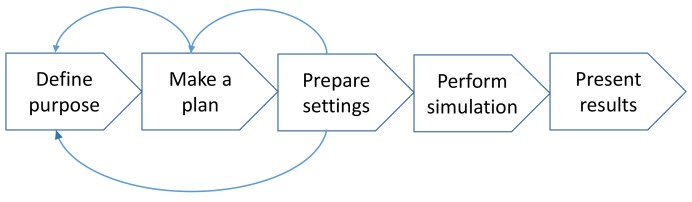

More recently, clinical simulations have emerged, in which representative users are observed carrying out representative tasks in real or realistic (representative) environments such as hospitals, clinics, and even homes [8, 11]. There is both a safety and an ethical imperative to ensure that systems are properly tested under real or realistic conditions prior to widespread release, and clinical simulations offer an important tool for evaluating systems for unintended negative consequences under such conditions. Conducting a clinical simulation involves five iterative phases (see Figure 1).

Fig. 1.

The iterative phases involved in conducting clinical simulations

Firstly, the purpose of the simulation has to be defined. As in any other study, the aim of the activity must be made explicit and agreed upon through a rational discussion of opportunities and limitations. In the planning phase, the scope is determined; this establishes the content of the scenarios, the number of simulations that need to be run, and the profiles of the participating clinicians and patient actors. Preparing the settings includes writing the scenarios and designing the clinical and technical set-up. Because preparing complex scenarios and detailed patient cases is resource-demanding, the purpose and plan of the simulation, and in particular the need for complexity, must be carefully considered. However, the resources spent in this phase improve efficiency and reduce the time that clinicians spend performing the simulation. The participating clinicians should be familiar with routine daily work tasks; staff such as quality managers are not appropriate participants but can serve as observers. The simulation can be observed from an observation room through a one-way mirror or by video recording. Observations can yield data on patient safety issues, organizational or technical challenges, and the need for special user training. Additional data can be obtained using questionnaires and/or interviews. The results of the simulation are usually presented in a report that also includes recommendations. Although high-fidelity clinical simulations are capable of mirroring very complex work situations, some aspects remain difficult to capture: it is difficult to realistically stress and interrupt participants during a simulation, and the time it usually takes to become acquainted with a new system is not reflected in clinical simulations [11].
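The planning phase fixes the scenarios, the number of simulation runs, and the participant profiles. As a rough illustration, the Python sketch below enumerates a run schedule and estimates the clinician time it demands. It assumes, purely for illustration, that every scenario is run with every clinician profile a fixed number of times and that one clinician takes part in each run; the scenario names, durations, and profiles are hypothetical.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Scenario:
    name: str
    minutes: int  # assumed duration of a single run of this scenario


def plan_runs(scenarios, profiles, repeats=1):
    """Enumerate runs as (scenario name, clinician profile, repetition number)."""
    return [
        (scenario.name, profile, rep)
        for scenario, profile in product(scenarios, profiles)
        for rep in range(1, repeats + 1)
    ]


def clinician_minutes(scenarios, profiles, repeats=1):
    """Total clinician time, assuming one clinician takes part in each run."""
    return sum(s.minutes for s in scenarios) * len(profiles) * repeats


scenarios = [Scenario("admission of acute patient", 30), Scenario("medication round", 20)]
profiles = ["physician", "nurse"]

runs = plan_runs(scenarios, profiles, repeats=2)
print(len(runs), "runs,", clinician_minutes(scenarios, profiles, repeats=2), "clinician minutes")
```

In a model like this, better-prepared scenarios show up as shorter per-run durations, and hence as less clinician time overall.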

2.2 Towards a Layered “Safety Net” Approach to Ensuring Usability and Safety

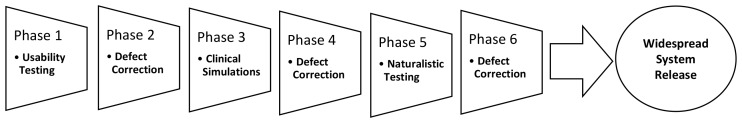

Recent work by IMIA human factors and related working groups [8, 9] has argued for the need to employ a phased sequence of tests and mixed methods (emerging from the human factors research described in the previous section) prior to deploying health IT systems, in order to identify and mitigate the risk of technology-induced errors. These approaches come mainly from usability engineering; they are user-centered design and evaluation methods meant to complement rather than replace traditional software testing approaches (such as black-box and white-box testing). It is argued that, prior to release, health IT should first undergo thorough usability testing followed by remediation of the identified defects. Based on the test results, the identified usability problems can be prioritized and fixed (see Figure 2). Systems should then undergo clinical simulation testing (as described above), in which users are observed using the system in realistic or “near-live” contexts. The results of such testing can again be fed back to guide problem rectification. Finally, prior to widespread release, it is recommended that system use be observed (including video analysis) during limited naturalistic testing, to detect any errors that could not be detected under less than real conditions. The layers of testing thus form a “safety net” (as displayed in Figure 2) for catching a wide array of usability problems and technology-induced errors.

Fig. 2.

Recommended sequence of testing phases to identify and mitigate technology-induced errors (adapted from [9])
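The intuition behind the layered "safety net" can be expressed with simple arithmetic. If a given latent error is caught by layer i with probability d_i, and the layers are assumed to detect it independently, the chance that it escapes every layer is the product of (1 - d_i). The Python sketch below computes this; the detection rates are invented for illustration, and the independence assumption is strong (an error that one method misses may also be hard for the others to catch), so real residual risk is likely to be higher than the product suggests.

```python
from math import prod

# Layers in the order described for Figure 2.
LAYERS = ("usability testing", "clinical simulation", "naturalistic testing")


def residual_probability(detection_rates):
    """Probability that a latent error escapes every testing layer.

    Assumes each layer detects the error independently with probability d
    (a simplifying assumption, not an empirical property of the methods).
    """
    for d in detection_rates:
        if not 0.0 <= d <= 1.0:
            raise ValueError(f"detection rate must lie in [0, 1], got {d}")
    return prod(1.0 - d for d in detection_rates)


# Invented detection rates for one class of error, one per layer.
rates = (0.5, 0.5, 0.5)
for k in range(1, len(LAYERS) + 1):
    print(f"after {LAYERS[k - 1]}: {residual_probability(rates[:k]):.3f}")
```

Each added layer shrinks the residual probability (here 0.500, 0.250, 0.125), but as long as every detection rate is below 1 the residual never reaches zero.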

Although carrying out such a sequence of testing cannot guarantee that no problems will be identified after widespread system deployment (i.e. that a system will be free from unintended negative consequences), applying such a sequential and rigorous array of methods would likely go a long way toward preventing many negative unintended consequences (i.e. technology-induced errors). Evidence for this argument has emerged from studies in which such a phased, sequential approach to testing has been successfully employed. For example, Li and colleagues [12] applied a multi-phase approach to optimize the integration of clinical guidelines into an electronic health record. They began with simple in-situ usability testing (i.e. conducted in real clinical settings), which detected a number of serious (but easy to fix) issues related to users not understanding user interface labels. The testing then moved to the clinical simulation step, in which users interacted with a “digital patient” (i.e. a multimedia video patient) to determine whether issues arose when users interacted with the system in a less prescribed and less artificial way. Based on the results of this level of testing, the invocation of guidelines and their integration into the electronic health record were optimized. Finally, near-live and live testing of the system led to a final optimization, and the resulting system achieved high user uptake and adoption. Li and colleagues concluded that applying a systematic, layered approach to testing health IT would give a greater chance of identifying and rectifying negative unexpected consequences before new systems are released widely into healthcare environments.

3 What Are Current Issues and Future Directions?

Despite the documentation of human factors methods and the existence of approaches from usability engineering such as those described above, many usability issues with health information systems continue to be reported [7, 8, 9, 13]. Part of this paradox may stem from a limited understanding of the in-depth, layered approach to optimizing systems that is needed. Indeed, many organizations and vendors have defended their systems and products by arguing that they were developed using rigorous human factors approaches. However, this position paper argues that properly applying human factors approaches can be complex and may require not just one approach (e.g. usability testing at a centralized conformance testing site or a beta-test site hospital) but rather an array of testing approaches (e.g. usability testing and clinical simulation) and multi-phased approaches, conducted with a wider range of user groups and under a wider range of environmental conditions than is currently the case. In addition, wider dissemination of usability testing methods, clinical simulation approaches, and error reporting results is needed, together with greater knowledge and uptake of emerging national and international standards.

3.1 Need for Knowledge Translation

Approaches are needed to “demystify” the cost, complexity, and resources involved in applying human factors approaches, so that user testing and evaluation are applied more routinely, both by vendors and developers of systems and by local and regional organizations (e.g. hospitals, health authorities, and their respective IT departments) that customize, implement, and deploy health IT. In addition, evidence-based guidelines need to be developed and disseminated to help ensure that unexpected negative consequences do not appear. For example, the UK National Patient Safety Agency’s guidelines on how to display medication information in computer systems are based on many years of experience, and they should lead to more usable and safer systems if followed by designers and customizers of systems [14]. Another way to put pressure on vendors to develop more usable and safer systems is to require the application of human factors approaches in the selection and procurement phases, when healthcare organizations attempt to select systems with a good system-organization “fit”. For example, Kushniruk and colleagues describe an approach to gathering stronger evidence of system-organization fit that involves testing candidate systems under the real or realistic conditions of clinical simulations [15]. Whereas usability testing observes representative users doing representative tasks with a system, clinical simulation observes representative users doing representative tasks in representative (real or highly realistic) contexts of use.

3.2 Application in System Procurement

An example of a specific use of clinical simulation to assess multifunctional systems in complex work situations occurred during a large procurement of a new EHR platform, which took place from 2012 to 2013 in two major regions of Denmark [16]. The platform was intended to support core clinical and administrative processes with increased effectiveness and quality of care, including patient safety. The two regions had a strategic requirement for user involvement, and usability and human factors issues had to be explicitly assessed prior to any procurement. This was the largest EHR procurement in Denmark, as the platform was expected to cover 14 hospitals and almost half of the Danish population (5.6 million inhabitants in total). Clinical simulation was chosen as the methodological approach because it could cover the interests of various end users, medical specialties, and work cultures while also meeting transparency demands under European Union rules. The specific method developed for the simulation had to address a number of challenges: 1) results must be comparable; 2) the assessment of the different vendor products must be homogeneous; 3) the process has to be transparent; 4) time to perform and report on the assessment was limited; and 5) assessment data must be easily collectable and instantly available for analysis. Assessing the vendor products using clinical simulation proved valuable in achieving comparable results on the usefulness and ease of use of the systems, and it proved a practical way of giving voice to the future users who will use the chosen system in their daily work.
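Two of the method requirements listed above, comparable results and a homogeneous assessment across vendors, can be checked mechanically when assessment data are captured in a structured form. The Python sketch below is a hypothetical illustration, not the method of [16]: it assumes each rating is recorded as (vendor, scenario, dimension, score), refuses to compare vendors that were not assessed on the same set of scenarios, and reports the mean score per vendor and dimension.

```python
from collections import defaultdict
from statistics import mean


def compare_vendors(ratings):
    """ratings: iterable of (vendor, scenario, dimension, score).

    Raises ValueError unless every vendor was assessed on the same scenarios;
    otherwise returns {(vendor, dimension): mean score}.
    """
    scenarios_by_vendor = defaultdict(set)
    scores = defaultdict(list)
    for vendor, scenario, dimension, score in ratings:
        scenarios_by_vendor[vendor].add(scenario)
        scores[(vendor, dimension)].append(score)
    scenario_sets = {frozenset(s) for s in scenarios_by_vendor.values()}
    if len(scenario_sets) > 1:
        raise ValueError("vendors were not assessed on the same scenarios")
    return {key: mean(values) for key, values in scores.items()}


# Hypothetical ratings on an assumed 1-5 scale.
ratings = [
    ("Vendor A", "admission", "usefulness", 4),
    ("Vendor A", "admission", "ease of use", 3),
    ("Vendor A", "discharge", "usefulness", 5),
    ("Vendor A", "discharge", "ease of use", 3),
    ("Vendor B", "admission", "usefulness", 3),
    ("Vendor B", "admission", "ease of use", 4),
    ("Vendor B", "discharge", "usefulness", 3),
    ("Vendor B", "discharge", "ease of use", 5),
]
for (vendor, dimension), score in sorted(compare_vendors(ratings).items()):
    print(f"{vendor} / {dimension}: {score}")
```

This only checks that the scenario sets match; a fuller check would also confirm that every dimension was rated in every scenario for every vendor.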

3.3 From Small to Large-scale Usability Studies

Data on usability issues from small-scale qualitative usability studies will need to be complemented with data from large deployments as we move beyond the laboratory to include the collection and reporting of data from in-situ testing and large-scale online user experience studies. In addition, usability data will need to be studied along the entire continuum from the individual user to the various organizational levels [17]. Along these lines, data mining and data analytic approaches for identifying patterns (e.g. patterns of technology-induced errors) in large-scale naturalistic usability data will be essential [18, 19]. There is also a move toward public reporting of usability issues in commercial healthcare IT products, a trend that will need to be expanded (with the hope of greater regional, national, and international reporting and regulation in this area [20]). Methods for remotely recording the use of mobile healthcare applications, integrating data from large numbers of users, will be needed to provide data for improving user interactions with mobile and ubiquitous health applications [21].
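As a simple illustration of pattern-finding in large-scale usability data, the Python sketch below counts how often each (usability problem category, error type) pair co-occurs across recorded sessions and keeps the pairs whose support (share of sessions) reaches a threshold. Support counting of this kind is the first step of association-rule mining; it is offered as an assumption-laden sketch rather than the approach of [18, 19], and the categories, sessions, and threshold are hypothetical.

```python
from collections import Counter


def frequent_pairs(sessions, min_support=0.1):
    """sessions: list of (set of usability problem categories, set of error types).

    Returns {(problem, error): support} for every pair that co-occurs in at
    least `min_support` of the sessions, ordered by descending support.
    """
    if not sessions:
        return {}
    counts = Counter()
    for problems, errors in sessions:
        for problem in problems:
            for error in errors:
                counts[(problem, error)] += 1
    n = len(sessions)
    frequent = {pair: c / n for pair, c in counts.items() if c / n >= min_support}
    return dict(sorted(frequent.items(), key=lambda kv: (-kv[1], kv[0])))


# Hypothetical sessions pooled from several sites.
sessions = [
    ({"navigation"}, {"wrong dose"}),
    ({"navigation", "data entry"}, {"wrong dose"}),
    ({"data entry"}, {"wrong medication"}),
    ({"navigation"}, set()),
    (set(), set()),
]
print(frequent_pairs(sessions, min_support=0.3))
```

Raw co-occurrence counts tend to surface problems that are simply common; at scale, one would usually also compare each pair against how often the problem and the error occur on their own (e.g. via confidence or lift) before treating a pattern as meaningful.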

3.4 Need for Widespread Application both Centrally and Locally

Finally, it will be important for organizations, vendors, and end users to understand how human factors, and especially usability engineering methods, can be applied locally, at low cost, and rapidly within healthcare organizations such as hospitals and clinics (to complement centralized conformance testing processes at regional and national levels). This will require a shift away from thinking that the application of usability engineering and human factors methods is the sole domain of selected researchers and a limited number of highly trained practitioners. Rather, human factors methods and approaches, and the impact of applying them, need to be incorporated into the curricula of health professionals, health informatics specialists, and decision makers responsible for selecting and deploying health information systems. Wider application of human factors methods at all stages of the SDLC (particularly prior to widespread system deployment and release) will be needed in order to identify, mitigate, and head off negative unintended consequences such as technology-induced errors. Proving that such methods are cost-effective and capable of detecting potentially expensive and dangerous negative consequences is a first step, and more demonstrations of how basic, inexpensive human factors methods can be applied more widely in healthcare are needed [22-24]. In addition, developing a knowledge base of evidence-based approaches to applying human factors to help decrease technology-induced errors in healthcare is a new and important direction being taken by members of the IMIA human factors working group [25].

4 Conclusion

Effective human factors methods exist that, if applied routinely during key phases of the SDLC, would reduce the chance of negative unintended consequences of health information technology (in particular technology-induced errors). Research and applied studies have demonstrated that employing a combination of well-known approaches in a systematic, phased manner can lead to the detection of technology-induced errors prior to system release. However, greater dissemination and knowledge of these approaches, and of their potential to reduce negative unintended consequences, are needed. Along these lines, more educational initiatives are needed at multiple levels, from universities and colleges to industrial training and national educational initiatives on human factors in healthcare, such as those provided in the United States by the National Institute of Standards and Technology [26], the Agency for Healthcare Research and Quality [27], and the Office of the National Coordinator for Health Information Technology [20, 28-30]. Aligned with initiatives targeting education and awareness, the development of regulatory and national usability standards will be an ongoing direction of work toward more usable and safer healthcare IT from a human factors perspective [14, 20, 31, 32].

References

- 1. Carayon P. Human factors and ergonomics in health care and patient safety. In: Carayon P, editor. Handbook of human factors and ergonomics in health care and patient safety. Boca Raton, FL: CRC Press; 2012.

- 2. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc 2004;11:104-12.

- 3. Borycki EM, Kushniruk AW, Bellwood P, Brender J. Technology-induced errors: The current use of frameworks and models from the biomedical and life sciences literatures. Methods Inf Med 2012;(2):95-103.

- 4. Borycki EM, Kushniruk AW. Where do technology-induced errors come from? Towards a model for conceptualizing and diagnosing errors caused by technology. In: Kushniruk A, Borycki E, editors. Human, social, and organizational aspects of health information systems. Hershey, PA: IGI Press; 2008.

- 5. Koppel R, Metlay JP, Cohen A, Abaluck B, Localio AR, Kimmel SE, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA 2005;293(10):1197-203.

- 6. Kushniruk AW, Triola MM, Borycki EM, Stein B, Kannry JL. Technology induced error and usability: the relationship between usability problems and prescription errors when using a handheld application. Int J Med Inform 2005;74(7):519-26.

- 7. Magrabi F, Ong MS, Runciman W, Coiera E. Using FDA reports to inform a classification for health information technology safety problems. J Am Med Inform Assoc 2012;19(1):45-53.

- 8. Kushniruk A, Nohr C, Jensen S, Borycki EM. From Usability Testing to Clinical Simulations: Bringing Context into the Design and Evaluation of Usable and Safe Health Information Technologies. Contribution of the IMIA Human Factors Engineering for Healthcare Informatics Working Group. Yearb Med Inform 2012;8(1):78-85.

- 9. Borycki E, Kushniruk A, Nohr C, Takeda H, Kuwata S, Carvalho C, et al. Usability Methods for Ensuring Health Information Technology Safety: Evidence-Based Approaches. Contribution of the IMIA Working Group Health Informatics for Patient Safety. Yearb Med Inform 2012;8(1):20-7.

- 10. Nielsen J. Usability engineering. New York: Academic Press; 1993.

- 11. Jensen S, Kushniruk A, Nøhr C. Clinical simulation: A method for development and evaluation of clinical information systems. J Biomed Inform 2015;54:65-76.

- 12. Li AC, Kannry JL, Kushniruk A, Chrimes D, McGinn TG, Edonyabo D, et al. Integrating usability testing and think-aloud protocol analysis with “near-live” clinical simulations in evaluating clinical decision support. Int J Med Inform 2012;81(11):761-72.

- 13. Beuscart-Zéphir MC, Pelayo S, Anceaux F, Meaux JJ, Degroisse M, Degoulet P. Impact of CPOE on doctor–nurse cooperation for the medication ordering and administration process. Int J Med Inform 2005;74(7):629-41.

- 14. National Patient Safety Agency, National Reporting and Learning Service. Design for patient safety: Guidelines for safe on-screen display of medication information. United Kingdom: National Patient Safety Agency; 2010.

- 15. Kushniruk A, Beuscart-Zéphir MC, Grzes A, Borycki E, Watbled L, Kannry J. Increasing the safety of healthcare information systems through improved procurement: toward a framework for selection of safe healthcare systems. Healthc Q 2009;13:53-8.

- 16. Jensen S, Rasmussen SL, Lyng KM. Use of clinical simulation for assessment in EHR-procurement: design of method. Stud Health Technol Inform 2013;192:576-80.

- 17. Russ AL, Fairbanks RJ, Karsh BT, Militello LG, Saleem JJ, Wears RL. The science of human factors: separating fact from fiction. BMJ Qual Saf 2013;22(11):964-6.

- 18. Albert B, Tullis T, Tedesco D. Beyond the usability lab: Conducting large-scale online user experience studies. New York: Elsevier; 2010.

- 19. Kushniruk A, Kaipio J, Nieminen M, Hyppönen H, Lääveri T, Nohr C, et al. Human Factors in the Large: Experiences from Denmark, Finland and Canada in Moving Towards Regional and National Evaluations of Health Information System Usability: Contribution of the IMIA Human Factors Working Group. Yearb Med Inform 2014;9(1):67.

- 20. Kushniruk AW, Bates DW, Bainbridge M, Househ MS, Borycki EM. National efforts to improve health information system safety in Canada, the United States of America and England. Int J Med Inform 2013;82(5):e149-60.

- 21. Nielsen J, Budiu R. Mobile usability. Berkeley, CA: New Riders; 2013.

- 22. Bias RG, Mayhew DJ, editors. Cost-justifying usability: An update for the Internet age. Elsevier; 2005.

- 23. Baylis TB, Kushniruk AW, Borycki EM. Low-cost rapid usability testing for health information systems: is it worth the effort? Stud Health Technol Inform 2011;180:363-7.

- 24. Karat CM. Cost-benefit analysis of usability engineering techniques. Proceedings of the Human Factors and Ergonomics Society Annual Meeting 1990;34(12):839-43.

- 25. Marcilly R, Beuscart-Zéphir MC, Ammenwerth E, Pelayo S. Seeking evidence to support usability principles for medication-related clinical decision support (CDS) functions. In: MedInfo 2013. p. 427-31.

- 26. National Institute of Standards and Technology. Health IT Usability. Accessed January 9, 2016, from: http://www.nist.gov/healthcare/usability/

- 27. Agency for Healthcare Research and Quality. EHR Usability Toolkit. Accessed January 9, 2016, from: https://healthit.ahrq.gov/sites/default/files/docs/citation/EHR_Usability_Toolkit_Background_Report.pdf

- 28. Office of the National Coordinator. Safer guides will help optimize safety. 2014. https://www.healthit.gov/buzz-blog/electronic-health-and-medical-records/safer-guides-optimize-safety/

- 29. Sittig DF, Ash JS, Singh H. ONC issues guides for SAFER EHRs. J AHIMA 2014;85(4):50-2.

- 30. Middleton B, Bloomrosen M, Dente MA, Hashmat B, Koppel R, Overhage JM, et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc 2013;20(e1):e2-e8.

- 31. Chan J, Shojania KG, Easty AC, Etchells EE. Does user-centred design affect the efficiency, usability and safety of CPOE order sets? J Am Med Inform Assoc 2011;18(3):276-81.

- 32. Australian Commission on Safety and Quality in Health Care. National Guidelines for On-Screen Display of Clinical Medicines Information. Sydney: Commonwealth of Australia; 2016.