Summary

Objectives

The objectives of this paper are to review and discuss the methods that are being used internationally to report on, mitigate, and eliminate technology-induced errors.

Methods

The IMIA Working Group for Health Informatics for Patient Safety worked together to review and synthesize some of the main methods and approaches associated with technology-induced error reporting, reduction, and mitigation. The work involved a review of the evidence-based literature as well as guideline publications specific to health informatics.

Results

The paper presents a rich overview of current approaches, issues, and methods associated with: (1) safe HIT design, (2) safe HIT implementation, (3) reporting on technology-induced errors, (4) technology-induced error analysis, and (5) health information technology (HIT) risk management. The work is based on research from around the world.

Conclusions

Internationally, researchers have been developing methods that can be used to identify, report on, mitigate, and eliminate technology-induced errors. Although there remain issues and challenges associated with the methodologies, they have been shown to improve the quality and safety of HIT. Since the first publications documenting technology-induced errors in healthcare in 2005, researchers have, in a short 10 years, developed ways of identifying and addressing these types of errors. We have also seen organizations begin to use these approaches. Knowledge has been translated into practice within these 10 years, whereas the norm in other research areas is 20 years.

Keywords: Technology-induced error, health information technology, patient safety, risk management, safety

Introduction

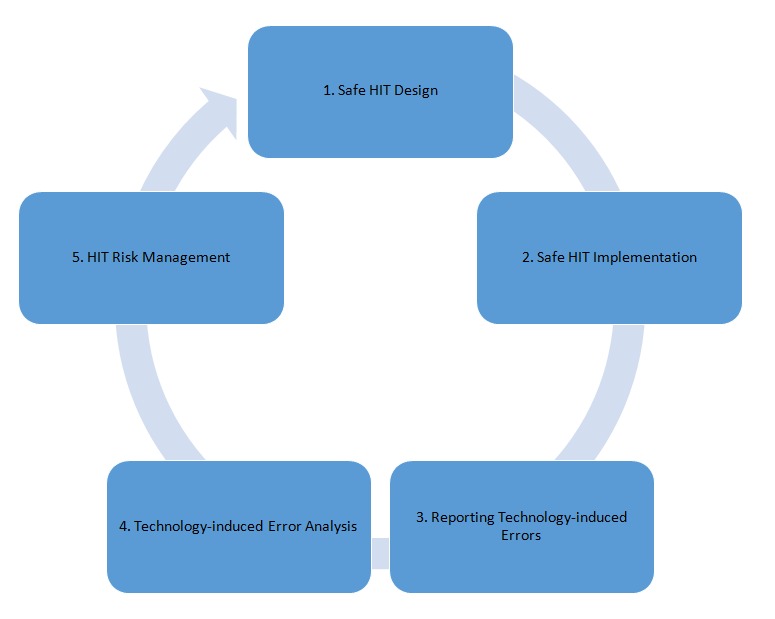

With the modernization of health care and the introduction of health information technology (HIT) into the global market, we have seen a reduction in the number of medical errors. Technologies such as electronic health records (EHRs) and decision support systems (DSSs) have improved health care safety [1]. However, we have also seen the introduction of a new type of error: the technology-induced error. Technology-induced errors are a type of unintended consequence that arises from the introduction of new HIT [2]. Unintended consequences can be positive or negative for citizens, patients and/or health professionals [3, 4]. However, technology-induced errors are a type of unintended consequence that may lead to citizen or patient harm, disability or death [2, 5-8]. Due to their potential to do harm, technology-induced errors must be reduced [2, 5-8]. There is a growing body of research and supporting evidence pointing to a number of methods that can be used to address technology-induced errors. More specifically, in this paper we discuss the methods that are currently being used by researchers from around the world to identify, mitigate, and eliminate technology-induced errors. We discuss: (1) safe HIT design, (2) safe HIT implementation, (3) reporting on technology-induced errors, (4) technology-induced error analysis, and (5) HIT risk management. Our work seeks to review the current state of the research aimed at improving HIT quality and safety. Through a cyclic process (see Figure 1) informed by quality improvement literature, it is argued that we can reduce technology-induced errors over time.

Fig. 1.

Cyclic Process of Improving HIT Safety

It is from this perspective that the Health Informatics for Patient Safety Working Group has reviewed some of the current methods and approaches addressing technology-induced errors in the literature. This work is essential as it lays the foundation for addressing this rapidly growing HIT safety issue.

Background

Historically, technology-induced errors have been defined as errors that “arise from the: a) design and development of a technology, b) implementation and customization of a technology, c) interactions between the operation of a technology and the new work processes that arise from a technology’s use” [5, p. 388], d) its maintenance, and e) the interfacing of two or more technologies [9] used to provide or support health care activities. Technology-induced errors are often not detected until a system has been implemented or is in use and are often not caught by conventional software testing methods (as they are often not technically software bugs, but rather emerge from the features and functions of HIT design that lead to errors once a system is used in a complex real-world setting). There are many examples of technology-induced errors. For example, a user’s inability to locate patient allergy information in the user interface of an electronic health record (due to poor user interface navigation and screen design) can lead to a patient receiving a medication (s)he is allergic to [5]. In addition, some systems may auto-populate fields of an electronic record or other HIT tool with default values that may not be relevant for a given patient, leading to errors [2]. Other examples of technology-induced errors that have been reported are related to electronic decision support systems, in particular medication alerts, which are prevalent throughout almost every implementation of computerized provider order entry (CPOE). Medication alerts are primarily drug-allergy, drug-drug interaction, or drug dosage based. Medication alerts are designed to help improve prescribing practices and patient care. They offer an important safeguard and a safety check to ensure correctly-written orders. In pediatric dosing, however, they are frequently incorrect.
At one pediatric institution, approximately 8% of medication orders generated an alert; unfortunately, more than 90% of the visible alerts were ignored [10]. Extraneous alerts of this kind can contribute to alert fatigue and to medication alerts being ignored. As a result, the way medication alert rules are implemented may make technology-induced errors more prevalent, as relevant alerts can be ignored alongside erroneous ones. These errors and the potential harm of an inappropriate order reaching the patient should be studied in more detail as they constitute a type of technology-induced error [11].

Technology-induced errors occur in acute, community, public health, and long term care settings [2-9, 12]. They also occur when citizens use HIT such as mobile eHealth applications in conjunction with peripheral devices (e.g. blood pressure cuffs or oximeters attached to a mobile application) and wearable devices that are used by citizens to track health habits as well as support health-related decision making [2, 13]. Technology-induced errors can have their origins in governmental policy decisions, health care organizational processes on which HIT is modelled, vendor software design, development and implementation processes, healthcare organizations that implement HIT, and any individual using the technology [5, 14-21]. They can also grow over time even after a system is implemented, as documented by incident reporting systems [15, 22-29]. We begin our paper by discussing current methods and approaches from the published research investigating safe HIT design.

1 Safe HIT Design

There are several approaches to safe design that have been identified in the health care literature as having the potential to improve the safety of software; for example, user-centered, participative and composable design approaches. Along these lines, we outline the rise of these evidence-based approaches to design. Design guidelines based on the published empirical literature as well as safety heuristics are beginning to influence software design and procurement. We are also seeing that evidence from incident reports and usability tests is being used to improve HIT safety.

1.1 User-Centred Design and Usability Engineering

The source of many technology-induced errors can be related to poor system design and limited testing of HIT during design and development [30]. User-centred design is an approach to the design of information systems characterized as follows: (1) an early and continual focus on end users, (2) the empirical evaluation of systems, and (3) application of iterative design processes. As part of user-centred design, usability testing of systems has become a key method for carrying out empirical evaluation of designs from the end user’s perspective. Results from iterative and continued usability testing of early system designs, prototypes, and near completed systems can reveal a range of usability problems and areas where systems can be optimized and improved during the design process and before finalization of the system [30]. In addition, work has indicated that usability testing during the design process can help to identify and characterize technology-induced errors well before systems are released on a widespread basis [2]. Therefore, iterative usability testing (involving observation of the interactions of representative users of HIT doing representative tasks), early and throughout the design process, is essential in order to catch potential technology-induced errors when they are easier and less costly to rectify than if they are reported on after the system is released [31]. Usability testing methods can also be applied after a system has been released. In this case, error reports are made by end users. After system release, usability testing can help to identify details of why and when a technology-induced error is occurring and can provide specific feedback to design and implementation teams about where technology-induced errors are coming from [31, 32]. 
Research has shown a strong relationship between the occurrence of serious usability problems and technology-induced errors, and a user-centred design approach (coupled with the use of usability engineering methods) can lead to a reduction of both serious usability problems and concomitantly technology-induced errors that might be associated with them [2].

Usability testing involves the analysis and observation of representative end users (e.g. physicians or nurses) while they carry out representative tasks (e.g. medication administration) using the HIT tool under study. In recent years, with a move towards testing systems in-situ (i.e. in real or realistic settings), the term clinical simulation has appeared [33]. Clinical simulation involves observing representative users doing representative tasks in real or representative environments and contexts of use (e.g. in a hospital setting, clinics etc.). Clinical simulations may be conducted in simulation laboratories, but may also be conducted in real settings (e.g. an operating room after hours) to increase the level of fidelity and realism. Just as results of usability testing can be fed back to improve design of HIT prior to their widespread release, clinical simulations have been found to be useful in identifying serious usability and workflow problems, including technology-induced errors [34].

1.2 Participatory Design

User involvement is essential to ensuring patient safety [35]. Participatory Design (PD) is one way of involving users and other stakeholders (e.g. administrators) as it focuses on making users participate. PD overcomes organizational barriers and establishes ownership of a design solution within an organization [36]. Three issues dominate the discourse about PD: 1) the philosophy and politics behind the design concept, 2) the tools and techniques supplied by the approach, and 3) the ability of the approach to provide a realm for understanding the socio-technical context and business strategic aims where the design solutions are to be applied [37]. A core principle of PD is that users and other stakeholders are actively participating in design activities, where they have the power to influence the design solutions, and that they participate on equal terms [37]. PD is not a predefined method, but an approach that includes a conglomerate of tools and techniques to be applied. These tools and techniques serve as ways to establish a shared realm of understanding based on knowledge of how work is carried out, and how it can be carried out in the future. They may be used as boundary objects. Among these tools are observational studies, questionnaires, diagrams, pictures, photos, interviews, workshops, role-playing and simulated environments, mock-ups and prototyping [36], as well as clinical simulation [39]. PD leads to safer HIT designs. PD’s focus on high levels of user involvement would assure the technology is fully integrated into the environment of its use. To illustrate, researchers were able to develop electronic documentation templates and overview reports that were previously rejected by end-users and hospital managers [39]. Through the use of clinical simulations and a PD approach, stakeholders were involved in the process of re-design and templates were developed that could be used across an entire region [39]. 
This is reflective of the research that has shown that greater user involvement in design (including use of PD) leads to improved quality of systems and fewer errors from more accurate user requirements [38].

1.3 Composable Design

Other approaches to improving the safety of HIT include the use of composable designs. Current EHRs are built on interaction paradigms in which information has a fixed location and must be accessed via menus and multiple screens (‘display fragmentation’) [40], with information often organized by type (e.g. notes, laboratory results and so on, on separate screens) rather than juxtaposed for the convenience of the end-user. Rigid architectural design, in which any change requires programming and thus extra time, money, and vendor consent, also makes rapid modification difficult, for example in response to public health emergencies (e.g. the Ebola outbreak). The longer users and patients remain exposed to a suboptimal design, the greater the danger to them.

A different approach that holds promise for remedying some of these effects involves architectures and user interface (UI) designs that are modular and user-composable (meaning that change does not require re-programming and can be done by non-programmer clinicians or administrators via drag/drop). This allows a rapid response (e.g. in seconds or minutes) to new threats or to the discovery of HIT dangers. The ability to control information selection, arrangement, formatting, and prioritization is important for fitting the system to actual tasks and contexts. Composable approaches allow the user to gather all information s/he considers relevant to the patient case on the same screen, as a normal part of using the system. Because screen transitions in conventional systems impose a cognitive load, this may reduce cognitive load and free users’ mental resources for clinical reasoning. When clinicians are able to make these changes themselves, software can be aligned with their deep domain and contextual knowledge. It can also allow for tests of new interaction design patterns to foster data entry and review while minimizing the risk of wrong entry and/or other identified design flaws [40]. Examples of this type of approach include the work of Jon Patrick [41], Senathirajah [42-44], and in a different high-stakes domain, NASA’s use of a user-composable approach for its critical mission of software control [45].

1.4 Design Guidelines

In more recent years, research evidence in the health and biomedical informatics literature has begun to accumulate. Some of this evidence suggests that the safety of specific systems can be improved. Nowhere is this more evident than in the area of user interface design. In addition to the use of user-centred, participative, and composable design approaches to improve safety, there has emerged a movement towards developing heuristics and design guidelines that are associated with safer user interface design features and functions [46]. The National Health Service has developed standards for HIT user interface design by creating a common user interface design guide. The work was done to improve the consistency of user interfaces, reduce confusion from non-standard displays of medications and numbers, and thereby improve the overall safety of HIT [47]. Other work has focused on the development of guidelines and heuristics based on published empirical research and expert panel review to create safer HIT user interfaces. Carvalho and colleagues [46] developed safety heuristics from a review of the literature on technology-induced errors. The work provides a detailed guide of specific user interface features and functions that improve HIT safety. Heuristics have been used to evaluate the safety of an EHR (as it would be done during a HIT procurement process) to provide feedback to health care organizations and vendors about the technology [46].

2 Safe HIT Implementation

In an extension of the work on safe HIT design, vendors, consulting companies, and/or healthcare organizations that are responsible for implementing HIT also have a role in ensuring that technologies implemented in healthcare settings do not introduce technology-induced errors [5, 7, 14, 18, 56]. Implementers of systems need to ensure: (1) there is a strong fit between the HIT and health care context (including other technologies used in that context), (2) there is sufficient usability and clinical simulation testing of HIT prior to full system implementation, (3) health professionals have been adequately trained, (4) the newly implemented HIT is monitored for technology-induced errors, (5) there are reporting systems available for health professionals to report on near misses and actual technology-induced errors during the implementation, (6) there are technology-induced error investigation protocols, and (7) risk management plans have been implemented.

Usability testing, clinical simulations, and techno-anthropologic approaches can be undertaken after a system has been implemented to fully describe and mitigate instances of near misses or actual technology-induced errors during and shortly after implementation.

Usability testing of a newly implemented system can help to identify the root causes of technology-induced errors. Usability testing can be performed to describe the problem and determine the frequency of its occurrence [2, 32]. Such information would be valuable to system implementers that identify technology-induced errors during implementation. Here, the implementer can modify the HIT user interface at the organizational level, re-test it, and re-deploy the HIT with the safer user interface [2, 31].

Clinical simulations can help implementers identify user workflows arising from the newly implemented technology that lead to a technology-induced error. Such information can be used to modify the software, lead to the use of more task-fitting hardware, and/or might lead to user training in order to enhance awareness regarding activities that might lead to a technology-induced error.

Techno-anthropologic approaches such as rapid clinical assessment and ethnography employed shortly after implementation, where implementers directly observe user interactions with the HIT in context, can be used to improve user interfaces, training, and future implementation of the technology at other organizational sites [57, 58].

Managing technology-induced errors requires us to understand the contextual interface between human users and HIT. Poorly implemented HIT has the potential to lead to large scale errors, particularly in complex situations and contexts [59]. As outlined in the previous paragraph, usability testing, clinical simulations and/or techno-anthropologic approaches can be used to describe near misses involving technology-induced errors as well as technology-induced errors themselves. This can be done at the individual and the team levels [57, 58]. For instance, one complex situation is team-based collaborative care delivery, which is a common objective for health systems worldwide. However, a poor alignment of HIT with the work of health professional teams leads to communication issues and may introduce technology-induced errors [60, 61]. Better management of technology-induced errors in the context of collaborative care delivery requires us to understand the ‘rules of engagement’ for how collaborative care teams work [62,63]. A significant aspect of defining collaborative teamwork is the individual-team interchanges, which are the point of transition from individual to collaborative processes of the team. Individual issues such as usability issues (e.g. inability to find a field for data entry), or poor task-technology fit between the team and the HIT can lead to technology-induced errors at the team level [64-67]. This is best illustrated by Holden et al. [68] who describe how HIT necessitates the reconciliation of processes at the group level, which can force individuals to no longer be able to use certain problem solving processes leading to their use of risky workarounds [68].

3 Reporting Technology-Induced Errors

Internationally, the number of reports involving technology-induced errors is growing. Published research has documented their presence in countries such as the United States of America [6, 7, 58], United Kingdom [56], China [89] and Finland [90]. Early publications suggested that technology-induced errors represented 0.2% of all incident reports. These first studies documented the presence of these types of errors in countries that were early in HIT implementation at that point in time. In fully digitized health care systems and settings, the reported incident rate is much higher. Samaranyake et al. [89] report that 17% of all medication incidents in their health system involve HIT, and Palojoki and colleagues’ work [90] identified HIT to be involved in 8.5% of technology-induced error events in Finland where there is a fully digitized health care setting that collects data from 23 hospitals.

Technology-induced error reports can be submitted by patients, clinicians (e.g. doctors, nurses and other health professionals), health care administrators, health informaticians, and health information technology professionals. In many countries, they are often submitted alongside other types of patient safety reports and reports of adverse events (e.g. reports of patient injury due to a fall from a bed, wrong site surgery, etc.). No one country has adopted a standardized approach to collect data about technology-induced errors; rather, information about technology-induced errors is collected from information systems that typically collect data about non-technology related errors in healthcare [6]. For example, in some countries there appear to be several different types of national patient safety incident reporting systems: systems for sentinel events only (often obligatory by law), systems focusing on specific clinical domains (often voluntary), and comprehensive reporting systems (which include both adverse events and ’near misses’) [16]. As a result, each reporting system, whether it asks for voluntary reports or involves mandatory reporting, produces its own data (although there seem to be some similarities among systems in terms of the types of data collected). The main structure of a reporting system may consist of common data variables such as background information (informant, time, place, and incident type) and specific incident information (narratives for incident description, consequences for the patient and organization, and the reviewer’s comprehension of how this kind of incident could be prevented). Although partly detailed, data collected by various systems have not been fully standardized and unified into categories for defining incidents internationally [17]. 
This lack of standardization and unification of categories leads to discrepancies and causes difficulties in creating a comprehensive representation of the phenomena of technology-induced errors: (1) prevalence variations as categories are used irregularly (mixed categories e.g. medication and communication or communication and technology), (2) incidence variations as categories are too abstract (difficulties to link the incident to a given practice due to the special language used e.g., interoperability or down time), and/or (3) organizational variations as reporting refers more to causes and contributing factors (potential lack of knowledge and skills or resources) [18, 19]. Researchers have suggested there is a need to update categories from time to time especially in connection with legislative changes [16, 20, 31]. This would support managerial work as timely data is crucial in decision-making and risk management. However, there are challenges associated with continual reporting system changes (as such changes may affect the quality of the data collected). Even so, there is a need to continually review and standardize the categories as well as educate health professionals about how to fully report on these types of errors.
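The common data variables described above (background information plus incident-specific information) can be sketched as a simple record type. The following is a minimal, hypothetical illustration in Python and not the schema of any actual reporting system; the class name `IncidentReport`, its field names, the helper `count_by_type`, and the sample data are all assumptions made for this example. It also shows how irregularly used mixed categories fragment prevalence counts.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class IncidentReport:
    # Background information (hypothetical field names)
    informant: str
    time: str
    place: str
    incident_type: str
    # Specific incident information
    description: str
    consequences: str
    prevention_comment: str


def count_by_type(reports):
    """Tally reports per incident_type, normalizing only case and whitespace."""
    return Counter(r.incident_type.strip().lower() for r in reports)


# Invented sample data: three reporters label similar events differently.
SAMPLE_REPORTS = [
    IncidentReport("nurse", "2015-03-01T08:30", "ward A", "Medication",
                   "Wrong default dose shown in order form", "near miss",
                   "review default values"),
    IncidentReport("physician", "2015-03-02T14:10", "ward B",
                   "medication and communication",
                   "Order not visible to the nurse", "dose delayed",
                   "improve order view"),
    IncidentReport("pharmacist", "2015-03-04T09:00", "pharmacy",
                   "communication and technology",
                   "Interface between systems dropped an order", "dose omitted",
                   "monitor interface"),
]

if __name__ == "__main__":
    # Each label is counted separately, so medication-related events are
    # spread over several categories.
    print(count_by_type(SAMPLE_REPORTS))
```

Because the three labels are tallied as three distinct categories, a count of "medication" incidents alone would understate how many reports involve medication, which is the kind of prevalence variation described above.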

3.1 Identifying Technology-Induced Errors in Incident Reports

In an attempt to address the limitations associated with technology-induced error reporting, efforts are underway to use data-driven approaches to reduce or eliminate the presence of recurring events (i.e. technology-induced errors) and to share knowledge and/or solutions regarding ways in which patient safety events may be mitigated or managed [21]. Beyond the hospital level or the health care system level, Patient Safety Organizations (PSOs) in the US listed by the Agency for Healthcare Research and Quality (AHRQ) are helping healthcare providers to advance patient safety through data-driven safety improvement by aggregating event-level data. The Common Formats created by AHRQ are designed to collect and analyze event-level data in a standardized manner, which helps health care providers to uniformly report on events by using both structured and narrative data through the use of generic or event-specific forms [22].

The event-specific formats have been developed for frequently occurring and/or serious events, including technology-induced errors. The structured data in the common formats, if complete and accurate, can be easily de-identified and aggregated within and across provider organizations which allows for analysis of incidents, near misses, or unsafe conditions, as well as for trending and learning the patterns of similar or recurring events at the hospital, regional, or national levels. Narrative information, though it may not be easily aggregated, is indispensable for defining or describing the causes, evidence surrounding, and patient outcomes of the events. Narrative information presented in these reports may also serve as a basis for improving structured data in the common formats and should help providers and PSOs reduce patient risks [23]. Efforts are being made to aggregate and understand reports including both structured data and narrative information.

To enhance the quality of reporting in terms of completeness and accuracy, text-predicting approaches have shown promising results [24]. To identify the similarity of events, K-nearest neighbour (KNN) algorithms and ontological approaches have been applied [25, 26]. Overall, the next generation of patient safety reporting systems should have the ability to gather information from previous experiences and inform subsequent action in a timely manner. Healthcare providers would utilize such an intelligent system to avoid hazardous consequences and prevent their recurrence.
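To make the idea of similarity-based retrieval concrete, the following is a minimal sketch of a nearest-neighbour lookup over report narratives. It is not the method used in the cited studies [25, 26]; it assumes a plain bag-of-words representation and cosine similarity, and the function names (`tokenize`, `cosine`, `nearest_reports`) and sample narratives are hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    cleaned = "".join(c.lower() if c.isalnum() else " " for c in text)
    return cleaned.split()


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * \
        math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def nearest_reports(query, reports, k=2):
    """Return (index, similarity) for the k narratives most similar to query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(r))), i) for i, r in enumerate(reports)]
    scored.sort(key=lambda s: (-s[0], s[1]))  # highest similarity first
    return [(i, round(score, 3)) for score, i in scored[:k]]


# Invented narratives for illustration only.
REPORTS = [
    "Allergy information not visible on medication order screen",
    "Default dose auto-populated in order form was wrong for patient",
    "Patient fell from bed during night shift",
]

if __name__ == "__main__":
    print(nearest_reports("Could not find allergy information on order screen",
                          REPORTS))
```

In this sketch a new report about missing allergy information is matched most closely to the earlier allergy-related narrative. A production system would likely need a richer text representation and clinical vocabulary handling, which is where the ontological approaches mentioned above come in.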

3.2 Reporting on Technology-Induced Errors: Use of National Surveys

Although the use of incident reports and data-driven analyses can provide significant insights into the types of errors that occur and the frequency with which they occur, reports suggest that incident reporting systems may capture only a limited subset of technology-induced error events [27]. To address this issue, surveys of system users may help safety organizations to gather information about other types of technology-induced errors (not reported in incident reports). For example, Finnish researchers were able to collect data about technology-induced errors as part of a national physician usability survey [28, 29]. Survey findings revealed that HIT systems, particularly EHRs, do not support physicians’ daily work and may cause technology-induced errors. The survey findings identified that only 56% of nearly 3,700 physician respondents agreed with the statement “Routine tasks can be performed in a straight forward manner without the need for extra steps using the system” [28]. This result indicates that in order to support meaningful use, EHR systems need to be better integrated into physician work procedures; forcing physicians to adapt to new processes or to perform additional tasks is not a good solution. In addition, findings revealed that almost half (48%) of the survey respondents had experienced a faulty system function (i.e. technology-induced error) that had caused or had nearly caused a serious adverse event for the patient [28]. The cross-sectional survey on physicians’ experiences of EHR system use and usability also shows that, despite the development efforts dedicated to improving EHRs over the last few years, the situation in Finland did not improve between the 2010 and 2014 surveys [28, 29].

In summary, incident reporting and the classification of incidents remain an important area of study for better understanding technology-induced errors. Data-driven approaches hold promise for identifying technology-induced errors as well as for learning about the methods that can be used to mitigate them. Surveys of HIT users may provide insights into other types of technology-induced errors not reported in incident reporting systems. Surveys may also provide additional information about the extent of the problem and whether policy changes can lead to improvements in the quality of HIT.

3.3 Incident Reports and Usability Testing to Inform Design

Marcilly and colleagues [50] advocate the use of a two-stage evidence-based approach to improve the safety of HIT. In the first stage of the process, the researchers reviewed incident reports for safety issues associated with HIT usability. Then, they used usability testing to identify potential sources of technology-induced error. Research focused on the analysis of incident reports regarding health technologies has shown that approximately 8% of the problems reported are related to defects in usability design; moreover, those usability issues were found to be four times more likely to lead to patient harm than technical issues [6].

Usability-related technology-induced errors can be avoided by integrating an evidence-based usability practice within the design/evaluation process in order to help design teams make informed decisions. Evidence-based usability practice involves “the conscientious, explicit and judicious use of current best evidence in making decisions in design of interactive systems in health by applying usability engineering and usability design principles that have proven their value in practice” [48]. Promoting evidence-based usability first requires gathering empirical data that prove or refute the value in practice of usability engineering and usability design principles. To do so, scientific and grey literature along with incident report databases must be systematically searched in order to identify instances of usability-related technology-induced errors. Then, for a given type of technology, the cases found must be analyzed and compared in a standardized manner and findings must be synthesized for designers to learn from these reports. Published research has demonstrated that there is empirical support for usability design principles dedicated to HIT [48, 49] in the areas of medication-related alerting systems, for the electronic medical record (EMR) [51], or for CPOE [52]. Although there is a need for more research in this area [53, 54], the importance of usability to the design, development, and implementation of safer HIT has been demonstrated [2, 55]. In summary, there is a growing body of literature that is demonstrating the value of incident reports in supporting the safe design of HIT.

4 Technology-Induced Error Analysis: Models and Methods

Analyzing technology-induced errors is essential to learning from them. When an error occurs, there exist a number of models and methods that can be used to investigate and learn from it. These models and methods are drawn from a number of literatures, including the software engineering, organizational, sociotechnical, and human factors literatures [69]. For example, Leveson’s System-Theoretic Accident Model and Processes (STAMP), which models a system as a hierarchy of control processes [70], has its origins in the software engineering literature. Each control process consists of a basic structure including a controller, the controlled process, and a set of actuators and sensors. Safety concerns are modelled as constraints associated with the controller. While this basic structure appears simplistic, it can be used to represent complex dynamic systems by (de)composing any of its components with additional control processes.

For example, a CPOE system can be modelled as a double (indirect) control loop. The first control loop consists of a human prescriber (controller) using an order entry form (actuator) to control a patient’s care directive stored in an EMR (controlled process), while observing the patient’s health status, e.g., based on lab test results (sensor). The second control loop consists of the EMR (controller) displaying an order view to a nurse (actuator), who administers the prescribed order to the patient (controlled health process), while the patient’s health status is assessed, e.g., using laboratory tests, by another clinician who submits the results to the EMR (sensor). Additional control loops can be added to account for other aspects relevant to the safety of the CPOE system, e.g., the presence of a clinical decision support system or a hospital pharmacy system that checks for potentially adverse prescriptions.

Once a system has been modelled this way, STAMP provides methods to analyze it with respect to possible safety problems. These methods are based on the use of guide words, similar to techniques used in HAZOP [74]. STAMP also includes methods for investigating the causes of specific accidents (i.e., technology-induced errors) and near misses, which generally point to a set of missing or ineffective constraints on one or more of the controllers. A detailed application case study of STAMP in the context of CPOE systems has been presented by Weber-Jahnke and Mason-Blakley [75]. The method has also been used in the safety design of other medical devices [71, 73].
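The double control loop described above can be sketched as a small data structure. This is only an illustration under stated assumptions, not the STAMP method itself: the class `ControlLoop`, the helper functions, the guide-word list, and the example safety constraint are all hypothetical names and values introduced here, while the controllers, actuators, controlled processes, and sensors are taken from the CPOE example in the text.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ControlLoop:
    """One control process: controller, actuator, controlled process, sensor."""
    name: str
    controller: str
    actuator: str
    controlled_process: str
    sensor: str
    # Safety concerns are modelled as constraints attached to the controller.
    constraints: List[str] = field(default_factory=list)


# Illustrative guide words (an assumption; the paper does not list them).
GUIDE_WORDS = (
    "not provided",
    "provided incorrectly",
    "provided too early or too late",
    "stopped too soon or applied too long",
)


def hazard_prompts(loop: ControlLoop) -> List[str]:
    """Generate one analysis question per guide word for a loop's control action."""
    return [
        f"{loop.name}: what if the action of '{loop.controller}' "
        f"via '{loop.actuator}' is {word}?"
        for word in GUIDE_WORDS
    ]


def loops_without_constraints(loops: List[ControlLoop]) -> List[str]:
    """Flag loops whose controller has no safety constraint recorded."""
    return [loop.name for loop in loops if not loop.constraints]


# First loop of the CPOE example: the prescriber controls the care directive.
prescribing = ControlLoop(
    name="prescribing",
    controller="human prescriber",
    actuator="order entry form",
    controlled_process="care directive stored in the EMR",
    sensor="lab test results",
    constraints=["dose must lie within the permitted range"],  # hypothetical
)

# Second loop: the EMR drives administration of the order by a nurse.
administration = ControlLoop(
    name="administration",
    controller="EMR",
    actuator="order view displayed to a nurse",
    controlled_process="patient's health process",
    sensor="lab results submitted to the EMR by another clinician",
)

if __name__ == "__main__":
    for prompt in hazard_prompts(prescribing):
        print(prompt)
    print("Loops lacking constraints:",
          loops_without_constraints([prescribing, administration]))
```

Further loops (for instance a clinical decision support system or a pharmacy check) could be added as additional `ControlLoop` instances; a loop with an empty `constraints` list is flagged as a candidate for missing constraints.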

Other models draw on the organizational and socio-technical literature. To illustrate, Harrison et al. have proposed a similar approach to modelling systems as dynamic control loops in their Interactive Sociotechnical Analysis (ISTA) method, placing an even greater emphasis on the socio-technical aspect of HIT systems [72]. In particular, they proposed that systems (including their technological and non-technological components) must be considered as dynamically evolving processes with mutual control influence and feedback [74,75]. Other socio-technical models and methods that draw on this literature include Bloomrosen et al.’s Input-Output Model of Unintended Consequences [76], Kushniruk et al.’s Framework for Selecting Health Information Systems to Prevent Error [77], Sittig and Singh’s Eight Dimensional Model of Socio-Technical Challenges [78], and Ash et al.’s Thematic Hierarchical Network Model of Consequences of CPOE [79].

Lastly, human factors models and methods have contributed to our understanding of technology-induced error. Root cause analysis (RCA) is one such approach. When conducting an RCA to learn how a technology-induced error occurred, the entire system needs to be considered [80]. The technology-induced error involving the individual and the HIT, as well as any hidden system-level problems that could have contributed to the event, are analyzed to understand how the error arose as the outcome of a sequence of events [22]. The analysis should always include an RCA followed by a presentation in the form of a cause-effect diagram [80]. The London Protocol recommends performing an RCA to reconstruct and chronologically map Care Delivery Problems (CDP) and contributory factors (CF) associated with the technology-induced error [81,82]. If medical devices or clinical information systems are involved in an incident, the linear representation of the RCA is not sufficient. In this case, it helps to explore the causes and contributing factors by repeatedly asking the question “Why (could this happen)?”. Repeating the “Why?” question for every cause and CF often uncovers branched error chains. Most of these chains have 5-6 elements (causes or CFs) between the incident and the real causes (a modified 5-whys technique). To structure and complete the causes and CFs, they should be presented in a cause-and-effect diagram (i.e., a fishbone diagram, Ishikawa diagram, or Fishikawa diagram). For this purpose, the causes and factors favoring a technology-induced error are grouped into major categories. The major categories recommended by the London Protocol are Patient, Task, Individual, Team, Environment, and Organisation and management. The categories can be modified depending on the nature of the fault, the technology involved, and the employees involved.

It is important that the categories be comprehensible to everyone involved in the error analysis. Understanding the causes and CFs based on this analysis allows risks to be re-evaluated and risk control measures to be developed and implemented. Other models and methods from the human factors literature that have been used to reason about technology-induced errors include the Continuum of Methods for Diagnosing Technology-induced Errors [83], the Framework for Considering the Origins of Technology-induced Errors [14], Zhang et al.’s Theoretical and Conceptual Cognitive Taxonomy of Medical Errors [84], and Horsky et al.’s Multifaceted Cognitive Methodology for Characterizing Cognitive Demands of Systems [85].
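The repeated “Why?” questioning and the grouping of causes into categories described above can be illustrated with a short sketch. It assumes a simple tree representation in which each answer to a “Why?” question becomes a child node; the class `Factor`, the functions `fishbone` and `longest_chain`, and the example incident are hypothetical and serve only to show the mechanics. The category names are those attributed to the London Protocol in the text.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List

# Major categories as listed in the text for the London Protocol.
CATEGORIES = (
    "Patient", "Task", "Individual", "Team",
    "Environment", "Organisation and management",
)


@dataclass
class Factor:
    """A cause or contributory factor; `causes` holds the answers to 'Why?'."""
    description: str
    category: str = ""  # left empty for the incident itself
    causes: List["Factor"] = field(default_factory=list)


def fishbone(incident: Factor) -> Dict[str, List[str]]:
    """Group every cause below the incident by category (fishbone 'bones')."""
    groups: Dict[str, List[str]] = defaultdict(list)
    stack = list(incident.causes)
    while stack:
        factor = stack.pop()
        if factor.category not in CATEGORIES:
            raise ValueError(f"Unknown category: {factor.category!r}")
        groups[factor.category].append(factor.description)
        stack.extend(factor.causes)  # follow branched error chains
    return dict(groups)


def longest_chain(factor: Factor) -> int:
    """Number of elements on the longest chain, counting the incident itself."""
    return 1 + max((longest_chain(c) for c in factor.causes), default=0)


# Hypothetical incident, for illustration only.
incident = Factor(
    "Wrong dose administered",
    causes=[
        Factor("Dose unit selected incorrectly in the order form", "Task", [
            Factor("Default unit differs between screens", "Environment", [
                Factor("No usability testing before go-live",
                       "Organisation and management"),
            ]),
        ]),
        Factor("Unusual dose was not questioned", "Individual", [
            Factor("High workload during the shift", "Environment"),
        ]),
    ],
)

if __name__ == "__main__":
    for category, items in fishbone(incident).items():
        print(f"{category}: {items}")
    print("Longest chain:", longest_chain(incident))
```

Because the text notes that categories can be adapted to the fault and the technology involved, `CATEGORIES` is kept as a plain tuple that an analysis team could replace with its own agreed list.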

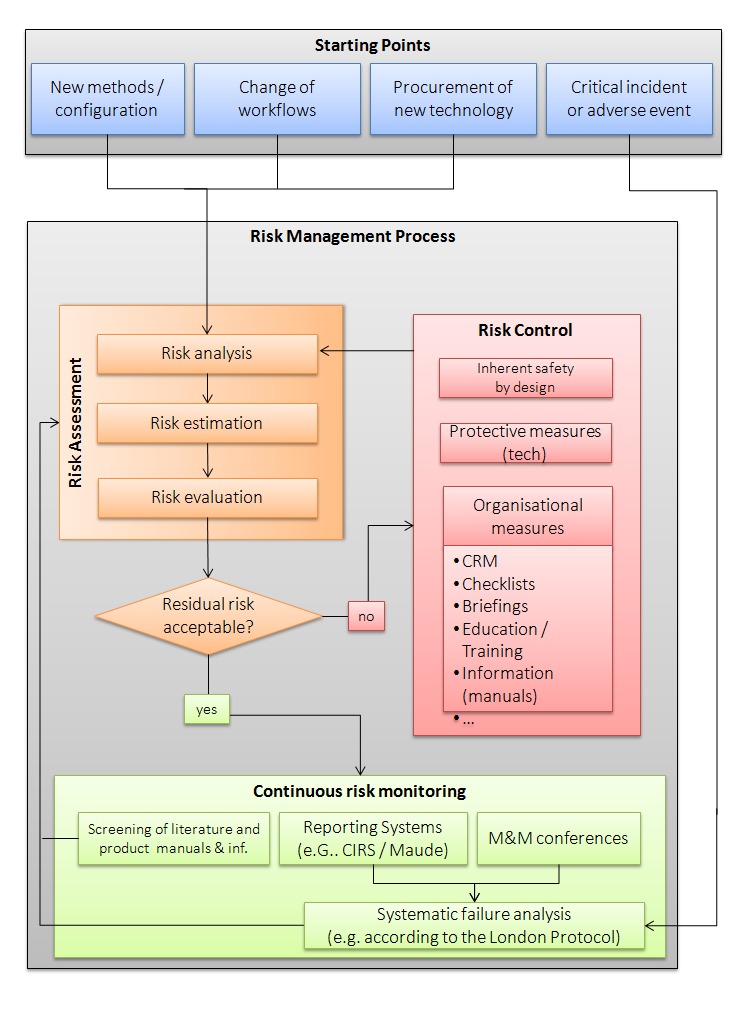

5 HIT Risk Management

Patient safety is an ongoing hospital and regional health authority responsibility. All health organizations need to establish risk management processes. Risk management approaches should be employed when (i) new methods/configurations of HIT are introduced to a healthcare organization, (ii) there is a change in workflows arising from a new technology, (iii) a technology is being newly procured, and/or (iv) there is a technology-induced error. A risk assessment should be carried out in case of any of these events. If the outcome of the risk assessment is not acceptable, unacceptable risks remain, and risk control measures are needed to reduce them to an acceptable level (see Figure 2). These may include safe design, protective technology measures, and organizational measures. When possible, structural measures should be applied to avoid risks. If structural measures are not possible, protective measures should be taken to minimize risks. If protective measures are not sufficient, then additional organizational measures need to be put in place. These may include providing information to users (or employees) about the technology and its risks. After an implementation is complete and the HIT has transitioned from being a project to being an integral part of supporting health professional work, all equipment, systems, and processes need to be continuously monitored for potential risks. Therefore, a continuous risk monitoring process needs to be established. This may consist of regular reviews of the current literature and product information, as well as reviews of reports submitted to error reporting systems and of clinical morbidity and mortality conferences (MMCs).

If a technology-induced error happens, if new information about such critical incidents emerges from an error reporting system or MMC, or if previously unknown risks emerge, then a systematic error analysis should be performed, e.g., using the London Protocol [81,82], and the risk management team should go through the risk management process again (see Figure 2).
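The escalation from structural to protective to organizational measures described above can be sketched as a small decision routine. This is a minimal illustration under stated assumptions: the severity-times-likelihood score, the 1 to 5 scales, the threshold `ACCEPTABLE_SCORE`, and all names in the code are hypothetical and are not taken from the paper or from any standard.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Trigger(Enum):
    """Events that, per the text, call for a risk assessment."""
    NEW_HIT_CONFIGURATION = "new methods/configurations of HIT introduced"
    WORKFLOW_CHANGE = "change in workflows arising from a new technology"
    PROCUREMENT = "technology being newly procured"
    TECHNOLOGY_INDUCED_ERROR = "technology-induced error occurred"


class Measure(Enum):
    STRUCTURAL = "safe design that avoids the risk"
    PROTECTIVE = "protective technology measure that minimizes the risk"
    ORGANIZATIONAL = "organizational measure, e.g. informing users of risks"


@dataclass
class Risk:
    description: str
    severity: int    # hypothetical scale: 1 (negligible) to 5 (catastrophic)
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (frequent)

    def score(self) -> int:
        return self.severity * self.likelihood


ACCEPTABLE_SCORE = 6  # hypothetical acceptance threshold


def is_acceptable(risk: Risk) -> bool:
    return risk.score() <= ACCEPTABLE_SCORE


def select_measures(risk: Risk, structural_possible: bool,
                    protective_sufficient: bool) -> List[Measure]:
    """Apply the escalation order: structural, then protective, then organizational."""
    if is_acceptable(risk):
        return []  # no control measures needed; keep monitoring
    if structural_possible:
        return [Measure.STRUCTURAL]
    measures = [Measure.PROTECTIVE]
    if not protective_sufficient:
        measures.append(Measure.ORGANIZATIONAL)
    return measures


if __name__ == "__main__":
    risk = Risk("Default dose unit differs between order screens", 4, 3)
    print(Trigger.TECHNOLOGY_INDUCED_ERROR.value)
    for measure in select_measures(risk, structural_possible=False,
                                   protective_sufficient=False):
        print("-", measure.value)
```

In a continuous monitoring process, the same routine would be re-run whenever one of the `Trigger` events occurs, which mirrors the repeated pass through the risk management process shown in Figure 2.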

Fig. 2. Risk Management for Technology-Induced Errors

In addition to risk management processes, organizations can use risk assessment guides and/or guidelines aimed at improving the safety and safe use of HIT after implementation to support the development of a culture of safety. For example, Sittig et al. [86] developed guides for the Office of the National Coordinator (ONC) to help healthcare organizations self-evaluate the safety and safe use of EHRs. The guides can be used by organizations to conduct proactive organizational self-risk assessments to “identify specific areas of vulnerability and create solutions and culture changes to mitigate risks”. Checklists are used by clinicians, care teams, and administrative leaders to identify potential risks in their organizations. Other examples include Canada’s Health Informatics Association eSafety Guidelines [87]. Developed by a team of industry and academic experts from across Canada, the guidelines help organizations to develop a culture of safety. The guidelines describe how risk management and regulatory assessments can be introduced as an adjunct to existing organizational risk management processes.

Conclusions and Future Research Directions

Technology-induced errors remain a significant and growing concern for health informatics and biomedical informatics practitioners. Internationally, researchers are improving the evidence base for reporting and monitoring systems [16, 19-21, 23]. Other researchers are developing evidence-based methods that can be used to identify and analyze data found in reports [21, 23]. To add to this work, some technology design researchers are conducting studies that employ user-centred, participative, and composable designs to improve the quality and safety of HIT [30, 36, 43, 44]. Along these lines, safety heuristics and guidelines to improve the safety of user interface designs and software functions are being developed following the review of published literature [46, 47, 88]. Additionally, researchers have developed methods that involve the use of incident reports and usability testing to inform and improve the safety of the HIT being tested [16-23]. Research is being conducted in an effort to improve the safety of HIT with attention to systems implementation in differing contexts [34]. Here, technology-induced error models and methods drawn from the systems engineering, human factors, and organizational and sociotechnical literatures are being adapted for use in health care [70-86]. Lastly, researchers are now developing comprehensive evidence-based approaches to risk management tackling technology-induced errors to improve the overall safety of HIT and create organizations where there is a culture of HIT safety.

The number of advances in HIT safety research has been significant since the first documented reports on technology-induced errors in healthcare in 2005 [2-8]. Within a span of six years, government and policy organizations recognized the importance of addressing this issue. Over a 10-year period, health and biomedical informatics researchers have developed and extended the evidence for addressing technology-induced errors. Today, there exist models, frameworks, methods, design approaches, and risk management approaches, all based on research in health informatics, that mitigate and eliminate technology-induced errors. Organizations around the world are now implementing these models and methods [88]. According to the AHRQ, it typically takes up to two decades to begin to translate knowledge into practice; HIT safety researchers have done so in a short six to ten years since the first published reports of technology-induced errors emerged.

Although we have seen significant advances in the research evidence and practice literature, there remains much work to be done. There is a need to study the safety of HIT used in the community. With the rise in the number of technologies being used by citizens and the chronically ill living in the community, more research is needed to evaluate the safety of mobile eHealth applications, wearable devices, and peripheral devices used by citizens and patients. In addition, much of the published research focusing on technology-induced error and HIT safety was conducted in countries that are more technologically mature and have highly developed healthcare services. There is a need to study the safety of these technologies in developing countries and in technologically poor settings to assess the impacts of context on HIT safety. Lastly, the effectiveness of public policies in fostering regional health authority, vendor, and government adoption of HIT safety reporting systems, models, frameworks, methods, and organizational cultures of safety needs to be studied.

In conclusion, reports of technology-induced errors in the research literature are growing, and they are expected to continue to grow as the number and rate of usage of these technologies by healthcare systems and among health care consumers increase. Policy makers, health care administrators, vendors, and health professionals have recognized that technology-induced errors are a global issue that needs to be addressed as reports continue to come from varying regions of the world. Research from Australia, Canada, China, France, Finland, Germany, Saudi Arabia, the United Kingdom, the United States, and other countries has identified the presence of the issue internationally. Over the past several years, the working group on Health Informatics for Patient Safety has written several papers emphasizing varying aspects of the published research and evidence-based approaches to prevent technology-induced errors and improve HIT safety. This paper represents an additional contribution to this work. Our hope is to improve the quality and safety of HIT through the work conducted by researchers in this area of study.

References

- 1. Kohn LT, Corrigan JM, Donaldson MS, editors. To err is human: Building a Safer Health System (Vol. 6). National Academies Press; 2000.

- 2. Kushniruk AW, Triola MM, Borycki EM, Stein B, Kannry JL. Technology induced error and usability: the relationship between usability problems and prescription errors when using a handheld application. Int J Med Inform 2005; August 31;74(7):519-26.

- 3. Kuziemsky CE, Borycki E, Nøhr C, Cummings E. The nature of unintended benefits in health information systems. Stud Health Technol Inform 2012;180:896-900.

- 4. Kuperman GJ, McGowan JJ. Potential Unintended Consequences of Health Information Exchange. J Gen Int Med 2011;28(12):1663-6.

- 5. Borycki EM, Kushniruk AW. Where do technology-induced errors come from? Towards a model for conceptualizing and diagnosing errors caused by technology. Human, Social and Organizational Aspects of Health Information Systems; 2008. p. 148-65.

- 6. Magrabi F, Ong MS, Runciman W, Coiera E. Using FDA reports to inform a classification for health information technology safety problems. J Am Med Inform Assoc 2012; January 1;19(1):45-53.

- 7. Koppel R, Metlay JP, Cohen A, Abaluck B, Localio AR, Kimmel SE, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA 2005; March 9;293(10):1197-203.

- 8. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc 2004; March 1;11(2):104-12.

- 9. Kushniruk A, Surich J, Borycki E. Detecting and Classifying Technology-Induced Error in the Transmission of Healthcare Data. In: 24th International Conference of the European Federation for Medical Informatics Quality of Life Quality of Information (Vol. 26). MIE2012/CD/Short Communications.

- 10. Kirkendall ES, Kouril M, Minich T, Spooner SA. Analysis of electronic medication orders with large overdoses: opportunities for mitigating dosing errors. Appl Clin Inform 2014;5(1):25-45.

- 11. Phansalkar S, Van der Sijs H, Tucker AD, Desai AA, Bell DS, Teich JM, et al. Drug-drug interactions that should be non-interruptive in order to reduce alert fatigue in electronic health records. J Am Med Inform Assoc 2013; May 1;20(3):489-93.

- 12. Borycki EM, Househ MS, Kushniruk AW, Nohr C, Takeda H. Empowering patients: Making health information and systems safer for patients and the public. Contribution of the IMIA Health Informatics for Patient Safety Working Group. Yearb Med Inform 2011; December;7(1):56-64.

- 13. Househ M, Borycki E, Kushniruk AW, Alofaysan S. mHealth: A passing fad or here to stay. Telemedicine and E-health services, policies and applications: Advancements and developments 2012; April 30:151-73.

- 14. Borycki EM, Kushniruk AW, Keay L, Kuo A. A framework for diagnosing and identifying where technology-induced errors come from. Stud Health Technol Inform 2008; December;148:181-7.

- 15. Sherer SA, Meyerhoefer CD, Sheinberg M, Levick D. Integrating commercial ambulatory electronic health records with hospital systems: An evolutionary process. Int J Med Inform 2015; September;84(9):683-93.

- 16. Doupi P. National reporting Systems for Patient Safety Incidents. A review of the situation in Europe. Report 13. Helsinki: National Institute for Health and Welfare; 2009.

- 17. Levtzion-Korach O, Frankel A, Alcalai H, Keohane C, Orav J, Graydon-Baker E, et al. Integrating incident data from five reporting systems to assess patient safety: Making sense of the elephant. Joint Comm J Qual Patient Saf 2010;36(9):402-10.

- 18. IOM. Health IT and patient safety: Building safer systems for better care. Washington, DC: The National Academies Press; 2011.

- 19. Jylhä V, Saranto K, Bates DW. Preventable adverse drug events and their causes and contributing factors: the analysis of register data. Int J Qual Health Care 2011;23(2):187-97.

- 20. Christiaans-Dingelhoff I, Smits M, Zwaan L, Lubberding S, Van DW, Wagner C. To what extent are adverse events found in patient records reported by patients and healthcare professionals via complaints, claims and incident reports. BMC Health Serv Res 2011;11(1):49.

- 21. Gong Y, Song H-Y, Wu X, Hua L. Identifying barriers and benefits of patient safety event reporting toward user-centered design. Safety in Health 2015;1(1):7.

- 22. AHRQ. Common Formats; 2011. Available from https://www.psoppc.org/web/patientsafety/version-1.2_documents

- 23. Gong Y. Data consistency in a voluntary medical incident reporting system. J Med Syst 2011; August;35(4):609-15.

- 24. Hua L, Gong Y. Toward user-centered patient safety event reporting system: A trial of text prediction in clinical data entry. Stud Health Technol Inform 2015;216:188-92.

- 25. Liang C, Gong Y. Enhancing patient safety event reporting by K-nearest neighbor classifier. Stud Health Technol Inform 2015;218:406.

- 26. Liang C, Gong Y. On building an ontological knowledge base for managing patient safety events. Stud Health Technol Inform 2015;216:202-6.

- 27. Morisky DE, Green LW, Levine DM. Concurrent and predictive validity of a self-reported measure of medication adherence. Med Care 1986; January 1;24(1):67-74.

- 28. Vänskä J, Vainiomäki S, Kaipio J, Hyppönen H, Reponen J, Lääveri T. Potilastietojärjestelmät lääkärin työvälineenä 2014: käyttäjäkokemuksissa ei merkittäviä muutoksia. [Electronic Patient Record systems as physicians’ tools in 2014: no significant changes in user experience reported by physicians]. Finnish Med J 2014;49(69):3351-8 (in Finnish, English summary).

- 29. Viitanen J, Hyppönen H, Lääveri T, Vänskä J, Reponen J, Winblad I. National questionnaire study on clinical ICT systems proofs: Physicians suffer from poor usability. Int J Med Inform 2011;80(10):708-25.

- 30. Kushniruk A. Evaluation in the design of health information systems: Application of approaches emerging from usability engineering. Comput Biol Med 2002; May 31;32(3):141-9.

- 31. Borycki EM, Kushniruk AW, Keay L, Kuo A. A framework for diagnosing and identifying where technology-induced errors come from. Stud Health Technol Inform 2008; December;148:181-7.

- 32. Borycki E, Kushniruk A, Nohr C, Takeda H, Kuwata S, Carvalho C, et al. Usability methods for ensuring health information technology safety: Evidence-based approaches. Contribution of the IMIA Working Group Health Informatics for Patient Safety. Yearb Med Inform 2012; December;8(1):20-7.

- 33. Borycki EM, Kushniruk AW, Kuwata S, Kannry J. Use of simulation approaches in the study of clinician workflow. AMIA Annual Symposium Proceedings; 2006. p. 61.

- 34. Borycki E, Kushniruk A, Anderson J, Anderson M. Designing and integrating clinical and computer-based simulations in health informatics: From real-world to virtual reality. INTECH Open Access Publisher; 2010.

- 35. Carayon P. Human factors and ergonomics in health care and patient safety. In Handbook of Human Factors and Ergonomics in Patient Safety. Edited by Carayon P: CRC Press; 2007.

- 36. Kensing F, Blomberg J. Participatory design: Issues and concerns. 7th edition Computer Supported Cooperative Work: Springer Science and Business Media; 1998. p. 167-85.

- 37. Lyng KM, Pedersen BS. Participatory design for computerization of clinical practice guidelines. J Biomed Inform 2011;44:909-18.

- 38. Kujala S. User involvement: A review of the benefits and challenges. Behav Inform Tech 2003;22(1):1-16.

- 39. Rasmussen SL, Lyng KM, Jensen S. Achieving IT-supported standardized nursing documentation through participatory design. Stud Health Technol Inform 2012;180:1055-9.

- 40. Technology Committee on Patient Safety in Health IT. Health IT and patient safety: Building safer systems for better care. Institute of Medicine; 2011.

- 41. Patrick J, Budd P. Ockham’s razor of design. Proceedings of the 1st ACM International Health Informatics Symposium, Washington DC; Nov 2010. http://portal.acm.org/citation.cfm?id=1882998&CFID=116605072&CFTOKEN=43603995.

- 42. Senathirajah Y, Bakken S. Architectural and usability considerations in the development of a Web 2.0-based EHR. Adv Inform Tech Comm Health, IOS Press; 2009. p. 315-21.

- 43. Senathirajah Y, Kaufman D, Bakken S. The clinician in the driver’s seat: Part 1 - A user-composable electronic health record platform. J Biomed Inform 2014; December;52:165-76.

- 44. Senathirajah Y, Kaufman D, Bakken S. The clinician in the driver’s seat: Part 2 - intelligent uses of space in a drag/drop user-composable electronic health record. J Biomed Inform 2014; December;52:177-88.

- 45. Trimble JP, Dayton T, Quinol M. Putting the users in charge: A Collaborative, User-composable interface for NASA’s Mission Control. 45th International Conference on System Sciences, Hawaii; 2012.

- 46. Carvalho CJ, Borycki EM, Kushniruk A. Ensuring the safety of health information systems: using heuristics for patient safety. Healthc Q 2008; December;12:49-54.

- 47. Coiera EW. Lessons from the NHS National Programme for IT. Med J Aust 2007; January 1;186(1):3.

- 48. Marcilly R, Peute LW, Beuscart-Zephir MC, Jaspers MW. Towards Evidence Based Usability in Health Informatics? Stud Health Technol Inform 2015;218:55-60.

- 49. Marcilly R, Ammenwerth E, Vasseur F, Roehrer E, Beuscart-Zephir MC. Usability flaws of medication-related alerting functions: A systematic qualitative review. J Biomed Inform 2015;55:260-71.

- 50. Marcilly R, Ammenwerth E, Roehrer E, Pelayo S, Vasseur F, Beuscart-Zephir MC. Usability flaws in medication alerting systems: Impact on usage and work system. Yearb Med Inform 2015;10(1):55-67.

- 51. Zahabi M, Kaber DB, Swangnetr M. Usability and safety in electronic medical records interface design: A review of recent literature and guideline formulation. Hum Factors 2015;57(5):805-34.

- 52. Khajouei R, Jaspers MW. The impact of CPOE medication systems’ design aspects on usability, workflow and medication orders: a systematic review. Methods Inf Med 2010;49(1):3-19.

- 53. Pereira de Araujo L, Mattos MM. A systematic literature review of evaluation methods for health collaborative systems. Proceedings of the IEEE 18th International Conference on Computer Supported Cooperative Work in Design; 2014. p. 366-9.

- 54. Wills J, Hurley K. Testing usability and measuring task-completion time in hospital-based health information systems: A systematic review. Proceedings of the 28th IEEE Canadian conference on electrical and computer engineering (CCECE); 2012. p. 1-4.

- 55. British Standards Institution (BSI). The growing role of human factors and usability engineering for medical devices. White paper. http://www.bsigroup.com/LocalFiles/en-GB/Medical-devices/whitepapers/ [Last accessed 2015 Jul 24].

- 56. Magrabi F, Baker M, Sinha I, Ong MS, Harrison S, Kidd MR, et al. Clinical safety of England’s national programme for IT: A retrospective analysis of all reported safety events 2005 to 2011. Int J Med Inform 2015;84(3):198-206.

- 57. Ash JS, Sittig DF, McMullen CK, Guappone K, Dykstra R, Carpenter J. A rapid assessment process for clinical informatics interventions. AMIA Annu Symp Proc 2008; November 6:26-30.

- 58. Ash JS, Sittig DF, Poon EG, Guappone K, Campbell E, Dykstra RH. The extent and importance of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc 2007; August 31;14(4):415-23.

- 59. Karsh BT, Weinger MB, Abbott PA, Wears RL. Health information technology: Fallacies and sober realities. J Am Med Inform Assoc 2010;17:617-23.

- 60. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: The nature of patient care information system-related errors. J Am Med Inform Assoc 2004;11:104–12.

- 61. Varpio L, Rashotte J, Day K, King J, Kuziemsky C, Parush A. The EHR and building the patient’s story: A qualitative investigation of how EHR use obstructs a vital clinical activity. Int J Med Inform 2015;84(12):1019-28.

- 62. Xiao Y, Parker SH, Manser T. Teamwork and collaboration. Review Human Factors Ergonomics 2013;8(1):55–102.

- 63. West MA, Lyubovnikova J. Illusions of team working in health care. J Health Organ Manag 2013;27(1):134-42.

- 64. Kuziemsky C, Bush P. Coordination considerations of health information technology. Stud Health Technol Inform 2013;194:133-8.

- 65. Wong MC, Cummings E, Turner P. User-centered design in clinical handover: Exploring post-implementation outcomes for clinicians. Stud Health Technol Inform 2013;192(1-2):253-7.

- 66. Kuziemsky CE. A model of tradeoffs for understanding health information technology implementation. Stud Health Technol Inform 2015;215:116-28.

- 67. Saleem JJ, Russ AL, Sanderson P, Johnson TR, Zhang J, Sittig DF. Current challenges and opportunities for better integration of human factors research with development of clinical information systems. Yearb Med Inform 2009:48-58.

- 68. Holden RJ, Rivera-Rodriguez AJ, Faye H, Scanlon MC, Karsh BT. Automation and adaptation: Nurses’ problem-solving behavior following the implementation of bar-coded medication administration technology. Cog Tech Work 2013;15(3):283-96.

- 69. Borycki EM, Kushniruk AW, Bellwood P, Brender J. Technology-induced errors. Methods Inf Med 2012;51(2):95-103.

- 70. Leveson N. Engineering a safer world: Systems thinking applied to safety. MIT Press; 2011.

- 71. Alemzadeh H, Chen D, Lewis A, Kalbarczyk Z, Raman J, Leveson N, et al. Systems-theoretic safety assessment of robotic telesurgical systems. Computer Safety, Reliability, and Security. LNCS 9337. Springer; 2015.

- 72. Harrison MI, Koppel R, Bar-Lev S. Unintended consequences of information technologies in health care: An interactive sociotechnical analysis. J Am Med Inform Assoc 2007;14(5):542-9.

- 73. Procter S, Hatcliff J. An architecturally-integrated, systems-based hazard analysis for medical applications. In: Formal Methods and Models for Codesign (MEMOCODE), 2014 Twelfth ACM/IEEE International Conference on. IEEE; 2014. p. 124-33.

- 74. Redmill F, Chudleigh M, Catmur J. System safety: HAZOP and software HAZOP. Chichester: Wiley; 1999.

- 75. Weber-Jahnke JH, Mason-Blakley F. On the safety of electronic medical records. In Foundations of Health Informatics Engineering and Systems. Berlin, Heidelberg: Springer; 2012. p. 177-94.

- 76. Bloomrosen M, Starren J, Lorenzi NM, Ash JS, Patel VL, Shortliffe EH. Anticipating and addressing the unintended consequences of health IT and policy: A report from the AMIA 2009 Health Policy Meeting. J Am Med Inform Assoc 2011; January 1;18(1):82-90.

- 77. Kushniruk A, Beuscart-Zéphir MC, Grzes A, Borycki E, Watbled L, Kannry J. Increasing the safety of healthcare information systems through improved procurement: toward a framework for selection of safe healthcare systems. Healthc Q 2009; December;13:53-8.

- 78. Sittig DF, Singh H. A new sociotechnical model for studying health information technology in complex adaptive healthcare systems. Qual Saf Health Care 2010; October 1;19(Suppl 3):i68-74.

- 79. Ash JS, Sittig DF, Dykstra RH, Guappone K, Carpenter JD, Seshadri V. Categorizing the unintended sociotechnical consequences of computerized provider order entry. Int J Med Inform 2007; June 30;76:S21-7.

- 80. Neuhaus C, Röhrig R, Hofmann G, Klemm S, Neuhaus S, Hofer S, et al. Patient safety in anesthesiology: Multimodal strategies for perioperative care. Anaesthesist 2015; December;64(12):911-26.

- 81. Vincent C, Taylor-Adams S. Systems analysis of critical incidents: The London Protocol; 1999. http://www1.imperial.ac.uk/cpssq/cpssq_publications/resources_tools/the_london_protocol/. Last accessed: 1/11/2016.

- 82. Vincent C, Taylor-Adams S, Chapman EJ, Hewett D, Prior S, Strange P, et al. How to investigate and analyse clinical incidents: Clinical risk unit and association of litigation and risk management protocol. BMJ 2000;320(7237):777-81.

- 83. Borycki E, Keay E. Methods to assess the safety of health information systems. Healthc Q 2009;13:47-52.

- 84. Zhang J, Patel VL, Johnson TR, Shortliffe EH. A cognitive taxonomy of medical errors. J Biomed Inform 2004;37(3):193-204.

- 85. Horsky J, Kuperman GJ, Patel VL. Comprehensive analysis of a medication dosing error related to CPOE. J Am Med Inform Assoc 2005;12(4):377-82.

- 86. Sittig DF, Singh H. A new sociotechnical model for studying health information technology in complex adaptive healthcare systems. Qual Saf Health Care 2010;19(Suppl 3):i68-74.

- 87. Canada’s Health Informatics Association. eSafety guidelines: eSafety for eHealth. Ottawa: COACH; 2013.

- 88. Kushniruk AW, Bates DW, Bainbridge M, Househ MS, Borycki EM. National efforts to improve health information system safety in Canada, the United States of America and England. Int J Med Inform 2013;82(5):e149-60.

- 89. Samaranayake NR, Cheung ST, Chui WC, Cheung BM. Technology-related medication errors in a tertiary hospital: A 5-year analysis of reported medication incidents. Int J Med Inform 2012;81(12):828-33.

- 90. Palojoki S, Mäkelä M, Lehtonen L, Saranto K. An analysis of electronic health record–related patient safety incidents. Health Inform J 2016; March 7.