Abstract

Background

The increasing use of workplace-based assessments (WBAs) in competency-based medical education has led to large data sets that assess resident performance longitudinally. With large data sets, problems that arise from missing data are increasingly likely.

Objective

The purpose of this study is to examine (1) whether data are missing at random across various WBAs, and (2) the relationship between resident performance and the proportion of missing data.

Methods

During 2012–2013, a total of 844 WBAs of CanMEDS Roles were completed for 9 second-year emergency medicine residents. To identify whether missing data were randomly distributed across various WBAs, the total number of missing data points was calculated for each Role. To examine whether the amount of missing data was related to resident performance, 5 faculty members rank-ordered the residents based on performance. A median rank score was calculated for each resident and was correlated with the proportion of missing data.

Results

More data were missing for Health Advocate and Professional WBAs relative to other competencies (P < .001). Furthermore, resident rankings were not related to the proportion of missing data points (r = 0.29, P > .05).

Conclusions

The results of the present study illustrate that some CanMEDS Roles are less likely to be assessed than others. At the same time, the amount of missing data did not correlate with resident performance, suggesting lower-performing residents are no more likely to have missing data than their higher-performing peers. This article discusses several approaches to dealing with missing data.

What was known and gap

The growing use of workplace-based assessment makes it important to assess the properties of these large data sets.

What is new

A study of missing data in CanMEDS assessments for Canadian emergency medicine residents found more missing data for the Health Advocate and Professional Roles.

Limitations

Small sample, single institution, and single specialty limit the ability to generalize.

Bottom line

Missing data were not correlated with resident performance and can be dealt with by programs.

Introduction

Competency-based medical education (CBME) is changing the way physicians are educated,1–4 with a heavy emphasis on quantifying and qualifying their performance via robust assessments. The central tenet of CBME is that trainees must demonstrate competence in applying acquired skills during patient care activities,5 and CBME requires assessment strategies that ensure trainees apply their knowledge, skills, and abilities in authentic or simulated environments.6 This necessity for direct observation and assessment of trainees' performance has resulted in a shift toward workplace-based assessments (WBAs) as a primary method of assessment.

There is no single comprehensive WBA tool, and experts have argued that decisions regarding trainees' progression should be based on aggregates of multiple measures of performance using both qualitative and quantitative methods.6,7 The implementation of WBAs allows educators to identify patterns in the development of knowledge, skills, and performance. While educators and researchers have paid considerable attention to understanding patterns in WBA data, less attention has been paid to missing data. Identifying and understanding potential patterns underlying missing data is an important step in accurately interpreting WBA data.

While mechanisms exist to deal with missing data (eg, multiple imputation and maximum likelihood methods), many of these presume that data are missing at random.8 This may not be the case in the context of WBA portfolios. For example, residents may be more likely to complete WBAs for tasks that they enjoy and/or perform well; consequently, there may be missing data for more poorly developed knowledge, skills, and abilities. Similarly, certain WBA tools (eg, multi-source feedback) may be particularly challenging to complete because of the logistics of collecting the data. Nonrandomly missing data could threaten the inherent validity of WBA portfolios.

The purpose of this study is twofold. First, we examined whether data are, in fact, missing at random across various competencies within the context of our local WBA system. Second, we assessed whether the amount of missing data correlated with overall resident performance as determined by a panel of faculty from the residency education committee.

Methods

Setting and Population

In 2011, McMaster University's emergency medicine (EM) program developed and piloted a programmatic WBA system, referred to as the McMaster Modular Assessment Program (McMAP).9 This WBA system consists of a variety of brief, observed, clinically embedded tasks mapped to global competencies of EM physicians, which are completed on each shift (ie, “micro”-CEX).9

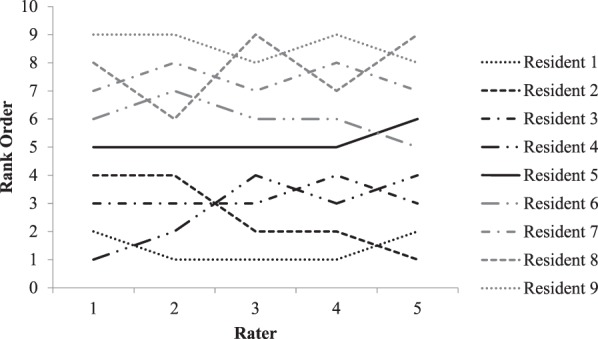

The study population was 9 second-year residents from a 5-year EM postgraduate training program. To examine how missing WBA scores compared with resident ranking, 5 members of the program's residency education committee were asked to independently rank-order residents on the basis of their overall clinical performance. These committee members were medical educators with experience supervising and assessing residents and had participated as trained assessors.

Materials

The McMAP pilot project consisted of a collection of 42 workplace assessments organized in portfolios that cluster around specific CanMEDS Roles, targeting junior and intermediate residents (postgraduate years 1 and 2, respectively). The CanMEDS Framework10,11 is a physician competency framework that guides specialty training in Canada. The 7 Roles—Medical Expert, Communicator, Collaborator, Leader (formerly Manager), Health Advocate, Professional, and Scholar—organize the associated competencies. Each clinical task assessment was evaluated using a 7-point, behaviorally anchored, global rating scale. The details of this project and its development have been previously described.9

The study was granted an exemption by our Institutional Ethics Review Board.

Data Analysis

To examine whether data were missing as a function of the CanMEDS Role being assessed, we conducted a 1-way within-subjects analysis of variance, with the CanMEDS Role serving as the independent variable and the proportion of missing data acting as the dependent variable. We calculated predicted proportions of the CanMEDS Roles for the intermediate-level portfolio, and then compared these with the percentages achieved by residents in the 8-month pilot period. All pairwise comparisons were Bonferroni corrected to the .05 level.
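The within-subjects analysis described above can be sketched as follows. This is a minimal illustration and not the authors' analysis code: the function names and the small table of proportions are hypothetical, and the Bonferroni helper assumes that every pair of Roles is compared, which the text does not state explicitly.

```python
def repeated_measures_anova(table):
    """One-way within-subjects ANOVA.

    table[i][j] is the proportion of missing data for resident i on Role j.
    Returns (F, df_effect, df_error).
    """
    n = len(table)       # number of residents (subjects)
    k = len(table[0])    # number of Roles (conditions)
    grand = sum(sum(row) for row in table) / (n * k)
    role_means = [sum(row[j] for row in table) / n for j in range(k)]
    resident_means = [sum(row) / k for row in table]

    ss_total = sum((x - grand) ** 2 for row in table for x in row)
    ss_role = n * sum((m - grand) ** 2 for m in role_means)
    ss_resident = k * sum((m - grand) ** 2 for m in resident_means)
    # Residual variation after removing Role and resident effects
    ss_error = ss_total - ss_role - ss_resident

    df_effect = k - 1
    df_error = (k - 1) * (n - 1)
    f_stat = (ss_role / df_effect) / (ss_error / df_error)
    return f_stat, df_effect, df_error


def bonferroni_alpha(alpha, n_conditions):
    """Per-comparison threshold, assuming all pairs of conditions are tested."""
    n_pairs = n_conditions * (n_conditions - 1) // 2
    return alpha / n_pairs


# Hypothetical proportions (3 residents x 3 Roles); these are not study data.
example = [
    [0.1, 0.2, 0.6],
    [0.2, 0.4, 0.7],
    [0.3, 0.3, 0.8],
]
f_stat, df_effect, df_error = repeated_measures_anova(example)
print(f"F({df_effect},{df_error}) = {f_stat:.1f}")
print(f"Per-comparison alpha for 7 Roles: {bonferroni_alpha(0.05, 7):.4f}")
```

Under this assumption, 7 Roles yield 21 pairwise comparisons, so each comparison is judged against a threshold of .05 divided by 21.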

Five faculty members of the residency education committee, the local body that reviews resident files and approves the promotion of residents, independently rank-ordered residents on the basis of their perceived overall performance. A median rank score was calculated for each resident, and correlated with the mean proportion of missing data using a Spearman statistic. The rankings were completed before the residency education committee members had access to the aggregate portfolios and/or reports to ensure that members did not use the scores to make their rankings.
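The ranking analysis described above can be illustrated with a short sketch. The rankings and proportions below are invented for illustration, the helper names are hypothetical, and handling ties with average ranks is an assumption rather than a detail reported in this study.

```python
from statistics import median


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for idx in order[i:j + 1]:
            ranks[idx] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the rank-transformed data."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data: each row is one faculty member's ranking of 4 residents.
rankings = [
    [1, 2, 3, 4],
    [1, 3, 2, 4],
    [2, 1, 3, 4],
    [1, 2, 4, 3],
    [1, 2, 3, 4],
]
# Median rank per resident across the 5 faculty members
median_ranks = [median(column) for column in zip(*rankings)]

# Hypothetical proportion of missing data for the same 4 residents
missing_proportions = [0.35, 0.45, 0.30, 0.50]
rho = spearman(median_ranks, missing_proportions)
print(f"Spearman correlation = {rho:.2f}")
```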

Results

Descriptive Statistics

The current analyses were based on 844 WBAs collected over 8 months. On average, 94 assessments were completed for each resident (ranging from 65 to 113). The majority of the WBAs assessed the Medical Expert (20%, 165 of 844), Communicator (19%, 161), and Professional (20%, 171) domains. The remaining WBAs evaluated the roles of Scholar (10%, 87), Collaborator (11%, 92), Manager (12%, 101), and Health Advocate (8%, 67).
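As a quick arithmetic check, the percentages and the per-resident average can be recomputed from the counts reported above. The variable names are illustrative only.

```python
# Counts of completed WBAs per CanMEDS Role, as reported in the text
wba_counts = {
    "Medical Expert": 165,
    "Communicator": 161,
    "Professional": 171,
    "Scholar": 87,
    "Collaborator": 92,
    "Manager": 101,
    "Health Advocate": 67,
}
n_residents = 9

total = sum(wba_counts.values())
# Share of all WBAs per Role, rounded to the nearest whole percent
shares = {role: round(100 * n / total) for role, n in wba_counts.items()}
mean_per_resident = total / n_residents

for role, pct in shares.items():
    print(f"{role}: {pct}%")
print(f"Total: {total}, mean per resident: {mean_per_resident:.0f}")
```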

Question 1: Are Data Missing at Random Across the 7 CanMEDS Roles?

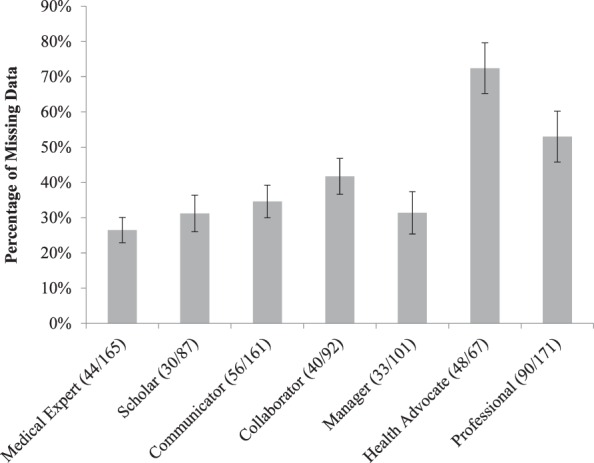

A total of 40% (n = 341) of the 844 WBAs had missing data. The proportion of missing data was unequally distributed across the 7 CanMEDS Roles (F(6,42) = 11.6, P < .001; Figure 1). After accounting for multiple post hoc comparisons:

Health Advocate had significantly more missing data than Medical Expert, Scholar, Communicator, Collaborator, and Manager (all t > 3.9, P < .002).

Professional had significantly more missing data than Medical Expert and Communicator (all t > 4.5, P < .001).

Question 2: Is There a Relationship Between Resident Performance and the Proportion of Missing Data Points?

Internal consistency among the experts' rankings of the residents was high (Cronbach's alpha = 0.97; Figure 2). Lower-performing residents did not have more missing data, as the proportion of missing data points was not significantly correlated with experts' rankings (r(9) = 0.29, P > .05).
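For readers unfamiliar with the statistic, Cronbach's alpha for a set of rankings can be sketched as follows. This is a minimal illustration under stated assumptions: each faculty member is treated as an "item" and each resident as a case, the rankings are invented, and the function name is hypothetical.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha, where ratings[r][j] is the rank rater j gave resident r."""
    k = len(ratings[0])  # number of raters, treated as items
    # Variance of each rater's scores across residents
    rater_variances = [variance(column) for column in zip(*ratings)]
    # Variance of the summed scores per resident
    total_variance = variance([sum(row) for row in ratings])
    return k / (k - 1) * (1 - sum(rater_variances) / total_variance)


# Hypothetical rankings: 4 residents (rows) ranked by 5 faculty members (columns)
example_ratings = [
    [1, 1, 2, 1, 1],
    [2, 3, 1, 2, 2],
    [3, 2, 3, 4, 3],
    [4, 4, 4, 3, 4],
]
alpha = cronbach_alpha(example_ratings)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Values near 1 indicate that the raters ordered the residents in a highly similar way.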

Figure 1.

Proportion of Missing Data as a Function of CanMEDS Role

Note: Numbers in parentheses represent the raw number of missing data points out of the total number of items required for all 9 residents.

Figure 2.

Rank Order of Residents Obtained From Members of Emergency Medicine Residency Education Committee

Discussion

In our study of the effects of missing WBAs in 1 Canadian EM residency, there was a larger proportion of missing data for the Professional or Health Advocate roles, compared to the other CanMEDS Roles. The proportion of missing data for residents also was unrelated to experts' rankings of the residents' performance.

While it might be tempting to assume that trainees bear the burden of completing the necessary WBAs, and to infer that missing data are simply the result of resident action or inaction, we believe that this understates the complexity of data acquisition in CBME. For example, some faculty may be less willing to complete specific WBAs. Indeed, a recent study within a similar rater pool in the same teaching center found that faculty members often avoid assessing certain CanMEDS Roles, particularly those related to Collaborator, Manager/Leader, Health Advocate, and Scholar.12 Missing data likely reflect multiple factors (eg, design of the assessment tool, raters, systems issues), and the present study highlights the need for medical educators to better understand the reasons data are missing.

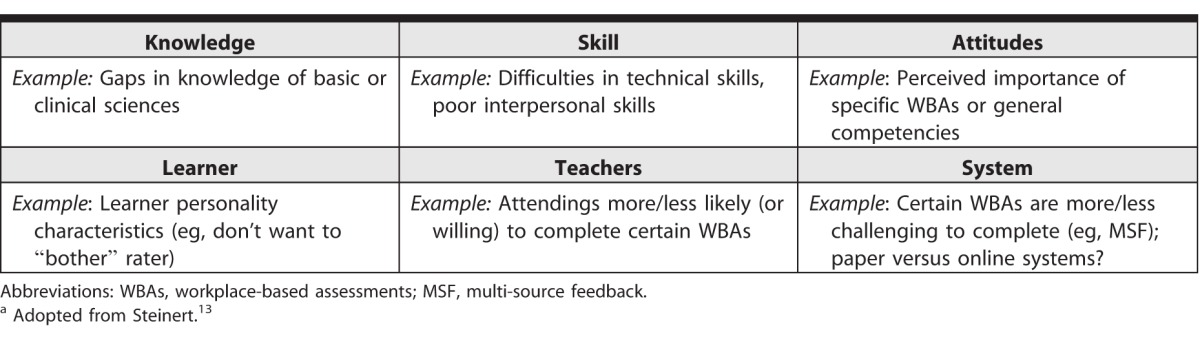

Developed to identify and work with “problem learners,” Steinert's KSALTS framework offers potential explanations for nonrandom missing data (Table).13 The benefit of this framework is that it identifies why data might be missing, and shows that some of these reasons are under the control of trainees (eg, lack of knowledge and skills, poor attitudes, lack of motivation), while others are outside their control (eg, rater discomfort, fewer opportunities to demonstrate certain competencies). By applying the KSALTS model we can better understand other phenomena that may affect the implementation of a WBA program.

Table.

KSALTS Framework

It is important to note that the concept of missing data is not new in education research, particularly with large-scale and/or longitudinal data. While a variety of methods exist that enable researchers to estimate (or impute) the values of missing data points,8 these models assume that data are missing at random, an assumption that is not valid in our data set. Data missing in a nonrandom fashion have implications for the reliability, validity, feasibility, and acceptability of assessment decisions based on WBA portfolios. Using Messick's validity framework,14 it is important to go beyond measures of reliability and validity when evaluating the properties of aggregate WBA portfolios. Understanding response process and relations to other measures (eg, resident education committee decision making) is crucial. Given the consequential nature of assessment, a robust approach to investigating and handling missing data will be paramount, especially because aggregate WBA portfolios will serve as the key determinant of displayed clinical competence.

Key limitations of this study are that it is based on a small cohort from 1 year at a single institution, reducing the ability to generalize to other educational settings using different WBA tools. We also did not explore why the data were missing; rather, we wish to stimulate discussion about: (1) best practices for handling missing data; (2) the responsible aggregation of data; and (3) the importance of incorporating systematic evaluation processes to monitor for unintentional data loss.

Reasons for missing data could be important for determining a solution. If data are difficult to acquire in the workplace, for instance, other curricular components (eg, simulation) could be used to fill the gap. Ultimately, our study reveals that a contextualized analysis of each local system will be imperative to best understand how to handle missing data in assessment portfolios. Future research and scholarship should look at the learner, faculty, and systemic influences that result in missing data.

Conclusion

Our study of missing WBA data in 1 Canadian emergency medicine residency showed more missing data for 2 of the 7 CanMEDS Roles, and that the proportion of missing data was unrelated to experts' rankings of resident performance. Together, these findings highlight the need for more attention to missing data. Procedures and policies that appropriately address the idiosyncrasies of missing data based on local contexts will be imperative for generating decisions about clinical competence.

References

- 1. General Medical Council. Tomorrow's doctors: outcomes and standards for undergraduate medical education. http://www.gmc-uk.org/Tomorrow_s_Doctors_1214.pdf_48905759.pdf. Accessed August 30, 2016.

- 2. General Medical Council. Supplementary guidance to medical schools. http://www.gmc-uk.org/education/undergraduate/8837.asp. Accessed August 30, 2016.

- 3. Accreditation Council for Graduate Medical Education. Milestones. http://www.acgme.org/acgmeweb/tabid/430/ProgramandInstitutionalAccreditation/NextAccreditationSystem/Milestones.aspx. Accessed August 30, 2016.

- 4. Royal College of Physicians and Surgeons of Canada. Competence by design: reshaping Canadian medical education. March 2014. http://www.royalcollege.ca/rcsite/documents/educational-strategy-accreditation/royal-college-competency-by-design-ebook-e.pdf. Accessed August 30, 2016.

- 5. Iobst WF, Sherbino J, Cate OT, et al. Competency-based medical education in postgraduate medical education. Med Teach. 2010;32(8):651–656.

- 6. van der Vleuten CPM, Schuwirth LWT, Scheele F, et al. The assessment of professional competence: building blocks for theory development. Best Pract Res Clin Obstet Gynaecol. 2010;24(6):703–719.

- 7. Moonen-van Loon JM, Overeem K, Donkers HH, et al. Composite reliability of a workplace-based assessment toolbox for postgraduate medical education. Adv Health Sci Educ Theory Pract. 2013;18(5):1087–1102.

- 8. Graham JW. Missing Data: Analysis and Design. New York, NY: Springer Science & Business Media; 2012.

- 9. Chan T, Sherbino J; McMAP Collaborators. The McMaster Modular Assessment Program (McMAP): a theoretically grounded work-based assessment system for an emergency medicine residency program. Acad Med. 2015;90(7):900–905.

- 10. Frank JR, ed. The CanMEDS 2005 physician competency framework: better standards, better physicians, better care. http://www.ub.edu/medicina_unitateducaciomedica/documentos/CanMeds.pdf. Accessed August 30, 2016.

- 11. Frank JR, Danoff D. The CanMEDS initiative: implementing an outcomes-based framework of physician competencies. Med Teach. 2007;29(7):642–647.

- 12. Sherbino J, Kulasegaram K, Worster A, et al. The reliability of encounter cards to assess the CanMEDS roles. Adv Health Sci Educ Theory Pract. 2013;18(5):987–996.

- 13. Steinert Y. The “problem” learner: whose problem is it? AMEE guide no. 76. Med Teach. 2013;35(4):e1035–e1045.

- 14. Messick S. Validity. In: Linn RL, ed. Educational Measurement. 3rd ed. London, UK: Macmillan Publishing Co; 1989:13–104.