Abstract

Background

Postoperative surgical site infections (SSI) are common and costly. Most occur post-discharge, and may result in potentially preventable readmission and/or unnecessary urgent evaluation. Mobile health approaches incorporating patient-generated wound photos are being implemented in an attempt to optimize triage and management. We assessed how adding wound photos to existing data sources modifies provider decision-making.

Study Design

Web-based simulation survey using a convenience sample of providers with expertise in surgical infections. Participants viewed a range of scenarios including surgical history, physical exam, and description of wound appearance. All participants reported SSI diagnosis, diagnostic confidence, and management recommendations (main outcomes), first without and then with accompanying wound photos. At each step, participants ranked the most important features contributing to their decision.

Results

Eighty-three participants completed a median of 5 scenarios (IQR 4-7). Most participants were physicians in academic surgical specialties (N=70, 84%). Addition of photos improved overall diagnostic accuracy from 67% to 76% (p<0.001), and increased specificity from 77% to 92% (p<0.001) but did not significantly increase sensitivity (55% to 65%, p=0.16). Photos increased mean confidence in diagnosis from 5.9/10 to 7.4/10 (p<0.001). Overtreatment recommendations decreased from 48% to 16% (p<0.001) while undertreatment did not change (28% to 23%, p=0.20) with addition of photos.

Conclusions

Addition of wound photos to existing data as available via chart review and telephone consultation with patients significantly improved diagnostic accuracy and confidence, and prevented proposed overtreatment in scenarios without SSI. Post-discharge mobile health technologies have the potential to facilitate patient-centered care, decrease costs, and improve clinical outcomes.

Keywords: Surgical site infection, mobile health, simulation, wound photography, diagnostic accuracy, diagnostic confidence, health care utilization, post-discharge surveillance

Introduction

Surgical site infection (SSI) is a common post-operative complication, occurring in at least 3-5% of surgical patients and up to 33% of patients undergoing abdominal surgery.(1–4) Of the estimated 500,000 SSIs in the US annually, approximately 69% occur after hospital discharge, placing the burden of problem recognition on patients who are often ill-prepared to manage SSI.(5–10) More than half of these post-discharge infections result in readmission, making SSI the most costly healthcare-associated infection.(7,11–13) Those readmissions are often non-reimbursable, because SSIs after procedures such as elective colorectal surgery, joint replacement, and hysterectomy are considered preventable conditions by the Centers for Medicare & Medicaid Services. Additionally, recent studies suggest that inadequate post-discharge communication, care fragmentation, and untimely, infrequent follow-up contribute to these poorer outcomes.(14–16)

As providers and hospitals seek to address the gap between discharge and follow-up visits, many are turning to technological approaches made possible by the increasing prevalence of smartphones coupled with patients’ increasing interest in tracking their own health.(17–19) Indeed, patients and providers have both expressed interest in using mobile health (mHealth) tools to facilitate improved post-discharge wound tracking, and several small trials have shown feasibility of mHealth for wound monitoring.(14,20–24) At our institution, providers increasingly ask patients, especially those who must travel long distances to seek evaluation and treatment, to email or text wound photos to enhance their follow-up care. Anecdotally, providers believe this practice improves triage, resulting in fewer unnecessary visits and earlier identification of potential problems.(25) Yet the impact on provider decision-making of adding wound photographs to existing clinical data has not been previously studied in the context of post-discharge wound monitoring.

The primary goal of this study was to assess how the addition of wound photographs impacts providers’ diagnostic accuracy of SSI, confidence in diagnosis, and patient management. Secondary goals were to assess the relationship between diagnostic confidence and accuracy, and to determine which data elements providers consider most important to forming their diagnosis. We hypothesized that addition of wound photos would improve diagnostic accuracy, confidence in diagnosis, and patient management.

Methods

This study was approved by the University of Washington Institutional Review Board and consent was obtained electronically from all participants.

Participants and setting

Providers with experience in managing SSIs were recruited by hosting a display in the exhibit area at the 2015 annual meeting of the Surgical Infection Society. Follow-up recruitment emails were sent to the membership via listserv email. Inclusion criteria were English-speaking medical providers (e.g. physicians, nurses) who regularly manage SSIs. Those who did not complete 2 or more patient scenarios were excluded from analysis to filter out minimally engaged participants. Participants could complete the survey on a tablet at the SIS meeting exhibit area, or their own computer or mobile device at their convenience.

Survey construction

Sixteen patient scenarios were sampled from an existing database created from a prior prospective cohort study(26) of in-hospital SSI among open abdominal surgery patients in which patient wounds were systematically examined and photographed from post-operative days 2 to 21. In the original study, wounds were assessed daily for SSI using Centers for Disease Control and Prevention (CDC) criteria.(27) The 16 scenarios were delivered to participants with stratified randomization so that half were SSI (superficial or deep) and half were non-SSI. Within SSI cases and non-SSI cases, half were ambiguous and half were unambiguous diagnoses, as judged by the consensus of 3 providers on our study team (HLE, PS, CALA). Among the ambiguous cases, 4 were due to symptoms unsupportive of the diagnosis and 4 were due to a photo unsupportive of the diagnosis.

Data collection

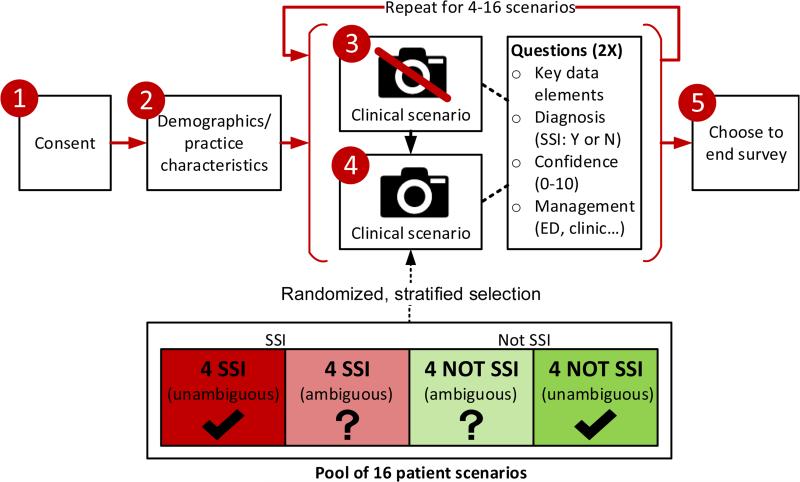

Participants were directed to an anonymous, web-based survey delivered via the Qualtrics (www.qualtrics.com) platform (Figure 1). Briefly, participants gave consent and then provided demographics and practice characteristics. They were then asked to complete as many scenarios as they wished, with a suggested minimum of four. Each scenario consisted of 2 steps. In the first step, participants were given details about the patient including operative data, demographic/risk factors, limited vital signs, and wound features, meant to replicate the details that might be available by looking at the patient's chart and speaking with the patient by phone (eTable 1). At this first step, they chose up to 3 of the most important descriptive features for assessing SSI (of the 20 provided), then were asked to make a diagnosis (SSI/not SSI), rate their diagnostic confidence on a 0-10 scale (0=“not at all confident” to 10=“very confident”), then choose one or more management options. For the second step (on the next page), they were again shown the original descriptive details for the case, along with a corresponding wound photograph. They then were asked to choose up to 3 of the most important photo-related features (of 7 provided; see eTable 2), followed by the same 3 questions regarding SSI diagnosis, confidence, and management. Participants sequentially completed scenarios until they elected to end the session. For each participant, scenarios were selected from a pool of 16 using stratified randomization to ensure that each participant received a balanced 1:1:1:1 mix of unambiguous SSI, ambiguous SSI, unambiguous non-SSI, and ambiguous non-SSI. Additionally, across all participants, each scenario type was presented in equal proportions using a feature of the Qualtrics randomizer. Participants were blinded to the actual diagnosis and to the proportion of patients with SSI in their set of scenarios.
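The scenario selection described above can be sketched in a few lines. This is a minimal illustration, not the Qualtrics configuration used in the study: it assumes 4 scenarios per stratum (16 in total, as implied by the stratification) and assumes that balance was achieved by presenting scenarios in blocks of 4, one per stratum. The names `STRATA`, `build_pool`, and `scenario_sequence` are hypothetical.

```python
import random

# The four strata named in the text; 4 scenarios each gives the pool of 16.
STRATA = (
    "unambiguous SSI",
    "ambiguous SSI",
    "unambiguous non-SSI",
    "ambiguous non-SSI",
)


def build_pool():
    """Return a hypothetical pool: {stratum: [scenario_id, ...]}, 4 per stratum."""
    return {s: [f"{s} #{i}" for i in range(1, 5)] for s in STRATA}


def scenario_sequence(pool, rng):
    """Order all 16 scenarios in blocks of 4, one scenario per stratum per block.

    Assumption: blocking is one way to keep the 1:1:1:1 mix for a participant
    who stops after any complete block; the actual randomizer may differ.
    """
    shuffled = {s: rng.sample(ids, len(ids)) for s, ids in pool.items()}
    sequence = []
    for block in range(4):
        block_items = [shuffled[s][block] for s in STRATA]
        rng.shuffle(block_items)  # randomize presentation order within the block
        sequence.extend(block_items)
    return sequence


if __name__ == "__main__":
    for scenario in scenario_sequence(build_pool(), random.Random(2015)):
        print(scenario)
```

Under this sketch a participant who completes exactly four scenarios sees one from each stratum; a participant who stops mid-block sees a mix that is balanced only approximately.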

Figure 1.

Study design overview. Red circles denote steps/pages participants encountered. Scenarios were selected for participants using stratified randomization from a pool of 16 (bottom). In steps 3 and 4, participants are first presented with a clinical scenario without photo (Step 3), then the same scenario with a wound photo (Step 4). The same questions are asked at both Step 3 and 4. SSI, surgical site infection.

eTable 1.

Details Provided To Providers At First Step (Without Photo)

| Detail | Data type |
|---|---|
| Operative | |
| Type of surgery | Categorical |
| Emergency surgery | Binary |
| Wound class | Categorical |
| Surgery duration | Continuous |
| ASA score | Categorical |
| Demographics/risk factors | |
| Sex | Binary |
| Smoker | Binary |
| Age | Continuous |
| Diabetic | Binary |
| BMI | Continuous |
| Vitals | |
| Pulse rate | Continuous |
| Morning temperature (tympanic) | Continuous |
| Wound | |
| Postoperative day | Continuous |
| Type of discharge | Categorical |
| Amount of discharge | Categorical |
| Odor | Binary |
| Skin color at wound edge | Categorical |
| Induration/swelling | Categorical |
| Wound pain | 0-10 scale |
| Wound edge separation | Continuous |

Participants chose up to 3 of these 20 details as most important for decision-making (surgical site infection vs no surgical site infection) for each particular scenario.

eTable 2.

Choices Offered about which Photo-Related Elements Were Most Important for Decision-Making (Surgical Site Infection vs No Surgical Site Infection) for Each Particular Scenario

| Photo-related element |
|---|
| Discharge |
| Skin color around wound |
| Wound color |
| Swelling/induration |
| Slough |
| Wound separation |
| Granulation |

Data analysis

Participant-level data

Demographics and practice characteristics were summarized using counts and percentages for categorical data, means and standard deviations for normally-distributed continuous data, and medians and interquartile ranges for non-normally distributed continuous data. Correlations between participant characteristics and percentage of scenarios correct (both with and without photos) were tested using Pearson's correlation coefficient.

Scenario-level data

Changes in diagnostic accuracy, confidence, and management were analyzed with scenarios, rather than participants, as the primary unit of analysis. SSI and non-SSI scenarios were analyzed separately. When analyzing change due to addition of photos (e.g., of diagnostic accuracy or confidence), paired tests for significance were used: the Wilcoxon signed-rank test for ordinal variables (e.g., confidence) and McNemar's test for binary variables (e.g., diagnostic accuracy). McNemar's exact test was used if there were fewer than 20 discordant pairs. When evaluating change in management, we defined undertreatment of SSI cases as not receiving a recommendation for an emergency department (ED) visit, next-day clinic visit, or antibiotics, and overtreatment of non-SSI cases as receiving a recommendation for an ED visit, next-day clinic visit, or antibiotics. Data were exported from the Qualtrics survey platform into Stata (Stata v13, StataCorp LP) for analysis. Data visualizations were created in Microsoft Excel 2013. P-values of 0.05 or less were considered significant.
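As an illustration of the rules in this section, the sketch below encodes the under/overtreatment definitions and a McNemar test that switches to the exact binomial form below 20 discordant pairs. It is not the Stata code used for the analysis. The option labels in `ACTIVE_OPTIONS` are hypothetical, and the asymptotic branch assumes no continuity correction.

```python
from math import comb, erfc, sqrt

# Management options that count as active treatment (labels are assumed).
ACTIVE_OPTIONS = {"ED visit", "next-day clinic visit", "antibiotics"}


def is_undertreated(has_ssi, recommendations):
    """SSI case with no recommendation for ED visit, next-day clinic, or antibiotics."""
    return has_ssi and not (ACTIVE_OPTIONS & set(recommendations))


def is_overtreated(has_ssi, recommendations):
    """Non-SSI case with a recommendation for ED visit, next-day clinic, or antibiotics."""
    return (not has_ssi) and bool(ACTIVE_OPTIONS & set(recommendations))


def mcnemar_p(b, c, exact_below=20):
    """Two-sided McNemar p-value from the two discordant-pair counts.

    b: pairs correct without photo but incorrect with photo
    c: pairs incorrect without photo but correct with photo
    """
    n = b + c
    if n == 0:
        return 1.0
    if n < exact_below:
        # Exact test: discordant pairs follow Binomial(n, 0.5) under the null.
        k = min(b, c)
        tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
        return min(1.0, 2 * tail)
    # Asymptotic test: chi-square with 1 degree of freedom.
    chi2 = (b - c) ** 2 / n
    return erfc(sqrt(chi2 / 2))  # survival function of chi-square(1)


if __name__ == "__main__":
    print(is_overtreated(False, ["antibiotics"]))
    print(mcnemar_p(1, 9))    # exact branch
    print(mcnemar_p(10, 30))  # asymptotic branch
```

Only the discordant pairs enter the test, which is why the threshold in the text is stated in terms of discordant pairs rather than total sample size.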

Results

Participant-level data

Of 137 providers who initiated the study, 54 were excluded because they completed fewer than 2 scenarios, leaving 83 providers for analysis. Participants tended to be male (63%), surgical specialists (84%), holding MD degrees (87%), and practicing in an academic setting (78%) (Table 1). Most (57%) did not report currently receiving wound photos from patients. Addition of photos improved participants’ median accuracy from 67% to 75%; participants in the 25th percentile improved from 50% to 66% correct. There was no correlation between accuracy and level of training, years in practice, time taken per scenario, number of scenarios completed, or screen size (all p>0.3).

Table 1.

Participant Characteristics

| Factor, level | Value |
|---|---|
| n | 83 |
| Level of training, n (%) | |
| MD | 72 (87) |
| ARNP/PA | 2 (2) |
| RN | 7 (8) |
| Other | 2 (2) |
| Specialty, n (%) | |
| Surgery | 70 (84) |
| Infectious disease | 7 (8) |
| Other | 6 (7) |
| Practice setting, n (%) | |
| Academic | 65 (78) |
| Community | 17 (20) |
| Sex, n (%) | |
| Male | 52 (63) |
| Female | 31 (37) |
| Years in practice, mean (SD) | 15 (12) |
| Wound photos seen per mo, median (IQR) | 0 (0-2) |
| Surgical site infections managed per mo, median (IQR) | 4 (2-5) |
| Age, y, mean (SD) | 42 (13) |
| Time taken for survey, min, median (IQR) | 15 (11-22) |
| No. of scenarios completed, median (IQR) | 5 (4-7) |
| Screen size, n (%) | |
| Smartphone | 19 (23) |
| Tablet or larger | 62 (77) |
| Scenarios (without photo) correct, %, median (IQR) | 67 (50-80) |
| Scenarios (with photo) correct, %, median (IQR) | 75 (66-92) |

Scenario-level data

Diagnostic accuracy and predictive values

Mean diagnostic accuracy across all scenarios improved from 67% to 76% (p=0.0003) with photos. This improvement was driven in large part by increased accuracy among the “ambiguous” non-SSI scenarios (Table 2).

Table 2.

Accuracy, Confidence, and Test Characteristics, Without and With Photos

| | Without photo | With photo | p Value |
|---|---|---|---|
| Accuracy, numerator/denominator (%) | | | |
| SSI | 75/122 (61.5) | 74/115 (64.3) | 0.24 |
| SSI (ambiguous) | 65/128 (50.8) | 73/121 (60.3) | 0.13 |
| Not SSI (ambiguous) | 86/128 (67.2) | 105/123 (85.4) | <0.001 |
| Not SSI | 94/103 (91.3) | 97/99 (98.0) | 0.109 |
| Overall | 320/481 (66.5) | 349/458 (76.2) | <0.001 |
| Confidence, mean (SD), 0-10 scale | | | |
| SSI | 5.8 (1.6) | 6.7 (1.9) | <0.001 |
| SSI (ambiguous) | 5.9 (1.8) | 7.2 (1.9) | <0.001 |
| Not SSI (ambiguous) | 5.8 (2.1) | 7.6 (1.9) | <0.001 |
| Not SSI | 6.3 (1.8) | 8.4 (1.6) | <0.001 |
| Correct diagnosis (SSI) | 6.1 (1.7) | 7.2 (1.8) | <0.001 |
| Incorrect diagnosis (SSI) | 5.7 (2.9) | 6.7 (3.4) | <0.001 |
| Correct diagnosis (not SSI) | 6.3 (1.8) | 8.3 (1.7) | <0.001 |
| Incorrect diagnosis (not SSI) | 6.0 (2.7) | 6.6 (2.1) | 0.82 |
| Overall | 6.1 (1.8) | 7.6 (1.9) | <0.001 |
| Test characteristics, % | | | |
| Sensitivity | 56.0 | 62.3 | 0.16 |
| Specificity | 77.9 | 91.0 | <0.001 |
| Positive predictive value | 73.3 | 88.0 | <0.001 |
| Negative predictive value | 62.1 | 69.4 | 0.06 |

SSI, surgical site infection.

Specificity and positive predictive value improved (p<0.001) with addition of wound photos. Overall, sensitivity for remote diagnosis of SSI using symptom report and wound photos was moderate (62%) and specificity was high (91%).
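The test characteristics in Table 2 can be recomputed from the accuracy counts in the same table. The sketch below assumes that the ambiguous and unambiguous rows were pooled within SSI and non-SSI scenarios; that pooling is our reading of the table rather than a stated method. The function name `diagnostic_metrics` is hypothetical.

```python
def diagnostic_metrics(tp, fn, tn, fp):
    """Standard 2x2 test characteristics as fractions between 0 and 1."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
    }


# Counts taken from the accuracy rows of Table 2 (correct/total per row).
# SSI rows supply true positives; non-SSI rows supply true negatives.
without_photo = diagnostic_metrics(
    tp=75 + 65,
    fn=(122 - 75) + (128 - 65),
    tn=86 + 94,
    fp=(128 - 86) + (103 - 94),
)
with_photo = diagnostic_metrics(
    tp=74 + 73,
    fn=(115 - 74) + (121 - 73),
    tn=105 + 97,
    fp=(123 - 105) + (99 - 97),
)

if __name__ == "__main__":
    for label, metrics in (("without photo", without_photo), ("with photo", with_photo)):
        print(label, {name: round(100 * value, 1) for name, value in metrics.items()})
```

Under this pooling assumption the recomputed percentages agree with the sensitivity, specificity, and predictive values listed in Table 2.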

Diagnostic confidence

Over all scenarios, mean confidence increased from 6.1 to 7.5 (p<0.001) and increased in all four individual scenario groups (p<0.001). Participants with incorrect answers had lower confidence levels than those with correct answers, with and without photos (Table 2).

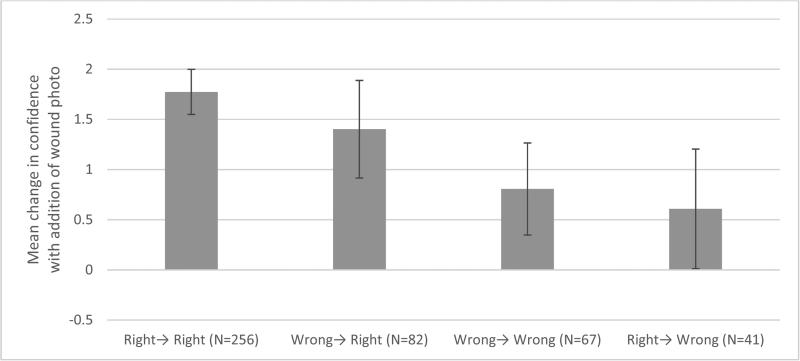

Participants who had the correct diagnosis both with and without photo or who changed their diagnosis to the correct diagnosis after viewing the wound photo (left half of Figure 2) had the largest increases in confidence (1.5-2 units on a 0-10 scale). Participants with incorrect initial diagnoses or who changed their diagnosis from correct to incorrect had smaller increases (0.5-1 units) in confidence.

Figure 2.

Mean change in confidence by category of answer change. For example, “Wrong→Right” represents participants who changed their incorrect diagnosis to a correct diagnosis after viewing the wound photo. Error bars represent 95% CI.

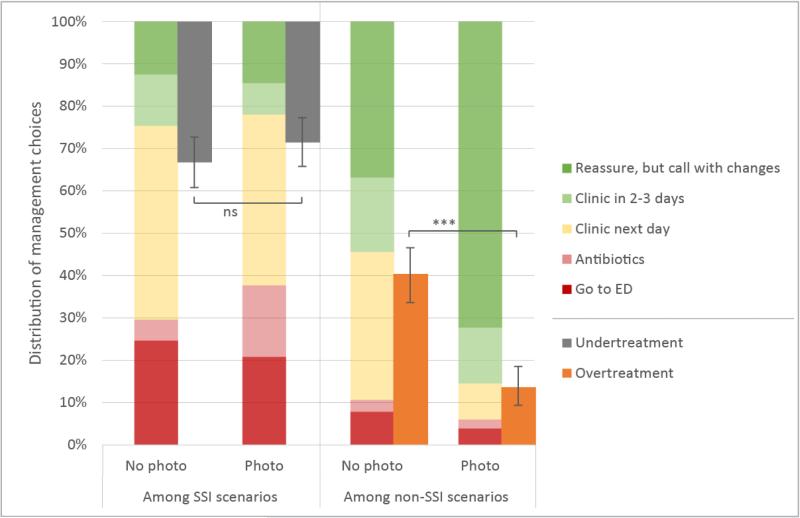

Management

Among patients without SSI, addition of photos decreased recommendations for next-day clinic visits (32% vs 8%, p<0.0001) and increased reassurance (34% vs 72%, p<0.0001) (Figure 3). Among patients with SSI, antibiotics were recommended more often (5% vs 17%, p<0.0001), but recommendations for ED visits, next-day clinic visits, and reassurance remained similar. Relatively few participants were interested in continuing to receive symptom reports (~10%) or photos (~15-20%) after initial diagnosis, even among patients with suspected SSI.

Figure 3.

Management decisions with and without photos in surgical site infection (SSI) and non-SSI scenarios. Secondary axis (right offset) shows undertreatment among SSI scenarios (left half) and overtreatment among non-SSI scenarios (right half). Undertreatment is defined as a patient with SSI who was not advised to go to emergency department (ED), next-day clinic visit, or prescribed antibiotics. Overtreatment is defined as a patient without SSI who was advised to go to ED, next-day clinic visit or prescribed antibiotics.

Figure 3 also shows the effect of addition of photos on undertreatment of SSI cases (i.e., not receiving a recommendation for ED, next day clinic or antibiotics) and overtreatment of non-SSI cases (i.e., receiving a recommendation for ED, next day clinic or antibiotics). Overtreatment among non-SSI patients decreased from 40% to 14% (p<0.0001) with the most significant drop occurring among unambiguous non-SSI cases (31% to 5%, p<0.0001). Assuming a post-discharge SSI rate of 15%, the number needed to treat (NNT) to prevent overtreatment would be 4.5. There was a trend toward less undertreatment of ambiguous SSI cases (38% to 30%, p=0.19; NNT=83). Generally, both groups received treatment that was either similar or more appropriate, with the addition of photos.
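The NNT figures above appear to follow from scaling each absolute risk reduction by the share of patients it applies to. The sketch below reproduces them under that assumption; the function name `nnt` and its parameters are hypothetical, and the derivation is our inference rather than a documented calculation.

```python
def nnt(rate_without, rate_with, affected_fraction):
    """Number needed to treat from a subgroup risk reduction.

    rate_without, rate_with: event rate in the subgroup without and with photos
    affected_fraction: share of all post-discharge patients in that subgroup
    """
    absolute_risk_reduction = (rate_without - rate_with) * affected_fraction
    if absolute_risk_reduction <= 0:
        raise ValueError("no risk reduction; NNT is undefined")
    return 1 / absolute_risk_reduction


SSI_RATE = 0.15  # assumed post-discharge SSI rate, as stated in the text

# Overtreatment applies to the 85% of patients without SSI (40% to 14%).
nnt_overtreatment = nnt(0.40, 0.14, 1 - SSI_RATE)

# Undertreatment applies to the 15% of patients with SSI (38% to 30%).
nnt_undertreatment = nnt(0.38, 0.30, SSI_RATE)

if __name__ == "__main__":
    print(round(nnt_overtreatment, 1))
    print(round(nnt_undertreatment))
```

In this reading, "treat" means adding a wound photo to the remote assessment, so the NNT is the number of patients whose photos would need to be reviewed to change one management recommendation.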

Key descriptive features

Among the 20 non-photo related features (i.e., items communicated in words) presented, participants indicated that the following were most important (with % of scenarios where participants ranked the element among the top 3): Skin color (62%), discharge type (43%), body temperature (29%), induration (29%), discharge amount (28%), wound class (20%), wound pain (16%), type of surgery (14%), heart rate (11%), and surgery duration (10%). These features did not significantly vary by type of scenario (i.e., SSI vs non-SSI), suggesting they help to both rule in and rule out SSI. Of the 20 data elements presented, the lowest ranking (ranked important in <10% of cases) were: diabetes, wound edge separation, American Society of Anesthesiologists score, body mass index, emergency surgery, post-operative day, smoking, age, wound odor, and sex.

Among the 7 photo-related features (i.e., items visualized by participants in actual wound photos), participants again indicated skin color to be most important (86%), followed by swelling (54%), discharge (49%), wound color (36%) and wound separation (27%).

Discussion

Providing wound photos significantly increased diagnostic accuracy and confidence in the diagnosis of SSI following abdominal surgery. Further, in this simulation-based survey of providers, the addition of photos changed management decisions, most notably by decreasing overtreatment among patients without SSI. Participants recommended fewer next-day clinic visits and more reassurance for patients without SSI. In addition, participants recommended more antibiotics for patients with SSI. Skin color around the wound was ranked as the most important symptom in diagnosing SSI, both as reported by patients over the phone and as visualized in photographs by participants.

Several previous studies have evaluated the use of digital photography in assessing inpatient wound infection in laparotomy (28) and vascular surgery wounds.(29,30) Our results were in line with these studies, with sensitivities for diagnosing SSI tending to be lower (42-71%) and specificity tending to be higher (65-97%). In these studies, accuracy was lower in remotely assessing symptoms, but was generally higher when making remote management decisions. In other words, remote assessors may not correctly assess whether a wound is red, but they frequently identify what, if any, intervention is necessary. In both vascular wound studies, the authors found that remote agreement was comparable to in-person agreement, suggesting that diagnosis of SSI can be reliably done remotely.

Our study results suggest that photos increase diagnostic confidence across the board, even in incorrect diagnoses. However, we found a dose-response relationship (see Figure 2) whereby participants who arrived at the correct diagnosis had significantly larger increases in confidence than those who arrived at incorrect diagnoses. Diagnostic confidence in remote assessment of wounds has been previously reported by Wirthlin et al.(29) The participants’ confidence in that study tended to be higher (8.2-9.8/10) compared to our results (mean 7.5/10 with photos), though what diagnostic confidence “level” is needed to impact management is unclear. Furthermore, the effect of diagnostic confidence in clinical practice is unclear, but would be expected to serve as a mediator between the provider's “hunch” and the likelihood of that provider making a definitive management recommendation. In our study, this was manifested through increased reassurance (and fewer clinic visits) among patients without SSI.

This work has several limitations. First, the original patient data on which we based our scenarios were derived from a cohort of primarily Caucasian open abdominal surgery inpatients in the Netherlands with a median length of stay of 12 days. We sought to select patients who were representative of post-discharge surgical patients in the US (i.e., only post-operative days 6-14 were included in scenarios, based on a median length of stay in the US of 5-6 days (31,32)); however, our results might not be generalizable to patients who did not undergo open abdominal operations, or who have darker skin tones. Second, the photos and wound data used in this study were collected by research team members and not patients themselves. As such, they should be regarded as “gold standard” data. Photos and wound data entered by patients or their caregivers may be less helpful for providers due to variability in quality and subjectivity. Third, our participant sample was primarily academic surgeons, most of whom were members of the Surgical Infection Society; our sample was underpowered to detect differences based on training, specialty, or expertise, meaning that our results may not be generalizable to providers in other settings. Fourth, our study design may have suffered from anchoring bias: each scenario showed the wound photograph after an initial decision about diagnosis and treatment had already been made, making it easier for the photo to reinforce the decision rather than contradict it. This would be expected to skew participants toward the null hypothesis, or not changing their initial diagnosis, and lends further validity to the value of wound photos at improving diagnosis. Finally, we stratified our scenarios on a number of dimensions that are not epidemiologically representative of SSIs in practice, e.g., we oversampled SSI (50% of scenarios). This results in a biased estimate of positive and negative predictive values, but not sensitivity and specificity. Based on the population that our scenarios were drawn from and other literature,(1–4) we estimate the prevalence of post-discharge SSI to be 5-15% following open abdominal surgery. Since addition of photos in our study most enhanced diagnosis and management among patients without SSI, it is likely that when photos are included in a more epidemiologically representative population, diagnostic accuracy would increase to an even greater extent.
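The dependence of predictive values and accuracy on prevalence can be made concrete with Bayes' rule. The sketch below is illustrative only: it takes the sensitivity and specificity from Table 2 as fixed inputs and evaluates them at the 5% and 15% prevalence bounds estimated above. The function name `at_prevalence` is hypothetical, and the calculation assumes sensitivity and specificity would carry over unchanged to a representative population.

```python
def at_prevalence(sensitivity, specificity, prevalence):
    """Expected PPV, NPV, and accuracy for a population with the given SSI prevalence."""
    tp = sensitivity * prevalence
    fn = (1 - sensitivity) * prevalence
    tn = specificity * (1 - prevalence)
    fp = (1 - specificity) * (1 - prevalence)
    return {
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "accuracy": tp + tn,  # the four cells sum to 1
    }


# Sensitivity and specificity as reported in Table 2.
WITHOUT_PHOTO = {"sensitivity": 0.560, "specificity": 0.779}
WITH_PHOTO = {"sensitivity": 0.623, "specificity": 0.910}

if __name__ == "__main__":
    for prevalence in (0.05, 0.15):
        before = at_prevalence(prevalence=prevalence, **WITHOUT_PHOTO)
        after = at_prevalence(prevalence=prevalence, **WITH_PHOTO)
        print(f"prevalence {prevalence:.0%}")
        print("  without photo:", {k: round(v, 3) for k, v in before.items()})
        print("  with photo:   ", {k: round(v, 3) for k, v in after.items()})
```

Because accuracy is a prevalence-weighted average of sensitivity and specificity, a low-prevalence population weights the specificity gain more heavily, which is the mechanism behind the expectation stated above.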

Conclusions

Addition of wound photos to existing data as available via chart review and telephone consultation with patients improved diagnostic accuracy and confidence, and prevented overtreatment with potentially beneficial effects on utilization. Mobile health technologies to capture wound photos and other key data from patients during the post-discharge period have the potential to facilitate patient-centered care, reduce costs, and improve clinical outcomes. Our team is developing and evaluating such a system, the mobile post-operative wound evaluator (mPOWEr). Future work should empirically assess the impact of implementing such technologies on outcomes relevant to patients, providers, and health systems.

Acknowledgment

We acknowledge the generous feedback from the mPOWEr research group and Dr Gabrielle Van Ramshorst who provided access to the dataset that formed the basis of the clinical scenarios for the survey.

Support: Research reported in this publication was supported by the Surgical Infection Society Foundation for Education and Research, the University of Washington Magnuson Scholarship, and National Institutes of Health (T32DK070555).

Abbreviations

- SSI: surgical site infection

- mHealth: mobile health

- CDC: Centers for Disease Control and Prevention

Footnotes

Disclosure Information: Nothing to disclose.

Disclosures outside the scope of this work: Dr Hartzler is employed as a full-time researcher for Group Health Research Institute. She has a patent pending for social signal processing technology with Microsoft Research.

Disclaimer: The content is solely the responsibility of the authors and does not necessarily represent the official views of any of the sponsors above. Funders played no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; or preparation, review, or approval of the manuscript.

Presented at the 36th Surgical Infection Society Annual Meeting, Palm Beach, FL, May 2016.

References

- 1. Bruce J, Russell EM, Mollison J, Krukowski ZH. The measurement and monitoring of surgical adverse events. Health Technol Assess (Rockv) 2001:5. doi: 10.3310/hta5220.

- 2. Smyth ETM, McIlvenny G, Enstone JE, et al. Four Country Healthcare Associated Infection Prevalence Survey 2006: overview of the results. J Hosp Infect. 2008;69:230–248. doi: 10.1016/j.jhin.2008.04.020.

- 3. Pinkney TD, Calvert M, Bartlett DC, et al. Impact of wound edge protection devices on surgical site infection after laparotomy: multicentre randomised controlled trial (ROSSINI Trial). BMJ. 2013;347:f4305. doi: 10.1136/bmj.f4305.

- 4. Krieger BR, Davis DM, Sanchez JE, et al. The use of silver nylon in preventing surgical site infections following colon and rectal surgery. Dis Colon Rectum. 2011;54:1014–1019. doi: 10.1097/DCR.0b013e31821c495d.

- 5. Mangram AJ, Horan TC, Pearson ML, et al. Guideline for prevention of surgical site infection, 1999. Infect Control Hosp Epidemiol. 1999;27:97–134. doi: 10.1086/501620. doi: 10.1016/S0196-6553(99)70088-X.

- 6. Daneman N, Lu H, Redelmeier DA. Discharge after discharge: predicting surgical site infections after patients leave hospital. J Hosp Infect. 2010;75:188–194. doi: 10.1016/j.jhin.2010.01.029. http://www.ncbi.nlm.nih.gov/pubmed/20435375.

- 7. Gibson A, Tevis S, Kennedy G. Readmission after delayed diagnosis of surgical site infection: a focus on prevention using the American College of Surgeons National Surgical Quality Improvement Program. Am J Surg. 2014;207:832–839. doi: 10.1016/j.amjsurg.2013.05.017.

- 8. Kazaure HS, Roman SA, Sosa JA. Association of postdischarge complications with reoperation and mortality in general surgery. Arch Surg. 2012;147:1000–1007. doi: 10.1001/2013.jamasurg.114.

- 9. Martone WJ, Nichols RL. Recognition, prevention, surveillance, and management of surgical site infections: introduction to the problem and symposium overview. Clin Infect Dis. 2001;33:S67–S68. doi: 10.1086/321859.

- 10. Woelber E, Schrick E, Gessner B, Evans HL. Proportion of surgical site infection occurring after hospital discharge: A systematic review. Surg Infect (Larchmt) 2016. In press. doi: 10.1089/sur.2015.241.

- 11. Zimlichman E, Henderson D, Tamir O, et al. Health Care–Associated Infections. JAMA Intern Med. 2013;173:2039. doi: 10.1001/jamainternmed.2013.9763.

- 12. Limón E, Shaw E, Badia JM, et al. Post-discharge surgical site infections after uncomplicated elective colorectal surgery: impact and risk factors. The experience of the VINCat Program. J Hosp Infect. 2014;86:127–132. doi: 10.1016/j.jhin.2013.11.004.

- 13. Stone PW, Braccia D, Larson E. Systematic review of economic analyses of health care-associated infections. Am J Infect Control. 2005;33:501–509. doi: 10.1016/j.ajic.2005.04.246.

- 14. Sanger P, Hartzler A, Lober WB, Evans HL. Provider needs assessment for mPOWEr: a Mobile tool for Post-Operative Wound Evaluation. Proceedings of AMIA Annual Symposium; Washington DC. 2013; p. 1236. http://knowledge.amia.org/amia-55142-a2013e-1580047/t-06-1.582200/f-006-1.582201/a-457-1.582620/ap-600-1.582621.

- 15. Saunders RS, Fernandes-Taylor S, Rathouz PJ, et al. Outpatient follow-up versus 30-day readmission among general and vascular surgery patients: A case for redesigning transitional care. Surgery. 2014;156:949–958. doi: 10.1016/j.surg.2014.06.041.

- 16. Tsai TC, Orav EJ, Jha AK. Care fragmentation in the postdischarge period surgical readmissions, distance of travel, and postoperative mortality. JAMA Surg. 2015;150:59–64. doi: 10.1001/jamasurg.2014.2071.

- 17. Klasnja P, Pratt W. Healthcare in the pocket: mapping the space of mobile-phone health interventions. J Biomed Inform. 2012;45:184–198. doi: 10.1016/j.jbi.2011.08.017.

- 18. Pew Research Center. Tracking for Health | Pew Research Center's Internet & American Life Project. http://www.pewinternet.org/Reports/2013/Tracking-for-Health.aspx. Published 2013. Accessed January 3, 2015.

- 19. Pew Research Center. Health Online 2013 | Pew Research Center's Internet & American Life Project. http://www.pewinternet.org/Reports/2013/Health-online.aspx. Published 2013. Accessed January 3, 2015.

- 20. Sanger PC, Hartzler A, Han SM, et al. Patient Perspectives on Post-Discharge Surgical Site Infections: Towards a Patient-Centered Mobile Health Solution. PLoS One. 2014;9:e114016. doi: 10.1371/journal.pone.0114016.

- 21. Armstrong KA, Semple JL, Coyte PC. Replacing ambulatory surgical follow-up visits with mobile app home monitoring: modeling cost-effective scenarios. J Med Internet Res. 2014;16:e213. doi: 10.2196/jmir.3528.

- 22. Chen DW, Davis RW, Balentine CJ, et al. Utility of routine postoperative visit after appendectomy and cholecystectomy with evaluation of mobile technology access in an urban safety net population. J Surg Res. 2014;190:478–483. doi: 10.1016/j.jss.2014.04.028.

- 23. Martínez-Ramos C, Cerdán MT, López RS. Mobile phone-based telemedicine system for the home follow-up of patients undergoing ambulatory surgery. Telemed J E Health. 2009;15:531–537. doi: 10.1089/tmj.2009.0003.

- 24. Semple JL, Sharpe S, Murnaghan ML, Theodoropoulos J, Metcalfe KA. Using a Mobile App for Monitoring Post-Operative Quality of Recovery of Patients at Home: A Feasibility Study. JMIR mHealth uHealth. 2015;3:e18. doi: 10.2196/mhealth.3929.

- 25. Sanger P, Hartzler A, Lordon R, et al. A patient-centered system in a provider-centered world: challenges of incorporating post-discharge wound data into practice. J Am Med Informatics Assoc. 2016;23:514–525. doi: 10.1093/jamia/ocv183.

- 26. van Ramshorst GH, Vos MC, den Hartog D, et al. A comparative assessment of surgeons’ tracking methods for surgical site infections. Surg Infect (Larchmt) 2013;14:181–187. doi: 10.1089/sur.2012.045.

- 27. CDC. Surgical Site Infection (SSI) Event. 2015. http://www.cdc.gov/nhsn/PDFs/pscManual/9pscSSIcurrent.pdf. Accessed June 10, 2015.

- 28. van Ramshorst G, Vrijland W. Validity of Diagnosis of Superficial Infection of Laparotomy Wounds Using Digital Photography: Inter-and Intra-observer Agreement Among Surgeons. Wounds. 2010;22:38–43.

- 29. Wirthlin DJ, Buradagunta S, Edwards RA, et al. Telemedicine in vascular surgery: feasibility of digital imaging for remote management of wounds. J Vasc Surg. 1998;27:1089–1099; discussion 1099–1100. doi: 10.1016/s0741-5214(98)70011-4.

- 30. Wiseman JT, Fernandes-Taylor S, Gunter R, et al. Inter-rater agreement and checklist validation for postoperative wound assessment using smartphone images in vascular surgery. J Vasc Surg. 2015;4:320–328.e2. doi: 10.1016/j.jvsv.2016.02.001.

- 31. Mahmoud NN, Turpin RS, Yang G, Saunders WB. Impact of surgical site infections on length of stay and costs in selected colorectal procedures. Surg Infect (Larchmt) 2009;10:539–544. doi: 10.1089/sur.2009.006.

- 32. Bratzler DW, Houck PM, Richards C, et al. Use of antimicrobial prophylaxis for major surgery: baseline results from the National Surgical Infection Prevention Project. Arch Surg. 2005;140:174–182. doi: 10.1001/archsurg.140.2.174.